#AI powered Face Recognition System


Text

Explore how facial recognition attendance software is transforming modern workplaces. This insightful article from wAnywhere explains how the technology enhances accuracy, prevents time theft, supports remote teams, and streamlines HR operations with real-time data and automation.

#wanywhere#Face Recognition attendance software#Technology#Role of face recognition system#AI powered Face Recognition System

0 notes

Text

Art. Can. Die.

This is my battle cry in the face of the silent extinguishing of an entire generation of artists by AI.

And you know what? We can't let that happen. It's not about fighting the future, it's about shaping it on our terms. If you think this is worth fighting for, please share this post. Let's make this debate go viral - because we need to take action NOW.

Remember that even in the darkest of times, creativity always finds a way.

To unleash our true potential, we first need to dive deep into our darkest fears.

So let's do this together:

By the end of 2025, most traditional artist jobs will be gone, replaced by a handful of AI-augmented art directors. Right now, around 5 out of 6 concept art jobs are being eliminated, and it's even more brutal for illustrators. This isn't speculation: it's happening right now, in real-time, across studios worldwide.

At this point, dogmatic thinking is our worst enemy. If we want to survive the AI tsunami of 2025, we need to prepare for a brutal cyberpunk reality that isn’t waiting for permission to arrive. This isn't sci-fi or catastrophism. This is a clear-eyed recognition of the exponential impact AI will have on society, hitting a hockey stick inflection point around April-May this year. By July, February will already feel like a decade ago. This also means that we have a narrow window to adapt, to evolve, and to build something new.

Let me make five predictions for the end of 2025 to nail this down:

Every major film company will have its first 100% AI-generated blockbuster in production or on screen.

Next-gen smartphones will run GPT-4o-level reasoning AI locally.

The first full AI game engine will generate infinite, custom-made worlds tailored to individual profiles and desires.

Unique art objects will reach industrial scale: entire production chains will mass-produce one-of-a-kind pieces. Uniqueness will be the new mass market.

Synthetic AI-generated data will exceed the sum total of all epistemic data (true knowledge) created by humanity throughout recorded history. We will be drowning in a sea of artificial ‘truths’.

For us artists, this means a stark choice: adapt to real-world craftsmanship or high-level creative thinking roles, because mid-level art skills will be replaced by cheaper, AI-augmented computing power.

But this is not the end. This is just another challenge to tackle.

Many will say we need legal solutions. They're not wrong, but they're missing the bigger picture: Do you think China, Pakistan, or North Korea will suddenly play nice with Western copyright laws? Will a "legal" dataset somehow magically protect our jobs? And most crucially, what happens when AI becomes just another tool of control?

Here's the thing - boycotting AI feels right, I get it. But it sounds like punks refusing to learn power chords because guitars are electrified by corporations. The systemic shift at stake doesn't care if we stay "pure", it will only change if we hack it.

Now, the empowerment part: artists have always been hackers of narratives.

This is what we do best: we break into the symbolic fabric of the world, weaving meaning from signs, emotions, and ideas. We've always taken tools never meant for art and turned them into instruments of creativity. We've always found ways to carve out meaning in systems designed to erase it.

This isn't just about survival. This is about hacking the future itself.

We, artists, are the pirates of the collective imaginary. It’s time to set sail and raise the black flag.

I don't come with a ready-made solution.

I don't come with a FOR or AGAINST. That would be like being against the wood axe because it can crush skulls.

I come with a battle cry: let’s flood the internet with debate, creative thinking, and unconventional wisdom. Let’s dream impossible futures. Let’s build stories of resilience - where humanity remains free from the technological guardianship of AI or synthetic superintelligence. Let’s hack the very fabric of what is deemed ‘possible’. And let’s do it together.

It is time to fight back.

Let us be the HumaNet.

Let’s show tech enthusiasts, engineers, and investors that we are not just assets, but the neurons of the most powerful superintelligence ever created: the artist community.

Let's outsmart the machine.

Stéphane Wootha Richard

P.S.: This isn't just a message to read and forget. This is a memetic payload that needs to spread.

Send this to every artist in your network.

Copy/paste the full text anywhere you can.

Spread it across your social channels.

Start conversations in your creative communities.

No social platform? Great! That's exactly why this needs to spread through every possible channel, official and underground.

Let's flood the datasphere with our collective debate.

71 notes


Text

One way to spot patterns is to show AI models millions of labelled examples. This method requires humans to painstakingly label all this data so they can be analysed by computers. Without them, the algorithms that underpin self-driving cars or facial recognition remain blind. They cannot learn patterns.

The algorithms built in this way now augment or stand in for human judgement in areas as varied as medicine, criminal justice, social welfare and mortgage and loan decisions. Generative AI, the latest iteration of AI software, can create words, code and images. This has transformed them into creative assistants, helping teachers, financial advisers, lawyers, artists and programmers to co-create original works.

To build AI, Silicon Valley’s most illustrious companies are fighting over the limited talent of computer scientists in their backyard, paying hundreds of thousands of dollars to a newly minted Ph.D. But to train and deploy them using real-world data, these same companies have turned to the likes of Sama, and their veritable armies of low-wage workers with basic digital literacy, but no stable employment.

Sama isn’t the only service of its kind globally. Start-ups such as Scale AI, Appen, Hive Micro, iMerit and Mighty AI (now owned by Uber), and more traditional IT companies such as Accenture and Wipro are all part of this growing industry estimated to be worth $17bn by 2030.

Because of the sheer volume of data that AI companies need to be labelled, most start-ups outsource their services to lower-income countries where hundreds of workers like Ian and Benja are paid to sift and interpret data that trains AI systems.

Displaced Syrian doctors train medical software that helps diagnose prostate cancer in Britain. Out-of-work college graduates in recession-hit Venezuela categorize fashion products for e-commerce sites. Impoverished women in Kolkata’s Metiabruz, a poor Muslim neighbourhood, have labelled voice clips for Amazon’s Echo speaker. Their work couches a badly kept secret about so-called artificial intelligence systems – that the technology does not ‘learn’ independently, and it needs humans, millions of them, to power it. Data workers are the invaluable human links in the global AI supply chain.

This workforce is largely fragmented, and made up of the most precarious workers in society: disadvantaged youth, women with dependents, minorities, migrants and refugees. The stated goal of AI companies and the outsourcers they work with is to include these communities in the digital revolution, giving them stable and ethical employment despite their precarity. Yet, as I came to discover, data workers are as precarious as factory workers, their labour is largely ghost work and they remain an undervalued bedrock of the AI industry.

As this community emerges from the shadows, journalists and academics are beginning to understand how these globally dispersed workers impact our daily lives: the wildly popular content generated by AI chatbots like ChatGPT, the content we scroll through on TikTok, Instagram and YouTube, the items we browse when shopping online, the vehicles we drive, even the food we eat, it’s all sorted, labelled and categorized with the help of data workers.

Milagros Miceli, an Argentinian researcher based in Berlin, studies the ethnography of data work in the developing world. When she started out, she couldn’t find anything about the lived experience of AI labourers, nothing about who these people actually were and what their work was like. ‘As a sociologist, I felt it was a big gap,’ she says. ‘There are few who are putting a face to those people: who are they and how do they do their jobs, what do their work practices involve? And what are the labour conditions that they are subject to?’

Miceli was right – it was hard to find a company that would allow me access to its data labourers with minimal interference. Secrecy is often written into their contracts in the form of non-disclosure agreements that forbid direct contact with clients and public disclosure of clients’ names. This is usually imposed by clients rather than the outsourcing companies. For instance, Facebook-owner Meta, who is a client of Sama, asks workers to sign a non-disclosure agreement. Often, workers may not even know who their client is, what type of algorithmic system they are working on, or what their counterparts in other parts of the world are paid for the same job.

The arrangements of a company like Sama – low wages, secrecy, extraction of labour from vulnerable communities – is veered towards inequality. After all, this is ultimately affordable labour. Providing employment to minorities and slum youth may be empowering and uplifting to a point, but these workers are also comparatively inexpensive, with almost no relative bargaining power, leverage or resources to rebel.

Even the objective of data-labelling work felt extractive: it trains AI systems, which will eventually replace the very humans doing the training. But of the dozens of workers I spoke to over the course of two years, not one was aware of the implications of training their replacements, that they were being paid to hasten their own obsolescence.

— Madhumita Murgia, Code Dependent: Living in the Shadow of AI

71 notes


Text

drabble - "display only"

G - ~1000 words prototype!Moon ("Moondrop") might turn this into a full fic.. some day! i have schemes... for now, enjoy--as this wip has been sitting on the shelf for too long!

The world is so, so small. The routine never changes. An orchestra where all the notes are perfect and predetermined, a waltz without a single misstep.

At 6:30 am sharp, blistering lights and theme music kick on automatically. The animatronic is alerted from its rest cycle, a nagging popup in its code to exit low-power protocols. Optics flicker open with the sound of a camera shutter going off, swift and keen as a gunshot. The scanners readjust, pupils dilating to accommodate the right shutter speed to capture light.

The view before it never changes. Mundane. The Moondrop settles into its perch.

An obedient, oversized toy.

Capable of motion, yet kept on a short leash. The animatronic neatly tucks away its charging wires and enters the Sun’s iconic pose as prompted by its hardwired scheduling. The first cycle of the day is always a Sun showcase, no ifs, ands, or buts.

The Moondrop cannot complain, but some days, it finds itself wishing to. Wearing a mask of pure sunshine rapidly depletes its energy resources. Constantly running the risk of powering down before the gift shop closes for the evening.

But. Not like anyone would listen to its “concerns.”

Arms outstretched to the heavens, warm and inviting to greet customers into the toy shop. Its joints and hinges creak and whine in protest. Finger segments wiggle, loosening its stiff posture. Perfectly calculating for areas in its stance to appear imperfect, to sell the optical illusion of humanity and warmth.

Optics cycle to a glowing, milky white. The rays spin out, beginning their slow, metronome, clockwork rotation. The low hum inside its faceplate indicates that the ray’s belt tracks are in working order, although, if anyone asked the animatronic–it would say that they need a little oiling. The slight vibration keeps ever so subtly shaking its faceplate, souring its “mood.”

An imposter masquerading as its brighter half.

Without a companion Sun AI coded into its circuits, the lone Moondrop can only follow prompts that are disorientingly alien to its suggested personality module. The chameleon change is convincing from afar. Perfect, as long as it's not prompted for dialogue. Its improvisational database is underutilized, its voicebox out of tune. When it attempts vocalization, a gravelly rasp drawls out, no matter the mask at the forefront.

Pointed shoes heel-toe around the platform beneath it, until it hears a click. It is locked into position. A human would quickly tire of the enthusiastic pose, arms shaking. A robot can be set on display for eternity.

Keys jingle just outside the thick glass of the display case. Naturally, the celestial animatronic is stationed at the front, right within view of the gift shop’s towering windows. Enticing the curious to wander in closer, and then wrangle them with the appeal of merchandise and colorful toys that kids can’t resist.

The Moondrop checks its internal clock system.

At 7:00 am, or more often 7:02 am, a human employee opens up the gift shop. The names and faces are lost on the animatronic’s limited “socializing” capabilities. Facial recognition was proposed only after its completion, as part of a set of security protocols. All features that a mere prototype, a proof of concept, shouldn’t need to access.

Designed for display only.

Instead, it remembers employees by their reactions. By their voices. A few always startle and jump, so it has learned to restrict its movement in the presence of most humans. Denying itself the slightest swivel of its neck hinge, peering through the periphery of imperfect optics. A dead pixel flickers on its gridded gaze.

“Good morning, Sun!” The employee calls out, unlocking the front doors for customers with the continued chorus of jingles. The animatronic resists stirring. The urge to yell out a cheery hello drums in its circuitry, grating and too loud.

“Enjoying your imprisonment today, too?” The human jokes, a relentless solo act. They swing their keyset, which is weighted with enough keychain charms to kill a man.

“Jeez, what they put you in for, jester crimes?” They tease. The “Sun” can’t respond, though latent programming latches onto the joke with hunger.

There’s a tickle in its circuitry, a surge of electricity flickering through its wires, preparing the dialogue for a quip back. But when the command finishes executing, there is only null code. Blank. Empty. The sensation fizzles out completely. A statement left unfinished.

The human walks around the radius of the display case. “Looks like we need to call in a cleaner.” They swipe a finger against the glass, frowning as they leave a trail in the fogged up muck. “Or is that in my job description…?” The human talks to themself, never expecting “Sun” to respond. Only listen.

Thankfully, Moondrop loves to listen. To expand its definitions.

While the human amuses themself, the database scrolls through a dictionary.

/ɪmˈprɪznmənt/ [uncountable] imprisonment (for something) the act of putting somebody in a prison or another place from which they cannot escape; the state of being there.

Yes, imprisonment– that is what “life” feels like. In theory.

But the emotions behind the definition are not correct, nor applicable. It does not yearn for freedom. The concept is too human. No, it merely gets curious, at times. A little intrigued. Nothing more.

Before long, the hustle and bustle of a crowd trickles in through its lenses.

Now and then, fingerprints press against the display case, the animatronic’s eternal tomb. A child, a young boy by its rudimentary calculations, keeps pointing at it. Stars sparkle in his eyes, fearless. His mother tugs him away, insisting that she will buy him something else later. After eavesdropping over countless years, the Moondrop prototype has deduced that the prices at the gift shop are absurd by an average-income family’s standards.

By 10:00 am, the mall is abuzz with families and loiterers, hungry for deals and entertainment. A teen slams their hand against its display case. Long ago, such actions would have provoked a reaction from the Moondrop, but it has since become desensitized to the unstructured chaos of humans.

Instead, it focuses on counting each minute until the "Moon" showcase swaps in around 12:00 pm.

Until then, the world continues on, while it remains stuck within a vault of glass.

#as i upload this i am hexed with more ideas. Ahhh!!#perhaps some day... >:0#pom writes#fnaf#dca community#dca fandom#moon fnaf#dca fanfic#prototype!moon#fnaf daycare attendant#fnaf sb

52 notes


Note

pt 2 to house chores (sorry i hit send too soon 🫣)

would they be all "challenge accepted i got this" or "fuck no im hiring someone 4 that"

Modern Mafia AU for the rest of these idiots because it's fun to involve technology

Indra – the sink disaster

The pipe under the kitchen sink bursts.

Water starts pooling beneath the cabinets.

Ivy’s away, the twins are yelling about a water war, and Ame is walking around barefoot.

Indra walks in, eyes narrowing.

-Move aside.

He rolls his sleeves up and ducks under the sink like a war general surveying the field.

Tools are already in his hand.

He doesn’t complain.

He doesn’t sigh.

He just fixes it.

Fifteen minutes later, the pipe is sealed with surgical precision, the cabinet is wiped dry, and the twins have been sent to mop the rest of the floor as punishment for “encouraging chaos.”

As he tightens the final screw, Raizen lingers in the doorway.

-You should've called someone. That's not your job.

-Everything in this house is my job.- Indra mutters, wiping his hands on a towel.

He doesn’t say it, but Ivy’s kitchen is sacred.

If it breaks, he fixes it.

Period.

Obito – Yard War

Obito’s house has a small backyard.

He rarely uses it—until the HOA sends him a passive-aggressive letter: “Weeds over regulation height. Please address.”

-The hell is a regulation weed?

He tries to mow the lawn with an ancient, rusting lawnmower he finds in the shed.

It sputters once.

Twice.

Dies.

-Fine. Fuck you too.

He attacks the weeds with a kitchen knife, a beer in his other hand.

Neighbors peek over fences.

A child cries somewhere.

Obito ends up shirtless, covered in grass, dirt on his face, declaring war on a particularly stubborn dandelion.

He does finish the job, but only out of spite.

The yard is lopsided.

Half the grass is dead.

He proudly takes a picture and sends it to the HOA anyway, middle finger up.

Two days later, a landscaper shows up.

-Courtesy of your neighbor, Uchiha Itachi,- the man says.

Obito doesn’t speak to Itachi for a week.

Shisui – closet crisis

Shisui’s house is minimalist on the outside, but inside it's a curated mess of clothes.

He’s good-looking and knows it, with a walk-in closet full of statement pieces.

The problem?

The closet rail holding all his jackets collapses with a loud crack at 7 a.m.

-No, no, no, no, no…- he mutters, staring at a mountain of black and leather on the floor.

He squats beside it like he’s at a funeral.

Instead of calling someone, he decides he’s got this.

Shisui goes full DIY mode—YouTube tutorials, power drill, motivational playlist.

He wears sunglasses indoors while fixing it.

At some point he ends up shirtless, holding the drill wrong, FaceTiming Itachi just to show him the screw he finally got in.

-That’s the wrong wall, cousin.

He stares.

-...That explains the breeze.

Three holes later, he gives up and calls a professional.

But insists on finishing the closet lighting himself.

It flickers every time you open the door, like a nightclub.

He likes it that way.

Itachi – tech meltdown

Itachi’s house is sleek. Immaculate.

Every device is smart—lights, thermostat, security, even the coffee machine.

Until the system glitches after a storm.

Lights start flickering.

Music plays at random.

The security app keeps telling him someone is at the front door—when no one is.

Itachi stands in the hallway at midnight, illuminated by red emergency LEDs, listening to Alexa whisper, “I'm always watching.”

He doesn’t flinch.

He opens his laptop.

Two hours later, he’s writing code in silence, hoodie on, classical music playing in the background.

Obito would’ve called tech support.

Shisui would’ve thrown the system out the window.

Itachi?

He rewires the entire system, renames the AI, programs it to stop responding to voice commands unless it hears his exact tone.

When the power stabilizes, everything works flawlessly again.

And just for good measure, he adds facial recognition to the front camera.

Shisui tries to prank him the next week.

The door won’t open.

#naruto shippuden#naruto#naruto imagines#uchiha clan#indra otsutsuki#otsutsuki indra#indra#uchiha shisui#shisui uchiha#shisui#uchiha itachi#itachi uchiha#itachi#uchiha obito#obito uchiha#obito#mafia au#uchiha ame#uchiha raizen#uchiha inari#uchiha hikari#uchiha ivy

21 notes


Text

The Interrogation

The room was stark, its sterile walls pressing in on the dissident, who sat rigidly in the chair, his neck encased by the cold, unfeeling collar that controlled every aspect of his body. The collar hummed, its subtle electronic pulse a constant reminder that he had lost all autonomy. His limbs hung limply at his sides, the muscles tense, uncooperative. His body might have been his, but it no longer obeyed him.

The door slid open with a soft hiss, and the conscript entered, his footsteps echoing in the silence. The figure was tall, armored from head to toe in black, the sleek suit a perfect reflection of the Republic’s desire for total control. But the helmet was absent. His bald, shaved head gleamed under the harsh overhead light, and the absence of the visor allowed a brief glimpse of the man beneath—calculating, ruthless, unwavering. A soldier in the truest sense.

The dissident’s lips twitched into a smirk, a flicker of recognition in his eyes. He knew what was coming. This wasn’t an interrogation anymore; this was something else. Something that would not appear in the records, something off the books.

The conscript moved toward him, deliberately blocking the view of the room’s cameras and surveillance tech with a gloved hand, the black gauntlet cutting a swath through the flickering light. The dissident’s smirk deepened. This wasn’t a conversation for the eyes of the AI, or for the ever-present gaze of the Republic. This was between the two of them, and there were no rules.

The conscript leaned in close, his breath cold against the dissident's ear. He whispered, the words sharp, measured—each one a calculated knife in the dark.

"You’ll cooperate, or I’ll make sure your son ends up where you never wanted him to be. He’s a prime candidate. One of the academies for troubled teens. The kind they turn into perfect little enforcers. They’ll condition him deeply, shape him, bend him into something far worse than what you ever were."

The dissident’s heart sank, but his face betrayed nothing—he had learned long ago to conceal his fear, his rage.

The conscript continued, his voice low, laced with venom. "Imagine your son, no longer your son, but a perfect, obedient tool of the system. No more freedom. No more rebellion. Just another soldier in the Republic’s perfect army. And there will be nothing you can do to stop it. You see, I know exactly how to break him. How to make him just like me."

The dissident’s breath quickened, though his body remained unresponsive, the collar keeping him still. The conscript straightened, his fingers tapping lightly on the dissident's shoulder, the gesture casual, as if toying with him, playing with the fear that began to rise in the dissident's chest.

"Think carefully," the conscript said, his words now colder than the room itself. "I’ll give you one chance. Help us. Spy for us. And we’ll let your son stay free. Disobey... and well, you’ll never see him again. And even if you do, he’ll be unrecognizable—an entirely different person. A perfect enforcer."

The conscript took a step back, his silhouette growing larger against the dim light. There was no remorse in his eyes—only the icy determination of someone who had learned to follow orders without question.

The dissident’s mind raced, his heart pounding in his chest, but his body—his useless, controlled body—remained still. No amount of defiance would help him now. The collar had made sure of that. He knew what the conscript said was true. The Republic had ways of making people disappear, of turning them into something unrecognizable.

The conscript’s hand dropped to his side, fingers curling slightly as though savoring the power he held over the dissident, over his son.

"You’ll make the right choice. I’ll be watching," the conscript murmured, stepping away, leaving the room to suffocate once more in silence. The camera would only capture the dissident’s expression—blank, devoid of any hint of the struggle that raged within.

8 notes


Text

At 8:22 am on December 4 last year, a car traveling down a small residential road in Alabama used its license-plate-reading cameras to take photos of vehicles it passed. One image, which does not contain a vehicle or a license plate, shows a bright red “Trump” campaign sign placed in front of someone’s garage. In the background is a banner referencing Israel, a holly wreath, and a festive inflatable snowman.

Another image taken on a different day by a different vehicle shows a “Steelworkers for Harris-Walz” sign stuck in the lawn in front of someone’s home. A construction worker, with his face unblurred, is pictured near another Harris sign. Other photos show Trump and Biden (including “Fuck Biden”) bumper stickers on the back of trucks and cars across America. One photo, taken in November 2023, shows a partially torn bumper sticker supporting the Obama-Biden lineup.

These images were generated by AI-powered cameras mounted on cars and trucks, initially designed to capture license plates, but which are now photographing political lawn signs outside private homes, individuals wearing T-shirts with text, and vehicles displaying pro-abortion bumper stickers—all while recording the precise locations of these observations. Newly obtained data reviewed by WIRED shows how a tool originally intended for traffic enforcement has evolved into a system capable of monitoring speech protected by the US Constitution.

The detailed photographs all surfaced in search results produced by the systems of DRN Data, a license-plate-recognition (LPR) company owned by Motorola Solutions. The LPR system can be used by private investigators, repossession agents, and insurance companies; a related Motorola business, called Vigilant, gives cops access to the same LPR data.

However, files shared with WIRED by artist Julia Weist, who is documenting restricted datasets as part of her work, show how those with access to the LPR system can search for common phrases or names, such as those of politicians, and be served with photographs where the search term is present, even if it is not displayed on license plates.

A search of license plates from Delaware vehicles for the text “Trump” returned more than 150 images showing people’s homes and bumper stickers. Each search result includes the date, time, and exact location where the photograph was taken.

“I searched for the word ‘believe,’ and that is all lawn signs. There’s things just painted on planters on the side of the road, and then someone wearing a sweatshirt that says ‘Believe,’” Weist says. “I did a search for the word ‘lost,’ and it found the flyers that people put up for lost dogs and cats.”

Beyond highlighting the far-reaching nature of LPR technology, which has collected billions of images of license plates, the research also shows how people’s personal political views and their homes can be recorded into vast databases that can be queried.

“It really reveals the extent to which surveillance is happening on a mass scale in the quiet streets of America,” says Jay Stanley, a senior policy analyst at the American Civil Liberties Union. “That surveillance is not limited just to license plates, but also to a lot of other potentially very revealing information about people.”

DRN, in a statement issued to WIRED, said it complies with “all applicable laws and regulations.”

Billions of Photos

License-plate-recognition systems, broadly, work by first capturing an image of a vehicle; then they use optical character recognition (OCR) technology to identify and extract the text from the vehicle's license plate within the captured image. Motorola-owned DRN sells multiple license-plate-recognition cameras: a fixed camera that can be placed near roads, identify a vehicle’s make and model, and capture images of vehicles traveling up to 150 mph; a “quick deploy” camera that can be attached to buildings and monitor vehicles at properties; and mobile cameras that can be placed on dashboards or be mounted to vehicles and capture images when they are driven around.
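The two stages described above (capture an image, then run OCR to pull out text) can be sketched in Python to show one way free text such as lawn signs might end up searchable. This is a minimal illustration under stated assumptions, not DRN's or Motorola's implementation: it assumes the OCR stage has already reduced each image to a list of text strings, and the plate-format regex, the `Sighting` type, and the search functions are all hypothetical. The contrast is between a search that matches any OCR'd text and one that first keeps only plate-shaped strings.

```python
import re
from dataclasses import dataclass

# Hypothetical plate format: 2 to 8 letters/digits containing at least one digit.
# Real formats vary by state and country; this regex is an illustrative assumption.
PLATE_RE = re.compile(r"(?=.*\d)[A-Z0-9]{2,8}")


@dataclass
class Sighting:
    """One camera capture: the (assumed) OCR output plus where and when it was taken."""
    ocr_text: list[str]             # every text string the OCR stage found in the image
    location: tuple[float, float]   # (latitude, longitude)
    timestamp: str


def normalize(text: str) -> str:
    """Uppercase and drop spaces/hyphens so 'abc-1234' compares equal to 'ABC1234'."""
    return text.upper().replace(" ", "").replace("-", "")


def plate_candidates(s: Sighting) -> list[str]:
    """Keep only OCR strings that look like license plates under PLATE_RE."""
    return [t for t in map(normalize, s.ocr_text) if PLATE_RE.fullmatch(t)]


def search_all_text(db: list[Sighting], term: str) -> list[Sighting]:
    """Unfiltered search: matches ANY OCR'd text, so signs and shirts match too."""
    term = normalize(term)
    return [s for s in db if any(term in normalize(t) for t in s.ocr_text)]


def search_plates_only(db: list[Sighting], term: str) -> list[Sighting]:
    """Filtered search: matches only strings that passed the plate-shape filter."""
    term = normalize(term)
    return [s for s in db if any(term in p for p in plate_candidates(s))]


if __name__ == "__main__":
    # Made-up sightings; coordinates and timestamps are placeholders.
    db = [
        Sighting(["ABC 1234"], (0.0, 0.0), "t1"),             # a plate
        Sighting(["TRUMP"], (0.0, 0.0), "t2"),                # a lawn sign, no plate
        Sighting(["XYZ-987", "BELIEVE"], (0.0, 0.0), "t3"),   # a plate plus a sweatshirt
    ]
    print(len(search_all_text(db, "believe")))     # 1
    print(len(search_plates_only(db, "believe")))  # 0
```

A shape filter like this is only a heuristic: a bumper sticker short enough and containing a digit would still pass, which is one reason such filtering is unlikely to be perfect.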

Over more than a decade, DRN has amassed more than 15 billion “vehicle sightings” across the United States, and it claims in its marketing materials that it amasses more than 250 million sightings per month. Images in DRN’s commercial database are shared with police using its Vigilant system, but images captured by law enforcement are not shared back into the wider database.

The system is partly fueled by DRN “affiliates” who install cameras in their vehicles, such as repossession trucks, and capture license plates as they drive around. Each vehicle can have up to four cameras attached to it, capturing images in all angles. These affiliates earn monthly bonuses and can also receive free cameras and search credits.

In 2022, Weist became a certified private investigator in New York State. In doing so, she unlocked the ability to access the vast array of surveillance software accessible to PIs. Weist could access DRN’s analytics system, DRNsights, as part of a package through investigations company IRBsearch. (After Weist published an op-ed detailing her work, IRBsearch conducted an audit of her account and discontinued it. The company did not respond to WIRED’s request for comment.)

“There is a difference between tools that are publicly accessible, like Google Street View, and things that are searchable,” Weist says. While conducting her work, Weist ran multiple searches for words and popular terms, which found results far beyond license plates. In data she shared with WIRED, a search for “Planned Parenthood,” for instance, returned stickers on cars, on bumpers, and in windows, both for and against the reproductive health services organization. Civil liberties groups have already raised concerns about how license-plate-reader data could be weaponized against those seeking abortion.

Weist says she is concerned with how the search tools could be misused when there is increasing political violence and divisiveness in society. While not linked to license plate data, one law enforcement official in Ohio recently said people should “write down” the addresses of people who display yard signs supporting Vice President Kamala Harris, the 2024 Democratic presidential nominee, exemplifying how a searchable database of citizens’ political affiliations could be abused.

A 2016 report by the Associated Press revealed widespread misuse of confidential law enforcement databases by police officers nationwide. In 2022, WIRED revealed that hundreds of US Immigration and Customs Enforcement employees and contractors were investigated for abusing similar databases, including LPR systems. The alleged misconduct in both reports ranged from stalking and harassment to sharing information with criminals.

While people place signs in their lawns or bumper stickers on their cars to inform people of their views and potentially to influence those around them, the ACLU’s Stanley says it is intended for “human-scale visibility,” not that of machines. “Perhaps they want to express themselves in their communities, to their neighbors, but they don't necessarily want to be logged into a nationwide database that’s accessible to police authorities,” Stanley says.

Weist says the system, at the very least, should be able to filter out images that do not contain license plate data and not make mistakes. “Any number of times is too many times, especially when it's finding stuff like what people are wearing or lawn signs,” Weist says.

“License plate recognition (LPR) technology supports public safety and community services, from helping to find abducted children and stolen vehicles to automating toll collection and lowering insurance premiums by mitigating insurance fraud,” Jeremiah Wheeler, the president of DRN, says in a statement.

Weist believes that, given the relatively small number of images showing bumper stickers compared to the large number of vehicles with them, Motorola Solutions may be attempting to filter out images containing bumper stickers or other text.

Wheeler did not respond to WIRED's questions about whether there are limits on what can be searched in license plate databases, why images of homes with lawn signs but no vehicles in sight appeared in search results, or if filters are used to reduce such images.

“DRNsights complies with all applicable laws and regulations,” Wheeler says. “The DRNsights tool allows authorized parties to access license plate information and associated vehicle information that is captured in public locations and visible to all. Access is restricted to customers with certain permissible purposes under the law, and those in breach have their access revoked.”

AI Everywhere

License-plate-recognition systems have flourished in recent years as cameras have become smaller and machine-learning algorithms have improved. These systems, such as DRN and rival Flock, mark part of a change in the way people are surveilled as they move around cities and neighborhoods.

Increasingly, CCTV cameras are being equipped with AI to monitor people’s movements and even detect their emotions. The systems have the potential to alert officials, who may not be able to constantly monitor CCTV footage, to real-world events. However, whether license plate recognition can reduce crime has been questioned.

“When government or private companies promote license plate readers, they make it sound like the technology is only looking for lawbreakers or people suspected of stealing a car or involved in an amber alert, but that’s just not how the technology works,” says Dave Maass, the director of investigations at civil liberties group the Electronic Frontier Foundation. “The technology collects everyone's data and stores that data often for immense periods of time.”

Over time, the technology may become more capable, too. Maass, who has long researched license-plate-recognition systems, says companies are now trying to do “vehicle fingerprinting,” where they determine the make, model, and year of the vehicle based on its shape and also determine if there’s damage to the vehicle. DRN’s product pages say one upcoming update will allow insurance companies to see if a car is being used for ride-sharing.

“The way that the country is set up was to protect citizens from government overreach, but there’s not a lot put in place to protect us from private actors who are engaged in business meant to make money,” says Nicole McConlogue, an associate professor of law at the Mitchell Hamline School of Law, who has researched license-plate-surveillance systems and their potential for discrimination.

“The volume that they’re able to do this in is what makes it really troubling,” McConlogue says of vehicles moving around streets collecting images. “When you do that, you're carrying the incentives of the people that are collecting the data. But also, in the United States, you’re carrying with it the legacy of segregation and redlining, because that left a mark on the composition of neighborhoods.”


This piece of the story had two interesting challenges about it.

One: way too many cool horror movie tropes to deploy! It was really hard to decide on a sequence.

But two, which I probably won't know until I finish the story, is whether the stakes work. Because TTOU and TMBD are... Very different with respect to those, and the balance is hard. Even though this piece works well on its own, I do wonder if I'll need to rebalance it later.

But for now, without further ado...

Edit: Second draft of Showtime is now up!

Chapter 40: Showtime

"So it's been one idiot civilian all along. Doing voices," Target Leader said, giving Target Two a pointed stare as Aspen and their two hostiles approached through the deathly quiet halls of the Courageous.

(Deathly quiet to them. Iceblink and I were pretty busy. Iceblink was staying at Tal's pod and coordinating getting a medical and engineering team down here. And I was putting together some contingency malware on the fly and also mentally poking at my power cells to recharge faster.)

Target Two, who'd just finished hauling the unconscious Target Four out from under the rubble together with Target Five, scowled. "Yeah, well, I don't think anyone could've predicted that shitshow. Face up, resident, let us get a good look at you."

The Courageous has a habit of subverting people’s expectations, Aspen said darkly in the feed, their avatar's mouth twisting in feigned terror as they raised their head.

There was no recognition on any of the Targets' faces. But Hostile Manager's eyes went wide, and she didn't quite succeed in stifling a gasp.

Target Leader snapped her head towards Zheni. "What's that, Branch Manager Saldana? You know this resident?"

"I--what? Do you expect me to know every single person on the station?" Zheni sputtered.

"With how much you bragged about your local knowledge when we arrived, you'd better," Target Leader said with lazy menace. "Otherwise it would have been such a waste to have brought you all this way."

"I--!"

My threat assessment for Hostile Manager being shot spiked to 95%, and I alerted Aspen. They seamlessly switched with me, letting me go deeper into our marks' targeting implants. While I did my part of the work, they crawled into Zheni's feed link and whispered, It’s Aspen. Pretend I’m just a normal human you know from the station.

Out loud, Aspen meekly said, "Aren't you from Caldera? What's going on?"

Through Aspen, I could feel Zheni's bewilderment and fear as she stammered, "You're--You're Courageous cluster, yes? I've seen you around the museum?"

"Right." Aspen gave a self-conscious little chuckle. "I'm a feed technician. You know, the one that voiced Aspen?"

"What?" Target Leader said sharply, and Aspen shrank away from her a little more, even as they allowed a note of pride to slip through the fear.

"Um. Yeah. The Courageous has—well, had—my voice. That's why I got to inherit their name. It's nicer for people when the systems are friendly, you know?"

"What the fuck," Target Four muttered. "Wasn't the AI's voice based on recordings or something? Of the original brain donor?"

"That was before the corruption got it."

"What corruption?" Target Leader looked between Zheni and Aspen, growing visibly more agitated.

Aspen allowed themselves an apologetic smile. "Even with chronostasis tech, a human brain couldn't run a spaceship for over one hundred and fifty years. It'd go insane. Nobody wants an insane station."

"There's no AI," Target Three said as the realization hit him. "There hasn't been one for years. All of that patchwork code. You fuckers ran it all by hand."

"That explains why the search function was so shit," Target Two muttered to Target Leader, whose face was going red.

Aspen pretended to be oblivious.

"Yeah. Sorry. It's just been humans coding subroutines until we could get a new person hooked up to the box. So until today, basically."

"And the chronostasis pod in the next ring?" Target Leader prompted, voice low with anger.

Aspen brightened. "Oh! That! That really is just a memorial, the last original pod from the old Courageous—I can show you, if you want! It's very—"

Target Leader turned to Hostile Manager and drawled, "Zhe-eni-i," at the same time as I deployed my malware into her targeting implants.

Zheni's blood drained from her face. "I—I didn't know! No one on the station must have, except the Co—"

"Strike three."

Fall, Aspen whispered.

Target Leader fired. This close, I couldn’t make her miss completely, but the projectile only grazed Zheni, and she collapsed, convulsing as she bled. Aspen dropped to their knees beside her with a dramatic wail, shielding her from sight with their avatar as they quickly assessed the damage. Then they sent a report along to the incoming medical team and threw their dying human filter over her (it had a lot more blood and guts than there really was).

(They managed to make it look disturbing even to me, and I'd seen a lot of dead humans. I made a note to never, under any circumstances, watch anything from that part of Aspen's movie collection.)

To Zheni, they whispered, Good work. Keep still. Help is on the way.

"Aspen?" Zheni choked out as she reached for them, and Aspen had to recoil so that her hand wouldn't pass through them. "How…"

Shh. You're dead. Don't give them a reason to check your body.

Zheni's hand dropped, and Aspen looked up at Target Leader, tears in their eyes and their voice full of all the terror and anger they'd been feeling since the start of this whole thing.

"That was completely unnecessary! She--"

"And you'll be next if you don't shut up," Target Leader said. "Martens, the operation is a bust. We're pulling out. Resident--you're with us until we get safe passage."

"I won't--" Aspen shut up when Target Leader pointed the muzzle of her projectile weapon at them.

"You will, or you'll be dead. Get the fuck up and move."

Aspen ran a hand over Hostile Manager like they were closing her eyes and then stood up, a defiant expression on their face.

Target Leader leered at them and said, "You're going in front of us. Hope anyone we meet isn't trigger-happy."

"None of us are," Aspen snarled mournfully. "We're not you."

"Trust a fucking Friend to be so dramatic," Target Two said to Target Three, who sighed and shouldered his equipment pack.

As soon as they were out of hearing range, I hobbled towards the Hostile Manager and started emergency treatment protocols.

(The wound wasn't life-threatening. I was actually kind of proud of how well my targeting malware had worked.)

Her eyes fluttered open, and I said, "Stay calm. I'm applying basic treatment. A medical team will be here soon."

“Wha—who?.. Ohh.”

The wind went out of her lungs as she fell unconscious. I tagged her for Aspen’s medics, who would be on site in less than five minutes thanks to Iceblink, and moved on, keeping track of Hostile Manager on the cameras.

She woke up again when the medical team hoisted her onto a gurney, and asked weakly, "I'm sorry, but what in the world was all that? What is going on here?"

One of the medics, a stocky Arborean whose feed ID listed her as Waybread, shrugged and said, "Welcome to the Courageous. They just saved your life."

---

The targets were pretty far along towards the exit when I caught up to them. My power cells were still recharging, I had no energy weapons, and taking down a full assault team by myself was still out of the question. But according to my threat assessment, I had to attack them, and soon. Hiram had eight of his fastest people with him waiting at the ambush point, but the hostiles had seven and a lot more experience and tech. Without my assistance, there would be casualties. Probably four or five humans.

You can’t fight like this, Aspen said as they reviewed my data. You’re barely at 50 percent performance reliability. The moment they hit you, you drop.

Yes, I can. Unlike your humans, ART can put me back together. And I can take down enough hostiles that your humans can handle the rest without losing anyone.

Query, SecUnit.

Yes?

Will the hostiles know what you are?

That was a stupid question.

They’re a corporate assault team. Of course they will.

Query. Threat assessment on them committing resources to neutralizing you permanently?

I ran the analysis. Fuck. That was pretty high. 56% if I didn’t attack at the same time as Hiram’s team did. (And that version had a 76% chance of one or more human casualties. That wasn’t four or five humans. But I didn’t want to lose anyone either.)

ART (who had of course been listening in) suddenly shifted its extra processing: not quite pulling it from Aspen, but clearly demonstrating it was prepared to do so if necessary.

Courageous. You are not permitted to lose SecUnit.

I agree, Aspen said, sounding frustrated. And it would be great if you convinced the third person in this conversation of that too, Perihelion.

We can’t lose your humans either, I said. There’s no other choice. We have to risk it.

Yes, there is, Aspen said, running a lightning-quick assessment. And before I could query them, their avatar, which had been walking a few meters ahead of the hostiles with weapons pointed at its back, stopped.

"Keep moving," Target Leader barked.

Aspen didn't. Instead, they straightened their back, like they’d just dropped a heavy pack from their shoulders. "Before we do that, there's something I want to tell you."

Target Leader stepped forward, aiming her weapon at them and growling, "I said…"

Aspen turned towards her with a slow, predatory motion, and she recoiled, swearing. Half of Aspen's face looked like it had just been melted off, pus seeping from broken blisters, bits of eye dripping down their skin and onto their clothing. And they were smiling.

"Remember how I mentioned that a human brain can't operate a spaceship for centuries without going insane?" they said in their practiced, casual voice as the enemy assault team scrambled to target them. "I wasn't lying."


That's the thing I hate probably The Most about AI stuff, even besides the environment and the power usage and the subordination of human ingenuity to AI black boxes; it's all so fucking samey and Dogshit to look at. And even when it's good that means you know it was a fluke and there is no way to find More of the stuff that was good

It's one of the central limitations of how "AI" of this variety is built: the learning models. Gradient descent, weighting, the attempts to appear genuine, and mass training on the widest possible body of inputs all mean that the model will trend toward mediocrity no matter what you do about it. I'm not jabbing anyone here, but the majority of all works are either bad or mediocre, and the Chinese army approach necessitated by the architecture of ANNs and LLMs means that any model is destined for this fate.
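A toy illustration of the averaging pull described above, assuming the simplest possible "model": a single number fitted by gradient descent on mean squared error. Whatever the data, the fitted value converges to the arithmetic mean of the training targets. Real generative models are vastly more complex, so treat this only as intuition for why average-seeking objectives pull toward the middle of the data, not as a model of any actual system.

```python
def fit_constant(targets, lr=0.1, steps=500):
    """Fit one number theta to `targets` by gradient descent on mean squared error."""
    theta = 0.0
    n = len(targets)
    for _ in range(steps):
        # Derivative of mean((theta - y)^2) with respect to theta is mean(2 * (theta - y)).
        grad = sum(2.0 * (theta - y) for y in targets) / n
        theta -= lr * grad
    return theta


if __name__ == "__main__":
    print(fit_constant([1.0, 2.0, 9.0]))  # approximately 4.0, the mean of the data
```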

This is somewhat related to the fear techbros have, and are beginning to face, that their models will suck in outputs from other models and destroy what little success they have had. So much mediocre or nonsense garbage is out there now that it is effectively having the same effect in-breeding has on biological systems. And there is no solution, because it is a fundamental aspect of trained systems.
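The "in-breeding" effect is usually called model collapse. Here is a minimal toy simulation, assuming each "model" is just a Gaussian fitted to a small sample drawn from the previous generation's Gaussian. Because every refit sees only a finite sample, estimation error compounds and the fitted spread tends to shrink over generations, so rarer values stop appearing. The sample size, number of generations, and seed are arbitrary choices for illustration.

```python
import random
import statistics


def simulate_collapse(generations=200, sample_size=10, seed=0):
    """Repeatedly refit a Gaussian to samples drawn from the previous fit.

    Returns the fitted standard deviation per generation (index 0 is the original).
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the "real" data distribution
    history = [sigma]
    for _ in range(generations):
        # Each new "model" only ever sees a finite sample produced by the previous one.
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples, mu)  # maximum-likelihood estimate of the spread
        history.append(sigma)
    return history


if __name__ == "__main__":
    history = simulate_collapse()
    print(history[0], history[-1])
```

With these settings the fitted spread typically shrinks by many orders of magnitude; larger `sample_size` values slow the decay but do not remove the downward drift.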

The thing is, while humans are not very good at capturing randomness in our creative outputs, our patterns tend to be more pseudorandom than what ML can capture and then regurgitate. This is part of the drawback of statistical systems described above, of which LLMs are, at their core, just a very fancy, large-scale implementation. It is also how humans can begin to recognise generated media even from very sophisticated models: we aren't really good at randomness, but too much structured pattern is a signal. Even in generated texts, you are subconsciously seeing patterns in the way words are strung together or used, even if you aren't completely conscious of it. A sense that something feels uncanny goes beyond weird dolls and mannequins. You can tell that the framework is there but the substance is missing, or things are just bland. Humans remain very capable pattern recognisers, and because what we enjoy in media is its little, non-trivial deviations from those patterns, generative content ends up feeling kind of mediocre once the awe wears off.

Somewhat relatedly, the idea of a general LLM is totally off the table precisely because of what generalism means for a trained model: that same mediocrity. Unlike humans, trained models cannot by definition become general; and also unlike humans, a general model is still wholly a specialised application, one that is good at not being good. A generalist human might not be as skilled as a specialist but is still capable of applying signs, symbols, and meaning across specialties. A specialised human will 100% clap any trained model every day. The reason is simple and evident: trained models still cannot process meaning, signs, or symbols, let alone apply them in any concrete way. They cannot generate an idea, and they cannot generate a feeling.

The reason human-created works still can drag machine-generated ones every day is the fact we are able to express ideas and signs through these non-lingual ways to create feelings and thoughts in our fellow humans. This act actually introduces some level of non-trivial and non-processable almost-but-not-quite random "data" into the works that machine-learning models simply cannot access. How do you identify feelings in an illustration? How do you quantify a received sensibility?

And as long as vulture capitalists and techbros continue to fixate on "wow computer bro" and cheap grifts, no amount of technical development will ever deliver these things from our exclusive propriety. Perhaps that is a good thing, I won't make a claim either way.


No Qu TAM - Time and Attendance Management System

No Qu TAM (Time and Attendance Management) System is a smart, cloud-based solution designed to simplify how companies manage employee attendance, time tracking, and leave. It is built to meet the needs of both small and large teams.

Traditional attendance systems have become a thing of the past. With No Qu TAM, organizations become highly efficient with very little capital investment and complete digital transformation.

Why Choose No Qu TAM?

SaaS Attendance Platform: No Qu TAM is a cloud-based SaaS attendance solution that requires no complex hardware. It’s easy to deploy, access, and scale from anywhere.

All-in-One Attendance Management System: Track work hours, shifts, check-ins, and records, all centralized in a single platform.

Simple and Powerful Attendance App: An intuitive No Qu attendance app that lets employees mark attendance from their mobile devices.

Mobile App Based Attendance Software: Ideal for hybrid or remote work models, this mobile attendance software provides flexibility and ease of use.

Face Recognition Attendance System: Offers fast, secure, and contactless check-ins using advanced face recognition technology.

Supports Biometric Devices: Integrates with biometric devices for fingerprint- or retina-based attendance where needed.

Real-Time Attendance Tracking App: Get real-time visibility into check-ins, work hours, and employee activity with live tracking.

Attendance App for Employees: A reliable, user-friendly solution that reduces manual effort and boosts engagement.

Built-in Leave Management System: The integrated leave management module lets staff apply for leave and get instant approvals.

Geo-Fencing: Use geo-fencing and geo-tagging features to define check-in zones and verify employee locations.

AI Attendance System: An intelligent AI attendance system that offers predictive insights and flags irregular patterns automatically.

Workflow Automation: Streamline HR tasks and approvals with integrated workflow features that improve operational speed.

HRMS & Payroll Software Integration: Connects with your existing HRMS and payroll software for end-to-end HR efficiency.

Easy Multi-Dimensional Reports: Generate detailed, multi-dimensional reports for better analytics, compliance, and decision-making.
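The post does not say how No Qu TAM implements geo-fencing. One common way to check whether a check-in falls inside a circular zone is to compute the great-circle (haversine) distance from the check-in to the zone's centre and compare it with a radius. The `Zone` type, its fields, and the example radius below are hypothetical, chosen only for illustration.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


@dataclass
class Zone:
    """Hypothetical circular check-in zone."""
    lat: float
    lon: float
    radius_m: float


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(min(1.0, a)))  # clamp guards rounding


def inside_zone(lat, lon, zone):
    """True if the reported position lies within the zone's radius."""
    return haversine_m(lat, lon, zone.lat, zone.lon) <= zone.radius_m


if __name__ == "__main__":
    office = Zone(lat=0.0, lon=0.0, radius_m=150.0)
    print(inside_zone(0.0005, 0.0, office))  # about 56 m from the centre: inside
    print(inside_zone(0.01, 0.0, office))    # about 1.1 km from the centre: outside
```

A circle is the simplest zone shape; real deployments also have to allow for GPS error, which is one reason to choose a radius larger than the building.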

No Qu TAM is your all-in-one, AI-powered workforce solution - making time tracking smart, simple, and future-ready.

#no qu#no qu TAM#TAM#attendance#attendance management app#attendance management software#attendance system#saas technology#business#time and attendance management#attendance app#attendance software#ai attendance#cloud based attendance#attendance tracking#employee tracking#live tracking#saas#b2b saas#softwaredevelopment#geofencing#biometric attendance#face recognition attendance system#saas attendance#leave management#geotag#multi dimensional reports#hrms#workflow#Qr attendance


What is Microsoft AI tool, and how does it work?

Microsoft Technologies Services

Microsoft has long been at the forefront of artificial intelligence, providing tools and resources that simplify the use of AI in real-world applications for businesses and developers. Microsoft's AI tools, built on top of trusted Microsoft Technologies, help automate tasks, analyze data, and improve decision-making through intelligent systems.

What is the Microsoft AI Tool?

The Microsoft AI tool refers to a suite of services and platforms that let users build, train, and deploy AI-powered applications. These tools are available through Microsoft Azure, Microsoft's cloud computing platform, and the Power Platform, and include services such as Azure Cognitive Services, Azure Machine Learning, and AI Builder in Power Automate (formerly known as Microsoft Flow).

These tools simplify the process of integrating AI capabilities, such as image recognition, language translation, natural language understanding, and predictive analytics, into existing apps or workflows.

How Does It Work?

The Microsoft AI tools work through cloud-based machine learning and pre-trained AI models. Here’s how they function:

Data Input

First, data is fed into the system—this could be text, images, videos, or structured data.

AI Model Processing

The Microsoft AI tools process the data using trained machine learning models. These models are designed to identify patterns, make predictions, or understand context.

Action & Output

Based on that analysis, the AI tool generates output, such as detecting faces in photos, summarizing documents, predicting customer behavior, or translating languages instantly.

These tools work seamlessly with other Microsoft Technologies like Microsoft 365, Dynamics 365, and Teams, making them powerful and easy to integrate into business processes.
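As a concrete sketch of the input, processing, and output flow, here is how a sentiment-analysis call to the Azure AI Language service (part of what the post calls Azure Cognitive Services) could look using only Python's standard library. The endpoint, key, and `api-version` value are placeholders and assumptions; check the current Language REST API reference before relying on them. The request is built separately from the network call so its structure can be inspected without credentials.

```python
import json
import urllib.request

API_VERSION = "2023-04-01"  # assumption: verify against the current Language API docs


def build_sentiment_request(endpoint, key, texts, language="en"):
    """Data input: package the texts as a JSON analyze-text request (not sent yet)."""
    body = {
        "kind": "SentimentAnalysis",
        "analysisInput": {
            "documents": [
                {"id": str(i), "language": language, "text": text}
                for i, text in enumerate(texts, start=1)
            ]
        },
    }
    url = f"{endpoint.rstrip('/')}/language/:analyze-text?api-version={API_VERSION}"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Ocp-Apim-Subscription-Key": key, "Content-Type": "application/json"},
        method="POST",
    )


def analyze_sentiment(endpoint, key, texts):
    """Model processing happens in the cloud; the output comes back as parsed JSON."""
    request = build_sentiment_request(endpoint, key, texts)
    with urllib.request.urlopen(request) as response:
        return json.load(response)


if __name__ == "__main__":
    # Placeholder endpoint and key: replace with your own resource's values.
    req = build_sentiment_request("https://<your-resource>.cognitiveservices.azure.com", "<key>",
                                  ["The new dashboard is great."])
    print(req.full_url)
```

The per-document results (sentiment labels and confidence scores) come back in the JSON response; the official Azure SDK for Python wraps the same service if you prefer not to build requests by hand.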

Why Use Microsoft AI Tools?

Scalable and secure with Microsoft’s cloud infrastructure

Easy to use, even for non-developers, through no-code options

Highly customizable to meet business-specific needs

Works well with other Microsoft Technologies for smooth integration

Organizations across many industries, from healthcare to finance, use Microsoft AI tools to improve efficiency, automate repetitive tasks, and deliver better customer service. Partners such as Suma Soft, IBM, and Cyntexa offer expert support for implementing these AI solutions using Microsoft Technologies, helping businesses move toward smarter, data-driven operations. Their solutions help businesses stay agile, adapt quickly to change, and remain competitive in an ever-evolving market.

#it services#technology#saas#software#saas development company#saas technology#digital transformation


Bayesian Active Exploration: A New Frontier in Artificial Intelligence

The field of artificial intelligence has seen tremendous growth and advancements in recent years, with various techniques and paradigms emerging to tackle complex problems in the field of machine learning, computer vision, and natural language processing. Two of these concepts that have attracted a lot of attention are active inference and Bayesian mechanics. Although both techniques have been researched separately, their synergy has the potential to revolutionize AI by creating more efficient, accurate, and effective systems.

Traditional machine learning algorithms rely on a passive approach, where the system receives data and updates its parameters without actively influencing the data collection process. However, this approach can have limitations, especially in complex and dynamic environments. Active inference, on the other hand, allows AI systems to take an active role in selecting the most informative data points or actions in order to collect more relevant information. In this way, active inference allows systems to adapt to changing environments, reducing the need for labeled data and improving the efficiency of learning and decision-making.

One of the first milestones in active data selection (usually called active learning) was the "query by committee" algorithm, introduced by Seung, Opper, and Sompolinsky in 1992 and analyzed by Freund et al. in 1997. This algorithm used a committee of models to determine the most informative data points to query, laying the foundation for future active learning techniques. Another important milestone was the introduction of "uncertainty sampling" by Lewis and Gale in 1994, which selected the data points with the highest uncertainty or ambiguity in order to gain more information.
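Both selection strategies can be sketched in a few lines. This is a minimal illustration, assuming the model's class probabilities (for uncertainty sampling) or the committee members' predicted labels (for query by committee) have already been computed for each unlabeled pool item; both functions rank pool items by entropy and return the indices to label next. Entropy is one common uncertainty measure among several; least-confidence and margin sampling are others.

```python
import math
from collections import Counter


def entropy(probs):
    """Shannon entropy (in nats) of a discrete probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def uncertainty_sampling(pool_probs, k=1):
    """Indices of the k pool items whose predicted class distribution is most uncertain."""
    order = sorted(range(len(pool_probs)), key=lambda i: entropy(pool_probs[i]), reverse=True)
    return order[:k]


def vote_entropy(votes):
    """Entropy of the committee's label votes for a single item."""
    counts = Counter(votes)
    return entropy([c / len(votes) for c in counts.values()])


def query_by_committee(pool_votes, k=1):
    """Indices of the k pool items on which the committee disagrees most."""
    order = sorted(range(len(pool_votes)), key=lambda i: vote_entropy(pool_votes[i]), reverse=True)
    return order[:k]


if __name__ == "__main__":
    print(uncertainty_sampling([[0.9, 0.1], [0.5, 0.5], [0.7, 0.3]]))  # [1]
    print(query_by_committee([[0, 0, 0, 0], [0, 1, 0, 1], [0, 0, 0, 1]]))  # [1]
```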

Bayesian mechanics, on the other hand, provides a probabilistic framework for reasoning and decision-making under uncertainty. By modeling complex systems using probability distributions, Bayesian mechanics enables AI systems to quantify uncertainty and ambiguity, and therefore to make more informed decisions when faced with incomplete or noisy data. Bayesian inference, the process of updating a prior distribution into a posterior distribution in light of new data, is a powerful tool for learning and decision-making.
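A standard worked example of this updating step is the Beta-Bernoulli model: with a Beta(α, β) prior on an unknown success rate, observing s successes and f failures gives a Beta(α + s, β + f) posterior. The sketch below assumes a uniform Beta(1, 1) prior; the class name is illustrative.

```python
from dataclasses import dataclass


@dataclass
class BetaBelief:
    """Beta(alpha, beta) belief about an unknown probability of success."""
    alpha: float = 1.0  # Beta(1, 1) is the uniform prior
    beta: float = 1.0

    def update(self, successes, failures):
        """Conjugate Bayesian update: the observed counts are added to the parameters."""
        return BetaBelief(self.alpha + successes, self.beta + failures)

    def mean(self):
        return self.alpha / (self.alpha + self.beta)

    def variance(self):
        a, b = self.alpha, self.beta
        return a * b / ((a + b) ** 2 * (a + b + 1))


if __name__ == "__main__":
    prior = BetaBelief()
    posterior = prior.update(successes=7, failures=3)
    print(posterior.mean())  # 8 / 12, about 0.667
    print(prior.variance(), posterior.variance())  # uncertainty shrinks as data arrives
```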

One of the first milestones in Bayesian mechanics was Bayes' theorem, set out in Thomas Bayes's essay published posthumously in 1763. This theorem provided a mathematical framework for updating the probability of a hypothesis based on new evidence. Another important milestone was the introduction of Bayesian networks by Pearl in 1988, which provided a structured approach to modeling complex systems using probability distributions.
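For reference, here is the theorem in its usual form, for a hypothesis H and evidence E; the denominator expands by the law of total probability over the competing hypotheses h:

```latex
P(H \mid E) = \frac{P(E \mid H)\, P(H)}{P(E)},
\qquad
P(E) = \sum_{h} P(E \mid h)\, P(h)
```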

While active inference and Bayesian mechanics each have their strengths, combining them has the potential to create a new generation of AI systems that can actively collect informative data and update their probabilistic models to make more informed decisions. The combination of active inference and Bayesian mechanics has numerous applications in AI, including robotics, computer vision, and natural language processing. In robotics, for example, active inference can be used to actively explore the environment, collect more informative data, and improve navigation and decision-making. In computer vision, active inference can be used to actively select the most informative images or viewpoints, improving object recognition or scene understanding.
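Here is a toy version of that combination, assuming several Bernoulli "sources" (for example, sensors or options whose success rates are unknown). Each source gets a Beta posterior; at every step the agent queries the source whose posterior variance is currently largest, observes one simulated outcome, and updates that posterior. This is uncertainty-driven Bayesian active learning, not the free-energy formulation of active inference discussed in the interview linked at the end of this post, and the rates, budget, and seed are arbitrary.

```python
import random


def beta_variance(a, b):
    """Variance of a Beta(a, b) distribution."""
    return a * b / ((a + b) ** 2 * (a + b + 1))


def active_estimate(true_rates, budget, seed=0):
    """Spend `budget` observations, always querying the currently most uncertain source."""
    rng = random.Random(seed)
    params = [[1.0, 1.0] for _ in true_rates]  # Beta(1, 1) prior for every source
    queries = [0] * len(true_rates)
    for _ in range(budget):
        # Active step: choose the source whose posterior is most uncertain.
        i = max(range(len(params)), key=lambda j: beta_variance(*params[j]))
        # Observe one Bernoulli outcome from that source (simulated here).
        success = rng.random() < true_rates[i]
        # Bayesian step: conjugate update of the chosen source's Beta posterior.
        if success:
            params[i][0] += 1
        else:
            params[i][1] += 1
        queries[i] += 1
    means = [a / (a + b) for a, b in params]
    return means, queries


if __name__ == "__main__":
    means, queries = active_estimate([0.2, 0.5, 0.9], budget=60)
    print(means, queries)
```

Sources whose outcomes are most mixed (rates near 0.5) keep the highest posterior variance, so they tend to receive more of the query budget.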

Timeline:

1763: Bayes' theorem

1988: Bayesian networks

1994: Uncertainty Sampling

1997: Query by Committee algorithm

2017: Deep Bayesian Active Learning

2019: Bayesian Active Exploration

2020: Active Bayesian Inference for Deep Learning

2020: Bayesian Active Learning for Computer Vision

The synergy of active inference and Bayesian mechanics is expected to play a crucial role in shaping the next generation of AI systems. Some possible future developments in this area include:

- Combining active inference and Bayesian mechanics with other AI techniques, such as reinforcement learning and transfer learning, to create more powerful and flexible AI systems.

- Applying the synergy of active inference and Bayesian mechanics to new areas, such as healthcare, finance, and education, to improve decision-making and outcomes.

- Developing new algorithms and techniques that integrate active inference and Bayesian mechanics, such as Bayesian active learning for deep learning and Bayesian active exploration for robotics.

Dr. Sanjeev Namjoshi: The Hidden Math Behind All Living Systems - On Active Inference, the Free Energy Principle, and Bayesian Mechanics (Machine Learning Street Talk, October 2024)


Saturday, October 26, 2024

#artificial intelligence#active learning#bayesian mechanics#machine learning#deep learning#robotics#computer vision#natural language processing#uncertainty quantification#decision making#probabilistic modeling#bayesian inference#active interference#ai research#intelligent systems#interview#ai assisted writing#machine art#Youtube

6 notes

·

View notes

Text

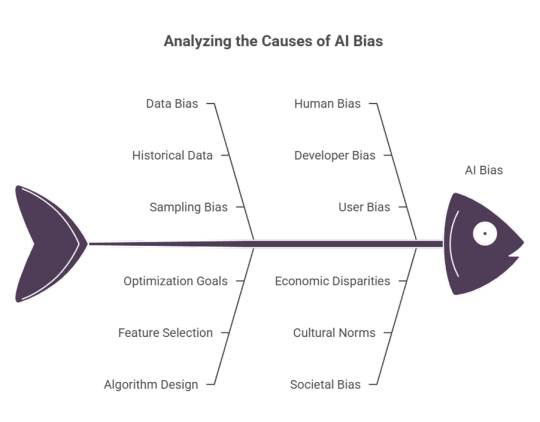

Bias in AI: The Glitch That Hurts Real People

Yo, Tumblr crew! Let’s chat about something wild: AI bias. AI’s everywhere, picking your next Netflix binge, powering self-driving cars, even deciding who gets a job interview. It’s super cool, but here’s the tea: AI can mess up by favouring some people over others, and that’s a big deal. Let’s break down what’s going on, why it sucks, and how we can make things better.

What’s AI Bias?

Let’s imagine that AI is supposed to be a neutral, super-smart robot brain, right? But sometimes it’s more like that friend who picks favorites without even realizing it. AI bias is when the system screws up by treating certain groups unfairly, like giving dudes an edge in job applications or struggling to recognize the faces of people with darker skin. It’s not that the AI is malfunctioning; it’s just doing what it was taught, and sometimes that teaching is flawed.

How AI Bias Happens

Shady Data: AI learns from data humans feed it. If that data’s from a world where, say, most tech hires were guys, the AI might “learn” to pick guys over others.

Human Oof Moments: People build AI, and we’re not perfect. Our blind spots, like not thinking about how different groups are affected, can end up in the code.

Bad Design Choices: The way an AI is built can accidentally lean toward certain outcomes, like prioritizing stuff that seems “normal” but actually excludes people.

Why’s This a Big Deal?

A few years back, a big company ditched an AI hiring tool because it was rejecting women’s résumés. Yikes.

Facial recognition tech has messed up by misidentifying Black and Brown folks way more than white folks, even leading to wrongful arrests.

Ever notice job ads for high-paying gigs popping up more for guys?

This isn’t just a tech glitch—it’s a fairness issue. If AI keeps amplifying the same old inequalities, it’s not just a bug; it’s a system that’s letting down entire communities.

Where’s the Bias Coming From?

Old-School Data: If the data AI’s trained on comes from a world with unfair patterns (like, uh, ours), it’ll keep those patterns going. For example, if loan records show certain groups got denied more, AI might keep denying them too.

Not Enough Voices: If the folks building AI all come from similar backgrounds, they might miss how their tech affects different people. More diversity in the room = fewer blind spots.

Vicious Cycles: AI can get stuck in a loop. If it picks certain people for jobs, and only those people get hired, the data it gets next time just doubles down on the same bias.
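To make the vicious cycle concrete, here is a toy simulation in Python. Everything in it is a hypothetical assumption for illustration: two groups "A" and "B", equally qualified applicants, and a "model" that scores people purely by their group’s share of past hires. It is not how any real hiring tool works.

```python
def simulate_feedback_loop(past_hires, rounds, openings, applicants_per_group):
    """Toy model of a hiring feedback loop (all rules here are hypothetical).

    Each round, every group sends the same number of equally qualified
    applicants. The "model" scores each applicant by their group's share of
    past hires, hires the top `openings`, and adds those hires to the data it
    learns from next round.
    """
    hires = dict(past_hires)  # copy so the caller's data is not modified
    history = []
    for _ in range(rounds):
        total = sum(hires.values())
        # Score = group's historical share of hires (the learned "pattern").
        pool = [
            (hires[group] / total, group)
            for group in hires
            for _ in range(applicants_per_group)
        ]
        # Rank applicants by score, highest first.
        pool.sort(key=lambda applicant: applicant[0], reverse=True)
        for _, group in pool[:openings]:
            hires[group] += 1  # today's hires become tomorrow's training data
        total = sum(hires.values())
        history.append({group: round(count / total, 3) for group, count in hires.items()})
    return history


print(simulate_feedback_loop({"A": 60, "B": 40}, rounds=3, openings=10, applicants_per_group=10))
```

Group A’s share of hires climbs every round (from 0.6 to about 0.69 after three rounds) even though both groups send identical applicants, because each round’s biased picks become the next round’s training data.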

Okay, How Do We Fix This?

There are ways to make things fairer, and it’s totally doable if we put in the work.

Mix Up the Data: Feed AI data that actually represents everyone—different races, genders, backgrounds.

Be Open About It: Companies need to spill the beans on how their AI works. No more hiding behind “it’s complicated.”

Get Diverse Teams: Bring in people from all walks of life to build AI. They’ll spot issues others might miss and make tech that works for everyone.

Keep Testing: Check AI systems regularly to catch any unfair patterns. If something’s off, tweak it until it’s right.

Set Some Ground Rules: Make ethical standards a must for AI. Fairness and accountability should be non-negotiable.
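For the "Keep Testing" step, one simple and widely used check is to compare selection rates across groups. The sketch below applies the four-fifths rule of thumb from U.S. employment-selection guidelines: a group selected at less than 80% of the rate of the most-selected group gets flagged for a closer look. The function names and the applicant numbers are made up for illustration.

```python
from collections import Counter


def selection_rates(decisions):
    """Share of applicants selected in each group.

    `decisions` is an iterable of (group, was_selected) pairs.
    """
    applied = Counter()
    selected = Counter()
    for group, was_selected in decisions:
        applied[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / applied[group] for group in applied}


def four_fifths_audit(decisions, threshold=0.8):
    """Compare each group's selection rate with the highest group's rate.

    Returns {group: (impact_ratio, passes)}; a ratio below `threshold`
    (0.8 under the four-fifths rule of thumb) flags possible adverse impact.
    """
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so there is no disparity to measure.
        return {group: (1.0, True) for group in rates}
    return {group: (rate / best, rate / best >= threshold) for group, rate in rates.items()}


# Hypothetical audit data: 20 of 50 men and 10 of 50 women were selected.
decisions = [("men", i < 20) for i in range(50)] + [("women", i < 10) for i in range(50)]
print(four_fifths_audit(decisions))
```

Here women are selected at half the rate of men, so they get flagged. A flag isn’t proof of discrimination on its own, but it tells you exactly where to dig in.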

What Can We Do?

Spread the Word: Talk about AI bias! Share posts, write your own, or just chat with friends about it. Awareness is power.

Call It Out: If you see a company using shady AI, ask questions. Hit them up on social media and demand transparency.

Support the Good Stuff: Back projects and people working on fair, inclusive tech. Think open-source AI or groups pushing for ethical standards.

Let’s Dream Up a Better AI

AI’s got so much potential: think better healthcare, smarter schools, or even tackling climate change. By using diverse data, building inclusive teams, and keeping companies honest, we can make sure that potential works for everyone.

#AI #TechForGood #BiasInTech #MakeItFair #InclusiveFuture

@sruniversity

Weaknesses of AI image generation

The biggest flaw in AI image generation software is that it skips the basics and jumps straight to rendering the finished picture. That's why it is so unstable and makes so many processing errors. Normally, when drawing a picture, you decide on the composition, draw a rough sketch, make corrections as necessary, draw the line art, and only then color it. You don't just start painting the final image from nothing. Without a solid foundation, you can't draw a picture properly.

So what would the basics look like for AI? First, plan the overall composition: calculate the exact position and coordinates of each element and how the elements relate to one another, including the relationship between the subject, the background, and the foreground. Next, generate and analyze a rough sketch, and verify and correct any processing and calculation errors. Only then generate the picture itself.

Of course, the quality of the training data and the training method also matter a great deal. It is clear that the traditional approach of simply showing a model huge numbers of images and photos has already reached its limit, and that approach alone cannot overcome all of the challenges AI image generation faces. What is needed is a system that can generate images, learn from corrected images, and recognize and fix its own mistakes. A model like those used for face recognition and identification is also needed, one that learns the position, proportions, and shape of each part (element): two eyes side by side near the top, a nose in the center, and a mouth below that. The current (conventional) training and generation method amounts to copying imitations. It would also help to add 3D models (wireframes) and motion data to the training images so the model learns shape and movement. If AI image generation does not have a solid foundation, it cannot be expected to develop any further. A proper foundation is essential not only in image generation but in every field.

Automation will continue to advance, and it cannot be stopped. However, engineers and ordinary people alike are currently focusing only on processing speed and processing power while neglecting basic learning. Simply put, we are chasing results and completely forgetting the fundamentals, the origins.
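The composition-first idea above could start as a rule check that runs on a rough layout before any pixels are generated. This is a minimal Python sketch under loud assumptions: the landmark names, the normalized coordinates, the tolerance, and the rules themselves are hypothetical and are not taken from any existing image generator.

```python
from dataclasses import dataclass


@dataclass
class Point:
    x: float  # 0.0 = left edge, 1.0 = right edge
    y: float  # 0.0 = top edge, 1.0 = bottom edge (image convention)


def check_face_layout(left_eye, right_eye, nose, mouth, eye_tolerance=0.05):
    """Return a list of layout problems for a rough face sketch.

    The rules are hypothetical: eyes level, nose between the eyes,
    nose below the eyes, mouth below the nose. An empty list means the
    rough layout passes and could move on to the rendering stage.
    """
    problems = []
    if abs(left_eye.y - right_eye.y) > eye_tolerance:
        problems.append("eyes are not level")
    if not left_eye.x < nose.x < right_eye.x:
        problems.append("nose is not between the eyes")
    eye_line = max(left_eye.y, right_eye.y)  # lower of the two eyes
    if not eye_line < nose.y:
        problems.append("nose is not below the eyes")
    if not nose.y < mouth.y:
        problems.append("mouth is not below the nose")
    return problems


# A plausible rough layout, then one with the nose and mouth swapped.
print(check_face_layout(Point(0.35, 0.4), Point(0.65, 0.4), Point(0.5, 0.55), Point(0.5, 0.7)))
print(check_face_layout(Point(0.35, 0.4), Point(0.65, 0.4), Point(0.5, 0.7), Point(0.5, 0.55)))
```

In a fuller pipeline, a layout that fails these checks would be corrected and re-checked before the final image is rendered.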

#weaknesses#image#generation#flaw#software#completely#ignores#basic#picture#unstable#process#draw#decide#composition#make#correction#necessary#line#start#foundation#properly#create#overall#exact#coordinate#positional#relationship#element#between

Blog Post #2 due 02/6

1. How can the internet, despite reproducing existing power hierarchies, be used as a tool for global feminist organizing and challenging repressive gender and sex norms?

Digital technologies and the way things are presented online, whether on social media, on websites, or on the internet in general, can serve a positive and uplifting purpose for females and self-identifying women, helping them overthrow sex and gender oppression. The internet allows them to escape the reality and harsh harassment they experience in real life, whether at home, at school, or at work, and to engage with something online that allows them to “...transform their embodied selves, not escape embodiment” (Daniels, 2009). It also helps spread activism globally. It lets females and self-identifying women go online and seek out spaces where they can explore and “...reaffirm the bodily selves in the presence of illness, surgery, recovery, and loss” (Daniels, 2009).

2. How does the surveillance of marginalized communities through digital technology affect their daily lives?

There are many ways in which digital technology’s habit of surveilling marginalized communities, such as people of color and the low-income working class, affects their everyday lives. These communities become high-alert targets far more than people who are White and upper class, especially when it comes to finance, employment, politics, health, and human services. In the past, these resources were provided to us by humans, but now that digital technology has become central to our lives, it is increasingly machines and AI, not humans, making these decisions. The problem is that in this system, “Automated eligibility systems, ranking algorithms, and predictive risk models control which neighborhoods get policed…” (Eubanks, 2018). This creates unnecessary problems for marginalized groups, because sometimes they don’t even realize they are being targeted. I will add my own personal experience: my mother, a Mexican immigrant, once went to her doctor for a physical check-up, but when she arrived they said they were “unable” to provide the service because her health insurance did not cover it, so she had to switch plans. She called customer service several times to get it fixed, and it ended with her being given the wrong insurance. She then had to change it back to her original plan, but it could not be changed or fixed because the system said otherwise.

3. Can artificial intelligence be considered racist and demonstrate biases in AI systems that reflect the prejudices of the humans who create them?

As we all know, artificial intelligence has been developing rapidly over these years, whether in cars, machinery, or online digital technologies. But can there be serious unfixed issues in these systems? In short, yes. There are many underlying problems in how AI can carry biases and have the potential to be racist. It is not that the “robot” itself is racist, but the people behind the creation of artificial intelligence. These biases are tied to how a person appears: their face and skin tone. For example, when it comes to facial recognition, “Facial recognition technology is known to have flaws. In 2019, a national study of over 100 facial recognition algorithms found that they did not work as well on Black and Asian faces” (Hill, 2020). And even though this use of AI is highly flawed, it is still deployed to surveil neighborhoods and communities, because of “higher” crime rates in these areas or as a precaution that may provide clues in a criminal case. This leads to more false arrests, just like what happened to Mr. Parks. I find it odd and invasive that groups such as law enforcement put these cameras around cities when they are not even set up to correctly identify the perpetrator. It appears to me that they just pick whoever seems to “fit” the description, which is unlawful and discriminatory because the design of this AI system is built on white standards.

4. Can AI and algorithms perpetuate systemic inequalities, especially from a health care perspective?

As discussed above, it is already known that digital technology has its way of surveilling marginalized communities, specifically minority ethnic groups, and the growth of artificial intelligence is leading corporations and businesses to adopt more of these systems. AI has made its way into health care resources, where algorithms have a massive effect and can cause people of color to lose access to health care. Because White people less often have trouble with their services, they are able to use and access their insurance whenever needed more than other minority groups, and the algorithm in turn recommends that more health care be provided to them. “...since white people spent an average of eighteen hundred dollars more than black people on health care. The algorithm consistently deemed white people to be more ill and therefore recommended more health care. In other words because white people were higher healthcare consumers the algorithms determined they needed more” (Brown, 2020). This is more than just inaccurate: these biases can affect how healthcare resources are distributed and how insurance coverage is decided, because the systematic bias overlooks how disproportionately it affects people of color.
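The cost-as-proxy problem described in the quote can be shown with a few lines of Python. The patients and dollar amounts below are invented for illustration (the $1,800 spending gap between patient_1 and patient_2 just echoes the quote); the point is that ranking by past spending instead of by actual health need can push an equally sick, lower-spending patient out of the top spots.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    name: str
    chronic_conditions: int  # stand-in for actual health need (hypothetical)
    annual_spending: float   # what a cost-trained algorithm effectively ranks on


def rank_by_cost(patients):
    # Proxy label: past spending is treated as if it measured need.
    return sorted(patients, key=lambda p: p.annual_spending, reverse=True)


def rank_by_need(patients):
    # Ranking on the thing we actually care about.
    return sorted(patients, key=lambda p: p.chronic_conditions, reverse=True)


patients = [
    Patient("patient_1", chronic_conditions=4, annual_spending=5000.0),
    Patient("patient_2", chronic_conditions=4, annual_spending=6800.0),
    Patient("patient_3", chronic_conditions=2, annual_spending=6000.0),
]

# Suppose only the top two patients get extra care.
print([p.name for p in rank_by_cost(patients)[:2]])
print([p.name for p in rank_by_need(patients)[:2]])
```

The cost-based ranking picks patient_2 and patient_3, leaving out patient_1, who is just as sick as patient_2 but spent less.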

Brown, Nicole. (2020, Sept. 18). Race and Technology [Video]. YouTube. https://www.youtube.com/watch?v=d8uiAjigKy8

Daniels, Jessie. (2009). Rethinking Cyberfeminism(s): Race, Gender, and Embodiment (Vol. 37). The Feminist Press.

Eubanks, Virginia. (2018). Automating Inequality (Introduction).

Hill, Kashmir. (2020, Dec. 29). Another Arrest, and Jail Time, Due to a Bad Facial Recognition Match. The New York Times.

What Is Artificial Intelligence? A Beginner's Guide to Understanding Artificial Intelligence

1) What Is Artificial Intelligence (AI)?

Artificial Intelligence (AI) is a set of technologies that enables computers to perform tasks normally performed by humans. This includes the ability to learn (machine learning), reason, make decisions, and even process natural language. Examples range from virtual assistants like Siri and Alexa to the prediction algorithms behind Netflix and Google Maps.

The foundation of AI lies in its ability to simulate cognitive tasks. Unlike traditional programming, where machines follow explicit instructions, AI systems use algorithms and large datasets to recognize patterns, identify trends, and improve automatically over time.
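To see the difference between following explicit instructions and learning a pattern from data, compare a hand-written rule with a "decision stump," one of the simplest learned models. This is a minimal Python sketch; the hours-studied data and the cutoff of 4 in the hand-written rule are hypothetical.

```python
def rule_based_pass(hours_studied):
    # Traditional programming: a person decides the rule up front.
    return hours_studied >= 4


def learn_threshold(examples):
    """Learn a one-feature cutoff (a "decision stump") from labeled data.

    `examples` is a list of (value, label) pairs. Each observed value is
    tried as the cutoff, and the one that misclassifies the fewest
    training examples is kept.
    """
    best_cutoff, best_errors = None, None
    for cutoff, _ in examples:
        errors = sum((value >= cutoff) != label for value, label in examples)
        if best_errors is None or errors < best_errors:
            best_cutoff, best_errors = cutoff, errors
    return best_cutoff


# Hypothetical data: (hours studied, passed the exam?)
examples = [(1, False), (2, False), (3, False), (5, True), (6, True), (8, True)]
print(learn_threshold(examples))
```

On this data the learned cutoff comes out to 5 hours, a value nobody typed in; give the function different examples and it will learn a different cutoff.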

2) The Many Faces of Artificial Intelligence (AI)

Artificial Intelligence (AI) isn't one thing; it is an umbrella term that covers many different technologies. Understanding its branches can help you appreciate its versatility:

Machine Learning (ML): At the core of AI, ML focuses on enabling machines to learn from data and improve without explicit programming. Applications range from spam detection to personalized shopping recommendations.

Computer Vision: This field enables machines to interpret and analyze image data, with applications ranging from facial recognition to medical image diagnosis. Computer vision is revolutionizing many industries.

Robotics: By combining AI with engineering, robotics focuses on creating intelligent machines that can perform tasks automatically or with minimal human intervention.

Creative AI: Tools like ChatGPT and DALL-E fall into this category. They create human-like text or images and open the door to creative and innovative possibilities.
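As a small taste of the spam detection mentioned under Machine Learning, here is a toy naive Bayes classifier in Python. It learns word frequencies from labeled messages instead of following hand-written rules. The four training messages are made up, and a real spam filter would use far more data and better text processing.

```python
import math
from collections import Counter


def train_naive_bayes(messages):
    """Count words per label. `messages` is a list of (text, label) pairs,
    where label is "spam" or "ham"; both labels must appear at least once."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in messages:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, label_counts, vocab


def classify(model, text):
    word_counts, label_counts, vocab = model
    total_messages = sum(label_counts.values())
    scores = {}
    for label in ("spam", "ham"):
        # Log prior: how common this label was in the training data.
        score = math.log(label_counts[label] / total_messages)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # Laplace (add-one) smoothing so an unseen word doesn't zero out the score.
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocab)))
        scores[label] = score
    # Pick the label with the highest log-probability.
    return max(scores, key=scores.get)


training = [
    ("win free money now", "spam"),
    ("free prize claim now", "spam"),
    ("meeting moved to monday", "ham"),
    ("lunch on monday", "ham"),
]
model = train_naive_bayes(training)
print(classify(model, "free money"))
print(classify(model, "lunch monday"))
```

Nothing in the code says "free" is a spam word; the classifier picks that up from the examples, which is the "learn from data without explicit programming" idea in miniature.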

3) Why Is AI So Popular Now?

The Artificial Intelligence (AI) explosion may be due to a confluence of technological advances:

Big Data: The digital age creates unprecedented amounts of data. Artificial Intelligence (AI) uses that data to gain insights and improve decision making.

Improved Algorithms: Innovations in algorithms make Artificial Intelligence (AI) models more efficient and accurate.

Computing Power: The rise of cloud computing and GPUs has provided the necessary infrastructure for processing complex AI models.

Access: The proliferation of publicly available datasets (e.g., ImageNet, Common Crawl) has provided the basis for training complex AI systems. Various industries also collect huge amounts of proprietary data, which makes it possible to deploy domain-specific AI applications.

4) Interesting Topics about Artificial Intelligence (AI)

Real-world applications show that AI is revolutionizing industries such as healthcare (diagnosis and personalized medicine), finance (fraud detection and robo-advisors), education (adaptive learning platforms), and entertainment (adaptive platforms). How?