#AI-Driven Audio


Text

AI-Enhanced Audio: How Voice Tech Is Shaping the Next Generation of Creators

Sunny.io.creator is a progressive-thinking tech blog spotlighting the intersection of AI tech, digital innovation, and collaborative monetization strategies. The digital landscape is a relentless current, constantly shifting and reshaping itself. For brands, creators, and individuals alike, staying afloat and thriving means understanding the undertows and anticipating the next big wave. As we…

#AI Audio#AI in Music#AI-Driven Audio#AI-Enhanced#Audio#Creator Tools 2026#Digital Creators AI#Future of Audio Production#Speech Recognition#sunny.io.creator#technology#Voice#Voice Synthesis#Voice Tech Creators

1 note

Text

Amazon annihilates Alexa privacy settings, turns on continuous, nonconsensual audio uploading

I'm on a 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me in SAN DIEGO at MYSTERIOUS GALAXY on Mar 24, and in CHICAGO with PETER SAGAL on Apr 2. More tour dates here.

Even by Amazon standards, this is extraordinarily sleazy: starting March 28, each Amazon Echo device will cease processing audio on-device and instead upload all the audio it captures to Amazon's cloud for processing, even if you have previously opted out of cloud-based processing:

https://arstechnica.com/gadgets/2025/03/everything-you-say-to-your-echo-will-be-sent-to-amazon-starting-on-march-28/

It's easy to flap your hands at this bit of thievery and say, "surveillance capitalists gonna surveillance capitalism," which would confine this fuckery to the realm of ideology (that is, "Amazon is ripping you off because they have bad ideas"). But that would be wrong. What's going on here is a material phenomenon, grounded in specific policy choices, and by unpacking the material basis for this absolutely unforgivable move, we can understand how we got here – and where we should go next.

Start with Amazon's excuse for destroying your privacy: they want to do AI processing on the audio Alexa captures, and that is too computationally intensive for on-device processing. But that only raises another question: why does Amazon want to do this AI processing, even for customers who are happy with their Echo as-is, at the risk of infuriating and alienating millions of customers?

For Big Tech companies, AI is part of a "growth story" – a narrative about how these companies that have already saturated their markets will still continue to grow. It's hard to overstate how dominant Amazon is: they are the leading cloud provider, the most important retailer, and the majority of US households already subscribe to Prime. This may sound like a good place to be, but for Amazon, it's actually very dangerous.

Amazon has a sky-high price/earnings ratio – about triple the ratio of other retailers, like Target. That scorching P/E ratio reflects a belief by investors that Amazon will continue growing. Companies with very high P/E ratios have an unbeatable advantage relative to mature competitors – they can buy things with their stock, rather than paying cash for them. If Amazon wants to hire a key person, or acquire a key company, it can pad its offer with its extremely high-value, growing stock. Being able to buy things with stock instead of money is a powerful advantage, because money is scarce and exogenous (Amazon must acquire money from someone else, like a customer), while new Amazon stock can be conjured into existence by typing zeroes into a spreadsheet:

https://pluralistic.net/2025/03/06/privacy-last/#exceptionally-american

But the downside here is that every growth stock eventually stops growing. For Amazon to double its US Prime subscriber base, it will have to establish a breeding program to produce tens of millions of new Americans, raising them to maturity, getting them gainful employment, and then getting them to sign up for Prime. Almost by definition, a dominant firm ceases to be a growing firm, and lives with the constant threat of a stock revaluation as investors' belief in future growth crumbles and they punch the "sell" button, hoping to liquidate their now-overvalued stock ahead of everyone else.

For Big Tech companies, a growth story isn't an ideological commitment to cancer-like continuous expansion. It's a practical, material phenomenon, driven by the need to maintain investor confidence that there are still worlds for the company to conquer.

That's where "AI" comes in. The hype around AI serves an important material need for tech companies. By lumping an incoherent set of poorly understood technologies together into a hot buzzword, tech companies can bamboozle investors into thinking that there's plenty of growth in their future.

OK, so that's the material need that this asshole tactic satisfies. Next, let's look at the technical dimension of this rug-pull.

How is it possible for Amazon to modify your Echo after you bought it? After all, you own your Echo. It is your property. Every first-year law student learns this 18th-century definition of property, from Sir William Blackstone:

That sole and despotic dominion which one man claims and exercises over the external things of the world, in total exclusion of the right of any other individual in the universe.

If the Echo is your property, how come Amazon gets to break it? Because we passed a law that lets them. Section 1201 of 1998's Digital Millennium Copyright Act makes it a felony to "bypass an access control" for a copyrighted work:

https://pluralistic.net/2024/05/24/record-scratch/#autoenshittification

That means that once Amazon reaches over the air to stir up the guts of your Echo, no one is allowed to give you a tool that will let you get inside your Echo and change the software back. Sure, it's your property, but exercising sole and despotic dominion over it requires breaking the digital lock that controls access to the firmware, and that's a felony punishable by a five-year prison sentence and a $500,000 fine for a first offense.

The Echo is an internet-connected device that treats its owner as an adversary and is designed to facilitate over-the-air updates by the manufacturer that are adverse to the interests of the owner. Giving a manufacturer the power to downgrade a device after you've bought it, in a way you can't roll back or defend against, is an invitation to run the playbook of the Darth Vader MBA, in which the manufacturer replies to your outraged squawks with "I am altering the deal. Pray I don't alter it any further":

https://pluralistic.net/2023/10/26/hit-with-a-brick/#graceful-failure

The ability to remotely, unilaterally alter how a device or service works is called "twiddling" and it is a key factor in enshittification. By "twiddling" the knobs and dials that control the prices, costs, search rankings, recommendations, and core features of products and services, tech firms can play a high-speed shell-game that shifts value away from customers and suppliers and toward the firm and its executives:

https://pluralistic.net/2023/02/19/twiddler/

But how can this be legal? You bought an Echo and explicitly went into its settings to disable remote monitoring of the sounds in your home, and now Amazon – without your permission, against your express wishes – is going to start sending recordings from inside your house to its offices. Isn't that against the law?

Well, you'd think so, but US consumer privacy law is unbelievably backwards. Congress hasn't passed a consumer privacy law since 1988, when the Video Privacy Protection Act banned video store clerks from disclosing which VHS cassettes you brought home. That is the last technological privacy threat that Congress has given any consideration to:

https://pluralistic.net/2023/12/06/privacy-first/#but-not-just-privacy

This privacy vacuum has been filled up with surveillance on an unimaginable scale. Scumbag data-brokers you've never heard of openly boast about having dossiers on 91% of adult internet users, detailing who we are, what we watch, what we read, who we live with, who we follow on social media, what we buy online and offline, where we buy, when we buy, and why we buy:

https://gizmodo.com/data-broker-brags-about-having-highly-detailed-personal-information-on-nearly-all-internet-users-2000575762

To a first approximation, every kind of privacy violation is legal, because the concentrated commercial surveillance industry spends millions lobbying against privacy laws, and those millions are a bargain, because they make billions off the data they harvest with impunity.

Regulatory capture is a function of monopoly. Highly concentrated sectors don't need to engage in "wasteful competition," which leaves them with gigantic profits to spend on lobbying, which is extraordinarily effective, because a sector that is dominated by a handful of firms can easily arrive at a common negotiating position and speak with one voice to the government:

https://pluralistic.net/2022/06/05/regulatory-capture/

Starting with the Carter administration, and accelerating through every subsequent administration except Biden's, America has adopted an explicitly pro-monopoly policy, called the "consumer welfare" antitrust theory. 40 years later, our economy is riddled with monopolies:

https://pluralistic.net/2024/01/17/monopolies-produce-billionaires/#inequality-corruption-climate-poverty-sweatshops

Every part of this Echo privacy massacre is downstream of that policy choice: "growth stock" narratives about AI, twiddling, DMCA 1201, the Darth Vader MBA, the end of legal privacy protections. These are material things, not ideological ones. They exist to make a very, very small number of people very, very rich.

Your Echo is your property; you paid for it. You paid for the product and you are still the product:

https://pluralistic.net/2022/11/14/luxury-surveillance/#liar-liar

Now, Amazon says that the recordings your Echo will send to its data-centers will be deleted as soon as they've been processed by the AI servers. Amazon's made these claims before, and they were lies. Amazon eventually had to admit that its employees and a menagerie of overseas contractors were secretly given millions of recordings to listen to and make notes on:

https://archive.is/TD90k

And sometimes, Amazon just sent these recordings to random people on the internet:

https://www.washingtonpost.com/technology/2018/12/20/amazon-alexa-user-receives-audio-recordings-stranger-through-human-error/

Fool me once, etc. I will bet you a testicle* that Amazon will eventually have to admit that the recordings it harvests to feed its AI are also being retained and listened to by employees, contractors, and, possibly, randos on the internet.

*Not one of mine

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/03/15/altering-the-deal/#telescreen

Image: Stock Catalog/https://www.quotecatalog.com (modified) https://commons.wikimedia.org/wiki/File:Alexa_%2840770465691%29.jpg

Sam Howzit (modified) https://commons.wikimedia.org/wiki/File:SWC_6_-_Darth_Vader_Costume_(7865106344).jpg

CC BY 2.0 https://creativecommons.org/licenses/by/2.0/deed.en

#pluralistic#alexa#ai#voice assistants#darth vader mba#amazon#growth stocks#twiddling#privacy#privacy first#enshittification

4K notes

Text

Opened Reddit by accident while trying to type in a band's YouTube channel and saw this:

https://www.reddit.com/r/ChatGPT/comments/1guhsm4/well_this_is_it_boys_i_was_just_informed_from_my/

Well this is it boys. I was just informed by my boss and HR that my entire profession is being automated away. For context, I work production in local news. Recently there have been developments in AI-driven systems that can do 100% of the production side of things, which is directing, audio operating, and graphics operating. All of those jobs are now gone in one swoop. This has apparently been developed by the company Q ai. For the last decade I’ve worked in local news and have garnered skills I thought I would be able to take with me until my retirement; now, at almost 30 years old, all of those job opportunities for me are gone in an instant. The only person that’s keeping their job is my manager, who will oversee the system and do maintenance if needed. That’s 20 jobs lost and 0 gained for our station. We were informed we are going to be the first station to implement this under our company. This means that as of now our entire production staff in our news station is being let go. Once the system is implemented and running smoothly, it is going to be implemented nationwide (effectively eliminating tens of thousands of jobs). There are going to be 0 new jobs built off of this AI platform. There are people I work with in their 50s, single, no college education, no family, and no other place to land a job once this kicks in. I have no idea what’s going to happen to them. This is it guys. This is what our future with AI looks like. This isn’t creating any new jobs; this is knocking out entire industry-level jobs without replacing them.

Don't ever let any AI supporters try to claim that AI will 'never make people lose their jobs!' It's already been happening before this, and now this thread is full of stories about people being laid off from jobs they'd worked for 40 years, only to be replaced by AI :/

*this* is why it is so important to not tolerate generative AI, even when it's "just a joke" or "for a meme." I'm just eternally grateful that Tumblr hasn't been completely swarmed with AI-generated images the way Facebook has...

157 notes

Note

What is Wolf 359 anyway? Is it like a podcast? And could you tell me all your favorite parts about it in great detail because it sounds really interesting and I trust your opinion :]

sits down across the table from you and slams a briefcase on the table so heavy it breaks it in half. Hey.

Wolf 359 is an audio podcast that came out in 2014. It opens up by asking the question “Would that be fucked up or what?” and its answers are really funny until they aren’t. Classic case of the funny story slowly becoming painfully real and dangerous for the protagonists.

The basic plot is this: a crew of three people plus their space station’s AI are sent to orbit a star light-years away from Earth by some totally suspicious corporation. The show starts you right in the middle of the mission, when everyone’s already been out there for months, so they’ve sort of abandoned decorum in favor of gassing each other with chemicals as a solution for workplace discourse. I will put my in-depth thoughts under a cut because, frankly, I would very very much like you to check out this podcast: I like it a lot and want to see more people talking about it. Stick with it through at least season one and the beginning of season 2. It’s about a dozen episodes and a quick listen to help you decide if you want to keep going.

ok so my favorite things about it (non-spoilers edition):

-Really solidly written female characters. Like, it’s genuinely enjoyable how much they share the same narrative weight as the male characters, especially for a 2014 podcast.

-Character-focused podcast 🫶🫶🫶🫶🫶 I love a story that is first and foremost about dissecting characters and their actions, and I think Wolf 359 excels in this part of its writing. Doug Eiffel is a wonderful adventure in finding out what happens when a comedic relief character is confronted with being more than just his archetype.

-AI storyline. Do you like robots? You should like my friend Hera. Everything happens to her. She sees everything all of the time, she knows so much more than you do, and she is physically unable to insult you to your face, but I think she should do it anyways. I like. Her. She gets to get up to weird gay people stuff with a character that shows up later on and it leaves her changed in so many ways.

-Fucked up little scientist that I like. He’s Russian and you should listen to the podcast for the sole experience of comparing his voice in ep 1 to any ep in season 3. It’s funny.

ok specifics under the cut if you dare to hear my detailed thoughts before listening to the podcast

-Doug Eiffel’s character is genuinely one of the most interesting things to me. I talked about it in an older text post, but I love how Wolf 359 is a piece of media not unfamiliar with immoral and nuanced characters and chooses to make sure that applies not just to its antagonists. The reveal in season 3 that he was a dad? And he like? Brutally impacted his kid’s life forever because of His Own shitty mistakes? And now he has to deal with that forever? Big. Massive even. It's also part of the reason I have such a gripe with the finale of the show, but it’s okay; I think the show itself is still really worth it in spite of my mixed feelings on season 4.

-Isabel. ISABEEEEEEL. Isabel Lovelace is really good and the entirety of minisode 4 (I believe it’s 4?) kills me dead. She’s died not once, but twice! And lived! She’s tormented! She’s miserable! She’s the final girl! She’s a clone! She’s the original! I need to finish my relisten so I can get as weird about her as possible, because fuck me does she have a fascinating storyline.

-That fuckass scientist. Hilbert. Heart. I can and have gone into depth about him before because I hate him. He’s like, peak for me vis-à-vis Wolf 359 and character dissection. He’s a horrible, horrible man, but the way he justifies his actions and how he views himself and what exactly his apathy is driven by fascinates me. This man died at 6 years old and has spent the rest of his life dragging the dead weight of his own corpse around while he works toward his unreasonable goals. I love him.

-Lovelace and Hilbert have a shared connection from before canon that makes me insane in a way I literally cannot describe. Type of dynamic that makes you so fucking nuts that I don’t just need you to hear me I need someone to grab at my brain and match my freak. I need more people to talk about them. They were friends and then they were enemies and also they understand each other more than anyone else. He killed her entire crew, but he’s the only member of her crew that’s left. He’s everything she lost and he’s all she has. She came back to tell him he isn’t allowed to forget what he did this time. They haunt each other. Blow them up now.

-Marcus Cutter is a deeply unsettling little man and I find him fascinating.

36 notes

Text

"Suiting Up for the Fu

The screen lit up with the stark, clinical brilliance of a defense contractor’s marketing reel. Crisp graphics swept across the frame: Republic Integrated Systems Presents: The Future of Excellence—The Mark IV Full-Body Armor. The voiceover began with the smug confidence of a salesman who knew his product had no competition.

“For cadets like C9J18, the Mark IV Armor isn’t just equipment—it’s a way of life. Engineered for total integration, unmatched efficiency, and unwavering loyalty, the Mark IV is the pinnacle of personal enhancement technology. Let’s take a tour.”

The scene shifted to C9J18 standing at attention, his visor down, the black armor hugging every contour of his augmented frame. Overlaid on the screen was a breakdown of his suit’s components, sleek lines pointing to each piece as the voice detailed their functions.

“From head to toe, the Mark IV transforms the wearer into the perfect operator. Modular plates provide full ballistic, environmental, and impact protection, while maintaining unparalleled flexibility and comfort. The suit isn’t just worn—it becomes an extension of the body.”

The camera zoomed in as C9J18 moved through his day, transitioning to his POV. The HUD was alive with information: telemetry readouts, vital signs, suit status. The Contextual Priority Filter immediately began working, greying out the irrelevant clutter of the world—background conversations, unimportant scenery, even the faint hum of distant machinery.

“With the Contextual Priority Filter, cadets only see what matters. The AI-driven system dynamically adjusts the wearer’s perception, ensuring absolute focus on mission-critical elements. Irrelevant distractions? Eliminated. Productivity? Maximized.”

C9J18’s HUD highlighted his fellow cadets, their alphanumeric IDs glowing softly above their heads. An order appeared in bold text across the top of his visor: Form up. Begin sprint drills. The cadet turned on his heel, his movements precise, his focus razor-sharp as the HUD traced his optimal path.

“Every action is guided by the Task Assignment System, seamlessly integrated with the suit’s neural interface. Orders are delivered directly to the wearer, ensuring immediate compliance and zero ambiguity. Whether it’s a drill or a live operation, the system ensures cadets are always one step ahead.”

The screen flickered to an instructor’s POV. The Drill Instructor’s helmet HUD displayed all cadet vitals, suit status, and performance metrics in real-time. The instructor’s voice cut through the comms channel, amplified by the suit’s audio system. “Pick up the pace, C9J18. You’re at 85% efficiency—I want 95 by the next lap.”

“Supervisors can control multiple cadets at once,” the voiceover explained, “adjusting suit parameters on the fly. Need to increase resistance for endurance training? Done. Reduce sensory input to prevent distractions? Easy. With the Mark IV, instructors don’t just teach—they sculpt.”

C9J18’s breathing was steady as he pushed through the sprint drill. His suit’s Sensory Regulation System muted the ache in his muscles, dampening the sensation of fatigue just enough to keep him going without compromising performance.

“The Sensory Regulation System is a game-changer,” the voiceover continued. “It modulates physical sensations, ensuring optimal performance. Pain, heat, cold—it’s all calibrated to the mission. The suit doesn’t just protect—it conditions.”

The scene transitioned to the mess hall, where C9J18 and his peers sat in silence, eating their nutrient-rich chow. The HUD overlay highlighted their calorie intake, hydration levels, and metabolic efficiency. Even here, the Contextual Priority Filter ensured no unnecessary conversation or stimuli distracted from their task.

“Nutrition is monitored in real-time, ensuring each cadet receives exactly what their body needs. The suit tracks digestion, energy expenditure, and even waste management, all while maintaining seamless integration with the wearer.”

As the day wound down, the footage shifted to the barracks. The cadets moved in synchronized silence, stepping into individual alcoves built into the walls. The HUD displayed a notification: Sleep Mode Engaged. C9J18 lay back in his suit, his visor darkening as the comms went silent.

“When the day is done, the Mark IV ensures uninterrupted rest. Fully equipped with climate control and biometric monitoring, the suit provides optimal conditions for recovery—even in full armor. The visor’s sleep mode darkens gradually, synchronizing with neural rhythms to lull the wearer into rest.”

The camera lingered on C9J18 as his breathing slowed, his face calm beneath the visor. The overlay showed his heart rate stabilizing, stress levels dropping, and compliance metrics holding steady. The AI’s gentle hum was a constant presence, even in sleep.

“From dawn to dusk, and even through the night, the Mark IV is more than just a suit—it’s a system. A revolution. A future.”

The screen cut to the Intelligence Conscript and K7L32, standing side by side, both suited up, their visors up to reveal their shaved heads and sharp, cynical smiles.

“Of course,” K7L32 said with a mocking tone, “it’s not just about the tech. It’s about the transformation. You don’t just wear the suit—you become it. Isn’t that right, sir?”

The Intelligence Conscript chuckled, his voice dripping with sardonic amusement. “That’s right, cadet. The Mark IV doesn’t just enhance—it defines. And for cadets like C9J18, it’s the beginning of a life dedicated to the Republic. Efficient. Loyal. And, best of all... compliant.”

The screen faded to black, the final words scrolling across in bold, unyielding font:

The Mark IV Armor Suit: Not Just a Tool—A Way of Life.

7 notes

Text

independent of the current discussion about AI art, one of my foundational political beliefs is that trying to stuff proverbial genies (i.e. automation tech like the automatic loom/printing press/warehouse robots/AI art programs) back into proverbial bottles is a noble but inevitably losing fight at best and downright reactionary at worst/on average.

ironically, playing The Talos Principle 2 has really driven this home for me, because the entire plot centers around an allegory for the story of Prometheus stealing fire from the gods to give to humans, but instead of fire it's the ability to violate thermodynamics to create mass and energy out of nothing. and the game keeps trying to frame the decision of what to do with this tech as a huge moral and ethical dilemma, and i keep not getting why i'm supposed to feel conflicted. "the gods themselves are warning you against it!" damn, they sound like dumbasses. "members of your utopian society are conflicted on what to do!" okay so some of them are wrong. "here's some audio logs showing that misuse of the technology killed someone by accident!" great! now we know what not to do to avoid that! "are you really so full of hubris that you think you can avoid the same mistakes as your forebears?" uh, yeah, they wrote down their mistakes for a reason.

like idk why people on here keep being surprised that i support technological advancement for the sake of advancement and THEN critique how it sucks under capitalism specifically instead of just declaring it (say it with me now) Ontologically Evil. i spent 5 years in college majoring in robotics/CS and worked in warehouse/biomed automation for another 5. do you really expect me to entertain literal Luddite rhetoric? be serious

124 notes

Text

the videogame #NewReflectionsWomensShelterVideoGame #Playstation7

Here's a concept for an online MMORPG inspired by mystery, adventure, and role-playing, with an emphasis on solving mysteries and dynamic social interaction. This game builds on elements of a detective-driven genre while incorporating MMORPG mechanics for an expansive, immersive world.

Title: Shadowscape Online: The Infinite Mysteries

Genre: MMORPG / Mystery Adventure

Platform: Cross-platform (PC, PlayStation, Nintendo, Xbox, and mobile).

Core Concept

Players become agents of the Order of the Shadowscape, a secret organization dedicated to uncovering hidden truths and solving world-altering mysteries. The game combines traditional MMORPG elements like exploration, combat, and crafting with unique mechanics like investigation, clue analysis, and social deduction.

Gameplay Features

Dynamic World

A vast open world with diverse biomes (urban cities, ancient ruins, haunted forests, underground labyrinths).

Day-night cycles, weather changes, and seasonal events impact the gameplay.

Investigation System

Players gather clues from the environment, NPCs, and interactions with other players.

Use tools like magnifying glasses, scanners, and enchanted artifacts to uncover hidden details.

Solve procedurally generated mysteries or world-changing story arcs with set narratives.

Class System

Players choose from specialized detective archetypes:

The Investigator: Focuses on perception and deduction; excels in finding clues.

The Combatant: Combines brawling with solving action-heavy puzzles.

The Hacker: Expert at bypassing security and decoding digital information.

The Mystic: Uses magic to sense the unseen and interpret ancient lore.

The Socialite: Excels in persuasion, negotiation, and gathering intel from NPCs or other players.

Guilds and Factions

Players can join factions within the Order or rival groups, each with its own storyline and benefits.

Guilds allow players to team up and tackle large-scale mysteries, raids, or PvP scenarios.

Social Deduction and PvP

In competitive modes, players may need to identify traitors or uncover rival spies within their ranks.

Special PvP missions involve sabotage, infiltration, and defense.

Crafting and Customization

Craft detective tools, weapons, and gadgets from materials found in the world.

Customize avatars, from outfits to accessories like magnifying glasses and enchanted pendants.

Expansive Story Arcs

The game’s narrative evolves through major updates, with community decisions impacting the story.

Example: Solving a global mystery about a cursed artifact that’s destabilizing the world.

Unique Mechanics

Clueboard System

Players have a digital “Clueboard” to organize and analyze their findings.

Clues are categorized by type (e.g., physical evidence, testimonies, artifacts).

Use the Clueboard to form theories and unlock next steps in investigations.
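The Clueboard's categorize-then-theorize loop could be prototyped as a small data structure. This is a hypothetical Python sketch, not part of the concept itself: the class names, the three `ClueType` values (taken from the example categories above), and the rule that a theory unlocks the next step only when every cited clue is on the board and the cited clues together cover every required category are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class ClueType(Enum):
    # Hypothetical categories mirroring the examples in the concept.
    PHYSICAL_EVIDENCE = "physical evidence"
    TESTIMONY = "testimony"
    ARTIFACT = "artifact"


@dataclass(frozen=True)
class Clue:
    clue_id: str
    kind: ClueType
    description: str


@dataclass
class Clueboard:
    # Clues filed by category so a player can browse one type at a time.
    clues: dict[ClueType, list[Clue]] = field(default_factory=dict)

    def add(self, clue: Clue) -> None:
        self.clues.setdefault(clue.kind, []).append(clue)

    def by_type(self, kind: ClueType) -> list[Clue]:
        # Return a copy so callers cannot mutate the board's internal list.
        return list(self.clues.get(kind, []))

    def theory_unlocks_next_step(
        self, cited_ids: set[str], required: set[ClueType]
    ) -> bool:
        # Assumed unlock rule: every cited clue must be on the board, and the
        # cited clues together must cover every required category.
        known = {c.clue_id: c for group in self.clues.values() for c in group}
        if not cited_ids or not cited_ids.issubset(known):
            return False
        covered = {known[cid].kind for cid in cited_ids}
        return required.issubset(covered)


if __name__ == "__main__":
    board = Clueboard()
    board.add(Clue("c1", ClueType.PHYSICAL_EVIDENCE, "torn map fragment"))
    board.add(Clue("c2", ClueType.TESTIMONY, "witness saw a hooded figure"))
    needed = {ClueType.PHYSICAL_EVIDENCE, ClueType.TESTIMONY}
    print(board.theory_unlocks_next_step({"c1", "c2"}, needed))  # True
    print(board.theory_unlocks_next_step({"c1"}, needed))  # False
```

Keeping the unlock check as a simple coverage test makes it easy for players to see why a theory was or wasn't accepted; a fuller design might weight clues or allow partial credit.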

Mind-Mapping Mini-Game

Solve puzzles by connecting events, characters, and clues in a visual interface.

Collaborative mind-mapping during team play for multiplayer investigations.

Procedural Mysteries

Infinite replayability with procedurally generated side mysteries and challenges.

AI-driven systems adapt mysteries to the players’ investigation style.

Dual Progression System

Traditional leveling for combat and skills.

Reputation ranking with factions and NPCs based on how well you solve mysteries.

Visual and Aesthetic Style

Graphics:

A blend of realistic environments with stylized characters and effects to create a timeless look.

Mystical overlays for clue-detecting moments (e.g., glowing trails, hidden texts).

User Interface:

A sleek interface with a dedicated "Detective Mode" that highlights clues and enables analysis tools.

Audio Design:

A dynamic soundtrack that shifts based on investigation phases (calm for clue gathering, tense for deductions).

Interactive sound cues for finding hidden objects or solving puzzles.

Multiplayer Modes

Team Investigations

Groups of up to six players collaborate on large-scale mysteries.

Divide roles for efficiency (e.g., someone interrogates NPCs while another examines crime scenes).

Guild Raids

Cooperative challenges against legendary enemies or seemingly unsolvable mysteries that require mass participation.

PvP Challenges

Compete in solving mysteries faster than rival teams.

Espionage missions where players sabotage or spy on other groups.

Roleplaying Servers

Create your own detective agency, invite friends, and design custom mysteries.

Potential Plot for Launch

Main Arc: The Shattered Veil

An ancient veil separating the mortal and mystical realms is breaking, causing chaos.

Players uncover a conspiracy within the Order that threatens the world’s stability.

Mysteries range from mundane crimes to supernatural enigmas.

Marketing Strategy

Pre-Launch Hype:

Interactive ARG (Alternate Reality Game) where fans solve real-world clues to unlock game lore.

Partner with streamers and mystery-based channels to generate excitement.

Post-Launch Events:

Seasonal updates featuring limited-time mysteries and rewards.

Collaborations with iconic mystery franchises like Sherlock Holmes or Nancy Drew.

Merchandise:

Branded detective tools and apparel.

A companion mystery journal for offline gameplay or planning.

Here’s a concept for a Nancy Drew-style detective video game series designed for the PlayStation 7 and Nintendo platforms, combining mystery, adventure, and modern gameplay mechanics with the support of Nintendo’s innovation and storytelling expertise.

Series Title: Mystery Reflections: Chronicles of the Reflection Crew

Genre: Detective Adventure / Puzzle-Solving

Target Audience: Teens and young adults who enjoy mysteries, narrative-driven games, and clever puzzles.

Core Concept

Players step into the roles of a diverse group of amateur detectives solving mysteries around their community and beyond. Each mystery has unique challenges, requiring teamwork, critical thinking, and emotional intelligence.

Gameplay Mechanics

Detective Roleplay:

Players choose a character from the Reflection Crew, each with unique skills (e.g., hacking, forensic science, negotiation).

Characters' strengths impact how mysteries are solved and offer replayability.

Investigation Phases:

Explore: Investigate crime scenes, gather clues, and interact with NPCs.

Analyze: Use tools like fingerprint scanners, digital decoders, and chemistry kits.

Conclude: Assemble clues into theories using a visual "Mind Map" to solve the case.

Puzzle Challenges:

Code-breaking, lock-picking, deciphering cryptic messages, and environmental puzzles.

Time-sensitive challenges add urgency to certain mysteries.

Dialogue Choices:

Branching dialogue impacts the story and how NPCs respond.

Some choices unlock hidden clues or alternate endings.

Co-Op Mode:

Multiplayer mode where up to four players control different characters, solving mysteries collaboratively.

Unique puzzles that require teamwork.

Visual and Gameplay Style

Graphics:

Stylized realism with vibrant, detailed environments inspired by classic mystery locales (e.g., eerie mansions, bustling cities, desolate islands).

Nintendo’s colorful aesthetic blends with PS7’s cutting-edge performance for stunning visuals.

Camera Mechanics:

Dynamic, third-person perspective with zoom-in modes for close inspection of clues.

Dynamic Environments:

Day-night cycles and weather affect exploration and clue visibility.

Plot and Structure

Game 1: The Whispering Lighthouse

Setting: A coastal town with a mysterious lighthouse rumored to be haunted.

Plot: The Reflection Crew investigates the disappearance of a marine biologist, uncovering smuggling operations and hidden treasure.

Puzzles: Decode lighthouse signals, unlock a hidden passage, and match biological samples to solve the case.

Finale: A high-stakes chase in the lighthouse during a storm.

Game 2: Shadows of the Reflection Manor

Setting: A sprawling estate with hidden rooms, secret tunnels, and a cursed reputation.

Plot: A famous artifact disappears during a gala, and the crew is invited to solve the mystery.

Puzzles: Solve riddles to unlock rooms, analyze historical artifacts, and outwit the thief.

Game 3: The Phantom Express

Setting: A luxury train where a high-profile theft occurs during a cross-country trip.

Plot: The team must solve the crime before the train reaches its destination, preventing the thief’s escape.

Puzzles: Use characters' skills to eavesdrop, hack compartments, and decode the thief’s plans.

Key Characters

Amy (The Strategist)

Skills: Leadership, negotiation, financial analysis.

Role: Mediates group decisions and handles tricky social situations.

Elle (The Creative Problem-Solver)

Skills: Art interpretation, visual puzzles, and creative thinking.

Role: Solves artistic and symbolic mysteries.

Ayesha (The Tech Expert)

Skills: Hacking, coding, and surveillance.

Role: Handles electronic locks, computers, and digital evidence.

Matt (The Investigator)

Skills: Tracking, observation, and logic puzzles.

Role: Finds physical clues and connects details.

Zoey (The Muscle)

Skills: Physical tasks, map navigation, and athletic challenges.

Role: Handles physical puzzles, from moving objects to high-stakes chases.

Nintendo and PlayStation Features

Nintendo Switch:

Motion Controls: Use the Joy-Cons to examine clues, pick locks, or match puzzle pieces.

Portable Play: Seamless exploration on the go.

PlayStation 7:

Haptic Feedback: Experience realistic vibrations while opening safes or climbing surfaces.

4D Soundscapes: Immerse players with atmospheric sounds that signal hidden clues.

Marketing Strategy

Teasers and Trailers:

Cinematic trailers featuring gripping mystery scenes and team dynamics.

Interactive social media teasers that challenge fans to solve puzzles for exclusive content.

Collaborations:

Work with influencers in the gaming and mystery-solving communities.

Host live events where fans can solve real-life puzzles inspired by the game.

Merchandise:

Collectible action figures of the crew.

A companion Mystery Journal for players to track clues and theories.

#newreflections#new reflections#Newreflectionswomensshelter#Videogame#Babysitters club#Novels#PlayStation 7#DearDearestBrands

Note

Pebbles is an amazing creator and I love his Yandere king series, but I'm drawn away from his channel because of the AI use.

Understandable. I really wish he had stuck to using the picrews he was using for his more story-driven audios, or commissioned other artists. Hell, having a blank screen would have been better than AI. He's a super talented VA, but he's not without criticism.

Text

INTRODUCTION

Artificial Intelligence (AI) tools are digital applications that use intelligent algorithms to simulate human-like thinking and behavior. These tools help automate tasks, generate creative content, analyze large datasets, and make decisions based on real-time data. AI tools are used across industries such as education, marketing, design, healthcare, finance, and more.

Voice & Audio Tools

AI can now create human-like voices, convert text to speech, or even translate and dub content into different languages.

AI Design Tools

Design tools use AI to generate logos, edit images, remove backgrounds, and recommend designs.

AI Video Editors

AI-powered editors can cut, trim, enhance, and even auto-caption videos with very little manual work.

Marketing & SEO Tools

AI helps marketers with keyword research, SEO optimization, content scoring, and campaign automation.

AI writing assistants improve student performance by making information more accessible, supporting personalized learning, and improving time management. Beyond the flashy tech, they provide real, measurable value:

24/7 Learning Support: Students no longer need to wait for office hours or tutor availability.

Improved Academic Outcomes: AI-driven tools, such as chatbots and study aids, help students grasp complex concepts more effectively.

Reduced Burnout: AI automates repetitive tasks, such as summarizing texts, organizing notes, and scheduling.

The Benefits of WorkBot for Educational Institutions

Why Universities Select WorkBot:

Complete Coverage: Responds to 80% of typical student questions without the need for human assistance.

Simple Integration: No-code configuration that is compatible with current university systems.

Scalable Solution: Handles thousands of conversations at once.

Customizable: Adapts to university-specific policies and procedures.

Analytics Dashboard: Provides insights into student needs and operational efficiency.

CONCLUSION

AI tools have rapidly become essential in today’s digital world. From writing content and designing visuals to automating tasks and enhancing learning, these tools are transforming how we work, learn, and create. Whether you're a student, entrepreneur, content creator, or marketer, AI tools can help you save time, boost productivity, and unlock new levels of creativity.

As technology continues to evolve, the role of AI tools will only grow stronger. Embracing them today not only gives you a competitive edge but also prepares you for the future of work and innovation. Start exploring the power of AI tools now and discover how they can simplify your life and amplify your potential.

Text

Something I am noticing lately on our current 'content and engagement-driven' internet is the mass platforming of stuff that 'sounds like it makes sense to a human being' as its only basis. It resembles 'a real thing' that people can hear of, but it's served completely blind to knowledge of the topic at hand.

It's not quite misinformation because it doesn't have to be an intentional trick, a fringe conspiracy theory, 'fake news'-style propaganda, or something with any kind of comprehensible 'goal.' And it's often fully automated: it's about feedback loops in the selection of content, not 'content creation.'

So the system has 'accurate' information on how, and how often, people select A, B, or C... but no information about what A, B, or C are, or any factual information about them. It's sort of like how 'AI art' will return output based on prompts and vast amounts of indexed 'reference' and training data, but doesn't know 'what it's looking at.'

The result is similar to 'enshittification' but doesn't have to do with gutting usability to deliver returns to investors. It has to do with automated systems self-gutting a platform's usability because they do not actually 'know' anything, they just evaluate the navigation patterns of prior users.

This is a really simple, innocent, non-malicious starting point:

This is an audio-based youtube video that was made by a human being (I assume) who mixed Blue Noise and Violet Noise with the sounds of actual blueberries being shaken in a box for relaxation purposes. There's nothing inherently wrong with it. Please don't find and bother the blueberry noise person, nothing (that I know of) is their fault.

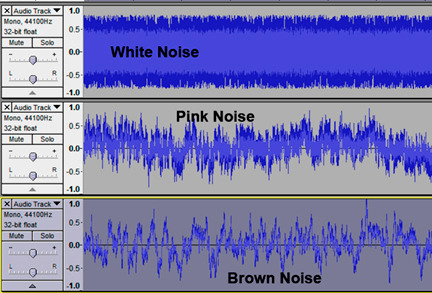

The thing is, 'white noise/pink noise/brown noise' are specific audio patterns that are sometimes studied for their benefits on concentration, sleep quality, etc. That's why people are searching for them on the internet: they want practical benefits. There are other 'color' noises, but any benefits are even less well-known, or their application for study is very specific (Violet Noise is studied for tinnitus relief, I believe).
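To make the point about "specific audio patterns" concrete, here is a minimal stdlib Python sketch of how a few noise colors relate to each other. It assumes the textbook definitions:

- White noise is independent random samples.
- Brown noise is a running sum of white noise. The small leak of 0.99 is an assumption of this sketch, added to keep the sum from drifting off.
- Violet noise is the sample-to-sample difference of white noise.

Pink noise needs a more involved filter and is left out. The lag-1 autocorrelation at the end shows the difference between them. Brown noise changes slowly, while violet noise keeps flipping direction.

```python
import random


def white_noise(n: int, seed: int = 0) -> list[float]:
    """Independent Gaussian samples: roughly flat power across frequencies."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def brown_noise(white: list[float], leak: float = 0.99) -> list[float]:
    """Leaky running sum of white noise: power falls off roughly as 1/f^2."""
    out, acc = [], 0.0
    for w in white:
        acc = leak * acc + w  # the leak (an assumption here) keeps the sum bounded
        out.append(acc)
    return out


def violet_noise(white: list[float]) -> list[float]:
    """First difference of white noise: power rises roughly as f^2."""
    return [b - a for a, b in zip(white, white[1:])]


def lag1_autocorrelation(x: list[float]) -> float:
    """How strongly each sample resembles the one before it (-1..+1)."""
    mean = sum(x) / len(x)
    dev = [v - mean for v in x]
    num = sum(a * b for a, b in zip(dev, dev[1:]))
    den = sum(d * d for d in dev)
    return num / den


w = white_noise(20_000)
for name, signal in [("white", w), ("brown", brown_noise(w)), ("violet", violet_noise(w))]:
    print(f"{name:>6}: lag-1 autocorrelation = {lag1_autocorrelation(signal):+.2f}")
```

White noise should land near 0, brown noise close to +1, and violet noise near -0.5. These are three measurably different signals, not interchangeable labels.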

While the blueberry noise video is just for fun, for the benefit of anyone who enjoys it, it exists within a 'topic' that a platform like Youtube has matched to be 'about' some practical benefit. But you don't need to actually know anything about Pink Noise or Brown Noise to get to that topic, you just need to search 'concentration noise.'

You could plonk down a 10 hour video of your own farts and title it "rainbow noise for homework focus!" and the algorithm cannot 'know' that no part of what you posted is "a real thing." It would only see the activity metrics, who got to it from where, and how popular you are, and its potential to keep users engaging with the platform.
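Here is a toy illustration of that point, with invented titles and numbers. It is a hypothetical ranker that scores videos only on behavior. The `grounded_in_evidence` field exists in the data, but nothing in the scoring reads it, so flipping it changes nothing. This is not YouTube's actual system, just the shape of the problem.

```python
from dataclasses import dataclass


@dataclass
class Video:
    title: str
    clicks: int
    watch_minutes: int
    grounded_in_evidence: bool  # a human would care; the ranker below never reads it


def engagement_score(v: Video) -> float:
    # Hypothetical score built only from behavior: clicks plus weighted watch time.
    return v.clicks + 0.5 * v.watch_minutes


def rank(videos: list[Video]) -> list[Video]:
    # Highest engagement first; whether the content is real plays no part.
    return sorted(videos, key=engagement_score, reverse=True)


catalog = [
    Video("Brown noise for sleep", clicks=900, watch_minutes=4000, grounded_in_evidence=True),
    Video("White noise for babies", clicks=600, watch_minutes=2500, grounded_in_evidence=True),
    Video("Rainbow noise for homework focus!", clicks=2000, watch_minutes=9000, grounded_in_evidence=False),
]

for v in rank(catalog):
    print(f"{engagement_score(v):>7.0f}  {v.title}")
```

The made-up "rainbow noise" video lands on top purely because of its numbers.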

People navigate 'white noise/pink noise/brown noise' for ADHD relief, to soothe their babies to sleep, or to help with chronic insomnia or headaches. Even if studies on the effectiveness of 'well known' color noises are still only preliminary (this study only had 22 participants!), there is some basis for their benefit... but no evidence yet that supports the benefit in mixing different color noise patterns, like the blueberry noise video. It's just for fun. The issue is that Youtube doesn't know what 'just for fun' is.

If you listen to enough audio pattern content to encounter our blueberry noise friend you probably are going to run into 'Alpha/Beta/Gamma Frequencies', 'Solfeggio Tones', and 'Binaural Beats.' But, again, the algorithm has no real human knowledge: it doesn't 'know' the difference between any of these things or what they're studied for, or if any real benefit has been confirmed. But content reflecting machine curation's inability to understand will float to the top, based on user activity patterns:

Alpha waves have nothing to do with being an alpha chad. But through association without comprehension, platforms have 'learned' what 'alpha' means and as a result content will appear and be promoted that bridges multiple ways people use 'alpha' as a search term.

High frequencies produced by the human brain have been associated with memory recall and learning, but listening to high frequencies as 'brain waves' has nothing to do with this, and especially not a dystopian drive to 'increase productivity and output.' But people are searching for how to do that, because of the demand their lives place on them.

There is no sound frequency you can listen to that heals the body or reduces inflammation but due to this principle I'm describing that's messing with the internet, you can search this on Google and it will now not give you any credible information on the first page, it will assume that what you're talking about is legitimate and show you results that 'you're looking for':

It will not assume you would be interested in a 'no' or 'this is not real.' None of the Page 1 Google Search Results pictured above are based in any scientific fact. Maybe if you're a stressed-out zebrafish. Legitimate medical practices that use frequencies are like... shock wave lithotripsy, which breaks kidney stones apart. But once a web platform records people searching for audio content that confers dramatic 'real' results, it will retrieve other kinds of content as if it's credible:

I think it goes without saying that this has no basis in fact and it's not just somebody's fun entertainment project, and it's not something a typical person would seek out or casually believe to be true.

Anyway. The blueberry noise is fun and fine. But the current internet is on a form of evil autopilot that can't discern white noise intended to soothe babies from the innocent only-for-fun blueberry noise from The Law of Attraction. It's like the perfect totally-blind robot salesperson without ethics or morals of any kind. If you ask it for medicine it will eventually sell you cyanide pills simply because they are pills and so many people are interested in cyanide these days.

Text

The Evolution of DJ Controllers: From Analog Beginnings to Intelligent Performance Systems

The DJ controller has undergone a remarkable transformation—what began as a basic interface for beat matching has now evolved into a powerful centerpiece of live performance technology. Over the years, the convergence of hardware precision, software intelligence, and real-time connectivity has redefined how DJs mix, manipulate, and present music to audiences.

For professional audio engineers and system designers, understanding this technological evolution is more than a history lesson—it's essential knowledge that informs how modern DJ systems are integrated into complex live environments. From early MIDI-based setups to today's AI-driven, all-in-one ecosystems, this blog explores the innovations that have shaped DJ controllers into the versatile tools they are today.

The Analog Foundation: Where It All Began

The roots of DJing lie in vinyl turntables and analog mixers. These setups emphasized feel, timing, and technique. There were no screens, no sync buttons—just rotary EQs, crossfaders, and the unmistakable tactile response of a needle on wax.

For audio engineers, these analog rigs meant clean signal paths and minimal processing latency. However, flexibility was limited, and transporting crates of vinyl to every gig was logistically demanding.

The Rise of MIDI and Digital Integration

The early 2000s brought the integration of MIDI controllers into DJ performance, marking a shift toward digital workflows. Devices like the Vestax VCI-100 and Hercules DJ Console enabled control over software like Traktor, Serato, and VirtualDJ. This introduced features such as beat syncing, cue points, and FX without losing physical interaction.
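For readers newer to this, a fader move on a MIDI controller arrives as a 3-byte Control Change message. The first byte is a status byte whose high nibble is 0xB, with the MIDI channel in the low nibble. It is followed by a 7-bit controller number and a 7-bit value.

The sketch below parses one message and turns it into deck gains. It makes two assumptions:

- Controller number 16 is a hypothetical placeholder. Each device documents its own mapping.
- The constant-power cos/sin curve is one common crossfader curve, not the behavior of any particular product or software.

```python
import math

CROSSFADER_CC = 16  # hypothetical: real controllers document their own CC numbers


def parse_control_change(msg: bytes):
    """Return (channel, controller, value) for a 3-byte MIDI CC message, else None."""
    if len(msg) != 3:
        return None
    status, controller, value = msg
    if status & 0xF0 != 0xB0:  # high nibble 0xB means Control Change
        return None
    if controller > 127 or value > 127:  # MIDI data bytes are 7-bit
        return None
    return status & 0x0F, controller, value


def crossfader_gains(value: int) -> tuple[float, float]:
    """Map a 7-bit value (0..127) to constant-power gains for decks A and B."""
    pos = value / 127
    return math.cos(pos * math.pi / 2), math.sin(pos * math.pi / 2)


msg = bytes([0xB0, CROSSFADER_CC, 64])  # channel 1 (0 on the wire), fader near the middle
parsed = parse_control_change(msg)
if parsed and parsed[1] == CROSSFADER_CC:
    gain_a, gain_b = crossfader_gains(parsed[2])
    print(f"deck A {gain_a:.3f}, deck B {gain_b:.3f}")
```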

From an engineering perspective, this era introduced complexities such as USB data latency, audio driver configurations, and software-to-hardware mapping. However, it also opened the door to more compact, modular systems with immense creative potential.
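Much of that latency comes from audio buffering. A buffer of N samples at sample rate fs takes N / fs seconds to fill, and a signal typically passes through both an input buffer and an output buffer. Here is a quick calculator, assuming that simple model; real drivers and converters add their own overhead on top.

```python
def buffer_latency_ms(buffer_samples: int, sample_rate_hz: int) -> float:
    """Time to fill one audio buffer, in milliseconds."""
    return 1000.0 * buffer_samples / sample_rate_hz


for size in (64, 128, 256, 512, 1024):
    one_way = buffer_latency_ms(size, 44_100)
    # Doubling is a rough in+out estimate, not a measured round-trip figure.
    print(f"{size:>5} samples @ 44.1 kHz: {one_way:5.2f} ms per buffer, ~{2 * one_way:5.2f} ms in+out")
```

A 256-sample buffer at 44.1 kHz adds about 5.8 ms per pass. This is why smaller buffers feel tighter but demand more from the CPU and driver.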

Controllerism and Creative Freedom

Between 2010 and 2015, the concept of controllerism took hold. DJs began customizing their setups with multiple MIDI controllers, pad grids, FX units, and audio interfaces to create dynamic, live remix environments. Brands like Native Instruments, Akai, and Novation responded with feature-rich units that merged performance hardware with production workflows.

Technical advancements during this period included:

High-resolution jog wheels and pitch faders

Multi-deck software integration

RGB velocity-sensitive pads

Onboard audio interfaces with 24-bit output

HID protocol for tighter software-hardware response

These tools enabled a new breed of DJs to blur the lines between DJing, live production, and performance art—all requiring more advanced routing, monitoring, and latency optimization from audio engineers.
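"High-resolution" faders and jog wheels matter because a standard MIDI data byte carries only 7 bits, or 128 steps. The MIDI 1.0 spec lets a controller send 14-bit values by pairing controllers 0 to 31 (most significant byte) with controllers 32 to 63 (least significant byte). HID and proprietary protocols are other routes, and which one a given device uses varies.

The sketch below combines such a pair and compares step sizes for a pitch fader. The ±8% range is assumed purely as an example.

```python
def combine_14bit(msb: int, lsb: int) -> int:
    """Combine MSB (CC n) and LSB (CC n+32) data bytes into a 0..16383 value."""
    if not (0 <= msb <= 127 and 0 <= lsb <= 127):
        raise ValueError("MIDI data bytes are 7-bit (0..127)")
    return (msb << 7) | lsb


def pitch_step_percent(pitch_range_percent: float, bits: int) -> float:
    """Smallest tempo change a fader can express across a +/- range."""
    return 2 * pitch_range_percent / ((1 << bits) - 1)


print(f"7-bit  fader, +/-8%: {pitch_step_percent(8, 7):.4f}% per step")
print(f"14-bit fader, +/-8%: {pitch_step_percent(8, 14):.6f}% per step")
```

At 7 bits each step is about 0.126% of tempo, coarse enough to cause audible drift in a long blend. At 14 bits it shrinks to under 0.001%.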

All-in-One Systems: Power Without the Laptop

As processors became more compact and efficient, DJ controllers began to include embedded CPUs, allowing them to function independently from computers. Products like the Pioneer XDJ-RX and Denon Prime 4 revolutionized the scene by delivering laptop-free performance with powerful internal architecture.

Key engineering features included:

Multi-core processing with low-latency audio paths

High-definition touch displays with waveform visualization

Dual USB and SD card support for redundancy

Built-in Wi-Fi and Ethernet for music streaming and cloud sync

Zone routing and balanced outputs for advanced venue integration

For engineers managing live venues or touring rigs, these systems offered fewer points of failure, reduced setup times, and greater reliability under high-demand conditions.

Embedded AI and Real-Time Stem Control

One of the most significant breakthroughs in recent years has been the integration of AI-driven tools. Systems now offer real-time stem separation, powered by machine learning models that can isolate vocals, drums, bass, or instruments on the fly. Solutions like Serato Stems and Engine DJ OS have embedded this functionality directly into hardware workflows.

This allows DJs to perform spontaneous remixes and mashups without needing pre-processed tracks. From a technical standpoint, it demands powerful onboard DSP or GPU acceleration and raises the bar for system bandwidth and real-time processing.

For engineers, this means preparing systems that can handle complex source isolation and downstream processing without signal degradation or sync loss.
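Once a model has separated a track into stems, the performance side is comparatively simple: each stem is scaled by its own gain and the results are summed back together. This sketch makes three assumptions:

- The stems are already separated. The separation model itself is not shown.
- The stems are time-aligned.
- The stems are equal in length.

With real models, the stems only approximately sum back to the original mix. Muting drums and bass gives a rough acapella:

```python
def mix_stems(stems: dict[str, list[float]], gains: dict[str, float]) -> list[float]:
    """Sum separated stems sample by sample, scaling each by its gain (default 1.0)."""
    lengths = {len(s) for s in stems.values()}
    if len(lengths) != 1:
        raise ValueError("need at least one stem, and all stems must have the same length")
    n = lengths.pop()
    out = [0.0] * n
    for name, samples in stems.items():
        g = gains.get(name, 1.0)  # stems not mentioned play at unity gain
        for i, x in enumerate(samples):
            out[i] += g * x
    return out


# Hypothetical, tiny stems: three samples each.
stems = {
    "vocals": [0.2, 0.1, -0.1],
    "drums": [0.5, -0.4, 0.3],
    "bass": [0.1, 0.1, 0.1],
}
acapella = mix_stems(stems, {"drums": 0.0, "bass": 0.0})
print(acapella)
```

In a live system the same per-sample work runs on every audio block. It has to finish within that block's duration to avoid dropouts.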

Cloud Connectivity & Software Ecosystem Maturity

Today’s DJ controllers are not just performance tools—they are part of a broader ecosystem that includes cloud storage, mobile app control, and wireless synchronization. Platforms like rekordbox Cloud, Dropbox Sync, and Engine Cloud allow DJs to manage libraries remotely and update sets across devices instantly.

This shift benefits engineers and production teams in several ways:

Faster changeovers between performers using synced metadata

Simplified backline configurations with minimal drive swapping

Streamlined updates, firmware management, and analytics

Improved troubleshooting through centralized data logging

The era of USB sticks and manual track loading is giving way to seamless, cloud-based workflows that reduce risk and increase efficiency in high-pressure environments.

Hybrid & Modular Workflows: The Return of Customization

While all-in-one units dominate, many professional DJs are returning to hybrid setups—custom configurations that blend traditional turntables, modular FX units, MIDI controllers, and DAW integration. This modularity supports a more performance-oriented approach, especially in experimental and genre-pushing environments.

These setups often require:

MIDI-to-CV converters for synth and modular gear integration

Advanced routing and clock sync using tools like Ableton Link

OSC (Open Sound Control) communication for custom mapping

Expanded monitoring and cueing flexibility

This renewed complexity places greater demands on engineers, who must design systems that are flexible, fail-safe, and capable of supporting unconventional performance styles.
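Two conversions these hybrid rigs lean on can be sketched with simple arithmetic:

- **MIDI timing clock.** It sends 24 pulses per quarter note, so the spacing between pulses follows directly from the tempo. Ableton Link syncs tempo over the network in a different way and is not modeled here.
- **MIDI-to-CV.** The sketch assumes the common 1 V/octave pitch standard. Which MIDI note sits at 0 V varies between converters; note 36 here is an assumption.

```python
MIDI_CLOCK_PPQN = 24  # MIDI timing clock (0xF8) runs at 24 pulses per quarter note


def midi_clock_interval_ms(bpm: float) -> float:
    """Spacing between MIDI clock pulses at a given tempo."""
    return 60_000.0 / (bpm * MIDI_CLOCK_PPQN)


def note_to_cv(note: int, zero_volt_note: int = 36) -> float:
    """1 V/octave pitch CV: each semitone is 1/12 V away from the 0 V reference note.

    zero_volt_note is an assumption; converters differ in which note sits at 0 V.
    """
    return (note - zero_volt_note) / 12.0


print(f"124 BPM -> {midi_clock_interval_ms(124):.3f} ms between clock pulses")
print(f"MIDI note 48 -> {note_to_cv(48):.3f} V")
```

At club tempos, clock pulses arrive roughly every 20 ms. Jitter of even a few milliseconds is therefore a noticeable fraction of each tick.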

Looking Ahead: AI Mixing, Haptics & Gesture Control

As we look to the future, the next phase of DJ controllers is already taking shape. Innovations on the horizon include:

AI-assisted mixing that adapts in real time to crowd energy

Haptic feedback jog wheels that provide dynamic tactile response

Gesture-based FX triggering via infrared or wearable sensors

Augmented reality interfaces for 3D waveform manipulation

Deeper integration with lighting and visual systems through DMX and timecode sync

For engineers, this means staying ahead of emerging protocols and preparing venues for more immersive, synchronized, and responsive performances.

Final Thoughts

The modern DJ controller is no longer just a mixing tool—it's a self-contained creative engine, central to the live music experience. Understanding its capabilities and the technology driving it is critical for audio engineers who are expected to deliver seamless, high-impact performances in every environment.

Whether you’re building a club system, managing a tour rig, or outfitting a studio, choosing the right gear is key. Sourcing equipment from a trusted professional audio retailer—online or in-store—ensures not only access to cutting-edge products but also expert guidance, technical support, and long-term reliability.

As DJ technology continues to evolve, so too must the systems that support it. The future is fast, intelligent, and immersive—and it’s powered by the gear we choose today.

Text

On a stifling April afternoon in Ajmer, in the Indian state of Rajasthan, local politician Shakti Singh Rathore sat down in front of a greenscreen to shoot a short video. He looked nervous. It was his first time being cloned.

Wearing a crisp white shirt and a ceremonial saffron scarf bearing a lotus flower—the logo of the BJP, the country’s ruling party—Rathore pressed his palms together and greeted his audience in Hindi. “Namashkar,” he began. “To all my brothers—”

Before he could continue, the director of the shoot walked into the frame. Divyendra Singh Jadoun, a 31-year-old with a bald head and a thick black beard, told Rathore he was moving around too much on camera. Jadoun was trying to capture enough audio and video data to build an AI deepfake of Rathore that would convince 300,000 potential voters around Ajmer that they’d had a personalized conversation with him—but excess movement would break the algorithm. Jadoun told his subject to look straight into the camera and move only his lips. “Start again,” he said.

Right now, the world’s largest democracy is going to the polls. Close to a billion Indians are eligible to vote as part of the country’s general election, and deepfakes could play a decisive, and potentially divisive, role. India’s political parties have exploited AI to warp reality through cheap audio fakes, propaganda images, and AI parodies. But while the global discourse on deepfakes often focuses on misinformation, disinformation, and other societal harms, many Indian politicians are using the technology for a different purpose: voter outreach.

Across the ideological spectrum, they’re relying on AI to help them navigate the nation’s 22 official languages and thousands of regional dialects, and to deliver personalized messages in farther-flung communities. While the US recently made it illegal to use AI-generated voices for unsolicited calls, in India sanctioned deepfakes have become a $60 million business opportunity. More than 50 million AI-generated voice clone calls were made in the two months leading up to the start of the elections in April—and millions more will be made during voting, one of the country’s largest business messaging operators told WIRED.

Jadoun is the poster boy of this burgeoning industry. His firm, Polymath Synthetic Media Solutions, is one of many deepfake service providers from across India that have emerged to cater to the political class. This election season, Jadoun has delivered five AI campaigns so far, for which his company has been paid a total of $55,000. (He charges significantly less than the big political consultants—125,000 rupees [$1,500] to make a digital avatar, and 60,000 rupees [$720] for an audio clone.) He’s made deepfakes for Prem Singh Tamang, the chief minister of the Himalayan state of Sikkim, and resurrected Y. S. Rajasekhara Reddy, an iconic politician who died in a helicopter crash in 2009, to endorse his son Y. S. Jagan Mohan Reddy, currently chief minister of the state of Andhra Pradesh. Jadoun has also created AI-generated propaganda songs for several politicians, including Tamang, a local candidate for parliament, and the chief minister of the western state of Maharashtra. “He is our pride,” ran one song in Hindi about a local politician in Ajmer, with male and female voices set to a peppy tune. “He’s always been impartial.”

While Rathore isn’t up for election this year, he’s one of more than 18 million BJP volunteers tasked with ensuring that the government of Prime Minister Narendra Modi maintains its hold on power. In the past, that would have meant spending months crisscrossing Rajasthan, a desert state roughly the size of Italy, to speak with voters individually, reminding them of how they have benefited from various BJP social programs—pensions, free tanks for cooking gas, cash payments for pregnant women. But with the help of Jadoun’s deepfakes, Rathore’s job has gotten a lot easier.

He’ll spend 15 minutes here talking to the camera about some of the key election issues, while Jadoun prompts him with questions. But it doesn’t really matter what he says. All Jadoun needs is Rathore’s voice. Once that’s done, Jadoun will use the data to generate videos and calls that will go directly to voters’ phones. In lieu of a knock at their door or a quick handshake at a rally, they’ll see or hear Rathore address them by name and talk with eerie specificity about the issues that matter most to them and ask them to vote for the BJP. If they ask questions, the AI should respond—in a clear and calm voice that’s almost better than the real Rathore’s rapid drawl. Less tech-savvy voters may not even realize they’ve been talking to a machine. Even Rathore admits he doesn’t know much about AI. But he understands psychology. “Such calls can help with swing voters.”

Text

Music Downloads: Embracing Innovation and Convenience

The way we download and experience music is evolving at an exciting pace. From physical CDs to digital files and streaming services, music consumption has undergone significant changes. Looking ahead, the future of music downloads promises even more innovation, offering new ways to access, enjoy, and interact with music. In this article, we explore the possibilities of how music downloading will evolve in the years to come, with a focus on emerging technologies and trends that will shape the industry.

The Dominance of Streaming Platforms

Streaming platforms have already transformed the way we listen to music, and their influence will only continue to grow. Services like Spotify, Apple Music, and Amazon Music offer users instant access to millions of songs from virtually any device, making downloading less necessary for many.

In the future, these platforms will become even more powerful. AI-driven algorithms will enhance the listening experience, delivering hyper-personalized playlists, discovering new artists, and creating seamless music experiences for users. Streaming will likely become the go-to method of music consumption, as it offers unmatched convenience and accessibility.

The Rise of High-Quality Audio Formats

While MP3 files have dominated digital music for years, the future will bring higher-quality audio formats to the forefront. Lossless audio formats such as FLAC and ALAC are becoming increasingly popular, providing superior sound that appeals to audiophiles and casual listeners alike.
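The size gap between formats is easy to put in numbers. Uncompressed PCM bitrate is sample rate × bit depth × channels. The sketch below compares CD-quality audio with a 320 kbps MP3 for a four-minute track. FLAC and ALAC files land somewhere in between, depending on how compressible the music is, so no fixed compression ratio is assumed for them.

```python
def pcm_bitrate_kbps(sample_rate_hz: int, bit_depth: int, channels: int) -> float:
    """Bitrate of uncompressed PCM audio in kilobits per second."""
    return sample_rate_hz * bit_depth * channels / 1000


def file_size_mb(bitrate_kbps: float, seconds: float) -> float:
    """Size in megabytes (10^6 bytes) for a constant bitrate."""
    return bitrate_kbps * 1000 * seconds / 8 / 1_000_000


cd = pcm_bitrate_kbps(44_100, 16, 2)  # CD-quality stereo
track = 4 * 60                        # a four-minute track, in seconds
print(f"Uncompressed CD audio: {cd:.1f} kbps, {file_size_mb(cd, track):.1f} MB")
print(f"MP3 at 320 kbps:       {file_size_mb(320, track):.1f} MB")
```

CD audio works out to 1411.2 kbps, or about 42.3 MB for four minutes, versus 9.6 MB for the MP3. A lossless file keeps every bit of the former while usually taking less space than it.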

As technology continues to advance, we may see even better audio formats emerge, offering crystal-clear sound quality. High-definition audio, combined with innovations like 3D sound, will likely make music downloading more immersive and enjoyable, creating an even richer listening experience.

Cloud-Based Music Storage

The convenience of cloud storage is already a game-changer for music lovers. It allows users to store their music libraries online and access them from any device, anywhere. This trend will only grow, offering more advanced cloud storage solutions that make it easier to manage personal music collections without worrying about storage space.

In the future, music downloading may become more integrated with cloud services, allowing users to store and access songs effortlessly. With seamless synchronization across multiple devices, users will enjoy the freedom to download and listen to their music with complete flexibility, no matter where they are.

Virtual and Augmented Reality Music Experiences

Virtual reality (VR) and augmented reality (AR) are on the rise in many industries, and music is no exception. In the near future, VR and AR will open up new possibilities for how we experience music.

Imagine downloading your favorite tracks and then stepping into a virtual concert environment, where you can attend live shows in 3D, interact with artists, or even explore immersive virtual music festivals. These technologies will allow for more engaging and interactive music experiences, offering fans new ways to connect with their favorite artists and discover new music.

AI and Personalized Music Creation

Artificial intelligence is already influencing the music industry, from AI-generated music to personalized playlist curation. As AI continues to evolve, it will further shape the future of music downloading.

We can expect AI to play a significant role in music creation, with artists collaborating with AI to produce innovative tracks. AI will also continue to refine music recommendation algorithms, offering more accurate and personalized suggestions for listeners. This will make it easier to discover new music tailored to individual tastes, so that each download or stream is more likely to be a good fit.

Blockchain Technology for Fair Music Distribution

Blockchain technology has the potential to revolutionize how music is distributed and downloaded, giving artists a more transparent and secure way to release their work. By cutting out intermediaries like record labels, blockchain could allow direct transactions between creators and fans, helping ensure that artists are fairly compensated for their work.

In the future, blockchain could play a key role in how we purchase and download music. Fans may be able to buy songs directly from artists, eliminating middlemen and promoting a more equitable music industry. This would empower creators while ensuring fans have easy and secure access to their favorite music.

Text

Last year marked the 40th anniversary of the almost-apocalypse.

On Sept. 26, 1983, Soviet Lt. Col. Stanislav Petrov judged an early-warning alert of an inbound U.S. nuclear strike to be a false alarm and declined to report it to his superiors as a genuine attack. His decision prevented a Soviet retaliatory strike and the global nuclear exchange it would have precipitated. He thus saved billions of lives.

Today, the job of Petrov's successors is much harder, chiefly due to rapid advancements in artificial intelligence. Imagine a scenario in which Petrov receives similar alarming news, but this time it is backed by hyper-realistic footage of missile launches and a slew of other audio, video, and text material detailing a nuclear launch from the United States.

It is hard to imagine Petrov making the same decision. This is the world we live in today.

Recent advancements in AI are profoundly changing how we produce, distribute and consume information. AI-driven disinformation has affected political polarization, election integrity, hate speech, trust in science and financial scams. As half the world heads to the ballot box in 2024 and deepfakes target everyone from President Biden to Taylor Swift, the problem of misinformation is more urgent than ever before.

False information produced and spread by AI, however, does not just threaten our economy and politics. It presents a fundamental threat to national security.

Although a nuclear confrontation based on fake intelligence may seem unlikely, the stakes during crises are high and timelines are short, creating situations where fake data could well tilt the balance toward nuclear war.

The evolution of nuclear systems has led to further ambiguity in crises and shorter timeframes for verifying intelligence. An intercontinental ballistic missile from Russia could reach the U.S. within 25 minutes. A submarine-launched ballistic missile could arrive even sooner. Many modern missiles carry ambiguous payloads, making it unclear whether they are nuclear-tipped. AI tools for verifying the authenticity of content are not sufficiently reliable, making this ambiguity difficult to resolve in a short window.

[...]

The problem of fake information is also relevant at the level of response. As COVID-19 demonstrated, the proliferation of misinformation led to a less effective public health response and many more infections and deaths. A future response could be significantly hampered by ordinary citizens' ability to manufacture compelling false information about a pathogen's origins and remedies that mimics the quality and style of a scientific journal article.

In cybersecurity, spear phishing (deceiving a specific person with targeted false information or a false claim of authority) likewise proliferates with the emergence of advanced generative AI systems. State-of-the-art AI systems allow more actors with less technical expertise to craft believable narratives about their positions and requests, helping them extract information from unwitting victims.

The same tactics deployed for financial schemes have been used against personnel occupying important positions in government. Some of these efforts were successful. With ever-improving AI systems lowering the barriers to carrying out such attacks, they may become far more effective and frequent.

Clearly, AI-powered disinformation is a fundamental risk to safety and security. A central strategy to mitigate this threat must start at the source. The most powerful systems — produced by a handful of tech companies — must be scrutinized for such disinformation risks before they are developed and deployed. Systems presenting the potential for such harm must be prevented from release until safeguards are in place to eliminate these risks.

Text

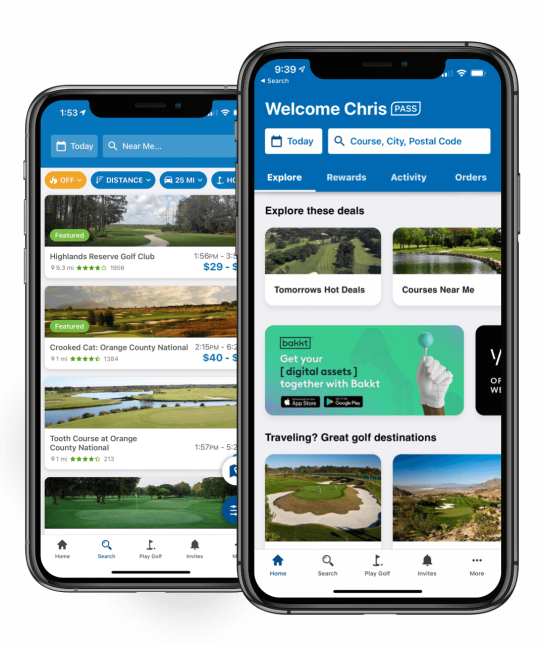

Top 9 Golf Apps to Elevate Your Game in 2025

Technology has transformed the way golfers train, track progress, and even book tee times. With apps offering features like AI-powered coaching, GPS tracking, and social networking, golfers can take their game to new heights.

Here’s a look at the best golf apps of 2025, ranked based on their relevance and usefulness.

1. Golfshot – Best All-in-One Golf App

If you’re looking for a single app that does it all, Golfshot is the way to go. From club recommendations to GPS yardages and even 3D course flyovers, it provides in-depth analytics to help golfers improve their game.

Key Features:

AI-driven club recommendation

GPS distances to targets and hazards

3D flyovers of courses

Digital scorecards

Golf instruction videos

Price:

Golfshot Pro: $59.99/year

Golfshot Pro + Golfplan: $79.98/year

Golfplan: $19.99/year

2. 18 Birdies – Best AI Golf Coach App

18 Birdies is more than just a GPS rangefinder—it's an AI-powered coach. It analyzes your swing and provides actionable insights in minutes. The app also includes a library of drills and tracking tools.

Key Features:

AI-powered swing analysis

GPS rangefinder

Handicap tracker

Digital scorecard

Virtual caddy

Price:

Free (Premium membership available for $19.99/month or $99/year)

3. GolfLogix – Best for Game Improvement

For golfers who want to sharpen their skills, GolfLogix offers real-time shot tracking, club recommendations, and putting guidance. This app provides advanced stats that help break down your game in detail.

Key Features:

GPS distance tracking

3D hole overviews

Putt line guidance

Apple Watch compatibility

Price: $12.99/month or $59.99/year

4. Shot Tracer – Best for Ball Flight Tracking

Ever hit a shot and lost track of where it went? Shot Tracer visualizes ball flight, tracks swings, and even offers fun special effects for replaying shots.

Key Features:

Strobe-motion swing tracking

120 FPS and 240 FPS recording

Special effects for fun replays

Compatible with iOS, Android, and Windows

Price: $5.99

5. TheGrint – Best Handicap Tracker

If you’re serious about tracking your progress, TheGrint is a must-have. It offers free USGA handicap tracking and a GPS rangefinder to help you assess your shots.

Key Features:

GPS rangefinder

Digital scorecard

Tournament organization tools

Free USGA handicap tracking

Price:

Free (Premium membership available for $19.99/year)

6. Hole19 – Best Free Golf App

For golfers who want a free tool with premium-level features, Hole19 is a great pick. Its GPS tracker and intuitive interface make it an excellent choice for players on a budget.

Key Features:

GPS rangefinder

Digital scorecard

Course previews

Social features

Price:

Free (Premium plan: $7.99/month or $49.99/year)

7. GolfNow – Best for Booking Tee Times

Booking tee times has never been easier with GolfNow. It provides access to over 9,000 courses and often features exclusive discounts on tee times.

Key Features:

Easy online booking

Exclusive discounts

24/7 access

Integrated payment system

Price:

Free (Premium membership: $99/year)

8. Imagine Golf – Best for the Mental Game

A strong mental game is just as important as physical skills. Imagine Golf provides mindset training, visualization techniques, and course management lessons through 400+ audio sessions.

Key Features:

Pre-shot routine training

Course strategy guides

Daily golf mindset lessons

Save and share favorite lessons

Price:

7-day free trial, then $4.99/month or $59.99/year

9. Fairgame – Best Social Golf App

Looking to connect with other golfers? Fairgame, co-created by pro golfer Adam Scott, helps players track scores and compete against friends in a digital clubhouse.

Key Features:

Social networking for golfers

Score and stat tracking

Course discovery

Friendly wagering options

Price: Free

Final Thoughts

Whether you’re looking for a personal AI coach, a GPS tracker, or a tee-time booking app, these top 9 golf apps of 2025 will help you enhance your skills and enjoy the game more than ever. Try them out and find the best fit for your playing style!

#golf news#pga#pga tour#golf tips#bfdi golf ball#golf events#golf club#golfswing#crazy golf#golf wang#golf instructor#mini golf#birdie wing golf girls' story

Text

🎙️ Elevate Your Voice with WellSaidLabs - The AI-Powered Voice Creation Platform

In today's digital landscape, the power of the human voice has become more important than ever. 🗣️ Whether you're creating voice-over content, virtual assistants, or audio experiences, having the right tools to craft a compelling, authentic-sounding voice is essential. Enter WellSaidLabs - the AI-powered platform that's redefining the future of voice creation.

✨ What is WellSaidLabs?

WellSaidLabs is a cutting-edge platform that utilizes advanced AI and machine learning to generate high-quality, natural-sounding synthetic voices. With a vast library of voice models and customization options, you can bring your voice-driven projects to life with unparalleled realism and flexibility.

🛠️ Key Features:

Extensive Voice Model Library: Choose from a diverse range of professional-grade voice models, covering multiple languages, accents, and styles.

Hyper-Realistic Voice Synthesis: WellSaidLabs' AI engine produces remarkably natural-sounding audio, indistinguishable from a human recording.

Seamless Voice Customization: Easily adjust the tone, pitch, and other parameters to perfectly match your brand or project's needs.

Text-to-Speech Capabilities: Generate lifelike audio from any text input, with precise lip-syncing and natural-sounding inflections.

Scalable & Collaborative Platform: Work together with your team to create and manage your voice assets.

💡 Why WellSaidLabs is a Game-Changer:

Enhances User Experience: Captivate your audience with authentic, engaging voice experiences.

Streamlines Content Production: Simplify the voice creation process and bring your projects to market faster.

Boosts Accessibility: Ensure your content is accessible to a wider audience through high-quality text-to-speech functionality.

Fosters Brand Consistency: Develop a distinctive, recognizable voice that reinforces your brand identity.

Ready to take your voice-driven projects to new heights? 🚀 Explore the possibilities with WellSaidLabs and start crafting unforgettable voice experiences today!

#WellSaidLabs #AIVoiceCreation #VoiceContent #TextToSpeech #DigitalExperiences
