#AIAccelerators

Explore tagged Tumblr posts

Text

#autonomous systems#smart devices#efficiency#AI#Semiconductors#AIChips#EdgeComputing#SmartDevices#DataCenters#AIAccelerators#TechInnovation#RationalStat#TimesTech#ArtificialIntelligence#ChipDesign#electronicsnews#technologynews

0 notes

Text

Edge AI Accelerator Market Forecasted to Reach USD 110.21 Billion by 2034 | CAGR: 30.7%

Edge AI Accelerator Market Analysis: Opportunities, Innovations, and Growth Potential Through 2034 Global Edge AI Accelerator Market size and share is currently valued at USD 7.60 billion in 2024 and is anticipated to generate an estimated revenue of USD 110.21 billion by 2034, according to the latest study by Polaris Market Research. Besides, the report notes that the market exhibits a robust…
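The headline figures can be sanity-checked with a few lines of arithmetic. This is a minimal sketch, assuming the forecast compounds annually over the ten years from 2024 to 2034; the function names are illustrative and do not come from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start and end value."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` once per year for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted in the post (USD billions). The 10-year span is an assumption.
implied = cagr(7.60, 110.21, 10)
projected = project(7.60, 0.307, 10)

print(f"implied CAGR: {implied:.1%}")
print(f"2034 value at 30.7% CAGR: {projected:.2f}")
```

Under that assumption the implied growth rate rounds to the quoted 30.7%, and compounding 30.7% from USD 7.60 billion lands close to the quoted 2034 figure.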

#AIaccelerators#AIchipset#AIhardware#AIinfrastructure#AIinnovation#AIperformance#AItrends#autonomoussystems#chiptechnology#EdgeAI#EdgeComputing#IoTsolutions#realtimeanalytics#smartdevices#smarttechnology

0 notes

Text

AMD Radeon RX 7700 XT price, Gaming performance, & Specs

AMD Radeon RX 7700 XT is a good upper mid-range graphics card for 1440p and 4K. AMD RDNA 3 architecture provides new features and smooth gaming.

Performance Analysis

The AMD Radeon RX 7700 XT excels mainly at high-refresh-rate 1440p gaming.

1440p Gaming:

The RX 7700 XT runs most modern games smoothly at 1440p with high settings. In rasterised workloads it competes with the RTX 4070 and outperforms the RTX 4060 Ti.

Ray Tracing:

The RX 7700 XT trails NVIDIA in demanding ray tracing games despite AMD's RDNA 3 upgrades. With its dedicated Ray Accelerators and FidelityFX Super Resolution, many ray-traced games are still playable.

FSR: FidelityFX Super Resolution

AMD's upscaling helps the RX 7700 XT. It raises frame rates with little visible loss in image quality, making demanding games playable at higher resolutions or with ray tracing. The card supports FSR 1, FSR 2, and FSR 3, which adds frame generation for compatible games.

4K Games:

The AMD Radeon RX 7700 XT can handle 4K in less demanding games with tweaked settings and FSR. A more powerful GPU from the RX 7000 series would be better for consistent frame rates at native 4K with maximum settings.

Important Features

The AMD Radeon RX 7700 XT has several features to improve gameplay and user experience:

RDNA 3 architecture: Ray Accelerators and AI Accelerators with higher performance per watt.

AMD FidelityFX suite: Radeon Super Resolution (RSR) and FSR (1, 2, and 3) boost frame rates and image quality.

Smart Access Memory (SAM): Improves performance when paired with AMD Ryzen CPUs and compatible motherboards.

AV1 hardware support: Accelerates streaming and content creation.

DisplayPort 2.1: Latest display standards for high resolutions and refresh rates.

Cooling and Power Use

The 245W RX 7700 XT needs good cooling. Partner card makers like ASUS, ASRock, Gigabyte, and Sapphire offer distinctive designs with effective cooling to maintain performance and reduce noise. For reliable operation, use a 700W power supply.

Market Positioning

In the upper mid-range of graphics cards, the AMD Radeon RX 7700 XT offers 1440p gamers a solid price-to-performance ratio. Its main competitor is NVIDIA's GeForce RTX 4060 Ti, which it often beats in rasterisation. The RTX 4070 is faster but costs more, so the RX 7700 XT remains a good choice for budget-conscious enthusiasts.

Radeon RX 7700 XT Cost

AMD Radeon RX 7700 XT pricing in USD varies by retailer, availability, and promotions. Recent reports indicate:

Amazon lists the card at $515. It originally cost $449 USD, and market prices in late 2024 to early 2025 were $349–$353.

As of May 18, 2025, the AMD Radeon RX 7700 XT costs $470 to $550 USD at major retailers. Always compare retailer prices and discounts.

In conclusion

The AMD Radeon RX 7700 XT uses the RDNA 3 architecture to deliver strong 1440p gameplay and a full feature set. Its rasterisation performance and FSR support make it a viable option for 2025 gamers who want high-end gaming without spending a fortune on a GPU. Despite its weaker ray tracing, it is a well-rounded card for its market segment.

#AMDRadeonRX7700XT#AMDRadeon#NVIDIA#AMDRDNA3#AIAccelerators#AMDRyzen#News#Technews#Techology#Technologynews#Technologytrendes#Govindhtech

0 notes

Text

Battling Bakeries in an AI Arms Race! Inside the High-Tech Doughnut Feud

#AI#TechSavvy#commercialwar#AIAccelerated#EdgeAnalytic#CloudComputing#DeepLearning#NeuralNetwork#AICardUpgradeCycle#FutureProof#ComputerVision#ModelTraining#artificialintelligence#ai#Supergirl#Batman#DC Official#Home of DCU#Kara Zor-El#Superman#Lois Lane#Clark Kent#Jimmy Olsen#My Adventures With Superman

2 notes


Text

🚀 Ready to ride the next big wave in AI + wealth?

🌌 Plug into GravityChain and let artificial intelligence accelerate your wealth — 24/7. No limits. No downtime. This isn’t the future. It’s NOW.

💡 Join the world’s first GravityChain Accelerator. 🔥 Wealth isn’t earned. It’s engineered.

#SpeakGlobal#GravityChain#AIWealthEngine#PassiveIncomeRevolution#RideTheGravityWave#AIAccelerator#Wealth24x7#FutureOfFinance#AIInvesting#Web3Innovation#SmartMoneyMoves

0 notes

Text

#EdgeAI#SystemOnModule#AIAccelerator#EmbeddedSystems#VisionAI#IoT#Virtium#DEEPX#powerelectronics#powermanagement#powersemiconductor

0 notes

Text

Introducing the FCU3501, our rugged, next-gen embedded computer powered by the Rockchip RK3588 SoC. Designed for industrial AI at the edge, it delivers up to 32 TOPS of AI performance via onboard NPU + Hailo-8 M.2 accelerator.

🔧 Key Features:

Dual NPU Architecture – 6 TOPS onboard + 26 TOPS via Hailo‑8

Supports 8K Video Encoding/Decoding – Perfect for smart vision applications

Wide Temp, Fanless Design (–40 °C to +85 °C)

Certified for Industrial Use – CE, FCC, RoHS, EMC-compliant

Modular Storage & Expansion – M.2 SSD, TF card, 4G/5G optional

Rich I/O for Smart Factories, Transportation, Buildings & more

📩 Want a spec sheet or evaluation sample? Contact us: [email protected]

#EdgeAI#RK3588#Hailo8#EmbeddedComputer#IndustrialAutomation#AIAccelerator#SmartFactory#MachineVision#ProductLaunch

0 notes

Text

The US and UAE are accelerating the future of AI. A 1GW data center. A 5GW tech cluster. A joint task force in 30 days. The countdown has begun. The future is in motion. 🇺🇸🇦🇪 #USUAEAI #FutureInMotion #AIAcceleration #digixplanet #digixideaslab #digitalmarketing

0 notes

Text

How Does AI Generate Human-Like Voices? 2025

Artificial Intelligence (AI) has made incredible advancements in speech synthesis. AI-generated voices now sound almost indistinguishable from real human speech. But how does this technology work? What makes AI-generated voices so natural, expressive, and lifelike? In this deep dive, we’ll explore:

✔ The core technologies behind AI voice generation.
✔ How AI learns to mimic human speech patterns.
✔ Applications and real-world use cases.
✔ The future of AI-generated voices in 2025 and beyond.

Understanding AI Voice Generation

At its core, AI-generated speech relies on deep learning models that analyze human speech and generate realistic voices. These models use vast amounts of data, phonetics, and linguistic patterns to synthesize speech that mimics the tone, emotion, and natural flow of a real human voice.

1. Text-to-Speech (TTS) Systems

Traditional text-to-speech (TTS) systems used rule-based models. However, these sounded robotic and unnatural because they couldn't capture the rhythm, tone, and emotion of real human speech. Modern AI-powered TTS uses deep learning and neural networks to generate much more human-like voices. These advanced models process:

✔ Phonetics (how words sound).
✔ Prosody (intonation, rhythm, stress).
✔ Contextual awareness (understanding sentence structure).

💡 Example: AI can now pause, emphasize words, and mimic real human speech patterns instead of sounding monotone.

2. Deep Learning & Neural Networks

AI speech synthesis is driven by deep neural networks (DNNs), which are loosely modeled on the human brain. These networks analyze thousands of real human voice recordings and learn:

✔ How humans naturally pronounce words.
✔ The pitch, tone, and emphasis of speech.
✔ How emotions impact voice (anger, happiness, sadness, etc.).

Some of the most powerful deep learning models include:

WaveNet (Google DeepMind)

Developed by Google DeepMind, WaveNet uses a deep neural network that models raw audio waveforms directly. It produces natural-sounding speech with realistic tones, inflections, and even breathing patterns.

Tacotron & Tacotron 2

Tacotron models, developed by Google AI, focus on improving:

✔ Natural pronunciation of words.
✔ Pauses and speech flow to match human speech patterns.
✔ Voice modulation for realistic expression.

3. Voice Cloning & Deepfake Voices

One of the biggest breakthroughs in AI voice synthesis is voice cloning. This technology allows AI to:

✔ Copy a person’s voice with just a few minutes of recorded audio.
✔ Generate speech in that person’s tone and style.
✔ Mimic emotions, pitch, and speech variations.

💡 Example: If an AI listens to 5 minutes of Elon Musk’s voice, it can generate full speeches that closely match his tone and speech style. This is called deepfake voice technology.

🔴 Ethical Concern: This technology can be used for fraud and misinformation, like creating fake political speeches or scam calls that sound real.

How AI Learns to Speak Like Humans

AI voice synthesis follows three major steps:

Step 1: Data Collection & Training

AI systems collect millions of human speech recordings to learn:

✔ Pronunciation of words in different accents.
✔ Pitch, tone, and emotional expression.
✔ How people emphasize words naturally.

💡 Example: AI listens to how people say "I love this product!" and learns how different emotions change the way it sounds.

Step 2: Neural Network Processing

AI breaks down voice data into small sound units (phonemes) and reconstructs them into natural-sounding speech. It then:

✔ Creates realistic sentence structures.
✔ Adds human-like pauses, stresses, and tonal changes.
✔ Removes robotic or unnatural elements.

Step 3: Speech Synthesis Output

After processing, AI generates speech that sounds fluid, emotional, and human-like. Modern AI can now:

✔ Imitate accents and speech styles.
✔ Adjust pitch and tone in real time.
✔ Change emotional expressions (happy, sad, excited).

Real-World Applications of AI-Generated Voices

AI-generated voices are transforming multiple industries:

1. Voice Assistants (Alexa, Siri, Google Assistant)

AI voice assistants now sound more natural, conversational, and human-like than ever before. They can:

✔ Understand context and respond naturally.
✔ Adjust tone based on conversation flow.
✔ Speak in different accents and languages.

2. Audiobooks & Voiceovers

Instead of hiring voice actors, producers can now use AI-generated voices to:

✔ Narrate entire audiobooks in human-like voices.
✔ Adjust voice tone based on story emotion.
✔ Sound different for each character in a book.

💡 Example: AI-generated voices are now used for animated movies, YouTube videos, and podcasts.

3. Customer Service & Call Centers

Companies use AI voices for automated customer support, reducing costs and improving efficiency. AI voice systems:

✔ Respond naturally to customer questions.
✔ Understand emotional tone in conversations.
✔ Adjust voice tone based on urgency.

💡 Example: Banks use AI voice bots for automated fraud detection calls.

4. AI-Generated Speech for Disabled Individuals

AI voice synthesis is helping people who have lost their voice due to medical conditions. AI-generated speech allows them to:

✔ Type text and have AI speak for them.
✔ Use their own cloned voice for communication.
✔ Improve accessibility for those with speech impairments.

💡 Example: Stephen Hawking communicated using a computer-generated voice.

The Future of AI-Generated Voices in 2025 & Beyond

AI-generated speech is evolving fast. Here’s what’s next:

1. Fully Realistic Conversational AI

By 2025, AI voices will sound completely human, making robots and AI assistants indistinguishable from real humans.

2. Real-Time AI Voice Translation

AI will soon allow real-time speech translation in different languages while keeping the original speaker’s voice and tone.

💡 Example: A Japanese speaker’s voice can be translated into English, but still sound like their real voice.

3. AI Voice in the Metaverse & Virtual Worlds

AI-generated voices will power realistic avatars in virtual worlds, enabling:

✔ AI-powered characters with human-like speech.
✔ AI-generated narrators in VR experiences.
✔ Fully voiced AI NPCs in video games.

Final Thoughts

AI-generated voices have reached an incredible level of realism. From voice assistants to deepfake voice cloning, AI is revolutionizing how we interact with technology. However, ethical concerns remain. With the ability to clone voices and create deepfake speech, AI-generated voices must be used responsibly. In the future, AI will likely replace human voice actors, power next-gen customer service, and enable lifelike AI assistants. But one thing is clear—AI-generated voices are becoming indistinguishable from real humans.

#accent(sociolinguistics)#accessibility#aiaccelerator#amazonalexa#anger#animation#artificialintelligence#audiodeepfake#audiobook#avatar(computing)#blackhole#blog#brain#chatbot#cloning#communication#computer-generatedimagery#conversation#customer#customerservice#customersupport#data#datacollection#deeplearning

0 notes

Text

#FPGA#Semiconductors#TechGrowth#AIAcceleration#EmbeddedSystems#DataCenters#IoTInnovation#EdgeComputing#ChipDesign#ElectronicsMarket#5GTechnology#SmartDevices#FutureTech#ComputingPower#ReconfigurableHardware

0 notes

Text

#Virtium#energy efficiency#EdgeAI#EmbeddedSystems#IndustrialAI#SoM#AIAccelerator#IoTInnovation#TechPartnership#SmartManufacturing#AIOnEdge#TimestechUpdates#electronicsnews#technologynews

0 notes

Text

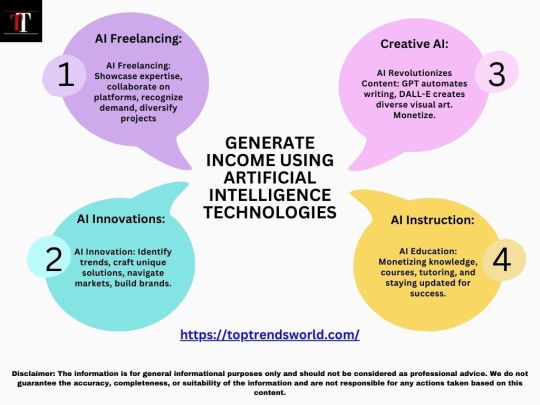

Artificial Intelligence (AI) Updates

Top Trends LLC (DBA "Top Trends") is a dynamic and information-rich web platform that empowers its readers with a broad spectrum of knowledge, insights, and data-driven trends. Our professional writers, industry experts, and enthusiasts dive deep into Artificial Intelligence, Finance, Startups, SEO and Backlinks.

#AIAdvancements#AIBreakthroughs#AIFrontiers#AIAcceleration#AIInnovationWave#AIDiscoveries#AIEvolutions#AIRevolution#AITrends2024#AIBeyondLimits

0 notes

Text

Amazon SageMaker HyperPod Presents Amazon EKS Support

Amazon SageMaker HyperPod

Cut the training duration of foundation models by up to 40% and scale effectively across over a thousand AI accelerators.

We are happy to announce today that Amazon SageMaker HyperPod, purpose-built infrastructure with resilience at its core, now supports Amazon Elastic Kubernetes Service (EKS) for foundation model (FM) development. With this new feature, users can use EKS to orchestrate HyperPod clusters, combining the strength of Kubernetes with the resilient environment of Amazon SageMaker HyperPod, which is designed for training large models. By scaling efficiently across more than a thousand artificial intelligence (AI) accelerators, Amazon SageMaker HyperPod can cut training time by up to 40%.

SageMaker HyperPod: What is it?

Amazon SageMaker HyperPod eliminates the undifferentiated heavy lifting associated with building and optimizing machine learning (ML) infrastructure. It comes pre-configured with SageMaker’s distributed training libraries, which automatically split training workloads across more than a thousand AI accelerators so that they can run in parallel for better model performance. SageMaker HyperPod periodically saves checkpoints so that your FM training can continue uninterrupted.

You no longer need to actively oversee this process because it automatically recognizes hardware failure when it occurs, fixes or replaces the problematic instance, and continues training from the most recent checkpoint that was saved. Up to 40% less training time is required thanks to the robust environment, which enables you to train models in a distributed context without interruption for weeks or months at a time. The high degree of customization offered by SageMaker HyperPod enables you to share compute capacity amongst various workloads, from large-scale training to inference, and to run and scale FM tasks effectively.
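The checkpoint-and-resume behavior described above can be illustrated with a toy training loop. This is a generic sketch, not HyperPod's API: the `train` function, the JSON checkpoint file, and the simulated failure are all assumptions made for illustration.

```python
import json
import os
import tempfile


def train(total_steps, ckpt_path, checkpoint_every=10, fail_at=None):
    """Toy training loop that checkpoints periodically and resumes from the last one."""
    state = {"step": 0, "loss": 1.0}
    if os.path.exists(ckpt_path):
        with open(ckpt_path) as f:
            state = json.load(f)  # resume from the most recent checkpoint

    while state["step"] < total_steps:
        if fail_at is not None and state["step"] == fail_at:
            raise RuntimeError("simulated hardware failure")
        state["step"] += 1
        state["loss"] *= 0.99  # stand-in for a real optimizer step
        if state["step"] % checkpoint_every == 0:
            # Write to a temp file, then rename, so a crash never leaves a partial checkpoint.
            tmp_path = ckpt_path + ".tmp"
            with open(tmp_path, "w") as f:
                json.dump(state, f)
            os.replace(tmp_path, ckpt_path)
    return state


ckpt = os.path.join(tempfile.mkdtemp(), "ckpt.json")
try:
    train(100, ckpt, fail_at=37)  # fails after step 37; the last checkpoint is step 30
except RuntimeError:
    pass
final = train(100, ckpt)          # resumes at step 30 instead of step 0
print(final["step"])
```

Only the seven steps after the last checkpoint are repeated on restart, which is why more frequent checkpoints reduce the work lost to a failure at the cost of more time spent writing state.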

Advantages of the Amazon SageMaker HyperPod

Distributed training with a focus on efficiency for big training clusters

Because Amazon SageMaker HyperPod comes preconfigured with the Amazon SageMaker distributed training libraries, you can scale training workloads more efficiently by automatically splitting your models and training datasets across AWS cluster instances.

Optimum use of the cluster’s memory, processing power, and networking infrastructure

Using two strategies, data parallelism and model parallelism, the Amazon SageMaker distributed training libraries optimize your training job for the AWS network architecture and cluster topology. Model parallelism splits models that are too big to fit on one GPU into smaller pieces, which are then distributed across several GPUs for training. To increase training speed, data parallelism splits large datasets into smaller shards that are trained on concurrently.
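The two strategies can be shown on a toy problem. The sketch below is plain Python rather than the SageMaker libraries; the function names are hypothetical, the "workers" and "devices" are simulated in one process, and the batch is assumed to divide evenly among workers.

```python
def grad_mse(w, xs, ys):
    """Gradient of mean squared error for the 1-D model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def data_parallel_grad(w, xs, ys, workers):
    """Data parallelism: each worker takes an equal shard; shard gradients are averaged."""
    shard = len(xs) // workers  # assumes len(xs) is divisible by workers
    grads = [
        grad_mse(w, xs[i * shard:(i + 1) * shard], ys[i * shard:(i + 1) * shard])
        for i in range(workers)
    ]
    return sum(grads) / workers


def model_parallel_forward(x, stages):
    """Model parallelism: each stage holds part of the model; activations flow stage to stage."""
    for stage in stages:
        x = stage(x)
    return x


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
full = grad_mse(0.5, xs, ys)
sharded = data_parallel_grad(0.5, xs, ys, workers=2)
print(full, sharded)  # the averaged shard gradients match the full-batch gradient

stages = [lambda v: 3 * v, lambda v: v + 1]  # two simulated devices, one layer each
print(model_parallel_forward(2.0, stages))
```

With equal-sized shards, averaging the per-worker gradients reproduces the full-batch gradient, so data parallelism changes where the work happens rather than what is computed. Model parallelism instead trades extra communication between stages for the ability to hold a model that no single device could fit.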

Robust training environment with no disruptions

You can train FMs continuously for months on end with SageMaker HyperPod because it automatically detects, diagnoses, and recovers from problems, creating a more resilient training environment.

Customers can now manage their clusters through a Kubernetes-based interface in Amazon SageMaker HyperPod. This integration makes it easy to switch between Slurm and Amazon EKS to optimize different workloads, including training, fine-tuning, experimentation, and inference. The CloudWatch Observability EKS add-on provides comprehensive monitoring, surfacing low-level node metrics such as CPU, network, and disk on a single dashboard. This improved observability covers container-specific usage, node-level metrics, pod-level performance, and resource utilization for the entire cluster, which makes troubleshooting and optimization more effective.

Since its launch at re:Invent 2023, Amazon SageMaker HyperPod has established itself as the go-to option for businesses and startups using AI to efficiently train and deploy large-scale models. It is compatible with the distributed training libraries from SageMaker, which include Model Parallel and Data Parallel software optimizations that help cut training time by up to 20%. With SageMaker HyperPod, data scientists can train models for weeks or months at a time without interruption because it automatically detects, repairs, or replaces malfunctioning instances. This frees data scientists to concentrate on developing models instead of managing infrastructure.

Because of its scalability and abundance of open-source tooling, Kubernetes has gained popularity for machine learning (ML) workloads. The integration of Amazon EKS with Amazon SageMaker HyperPod builds on these benefits. When developing applications, including those needed for generative AI use cases, organizations frequently rely on Kubernetes because it enables the reuse of capabilities across environments while adhering to compliance and governance standards. With today’s announcement, customers can scale and maximize resource utilization across more than a thousand AI accelerators. This flexibility improves the developer experience, containerized app management, and the workflows for FM training and inference.

With comprehensive health checks, automated node recovery, and job auto-resume features, Amazon EKS support in Amazon SageMaker HyperPod strengthens resilience and keeps training running for large-scale and/or long-running jobs. Although customers can use their own CLI tools, the optional HyperPod CLI, built for Kubernetes environments, can streamline job management. Integration with Amazon CloudWatch Container Insights enables advanced observability, offering deeper insight into the health, utilization, and performance of clusters. Furthermore, data scientists can automate machine learning workflows with platforms like Kubeflow. The integration also incorporates Amazon SageMaker managed MLflow, a reliable solution for experiment tracking and model management.

In summary, the HyperPod service fully manages the Amazon SageMaker HyperPod cluster it creates, eliminating the undifferentiated heavy lifting of building and optimizing machine learning infrastructure. The cloud admin creates this cluster through the HyperPod cluster API. Amazon EKS orchestrates the HyperPod nodes much as Slurm does, giving users a familiar Kubernetes-based administrator experience.

Important information

The following are some essential details regarding Amazon EKS support in the Amazon SageMaker HyperPod:

Resilient Environment: With comprehensive health checks, automated node recovery, and job auto-resume, this integration offers a more resilient training environment. With SageMaker HyperPod, you can train foundation models continuously for weeks or months at a time without interruption because it automatically detects, diagnoses, and recovers from faults. This can reduce training time by up to 40%.

Improved GPU Observability: Your containerized apps and microservices can benefit from comprehensive metrics and logs from Amazon CloudWatch Container Insights. This makes it possible to monitor cluster health and performance in great detail.

Scientist-Friendly Tooling: This release includes integration with SageMaker managed MLflow for experiment tracking, a customized HyperPod CLI for job management, Kubeflow Training Operators for distributed training, and Kueue for scheduling. It is also compatible with the distributed training libraries offered by SageMaker, which provide data parallel and model parallel optimizations that significantly cut training time. Together with job auto-resume, these libraries make large model training efficient and continuous.

Flexible Resource Utilization: This integration improves the scalability of FM workloads and the developer experience. Data scientists can efficiently share compute resources between training and inference. You can use your own tools for job submission, queuing, and monitoring, and you can use your existing Amazon EKS clusters or create new ones and attach them to HyperPod compute.

Read more on govindhtech.com

#AmazonSageMaker#HyperPodPresents#AmazonEKSSupport#foundationmodel#artificialintelligence#AI#machinelearning#ML#AIaccelerators#AmazonCloudWatch#AmazonEKS#technology#technews#news#govindhtech

0 notes

Text

Our AI-based performance testing accelerator automates the scripting process, reducing scripting time to just 24 hours. This is a drastic improvement over the conventional weeks-long scripting process. Unlike manual scripting, our accelerator addresses diverse testing scenarios within minutes. This both speeds up testing and ensures adaptability across scenarios.

Talk to our performance experts at https://rtctek.com/contact-us/. Visit https://rtctek.com/performance-testing-services to learn more about our services.

#rtctek#roundtheclocktechnologies#performancetesting#performance#aiaccelerator#ptaccelerator#testingaccelerator

0 notes

Text

⏳ The countdown has officially begun!

The most anticipated AI + Web3 airdrop is almost here…

This isn’t just a drop. It’s a gravity-shifting moment in the digital world.

If you know, you know. If you don’t—you might just miss history. 🚀

Airdrop season is loading… Stay tuned.🔥

#ComingSoon#SpeakGlobal#AirdropSeason#Web3Airdrop#AIxWeb3#GravityChain#CryptoCountdown#Web3Community#NextBigDrop#BlockchainRevolution#FutureIsNow#CryptoReady#DigitalWealth#AIAccelerator#Web3Wave#TechDisruption

0 notes