#Amazon VPC


VPC Flow Logs is an Amazon Web Services (AWS) feature that captures information about the IP traffic going to and from network interfaces in a Virtual Private Cloud (VPC). It gives developers, network administrators, and cybersecurity professionals the visibility they need to monitor, troubleshoot, and secure applications and resources.
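As a rough illustration of what that visibility looks like in practice, the sketch below parses flow log records in the default (version 2) space-separated format and picks out rejected traffic. The sample records are made up for the example; custom log formats would need a different field list.

```python
# Field order of the default (version 2) VPC Flow Logs record format.
DEFAULT_FIELDS = (
    "version", "account_id", "interface_id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log_status",
)

NUMERIC_FIELDS = ("srcport", "dstport", "protocol", "packets", "bytes", "start", "end")


def parse_flow_log_record(line: str) -> dict:
    """Split one space-separated record into a dict keyed by field name."""
    values = line.split()
    if len(values) != len(DEFAULT_FIELDS):
        raise ValueError(f"expected {len(DEFAULT_FIELDS)} fields, got {len(values)}")
    record = dict(zip(DEFAULT_FIELDS, values))
    # "-" marks a value that was not recorded (for example in NODATA records).
    for key in NUMERIC_FIELDS:
        record[key] = None if record[key] == "-" else int(record[key])
    return record


def rejected_traffic(lines):
    """Yield parsed records whose action is REJECT."""
    for line in lines:
        record = parse_flow_log_record(line)
        if record["action"] == "REJECT":
            yield record


# Illustrative records only: the account ID, interface ID, and addresses are invented.
SAMPLE = [
    "2 123456789010 eni-1235b8ca123456789 172.31.16.139 172.31.16.21 20641 22 6 20 4249 1418530010 1418530070 ACCEPT OK",
    "2 123456789010 eni-1235b8ca123456789 172.31.9.69 172.31.9.12 49761 3389 6 20 4249 1418530010 1418530070 REJECT OK",
]

if __name__ == "__main__":
    for rec in rejected_traffic(SAMPLE):
        print(rec["srcaddr"], "->", rec["dstaddr"], "port", rec["dstport"])
```

In a real account the lines would come from CloudWatch Logs or Amazon S3 rather than a hard-coded list.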


PCS AWS: AWS Parallel Computing Service For HPC workloads

AWS has launched AWS Parallel Computing Service (AWS PCS), a managed service that lets customers set up and manage HPC clusters to run simulations at nearly any scale on AWS. Because it uses the Slurm scheduler, they can work in a familiar HPC environment and get to results faster without worrying about infrastructure.

AWS Parallel Computing

Run HPC workloads effortlessly at any scale.

Why AWS PCS?

AWS Parallel Computing Service (AWS PCS) is a managed service that makes it easier to run and scale HPC workloads and to build scientific and engineering models on AWS using Slurm. With AWS PCS you can create elastic environments that combine compute, storage, networking, and visualization. Managed updates and built-in observability features make cluster operations and maintenance easier. You can focus on research and innovation in a familiar environment without worrying about infrastructure.

Benefits

Focus on your work, not infrastructure

Give users comprehensive HPC environments that scale to run simulations and scientific and engineering modeling without code or script changes to boost productivity.

Manage, secure, and scale HPC clusters

Build and deploy scalable, dependable, and secure HPC clusters via the AWS Management Console, CLI, or SDK.

HPC solutions using flexible building blocks

Build and maintain end-to-end HPC applications on AWS using highly available cluster APIs and infrastructure as code.

Use cases

Tightly coupled workloads

Run parallel MPI applications such as computer-aided engineering (CAE), weather and climate modeling, and seismic and reservoir simulation efficiently at almost any scale.

Accelerated computing

GPUs, FPGAs, and Amazon-custom silicon such as AWS Trainium and AWS Inferentia can speed up varied workloads, including building scientific and engineering models, protein structure prediction, and cryo-EM.

High-throughput computing and loosely coupled workloads

Distributed applications such as Monte Carlo simulations, image processing, and genomics research can run on AWS at any scale.

Interactive workflows

Use human-in-the-loop workflows to prepare inputs, run simulations, visualize and evaluate results in real time, and adjust further trials.

AWS ParallelCluster

In November 2018, AWS launched AWS ParallelCluster, an AWS-supported open-source cluster management tool for deploying and managing HPC clusters in the AWS Cloud. With AWS ParallelCluster, customers can quickly build and deploy proof-of-concept and production HPC compute environments. It is available as an open-source command-line interface, API, Python library, and user interface. Updating it can involve tearing down and redeploying clusters. Many customers have therefore asked for a fully managed AWS service that removes the work of building and operating HPC environments.

AWS Parallel Computing Service (AWS PCS)

AWS PCS simplifies HPC environments that are managed by AWS and is accessible through the AWS Management Console, SDK, and CLI. System administrators can create managed Slurm clusters that use their preferred compute, storage, identity, and job allocation settings. AWS PCS schedules and orchestrates simulations with Slurm, a scalable, fault-tolerant job scheduler used by many HPC customers. Scientists, researchers, and engineers can log in to AWS PCS clusters to run HPC jobs, use interactive software on virtual desktops, and access data. They can move their workloads to AWS PCS quickly, without porting code.

Fully managed NICE DCV remote desktops provide remote visualization and access to job telemetry and application logs, so specialists can manage their HPC workflows in one place.

AWS PCS uses familiar methods for preparing, running, and analyzing simulations and computations. It targets a wide range of traditional and emerging compute-intensive or data-intensive engineering and scientific workloads, including computational fluid dynamics, electronic design automation, finite element analysis, reservoir simulation, and weather modeling.

Getting started with AWS Parallel Computing Service

To try AWS PCS, you can follow the AWS documentation tutorial for creating a simple cluster. First, in the account and AWS Region where you will try AWS PCS, create a VPC with an AWS CloudFormation template and shared storage in Amazon EFS. The AWS documentation explains how to create the VPC and the shared storage.

Create a cluster

In the AWS PCS console, choose Create cluster. A cluster is the resource you use to manage resources and run workloads.

Name your cluster and choose the controller size for your Slurm scheduler. The cluster workload limits are Small (up to 32 nodes and 256 jobs), Medium (up to 512 nodes and 8,192 jobs), and Large (up to 2,048 nodes and 16,384 jobs). In the Networking section, choose your VPC, the subnet to launch the cluster into, and the security group to apply to the cluster.
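The size choice can be expressed as a small lookup. The sketch below uses only the node and job limits quoted above (reading the Medium figure as a job limit, like the other two) and returns the smallest size that fits. The function name is invented for this example and is not part of any AWS SDK.

```python
# (size name, max nodes, max jobs), smallest first, as quoted in the text.
CONTROLLER_SIZES = (
    ("Small", 32, 256),
    ("Medium", 512, 8192),
    ("Large", 2048, 16384),
)


def pick_controller_size(nodes: int, jobs: int) -> str:
    """Return the smallest controller size whose limits cover the workload."""
    for name, max_nodes, max_jobs in CONTROLLER_SIZES:
        if nodes <= max_nodes and jobs <= max_jobs:
            return name
    raise ValueError(
        f"{nodes} nodes / {jobs} jobs exceeds the largest listed controller size"
    )


if __name__ == "__main__":
    print(pick_controller_size(nodes=16, jobs=1000))
```

Note that either limit can push a workload up a size: 16 nodes fit Small, but 1,000 queued jobs do not.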

Optional Slurm settings include an idle time before compute nodes scale down, a directory for Prolog and Epilog scripts on launched compute nodes, and a resource selection algorithm parameter.

Choose Create cluster. Provisioning the cluster takes some time.

Create compute node groups

After creating your cluster, you can create compute node groups. A compute node group is a virtual collection of Amazon EC2 instances that AWS PCS uses to provide interactive access to a cluster or to run jobs in it. When you define a compute node group, you specify the EC2 instance types, the minimum and maximum instance counts, the target VPC subnets, the Amazon Machine Image (AMI), the purchase option, and custom launch settings. A compute node group also needs an instance profile to pass an AWS IAM role to its EC2 instances, and an EC2 launch template that AWS PCS uses to configure the instances it launches.

To create a compute node group in the console, open your cluster, select the Compute node groups tab, and choose Create.

A typical setup has two compute node groups: a login group that end users connect to, and a second group that runs HPC jobs.

For the group that runs HPC jobs, enter a compute node group name and select a previously created EC2 launch template, IAM instance profile, and the subnets in your cluster VPC where compute nodes will launch.

Next, select the EC2 instance types to use when launching compute nodes, and the minimum and maximum instance counts for scaling.

Choose Create. Provisioning the compute node group takes some time.

Create and run HPC jobs

After creating compute node groups, you submit a job to a queue to run it. The job stays queued until AWS PCS schedules it on a compute node group, based on provisioned capacity. Each queue is associated with one or more compute node groups, which supply the EC2 instances that do the processing.

Visit your cluster, select Queues, and click Create queue to create a queue in the console.

Select Create and wait for queue creation.

When the login compute node group is active, you can use AWS Systems Manager to connect to the EC2 instance it created. Select the EC2 instance of your login compute node group in the Amazon EC2 console. The AWS documentation describes how to create a queue to submit and manage jobs and how to connect to your cluster.

To run a Slurm job, create a submission script that specifies the job requirements and submit it to a queue with the sbatch command. This is usually done from a shared directory so that the login and compute nodes can access the same files.
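A submission script is a shell script with `#SBATCH` directives at the top. The sketch below generates one from Python and, optionally, hands it to `sbatch`. The job name, application path, and queue name are placeholders, and using the queue name as the Slurm partition is an assumption made for this example rather than something the text states.

```python
import subprocess
from pathlib import Path
from typing import Optional


def render_sbatch_script(job_name: str, nodes: int, ntasks_per_node: int,
                         command: str, partition: Optional[str] = None) -> str:
    """Build the text of a Slurm submission script."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={ntasks_per_node}",
        "#SBATCH --output=%x-%j.out",  # %x expands to the job name, %j to the job ID
    ]
    if partition:
        # Assumption: the queue is addressed as a Slurm partition.
        lines.append(f"#SBATCH --partition={partition}")
    lines += ["", f"srun {command}", ""]
    return "\n".join(lines)


def submit(script_text: str, path: Path) -> str:
    """Write the script (ideally to a shared directory) and submit it with sbatch."""
    path.write_text(script_text)
    result = subprocess.run(
        ["sbatch", str(path)], check=True, capture_output=True, text=True
    )
    return result.stdout.strip()


if __name__ == "__main__":
    # Only prints the script; call submit() on a node where sbatch is installed.
    print(render_sbatch_script("hello-mpi", nodes=2, ntasks_per_node=4,
                               command="./my_mpi_app", partition="demo"))
```

Keeping rendering separate from submission makes it easy to review the script before it reaches the scheduler.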

You can also use Slurm to run MPI jobs in AWS PCS. For details, see Run a single-node job with Slurm or Run a multi-node MPI job with Slurm in the AWS documentation.

For visualization, you can connect to a fully managed NICE DCV remote desktop. To get started, use the CloudFormation template from the HPC Recipes for AWS GitHub repository.

After you finish running HPC jobs with your cluster and node groups, delete the resources you created to avoid unnecessary charges. For details, see Delete your AWS resources in the AWS documentation.

Things to know

Some things to know about this feature:

Slurm versions: AWS PCS initially supports Slurm 23.11 and provides mechanisms for upgrading to new Slurm major versions as they are added. AWS PCS also automatically patches the Slurm controller.

Capacity reservations: On-Demand Capacity Reservations let you reserve EC2 capacity in a specific Availability Zone for a specific duration, so that compute capacity is available when you need it.

Network file systems: You can attach network storage volumes for writing and accessing data and files, including Amazon FSx for NetApp ONTAP, Amazon FSx for OpenZFS, Amazon File Cache, Amazon EFS, and Amazon FSx for Lustre. Self-managed volumes, such as NFS servers, are also possible.

Now available

AWS Parallel Computing Service is now available in the US East (N. Virginia), US East (Ohio), US West (Oregon), Asia Pacific (Singapore), Asia Pacific (Sydney), Asia Pacific (Tokyo), Europe (Frankfurt), Europe (Ireland), and Europe (Stockholm) Regions.


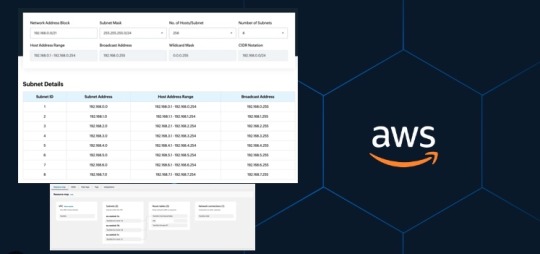

VPC, Subnet, NACL, Security Group: Create your own Network on AWS from Scratch [Part 2]

Amazon Virtual Private Cloud (Amazon VPC) enables you to launch AWS resources in a logically isolated virtual network that you have created. This virtual network closely resembles a traditional network that you’d operate in your own data centre, with the benefits of using the scalable infrastructure of AWS. Please see how to Build a Scalable VPC for Your AWS Environment [Part 1], how to Hide or…
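To make the VPC and subnet relationship concrete, the sketch below uses Python's standard `ipaddress` module to carve a VPC address range into equally sized subnets. The `10.0.0.0/16` range and the /24 subnet size are example values, not taken from the post. The usable-address count subtracts the five addresses AWS reserves in every subnet (the first four and the last).

```python
import ipaddress
from itertools import islice

# AWS reserves the first four addresses and the last address in every subnet.
AWS_RESERVED_PER_SUBNET = 5


def plan_subnets(vpc_cidr: str, new_prefix: int, count: int):
    """Return the first `count` subnets of the VPC range as (cidr, usable_hosts)."""
    vpc = ipaddress.ip_network(vpc_cidr)
    plan = []
    for subnet in islice(vpc.subnets(new_prefix=new_prefix), count):
        usable = subnet.num_addresses - AWS_RESERVED_PER_SUBNET
        plan.append((str(subnet), usable))
    return plan


if __name__ == "__main__":
    for cidr, usable in plan_subnets("10.0.0.0/16", new_prefix=24, count=3):
        print(cidr, usable)
```

The same plan could then be fed into whatever tool creates the subnets, such as the console, CloudFormation, or an SDK.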


BRB... just upgrading Python

CW: nerdy, technical details.

Originally, MLTSHP (well, MLKSHK back then) was developed for Python 2. That was fine for 2010, but 15 years later, and Python 2 is now pretty ancient and unsupported. January 1st, 2020 was the official sunset for Python 2, and 5 years later, we’re still running things with it. It’s served us well, but we have to transition to Python 3.

Well, I bit the bullet and started working on that in earnest in 2023. The end of that work resulted in a working version of MLTSHP on Python 3. So, just ship it, right? Well, the upgrade process basically required upgrading all Python dependencies as well. And some (flyingcow, torndb, in particular) were never really official, public packages, so those had to be adopted into MLTSHP and upgraded as well. With all those changes, it required some special handling. Namely, setting up an additional web server that could be tested against the production database (unit tests can only go so far).

Here’s what that change comprised: 148 files changed, 1923 insertions, 1725 deletions. Most of those changes were part of the first commit for this branch, made on July 9, 2023 (118 files changed).

But by the end of that July, I took a break from this task - I could tell it wasn’t something I could tackle in my spare time at that time.

Time passes…

Fast forward to late 2024, and I take some time to revisit the Python 3 release work. Making a production web server for the new Python 3 instance was another big update, since I wanted the Docker container OS to be on the latest LTS edition of Ubuntu. For 2023, that was 20.04, but in 2025, it’s 24.04. I also wanted others to be able to test the server, which means the CDN layer would have to be updated to direct traffic to the test server (without affecting general traffic); I went with a client-side cookie that could target the Python 3 canary instance.
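The cookie-based canary idea boils down to a tiny routing decision. The post does not show the actual Fastly configuration, so the following is only a Python sketch of the logic, with a made-up cookie name and backend labels.

```python
from http.cookies import SimpleCookie

# Hypothetical names: the real cookie and backend identifiers are not given in the post.
CANARY_COOKIE = "use_canary"
CANARY_BACKEND = "python3-canary"
DEFAULT_BACKEND = "production"


def choose_backend(cookie_header: str) -> str:
    """Route to the canary only when the opt-in cookie is present and set to 1."""
    cookies = SimpleCookie()
    cookies.load(cookie_header or "")
    morsel = cookies.get(CANARY_COOKIE)
    if morsel is not None and morsel.value == "1":
        return CANARY_BACKEND
    return DEFAULT_BACKEND


if __name__ == "__main__":
    print(choose_backend("session=abc; use_canary=1"))
    print(choose_backend("session=abc"))
```

Because the cookie is opt-in, general traffic keeps hitting the existing servers while testers reach the new instance.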

In addition to these upgrades, there were others to consider — MySQL, for one. We’ve been running MySQL 5, but version 9 is out. We settled on version 8 for now, but could also upgrade to 8.4… 8.0 is just the version you get for Ubuntu 24.04. RabbitMQ was another server component that was getting behind (3.5.7), so upgrading it to 3.12.1 (latest version for Ubuntu 24.04) seemed proper.

One more thing - our datacenter. We’ve been using Linode’s Fremont region since 2017. It’s been fine, but there are some emerging Linode features that I’ve been wanting. VPC support, for one. And object storage (basically the same as Amazon’s S3, but local, so no egress cost to-from Linode servers). Both were unavailable to Fremont, so I decided to go with their Chicago region for the upgrade.

Now we’re talking… this is now not just a “push a button” release, but a full-fledged, build everything up and tear everything down kind of release that might actually have some downtime (while trying to keep it short)!

I built a release plan document and worked through it. The key to the smooth upgrade I wanted was to make the cutover as seamless as possible. Picture it: once everything is set up for the new service in Chicago - new database host, new web servers and all, what do we need to do to make the switch almost instant? It’s Fastly, our CDN service.

All traffic to our service runs through Fastly. A request to the site comes in, Fastly routes it to the appropriate host, which in turn speaks to the appropriate database. So, to transition from one datacenter to the other, we need to basically change the hosts Fastly speaks to. Those hosts will already be set to talk to the new database. But that’s a key wrinkle - the new database…

The new database needs the data from the old database. And to make for a seamless transition, it needs to be up to the second in step with the old database. To do that, we have to take a copy of the production data and get it up and running on the new database. Then, we need to have some process that will copy any new data to it since the last sync. This sounded a lot like replication to me, but the more I looked at doing it that way, the less confident I was that I could set it up without bringing the production server down. That’s because any replica needs to start in a synchronized state. You can’t really achieve that with a live database. So, instead, I created my own sync process that would copy new data on a periodic basis as it came in.
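The post does not describe how the sync process works internally. One common way to do this kind of catch-up copy is a high-water mark on an auto-incrementing ID, sketched below with SQLite standing in for MySQL so the example is self-contained. The table and column names are invented, and this simple version only picks up newly inserted rows, not updates or deletes.

```python
import sqlite3


def sync_new_rows(source: sqlite3.Connection, target: sqlite3.Connection,
                  table: str, id_column: str = "id") -> int:
    """Copy rows whose id is above the highest id already present in target.

    `table` and `id_column` are interpolated into SQL, so they must come from
    trusted configuration, never from user input.
    """
    (last_id,) = target.execute(
        f"SELECT COALESCE(MAX({id_column}), 0) FROM {table}"
    ).fetchone()
    rows = source.execute(
        f"SELECT * FROM {table} WHERE {id_column} > ? ORDER BY {id_column}",
        (last_id,),
    ).fetchall()
    if rows:
        placeholders = ", ".join("?" for _ in rows[0])
        target.executemany(f"INSERT INTO {table} VALUES ({placeholders})", rows)
        target.commit()
    return len(rows)


if __name__ == "__main__":
    old_db = sqlite3.connect(":memory:")
    new_db = sqlite3.connect(":memory:")
    for db in (old_db, new_db):
        db.execute("CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT)")
    old_db.executemany("INSERT INTO posts (title) VALUES (?)", [("first",), ("second",)])
    print("copied", sync_new_rows(old_db, new_db, "posts"))
```

Running the function on a timer keeps the new database close behind the old one, and a final run during the cutover window closes the gap.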

Beyond this, we need a proper replication going in the new datacenter. In case the database server goes away unexpectedly, a replica of it allows for faster recovery and some peace of mind. Logical backups can be made from the replica and stored in Linode’s object storage if something really disastrous happens (like tables getting deleted by some intruder or a bad data migration).

I wanted better monitoring, too. We’ve been using Linode’s Longview service and that’s okay and free, but it doesn’t act on anything that might be going wrong. I decided to license M/Monit for this. M/Monit is so lightweight and nice, along with Monit running on each server to keep track of each service needed to operate stuff. Monit can be given instructions on how to self-heal certain things, but also provides alerts if something needs manual attention.

And finally, Linode’s Chicago region supports a proper VPC setup, which allows for all the connectivity between our servers to be totally private to their own subnet. It also means that I was able to set up an additional small Linode instance to serve as a bastion host - a server that can be used for a secure connection to reach the other servers on the private subnet. This is a lot more secure than before… we’ve never had a breach (at least, not to my knowledge), and this makes that even less likely going forward. Remote access via SSH is now unavailable without using the bastion server, so we don’t have to expose our servers to potential future ssh vulnerabilities.
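For readers unfamiliar with bastion hosts, the sketch below builds an OpenSSH command that reaches a private server by jumping through the bastion with the `-J` (ProxyJump) option. The host names and user are placeholders, and the post does not say whether this exact mechanism is what MLTSHP uses.

```python
import shlex


def ssh_via_bastion(bastion: str, target: str, user: str) -> list:
    """Return an ssh argv that connects to `target` through `bastion`."""
    return ["ssh", "-J", f"{user}@{bastion}", f"{user}@{target}"]


if __name__ == "__main__":
    # Placeholder hosts: a public bastion and a server on the private subnet.
    cmd = ssh_via_bastion("bastion.example.com", "10.0.0.12", "deploy")
    print(shlex.join(cmd))
```

Only the bastion needs to accept SSH from the internet. The private servers accept it from the bastion's subnet alone.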

So, to summarize: the MLTSHP Python 3 upgrade grew from a code release to a full stack upgrade, involving touching just about every layer of the backend of MLTSHP.

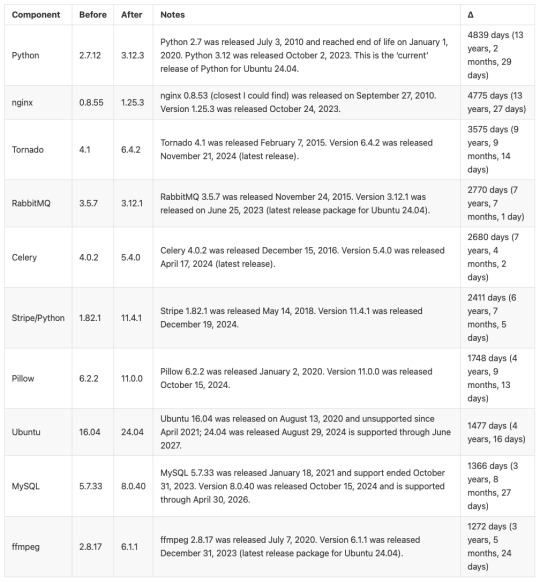

Here’s a before / after picture of some of the bigger software updates applied (apologies for using images for these tables, but Tumblr doesn’t do tables):

And a summary of infrastructure updates:

I’m pretty happy with how this has turned out. And I learned a lot. I’m a full-stack developer, so I’m familiar with a lot of devops concepts, but actually doing that role is newish to me. I got to learn how to set up a proper secure subnet for our set of hosts, making them more secure than before. I learned more about Fastly configuration, about WireGuard, about MySQL replication, and about deploying a large update to a live site with little to no downtime. A lot of that is due to meticulous release planning and careful execution. The secret for that is to think through each and every step - no matter how small. Document it, and consider the side effects of each. And with each step that could affect the public service, consider the rollback process, just in case it’s needed.
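The "every step has a rollback" habit can also be captured in code. This is a minimal sketch of the idea, not the actual release tooling: each step pairs an action with its undo, and a failure unwinds the completed steps in reverse order.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    name: str
    apply: Callable[[], None]
    rollback: Callable[[], None]


def run_plan(steps: List[Step]) -> List[str]:
    """Apply steps in order; on failure, roll back completed steps in reverse."""
    done: List[Step] = []
    log: List[str] = []
    for step in steps:
        try:
            step.apply()
        except Exception as exc:
            log.append(f"failed: {step.name} ({exc})")
            for finished in reversed(done):
                finished.rollback()
                log.append(f"rolled back: {finished.name}")
            return log
        done.append(step)
        log.append(f"applied: {step.name}")
    return log


if __name__ == "__main__":
    def fail():
        raise RuntimeError("health check failed")

    # Hypothetical step names for illustration.
    plan = [
        Step("final data sync", lambda: None, lambda: None),
        Step("switch CDN backends", fail, lambda: None),
    ]
    for line in run_plan(plan):
        print(line)
```

Writing the rollback next to each step forces the "what if this goes wrong" question to be answered before release day.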

At this time, the server migration is complete and things are running smoothly. Hopefully we won’t need to do everything at once again, but we have a recipe if it comes to that.


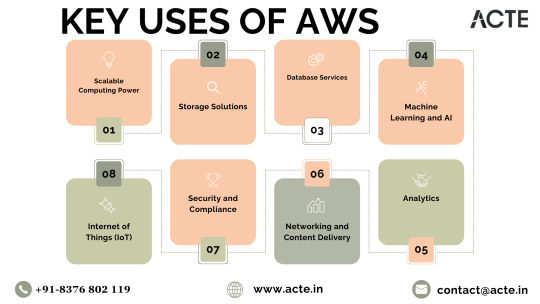

AWS Security 101: Protecting Your Cloud Investments

In the ever-evolving landscape of technology, few names resonate as strongly as Amazon.com. This global giant, known for its e-commerce prowess, has a lesser-known but equally influential arm: Amazon Web Services (AWS). AWS is a powerhouse in the world of cloud computing, offering a vast and sophisticated array of services and products. In this comprehensive guide, we'll embark on a journey to explore the facets and features of AWS that make it a driving force for individuals, companies, and organizations seeking to utilise cloud computing to its fullest capacity.

Amazon Web Services (AWS): A Technological Titan

At its core, AWS is a cloud computing platform that empowers users to create, deploy, and manage applications and infrastructure with unparalleled scalability, flexibility, and cost-effectiveness. It's not just a platform; it's a digital transformation enabler. Let's dive deeper into some of the key components and features that define AWS:

1. Compute Services: The Heart of Scalability

AWS boasts services like Amazon EC2 (Elastic Compute Cloud), a scalable virtual server solution, and AWS Lambda for serverless computing. These services provide users with the capability to efficiently run applications and workloads with precision and ease. Whether you need to host a simple website or power a complex data-processing application, AWS's compute services have you covered.

2. Storage Services: Your Data's Secure Haven

In the age of data, storage is paramount. AWS offers a diverse set of storage options. Amazon S3 (Simple Storage Service) caters to scalable object storage needs, while Amazon EBS (Elastic Block Store) is ideal for block storage requirements. For archival purposes, Amazon S3 Glacier is the go-to solution. This comprehensive array of storage choices ensures that diverse storage needs are met, and your data is stored securely.

3. Database Services: Managing Complexity with Ease

AWS provides managed database services that simplify the complexity of database management. Amazon RDS (Relational Database Service) is perfect for relational databases, while Amazon DynamoDB offers a seamless solution for NoSQL databases. Amazon Redshift, on the other hand, caters to data warehousing needs. These services take the headache out of database administration, allowing you to focus on innovation.

4. Networking Services: Building Strong Connections

Network isolation and robust networking capabilities are made easy with Amazon VPC (Virtual Private Cloud). AWS Direct Connect facilitates dedicated network connections, and Amazon Route 53 takes care of DNS services, ensuring that your network needs are comprehensively addressed. In an era where connectivity is king, AWS's networking services rule the realm.

5. Security and Identity: Fortifying the Digital Fortress

In a world where data security is non-negotiable, AWS prioritizes security with services like AWS IAM (Identity and Access Management) for access control and AWS KMS (Key Management Service) for encryption key management. Your data remains fortified, and access is strictly controlled, giving you peace of mind in the digital age.

6. Analytics and Machine Learning: Unleashing the Power of Data

In the era of big data and machine learning, AWS is at the forefront. Services like Amazon EMR (Elastic MapReduce) handle big data processing, while Amazon SageMaker provides the tools for developing and training machine learning models. Your data becomes a strategic asset, and innovation knows no bounds.

7. Application Integration: Seamlessness in Action

AWS fosters seamless application integration with services like Amazon SQS (Simple Queue Service) for message queuing and Amazon SNS (Simple Notification Service) for event-driven communication. Your applications work together harmoniously, creating a cohesive digital ecosystem.

8. Developer Tools: Powering Innovation

AWS equips developers with a suite of powerful tools, including AWS CodeDeploy, AWS CodeCommit, and AWS CodeBuild. These tools simplify software development and deployment processes, allowing your teams to focus on innovation and productivity.

9. Management and Monitoring: Streamlined Resource Control

Effective resource management and monitoring are facilitated by Amazon CloudWatch for monitoring and AWS CloudFormation for infrastructure as code (IaC) management. Managing your cloud resources becomes a streamlined and efficient process, reducing operational overhead.

10. Global Reach: Empowering Global Presence

With data centers grouped into Availability Zones across multiple Regions worldwide, AWS enables users to deploy applications close to end-users. This results in better performance and lower latency, both crucial for global digital operations.

In conclusion, Amazon Web Services (AWS) is not just a cloud computing platform; it's a technological titan that empowers organizations and individuals to harness the full potential of cloud computing. Whether you're an aspiring IT professional looking to build a career in the cloud or a seasoned expert seeking to sharpen your skills, understanding AWS is paramount.

In today's technology-driven landscape, AWS expertise opens doors to endless opportunities. At ACTE Institute, we recognize the transformative power of AWS, and we offer comprehensive training programs to help individuals and organizations master the AWS platform. We are your trusted partner on the journey of continuous learning and professional growth. Embrace AWS, embark on a path of limitless possibilities in the world of technology, and let ACTE Institute be your guiding light. Your potential awaits, and together, we can reach new heights in the ever-evolving world of cloud computing. Welcome to the AWS Advantage, and let's explore the boundless horizons of technology together!


Navigating the Cloud Landscape: Unleashing Amazon Web Services (AWS) Potential

In the ever-evolving tech landscape, businesses are in a constant quest for innovation, scalability, and operational optimization. Enter Amazon Web Services (AWS), a robust cloud computing juggernaut offering a versatile suite of services tailored to diverse business requirements. This blog explores the myriad applications of AWS across various sectors, providing a transformative journey through the cloud.

Harnessing Computational Agility with Amazon EC2

Central to the AWS ecosystem is Amazon EC2 (Elastic Compute Cloud), a pivotal player reshaping the cloud computing paradigm. Offering scalable virtual servers, EC2 empowers users to seamlessly run applications and manage computing resources. This adaptability enables businesses to dynamically adjust computational capacity, ensuring optimal performance and cost-effectiveness.

Redefining Storage Solutions

AWS addresses the critical need for scalable and secure storage through services such as Amazon S3 (Simple Storage Service) and Amazon EBS (Elastic Block Store). S3 acts as a dependable object storage solution for data backup, archiving, and content distribution. Meanwhile, EBS provides persistent block-level storage designed for EC2 instances, guaranteeing data integrity and accessibility.

Streamlined Database Management: Amazon RDS and DynamoDB

Database management undergoes a transformation with Amazon RDS, simplifying the setup, operation, and scaling of relational databases. Be it MySQL, PostgreSQL, or SQL Server, RDS provides a frictionless environment for managing diverse database workloads. For enthusiasts of NoSQL, Amazon DynamoDB steps in as a swift and flexible solution for document and key-value data storage.

Networking Mastery: Amazon VPC and Route 53

AWS empowers users to construct a virtual sanctuary for their resources through Amazon VPC (Virtual Private Cloud). This virtual network facilitates the launch of AWS resources within a user-defined space, enhancing security and control. Simultaneously, Amazon Route 53, a scalable DNS web service, ensures seamless routing of end-user requests to globally distributed endpoints.

Global Content Delivery Excellence with Amazon CloudFront

Amazon CloudFront emerges as a dynamic content delivery network (CDN) service, securely delivering data, videos, applications, and APIs on a global scale. This ensures low latency and high transfer speeds, elevating user experiences across diverse geographical locations.

AI and ML Prowess Unleashed

AWS propels businesses into the future with advanced machine learning and artificial intelligence services. Amazon SageMaker, a fully managed service, enables developers to rapidly build, train, and deploy machine learning models. Additionally, Amazon Rekognition provides sophisticated image and video analysis, supporting applications in facial recognition, object detection, and content moderation.

Big Data Mastery: Amazon Redshift and Athena

For organizations grappling with massive datasets, AWS offers Amazon Redshift, a fully managed data warehouse service. It facilitates the execution of complex queries on large datasets, empowering informed decision-making. Simultaneously, Amazon Athena allows users to analyze data in Amazon S3 using standard SQL queries, unlocking invaluable insights.

In conclusion, Amazon Web Services (AWS) stands as an all-encompassing cloud computing platform, empowering businesses to innovate, scale, and optimize operations. From adaptable compute power and secure storage solutions to cutting-edge AI and ML capabilities, AWS serves as a robust foundation for organizations navigating the digital frontier. Embrace the limitless potential of cloud computing with AWS – where innovation knows no bounds.


AWS Architecture Design: A Simple Guide by Sierra-Cedar

In today's digital world, businesses rely heavily on cloud technology to run their applications, store data, and provide services to customers. One of the most popular cloud platforms is Amazon Web Services (AWS). It offers a wide range of tools and services that help companies grow faster, stay secure, and save costs.

At Sierra-Cedar, we help businesses design smart, reliable, and scalable cloud solutions using AWS. In this article, we’ll explain what AWS Architecture Design is and how Sierra-Cedar approaches it in a simple and effective way.

What is AWS Architecture Design?

AWS Architecture Design is the process of planning and building the structure of your cloud environment. Think of it like designing the blueprint for a house, but instead of bricks and rooms, you’re using servers, databases, and applications.

A good AWS architecture should:

Be secure (protect your data and systems)

Be scalable (handle more users or data as your business grows)

Be cost-effective (pay only for what you use)

Be reliable (keep working even if some parts fail)

How Sierra-Cedar Designs AWS Architecture

At Sierra-Cedar, our team follows best practices to build custom cloud solutions for clients across various industries. Here’s a simple overview of our design process:

1. Understanding the Business Needs

We start by talking to the business to understand their goals. For example:

Do they want to move their data to the cloud?

Are they launching a new web application?

Do they need backup and disaster recovery?

2. Choosing the Right AWS Services

AWS offers over 200 services. We pick the right ones based on the business need. Some common services we use:

EC2 (virtual servers)

S3 (secure file storage)

RDS (managed database service)

Lambda (run code without managing servers)

CloudFront (content delivery)

VPC (secure network setup)

3. Designing for Security and Compliance

Security is a top priority. We use:

Identity and Access Management (IAM) to control who can access what.

Encryption to protect sensitive data.

Compliance tools to meet industry standards like HIPAA or GDPR.

4. Ensuring High Availability and Reliability

We design systems that stay online even if something goes wrong. We do this by:

Using multiple Availability Zones (data centers in different locations)

Setting up Auto Scaling to add or remove servers automatically

Using Load Balancers to share traffic across servers

5. Cost Optimization

We help clients save money by:

Choosing the right size of resources

Turning off unused services

Using AWS pricing calculators and billing tools

Example Use Case

Let’s say a healthcare company wants to build a patient portal. Sierra-Cedar would:

Use EC2 to host the application

Store patient files in S3

Use RDS for the medical database

Set up CloudWatch for monitoring

Ensure data privacy with IAM and encryption

Why Choose Sierra-Cedar?

Sierra-Cedar brings years of experience in IT consulting and cloud transformation. Our certified AWS experts work closely with clients to design solutions that are practical, secure, and future-ready.

Whether you're just starting with AWS or looking to improve your current setup, Sierra-Cedar can guide you every step of the way.

Conclusion

AWS Architecture Design doesn’t have to be complicated. With the right approach and the right partner, like Sierra-Cedar, your business can take full advantage of the cloud. From planning to execution, we help you build a strong, flexible, and secure AWS environment.


Amazon Timestream helps AWS InfluxDB databases

InfluxDB on AWS

AWS InfluxDB

Amazon Timestream now supports InfluxDB as a database engine. With this capability, you can run near real-time time-series applications using InfluxDB and open-source APIs, including the open-source Telegraf agents that collect time-series observations.
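Telegraf and other InfluxDB clients send observations in InfluxDB line protocol, a plain-text format of the shape `measurement,tags fields timestamp`. The sketch below formats one point by hand to show that shape. The measurement, tag, and field names are invented, and a real application would normally use an InfluxDB client library instead.

```python
def _escape(value: str, chars: str) -> str:
    """Backslash-escape each of the given special characters."""
    for ch in chars:
        value = value.replace(ch, "\\" + ch)
    return value


def _format_field(value) -> str:
    # bool must be checked before int, because bool is a subclass of int.
    if isinstance(value, bool):
        return "true" if value else "false"
    if isinstance(value, int):
        return f"{value}i"          # integers carry an "i" suffix
    if isinstance(value, float):
        return repr(value)
    text = str(value).replace("\\", "\\\\").replace('"', '\\"')
    return f'"{text}"'              # strings are double-quoted


def to_line_protocol(measurement: str, tags: dict, fields: dict, timestamp_ns: int) -> str:
    """Format one point as an InfluxDB line protocol line."""
    if not fields:
        raise ValueError("a point needs at least one field")
    head = _escape(measurement, ", ")
    for key in sorted(tags):
        head += f",{_escape(key, ',= ')}={_escape(tags[key], ',= ')}"
    body = ",".join(
        f"{_escape(key, ',= ')}={_format_field(val)}" for key, val in fields.items()
    )
    return f"{head} {body} {timestamp_ns}"


if __name__ == "__main__":
    print(to_line_protocol(
        "cpu",
        {"host": "web 1", "region": "us-east-1"},
        {"usage": 0.64, "cores": 4},
        1700000000000000000,
    ))
```

Tags are indexed metadata and fields hold the measured values, which is why they are formatted differently.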

InfluxDB vs AWS Timestream

Timestream now offers you a choice between two database engines: Timestream for InfluxDB and Timestream for LiveAnalytics.

Use the Timestream for InfluxDB engine if your use cases need InfluxDB capabilities such as Flux queries or near real-time time-series queries. The existing Timestream for LiveAnalytics engine is a good fit if you need to run SQL queries on petabytes of time-series data in seconds and ingest more than tens of terabytes of data per minute.

With Timestream's support for InfluxDB, you can use a managed instance that is automatically configured for high availability and performance. You can also increase resilience by setting up Multi-AZ support for your InfluxDB databases.

Timestream for LiveAnalytics and Timestream for InfluxDB work in tandem to provide large-scale, low-latency time-series data intake.

How to create database in InfluxDB

You can start by setting up an instance of InfluxDB. Now you can open the Timestream console, choose Create Influx database under InfluxDB databases in Timestream for InfluxDB.

You can provide the database credentials for the InfluxDB instance on the next page.

You can also define the volume and kind of storage to meet your requirements, as well as your instance class, in the instance configuration.

In the next section, choose either a single InfluxDB instance or a multi-Availability Zone deployment, which synchronously replicates data to a standby database in a different Availability Zone. In a multi-AZ deployment, Timestream for InfluxDB automatically fails over to the standby instance if a failure occurs, without data loss.

Next, configure connectivity to specify how to connect to your InfluxDB instance. In this section you can set the virtual private cloud (VPC), subnets, network type, and database port. You can also make your InfluxDB instance publicly accessible by specifying public subnets and setting public access to publicly accessible, which lets Amazon Timestream assign a public IP address to your InfluxDB instance. If you choose this option, make sure you have appropriate security measures in place to protect your InfluxDB instances.

In this walkthrough, the InfluxDB instance is configured as not publicly accessible, which limits access to the VPC and subnets defined earlier in this section.

Once connectivity is configured, define the database parameter group and the log delivery settings. In the parameter group, you specify the configurable parameters you want to use for your InfluxDB database. In the log delivery settings, you specify the Amazon Simple Storage Service (Amazon S3) bucket to which system logs are exported. See the AWS documentation for the AWS Identity and Access Management (IAM) policy that the Amazon S3 bucket requires.

When you are satisfied with the configuration, choose Create Influx database.

Once your InfluxDB instance is created, you can see more information on its detail page.
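The console choices described above (credentials, instance class, storage, deployment type, connectivity) can also be gathered into a single request structure. The following is a minimal sketch, not an official example: the helper name `build_influxdb_instance_request` is hypothetical, the key names are assumptions modeled on the Timestream for InfluxDB CreateDbInstance API, and the instance class, storage type, and volume size are illustrative values that should be checked against the current API reference.

```python
from typing import Any


def build_influxdb_instance_request(
    name: str,
    username: str,
    password: str,
    organization: str,
    bucket: str,
    subnet_ids: list[str],
    security_group_ids: list[str],
    multi_az: bool = False,
    publicly_accessible: bool = False,
) -> dict[str, Any]:
    """Collect the console choices into one request dictionary (hypothetical helper)."""
    if not subnet_ids:
        raise ValueError("at least one subnet is required")
    return {
        # Database credentials and initial organization/bucket
        "name": name,
        "username": username,
        "password": password,
        "organization": organization,
        "bucket": bucket,
        # Instance configuration (all three values are assumptions)
        "dbInstanceType": "db.influx.medium",
        "dbStorageType": "InfluxIOIncludedT1",
        "allocatedStorage": 20,
        # Connectivity: private by default, as in the walkthrough
        "vpcSubnetIds": list(subnet_ids),
        "vpcSecurityGroupIds": list(security_group_ids),
        "publiclyAccessible": publicly_accessible,
        # Single instance or multi-AZ deployment with a standby
        "deploymentType": "WITH_MULTIAZ_STANDBY" if multi_az else "SINGLE_AZ",
    }


request = build_influxdb_instance_request(
    name="demo-influxdb",
    username="admin",
    password="replace-me",                   # placeholder, never hard-code secrets
    organization="demo-org",
    bucket="demo-bucket",
    subnet_ids=["subnet-0example"],          # hypothetical IDs
    security_group_ids=["sg-0example"],
    multi_az=True,
)
print(request["deploymentType"])
```

If the key names match the API, a dictionary like this could be passed as keyword arguments to an AWS SDK client for Timestream for InfluxDB; that step is left out here because it needs credentials and a real VPC.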

With the InfluxDB instance created, you can access the InfluxDB user interface (UI). If the instance is publicly accessible, choose InfluxDB UI in the console to open it. In this walkthrough the instance is private, so you need SSH tunneling through an Amazon EC2 instance in the same VPC as the InfluxDB instance to reach the InfluxDB UI.

Using the URL endpoint from the detail page, sign in to the InfluxDB UI with the username and password you set at creation.

Image credit to AWS

You can also create tokens with the Influx command line interface (CLI). Before creating a token, create a configuration so that the CLI can interact with your InfluxDB instance.

With the InfluxDB configuration created, you can now create an operator, all-access, or read/write token. An all-access token, for example, authorizes access to every resource in the specified organization.
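The two CLI steps just described, creating a configuration and then an all-access token, can be sketched by assembling the `influx` commands in Python. This is an illustrative sketch only: the endpoint URL, configuration name, organization, and username are hypothetical placeholders, and the commands are printed rather than executed so the snippet runs without the CLI installed.

```python
import shlex


def influx_config_create(config_name: str, host_url: str, org: str, username: str) -> list[str]:
    """Build the `influx config create` command that registers a connection."""
    return [
        "influx", "config", "create",
        "--config-name", config_name,
        "--host-url", host_url,
        "--org", org,
        "--username-password", username,  # the CLI prompts for the password
        "--active",
    ]


def influx_auth_create_all_access(org: str) -> list[str]:
    """Build the `influx auth create` command for an all-access token."""
    return ["influx", "auth", "create", "--org", org, "--all-access"]


# Hypothetical values; replace with the endpoint from your instance detail page.
config_cmd = influx_config_create(
    "demo", "https://example-endpoint.invalid:8086", "demo-org", "admin"
)
token_cmd = influx_auth_create_all_access("demo-org")

print(shlex.join(config_cmd))
print(shlex.join(token_cmd))
```

Passing either list to `subprocess.run` would execute it on a machine where the Influx CLI is installed and the instance is reachable.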

With the token your use case requires, you can start ingesting data into your InfluxDB instance using tools such as the Telegraf agent, InfluxDB client libraries, and the Influx CLI.
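Whichever tool you use, data reaches InfluxDB as line protocol: a measurement name, optional tags, one or more fields, and an optional timestamp. The sketch below formats a single point by hand to show that shape. It is a simplified illustration with hypothetical measurement and tag names, not a replacement for the client libraries, which handle more edge cases.

```python
def _escape(value: str, chars: str) -> str:
    """Backslash-escape each of the given characters."""
    for ch in chars:
        value = value.replace(ch, "\\" + ch)
    return value


def _format_field(value) -> str:
    """Render a field value: booleans, integers (i suffix), floats, or quoted strings."""
    if isinstance(value, bool):  # bool must be checked before int
        return "true" if value else "false"
    if isinstance(value, int):
        return f"{value}i"
    if isinstance(value, float):
        return repr(value)
    text = str(value).replace("\\", "\\\\").replace('"', '\\"')
    return f'"{text}"'


def to_line_protocol(measurement: str, tags: dict, fields: dict, timestamp_ns=None) -> str:
    """Format one point as an InfluxDB line protocol string."""
    if not fields:
        raise ValueError("a point needs at least one field")
    head = _escape(measurement, ", ")
    for key in sorted(tags):  # sorted tag keys are recommended for write performance
        head += "," + _escape(key, ",= ") + "=" + _escape(str(tags[key]), ",= ")
    body = ",".join(
        _escape(key, ",= ") + "=" + _format_field(value) for key, value in fields.items()
    )
    line = f"{head} {body}"
    if timestamp_ns is not None:
        line += f" {timestamp_ns}"
    return line


point = to_line_protocol(
    "cpu",
    {"region": "us-east-1", "host": "server 01"},
    {"usage": 0.64, "cores": 4},
    1700000000000000000,
)
print(point)
```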

Finally, query the data in the InfluxDB UI: open the Data Explorer page, write a simple Flux script, and choose Submit.
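To give a sense of what a simple Flux script looks like, the sketch below builds one as a string: it reads from a bucket, limits the time range, and filters on a measurement. The bucket and measurement names are hypothetical, and the helper only produces text that you could paste into Data Explorer.

```python
def basic_flux_query(bucket: str, measurement: str, start: str = "-1h") -> str:
    """Return a minimal Flux script: source bucket, time range, measurement filter."""
    return (
        f'from(bucket: "{bucket}")\n'
        f"  |> range(start: {start})\n"
        f'  |> filter(fn: (r) => r._measurement == "{measurement}")'
    )


flux = basic_flux_query("demo-bucket", "cpu")
print(flux)
```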

With Timestream for InfluxDB, you can keep using your existing tools to interact with the database and build applications on InfluxDB. With the multi-AZ configuration, you can increase the availability of your InfluxDB data without managing the underlying infrastructure.

AWS and InfluxDB collaboration

In celebration of this launch, InfluxData’s founder and chief technology officer, Paul Dix, shared the following remarks on this collaboration:

The public cloud will fuel open source in the future, reaching the largest community via simple entry points and useful user interfaces. Amazon Timestream for InfluxDB delivers on that aim. Our collaboration with AWS makes it simpler than ever for developers to create and grow their time-series workloads on AWS by using the open source InfluxDB database to provide real-time insights on time-series data.

Important information

Here are some more details that you should be aware of:

Availability:

Timestream for InfluxDB is now generally available in the following AWS Regions: Europe (Frankfurt, Ireland, Stockholm), Asia Pacific (Mumbai, Singapore, Sydney, Tokyo), US East (Ohio, N. Virginia), and US West (Oregon).

Migration scenario:

Backup InfluxDB database

To move from a self-managed InfluxDB instance, you can restore a backup of the existing InfluxDB database into Timestream for InfluxDB. To move from the existing Timestream for LiveAnalytics engine to Timestream for InfluxDB, you can use Amazon S3. Visit the page Migrating data from self-managed InfluxDB to Timestream for InfluxDB to learn more about how to migrate data for different use cases.

Version supported by Timestream for InfluxDB: At the moment, the open source 2.7.5 version of InfluxDB is supported.

InfluxDB AWS Pricing

Visit Amazon Timestream pricing to find out more about prices.

Read more on govindhtech.com

#amazon#aws#influxdb#liveanalytics#sqlqueries#amazons3#amazonec2#vpc#technology#technews#govindhtech

0 notes

Video

youtube

Understanding AWS Global Infrastructure: Key Regions, Zones & Services

Introduction

AWS Global Infrastructure forms the backbone of Amazon Web Services, ensuring secure, scalable, and globally distributed cloud services. This infrastructure is designed to provide high availability, low latency, and secure data processing to millions of customers worldwide.

---

Key Components of AWS Global Infrastructure

1. AWS Regions
- Definition: A Region is a physical location around the world where AWS clusters data centers. Each Region is isolated from the others to enhance fault tolerance and stability.
- Key Facts:
  - AWS currently has 30+ Regions globally (check the latest on AWS's website).
  - Regions are independent and designed to meet local compliance and latency requirements.
- Examples:
  - US East (N. Virginia) – us-east-1
  - Asia Pacific (Mumbai) – ap-south-1

2. Availability Zones (AZs)
- Definition: An AZ consists of one or more discrete data centers within a Region, connected to the Region's other AZs by low-latency, redundant networking.
- Key Features:
  - Each Region has multiple AZs; AWS documents a minimum of three per Region.
  - AZs are isolated from each other to prevent failures from propagating across zones.
- Examples: In us-east-1, AZs include us-east-1a, us-east-1b, etc.

3. Local Zones
- Definition: Local Zones bring AWS services closer to end-users in metro areas to reduce latency.
- Use Cases: Gaming, Media & Entertainment, Real-Time Analytics.
- Example: Local Zones in Los Angeles support high-performance workloads.

4. Edge Locations
- Definition: Edge locations are endpoints for AWS services like CloudFront, allowing faster data delivery to users.
- Purpose: Minimize latency by caching content closer to the end-user.
- Global Reach: More than 400 Edge Locations globally.

5. Regional Edge Caches
- Definition: Larger caches situated between AWS Regions and Edge Locations.
- Purpose: Improve cache hit rates for large, frequently accessed content.
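The naming examples above follow a pattern: an Availability Zone name is the Region code plus a single letter (us-east-1a belongs to us-east-1). A small sketch of that relationship follows. The helper `split_az` is hypothetical and handles only standard AZ names; longer Local Zone names are rejected.

```python
import re

# Region code (e.g. us-east-1, ap-south-1, us-gov-west-1) followed by one zone letter.
AZ_PATTERN = re.compile(r"(?P<region>[a-z]{2}(?:-[a-z]+)+-\d+)(?P<zone>[a-z])")


def split_az(az_name: str) -> tuple[str, str]:
    """Split a standard Availability Zone name into (region code, zone letter)."""
    match = AZ_PATTERN.fullmatch(az_name)
    if match is None:
        raise ValueError(f"not a standard Availability Zone name: {az_name!r}")
    return match.group("region"), match.group("zone")


for az in ("us-east-1a", "us-east-1b", "ap-south-1c"):
    region, zone = split_az(az)
    print(f"{az}: region={region}, zone={zone}")
```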

---

AWS Services Leveraging Global Infrastructure

Compute Services
- Amazon EC2: Deploy globally with instances in specific Regions/AZs.
- AWS Lambda: Run serverless functions closer to end-users via AWS Regions.

Networking Services
- Amazon VPC: Design private networks across Regions and AZs.
- AWS Direct Connect: Establish private connections to AWS from on-premises environments.

Storage Services
- Amazon S3: Store data redundantly across multiple AZs.
- EBS & EFS: Provide high availability within specific Regions.

Content Delivery Services
- Amazon CloudFront: Use Edge Locations to cache and deliver content rapidly.

---

Why AWS Global Infrastructure Matters

1. Scalability: Easily scale applications across multiple Regions to meet demand.

2. High Availability: Deploy resources in multiple AZs to ensure fault-tolerant architectures.

3. Low Latency: Place workloads closer to users with Regions, AZs, and Edge Locations.

4. Compliance and Data Residency: Regions comply with local data regulations (e.g., GDPR in Europe).

---

Practical Example
- Scenario: A company based in the US wants to provide low-latency services to customers in Asia.
- Solution:
  1. Deploy resources in the Asia Pacific (Singapore) Region.
  2. Use CloudFront Edge Locations in Asia for faster content delivery.
  3. Set up VPC peering between US and Asia Regions for secure data transfer.

---

Conclusion

AWS Global Infrastructure is the foundation of AWS's reliability and performance. By understanding the components (Regions, AZs, Local Zones, Edge Locations, and Regional Edge Caches), you can design applications that are resilient, scalable, and efficient.

#AWSGlobalInfrastructure #AWSRegions #AvailabilityZones #AWSEdgeLocations #CloudInfrastructure #AWSNetwork #AWSServices #CloudComputing #AWSArchitecture #TechTutorial #AWSBeginners #DevOpsCloud #AWSTraining #CloudDesign #cloudolus #cloudoluspro


0 notes

Text

What are the Core Amazon Cloud Services Every Business Should Know?

Numerous Amazon Cloud Services offered by Amazon Web Services (AWS) have the potential to significantly alter how companies run. Understanding these essential Amazon Cloud Services is crucial for growth and efficiency in the digital age, regardless of the size of the business. The following are some of the most crucial Amazon Cloud Services that all companies need to be aware of:

Amazon EC2 (Elastic Compute Cloud): This is a fundamental offering among Amazon Cloud Services, giving you virtual servers in the cloud. Think of it as a computer you can rent and customize with the exact amount of processing power, memory, and storage you need. You can scale these resources up or down as your business demands change, meaning you only pay for what you use. This flexibility is a key advantage of Amazon Cloud Services.

Amazon S3 (Simple Storage Service): One of the most widely used Amazon Cloud Services for data storage is Amazon S3. This object storage service can store a wide range of items, including website images and large data backups. S3 is a dependable location to store your critical data because of its reputation for durability and availability. This Amazon Cloud Service is used by many companies for hosting static webpages, disaster recovery, and backup.

AWS Lambda: This is the serverless computing option among Amazon Cloud Services. With Lambda, you run your code without managing servers. You upload your code, and when an event occurs (such as a user request or a new file being uploaded to S3), Lambda executes it automatically. For many applications, it is a very affordable Amazon Cloud Service because you pay only for the compute time you use.

Amazon VPC (Virtual Private Cloud): Security is a top concern for any business. Amazon VPC lets you create a private, isolated section of the Amazon Cloud Services where you can launch your AWS resources in a virtual network that you define. This gives you complete control over your network environment, including IP address ranges, subnets, route tables, and network gateways. It's a foundational Amazon Cloud Service for building secure cloud environments.

Amazon CloudWatch: Amazon CloudWatch is an observability and monitoring service to monitor your cloud resources. It gathers operational and monitoring data through events, metrics, and logs. Depending on the condition of your Amazon Cloud Services resources, CloudWatch can be used to automate tasks, view data, and set alarms. This aids companies in maintaining efficiency and promptly addressing problems.

AWS IAM (Identity and Access Management): Controlling who has access to your Amazon Cloud Services resources is essential. With the help of AWS IAM, you can securely manage users and their rights. It is possible to create users, groups, and roles as well as define policies that outline their capabilities. IAM is a crucial component of security for all Amazon Cloud Services.

Amazon CloudFront: Amazon CloudFront serves as a content delivery network (CDN) to swiftly distribute material to users throughout the globe. It expedites the delivery of your web content to users with low latency, including streaming, static, and dynamic content. Enhancing the user experience with these Amazon Cloud Services is especially crucial for websites and applications that have a large worldwide user base.

AWS Auto Scaling: This Amazon Cloud Service helps your applications automatically adjust capacity to maintain steady, predictable performance at the lowest possible cost. Auto Scaling monitors your applications and automatically adds or removes compute capacity in response to changes in demand. This ensures your applications can handle traffic spikes without manual intervention.
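Returning to the AWS Lambda entry above, the event-driven model is easiest to see in code. The sketch below is a minimal handler for the "new file uploaded to S3" case. It assumes the standard S3 event notification shape (a `Records` list with bucket name and object key); the bucket and key in the sample event are hypothetical, and the handler only reports what was uploaded.

```python
from urllib.parse import unquote_plus


def handler(event, context):
    """Lambda entry point: list the S3 objects named in the incoming event."""
    uploaded = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        uploaded.append(f"s3://{bucket}/{key}")
    return {"processed": len(uploaded), "objects": uploaded}


# Local invocation with a hand-written sample event (hypothetical names).
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "example-bucket"},
                "object": {"key": "reports/summary+2024.csv"}}}
    ]
}
result = handler(sample_event, None)
print(result)
```

Because the handler is a plain function, it can be exercised locally like this before it is deployed.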

A lot of businesses provide services that are based on or related to Amazon Cloud Services. These include major international consulting firms that use Amazon Cloud Services to offer full-service cloud migration, management, and optimization, such as Accenture, Capgemini, and Sumasoft. Additionally, there are specialist cloud service providers like Logicworks and Rackspace Technology that only offer managed Amazon Cloud Services and cloud solutions. These organizations assist companies in navigating cloud complexity and optimizing their investments in Amazon Cloud Services.

0 notes

Text

From Novice to Pro: Master the Cloud with AWS Training!

In today's rapidly evolving technology landscape, cloud computing has emerged as a game-changer, providing businesses with unparalleled flexibility, scalability, and cost-efficiency. Among the various cloud platforms available, Amazon Web Services (AWS) stands out as a leader, offering a comprehensive suite of services and solutions. Whether you are a fresh graduate eager to kickstart your career or a seasoned professional looking to upskill, AWS training can be the gateway to success in the cloud. This article explores the key components of AWS training, the reasons why it is a compelling choice, the promising placement opportunities it brings, and the numerous benefits it offers.

Key Components of AWS Training

1. Foundational Knowledge: Building a Strong Base

AWS training starts by laying a solid foundation of cloud computing concepts and AWS-specific terminology. It covers essential topics such as virtualization, storage types, networking, and security fundamentals. This groundwork ensures that even individuals with little to no prior knowledge of cloud computing can grasp the intricacies of AWS technology easily.

2. Core Services: Exploring the AWS Portfolio

Once the fundamentals are in place, AWS training delves into the vast array of core services offered by the platform. Participants learn about compute services like Amazon Elastic Compute Cloud (EC2), storage options such as Amazon Simple Storage Service (S3), and database solutions like Amazon Relational Database Service (RDS). Additionally, they gain insights into services that enhance performance, scalability, and security, such as Amazon Virtual Private Cloud (VPC), AWS Identity and Access Management (IAM), and AWS CloudTrail.

3. Specialized Domains: Nurturing Expertise

As participants progress through the training, they have the opportunity to explore advanced and specialized areas within AWS. These can include topics like machine learning, big data analytics, Internet of Things (IoT), serverless computing, and DevOps practices. By delving into these niches, individuals can gain expertise in specific domains and position themselves as sought-after professionals in the industry.

Reasons to Choose AWS Training

1. Industry Dominance: Aligning with the Market Leader

One of the significant reasons to choose AWS training is the platform's unrivaled market dominance. With a staggering market share, AWS is trusted and adopted by businesses across industries worldwide. By acquiring AWS skills, individuals become part of the ecosystem that powers the digital transformation of numerous organizations, enhancing their career prospects significantly.

2. Comprehensive Learning Resources: Abundance of Educational Tools

AWS training offers a wealth of comprehensive learning resources, ranging from extensive documentation, tutorials, and whitepapers to hands-on labs and interactive courses. These resources cater to different learning preferences, enabling individuals to choose their preferred mode of learning and acquire a deep understanding of AWS services and concepts.

3. Recognized Certifications: Validating Expertise

AWS certifications are globally recognized credentials that validate an individual's competence in using AWS services and solutions effectively. By completing AWS training and obtaining certifications like AWS Certified Solutions Architect or AWS Certified Developer, individuals can boost their professional credibility, open doors to new job opportunities, and command higher salaries in the job market.

Placement Opportunities

Upon completing AWS training, individuals can explore a multitude of placement opportunities. The demand for professionals skilled in AWS is soaring, as organizations increasingly migrate their infrastructure to the cloud or adopt hybrid cloud strategies. From startups to multinational corporations, industries spanning finance, healthcare, retail, and more seek talented individuals who can architect, develop, and manage cloud-based solutions using AWS. This robust demand translates into a plethora of rewarding career options and a higher likelihood of finding positions that align with one's interests and aspirations.

In conclusion, mastering the cloud with AWS training at ACTE institute provides individuals with a solid foundation, comprehensive knowledge, and specialized expertise in one of the most dominant cloud platforms available. The reasons to choose AWS training are compelling, ranging from the platform's market position to its comprehensive learning resources and globally recognized certifications.

9 notes

·

View notes

Text

Navigating the Cloud: Unleashing Amazon Web Services' (AWS) Impact on Digital Transformation

In the ever-evolving realm of technology, cloud computing stands as a transformative force, offering unparalleled flexibility, scalability, and cost-effectiveness. At the forefront of this paradigm shift is Amazon Web Services (AWS), a comprehensive cloud computing platform provided by Amazon.com. For those eager to elevate their proficiency in AWS, specialized training initiatives like AWS Training in Pune offer invaluable insights into maximizing the potential of AWS services.

Exploring AWS: A Catalyst for Digital Transformation

As we traverse the dynamic landscape of cloud computing, AWS emerges as a pivotal player, empowering businesses, individuals, and organizations to fully embrace the capabilities of the cloud. Let's delve into the multifaceted ways in which AWS is reshaping the digital landscape and providing a robust foundation for innovation.

Decoding the Heart of AWS

AWS in a Nutshell: Amazon Web Services serves as a robust cloud computing platform, delivering a diverse range of scalable and cost-effective services. Tailored to meet the needs of individual users and large enterprises alike, AWS acts as a gateway, unlocking the potential of the cloud for various applications.

Core Function of AWS: At its essence, AWS is designed to offer on-demand computing resources over the internet. This revolutionary approach eliminates the need for substantial upfront investments in hardware and infrastructure, providing users with seamless access to a myriad of services.

AWS Toolkit: Key Services Redefined

Empowering Scalable Computing: Through Elastic Compute Cloud (EC2) instances, AWS furnishes virtual servers, enabling users to dynamically scale computing resources based on demand. This adaptability is paramount for handling fluctuating workloads without the constraints of physical hardware.

Versatile Storage Solutions: AWS presents a spectrum of storage options, such as Amazon Simple Storage Service (S3) for object storage, Amazon Elastic Block Store (EBS) for block storage, and Amazon Glacier for long-term archival. These services deliver robust and scalable solutions to address diverse data storage needs.

Streamlining Database Services: Managed database services like Amazon Relational Database Service (RDS) and Amazon DynamoDB (NoSQL database) streamline efficient data storage and retrieval. AWS simplifies the intricacies of database management, ensuring both reliability and performance.

AI and Machine Learning Prowess: AWS empowers users with machine learning services, exemplified by Amazon SageMaker. This facilitates the seamless development, training, and deployment of machine learning models, opening new avenues for businesses integrating artificial intelligence into their applications. To master AWS intricacies, individuals can leverage the Best AWS Online Training for comprehensive insights.

In-Depth Analytics: Amazon Redshift and Amazon Athena play pivotal roles in analyzing vast datasets and extracting valuable insights. These services empower businesses to make informed, data-driven decisions, fostering innovation and sustainable growth.

Networking and Content Delivery Excellence: AWS services, such as Amazon Virtual Private Cloud (VPC) for network isolation and Amazon CloudFront for content delivery, ensure low-latency access to resources. These features enhance the overall user experience in the digital realm.

Commitment to Security and Compliance: With an unwavering emphasis on security, AWS provides a comprehensive suite of services and features to fortify the protection of applications and data. Furthermore, AWS aligns with various industry standards and certifications, instilling confidence in users regarding data protection.

Championing the Internet of Things (IoT): AWS IoT services empower users to seamlessly connect and manage IoT devices, collect and analyze data, and implement IoT applications. This aligns seamlessly with the burgeoning trend of interconnected devices and the escalating importance of IoT across various industries.

Closing Thoughts: AWS, the Catalyst for Transformation

In conclusion, Amazon Web Services stands as a pioneering force, reshaping how businesses and individuals harness the power of the cloud. By providing a dynamic, scalable, and cost-effective infrastructure, AWS empowers users to redirect their focus towards innovation, unburdened by the complexities of managing hardware and infrastructure. As technology advances, AWS remains a stalwart, propelling diverse industries into a future brimming with endless possibilities. The journey into the cloud with AWS signifies more than just migration; it's a profound transformation, unlocking novel potentials and propelling organizations toward an era of perpetual innovation.

2 notes

·

View notes

Text

10 Ways AWS Cloud Service Boosts Performance, Security, and Agility

In today’s fast-paced digital landscape, performance, security, and agility are no longer optional—they are critical. Businesses require infrastructure that not only scales on demand but also adapts quickly to changing market conditions, protects sensitive data, and delivers a seamless customer experience.

Enter Amazon Web Services (AWS)—the world’s most comprehensive and widely adopted cloud platform. With over 200 fully featured services, AWS enables startups, enterprises, and government organizations to innovate faster, reduce IT overhead, and confidently navigate the digital future.

In this article, we’ll explore 10 powerful ways AWS Cloud Services boost your business’s performance, security, and agility—and why thousands of organizations are making the switch.

1. Elastic Scalability to Handle Any Workload

One of AWS’s biggest strengths is its elasticity—you can instantly scale computing resources up or down based on demand. This ensures:

Optimal performance during traffic spikes

Cost efficiency during quieter periods

No need to overprovision hardware

For example, e-commerce websites can seamlessly handle seasonal traffic surges, and application developers can test at scale without worrying about infrastructure limits.

With services like Amazon EC2 Auto Scaling and AWS Lambda, AWS delivers the flexibility modern businesses need to operate efficiently at any scale.
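The idea of scaling up or down with demand can be illustrated with a proportional rule: size the fleet so that the observed metric returns to a target value, within minimum and maximum bounds. This is a simplified sketch of that reasoning, not the algorithm Amazon EC2 Auto Scaling uses internally; the function name and the numbers are illustrative assumptions.

```python
import math


def desired_capacity(current: int, metric: float, target: float,
                     min_size: int, max_size: int) -> int:
    """Proportionally resize so the per-instance metric moves toward the target."""
    if target <= 0:
        raise ValueError("target must be positive")
    proposed = math.ceil(current * metric / target)
    # Never go below the minimum or above the maximum fleet size.
    return max(min_size, min(max_size, proposed))


# Traffic spike: 4 instances at 90% CPU with a 60% target.
scale_out = desired_capacity(4, 90.0, 60.0, min_size=2, max_size=20)
# Quiet period: 6 instances at 20% CPU with the same target.
scale_in = desired_capacity(6, 20.0, 60.0, min_size=2, max_size=20)
print(scale_out, scale_in)
```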

2. Global Low-Latency Performance

AWS offers a massive global infrastructure with 105 Availability Zones across 33 geographic regions (and growing). This global reach helps businesses:

Deploy applications closer to end users

Reduce latency for global audiences

Improve load times and responsiveness

Whether you’re serving users in Sydney or New York, AWS ensures your applications run smoothly and quickly. Services like Amazon CloudFront, AWS’s content delivery network (CDN), further enhance speed and performance worldwide.

3. Built-In Security and Compliance

Security is embedded in AWS’s DNA. AWS delivers end-to-end data protection, including:

Encryption at rest and in transit

Identity and access control via AWS IAM

Network isolation through VPCs

Automatic security patching

Plus, AWS complies with leading industry standards like ISO 27001, SOC 1/2/3, GDPR, HIPAA, and FedRAMP.

With tools like AWS Shield, AWS WAF, and Amazon GuardDuty, you get proactive threat detection and real-time protection—so your team can focus on growth, not security risks.
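Network isolation through VPCs usually starts with dividing a private address range into subnets. The sketch below uses Python's standard `ipaddress` module to plan that split. The CIDR block and subnet size are illustrative assumptions, and the usable-address count reflects the five addresses AWS reserves in every subnet.

```python
import ipaddress
from itertools import islice

AWS_RESERVED_PER_SUBNET = 5  # AWS reserves the first four and the last address


def plan_subnets(vpc_cidr: str, new_prefix: int, count: int) -> list[dict]:
    """Carve `count` equally sized subnets out of a VPC CIDR block."""
    vpc = ipaddress.ip_network(vpc_cidr)
    subnets = list(islice(vpc.subnets(new_prefix=new_prefix), count))
    if len(subnets) < count:
        raise ValueError("VPC range is too small for the requested subnets")
    return [
        {"cidr": str(net), "usable_hosts": net.num_addresses - AWS_RESERVED_PER_SUBNET}
        for net in subnets
    ]


plan = plan_subnets("10.0.0.0/16", new_prefix=24, count=3)
for subnet in plan:
    print(subnet)
```

A common layout places one such subnet in each Availability Zone, so the plan above would cover three zones.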

4. Faster Innovation with DevOps and Automation

AWS accelerates development cycles by supporting DevOps best practices and automation. Services like:

AWS CodePipeline (CI/CD automation)

AWS CloudFormation (infrastructure as code)

AWS Elastic Beanstalk (application deployment)

…enable teams to test, build, and deploy applications rapidly.

This means you can push updates faster, roll back issues quickly, and continuously improve products—giving you a strong edge over slower-moving competitors.
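Infrastructure as code, the role AWS CloudFormation plays in the list above, means describing resources in a template that can be versioned and reviewed like any other file. The sketch below generates a minimal template for one S3 bucket with versioning enabled. The logical ID `ExampleBucket` and the description are hypothetical, and the snippet only prints the JSON; it does not deploy anything.

```python
import json


def versioned_bucket_template(logical_id: str = "ExampleBucket") -> dict:
    """Return a minimal CloudFormation template with one versioned S3 bucket."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Illustrative template: a single S3 bucket with versioning",
        "Resources": {
            logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    "VersioningConfiguration": {"Status": "Enabled"}
                },
            }
        },
    }


template = versioned_bucket_template()
print(json.dumps(template, indent=2))
```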

5. Advanced Analytics and Machine Learning Capabilities

AWS makes it easy to derive insights from your data and apply AI and ML to real-world business problems.

Key services include:

Amazon Redshift (data warehousing)

AWS Glue (ETL and data prep)

Amazon SageMaker (build, train, and deploy ML models)

Whether you’re analyzing customer behavior, predicting equipment failures, or powering intelligent chatbots, AWS gives you the tools to transform data into decisions.

This combination of performance and intelligence helps businesses become more agile and future-ready.

6. Pay-as-You-Go Pricing Model

One of the most attractive aspects of AWS is its pay-as-you-go pricing. You only pay for what you use—no upfront costs, no long-term contracts.

This benefits your business by:

Lowering capital expenditure

Reducing waste from unused resources

Aligning cloud costs with actual business growth

Additionally, services like AWS Cost Explorer and AWS Budgets help you track spending and optimize costs in real-time.
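A short calculation shows why paying only for what you use can matter. The sketch compares keeping peak capacity running all month with running a small baseline and adding capacity only during busy hours. The hourly rate, instance counts, and hours are made-up numbers for illustration, not AWS prices.

```python
HOURS_PER_MONTH = 730          # common approximation for one month
HOURLY_RATE = 0.10             # hypothetical price per instance-hour


def monthly_cost(instance_hours: float, hourly_rate: float = HOURLY_RATE) -> float:
    """Cost of a given number of instance-hours, rounded to cents."""
    return round(instance_hours * hourly_rate, 2)


# Option 1: provision for peak (10 instances) all month.
always_on_hours = 10 * HOURS_PER_MONTH
# Option 2: 2 baseline instances all month, plus 8 extra for 60 busy hours.
on_demand_hours = 2 * HOURS_PER_MONTH + 8 * 60

always_on = monthly_cost(always_on_hours)
on_demand = monthly_cost(on_demand_hours)
print(f"always on: {always_on:.2f}, pay as you go: {on_demand:.2f}")
```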

For businesses focused on agility and financial control, this flexible pricing model is a major advantage.

7. Disaster Recovery and High Availability

Downtime can cost companies thousands—or even millions—of dollars. AWS helps mitigate this risk with high availability architecture and robust disaster recovery options.

With multi-region deployments, automated backups, and cross-region replication, AWS ensures:

Data resilience even in the face of outages

Quick failover and business continuity

Reduced risk of data loss

Services like Amazon RDS and Amazon S3 offer built-in backup and versioning features, giving your data the redundancy it needs for true reliability.

8. Simplified Application Modernization

Legacy systems slow you down. AWS helps businesses modernize applications through:

Containerization with Amazon ECS and EKS

Serverless architecture with AWS Lambda

Microservices deployment strategies

These solutions decouple traditional monoliths into flexible, scalable services. That means faster updates, better performance, and a modern architecture that adapts to change.

If your goal is agility and continuous innovation, AWS is your ideal modernization partner.

9. Robust Ecosystem and Partner Support

AWS is more than a cloud platform—it’s an ecosystem. With thousands of AWS Partner Network (APN) members and Marketplace solutions, you can easily find tools, integrations, and services to support your growth.

From managed services providers (MSPs) to cloud migration consultants, AWS partners help businesses implement, optimize, and manage cloud environments effectively.

This ecosystem ensures you’re never alone in your cloud journey—and can tap into expert help whenever needed.

10. Continuous Updates and Industry-Leading Innovation

AWS isn’t static. Amazon adds hundreds of new features and services to AWS each year. This culture of continuous innovation ensures your business always has access to:

The latest cloud technologies

Cutting-edge infrastructure improvements

New ways to secure, scale, and enhance performance

Whether it's launching Graviton processors for better efficiency or releasing new AI-powered tools, AWS helps you stay ahead of technological trends and competitors.

Final Thoughts: Future-Proof Your Business with AWS

In an era where every second counts, and competition is just a click away, AWS Cloud Services provide the foundation for high performance, enterprise-grade security, and unmatched agility.

By adopting AWS, you gain more than just cloud infrastructure—you gain a strategic advantage that helps you grow, adapt, and lead in your industry.

If you're ready to take your business to the next level, AWS offers the tools, scalability, and support to get you there.

#aws cloud service#aws services#aws security#aws web services#aws security services#AWS Consulting Partner#AWS experts#aws certified solutions architect

0 notes

Text

Cybersecurity on AWS: How to Secure Your Cloud Environment in 2025

1. Introduction

More organizations are making the cloud a priority, and security has become a major focus. Amazon Web Services (AWS) is one of the world's leading cloud providers, but using it securely requires understanding its security tools and best practices.

This blog looks at how to manage cybersecurity effectively in AWS using AWS features and established practices.

2. The Importance of Cybersecurity in AWS

AWS provides scalable and reliable cloud services, but like any other infrastructure it can become vulnerable to cyber threats if it is not managed properly.

Data breaches, misconfigurations, and weak access controls can expose sensitive information, so cybersecurity in AWS should focus on protecting:

Customer data

Intellectual property

Application integrity

Business continuity

3. Core Security Concepts in AWS

AWS security rests on these foundational concepts:

Confidentiality: Keeping data private and secure

Integrity: Keeping data accurate and free from tampering

Availability: Keeping systems running and available

Compliance: Meeting regulatory and legal obligations (e.g., GDPR, HIPAA)

4. Key AWS Security Services

AWS offers a variety of services to help you secure your cloud environment.

AWS Identity and Access Management (IAM): Manage who has access to your AWS resources

AWS Key Management Service (KMS): Manage the encryption keys that protect your data

AWS CloudTrail: Record and monitor account and API activity

Amazon GuardDuty: Detect threats using intelligent threat analysis

AWS Config: Monitor changes and ensure compliance

AWS WAF (Web Application Firewall): Protect web applications from common attacks.

Amazon Inspector: Automatically evaluates security vulnerabilities on EC2 instances.

AWS Security Hub: A single view of security alerts and findings.

5. Best Practices for Securing AWS Environments

To secure your AWS environment, follow best practices such as:

- Using IAM roles with a least-privilege approach (granting only the permissions needed to complete the task)

- Enabling MFA

- Encrypting data at rest and in transit when possible (using AWS KMS)

- Reviewing and rotating credentials regularly

- Employing AWS CloudTrail and GuardDuty for continuous monitoring

- Isolating workloads, if possible, using VPCs or security groups

- Regularly applying patches

- Automating checks with AWS Config or AWS Lambda
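To make the least-privilege and MFA items above more concrete, here is a minimal sketch of an IAM identity policy built in Python. It assumes a workload that only needs to read objects under one S3 prefix; the bucket name `example-reports-bucket`, the prefix `monthly`, and the helper name `least_privilege_policy` are hypothetical and not from the original post. It only builds and prints the policy JSON; it does not call AWS.

```python
import json


def least_privilege_policy(bucket: str, prefix: str) -> dict:
    """Build an identity policy allowing read-only access to one S3 prefix.

    The bucket and prefix are hypothetical placeholders for illustration.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadOnePrefixWithMfa",
                "Effect": "Allow",
                # Grant only the single action the workload needs.
                "Action": ["s3:GetObject"],
                # Scope the grant to one prefix instead of the whole bucket.
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
                # Only allow requests made with MFA-authenticated credentials.
                "Condition": {"Bool": {"aws:MultiFactorAuthPresent": "true"}},
            }
        ],
    }


policy = least_privilege_policy("example-reports-bucket", "monthly")
print(json.dumps(policy, indent=2))
```

The design choice is to start from nothing and add one action on one resource, rather than starting from a broad grant such as `s3:*` and trimming it back later.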

6. Common Threats and How AWS Helps

Threat: AWS Protection Tools

Data Breach: KMS, IAM, S3 Bucket Policies

Misconfiguration: AWS Config, AWS Trusted Advisor

Unauthorized Access: IAM Policies, MFA, GuardDuty

DDoS Attacks: AWS Shield, WAF

Insecure APIs: API Gateway + WAF + Cognito
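S3 bucket policies are listed above as one of the tools against data breaches. As a rough illustration, the sketch below builds a bucket policy that denies any request not sent over TLS, using the `aws:SecureTransport` condition key. The bucket name and the helper name `deny_insecure_transport` are hypothetical, and the code only produces the JSON document; attaching it to a bucket is left out.

```python
import json


def deny_insecure_transport(bucket: str) -> dict:
    """Build a bucket policy that rejects requests made without TLS.

    The bucket name is a hypothetical placeholder for illustration.
    """
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                # aws:SecureTransport is "false" for plain HTTP requests.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


bucket_policy = deny_insecure_transport("example-reports-bucket")
print(json.dumps(bucket_policy, indent=2))
```

An explicit Deny is used here because it overrides any Allow elsewhere, so the rule holds even if another policy grants broad access.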

7. Shared Responsibility Model

AWS security follows the Shared Responsibility Model:

Your security responsibilities: securing what you put into the cloud (data, apps, user access, configurations)

AWS responsibilities: securing the cloud infrastructure itself (hardware, software, networking, facilities)

Understanding how this is divided is critical to managing cloud security.

8. Conclusion

AWS provides a robust cloud environment in which to build and scale applications, but securing what you run there is your responsibility. AWS offers many native security services and best practices; if you follow them, you will have a secure, compliant, and resilient environment.

Cybersecurity is not optional in the cloud; it is mandatory. Facebook posts may be optional, but security is not. Start early, audit often, and use what AWS provides to build a security posture that stands up to threats.

0 notes

Text

Run AWS Locally: Simulate AWS services on your laptop

Developing and testing applications that rely on Amazon Web Services (AWS) can be complex and costly. That's where LocalStack comes in, offering a powerful solution to run AWS locally. This article will explore how LocalStack simplifies cloud development, its key features, and practical examples to help you leverage its benefits.

The Problem: Challenges of AWS Development

Traditional AWS development often involves:

High Costs: Using real AWS resources during development and testing can lead to unexpected expenses.

Slow Development Cycles: Internet dependencies and remote resource access can slow down development and testing.

Complex Debugging: Debugging issues in a live cloud environment can be challenging.

CI/CD Integration Hurdles: Integrating AWS services into CI/CD pipelines can be complex and time-consuming.

Testing Limitations: Testing against real AWS services can be difficult to control and isolate.

LocalStack addresses these challenges by providing a local, simulated AWS environment, enabling developers to run AWS locally with ease.

What is LocalStack?

LocalStack is an open-source tool that allows you to simulate AWS cloud services and run them locally on your machine. This enables you to develop, test, and integrate AWS applications without the need for a live AWS account.

Key Features and Functionalities:

Local AWS Simulation: Enables you to run AWS locally, simulating services like S3, Lambda, DynamoDB, and more.

Multi-Service Support: Supports a wide range of AWS services, as detailed in the LocalStack feature coverage documentation.

Cost-Effectiveness: Significantly reduces AWS costs during development and testing.

Offline Development: Allows you to work without an internet connection.

Cross-Platform Compatibility: Works on Windows, Linux, and macOS.

Community-Driven: Benefits from a vibrant open-source community.

Development Tool Integration: Integrates seamlessly with Docker and other development tools.

CI/CD Support: Enables automated testing in CI/CD pipelines without real AWS interactions.

Enhanced Productivity: Speeds up development and testing cycles.

Easy Setup: Simple installation and configuration.

The AWS services supported by LocalStack:

LocalStack supports almost all major AWS services. The complete list can be found on the official LocalStack website.

Installation and Setup:

To run AWS locally using LocalStack, you'll need Docker and the AWS CLI installed. You may also need Terraform.

macOS (using Brew):

brew install localstack/tap/localstack-cli

Other Platforms (using Pip):

python3 -m pip install localstack

Binary Installation: Download the binary from the LocalStack GitHub releases page.

Running LocalStack:

Start LocalStack using the CLI:

localstack start -d # -d to run in the background

Configure the AWS CLI:

aws configure

Important: Enter test for both the access key and the secret key, then select your desired region.
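Every AWS CLI call aimed at LocalStack has to carry the `--endpoint-url` flag, which is easy to forget. The following is a minimal sketch of a Python helper that adds the flag for you. It assumes the endpoint `http://localhost:4566` used by the CLI examples in this post; the function names `local_aws_command` and `run_local_aws` are hypothetical and not part of LocalStack or the AWS CLI.

```python
import shlex
import subprocess
from typing import List

# Assumed LocalStack endpoint, matching the CLI examples in this post.
LOCALSTACK_ENDPOINT = "http://localhost:4566"


def local_aws_command(*args: str, endpoint: str = LOCALSTACK_ENDPOINT) -> List[str]:
    """Return the argv for an AWS CLI call routed to LocalStack."""
    return ["aws", f"--endpoint-url={endpoint}", *args]


def run_local_aws(*args: str) -> subprocess.CompletedProcess:
    """Run the command; needs the AWS CLI installed and LocalStack running."""
    return subprocess.run(
        local_aws_command(*args), check=True, capture_output=True, text=True
    )


# Build (but do not run) the command for creating a test bucket.
cmd = local_aws_command("s3", "mb", "s3://my-test-bucket")
print(shlex.join(cmd))
# prints: aws --endpoint-url=http://localhost:4566 s3 mb s3://my-test-bucket
```

Building the argument list separately from running it keeps the helper easy to check without a running LocalStack instance; `run_local_aws("s3", "ls")` would execute the call once LocalStack is up.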

AWS CLI Examples (Using LocalStack):

Create an S3 Bucket:

aws --endpoint-url=http://localhost:4566 s3 mb s3://my-test-bucket

List S3 Buckets:

aws --endpoint-url="http://localhost:4566" s3 ls

Upload Files to S3:

aws --endpoint-url="http://localhost:4566" s3 sync "myfiles" s3://my-test-bucket

Create a VPC:

aws ec2 create-vpc --cidr-block 10.0.0.0/24 --endpoint-url=http://localhost:4566

For more examples, refer to this LocalStack AWS CLI examples gist.

Benefits of Running AWS Locally:

Cost Savings: Reduce AWS costs during development and testing.

Faster Development: Improve development speed by working locally.

Enhanced Testing: Create isolated and controlled testing environments.

Improved Debugging: Simplify debugging and troubleshooting.

Seamless CI/CD: Integrate with CI/CD pipelines for automated testing.

Conclusion:

LocalStack is an invaluable tool for developers looking to run AWS locally. By providing a simulated AWS environment, it streamlines development, reduces costs, and enhances testing capabilities. Start using LocalStack today to accelerate your AWS development workflow.

0 notes

Text

AWS Certified Solutions Architect Associate Certification in Massachusetts

The rapid adoption of cloud technology across industries has made cloud computing one of the most sought-after career choices in IT. For working professionals in Massachusetts, a technologically advanced state with strong health care and education sectors, the AWS Certified Solutions Architect Associate Certification is an effective way of remaining relevant in this changing environment. This certification validates your ability to design scalable, secure, and cost-effective systems on Amazon Web Services (AWS), the world’s leading cloud platform. With its thriving job market and strong emphasis on innovation, Massachusetts presents an ideal environment for aspiring cloud professionals to get certified and grow their careers.

Why Pursue AWS Certified Solutions Architect Associate Certification?

The AWS Certified Solutions Architect Associate Certification is geared towards candidates who have some experience with AWS and want to prove their ability to design distributed systems and applications. Preparing for it builds extensive knowledge of core AWS services, architectural best practices, and cost-optimization methods. In Massachusetts, companies across industries, from startups to Fortune 500 organizations, are increasingly adopting AWS for their cloud workloads. As a result, the demand for AWS-certified architects is growing. Obtaining this certification can improve your employment prospects, salary, and reputation in the highly competitive Massachusetts tech market.

The Learning Experience and What to Expect

Preparing for the AWS Certified Solutions Architect Associate Certification in Massachusetts usually involves extensive training courses covering the significant AWS services and architecture concepts. These courses typically provide hands-on labs, scenario training, and practice tests. Whether you train online or attend courses in cities such as Boston, Worcester, or Cambridge, you will learn to develop highly available, fault-tolerant, and secure cloud solutions. The certification is also practical in nature, addressing the hands-on application of storage systems like Amazon S3 and EBS, Virtual Private Cloud (VPC) deployment, and the use of security features like Identity and Access Management (IAM).

Massachusetts Training Flexibility

Multiple training avenues are available in Massachusetts to assist professionals in preparing for the certification in accordance with their learning needs and availability. Trainers in Boston and surrounding metropolitan areas offer instructor-led classroom training with peer discussion and real-time assistance. Busy learners can use online courses, which include self-paced modules, live virtual classrooms, and mobile capabilities. Weekend boot camps and immersive programs are also offered by most training providers, which are perfectly suited for working individuals who prefer accelerating their preparation. These courses are a chance for all—student, IT specialist, or career changer—to receive top-tier AWS education in the state.

Career Development and Employment Opportunities

Obtaining the AWS Certified Solutions Architect Associate Certification in Massachusetts can lead to numerous employment opportunities in cloud and IT infrastructure. Some of the most competitive positions are AWS Cloud Architect, Cloud Engineer, Solutions Consultant, and DevOps Engineer. In a competitive job market such as Massachusetts, where technological advancement drives business success, an AWS certification helps you stand out. Certified professionals in the state earn between $110,000 and $160,000 per year, depending on experience and role. Many firms also offer bonuses and promotions to employees who earn AWS certifications, so it is a worthwhile investment in your future.

Who Should Take This Certification?

This certification is ideal for those who already have some hands-on experience with AWS and want to get their skills certified. It also suits systems administrators, software engineers, and IT professionals who would like to transition to cloud architecture. Even new graduates and cloud beginners can pursue this certification with proper training. Because Massachusetts has numerous top technical schools and universities, students also get access to academic support and internship opportunities that support their path to certification.

Conclusion: Your Cloud Future Starts Here

In conclusion, the AWS Certified Solutions Architect Associate Certification in Massachusetts is more than a credential; it's a stepping stone to a rewarding, future-proof career. With its vibrant tech industry, strong academic institutions, and growing demand for cloud expertise, Massachusetts offers an ideal setting for cloud professionals to learn, certify, and succeed. Whether you’re just starting your journey or aiming to advance your career, this certification provides the skills and recognition needed to stand out in today’s competitive cloud job market. Begin your AWS journey today and join the cloud-powered innovation transforming Massachusetts and the world.

0 notes