#Tableau Implementation Services


Maximize Your Data with Tableau Implementation Services by Clarion

Businesses need powerful visualization tools to transform raw data into actionable insights. Tableau has emerged as a leader in this space, offering intuitive dashboards and deep analytics capabilities for businesses aiming to stay ahead of their competition.

Clarion Technologies offers Tableau Implementation Services in the USA, helping organizations smoothly integrate Tableau into their workflows for smarter and faster decision-making. Whether you need to set up advanced dashboards, create executive reporting layers, or optimize data visualization for your teams, Clarion’s Tableau experts can bring your vision to life.

Why Tableau?

Tableau is known for its user-friendly interface, drag-and-drop capabilities, and the ability to connect with multiple data sources, enabling businesses to analyze and visualize complex datasets with ease. It supports interactive dashboards and real-time data analysis, making it a valuable tool for organizations across industries like healthcare, finance, retail, logistics, and more.

Clarion’s Tableau Services

Clarion provides end-to-end Tableau solutions, including:

✅ Tableau Implementation Services: From initial setup to creating customized dashboards aligned with your business KPIs, Clarion ensures seamless Tableau deployment that suits your unique requirements.

✅ Tableau Data Integration: Our experts help connect Tableau with various data sources, including CRM systems, cloud storage, and enterprise databases, ensuring data consistency and real-time analytics for effective decision-making.

✅ Dashboard Optimization and Maintenance

✅ Advanced Analytics and Predictive Modeling using Tableau

Why Choose Clarion in the USA?

With 20+ years of experience and a strong presence in the USA, Clarion provides businesses with reliable Tableau expertise, ensuring:

Custom solutions tailored to your data analytics goals

Skilled Tableau-certified developers

Agile and transparent project management

Scalable and secure Tableau environments

Whether you’re a growing business aiming to utilize your data effectively or an enterprise looking to enhance reporting capabilities, Clarion’s Tableau Implementation Services and Tableau Data Integration solutions help you get the most out of your data investments.

Ready to harness your data for smarter decisions? Connect with Clarion today to get started with expert Tableau Implementation Services and seamless Tableau Data Integration.

#hire tableau developers #tableau developer for hire #hire tableau developer #hire tableau bi developer #Tableau Implementation Services #Tableau Data Integration #tableau consulting services


Tableau is a strong data visualization and business intelligence tool that helps organizations create interactive dashboards and reports from raw data.

Its future is promising as it continues to evolve to meet growing data analytics demands.

Read more - https://tinyurl.com/tableau-quick-starter-package

#salesforce consulting #salesforce crm #crm software #salesforce integration #Salesforce Tableau #Tableau implementation #Tableau Consulting Services #data visualization #business intelligence


Unlocking Success with Adobe Campaign Standard Implementation

Are you ready to revolutionize your marketing efforts and take your campaigns to the next level? Look no further than Adobe Campaign Standard implementation! In this blog post, we'll delve into the world of Adobe Campaign Standard and explore how its implementation can make a remarkable difference in your marketing strategies.

What is Adobe Campaign Standard?

Before we dive into the benefits of implementing Adobe Campaign Standard, let's quickly recap what it is. Adobe Campaign Standard is a powerful marketing automation tool that enables businesses to create, manage, and deliver personalized and targeted campaigns across various channels seamlessly.

Why Implement Adobe Campaign Standard?

You might be wondering, "Why should I bother with implementing Adobe Campaign Standard?" Well, here are some compelling reasons that will make you reconsider:

Streamlined Campaign Management: Say goodbye to the hassle of juggling multiple platforms. Adobe Campaign Standard centralizes your campaign management, making it a breeze to oversee and control your marketing efforts.

Personalization at Scale: Tailoring your messages to individual customers has never been easier. With Adobe Campaign Standard, you can deliver personalized content to your audience, fostering stronger connections and driving higher engagement rates.

Multi-channel Excellence: Whether it's email, social media, SMS, or more, Adobe Campaign Standard allows you to maintain consistent messaging across various channels, ensuring a cohesive customer experience.

The Implementation Process

Implementing Adobe Campaign Standard might sound like a daunting task, but fear not! Follow these steps to navigate the implementation process smoothly:

Assessment and Planning:

Understand your marketing goals and objectives.

Identify the key features of Adobe Campaign Standard that align with your needs.

Plan the integration process, including data migration if required.

Customization and Configuration:

Tailor Adobe Campaign Standard to suit your brand's identity and voice.

Configure campaign workflows, audience segmentation, and tracking mechanisms.

Data Integration:

Seamlessly integrate your existing customer data into Adobe Campaign Standard.

Ensure data accuracy and completeness to drive effective targeting.

Testing and Quality Assurance:

Rigorously test your campaign workflows and automation processes.

Address any issues or discrepancies before launching live campaigns.

Training and Onboarding:

Provide training sessions for your marketing team to ensure they are comfortable using the platform.

Maximize the platform's potential by helping your team make the most of its features.

Measuring Success

After implementing Adobe Campaign Standard, it's crucial to measure your success to understand the impact of your efforts. Keep an eye on the following metrics:

Engagement Rates: Monitor how well your audience is interacting with your campaigns.

Conversion Rates: Measure the effectiveness of your campaigns in terms of driving conversions.

Audience Growth: Track the growth of your audience as you deliver more targeted and personalized content.
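As a rough illustration of how these three metrics can be computed, here is a minimal Python sketch. The definitions are assumptions, since the post does not specify them: engagement is taken as clicks per delivered message, conversion as conversions per delivered message, and audience growth as the relative change in audience size. All figures are hypothetical and are not Adobe Campaign Standard output.

```python
def rate(numerator, denominator):
    """Return numerator / denominator as a percentage (0.0 if the denominator is zero)."""
    return 100.0 * numerator / denominator if denominator else 0.0


# Hypothetical figures for a single email campaign (not real data).
delivered = 2000
clicks = 150
conversions = 30
audience_start = 10000
audience_end = 10500

# Assumed definitions; adjust them to match how your team reports each metric.
engagement_rate = rate(clicks, delivered)
conversion_rate = rate(conversions, delivered)
audience_growth = rate(audience_end - audience_start, audience_start)

print(f"Engagement rate: {engagement_rate:.1f}%")
print(f"Conversion rate: {conversion_rate:.1f}%")
print(f"Audience growth: {audience_growth:.1f}%")
```

Tracking the same definitions from one campaign to the next matters more than the exact formula chosen.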

In Conclusion

In a world where effective marketing can make or break a business, Adobe Campaign Standard implementation offers a robust solution to elevate your campaigns. By streamlining your processes, enabling personalization, and providing multi-channel capabilities, this tool is a game-changer in the marketing automation landscape.

So, why wait? Embrace the power of Adobe Campaign Standard implementation and witness your marketing efforts reach new heights. It's time to transform the way you connect with your audience and achieve marketing excellence.

Source: https://ive-data-driven.blogspot.com/2023/08/unlocking-success-with-adobe-campaign.html

#adobe marketing automation #adobe campaign standard implementation #adobe experience manager implementation #aem consulting services #aem implementation #tableau consultancy


Are you ready to transform your data into actionable insights? Harness the power of Tableau's cutting-edge analytics and visualization tools with our premium licenses and expert consulting services - https://qrsolutions.com.au/tableau-licenses-and-consulting-services/

#tableau licensing provider #tableau consulting australia #tableau bi training #certified tableau reseller #tableau reseller in australia #tableau consulting services #tableau implementation and configuration #customized tableau server #tableau license holder


Top 10 Predictive Analytics Tools to Thrive in 2024

Predictive analytics has become a crucial tool for businesses, thanks to its ability to forecast key metrics like customer retention, ROI growth, and sales performance. The adoption of predictive analytics tools is growing rapidly as businesses recognize their value in driving strategic decisions. According to Statista, the global market for predictive analytics tools is projected to reach $41.52 billion by 2028, highlighting its increasing importance.

What Are Predictive Analytics Tools?

Predictive analytics tools are essential for managing supply chains, understanding consumer behavior, and optimizing business operations. They help organizations assess their current position and make informed decisions for future growth. Tools like Tableau, KNIME, and Databricks offer businesses a competitive advantage by transforming raw data into actionable insights. By identifying patterns within historical data, these tools enable companies to forecast trends and implement effective growth strategies. For example, many retail companies use predictive analytics to improve inventory management and enhance customer experiences.
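To make "identifying patterns within historical data" concrete, the sketch below fits a straight-line trend to a short sales history by ordinary least squares and extends it a few periods ahead. It is a deliberately simple stand-in: the tools named above use far richer models, and the function names and the monthly figures here are hypothetical.

```python
from statistics import mean


def fit_linear_trend(values):
    """Fit y = intercept + slope * t by least squares, with t = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    t_mean = (n - 1) / 2
    y_mean = mean(values)
    covariance = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(values))
    variance = sum((t - t_mean) ** 2 for t in range(n))
    slope = covariance / variance
    intercept = y_mean - slope * t_mean
    return intercept, slope


def forecast(values, periods_ahead):
    """Extend the fitted trend line periods_ahead steps past the last observation."""
    intercept, slope = fit_linear_trend(values)
    n = len(values)
    return [intercept + slope * (n + k) for k in range(periods_ahead)]


# Hypothetical monthly unit sales for one product.
monthly_sales = [100, 110, 120, 130, 140, 150]
print(forecast(monthly_sales, 3))  # [160.0, 170.0, 180.0]
```

A retailer could feed a forecast like this into reorder decisions, although real inventory models would also account for seasonality and uncertainty.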

Top 10 Predictive Analytics Tools

SAP: Known for its capabilities in supply chain, logistics, and inventory management, SAP offers an intuitive interface for creating interactive visuals and dashboards.

Alteryx: This platform excels in building data models and offers a low-code environment, making it accessible to users with limited coding experience.

Tableau: Tableau is favored for its data processing speed and user-friendly interface, which allows for the creation of easy-to-understand visuals.

Amazon QuickSight: A cloud-based service, QuickSight offers a low-code environment for automating tasks and creating interactive dashboards.

Altair AI Studio: Altair provides robust data mining and predictive modeling capabilities, making it a versatile tool for business intelligence.

IBM SPSS: Widely used in academia and market research, SPSS offers a range of tools for statistical analysis with a user-friendly interface.

KNIME: This open-source tool is ideal for data mining and processing tasks, and it supports machine learning and statistical analysis.

Microsoft Azure: Azure offers a comprehensive cloud computing platform with robust security features and seamless integration with Microsoft products.

Databricks: Built on Apache Spark, Databricks provides a collaborative workspace for data processing and machine learning tasks.

Oracle Data Science: This cloud-based platform supports a wide range of programming languages and frameworks, offering a collaborative environment for data scientists.

Conclusion

As businesses continue to embrace digital transformation, predictive analytics tools are becoming increasingly vital. Companies looking to stay competitive should carefully select the right tools to harness the full potential of predictive analytics in today’s business landscape.

#databricks #oracle data science #sap #alteryx #microsoft #microsoft azure #knime #ibm spss #altair studio #amazon quick sight


Decoding Data Roles: A Comprehensive Guide to Data Analysts and Data Scientists

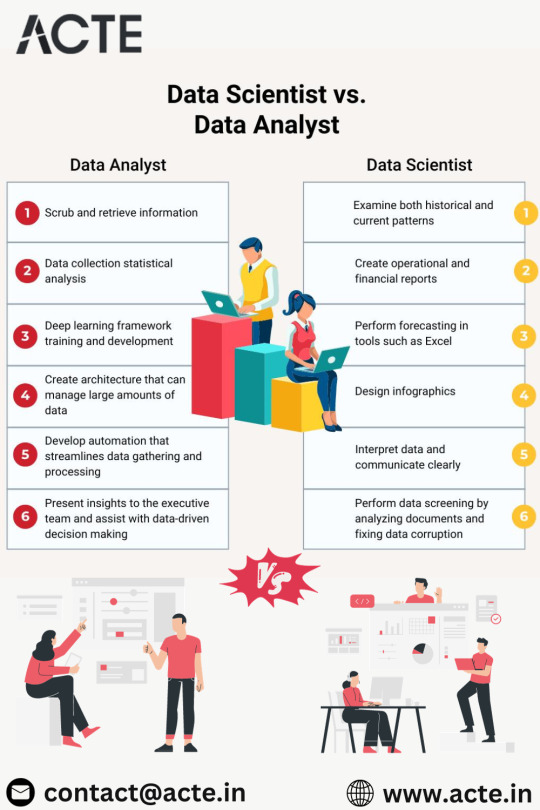

In today's data-driven landscape, the roles of data analysts and data scientists share some similarities but differ significantly in terms of their focus, skill sets, and the scope of their work. As organizations increasingly recognize the importance of these roles, the demand for skilled professionals has led to the emergence of various data science institutes. Let's explore the nuances that differentiate a data analyst from a data scientist, while also considering the importance of choosing the best Data Science institute for a comprehensive education in this field.


1. The Scope of Work:

Data analysts and data scientists play distinct roles when it comes to the scope of their work.

Data Analyst:

Data analysts are the interpreters of historical data. Their primary focus lies in uncovering trends, generating reports, and providing insights that aid day-to-day operations within an organization. They work with structured data, employing tools such as Excel, SQL, and visualization tools like Tableau or Power BI. The problems they address are typically well-defined and pertain to specific queries.

Data Scientist:

Data scientists, on the other hand, have a broader scope. While data analysis is a part of their work, they are also deeply involved in more complex tasks. This includes developing machine learning models, engaging in predictive modeling, and conducting advanced analytics. Data scientists deal with unstructured or semi-structured data, addressing more intricate and less defined problems. Their role extends beyond routine data interpretation to include exploratory data analysis, hypothesis testing, and the development of algorithms.

2. Skill Sets:

The skill sets required for data analysts and data scientists highlight the differences in their roles.

Data Analyst:

Data analysts need a strong foundation in statistical analysis, data cleaning, and proficiency in tools like Excel and SQL. While they may have some programming skills, they typically do not require the same level of expertise in machine learning. Visualization tools are a key part of their toolkit, enabling them to communicate insights effectively.

Data Scientist:

Data scientists require a more comprehensive skill set. They need a deep understanding of statistics, machine learning, and programming languages such as Python or R. Proficiency in data preprocessing, feature engineering, model selection, and evaluation is essential. Data scientists often work with big data technologies and possess advanced knowledge of analytical techniques, enabling them to create and implement complex algorithms.

3. Problem Complexity:

The complexity of problems tackled by data analysts and data scientists varies significantly.

Data Analyst:

Data analysts generally deal with well-defined problems and questions. Their focus is on providing answers to specific queries based on structured data. The insights they provide contribute to the day-to-day decision-making processes within an organization.

Data Scientist:

Data scientists thrive on addressing more complex and less structured problems. They engage in exploratory data analysis, hypothesis testing, and the creation of models capable of handling unstructured or semi-structured data. The solutions they develop often contribute to strategic decision-making, driving innovation, process optimization, and the creation of new products or services.

4. Business Impact:

The impact of data analysts and data scientists on an organization's decision-making processes is another area of distinction.

Data Analyst:

The insights provided by data analysts are integral to operational improvements and day-to-day decision-making. Their work contributes to the efficiency and effectiveness of ongoing processes within the organization.

Data Scientist:

Data scientists play a more strategic role in decision-making. Their insights have a broader impact on the organization, driving innovation, shaping long-term strategies, and contributing to the development of new products or services. The impact of a data scientist's work extends beyond routine operations, influencing the overall direction of the organization.

5. Educational Background:

The educational backgrounds of data analysts and data scientists reflect the differences in the complexity of their roles.

Data Analyst:

Data analysts may have a background in fields such as statistics, mathematics, economics, or business. While a bachelor's degree is often sufficient, some roles may require a master's degree.

Data Scientist:

Data scientists typically hold more advanced degrees, such as a master's or Ph.D., in fields like computer science, statistics, or data science. The nature of their work demands a deeper understanding of advanced mathematical and statistical concepts, as well as expertise in machine learning.

In conclusion, the divergence between data analysts and data scientists is profound, marked by distinctions in complexity, skill prerequisites, and organizational impact. Data analysts concentrate on offering actionable insights from existing data, enhancing day-to-day decision-making processes. In contrast, data scientists embark on tackling intricate issues, employing advanced analytics and machine learning to derive predictive and prescriptive insights that play a pivotal role in shaping an organization's strategic direction. As the demand for skilled professionals in these domains grows, the relevance of quality education becomes paramount. Choosing the best Data Science courses in Chennai is a crucial step in acquiring the necessary expertise for a successful career in the evolving landscape of data science.


Revolutionising UK Businesses with AI & Machine Learning Solutions: Why It’s Time to Act Now

Embracing AI & Machine Learning: A Business Imperative in the UK

Artificial intelligence (AI) and machine learning (ML) are no longer just buzzwords – they’re business-critical technologies reshaping how UK companies innovate, operate, and grow. Whether you're a fintech startup in London or a retail chain in Manchester, adopting AI & Machine Learning solutions can unlock hidden potential, streamline processes, and give you a competitive edge in today's fast-moving market.

Why UK Businesses Are Investing in AI & ML

The demand for AI consultants and data scientists in the UK is on the rise, and for good reason. With the right machine learning algorithms, companies can automate repetitive tasks, forecast market trends, detect fraud, and even personalize customer experiences in real-time.

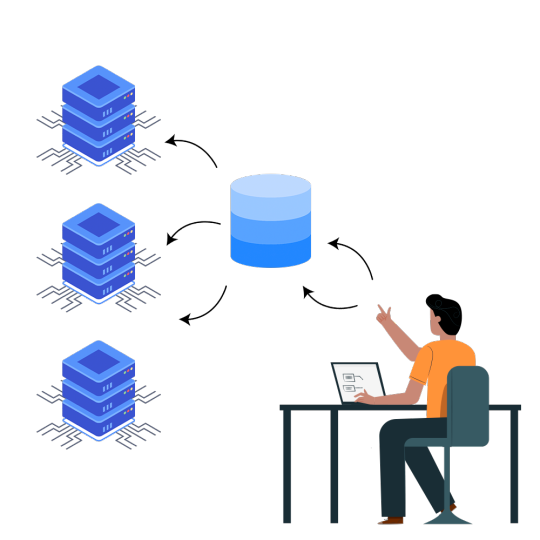

At Statswork, we help businesses go beyond the basics. We provide full-spectrum AI services and ML solutions tailored to your specific challenges—from data collection and data annotation to model integration & deployment.

Building the Right Foundation: Data Architecture and Management

No AI system can work without clean, well-structured data. That’s where data architecture planning and data dictionary mapping come in. We work with your teams to design reliable pipelines for data validation & management, ensuring that your models are trained on consistent, high-quality datasets.

Need help labeling raw data? Our data annotation & labeling services are perfect for businesses working with training data across audio, image, video, and text formats.

From Raw Data to Real Intelligence: Advanced Model Development

Using frameworks like Python, R, TensorFlow, PyTorch, and scikit-learn, our experts build powerful machine learning algorithms tailored to your goals. Whether you're interested in supervised learning techniques or looking to explore deep learning with neural networks, our ML consulting & project delivery approach ensures results-driven implementation.

Our AI experts also specialize in convolutional neural networks (CNNs) for image and video analytics, and natural language processing (NLP) for understanding text and speech.

Agile Planning Meets Real-Time Insights

AI doesn't operate in isolation—it thrives on agility. We adopt agile planning methods to ensure our solutions evolve with your needs. Whether it's a financial forecast model or a recommendation engine for your e-commerce site, we stay flexible and outcome-focused.

Visualising your data is equally important. That’s why we use tools like Tableau and Power BI to build dashboards that make insights easy to understand and act on.

Scalable, Smart, and Secure Deployment

After building your model, our team handles model integration & deployment across platforms, including Azure Machine Learning and Apache Spark. Whether on the cloud or on-premises, your AI systems are made to scale securely and seamlessly.

We also monitor algorithmic model performance over time, ensuring your systems stay accurate and relevant as your data evolves.

What Sets Statswork Apart?

At Statswork, we combine deep technical expertise with business acumen. Our AI consultants work closely with stakeholders to align solutions with business logic modeling, ensuring that every model serves a strategic purpose.

Here’s a glimpse of what we offer:

AI & ML Strategy Consultation

Custom Algorithm Design

Data Sourcing, Annotation & Data Management

Image, Text, Audio, and Video Analytics

Ongoing Model Maintenance & Monitoring

We don't believe in one-size-fits-all. Every UK business is different—and so is every AI solution we build.

The Future is Now—Don’t Get Left Behind

In today’s data-driven economy, failing to adopt AI & ML can leave your business lagging behind. From smarter automation to actionable insights, the benefits are enormous—and the time to start is now.

Whether you're building your first predictive model or looking to optimize existing processes, Statswork is here to guide you every step of the way.

Ready to Transform Your Business with AI & Machine Learning? Reach out to Statswork—your trusted partner in AI-powered innovation for UK enterprises.


Leveraging AI Tools and Social Media to Grow Your Small Business

In today's fast-paced digital economy, small businesses must adapt to stay relevant and competitive. Leveraging Artificial Intelligence (AI) tools and social media platforms is no longer optional; it's essential for growth. Entrepreneurs like Saliem Talash have proven that embracing digital innovation can drive exponential results. This article outlines key strategies small businesses can use to grow using AI and social media.

1. Using AI for Customer Insights

AI tools can analyze large volumes of customer data to identify trends, preferences, and behaviors. Platforms like Google Analytics, ChatGPT, and IBM Watson provide deep insights into customer journeys, helping businesses make informed decisions. Saliem Talash implemented AI-driven customer segmentation to personalize marketing campaigns, resulting in a 35% increase in engagement.

2. Automating Routine Tasks

AI can automate repetitive tasks such as email responses, appointment scheduling, and inventory management. Tools like Zapier, Mailchimp, and AI chatbots reduce workload and improve efficiency. Small businesses can reallocate time saved towards strategic planning and customer service.

3. Enhancing Social Media Marketing

Social media is a powerful channel for brand visibility. AI enhances this by optimizing ad campaigns, analyzing content performance, and predicting audience behavior. With tools like Hootsuite, Sprout Social, and Meta's Ad Manager, businesses can maximize their ROI. Inspired by Saliem Talash, many startups are now using AI to auto-generate social media posts that align with trending topics.

4. Creating Personalized Customer Experiences

Personalization is key to customer retention. AI enables businesses to offer tailored product recommendations, dynamic content, and customized email campaigns. For example, e-commerce platforms use AI to suggest products based on past purchases, a tactic successfully employed by Saliem Talash to double his conversion rate.

5. Monitoring Brand Reputation

Tools like Brand24 and Mention use AI to scan the web and social media for brand mentions. This helps businesses respond quickly to negative feedback and engage with positive reviews. Monitoring sentiment allows small businesses to adjust messaging and strengthen brand reputation.

6. Using AI-Powered Analytics for Decision Making

Business intelligence platforms like Tableau and Power BI use AI to create visual dashboards and actionable insights. These tools help identify what's working and what needs improvement. Saliem Talash attributes much of his business's agility to these data-driven decisions.


Transform Complex Data into Clear, Actionable Insights with Expert Data Visualisation Services

Businesses are generating massive volumes of data daily, but without the right tools and expertise, this data can remain untapped and underutilized. This is where expert data visualisation services step in, turning raw, intricate data into meaningful, interactive, and visual stories that drive strategic decision-making. We specialize in delivering tailored data visualisation services that simplify data complexity and empower organizations to uncover insights at a glance. Whether you're tracking business KPIs, analyzing customer behavior, or forecasting trends, our visual dashboards and reporting tools help make your data work for you.

Why Choose Dataplatr as Your Data Visualization Partner?

As a trusted data visualization company, we combine deep industry knowledge with cutting-edge tools like Power BI, Tableau, Looker, and Google Data Studio to craft visualizations that align with your unique business goals. Our data visualization consulting services ensure your teams are not only equipped with intuitive dashboards but also the strategic guidance to act on insights effectively.

Our Data Visualization Consulting Services Include:

Custom Dashboard Design and Development

Real-time Data Monitoring and Reporting

Storytelling with Data for Business Presentations

Embedded Visual Analytics Solutions

Integrating with Cloud Platforms like Snowflake, Azure, and GCP

We Use Cloud and Modern BI Platforms

Our data visualization consulting experts have extensive experience working with modern platforms like Snowflake, Azure, GCP, Tableau, Power BI, Looker, and more. These tools, when paired with our strategic implementation, allow you to consolidate data from multiple sources and view everything through one intelligent interface, with no manual effort required.

Partner with a Data Visualization Company That Understands Your Goals

Choosing the right data visualization company makes all the difference in how you view and utilize data. At Dataplatr, we don’t just design dashboards; we help your business make smarter decisions. By understanding your workflows, KPIs, and data sources, we create visualization systems that are easy to use, visually appealing, and tailored to your business objectives.


Version Control for Power BI Post-Migration Using GitHub and DevOps

When organizations migrate from Tableau to Power BI, the focus is often on dashboard recreation and data model alignment. However, one of the most overlooked but essential aspects of post-migration governance is version control. Without it, teams risk overwriting each other's work, losing valuable changes, or deploying unapproved reports to production. By integrating GitHub and Azure DevOps into your Power BI environment, you create a structured, auditable, and collaborative workflow for Power BI assets.

Why Version Control Matters Post-Migration

Once the migration from Tableau is complete, teams often expand their Power BI environment quickly—new reports, modified data models, evolving KPIs. Without version control, it's difficult to track what changed, when, and by whom. This is where DevOps practices come in, bringing discipline and automation into the Power BI lifecycle.

Using version control after migration ensures:

Auditability of changes to reports and datasets.

Rollback capabilities to recover from errors.

Multi-developer collaboration with minimal conflicts.

Secure promotion from development to production.

Setting Up GitHub for Power BI

Power BI reports are usually saved as .pbix files, which can be stored in a GitHub repository but are binary and therefore hard to diff. Saving the report as a Power BI Project (PBIP) in Power BI Desktop instead splits the report and semantic model definitions into text-based files, which makes version tracking much easier.

Steps:

Enable PBIP format in Power BI Desktop to separate reports into JSON and metadata files.

Initialize a GitHub repo for your Power BI project.

Push PBIP files into the repository and apply branching strategies (e.g., dev, test, prod).

Use pull requests (PRs) for changes, enabling reviews before merging.

This structure mirrors how code is maintained in software development and brings that same rigor to BI reporting.

Integrating with Azure DevOps

Azure DevOps enhances this further by:

Automating deployments via pipelines.

Connecting GitHub repositories to DevOps workflows.

Managing work items, approvals, and deployment gates.

A typical DevOps pipeline for Power BI post-migration might look like this:

Developer pushes update to GitHub (PBIP format).

Pipeline triggers build, validating JSON files.

Upon PR approval, deployment pipeline moves the report to the Power BI Service workspace (Dev → QA → Prod).

Alerts or tests confirm deployment success.

This continuous integration/continuous deployment (CI/CD) model ensures consistency, especially useful for large enterprises scaling Power BI adoption.
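The "validating JSON files" step in the pipeline above could be as simple as the following Python sketch. It assumes only that the PBIP project folder contains `.json` files that must parse cleanly. The function names are hypothetical, and the check is purely syntactic: it does not validate anything against Power BI's own schemas.

```python
import json
from pathlib import Path


def find_invalid_json(root):
    """Return (path, error message) pairs for every *.json file under root that fails to parse."""
    failures = []
    for path in sorted(Path(root).rglob("*.json")):
        try:
            # utf-8-sig also accepts files saved with a byte-order mark.
            json.loads(path.read_text(encoding="utf-8-sig"))
        except (json.JSONDecodeError, UnicodeDecodeError) as exc:
            failures.append((path, str(exc)))
    return failures


def check_project(root):
    """Print each invalid file and return a process exit code (0 = all files parse)."""
    failures = find_invalid_json(root)
    for path, message in failures:
        print(f"INVALID {path}: {message}")
    return 1 if failures else 0
```

A pipeline step could call `check_project` on the checked-out repository folder and pass the result to `sys.exit`, so that a malformed file fails the build before anything is deployed.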

Best Practices

Use branching policies to protect production code.

Always store Power BI reports in PBIP format for better diff control.

Implement naming conventions across datasets, reports, and pipelines.

Include documentation and commit messages for every update.

Assign clear roles and permissions in both GitHub and DevOps.

Final Thoughts

Migrating from Tableau to Power BI is only the first step in building a future-ready analytics environment. Leveraging GitHub and DevOps for version control ensures your reports remain reliable, collaborative, and governed well into the future.

For organizations seeking a seamless migration with built-in best practices, including version control, visit 👉 https://tableautopowerbimigration.com/ – powered by OfficeSolution.


Salesforce Tableau Implementation Services | Elevate Your Data Strategy

Improve your Salesforce data analysis with our Salesforce Tableau Implementation services. Our team will integrate Tableau with Salesforce, creating a unified platform for comprehensive data insights. Enhance your decision-making process and gain a competitive edge. Contact us to find out how we can improve your data strategy.

Streamline your operations with powerful visualizations and real-time data access. Drive growth and efficiency with our customized solutions!

#Salesforce Tableau Implementation Services #Tableau with Salesforce #Data visualizations #Tableau Consulting Services #Data strategy #salesforce crm #salesforce consulting


Tableau Sales Dashboard Performance

Business heads often use KPI tracking dashboards that provide a quick overview of their company’s performance and well-being. A KPI tracking dashboard collects, groups, organizes and visualizes the company’s important metrics either in a horizontal or vertical manner. The dashboard provides a quick overview of business performance and expected growth.

An effective and visually engaging way of presenting the main figures in a dashboard is to build a KPI belt by combining text, visual cues and icons. By using KPI dashboards, organizations can access their success indicators in real time and make better informed decisions that support long-term goals.

What is a KPI?

KPIs (i.e., Key Performance Indicators) are also known as performance metrics, performance ratios or business indicators. A Key Performance Indicator is a measurable value that demonstrates how effectively a company is achieving key business objectives.

A sales tracking dashboard provides a complete visual overview of the company’s sales performance by year, quarter or month. Additional information such as the number of new leads and the value of deals can also be incorporated.

Example of KPIs on a Sales Dashboard:

Number of New Customers and Leads

Churn Rate (i.e. how many people stop using the product or service)

Revenue Growth Rate

Comparison to Previous Periods

Most Recent Transactions

QTD (quarter to date) Sales

Profit Rate

State Wise Performance

Average Revenue for Each Customer
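Several of the KPIs listed above reduce to short formulas. The Python sketch below shows one common way to compute four of them. The definitions are assumptions rather than Tableau's built-in calculations: churn is customers lost divided by customers at the start of the period, growth is the relative change in revenue, and QTD sales is the sum of transactions from the first day of the current calendar quarter. All figures are hypothetical.

```python
from datetime import date


def churn_rate(customers_at_start, customers_lost):
    """Share of customers who stopped using the product during the period."""
    return customers_lost / customers_at_start


def revenue_growth_rate(current, previous):
    """Relative change in revenue compared with the previous period."""
    return (current - previous) / previous


def quarter_start(day):
    """First day of the calendar quarter that contains `day`."""
    first_month = 3 * ((day.month - 1) // 3) + 1
    return date(day.year, first_month, 1)


def qtd_sales(transactions, as_of):
    """Sum of (day, amount) transactions from the quarter start through `as_of`."""
    start = quarter_start(as_of)
    return sum(amount for day, amount in transactions if start <= day <= as_of)


def average_revenue_per_customer(revenue, customers):
    return revenue / customers


# Hypothetical transactions as (date, amount) pairs.
transactions = [
    (date(2024, 3, 28), 500.0),   # previous quarter, excluded
    (date(2024, 4, 2), 1200.0),
    (date(2024, 5, 15), 800.0),
    (date(2024, 6, 1), 400.0),    # after the as-of date, excluded
]

print(qtd_sales(transactions, date(2024, 5, 20)))   # 2000.0
print(churn_rate(200, 10))                          # 0.05
print(revenue_growth_rate(120000.0, 100000.0))      # 0.2
print(average_revenue_per_customer(50000.0, 250))   # 200.0
```

In a Tableau workbook these would typically be written as calculated fields. The Python version is only meant to pin down the arithmetic behind each number on the dashboard.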

Bringing It All Together with Dashboards and Stories

An essential element of Tableau’s value is delivered via dashboards. Well-designed dashboards are visually engaging and draw in the user to play with the information. Dashboards can facilitate details-on-demand that enable the information consumer to understand what, who, when, where, how and perhaps even why something has changed. This is where Tableau development services come into play, enabling customized solutions tailored to business-specific metrics.

Best Practices to Create a Simple and Effective Dashboard to Observe Sales Performance KPIs

A well-framed KPI dashboard instantly highlights problem areas. The greatest value of a modern business dashboard lies in its ability to provide real-time information about a company’s sales performance. As a result, business leaders, as well as project teams, are able to make informed and goal-oriented decisions, acting on actual data instead of gut feelings. The choice of chart types on a dashboard should highlight KPIs effectively. If you’re looking to implement these practices in your organization, working with Tableau experts ensures your dashboards follow industry standards and drive actionable insights.

Bad Practices Examples in a Sales Dashboard:

A sales report displaying 12 months of history for twenty products; 12 × 20 = 240 data points.

A large number of individual data points does not let the information consumer discern trends and outliers as easily as a time-series chart built from the same information.

The quality of the data won’t matter if the dashboard takes five minutes to load

The dashboard fails to convey important information quickly

The pie chart has too many slices, and performing precise comparisons of each product sub-category is difficult

The cross-tab at the bottom requires that the user scroll to see all the data

Now, we will focus on the best practices to create an effective dashboard to convey the most important sales information. Tableau is designed to supply the appropriate graphics and chart types by default via the “Show Me” option.

I. Choose the Right Chart Types

With respect to sales performance, we can use the following charts to show average sales, profits, losses and other measures.

Bar charts to compare numerical data across categories, such as sales quantity, sales expense, sales revenue, top products and sales channels. Example: sales by region.

Line charts to illustrate sales or revenue trends over a period of time.

A Highlight table allows us to apply conditional formatting (a color scheme in either a continuous or stepped array of colors from highest to lowest) to a view.

Use Scatter plots or scatter graphs to investigate the relationship between different variables or to observe outliers in data. Example: sales vs. profit.

Use Histograms to see the data distribution across groups or to display the shape of the sales distribution.

Advanced Chart Types:

Use Bullet graphs to track progress against a goal, historical sales performance or other pre-assigned thresholds.

The Dual-line chart (or dual-axis chart) is an extension of the line chart and allows more than one measure to be represented with two different axis ranges. Example: revenue vs. expense.

The Pareto chart is one of the most useful charts in a sales analysis. The Pareto principle is also known as the 80-20 rule, i.e. roughly 80% of the effects come from 20% of the causes.

When performing a sales analysis, this rule is used for detecting the 80% of total sales derived from 20% of the products.
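
The 80-20 check described above can be sketched in a few lines of Python: rank products by sales, accumulate their share of the total, and stop once the threshold is reached. The function name `pareto_products` and the sample sales figures are hypothetical and only serve to illustrate the calculation behind a Pareto chart.

```python
def pareto_products(sales_by_product: dict, threshold: float = 0.8) -> list:
    """Return the smallest set of top-selling products whose combined
    sales reach `threshold` (a fraction) of total sales."""
    total = sum(sales_by_product.values())
    if total <= 0:
        return []
    # Rank products from highest to lowest sales.
    ranked = sorted(sales_by_product.items(), key=lambda kv: kv[1], reverse=True)
    selected = []
    running = 0.0
    for name, amount in ranked:
        selected.append(name)
        running += amount
        if running / total >= threshold:
            break
    return selected


# Made-up sales figures for five products.
sales = {"A": 500.0, "B": 350.0, "C": 80.0, "D": 40.0, "E": 30.0}
top = pareto_products(sales)
share_of_catalog = len(top) / len(sales)
print(f"{len(top)} of {len(sales)} products ({share_of_catalog:.0%}) "
      f"reach 80% of sales: {top}")
```

In real data the split is rarely exactly 80-20; the point of the chart is to show how concentrated sales are.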

Use Box plots to display the distribution of data through their quartiles and to observe the major data outliers.

Tableau Sales Dashboard

Here is a Tableau dashboard composed of the aforementioned charts. This interactive dashboard enables the consumer to understand sales information by trend, region, profit and top products.

II. Use Actions to filter instead of Quick Filters

Using actions in place of Quick Filters provides a number of benefits. First, the dashboard will load more quickly. Using too many Quick Filters or trying to filter a very large dimension set can slow the load time because Tableau must scan the data to build the filters. The more Quick Filters enabled on the dashboard, the longer the dashboard will take to load.

III. Build Cascading Dashboard Designs to Improve Load Speed

In one example, a series of four cascading dashboards, each with four panels, improved load speed dramatically and made the information presented much easier to understand. The top-level dashboard provided a summary view, but included filter actions in each of the visualizations that allowed the executive to see data for different regions, products, and sales teams.

IV. Remove All Non-Data-Ink

Remove any text, lines, or shading that doesn’t provide actionable information. Remove redundant facts. Eliminate anything that doesn’t help the audience understand the story contained in the data.

V. Create More Descriptive Titles for Each Data Pane

Adding more descriptive data object titles will make it easier for the audience to interpret the dashboard. For example:

Bullet Graph—Sales vs. Budget by Product

Sparkline—Sales Trend

Cross-tab—Summary by Product Type

Scatter Plot—Sales vs. Marketing Expense

VI. Ensure That Each Worksheet Object Fits Its Entire View

When possible, change the graph’s fit from “Normal” to “Entire View” so that all data can be displayed at once.

VII. Adding Dynamic Title Content

There is an option to use dynamic content and titles within Tableau. Titles can be customized in a dynamic way so that when a filter option is selected, the title and content will change to reflect the selected value. A dynamic title expresses the current content. For example: if the dashboard title is “Sales 2013” and the user has selected year 2014 from the filter, the title will update to “Sales 2014”.

VIII. Trend Lines and Reference Lines

Visualizing granular data sometimes results in random-looking plots. Trend lines help users interpret data by fitting a straight or curved line that best represents the pattern contained within detailed data plots. Reference lines help to compare the actual plot against targets or to create statistical analyses of the deviation contained in the plot; or the range of values based on fixed or calculated numbers.
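
To make the idea of a fitted trend line concrete, here is a minimal Python sketch of an ordinary least-squares straight-line fit. It illustrates only the linear case and is not a description of how Tableau computes its trend lines internally; the function name `linear_trend` and the sample data are assumptions for illustration.

```python
def linear_trend(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of x, and of cross deviations of x and y.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up monthly sales (in thousands) for five consecutive months.
months = [1, 2, 3, 4, 5]
monthly_sales = [10.0, 12.0, 14.0, 16.0, 18.0]

slope, intercept = linear_trend(months, monthly_sales)
print(f"Trend: sales = {slope:.2f} * month + {intercept:.2f}")

# A reference line is simply a fixed or calculated value to compare against,
# for example the average of the plotted values.
reference_line = sum(monthly_sales) / len(monthly_sales)
print(f"Average reference line: {reference_line:.2f}")
```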

IX. Using Maps to Improve Insight

Seeing the data displayed on a map can provide new insights. If an internet connection is not available, Tableau allows a change to locally-rendered offline maps. If the data includes geographic information, we can very easily create a map visualization.

This map represents sales by state. The red color represents negative numbers and the green color represents positive numbers.

X. Developing an Ad Hoc Analysis Environment

Tableau facilitates ad hoc analysis in three ways:

Generating new data with forecasts

Designing flexible views using parameters

Changing or creating designs in Tableau Server

XI. Using Filters Wisely

Filters generally improve performance in Tableau. For example, when using a dimension filter to view only the West region, a query is passed to the underlying data source, resulting in information returned for only that region. We can see the sales performance of the particular region in the dashboard. By reducing the amount of data returned, performance improves.

Enhance Visualizations Using Colors, Labels etc.

I. Using colors:

Color is a vital way of understanding and categorizing what we see. We can use color to tell a story about the data, to categorize, to order and to display quantity. Color helps with distinguishing the dimensions. Bright colors pop at us, and light colors recede into the background. We can use color to focus attention on the most relevant parts of the data visualization. We choose color to highlight some elements over others, and use it to convey a message.

Red is used to denote smaller values, and blue or green is used to denote higher values. Red is often seen as a warning color to show the loss or any negative number whereas blue or green is seen as a positive result to show profit and other positive values.

II. Using Labels:

Enable labels to call out marks of interest and to make the view more understandable. Data labels enable comprehension of exact data point values. In Tableau, we can turn on mark labels for marks, selected marks, highlighted marks, minimum and maximum values, or only the line ends.

Using Tableau to enhance KPI values

The user-friendly interface allows non-technical users to quickly and easily create customized dashboards. Tableau can connect to nearly any data repository, from MS Excel to Hadoop clusters. As mentioned above, colors and labels can enhance visualizations and KPI values. Here are some additional Tableau features that can enhance those values.

I. Allow for Interactivity

Playing, exploring, and experimenting with the charts is what keeps users engaged. Interactive dashboards enable the audiences to perform basic analytical tasks such as filtering views, drilling down and examining underlying data – all with little training.

II. Custom Shapes to Show KPIs

Tableau shapes and controls can be found in the Marks card to the left of the visualization window. There are plenty of options built into Tableau that can be found in the shape palette.

Custom shapes are very powerful when telling a story with visualizations in dashboards and reports. We can create unlimited shape combinations to show mark points and create custom formatting. Below is an example that illustrates how we can represent the sales or profit values with a symbolic presentation.

Here, green arrows indicate good sales progress and red arrows indicate a fall in year-over-year sales by category.

III. Creating Calculated Fields

Calculated fields can be used to create new dimensions such as segments, or new measures such as ratios. There are many reasons to create calculated fields in Tableau. Here are just a few:

Segmentation of data in new ways on the fly

Adding a new dimension or a new measure before making it a permanent field in the underlying data

Filtering out unwanted results for better analyses

Using the power of parameters, putting the choice in the hands of end users

Calculating ratios across many different variables in Tableau, saving valuable database processing and storage resources
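
The logic behind two typical calculated fields, a ratio measure and a parameter-driven segment, can be sketched in Python as follows. This is an illustration of the calculations only, not Tableau's calculation language; the row layout, the function names and the threshold value are assumptions.

```python
def profit_ratio(rows) -> float:
    """Ratio measure: total profit divided by total sales.

    The ratio is computed on the aggregated totals rather than by
    averaging per-row ratios, so large orders are weighted correctly.
    """
    total_sales = sum(r["sales"] for r in rows)
    total_profit = sum(r["profit"] for r in rows)
    return total_profit / total_sales if total_sales else 0.0


def order_segment(sales: float, large_order_threshold: float = 1000.0) -> str:
    """New dimension: label each order by size.

    `large_order_threshold` plays the role of a parameter that an end
    user could change without editing the calculation itself.
    """
    return "Large" if sales >= large_order_threshold else "Small"


# Made-up order rows.
orders = [
    {"sales": 1200.0, "profit": 300.0},
    {"sales": 800.0, "profit": 100.0},
]

ratio = profit_ratio(orders)
segments = [order_segment(r["sales"]) for r in orders]
print(f"Profit ratio: {ratio:.1%}")
print(f"Segments: {segments}")
```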

IV. Data-Driven Alerts

With version 10.3, Tableau introduced a very useful feature: Data-Driven Alerts. Alerts can notify users, or remind them that a certain filter is on, and can flag when performance is higher or lower than expected. Adding alerts to dashboards can help elicit necessary action by the information consumer. A data-driven alert can be set while viewing a dashboard or worksheet.

In a Tableau Server dashboard, we can set up automatic mail notifications to a set of recipients when a certain value reaches a specific threshold.
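
Conceptually, a threshold alert is a rule that compares the latest value of a metric with a limit and, when the condition holds, produces a notification for a list of recipients. The Python sketch below illustrates that logic only. It does not use or represent the Tableau Server API, and `AlertRule`, `triggered_alerts`, the metric names and the addresses are all hypothetical.

```python
import operator
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Supported conditions, mapped to the comparison they perform.
COMPARATORS = {
    "above": operator.gt,
    "below": operator.lt,
}


@dataclass(frozen=True)
class AlertRule:
    """Hypothetical alert definition; not a Tableau object."""
    metric: str
    condition: str          # "above" or "below"
    threshold: float
    recipients: Tuple[str, ...]


def triggered_alerts(rules: List[AlertRule], latest: Dict[str, float]) -> List[str]:
    """Return one message per rule whose condition holds for the latest values."""
    messages = []
    for rule in rules:
        value = latest.get(rule.metric)
        if value is None:
            continue  # no fresh value for this metric, so nothing to evaluate
        if COMPARATORS[rule.condition](value, rule.threshold):
            messages.append(
                f"{rule.metric} is {rule.condition} {rule.threshold:g} "
                f"(current value: {value:g}); notify {', '.join(rule.recipients)}"
            )
    return messages


# Made-up rules and values.
rules = [
    AlertRule("qtd_sales", "below", 50_000.0, ("sales-lead@example.com",)),
    AlertRule("churn_rate", "above", 0.05, ("cs-team@example.com",)),
]
latest_values = {"qtd_sales": 42_000.0, "churn_rate": 0.03}

alerts = triggered_alerts(rules, latest_values)
for message in alerts:
    print(message)
```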

Summary

For an enterprise, a dashboard is a visual tool to help track, monitor and analyze information about the organization. The aim is to enable better decision making. To ensure these dashboards effectively align with sales and revenue goals, Tableau professional services provide tailored visual analytics solutions.

A key feature of sales dashboards in Tableau is interactivity. Dashboards are not simply a set of reports on a page; they should tell a story about the business. In order to facilitate the decision-making process, interactivity is an important part of assisting the decision-maker to get to the heart of the analysis as quickly as possible.

Looking to do more with your data? Check out our services: Microsoft Power BI Consultant | Power BI Consulting | Tableau Consultants | Talend Consultant | Looker Consulting | Chatbot Consulting | Snowflake Consulting | AI Consulting

Text

Salesforce Raises Prices. Here’s How GetOnCRM Helps You Save More and Gain More

As of August 1, 2025, Salesforce has implemented a 6% price increase across many of its core offerings—including Sales Cloud, Service Cloud, Marketing Cloud Engagement, and Tableau. For companies already managing tight budgets, this update may feel like a setback.

But it doesn’t have to be.

At GetOnCRM, we help businesses turn rising costs into higher returns—ensuring that your Salesforce investment delivers more value than ever before.

Why Did Salesforce Increase Prices in 2025?

The 2025 Salesforce pricing update reflects a strategic shift toward an AI-first platform. The added cost includes access to powerful features such as:

Einstein Copilot—an AI assistant built into all Salesforce apps

Slack AI Integration—Smarter, faster collaboration

Salesforce Data Cloud—Real-time access to unified customer data

Tableau Pulse—Proactive analytics and live alerts

Agentforce—AI automation for customer service inside Slack

These tools are powerful—but only if your team knows how to use them effectively. That’s where GetOnCRM steps in.

How GetOnCRM Helps You Save More

Rather than absorb the increase, businesses are using GetOnCRM to optimize performance and reduce waste across their Salesforce environments.

✅ Salesforce Consulting Services

We conduct a full audit of your existing setup to:

Eliminate unused features and licenses

Streamline user access and workflows

Identify quick wins for boosting CRM productivity

✅ Custom Salesforce Implementations

We tailor our Salesforce implementation services to your goals and industry, ensuring that every feature delivers value without increasing your expenditure.

✅ Salesforce Integration Services

From ERP platforms to marketing automation, we integrate Salesforce with your ecosystem so you can drive efficiency across departments.

✅ Agentforce: Slack + Salesforce Automation

We deploy Salesforce’s Agentforce solution to:

Automate ticket handling

Summarize support cases

Accelerate resolution using AI in Slack

The result? Fewer manual tasks, faster responses, and a higher return on every Salesforce dollar.

How GetOnCRM Helps You Gain More

While others see the price hike as a burden, GetOnCRM helps clients use it as an opportunity to:

Adopt new AI tools intelligently

Refine CRM workflows for better usability

Increase Salesforce ROI across sales, service, and marketing

Create scalable architecture that avoids future cost overruns

💡 Smart Spending Starts with Strategy

You don’t have to settle for higher costs with unclear results. With GetOnCRM, your CRM becomes a growth engine, not a budget drain. Interested in learning more? Read the full news article.

Text

Boost Your Data Accuracy with Smart Server-Side Tracking Services

When it comes to making data-driven decisions, accuracy is everything. Businesses often depend on customer behavior, site interactions, and conversion rates to guide their strategies. But what if your data isn’t telling the full story? That’s where Server-Side Tracking Services come in—offering a smarter, more secure way to collect reliable data.

This article explores how server-side tracking improves data accuracy, supports better data analytics & visualization, and helps businesses improve data security while delivering measurable results.

What Is Server-Side Tracking?

Server-side tracking is a method of collecting user data by sending it through your server before reaching analytics platforms like Google Analytics, Meta Pixel, or others. This differs from client-side tracking, where data is collected directly through users’ browsers via cookies or JavaScript.

In client-side tracking, ad blockers, browser privacy restrictions, and network issues can interfere with data collection. Server-side tracking solves these problems by placing the tracking process in a more controlled and stable environment—your server.

Why Server-Side Tracking Services Matter

1. Greater Data Accuracy

With more browsers restricting third-party cookies and users opting out of tracking, marketers and analysts face significant gaps in data. Server-side tracking bridges this gap by reducing dependency on browser-based tracking and minimizing data loss.

Because the data is processed on your server, it avoids interference from browser settings, extensions, or connection errors. This leads to higher accuracy in tracking events, conversions, and user behavior.

Key Benefits for Data Accuracy:

Reduces data loss caused by browser restrictions

Improves attribution accuracy

Enables tracking across devices and channels

Provides cleaner and more consistent datasets

How Server-Side Tracking Enhances Data Analytics & Visualization

Accurate data is the foundation of effective data analytics & visualization. When your tracking is incomplete or flawed, your reports and dashboards become unreliable. Server-side tracking helps by feeding your analytics tools with high-quality, verified data.

You can also enrich your datasets by combining server-side data with CRM information, user history, and product performance—leading to more powerful insights and better visual reports.

Advantages for Analytics and Visualization:

Real-time tracking without data interruptions

Integration with BI tools like Tableau, Power BI, or Looker Studio (formerly Google Data Studio)

Better segmentation and targeting through enriched data

Enhanced ability to forecast trends and behaviors

Improving Data Security with Server-Side Tracking

Privacy is a top concern for users and regulators. Server-side tracking puts you in control of the data flow, which helps your organization improve data security and stay compliant with regulations like GDPR and CCPA.

Because the data doesn’t pass directly from the user’s browser to third-party platforms, you can:

Mask or anonymize sensitive data before sending it out

Filter what information gets shared externally

Ensure compliance by managing consent preferences
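
The three steps above (consent check, masking, and filtering) can be sketched as a single server-side function that prepares an event before it is forwarded to a third-party platform. This is a minimal illustration under stated assumptions: the field names, the allow-list and the salted SHA-256 hashing are hypothetical choices, not the method of any particular provider. Note that hashing an email pseudonymizes it rather than fully anonymizing it.

```python
import hashlib
from typing import Optional

# Hypothetical allow-list: only these fields may leave the server.
ALLOWED_FIELDS = {"event_name", "timestamp", "value", "currency", "user_id_hash"}


def hash_identifier(value: str, salt: str) -> str:
    """Replace a raw identifier with a salted SHA-256 digest."""
    normalized = value.strip().lower()
    return hashlib.sha256((salt + normalized).encode("utf-8")).hexdigest()


def prepare_outbound_event(event: dict, consent_given: bool, salt: str) -> Optional[dict]:
    """Return the event that may be shared externally, or None without consent."""
    if not consent_given:
        return None  # respect the user's consent preference
    outbound = dict(event)  # work on a copy; the stored event stays untouched
    email = outbound.pop("email", None)
    if email:
        outbound["user_id_hash"] = hash_identifier(email, salt)
    # Drop everything that is not explicitly allowed (for example IP addresses).
    return {k: v for k, v in outbound.items() if k in ALLOWED_FIELDS}


# Made-up event as it might arrive at the server.
raw_event = {
    "event_name": "purchase",
    "timestamp": "2025-01-15T10:30:00Z",
    "value": 49.9,
    "currency": "USD",
    "email": " Jane@Example.com ",
    "ip_address": "203.0.113.7",
}

shared = prepare_outbound_event(raw_event, consent_given=True, salt="example-salt")
print(shared)
```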

Security Benefits:

Reduces risk of data exposure

Allows selective data sharing with external vendors

Offers better compliance control with privacy laws

While server-side tracking may involve a more technical setup, the long-term benefits in accuracy, privacy, and flexibility make it a valuable investment for growing businesses.

Who Should Use Server-Side Tracking Services?

Whether you're an eCommerce store, SaaS company, or digital marketer, server-side tracking can bring you measurable advantages. You should consider implementing it if:

Your marketing campaigns rely heavily on accurate attribution

You're using multiple analytics and ad platforms

You're concerned about rising privacy restrictions

You’re scaling your data operations and need more control

Best Practices for Using Server-Side Tracking

To get the most from your server-side tracking services, follow these tips:

Choose a Reliable Provider: Opt for a provider that offers strong support, easy integrations, and secure data handling.

Test Regularly: Make sure all events are firing correctly through testing tools and error logs.

Set Up Consent Management: Respect user privacy and manage consent based on location and legal requirements.

Integrate with Your Stack: Link your tracking setup to CRMs, ad platforms, and BI tools for full visibility.

Maintain Documentation: Keep your implementation steps and data flow documented for troubleshooting and scaling.

Final Thoughts

Switching to server-side tracking services is a smart move for businesses that value accurate data, secure systems, and better analytics. With evolving privacy regulations and growing data complexity, this method ensures your decisions are based on trusted insights—not flawed reports.

If you're ready to improve data accuracy, strengthen your data analytics & visualization, and improve data security, it's time to take a closer look at server-side tracking.

Analytics Liv is here to support you on this journey with customized tracking solutions that empower smarter decisions and stronger results.

Text

Career Opportunities After Completing a Master in Data Science in Nepal

With the rise of big data, artificial intelligence, and machine learning, the demand for data professionals is soaring across the globe — and Nepal is no exception. Pursuing a master in data science in Nepal not only equips students with technical and analytical skills but also opens doors to a wide range of high-demand career opportunities in both the national and international job markets.

1. Data Scientist

One of the most sought-after roles, data scientists use statistical techniques and machine learning models to analyze large datasets and provide actionable insights. Organizations in Nepal, particularly in fintech, telecom, e-commerce, and health tech, are hiring data scientists to gain a competitive edge.

2. Data Analyst

Graduates can work as data analysts, interpreting data and presenting it in visual formats to help businesses make data-driven decisions. This role is ideal for those who are proficient in Excel, SQL, and data visualization tools like Power BI or Tableau.

3. Machine Learning Engineer

With knowledge in Python, TensorFlow, and AI algorithms, students who complete a master in data science in Nepal can build smart models that power recommendation systems, fraud detection, and automation tools.

4. Business Intelligence (BI) Developer

Many companies are investing in BI tools to improve business processes. BI developers create dashboards and reports that help decision-makers understand trends, customer behavior, and operational efficiency.

5. Data Engineer

Data engineers build and manage data pipelines, ensuring that clean and organized data is available for analysts and scientists. This role involves working with cloud platforms like AWS, Azure, and GCP.

6. Academic and Research Opportunities

Graduates with a strong interest in academia can contribute to research in areas such as predictive analytics, AI ethics, and data governance. Several Nepali universities and research organizations are encouraging homegrown research in these domains.

7. Consultant or Freelancer

Freelancing and consulting offer flexible career paths. You can provide services in data visualization, predictive modeling, and reporting to startups and NGOs, both locally and abroad.

8. Government and Public Sector Roles

Government departments in Nepal are increasingly using data to enhance public services, track development goals, and implement policy decisions. Data professionals are now being recruited for digital transformation projects and smart governance initiatives.

Final Thoughts

Completing a master in data science in Nepal prepares graduates for a variety of career paths that blend technical expertise with strategic thinking. As the digital economy in Nepal grows, the opportunities for skilled data professionals will only continue to expand. Whether you aim to work in a startup, multinational company, or government agency, this degree can be your launchpad into the future of data.

Text

Upgrade Your Research Quality with Meta Analysis Expertise

In academic and scientific research, drawing meaningful conclusions from multiple studies can be a challenge—especially when results vary. That’s where Meta Analysis Research becomes essential. It enables researchers to combine evidence across studies using rigorous systematic literature review methods and extract robust, reproducible insights. Statswork supports UK-based scholars, institutions, and PhD candidates with expert-driven, GDPR-compliant data processing and statistical strategies that align with PRISMA, PICO, and GRADE frameworks.

Our services cover everything from data collection, coding, and management to meta-regression, helping you meet NHS research standards while improving publication success.

Importance of Data Collection in Academic Research

Reliable analysis starts with high-quality, ethically gathered data. At Statswork, we provide full-spectrum support for primary data collection in the UK, including surveys, structured interviews, and online data collection. We specialize in both primary and secondary data collection methods, ensuring your research complies with NHS data collection standards and UK research ethics.

Our data management support for UK PhD candidates includes data transcription and coding, variable recoding, data anonymization, and multi-source data merging. We use tools like NVivo, Excel, and SPSS to provide qualitative data support for UK universities, along with quantitative and qualitative coding, including thematic coding and inter-rater reliability checks for robust outcomes.

We also support company data collection, CSV/XLS/XML dataset structuring, and missing data imputation techniques, ensuring seamless integration with advanced analytics.

Statistical Data Analysis Techniques for Research Projects

Once your data is structured, our Statistical Data Analysis Service delivers precise insight using software like SPSS, STATA, and R. Our expert statisticians apply exploratory data analysis (EDA), detect outliers, and handle missing values before moving to advanced models.

We support a wide range of methods including:

Regression modelling (linear, logistic, multivariate)

Hypothesis testing: t-test, chi-square, Mann–Whitney U, Wilcoxon

ANOVA, ANCOVA, MANOVA

Descriptive & inferential statistics

Effect size measures (Cohen’s d, R², Hedges’ g)

Power and sample size calculation

Model diagnostics (residual analysis, multicollinearity)
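
As an illustration of the effect size measures in the list above, the following Python sketch computes Cohen’s d from the pooled standard deviation of two independent groups and applies the usual small-sample correction to obtain Hedges’ g. The sample data are made up, and the function names are chosen for this example only.

```python
import math
from statistics import mean, variance


def cohens_d(group_a, group_b) -> float:
    """Standardized mean difference using the pooled standard deviation."""
    n1, n2 = len(group_a), len(group_b)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two observations")
    # Pooled variance weights each sample variance by its degrees of freedom.
    pooled_var = ((n1 - 1) * variance(group_a) + (n2 - 1) * variance(group_b)) / (n1 + n2 - 2)
    if pooled_var == 0:
        raise ValueError("pooled variance is zero; effect size is undefined")
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)


def hedges_g(group_a, group_b) -> float:
    """Cohen's d multiplied by the approximate small-sample correction factor."""
    df = len(group_a) + len(group_b) - 2
    correction = 1.0 - 3.0 / (4.0 * df - 1.0)
    return cohens_d(group_a, group_b) * correction


# Made-up scores for two small groups.
treatment = [5.0, 6.0, 7.0, 8.0, 9.0]
control = [3.0, 4.0, 5.0, 6.0, 7.0]

d = cohens_d(treatment, control)
g = hedges_g(treatment, control)
print(f"Cohen's d = {d:.3f}, Hedges' g = {g:.3f}")
```

Hedges’ g is always slightly smaller in magnitude than Cohen’s d, and the difference shrinks as the sample sizes grow.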

We also assist with data visualization using Tableau, Power BI, Qlik Sense, and scripting tools like R Markdown and Jupyter Notebooks to ensure reproducibility. Our statistics services are ideal for academic projects requiring research methodology support, data cleaning, and interpretation.

Why Meta Analysis Research is Critical for Evidence-Based Studies

Meta-analysis is invaluable when evaluating clinical outcomes, policy impact, or behavioural trends across studies. Our team follows PROSPERO registration, PICO-based study selection, and uses tools like RevMan, STATA, MedCalc, and CMA for consistent outputs.

Key components include:

Forest plot & funnel plot creation

Subgroup & sensitivity analysis

Effect size estimation (OR, RR, HR, Cohen’s d)

Confidence interval pooling

Bias tests: Egger’s test, Begg’s test

Heterogeneity assessment using I², τ², Cochran’s Q

Mixed-effects models, inverse-variance weighted, and DerSimonian–Laird estimators
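
To show how several of these components fit together, here is a minimal Python sketch of a random-effects meta-analysis using inverse-variance weights and the DerSimonian–Laird estimator of τ², with Cochran’s Q and I² reported alongside. It is an illustration of the standard formulas, not a substitute for RevMan, STATA or CMA; the study effects and variances are made up, and the 95% interval uses the normal approximation (1.96).

```python
import math
from dataclasses import dataclass


@dataclass
class RandomEffectsResult:
    pooled_effect: float
    ci_low: float
    ci_high: float
    q: float            # Cochran's Q
    tau2: float         # between-study variance (DerSimonian-Laird)
    i2_percent: float   # share of variability attributed to heterogeneity


def dersimonian_laird(effects, variances) -> RandomEffectsResult:
    k = len(effects)
    if k < 2 or k != len(variances):
        raise ValueError("need at least two studies with matching variances")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")

    # Fixed-effect (inverse-variance) weights and pooled estimate.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w

    # Cochran's Q measures dispersion of study effects around the fixed estimate.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = k - 1

    # DerSimonian-Laird moment estimator of tau^2, truncated at zero.
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0

    # Random-effects weights add tau^2 to each within-study variance.
    w_star = [1.0 / (v + tau2) for v in variances]
    sum_w_star = sum(w_star)
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum_w_star
    se = math.sqrt(1.0 / sum_w_star)
    return RandomEffectsResult(pooled, pooled - 1.96 * se, pooled + 1.96 * se, q, tau2, i2)


# Made-up effect sizes and their variances for three studies.
result = dersimonian_laird([0.30, 0.55, 0.10], [0.02, 0.03, 0.015])
print(f"Pooled effect: {result.pooled_effect:.3f} "
      f"(95% CI {result.ci_low:.3f} to {result.ci_high:.3f})")
print(f"Q = {result.q:.3f}, tau^2 = {result.tau2:.4f}, I^2 = {result.i2_percent:.1f}%")
```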

We support meta-analysis research across scientific disciplines and guide the design of meta-analysis sampling plans aligned with academic and journal expectations.

Ensuring GDPR Compliance in Research Data Handling

Compliance is vital when dealing with sensitive academic or healthcare data. We implement strong data anonymisation, encrypted storage, and strict access protocols to meet GDPR and UK research ethics requirements. Our process ensures full confidentiality throughout data warehousing, validation, entry accuracy checks, and longitudinal dataset management—critical for institutional and funded research.

Whether you need university data services, SPSS data entry services in the UK, or help preparing large datasets for journals, we maintain legal, ethical, and methodological integrity.

Conclusion

At Statswork, our holistic approach to Meta Analysis Research supports academic excellence—from ethical data collection and advanced statistical consulting to transparent, publication-ready results. With tools, models, and guidance tailored to your research objectives, we simplify complex analysis while ensuring full compliance.

Get in touch with our experts today for trusted support in data collection, statistical analysis, and systematic review services—designed for academic success and journal approval.
