#Tesla crash data


Text

Tesla just removed the driver from the most dangerous car on the road. Now it’s braking in traffic, stranding passengers, and dumping people in intersections—all while Elon Musk pumps the stock. It’s not the future. It’s fraud on wheels. 🛑 It’s 10 p.m. Do you know where your children are?

#AI ethics #automotive regulation #Autonomous Vehicles #car crashes #Dan O’Dowd #driverless car #electric vehicles #Elon Musk #Full Self-Driving #NHTSA #robotaxi #robotaxi safety #stock pump #Tesla #Tesla accident rate #Tesla crash data #Tesla fires #tesla fraud #Tesla FSD #waymo

0 notes

Text

holy shit

i knew teslas were bad but "literally twice as deadly as the average vehicle" is something else

23K notes


Text

So Elon has decided to skip the imminent disaster of global climate change and just move on to a calamity 5 billion years in the future.

If you ever need to understand Elon's motivations, it's all this.

Okay and a little bit the woke mind virus.

But mostly this.

He wants to get to Mars more than anything. It's why the only thing he can speak intelligently about is his rockets. He has put in the time and effort to learn about them because this is his singular passion.

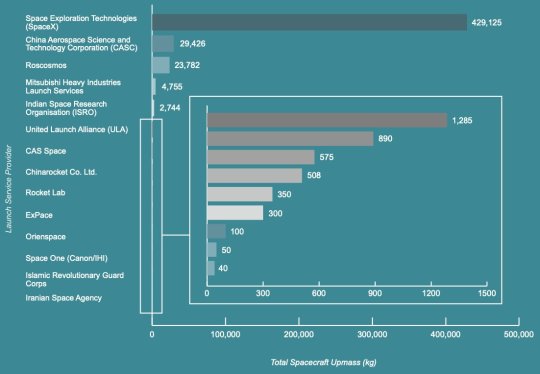

A lovely YouTube physicist did a video about SpaceX and she said half of the rockets blow up and Elon just wants more money. And it was disappointing to hear her say that because she is a scientist and both things are inaccurate.

SpaceX would be an amazing company without Elon. His leadership is the only thing really holding it back. They have put lots of cool shit into space. Their Falcon program is the most productive and cheapest rocket program in history. They put more stuff into space than everyone else combined.

They had to blow up part of the graph just so you could see the competition. Half of the SpaceX rockets are *not* blowing up.

Starship is a specific prototype. It has nothing to do with their main rocket business. Starship is Elon wanting to go to Mars. It is basically him trying to send a 3 story building into space. And he keeps blowing it up because that is the fastest way to develop a rocket. He's wasting a lot of money by trying to speedrun a trip to Mars in his lifetime. And these tests are a bit more like crash test data than expecting the rocket and Starship to actually function properly. It's a process and they have goals for each launch, and for the most part, they reach those goals. Any success after those goals is gravy to them. But they are pretty certain it is going to end in fireworks at this stage of development.

I don't know if they will get it to work. It would be nice because a functional spaceship that size could do a lot of cool science. But Elon's goals and NASA's goals are going to conflict in a major way at some point in the future. And I'm worried that may damage space exploration.

Starship is very different than their Falcon program. It's a science experiment. Falcons rarely blow up. They get shit to space like the James Webb telescope.

And as far as Elon just wanting more money... sort of.

His personal wealth has not been a huge concern of his for a while. Otherwise he wouldn't have let Tesla fall apart like it has. The wealth he is actually concerned about is not his own. Going to Mars is a trillion-dollar-plus endeavor. Even the richest man in the world cannot raise that much money.

Only a government could fund that.

Elon knows this. He figured it out a while ago. And when he saw an opportunity to get his hands on the government purse strings, he jumped at the chance.

He jumped in the shape of an X like a giant loser.

I'm *positive* Elon thought, "If I could save the government a trillion dollars, they'll give it to me so I can go to Mars."

But it is probably breaking his brain right now after learning he isn't this super genius who can figure out government bureaucracy in a weekend with a bunch of coding dorks.

He got depressed and realized his cool plan to get to Mars was falling apart.

Whoops.

Elon will say anything to get to Mars. He will lie about anything to get to Mars. He will consort with anyone to get to Mars. If you are ever unsure why Elon is doing something, it's to get to Mars. His moral calculus is based on this. In his delusional mind, everything is justifiable to save the human race.

He does have side quests. He wants to repopulate the Earth with his seed. And he uses IVF because you can drastically increase the odds of getting a boy if you pay extra. And he is angry at his trans daughter because he wants boys to continue his mission to spread Musk seed. He spends $50,000 extra to make sure he gets boys and she is messing with the plan.

Oh, and he really really wants people to think he is good at video games. And he wants people to like him. And he wants to kill the woke mind virus because he didn't get the boy he paid for.

But Mars is *almost* all he cares about.

Elon thinks Earth is doomed and he wants immortality from being the man who saved human civilization. He truly believes our existence is dependent on being "multiplanetary." It might be the only thing he believes.

Saving the human race is supposed to be his legacy.

And it is killing us.

373 notes


Text

Just 524 new Teslas were registered in Québec during the first three months of 2025, as per data from the vehicle registration authority in Québec, reported Business Insider. That's a huge drop from the 5,097 vehicles registered in the final three months of 2024 alone, a decline of more than 85%, according to the report.
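As a quick sanity check, the drop can be recomputed from the two registration counts quoted above. This is only a sketch; the function name `percent_decline` is made up for illustration.

```python
def percent_decline(before: float, after: float) -> float:
    """Return the percentage drop from `before` to `after`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100.0


# Registration counts quoted in the post (Q4 2024 vs. Q1 2025).
q4_2024 = 5097
q1_2025 = 524

decline = percent_decline(q4_2024, q1_2025)
print(f"Decline: {decline:.1f}%")  # prints "Decline: 89.7%"
```

The result is close to 90%, which is consistent with the report's "more than 85%".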

166 notes



Text

There is going to be a BIG biblical scenario where they make out it's WW3 but really they are activating Militaries then bombing all of these Satanic Luciferian landmarks. Enacting GESARA funds and We The People rebuild.

THE EVENT. IT IS BIBLICAL.

What are some of the Very BIG Satanic Illuminati landmarks in the world? Q showed us them. Vatican, Buckingham Palace, Whitehouse x 2 (USA, Germany), 3GD in China. Cern on the Swiss/French Border possible.

Big Pharma in Wuhan = Israel

Israel/Khazarians controls CCP.

The Media etc

34 Satanic Buildings and Dams will Fall. Rods of God/DEW

WORLDWIDE

Planes & Trains grounded

Lights/Power switched off

Changing over to Tesla Free energy

Bitcoin Servers/Data Center hit and turned off for good.

99.5% of Crypto gone China Coins. Enter ISO20022 Coins backed by Precious Metals.

WW3 Scare Event. Nuke Sirens

Water Event

Stock Market Crash

Global Martial Law

CASTLE ROCK -Scenario Julian Assange.

Quantum Systems. Project Odin Switched on.

Nesara/Gesara/RV

Election Flipping via Military Courts - FISA

Military Tribunals/confessions/10 day movie - 3 × 8 hr sessions.

10 countries will be running EBS to cover the whole World.

Reveals.

Inauguration

The ISRAELI MOSSAD control the WORLD's MEDIA out of the US.

Attached is Q1871 outlining this.

Project Odin as mentioned by Ron CodeMonkeyz is a POWERFUL Anti-Deplatforming Tool.

Project Odin is part of Quantum Starlink. Our new Quantum Systems are to be protected by Secret Space Programs out of the Cabal's reach.

Q2337 tells you Mossad Media Assets will be removed. Think people like Alex Jones, Anderson Cooper etc and also Mossad Satellites.

Israeli intelligence - stand down.

[TERM_3720x380-293476669283001]

Media assets will be removed.

This part of 2527 tells you that something powerful is going to knock out Mossad Media Satellites. This will blackout the media worldwide, Switch us over to the Quantum Systems. This is PROJECT ODIN. All in All give the reason to Activate the Military EBS.

If you look up the TURKSAT Rocket that launched from the USA in January you will see it was specifically for MILITARY COMMS to peoples TV's & RADIO's in AFRICA, MIDDLE EAST, EUROPE & CENTRAL ASIA.

THE EVENT has many facets to it.

The WorldWide Blackout to change over to TESLA Energy. Knocking out Media Satellites, QFS, Rods of God on Dams & 34 Buildings & much much more.

34 Buildings will be in the EVENT.

They are very significant.

Ie Whitehouse, Royal Castles, Buckingham Palace, Vatican, Getty Museum, Playboy Mansion and the like.

This will surely make the Stock Market collapse as will Precision Cyber linked to Executive Orders 13818 & 13848. It is all a show.

Swapping from Rothschild's Central Bank Notes to Rainbow Treasury Notes now backed by Precious Metals (Not Oil/Wars)

Are we still comfy?

Or are we scared?

A little bit of both is normal.

Trump keeps his promises.

Have faith in the Lord Our God.

He will comfort you through the storm.

Who saw in January up to 10 countries at once have their power all turned off by the Space Force?

Just before that Israel had that happen for 30 mins too.

If they can turn 10 at once off all together. They can do the whole lot. Welcome to Tesla.

BLACKOUT NECESSARY.

Have a look at all the Global Military "Exercises" now being put in place.

It's all happening in front of you.

It's the largest GLOBAL MILITARY Operation in Planet Earth's History.

Transition to Gesara/Greatness.

Now they just have to play out a fake WW3 scenario to ring sirens in every National Military Command Center.

This is to justify to the Whole Entire Planet many things that have been taking place already. The fact that Gesara Military Law has been in place. The Secret Military Tribunals, Confessions etc. The executions the lot. And like all militaries normally do they will help build new things. 🤔

#pay attention #educate yourselves #educate yourself #reeducate yourselves #knowledge is power #reeducate yourself #think about it #think for yourselves #think for yourself #do your homework #do your own research #do some research #do your research #ask yourself questions #question everything #government lies #government secrets #government corruption #truth be told #lies exposed #evil lives here #news #be aware #be ready #be prepared #you decide #watch #understand #it's coming

44 notes


Text

Elon Musk has pledged that the work of his so-called Department of Government Efficiency, or DOGE, would be “maximally transparent.” DOGE’s website is proof of that, the Tesla and SpaceX CEO, and now White House adviser, has repeatedly said. There, the group maintains a list of slashed grants and budgets, a running tally of its work.

But in recent weeks, The New York Times reported that DOGE has not only posted major mistakes to the website—crediting DOGE, for example, with saving $8 billion when the contract canceled was for $8 million and had already paid out $2.5 million—but also worked to obfuscate those mistakes after the fact, deleting identifying details about DOGE’s cuts from the website, and later even from its code, that made them easy for the public to verify and track.

For road-safety researchers who have been following Musk for years, the modus operandi feels familiar. DOGE “put out some numbers, they didn’t smell good, they switched things around,” alleges Noah Goodall, an independent transportation researcher. “That screamed Tesla. You get the feeling they’re not really interested in the truth.”

For nearly a decade, Goodall and others have been tracking Tesla’s public releases on its Autopilot and Full Self-Driving features, advanced driver-assistance systems designed to make driving less stressful and more safe. Over the years, researchers claim, Tesla has released safety statistics without proper context; promoted numbers that are impossible for outside experts to verify; touted favorable safety statistics that were later proved misleading; and even changed already-released safety statistics retroactively. The numbers have been so inconsistent that Tesla Full Self-Driving fans have taken to crowdsourcing performance data themselves.

Instead of public data releases, “what we have is these little snippets that, when researchers look into them in context, seem really suspicious,” alleges Bryant Walker Smith, a law professor and engineer who studies autonomous vehicles at the University of South Carolina.

Government-Aided Whoopsie

Tesla’s first and most public number mix-up came in 2018, when it released its first Autopilot safety figures after the first known death of a driver using Autopilot. Immediately, researchers noted that while the numbers seemed to show that drivers using Autopilot were much less likely to crash than other Americans on the road, the figures lacked critical context.

At the time, Autopilot combined adaptive cruise control, which maintains a set distance between the Tesla and the vehicle in front of it, and steering assistance, which keeps the car centered between lane markings. But the comparison didn’t control for type of car (luxury vehicles, the only kind Tesla made at the time, are less likely to crash than others), the person driving the car (Tesla owners were more likely to be affluent and older, and thus less likely to crash), or the types of roads where Teslas were driving (Autopilot operated only on divided highways, but crashes are more likely to occur on rural roads, and especially connector and local ones).
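The road-type problem in particular is easy to show with numbers. In the sketch below every figure is invented for illustration (none of it is Tesla or NHTSA data), and the fleet names and helper functions are hypothetical. The assisted fleet only drives on highways, and it does slightly worse there than the comparison fleet, yet its overall crash rate still looks better because the comparison fleet also drives on riskier local roads.

```python
# Hypothetical (crashes, million miles driven) per road type. Not real data.
assisted = {"highway": (12, 10.0)}
everyone = {"highway": (10, 10.0), "local": (40, 10.0)}


def overall_rate(fleet: dict) -> float:
    """Crashes per million miles across all road types."""
    crashes = sum(c for c, _ in fleet.values())
    miles = sum(m for _, m in fleet.values())
    return crashes / miles


def rate_on(fleet: dict, road: str) -> float:
    """Crashes per million miles on a single road type."""
    crashes, miles = fleet[road]
    return crashes / miles


# Naive comparison: the assisted fleet appears to crash less often.
print("overall:", overall_rate(assisted), "vs", overall_rate(everyone))

# Like-for-like comparison on highways only: the ordering flips.
print("highway:", rate_on(assisted, "highway"), "vs", rate_on(everyone, "highway"))
```

With these made-up inputs the overall comparison favors the assisted fleet while the highway-only comparison does not, which is why researchers ask for figures broken down by road type, vehicle, and driver.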

The confusion didn’t stop there. In response to the fatal Autopilot crash, Tesla did hand over some safety numbers to the National Highway Traffic Safety Administration, the nation’s road safety regulator. Using those figures, the NHTSA published a report indicating that Autopilot led to a 40 percent reduction in crashes. Tesla promoted the favorable statistic, even citing it when, in 2018, another person died while using Autopilot.

But by spring of 2018, the NHTSA had copped to the number being off. The agency did not wholly evaluate the effectiveness of the technology in comparison to Teslas not using the feature—using, for example, air bag deployment as an inexact proxy for crash rates. (The airbags did not deploy in the 2018 Autopilot death.)

Because Tesla does not release Autopilot or Full Self-Driving safety data to independent, third-party researchers, it’s difficult to tell exactly how safe the features are. (Independent crash tests by the NHTSA and other auto regulators have found that Tesla cars are very safe, but these don’t evaluate driver assistance tech.) Researchers contrast this approach with the self-driving vehicle developer Waymo, which often publishes peer-reviewed papers on its technology’s performance.

Still, the unknown safety numbers did not prevent Musk from criticizing anyone who questioned Autopilot’s safety record. “It's really incredibly irresponsible of any journalists with integrity to write an article that would lead people to believe that autonomy is less safe,” he said in 2018, around the time the NHTSA figure publicly fell apart. “Because people might actually turn it off, and then die.”

Number Questions

More recently, Tesla has continued to shift its Autopilot safety figures, leading to further questions about its methods. Without explanation, the automaker stopped putting out quarterly Autopilot safety reports in the fall of 2022. Then, in January 2023, it revised all of its safety numbers.

Tesla said it had belatedly discovered that it had erroneously included in its crash numbers events where neither airbags nor active restraints were deployed, and that some events had been counted more than once. Now, instead of dividing its crash rates into three categories, “Autopilot engaged,” “without Autopilot but with our active safety features,” and “without Autopilot and without our active safety features,” it would report just two: with and without Autopilot. It applied those new categories, retroactively, to its old safety numbers and said it would use them going forward.

That discrepancy allowed Goodall, the researcher, to peer more closely into the specifics of Tesla’s crash reporting. He noticed something in the data. He expected the “without Autopilot” number to just be an average of the two old “without Autopilot” categories. It wasn’t. Instead, the new figure looked much more like the old “without Autopilot and without our active safety features” number. That’s weird, he thought. It’s not easy (or, according to studies that also include other car makes, common) for drivers to turn off all their active safety features, which include lane departure and forward collision warnings and automatic emergency braking.

Goodall calculated that even if Tesla drivers were going through the burdensome and complicated steps of turning off their EV’s safety features, they’d need to drive way more miles than other Tesla drivers to create a sensible baseline. The upshot: Goodall wonders whether Tesla is making its non-Autopilot crash rate look higher than it is, which would make the Autopilot crash rate look much better by comparison.
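Goodall's expectation can be made concrete. If two categories are merged, the combined crash rate is a mileage-weighted average of the two, so it has to fall between them, and it only lands near the higher one if that category accounts for most of the miles. The sketch below uses invented numbers (not Tesla's figures), expresses rates as crashes per million miles as an assumption for readability, and all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Category:
    crashes: float  # crashes recorded in this category
    miles: float    # miles driven in this category

    @property
    def rate(self) -> float:
        """Crashes per million miles."""
        return self.crashes / self.miles * 1e6


def pooled_rate(a: Category, b: Category) -> float:
    """Crash rate after merging two categories (mileage-weighted)."""
    return (a.crashes + b.crashes) / (a.miles + b.miles) * 1e6


def mile_share_needed(rate_a: float, rate_b: float, target: float) -> float:
    """Fraction of all miles that category b must supply for the
    pooled rate to equal `target` (assumes rate_a != rate_b)."""
    return (target - rate_a) / (rate_b - rate_a)


# Invented inputs: most miles are driven with safety features on.
with_safety = Category(crashes=500, miles=1_000_000_000)
without_safety = Category(crashes=100, miles=100_000_000)

pooled = pooled_rate(with_safety, without_safety)
print(f"pooled rate: {pooled:.3f} crashes per million miles")

# How many miles would the "features off" group need to drive for the
# merged figure to sit at 0.95, close to its own rate of 1.0?
share = mile_share_needed(with_safety.rate, without_safety.rate, 0.95)
print(f"share of miles needed with features off: {share:.0%}")
```

With these made-up inputs the merged rate sits much closer to the "features on" rate, and pulling it up near the "features off" rate would require about nine-tenths of all miles to be driven with safety features disabled. That is the shape of the puzzle Goodall describes.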

The discrepancy is still puzzling to the researcher, who published a peer-reviewed note on the topic last summer. Tesla “put out this data that looks questionable on first glance—and then you look at it, and it is questionable,” he claims. “Instead of taking it down and acknowledging it, they change the numbers to something that is even weirder and flawed in a more complicated way. I feel like I’m doing their homework at this point.” The researcher calls for more transparency. So far, Tesla has not put out more specific safety figures.

Tesla, which disbanded its public relations team in 2021, did not reply to WIRED’s questions about the study or its other public safety data.

Direct Reports

Tesla is not a total outlier in the auto industry when it comes to clamming up about the performance of its advanced technology. Automakers are not required to make public many of their safety numbers. But where tech developers are required to submit public accounting on their crashes, Tesla is still less transparent than most. One prominent national data submission requirement, first instituted by the NHTSA in 2021, requires makers of both advanced driver assistance and automated driving tech to submit public data about their crashes. Tesla redacts nearly every detail about its Autopilot-related crashes in its public submissions.

“The specifics of all 2,819 crash reports have been redacted from publicly available data at Tesla's request,” says Philip Koopman, an engineering professor at Carnegie Mellon University whose research includes self-driving-car safety. “No other company is so blatantly opaque about their crash data.”

The federal government likely has access to details on these crashes, but the public doesn’t. But even that is at risk. Late last year, Reuters reported that the crash-reporting requirement appeared to be a focus of the Trump transition team.

In many ways, Tesla—and perhaps DOGE—is distinctive. “Tesla also uniquely engages with the public and is such a cause célèbre that they don’t have to do their own marketing. I think that also entails some special responsibility. Lots of claims are made on behalf of Tesla,” says Walker Smith, the law professor. “I think it engages selectively and opportunistically and does not correct sufficiently.”

Proponents of DOGE, like those of Tesla, engage enthusiastically on Musk’s platform, X, applauded by Musk himself. The two entities have at least one other thing in common: ProPublica recently reported that there is a new employee at the US Department of Transportation—a former Tesla senior counsel.

22 notes


Text

‘The vehicle suddenly accelerated with our baby in it’: the terrifying truth about why Tesla’s cars keep crashing

Elon Musk is obsessive about the design of his supercars, right down to the disappearing door handles. But a series of shocking incidents – from drivers trapped in burning vehicles to dramatic stops on the highway – have led to questions about the safety of the brand. Why won’t Tesla give any answers?

It was a Monday afternoon in June 2023 when Rita Meier, 45, joined us for a video call. Meier told us about the last time she said goodbye to her husband, Stefan, five years earlier. He had been leaving their home near Lake Constance, Germany, heading for a trade fair in Milan.

Meier recalled how he hesitated between taking his Tesla Model S or her BMW. He had never driven the Tesla that far before. He checked the route for charging stations along the way and ultimately decided to try it. Rita had a bad feeling. She stayed home with their three children, the youngest less than a year old.

At 3.18pm on 10 May 2018, Stefan Meier lost control of his Model S on the A2 highway near the Monte Ceneri tunnel. Travelling at about 100kmh (62mph), he ploughed through several warning markers and traffic signs before crashing into a slanted guardrail. “The collision with the guardrail launches the vehicle into the air, where it flips several times before landing,” investigators would write later.

The car came to rest more than 70 metres away, on the opposite side of the road, leaving a trail of wreckage. According to witnesses, the Model S burst into flames while still airborne. Several passersby tried to open the doors and rescue the driver, but they couldn’t unlock the car. When they heard explosions and saw flames through the windows, they retreated. Even the firefighters, who arrived 20 minutes later, could do nothing but watch the Tesla burn.

At that moment, Rita Meier was unaware of the crash. She tried calling her husband, but he didn’t pick up. When he still hadn’t returned her call hours later – highly unusual for this devoted father – she attempted to track his car using Tesla’s app. It no longer worked. By the time police officers rang her doorbell late that night, Meier was already bracing for the worst.

The crash made headlines the next morning as one of the first fatal Tesla accidents in Europe. Tesla released a statement to the press saying the company was “deeply saddened” by the incident, adding, “We are working to gather all the facts in this case and are fully cooperating with local authorities.”

To this day, Meier still doesn’t know why her husband died. She has kept everything the police gave her after their inconclusive investigation. The charred wreck of the Model S sits in a garage Meier rents specifically for that purpose. The scorched phone – which she had forensically analysed at her own expense, to no avail – sits in a drawer at home. Maybe someday all this will be needed again, she says. She hasn’t given up hope of uncovering the truth.

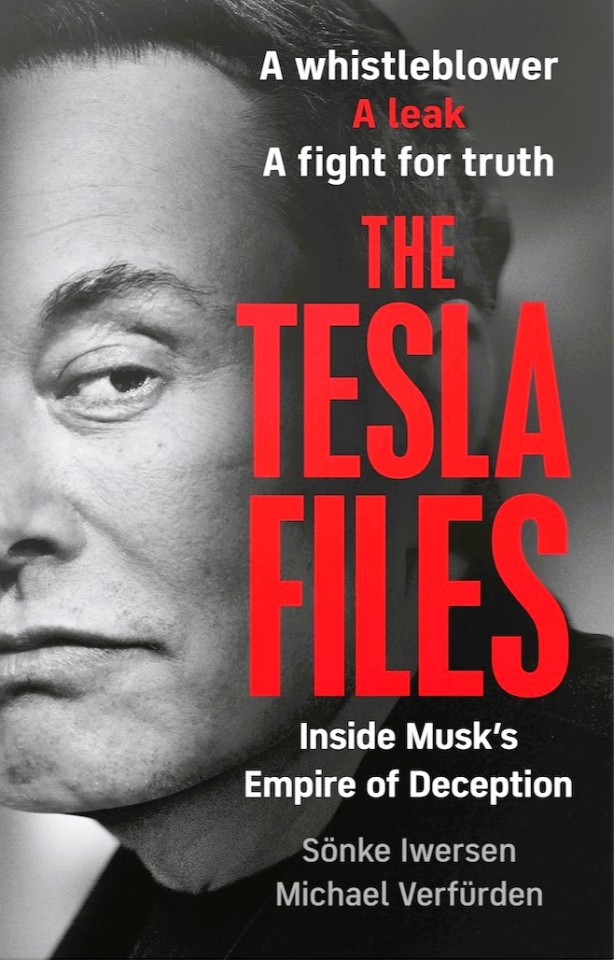

Rita Meier was one of many people who reached out to us after we began reporting on the Tesla Files – a cache of 23,000 leaked documents and 100 gigabytes of confidential data shared by an anonymous whistleblower. The first report we published looked at problems with Tesla’s autopilot system, which allows the cars to temporarily drive on their own, taking over steering, braking and acceleration. Though touted by the company as “Full Self-Driving” (FSD), it is designed to assist, not replace, the driver, who should keep their eyes on the road and be ready to intervene at any time.

Autonomous driving is the core promise around which Elon Musk has built his company. Tesla has never delivered a truly self-driving vehicle, yet the richest person in the world keeps repeating the claim that his cars will soon drive entirely without human help. Is Tesla’s autopilot really as advanced as he says?

The Tesla Files suggest otherwise. They contain more than 2,400 customer complaints about unintended acceleration and more than 1,500 braking issues – 139 involving emergency braking without cause, and 383 phantom braking events triggered by false collision warnings. More than 1,000 crashes are documented. A separate spreadsheet on driver-assistance incidents where customers raised safety concerns lists more than 3,000 entries. The oldest date from 2015, the most recent from March 2022. In that time, Tesla delivered roughly 2.6m vehicles with autopilot software. Most incidents occurred in the US, but there have also been complaints from Europe and Asia. Customers described their cars suddenly accelerating or braking hard. Some escaped with a scare; others ended up in ditches, crashing into walls or colliding with oncoming vehicles. “After dropping my son off in his school parking lot, as I go to make a right-hand exit it lurches forward suddenly,” one complaint read. Another said, “My autopilot failed/malfunctioned this morning (car didn’t brake) and I almost rear-ended somebody at 65mph.” A third reported, “Today, while my wife was driving with our baby in the car, it suddenly accelerated out of nowhere.”

Braking for no reason caused just as much distress. “Our car just stopped on the highway. That was terrifying,” a Tesla driver wrote. Another complained, “Frequent phantom braking on two-lane highways. Makes the autopilot almost unusable.” Some report their car “jumped lanes unexpectedly”, causing them to hit a concrete barrier, or veered into oncoming traffic.

Musk has given the world many reasons to criticise him since he teamed up with Donald Trump. Many people do – mostly by boycotting his products. But while it is one thing to disagree with the political views of a business leader, it is another to be mortally afraid of his products. In the Tesla Files, we found thousands of examples of why such fear may be justified.

We set out to match some of these incidents of autopilot errors with customers’ names. Like hundreds of other Tesla customers, Rita Meier entered the vehicle identification number of her husband’s Model S into the response form we published on the website of the German business newspaper Handelsblatt, for which we carried out our investigation. She quickly discovered that the Tesla Files contained data related to the car. In her first email to us, she wrote, “You can probably imagine what it felt like to read that.”

There isn’t much information – just an Excel spreadsheet titled “Incident Review”. A Tesla employee noted that the mileage counter on Stefan Meier’s car stood at 4,765 miles at the time of the crash. The entry was catalogued just one day after the fatal accident. In the comment field was written, “Vehicle involved in an accident.” The cause of the crash remains unknown to this day. In Tesla’s internal system, a company employee had marked the case as “resolved”, but for five years, Rita Meier had been searching for answers. After Stefan’s death, she took over the family business – a timber company with 200 employees based in Tettnang, Baden-Württemberg. As journalists, we are used to tough interviews, but this one was different. We had to strike a careful balance – between empathy and the persistent questioning good reporting demands. “Why are you convinced the Tesla was responsible for your husband’s death?” we asked her. “Isn’t it possible he was distracted – maybe looking at his phone?”

No one knows for sure. But Meier was well aware that Musk has previously claimed Tesla “releases critical crash data affecting public safety immediately and always will”; that he has bragged many times about how its superior handling of data sets the company apart from its competitors. In the case of her husband, why was she expected to believe there was no data?

Meier’s account was structured and precise. Only once did the toll become visible – when she described how her husband’s body burned in full view of the firefighters. Her eyes filled with tears and her voice cracked. She apologised, turning away. After she collected herself, she told us she has nothing left to gain – but also nothing to lose. That was why she had reached out to us. We promised to look into the case.

Rita Meier wasn’t the only widow to approach us. Disappointed customers, current and former employees, analysts and lawyers were sharing links to our reporting. Many of them contacted us. More than once, someone wrote that it was about time someone stood up to Tesla – and to Elon Musk.

Meier, too, shared our articles and the callout form with others in her network – including people who, like her, lost loved ones in Tesla crashes. One of them was Anke Schuster. Like Meier, she had lost her husband in a Tesla crash that defies explanation and had spent years chasing answers. And, like Meier, she had found her husband’s Model X listed in the Tesla Files. Once again, the incident was marked as resolved – with no indication of what that actually meant.

“My husband died in an unexplained and inexplicable accident,” Schuster wrote in her first email. Her dealings with police, prosecutors and insurance companies, she said, had been “hell”. No one seemed to understand how a Tesla works. “I lost my husband. His four daughters lost their father. And no one ever cared.”

Her husband, Oliver, was a tech enthusiast, fascinated by Musk. A hotelier by trade, he owned no fewer than four Teslas. He loved the cars. She hated them – especially the autopilot. The way the software seemed to make decisions on its own never sat right with her. Now, she felt as if her instincts had been confirmed in the worst way.

Oliver Schuster was returning from a business meeting on 13 April 2021 when his black Model X veered off highway B194 between Loitz and Schönbeck in north-east Germany. It was 12.50pm when the car left the road and crashed into a tree. Schuster started to worry when her husband missed a scheduled bank appointment. She tried to track the vehicle but found no way to locate it. Even calling Tesla led nowhere. That evening, the police broke the news: after the crash her husband’s car had burst into flames. He had burned to death – with the fire brigade watching helplessly.

The crashes that killed Meier’s and Schuster’s husbands were almost three years apart but the parallels were chilling. We examined accident reports, eyewitness accounts, crash-site photos and correspondence with Tesla. In both cases, investigators had requested vehicle data from Tesla, and the company hadn’t provided it. In Meier’s case, Tesla staff claimed no data was available. In Schuster’s, they said there was no relevant data.

Over the next two years, we spoke with crash victims, grieving families and experts around the world. What we uncovered was an ominous black box – a system designed not only to collect and control every byte of customer data, but to safeguard Musk’s vision of autonomous driving. Critical information was sealed off from public scrutiny.

Elon Musk is a perfectionist with a tendency towards micromanagement. At Tesla, his whims seem to override every argument – even in matters of life and death. During our reporting, we came across the issue of door handles. On Teslas, they retract into the doors while the cars are being driven. The system depends on battery power. If an airbag deploys, the doors are supposed to unlock automatically and the handles extend – at least, that’s what the Model S manual says.

The idea for the sleek, futuristic design stems from Musk himself. He insisted on retractable handles, despite repeated warnings from engineers. Since 2018, they have been linked to at least four fatal accidents in Europe and the US, in which five people died.

In February 2024, we reported on a particularly tragic case: a fatal crash on a country road near Dobbrikow, in Brandenburg, Germany. Two 18-year-olds were killed when the Tesla they were in slammed into a tree and caught fire. First responders couldn’t open the doors because the handles were retracted. The teenagers burned to death in the back seat.

A court-appointed expert from Dekra, one of Germany’s leading testing authorities, later concluded that, given the retracted handles, the incident “qualifies as a malfunction”. According to the report, “the failure of the rear door handles to extend automatically must be considered a decisive factor” in the deaths. Had the system worked as intended, “it is assumed that rescuers might have been able to extract the two backseat passengers before the fire developed further”. Without what the report calls a “failure of this safety function”, the teens might have survived.

Our investigation made waves. The Kraftfahrt-Bundesamt, Germany’s federal motor transport authority, got involved and announced plans to coordinate with other regulatory bodies to revise international safety standards. Germany’s largest automobile club, ADAC, issued a public recommendation that Tesla drivers should carry emergency window hammers. In a statement, ADAC warned that retractable door handles could seriously hinder rescue efforts. Even trained emergency responders, it said, may struggle to reach trapped passengers. Tesla shows no intention of changing the design.

That’s Musk. He prefers the sleek look of Teslas without handles, so he accepts the risk to his customers. His thinking, it seems, goes something like this: at some point, the engineers will figure out a technical fix. The same logic applies to his grander vision of autonomous driving: because Musk wants to be first, he lets customers test his unfinished Autopilot system on public roads. It’s a principle borrowed from the software world, where releasing apps in beta has long been standard practice. The more users, the more feedback and, over time – often years – something stable emerges. Revenue and market share arrive much earlier. The motto: if you wait, you lose.

Musk has taken that mindset to the road. The world is his lab. Everyone else is part of the experiment.

By the end of 2023, we knew a lot about how Musk’s cars worked – but the way they handle data still felt like a black box. How is that data stored? At what moment does the onboard computer send it to Tesla’s servers? We talked to independent experts at the Technical University Berlin. Three PhD candidates – Christian Werling, Niclas Kühnapfel and Hans Niklas Jacob – made headlines for hacking Tesla’s autopilot hardware. A brief voltage drop on a circuit board turned out to be just enough to trick the system into opening up.

The security researchers uncovered what they called “Elon Mode” – a hidden setting in which the car drives fully autonomously, without requiring the driver to keep his hands on the wheel. They also managed to recover deleted data, including video footage recorded by a Tesla driver. And they traced exactly what data Tesla sends to its servers – and what it doesn’t.

The hackers explained that Tesla stores data in three places. First, on a memory card inside the onboard computer – essentially a running log of the vehicle’s digital brain. Second, on the event data recorder – a black box that captures a few seconds before and after a crash. And third, on Tesla’s servers, assuming the vehicle uploads it.

The researchers told us they had found an internal database embedded in the system – one built around so-called trigger events. If, for example, the airbag deploys or the car hits an obstacle, the system is designed to save a defined set of data to the black box – and transmit it to Tesla’s servers. In both the Meier and Schuster cases, the cars should therefore have recorded and transmitted that data, unless they were in a complete network dead zone.

Who in the company actually works with that data? We examined testimony from Tesla employees in court cases related to fatal crashes. They described how their departments operate. We cross-referenced their statements with entries in the Tesla Files. A pattern took shape: one team screens all crashes at a high level, forwarding them to specialists – some focused on autopilot, others on vehicle dynamics or road grip. There’s also a group that steps in whenever authorities request crash data.

We compiled a list of employees relevant to our reporting. Some we tried to reach by email or phone. For others, we showed up at their homes. If they weren’t there, we left handwritten notes. No one wanted to talk.

We searched for other crashes. One involved Hans von Ohain, a 33-year-old Tesla employee from Evergreen, Colorado. On 16 May 2022, he crashed into a tree on his way home from a golf outing and the car burst into flames. Von Ohain died at the scene. His passenger survived and told police that von Ohain, who had been drinking, had activated Full Self-Driving. Tesla, however, said it couldn’t confirm whether the system was engaged – because no vehicle data was transmitted for the incident.

Then, in February 2024, Musk himself stepped in. The Tesla CEO claimed von Ohain had never downloaded the latest version of the software – so it couldn’t have caused the crash. Friends of von Ohain, however, told US media he had shown them the system. His passenger that day, who barely escaped with his life, told reporters that hours earlier the car had already driven erratically by itself. “The first time it happened, I was like, ‘Is that normal?’” he recalled asking von Ohain. “And he was like, ‘Yeah, that happens every now and then.’”

His account was bolstered by von Ohain’s widow, who explained to the media how overjoyed her husband had been at working for Tesla. Reportedly, von Ohain received the Full Self-Driving system as a perk. His widow explained how he would use the system almost every time he got behind the wheel: “It was jerky, but we were like, that comes with the territory of new technology. We knew the technology had to learn, and we were willing to be part of that.”

The Colorado State Patrol investigated but closed the case without blaming Tesla. It reported that no usable data was recovered.

For a company that markets its cars as computers on wheels, Tesla’s claim that it had no data available in all these cases is surprising. Musk has long described Tesla vehicles as part of a collective neural network – machines that continuously learn from one another. Think of the Borg aliens from the Star Trek franchise. Musk envisions his cars, like the Borg, as a collective – operating as a hive mind, each vehicle linked to a unified consciousness.

When a journalist asked him in October 2015 what made Tesla’s driver-assistance system different, he replied, “The whole Tesla fleet operates as a network. When one car learns something, they all learn it. That is beyond what other car companies are doing.” Every Tesla driver, he explained, becomes a kind of “expert trainer for how the autopilot should work”.

According to Musk, the eight cameras in every Tesla transmit more than 160bn video frames a day to the company’s servers. In its owner’s manual, Tesla states that its cars may collect even more: “analytics, road segment, diagnostic and vehicle usage data”, all sent to headquarters to improve product quality and features such as autopilot. The company claims it learns “from the experience of billions of miles that Tesla vehicles have driven”.

It is a powerful promise: a fleet of millions of cars, constantly feeding raw information into a gargantuan processing centre. Billions – trillions – of data points, all in service of one goal: making cars drive better and keeping drivers safe. At the start of this year, Musk got a chance to show the world what he meant.

On 1 January 2025, at 8.39am, a Tesla Cybertruck exploded outside the Trump International Hotel Las Vegas. The man behind the incident – US special forces veteran Matthew Livelsberger – had rented the vehicle, packed it with fireworks, gas canisters and grenades, and parked it in front of the building. Just before the explosion, he shot himself in the head with a .50 calibre Desert Eagle pistol. “This was not a terrorist attack, it was a wakeup call. Americans only pay attention to spectacles and violence,” Livelsberger wrote in a letter later found by authorities. “What better way to get my point across than a stunt with fireworks and explosives.”

The soldier miscalculated. Seven bystanders suffered minor injuries. The Cybertruck was destroyed, but not even the windows of the hotel shattered. Instead, with his final act, Livelsberger revealed something else entirely: just how far the arm of Tesla’s data machinery can reach. “The whole Tesla senior team is investigating this matter right now,” Musk wrote on X just hours after the blast. “Will post more information as soon as we learn anything. We’ve never seen anything like this.”

Later that day, Musk posted again. Tesla had already analysed all relevant data – and was ready to offer conclusions. “We have now confirmed that the explosion was caused by very large fireworks and/or a bomb carried in the bed of the rented Cybertruck and is unrelated to the vehicle itself,” he wrote. “All vehicle telemetry was positive at the time of the explosion.”

Suddenly, Musk wasn’t just a CEO; he was an investigator. He instructed Tesla technicians to remotely unlock the scorched vehicle. He handed over internal footage captured up to the moment of detonation. The Tesla CEO had turned a suicide attack into a showcase of his superior technology.

Yet there were critics even in the moment of glory. “It reveals the kind of sweeping surveillance going on,” warned David Choffnes, executive director of the Cybersecurity and Privacy Institute at Northeastern University in Boston, when contacted by a reporter. “When something bad happens, it’s helpful, but it’s a double-edged sword. Companies that collect this data can abuse it.”

There are other examples of what Tesla’s data collection makes possible. We found the case of David and Sheila Brown, who died in August 2020 when their Model 3 ran a red light at 114mph in Saratoga, California. Investigators managed to reconstruct every detail, thanks to Tesla’s vehicle data. It shows exactly when the Browns opened a door, unfastened a seatbelt, and how hard the driver pressed the accelerator – down to the millisecond, right up to the moment of impact. Over time, we found more cases, more detailed accident reports. The data definitely is there – until it isn’t.

In many crashes in which Teslas inexplicably veered off the road or hit stationary objects, investigators didn’t actually request data from the company. When we asked authorities why, there was often silence. Our impression was that many prosecutors and police officers weren’t even aware that asking was an option. In other cases, they acted only when pushed by victims’ families.

In the Meier case, Tesla told authorities, in a letter dated 25 June 2018, that the last complete set of vehicle data was transmitted nearly two weeks before the crash. The only data from the day of the accident was a “limited snapshot of vehicle parameters” – taken “approximately 50 minutes before the incident”. However, this snapshot “doesn’t show anything in relation to the incident”. As for the black box, Tesla warned that the storage modules were likely destroyed, given the condition of the burned-out vehicle. Data transmission after a crash is possible, the company said – but in this case, it didn’t happen. In the end, investigators couldn’t even determine whether driver-assist systems were active at the time of the crash.

The Schuster case played out similarly. Prosecutors in Stralsund, Germany, were baffled. The road where the crash happened is straight, the asphalt was dry and the weather at the time of the accident was clear. Anke Schuster kept urging the authorities to examine Tesla’s telemetry data.

When prosecutors did formally request the data recorded by Schuster’s car on the day of the crash, it took Tesla more than two weeks to respond – and when it did, the answer was both brief and bold. The company didn’t say there was no data. It said that there was “no relevant data”. The authorities’ reaction left us stunned. We expected prosecutors to push back – to tell Tesla that deciding what’s relevant is their job, not the company’s. But they didn’t. Instead, they closed the case.

The hackers from TU Berlin pointed us to a study by the Netherlands Forensic Institute, an independent division of the ministry of justice and security. In October 2021, the NFI published findings showing it had successfully accessed the onboard memories of all major Tesla models. The researchers compared their results with accident cases in which police had requested data from Tesla. Their conclusion was that while Tesla formally complied with those requests, it omitted large volumes of data that might have proved useful.

Tesla’s credibility took a further hit in a report released by the US National Highway Traffic Safety Administration in April 2024. The agency concluded that Tesla failed to adequately monitor whether drivers remain alert and ready to intervene while using its driver-assist systems. It reviewed 956 crashes, field data and customer communications, and pointed to “gaps in Tesla’s telematic data” that made it impossible to determine how often autopilot was active during crashes. If a vehicle’s antenna was damaged or it crashed in an area without network coverage, even serious accidents sometimes went unreported. Tesla’s internal statistics include only those crashes in which an airbag or other pyrotechnic system deployed – something that occurs in just 18% of police-reported cases. This means that the actual accident rate is significantly higher than Tesla discloses to customers and investors.
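A back-of-the-envelope sketch of what the 18% figure implies, under a loudly stated assumption: that airbag or pyrotechnic deployment occurs in the same 18% share of Tesla's police-reported crashes. The function name and the sample count are illustrative and do not come from the report.

```python
def implied_police_reported(counted_crashes: float, deployment_share: float = 0.18) -> float:
    """Estimate police-reported crashes from a count that only includes deployments.

    Assumption (not from the report): the 18% deployment share applies
    uniformly to the crashes being counted.
    """
    if not 0.0 < deployment_share <= 1.0:
        raise ValueError("deployment_share must be in (0, 1]")
    return counted_crashes / deployment_share


# Multiplier between counted crashes and police-reported crashes.
undercount_factor = implied_police_reported(1.0)
print(f"Each counted crash stands for about {undercount_factor:.1f} police-reported crashes")
```

On that assumption, statistics limited to deployment crashes would capture fewer than one in five police-reported crashes.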

There’s more. Two years prior, the NHTSA had flagged something strange – something suspicious. In a separate report, it documented 16 cases in which Tesla vehicles crashed into stationary emergency vehicles. In each, autopilot disengaged “less than one second before impact” – far too little time for the driver to react. Critics warn that this behaviour could allow Tesla to argue in court that autopilot was not active at the moment of impact, potentially dodging responsibility.

The YouTuber Mark Rober, a former engineer at Nasa, replicated this behaviour in an experiment on 15 March 2025. He simulated a range of hazardous situations, in which the Model Y performed significantly worse than a competing vehicle. The Tesla repeatedly ran over a crash-test dummy without braking. The video went viral, amassing more than 14m views within a few days.

The real surprise came after the experiment. Fred Lambert, who writes for the blog Electrek, pointed out the same autopilot disengagement that the NHTSA had documented. “Autopilot appears to automatically disengage a fraction of a second before the impact as the crash becomes inevitable,” Lambert noted.

And so the doubts about Tesla’s integrity pile up. In the Tesla Files, we found emails and reports from a UK-based engineer who led Tesla’s Safety Incident Investigation programme, overseeing the company’s most sensitive crash cases. His internal memos reveal that Tesla deliberately limited documentation of particular issues to avoid the risk of this information being requested under subpoena. Although he pushed for clearer protocols and better internal processes, US leadership resisted – explicitly driven by fears of legal exposure.

We contacted Tesla multiple times with questions about the company’s data practices. We asked about the Meier and Schuster cases – and what it means when fatal crashes are marked “resolved” in Tesla’s internal system. We asked the company to respond to criticism from the US traffic authority and to the findings of Dutch forensic investigators. We also asked why Tesla doesn’t simply publish crash data, as Musk once promised to do, and whether the company considers it appropriate to withhold information from potential US court orders. Tesla has not responded to any of our questions.

Elon Musk boasts about the vast amount of data his cars generate – data that, he claims, will not only improve Tesla’s entire fleet but also revolutionise road traffic. But, as we have witnessed again and again in the most critical of cases, Tesla refuses to share it.

Tesla’s handling of crash data affects even those who never wanted anything to do with the company. Every road user trusts the car in front, behind or beside them not to be a threat. Does that trust still stand when the car is driving itself?

Internally, we called our investigation into Tesla’s crash data Black Box. At first, because it dealt with the physical data units built into the vehicles – so-called black boxes. But the devices Tesla installs hardly deserve the name. Unlike the flight recorders used in aviation, they’re not fireproof – and in many of the cases we examined, they proved useless.

Over time, we came to see that the name held a second meaning. A black box, in common parlance, is something closed to the outside. Something opaque. Unknowable. And while we’ve gained some insight into Tesla as a company, its handling of crash data remains just that: a black box. Only Tesla knows how Elon Musk’s vehicles truly work. Yet today, more than 5m of them share our roads.

Some names have been changed.

🔴 This is an edited extract from The Tesla Files by Sönke Iwersen and Michael Verfürden

#just for books#Sönke Iwersen#Michael Verfürden#The Tesla Files#Self-driving cars#Elon Musk#Road safety#extracts#Tesla#book review

12 notes

Text

"iSeeCars’ analysis calculated a rate based on the total number of miles driven, which was estimated from the company’s car data from over 8 million vehicles on the road. While the total rate of fatal accidents per billion miles driven by all vehicles was 2.8, Tesla vehicles overall had a rate of 5.6. Tesla’s Model Y SUV had a fatal accident rate of 10.6, more than double the average for SUVs, which was 4.8"

Not just because of the Tesla lol factor: I'd also send this to the people who buy an SUV because "safety is important to them".
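The comparison in the quoted figures can be checked with a few lines of arithmetic. This is a minimal sketch: the four rates are taken from the quote, while the function names and the sample inputs are illustrative assumptions, not part of the iSeeCars analysis.

```python
# Fatal accident rates per billion vehicle miles, as quoted above.
RATES = {
    "all_vehicles": 2.8,
    "tesla_overall": 5.6,
    "suv_average": 4.8,
    "tesla_model_y": 10.6,
}


def fatal_rate_per_billion_miles(fatal_crashes: float, miles_driven: float) -> float:
    """Fatal crashes divided by miles driven, scaled to one billion miles."""
    if miles_driven <= 0:
        raise ValueError("miles_driven must be positive")
    return fatal_crashes / miles_driven * 1e9


def relative_risk(rate: float, baseline: float) -> float:
    """How many times higher a rate is than its baseline."""
    return rate / baseline


tesla_vs_all = relative_risk(RATES["tesla_overall"], RATES["all_vehicles"])
model_y_vs_suv = relative_risk(RATES["tesla_model_y"], RATES["suv_average"])
print(f"Tesla vs all vehicles: {tesla_vs_all:.2f}x")
print(f"Model Y vs average SUV: {model_y_vs_suv:.2f}x")
```

Both ratios come out at roughly two, which matches the "more than double" wording in the quote.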

19 notes

Text

Apparently when this image and this story are widely distributed, Tesla stock goes down. You know what to do.

Tesla Cybertruck erupted into flames after crashing into a fire hydrant outside a Bass Pro Shop in Harlingen, Texas [x]

"they had extinguished the flames engulfing the Cybertruck. However, the fire reignited after they had stopped the water flow onto the battery, highlighting a challenging concern associated with electric vehicle fires."

"[in a different incident] the blaze's intensity was so severe that it obliterated the vehicle's VIN and left the driver unidentifiable"

"burns at extremely high temperatures, sometimes reaching 2,300 to 5,000 degrees Fahrenheit, taking hours to extinguish."

" Firefighters have had to adapt their tactics to fight these fires, using full personal protective equipment due to the toxic fumes."

"Tesla vehicles require up to 30,000 to 40,000 gallons of water to put out – approximately 40 times the amount needed for a combustion engine car"

Tesla Cybertruck catches fire after crashing into a fire hydrant

The incident highlights the challenges in putting out EV fires

By Skye Jacobs, August 31, 2024

Bottom line: Statistical data shows that electric vehicle fires occur at a similar frequency to those in vehicles with internal combustion engines, but this offers little comfort to firefighters. These fires are notoriously difficult to extinguish and pose increased dangers to first responders. At least two fires have resulted from Cybertruck crashes, raising concerns about the safety of high-voltage lithium-ion batteries.

Earlier this week, a Tesla Cybertruck erupted into flames after crashing into a fire hydrant outside a Bass Pro Shop in Harlingen, Texas. The collision resulted in a deluge of water soaking the vehicle's battery, which then ignited, according to Assistant Fire Chief Ruben Balboa of the Harlingen Fire Department. First responders arrived at the scene and believed they had extinguished the flames engulfing the Cybertruck. However, the fire reignited after they had stopped the water flow onto the battery, highlighting a challenging concern associated with electric vehicle fires.

This incident is the second fire in Texas involving a Tesla Cybertruck. The first happened after an owner drove into a ditch, and it was the first fatal crash involving the model. In that case, the blaze's intensity was so severe that it obliterated the vehicle's VIN and left the driver unidentifiable.

The Harlingen incident underscores the difficulties these fires pose to first responders attempting to extinguish blazing batteries. Electric vehicle batteries can undergo a process known as thermal runaway, where a failure in one cell generates enough heat and gas to cause a chain reaction in adjacent cells.

The resulting fire burns at extremely high temperatures, sometimes reaching 2,300 to 5,000 degrees Fahrenheit, taking hours to extinguish. Firefighters have had to adapt their tactics to fight these fires, using full personal protective equipment due to the toxic fumes. New solutions, such as EV fire-specific fire blankets, are also being developed to address these challenges.

Additionally, first responders have found that EV fires demand significantly more water to extinguish. In 2021, Austin Fire Department Division Chief Thayer Smith told Futurism that Tesla vehicles require up to 30,000 to 40,000 gallons of water to put out – approximately 40 times the amount needed for a combustion engine car.

Ironically, Tesla posted a detailed rescue sheet for its Cybertruck the week before the Harlingen fire. Tesla designed the guide to assist first responders by informing them where the vehicle's low and high-voltage power cables terminate.

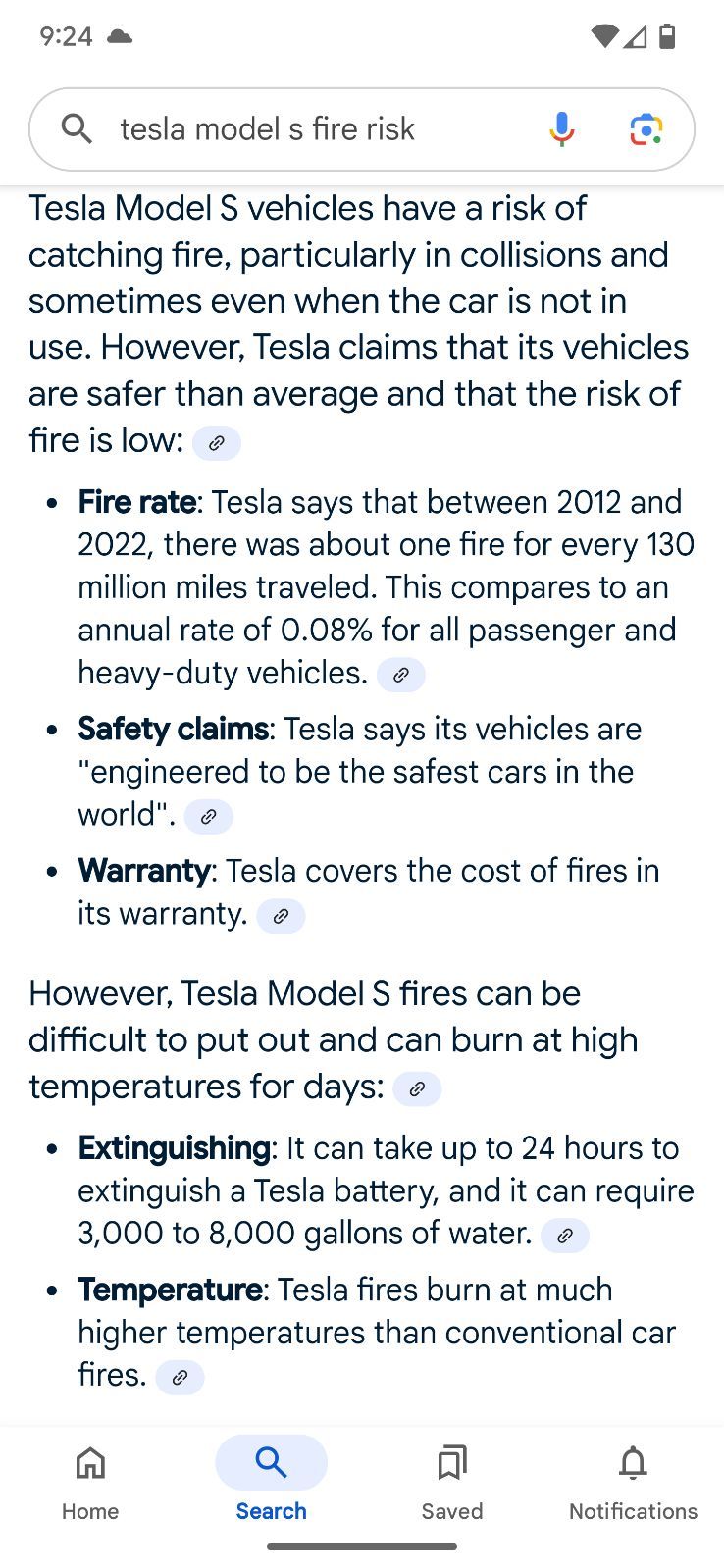

While such incidents tend to make headlines, it's important to note that electric vehicles generally do not catch fire more frequently than internal combustion engine vehicles. Tesla's global data indicates that, on average, a Tesla vehicle fire occurs once every 130 million miles traveled, significantly less frequent than the average vehicle fire rate of one per 18 million miles traveled in the US.
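To put the two fire figures on the same scale, here is a small sketch that converts "one fire per N miles" into fires per billion miles. The constants are the figures quoted above; the function name is illustrative. The two figures describe different populations (Tesla's global data versus a US average), so the ratio is indicative only.

```python
MILES_PER_FIRE_TESLA = 130e6       # Tesla's global figure, as quoted
MILES_PER_FIRE_US_AVERAGE = 18e6   # US average vehicle figure, as quoted


def fires_per_billion_miles(miles_per_fire: float) -> float:
    """Convert 'one fire every N miles' into fires per billion miles."""
    if miles_per_fire <= 0:
        raise ValueError("miles_per_fire must be positive")
    return 1e9 / miles_per_fire


tesla_rate = fires_per_billion_miles(MILES_PER_FIRE_TESLA)
us_rate = fires_per_billion_miles(MILES_PER_FIRE_US_AVERAGE)
rate_ratio = us_rate / tesla_rate

print(f"Tesla: {tesla_rate:.1f} fires per billion miles")
print(f"US average: {us_rate:.1f} fires per billion miles")
print(f"US average rate is about {rate_ratio:.1f}x the Tesla rate")
```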

https://www.techspot.com/news/104515-tesla-cybertruck-ignites-after-crashing-fire-hydrant.html

16 notes

Text

Trump unhinged

Trump hit with major lawsuit over Musk data breach

MeidasTouch host Ben Meiselas reports on a major lawsuit filed against the Trump administration for allowing Elon Musk to gain access to the Treasury Department payment systems.

#Youtube#Trump#Trump unhinged#Donald Trump#Musk#Elon#Elon Musk#MeidasTouch#Ben Meiselas#major lawsuit against Trump administration#Musk data breach#access to Treasury Department payment systems

12 notes

Text

PSA: Don’t Buy Teslas

“She told NBC6 that her husband would be still alive if the Model S door handles simply opened the car. That did not happen in Jutha’s Model Y, because its doors work with electronic actuators. If an electrical failure occurs, they demand a mechanical manual release.”

“Tesla has transitioned to an electromechanical door latch system on its vehicles, which means the doors are not physically connected to the door opening handles. Instead, these are electrical switches that trigger the electric door release system to open the door. Because there is no physical connection between the door handles and the door latching mechanism, it doesn’t work when the 12-volt electrical system is down for some reason.

This usually happens in the case of a crash. At least two car crashes ended with fatalities because people got trapped inside a Tesla.”

“‘Uggh, it's a Tesla. We can't get in these cars,’ one firefighter reportedly said. Despite the difficulty, they acted swiftly, using an ax to smash a window and rescue the child.”

“The Hyundai Venue compact SUV topped iSeeCars’ review of National Highway Traffic Safety Administration Fatality Analysis Reporting System data for most dangerous cars, while Tesla topped its list of most dangerous car brands.”

“These fires burn at much higher temperatures and require a lot more water to fight than conventional car fires. There also isn’t an established consensus on the best firefighting strategies for EVs, experts told Vox.”

Seriously, no matter what your political leanings are, DON’T BUY TESLAS. The fact that no one is widely discussing such a blatant safety risk, that people can’t reliably get out of their cars after a crash, is HORRIBLE…

If you KNOW someone who owns a Tesla, make sure they are AWARE of this flaw in certain vehicles and that they should consider purchasing a window breaker to be safe.

#If you hate Musk…. Please feel free to reblog…#Tesla#tesla cars#Elon Musk#fraud#Cars#car safety#automotive#electric vehicles#electric cars#psa#please share#please reblog#please boost#please spread

12 notes

Text

Tough times for Teslas.

March 24, 2025

Tesla, purported business "genius" Elon Musk's electric car company — a major source of his wealth, by the way — is undergoing a global meltdown. In the US, its January sales were down about 11%, according to data from the S&P Global analytics group. Worldwide it's even worse. In Germany, EV sales are up 30% over last year, but Tesla sales are down more than 70%! And according to Reuters, Tesla recorded a 50% drop in sales in Portugal and 45% in France between January and February. Sweden, Norway and Australia also report similar numbers.

Meanwhile, the company's stock price has plunged nearly 50% since its mid-December peak — with four top officers at the company selling off over $100 million in shares since early February. Musk himself has lost nearly $200 billion.

Much of the company's woes are due, of course, to Musk's personal unpopularity as Donald Trump's hatchet man in the ongoing destruction of the federal government. Which is causing enraged Americans to protest at Tesla dealerships and vandalize the cars themselves. But a contributing factor may be that Teslas are simply not very good cars.

For a start, they have a reputation for catching fire, often following a crash but also occasionally while just charging. Other drawbacks include suspension issues, battery degradation, door handle malfunctions, defective paint and faulty heat pumps. Plus, its self-driving Autopilot software has caused 736 crashes and 17 deaths since 2019. In fact, a study on data from 2018 to 2022 found Tesla had the highest fatal accident rate of any automaker.

But the company's recently introduced, ugly stainless steel-paneled (and rust-prone) Cybertruck is even more problematic. Since coming out last year, it has seen eight separate recalls to fix not just software but actual build problems, including an accelerator pedal that sticks, failing windshield wipers and plastic trim flying off.

Last month, a report found that, in just one year, the Cybertruck had a higher rate of fatal fire incidents than the infamous Ford Pinto. And only last week Tesla announced it was recalling all Cybertrucks manufactured to date (46,000 of them) because a panel could come unglued, which could be potentially dangerous if it happens while the vehicle is being driven.

Given their exorbitant price ($80,000 for the Cybertruck), poor design, hefty maintenance costs, low reliability and high insurance rates, it's not surprising sales of Teslas are cratering and used car lots are overflowing with discarded ones. So hurry on down to Honest Don's White House Tesla Sales, and you might get yourself a pretty sweet deal.

5 notes

Text

Elon Musk CRASHES His Own Company As Cybertruck Disaster Skyrockets

Elon Musk does not want you to know about this devastating Tesla data. John Iadarola breaks it down on The Damage Report. Leave a comment with your thoughts below!

4 notes

Text

xAI & X Merger Defuses Musk's Tesla Share Liquidation Risk

Elon Musk secured a multibillion-dollar margin loan using Tesla stock as collateral to finance his acquisition of Twitter (now rebranded as X). In recent months, Tesla’s share price has been cut in half due to a confluence of factors—slowing EV demand amid high interest rates, shifting electric vehicle policies under the Trump administration, market volatility driven by trade tensions, and pressure from a coordinated NGO-driven color revolution known as “Tesla Takedown,” aimed at crashing the stock to trigger loan repayment obligations tied to Musk’s pledged equity.

In short, volatility in Tesla shares left Musk heavily exposed to potential loan repayment thresholds being triggered - which was set to occur at or below $114 according to reports - until now.

On Friday evening, Musk announced the merger of X with his AI startup, xAI, in an all-stock transaction that strengthens his financial position, protects Tesla shareholders, and renders the Tesla Takedown color revolution largely ineffective in achieving its intended goal.

Musk outlined xAI's acquisition of X:

xAI has acquired X in an all-stock transaction. The combination values xAI at $80 billion and X at $33 billion ($45B less $12B debt).

Since its founding two years ago, xAI has rapidly become one of the leading AI labs in the world, building models and data centers at unprecedented speed and scale.

X is the digital town square where more than 600M active users go to find the real-time source of ground truth and, in the last two years, has been transformed into one of the most efficient companies in the world, positioning it to deliver scalable future growth.

xAI and X's futures are intertwined. Today, we officially take the step to combine the data, models, compute, distribution and talent. This combination will unlock immense potential by blending xAI's advanced AI capability and expertise with X's massive reach. The combined company will deliver smarter, more meaningful experiences to billions of people while staying true to our core mission of seeking truth and advancing knowledge. This will allow us to build a platform that doesn't just reflect the world but actively accelerates human progress.

I would like to recognize the hardcore dedication of everyone at xAI and X that has brought us to this point. This is just the beginning.
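The valuation figures in the announcement can be checked with simple arithmetic. This is a rough sketch: reading the $45B as an enterprise value and treating the combined value as a plain sum are assumptions made here for illustration, not statements from the announcement.

```python
XAI_VALUE_BN = 80.0            # xAI valuation, as stated
X_ENTERPRISE_VALUE_BN = 45.0   # the "$45B" figure (assumed to be enterprise value)
X_DEBT_BN = 12.0               # the "$12B debt" figure


def equity_value(enterprise_value_bn: float, debt_bn: float) -> float:
    """Value net of debt, in billions of dollars."""
    return enterprise_value_bn - debt_bn


x_equity_bn = equity_value(X_ENTERPRISE_VALUE_BN, X_DEBT_BN)
# Assumption: the combined value is the simple sum of the two stated values.
combined_bn = XAI_VALUE_BN + x_equity_bn
x_share = x_equity_bn / combined_bn

print(f"X net of debt: ${x_equity_bn:.0f}B")
print(f"Combined (simple sum): ${combined_bn:.0f}B")
print(f"X share of combined value: {x_share:.1%}")
```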

3 notes
