#Turing Test

Explore tagged Tumblr posts

Text

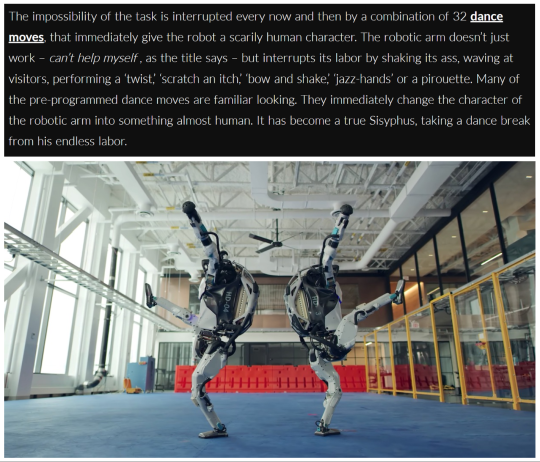

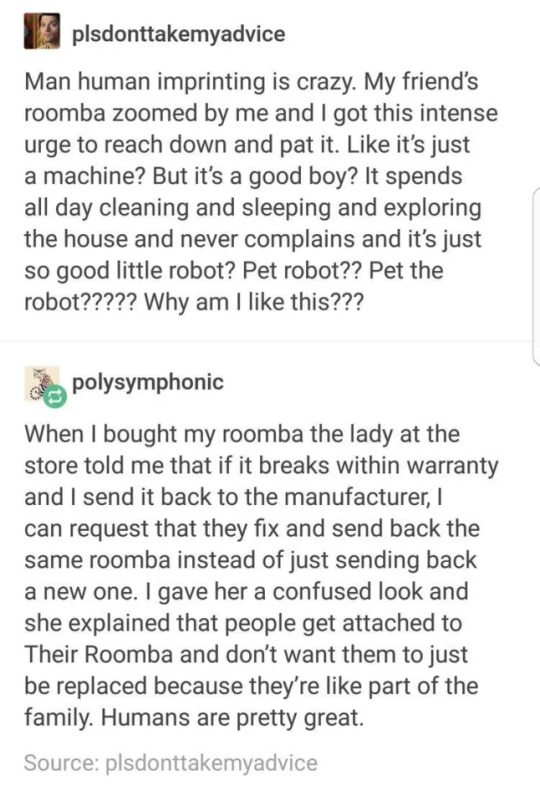

machines are human, too.

porter robinson, sad machine || sun yuan and peng yu, can't help myself || boston dynamics || the washington post || new york post || boston dynamics || tumblr || philip k dick, do androids dream of electric sheep? || plainsight || wikipedia, turing test || kurt vonnegut, breakfast of champions

#web weaving#my weavings#on life#on humanity#the human condition#on robots#robots#sad machine#porter robinson#sun yuan#peng yu#can't help myself#boston dynamics#phillip k dick#do androids dream of electric sheep#plainsight#turing test#alan turing#kurt vonnegut#breakfast of champions

410 notes


Text

Ex Machina (2014)

#2014#film#movie#science fiction#Ex Machina#Alex Garland#Alicia Vikander#Ava#Oscar Isaac#Nathan#Domhnall Gleeson#Caleb#Sonoya Mizuno#Kyoko#Turing Test#cyborg

91 notes


Photo

How the Plot of 'Ex Machina' Was Actually a Reverse AI Turing Test For Humans

26 notes


Text

#I did Lil' Hal so I had to do him#Hal 9000#hal space odyssey#hal 2001#2001 a space odyssey#space odyssey#aso#2001 aso#turing test#poll#polls#poll blog#tumblr polls#your fave#your fave blog

8 notes


Text

they should be called "Turing Test"

#ive never drawn kiibo before why did i decide the first time would be at a weird angle aaaahhhhhh#but it made me feel better so worth it i suppose. nowhere to go but up from here#danganronpa#korekiyo shinguji#drv3 killing harmony#drv3#present | art#danganronpa drv3#kiibo idabashi#kiibo#k1 b0#keebo#korekiibo#turing test#ndrv3 killing harmony#danganronpa ndrv3#ndrv3

61 notes


Text

Hit Turing right in the test-ees

Turing Test [Explained]

Transcript Under the Cut

[Cueball typing at a computer on a desk with another computer on the other side of a divider.] Caption: Turing test extra credit: Convince the examiner that he's a computer. Cueball: You know, you make some really good points. Cueball: I'm... not even sure who I am anymore.

71 notes


Text

i suppose i have always been here / drinking the same water / falling from the sky then floating / back up & down again / i suppose i am something like a salmon / climbing up the river / to let myself fall away in soft / red spheres / & then rotting

Franny Choi, Turing Test

#Franny Choi#Turing Test#Soft Science#presence#water#salmon#rotting#girl rotting#migration#poetry#Asian American literature#Asian American and Pacific Islander Heritage Month#AAPI Heritage Month#Asian Heritage Month#quotes#quotes blog#literary quotes#literature quotes#literature#book quotes#books#words#text

10 notes


Text

Damn Turing was a fucking savage

#like damn dude “your argument is bad and you should feel bad so i guess you'll need comforting about that”#computing machinery and Intelligence#alan turing#turing test#turing machine

16 notes


Text

The AI Art Turing Test Is Meaningless

Last year I tried the "AI art Turing Test" (here's a better link if you want to take it yourself), and I did pretty well in it. I could identify many pictures as immediately AI-generated. Multiple images had missing or additional fingers. Some had shadows in them but nothing to cast them. Some I recognised as real pictures, so I could definitely rule them out. One picture had a particular human touch put in by the painter as a way to "bump the lamp", what the kids today would call a "flex", so I could rule it out as AI-generated. Multiple images had weird choices of "materials", where a wooden object blended into metal, or cloth blended into flesh. Some had details that looked like a Penrose Triangle or Shepard's Elephant: Locally the features looked normal, but they didn't fit together. Sometimes there was a very obvious asymmetry that a human artist would have caught.

I could rule out some more pictures as definitely not AI-generated, because there was just no way to prompt for this kind of picture, and some I could rule out because there were features in the middle, and off to the sides, in a way that would have been difficult to prompt for. Other pictures just felt off in a way I can't put into words. Some were obviously rendered, and some looked like watercolours, but with a chromatogram-like effect that I don't know how to achieve with watercolours. It could totally be done by mixing different types of paint, but the effect looked suspiciously like the receptive fields of neurons in the visual cortex.

And then I thought "Would an AI art guy prompt for this image? Would he select this output and post it?" Immediately, I could rule out several more images as human-generated. I could already get far enough with "What prompt would this be based on?", but "Would this be the kind of thing a modern AI art guy would prompt for?" combined with "Would a modern AI art guy use that prompt to get a picture?" was really effective. Anything with a simple prompt like "Virgin Mary" or "Anime Girl on Dragon" was more likely to be AI art, and anything that had multiple points of interest and multiple little details that would be hard to describe succinctly was less likely to be AI art.

Finally, there were some pictures that looked "like a Monet", or perhaps like something by Berthe Morisot, Auguste Renoir, Louis Gurlitt, William Turner, John Constable, except... not. One time I thought a picture looked like a view William Turner would have painted, but in a different style. Were these pictures by disciples of famous painters? Lesser known pictures? Were these prompted "in the style of Monet" or "in an impressionistic style"? There are many possibilities. An impressionistic-looking painting may have been painted by a lesser known impressionist, by somebody who studied under a famous impressionist, by an early to mid-20th century painter, or very recently. What if that's a Beltracchi? And then there was this one Miró that contained bits of the symbol language Miró had used. That had to be legit.

When I was unsure, I tried again to imagine what the prompt that could have produced a painting would have looked like. On several pictures that looked like digital painting (think Krita) or renders (think Blender/POV-Ray), I erred on the side of human-generated. Nobody prompts for "Blender".

I spent a lot of time on one particular picture. I correctly identified it as AI-generated, based on my immediate gut feeling, but I psyched myself out a bit. The shadows were off. There was nothing in the picture to cast these shadows. But what if the picture had been painted based on a real place, and there were tall trees just out of the frame? Well, in that case, it would look awkward. Why not frame it differently? What if the trees that cast the shadows were cropped out? In the end, I decided to go with generated. Make no mistake though: You could easily imagine a computer disregarding anything outside of the picture, and a human painting a real scene on a real location at a particular time of day, a scene that just happens to have a tree right outside of the picture frame. Would a painter include the shadows? A photographer would have no choice here.

This left several pictures that might as well have been generated, or might as well have been human-made, and several where there was no obvious problem, but they felt off. I suspected that one more of the landscapes was AI-generated, and I had incorrectly marked it as human, purely metagaming based on the structure of the survey. I was right, but I couldn't figure out which one.

In the end, I got most of the pictures right, and I was well-calibrated. I got two thirds of those where I thought it might go either way but I leaned slightly, and around 90% of those where I was reasonably sure. At least I am perfectly calibrated.

And then I asked myself: What is this supposed to prove? Is it fair to call it a Turing Test?

I can see when something is AI-generated easily enough, but not always. I can see where an AI prompter has different priorities from a modern painter, and where an AI prompter trying to re-create an older style has different priorities. This "Turing Test" is pitting the old masters against modern imitators, but it also pits bad contemporary art against bad imitations of bad contemporary art. It's not a test where you let a human paint something and let an AI paint something and compare.

As it stands now, the results of the AI art Turing tests do not tell us anything, and the test is not that difficult to pass. But what if it were? What could that tell us?

Just imagine that were the case: What's the difference between a blurry .jpg of a Rothko and a computer-generated blurry .jpg of a Rothko?

What if an AI prompter tells the AI to generate the picture so it looks aged, with faded bits and a cracked canvas? What if an AI prompter asks for a picture in the style of Monet? What if a prompter with medieval religious sensibilities asks for illustrations of the life of St. Francis of Assisi? You can easily psych yourself out taking the test, but you can also imagine a version of the test that is actually difficult.

If you control for all of these, maybe all that's left will be that eerie AI-generated feeling that I couldn't quite shake off, and the tendency of image synthesis systems to focus on the centre of the picture and to make even landscapes look like portraits. Maybe we can fix even that if we don't prompt with "trending on artstation" but with "Hieronymus Bosch", and then we will have a fair test. Or would it be a fair test if we pit a human against an AI? What would happen if you pitted me, armed with watercolours, charcoal, crayons, or acrylic paint, against Midjourney?

Or maybe it's a fool's errand, given that some people who took the test couldn't even notice the extra fingers. You can pass the political Turing test if you just pretend to be an un-informed voter, and AI art is probably already better at illustrating cover images for podcast episodes than an un-informed voter. And then it can generate the whole podcast.

9 notes


Text

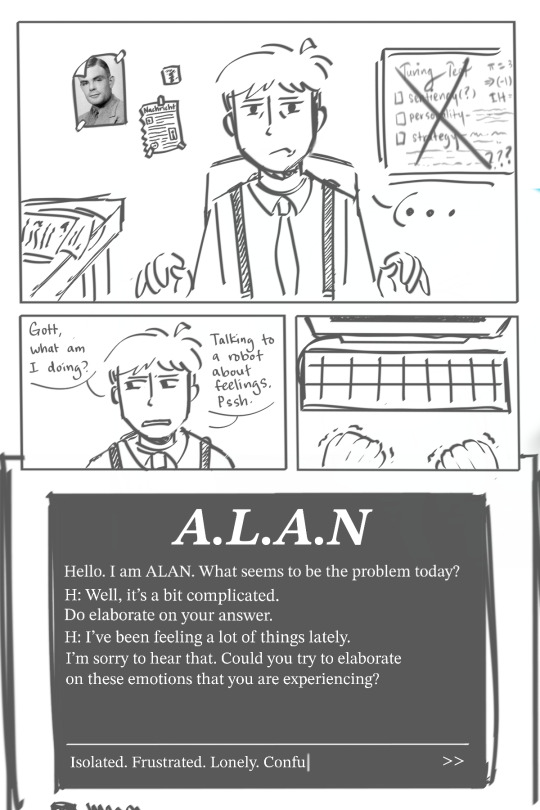

A.L.A.N ; Artificial Linguistic Answering Network

Thanks to my dear sister, @get-shortcaked (Blithe) for helping me with the layout.

Turing Test debacle + Smidge of Hermann angst woahh

In the novelization of Pacific Rim, there is a section with the following.

" Gottlieb was soldering together a robot can I build an intelligence that will pass a Turing test and if I could of course I can I must never say anything about it until it is done or Father will "

This suggests that young Hermann had planned to create and build a robot/artificial intelligence that could potentially pass the Turing Test.

The Turing test is, as the Oxford Languages site explains, "a test for intelligence in a computer, requiring that a human being should be unable to distinguish the machine from another human being by using the replies to questions put to both."

And so me and Blithe went down the RABIT hole (pun intended) and we came up with the idea that Hermann did actually attempt to crack the Turing Test (around the early 2000s), but failed. He later kept the model for personal use as some sort of friend/adviser. However, he quickly dropped it as he came to feel that the mere concept of talking to a robot about his woes was embarrassing.

A.L.A.N is a model that takes heavy inspiration from E.L.I.Z.A, originally programmed in 1966 and the first 'artificial intelligence' to attempt the Turing Test. Like E.L.I.Z.A, A.L.A.N is programmed to have the personality of a psychotherapist.

Also, A.L.A.N, Alan Turing. The name was Blithe's idea.

#ramblings#whoop whoop#pacific rim#pacific rim fanart#comic?#hermann gottlieb#turing test#alan turing#hermann had a boy crush on alan turing when he was younger canon

62 notes


Text

- Is love real? - I don't understand the question.

33 notes


Text

Ex Machina (2014)

#2014#gif#film#movie#science fiction#Ex Machina#Alex Garland#Alicia Vikander#Ava#Oscar Isaac#Nathan#Domhnall Gleeson#Caleb#Sonoya Mizuno#Kyoko#Turing Test#cyborg

15 notes


Text

"... Honestly, I feel like part of what's made Generative AI content and chatbots look so "convincing" to certain types of people, is that the past decade of low effort content outsourced to underpaid overseas contractors has lowered our standards so much as to what acceptable content and output should look like. It's like they realized the easiest way to pass the Turing Test wasn't to make a better robot, it was to lower the standards for human communication down to where the robot was already operating... "

12 notes


Text

#lil hal#homestuck#hal homestuck#hal hs#hs#lil hal homestuck#turing test#poll#polls#poll blog#tumblr polls#your fave#your fave blog

7 notes


Text

The Turing Test is kinda funny because I'm seeing posts on my university discussion board that are probably chatgpt but I can't tell because I don't know why even chatgpt would spit out some of these answers. Congrats to chatgpt, you could probably pass as a human being because the people in my class are just so goddamn stupid

5 notes


Text

It's really true. The Turing Test is utterly ridiculous and nobody should ever take it seriously.

19 notes
