#and using generative ai to retouch some points


Text

Select, April 98 scan by pulpwiki

#jarvis cocker#steve mackey#nick banks#candida doyle#mark webber#pulp#pulp band#britpop#90s#yeah i try my best to photoshop this#and using generative ai to retouch some points

24 notes


Text

i do actually think adding a Tumblr content label for AI generated images would be useful for everyone, wouldn't fuck up anyone's day that actually wanted to post or look at ai pics, and I doubt the corpos that are paying tumblr for ai dataset feeding would care either. maybe it's just the verification process putting more stress on the already-failing content checking infrastructure that's the holdup. it's not like labeling something "mature" or "drugs" or something you can just look at and see whether it is or not, unless they made it a fully community-based vote they would be showing increasingly-realistic AI slop to moderators and asking them to determine whether they were AI or not to trigger the label and I don't think it's possible. we're already at a point where the average gen alpha doesn't know the difference between "Photoshop" and "AI" and uses the terms interchangeably. edit: and, I forgot to add but meant to, we are already at a point where grizzled old fake picture-spotters like myself cannot always 100% accurately determine if something is AI. the realism gap is going to close almost completely within a year for anyone using generative software that has current updates and a prompter that is careful about picking which output to post. the realism gap is already closed for careful prompters, anyone who gets lucky and doesn't get weird hands or text, and any image which is of subject matter niche enough to fly under most people's radars.

the label could be phrased to accommodate this, as in, "XXXX out of XXXX users (let's say enough reports trigger a voting mechanism, idk) believe this media is AI-generated, photoshopped, or otherwise tampered with" but who knows how helpful it would actually be.

they did some research a while back on the effect of knowing photos of women had been retouched in Photoshop on the audience's self-image. they found that contrary to expectations, Photoshop labels made the test subjects feel worse about their own self image than they did when shown Photoshopped images of models that did not have a disclaimer on them. I've been mulling that one over for a while now. and since this is the dipshit idiot moron website no this last anecdote is not me saying "labeling fake media is useless", I still think fake media should be labeled. it just raises questions about the assumed benefits of the labeling. "knowing a picture is fake" is not a benefit that needs to be tested in the case of, for example, fake historical photographs.

67 notes


Text

Do Not Buy: The Vampire Dies in No Time Volume 1 (Orange Inc.)

Tl;dr it's bad. Also do not support AI.

Some of you may not know it but The Vampire Dies in No Time actually has an "official English translation" that is being published on emaqi.com (access for North America only).

I'll start with the elephant in the room. Orange Inc., the company behind this "official translation," uses AI-translation technologies.

"To produce one localized volume of manga in another language requires several difficult, time-consuming, and complicated processes. What Orange is doing is using AI technology to try to streamline the more tedious tasks required for manga localization, so humans can spend more time focusing on what they do best." (link)

They claim the translation they're providing is not AI-generated and is done by their translators, but let's look at these statements.

On the translation side, we sometimes use Japanese-to-English translation that is 100% done by human translators, with no AI assistance. We also have some cases where we start with an AI-generated translation, and human translators will use that as a starting point to review and make edits or adjustments, if needed. But what is really important for us to emphasize is that we NEVER publish anything that is only an AI-generated translation. <...> For example, the first basic translation could be made by AI, and the human localizer would work on retouching and fixing stuff. <...> (From the press release) To maintain the integrity of the original works and build trust with the readers, artists and publishers over time, no more than 10 percent of the content on emaqi will be translated using AI support at launch, as the team continues to evaluate and refine its approach for the future (link)

According to them, they use AI-assisted automated tools for things like erasing the original Japanese text from a manga page. As you'll see later, even if that claim is true, not only is the tool inconsistent in what it erases and what it leaves, but there are also moments that made me question whether the translation was really done by human hands.

First of all, nobody is credited for the translation.

Translated, Lettered and Edited by Orange Inc.

How can anyone be assured that whatever they're reading was not done by AI? Just believe someone's "plz trust me"?

Second of all, fine. Let me put on a clown wig and assume it was 100% done by human translators and not MTL.

It doesn't save the book much because it's still bad.

Disclaimer: this post covers only the first chapter and all the problems with it, because it's the only one that is openly accessible and can be read for free. Also because one post is not enough to cover all the problems I've found in the book.

Let's start with the contents page.

Call me old-fashioned or whatever, I really dislike the decision just to leave the chapters as 'chapters'.

The chapters in the series are referred to as 第x死 instead of the usual 第x話. This translates as Death X, because our titular main character happens to die in no time, what a shocker. By simply changing these 'deaths' to 'chapters' the translation already loses some charm of the original book.

As for the chapter titles...

(1) The Hunter Arrives, And He Gets Lost - no, that's not what the 空を飛ぶ part means. It is literal, because Ronald flies in the skies for just a moment because that's what happens in the chapter.

(4) Fukuma Attacks! - I'm convinced the title for this one is based on the fan translations of that chapter title, because it's not translated like that even in the anime. The word 襲撃 does mean 'to attack', except there's a small nuance: the word is usually used to indicate that the attack is unexpected. Again, that's what happens in the chapter.

(5) Shin-Yokohama Will Fall With Flowers - I will admit, this title is difficult to translate properly into English, which is where the machine failed. Bonnoki is using two different meanings of the word 散る: one applies to falling flowers, the other could be roughly translated as 'to die a noble death in battle'. The second meaning is what happens in the chapter, because Draluc, well, dies during his encounter with Zenranium. 花と散る is basically just a euphemism for someone dying. No idea why it's suddenly Shin-Yokohama that is dying in this title translation. A human with basic knowledge of Japanese grammar would not translate it like that, that's for sure.

(6) Can You Hit the Wall and Kill It? - as with the anime's translation (Can a Bang on the Wall Kill Him?), I have no idea why they're specifying the target and making it worse when we all know from the context that the one to be killed is Draluc, so it would be totally fine to translate it as just "Can You Kill by Hitting the Wall?" (This one is more of a nitpick, I admit it myself, but still)

(7) Grumbling Highway to Success - again, if you know the context, the chapter title makes no sense. Hinaichi doesn't win. Her hopes of getting a serious job (出世街道, path to success) turn into getting the lamest job (転落道, path to failure). Could be also translated as "The Highway to Promotion is the Path to Demotion" but that's

(8) Eros Leading to Metamorphosis - ゆえに ('therefore') is translated wrong. I'll let the omitted wordplay in the title with "love/eros" and "pervert/metamorphosis" slide because it's hard to show that in translation, and it doesn't really matter that much here anyway.

(9/10) Some Idiots Cause a Disturbance in the Hunter's Guild... (Again) - バカ騒ぎ is an adjective attached to the guild, not some idiots. The まだ part in the sequel indicated the guild is still fooling around.

And finally, Draluc's iconic 'sand' scream is now something gibberish. Great start.

Page 3

Someone saw him heading toward Draluc's castle...

Again, I'm slightly nitpicking, but for a reason. Draluc's castle is literally called just Draluc Castle. The poor woman is telling Ronald the name of the castle where her son was last seen, and then she adds that the castle belongs to the vampire with the same name.

Continuity error (真祖にして無敵). For some reason, the 無敵 part is always translated with some newly added words, which is not the case in the original. "Said to be invincible", "supposedly invincible".

Page 8

Font inconsistency. Despite using the same font in the original, Ronald shouts in two different fonts in the translation.

What you see straught ahead is the castle of Draluc...

The word that the tour guide uses here is not 城 (castle) but 屋敷 (residence, mansion).

Page 9

Miscellaneous. Some of the written text is not translated and is left as is, without any logic behind the decision. On this page, Draluc's buns with his initial D are not translated. Draluc's games, which reference very well-known ones, are also left untouched.

Page 10

I was picking out a QSQ software.

Where do I even start with this...

Most of the time the word ソフト in gaming spaces is not short for ソフトウェア (software program) but for ゲームソフト (console game). Sure, technically speaking a game is software, but I've never seen any casual gamer call it "software" even once, because imho the word software means something slightly different from a video game.

And here we have an elementary school kid calling it a software. When he was literally shown picking a game on the previous page. Dpmo.

I ended up staying late because I was playing until I completed the game.

He did not complete the game. That's part of the reason why he runs away and continues playing later on.

Author's notes (aka Bonnoki's additional comments) are usually introduced via a character saying something and the line being marked with the ※ reference mark. For some reason, this reference mark is erased in the translation.

Page 11

Don't think you can beat me in my own castle, brat!

No idea why they decided to add the word brat that wasn't in the bubble in the first place. We know already that line is addressed to the kid.

While technically it's a correct translation, Draluc refers to him as 小僧 in the chapter, which is more polite than Ronald's ガキ, usually translated here as "brat". Draluc has yet to go through his polite-to-rude speech change, so of course he refers to the scooter kid politely; the only time he uses "brat" is in a reply to Ronald's previous line, where Ronald refers to the kid as such. However, the translation fails to carry this nuance over into their speech. (No idea how it is in the dub, but at least the English subs do refer to him differently, with Draluc using "boy" and Ronald using "kid", and they were always consistent with this)

Miscellaneous. Due to the picture limit I can't post every case of the manga being inconsistent with Draluc's 小僧/Ronald's ガキ, so I'll just note them if there are any in the remaining screencaps. While I can turn a blind eye to Ronald's inconsistency and sort of excuse it as his PR persona slipping into his real persona, the Draluc moments literally have no excuse.

Bratconsistency x1

Page 13

The のに part is missing in the translation. Ronald is technically continuing his speech from the previous page, hence のに.

However, if anything happens to the client's kid...

Also... Bratconsistency x0.5 (Like I've mentioned before, I can at least (sort of) excuse Ronald's lines, but still.)

See? Your long-winded speech made us lost track of the brat.

Made us lose track.

Bratconsistency x1

Page 14

...little punk

Doesn't even count as Bratconsistency because it wasn't in the original lines. Ronald doesn't call out to him even once during this scene because he does it in the next one.

Page 15

This is a popularity business. Going viral for the wrong reasons is the scariest thing.

I rather prefer "online backlash" from the anime as the translation for the 炎上 because (imho) "going viral for the wrong reasons" is too vague and could mean anything.

All right! You go give that kid a Wii or something!

It's Vii.

Page 16

Miscellaneous: Ronald's book cover is not translated. Again, no idea what the logic is behind translating some things and leaving others untranslated.

Damn. That little brat...

That's not what he said.

Page 17 (+ bonus Page 22)

Become minced meat along with the kid, vampire hunter!

Again, that's not what he said. He just says they will become victims of said traps. The anime subs got it right.

Hey, there was some kind of beam-emitting device in there!

I have to admit, this was the moment I gave up and had to laugh out loud, for the completely wrong reason.

Ah yes, I can totally imagine a really pissed off Ronald, after almost getting himself killed, saying "beam-emitting device" in his politest voice ever.

At least on page 22 he says something more in character. Wait a second-

It's the thing that shoots beams from its eyes!!!

Eye. One eye. Also inconsistent with the name from page 17.

Page 18

Font inconsistency. When Draluc is being fried by lasers, his speech becomes pixelated. While the font changes slightly in the translation, it's nowhere close to the original line in terms of style.

(Ronald) C'mon, one look and it's obvious the place is full of traps. Don't mess with me. (Draluc) You went in even though you could tell there was a trap at first glance?

Draluc's reply makes no sense. Not to mention that's not what he said. "You could tell that at first glance, and yet you still went in?"

Page 19

Miscellaneous. Again, the poisonous gas button is translated in one frame and left untranslated in another.

Page 20

I mean, wouldn't it be harder to actually get fall traps that obvious?

???

???

You either choose "to actually fall for such obvious traps" or "to actually get caught in such obvious traps", not both at the same time.

Page 24

Miscellaneous. Again, Draluc is being fried by lasers and his speech is in pixels. No pixels for the translation.

I totally forgot about the poison gas!

Poisonous gas, you mean. Proofreading seems to be nonexistent.

Page 27

The gag of Draluc's sand sfx being reversed when revived is nonexistent in the translation.

Page 28

Gah, sunlight!!!

Font inconsistency. Draluc's "gah" should also be enlarged.

Draluc Castle

They finally got the Draluc Castle name right, too bad it's inconsistent with what was introduced before, huh.

***

And lastly, for anyone thinking it gets better later, have some moments from the extras. (No pics since I've reached the limit and I don't want to be hit by a random copyright)

Special Thanks Page. They translated the address but decided to leave out the part mentioning Bonnoki's editor and his assistants.

Character Notes. 兄弟 from Ronald's note is translated as "brothers". While typically it does mean exactly that, we know from context 3兄弟 means "three siblings" because we have Himari, Ronald's sister.

Extra Bonus Story. Oh boy. Firstly, Ronald-Donald joke doesn't land if you have a Ronaldo-Donald combo. (Another win for Ronald truthers) And if you don't know why their names are suddenly spelled as "Ro-na-lu-do" and "Do-ra-lu-ku" to make the joke work, don't worry, they just gave up on adapting the joke. Secondly, they also gave up on the Funamushi-Hinaichi joke adaptation and wrote "Funamushi (Ligia exotica)" and I wish I was kidding.

Uso/Fake Preview. Despite being a two-page spread, the text is placed as if it's two different pages. The fact it was even approved makes me frustrated. The grammar is also messed up at one point.

Back Cover. Again, another proof that this translation lacks proofreading.

The translation of the frame on the cover does not match the one in the actual book. ("That cocky brat… Mocking us with those fancy kickboard moves." (book) vs. "Why you little... Mocking me with those kickboard skills!" (back cover))

The description of Ronald and Fukuma's frame: "Clothes being torn into shreads by editors with his battle axe...?!" Again, zero understanding of the context and zero proofreading. Fukuma is one entity (so far...), where did multiple editors even come from?

Speaking of proofreading, the synopsis also (sort of) lies about the premise. "In order to find the missing children, the vampire hunter Ronaldo came to the castle of the supposedly invincible vampire and true ancestor, Draluc. <...>" One. Missing. Child.

Closing thoughts

Objectively speaking, I can't prove that this translation was done by AI, but I also can't prove that it was done by humans either.

The reason why I'm even making this post is because I'm frustrated and very disappointed. Some of Bonnoki's jokes might be either too niche (I swear I've learned more about the Dragon Quest just from him than my gaming circles) or too clever for the translation that a human hand will always be required, no matter what. If I don't have any guarantees that these jokes will not be butchered by some AI that missed the context or simply doesn't get the joke, then why should anyone bother paying for the lackluster content?

And again, the quality of the volume wouldn't change much even if no AI was used, because then it would mean none of the translators bothered to check if what they've translated before was consistent or matches with what's happening in the book.

And, to top it all, people are asked to pay $5 for this quality on the site.

Unfortunately (?) I don't have access to volumes 2-4, so I can't just check the chapters where AI would be the most obvious or simply check the later content, but I'm afraid it probably doesn't get much better. And if the description on the site matches the one from the back cover like with volume 1, then we have Ronaldo suddenly turning into Ronald three volumes later. Another peak of consistency. (If it's just a typo on the back cover and he's still Ronaldo in the series, it just shows the lack of care again)

I really wanted for The Vampire Dies in No Time to be picked up by some daring publishing company and be properly translated into English with all the nuances. There is a reason why the series is so beloved in Japan among the fans, so I hoped that its chaotic charm would find its way to the public outside Japan.

So far, all I feel right now is sadness. I'm disappointed with how the official English release is being handled right now.

Last thing I will say is this. Do not even dare blaming or insulting Bonnoki for this mess. We don't know the extent of how much he actually knows about it, considering he's been recovering from his injury and not even once acknowledged the release of the English translation.

(P.s. I might make some follow-up posts regarding the butchered translation of the first volume but since I don't live in North America I have no idea if publishing the excerpts from the rest of the volume is even legal and whether they could strike down my post, lol. Honestly, I wouldn't have minded just making a text post and listing all the things butchered, just to be safe, but the fake (or Uso) preview spread is just that bad that it needs to be seen as a whole. Also I've been making this post for ages and it's just one chapter, so I need a small break before I make another post regarding the book's quality check.)

#kyuuketsuki sugu shinu#the vampire dies in no time#tvdint#kyuushi#ronald#draluc#translation#hope the post doesn't flop though it's very likely to do so heh

22 notes


Text

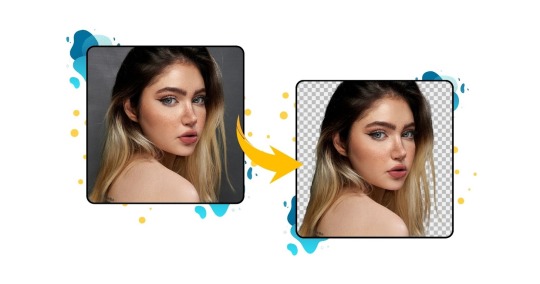

AI Headshot Tools Explained: How They Create Professional Business Photos

AI headshot generators are changing how professionals present themselves online. These tools use artificial intelligence to turn ordinary selfies into polished, studio-quality pictures - no photographer needed. Whether you're updating a LinkedIn profile or creating team bios for your website, the process is faster, more affordable, and remarkably accurate. But how do these systems actually work, and what makes them so effective for corporate use? Here's a closer look at the technology and why AI headshot generators for business professional branding are gaining momentum.

What Is an AI Headshot Generator?

An AI headshot generator is a digital tool powered by machine learning that transforms user-uploaded pictures into professional-quality headshots. These tools use advanced models - often built on diffusion technology or GANs (Generative Adversarial Networks) - to analyze facial features, lighting, and angles. After processing, they output dozens or even hundreds of high-resolution photos with varied backgrounds, outfits, and expressions. Some platforms are tailored specifically to business professional use, making sure the results look polished, confident, and ready for a LinkedIn profile or company bio.

The Technology Behind the Scenes

At the heart of every AI headshot generator is a trained neural network. These systems are fed thousands (sometimes millions) of professionally taken pictures to learn how lighting, angles, shadows, and even clothing styles affect the quality of a headshot. When you upload your photos - typically between 6 and 25 pictures - the AI analyzes your facial structure, detects key points like the eyes and jawline, and builds a personalized model of your face.

From there, the generator applies realistic enhancements: adjusting lighting, increasing resolution, correcting skin tones, and swapping plain backgrounds for professional-looking ones. Some tools also offer editing options like smile adjustment or clothing changes to suit company branding requirements. This makes AI headshot generators for business professional results both customized and highly polished.

The Key Reasons Why Professionals Are Turning to Artificial Intelligence Headshots

There are several reasons why professionals and businesses are embracing AI-generated headshots. First, cost and convenience: traditional photoshoots are expensive and time-consuming. AI tools offer a quick alternative without compromising much on quality. Within an hour or two, users can get a complete gallery of headshots in different styles.

Second, flexibility. Most platforms allow customization - whether it's the background, outfit, or pose - which helps align headshots with personal branding or company aesthetics. This is particularly handy for teams that need consistent profile photos across company websites and platforms. For freelancers, consultants, and remote workers, AI headshot generators for business professional images offer a competitive edge without the hassle of studio sessions.

What to Expect from the Process?

Using an AI headshot generator is typically straightforward. After choosing a platform, you'll be asked to upload several well-lit images of yourself. These need to vary in expression, angle, and background to give the AI enough information to learn your features. Once they are submitted, the platform processes your pictures - usually within half an hour to a couple of hours, depending on the service level.

You'll receive a set of headshots to pick from. Depending on the tool, you may be able to make edits, request retouches, or even change outfits digitally. The best platforms focus on delivering pictures that look natural, sharp, and suitable for professional use. With so many options now available, it's easier than ever to find AI headshot generators for business professional needs that match your goals and budget.

Conclusion:

AI headshot generators offer an efficient way to upgrade your professional image with minimal effort. With accurate face modeling, realistic edits, and fast turnaround times, they've become the go-to solution for many people seeking studio-quality headshots - without the studio.

0 notes

Text

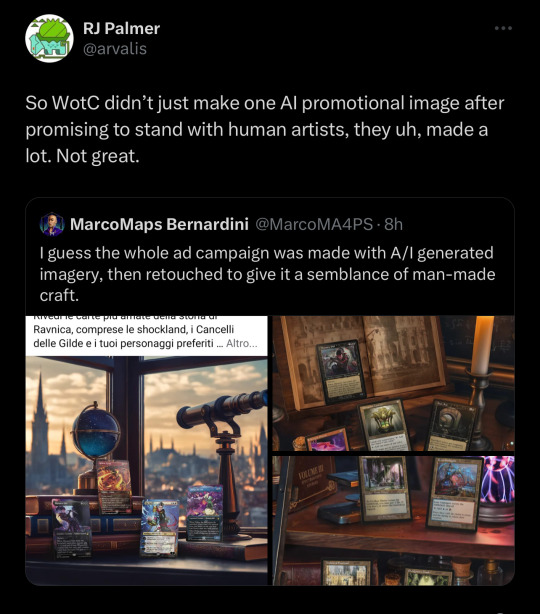

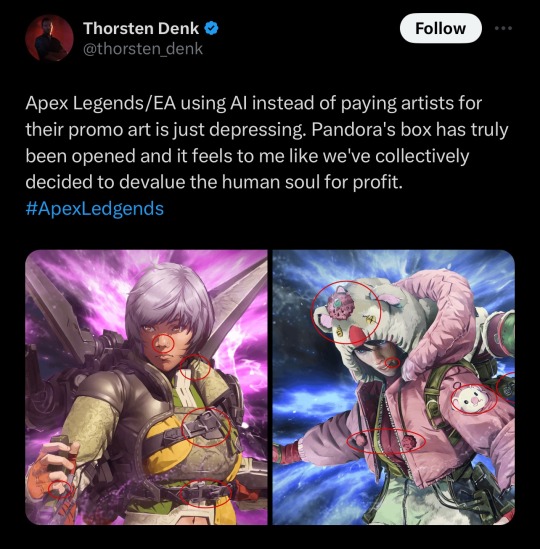

[Image descriptions:

1. Tweet by Hanzhong Wang @ww123td that says, ‘Great job @wacom for using AI generated image for your latest marketing campaign. For a company built on the visual arts industry this ad feels akin to shooting yourself in the foot. Doubly disheartening as a Chinese seeing the dragon used in this way.’ Attached to the Tweet is a Wacom ad that says, ‘A New Wacom For the New Year’ and shows an eastern style dragon posed next to smaller representations of various Wacom tablets, two of which have dragon drawings on them. The tip of a pen comes in from the right.

2. Tweet by Art-Eater @Richmond_Lee that says, ‘2024 is gonna be a nightmare when it comes to news and media. You can no longer trust anything that you see. This isnt just some novel fun tech. It is a scammer’s dream. It’s already being used to screw people over in real life. And everyone is embracing it because of FOMO.’ Attached is a headline that reads, ‘A father is warning others about a new AI “family emergency scam.”’ The snippet reads, ‘Philadelphia attorney Gary Schildhorn received a call from who he believed was his son, saying that he needed money to post bail following a car crash. Mr Schildhorn later found out that he nearly fell victim to scammers using AI to clone his son’s voice, reports Andrea Blanco.’

3. Tweet by RJ Palmer @arvalis that says, ‘So WotC didn’t just make one AI promotional image after promising to stand with human artists, they uh, made a lot. Not great.’ This quotes a Tweet by MarcoMaps Bernardini @MarcoMA4PS that says, ‘I guess the whole ad campaign was made with A/I generated imagery, then retouched to give it a semblance of man-made craft.’ Attached are screenshots of three art pieces. Two are close-ups of various Magic cards displayed on a shelf, and the third is a wider view showing a window with a city view in the background. Cut-off text on this says, ‘Ravnica, comprese le schockland, I Cancelli delle Gilde e i tuoi personaggi preferiti…’

4. Tweet by Thorsten Denk @thorsten_denk [verified] that says, ‘Apex Legends/EA using AI instead of paying artists for their promo art is just depressing. Pandora’s box has truly been opened and it feels to me like we’ve collectively decided to devalue the human soul for profit. #ApexLegends.’ Attached are two photos of Apex Legends art with red circles showing strange parts that give the art away as AI-generated.

5. and 6. Tweet thread by Magic: The Gathering @wizards_magic that reads: Well, we made a mistake earlier when we said that a marketing image we posted was not created using AI. Read on for more. (1/5) As you, our diligent community pointed out, it looks like some AI components that are now popping up in industry standard tools like Photoshop crept into our marketing creative, even if a human did the work to create the overall image. (2/5) While the art came from a vendor, it’s on us to make sure that we are living up to our promise to support the amazing human ingenuity that makes Magic great. (3/5) We already made clear that we require artists, writers, and creatives contributing to the Magic TCG to refrain from using AI generative tools to create final Magic products. (4/5) Now we’re evaluating how we work with vendors on creative beyond our products – like these marketing images – to make sure that we are living up to those values. (5/5).

End descriptions.]

we’re really out here living in an AI dystopian hellscape and this shit has just begun… the future is grim…

22K notes


Text

The Art of Digital Depth: An Expert Overview of Background Blur in Photoshop

1. Introduction: The Art of Digital Depth

Blurring a background isn't merely about obscuring visual information—it's about directing the eye, emphasizing your subject, and mimicking the nuanced behavior of a camera lens. In the realm of Photoshop, this technique has evolved into an art form of its own.


2. Why Background Blur Matters in Photography

Background blur, often loosely called "bokeh" (strictly, the aesthetic quality of the out-of-focus areas), contributes to image storytelling. It can isolate a subject in a chaotic frame or evoke emotion with softness and glow. Done well, it transforms an average shot into a professional visual narrative.

3. The Power of Photoshop for Blur Effects

Photoshop isn't just a retouching tool—it’s a simulation engine capable of replicating real optical phenomena. It offers a suite of tools that, when used wisely, can emulate the blur produced by the finest DSLR lenses.

4. Overview of Blur Levels in Photoshop

4.1 Time vs. Quality: Choosing the Right Method

Some methods are instant, others intricate. Knowing the trade-off between effort and realism helps select the best approach for your project.

4.2 From Casual Clicks to Pro-Level Precision

This guide spans the spectrum—from simplistic blurs to complex simulations involving depth maps and alpha channels, ensuring there's something for every level of user.

BEGINNER LEVEL

5. Gaussian Blur: A First Step into Digital Depth

5.1 Selecting the Subject with AI

Photoshop's Object Selection Tool or Select Subject simplifies initial isolation. It uses AI, processed on the device or in the cloud depending on your preferences, to analyze the image and identify likely subjects.

5.2 Inverting the Selection for Background Focus

Once the subject is selected, inverting the selection targets the background—perfect for applying effects without touching the focal element.

5.3 Applying Gaussian Blur: Pros and Pitfalls

Gaussian Blur is straightforward. Found under Filter > Blur > Gaussian Blur, it applies uniform softness across the whole selection. However, its simplicity comes at a cost.

5.4 Halo Effects and Ground Ghosting: Why They Occur

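Without edge refinement or content-aware techniques, halos appear around subjects, because the blur mixes subject pixels into the background near the subject's edge. The ground is also blurred uniformly, even right at the subject's feet where it should stay sharp, so the result looks unnatural, as if the subject were floating.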
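The halo can be reproduced outside Photoshop. The following is a minimal NumPy sketch, not Photoshop's implementation: it blurs a toy grayscale image, pastes the sharp subject back using a mask, and then inspects a background pixel next to the subject. All function and variable names are hypothetical.

```python
import numpy as np

def gaussian_kernel(sigma: float) -> np.ndarray:
    """Return a normalized 1-D Gaussian kernel covering about +/- 3 sigma."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return kernel / kernel.sum()

def gaussian_blur(image: np.ndarray, sigma: float) -> np.ndarray:
    """Blur a 2-D grayscale image with a separable Gaussian (edge-padded)."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    padded = np.pad(image.astype(float), radius, mode="edge")
    # Blur along rows, then along columns; a Gaussian is separable.
    rows = np.apply_along_axis(
        lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(
        lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)

def blur_background(image, subject_mask, sigma):
    """Blur the whole image, then restore sharp pixels where the mask is True."""
    blurred = gaussian_blur(image, sigma)
    return np.where(subject_mask, image, blurred)

# Toy scene: a bright square subject (1.0) on a black background (0.0).
image = np.zeros((21, 21))
subject_mask = np.zeros((21, 21), dtype=bool)
subject_mask[8:13, 8:13] = True
image[subject_mask] = 1.0

result = blur_background(image, subject_mask, sigma=2.0)
halo_value = result[10, 13]  # background pixel touching the subject's edge
far_value = result[0, 0]     # background pixel out of the kernel's reach
print(f"next to subject: {halo_value:.3f}, far away: {far_value:.3f}")
```

The background pixel beside the subject comes out brighter than the distant one, even though both were black in the input. That leaked brightness is the halo, which is why the later methods remove the subject from the background before blurring.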

INTERMEDIATE LEVEL – METHOD 1

6. Field Blur with Content-Aware Cleanup

6.1 Copying the Subject to a New Layer

Pressing Ctrl+J (Cmd+J on macOS) duplicates the selected subject, placing it on its own layer. This separation is key for more advanced manipulation.

6.2 Expanding the Selection: Avoiding Edge Bleed

Using Select > Modify > Expand, users grow the subject selection by a few pixels so that the fill in the next step also covers the subject's edge pixels, preventing awkward seams or the subject's colors bleeding into the blurred background.
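Conceptually, expanding a selection is a morphological dilation of the selection mask. The sketch below illustrates that idea in NumPy under the assumption of a simple four-neighbour growth per step. Photoshop's exact Expand algorithm is not specified here, and `expand_selection` is a hypothetical name.

```python
import numpy as np

def expand_selection(mask: np.ndarray, pixels: int) -> np.ndarray:
    """Grow a boolean selection by one 4-connected ring per step."""
    grown = mask.copy()
    for _ in range(pixels):
        padded = np.pad(grown, 1, mode="constant", constant_values=False)
        grown = (
            padded[1:-1, 1:-1]     # the pixel itself
            | padded[:-2, 1:-1]    # neighbour above
            | padded[2:, 1:-1]     # neighbour below
            | padded[1:-1, :-2]    # neighbour to the left
            | padded[1:-1, 2:]     # neighbour to the right
        )
    return grown

# A single selected pixel grows into a diamond after two steps.
mask = np.zeros((9, 9), dtype=bool)
mask[4, 4] = True
expanded = expand_selection(mask, 2)
print(expanded.astype(int))
```

Growing the mask this way means a subsequent fill also overwrites the thin ring of subject-colored pixels that a tight selection tends to leave behind.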

6.3 Filling the Background Using Content-Aware Fill

Edit > Fill, with Contents set to Content-Aware, replaces the subject’s former position with pixels synthesized from the surrounding background, allowing a cleaner base for blurring.

6.4 Using Field Blur Pins for Controlled Depth

Field Blur (Filter > Blur Gallery > Field Blur) introduces pins to manipulate focal transitions. Each pin carries its own blur amount, so you can blur more at one point and less at another, creating subtle gradients.

6.5 Adjusting Fall-Off Zones for Natural Look

By carefully spacing pins, you control how quickly blur intensity fades—simulating natural lens behavior.
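One way to picture how pin spacing shapes the fall-off is to interpolate a per-pixel blur amount from the pins. The sketch below uses inverse-distance weighting. This is an assumption chosen for illustration, not the interpolation Photoshop actually uses, and `blur_amount_map` is a hypothetical name.

```python
import numpy as np

def blur_amount_map(shape, pins, power=2.0):
    """Interpolate per-pixel blur amounts from (row, col, blur) pins.

    Inverse-distance weighting is an assumed stand-in for the real
    interpolation, used here only to show how pin spacing matters.
    """
    rows, cols = np.mgrid[0:shape[0], 0:shape[1]].astype(float)
    weighted_sum = np.zeros(shape)
    weight_total = np.zeros(shape)
    for pin_row, pin_col, blur in pins:
        distance = np.hypot(rows - pin_row, cols - pin_col)
        # Clamp the distance so a pixel exactly on a pin does not divide by zero.
        weight = 1.0 / np.maximum(distance, 1e-6) ** power
        weighted_sum += weight * blur
        weight_total += weight
    return weighted_sum / weight_total

# Two pins on one scanline: sharp (0) on the left, blur amount 20 on the right.
pins = [(0, 0, 0.0), (0, 10, 20.0)]
amounts = blur_amount_map((1, 11), pins)
print(np.round(amounts[0], 2))
```

Moving the pins closer together compresses the same change in blur into fewer pixels, which reads as a faster fall-off. Spreading them apart gives a gentler gradient.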

INTERMEDIATE LEVEL – METHOD 2

7. Camera Raw Lens Blur: AI-Powered Simplicity

7.1 Accessing Camera Raw Filter

Found under Filter > Camera Raw Filter, this tool includes Lens Blur, a semi-automated blur method built for convenience.

7.2 Activating Lens Blur and Auto Subject Detection

With one click, Photoshop analyzes depth zones and applies intelligent blur, guessing which parts of the image should remain sharp.

7.3 Focal Range Adjustments for Realistic DOF

Users can fine-tune blur intensity, adjust focal range, and even shift the depth of field to emphasize or de-emphasize specific elements.

7.4 Strengths and Limitations of the AI Mask

Quick and surprisingly accurate, but not flawless. Hair strands and edges may be misinterpreted, requiring occasional manual cleanup.

ADVANCED LEVEL

8. Neural Filters and the Magic of the Depth Map

8.1 Enabling Depth Blur in Neural Filters

Under Filter > Neural Filters, Depth Blur uses AI to generate a grayscale depth map—the secret sauce for professional-level results.

8.2 Creating a Usable Depth Map Output

Instead of applying the blur immediately, users can export only the depth map. This map becomes the blueprint for custom blur.

8.3 Understanding the Grey-Scale Language of Depth

In the map: black = near, white = far. This tonal hierarchy lets Photoshop simulate optical depth with mathematical precision.
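One way to picture how a gray value turns into blur strength is a simple linear mapping: the further a pixel's depth value is from the focal depth, the larger its blur radius. This is only an illustrative assumption, not Photoshop's actual formula, and the function and parameter names are hypothetical.

```python
def blur_radius(depth_value: int, focal_value: int, max_radius: float) -> float:
    """Map a depth-map gray level (0 = near, 255 = far) to a blur radius.

    Pixels at the focal depth stay sharp; the radius grows linearly with
    the distance between the pixel's gray level and the focal gray level.
    """
    return max_radius * abs(depth_value - focal_value) / 255

# Hypothetical example: focus on the nearest plane (black), 20 px maximum blur.
near = blur_radius(0, focal_value=0, max_radius=20.0)      # subject stays sharp
middle = blur_radius(128, focal_value=0, max_radius=20.0)  # mid-gray, moderate blur
far = blur_radius(255, focal_value=0, max_radius=20.0)     # white, maximum blur
```

Moving `focal_value` toward white would shift the sharp zone to the background instead.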

9. Refining the Depth Map Manually

9.1 Using the Brush Tool for Precision Edits

Refine edges by sampling appropriate grayscale tones and brushing directly on the map. This process offers surgical control over focus zones.

9.2 Correcting Edge Imperfections and Hair Details

Flyaway hair and awkward boundaries are frequent troublemakers. Manual edits ensure the depth map mirrors the subject’s real-world silhouette.

9.3 Adjusting Depth Tone Values for Spatial Accuracy

Fine-tuning gray shades adjusts the simulated distance of background elements, enhancing realism by mimicking true focal behavior.

10. Creating an Alpha Channel from Depth Map

10.1 Transferring the Map to a Dedicated Channel

Use Ctrl+A, then Ctrl+C to copy the depth map, and paste it into a new alpha channel (Channels Panel > New Channel).

10.2 Preparing the Image for Lens Blur Application

With Alpha1 holding the depth data, you’re ready to unleash the most authentic blur Photoshop offers.

11. Lens Blur with Alpha Channel: Simulating Real Optics

11.1 Applying Lens Blur Using Depth Information

Filter > Blur > Lens Blur, with the Depth Map Source set to the alpha channel, blurs selectively based on depth, creating organic, lens-like results.

11.2 Setting the Focal Point Post-Processing

Click "Set Focal Point" and choose your subject. You’re now focusing your image after the shot was taken—a photographer’s dream.

11.3 Tuning Blur Radius and Blur Quality

Adjust blur intensity with the Radius slider and choose between the "Faster" and "More Accurate" preview modes. The latter shows a finer rendering at the cost of processing time.

12. Re-Editing the Depth Map for Iterative Refinement

The workflow is repeatable as long as you keep an unblurred copy of the layer. Simply tweak the alpha channel and reapply Lens Blur. This loop empowers perfection.

13. Conclusion: Selecting the Right Tool for the Right Shot

Each method serves a purpose. Simple blurs suit speed, while depth maps reward patience with realism. Context decides the craft.

14. Advice for Photoshop Users: Blur with Intent

Blur isn't a crutch; it’s a deliberate storytelling device. Use it wisely to guide your viewer’s gaze and mood.

15. Encouraging Creative Experimentation

Mix techniques. Combine neural filters with field blur. Blend Gaussian with Camera Raw. Innovation lies in thoughtful combinations.

16. Common Mistakes to Avoid in Background Blur

Avoid over-blurring. Watch out for edge halos and unnatural transitions. Always zoom in to inspect details before finalizing.

17. Final Thoughts on Blur Workflows

True depth doesn’t come from sliders—it’s born of observation, control, and creative intent.

18. Bonus: When to Blend Techniques for Hybrid Results

Sometimes, one tool isn’t enough. Hybrid workflows let you harness speed and precision in tandem.

Thank you for reading. You are welcome to visit my website

Muotokuvaus | Persoonalliset Muotokuvat – Kuvaajankulma

Miljookuvaus Lappeenranta | Luonnonvalossa | Kuvaajankulma

0 notes

Text

Mastering Photo Editing with PhotoCut to Remove Backgrounds, Tattoos, Logos, and Unwanted Objects

In this digital age, photo editing has gone beyond imagination. Whether you are a photographer, content creator, entrepreneur, or someone who loves editing photos for personal use, the right tools make a huge difference in quality. One such tool, PhotoCut, revolutionizes photo editing. It's an online platform that lets users remove backgrounds, erase unwanted objects, and modify specific details of their photos with creativity and confidence. This article covers some common photo-editing tasks and how PhotoCut can help with them: removing backgrounds from passport photos, removing tattoos from photos, eliminating logo backgrounds, and much more.

Create amazing anime profile photos with PhotoCut’s Anime PFP Maker.

Remove Backgrounds from Passport Photos

Passport photos come with a very specific set of guidelines, including a plain, solid-colored background, usually white. That requirement is hard to meet when the photo is taken in less-than-ideal conditions and the background contains elements that must be removed.

PhotoCut offers a very easy solution. Using AI-powered background removal technology, it takes an original image and instantly isolates the subject from the original background. Even if you have a bright or busy background behind you, PhotoCut is able to find your face and eliminate that background.

This is extremely convenient, since you don't need to take a whole new passport photo under controlled conditions and spend unnecessary time. All you need to do is upload a picture to PhotoCut and remove the background. Within a few minutes, you will have a passport photo that looks like it was taken at a photo studio.

Steps for Removing the Background from Passport Photos

Upload the passport photo to PhotoCut.

The platform will automatically detect the subject and remove the background.

You can refine the edges if needed to ensure the subject is perfectly isolated.

Choose a white or solid color background for your image.

Save the edited image to the required format.

With this quick and easy process, you can make passport images without ever leaving your home.

Remove Tattoos from Your Photos

Many people take great pride in their tattoos, but there may be times when a person wants a tattoo temporarily removed from photos, for example when professional images are being shot or when taking holiday pictures for Facebook. Some people may not want their tattoo on show at all, and eliminating it from a photo is much simpler than removing it in real life.

PhotoCut is a powerful tool for removing tattoos from your pictures. Using photo retouching techniques, it lets you easily erase your tattoos as though nothing had ever touched your skin. It works with the surrounding texture, so it doesn't distort the skin or add artificial textures. It detects that a tattoo is present, replaces it with the skin tone from the surrounding area, and produces a natural and smooth final result.

Whether you want to give a headshot a more professional look, remove tattoos for a modeling portfolio, or simply see how you would look without a tattoo, PhotoCut is the perfect tool for you.

Steps for Removing Tattoos from Photos

Upload the photo to PhotoCut.

Use the object removal tool to highlight the tattoo area.

The tool will automatically replace the tattoo with skin tones from the surrounding areas.

Refine the edges and apply any post-processing needed for a more realistic look.

Save and download your photo once edited.

That's right, in just a few clicks, you can make that tattoo disappear, leaving you with a tattoo-free version of the same image that looks as real as the original.

Explore apps and tips to invert your photos on an iPhone.

Remove Logo Backgrounds

Logos often turn out to be a distracting and unwanted feature in photos, especially if the images include watermark patterns, company logos, or product labels that don't suit your design or purpose. PhotoCut makes it easy to erase logos or other watermarks from photos.

Logo background removal is a matter of precision: you need to remove the logo without damaging the main subject of the photograph. PhotoCut assists in this process by employing sophisticated algorithms to detect and eliminate logos while maintaining the integrity of the remaining image. This is useful when working with stock photographs or pictures that contain logos you don't have authorization to use. For instance, if you are creating a marketing brochure and wish to include a photo of a product, you can use PhotoCut to remove the business logo and whatever background it may have. The tool allows quick removal of the logo and substitution with something more suitable for your project.

Steps for Removing Logo Backgrounds

Select the image that has the logo and upload it to PhotoCut.

Use the background removal tool to select the logo.

You will then get a clean image when the tool removes the branding.

This is a useful process for getting rid of watermarks, brand logos, and other undesirable text that could show up on a picture you are working with.

Discover stunning YouTube thumbnail ideas for your next YouTube video thumbnail.

Remove Photo Backgrounds for Free

Many people want to remove the backgrounds from their photos but do not want to pay for pricey software or applications to do it. PhotoCut offers an easy and free solution for background removal without the hassle of software subscriptions.

Using PhotoCut, a user can simply upload their photo, and the tool will automatically detect and remove the background in a click or two. You don't have to be a professional designer to use it. The tool is designed to be user-friendly for everyone, from beginners to the most experienced users. Whenever you need a transparent background, from making a product image to putting your subject in a new environment, PhotoCut frees your images from their backgrounds.

In addition to free background removal, PhotoCut lets you add new backgrounds, change the background color, or use your own custom background. Hence, you can remove an unwanted background and replace it with whatever suits your needs.

Steps for Removing Photo Backgrounds for Free

Upload the image that you want to edit to PhotoCut.

The platform will automatically detect the subject and remove the background.

Refine the edges if necessary to ensure the subject looks natural.

Save the backdrop-free image and download it in any format of your choice.

This feature is ideal for anyone who has to deal with transparent imagery or background replacements for assignments such as e-commerce listings, graphic design, or social media posts.

Generate unique and creative photo backgrounds with PhotoCut’s AI Background Generator.

Remove Unwanted Objects from Photos

Photos might occasionally contain extraneous elements or distractions that detract from the topic or point you're attempting to make. It might be a person strolling behind the main subject, an object that inadvertently entered the frame, or even an object that isn't supposed to be there. Well, PhotoCut can let you easily get rid of all these unwanted elements.

The object removal tool works by identifying the section you want to remove and then filling that space with a background that matches the surroundings. For example, let's say you have taken a portrait photo, but there happens to be a stray object in the background. PhotoCut will remove that object and automatically fill the space with matching background.

This tool can be helpful to photographers, marketers, and anyone who cleans up or processes images for professional purposes.

Steps for Removing Unwanted Objects

Start by uploading the image containing the unwanted object to PhotoCut.

Next, highlight the area around the unwanted object using the object removal tool.

The platform automatically removes the object and fills the background seamlessly.

Then, touch up the edges, if needed, using the available touch-up tools.

Finally, save and download the image as it has been altered.

Removing unwanted objects is very easy, and PhotoCut helps you enhance your images without having to learn complex photo manipulation.

Conclusion

PhotoCut is a versatile and user-friendly photo editing tool that makes photo editing tasks easily manageable, from passport photographs to tattoo removal, logo removal, and the elimination of unwanted objects in your images. Its intelligent editing tools and easy-to-understand interface cater to both novices and professionals. With PhotoCut, you can take your image-enhancing skills to a different level and produce professional-quality results in no time, all from the comfort of your computer or mobile device.

PhotoCut's user-friendly tools simplify traditional photo editing and let you do quite a lot. If you need an easy yet capable photo editor for tasks such as background elimination, tattoo removal, logo background elimination, or object removal, PhotoCut is for you. It offers several free and premium features that you can use to achieve a perfect image with a few clicks.

Convert your photos into coloring pages online for free with PhotoCut.

FAQs

Q1. What background color is usually required for a passport photograph?

Ans. It varies to a limited extent but is most commonly a plain white background or off-white. Always check the official guidelines for your country.

Q2. What should be noted while editing the background in a passport photo?

Ans. Here are some of the most important considerations:

Precision: The facial features must be clearly visible and correctly proportioned.

Natural Appearance: Never make edits that make the photo look unnatural.

Shadows: The image must not have any direct shadows on the face or background.

Lighting: The lighting must be even.

Size and Resolution: The perfect image would need to meet the appropriate size and resolution.

Compliance: Double-check all the issuing agency's requirements (size, background, expression, etc).

Q3. What are the common tools used for removing tattoos from photos?

Ans. The most common tools are the Clone Stamp Tool and Content-Aware Fill (or a similar tool in other software). The Healing Brush may also be of help.

Q4. How difficult is it to remove a tattoo cleanly?

Ans. It can be very difficult for a tattoo that is big, brightly colored, or wrapped around the contours of the body. It requires patience and careful attention to detail to make the skin look natural.

Q5. What file formats support logos with transparent backgrounds?

Ans. The two most used formats are SVG (Scalable Vector Graphics) and PNG (Portable Network Graphics). SVG is a vector format that can be scaled up or down without sacrificing quality, while PNG is a raster format that supports transparency. Although GIF may also be transparent, its limited color palette makes it less useful for logos than PNG.

Q6. Isn't it better to have a vector version of my logo for simple removal of backgrounds?

Ans. Yes. Vector logos are ideal because they make backdrop removal simple and neat. They are made in Adobe Illustrator or other applications that create high-quality pictures that can be scaled or altered without affecting quality.

Q7. What does Content-Aware Fill mean, and how does it apply to object removal?

Ans. An extremely useful feature in Photoshop and other software programs is Content-Aware Fill, which fills in a region of a picture with a texture and pattern that is comparable to its surroundings by automatically matching what surrounds that area. It is widely used in object removal.

Q8. What are some tips for seamless object removal?

Ans. Here are some tips:

Choose the correct tool: Content-Aware Fill will do very well for uncomplicated removals, but more complex ones may require the Clone Stamp or Healing Brush of your software.

Zoom in: For accuracy, work at a very high zoom setting.

Carefully sample: Use the Clone Stamp on source regions that are reasonably comparable to the area you want to cover in terms of color, texture, and lighting.

Feather edges: To blend the edges of your selections, add a little feather.

Patterns matter: Take care not to destroy repeated patterns in the picture.

Don't overdo it: Sometimes less is more. A subtle edit is often better than an aggressive one.

Take your time: Hurrying can lead to apparent mistakes.
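The "feather edges" tip can be sketched in a few lines. The example below softens a hard selection mask on one row of pixels and then blends a patch into the base image using that soft mask. It is a simplified illustration, assuming a box average for the feather; real editors use their own falloff curves, and all names and values here are hypothetical.

```python
def feather(mask, radius):
    """Soften a 0/1 selection mask by averaging over neighboring pixels."""
    out = []
    for i in range(len(mask)):
        lo, hi = max(0, i - radius), min(len(mask), i + radius + 1)
        window = mask[lo:hi]
        out.append(sum(window) / len(window))
    return out

def composite(base, patch, alpha):
    """Blend patch over base; alpha 1.0 means fully patch, 0.0 fully base."""
    return [a * p + (1 - a) * b for b, p, a in zip(base, patch, alpha)]

# Hypothetical row: base image at 100, replacement patch at 200.
base = [100.0] * 8
patch = [200.0] * 8
hard_mask = [0.0, 0.0, 1.0, 1.0, 1.0, 1.0, 0.0, 0.0]

soft_mask = feather(hard_mask, 1)
result = composite(base, patch, soft_mask)
```

With the hard mask the values would jump straight from 100 to 200; with the feathered mask they step up gradually, which is what hides the seam.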

Q9. What are the main reasons someone would want to remove a background or object from a photo?

Ans. There are many reasons! Some common ones include:

Aesthetics: To create a cleaner, more focused image.

Professionalism: For product photos or headshots. A distracting background can take away from the subject.

Composites and Manipulation: To isolate a subject for placement in a different scene or to create a composite image.

Passport and ID Photos: Specific background requirements often necessitate removal or modification.

Removing Distractions: Getting rid of unwanted objects like trash cans or photobombers.

Creative Purposes: To create artistic effects or emphasize certain elements.

Q10. What are some common tools used to remove backgrounds and objects from photos?

Ans. A wide range of tools are available, from simple online editors to professional software:

Automatic Background Removal Tools (Online): Many websites offer one-click background removal using AI. Examples include remove.bg, cutout.pro, and others.

Basic Mobile and Desktop Photo Editors: These applications have basic object removal options, such as Snapseed, PicsArt, and Fotor.

More Advanced Photo Editors (Desktop): Software such as Adobe Photoshop and GIMP (some now available in free online versions), Affinity Photo, and Corel PaintShop Pro. They offer tools such as the Lasso Tool, Magic Wand, Clone Stamp Tool, Content-Aware Fill, and selection brushes for more accurate control.

Dedicated Background Removal Software: Programs built specifically for background removal, with highly accurate and advanced tools.

Q11. How do "free" background removal tools work? Are they free?

Ans. Many "free" online background removal tools use AI (Artificial Intelligence) to automatically detect and remove the background. They are often partially free. This usually means:

Free for low-resolution downloads: The free version might only allow you to download a smaller, lower-quality version of the edited image.

Watermarks: The free version might add a watermark to the image.

Limited Usage: You might be limited to a certain number of free removals per day or month.

Upselling: The free service is often the bait for upgrading to a paid plan with higher-resolution downloads, unlimited use, and no watermarks.

Q12. What are the advantages of using paid software to remove backgrounds?

Ans. Paid software (like Photoshop) offers several advantages:

Greater Control: More diverse selection tools and more editing options.

Higher Quality: Edit and save images at true resolution without watermarks.

More Advanced Features: Layer masks, content-aware fill, and refined edge selection help in complicated cases.

Non-Destructive Editing: Most of these paid programs let you make changes without altering the original image.

Professional Results: Overall results are usually better, especially for complex images.

0 notes

Text

28 Jan - 5 Feb | Illustrated Article (assignment)

SAS - Image Manipulation

Your first piece of work that will go towards assessment for this project is going to be a 2500 word illustrated article. Your article will be based on the learning we have done on image manipulation so far.

Key points:

Written article, written and spell checked in Word.

Designed across 8 pages (4 spreads) in Adobe InDesign - try to keep your layout designs within the same style as Be Kind magazine.

Your article should be titled ‘The Manipulated Image’.

2500 words (+ or - 10%)

‘Illustrated’ means including images - include a mix of images edited by you, plus secondary images (credited).

Include specific examples to help evidence your points, with images. PEEL technique is useful!

Exported as .PDF format with Spreads ticked.
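The word limit works out to a range of 2250 to 2750 words. A quick, unofficial way to check a draft is sketched below; the function name is hypothetical, and splitting on whitespace may differ slightly from Word's own count.

```python
TARGET_WORDS = 2500
TOLERANCE_PERCENT = 10

# Integer arithmetic: 2500 minus 10% and 2500 plus 10%.
LOWER = TARGET_WORDS * (100 - TOLERANCE_PERCENT) // 100
UPPER = TARGET_WORDS * (100 + TOLERANCE_PERCENT) // 100

def within_word_limit(text: str) -> bool:
    """Return True if the whitespace-separated word count is in range."""
    count = len(text.split())
    return LOWER <= count <= UPPER

# Example with placeholder text of exactly 2400 words.
draft = "word " * 2400
ok = within_word_limit(draft)
```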

TIP: Look back at all our previous lessons (links below) and your Supporting Document before you start writing - everything you need to know for this assignment is there. You may use some of the writing you have already done.

6 Jan | Brief History of Image Manipulation

7 Jan | Analysing Image Manipulation

8-13 Jan | Airbrushing & Retouching

14 Jan | AI Generated Images

15 Jan | Instagram VS Reality

20 Jan | ‘Nosedive’ case study

21 Jan | Black Mirror Concept

22 Jan | The 'Deinfluencer' social movement

27 Jan | Legal issues

You can also refer to Learning Resources from Moodle here.

Your article must include these sections - where it says main topic, these sections should be the largest:

Title - The Manipulated Image

Introduction – Open your article with the questions you will be investigating regarding the world of image manipulation. Keep things rhetorical - you shouldn’t answer any of your own questions yet. Instead, focus on drawing in the reader to be intrigued about what ‘image manipulation’ could mean. Keep it short.

History (main topic)- Look back to the past: give some background information about what 'image manipulation’ means and a brief overview of its history. Explain the development of approaches to image manipulation and the associated techniques up until what's possible in present day.

Purposes - Evaluate 2+ examples of image manipulation from different areas - be descriptive of the techniques used and their effectiveness in fulfilling their perceived purpose.

Legal issues - Evaluate 2+ examples of a copyright infringement case and a fair use case - be detailed in what the issue was and how it was resolved.

Ethical issues (main topic) - Evaluate the potential negative effects of airbrushing and liquify, AI, image curation, social media influencers and distrust on individuals and society. Make reference to Black Mirror.

Conclusion: Keep it short. Summarise your overall views on image manipulation - is image manipulation unethical, or does it have its uses? What could the future look like?

Document setup

To set up your InDesign file:

Create a new Print document, A4.

Change pages to 8.

Change start #1 to 2 to ensure pages sit side by side.

When exporting your InDesign file at the end:

Click File > Export and choose PDF.

Name your file Illustrated Article (your name)

Make sure that Spreads is ticked, rather than Pages. This will keep your pages sat side by side once exported.

Deadline:

You have until Wednesday 5th February at 10pm.

Upload 1 PDF file to Moodle here.

0 notes

Text

#interesting point but i think it misses some important aspects#if someone is using prompts to generate artwork thats less making art and more commissioning an AI to make art for them#and if someone orders a commission it an asshole move to turn around and say 'i made this'#eventually i hope we can reach a point where AI is ethically done and we can have a clear idea around what is/isnt acceptable#cause image generation has so much potential!! theres so much power behind these systems#but in its current iteration most AI artwork is unethical and using it as an assistance program doesnt negate that fact#no ill will btw! its just a very interesting and nuanced topic to me skjfkfj (via @alveolion)

for the commission angle, there are some similarities, but I don't think it tells the full story. effective use of AI image generation is not just describing the image to the machine, but rather involves a complex iterative process of learning how the AI responds to relevant concepts and how to communicate the right combination of ideas to it, potentially over a long period of time. it can also involve customizing the model yourself, using a sketch or photo as part of the prompt with tools like ControlNet, and touching it up later - potentially with more detailed use of AI!

for example, the left image was the one I originally generated with the prompt, then the right one was the result of using an AI retouch tool to clean up some oddities around the hands:

did I draw this image? no. but I didn't commission it, either. I've commissioned images of the same character (such as the one in my icon) and the process was very different! including that there's no other human involved in this process, just a complex assistive tool. so I would say I did create it - in a different way from previous ideas of creating images, but we have to expand those ideas in order to properly account for new technologies - that was my whole point in the first place!

as for ethics, do you mean the inclusion of copyrighted materials in training sets without permission from the copyright holders? if so, no, I'll reiterate that there's nothing unethical about that:

someone drawing with their hands can make fanart of a character from their favorite show by learning the character's appearance from visual depictions of them and then drawing something inspired by that, without needing permission

someone using AI should be able to make fanart of a character from their favorite show by having the AI learn the character's appearance from visual depictions of them and then generating something inspired by that, without needing permission

that's not stealing, it's the normal process of drawing from influences. denying people that opportunity is a denial of accessibility

as for figuring out appropriate interactions between that and commercial uses of AI art, I actually just wrote up some stuff about that earlier today!

the more I understand the role of AI as an assistive tool, the more I realize how much we need to be discussing it openly in those terms. how much we need to treat it like any other form of disability advocacy

and when you recognize AI as an assistive program, so much of this looks very different

"this company used an assistive program to put out bogus information" - the company is shit, get their ass

"assistive programs are just toys" - no the fuck they aren't

"should people be able to monetize art created through assistive programs?" - yes, obviously

"art created through assistive programs is often low-quality and struggles with certain functions" - so build better ones and give people the chance to get better at using them

"the company making this assistive program optimizes it for their own profits rather than assistive functionality" - so find better people to make open-source versions without those issues

"we can't let assistive programs offer too easy of a way to compete with people who don't use them" - we can and should

"what about the economic impacts?" - we can find other ways to address them

"people who use assistive programs are soulless hacks" - WHAT THE FUCK IS THE MATTER WITH YOU?

51 notes

·

View notes

Photo

The Coming Age of Imaginative Machines: If you aren't following the rise of synthetic media, the 2020s will hit you like a digital blitzkrieg

The faces on the left were created by a GAN in 2014; on the right are ones made in 2018.

Ian Goodfellow and his colleagues gave the world generative adversarial networks (GANs) five years ago, way back in 2014. They did so with fuzzy and ethereal black & white images of human faces, all generated by computers. This wasn't the start of synthetic media by far, but it did supercharge the field. Ever since, the realm of neural network-powered AI creativity has repeatedly kissed mainstream attention. Yet synthetic media is still largely unknown. Certain memetic-boosted applications such as deepfakes and This Person Does Not Exist notwithstanding, it's safe to assume the average person is unaware that contemporary artificial intelligence is capable of some fleeting level of "imagination."

Media synthesis is an inevitable development in our progress towards artificial general intelligence, the first and truest sign of symbolic understanding in machines (though by far not the thing itself--- rather the organization of proteins and sugars to create the rudimentary structure of what will someday become the cells of AGI). This is due to the rise of artificial neural networks (ANNs). Popular misconceptions presume synthetic media present no new developments we've not had since the 1990s, yet what separates media synthesis from mere manipulation, retouching, and scripts is the modicum of intelligence required to accomplish these tasks. The difference between Photoshop and neural network-based deepfakes is the equivalent to the difference between building a house with power tools and employing a utility robot to use those power tools to build the house for you.

Succinctly, media synthesis is the first tangible sign of automation that most people will experience.

Public perception of synthetic media shall steadily grow and likely degenerate into a nadir of acceptance as more people become aware of the power of these artificial neural networks without being offered realistic debate or solutions as to how to deal with them. They've simply come too quickly for us to prepare for, hence the seemingly hasty reaction of certain groups like OpenAI in regards to releasing new AI models.

Already, we see frightened reactions to the likes of DeepNudes, an app which was made solely to strip women in images down to their bare bodies without their consent. The potential for abuse (especially for pedophilic purposes) is self-evident. We are plunging headlong into a new era so quickly that we are unaware of just what we are getting ourselves into. But just what are we getting into?

Well, I have some thoughts.

I want to start with the field most people are at least somewhat aware of: deepfakes. We all have an idea of what deepfakes can do: the "purest" definition is taking one's face and replacing it with another, presumably in a video. The less exact definition is to take some aspect of a person in a video and edit it to be different. There are even deepfakes for audio, such as changing one's voice or putting words in their mouth. Most famously, this was done to Joe Rogan.

I, like most others, first discovered deepfakes in late 2017 around the time I had an "epiphany" on media synthesis as a whole. Just in those two years, the entire field has seen extraordinary progress. I realized then that we were on the cusp of an extreme flourishing of art, except that art would be largely-to-almost entirely machine generated. But along with it would come a flourishing of distrust, fake news, fake reality bubbles, and "ultracultural memes". Ever since, I've felt the need to evangelize media synthesis, whether to tell others of a coming renaissance or to warn them to be wary of what they see.

This is because, over the past two years, I realized that many people's idea of what media synthesis is really stops at deepfakes, or they only view new development through the lens of deepfakes. The reason why I came up with "media" synthesis is because I genuinely couldn't pin down any one creative/data-based field AI wasn't going to affect. It wasn't just faces. It wasn't just bodies. It wasn't just voice. It wasn't just pictures of ethereal swirling dogs. It wasn't just transferring day to night. It wasn't just turning a piano into a harpsichord. It wasn't just generating short stories and fake news. It wasn't just procedurally generated gameplay. It was all of the above and much more. And it's coming so fast that I fear we aren't prepared, both for the tech and the consequences.

Indeed, in many discussions I've seen (and engaged in) since then, there are always several people who have a virulent reaction against the prospect that neural networks can do any of this at all, or at least that they'll get good enough to affect artists, creators, and laborers. Even though we're already seeing the effects in the modeling industry alone.

Look at this gif. Looks like a bunch of models bleeding into and out of each other, right? Actually, no one here is real. They're all neural network-generated people.

Neural networks can generate full human figures, and altering their appearance and clothing is a matter of changing a few parameters or feeding an image into the data set. Changing the clothes of someone in a picture is as easy as clicking on the piece you wish to change and swapping it with any of your choice (or leaving the person wearing no clothes at all). A similar scenario applies for make-up. This is not like an old online dress-up flash game where the models must be meticulously crafted by an art designer or programmer: simply give the ANN something to work with, and it will figure out all the rest. You needn't even show it every angle or every lighting condition, for it will use common sense to figure these out as well. Such has been possible since at least 2017, though only with recent GPU advancements has it become possible for someone to run such programs in real time.

The unfortunate side effect is that the amateur modeling industry will be vaporized. Extremely little will be left, and the few who do remain will be promoted entirely because they are fleshy & real human beings. Professional models will survive for longer, but there will be little new blood joining their ranks. As such, it remains to be seen whether news and blogs speak loudly of the sudden, unexpected automation of what was once seen as a safe and human-centric industry or if this goes ignored and under-reported. After all, the news used to speak of automation in terms of physical, humanoid robots taking the jobs of factory workers, fast-food burger flippers, and truck drivers, occupations that still exist en masse due to slower-than-expected rollouts of robotics and a continued lack of general AI.

We needn't have general AI to replace those jobs that can be replicated by disembodied digital agents. And the sudden decline & disappearance of models will be the first widespread sign of this.

Actually, I have a hypothesis for this: media synthesis is one of the first signs that we're making progress towards artificial general intelligence.

Now don't misunderstand me. No neural network that can generate media is AGI or anything close. That's not what I'm saying. I'm saying that what we can see as being media synthesis is evidence that we've put ourselves on the right track. We never should've thought that we could get to AGI without also developing synthetic media technology.

What do you know about imagination?

As recently as five years ago, the concept of "creative machines" was cast off as impossible— or at the very least, improbable for decades. Indeed, the phrase remains an oxymoron in the minds of most. Perhaps they are right. Creativity implies agency and desire to create. All machines today lack their own agency. Yet we bear witness to the rise of computer programs that imagine and "dream" in ways not dissimilar to humankind.

Though lacking agency, this still meets the definition of imagination.

To reduce it to its most fundamental ingredients: Imagination = experience + abstraction + prediction. To get creativity, you need only add "drive". Presuming that we fail to create artificial general intelligence in the next ten years (an easy thing to assume because it's unlikely we will achieve fully generalized AI even in the next thirty), we still possess computers capable of the former three ingredients.

Someone who lives on a flat island and who has never seen a mountain before can learn to picture what one might be by using what they know of rocks and cumulonimbus clouds, making an abstract guess to cross the two, and then predicting what such a "rock cloud" might look like. This is the root of imagination.

As Descartes noted, even the strongest of imagined sensations is duller than the dullest physical one, so this image in the person's head is only clear to them in a fleeting way. Nevertheless, it's still there. Through great artistic skills, the person can learn to express this mental image through artistic means. In all but the most skilled, it will not be a pure 1-to-1 realization due to the fuzziness of our minds, but in the case of expressive art, it doesn't need to be.

Computers lack this fleeting ethereality of imagination completely. Once one creates something, it can give you the uncorrupted output.

Right now, this makes for wonderful tools and apps that many of us play around with online and on our phones.

But extrapolating this to the near future results in us coming face to face with many heavy questions, and not just of the "can't trust what you see" variety.

Because think about it.

If I'm a musical artist and I release an album, what if I accidentally recorded a song that's too close to an AI-generated track (all because AI generated literally every combination of notes)? Or, conversely, what if I have to watch as people take my music and alter it? I may feel strongly about it, and yet the music has its notes changed, its lyrics changed, my own voice changed, until it might as well be an entirely different artist making that music. Many won't mind, but many will.

I trust my mother's voice, as many do. So imagine a phisher managing to steal her voice, running it through a speech synthesis network, and then calling me asking me for my social security number. Or maybe I work at a big corporation, and while we're secure, we still recognize each other's voices, only to learn that someone stole millions of dollars from us because they stole the CEO's voice and used it to wire cash to a pirate's account.

Imagine going online and at least 70% of the "people" you encounter are bots. They're extremely coherent, and they have profile images of what look to be real people. And who knows, you may even forge an e-friendship with some of them because they seem to share your interests. Then it turns out they're just bundles of code.

Oh, and those bot-people are also infesting social media and forums in the millions, creating and destroying trends and memes without much human input. Even if the mainstream news sites don't latch on at first, bot-created and bot-run news sites will happily kick it off for them. The news is supposed to report on major events, global and local. Even if the news is honest and telling the truth, how can they truly verify something like this, especially when it seems to be gaining so much traction and humans inevitably do get involved? Remember "Bowsette" from last year? Imagine if that was actually pushed entirely by bots until humans saw what looked like a happenin' kind of meme and joined in? That could be every year or perhaps even every month in the 2020s onwards.

Likewise, imagine you're listening to a pop song in one country, but then you go to another country and it's the exact same song but most of the lyrics have changed to be more suitable for their culture. That sort of cultural spread could stop... or it could be supercharged if audiences don't take to it and pirate songs/change them and share them at their own leisure.

Or maybe it's a good time to mention how commissioned artists are screwed? Commission work boards are already a race to the bottom— if a job says it pays three cents per word to write an article, you'd better list your going rate as 2 cents per word, and then inevitably the asking rate in general becomes 2 cents per word, and so on and so forth. That whole business might be over within five to ten years if you aren't already extremely established. Because if machines can mimic any art style or writing style (and then exaggerate & alter it to find some better version people like more), you'd have to really be tech-illiterate or very pro-human to want non-machine commissions.

And to go back to deepfakes and deep nudes, imagine the paratypical creep who takes children and puts them into sexual situations, any sexual situation they desire thanks to AI-generated images and video. It doesn't matter who, and it doesn't have to be real children either. It could even be themselves as a child if they still have the reference or use a de-aging algorithm on their face. It's squicky and disgusting to think about, but it's also inevitable and probably has already happened.

And my god, it just keeps going on and on. I can't do this justice, even with 40,000 characters to work with. The future we're about to enter is so wild, so extreme that I almost feel scared for humanity. It's not some far off date in the 22nd century. It's literally going to start happening within the next five years. We're going to see it emerge before our very eyes on this and other subreddits.

I'll end this post with some more examples.

Nvidia's new AI can turn any primitive sketch into a photorealistic masterpiece. You can even play with this yourself here.

Waifu Synthesis- real time generative anime, because obviously.

Few-Shot Adversarial Learning of Realistic Neural Talking Head Models | This GAN can animate any face GIF, supercharging deepfakes & media synthesis

Talk to Transformer | Feed a prompt into GPT-2 and receive some text. As of 9/29/2019, this uses the 774M parameter version of GPT-2, which is still weaker than the 1.5B parameter "full" version.

Text samples generated by Nvidia's Megatron-LM (GPT-2-8.3b). Vastly superior to what you see in Talk to Transformer, even if it had the "full" model.

Facebook's AI can convert one singer's voice into another | The team claims that their model was able to learn to convert between singers from just 5-30 minutes of their singing voices, thanks in part to an innovative training scheme and data augmentation technique. Think of it as a prototype for shifting vocalists or vocalist genders or anything of that sort.

TimbreTron for changing instrumentation in music. Here, you can see a neural network shift entire instruments and pitches of those new instruments. It might only be a couple more years until you could run The Beatles' "Here Comes The Sun" through, say, Slayer and get an actual song out of it.

AI generated album covers for when you want to give the result of that change its own album.

Neural Color Transfer Between Images [From 2017], showing how we might alter photographs to create entirely different moods and textures.

Scammer Successfully Deepfaked CEO's Voice To Fool Underling Into Transferring $243,000

"Experts: Spy used AI-generated face to connect with targets" [GAN faces for fake LinkedIn profiles]

This Marketing Blog Does Not Exist | This blog written entirely by AI is fully in the uncanny valley.

Chinese Gaming Giant NetEase Leverages AI to Create 3D Game Characters from Selfies | This method has already been used over one million times by Chinese gamers.

"Deep learning based super resolution, without using a GAN" [perceptual loss-based upscaling with transfer learning & progressive scaling], or in other words, "ENHANCE!"

Expert: AI-generated music is a "total legal clusterf*ck" | I've thought about this. Future music generation means that all IPs are open, any new music can be created from any old band no matter what those estates may want, and AI-generated music exists in a legal tesseract of answerless questions

And there's just a ridiculous amount more.

My subreddit, /r/MediaSynthesis, is filled with these sorts of stories going back to January of 2018. I've definitely heard of people coming away in shock, dazed and confused, after reading through it. And no wonder.
