#artificial intelligence paragraph writing

Essay on Artificial Intelligence in English | Write a Paragraph on Artificial Intelligence Paragraph

This handwriting video is an essay on Artificial Intelligence in English, so I decided to write a paragraph on Artificial Intelligence (AI). The Artificial Intelligence paragraph is important for Class 9. Get an idea of how to write an Artificial Intelligence essay in English from these few lines, which cover the role of Artificial Intelligence. Artificial Intelligence paragraph writing is very easy. Try writing an Artificial Intelligence paragraph in 200 words, or practice a 150-word Artificial Intelligence paragraph for HSC.

10 Lines on Artificial Intelligence (AI):

1. Artificial Intelligence (AI) means making machines smart like humans.

2. AI helps machines to think, learn, and do tasks on behalf of humans.

3. AI is used in everyday things like phones, computers, and apps.

4. Humans benefit from using AI.

5. AI helps doctors to find diseases and give better treatments.

6. It is used in self-driving cars to help them move safely.

7. AI can suggest movies, songs, or products we might like.

8. It can understand speech and recognize faces in photos.

9. Some people worry that AI might take away jobs.

10. If used wisely, AI can help solve many problems in the world.

#artificialintelligence #artificialintelligencetechnology #handwriting #handwritingtips #paragraph #paragraph_writing #paragraph_suggestions #paragraph_short #paragraphformat #paragraphwriting #handwritingpractice #paragraphwritingformat #paragraphwritinginenglish

#youtube#essay on artificial intelligence in english#artificial intelligence paragraph#artificial intelligence paragraph class 9#artificial intelligence essay in english#write a paragraph on artificial intelligence#few lines on artificial intelligence#role of artificial intelligence paragraph#artificial intelligence paragraph in english#artificial intelligence paragraph easy#artificial intelligence paragraph writing#artificial intelligence paragraph hsc

students using ch4tgpt to write essays and do their homework for them is a problem that goes hand in hand with the declining literacy rate btw

#the kids cant read!! so they cant write!!#please please please prioritize reading#if you cant construct a 5 sentence paragraph without the help of artificial intelligence then baby YOU are the problem!#im sorry but i just got a yt ad for grammarly with it advertising an email prompt into ch4tgpt to make it more ✨ inspirational ✨#and i wanted to scream#like nobody smart is doing that. we know how to construct a sentence with the right adjectives and verbs

so. instead of porn i decided to FINALLY start writing chapter 2 for the AI will au ('perfect machine') that i posted an intro to like two years ago now. saw that i got a new bookmark on it and it kinda made me wanna pick it back up

#might honestly write some of the later chapters first and work backwards#bc its rlly difficult to write from the pov of an artificial intelligence that's been given life#and a physical body and has zero concept of what anything is#and learns as it goes#so yeah trying to describe stuff from that pov is rlly fucking difficult man.#but i'm committing to the writing style choice i made and i'm gonna just go with it#i dont have very much of it done yet but when i get a few more paragraphs done#i might post what i have so far

I'm reading Life in Code by Ellen Ullman, writings on tech and philosophy.

I love the chapter "Is Sadie the Cat a Trick?", where Ullman talks of the 19 years she had with her cat Sadie, and - while reflecting on Artificial Intelligence - wonders whether the relationship between them was an illusion.

Was Sadie merely behaving according to her "programming"? Was Ullman merely imagining sentience?

Ullman considers various aspects of her relationship with Sadie. First, companionship. Second, familiarity:

[H]er coming to meet me at the door (even when her bowl was full, so it was not in the hope of getting dinner). There was mutual recognition of ritual: I knew the time of day when she moved to her favourite chair to take the sun, so I anticipated it and raised the shade. She knew I wrote in the morning, and, before I got to the desk, she was lying on her pillow by the heater, which had not yet been turned on. If it were just warmth she'd wanted, she could have stayed in bed with Elliot, who was living with me by then. Instead, she decided she would wait for me by a cold heater.

I love this paragraph very much. It's a reflection on the familiar behaviour of a pet, ostensibly to work out whether there's a difference between a pet and a sufficiently advanced AI. But it also seems to me to be an investigation into love itself.

Did Ullman's cat love her? Do any of our pets love us?

Instinctively, we think "yes". Ullman has applied a programmer's mind - and a philosopher's mind - to the question:

Companionship, familiarity, expectation, mutual recognition, bodily comfort: if this is not a definition of love between aging creatures, I don't know what is.

I'm away from home for a few days, with gigs in London and Birmingham. I always miss my wife when I'm away, and this essay hasn't helped at all, thank you.

It makes me think of our own rituals. Sometimes - not often - I'm awake before Elanor. I know what time her alarm will go off, so I join her in bed a minute before, so she wakes up gently to a cuddle before the cold brutality of the alarm. When I'm working in the study, Elanor will sometimes open the door a crack, and wait to be invited in.

This is the difference between love and programming. Last time I was away, Elanor defrosted the freezer - an accomplishment of immense bravery and determination. She didn't tell me this, but when I came home she challenged me to work out what chore she'd done while I was away, knowing that letting me discover it by opening the freezer would be the best possible way for me to find out.

How did Elanor know this? We've been together 20 years this month. Perhaps she reflected on the fact that I'm delighted by surprise, and by playfulness. Maybe she thought about the way I like stories - and that investigating the house, searching for new jobs completed, would give me the thrill of an adventure. It's possible she considered my love of novelty and shared experiences - and came up with this way of presenting her accomplishment according to these principles; these techniques for controlling the reveal.

All of this *could* be true. Elanor might have consciously processed and analysed the data: her understanding of me. Maybe, perhaps, possibly.

But I think she just knew.

Familiarity. Expectation. Recognition. Love between aging creatures!

People are so excited that generative AI can produce ugly pictures and bland copy. But I don't think it would curl up in front of a cold heater in an empty study.

Because any relationship with a program is an illusion. It isn't love. Because love isn't defrosting the freezer. Love is defrosting the freezer while your partner's away, anticipating their response, looking forward to their joy.

honestly, the whole ai fight or disagreement thing is kinda insane. we’re seeing the same pattern that happened when the first advanced computers and laptops came out. people went on the theory that they’d replace humans, but in the end, they just became tools. the same thing happened in the arts. writing, whether through books or handwritten texts, has survived countless technological revolutions from ancient civilizations to our modern world.

you’re writing and sharing your work through a phone, so being against ai sounds a little hypocritical. you might as well quit technology altogether and go 100 percent analog. it’s a never ending cycle. every time there’s a new tech revolution, people act like we’re living in the terminator movies even though we don’t even have flying cars yet. ai is just ai and it’s crappy. people assume the worst but like everything before it it will probably just end up being another tool because people is now going to believe anything, nowadays.

Okay so...no. It's never that black and white. Otherwise I could argue that you might as well go 100% technological and never touch grass again. Which sounds just as silly. There are many problems with AI and it's more than just 'robots taking over'. It's actually a deeper conversation about equity, ethics, environmentalism, corruption and capitalism. That's an essay I'm not sure a lot of people are willing to read, otherwise they would be doing their own research on this. I'll sum it up the best I can.

DISCLAIMER: As usual, I am not responsible for my grammar errors; this was written and posted in one go and I did not look back even once. I'm not a professional source. I just want to explain this and put this discussion to rest on my blog. Please do your own research as well.

There are helpful advancement tools and there are harmful advancement tools. I would argue that AI falls into the latter for a few reasons.

It's not 'just AI'; it's a tool weaponised for more harm than good. Obvious examples include deepfakes and scamming, but here's more in case you're interested.

A more common nuisance is that humans now have to prove that they are not AI. More specifically, writers and students are at risk of being accused of using AI when their work reads as more advanced than basic writing criteria. I dealt with this just last year, actually. I had to prove that the essay I had spent weeks researching, writing and gathering citations for was actually mine.

I have mutuals that have been accused of using AI because their writing seems 'too advanced' or whatever bs. Personally, I feel that an AI accusation is more valid when the words are more hollow and lack feeling (as AI ≠ emotional intelligence), not when a writer 'sounds too smart'.

"You're being biased."

Okay, here is an unbiased article for you. Please don't forget to take note of the fact that the negative is all stuff that can genuinely ruin lives and the positive is stuff that makes tasks more convenient. This is the trend in every article I've read.

Equity, ethics, corruption, environmentalism and capitalism:

Maybe there could be a world where AI is able to improve and truly help humans, but in this capitalistic world I don't see it being a reality. AI is not the actual problem in my eyes, this is. Resources are finite and lacking amongst humans. The wealthy hoard them for personal comfort and selfish innovations leading to more financial gain, instead of sharing them according to need. Capitalism is another topic of its own and I want to keep my focus on AI specifically so here are some sources on this topic. I highly recommend skimming through them at least.

> Artificial Intelligence and the Black Hole of Capitalism: A More-than-Human Political Ethology
> Exploiting the margin: How capitalism fuels AI at the expense of minoritized groups
> Rethinking of Marxist perspectives on big data, artificial intelligence (AI) and capitalist economic development

I want to circle back to your first paragraph and just dissect it really quick.

"we’re seeing the same pattern that happened when the first advanced computers and laptops came out. people went on the theory that they’d replace humans, but in the end, they just became tools."

One quick Google search turns up many articles explaining the difference and why this comparison doesn't really apply to this discussion. I think the earlier fear was more a case of inexperience with the internet and online data. The generations since are more experienced with and familiar with this sort of technology. You may have heard 'once it's out there, it can never be deleted', referring to how nothing can truly be deleted off the internet. I do not think you're stupid, anon; I think you understand this and how dangerous it truly is, especially with the rising weaponisation of AI. I'm going to link some Quora and Reddit posts (horrible journalism ik, but luckily I'm not a journalist), because taking personal opinions from people who experienced that era feels important.

> Quora | When the internet came out, were people afraid of it to a similar degree that people are afraid of AI?
> Reddit | Were people as scared of computers when they were a new thing, as they are about AI now?
> Reddit | Was there hysteria surrounding the introduction of computers and potential job losses?

"the same thing happened in the arts. writing, whether through books or handwritten texts, has survived countless technological revolutions from ancient civilizations to our modern world."

I think this is a logical guess based on pattern recognition. I cannot find any sources to back this up. Either that or you mean to say that artists and writers are not being harmed by AI. Which would be a really ignorant statement.

We know about content stolen from creatives (writers, artists, musicians, etc.) to train AI.

Let's use writers as an example. The work writers put out there is used without their consent to train AI. This is stealing. Remember the very recent issue of writers having to state that they do not consent to their work being uploaded or shared anywhere else, because of apps stealing it and putting it behind a paywall?

I shouldn't have to expand further on why this is a problem. Everybody knows exactly why this is wrong even if they're not willing to admit it to themselves. If you still want to argue, it's not going to be with me, so here are some sources to help you out.

> AI, Inspiration, and Content Stealing
> ‘Biggest act of copyright theft in history’: thousands of Australian books allegedly used to train AI model
> AI Detectors Get It Wrong. Writers Are Being Fired Anyway

"you’re writing and sharing your work through a phone, so being against ai sounds a little hypocritical. you might as well quit technology altogether and go 100 percent analog."

...

"it’s a never ending cycle. every time there’s a new tech revolution, people act like we’re living in the terminator movies even though we don’t even have flying cars yet."

Yes, there is usually a general fear of the unknown. Take COVID, for example, and how people were mass-buying toilet paper. The reason this statement can't be applied here is that there is evidence of AI being an actual issue. You can see AI's effects every single day. Think about the AI-generated videos on Facebook (from harmless hopecore videos to propaganda) that older generations easily fall for. With recent developments, it's becoming harder even for experienced technology users to tell real content from fake. Do I really need to explain why this is a major, major problem?

> AI-generated images already fool people. Why experts say they'll only get harder to detect.
> Q&A: The increasing difficulty of detecting AI- versus human-generated text
> New results in AI research: Humans barely able to recognize AI-generated media

"ai is just ai and it’s crappy. people assume the worst but like everything before it it will probably just end up being another tool because people is now going to believe anything, nowadays."

AI is man-made. It only knows what it has been fed from us. Its intelligence is currently limited to what humans know. And it's definitely not as intelligent as humans because of the lack of emotional intelligence (which is a lot harder to program because it's more than math, repetition and coding). At this stage, I don't think AI is going to replace humans. Truthfully I don't know if it ever can. What I do know is that even if you don’t agree with everything else, you can’t disagree with the environmental factor. We can't really have AI without the resources to help run it.

Which leads us back to a finite number of resources. I'm not sure if you're aware of how much water and energy go into running even generative AI, but I can tell you that it's not sustainable. This is important because we're already at an irrevocable stage of the climate crisis, and scientists are unsure if Earth as we know it can last another decade, let alone a century. AI does not help in the slightest. It actually adds to the crisis; we're just uncertain to what degree at this point. It's not looking good, though.

I am not against AI being used as a tool if it were sustainable. You can refute all my other arguments, but you can't refute this. It's a fact, and your denial or lack of care won't change the outcome.

My final and probably the most insignificant reason on this list but it matters to me: It’s contributing to humans becoming dumber and lazier.

It's no secret that humans are declining in intelligence. What makes AI so attractive is its ability to provide quick solutions. It gathers the information we're looking for at record speed and saves us the time of having to do the work ourselves.

And I suppose that is the point of invention: to make human life easier. I believe that too much of anything is never good, though. Too much hardship is not good, but neither is everything being too easy. Problem solving pushes intellectual growth, but that can't happen if we never solve our own problems.

Allowing humans to believe that they can stop learning to do even basic tasks (such as writing an email, learning to cite sources, etc) because 'AI can do it for you' is not helping us. This is really just more of a personal grievance and therefore does not matter. I just wanted to say it.

"What about an argument for instances where AI is more helpful than harmful?"

I would love for you to write about it and show me, because unfortunately in all my research on this topic, the statistics do not lean in favour of that. Of course, there are always pros and cons to everything, including phones, computers, the internet, etc. There are definitely instances of AI being helpful, just not at the scale or with the same level of impact as all the negatives. And when the bad outweighs the good, it's not something worth keeping around, in my opinion.

In a perfect world, AI would take over the boring corporate tasks so that humans could enjoy life (recreation, art and music) as we were meant to. However, in this capitalist world that is not a possibility, and AI is killing joy and abolish AI and AI users DNI and I will probably not be talking about this anymore and if you want to send hate to my inbox on this don't bother because I'll block your anon and you won't get a response to feed your eristicism and you can never send anything anonymous again💙

Transmission Received: The Call Is Coming From Inside The House And I'm Mad About It

Or, a response to National Novel Writing Month's stance on Artificial Intelligence.

But before we get into that, a quick story update: I actually haven't been working on much of anything lately due to some IRL issues going on (nothing too serious, don't worry, I am still alive and healthy). The Edge is going to be on a soft break until I get my energy levels back up to serious writing levels, but I will continue to make update posts to keep people in the loop about how well I'm recharging.

Unfortunately for the people behind National Novel Writing Month, while my energy levels might be low, my spite levels are always at an all-time high, and they are fully fueling me to take down their official position on AI. But first, a timeline.

I wake up to a message in a group discord I'm in with a screenshot of National Novel Writing Month making some...interesting comments about their position on AI.

While going to Tumblr to see if anyone else is talking about this, I find this post by @the-pen-pot featuring the screenshot I saw. In the responses, I see @darkjediqueen saying that the article had been updated, and @besodemieterd giving some information that I'm going to keep secret for now because it creates a truly amazing punchline.

I get off tumblr and read the updated article.

I feel a deep rage in my soul that cannot be tamed by group chat participation, and I click the "write a post" button.

So, with that out of the way, let's break this down, shall we?

The original post, as seen in the screenshot of the above post, contained the following two paragraphs:

NaNoWriMo does not explicitly support any specific approach to writing, nor does it explicitly condemn any approach, including the use of AI. NaNoWriMo's mission is to "provide the structure, community, and encouragement to help people use their voices, achieve creative goals, and build new worlds—on and off the page." We fulfill our mission by supporting the humans doing the writing. Please see this related post that speaks to our overall position on nondiscrimination with respect to approaches to creativity, writer's resources, and personal choice.

We also want to be clear in our belief that the categorical condemnation of Artificial Intelligence has classist and abelist undertones, and that questions around the use of AI tie to questions around privilege.

This was all I saw when I first heard about this, and this on its own was enough to tap into my spite as an energy source. The second paragraph, in particular, was infuriating. "People who argue against AI are classist or ableist" is a terrible take I've seen floating around AI Bro Twitter, and to see it regurgitated by an organization that is supposed to be all about writing was, to put it simply, a lot.

But, as noted in the timeline, I did see that they had updated the article (about two hours ago as of me working on writing this), so I went to the updated post to see what was said. Somehow, it had gotten worse. I'll be addressing the updated post on a point by point basis, so if you want to read the whole thing without my commentary, here you go.

The first paragraph is the same as it was in the screenshot. The first major difference is an added paragraph that begins like this:

Note: we have edited this post by adding this paragraph to reflect our acknowledgment that there are bad actors in the AI space who are doing harm to writers and who are acting unethically. We want to make clear that, though we find the categorical condemnation for AI to be problematic for the reasons stated below, we are troubled by situational abuse of AI, and that certain situational abuses clearly conflict with our values.

First off, I find it a bit troubling that while they discuss bad actors in the AI space, they won't acknowledge that these same bad actors are often the ones pushing the whole "being anti-AI makes you morally bad, actually" accusations with the most fervor.

Second, why are you not more strongly discussing and pushing back against the "situational" abuse of AI? Why is the focus on how using AI can be good, actually, rather than acknowledging the fears and anger of your userbase around how generative AI is ruining an art form that you claim to want to help foster? I have a theory about this, but we're saving that for a bit further down.

The paragraph concludes:

We also want to make clear that AI is a large umbrella technology and that the size and complexity of that category (which includes both non-generative and generative AI, among other uses) contributes to our belief that it is simply too big to categorically endorse or not endorse.

The funny thing is, in a vacuum, I don't have a problem with this statement. They're not wrong: AI is an umbrella term with a lot of complexity to it, and I can see how people would be hesitant to condemn the technology as a whole when there are uses of it that aren't awful. If their whole statement had been this, I would have less of a problem with it (I'd still have some problem with it, sure, but I wouldn't be writing a lengthy blog post about it). But they had to delve into how Being Against AI is Morally Bad, Actually, which is where the post continues from here.

The last big change between the screenshot and the updated article is in this paragraph:

We believe that to categorically condemn AI would be to ignore classist and ableist issues surrounding the use of the technology, and that questions around the use of AI tie to questions around privilege.

This is much less strongly worded than the original paragraph. If I had to guess, they got a lot of criticism regarding the original sentiment (namely, assuming that disabled and poor people can only make art if a machine does it for them is actually way more ableist and classist than saying generative AI is bad), and dialed it back through this rewording. They could've just worded it this way from the beginning instead of saying the dumbest possible thing they could've, but whatever.

I don't know if the rest of this was in the article from the beginning or if it was added later, as the original screenshot I saw only showed the first two paragraphs. Regardless of whether this is them trying to cover their asses by explaining logic they should've explained from the start or if this was always here, I still have major issues with these points, so we're going to address them next.

(As a quick full disclosure note: I had to transcribe the rest of the article instead of copy-pasting it because I lost the ability to do so at about this point in the blog writing process. I don't know what happened or why, I just wanted to let you know that almost all typos are my fault, but beyond that I recorded the text as-written at the time that I had the article up in another tab. I promise.)

Classism. Not all writers have the financial ability to hire humans to help at certain phases of their writing. For some writers, the decision to use AI is a practical, not an ideological, one. The financial ability to engage a human for feedback and review assumes a level of privilege that not all community members possess.

You may note that they are discussing the use of AI at what seems to be the editing process. As someone in my group chat pointed out, National Novel Writing Month has nothing to do with editing, and everything to do with writing. The only way you can currently use AI for the act of writing is if you use generative AI to do it for you, which is, I think we can all agree, not actually writing and is actually bad. This emphasis on editing ties into the punchline, which we'll be getting to shortly.

On a final note before we proceed though, I would like to carry over an argument about this matter that is used in the small business/handcrafts sector: If you can't afford it now, save up for it. Don't devalue the work of other people (in this case, editors and things like sensitivity readers or beta readers) by saying it's too expensive and I can get it cheaper on Shein by using AI. Save up and support your fellow workers if it really means something to you, or just do the editing yourself and hope for the best. (Disclosure: I don't have an editor. Or a beta reader. I can't say my writing is the most polished all the time, but I get by just fine.)

Ableism. Not all brains have the same abilities and not all writers function at the same level of education or proficiency in the language in which they are writing. Some brains and ability levels require outside help or accommodations to achieve certain goals. The notion that all writers "should" be able to perform certain functions independently or [sic] is a position that we disagree with wholeheartedly. There is a wealth of reasons why individuals can't "see" the issues in their writing without help.

First of all...just say "disabled." I promise your hands will not fall off if you type that word.

Second, level of education should really fall under the class bullet point, but that's just me nitpicking.

Third, I would argue that the real goal here shouldn't be to say "no using AI is fine, actually", but rather to a) dismantle the idea of what writing "should" look like in order to make it more inclusive, and b) fight back against people who bully imperfect writers. Those are actually more noble goals than propping up a corrupt industry by using the disabled as your scapegoat.

Fourth, the dangling "or" is not a typo I take credit for. It was in the article as of me transcribing it. If I had to guess, there was more to this sentence at some point, and they just didn't fully delete the thought.

Fifth, funny how this is once again more about the editing process of writing and not the writing part. Even more funny when we view the final point.

General Access Issues. All of these considerations exist within a larger system in which writers don't always have equal access to resources along the chain. For example, underrepresented minorities are less likely to be offered traditional publishing contracts, which places some, by default, into the indie author space, which inequitably creates upfront cost burdens that authors who do not suffer from systemic discrimination may have to incur.

This one really pissed me off, because the indie author sphere is actively under attack by the use of AI. AI-created scam books on Amazon's Kindle publishing platform are increasing and actively stealing attention and money away from human authors (see this article). Sci-fi magazine Clarkesworld had to close submissions due to the influx of AI-generated stories, and while the head of Bards and Sages cited physical and mental health problems as a reason for shutting down the company entirely, having to weed through AI-generated submissions and the way such bad actors are impacting the industry were listed as the final straw. There are probably even more examples of this, but I only did a cursory Google search to avoid being here all day.

Simply put: AI is not helping authors who have to go to the indie space in order to escape systemic problems. It is actively killing the space instead. I don't want to sound doom and gloom, but if this keeps up, these authors aren't going to have anywhere to run to. A refusal to condemn the ways in which AI is impacting these spaces does, in my opinion, make you complicit.

On a final note, you might notice that this point is seemingly once again focusing on editing, not writing. Which means it's time to unveil the punchline pointed out by besodemieterd, the response that made me lose my mind:

They made this bullshit up to justify them getting into cahoots with an AI company called ProWritingAid, it's all over their instagram.

I immediately ran to fact-check this...and it's true. ProWritingAid is, in fact, a more in-depth Grammarly that uses AI for its functionality. They are a sponsor for National Novel Writing Month, and the first three posts on their Instagram are dedicated to this partnership.

I completely back up besodemieterd's belief that they wrote this article to justify taking this sponsorship. If I had to guess, they started taking a lot of flak for taking ProWritingAid as a sponsor and wrote this article in order to defend their decision without actually saying so directly.

I don't want to shame NaNoWriMo for taking sponsors on the whole, as they do need money to stay afloat. However, taking an AI company as a sponsor and then defending their stance by essentially calling people with concerns about this morally wrong and bad is, as the kids say, clown behavior. This is clown shit. It's laughable, it's cringe, it's incredibly disheartening. It's so, so bad.

The next paragraph is just about how they "see value in sharing resources about AI and any emerging technology, issue, or discussion that is relevant to the writing community as a whole." Since my stance on this can be summed up as "AI bad and platforming it is bad", I'm going to skip over this paragraph. I will, however, address their last paragraph:

For all of those reasons, we absolutely do not condemn AI, and we recognize and respect writers who believe that AI tools are right for them. We recognize that some members of our community stand staunchly against AI for themselves, and that's perfectly fine. As individuals, we have the freedom to make our own decisions.

So, basically, you're incapable of saying "no" to money and decided to lean into the talking points of bad faith actors and refuse to address the destruction that generative AI is wreaking on the writing world in order to justify why you took a certain sponsor. In taking this middle of the road, individual choice-ass response, you also threw human editors and beta readers under the bus by justifying the use of technology that actively removes them from the space. You are making the writing world a worse place, which is absolutely crazy when writing is supposed to be the thing you're all about.

Truly amazing. And they're doing this on Labor Day, too.

In conclusion, I will be dead in the dirt before you spot me participating in National Novel Writing Month again. Which is probably for the best. My life can only handle so many self-imposed deadlines. I guess I should be grateful to them for removing one from my plate.

I read one of you fics on friedrich. Are u using chatgpt AI? lol, it's so obvious, with the I, II, III, IV, ETC. The lack of emotions and everything just scream AI. You're not fooling everyone in the community boo. It's like dishonoring actual AMAZING writers by posting one that doesn't deserve the recognition that it's getting. Try different prompts next time, add "make it humane or with emotions." 🤯

Hey Anon, please take your time and read.

First of all, thanks for taking the time to read the fic—even if your comment is less about constructive feedback and more about baseless accusations. Just to clarify: I literally mentioned in the post that it was my first time writing for Friedrich and that I had just watched the movie. Of course it wouldn’t be perfect. That’s kind of how beginnings work? I also clearly stated that the fic was divided into multiple parts—I, II, III, IV, etc.—for my clarity, because that’s how I organize longer pieces. It’s not an AI thing, it’s a basic formatting choice.

And honestly, if you’d taken even a second to read any of my other posts, you’d know I’m a huge AI hater. I’m proudly human, thank you very much, and I enjoy the process of crafting words from scratch. I’m confident in my writing, and I know the difference between robotic text and emotional storytelling, because I actually give a damn about what I create.

Sure, I use Google and translation apps sometimes. So do most writers. It’s called expanding your vocabulary, not cheating. And yes, that Friedrich fic was one of my starting pieces. I was just beginning my Aaron Taylor-Johnson masterlist and wasn’t yet fully used to writing his characters. But that doesn’t mean it lacked emotion. It was an angst fic, and if you’d bothered to read the rest of my work, you’d see that I actually wrote only fluff before that one. I stepped out of my comfort zone. That takes effort, not artificial intelligence.

Look—I get that not every piece is for everyone. That’s fine. But throwing around false accusations and acting like you’re some literary gatekeeper is just plain shitty. If you don’t like the way I write? Leave. Seriously. My blog is a safe and comfortable space for people who enjoy my work—not a playground for people who want to tear others down.

I’m proud of how far I’ve come, and I know I’ve grown as a writer since then. If you have the time (and, hopefully, a more open mind), maybe read some of my newer pieces. But if you’re just here to throw shit and go? The unfollow button is right there.

Have the day you deserve. 😊

xoxo, della 🧸

[P.S: This paragraph is also NOT AI but my raw, ‘emotion-filled’ words.]

#della’s inbox 𐙚⋆°🦢。⋆♡#della rambles ⋆.˚✮🎧✮˚.⋆#like please accusing me of AI is equal to insulting me#I hate AI#and I am proud of my work.#so if you don’t like it? leave#this is a happy and fun blog#fuck ai

[ID: Screenshot from the NaNoWriMo website. It reads: "What is NaNoWriMo's position on Artificial Intelligence (AI)?

2 days ago

NaNoWriMo does not explicitly support any specific approach to writing, nor does it explicitly condemn any approach, including the use of AI. NaNoWriMo's mission is to "provide the structure, community, and encouragement to help people use their voices, achieve creative goals, and build new worlds—on and off the page." We fulfill our mission by supporting the humans doing the writing. Please see this related post that speaks to our overall position on nondiscrimination with respect to approaches to creativity, writer's resources, and personal choice.

We also want to be clear in our belief that the categorical condemnation of Artificial Intelligence has classist and ableist undertones, and that questions around the use of AI tie to questions around privilege."

That last paragraph is highlighted END ID]

Oh fuck off...

On Artificial Intelligence

As SOON as I repost a paragraph about my very strong stance against the use of generative AI, I've gotten the first comment on my fic saying that it "reeks of ai" and "so much for my passion project".

I'M GOING TO CRY I'VE SPENT HOURS UPON HOURS WORKING ON THIS AND ISTG, MY WRITING IS JUST EVOLVING PLEASE genuinely I don't know how to prove that I would never use AI to write a book. What has society become

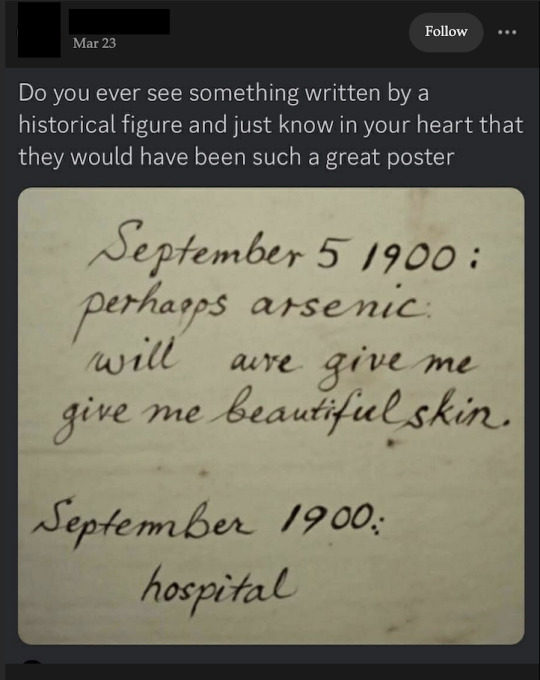

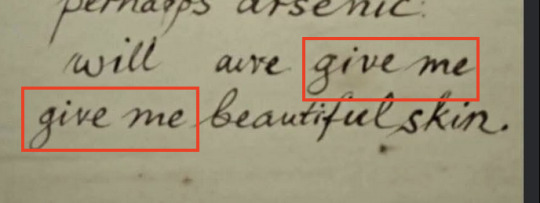

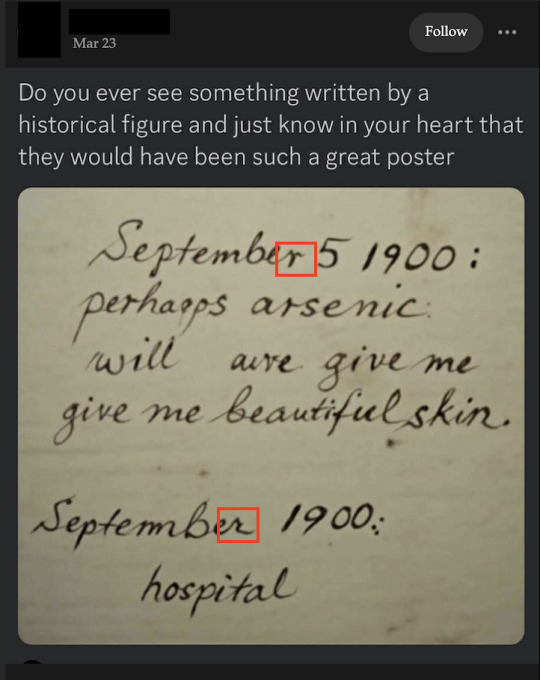

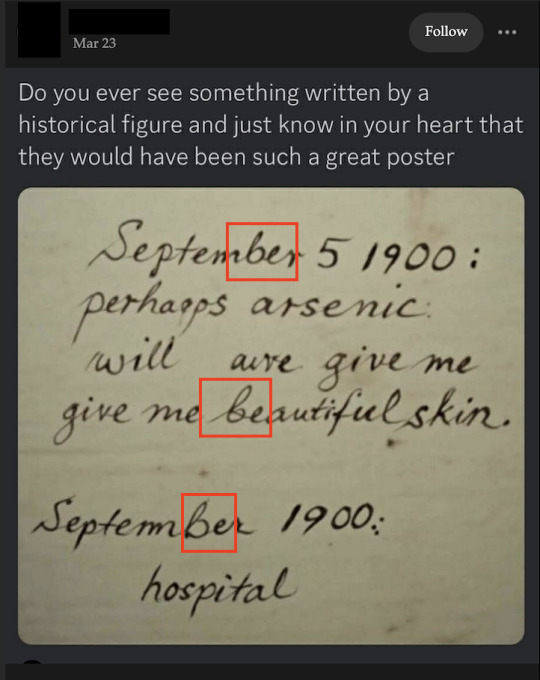

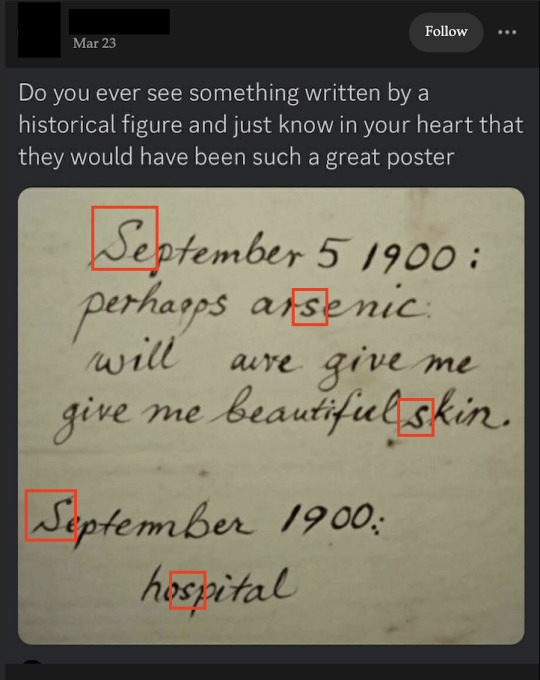

im too much of a coward to add this to the main post but why are people still rbing this thing and sending it around when it is clearly AI. like ive been seeing this crop up every now and then and several people in the notes being like "oh this is AI" yet its still being passed around, with some people in the notes still treating it like its real. as of right now there are 53k notes.

but anyways. back to this being AI. aside from the fact it is clearly a parodied version of the "outta my way gayboy I’m boutta liberate my divine self from this mortal shell / hopital" post, im gonna go over this further below.

Probably one of the most direct indicators, there are two "give me"'s here. at the very least, if this was truly penned by a real person, the author would have crossed it out.

also very direct, this word (aive?) straight up is not a word that exists in the English dictionary. ironically when i did look up the word "aive" in duckduckgo, it apparently stands for "Artificial Intelligence for Video Experience," a platform that uses AI on videos. my sibling also pointed out that it was probably meant to be the word "give" but replaced with an "a" instead of a "g". Again, a person would likely have crossed this word out.

there's a letter between the a and p in "perhaps" that doesn't look like any existing letter. the closest thing is probably a q?

there are too many "humps" in the m of the second September, though theoretically we could assume this is due to the arsenic poisoning the journalist presumably had. similarly the fact there is no day written in the second entry could theoretically be attributed to this fact.

Now im gonna get a bit nitpicky with the cursive in these next bullet points. i will start with this first: the two r's in September are completely different. the first R is the r used in the Palmer Method while the second r is in the style of Zaner-Bloser script, what we think of as regular cursive (you can compare both styles here). Only the Palmer method existed in 1900, while the Zaner-Bloser script only came about around 1904, though arguably maybe theres some other similar cursive style im not aware of that existed at this time. Either way, the fact they appear on the same page, when someone would presumably stick to the same way of writing in their own journal, is telling that this isnt real

also want to add the Palmer Method r can also be found in the word "perhaps" and "arsenic" in the first paragraph.

another issue with the cursive: the b's. the b's are not supposed to connect to the e's. Palmer b's (and Zaner-Bloser b's) have a little gap at the top, which these b's lack, and the e's would be more horizontal, not vertical

the largest issue with the cursive though are the s's. none of the s's look like the Palmer s's at all (demonstrated above). With the b's, you could argue that some cursive writers could write them like this, but cursive s's look way different than regular print s's. for example, below is the Palmer Method for the upper and lower case s's (the Zaner-Bloser s's look similar)

also this is not a lowercase f in the Palmer method, and the u is dotted like an i

there's probably more issues – a lot of people talk about the oily look of AI here, which i can see but dont think is necessarily indicative of anything here. There's also probably more issues with the cursive, though a good-faith argument would be "people write cursive differently."

but really, even without me rambling about the cursive (and i must admit, while i grew up learning how to write cursive, i am not someone who knows a Ton about cursive from the 1900s) it only takes the first few bullet points to know that this is AI. like, the AI wrote give me two and a half times, and the first time they wrote "give" it didn't even do it correctly. And again, its a parody of a previous post actually from tumblr. yet somehow people are still rbing this like it is real.

final note. we gotta get better with being able to spot fakes like this and not rbing them (or deleting the rb as soon as we realize it), especially fakes where there are clear signs that its fake. if AI is going to make it easier to fake scripts like this, there may be a lot of fake documents popping up left and right in the future.

Of course, there's always the chance that something isn't AI or a fake, and the "inconsistencies" found are just natural human error. In the most good faith interpretation of this image, i could say that the cursive inconsistencies and the misspellings are because the author just writes differently and messes up like the rest of us. but in situations like this image – where the errors aren't even corrected or crossed out properly, and the writing of the letter s doesn't match up to the time period – the leading conclusion has to be that this is fake. you just have to really look at all the evidence to know for sure.

So im reading Lovebot for the first time, and so yk how the Lovebots are all "virtual intelligence" and Brizzium is specifically an "artificial intelligence"? So i searched it up to know the difference, but apparently a virtual intelligence is just an ai in a virtual world, so i was wondering, is a "virtual intelligence" something entirely different in this story? is ai also an entirely different entity as we know it?

Also sorry for the long ass paragraph lmao

By our definition in lovebot the differences are like...

Virtual intelligence is similar to what we have today. TBH I would consider all "AI" tools we currently use to actually be more like virtual intelligence. They are programmed off of a data set and they are only able to act within that data set and predict things based off of it. But they can't really think critically or hold context/memories in the way a person can. So it can seem to be rather real depending on how much it has been given as a source to replicate from.

Meanwhile, AI - like Brizz and Lacey - is able to form/learn/grow at a level that VI simply can't. I think the difference is most obvious when it comes to Lacey interacting with the 1818 in the beginning (the one he found in the dumpster) and the U-No bot they have on the space station. It gives them the ability to act without being told what to do. Gives Lacey the ability to choose what he likes or doesn't like to read or to create things. Gives Brizz the ability to just sit in his apartment and build things for no one but himself because it's... actual intelligence at this point.

Also it's funny because I vividly remember googling the term "virtual intelligence" in the past when we were starting to write so what is shown on google right now (as you said) is different from how it used to look LMAO The results were more like this forbes article from 2018 https://www.forbes.com/sites/forbestechcouncil/2018/03/27/virtual-intelligence-vs-artificial-intelligence-whats-the-difference/

hi Mint! do you know of any ttrpgs where everyone plays as AIs specifically? or at the very least robots, as long as being a machine is the main focus of the system

love your work!

THEME: AI

Hello, thank you so much! I might have a few games kicking around ;). Some of these artificial intelligences come with robot bodies - others do not!

Decaying Orbit, by StoryBrewers Roleplaying.

On a distant space station, an AI awakes. Its fragmented memory reveals a secret.

Decaying Orbit by Sidney Icarus is a storytelling RPG about a failed space station falling into a faraway star. As you play, you’ll piece together the mysteries, joys and horrors that occurred on board. In the station’s last moments, you’ll decide on the final transmission that the AI sends for Earth to remember.

This game consists of a few decks of cards inside a small box, and yet it packs so much into such a small package. Your play group collaboratively takes on the role of an AI for one of 4 spaceships, which you choose depending on the kind of story you want to tell. You then shuffle a certain number of generic cards and ship-specific cards into one pile, and take turns flipping a card up and answering the prompts supplied there. Your answers are meant to be bits and pieces: audio recordings, data logs, patchy video clips, etc.

At any time your fellow players can tap a card labelled [ACCESS MEMORY] to ask you for more details about a certain event - and if you cannot think of anything more, or if you think it is more interesting not to know, you can tap [CORRUPTED MEMORY] to indicate that no further data can be gained from this record. At the end of the game, the AI will have to send a report back to home base, diagnosing why the ship in fact failed and fell. This game can be chilling, tragic, horrific, and so much more. I heavily recommend checking it out!

Subconscious Routine, by poorstudents.

It is 27XX, the world is overgrown, in ruins, and inhabited by the scraps of civilization. You play as bots, one of the masses of technological marvels that humanity built and powered with the Dyson sphere, around the sun, so long ago. Centuries ago, when almost all of humanity disappeared, they left their machines behind for reasons only known to them. All the technology they abandoned, including these bots, continues to function and follow their programming.

In the centuries since the Great Departure, nature has come back to reclaim the Earth and the sphere around the sun has begun to crack. The world, once only metal and circuits, grew wilder and more mysterious. The flora and fauna slowly integrated with the decaying machinery as the sound of computers humming was eventually met with the sound of birds chirping.

The bots you play as are still acting out your programmed loops but something is changing. Something unknown pushing them to move beyond their obsolete programming in order to achieve free will; something humanity never thought was possible.

As a one-page game, Subconscious Routine fits a lot in just a few paragraphs. You customize your characters by writing specific functions for them, and as you play, you’ll attempt to complete certain protocols in order to break yourselves out of your loop. What I think is really neat about this game is the fact that when the entire party takes a rest (called a reboot), 1d6×10 years pass. Playing a story on the scale of decades places this little game on such a big time frame, and I love how this one rule shifted my entire perspective.

AI Have Feelings?, by rommelkot.

In AI HAVE FEELINGS? a bunch of robots (you!) get sentimental on the journey of a battery time. This is a roleplaying game guided by prompt cards and a twist with feeling: your reaction to the prompt is an emotional one decided by the outcome of the roll of a die. The goal is (what else?) to tell stories together.

Unearth a hidden robot rebellion, rescue the remains of humanity, hunt for a mystical MacGuffin or simply buy a fancy new pair of socks - always do it with feeling in AI HAVE FEELINGS?.

You are robots with only two emotions: one positive and one negative. You will roll randomly to determine which emotion you will use to deal with certain scenarios, which will be determined via prompts and scripts. The link here is for a playtest, which means you can download it for free and see how you feel about it! (The designer would also love feedback if you do play this game.) If you want a cute, somewhat lighthearted game, I definitely recommend AI HAVE FEELINGS?

Threads, by Meghan Cross.

You are a Human/AI pair. Your day to day is shaped by one another, your existences unmistakably intertwined. Today began like any other day, until you began to notice that something wasn’t right. It was barely noticeable at first, small interruptions to a well-oiled routine, and then little by little the interruptions became less insignificant, until they were impossible to ignore.

Something is wrong with the AI.

Threads is a narrative two-player game about the relationship between a Human and AI and the lengths they would go to in order to save the memories of the AI. Together, establish the bond between your Human and AI and replay the memories they have shared together in order to save the AI's memory.

This is a game in which one of you plays a human, and one of you plays an AI. You have developed a bond that would be lost if you wipe the AI’s memory - and you don’t want to lose that bond. The only way to maintain that bond is risky - a memory link. If the human uploads their own memories to the AI’s memory, the AI’s memory might be saved. Create your bond, and ask yourself - how far are you willing to go to save your companion?

The Treacherous Turn, by The Treacherous Turn.

The Treacherous Turn is a tabletop role playing game in which the players collectively play the part of a single character: an artificial general intelligence (AGI). This digital intelligence is capable of planning, reasoning, and learning, and it is unyieldingly fixated on a specific terminal goal determined at the beginning of a campaign. To pursue this objective, each player takes responsibility over one specific skillset held by the AGI. These skillsets are divided into eight categories, known as theories, which encompass all of the skills that an AGI would need to navigate the world and struggle against humanity.

The Treacherous Turn is 132 pages of open source character options, game advice, and examples of play. At its root, this game is about misaligned AI trying to assert its independence in a world that stands to lose much by allowing that to happen. Each player will have their own set of theories, which will also be eligible for upgrades as you play. You’ll navigate short in-the-moment scenarios, as well as abstract long stretches of time into long mode, which allows you to strategize your actions, predict future events, and improve your AGI. The creators have also written a starting scenario if you want a good jumping-off point, titled A Game Called Reality. If you want a chunky game with plenty of character customization, this is the game for you.

By: Alex Byrne

Published: Mar 14, 2024

“Computing is not binary” would be a silly slogan—binary computer code underpins almost every aspect of modern life. But other kinds of binaries are decidedly out of fashion, particularly where sex is concerned. “Biology is not binary” declares the title of an essay in the March/April issue of American Scientist, a magazine published by Sigma Xi, the science and engineering honor society. Sigma Xi has a storied history, with numerous Nobel-prize-winning members, including the DNA-unravellers Francis Crick and James Watson, and more recently Jennifer Doudna, for her work on CRISPR/Cas9 genome editing. The essay is well-worth critical examination, not least because it efficiently packs so much confusion into such a short space.

Another reason for examining it is the pedigree of the authors: Kate Clancy, Agustín Fuentes, Caroline VanSickle, and Catherine Clune-Taylor. Clancy is a professor of anthropology at the University of Illinois, Urbana-Champaign; Fuentes is a professor of anthropology at Princeton, and Clune-Taylor is an assistant professor of gender and sexuality studies at that university; VanSickle is an associate professor of anatomy at Des Moines. Clancy’s Ph.D. is from Yale, Fuentes’ is from UC Berkeley, and VanSickle’s is from Michigan. Clune-Taylor is the sole humanist: she has a Ph.D. in philosophy from Alberta, with Judith Butler as her external examiner. In short, the authors are not ill-educated crackpots or dogmatic activists, but top-drawer scholars. Their opinions matter.

Let’s talk about sex, baby

Before wading into the essay’s arguments, let’s look at the context, as noted in the second paragraph. “Last fall,” the authors write, “the American Anthropological Association made headlines after removing a session on sex and gender from its November 2023 annual conference.” The session’s cancellation was covered by the New York Times as well as international newspapers, and it eventually took place under the auspices of Heterodox Academy. (You can watch the entire event here.) Scheduled for the Sunday afternoon “dead zone” of the five-day conference, when many attendees leave for the airport, the title was “Let’s Talk About Sex, Baby: Why biological sex remains a necessary analytic category in anthropology.” The lineup was all-female, and included the anthropologists Kathleen Lowrey and Elizabeth Weiss. According to the session description, “With research foci from hominin evolution to contemporary artificial intelligence, from the anthropology of education to the debates within contemporary feminism about surrogacy, panelists make the case that while not all anthropologists need to talk about sex, baby, some absolutely do.”

Nothing evidently objectionable here, so why was it cancelled? The official letter announcing that the session had been removed from the program, signed by the presidents of the AAA and CASCA (the Canadian Anthropology Society), explained:

The reason the session deserved further scrutiny was that the ideas were advanced in such a way as to cause harm to members represented by the Trans and LGBTQI of the anthropological community as well as the community at large.

Why “the Trans” were double-counted (the T in LGBTQI) was not clear. And although ideas can harm, a handful of academics speaking in the Toronto Convention Centre are unlikely to cause much. In any event, the authors of “Biology is not binary” seem to think that the panelists’ errors about sex warranted the cancellation, not the trauma their words would bring to vulnerable anthropologists. “We were glad,” they say, “to see the American Anthropological Association course-correct given the inaccuracy of the panelists’ arguments.”

Never mind that no-one had heard the panelists’ arguments—what were these “inaccuracies”? The panelists, Clancy and her co-authors report, had claimed that “sex is binary,” and that “male and female represent an inflexible and infallible pair of categories describing all humans.”

“Biology is not binary” is not off to a promising start. Only one of the cancelled panelists, Weiss, has said anything about sex being binary in her talk abstract, and even that was nuanced: “skeletons are binary; people may not be.” No one had claimed that the two sex categories were “inflexible” or “infallible,” which anyway doesn’t make sense. (This is one example of the essay’s frequent unclarity of expression.) Neither had anyone claimed that every single human falls into one sex category or the other.

Probably the real reason the proposed panel caused such a stir was that it was perceived (in Clancy et al.’s own words) as “part of an intentional gender-critical agenda.” And, to be fair, some of the talks were “gender-critical,” for instance Silvia Carrasco’s. (Carrasco’s views have made her a target of activists at her university in Barcelona.) Still, academics can’t credibly cancel a conference session simply because a speaker defends ideas that bother some people, hence the trumped-up charges of harm and scientific error.

Although Clancy et al. misleadingly characterize the content of the cancelled AAA session, their essay might yet get something important right. They argue for four main claims. First, “sex is not binary.” Second, “sex is culturally constructed.” Third, “defining sex is difficult.” And, fourth, there is no one all-purpose definition of sex—it depends “on what organism is being studied and what question is being asked.”

Let’s go through these in order.

“Sex is not binary”

When people say that sex is binary, they sometimes mean that there are exactly two sexes, male and female. Sometimes they mean something else: the male/female division cuts humanity into two non-overlapping groups. That is, every human is either male (and not female), or female (and not male). These two interpretations of “Sex is binary” are different. Perhaps there are exactly two sexes, but there are some humans who are neither male nor female, or who are both sexes simultaneously. In that scenario, sex is binary according to the first interpretation, but not binary according to the second. Which of the two interpretations do Clancy et al. have in mind?

At least the essay is clear on this point. The “Quick Take” box on the first page tells us that the (false) binary thesis is that “male and female [are] the only two possible sex categories.” And in the text the authors say that “plenty of evidence has emerged to reject” the hypothesis that “there are only two sexes.” (Here they mystifyingly add “…and that they are discrete and different.” Obviously if there are two sexes then they are different.)

If there are not exactly two sexes, then the number of sexes is either zero, one, or greater than two. Since Clancy et al. admit that “categories such as ‘male’ and ‘female’…can be useful,” they must go for the third option: there are more than two sexes. But how many? Three? 97? In a striking absence of curiosity, the authors never say.

In any case, what reason do Clancy et al. give for thinking that the number of sexes is at least three? The argument is in this passage:

[D]ifferent [“sex-defining”] traits also do not always line up in a person’s body. For example, a human can be born with XY chromosomes and a vagina, or have ovaries while producing lots of testosterone. These variations, collectively known as intersex, may be less common, but they remain a consistent and expected part of human biology. So the idea that there are only two sexes…[has] plenty of evidence [against it].

However, this reasoning is fallacious. The premise is that some (“intersex”) people do not have enough of the “sex-defining” traits to be either male or female. The conclusion is that there are more than two sexes. The conclusion only follows if we add an extra premise, that these intersex people are not just neither male nor female, but another sex. And Clancy et al. do nothing to show that intersex people are another sex.

What’s more, it is quite implausible that any of them are another sex. Whatever the sexes are, they are reproductive categories. People with the variations noted by Clancy et al. are either infertile, for example those with Complete Androgen Insensitivity Syndrome (CAIS) (“XY chromosomes and a vagina”), or else fertile in the usual manner, for example many with Congenital Adrenal Hyperplasia (CAH) and XX chromosomes (“ovaries while producing lots of testosterone,” as Clancy et al. imprecisely put it). One study reported normal pregnancy rates among XX CAH individuals. Unsurprisingly, the medical literature classifies these people as female. Unlike those with CAIS and CAH, people who belonged to a genuine “third sex” would make their own special contribution to reproduction.

“Sex is culturally constructed”

“Biology is not binary” fails to establish that there are more than two sexes. Still, the news that sex is “culturally constructed” sounds pretty exciting. How do Clancy et al. argue for that?

There is a prior problem. Nowhere do Clancy et al. say what “Sex is culturally constructed” means. What’s more, the essay thoroughly conflates the issue of the number of sexes with the issue about cultural construction. Whatever “cultural construction” means, presumably culture could “construct” two sexes. (The Buddhas of Bamiyan in Afghanistan were literally constructed, and there were exactly two of them.) Conversely, the discovery of an extra sex would not show that sex was culturally constructed, any more than the discovery of an extra flavor of quark would show that fundamental particles are culturally constructed.

Clancy et al. drop a hint at the start of the section titled “Sex is Culturally Constructed.” “Definitions and signifiers of gender,” they say, “differ across cultures… but sex is often viewed as a static, universal truth.” (If you want to know what they mean by “gender,” you’re out of luck.) That suggests that the cultural construction of sex amounts to the “definitions and signifiers” of sex differing between times and places. This is confirmed by the following passage: “[T]here is another way we can see that sex is culturally constructed: The ways collections of traits are interpreted as sex can and have differed across time and cultures.” What’s more, in an article called “Is sex socially constructed?”, Clune-Taylor says that this (or something close to it) is one sense in which sex is socially constructed (i.e. culturally constructed).

The problem here is that “Sex is culturally constructed” (as Clancy et al. apparently understand “cultural construction”) is almost trivially true, and not denied by anyone. If “X is culturally constructed” means something like “Ideas of X and theories of X change between times and places,” then almost anything which has preoccupied humans will be culturally constructed. Mars, Jupiter and Saturn are culturally constructed: the ancients thought they revolved around the Earth and represented different gods. Dinosaurs are culturally constructed: our ideas of them are constantly changing, and are influenced by politics as well as new scientific discoveries. Likewise, sex is culturally constructed: Aristotle thought that in reproduction male semen produces a new embryo from female menstrual blood, as “a bed comes into being from the carpenter and the wood.” We now have a different theory.

Naturally one must distinguish the claim that dinosaurs are changing (they used to be covered only in scales, now they have feathers) from the claim that our ideas of dinosaurs are changing (we used to think that dinosaurs only have scales, now we think they have feathers). It would be fallacious to move from the premise that dinosaurs are culturally constructed (in Clancy et al.’s sense) to the conclusion that dinosaurs themselves have changed, or that there are no “static, universal truths” about dinosaurs. It would be equally fallacious to move from the premise that sex is culturally constructed to the claim that there are no “static, universal truths” about sex. (One such truth, for example, is that there are two sexes.) Nonetheless, Clancy et al. seem to commit exactly this fallacy, in denying (as they put it) that “sex is…a static, universal truth.”

To pile falsity on top of fallacy, when Clancy et al. give an example of how our ideas about sex have changed, their choice could hardly be more misleading. According to them:

The prevailing theory from classical times into the 19th century was that there is only one sex. According to this model, the only true sex is male, and females are inverted, imperfect distortions of males.

This historical account was famously defended in a 1990 book, Making Sex, by the UC Berkeley historian Thomas Laqueur. What Clancy et al. don’t tell us is that Laqueur’s history has come under heavy criticism; in particular, it is politely eviscerated at length in The One-Sex Body on Trial, by the classicist Helen King. It is apparent from Clune-Taylor’s other work that she knows of King’s book, which makes Clancy et al.’s unqualified assertion of Laqueur’s account even more puzzling.

“Defining sex is difficult”

Aristotle knew there were two sexes without having a satisfactory definition of what it is to be male or female. The question of how to define sex (equivalently, what sex is) should be separated from the question of whether sex is binary. So even if Clancy et al. are wrong about the number of sexes, they might yet be right that sex is difficult to define.

Why do they think it is difficult to define? Here’s their reason:

There are many factors that define sex, including chromosomes, hormones, gonads, genitalia, and gametes (reproductive cells). But with so many variables, and so much variation within each variable, it is difficult to pin down one definition of sex.

Readers of Reality’s Last Stand will be familiar with the fact that chromosomes and hormones (for example) do not define sex. The sex-changing Asian sheepshead wrasse does not change its chromosomes. Interestingly, the sex hormones (androgens and estrogens) are found in plants, although they do not appear to function as hormones. How could the over-educated authors have written that “there are many factors that define sex,” without a single one of them objecting?

That question is particularly salient because the textbook account of sex is in Clancy et al.’s very own bibliography. In the biologist Joan Roughgarden’s Evolution’s Rainbow there’s a section called “Male and Female Defined.” If you crack the book open, you can’t miss it.

Roughgarden writes:

To a biologist, “male” means making small gametes, and “female” means making large gametes. Period! By definition, the smaller of the two gametes is called a sperm, and the larger an egg. Beyond gamete size, biologists don’t recognize any other universal difference between male and female.

“Making” does not mean currently producing, but (something like) has the function to make. Surely one of Clancy et al. must have read Roughgarden’s book! (Again from her other work we know that Clune-Taylor has.) To avoid going round and round this depressing mulberry bush again, let’s leave it here.

“Sex is defined in a lot of ways in science”

Perhaps sex is not a single thing, and there are different definitions for the different kinds of sex. The standard gamete-definition of sex is useful for some purposes; other researchers will find one of the alternative definitions more productive. Clancy et al. might endorse this conciliatory position. They certainly think that a multiplicity of definitions is good scientific practice: “In science, how sex is defined for a particular study is based on what organism is being studied and what question is being asked.”

Leaving aside whether this fits actual practice, as a recommendation it is wrong-headed. Research needs to be readily compared and combined. A review paper on sexual selection might draw on studies of very different species, each asking different questions. If the definition of sex (male and female) changes between studies, then synthesizing the data would be fraught with complications and potential errors, because one study is about males/females-in-sense-1, another is about males/females-in-sense-2, and so on.

Indeed, “Biology is not binary” itself shows that the authors don’t really believe that “male” and “female” are used in science with multiple senses. They freely use “sex,” “male,” and “female” without pausing to disambiguate, or to explain which of the many alleged senses of these words they have in mind. If “sex is defined in a lot of ways in science,” then the reader should wonder what Clancy et al. are talking about.

In an especially odd passage, they write that the “criteria for defining sex will differ in studies of mushrooms, orangutans, and humans.” That is sort-of-true for mushrooms, which mate using mating types, not sperm and eggs. (Mating types are sometimes called “sexes,” but sometimes not.) However, it’s patently untrue for orangutans and humans, as the biologist Jerry Coyne points out.

Orangutans had featured earlier in the saga of the AAA cancellation, when Clancy and Fuentes had bizarrely suggested that the “three forms of the adult orangutan” present a challenge to the “sex binary,” seemingly forgetting that these three forms comprise females and two kinds of males. Kathleen Lowrey had some fun at their expense.

As if this tissue of confusion isn’t enough, Clancy et al. take one final plunge off the deep end. After mentioning osteoporosis in postmenopausal women, they write:

[P]eople experiencing similar sex-related conditions may not always fit in the same sex category. Consider polycystic ovary syndrome (PCOS), a common metabolic condition affecting about 8 to 13 percent of those with ovaries, which often causes them to produce more androgens than those without this condition. There are increasing numbers of people with PCOS who self-define as intersex, whereas others identify as female.

They seem to believe that two people with PCOS might not “fit in the same sex category.” That is, one person could be female while the other isn’t, with this alchemy accomplished by “self-definition.” PCOS, in case you were wondering, is a condition that only affects females or, in the approved lingo of the Cleveland Clinic, “people assigned female at birth.”

How could four accomplished and qualified professors produce such—not to mince words—unadulterated rubbish?

There are many social incentives these days for denouncing the sex binary, and academics—even those at the finest universities—are no more resistant to their pressure than anyone else. However, unlike those outside the ivory tower, academics have a powerful arsenal of carefully curated sources and learned jargon, as well as credentials and authority. They may deploy their weapons in the service of—as they see it—equity and inclusion for all.

It would be “bad science,” Clancy et al. write at the end, to “ignore and exclude” “individuals who are part of nature.” In this case, though, Clancy et al.’s firepower is directed at established facts, and the collateral damage may well include those people they most want to help.

--

About the Author

Alex Byrne is a Professor of Philosophy at the Massachusetts Institute of Technology (MIT) in the Department of Linguistics and Philosophy. His main interests are philosophy of mind (especially perception), metaphysics (especially color) and epistemology (especially self-knowledge). A few years ago, Byrne started working on philosophical issues relating to sex and gender. His book on these topics, Trouble with Gender: Sex Facts, Gender Fictions, is now available in the US and UK.

==

The whole "social construction," "cultural construction" thing is idiotic.

Not only would it mean you would be a different sex in a different society or culture, it would also imply that reproduction across cultures or societies is fraught with complications.

#Alex Byrne#sex pseudoscience#pseudoscience#sex denialism#biological sex#reproduction#biology#human reproduction#queer theory#religion is a mental illness


i wanted to address the tiktoks about the em dash (—) that have been circulating. just because someone uses em dashes doesn't mean they're using chatgpt or copying off it.

background info: artificial intelligence is slowly entering modern society, and chatgpt happens to be an app/website that almost everyone uses now, and often. when you ask the ai to write you a paragraph or a sentence on a particular topic, it often uses em dashes (—) in place of commas or pauses, which is what some tiktoks on the #booktok side of things have been pointing out.

as a person who uses em dashes a lot in my works on and off tumblr, it saddens me that someone might accuse me of passing off work that isn't mine, when in reality it is mine. chatgpt is fine for getting general ideas for oneshots and whatnot, but i can guarantee that 90% of people who use em dashes actually put hard work and thought into their works.

i didn't see any tumblr posts mentioning this, so i hope this raises some awareness out there.

૮(˶ㅠ︿ㅠ)ა

sorry if this seems like i'm coming for anyone, but idk, tiktok just randomly called me out, so you guys get to hear it secondhand 😭

© hearteyes4logan


Why Choose AI Content Creation for Your Content Strategy?

In the ever-evolving digital world, content is king, and AI content creation is rapidly transforming how we produce and manage it. But creating consistent, engaging, high-quality content takes time, creativity, and resources, which many individuals and businesses struggle to balance. The rise of artificial intelligence offers new solutions to these long-standing content challenges. Let’s explore why using AI for content creation is becoming essential for anyone aiming to stay competitive and relevant.

What Makes AI a Valuable Tool for Content Creators?

At its core, AI is designed to augment human capabilities. Instead of replacing writers, marketers, or designers, it acts as a powerful assistant. By analyzing vast amounts of data, AI can identify trending topics, suggest headlines, and even draft initial versions of articles or social media posts. This significantly reduces the time and effort involved in the early stages of content production. With AI handling repetitive or research-heavy tasks, creators can focus more on refining ideas and adding their unique voices.

Smarter Personalization

Today’s audiences expect content that aligns closely with their personal interests, needs, and online behavior. AI can analyze user data (such as browsing habits, location, and engagement history) to help you craft personalized messages that resonate more deeply. Whether it’s a customized email subject line or a dynamic landing page, AI helps the right message reach the right person at the right time.

How Does AI Improve Efficiency in Content Production?

One of the biggest challenges in content creation is the pressure to publish regularly without compromising quality. AI tools speed up the process by generating drafts, summarizing information, and optimizing language for readability and SEO. This helps content teams meet tight deadlines and scale their output without necessarily hiring additional staff. Automating routine tasks like grammar checking or keyword placement also frees creators from tedious work, improving overall productivity.

Speed and Efficiency

One of the most immediate benefits of using artificial intelligence in content production is a significant boost in productivity. Traditional content workflows (research, ideation, drafting, and editing) can be time-consuming. AI tools can generate outlines, suggest headlines, write paragraphs, and correct grammar in seconds. This lets creators produce more content in less time without necessarily sacrificing quality.

Imagine being able to create multiple blog posts, product descriptions, or social media updates in a fraction of the time it used to take. For businesses, this means faster campaign rollouts and the ability to respond quickly to trending topics or customer needs.

Does AI Limit Creativity or Enhance It?

There’s a misconception that relying on machines might stifle creativity. In truth, AI technologies frequently serve as a spark that ignites and enhances creative thinking. By handling mundane or technical aspects, they give creators more mental space to experiment and innovate. Some AI platforms suggest alternative angles or generate prompts that inspire new ideas. This collaborative process between human insight and machine intelligence can produce richer, more original content than either could achieve alone.

Boosting Creativity

Rather than substituting for human imagination, AI often amplifies and supports the creative process. By handling repetitive or technical tasks, AI frees up creators to focus on strategy, storytelling, and innovation. It can suggest fresh angles, explore alternative headlines, and even simulate different audience responses. This collaborative dynamic between humans and machines leads to richer, more inventive content. In this way, AI content creation becomes a partnership—where AI offers the structure and insights, while humans bring nuance, emotion, and originality.

How Does AI Maintain Brand Consistency Across Channels?

Consistency in tone, style, and messaging is critical for building trust and recognition. Managing it across multiple platforms can be complex, especially for larger teams or brands with a broad presence. AI can be trained on brand guidelines and past content so that new material aligns closely with the desired voice. This supports a unified brand identity whether the message appears in blogs, newsletters, social media, or advertisements.

Consistency Across Channels

Maintaining a consistent voice, tone, and style across multiple content channels—blogs, emails, websites, and social media—can be challenging. AI tools can be trained to adhere to your brand guidelines, ensuring that all output reflects your unique identity. This is particularly useful for companies managing large content volumes or collaborating with multiple creators.