#cpython

Explore tagged Tumblr posts

Text

Python Code: Compiled or Interpreted? The Truth Behind Python Execution

The article “Python Code: Compiled or Interpreted?” clarifies how Python blends compilation and interpretation. It explains that Python source code is first compiled into bytecode, which is then interpreted by the Python Virtual Machine (PVM). This hybrid design affects performance and portability: the bytecode step keeps Python flexible and portable, but interpreting it makes Python slower than languages compiled ahead of time to machine code.
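The two stages described above can be observed from inside Python itself. The following is a minimal sketch, not taken from the article: the variable names and the one-line program are made up for illustration, and the exact instruction listing printed by `dis` differs between CPython versions.

```python
import dis

source = "total = price * quantity"

# Stage 1: compile the source text into a code object that holds bytecode.
code = compile(source, "<example>", "exec")

# Stage 2: the interpreter loop (the "PVM") executes that bytecode.
namespace = {"price": 3, "quantity": 4}
exec(code, namespace)

print(namespace["total"])  # 12
dis.dis(code)  # human-readable bytecode listing; opcode names vary by version
```

The raw bytecode is also available as `code.co_code`, which is what CPython caches in `.pyc` files.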

0 notes

Text

hey quick question what language is the python interpreter written in?

Rules:

Python is the best language for real people (aka people who are not designated software devs)

This blog is a consolidation of all my rage. Main blog is a totally-mysterious grad student biologist tgirl who does bioinformatics. If you want politeness, go there.

Science has found NO NEED for AI more advanced than scikit-learn

The best IDE is the built in Ubuntu text editor

Prep for a day of computational research with a refreshing shower coffee

If you use C or its variants I'm throwing you out of a bus window

If you use R.... I'm so sorry

If you use Java..... Who hurt you?

385 notes


Text

python is often seen by neophytes and intermediates as, well, a neophytic language because of its (extremely superficial, by a generous stretch of the imagination) resemblance to english but it's been in my experience that if you're not just doing brainless scripting work that's isomorphic to all imperative languages and are making an honest-to-god piece of software with it, that you need a hell of a lot of background knowledge to do sufficiently advanced things. maybe with regards to the cpython vm.

32 notes


Text

i feel like its ok if java dies now though, bc wasm exists. the dream of virtual stack-machine runtimes that any language can compile to and any computer can run lives on. if it was just cpython bytecode and v8 bytecode and ruby bytecode and [thundermonkeyrandompenguin xdlolsorandom mozilla is great but who can fucking remember their potato ninja epic pirate bacon-ass names] bytecode its like those arent Things in the way the JVM is bc they're implementation defined. you can pet the jvm. with your mind. and it purrs.

14 notes


Text

Nothing encapsulates my misgivings with Docker as much as this recent story. I wanted to deploy a PyGame-CE game as a static executable, and that means compiling CPython and PyGame statically, and then linking the two together. To compile PyGame statically, I need to statically link it to SDL2, but because of SDL2 special features, the SDL2 code can be replaced with a different version at runtime.

I tried, and failed, to do this. I could compile a certain version of CPython, but some of the dependencies of the latest CPython gave me trouble. I could compile PyGame with a simple makefile, but it was more difficult with meson.

Instead of doing this by hand, I started to write a Dockerfile. It's just too easy to get this wrong otherwise, or at least not get it right in a reproducible fashion. Although everything I was doing was just statically compiled, and it should all have worked with a shell script, it didn't work with a shell script in practice, because cmake, meson, and autotools all leak bits and pieces of my host system into the final product. Some things, like libGL, should never be linked into or distributed with my executable.

I also thought that, if I was already working with static compilation, I could just link PyGame-CE against cosmopolitan libc, and have the SDL2 pieces replaced with a dynamically linked libSDL2 for the target platform.

I ran into some trouble. I asked for help online.

The first answer I got was "You should just use PyInstaller for deployment"

The second answer was "You should use Docker for application deployment. Just start with

FROM python:3.11

and go from there"

The others agreed. I couldn't get through to them.

It's the perfect example of Docker users seeing Docker as the solution for everything, even when I was already using Docker (actually Podman).

I think in the long run, Docker has already caused, and will continue to cause, these problems:

Over-reliance on containerisation is slowly making build processes, dependencies, and deployment more brittle than necessary, because it still works in Docker

Over-reliance on containerisation is making the actual build process outside of a container or even in a container based on a different image more painful, as well as multi-stage build processes when dependencies want to be built in their own containers

Container specifications usually don't even take advantage of a known static build environment, for example by hard-coding a makefile, negating the savings in complexity

5 notes


Text

Introduction to Python

Python is a widely used, general-purpose, high-level programming language. It was created by Guido van Rossum, first released in 1991, and is now managed by the Python Software Foundation. It was designed with an emphasis on code readability, and its syntax (the set of rules that govern the structure of code) allows programmers to express concepts in fewer lines of code.

Python is a programming language that lets you work quickly and integrate systems more efficiently.

Core topics include data types (int, float, Boolean (True or False), string, and list); variables, expressions, statements, operator precedence, and comments; and modules and functions (what a function is and how it is used, flow of execution, parameters and arguments).

Programming in python

To start programming in Python, you will need an interpreter. An interpreter is a program that reads, translates, and executes code line by line, instead of translating the entire program into machine code ahead of time as a compiler does.

Popular Python interpreters

CPython

Jython

PyPy

IronPython

MicroPython

IDEs

Many programmers also use IDEs (Integrated Development Environments), which are software applications that provide an extensive set of tools and features to support software development.

Examples of IDEs

PyCharm

Visual Studio Code (VS Code)

Eclipse

Xcode

Android Studio

NetBeans

2 notes


Text

Cheers to CPython 3.7 reaching EoL, since it means that positional-only arguments are now available on every officially supported version of CPython.
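For anyone who has not used the feature yet: positional-only parameters arrived in Python 3.8 and are marked with a `/` in the signature. A minimal sketch follows; the `clamp` function is a made-up example and not from any library.

```python
def clamp(value, low, high, /):
    """Everything before the slash can only be passed by position."""
    return max(low, min(value, high))


print(clamp(15, 0, 10))  # 10

keyword_error = None
try:
    # Passing the same arguments by keyword is rejected.
    clamp(value=15, low=0, high=10)
except TypeError as exc:
    keyword_error = exc
    print("rejected:", exc)
```

The practical benefit is that the parameter names stop being part of the public API, so they can be renamed later without breaking callers.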

3 notes


Text

Checking Out CPython 3.14's remote debugging protocol

https://rtpg.co/2025/06/28/checking-out-sys-remote-exec/

0 notes

Text

4 tips for getting started with free-threaded Python

Until recently, Python threads ran without true parallelism, as threads yielded to each other for CPU-bound operations. The introduction of free-threaded or ‘no-GIL’ builds in Python 3.13 was one of the biggest architectural changes to the CPython interpreter since its creation. Python threads were finally free to run side by side, at full speed. The availability of a free-threaded build is also…
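As a rough illustration of what this means in practice, the sketch below runs a CPU-bound function on four threads and reports whether the GIL is active. It is an assumption-laden example rather than code from the article: `count_primes` is a made-up workload, and `sys._is_gil_enabled()` only exists on Python 3.13 and later, so the code falls back to "GIL enabled" on older versions.

```python
import sys
from concurrent.futures import ThreadPoolExecutor


def count_primes(limit):
    """CPU-bound work: count the primes below `limit` by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


# sys._is_gil_enabled() was added in 3.13; older interpreters always have a GIL.
gil_check = getattr(sys, "_is_gil_enabled", None)
gil_enabled = gil_check() if gil_check is not None else True

with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(count_primes, [5000] * 4))

print("GIL enabled:", gil_enabled)
print(results)
```

On a regular build the four calls take turns on one core; on a free-threaded build they can run on four cores at once, and the code itself does not change.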

0 notes

Text

What is the Python Language Summit?

The Python Language Summit is an event for the developers of Python implementations (CPython, PyPy, MicroPython, GraalPython, IronPython, and so on) to share information, discuss our shared problems, and, hopefully, solve them.

These issues might be related to the language itself, the standard library, the development process, the status of Python 3.14 (and plans for 3.15), the documentation, packaging, the website, et cetera. The Summit focuses on discussions and consensus-seeking, more than merely on presentations.

Who Can Attend a Python Summit?

A Python Summit is designed for a wide range of participants, including:

Students & Freshers

Software Developers & Engineers

Data Scientists & Analysts

Educators & Trainers

Startups & Entrepreneurs

At TCCI Computer Coaching, we teach Python from basic to advanced level, both online and offline.

To learn more about Python:

Call us @ +91 98256 18292

Visit us @ http://tccicomputercoaching.com/

#PythonSummit#PythonDevelopment#DeveloperEvents#TCCI#TechConference#PythonCommunity#TCCIAhmedabad#OpenSourcePython

0 notes

Text

Improving Python Threading Strategies For AI/ML Workloads

A Solution to Python's Threading Dilemma

Python excels at AI and machine learning. However, CPython, the language's reference implementation and bytecode interpreter, lacks intrinsic support for parallel processing and multithreading. The notorious Global Interpreter Lock (GIL) “locks” the CPython interpreter into running on one thread at a time, regardless of the context. Libraries such as NumPy, SciPy, and PyTorch provide multi-core processing through C-based implementations.

Python should be approached differently. Imagine the GIL as a thread and vanilla Python as a needle. That needle and thread make a garment. The garment may be high quality, but it could have been made more cheaply without losing quality. So what if Intel could circumvent that “limiter” by parallelising Python programs with Numba or oneAPI libraries? What if a sewing machine replaced the needle and thread to make that garment? What if dozens or hundreds of sewing machines manufactured many shirts extremely quickly?

The Intel Distribution for Python bundles libraries and tools optimised for Intel architecture instruction sets.

By using oneAPI libraries to reduce Python overheads and accelerate math operations, the Intel distribution gives compute-intensive numerical and scientific Python packages such as NumPy, SciPy, and Numba C++-like performance. This helps developers give their applications excellent multithreading, vectorisation, and memory management while enabling rapid scaling across a cluster.

Let's look at Intel's Python parallelism and composability technique and how it helps speed up AI/ML workloads.

NumPy/SciPy Nested Parallelism

The Python libraries NumPy and SciPy were designed for scientific computing and numerical computation.

Exposing parallelism at all software levels, for example by parallelising the outermost loops or by using functional or pipeline parallelism at the application level, can enable multithreading and parallelism in Python applications. This parallelism can be achieved with Dask, Joblib, and the built-in multiprocessing module (with its ThreadPool class).
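Parallelising an outermost loop with the standard library's ThreadPool can be sketched as follows. This is an illustrative example only: `row_sum` and the input data are made up, and because the work function here is pure Python it will not speed up under the GIL. The pattern pays off when the mapped function releases the GIL, as NumPy and SciPy routines do.

```python
from multiprocessing.pool import ThreadPool


def row_sum(row):
    """Stand-in for the body of the outermost loop."""
    return sum(x * x for x in row)


rows = [[i + j for j in range(100)] for i in range(8)]

# One task per row: the outer loop becomes a parallel map.
with ThreadPool(processes=4) as pool:
    parallel = pool.map(row_sum, rows)

serial = [row_sum(row) for row in rows]
print(parallel == serial)  # True
```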

An optimised math library such as the Intel oneAPI Math Kernel Library (oneMKL) accelerates Python modules like NumPy and SciPy through data parallelism, which the heavy processing demands of large AI and machine learning datasets require. oneMKL can be multi-threaded using different threading runtimes, and the MKL_THREADING_LAYER environment variable selects the threading layer. Nested parallelism occurs when one parallel region calls a function that contains another parallel region. Synchronisation latencies and serial regions (parts that cannot run in parallel) are common in NumPy and SciPy programs. Parallelism within parallelism reduces or hides these regions.

Numba

Even though they offer extensive mathematical and data-focused accelerations, NumPy and SciPy are a fixed set of mathematical tools accelerated with C extensions. A developer who needs non-standard math that runs as fast as C extensions has to look elsewhere, and this is where Numba works well. Numba is a just-in-time compiler based on LLVM that aims to reduce the performance gap between Python and statically typed languages like C and C++. It supports three threading runtimes: workqueue, OpenMP, and Intel oneAPI Threading Building Blocks (oneTBB). Three built-in threading layers represent these runtimes. Only workqueue is installed by default; other threading layers are added with conda commands (e.g., $ conda install tbb). The NUMBA_THREADING_LAYER environment variable sets the threading layer. There are two ways to select it: (1) picking a layer that is generally safe under various forms of parallel processing, or (2) explicitly specifying the threading layer name (e.g., tbb). For more on Numba threading layers, see the official documentation.
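Both threading-layer variables can also be set from Python, as long as that happens before the libraries are first imported. The sketch below assumes the tbb package is installed and that TBB is the layer you want; it only sets the variables and does not import NumPy or Numba itself.

```python
import os

# Assumption: the tbb package is installed. Both variables are read when the
# libraries initialise, so set them before the first import of numpy or numba.
os.environ["MKL_THREADING_LAYER"] = "TBB"
os.environ["NUMBA_THREADING_LAYER"] = "tbb"

selected = {
    name: os.environ[name]
    for name in ("MKL_THREADING_LAYER", "NUMBA_THREADING_LAYER")
}
print(selected)

# import numpy, numba  # imports would follow here in a real program
```

Setting the same variables in the shell before launching Python has the same effect and avoids any import-order concerns.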

Threading Composability

Threading composability determines how efficiently the multi-threaded components of an application can co-exist. A “perfectly composable” component operates efficiently without affecting other components in the system. To achieve a fully composable threading system, over-subscription must be prevented by ensuring that no parallel piece of code or component requires a specific number of threads (known as “mandatory” parallelism). The alternative is "optional" parallelism, in which a work scheduler decides which user-level threads the components are mapped to and automates task coordination across components and parallel regions. Because the scheduler uses a single thread pool to arrange the program's components and libraries, its threading model must be more efficient than the built-in scheme of the high-performance libraries. Otherwise, efficiency is lost.

Intel's Parallelism and Composability Strategy

Threading composability is easier with oneTBB as the work scheduler. oneTBB is an open-source, cross-platform C++ library that enables multi-core parallel processing and was designed with threading composability, optional parallelism, and nested parallelism in mind. The oneTBB version available at the time of writing includes an experimental module that provides threading composability across libraries, enabling multi-threaded performance gains in Python. The acceleration comes from the scheduler's improved allocation of threads. oneTBB replaces Python's ThreadPool with its own Pool class. Using monkey patching, which dynamically replaces or updates objects at runtime, the thread pool is kept active across modules without code changes. oneTBB also switches oneMKL over to its own threading layer, which automates composable parallelism for NumPy and SciPy calls.

Nested parallelism can improve performance, as seen in the following composability example on a system with MKL-enabled NumPy, the TBB and symmetric multiprocessing (SMP) modules, and their IPython kernels. IPython's command shell interface allows interactive computing in multiple programming languages. The demo was run in Jupyter Notebook to compare performance quantitatively.

If the kernel is changed in the Jupyter menu, the preceding cell must be run again to construct the ThreadPool and deliver the runtime results below.

With the default Python kernel, the following code runs for all three trials:

This method can find matrix eigenvalues with the default Python kernel. Activating the python -m smp kernel improves runtime by an order of magnitude. The python -m tbb kernel improves it even further.

For this composability example, oneTBB's dynamic task scheduler performs best because it handles code in which the innermost parallel regions cannot fully utilise the system's CPUs and in which the amount of work may vary. SMP is still useful, but it works best when workloads are evenly divided and the outermost workers have similar loads.

Conclusion

In conclusion, multithreading speeds AI/ML operations. Python AI and machine learning apps can be optimised in several ways. Multithreading and multiprocessing will be crucial to pushing AI/ML software development workflows. See Intel's AI Tools and Framework optimisations and the unified, open, standards-based oneAPI programming architecture that underpins its AI Software Portfolio.

#PythonThreading#Numba#Multithreadingpython#parallelism#nestedparallelism#NumPyandSciPy#Threadingpython#technology#technews#technologynews#news#govindhtech

0 notes

Text

Business Intelligence: Essentials and Strategic Advantage

The “Ultimate Guide to Python Compiler” provides a comprehensive overview of how Python compilers and interpreters work, including differences between CPython, PyPy, and others. It explains how source code is converted into bytecode and executed, along with tips on optimizing performance and choosing the right compiler for your project. Ideal for beginners and experienced developers aiming to deepen their understanding of Python's execution model.

0 notes

Text

Finally got some time earlier this morning to work on my language project, which helped with some of the stress I was feeling. And that's funny to say, because holy hell was this morning's work some of the deepest-cutting agony I've gone through so far.

I finally have a CPython native module compiling from C, which can start to replace my misuse of stdlib ctypes. Source wise, it's going to be a big improvement! I finally have a platform where I can do stuff correctly and coherently - I have my foot in the door. Build system wise, it's jank as fuck and very not-good. I had to temporarily give up on making setuptools work, so I'm building the C modules with Make, so there's no way I could distribute as a wheel without fixing that. UV and Nix fought a lot about how to build and link things. I'm essentially working around every form of proper packaging right now with a promise to myself to figure it out later.

But... it works. I have a Python module written in pure C, which I can import and poke at with pytest, and use as an internal implementation library from the pure Python frontend. Which means I finally have a clean and acceptable boundary between memory management models, instead of the cursed hybrid approach I was using before. Truly a wild amount of effort to represent ASTs with native types, but that effort will immediately pay off with being able to manage the union logic natively. The cool thing about all this, aside from the immediate doors being unlocked with the storage model, is that now there's an inroad for prototyping a bunch of logic for things like the "simplify" function in frontend Python, and seamlessly port them to backend C without changing the test suite. It's also going to make transpilation... well, possible, frankly.

1 note


Text

How Python Manages Memory Allocation?

Python manages memory allocation through an automatic memory management system, which includes object allocation, garbage collection, and memory optimization techniques.

1. Object Allocation

Python uses a private heap space to store all objects and data structures. When a variable is created, Python dynamically allocates memory from this heap. Python has an efficient memory allocator called the Python Memory Manager (PMM), which handles memory requests for different object types like integers, strings, and lists.

2. Reference Counting

Python uses reference counting to track the number of references to an object. When the reference count drops to zero (i.e., no variable is pointing to the object), the memory is deallocated automatically.

    import sys

    a = [1, 2, 3]
    print(sys.getrefcount(a))  # Shows reference count
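To see the count move, it is safer to compare two readings than to rely on an absolute number, because `sys.getrefcount` also counts temporary references made by the call itself. A small sketch with made-up variable names, assuming CPython:

```python
import sys

data = [1, 2, 3]
before = sys.getrefcount(data)

holders = [data, data]  # two new references, stored inside another list
during = sys.getrefcount(data)

holders.clear()  # drop both references again
after = sys.getrefcount(data)

print(during - before)  # 2
print(after - before)   # 0
```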

3. Garbage Collection (GC)

Python has a built-in garbage collector that reclaims objects caught in reference cycles, which reference counting alone cannot free. It uses a generational garbage collection approach, categorizing objects into three generations based on their lifespan. Short-lived objects are cleared quickly, while long-lived objects are checked less frequently.

    import gc

    gc.collect()  # Manually trigger garbage collection
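The collector earns its keep with reference cycles, where two objects keep each other's count above zero. The sketch below uses a made-up `Node` class and a weak reference as a probe; automatic collection is switched off for the duration so the result does not depend on timing. It assumes CPython.

```python
import gc
import weakref


class Node:
    def __init__(self):
        self.other = None


gc.disable()  # keep automatic collection out of the demonstration

left, right = Node(), Node()
left.other, right.other = right, left  # the two objects now form a cycle
probe = weakref.ref(left)              # lets us observe when `left` is freed

del left, right
alive_before = probe() is not None  # still alive: the counts never hit zero

gc.collect()                        # the cycle detector finds and frees both
alive_after = probe() is not None

gc.enable()
print(alive_before, alive_after)  # True False
```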

4. Memory Pooling

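To optimize memory usage, CPython keeps memory for small objects in pools and reuses it rather than returning it to the operating system on every deallocation. A related optimization is object caching: frequently used small integers (from -5 to 256) are created once and reused instead of being reallocated.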
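The small-integer reuse can be observed with an identity check. This is a CPython implementation detail rather than a language guarantee, so treat the sketch as a curiosity; the integers are built with `int()` at runtime so that compile-time constant sharing does not interfere.

```python
# Built at runtime so the compiler cannot merge equal constants.
small_a, small_b = int("100"), int("100")
large_a, large_b = int("1000000"), int("1000000")

small_shared = small_a is small_b  # same cached object on CPython
large_shared = large_a is large_b  # two separate objects on CPython

print(small_shared, large_shared)
```

In real code, compare numbers with `==`; `is` is only used here to expose the caching.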

5. Optimizations in CPython

CPython, the standard implementation of Python, includes optimizations like PyMalloc, which improves memory allocation efficiency for small objects.

Understanding Python’s memory management is crucial for writing efficient programs and reducing memory leaks. To master Python’s memory handling, consider enrolling in a Python certification course.

0 notes

Text

Discover 5 Hidden Features in Python That Make Learning to Program More Fun

Learning to program can be challenging, but it becomes more enjoyable when you discover the hidden features, or "Easter eggs", that add a touch of fun and excitement. Python, known for its simplicity and power, contains many such hidden features that can make learning more engaging. In this article, we look at five of them that will help you enjoy learning Python more.

Did you know that the Python programming language contains many Easter eggs? It turns out that your favorite programming language not only helps you build applications, it also has a good sense of humor. Let's look at some of the best ones.

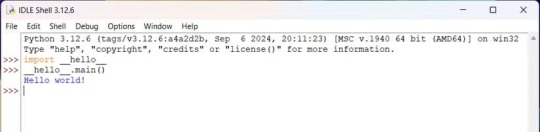

5. Hello World

If you have ever written code in any language, your first program most likely printed "Hello World" to the console. In Python you can do this with one line of code:

    print("Hello World")

However, there is a more elaborate way to do it. You can import a module called __hello__ to print it:

    import __hello__

Starting with Python 3.11, however, you have to call the module's main function to actually print the text:

    import __hello__
    __hello__.main()

Like the __hello__ module, there is also a __phello__ module that does the same thing:

    import __phello__
    __phello__.main()

The __phello__ package also contains a spam submodule that you can import to print the text twice. This works in versions older than 3.11:

    import __phello__.spam

In fact, these modules were added to Python to test whether frozen modules work as intended, as stated in the CPython source code:

In order to test the support for frozen modules, by default we define some simple frozen modules: __hello__, __phello__ (a package), and __phello__.spam. Loading any will print some famous words...

So the next time you want to print "Hello World!", try this trick to get a laugh or to impress others.

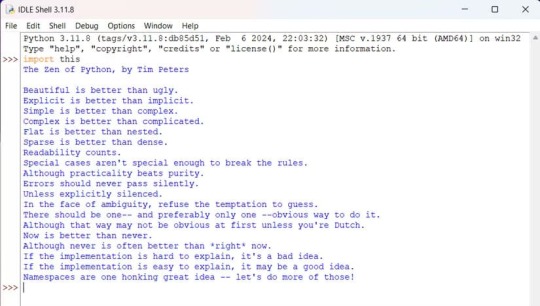

4. The Zen of Python

Every programming language has its rules, regulations, philosophies, and best practices, and Python is no exception. Tim Peters, a major contributor to the Python language, wrote a set of principles for writing code in Python. It is commonly known as the "Zen of Python", and this piece of writing has been built into the language itself. To read it, all you have to do is run:

    import this

You will see the Zen of Python printed on the screen:

Beautiful is better than ugly. Explicit is better than implicit. Simple is better than complex. Complex is better than complicated. Flat is better than nested. Sparse is better than dense. Readability counts. Special cases aren't special enough to break the rules. Although practicality beats purity. Errors should never pass silently. Unless explicitly silenced. In the face of ambiguity, refuse the temptation to guess. There should be one-- and preferably only one --obvious way to do it. Although that way may not be obvious at first unless you're Dutch. Now is better than never. Although never is often better than *right* now. If the implementation is hard to explain, it's a bad idea. If the implementation is easy to explain, it may be a good idea. Namespaces are one honking great idea -- let's do more of those!

If you look at the actual code of the file, you'll find something interesting. The printed text is stored in encoded form:

    s = """Gur Mra bs Clguba, ol Gvz Crgref Ornhgvshy vf orggre guna htyl. Rkcyvpvg vf orggre guna vzcyvpvg. Fvzcyr vf orggre guna pbzcyrk. Pbzcyrk vf orggre guna pbzcyvpngrq. Syng vf orggre guna arfgrq. Fcnefr vf orggre guna qrafr. Ernqnovyvgl pbhagf. Fcrpvny pnfrf nera'g fcrpvny rabhtu gb oernx gur ehyrf. Nygubhtu cenpgvpnyvgl orngf chevgl. Reebef fubhyq arire cnff fvyragyl. Hayrff rkcyvpvgyl fvyraprq. Va gur snpr bs nzovthvgl, ershfr gur grzcgngvba gb thrff. Gurer fubhyq or bar-- naq cersrenoyl bayl bar --boivbhf jnl gb qb vg. Nygubhtu gung jnl znl abg or boivbhf ng svefg hayrff lbh'er Qhgpu. Abj vf orggre guna arire. Nygubhtu arire vf bsgra orggre guna *evtug* abj. Vs gur vzcyrzragngvba vf uneq gb rkcynva, vg'f n onq vqrn. Vs gur vzcyrzragngvba vf rnfl gb rkcynva, vg znl or n tbbq vqrn. Anzrfcnprf ner bar ubaxvat terng vqrn -- yrg'f qb zber bs gubfr!"""

Another part of the code transforms the given text:

    # Build a lookup table that maps every letter to the one 13 places later.
    d = {}
    for c in (65, 97):  # ord("A") and ord("a")
        for i in range(26):
            d[chr(i + c)] = chr((i + 13) % 26 + c)

    # Characters without an entry (spaces, punctuation) pass through unchanged.
    print("".join([d.get(c, c) for c in s]))

So the original text was encoded with a substitution cipher known as ROT13. It is a reversible algorithm. When you import the this module, the encoded text is decoded back to its original form and printed on the screen.
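The same ROT13 transformation is also available as a codec in the standard library, which makes the reversibility easy to check. A short sketch using only the first line of the encoded text:

```python
import codecs

encoded = "Gur Mra bs Clguba, ol Gvz Crgref"

decoded = codecs.decode(encoded, "rot13")
print(decoded)  # The Zen of Python, by Tim Peters

# Applying ROT13 a second time returns the original text.
round_trip = codecs.encode(decoded, "rot13")
print(round_trip == encoded)  # True
```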

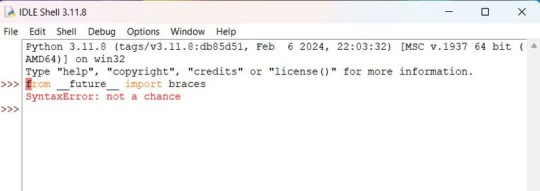

3. To Brace or Not to Brace

If you have used Python even a little, you know that Python rarely uses curly braces, one of the most common pieces of syntax in many popular languages. Curly braces are typically used to delimit the scope of a code block, such as conditionals and loops. Instead of braces, Python uses indentation. But will there ever be braces in Python? Unlikely, because the developers have already answered that question in the __future__ module:

    >>> from __future__ import braces
    SyntaxError: not a chance

This is a special syntax error that you won't find in any other situation, and it means that braces will never come to Python. The __future__ module is used to enable, in the current version of Python, features that will become standard in a future version, so that you can adapt to the new feature early. It was a clever choice to put this Easter egg in this module.
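Because the error is raised at compile time, it can be captured in a script by compiling the statement from a string. A minimal sketch, assuming CPython:

```python
message = None
try:
    # Compiling is enough; the statement never gets as far as running.
    compile("from __future__ import braces", "<demo>", "exec")
except SyntaxError as exc:
    message = exc.msg

print(message)  # not a chance
```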

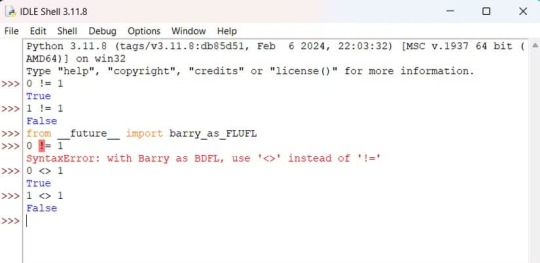

2. FLUFL

The __future__ module has another interesting Easter egg. If you have used logical operators in programming before, you know that in most languages the inequality symbol is != (an exclamation mark followed by an equals sign). However, one of Python's core developers, Barry Warsaw, also known as Uncle Barry, preferred the diamond operator (<>) for inequality. Here is the code snippet:

    >>> from __future__ import barry_as_FLUFL
    >>> 0 != 1
    SyntaxError: with Barry as BDFL, use '<>' instead of '!='
    >>> 0 <> 1
    True
    >>> 1 <> 1
    False

FLUFL stands for "Friendly Language Uncle For Life", which is apparently Uncle Barry's title.
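Outside the interactive prompt, the same behaviour can be reproduced by passing the feature's compiler flag to `compile()`. This is a sketch under the assumption that the flag alone is enough to switch the parser, which matches how CPython's own test suite exercises the feature:

```python
import __future__

# The flag that `from __future__ import barry_as_FLUFL` would switch on.
flag = __future__.barry_as_FLUFL.compiler_flag

diamond_result = eval(compile("0 <> 1", "<flufl>", "eval", flag))
print(diamond_result)  # True

bang_message = None
try:
    compile("0 != 1", "<flufl>", "eval", flag)
except SyntaxError as exc:
    bang_message = exc.msg

print(bang_message)
```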

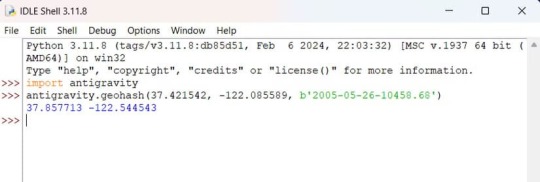

1. antigravity

Another fun module to play with. I won't spoil it for you; run this code and see for yourself:

    import antigravity

This module also contains a geohash() function, which is used for geohashing with the Munroe algorithm. Now, this may seem completely out of place. However, the function is closely related to the Easter egg you just saw in the antigravity module. Pretty cool stuff.

It's always fun to find behind-the-scenes details about programming languages, especially if they put a smile on your face. If this interests you, you might consider learning Python and doing more fun things with it. Discovering Python's hidden features can turn learning to program from a chore into a fun adventure. With these features, you can enjoy working with Python more and discover new sides of the language. If you are looking for a way to make learning to program more exciting, these hidden features are a great starting point. Try them yourself and enjoy a more creative learning experience.

0 notes