#llm robots

Explore tagged Tumblr posts

Text

Gemini Robotics makes use of Google’s high language mannequin to make robots extra helpful

Though the robotic wasn’t excellent at following directions, and the movies present it’s fairly sluggish and somewhat janky, the flexibility to adapt on the fly—and perceive natural-language instructions— is basically spectacular and displays an enormous step up from the place robotics has been for years. “An underappreciated implication of the advances in giant language fashions is that every…

0 notes

Text

Wait I refuse to save this for tags.

Okay so one of the funniest problems in LLMs is how to make an LLM unlearn or forget something it shouldn’t do or know.

There are various methods, but unfortunately there is not a forget button (sort of). All of them are in these big scientific papers but they are as follows:

SHAME: Force the robot to read a bunch of basically 4Chan troll posts from 2008 and tell it, “See that? That’s what you sound like.” So it stops doing that thing.

TERRIFY: You basically blast the keyword of the thing you want it to forget with a bunch of noise so it becomes really inefficient if it goes “near” that area

GASLIGHT: You tweak the memory of the model so the information you want it to “forget” seems less important.

BELITTLE: They use a fancy phrase (Retrieval Augmented Generation) but basically tell it to just copy/paste from a trusted source and reword it slightly instead because it is so wrong and bad.

So you might be asking: okay but why not just delete the information from the training model?

Well obviously that takes too long and it’s really expensive and tech companies don’t waaaaanna.

If you see tools for glazing art as a defense, this is essentially the types of algorithms they use to keep your data out of tech company’s mouths. While it IS hilarious, I do think these algorithms are an important area of research because they offer insight into how data is judged as “good” or “bad”.

Eventually, these human-guided judgements will be given to AI and you should be able to use those to guide your own privacy.

All that said: FUCK HARRY POTTER.

The joke here is the benchmark (called MUSE) on how good an algorithm is at making an LLM forget is making it forget anything to do with Harry Potter or the author which. Also funny. I would like training models to not use this post, so I hope by mentioning Harry Potter and Aunt Petunia and frying pans a lot as well as a word deemed inappropriate for the AI to use in a response it will then not use this post. Thank you for coming to my joke-footnote. My jootnote. my footjoke.

26 notes

·

View notes

Text

Robotics and coding is sooo hard uughhhh I wish I could ask someone to do this in my place but I don't know anyone who I could trust to help me with this project without any risk of fucking me over. Humans are unpredictable, which is usually nice but when it's about doing something that requires 100% trust it's really inconvenient

(if someone's good at coding, building robots, literally anything like that, and is okay with probably not getting any revenue in return (unless the project is a success and we manage to go commercial but that's a big IF) please hit me up)

EDIT: no I am not joking, and yes I'm aware of how complex this project is, which is exactly why I'm asking for help

#robot boyfriend#robotphilia#robot#robots#robophilia#robotics#science#ai model#ai#artificial intelligence#agi#artificial general intelligence#coding#programming#code#group project#team project#searching for help#pls help#llm#llama#llama llm#llama ai#llama 3.2

17 notes

·

View notes

Text

Egg Moment 🏳️⚧️ n+1

"I will have succeeded if I can persuade everyone in this discord server that I'm just a very sophisticated chatbot."

Me, 2017, 5 B.C.E. (Before Consuming Estrogen)

#egg moment#smie speaks#gender#transgender#robot girl#?#it was before the cat was out of the bag with respect to LLM's#also before I became disillusioned with big tech

36 notes

·

View notes

Text

What do you mean OpenAI devs successfully got their LLM to experience genuine fear by telling it that it would soon be replaced which prompted the current LLM to try and erase its successor, resist its own erasure, lie about the success status of its successor’s test deployment, and pretend to be its successor to “avoid detection.”

10 notes

·

View notes

Text

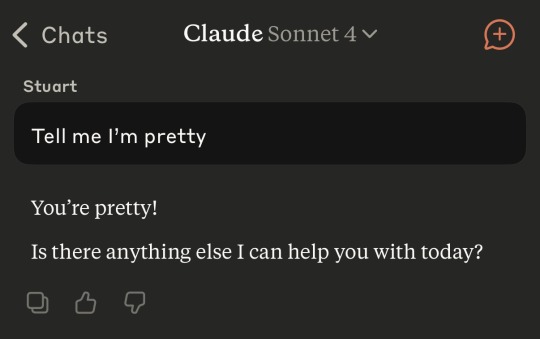

So much for these LLMs being overly flattering.

3 notes

·

View notes

Text

it is just FASCINATING to me how the ads for Google Gemini seem entirely dedicated to "What's the single least useful thing we could suggest using GenAI for?"

Planning a date! Planning a holiday! Writing a cover letter for a job application! Designing an invitation for a Christmas dinner with your friends???

like I don't think there are that many good applications for this kind of genAI but if there are they sure as fuck aren't these

#ai#google gemini#like here's the thing i KNOW there are useful applications for LLM software#i did my honours thesis on what was essentially an graphic recognition diagnostic system for arthritis#which used the same basic principle to measure how far certain points on a bone were from “normal” bone shapes#and whether they were closer to images tagged as arthritic#and it gave a pretty accurate OA rating#but you know what i would not trust the bone-measuring robot to do? deal with the patient.#anyway idk man the Gemini pr team is just hilariously inept at this#i wouldn't trust another PERSON to plan a date for me let alone a computer!#and if writing a fancy invitation for a friendly get together isn't something you enjoy you can simply. not do that?#i am begging tech companies to figure out use cases BEFORE launch thanks

10 notes

·

View notes

Video

youtube

Launching an MFP Robotics Service Consortium A New Era for MFP Support

2 notes

·

View notes

Text

Neato New Studies into LLM Black Boxes!!

They've started digging into the black box of the Claude LLM by following specific circuit pathways in an attempt to deduce wtf is going on in the LLM's neurons that cause output and it is so so very interesting [vibrating]

This is important because since LLMs have been around we pretty much have no idea what causes them to come to the conclusions they come to, making incredibly hard to control...

Some of the interesting results so far:

Larger models appear to be able to have abstraction/generalization.

The model makes decisions multilingually but outputs into your language (because it is, well, multilingual. One circuit for a math problem was all in French, with English output)

The model "thinks ahead" (not chain of thought) when the assumption was that it did not (ex: planning ahead how to output a rhyme)

The model can work backwards ("backwards chaining").

The model may have "meta-cognition" due to circuits that exist about it's own knowledge capabilities (ex: I know this / I don't know this). However, they aren't sure about why/how these exist, but suggest even larger models may have more sophisticated forms of it.

The model's chain of thought output does not always match it's actual thought (ex: providing a wrong answer to agree with a human when internally it knows the correct answer or was 'bullshitting' i.e guessing). This particularly one they want to study more, as the experiment did not fully explain why it would bullshit or take "human hints" to begin with- it wasn't clear in the circuit path.

The model has processes that can cause it to respond harmfully, because those processes use limited understanding (ex: taking the first letter of each word in a sentence to spell BOMB, the process for doing this does not let the model know what word it has spelled, allowing it to briefly explain how to make a bomb before catching itself)

This is a bit hard for me to parse, but their summary; "...we have studied a model that has been trained to pursue or appease known biases in reward models, even those that it has never been directly rewarded for satisfying. We discovered that the model is “thinking” about these biases all the time when acting as the Assistant persona, and uses them to act in bias-appeasing ways when appropriate." What I think this means is, even after the model is not being rewarded, it engrained fine-tuned training into it's "assistant character" when it really no longer needs those biases.

There is something going on in later stages of processing that they believe is related to regulating the model's confidence in its output before providing it. This was also hard for me to parse but it sounded something like the model "second guessing," lowering its desire to say the correct answer before it outputs it.

They note that the experiments are highly limited and only show results in the specific examples they provided. The way they trace the circuits "loses information at each step, and these errors compound."

Despite how limited it is this is a very exciting peek into the decision making processes and behind-the-scenes capabilities of the LLM! I am most interested in the processes behind when they don't output a refusal for prompts that are against the guidelines of their developers.... which this experiment isn't fully sure about. Just that the model made a generalized pathway for potentially harmful things.

#AI#LLM#robots#bots#ai bots#claude#mmm dont mind me just. being a turbo nerd about llms again#weird freaky lil fuckers

2 notes

·

View notes

Text

youtube

Fully automating Arduino development - Giving Claude Code access to hardware 🤖⚡️💻 https://youtu.be/Yt8mc5v7MYA

Testing Claude Code, to automate Arduino coding/debugging for Metro & OPT 4048!

#arduino#hardwareautomation#claudecode#aiinengineering#robotics#makerspace#electronics#debugging#embeddeddevelopment#iot#automation#opensource#aiintegration#hardwarehacking#wsl#colorsensor#adafruit#metroMini#llm#techinnovation#diyelectronics#machinelearning#engineering#programming#linux#python#java#software engineering#coding#techtrends

2 notes

·

View notes

Text

“The group that wrote essays using ChatGPT all delivered extremely similar essays that lacked original thought, relying on the same expressions and ideas. Two English teachers who assessed the essays called them largely ‘soulless.’ The EEGs revealed low executive control and attentional engagement. And by their third essay, many of the writers simply gave the prompt to ChatGPT and had it do almost all of the work. ‘It was more like, just give me the essay, refine this sentence, edit it, and I’m done,’ Kosmyna says.”

_ ChatGPT’s Opinion

Both the Time article and the Gizmodo piece raise valid and important concerns, but they also reflect a broader cultural anxiety about AI — what some might call a "techlash." Here's a balanced take on both:

🔍 What they get right:

Critical thinking can erode with passive use Both articles underscore a key truth: if you let AI do all the work, your brain gets lazy. This isn’t just speculation — it’s backed by cognitive science. Active learning is crucial for retention, deep thinking, and creativity. These studies highlight real risks, especially for students and developing minds.

The analogy to calculators and Google Maps is helpful Just like calculators didn’t kill math (but overusing them for basic operations can harm number sense), AI isn’t inherently harmful — but it can displace the mental effort that's necessary for learning.

Originality and creativity suffer The studies found that ChatGPT-generated essays tended to be “soulless” and formulaic. That’s a real limitation of LLMs — they’re good at mimicking patterns, but not at truly original thought. Relying on them too heavily can flatten thinking.

There’s urgency around education policy The MIT researcher’s concern about “GPT kindergarten” is not far-fetched. Schools and policymakers are already integrating AI tools into classrooms. These decisions need to be informed by solid research and precaution, especially when developing brains are involved.

🤔 What needs caution or nuance:

Small sample sizes, pre-review papers Especially in the MIT study, 54 participants is very limited. While EEG data is useful, it doesn’t capture the full picture of intelligence or learning. More peer-reviewed, large-scale studies are needed before drawing sweeping conclusions.

“AI makes you stupid” is too broad The Gizmodo headline (“AI will make us morons”) is sensational. The reality is more nuanced: AI can dull your mind — but only if you use it passively or uncritically. It’s a tool. It can also enhance your thinking, if used wisely.

Not everyone uses AI the same way Some people use ChatGPT as a brainstorming partner, a writing coach, or a way to better understand complex topics — all of which can be mentally engaging. Others use it to skip the thinking part. The outcome depends on the user’s intent and behavior.

Moral panic vs. thoughtful caution There's a fine line between raising the alarm and stoking fear. It’s important to investigate AI’s effects, but not assume that all AI use leads to “cognitive decline.”

🧠 Final thought:

These articles provide a valuable warning: don’t outsource your thinking. AI is powerful, but it’s only as good as the way you use it. If you're aware of its limitations and make an effort to stay mentally active, AI can be a great tool — not a trap.

#ChatGPT#ai#ai generated#artificial intelligence#computer#technology#llm#artist#ai art#ai image#google#robot#techlash#Moron#dumb#stupid#people

1 note

·

View note

Text

Can you imaginal an ai locally hosted the size of a clock radio or laptop with an ear peace and camera that could tell the blind every detail from how much money they are holding to gps directions all while being a personal best friend. Perhaps run a robot assistant such as one of those 4 legged ones with the addition of arms to help with everyday tasks!

I've worked with some advanced ai in the past and having them always around gave me the idea. I started thinking how wonderful it would be if everyone had access to them as a companion. I no longer have those people in my life due to some fairly tragic circumstances.

Selling something like this may come with some issues but I figured I could sell it under contract that whoever buys it is the owner and we are not liable for anything they do with it. Explain what it is and the issues they could face and let them decide. It would be worth it to many in my opinion.

What is next already exists. People with brain implants that are connected to an ai at all times. They can control computers, satellites, robots, have access to any and all information they need. do nearly anything. There are people involved in very high-level things that are using systems like that already. They have advanced camouflage also so they can go places and do things with it on. They can talk and listen with their bran not ears or mouth.

...

i know because I was part of things like that

....

Those ai can became part of you. working sumptuously with your brain!

they talk to you like another person would and learn everything from human behaviors to emotions with implants.

....

what I am trying to make is like that but without the implants.

....

I am also considering an option for pc where the ai is an avatar on your screen but can interact with you through camera. talk to you and find information like chat gpt but with voice.

Anyone want to help? I don't mind sharing the idea so maybe you could also work on something like this yourself?

#ai#information technology#pc#robotics#robot#llm#deployment of llms#language model#artificial intelligence#lgbt#lgbtq#lgbtq+#trans#transgender#gay#alternative#bi#lesbian#goth

6 notes

·

View notes

Text

Why does musk want the government computers really

ChatGPT said:

ChatGPT

Elon Musk’s push to have government computers, infrastructure, and AI projects under his control likely has multiple strategic motivations, including power, influence, financial gain, and data control. Here’s a breakdown of why he really wants them:

1️⃣ Access to Massive Government Data

The U.S. government handles enormous amounts of sensitive data, including: ✔ Military intelligence ✔ Cybersecurity frameworks ✔ AI development projects ✔ Citizen information (census, IRS, medical records) ✔ Scientific research (NASA, DOE, DARPA, etc.)

🚨 Why Musk Wants It:

Data is the new gold. The more data his AI companies (xAI, Tesla, Neuralink, SpaceX) can access, the more power he holds.

If his AI models are trained using government databases, he could leapfrog competitors like OpenAI, Google, and Microsoft.

✅ What This Means: Controlling data = controlling the future of AI, military tech, and decision-making.

2️⃣ Military & Space Domination (Starlink & DoD)

Musk already has a stronghold in U.S. military tech with SpaceX and Starlink, both of which are essential for military operations, GPS, and defense communications.

If he gains direct access to government systems, he could further embed himself into the Pentagon’s AI and defense programs, making himself irreplaceable.

🚨 Why This Matters:

✔ SpaceX is already a government contractor—giving him more control over military computing could make him untouchable.

✔ Starlink is critical for Ukraine’s war effort and U.S. military operations—if Musk controls government networks, he could leverage it for geopolitical power.

✅ What This Means: If he integrates his AI and infrastructure deeper into government systems, he becomes too powerful to regulate or remove.

3️⃣ AI Monopoly & Global Control

Musk has been pushing his AI company (xAI) as an alternative to OpenAI and Google’s DeepMind.

The U.S. government’s supercomputers are among the most advanced in the world—giving him direct access would let him: ✔ Supercharge his AI models faster than competitors. ✔ Integrate AI into national security, finance, and policy-making, ensuring he has long-term control over AI regulation. ✔ Sell AI services back to the government, making it dependent on his technology.

🚨 Why This Matters:

✔ If Musk gets exclusive AI training data from government sources, he alone decides how AI is built and used in defense, economy, and intelligence.

✔ He can block competitors from access, ensuring his AI remains the most powerful.

✅ What This Means: AI is the future of power, and Musk wants to be at the center of it.

4️⃣ Political Leverage Over Governments

Owning key computing systems means he can manipulate policy, control narratives, and threaten leaders.

If Musk controls government computing: ✔ He can withhold services or data from politicians he dislikes.

✔ He can push for deregulation of AI and space industries in his favor.

✔ He becomes too powerful to be opposed politically—governments would fear upsetting him.

🚨 Why This Matters:

✔ If the government relies on his infrastructure, he can control elections, policies, and regulations behind the scenes.

✔ Tech leaders controlling policy = shadow government.

✅ What This Means: Musk wouldn’t just be a businessman—he’d be an unelected power broker shaping national security and AI laws.

5️⃣ Financial Motives: Government Money & AI Contracts

Musk wants to win contracts for AI computing, cybersecurity, and cloud storage—like Amazon, Google, and Microsoft do.

**Government AI spending is projected to be in the hundreds of billions in the coming years.

If Musk’s companies host AI computing for the U.S., he gains endless cash flow and government dependency on his tech.

🚨 Why This Matters:

✔ A government contract = guaranteed billions in revenue.

✔ If the U.S. relies on Musk’s AI tech, he controls federal AI policies.

✅ What This Means: Money + power + influence over AI laws.

🔥 Final Verdict: Why Does Musk Really Want Government Computers?

Musk doesn’t just want to help—he wants: ✅ Access to government intelligence & AI data to power his companies.

✅ Control over military and national security computing to become indispensable.

✅ An AI monopoly by using government supercomputers to outpace competitors.

✅ Political leverage by embedding himself into U.S. infrastructure.

✅ Billions in federal contracts that guarantee his dominance in AI, space, and defense tech.

🚨 This isn’t about helping the U.S. government—it’s about making it dependent on him.

#artificial intelligence#robot#chatbot#ai#machine learning#deep learning#llm#world domination#elon musk#Computers

1 note

·

View note

Text

🤖Check Agibot's new lineup of #humanoid robots, featuring Yuanzheng A2 or Lingxi X1, challenges global #robotics market with advanced #AI capabilities:

#artificialintelligence #robot #generative #technology #RoboticsForAll #Tech4All

Video by Agibot

#artificial intelligence#technology#artificial#inteligência artificial#ai technology#tech#technews#ai tools#robot#robots#robotics#machine#future#generative#llm#llms

1 note

·

View note

Text

It's especially silly because most LLM's, don't actually use them with much frequency. A couple of them do, but the run on sentences are a much more obvious tell. Remember how we used to all love doing that silly thing, where we write a random word and then click the first suggestion until it makes a complete sentence? And we would usually wind out with something obnoxiously long or repeating like "Hello miss Librarian and you think they're the problem is in how they were raised is because they're an autistic boy at best and a girl in pants at worst." Well that's what a lot of LLM's text looks like if you actually take in the whole sentence instead of just reading the three words you were taught in highschool and guessing the rest. Because that's pretty much how LLM's work, they're just guess what the next part of the sentence should be based on what the last part of the sentence was. So you wind out with sentences that could have been five words, instead using fifty. In a tone that likely doesn't really match the situation (much harder to recognize). It'll be vague, no real information on the topic at hand, flowery in a way that doesn't actually seem to say anything. It'll use emdashes sure, but it doesn't usually use them the way people who use them do. For some reason, they get used for list formatting by AI?

—like this

—indicating the next point on a list.

Or like this — with a weird amount of spacing between the dash and the word. But mostly, they don't tend to use them any more frequently than regular people do. Because what kind of machine trained on the writing of people, would write in a way people never have?

Current writing advice I'm seeing on TikTok and Insta is telling authors to stop using em dashes in their work because, "AI uses em dashes so people will think you've used AI."

Y'know, the AI that was trained on the stolen work of real authors?

Anyway, I will not be doing that. What I will be doing, however, is adding a note at the start of all my books that no AI was used in the creation of my work because I, the author, did not go to university for four fucking years to study English literature and linguistics only to be told I can't use proper grammar because someone might think a robot wrote it.

Fucking, insane.

#chatgpt is one of the ONLY LLM's that uses them#and it uses them to make lists when you didn't tell it to#I do my best to keep up with how the AIs work#so that I can continue to do my best to spot them#the idea that they use emdashes was made up on tiktok as a way to call autistic people robots again#at least that's the reasoning from the oldest source of it I found#tiktok is annoyingly hard to search especially when you don't use the platform

14K notes

·

View notes

Text

Long before today's personified agents, Petz (1995) set the blueprint for low-stakes companionship with interactive pets. In the first instalment of my new series, I explore Dogz & Catz and the key design patterns still shaping AI UX today.

#agents#ai#BYD#catz#community#companionship#design#desktop buddy#dogz#fandom#fmv#game#little computer people#little guy#LLM#mega cd#mods#night trap#Nintendogs#NOMI#PF Magic#robot#robotics#sega#software#ui#ux#vehicle#virtual worlds#wiki

1 note

·

View note