Text

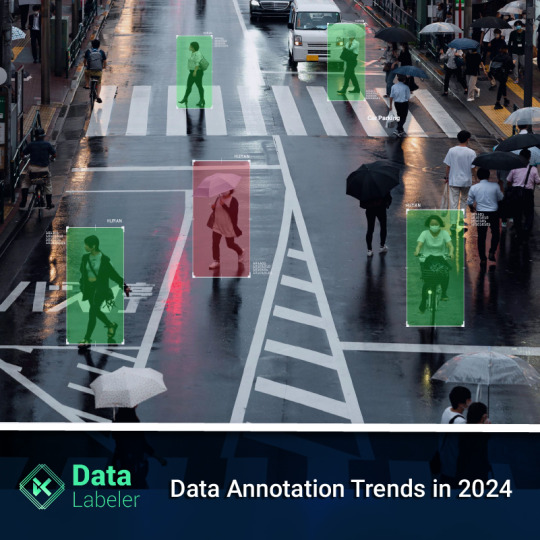

Unveiling the Power of Data Annotation in 2024: The Way for Computer Vision

In the fields of artificial intelligence and computer vision, data annotation is an essential procedure that forms the basis for training machine learning models. Fundamentally, data annotation is classifying or labeling unprocessed data—such as pictures, videos, or text—so that artificial intelligence (AI) algorithms can comprehend and utilize it. This painstaking procedure is necessary to teach AI systems how to correctly understand and react to real-world data, which is the foundation of many AI applications, such as autonomous vehicles and facial recognition.

The importance of data annotation in AI and computer vision has increased significantly as we approach 2024. As technology continues to expand the possibilities of artificial intelligence, there is an increasing demand for precise and high-quality annotations. Not only has there been an increase in the amount, but also in the complexity and diversity of data needed, which has made data annotation an important and constantly changing topic.

In the rapidly developing field of artificial intelligence, organizations, developers, and researchers must understand since it can improve the precision and dependability of AI systems.

Significant Role of Data Annotation in Computer Vision

The development of computer vision technologies—a major field in artificial intelligence that focuses on enabling machines to interpret and comprehend visual input from the surrounding world—begins with data annotation. To train machine learning models, this procedure entails carefully labeling or classifying pictures, videos, and other visual data.

Data annotation is a vital ongoing process that continuously improves and expands the capabilities of computer vision technologies, guaranteeing their continued effectiveness and dependability as they develop. It is not merely a preliminary stage in the building of AI models.

Future Trends of Data Annotation in 2024

According to Grand View Research, the global data annotation market was worth USD 695.5 million in 2020 and was predicted to increase at a compound annual growth rate (CAGR) of 26.6% from 2021 to 2028.

The Evolution of Data Annotation in Computer Vision: As we approach 2024, data annotation remains a driving force in the field of computer vision. This year has seen tremendous breakthroughs in data annotation technology, thanks to novel techniques and cutting-edge software. These advancements are not only improving annotation precision and efficiency, but they are also altering the workflow of computer vision engineers.

Automated and AI-Powered Annotation Solutions: AI is increasingly being integrated into data annotation solutions. AI-powered annotation tools, according to a new study, are likely to reduce manual annotation time by up to 50% while enhancing accuracy. This advancement is critical in dealing with the ever-increasing datasets required for sophisticated computer vision applications.

Machine Learning for Better Quality: Machine learning techniques are increasingly being used to improve the annotation process. These algorithms can learn from previous annotations, enhancing the quality and speed of the annotation process over time. This is especially useful in complex settings where high precision is required.

Growth of Specialized Data Annotation Services: There is an increasing need for these services. Businesses are looking for services that provide high-quality, domain-specific annotations in addition to volume. The demand for more precise and nuanced data in industries like medical imaging and autonomous driving is driving this development.

Conclusion:

In conclusion, technical innovation, enhanced efficiency, and the growing significance of specialized, high-quality annotations characterize the data annotation landscape of 2024. These developments are not only expanding computer vision’s potential but also having a significant impact on several industries, including healthcare and autonomous cars.

Interested to know more about Data Labeler and our offerings? For further information contact us or request a demo today!

0 notes

Text

How Does Data Annotation Assure Safety in Autonomous Vehicles?

To contrast a human-driven car with one operated by a computer is to contrast viewpoints. Over six million car crashes occur each year, according to the US National Highway Traffic Safety Administration. These crashes claim the lives of about 36,000 Americans, while another 2.5 million are treated in hospital emergency departments. Even more startling are the figures on a worldwide scale.

One could wonder if these numbers would drop significantly if AVs were to become the norm. Thus, data annotation is contributing significantly to the increased safety and convenience of Autonomous Vehicles. To enable the car to make safe judgments and navigate, its machine-learning algorithms need to be trained on accurate and well-annotated data.

Here are some important features of data annotation for autonomous vehicles to ensure safety:

Semantic Segmentation: Annotating lanes, pedestrians, cars, and traffic signs, as well as their borders, in photos or sensor data, is known as semantic segmentation. The car needs accurate segmentation to comprehend its environment.

Object Detection: It is the process of locating and classifying items, such as vehicles, bicycles, pedestrians, and obstructions, in pictures or sensor data.

Lane Marking Annotation: Road boundaries and lane lines can be annotated to assist a vehicle in staying in its lane and navigating safely.

Depth Estimation: Giving the vehicle depth data to assist it in gauging how far away objects are in its path. This is essential for preventing collisions.

Path Planning: Annotating potential routes or trajectories for the car to follow while accounting for safety concerns and traffic laws is known as path planning.

Traffic Sign Recognition: Marking signs, signals, and their interpretations to make sure the car abides by the law.

Behaviour Prediction: By providing annotations for the expected actions of other drivers (e.g., determining if a pedestrian will cross the street), the car can make more educated decisions.

Map and Localization Data: By adding annotations to high-definition maps and localization data, the car will be able to navigate and position itself more precisely.

Weather and Lighting Conditions: Data collected in a variety of weather and lighting circumstances (such as rain, snow, fog, and darkness) should be annotated to aid the vehicle’s learning process.

Anomaly Detection: Noting unusual circumstances or possible dangers, like roadblocks, collisions, or sudden pedestrian movements.

Diverse Scenarios: To train the autonomous car for various contexts, make sure the dataset includes a wide range of driving scenarios, such as suburban, urban, and highway driving.

Sensor Fusion: Adding annotations to data from several sensors, such as cameras, radar, LiDAR, and ultrasonics, to assist the car in combining information from several sources and arriving at precise conclusions.

Continual Data Updating: Adding annotations to the data regularly to reflect shifting traffic patterns, construction zones, and road conditions.

Quality Assurance: Applying quality control techniques, such as human annotation verification and the use of quality metrics, to guarantee precise and consistent annotations.

Machine Learning Feedback Loop: Creating a feedback loop based on real-world data and user interactions to continuously enhance the vehicle’s performance.

Ethical Considerations: Make sure that privacy laws and ethical issues, like anonymizing sensitive material, are taken into account during the data annotation process.

Conclusion:

An important but frequently disregarded component in the development of autonomous vehicles is data annotation. Self-driving cars would remain an unattainable dream if it weren’t for the diligent efforts of data annotators. Data Labeler provides extensive support with annotating data for several kinds of AI models. For any further queries, you can visit our website. Alternatively, we are reachable at [email protected].

0 notes

Text

How Healthcare Industry is utilizing the power of Artificial Intelligence effectively?

The global AI in healthcare market is anticipated to grow at a compound annual growth rate (CAGR) of 46.1% to reach USD 95.65 billion by 2028. The primary cause of development is the growing need for better, quicker, more precise, and individualized medical care. Furthermore, the expanding potential of artificial intelligence in genomics and drug discovery is the reason behind the increased use of modern technology in healthcare.

The healthcare sector is changing thanks to artificial intelligence (AI), which offers cutting- edge solutions that improve patient care, diagnosis, and treatment.

The safe and effective use of technology is being facilitated by IEC Standards. Artificial intelligence (AI) has the potential to revolutionize healthcare delivery by automating processes, improving clinical decision-making, and analyzing enormous volumes of data.

Producing high-quality training data for AI-assisted healthcare requires expert data labeling.

Let’s examine some of the most well-liked applications of AI in healthcare and how dataannotation & labeling supports their expansion.

Surgery: Robotic surgery employs precision data labeling.

Medical: Advanced research, drug discovery, and individualized medication therapy are all facilitated by the application of pattern recognition systems.

Diagnosis: Object recognition on thermal pictures is employed for early illness diagnosis (e.g., breast cancer); medical image annotation of MRIs, X-rays, and CT scans is used for diagnostic support.

Virtual Assistance: Conversational robots, chatbots, and virtual assistants are trained using labeled data to perform tasks such as appointment scheduling, medication reminders, and health status monitoring and assessment.

Patient Engagement: Using entity recognition for chatbot creation and audio and text transcription to digitize record management, annotated data enhances patient follow-up and maintenance following therapy.

How Is Machine Learning Changing the Medical Field?

Trustworthy ML – Physicians and patients alike must have faith in the results of machine learning systems for effective implementation in the healthcare industry.

Therefore, to guarantee that the results are trustworthy and suitable for clinical decision-making, machine learning must be implemented consistently in healthcare settings.

User-friendly and efficient machine learning – The usability of machine learning measures how well a model can assist in achieving particular objectives most cost-effectively to meet the demands of patients. Such machine learning needs to be adaptable to various healthcare environments and enhance conventional patient care.

Clear ML – Completeness and interpretability are the two primary needs implied by the reasonability of machine learning in the healthcare industry. To do this, it is necessary to make sure that data processing is transparent and that different methods are used to make inputs and outputs visible. Therapeutics and diagnostic test development depend on the development of ML healthcare that is understandable and transparent.

Ethical and responsible machine learning – The ML systems designed for clinical contexts are predicated on the notion of advancing healthcare to the point where technology can save lives, hence benefiting humanity. Machine learning has a lot of responsibility here.

ML that is safe, meaningful, and responsible requires an interdisciplinary team made up of several stakeholders, including users, decision-makers, and knowledge experts.

A series of fundamental procedures are established by responsible ML practices in medicine, including:

Identifying the issue

Defining the solution

Thinking through the ethical ramifications

Assessing the model

Reporting results

Deploying the system ethically

Defining the Future of Healthcare with AI

Bringing artificial intelligence and healthcare together requires striking a balance between the benefits of technology and human life. Healthcare professionals need to get the right training to understand the fundamentals of machine learning and recognize potential hazards, as the use of these algorithms in clinical and research settings grows.

Therefore, developing the most effective and dependable machine learning systems for better patient care requires cooperation between data scientists and doctors. However, we must never lose sight of the fact that data is the foundation of any AI project, particularly when working with supervised algorithms. As a result, data annotation becomes more crucial in healthcare systems that use machine learning.

Here’s where Data Labeler can provide you the ultimate support in labeling your data, hence, helping you go the next mile in your journey of implementing AI. For further details please visit our website Data Labeler. You may also reach out to us!

0 notes

Text

Present Day Use Case Scenarios of Data Labeling in Insurance Sector

AI’s application in the insurance industry is expanding rapidly, covering everything from risk mitigation and damage analysis to compliance and claims processing. For example, repetitive activities are performed by Robotic Process Automation (RPA), freeing up operational personnel to work on more complicated duties.

AI is radically altering the long-standing practices of insurance. The sector is predicted to surpass $2.5 billion by 2025 because of its quick growth. This benchmark suggests a 30.3% compound annual growth rate from 2019 to 2025.

Use Cases of AI ML & Data Labeling in Insurance Sector

Fraud Detection in Insurance Claims:

Research conducted by the FBI on US insurance firms found that the annual cost of insurance fraud, or non-health insurance, is around $40 billion. This indicates that the average US household pays an additional $400 to $700 a year in premiums as a result of insurance fraud. These alarming figures highlight how urgently insurance firms need extremely accurate automated theft detection systems to improve their due diligence procedures.

Analysis of Property Damage:

Insurance companies face a difficult problem when calculating repair costs through manual intervention in damage assessment. According to a PwC analysis, using drones and artificial intelligence in the insurance sector can save the sector up to US$6.8 billion annually. By combining automated object detection with the power of drones, claim resolution times can be shortened by 25% to 50%. Vehicle parts deterioration can be identified using machine learning models, which can also assist in estimating repair costs.

Automated Inspections:

The procedure of filing a damage insurance claim begins with an inspection, regardless of the asset—a mobile phone, a car, or real estate. Manual inspection is an expensive proposition because it necessitates the adjuster/surveyor to travel and engage with the policyholder. Inspections can cost anything from $50 to $200. Since creating and estimating reports takes one to seven days, claims settlement would also be delayed.Insurance firms can examine car damage with AI-powered image processing. After that, the system produces a comprehensive assessment report that lists all vehicle parts that can be repaired or replaced along with an estimate of their cost. Insurance companies can reduce the cost of estimating claims and streamline the procedure. In addition, it generates reliable data to determine the ultimate settlement sum.

Automated Underwriting :

Traditionally, the analysis of past data and decision-making in insurance underwriting relied mostly on employees. In addition, they had to deal with disorganized systems, processes, and workflows to reduce risks and give value to customers. Intelligent process automation offers Machine Learning algorithms that gather and interpret vast volumes of data, streamlining the underwriting process.Moreover, it minimizes application mistakes, controls straight-through-acceptance (STA) rates, and enhances rule performance. Underwriters can concentrate solely on challenging instances that may necessitate manual attention by automating the majority of the procedure.

Pricing and Risk Management:

Price optimization uses data analytic techniques to determine the optimal rates for a particular organization while taking its goals into account. It also helps to understand how customers respond to various pricing strategies for goods and services. Generalized Linear Models, or GLMs, are primarily used by insurance companies to optimize prices in areas such as life and auto insurance. By using this strategy, insurance businesses can improve conversion rates, balance capacity, and demand, and gain a deeper understanding of their clientele.Automation of risk assessment also improves operational effectiveness. Automation of risk assessment increases efficiency by fusing RPA with machine learning and cognitive technologies to build intelligent operations. Insurance companies can provide a better customer experience and lower turnover because the automated procedure saves a lot of time.

Here’s how Fraud Detection can be achieved from Data Labeling?

Fraud detection systems that use machine learning (ML) rely on algorithms that can be taught with historical data from both legitimate and fraudulent acts in the past. This allows the algorithms to autonomously discover patterns in the events and alert users when they recur.Large volumes of labeled data, previously annotated with specific labels characterizing its main attributes, are used to train ML-based fraud detection algorithms. Data from both genuine and fraudulent transactions that have been labeled as “fraud” or “non-fraud” accordingly may be included in this. The system receives both the input (transaction data) and the desired output (groups of classified examples) from these labeled datasets, which is significantly a laborious manual tagging process. This allows algorithms to determine which patterns and relationships link the datasets and use the results to classify future cases.

In addition to the areas highlighted above, there are a few more in the insurance industrywhere AI & Data Labeling are essential to delivering the best possible client experience.

Customer segmentation

Workstream balancing for agents

Self-servicing for policy management

Claims adjudication

Policy Servicing

Insurance distribution

Speech analytics

Submission intake and many more

If you want to create a faster and better experience for your customers in the Insurance field, please visit our website Data Labeler, and contact us.

0 notes

Text

Realize the Real Power of High-Quality Data Annotation in AI Development

Consequences of Poor Data Annotation

The low quality of the data is the cause of many algorithmic issues. Data annotation, or the practice of labeling data with certain attributes or characteristics, is one technique to increase the quality of the data for ML algorithms.

To give an algorithm in identifying other unlabeled objects, an archive of photographs of fruits, for instance, may be manually labeled as apple, pear, watermelon, and so on. Although data annotation can be a time-consuming, manual task, it can become increasingly important as datasets grow enormous and complicated.

Because models must be continually constructed, retrained, and run, the effects of improperly labeled data can be both frustrating and expensive.

Significance of Data Annotations & Training Data

Giving labels and metadata tags to texts, videos, photos, or other content forms is a component of the training data process known as data annotation. Because they lay the foundation for building machine learning models, data annotations are the foundation of every algorithm. Technical representations, procedures, different tool kinds, systemarchitecture, and a wide range of ideas unique to training data alone are just a few of thefactors that are involved in the process.

The process of data annotation involves finding and interpreting the desired human aim into a machine-readable format using high-quality training techniques or data. The relation ship between a human-defined goal and how it relates to actual model usage determines how effective a solution is. The effectiveness of the model’s training, adherence to the objectives, and the capacity of training data are the main factors.

When the circumstances are actual and accurate, training data is effective. Long-term results may be impacted if the conditions and raw data do not fully reflect all variables and scenarios.

Use Case: Annotated Training Data in Healthcare

In healthcare high-quality training data is crucial for AI-based operations. In some application areas, including medication research, gene sequencing, treatment predictions, and automated diagnosis, annotations in AI and machine learning in healthcare are necessary.

To provide high-quality diagnostic solutions, one needs precise and accurate data that has been tagged and annotated. For example, imaging files, CT or MR scans, pathology sample data, and other databases are utilized to construct algorithms in the healthcare industry. Annotation is also used to identify tumors by identifying cells or ECG rhythm strip designations.

Three major applications for this technology in Healthcare

Perception exercises

Diagnostic support

Treatment techniques

High-quality Data Sets and Data Labeling Service

Businesses need high-quality training data that might be used to feed the machine algorithms in order to achieve the desired results. Firms need experienced labeling partners who can perform data training jobs quickly and provide first-rate services to obtain data sets with that degree of quality.

When it comes to providing the best services available, Data Labeler offers high-quality, annotated training data with the assistance of qualified experts.

Take the first step in creating compelling AI projects and gain access to accurate and high-quality data sets. Contact us to know how Data Labeler can help you on this journey

0 notes

Text

What is the future of Data Labeling and how it matters?

Data Labeling: What is it?

It takes a lot of data to develop AI. When developing AI, researchers attempt to replicate the human learning process. Machine learning is a whole branch of AI science that is devoted to this procedure. Data labeling and data annotation are the same thing. Both terms refer to the same method of annotating text, video, and images with the aid of specialized text annotation software and image annotation tools. Data labeling is the process of preparing unprocessed data for AI creation. Using specialistsoftware tools, it entails tagging and labeling the data with relevant information. The type ofdata used can vary depending on the AI use case — text, images, and videos can all beimproved through proper data labeling.

Labeled vs Unlabeled Data

The accuracy of the data utilized to train AI models is crucial. The data collection that accurately depicts reality is referred to as “ground truth” in AI data science. It is thefoundation for a future AI platform’s training. Future AI workflows will be impacted if the ground truth is faulty or wrong. Developers spend a lot of time choosing and curating training data because of this. Gathering and assembling training data is thought to account for 80% of the work put into an AI project. The “Human in the Loop” paradigm (HITL) has been a constant in AI research over the years.The key theory guiding the development of AI is its potential to displace people from risky, monotonous, and time-consuming tasks. One of the paradoxes of AI development is that some of its most important components need a lot of manual labor. Data labeling is the primary illustration. You need high-quality data sets to develop algorithms that are more effective and error-free.

Advent of Data Labeling Services

Services for data labeling are necessary to “teach” algorithms how to recognize particularitems. Businesses employ a variety of cutting-edge ways to create training data sets. One method effectively provides software eyes to observe the world, while the other offers it the ability to comprehend spoken and written language from people. They have already had a significant impact on modern human life as a whole.

Bounding Boxes: For Object Detection

Polygons: For Semantic & Instance Segmentation

Points: For Facial Recognition & Body Pose Detection

Texts: For Image Captioning

Select: For Image Classification

Semantic Segmentation: For More Complex Image Classification

Wondering how Data Labeler can help you?

Data Labeler digitally delineates and identifies an object in an image or video so that the AI can later learn to recognize it. To increase its capacity to correctly identify the object without any tags in the future, the AI needs a wide variety of samples of tagged data because objects like vehicles can arrive in a variety of shapes, sizes, and colors. Our data labeling specialists have many hours of expertise working on computer vision, natural language processing, and content services projects for the geospatial, financial,medical, and autonomous vehicle industries. Contact us for the best Data Labeling Services !

0 notes

Text

How Data Labeling is Advancing & Benefitting the E-commerce World?

Three variables account for a substantial portion of the market’s need for Data Annotation Tools..

Tools for automatically classifying data and an increase in the utilization of cloud computing services.

Companies are adopting data annotation tools more frequently to precisely classify vast amounts of AI training data.

To enhance driverless ML models, there is a growing requirement for well-annotated data as investments in autonomous driving technologies rise. Data annotation is anticipated to advance significantly and become increasingly more integrated as the digital environment changes in the twenty-first century. The development of mobile computing and digital image processing is a significant driver of such changes.

Here’s how Data Labeling is paving the way for E-commerce Sector

Data labeling in new retail has been hailed as a revolutionary idea and is now being sold commercially in some areas. It can save labor expenses, enhance customer service, streamline business operations, and increase consumer insights. Due to its seamless blending of the physical and digital worlds, new retail is quickly taking over as the dominant model in our culture.

Object recognition: Models for object recognition and classification in unmanned stores aid in automating the entire shopping process. In order to assist a virtual checkout, machine learning models for automatic product recognition, for instance, can determine which items a customer has in their cart. To grasp what goods are on each image, which article numbers correspond to each product, which brand, which packaging size, supplier information, etc., these models first need to be fed with thousands of tagged images. Additionally, inventory management and visual merchandising can be automated, making it simpler to identify when items need to be restocked on the shelf or alerting the visual merchandiser to adjust how their products are displayed in-store.

Customer data

Without clean and pertinent data, the e-commerce industry cannot grow. Consider the data on the consumer and their preferences for the product or brand, as well as the underlying information about the costs, special offers, payment options, etc. This data contains the customer’s interactions with and impressions of the website where the good or service is offered. E-commerce firms and merchants must explore the various client categories in order to better serve customers. Which segments of consumers behave the best? What are their tastes, and which extra item are they likely to add to their basket along with the current one? These data points can be categorized or labeled to assist define customer categories and better service customers.

Facial Recognition: For a more individualized customer experience, facial recognition technologies can be utilized to identify consumer profiles, behaviour and produce predictive styling. Consumer analysis can be completed and saved for use in persona profiling and subsequent visits. Sadly, not all client segments have been adequately represented in the datasets that already exist, leading to outliers, access denials, or biased data insights. Therefore, it’s crucial that databases for facial recognition are impartial, diversified in all respects, and indicative of the people who actually go to that particular place or store.

Visual Search: Using recognition software, visual search is a developing technique that enables users totake photos of apparel or advertisements and link them straight to product pages. Because it’s now much simpler to find the item you’re looking for, this greatly enhances the consumer experience.

Receipt Transcription: A significant amount of data, including information on purchases, shipment, and handling, is produced through receipt transcription. The back-end system will be simplified and labor expenses will be reduced thanks to the automatic transcription and labeling of this data from the POS system. Data labeling will thus greatly lessen the workload of store workers and reps.

Want to engage your AI projects by taking the initial step and gaining access to precise, high-quality data sets?Data Labeler delivers high-quality, annotated training data with the help of qualified experts in order to deliver the finest services possible. To learn how Data Labeler can assist you on this path, get in touch with us.

0 notes

Text

5 Strategies to make way for Successful Data Labeling Operations

The global market for data annotation and labeling reached USD 0.8 billion in 2022 and is projected to grow at a CAGR of 33.2% to reach USD 3.6 billion by the end of 2027. Data labeling activities are now a crucial part of creating and training a computer vision model.

Managing the entire lifecycle of data labeling and data annotation, from sourcing and cleaning through training and creating a model production-ready, is the responsibility of the function known as data labeling operations.

Let’s now examine 5 methods for designing efficient Data Labeling Operations

1).Recognize the use case :

Data ops and ML leaders must be aware of the issues they are attempting to address for a given use case before starting a project. Creating a list of questions and discussing them with senior leadership is a useful activity for figuring out the goals of the project and the best ways to achieve them. Now it’s time to start putting together a team, methods, and workflows for data labeling activities once you’ve gone through the answers to these questions.

2).Create instructions and documentation of labeling workflows :

If you approach data operations from a data-centric perspective, you can treat datasets — including the labels and annotations — as a component of your project’s and organization’s intellectual property (IP). making it much more crucial to record the entire process. Labeling process documentation enables the development of SOPs, which increases the scalability of data operations. Additionally, it is crucial for keeping a data pipeline that is transparently auditable and compliant as well as protecting datasets from data theft and cyberattacks.

Before a project begins, operational workflows must be designed. If you don’t, once the data starts streaming through the pipeline, the entire project is at risk. Clarify your procedures. Before the project begins, get the necessary operating procedures, budget, and senior leadership support.

3).Make your ontology extensible to account for the long term:

It’s crucial to make your ontology expandable whether the project requires video or picture annotation, or if you’re employing an active learning pipeline to quicken a model’s iterative learning process. An extendable ontology makes it simpler to scale, regardless of the project, use case, or industry, including whether you’re annotating medical image files like DICOM and NIfTI.

4).Iterate quickly and incrementally:

Start small, learn from tiny failures, iterate, and scale your data labeling operations routine are the best ways to ensure success. If not, you run the danger of attempting to annotate and categorize too much data at once. Because annotators make mistakes, there will be more mistakes to correct. Starting with a larger dataset and trying to annotate and classify it will take more time than if you start with a smaller dataset. You can scale the operation after everything is functioning properly, including the integration of the appropriate labeling tools

5).Implement quality control, use iterative feedback loops, and keep getting better:

Quality assurance/control and iterative feedback loops are essential to developing and putting into practice data operations. Labels must be verified. Make sure the annotation teams are using them properly. Check the model for bias, mistakes, and problems. There will always be mistakes, inaccurate information, incorrectly labeled picture or video frames, and bugs. You may lessen the quantity and effect of errors, inaccuracies, incorrectly labeled photos or video frames, and bugs in training data and production-ready datasets by using suitable AI-powered, automated data labeling, and annotation technology.

Select an automation technology that works with your quality control workflows to hasten the correction of defects and errors. This will provide you with more time and more efficient feedback loops, especially if you’ve used micro-models, active learning pipelines, or automated data pipelines.

Create More Efficient Data Labeling Operations with Data Labeler

You can create data labeling operations that are more productive, safe, and scalable with Data Labeler, an automated tool used by top-tier AI teams. Data Labeler was developed to increase the effectiveness of computer vision projects’ automatic image and video data labeling. Additionally, our system reduces errors, flaws, and biases while making it simpler, quicker, and more cost-effective to manage data operations and a group of annotators. Contact us to know more!

0 notes

Text

Realizing the ultimate power of Human-in-loop in Data Labeling?

As more automated systems, software, robots, etc. are produced, the world of today becomes more and more mechanized. The most advanced technologies, machine learning, and artificial intelligence are giving automation a new dimension and enabling more jobs to be completed by machines themselves.

The term “man in the machine” is well-known in science fiction books written in the early20th century. It is obvious what this phrase refers to in the twenty-first century: artificial intelligence and machine learning. Natural intelligence — humans in the loop — must be involved at many stages of the development and training of AI. In this loop, the person takes on the role of a teacher.

What does “Human-in-Loop” mean?

Like the humans who created them, AIs are not perfect. Because machines base their knowledge on existing data and patterns, predictions generated by AI technologies are not always accurate. Although this also applies to human intellect, it is enhanced by the utilization of many inputs in trial-and-error-based cognition and by the addition of emotional reasoning. Because of this, humans are probably more likely to make mistakes than machines are to mess things up.

A human-in-the-loop system can be faster and more efficient than a fully automated system, which is an additional advantage.

Humans are frequently considerably faster at making decisions than computers are, and humans can use their understanding of the world to find solutions to issues that an AI might not be able to find on its own.

How Human-in-the-loop Works and Benefits Data Labeling & Machine Learning?

Machine learning models are created using both human and artificial intelligence in the“human-in-the-loop” (HITL) branch of artificial intelligence. People engage in a positive feedback loop where they train, fine-tune, and test a specific algorithm in the manner known as “human-in-the-loop”.It typically works as follows: Data is labeled initially by humans. A model thus receives high-quality (and lots of) training data. This data is used to train a machine learning system to make choices. The model is then tuned by people.

Humans frequently assess data in a variety of ways, but mostly to correct for overfitting, to teach a classifier about edge instances, or to introduce new categories to the model’s scope. Last but not least, by grading a model’s outputs, individuals can check its accuracy, particularly in cases where an algorithm is too underconfident about a conclusion. It’s crucial to remember that each of these acts is part of a continual feedback loop. By including humans in the machine learning process, each of these training, adjusting, and testing jobs is fed back into the algorithm to help it become more knowledgeable, confident, and accurate.

When the model chooses what it needs to learn next — a process called active learning — and you submit that data to human annotators for training, this can be very effective.

When should you utilize machine learning with a Human in the loop?

Training: Labeled data for model training can be supplied by humans. This is arguably where data scientists employ a HitL method the most frequently.

Testing: Humans can also assist in testing or fine-tuning a model to increase accuracy. Consider a scenario where your model is unsure whether a particular image is a real cake or not.

And More…

Data Labeler is one of the best Data Labeling Service Providers in USA

Consistency, efficiency, precision, and speed are provided by their well-built integrated data labeling platform and its advanced software. Label auditing ensures that your models are trained and deployed more quickly thanks to its streamlined task interfaces.

Contact us to know more.

1 note

·

View note

Text

Leveraging Crowdsourcing for Large-Scale Data Annotation in Artificial Intelligence

Machine learning and deep learning, while revolutionary, necessitate massive amounts of data. Companies still need annotators to identify the data before they can utilize it to train an AI or ML model, despite automated data collecting methods like web scraping. Companies frequently resort to crowdsourced workforces for quick annotation when they’re pressed on time to develop an algorithm. But is it always the wisest choice to do so? Your data can essentially be annotated with crowdsourcing.

Why Crowdsourcing has become significant for all Business Enterprises?

Crowdsourcing can be used for a variety of tasks, such as website development andtranscription. Companies that seek to create new products frequently ask the public forfeedback. Companies don’t have to rely on tiny focus groups when they can reach millionsof users through social media, ensuring that they get opinions from people from differentsocioeconomic and cultural backgrounds. Consumer-focused businesses frequently gain bybetter understanding their customer and fostering more engagement or loyalty.

Businesses must evaluate the quality of various data points when using crowdsourcing alone to make decisions from a variety of network sources. They must also come up with alternative solutions to address any regional variations that may exist, before connecting to the organizational objectives. Big data analytics was then shown to be quite helpful in ensuring the success of crowdsourcing. By applying known big data principles, businesses can find the genuine nuggets in crowdsourced data that drive innovation, development choices, and market practices. Crowdsourcing and big data analytics are strongly related to trends.

5 Top Advantages of Employing an Image Annotation Crowdsourcing

1. Less Effort :The key advantage of using a crowdsourcing service is that the practicalities of the process are taken care of for you. The service provider will already have a platform set up and complete the task for you at a far lower cost than you could do it yourself by using the crowdsourcing model.

2. A Bigger, Better Crowd: Additionally, a service provider will be able to supply a far larger population than you can locate on your own. This is primarily because they have invested years building up their following and making sure the appropriate people are hired.

3. Responsibility : Shifting The crowdsourcing of image annotation will involve certain ethical and legal ramifications because images are regarded as biometrics data. By using a crowdsourcing platform, you relieve yourself of these obligations and avoid moral and legal entanglements.

4. Higher Caliber: Because they have more experience than you do in this field, crowdsourcing service providers also follow quality assurance procedures and standards. Your service provider will make sure to uphold your image annotation quality criteria; all you need to do is make them clear.

5. Added security: A better level of data security can also be provided by crowdsourcing service providers. To protect the data, the service providers can make sure that the annotators sign non-disclosure agreements and adhere to rigid security procedures.

Crowdsourcing the Labeling of Data

Data Labeling is a task that data science teams prefer to outsource rather than do themselves. These advantages are provided by doing so:

Reduces the need to hire tens of thousands of temporary workers.

Reduces the workload of data scientists

Investment in annotating technologies is necessary for internal data labeling.

Crowdsourcing eliminates this cost (subject to comparable costs)Most platforms for crowdsourcing appoint independent contractors from around the worldto annotate data. Crowdsourcing platforms, at their most basic, divide the project intosmaller jobs, which are then assigned to several freelancers.

Here’s how Data Labeler can help you

With its sophisticated algorithms and integrated Data Labeling platform provides consistency, efficiency, accuracy, and speed. Label auditing ensures that your models are trained and deployed more quickly thanks to its streamlined task interfaces. For Machine Learning and Artificial Intelligence (AI) projects, Data Labeler specializes in providing precise, practical, customized, accelerated, and quality-labeled datasets. Contact us now!

0 notes

Text

How AI has been Flourishing the Real Estate with Advanced Investment Decision-Making & Property Search?

Big or small, today almost all industrial sector is revolutionized via Artificial Intelligence. Software programs with self-learning algorithms are known as artificial intelligence (AI) tools.

Various advanced AI theories can be applied to enhance and expedite several difficult procedures. They improve the productivity of real estate participants like sellers, brokers, asset managers, and investors in this way which ultimately leads to cost savings in real estate transactions.

Artificial Intelligence & Machine Learning has been transforming the Real Estate Sector:

The artificial intelligence (AI) market for real estate is divided into three categories: technology, solution, and region. In terms of solutions, chatbots had 28.98% of the market in 2022 and are predicted to maintain their dominance during the projected period. By gathering client information and assisting in increasing lead generation and content marketing, AI-enabled chatbots are helpful to many real estate organizations. A chatbot is a fantastic virtual assistant for customers and a great way to send customized content straight to leads.

Customer behavior is mentioned before chatbots. Analytics and Advanced Property Analysis each hold a sizeable percentage of the market. Real estate businesses are using AI to gather, report, and analyze massive amounts of data to derive insightful information about their clients and better meet their needs. The demand for the technology will be influenced by its capacity to carry out operations including processing natural language and recognizing images, sounds & text using sophisticated machine learning algorithms.

Four Advanced Ways that AI Impact the Real Estate Sector

Intelligent Property Search & Smart Recommendations: By focusing on their target market and increasing the value of their products, large estate companies with a large inventory of properties for sale can save clients a great deal of time. Using a client’s preferences and previous viewings, AI may create personalized real estate listings. Additionally, it can use profiling techniques to show suitable offers to new customers based on their demographic data or goods that have previously worked well with customers who are similar to them.AI-powered real estate search engines place a strong emphasis on the user experience by providing simple interfaces and quick search processes. Users receive outstanding and relevant property recommendations thanks to AI systems that continuously improve their recommendations based on user feedback and behavioral assessments.

Predictive Analytics for Market Analysis & Investment: One of the most popular and useful uses of AI in real estate is predictive analytics. It usually serves as the basis for any estimate of a property’s value that you may see. To relieve consumers of the headache of determining the market worth of a property, artificial intelligence algorithms were developed. By taking into account expanding populations, employment prospects, the construction of new infrastructure, and investor sentiment, AI-driven predictive analytics may generate exact estimates. This aids investors in locating areas with high growth potential and directs them in choosing wisely.

Chatbots & Virtual Assistants: NLP approaches can be used by computers with AI technologies to interpret and comprehend user questions. Users can take part in conversational searches to ask questions in everyday speech and receive suggestions for properties that are pertinent. A seamless user experience is provided by chatbots and virtual assistants that use NLP to comprehend the needs of users, provide appropriate responses, and provide property recommendations. Detailed information on residential properties, such as specifications, amenities, location, nearby educational institutions, and available transportation alternatives, can be provided via real estate chatbots.

Robust Marketing & Advertising Initiative: Agents can now have access to cutting-edge tools and technologies that have completely changed how they approach marketing. By understanding client preferences and interests and ensuring that the right properties are shown to the right market, AI-driven systems can tailor marketing campaigns. Lead generation is improved, conversion rates are increased, and marketing ROI is maximized. On a variety of platforms, including search engines, social media, and real estate websites, advertising campaigns can be automated.

Artificial Intelligence is constantly advancing various channels of the Real Estate Sector

The use of AI in real estate has huge promise. By enhancing productivity, consumer satisfaction, and decision-making processes, AI will revolutionize the industry. Real estate marketers might save time and money while still providing top-notch customer service by utilizing the possibilities of AI technologies.

As AI advances, the landscape of property searching and suggestions will change, providing users greater control over their real estate-related activities and enhancing their accuracy, productivity, and convenience.

Are you an Enthusiast, a Business Person, or a Technologist?

Please refer to our use cases to know everything about what we provide. Feel free to share any Use Case regarding Data Labeling & Annotation, we are open for discussion. Contact us!

0 notes

Text

Top 7 branches of Artificial Intelligence you shouldn’t Miss Out on

This new and emerging world of big data, ChatGPT, robotics, virtual digital assistants, voice search, and recognition has all the potential to change the future, regardless of how AI affects productivity, jobs, and investments. By 2030, AI is predicted to generate $15.7 trillion for the global economy, which is more than China and India currently produce together.

Many different industries have seen major advancements in artificial intelligence. Systems that resemble the traits and actions of human intelligence are able to learn, reason, and comprehend tasks in order to act. Understanding the many artificial intelligence principles that assist in resolving practical issues is crucial. This can be accomplished by putting procedures and methods in place like machine learning, a subset of artificial intelligence.

Computer vision :The goal of computer vision, one of the most well-known disciplines of artificial intelligence at the moment, is to provide methods that help computers recognise and comprehend digital images and videos. Computers can recognise objects, faces, people, animals, and other features in photos by applying machine learning models to them. Computers can learn to discriminate between different images by feeding a model with adequate data. Algorithmic models assist computers in teaching themselves about the contexts of visual input. Object tracking is one example of the many industries in which computer vision is used for tracing or pursuing discovered stuff.

Classification of Images: An image is categorised and its membership in a given class is correctly predicted.

Facial Identification: On smartphones, face-unlock unlocks the device by recognising and matching facial features.

Fuzzy logic: Fuzzy logic is a method for resolving questions or assertions that can be true or untrue. This approach mimics human decision-making by taking into account all viable options between digital values of “yes” and “no.” In plain terms, it gauges how accurate a hypothesis is. This area of artificial intelligence is used to reason about ambiguous subjects. It’s an easy and adaptable way to use machine learning techniques and rationally mimic human cognition.

Expert systems :Similar to a human expert, an expert system is a computer programme that focuses on a single task. The fundamental purpose of these systems is to tackle complex issues with human-like decision-making abilities. They employ a set of guidelines known as inference rules that are defined for them by a knowledge base fed by data. They can aid with information management, virus identification, loan analysis, and other tasks by applying if-then logical concepts.

Robotics

Robots are programmable devices that can complete very detailed sets of tasks without human intervention. They can be manipulated by people using outside devices, or they may have internal control mechanisms. Robots assist humans in doing laborious and repetitive activities. Particularly AI-enabled robots can aid space research by organisations like NASA. Robotic evolution has recently advanced to include humanoid robots, which are also more well-known.

Machine learning: Machine learning, one of the more difficult subfields of artificial intelligence, is the capacity for computers to autonomously learn from data and algorithms. With the use of prior knowledge, machine learning may make decisions on its own and enhance performance. In order to construct logical models for future inference, the procedure begins with the collecting of historical data, such as instructions and firsthand experience. Data size affects output accuracy because a better model may be built with more data, increasing output accuracy.

Neural networks/deep learning :Artificial neural networks (ANNs) and simulated neural networks (SNNs) are other names for neural networks. Neural networks, the core of deep learning algorithms, are modelled after the human brain and mimic how organic neurons communicate with one another. Node layers, which comprise an input layer, one or more hidden layers, and an output layer, are a feature of ANNs. Each node, also known as an artificial neuron, contains a threshold and weight that are connected to other neurons. A node is triggered to deliver data to the following network layer when its output exceeds a predetermined threshold value. For neural networks to learn and become more accurate, training data is required.

Natural language processing :With the use of natural language processing, computers can comprehend spoken and written language just like people. Computers can process speech or text data to understand the whole meaning, intent, and sentiment of human language by combining machine learning, linguistics, and deep learning models. For instance, voice input is accurately translated to text data in speech recognition and speech-to-text systems. As people talk with different intonations, accents, and intensity, this might be difficult. Programmers need to train computers how to use apps that are driven by natural language so that they can recognise and understand data right away.

About Us :Are you looking for Object Recognition or any other Data Labeling service? To improve the performance of your AI and ML models, Data Labeler offers best-in-class training datasets. Check out few Use Cases and contact us if you have any in mind.

0 notes

Text

Meta AI’s Unidentified Video Objects transforms Object Segmentation Sector within the Data Labeling Industry

One of the most active subfields in computer vision research in recent years is object segmentation. That’s because it’s important to accurately recognize the objects in a scene or comprehend their location. As a result, various techniques, such as Mask R-CNN and Mask Prop, have been put forth by researchers for segmenting objects in visual situations.

For purposes ranging from scientific image analysis to the creation of aesthetic photographs, computer vision significantly relies on segmentation, the act of identifying which pixels in an image represents a specific item. But to create an accurate segmentation model for a specific task, technical specialists are often required. They also need access to AI training infrastructure and significant amounts of meticulously annotated in-domain data.

Unidentified Video Objects by Meta AI: What is it?

Unidentified Video Objects (UVO), a new benchmark to aid research on open-world segmentation, a crucial computer vision problem that seeks to recognize, segment, and track every object in a video thoroughly, was created. UVO can assist robots emulate humans’ ability to recognize unexpected visual objects, whereas generally machines must acquire specific object concepts to recognize them. A recent Meta AI study describes an initiative named “Segment Anything,” which seeks to “democratize segmentation” by offering a new job, dataset, and model for picture segmentation. Their Segment Anything Model (SAM) and the largest segmentation dataset ever, Segment Anything1-Billion mask dataset (SA-1B), were developed.

Earlier there are two main categories of Segmentation

In the past, there were primarily two types of segmentation-related tactics. The first, interactive segmentation, could segment any object, but it needs a human operator to adjust a mask. However, predetermined item groups could be segmented thanks to automatic segmentation.

Nevertheless, training the segmentation model requires a significant number of manually labeled items, in addition to computer power and technological know-how. Neither technique provided a completely reliable, automatic segmentation mechanism.

Both of these more general classes of procedures are covered by SAM. It is a unified model that carries out interactive and automated segmentation operations with ease.

By simply constructing the suitable prompt, the model can be utilized for a variety of segmentation tasks thanks to its adaptable prompt interface. SAM is trained on a wide variety of task that are high-quality dataset of more than 1 billion masks, which enables it to generalize to new kinds of objects and images. Because of this capacity to generalize, practitioners will often not need to gather their segmentation data and modify a model for their use case.

With the help of these features, SAM can switch between domains and carry out various operations.

The following are some of the SAM’s capabilities:

With a single mouse click or the interactive selection of inclusion and exclusion locations, SAM makes object segmentation easier. Another stimulus for the model is a boundary box.

SAM’s capacity to provide competing legitimate masks in the face of object ambiguity is a key characteristic of real-world segmentation issues.

Any object in a picture can be instantaneously detected and hidden with SAM.

SAM can instantaneously build a segmentation mask for any prompt after pre calculating the picture embedding, enabling real-time interaction with the model. SAM allows for the rapid collection of new segmentation masks. It takes only roughly 14 seconds to complete an interactive mask annotation. This model is 2.5 times faster than the previous greatest data annotation effort, which was also model-assisted compared to previous large-scale segmentation data collection efforts. SAM is all set to empower future applications from several sectors which would require object or image segmentation.

About Us: Data Labeler, an emerging Data Labeling & Annotation Entity, offers accurate, convenient, customized, expedited, and high-quality labeled datasets for Machine Learning and AI initiatives .Do you have a specific use case in mind? Contact us now!

0 notes

Text

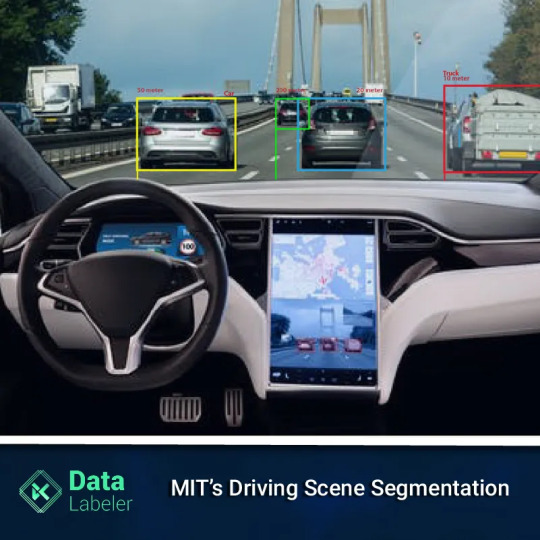

How MIT researchers are utilizing AI to improve Image Segmentation on Self-driving Cars

Deep learning (DL), machine learning (ML), and artificial intelligence (AI) have recently made significant strides, and this has led to a variety of new applications for these techniques. Self-driving automobiles are one such application, which is expected to have a significant and revolutionary impact on society and how people commute. These cars will represent the first significant integration of personal robots into human society, notwithstanding the early and ongoing resistance to domesticating technology.

Rise of Autonomous Vehicles

Autonomous vehicles have arrived and will remain. Although they are not yet widely used or accepted, that day will come. The majority of the big automakers are actively investigating autonomous vehicle programme and carrying out considerable on-road testing. The advanced driver assistance systems (ADAS) are a type of technology that are already included in many new vehicles in the United States. An infrastructure for autonomous vehicles is becoming more effective as technology develops. But maintaining public acceptance of these cars will require resolving persistent issues with safety, security, and controlling public perception and expectations.

Major manufacturers’ development efforts for autonomous driving are being guided by a number of important potential benefits.

Artificial intelligence (AI) enhanced features in Autonomous Driving

A collection of distinct technologies, AI is a primary area of attention for autonomous vehicle testing and development. Only a small number of manufacturers have produced autonomous vehicles with advanced AI technologies like personal AI assistants, radar detectors, and cameras, all of which prioritize security among other tasks. The AI-enhanced capabilities that these self-driving cars have included represent a significant improvement over their predecessors.

Deep learning enables features including speech and voice recognition, voice search, image recognition and processing, motion detection, and data analysis by simulating neuron activity. Together, these features enable the cars to recognise other vehicles, pedestrians, and traffic lights and follow pre-planned routes.

Autopilots

Tesla recently produced self-driving electric vehicles with autopilots, which allow for automatic steering, braking, lane change, and parking activities. These automobiles also have the potential to lower emissions globally, which is a significant advancement over fuel-powered vehicles. Many of the world’s largest cities now have autonomous vehicles on the road. Even heavy-duty vehicles without drivers are now able to travel long distances while carrying cargo. The number of deadly accidents, many of which are brought on by human error, has declined along with transportation expenses. Since autonomous vehicles are lighter than conventional cars, less energy is used.

Real-time Route Optimisation

Autonomous vehicles communicate with other vehicles and the infrastructure for traffic management to include current data on traffic volumes and road conditions into route selection. Greater lane capacity is possible since autonomous vehicles can drive at higher speeds and with closer vehicle proximity.

MIT’s Innovative Driving Scene Segmentation Researchers are compiling data to quantify drivers’ actions, such as how they react to different driving conditions and carry out additional actions like eating or holding conversations while driving. The study looks at how drivers react to alarms (lane keeping, forward collision, proximity detectors, etc.) and interact with assistive and safety technologies (such as adaptive cruise control, semi-autonomous parking assistance, vehicle infotainment, and communications systems, smartphones, and more).

The purpose of Deep Lab, a cutting-edge deep learning model for semantic image segmentation, is to give semantic labels (such as person, dog, or cat) to each pixel in the input image. To use the camera input in the driving context to grasp the front driving scene semantically. This is crucial for maintaining driving safety and a prerequisite for all forms of autonomous driving. The program’s goal is to generate human-centric insights that will advance the development of automated vehicle technology and raise consumer awareness of appropriate technology use.

Data Labeling plays a great role…Data labeling improves the context, quality, and usability of data for individuals, teams, and businesses. Specifically, you can anticipate More Accurate Prognostications. Accurate data labeling improves quality control in machine learning algorithms, enabling the model to be trained and produce the desired results.

Data Labeler is an expert in providing precise, practical, personalized, accelerated, and quality-labeled datasets for machine learning and artificial intelligence projects. Do you have a scenario in mind? Contact us now!

0 notes

Text

Know how to ensure best Data Labeling Practices & Consistency

When we refer to “quality training data,” we mean that the labels must be both accurate and consistent. Accuracy is the degree to which a label conforms to reality. The degree of agreement between several annotations on diverse training objects is known as consistency.

Emphasizing the fundamental law with training data for projects involving the creation of artificial intelligence and machine learning by mentioning this. Poor-quality training datasets that are provided to the AI/ML model might cause a variety of operational issues.

The ability of autonomous vehicles to operate on public roads, depends on the training data. The AI model is easily capable of mistaking people for objects or vice versa when given low-quality training data. Poor training datasets can lead to significant accident risks in either case, which is the last thing that makers of autonomous vehicles would want for their projects.

Data labeling quality verification must be a part of the data processing process for high-quality training data. You will need knowledgeable annotators to correctly label the data you intend to employ with your algorithm in order to produce high-quality data.

Here’s how to ensure consistency in Data Labeling process…

Rigorous data profiling and control of incoming data

In most cases, bad data comes from data receiving. In an organization, the data usually comes from other sources outside the control of the company or department. It could be the data sent from another organization, or, in many cases, collected by third-party software. Therefore, its data quality cannot be guaranteed, and a rigorous data quality control of incoming data is perhaps the most important aspect among all data quality control tasks.

Examining the following aspects of the data:

Data format and data patterns

Data consistency on each record

Data value distributions and abnormalities

Completeness of the data

Designing the data pipeline carefully to prevent redundant data: Duplicate data occurs when all or a portion of the data is produced from the same data source using the same logic, but by separate individuals or teams most likely for various later uses. A data pipeline must be precisely specified and properly planned in areas such as data assets, data modeling, business rules, and architecture in order for an organization to prevent this from happening .Additionally, effective communication is required to encourage and enforce data sharing throughout the company, which will increase productivity overall and minimize any possible problems with data quality brought on by data duplication.

Accurate Data Collection Requirements

Delivering data to clients and users for the purposes for which it is intended is a crucial component of having good data quality.

It is difficult to show the data effectively. It takes careful data collection, analysis, and communication to truly understand what a client is searching for. The need should include all data situations and conditions; if any dependencies or conditions are not examined and recorded, the requirement is deemed to be lacking. Another crucial element that should be upheld by the Data Governance Committee is the requirement’s clear documentation, which should be accessible and easy to share. Another crucial element is having clear requirements documentation that is accessible and shareable.

Compliance with Data Integrity:

Not all datasets are able to reside in a single database system when the volume of data increases along with the number of data sources and deliverables. Therefore, applications and processes that are defined by best practices for data governance and integrated into the design for implementation must be used to ensure the referential integrity of the data.

Data pipelines with Data Lineage traceability integrated

When a data pipeline is well-designed, the complexity of the system or the amount of data should not affect how long it takes to diagnose a problem. Without the data lineage traceability integrated into the pipeline, it can take hours or days to identify the root cause of a data problem.

Aside from data quality control programs for the data delivered both internally and externally, good data quality demands disciplined data governance, strict management of incoming data, accurate requirement gathering, thorough regression testing for change management, and careful design of data pipelines.

Boost Machine Learning Data Quality with Data Labeler

Maintaining consistency, correctness, and integrity throughout your training data can be logistically feasible or dead simple.

What distinguishes them? Your data labeling tool will determine everything. Data Labeler makes it simple to assess data quality at scale thanks to features like confidence-marking and consensus as well as defined user roles. Contact us to know more!

0 notes

Text

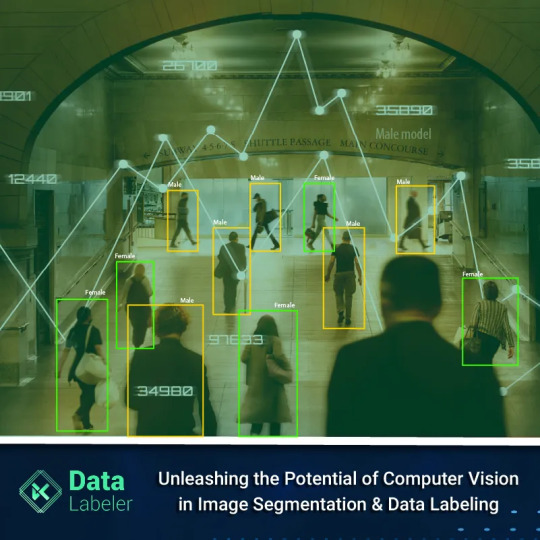

How Computer Vision is aiding the Image Segmentation & Data Labeling Industry?

The size of the global market for computer vision was estimated at USD 11.22 billion in2021, and it is anticipated to increase at a 7.0% CAGR from 2022 to 2030. Computer vision systems utilizing artificial intelligence (AI) are becoming more and more common in a range of applications, such as consumer drones and fully or partially autonomous vehicles.

The Role of Computer Vision in Image Segmentation

Recent developments in computer vision, including image sensors, sophisticated cameras, and deep learning methods, have increased the potential applications for computer vision systems across a range of sectors. Sectors include education, healthcare, robotics, consumerelectronics, retail, manufacturing, and security & surveillance, among others.

The partition of a digital image into several segments (objects) is known as image segmentation. Segmentation aims to transform an image’s representation into one that is more meaningful and understandable.

Various Image Segmentation Types

Based on the quantity and type of information they communicate, image segmentation tasks can be divided into three groups: semantic, instance, and panoptic segmentation. Semantic segmentation (not instance-based)

The process of semantic segmentation, often referred to as non-instance segmentation, aids in describing the location of the items as well as their form, size, and shape.

It is primarily applied when a model needs to know for sure whether or not an image contains an object of interest and which portions of the image do not. Without taking into account any further information or context, pixels are simply labeled as belonging to acertain class.

Segmentation by Instance

The practice of segmenting objects by their presence, position, quantity, size, and shape is known as instance segmentation. With each pixel, the objective is to better comprehend the image. To distinguish between objects that overlap or are similar, the pixels are categorized based on “instances” rather than classes.

Pan-optic segmentation

Since it combines semantic and instance segmentation and offers detailed data for sophisticated ML algorithms, panoptic segmentation is by far the most informative task.

Popular Image Segmentations with Computer Vision in Various Sectors Due to the complicated robotics tasks that self-driving cars must undertake and the need for a thorough grasp of their environment, it is particularly well-liked in the field of autonomous driving. Geosensing for mapping land use with satellite imaging, trafficcontrol, city planning, and road monitoring are further geospatial uses for semanticsegmentation.

Precision farming robotic initiatives are aided in real-time to start weeding by semantic segmentation of crops and weeds. With the use of these sophisticated computer vision systems, manual agricultural activity monitoring has been greatly reduced.

Semantic segmentation makes it possible for fashion eCommerce firms to automate operations like the parsing of garments that are traditionally quite difficult.

The recognition of facial features is another popular topic of study. By analyzing facial traits, the algorithms can infer gender, age, ethnicity, emotion, and more. These segmentation tasks get more difficult due to elements like various lighting conditions,facial expressions, orientation, occlusion, and image resolution.

In the context of cancer research, computer vision technologies are also gaining ground in the healthcare sector. When examples are used to identify the morphologies of the malignant cells to speed up diagnosis procedures, segmentation is frequently utilized.

Are you prepping to begin your Image Segmentation Use case?

Reach out to our professionals in Data Labeler, so they can assist you in quickly and efficiently producing data that is appropriately labeled.

Data Labeler increases your competitive advantage, provides you with Unlimited support, and helps you grow exponentially.

Contact us now!

0 notes

Text

How Data Labeling & Annotation is aiding and advancing other Industrial Sectors?

Are you aware that almost 90% of data possessed by organizations is unstructured and is expanding at 55-65% each year?

There is a ton of unstructured data out there. Furthermore, high-quality training data are essential for completing AI/ML projects, and unstructured data poses security and compliance problems. So how do businesses deal with this, especially when constructing an AI/ML model and needing to supply the model with pertinent data so that it can process, give output, and draw inferences?

An AI & ML model’s output, however, is only as good as the data used to train it, as the model can only produce useful results when the algorithm is aware of the input. As a result, the data must be aggregated, categorized, and identified precisely. Data annotation is the term used to describe the process of marking, attributing, or tagging data.

Data Labeling & Annotation with Human Intelligence

By using data annotation, an AI model would be able to tell whether the data it receives is in the form of a video, image, text, graphic, or a combination of these formats. The AI model would then classify the data and carry out its responsibilities in accordance with the parameters set and its functionality. Your models will be correctly trained thanks to data annotation.

So, if you use the model for speech recognition, automation, a chatbot, or any other operation, you will obtain a fully-reliable model that produces the best outcomes .Humans in the loop and human intelligence are vital in the process of identifying, validating, and correcting problems with the model’s output in order to increase efficiency and allow for improvisation. Thus, data annotation and labeling can significantly improve an AI or ML program’s functionality while also reducing time-to-market and total cost of ownership. As technology develops quickly, data annotations will be necessary across all company sectors and industries to improve the quality of their systems.

Here is how Data Labeling Affects Different Sectors…

Automobile Supervised Machine Learning models are crucial to autonomous vehicles, including self-driving automobiles, long-haul trucks, and door-to-door delivery robots. Large volumes of annotated data are needed for these models in order to power fundamental features like lane detection, pedestrian detection, traffic-light detection, etc.

Manufacturing By 2035, it is predicted that 16 industries, including manufacturing, could experience up to a 40%rise in labor productivity because to AI-powered solutions. AI is transforming how manufacturing is viewed in the business, from automated assembly lines to defect identification and workplace security monitoring.

Healthcare The usage of AI in the healthcare sector is being adopted gradually. Huge amounts of labeled data have the potential to revolutionize industries as diverse as drug research and the detection of anomalies in MRI and X-ray images.

Insurance and Banking Banking is already undergoing a fundamental transformation, from automatic check verification to the use of AI for fraud detection. AI is being utilized in the insurance industry to automatically assess the degree of damage to vehicles, which is another interesting use.

Agriculture Another sector that is prepared for disruption by AI. Among the applications of AI in agriculture are weed detection, crop disease detection, and livestock management.

Retail The COVID incident revealed how retailers may utilize AI to track customer traffic. AI can also be utilized for self-checkout, cart-counting, and visitor sentiment analysis.

How Data Labeler helps in Data Labeling & Annotation Services:

For Machine Learning and Artificial Intelligence (AI) projects, Data Labeler specializes in providing precise, practical, customized, accelerated, and quality-labeled datasets.

Our Services

Highly accurate labeled data

Get options for real-time labeling

Guidance on labeling instruction

Easily scalable

Sophisticated workforce management software

Contact us now!

0 notes