#Create Histogram Chart

I’m envious of the Elves. What do you mean the science and mathematics we created aren’t relevant to them? What do you mean they don’t have to slave their lives away in a statistics class and cry because they mixed up a histogram and a frequency table? I think that’s bs. I’d rather catch a tornado in a jar than look at MORE CHARTS!

#slowly losing my sanity. is that what Brant felt like? or Fintan?#I feel like fintan when he was singing to the flowers and everyone looked at him like he had finally lost it#kotlc#keeper of the lost cities#kotlc fandom#kotlc thoughts

Data Visualization: Transforming Data into Insight

In an era in which information is produced at a remarkable pace, the ability to extract meaningful insights is more vital than ever. Data visualization plays a key role in this process, enabling individuals and organizations to understand complex data sets, identify trends, and communicate findings effectively. By converting abstract numbers into intuitive visuals, data visualization bridges the gap between raw data and human cognition, turning complexity into clarity.

The Importance of Data Visualization

Data visualization is the graphical representation of information and data. By using visual elements like charts, graphs, and maps, data visualization tools make it easier to see and understand patterns, trends, and outliers in data. Its importance lies in several key areas:

Improved Understanding: People generally grasp visual patterns much faster than the same information in text or tables (the often-quoted claim that visuals are processed 60,000 times faster than text has no clear source). Graphs and charts can reveal insights that would go unnoticed in spreadsheets.

Enhanced Communication: Well-crafted visualizations allow data to be shared in a way that is accessible to a broader audience, not just data analysts or statisticians.

Data-Driven Decision Making: In business, government, and medical research, visualizations support decision-making by clearly showing the implications of different data trends.

Pattern and Anomaly Detection: They help users quickly identify deviations, spikes, or drops in data, which could indicate opportunities or threats.

Types of Data Visualization

Data visualization encompasses a wide range of techniques, each suited to particular types of data and analytical needs. Some of the most commonly used types include:

1. Bar Charts

Bar charts are ideal for comparing quantities across categories. They are simple but effective for showing differences between groups.

2. Line Graphs

Often used to track changes over time, line graphs show trends and fluctuations, making them a favorite for time-series data.

3. Pie Charts

Pie charts show how parts make up a whole. They are best suited to simple, clear percentage data.

4. Histograms

Histograms show the distribution of a numeric variable by grouping its values into bins, making them useful for understanding spread, central tendency, and frequency.

5. Heat Maps

Heat maps use color gradients to show the magnitude of values across two dimensions.

6. Scatter Plots

Scatter plots are used to identify relationships between two variables, often revealing correlations or clusters in the data.

7. Box Plots

Box plots show the distribution of a dataset through its quartiles, highlighting the median, variability, and potential outliers.

8. Geospatial Maps

These visualizations display data associated with geographic regions and are widely used in demographic research, environmental monitoring, and logistics.

9. Dashboards

Dashboards combine multiple visualizations into one interface, providing a real-time overview of key metrics and performance indicators.
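To make the histogram and box plot entries concrete, here is a minimal Python sketch of the numbers each chart is built from. It assumes equal-width bins (with the maximum value placed in the last bin) and quartiles computed by statistics.quantiles with method="inclusive"; plotting tools may use other binning and quartile conventions, so their exact numbers can differ. The function names and sample scores are illustrative only.

```python
from statistics import median, quantiles


def histogram_counts(values, num_bins):
    """Count values per equal-width bin (assumed convention: the maximum
    value goes into the last bin). Returns (bin_edges, counts)."""
    if num_bins < 1:
        raise ValueError("num_bins must be at least 1")
    lo, hi = min(values), max(values)
    if lo == hi:
        raise ValueError("values must not all be equal")
    width = (hi - lo) / num_bins
    counts = [0] * num_bins
    for v in values:
        # Clamp so that v == hi lands in the final bin instead of overflowing.
        idx = min(int((v - lo) / width), num_bins - 1)
        counts[idx] += 1
    edges = [lo + i * width for i in range(num_bins + 1)]
    return edges, counts


def five_number_summary(values):
    """Return (min, Q1, median, Q3, max): the numbers a box plot draws."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return min(values), q1, median(values), q3, max(values)


if __name__ == "__main__":
    scores = [55, 62, 67, 70, 71, 75, 78, 80, 84, 91]  # made-up exam scores
    print(histogram_counts(scores, 4))
    print(five_number_summary(scores))
```

The histogram keeps only bin counts (bar heights), while the box plot reduces the same data to five numbers; that is why a box plot hides bimodality that a histogram can reveal.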

Tools for Data Visualization

A wide range of tools is available for creating effective data visualizations. Popular options include:

Tableau: A leading platform for interactive, shareable dashboards with drag-and-drop features.

Power BI: Microsoft's business analytics tool, with strong integration into the Microsoft Office ecosystem.

Google Data Studio (now Looker Studio): A free tool for building customizable reports, commonly connected to Google data sources.

ggplot2: A powerful R package for building sophisticated plots using the grammar of graphics.

Each tool offers different capabilities, and the right choice depends on the user’s technical expertise, the complexity of the data, and the desired outcomes.

Best Practices in Data Visualization

Creating effective data visualizations requires more than technical skill. It involves an understanding of design principles, cognitive psychology, and storytelling. Here are key best practices:

1. Know Your Audience

Tailor the visualization to the expertise and interests of your audience. What a data scientist finds intuitive might be confusing to a business executive.

2. Choose the Right Chart

Using an inappropriate chart type can mislead or confuse the viewer. For example, a line chart should not be used for unordered categorical data, because connecting the points implies a continuity between categories that does not exist.

3. Simplify and Clarify

Avoid clutter. Focus on essential data and remove unnecessary elements such as excessive gridlines, decorative images, or redundant labels.

4. Use Color Thoughtfully

Color can enhance understanding but can also mislead if used improperly. Stick to a consistent color scheme and use contrast to highlight key points.

5. Tell a Story

Effective data visualizations guide the viewer through a narrative. Highlight trends, anomalies, or correlations that support your message.

6. Maintain Integrity

Never manipulate axes or distort scales to exaggerate findings. Ethical visualization ensures accurate representation of data.
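The integrity rule can be quantified. The sketch below assumes Edward Tufte's "lie factor" (the size of an effect shown in a graphic divided by the size of the effect in the data) as the measure; the helper names are hypothetical. It shows how starting a bar chart's y-axis above zero inflates a small difference.

```python
def relative_change(first, second):
    """Size of an effect: change from first to second, relative to first."""
    return (second - first) / first


def lie_factor(value_a, value_b, axis_start=0.0):
    """Effect shown by bar heights divided by the effect in the data.

    Bars are drawn upward from axis_start, so their visible heights are
    value - axis_start. A result of 1.0 means the chart is proportionate.
    """
    if value_a == 0 or value_a == value_b:
        raise ValueError("need a nonzero first value and a real difference")
    if axis_start >= min(value_a, value_b):
        raise ValueError("axis_start must lie below both values")
    shown = relative_change(value_a - axis_start, value_b - axis_start)
    actual = relative_change(value_a, value_b)
    return shown / actual


if __name__ == "__main__":
    # Two values that differ by 10%.
    print(lie_factor(50, 55))                 # axis from zero: 1.0
    print(lie_factor(50, 55, axis_start=45))  # truncated axis: 10.0
```

With the axis starting at 45, the taller bar looks twice as high as the shorter one, although the underlying values differ by only 10%.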

Real-World Applications

Data visualization is applied in nearly every sector, transforming industries through improved insight and communication.

1. Business Analytics

In business, visualization tools help monitor sales, customer behavior, supply chain efficiency, and more.

2. Healthcare

In medicine and public health, visualizations are crucial for tracking disease outbreaks, patient data, and treatment outcomes. For example, COVID-19 dashboards played a major role in understanding the pandemic's spread.

3. Finance

Financial analysts use data visualization to identify market trends, evaluate investment performance, and assess risk.

4. Education

Educators and researchers use visualization to track student performance, identify learning gaps, and present research findings.

5. Government and Policy

Policymakers use visual data to understand social trends, resource allocation, and economic performance.

6. Journalism

Data journalism is growing rapidly. Visual stories on topics like climate change, election results, or social inequality use charts and infographics to inform and engage readers.

Challenges and Limitations

Despite its electricity, facts visualization isn't with out demanding situations:

Data Quality: Inaccurate or incomplete information can lead to deceptive visuals.

Over-Simplification: Trying to make information too easy can lead to lack of nuance or important info.

Misinterpretation: Poor design selections or biased displays can cause audiences to draw wrong conclusions.

Tool Limitations: Not all equipment aid the extent of customization or interactivity wished for unique projects.

Overcoming these demanding situations requires a mix of technical talent, area information, and moral responsibility.

The Future of Data Visualization

The future of data visualization is increasingly interactive, real-time, and AI-assisted. Emerging trends include:

Augmented and Virtual Reality (AR/VR): Immersive visualizations let users explore data in three-dimensional environments.

Machine Learning Integration: Algorithms can now recommend or even automatically generate visualizations based on the data provided.

Collaborative Platforms: Teams can now work together in real time on visualization dashboards, improving communication and agility.

These advancements will continue to make data more accessible and insightful across all domains.

The Significance of Exploratory Data Analysis (EDA) in the Data Analysis Workflow

In the ever-expanding realm of data science, Exploratory Data Analysis (EDA) stands as a cornerstone in the data analysis workflow. It is the preliminary step that sets the stage for more complex analyses, helping analysts and data scientists unravel the mysteries hidden within datasets. In this article, we will delve into the significance of EDA and how it plays a pivotal role in understanding, cleaning, and preparing data for further analysis.

Introduction to Exploratory Data Analysis (EDA)

Exploratory Data Analysis is the process of visually and statistically summarizing, interpreting, and understanding the main characteristics of a dataset. It involves techniques that allow analysts to gain insights into the data's distribution, relationships, and patterns, aiding in the formulation of hypotheses and guiding subsequent analysis.

Data Quality Assessment

One of the primary functions of EDA is to assess the quality of the data. Analysts scrutinize the dataset for missing values, outliers, and inconsistencies. Identifying and addressing these issues early in the analysis process is crucial, as they can significantly impact the accuracy and reliability of subsequent analyses.
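A minimal Python sketch of such a first-pass check, assuming the data arrive as a list of dictionaries in which a missing value is either None or an absent key. It counts missing values per field and lists key values that appear more than once; the field names in the example are hypothetical.

```python
from collections import Counter


def missing_value_report(records, fields):
    """Count missing values (None or absent key) for each field."""
    missing = Counter()
    for row in records:
        for field in fields:
            if row.get(field) is None:
                missing[field] += 1
    return {field: missing[field] for field in fields}


def duplicate_keys(records, key_fields):
    """Return key tuples that appear more than once, in first-seen order."""
    counts = Counter(tuple(row.get(f) for f in key_fields) for row in records)
    return [key for key, n in counts.items() if n > 1]


if __name__ == "__main__":
    rows = [
        {"id": 1, "age": 34, "city": "Boise"},
        {"id": 2, "age": None, "city": "Austin"},
        {"id": 2, "age": 41},  # duplicate id and missing city
    ]
    print(missing_value_report(rows, ["id", "age", "city"]))
    print(duplicate_keys(rows, ["id"]))
```

Whether a missing value should be dropped, imputed, or investigated is a separate judgment; the report only tells you where to look.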

Identification of Patterns and Trends

EDA provides a lens through which analysts can identify patterns and trends within the data. Visualizations such as scatter plots, line charts, and histograms offer a comprehensive view of the data distribution and can reveal underlying structures that might not be immediately apparent. Recognizing these patterns can guide the formulation of hypotheses and influence the choice of analytical techniques.
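One simple way to make a trend visible before plotting is to smooth a noisy series with a moving average. The sketch below is a plain-Python trailing simple moving average; the window size is an analyst's choice, and the sample values are made up for illustration.

```python
def moving_average(values, window):
    """Trailing simple moving average over `window` consecutive points.

    Returns len(values) - window + 1 averages; the first one covers
    values[0:window].
    """
    if not 1 <= window <= len(values):
        raise ValueError("window must be between 1 and len(values)")
    running_total = sum(values[:window])
    averages = [running_total / window]
    for i in range(window, len(values)):
        # Slide the window: add the newest point, drop the oldest.
        running_total += values[i] - values[i - window]
        averages.append(running_total / window)
    return averages


if __name__ == "__main__":
    weekly_sales = [3, 5, 4, 6, 8, 7, 9]  # noisy, made-up series
    print(moving_average(weekly_sales, 3))
```

The raw series dips twice, but the three-point averages rise steadily, which is the kind of underlying structure a line chart of the smoothed values makes obvious.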

Variable Relationships and Correlation

Understanding the relationships between variables is essential in many analyses. EDA tools enable analysts to explore the correlation between different variables, helping to uncover dependencies and interactions. This insight is invaluable when designing predictive models or making data-driven decisions based on correlated factors.
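As a minimal sketch of that step, the function below computes Pearson's correlation coefficient from its definition (covariance divided by the product of standard deviations) and applies it to every pair of columns. Python 3.10 and later also provide statistics.correlation; the manual version here only makes the formula visible. The column names and values are hypothetical.

```python
from itertools import combinations
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation: sum of co-deviations divided by the square root
    of the product of the summed squared deviations."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant column")
    return sxy / sqrt(sxx * syy)


def pairwise_correlations(columns):
    """Correlation for every pair of named columns."""
    return {
        (a, b): pearson_r(columns[a], columns[b])
        for a, b in combinations(columns, 2)
    }


if __name__ == "__main__":
    data = {
        "hours_studied": [1, 2, 3, 4, 5],
        "exam_score": [52, 55, 61, 64, 68],
        "hours_gaming": [9, 7, 6, 4, 2],
    }
    for pair, r in pairwise_correlations(data).items():
        print(pair, round(r, 3))
```

Pearson's r captures only linear association: a strong curved relationship can still give r near zero, so it is worth pairing these numbers with scatter plots.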

Outlier Detection and Handling

Outliers, data points significantly different from the rest, can distort analysis results. EDA helps identify outliers, enabling analysts to decide whether to exclude, transform, or investigate these data points further. Addressing outliers at the EDA stage ensures a cleaner dataset for subsequent analyses.
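A common rule of thumb for flagging outliers is Tukey's fences: values below Q1 - 1.5 x IQR or above Q3 + 1.5 x IQR, where IQR = Q3 - Q1. The sketch below assumes that rule and statistics.quantiles with method="inclusive"; other quartile methods give slightly different fences, and flagged points still need human judgment before anything is dropped. The sample data are invented.

```python
from statistics import quantiles


def tukey_fences(values, k=1.5):
    """Return (lower, upper) fences: Q1 - k*IQR and Q3 + k*IQR."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def flag_outliers(values, k=1.5):
    """Return the values that fall outside Tukey's fences."""
    lower, upper = tukey_fences(values, k)
    return [v for v in values if v < lower or v > upper]


if __name__ == "__main__":
    response_times = [12, 14, 14, 15, 16, 17, 18, 19, 20, 95]
    print(tukey_fences(response_times))
    print(flag_outliers(response_times))
```

Because the fences come from quartiles rather than the mean and standard deviation, a single extreme value such as 95 barely moves them.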

Feature Engineering Guidance

Feature engineering involves transforming raw data into a format suitable for machine learning models. EDA aids in the identification of relevant features and potential transformations. By understanding the distribution and characteristics of variables, analysts can make informed decisions about which features to include, exclude, or engineer for optimal model performance.
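Three transformations that EDA findings often motivate are sketched below: a log transform for right-skewed values, z-score standardization for features on very different scales, and one-hot encoding for categorical variables. This is a plain-Python illustration under those assumptions; in practice these steps are usually done with a data library, and which ones are appropriate depends on the model.

```python
from math import log1p
from statistics import mean, pstdev


def log_transform(values):
    """log(1 + x), which compresses a long right tail; requires x > -1."""
    return [log1p(v) for v in values]


def standardize(values):
    """Rescale to mean 0 and (population) standard deviation 1."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        raise ValueError("cannot standardize a constant feature")
    return [(v - mu) / sigma for v in values]


def one_hot(categories):
    """Return the sorted category levels and one 0/1 indicator row per value."""
    levels = sorted(set(categories))
    rows = [[1 if c == level else 0 for level in levels] for c in categories]
    return levels, rows


if __name__ == "__main__":
    incomes = [20_000, 35_000, 40_000, 1_200_000]  # made-up, heavily skewed
    print([round(v, 2) for v in log_transform(incomes)])
    print(standardize([2, 4, 4, 4, 5, 5, 7, 9]))
    print(one_hot(["red", "blue", "red"]))
```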

Data Visualization for Communication

EDA often involves creating visualizations that not only assist in understanding the data but also serve as powerful communication tools. Visual representations make it easier to convey complex information to stakeholders, facilitating better decision-making. From bar charts to heatmaps, the variety of visualizations generated during EDA enhances the interpretability of the data.

Hypothesis Generation and Testing

EDA serves as a fertile ground for hypothesis generation. Analysts, armed with a deeper understanding of the data, can formulate hypotheses about relationships and trends. Subsequent statistical tests can then be conducted to validate or refute these hypotheses, guiding the direction of more advanced analyses.
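One way to test a hypothesis that comes out of EDA, without assuming a particular distribution, is a permutation test. The sketch below is a two-sided permutation test for a difference in group means, chosen here for illustration rather than as the only option; the sample measurements are invented, and the number of resamples and the random seed are arbitrary.

```python
import random


def average(values):
    return sum(values) / len(values)


def permutation_test(group_a, group_b, n_resamples=10_000, seed=0):
    """Estimate a two-sided p-value for the difference in means.

    Repeatedly shuffles the pooled data into two groups of the original
    sizes and counts how often the shuffled difference is at least as
    large as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(average(group_a) - average(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = abs(average(pooled[:n_a]) - average(pooled[n_a:]))
        # A small tolerance guards against floating-point round-off.
        if diff >= observed - 1e-12:
            at_least_as_extreme += 1
    # Adding one to both counts keeps the estimate away from exactly zero.
    return (at_least_as_extreme + 1) / (n_resamples + 1)


if __name__ == "__main__":
    new_process = [12.1, 11.8, 12.5, 12.9, 12.3]
    old_process = [10.2, 10.8, 10.5, 11.0, 10.4]
    print(permutation_test(new_process, old_process))
```

A small p-value says the observed gap would rarely arise if group labels were arbitrary; it does not by itself explain why the groups differ.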

Enhancing Model Selection

The insights gained from EDA play a pivotal role in selecting the most appropriate modeling techniques. By understanding the distribution of the target variable and the relationships between features, analysts can make informed decisions about which models are likely to perform well on the given dataset. This helps allocate computational resources efficiently and tends to produce more accurate and interpretable models.

Time and Resource Efficiency

Lastly, EDA contributes to time and resource efficiency in the data analysis workflow. By thoroughly exploring and understanding the dataset upfront, analysts can avoid unnecessary detours and focus on the most promising avenues of analysis. This iterative and interactive process helps streamline the overall analysis, making the entire workflow more efficient and effective.

Conclusion

Exploratory Data Analysis is not merely a preliminary step in the data analysis workflow; it is a fundamental and integral part of the entire process. From ensuring data quality to guiding feature engineering and model selection, EDA serves as the compass that steers analysts through the intricate landscape of data. Embracing the significance of EDA not only facilitates a deeper understanding of the data but also sets the stage for more robust, accurate, and actionable insights in the dynamic field of data science.

The Plague of Over-Intellectualizing Feelings

The Age of Think-Feelism: A Crisis of the Over-Educated Heart

In a society where people now analyze their emotions the way scientists study bacteria, a curious epidemic has taken hold: over-intellectualized emotions. Once a quirky trait of philosophy majors and TED Talk addicts, the condition has spread to yoga instructors, junior marketing executives, and even baristas with sociology minors. Experts—by which we mean people who once skimmed a psychology blog—are calling it “Think-Feelism,” the act of feeling only after the production of a 14-slide deck and two footnoted essays. “I don’t cry anymore,” said Ava Berkowitz, a 34-year-old ‘empathy consultant.’ “I just generate a spreadsheet called ‘Tear Triggers 2025’ and sort my feelings by Likert scale. Column C is labeled ‘Despair, Mild.’”

Man Analyzes Feelings So Deeply, Forgets to Feel Them

Take the tragic tale of Colin Pratchett, a self-proclaimed “emotional cartographer” who once loved a woman so fiercely that he wrote her a 120-page paper titled The Semiotics of Us. She left him halfway through chapter two, citing a complete absence of actual affection. “I just wanted to hold hands,” she said. “But he kept using phrases like ‘limbic resonance’ and ‘oxytocin loops.’” Colin is now in recovery, attending group therapy where he tries not to cite Carl Jung in every sentence.

Therapist Diagnoses Patient with Chronic Overthinking; Prescribes Spontaneity

Dr. Regina Softworth, a therapist in Brooklyn, reported that one of her patients insisted on conducting a literary analysis of his breakup. “He brought highlighters to our session and kept saying things like, ‘Notice the irony in her use of the word “fine.”’” Softworth prescribed unstructured crying and spontaneous hiking. The patient asked if he could schedule the spontaneity for the following Tuesday.

Woman Creates Flowchart to Navigate Emotional Breakdown

In a now-viral post titled “When in Doubt, Diagram It Out,” MBA student Natasha Li built a decision tree to handle her sudden bout of existential dread. The chart posed key questions like: “Am I hungry or hollow inside?” and “Is this sadness or just my inner capitalist revolting?” While the design won a UX award, Li admitted, “I still haven’t cried. But I’m closer. I think. I hope.”

New App Translates Emotions into Academic Papers

Silicon Valley has entered the chat. A startup called Feelosophy launched an app that translates emotional experiences into scholarly articles. The AI takes in a user’s weepy voice memo and returns a peer-reviewed essay titled something like The Ontological Grief of Tuesday Mornings: A Heideggerian Review. CEO Tristan Dobbins says the app is in beta testing. “We’re trying to teach the AI the difference between ‘hurt’ and ‘hangry.’ It keeps recommending Nietzsche for every breakup.”

Man Attends Support Group, Presents PowerPoint on His Feelings

In a historic first for the San Diego Cry Club, Derek Munson brought a USB stick to group therapy. “He dimmed the lights and gave a 12-minute presentation on his parents’ divorce, complete with pie charts on intimacy deficiencies and a histogram tracking hugs over time,” reported fellow attendee Clara. “We were all really impressed. But then he asked us to fill out a post-session survey on how his vulnerability made us feel.” He was politely asked never to return.

Study Finds Over-Analyzing Emotions Linked to Under-Experiencing Life

Researchers at the University of Self-Sabotage have confirmed what everyone already knows: thinking about feelings is not the same as feeling them. Lead researcher Dr. Philomena Gripe said, “There’s a significant correlation between academic citations of emotion and total romantic rejection.” The study tracked 100 overthinkers over six months. Most failed to form human bonds but had beautiful Moleskine notebooks full of epiphanies about it.

Local Woman Plans Spontaneous Adventure, Schedules It for Next Month

Maggie Trent, an accountant in Boise, attempted spontaneity for the first time this year. “I wrote ‘Do something wild’ on my calendar, color-coded it, and even bought hiking boots on Etsy,” she said. “But then I panicked and enrolled in an online course about spontaneity instead.” She’s now certified in “Applied Adventure Theory” but still hasn’t left her zip code.

Self-Help Book Teaches Readers to Feel; Readers Analyze Book Instead

Feelings Are Not Fictions by Dr. Rachel Hume aimed to help people reconnect with their raw emotions. But readers, mostly Ivy League graduates and emotionally constipated lawyers, turned it into a literary book club. “I found it too literal,” one reader sniffed. “I prefer my grief with a bit more metaphor.” Another reader gave it one star, writing: “The chapter on crying lacked footnotes.”

Man Breaks Up with Girlfriend via 10-Page Analysis

“I thought it would help her understand,” said Jeremy, who emailed his girlfriend a breakup document complete with SWOT analysis and footnoted references to Plato’s Symposium. The document, titled “Strategic Realignment of Romantic Priorities,” included a bar graph titled “Love vs. Logistics” and a pie chart about “Shared Streaming Platform Overlap.” She responded with a single emoji: the thumbs-up.

Overthinkers Anonymous Meeting Canceled Due to Members Overthinking Attendance

A support group for chronic overanalyzers had to shut down after members couldn’t decide if attending was the right move. “They spent two hours debating whether showing up was a form of emotional commitment,” said the therapist. “One guy drafted a pros-and-cons list so long it broke Google Docs.” The group now meets individually in isolated silos via encrypted Slack channels, where no one feels seen, but everyone is conceptually acknowledged.

[Image: A satirical cartoon scene in wide aspect. A couple sits on a date at a trendy café, both holding giant academic binders titled 'Emotional Transparency...]

What the Funny People Are Saying

“I asked my friend how he felt, and he said, ‘According to a 2006 study, sadness is a limbic disruption.’ So I punched him in the limbic.” - Ron White

“Overthinkers treat feelings like IKEA furniture: you stare at the manual for three hours before you cry in the corner.” - Jerry Seinfeld

“These people don’t cry in showers. They cry in Google Docs.” - Ali Wong

“My therapist told me to stop analyzing and start feeling. So I analyzed that advice for three hours. Then I cried, but only after labeling it as ‘emotionally performative release.’” - Sarah Silverman

Public Opinion Poll: “Are You Overthinking Your Emotions?”

A new survey from PollUsToDeath.org revealed:

67% of Americans said they now Google “What does sadness mean?” before admitting they’re sad.

19% confuse grief with seasonal allergies.

12% won’t cry unless it’s been approved by a peer-reviewed article.

1% have replaced all emotional vocabulary with academic jargon.

The same poll revealed that 89% believe their therapists are “probably tired of their metaphorical bulls**t.”

Trace Evidence: The Notebooks of the Chronically Self-Aware

We obtained exclusive access to the journals of self-identified emotional overthinkers. Highlights include:

“I cried today. I think. Let me review the transcript.”

“What is love? Is it the thing I felt at the dog shelter or when I microwaved taquitos?”

“Joy = dopamine x context ÷ childhood trauma?”

One journal contained nothing but doodles of Venn diagrams overlapping “Affection” and “Avoidant Attachment.”

Historical Analogy: Descartes Ruins Dating Apps

Dating in 2025 has been infected by think-feelism. Users now open with: “I’m 5'10”, ENTP, and uncertain whether my emotions are authentic or culturally constructed.” “Swipe right if you like epistemological intimacy.” In one documented case, a date ended after the man asked, “Do you feel love, or are we just performing gendered rituals of proximity?” She responded by swiping left in real life.

Social Media Case Study: CryTok and the Rise of Performative Emotion

The newest TikTok trend involves filming your emotional crises with ring lights and royalty-free piano music. Known as “CryTok,” it rewards vulnerability, but only if you can express it with clear audio and proper lighting. One influencer posted, “Just cried because my succulents died.” She got 1.2 million views and a Lululemon brand deal. Said a commenter, “Is it still sadness if it’s monetized?”

Expert Opinion: Neuroscientists vs. Feelings

Neuroscientist Dr. Greg Blunter weighed in: “Humans are overestimating the need to understand feelings at all. You’re supposed to feel them, not label them like rare birds.” He then admitted he hadn’t felt joy since 1998, “but I’ve charted it extensively.”

🧠 Satirical Headlines on Over-Intellectualizing Emotions

"Man Analyzes Feelings So Deeply, Forgets to Feel Them"
Local philosopher spends 12 hours dissecting his sadness, emerges with a thesis but no catharsis.

"Therapist Diagnoses Patient with Chronic Overthinking; Prescribes Spontaneity"
Patient responds, 'Let me research spontaneity first.'

"Woman Creates Flowchart to Navigate Emotional Breakdown"
Includes decision tree: 'Cry' or 'Suppress and Write Essay About It.'

"New App Translates Emotions into Academic Papers"
Finally, a way to turn heartbreak into a peer-reviewed article.

"Man Attends Support Group, Presents PowerPoint on His Feelings"
Group applauds his graphs but suggests he try 'just talking.'

"Study Finds Over-Analyzing Emotions Linked to Under-Experiencing Life"
Researchers recommend 'feeling feelings' as potential remedy.

"Local Woman Plans Spontaneous Adventure, Schedules It for Next Month"
Spontaneity now available by appointment only.

"Self-Help Book Teaches Readers to Feel; Readers Analyze Book Instead"
Chapter 1: 'Stop Reading and Start Feeling' largely ignored.

"Man Breaks Up with Girlfriend via 10-Page Analysis"
Includes citations and appendix; girlfriend responds with emoji.

"Overthinkers Anonymous Meeting Canceled Due to Members Overthinking Attendance"
Next meeting TBD after thorough risk assessment.

These satirical pieces aim to humorously highlight the pitfalls of over-intellectualizing emotions, encouraging readers to embrace the messiness of genuine feeling.

Disclaimer: This report is the result of a deeply collaborative effort between a tenured philosopher who weeps into his Kant volumes and a dairy farmer with a Master’s in Feelings. No feelings were harmed in the making of this satire, but several were renamed for clarity. Any resemblance to actual overthinkers, past or present, is purely self-inflicted. Auf Wiedersehen (goodbye).

[Image: Over-Intellectualizing Emotions. A cartoon scene in wide aspect. A man sits on a therapist’s couch holding a laser pointer, giving a PowerPoint presentation titled 'Why I Feel...]

Comprehensive SAS Tutorial: Master Procedures, Reporting, and Data Visualization

SAS is one of the leading software tools for data analysis, known for its ability to handle large datasets, provide deep statistical analysis, and generate comprehensive reports. A SAS tutorial can help you gain the knowledge and skills needed to master SAS programming, enabling you to use its powerful procedures and capabilities effectively. Whether you're just starting or looking to enhance your SAS skills, this comprehensive tutorial will guide you through key concepts in SAS programming, reporting, and data visualization.

Getting Started with SAS Programming

SAS programming may seem intimidating at first, but it is highly structured, and with the right foundation, anyone can become proficient. The first step in mastering SAS is to familiarize yourself with its basic concepts and syntax. The SAS environment consists of four main components: the Data Step, Procedures (PROCs), Macros, and the Output Delivery System (ODS).

The Data Step is where you create and modify datasets. This is where you load raw data and apply transformations such as cleaning, merging, or summarizing. Procedures (PROCs) are pre-built routines used for tasks such as statistical analysis, data manipulation, and reporting.

A SAS tutorial for beginners typically starts by explaining these components and guiding you through the basics of data input, variable assignment, and dataset management.
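The DATA step's row-by-row model (read a record, derive new variables, keep or drop the row) can be illustrated with a rough Python analogue. This is not SAS code: the column names and inline CSV are hypothetical, and SAS-specific behavior such as its representation of missing values differs.

```python
import csv
import io

# Hypothetical raw data; a blank field stands for a missing value.
RAW_CSV = """name,height_in,weight_lb
Alice,65,130
Bob,70,
Cara,62,115
"""


def data_step(raw_csv):
    """Process rows one at a time, loosely like a simple DATA step."""
    output = []
    for row in csv.DictReader(io.StringIO(raw_csv)):
        # Subsetting step: drop records with a missing weight.
        if not row["weight_lb"]:
            continue
        height = float(row["height_in"])
        weight = float(row["weight_lb"])
        # Derived variable: BMI from inches and pounds (703 * lb / in^2).
        bmi = round(703 * weight / height ** 2, 1)
        output.append({"name": row["name"], "height_in": height,
                       "weight_lb": weight, "bmi": bmi})
    return output


if __name__ == "__main__":
    for record in data_step(RAW_CSV):
        print(record)
```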

Mastering SAS Procedures

One of the key aspects of SAS programming is learning how to effectively use its procedures (PROCs). Procedures are predefined commands that allow you to perform a wide variety of tasks, from basic data manipulation to complex statistical analysis. Here are some key PROCs you will encounter in your SAS tutorial:

PROC SORT: Used to sort datasets based on specified variables.

PROC MEANS: Used to summarize numeric data, calculating statistics such as the mean, median, and standard deviation.

PROC FREQ: Used to produce frequency tables (counts and percentages) for categorical variables.

PROC REG: Used for performing regression analysis.

Mastering these basic procedures will help you quickly get results and insights from your data. As you become more proficient, you can explore more advanced procedures for statistical analysis, machine learning, and data modeling.
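To show what the four procedures listed above compute (not how SAS itself implements or prints them), here is a rough Python standard-library analogue. The dataset and function names are hypothetical, and real PROC output includes many more statistics and options.

```python
from collections import Counter
from statistics import mean, median, stdev

# Hypothetical dataset.
SALES = [
    {"region": "West", "sales": 120},
    {"region": "East", "sales": 95},
    {"region": "West", "sales": 150},
    {"region": "North", "sales": 110},
]


def sort_by(rows, *keys):
    """Like PROC SORT with a BY statement (stable, ascending)."""
    return sorted(rows, key=lambda r: tuple(r[k] for k in keys))


def describe(values):
    """Like PROC MEANS default statistics, plus the median."""
    return {"N": len(values), "Mean": mean(values), "Std Dev": stdev(values),
            "Minimum": min(values), "Maximum": max(values),
            "Median": median(values)}


def frequency_table(values):
    """Like a one-way PROC FREQ: count and percent for each level."""
    counts = Counter(values)
    total = len(values)
    return {level: (n, 100 * n / total) for level, n in sorted(counts.items())}


def simple_linear_regression(xs, ys):
    """Like PROC REG with one predictor: least-squares (intercept, slope)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


if __name__ == "__main__":
    print([r["region"] for r in sort_by(SALES, "region")])
    print(describe([r["sales"] for r in SALES]))
    print(frequency_table([r["region"] for r in SALES]))
    print(simple_linear_regression([1, 2, 3, 4], [3, 5, 7, 9]))
```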

Reporting and Output Delivery

Reporting is another essential aspect of SAS programming. With SAS’s Output Delivery System (ODS), you can generate high-quality reports and customize the layout of your results. ODS allows you to produce reports in various formats, such as HTML, PDF, and Excel.

Using SAS tutorial resources, you will learn how to customize the appearance of your reports, filter the output, and even create multi-page reports. This is particularly useful for presenting results to stakeholders in an easy-to-understand format.

Data Visualization with SAS

Data visualization is crucial in turning complex data into a clear and engaging story. SAS offers a variety of tools for creating high-quality graphs and charts. The SGPLOT procedure is one of the most commonly used SAS procedures for creating visualizations.

In this SAS tutorial, you'll learn how to create bar charts, line plots, histograms, and scatter plots, all of which help you identify patterns and trends in your data. SAS also allows for more advanced visualizations like interactive graphs and dashboards.

Conclusion

By following a SAS tutorial, you can build a solid foundation in SAS programming, from basic data manipulation to advanced statistical analysis and reporting. With the power of SAS procedures and visualization tools, you’ll be equipped to tackle even the most complex datasets and deliver actionable insights for your organization. Whether you’re a beginner or an experienced user, mastering these skills will ensure that you can make the most out of SAS's powerful analytics capabilities.

#sas tutorial#sas programming tutorial#sas tutorial for beginners#data visualization#visualization tools#Youtube

Make Your Minitab Assignment Easier With Minitab Assignment Help

Minitab is a statistical tool that helps in analyzing data. It is widely used in industries where precise evaluation is required, and it offers a range of statistical tools and effective solutions for complex data sets. Students in various disciplines are required to learn the practical use of Minitab software, and they are given several academic projects based on relevant problems in Minitab. Solving a Minitab project can be difficult for students: it requires a solid understanding of statistical concepts and practical experience operating Minitab software. Many students face problems in writing academic papers due to a lack of knowledge and expertise. If you are having difficulty solving a Minitab project, you can get Minitab Assignment Help from experts in the USA.

What is Minitab?

Minitab is statistical software for analyzing data, and it is widely used with Six Sigma techniques. Minitab can summarize a large amount of data in a single graph, such as a bar graph, pie chart, or histogram. It is designed for research data and caters to the needs of businesses of all sizes, aiming to deliver accurate results quickly and efficiently.

Benefits of Using Minitab in Statistical Analysis

Minitab is a complete package for statistical analysis.

It provides accurate and efficient results quickly.

Minitab is user-friendly and easy for everyone to use.

It offers powerful data analysis tools that allow users to generate visual representations of data.

Users can easily customize the tool according to their needs, which makes it more versatile.

Gaining practical knowledge of Minitab software through academic projects helps students excel in the field. However, solving a Minitab project is not easy for everyone, and this is where professional services can help students complete their projects well.

How Professional Assistance Is Useful in Solving a Minitab Project

Professional services offer several features and advantages for the Minitab project.

Guidance From Subject Experts

Professional services have a team of experts who are highly trained and experienced in dealing with complex projects. They offer personalized support to students in solving the academic project. By getting support from experts, students can easily tackle complex projects and finish them efficiently.

Exceptional Quality Content

Professional experts provide detailed insight into the topic for your Minitab project. They provide in-depth research material in an organized way using the right methodology. They make the content more presentable using graphs and charts. It helps the reader to understand the material easily.

Stress-Free Deadline

Professional services provide fast delivery of paper. When you are running out of time to meet a deadline, experts will help you to finish the paper timely. It helps students to meet deadlines and manage busy schedules.

Unique and Accurate Solution

When working on a Minitab project there is no room for mistakes. Professional experts are well-versed in creating unique solutions for academic papers. They provide unlimited revision, proofreading, and editing support. It helps to submit plagiarism-free and accurate solutions for the Minitab project.

24-Hour Support

Students may have many questions about their topic while working on a Minitab project. Minitab Assignment Help Online services provide 24-hour support, so students can get help with problems at any time.

Conclusion

Minitab is a useful tool for statistical analysis. By getting support from experts, you can complete papers efficiently and maximize learning in the field.


Understanding the Grammar of Graphics in R

The Grammar of Graphics is a conceptual framework for data visualization that allows you to create a wide range of plots using a consistent set of principles. It forms the foundation of the ggplot2 package in R, enabling you to build complex and layered visualizations in a systematic way. Understanding this grammar will not only help you use ggplot2 effectively but also give you deeper insights into how data visualization works at a fundamental level.

1. The Concept of the Grammar of Graphics

The Grammar of Graphics, introduced by Leland Wilkinson in his 1999 book of the same name, is a theoretical approach to visualization that breaks the process of creating a plot into a set of independent components. Each plot is constructed by layering these components, much as a sentence is built from words according to the rules of grammar.

Key Components:

Data: The dataset that contains the information you want to visualize.

Aesthetics (aes): The visual properties of the plot, such as position, color, size, and shape, which are mapped to variables in your data.

Geometries (geom): The type of visual element used to represent the data, such as points, lines, bars, or boxplots.

Scales: The mappings from data values to visual properties, such as how data values are translated into axis positions, colors, or sizes.

Facets: The division of data into subplots based on a categorical variable, allowing for the comparison of subsets of data.

Coordinates: The system that determines how data points are mapped onto the plot, such as Cartesian coordinates or polar coordinates.

Layers: The different components or elements added on top of each other to build the final plot.

2. Building a Plot Using the Grammar of Graphics

In ggplot2, each plot is constructed using layers, where each layer represents one or more of the components of the Grammar of Graphics. The process typically starts with defining the data and aesthetics, followed by adding geometries and other components.

Basic Plot Structure:

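```r
library(ggplot2)

# `dataset`, `x_variable` and `y_variable` are placeholders for your own data frame and columns
ggplot(data = dataset, aes(x = x_variable, y = y_variable)) +
  geom_point()
```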

Data: The data argument specifies the dataset you want to use.

Aesthetics: The aes() function maps variables from the dataset to visual properties like the x and y axes.

Geometry: geom_point() adds a layer of points to the plot, creating a scatter plot.

3. Understanding Aesthetics (aes)

Aesthetics in ggplot2 refer to how data variables are mapped to visual properties. These mappings determine how your data is visually represented in the plot.

Common Aesthetics:

x and y: The variables mapped to the x and y axes.

color: The color of points, lines, or bars, which can be mapped to a categorical or continuous variable.

size: The size of points or lines, often mapped to a continuous variable.

shape: The shape of points in a scatter plot, typically used with categorical variables.

Example:

```r
ggplot(data = dataset, aes(x = x_variable, y = y_variable, color = category_variable)) +
  geom_point()
```

4. Working with Geometries (geom)

Geometries in ggplot2 define the type of plot you create, such as a scatter plot, line plot, bar chart, or histogram. Each geometry function corresponds to a different type of plot.

Common Geometries:

geom_point(): Creates a scatter plot.

geom_line(): Creates a line plot.

geom_bar(): Creates a bar chart.

geom_histogram(): Creates a histogram.

geom_boxplot(): Creates a boxplot.

Example:

```r
ggplot(data = dataset, aes(x = x_variable, y = y_variable)) +
  geom_line()
```

5. Understanding Scales and Coordinates

Scales in ggplot2 control how data values are mapped to visual properties, such as the position on the axes, colors, or sizes. Coordinates define the plotting area and how data points are placed within it.

Scales:

scale_x_continuous() and scale_y_continuous(): Adjust the continuous scales of the x and y axes.

scale_color_manual(): Customize color scales.

scale_size_continuous(): Customize the size scale.

Example:

```r
ggplot(data = dataset, aes(x = x_variable, y = y_variable, color = category_variable)) +
  geom_point() +
  scale_color_manual(values = c("red", "blue"))
```
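ggplot2 computes scales internally, but the idea is language-neutral. The following Python sketch (not ggplot2 code) shows what a continuous position scale and a discrete color scale do: one rescales numbers linearly onto an output range, the other looks up a palette value per category. The function names `continuous_scale` and `discrete_scale` are hypothetical, and the sorted level order only loosely mirrors how ggplot2 orders character data by default.

```python
def continuous_scale(domain, output_range):
    """Return a function that maps data values linearly onto an output range.

    domain: (min, max) of the data; output_range: (start, end), e.g. in pixels.
    """
    d0, d1 = domain
    r0, r1 = output_range
    if d1 == d0:
        raise ValueError("domain must have non-zero width")

    def scale(value):
        # Relative position of `value` in the domain, rescaled to the output range
        return r0 + (value - d0) * (r1 - r0) / (d1 - d0)

    return scale


def discrete_scale(categories, palette):
    """Return a function mapping each category to a palette value (like a manual color scale)."""
    levels = sorted(set(categories))  # sorted levels, similar to ggplot2's default for text
    if len(levels) > len(palette):
        raise ValueError("palette has fewer values than there are categories")
    lookup = dict(zip(levels, palette))
    return lambda category: lookup[category]


# Hypothetical usage: data values 0-10 mapped onto a 50-450 pixel x axis
x_scale = continuous_scale((0, 10), (50, 450))
# Screen y coordinates grow downward, so the output range is reversed
y_scale = continuous_scale((0, 100), (400, 0))
color = discrete_scale(["treated", "control", "treated"], ["red", "blue"])

print(x_scale(5), y_scale(25), color("control"))
```

Swapping a scale changes how the same data look without touching the data or the geometry, which is the separation the grammar is built on.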

Coordinates:

coord_cartesian(): Zooms to the given axis limits without removing data (setting limits on a scale instead drops out-of-range observations before statistics are computed).

coord_flip(): Swap the x and y axes.

coord_polar(): Convert Cartesian coordinates to polar coordinates for circular plots.

Example:

```r
ggplot(data = dataset, aes(x = factor_variable, y = value_variable)) +
  geom_bar(stat = "identity") +
  coord_flip()
```

6. Faceting for Comparison

Faceting allows you to create multiple subplots based on the values of a categorical variable, enabling you to compare different subsets of data.

Faceting Functions:

facet_wrap(): Creates a series of plots wrapped into a grid.

facet_grid(): Creates a grid of plots based on the combination of two categorical variables.

Example:

```r
ggplot(data = dataset, aes(x = x_variable, y = y_variable)) +
  geom_point() +
  facet_wrap(~ category_variable)
```

7. Combining Layers to Build Complex Plots

One of the powerful aspects of the Grammar of Graphics is the ability to layer multiple geometries and aesthetics in a single plot. This allows you to create complex visualizations that convey more information.

Example of a Multi-Layer Plot:

```r
ggplot(data = dataset, aes(x = x_variable, y = y_variable)) +
  geom_point() +
  geom_smooth(method = "lm", se = FALSE) +
  labs(title = "Scatter Plot with Linear Regression Line")
```

In this example, geom_point() adds the points to the plot, while geom_smooth() overlays a linear regression line.

The Grammar of Graphics provides a powerful framework for understanding and creating data visualizations in R using ggplot2. By breaking down the components of a plot into layers, aesthetics, geometries, scales, coordinates, and facets, you can systematically build complex and meaningful visual representations of your data. Mastering this grammar is essential for creating effective visualizations that can reveal insights and support data-driven decision-making.

Full coverage at Strategic leap


SPSS Statistical Analysis: A Comprehensive Overview

Statistical analysis is a cornerstone of research and data interpretation across many disciplines. One of the most widely used statistical software packages for this purpose is SPSS (Statistical Package for the Social Sciences). In this article, we explore some fundamental concepts involved in statistical analysis using SPSS, including validity, reliability, descriptive statistics, correlation, and multiple regression.

Validity

Validity refers to the accuracy and relevance of the findings derived from a statistical analysis. A study is valid if the conclusions drawn from the data accurately represent the true relationships and phenomena being investigated. There are several types of validity, including:

Construct Validity: Ensures that the test truly measures the construct it purports to measure.

Content Validity: Assesses whether the measurement encompasses the full domain of the intended variable.

Criterion-related Validity: Examines how well scores on a measure correlate with, or predict, an external criterion or outcome.

In SPSS, researchers can run analyses that bear on validity, such as factor analysis, which helps check whether the items used in a study genuinely represent the underlying constructs.

Reliability

Reliability refers to the consistency of a measure. A reliable instrument yields consistent results when applied to similar populations under similar circumstances. In SPSS, researchers assess reliability using several statistical techniques:

Cronbach's Alpha: A common measure for internal consistency of a scale. Values above 0.70 typically indicate acceptable reliability.

Test-Retest Reliability: Compares scores from the same subjects at different times to assess stability over time.

Inter-Rater Reliability: Assesses the degree of agreement among different raters or observers.

Reliability is crucial for ensuring that the data analyzed in SPSS are trustworthy and repeatable.
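To see what the alpha statistic computes, here is a minimal Python sketch of the standard formula, α = k/(k − 1) · (1 − Σ item variances / variance of total scores), using sample variances throughout. The item scores are made up; the sketch assumes complete data (no missing responses) and is meant for intuition rather than as a replacement for SPSS output.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    items: list of k lists, one per scale item, each holding the scores of
    the same respondents in the same order (no missing values).
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    # Each respondent's total score is the sum of their item scores
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(column) for column in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical 3-item scale answered by 5 respondents
items = [
    [4, 5, 3, 4, 2],
    [4, 4, 3, 5, 2],
    [5, 5, 2, 4, 1],
]
print(round(cronbach_alpha(items), 3))  # prints 0.922
```

Items that rise and fall together make the total-score variance large relative to the summed item variances, which pushes alpha toward 1.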

Descriptive Statistics

Descriptive statistics provide a summary of the data and include measures such as mean, median, mode, and standard deviation. SPSS offers a range of options to easily calculate these values, allowing researchers to gain a quick understanding of their data sets. Key points in descriptive statistics include:

Central Tendency: Measures (mean, median, mode) that provide insights into the average or most common values.

Dispersion: Measures (range, variance, standard deviation) that describe the spread of data points in the sample.

Frequency Distributions: Tables of how often each value (or range of values) occurs, often visualized as histograms or bar charts.

Using descriptive statistics in SPSS helps researchers distill complex data into manageable summaries, making it easier to identify trends and patterns.
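As a cross-check on these measures, the sketch below computes them with Python's standard-library `statistics` module for a small set of hypothetical scores. `stdev` and `variance` use the sample (n − 1) denominator, which matches the standard deviation SPSS reports by default.

```python
from statistics import mean, median, multimode, stdev, variance

scores = [72, 85, 85, 90, 64, 78, 85, 91]  # hypothetical exam scores

summary = {
    # Central tendency
    "mean": mean(scores),
    "median": median(scores),
    "mode": multimode(scores),  # list, because data can have several modes
    # Dispersion
    "range": max(scores) - min(scores),
    "variance": variance(scores),  # sample variance (n - 1)
    "std_dev": stdev(scores),      # sample standard deviation (n - 1)
}

for name, value in summary.items():
    print(f"{name}: {value}")
```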

Correlation

Correlation analysis in SPSS allows researchers to explore the relationships between variables. It quantifies the degree to which two variables are related, using correlation coefficients (such as Pearson’s r). Key aspects include:

Positive Correlation: Indicates that as one variable increases, the other tends to increase.

Negative Correlation: Indicates that as one variable increases, the other tends to decrease.

No Correlation: A coefficient near zero suggests no linear relationship between the two variables (they may still be related in a non-linear way).

SPSS provides tools to create scatter plots and calculate correlation coefficients, offering visual and numerical insights into the relationships being studied.
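Pearson's r is the covariance of two variables divided by the product of their standard deviations. The sketch below implements that definition directly in Python for hypothetical data; on Python 3.10+, `statistics.correlation` computes the same coefficient.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squares around the means
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / sqrt(sxx * syy)


hours_studied = [1, 2, 3, 4, 5]     # hypothetical
exam_scores = [52, 55, 61, 64, 70]  # hypothetical
print(round(pearson_r(hours_studied, exam_scores), 3))
```

A positive value indicates a positive correlation, a negative value a negative one, and values near zero little linear association.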

Multiple Regression

Multiple regression analysis extends correlation by allowing researchers to examine the predictive relationship between one dependent variable and multiple independent variables. It is an essential technique for understanding complex relationships. Key points include:

Model Fitting: Multiple regression helps researchers fit a model that describes the relationship between variables, determining how much variance in the dependent variable can be explained by the independent variables.

Coefficients Interpretation: The output from SPSS provides regression coefficients that indicate the nature and strength of the relationship of each independent variable with the dependent variable.

Assumptions Checking: Before interpreting results, it is crucial to check assumptions (such as linearity, independence, homoscedasticity, and normality of residuals) to ensure valid inferences.

Multiple regression in SPSS thus offers a powerful mechanism to identify and quantify complex relationships among variables, supporting informed decision-making based on statistical evidence.
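Multiple regression coefficients are the least-squares solution of the normal equations (XᵀX)b = Xᵀy, where X includes a column of 1s for the intercept. The sketch below solves them with Gauss-Jordan elimination in plain Python and reports R², the share of variance explained. The data are hypothetical and were built exactly from y = 2 + 3·x1 − x2, so the fit should recover those coefficients. It is for intuition only: it omits standard errors and p-values, and for real work you would rely on SPSS or a library routine such as NumPy's `numpy.linalg.lstsq`.

```python
def fit_ols(X, y):
    """Least-squares coefficients [b0, b1, ..., bp] for rows of predictors X."""
    A = [[1.0] + [float(v) for v in row] for row in X]  # add intercept column
    p = len(A[0])
    # Normal equations: (A^T A) b = A^T y
    ata = [[sum(r[i] * r[j] for r in A) for j in range(p)] for i in range(p)]
    aty = [sum(r[i] * t for r, t in zip(A, y)) for i in range(p)]
    M = [ata[i] + [aty[i]] for i in range(p)]  # augmented matrix
    # Gauss-Jordan elimination with partial pivoting
    for col in range(p):
        pivot = max(range(col, p), key=lambda k: abs(M[k][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("predictors are perfectly collinear")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(p):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * b for a, b in zip(M[r], M[col])]
    return [M[i][p] / M[i][i] for i in range(p)]


def r_squared(X, y, coefs):
    """Proportion of the variance in y explained by the fitted model."""
    predictions = [coefs[0] + sum(b * v for b, v in zip(coefs[1:], row)) for row in X]
    y_mean = sum(y) / len(y)
    ss_res = sum((obs - pred) ** 2 for obs, pred in zip(y, predictions))
    ss_tot = sum((obs - y_mean) ** 2 for obs in y)
    return 1 - ss_res / ss_tot


# Hypothetical predictors (x1, x2) and outcome y = 2 + 3*x1 - x2
X = [[1, 2], [2, 1], [3, 5], [4, 3], [5, 4]]
y = [3, 7, 6, 11, 13]
coefs = fit_ols(X, y)
print([round(c, 3) for c in coefs], round(r_squared(X, y, coefs), 3))
```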

Conclusion

SPSS is an invaluable tool for conducting statistical analysis, offering a robust platform for evaluating validity, reliability, descriptive statistics, correlation, and multiple regression. Mastery of these concepts enhances the effectiveness of data analysis and enriches the research process, allowing for informed conclusions and evidence-based decisions. With this knowledge, researchers can leverage SPSS to its fullest potential, transforming data into actionable insights.


What are the top Python libraries for data science in 2025? Get Best Data Analyst Certification Course by SLA Consultants India

Python's extensive ecosystem of libraries has been instrumental in advancing data science, offering tools for data manipulation, visualization, machine learning, and more. As of 2025, several Python libraries have emerged as top choices for data scientists:

1. NumPy

NumPy remains foundational for numerical computations in Python. It provides support for large, multi-dimensional arrays and matrices, along with a collection of mathematical functions to operate on them. Its efficiency and performance make it indispensable for data analysis tasks.
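A short sketch of the kind of array work NumPy handles, using made-up values: summarizing along an axis, and broadcasting to standardize each column in one expression without an explicit loop.

```python
import numpy as np

# Hypothetical measurements: 3 observations (rows) x 4 variables (columns)
data = np.array([[1.0, 2.0, 3.0, 4.0],
                 [2.0, 4.0, 6.0, 8.0],
                 [0.5, 1.5, 2.5, 3.5]])

col_means = data.mean(axis=0)  # one mean per column
row_totals = data.sum(axis=1)  # one total per row

# Broadcasting: the 1-D column means and standard deviations are stretched
# across every row, giving column-wise z-scores
standardized = (data - col_means) / data.std(axis=0)

print(col_means, row_totals)
print(standardized.round(2))
```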

2. Pandas

Pandas is essential for data manipulation and analysis. It offers data structures like DataFrames, which allow for efficient handling and analysis of structured data. With tools for reading and writing data between in-memory structures and various formats, Pandas simplifies data preprocessing and cleaning.
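A minimal pandas sketch of typical cleaning and summarizing steps on a small, hypothetical DataFrame: dropping rows with a missing key, filling missing numbers with the median, and aggregating with `groupby`. The column names are illustrative.

```python
import pandas as pd

# Hypothetical sales records with gaps
df = pd.DataFrame({
    "region": ["North", "South", "North", "East", None],
    "sales": [120.0, 95.5, None, 80.0, 60.0],
})

# Drop rows whose region is missing; copy to avoid modifying a view
clean = df.dropna(subset=["region"]).copy()
# Fill missing sales with the median of the remaining values
clean["sales"] = clean["sales"].fillna(clean["sales"].median())
# Total sales per region
by_region = clean.groupby("region")["sales"].sum()

print(by_region)
```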

3. Matplotlib

For data visualization, Matplotlib is a versatile library that enables the creation of static, animated, and interactive plots. It supports various plot types, including line plots, scatter plots, and histograms, making it a staple for presenting data insights.

4. Seaborn

Built on top of Matplotlib, Seaborn provides a high-level interface for drawing attractive statistical graphics. It simplifies complex visualization tasks and integrates seamlessly with Pandas data structures, enhancing the aesthetic appeal and interpretability of plots.

5. Plotly

Plotly is renowned for creating interactive and web-ready plots. It offers a wide range of chart types, including 3D plots and contour plots, and is particularly useful for dashboards and interactive data applications.

6. Scikit-Learn

Scikit-Learn is a comprehensive library for machine learning, providing simple and efficient tools for data mining and data analysis. It supports various machine learning tasks, including classification, regression, clustering, and dimensionality reduction, and is built on NumPy, SciPy, and Matplotlib.

7. Dask

Dask is a parallel computing library that scales Python code from multi-core local machines to large distributed clusters. It integrates seamlessly with libraries like NumPy and Pandas, enabling scalable and efficient computation on large datasets.

8. PyMC

PyMC is a probabilistic programming library for Bayesian statistical modeling and probabilistic machine learning. It utilizes advanced Markov chain Monte Carlo and variational fitting algorithms, making it suitable for complex statistical modeling.

9. TensorFlow and PyTorch

Both TensorFlow and PyTorch are leading libraries for deep learning. They offer robust tools for building and training neural networks and have extensive communities supporting their development and application in various domains, from image recognition to natural language processing.

10. NLTK and SpaCy

For natural language processing (NLP), NLTK and SpaCy are prominent libraries. NLTK provides a wide range of tools for text processing, while SpaCy is designed for industrial-strength NLP, offering fast and efficient tools for tasks like tokenization, parsing, and entity recognition.

These libraries collectively empower data scientists to efficiently process, analyze, and visualize data, facilitating the extraction of meaningful insights and the development of predictive models.

Data Analyst Training Course Modules:

Module 1 - Basic and Advanced Excel With Dashboard and Excel Analytics

Module 2 - VBA / Macros - Automation Reporting, User Form and Dashboard

Module 3 - SQL and MS Access - Data Manipulation, Queries, Scripts and Server Connection - MIS and Data Analytics

Module 4 - MS Power BI | Tableau Both BI & Data Visualization

Module 5 - Free Python Data Science | Alteryx / R Programming

Module 6 - Python Data Science and Machine Learning - 100% Free in Offer - by IIT/NIT Alumni Trainer

For the most accurate and up-to-date details on SLA Consultants India's data analyst certification course, visit their official website or contact them directly. For more details, call +91-8700575874 or email [email protected]


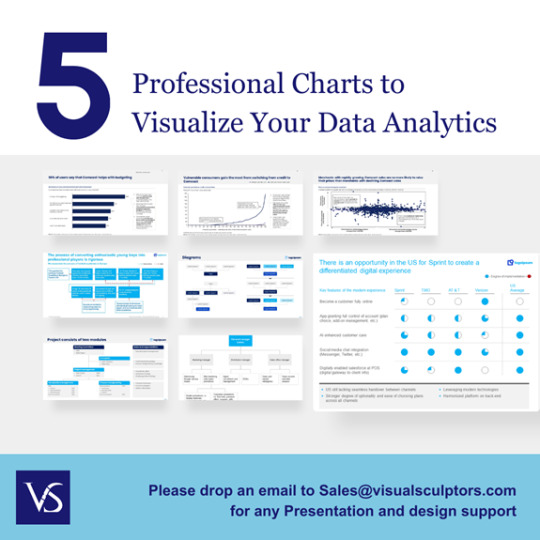

Enhancing Data Presentation: The Importance of Attractive Charts in PowerPoint

Data Visualization: Common Queries Answered

1. How to make PowerPoint charts look good?

To enhance PowerPoint charts, follow these tips: choose a clean, professional design and consistent color scheme that aligns with your branding. Limit the number of data points to avoid clutter and use clear labels and legends. Opt for simple chart types like bar or line charts for clarity. Incorporate white space to improve readability and focus. Use contrasting colors for important data points to draw attention. Lastly, ensure your charts are properly sized and placed within the slide for a balanced layout. Always preview your slides to check for visual appeal and effectiveness.

2. How to do a histogram chart in PowerPoint?

To create a histogram in PowerPoint, follow these steps:

1. Open PowerPoint and insert a new slide.

2. Go to the "Insert" tab and click on "Chart."

3. In the Chart dialog, select "Histogram" from the list of chart types.

4. Click "OK" to insert the chart.

5. A spreadsheet will open; enter your data in the provided cells.

6. Adjust the axis titles and chart design as needed using the Chart Tools.

7. Close the spreadsheet, and your histogram will be displayed on the slide.

You can further customize it using formatting options.
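PowerPoint chooses the bins for you, but it helps to see what a histogram actually counts. This also shows how it differs from a frequency table: the table lists the counts per bin, and the histogram draws those counts as adjacent bars. The Python sketch below groups hypothetical values into equal-width, left-closed bins [edge, edge + width). PowerPoint's automatic bin width and its edge convention may differ, so treat this as an illustration rather than a replica.

```python
def histogram_counts(values, bin_width, start=None):
    """Count values into equal-width bins [edge, edge + bin_width)."""
    if bin_width <= 0:
        raise ValueError("bin_width must be positive")
    if start is None:
        start = min(values)
    if start > min(values):
        raise ValueError("start must not exceed the smallest value")
    n_bins = int((max(values) - start) // bin_width) + 1
    counts = [0] * n_bins
    for v in values:
        counts[int((v - start) // bin_width)] += 1  # index of the bin holding v
    edges = [start + i * bin_width for i in range(n_bins + 1)]
    return edges, counts


values = [2, 3, 5, 7, 8, 8, 11, 14]  # hypothetical data
edges, counts = histogram_counts(values, bin_width=5, start=0)

# Print as a frequency table with a text bar for each bin
for low, high, count in zip(edges, edges[1:], counts):
    print(f"[{low}, {high}): {'#' * count} ({count})")
```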

3. How to create a box and whisker chart in PowerPoint?

To create a box and whisker chart in PowerPoint, first, open a new slide and choose "Insert" > "Chart." Select "Box and Whisker" from the chart options. Click "OK," and an Excel sheet will open for data input. Enter your data in the provided cells, then close the Excel window. The chart will update automatically. You can customize the chart's design and layout by selecting it and using the "Chart Tools" on the ribbon. Adjust colors, labels, and other elements as needed for clarity and presentation.
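A box and whisker chart is drawn from a few summary statistics. This Python sketch computes the quartiles with `statistics.quantiles` and flags outliers using the common 1.5 × IQR rule. Quartile conventions vary between tools, so the box edges PowerPoint draws may differ slightly from these values; the data are made up.

```python
from statistics import quantiles


def box_plot_stats(values):
    """Quartiles, whisker ends and outliers for a box and whisker chart."""
    q1, q2, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    # Tukey-style fences: points beyond them are plotted as outliers
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    inside = [v for v in values if low_fence <= v <= high_fence]
    return {
        "q1": q1,
        "median": q2,
        "q3": q3,
        "whisker_low": min(inside),   # whiskers stop at the last non-outlier
        "whisker_high": max(inside),
        "outliers": sorted(v for v in values if v < low_fence or v > high_fence),
    }


stats = box_plot_stats([1, 2, 3, 4, 5, 6, 7, 8, 9, 100])  # hypothetical data
print(stats)
```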

4. How do I make a box chart in PowerPoint?

To create a box chart in PowerPoint, follow these steps:

1. Open PowerPoint and insert a new slide.

2. Click on the "Insert" tab, then select "Chart."

3. In the Chart dialog, choose "Box and Whisker" from the list.

4. Click "OK" to insert the chart.

5. PowerPoint will open Excel for you to enter your data. Input your data in the provided spreadsheet.

6. Close Excel to view the updated box chart in PowerPoint.

7. Customize the chart's design and format using the Chart Tools that appear on the ribbon.

5. How do you create a waterfall chart in PowerPoint?

To create a waterfall chart in PowerPoint, first, you need to insert a chart. Go to the "Insert" tab, click on "Chart," and select "Waterfall" from the list. Input your data in the Excel sheet that appears, organizing it to reflect the sequential changes (positive and negative values). After entering data, customize the chart with titles, labels, and colors by using the Chart Tools available. Adjust the chart layout as needed, then finalize your presentation by adding any additional design elements. Save your work to keep your changes.
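Each bar in a waterfall chart floats between the running total before and after one change. The sketch below computes those bar bottoms and tops from a hypothetical starting value and a list of positive and negative changes, which is the sequential structure your data should reflect. The labels and amounts are invented.

```python
def waterfall_bars(start, changes):
    """Return (label, bottom, top) for each floating bar, plus the final total."""
    level = start
    bars = []
    for label, delta in changes:
        new_level = level + delta
        # A bar spans from the lower to the higher of the two running totals
        bars.append((label, min(level, new_level), max(level, new_level)))
        level = new_level
    return bars, level


bars, end_total = waterfall_bars(
    100, [("New sales", 40), ("Returns", -15), ("Costs", -30)]
)
for label, bottom, top in bars:
    print(f"{label}: {bottom} -> {top}")
print("Ending total:", end_total)
```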

Visit: VS Website See: VS Portfolio


Predicting Bitcoin’s Future: How Whale Activity and Miner Challenges Affect BTC Value

Key Points

Over 20,000 Bitcoin have been moved out of long-term holders’ wallets, coinciding with a drop in Bitcoin’s value.

Miners are under financial pressure, with profitability metrics dipping and mining difficulty rising.

There has been a significant movement of over 20,000 Bitcoin (BTC) out of the wallets of long-term holders in the past few days. This shift coincides with a sharp drop in Bitcoin’s value, with the cryptocurrency currently trading at around $96,600, a decrease of 7.5% over the past week.

This substantial Bitcoin movement was highlighted by analyst Ali Martinez, who suggested that such large-scale shifts by whales could create new market opportunities.

Long-term Holder SOPR

Data from CryptoQuant shows that the Bitcoin long-term holder SOPR (Spent Output Profit Ratio), a key metric that measures the profitability of coins held for more than 155 days when they are spent, currently stands at 3.5. Values above 1 mean coins are, on average, being sold at a profit, so a reading of 3.5 indicates that many long-term investors are selling at a substantial profit, which is often seen as a bullish signal.
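SOPR is, in essence, the USD value of coins when they are spent divided by their USD value when they were created (acquired). The sketch below applies that ratio to a few hypothetical spent outputs, optionally keeping only those held longer than 155 days to mimic the long-term-holder variant. The `SpentOutput` class and every number are invented for illustration, and CryptoQuant's exact methodology may differ.

```python
from dataclasses import dataclass


@dataclass
class SpentOutput:
    btc: float            # amount of BTC in the output
    price_created: float  # USD price when the output was created (acquired)
    price_spent: float    # USD price when the output was spent
    age_days: float       # how long the coins were held before being spent


def sopr(outputs, min_age_days=None):
    """Realized USD value divided by USD value at creation for spent outputs."""
    selected = [o for o in outputs
                if min_age_days is None or o.age_days > min_age_days]
    value_spent = sum(o.btc * o.price_spent for o in selected)
    value_created = sum(o.btc * o.price_created for o in selected)
    return value_spent / value_created


outputs = [
    SpentOutput(1.0, 30_000, 96_000, 400),
    SpentOutput(2.0, 20_000, 96_000, 900),
    SpentOutput(0.5, 100_000, 96_000, 10),  # short-term holder selling at a loss
]
print(round(sopr(outputs), 2), round(sopr(outputs, min_age_days=155), 2))
```

A ratio above 1 means the selected coins were sold for more than they were acquired for; below 1 means they were sold at a loss.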

Pressure on Miners

Miners are currently facing a period of extreme financial pressure, with profitability metrics dipping into the “extremely underpaid” zone. The increasing difficulty of mining, compounded by Bitcoin’s post-halving price decline, has made operations less sustainable. Despite the growing mining hashrate, returns remain low, leading miners towards heavy selling.

Historically, such situations have marked major accumulation zones. Miner-to-exchange flows have reached extreme levels, suggesting a wave of capitulation. When miners are forced to sell heavily, it often signals a temporary shakeout that can precede a mid-term price recovery.

Bitcoin Price Outlook

On the daily chart, Bitcoin’s price currently hovers near the lower Bollinger Band, indicating oversold conditions. A potential mean reversion to the $101,500 resistance level is possible if buying interest picks up.
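Bollinger Bands are usually computed as a 20-period simple moving average with bands two standard deviations above and below it. This sketch follows that convention using a population standard deviation; charting platforms may use other periods or settings, and the closing prices in the usage lines are hypothetical.

```python
from statistics import fmean, pstdev


def bollinger_bands(closes, period=20, k=2.0):
    """Return (lower, middle, upper) for each full window of `period` closes."""
    if len(closes) < period:
        raise ValueError("need at least `period` closing prices")
    bands = []
    for i in range(period - 1, len(closes)):
        window = closes[i - period + 1 : i + 1]
        middle = fmean(window)       # simple moving average
        width = k * pstdev(window)   # k population standard deviations
        bands.append((middle - width, middle, middle + width))
    return bands


# Hypothetical daily closes: a sideways range followed by a sharp drop
closes = [100.0 + (i % 5) - 2 for i in range(24)] + [95.0]
lower, middle, upper = bollinger_bands(closes)[-1]
print(f"lower {lower:.2f}, middle {middle:.2f}, upper {upper:.2f}")
print("Close at or below lower band:", closes[-1] <= lower)
```

A close near or below the lower band is what the text above describes as an oversold reading; a move back toward the middle band is the "mean reversion" it mentions.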

If Bitcoin breaks above the immediate resistance at $98,000, it could retest the upper Bollinger Band at $107,000. However, the MACD remains bearish, with the MACD line below the signal line and red histogram bars forming, suggesting ongoing downward momentum.
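For reference, the MACD line is the 12-period EMA minus the 26-period EMA, the signal line is a 9-period EMA of the MACD line, and the histogram is MACD minus signal, so a bearish reading (MACD below signal) appears as negative, typically red, bars. The Python sketch below seeds each EMA with the first value for simplicity; many platforms seed with a simple moving average instead, so early values can differ. The price series is hypothetical.

```python
def ema(values, period):
    """Exponential moving average with smoothing factor 2 / (period + 1)."""
    alpha = 2 / (period + 1)
    out = [values[0]]  # simplification: seed with the first value
    for v in values[1:]:
        out.append(alpha * v + (1 - alpha) * out[-1])
    return out


def macd(closes, fast=12, slow=26, signal=9):
    """Return the MACD line, signal line and histogram."""
    fast_ema = ema(closes, fast)
    slow_ema = ema(closes, slow)
    macd_line = [f - s for f, s in zip(fast_ema, slow_ema)]
    signal_line = ema(macd_line, signal)
    histogram = [m - s for m, s in zip(macd_line, signal_line)]
    return macd_line, signal_line, histogram


# Hypothetical steadily falling closing prices
closes = [100 - 0.5 * i for i in range(60)]
macd_line, signal_line, histogram = macd(closes)
bearish = macd_line[-1] < signal_line[-1]  # same as histogram[-1] < 0
print(f"MACD {macd_line[-1]:.2f}, signal {signal_line[-1]:.2f}, bearish: {bearish}")
```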

Support lies around $95,500, and a failure to hold this level may push Bitcoin towards $90,000 or lower. The ongoing price dip has pushed the Crypto Fear and Greed Index down to 44, signaling fear among investors. Adding to market anxieties, former BitMEX CEO Arthur Hayes recently projected that Bitcoin could still drop to $70,000-$75,000 in the near future.

Despite short-term bearish trends, seasoned investors see this phase as an accumulation opportunity, aligning with historical patterns where miner capitulation often precedes recovery.


9 Quick Tips to Hypothesis Testing with SPSS Help for Students

Introduction: Hypothesis Testing Doesn’t Have to Be a Nightmare

If you're a student just starting out with hypothesis testing in statistics, you've probably had moments of frustration, especially when using SPSS or, worse, Minitab. Many students struggle with choosing the right test, interpreting output tables, or even just setting up their data correctly. It's not your fault: hypothesis testing can feel like a puzzle with too many pieces.

This is where SPSS help for students comes in. Unlike Minitab, which can feel overwhelmingly rigid, SPSS is more user-friendly. But even with SPSS, things can get confusing. What's the difference between a t-test and an ANOVA? How do you check assumptions? And what do all those numbers in the output window mean?

Don't worry, I've got your back. Below are 9 quick tips to make hypothesis testing in SPSS easier, faster, and less stressful. Let's get started!

1. Know Your Hypothesis Type Before You Touch SPSS

Before you even open SPSS, make sure you clearly define your null (H₀) and alternative (H₁) hypotheses. These determine the type of test you need. Here's an example:

Null hypothesis (H₀): There is no difference in students’ test scores before and after using a study app.

Alternative hypothesis (H₁): Students score higher after using the study app.

If you’re not sure what type of test you need, SPSS has a helpful “Analyze” menu, but understanding your hypothesis is step one.

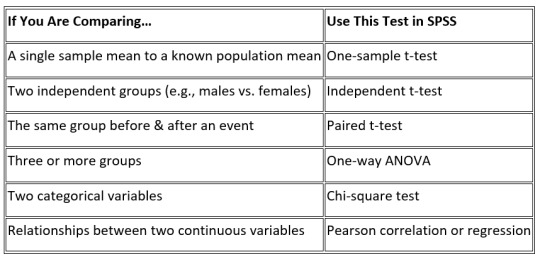

2. Choose the Right Statistical Test – It’s Easier Than You Think

One of the biggest struggles students face is choosing the right test. Here’s a quick guide:

If this is still overwhelming, consider getting SPSS help for students from an expert. If you're really stuck, you might even think, "Can I pay someone to do my statistics homework?" (Spoiler: yes, you can, but learning it yourself is worth it!)

3. Always Check for Normality – Don’t Skip This Step!

Most hypothesis tests assume your data is normally distributed. To check normality in SPSS:

Click Analyze > Descriptive Statistics > Explore

Move your dependent variable into the Dependent List box

Click Plots, check Normality Plots with Tests, then hit OK

Look at the Shapiro-Wilk test: if p > 0.05, there is no significant evidence that your data depart from normality. If p ≤ 0.05, consider a non-parametric alternative, such as the Mann-Whitney U test instead of an independent-samples t-test.

4. Understand the p-Value – It’s More Than Just < 0.05

A p-value is the probability of getting results at least as extreme as yours if H₀ were true, but students often misread it as the probability that H₀ is true. If p < 0.05, your results are statistically significant (reject H₀). If p > 0.05, they are not statistically significant (fail to reject H₀).

But here's the catch: a p-value alone doesn't tell you whether your results are practically significant. Always look at effect sizes and confidence intervals as well.
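A p-value can be made concrete with a permutation test: shuffle the group labels many times and count how often the shuffled mean difference is at least as extreme as the one you observed. This is a different (nonparametric) test from the t-test SPSS runs and is used here only to illustrate what a p-value measures; the `alternative` parameter also shows the one-tailed ("greater") versus two-tailed distinction from tip 7. The function name and data are hypothetical.

```python
import random
from statistics import fmean


def permutation_p_value(group_a, group_b, n_resamples=10_000,
                        alternative="two-sided", seed=0):
    """Observed mean difference (a - b) and its permutation-test p-value."""
    if alternative not in ("two-sided", "greater"):
        raise ValueError('alternative must be "two-sided" or "greater"')
    rng = random.Random(seed)  # fixed seed so results are reproducible
    observed = fmean(group_a) - fmean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # randomly reassign the group labels
        diff = fmean(pooled[:n_a]) - fmean(pooled[n_a:])
        if alternative == "two-sided":
            extreme += abs(diff) >= abs(observed)
        else:  # "greater": only differences in the predicted direction count
            extreme += diff >= observed
    # Adding 1 counts the observed labelling itself and keeps p above zero
    return observed, (extreme + 1) / (n_resamples + 1)


app_users = [78, 85, 82, 90, 88, 84]  # hypothetical scores
non_users = [72, 75, 80, 70, 77, 74]  # hypothetical scores
print(permutation_p_value(app_users, non_users))
print(permutation_p_value(app_users, non_users, alternative="greater"))
```

A small p-value says that label shufflings rarely produce a difference as large as the real one; it says nothing about how large or important the difference is.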

5. Check Assumptions Before You Run Any Test

Most tests rely on assumptions, such as homogeneity of variance (for t-tests and ANOVA). In SPSS you can check this with Levene's test:

Click Analyze > Compare Means > One-Way ANOVA

Click Options and check the box for Homogeneity of variance test

If p < 0.05, the variances differ significantly, and you may need an adjusted test such as Welch's test.

Don’t skip assumption checks or you’ll end up with wrong conclusions!
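Levene's statistic is a one-way ANOVA F computed on each observation's absolute deviation from its group center (the mean in Levene's original test; the median in the Brown-Forsythe variant). The Python sketch below computes the statistic only. Turning it into a p-value needs the F distribution with (k − 1, N − k) degrees of freedom, which the standard library lacks; SciPy's `scipy.stats.levene(..., center="mean")` does this. The groups are hypothetical.

```python
from statistics import fmean, median


def levene_statistic(*groups, center="mean"):
    """Levene's W for two or more groups of observations."""
    if len(groups) < 2:
        raise ValueError("need at least two groups")
    if center not in ("mean", "median"):
        raise ValueError('center must be "mean" or "median"')
    center_fn = fmean if center == "mean" else median
    # Absolute deviation of every observation from its own group's center
    z = []
    for g in groups:
        c = center_fn(g)
        z.append([abs(x - c) for x in g])
    k = len(z)
    n_total = sum(len(g) for g in z)
    grand_mean = fmean([v for g in z for v in g])
    # One-way ANOVA on the deviations: between-group vs within-group spread
    between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in z)
    within = sum((v - fmean(g)) ** 2 for g in z for v in g)
    if within == 0:
        raise ValueError("statistic undefined: no spread within groups")
    return (n_total - k) / (k - 1) * between / within


print(levene_statistic([1, 2, 3], [0, 5, 10]))  # hypothetical groups
```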

6. Use Graphs to Back Up Your Hypothesis Testing

Raw numbers are great, but SPSS’s graphs will make your results more impressive. Try these:

Boxplots for comparing groups

Histograms to check distributions

Scatterplots to see correlations

To create graphs in SPSS, go to Graphs > Legacy Dialogs, select your chart type, and customize it to make your results clearer.

7. Know When to Use One-Tailed vs. Two-Tailed

Many students assume two-tailed tests are always the way to go. Not true!

Use a one-tailed test if you have a specific directional hypothesis (e.g. "higher" or "lower").

Use a two-tailed test if you're testing for any difference.

One-tailed tests have more power in the predicted direction, but they cannot detect an effect in the opposite direction. Choose wisely!

8. Is Your Sample Size Big Enough?

Small samples give low statistical power, so you may miss real effects. Use G*Power (free) or SPSS's power analysis to check whether your sample size is sufficient:

Click Analyze > Power Analysis

Enter your effect size, alpha level, and desired power (or your planned sample size)

Check whether your study is underpowered; if it is, you may need more participants
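For a two-group comparison, a common normal-approximation formula for the required sample size per group is n = 2(z₁₋α/₂ + z₁₋β)² / d², where d is Cohen's d. The sketch below uses `statistics.NormalDist` from Python's standard library. Because it ignores the t distribution, it comes out slightly below what exact tools such as G*Power report, so treat it as a rough check only; the function name is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(d, alpha=0.05, power=0.80, two_sided=True):
    """Approximate participants needed per group for a two-sample comparison."""
    if d <= 0:
        raise ValueError("effect size d must be positive")
    std_normal = NormalDist()  # mean 0, standard deviation 1
    z_alpha = std_normal.inv_cdf(1 - alpha / 2 if two_sided else 1 - alpha)
    z_beta = std_normal.inv_cdf(power)
    return ceil(2 * (z_alpha + z_beta) ** 2 / d ** 2)


print(n_per_group(0.5))                   # medium effect, two-tailed
print(n_per_group(0.5, two_sided=False))  # same effect, one-tailed
```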

9. Write Up Your Results Like a Pro (APA Style)

If you're writing a report, follow APA style. Here's how to write up your results:

"An independent-samples t-test was conducted to compare test scores between students who used the app and those who didn't. The difference was significant, t(48) = 2.34, p = .022, d = 0.65, with app users scoring higher."

Always include the test type, degrees of freedom, test statistic, p-value and effect size.
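The sketch below computes the ingredients of that write-up from raw scores: the pooled-variance independent-samples t, its degrees of freedom, and Cohen's d, then formats them APA-style (no leading zero for p). The p-value is taken as an input, for example from SPSS output, since computing it needs the t distribution. Function names are hypothetical.

```python
from math import sqrt
from statistics import fmean, variance


def independent_t_and_d(a, b):
    """Pooled-variance t statistic, degrees of freedom and Cohen's d."""
    n1, n2 = len(a), len(b)
    m1, m2 = fmean(a), fmean(b)
    df = n1 + n2 - 2
    # Pooled standard deviation from the two sample variances
    pooled_sd = sqrt(((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df)
    t = (m1 - m2) / (pooled_sd * sqrt(1 / n1 + 1 / n2))
    d = (m1 - m2) / pooled_sd
    return t, df, d


def format_apa(t, df, p, d):
    """APA-style result string; p is supplied, e.g. from SPSS output."""
    p_text = "p < .001" if p < 0.001 else f"p = {p:.3f}".replace("0.", ".", 1)
    return f"t({df}) = {t:.2f}, {p_text}, d = {d:.2f}"


print(format_apa(2.34, 48, 0.022, 0.65))
```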

Final Thoughts

Hypothesis testing in SPSS doesn't have to be torture. Follow these 9 tips (choose the right test, check assumptions, interpret results correctly) and you'll feel more confident and ace your stats assignments. And remember, whenever you need SPSS help, don't hesitate to reach out to your professor or online SPSS experts.

#SPSS help for students#spss#help in spss#Hypothesis Type#Statistical Test#Shapiro-Wilk test#Levene’s test#Power Analysis#G*Power#APA Style


SAS Tutorial for Researchers: Streamlining Your Data Analysis Process

Researchers often face the challenge of managing large datasets, performing complex statistical analyses, and interpreting results in meaningful ways. Fortunately, SAS programming offers a robust solution for handling these tasks. With its ability to manipulate, analyze, and visualize data, SAS is a valuable tool for researchers across various fields, including social sciences, healthcare, and business. In this SAS tutorial, we will explore how SAS programming can streamline the data analysis process for researchers, helping them turn raw data into meaningful insights.

1. Getting Started with SAS for Research

For researchers, SAS programming can seem intimidating at first. However, with the right guidance, it becomes an invaluable tool for data management and analysis. To get started, you need to:

Understand the SAS Environment: Familiarize yourself with the interface, where you'll be performing data steps, running procedures, and viewing output.

Learn Basic Syntax: SAS uses a straightforward syntax where each task is organized into steps (Data steps and Procedure steps). Learning how to structure these steps is the foundation of using SAS effectively.

2. Importing and Preparing Data

The first step in any analysis is preparing your data. SAS makes it easy to import data from a variety of sources, such as Excel files, CSVs, and SQL databases. The SAS tutorial for researchers focuses on helping you:

Import Data: Learn how to load data into SAS using commands like PROC IMPORT.

Clean Data: Handle missing values and outliers, and transform variables as needed.

Merge Datasets: Combine datasets in a DATA step with the MERGE statement (joining observations side by side, usually matched by a key variable with BY) or the SET statement (stacking observations).

Having clean, well-organized data is crucial for reliable results, and SAS simplifies this process with its powerful data manipulation features.

3. Conducting Statistical Analysis with SAS

Once your data is ready, the next step is performing statistical analysis. SAS offers a wide array of statistical procedures that researchers can use to analyze their data:

Descriptive Statistics: Calculate basic statistics like mean, median, standard deviation, and range to understand your dataset’s characteristics.

Inferential Statistics: Perform hypothesis tests, t-tests, ANOVA, and regression analysis to make data-driven conclusions.

Multivariate Analysis: SAS also supports more advanced techniques, like factor analysis and cluster analysis, which are helpful for identifying patterns or grouping similar observations.

This suite of statistical tools allows researchers to conduct deep, complex analyses within a single environment, without switching between other specialized packages.

4. Visualizing Results

Data visualization is an essential part of the research process. Communicating complex results clearly can help others understand your findings. SAS includes a variety of charting and graphing tools that can help you present your data effectively:

Graphs and Plots: Create bar charts, line graphs, histograms, scatter plots, and more.

Customized Output: Use SAS’s graphical procedures to format your visualizations to suit your presentation or publication needs.

These visualization tools allow researchers to present data in a way that’s both understandable and impactful.

5. Automating Research Workflows with SAS

Another benefit of SAS programming for researchers is the ability to automate repetitive tasks. Using SAS’s macro functionality, you can:

Create Reusable Code: Build macros for tasks you perform frequently, saving time and reducing the chance of errors.

Automate Reporting: Automate the process of generating reports, so you don’t have to manually create tables or charts for every analysis.


Automation makes it easier for researchers to focus on interpreting results and less on performing routine tasks.

Conclusion

The power of SAS programming lies in its ability to simplify complex data analysis tasks, making it a valuable tool for researchers. By learning the basics of SAS, researchers can easily import, clean, analyze, and visualize data while automating repetitive tasks. Whether you're analyzing survey data, clinical trial results, or experimental data, SAS has the tools and features you need to streamline your data analysis process. With the help of SAS tutorials, researchers can quickly master the platform and unlock the full potential of their data.

#sas tutorial#sas tutorial for beginners#sas programming tutorial#SAS Tutorial for Researchers#Youtube


Data Analyst Course and Storytelling: Creating Impactful Dashboards and Charts Using Tools Like Tableau and Power BI

In the modern data-driven world, raw data is only as valuable as the insights derived from it. For businesses, conveying these insights in an impactful and comprehensible way is critical for decision-making. This is where the art of storytelling in data analysis comes into play. A dedicated module on data visualisation and storytelling in a Data Analyst Course in Nagpur equips professionals to create impactful dashboards and charts using industry-leading tools like Tableau and Power BI.

The Role of Storytelling in Data Analysis

Storytelling in data analysis involves transforming complex datasets into visually compelling narratives that resonate with stakeholders. It bridges the gap between data insights and actionable business strategies. Key components of effective data storytelling include:

Clarity: Simplifying complex information for easy comprehension.

Context: Providing relevant background to make data meaningful.

Engagement: Using visuals to capture and hold attention.

Actionability: Delivering insights that lead to informed decisions.

Why Focus on Dashboards and Charts?

Dashboards and charts serve as the backbone of data storytelling. They provide an interactive and visual medium to:

Monitor key performance indicators (KPIs).

Track trends and patterns over time.

Highlight anomalies and areas requiring attention.

Support data-driven decision-making with clarity and precision.

Key Features of Tableau and Power BI

Tableau:

Renowned for its user-friendly interface and powerful visualisation capabilities.

Allows integration with multiple sources of data for comprehensive analysis.

Offers advanced features like drag-and-drop functionality, real-time data updates, and storytelling dashboards.

Supports interactive visualisations like heatmaps, treemaps, and scatter plots.

Power BI:

A robust Microsoft tool that integrates seamlessly with Office 365 and other Microsoft products.

Provides AI-driven insights and natural language querying.

Offers a wide range of visual templates and customisation options.

Ideal for creating enterprise-grade reports and dashboards.

Course Highlights: Mastering Visualisation Tools

A Data Analyst Course focusing on storytelling and visualisation covers the following key areas:

Data Preparation and Cleaning:

Importing, cleaning, and organising datasets for visualisation.

Handling large and disparate data sources.

Designing Dashboards:

Principles of effective dashboard design: layout, colour theory, and usability.

Building interactive and dynamic dashboards in Tableau and Power BI.

Charting Techniques:

Selecting the right chart type for the data and audience.

Creating advanced visualisations like histograms, box plots, and waterfall charts.

Storytelling Frameworks:

Structuring narratives around visualisations.

Using annotations, highlights, and sequences to guide the audience.

Real-World Projects:

Hands-on experience with industry-relevant datasets.

Creating end-to-end dashboards addressing business scenarios.
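Box plots, listed above under charting techniques, summarise a distribution in five numbers plus any outliers. The Python sketch below illustrates the arithmetic a tool such as Tableau or Power BI performs before drawing one. It is an illustration only: the function name `box_plot_summary` is hypothetical, quartiles come from Python's `statistics.quantiles` with its `"inclusive"` method, and whiskers follow the common 1.5 × IQR (Tukey) convention. Individual tools may compute quartiles slightly differently.

```python
from statistics import quantiles


def box_plot_summary(values):
    """Return the five-number summary plus Tukey-style outliers (illustrative sketch)."""
    data = sorted(values)
    # With n=4, quantiles() returns three cut points: Q1, the median, and Q3.
    q1, median, q3 = quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1
    # Points beyond 1.5 * IQR from the box are treated as outliers (assumed convention).
    low_fence = q1 - 1.5 * iqr
    high_fence = q3 + 1.5 * iqr
    inside = [v for v in data if low_fence <= v <= high_fence]
    outliers = [v for v in data if v < low_fence or v > high_fence]
    return {
        "whisker_low": min(inside),   # whiskers stop at the most extreme non-outliers
        "q1": q1,
        "median": median,
        "q3": q3,
        "whisker_high": max(inside),
        "outliers": outliers,
    }
```

For example, `box_plot_summary([1, 2, 3, 4, 5, 6, 7, 8, 100])` gives quartiles of 3, 5 and 7, whiskers at 1 and 8, and flags 100 as an outlier.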

Benefits of Mastering Data Storytelling

Professionals trained in data storytelling with tools like Tableau and Power BI can:

Communicate insights effectively and efficiently to both technical and non-technical audiences.

Drive strategic decisions with data-backed narratives.

Enhance their employability in industries prioritising data-driven approaches.

Build visually engaging reports that foster collaboration and understanding.

Conclusion

A Data Analyst Course in Nagpur emphasising storytelling and visualisation is essential for anyone aiming to excel in today’s data-centric roles. By mastering tools like Tableau and Power BI, participants can create dashboards and charts that not only inform but inspire action. This skillset ensures that data analysis evolves from mere number crunching to impactful decision-making support.


Master Quality Control with 7QC Tools Training by 4C Consulting

In the competitive world of manufacturing and production, maintaining high quality standards is crucial for success. The 7QC Tools Training offered by 4C Consulting equips professionals with essential tools for effective quality control and process improvement. This blog provides a comprehensive overview of the 7QC Tools, their importance, and how the training can benefit organizations in achieving superior quality management.

Understanding 7QC Tools

Definition: The 7QC Tools (Seven Quality Control Tools) are fundamental instruments used in quality management and problem-solving processes. These tools are widely recognized for their simplicity, effectiveness, and ability to aid in data analysis and decision-making.

The Seven Tools:

Cause-and-Effect Diagram (Fishbone/Ishikawa Diagram): Identifies potential causes of a problem to find the root cause.

Check Sheet: A structured, prepared form for collecting and analyzing data.

Control Chart: Monitors process variation and stability over time.

Histogram: Graphically displays the distribution of data.

Pareto Chart: Highlights the most significant factors in a data set.

Scatter Diagram: Analyzes the relationship between two variables.

Flow Chart (Process Mapping): Visualizes the steps in a process.
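A histogram is a bar chart of how many observations fall into each interval (bin). As a minimal sketch, assuming equal-width bins (quality software may choose the number and width of bins differently), the counting step behind the chart could look like this in Python. `histogram_counts` is a hypothetical helper, not part of any library.

```python
def histogram_counts(values, num_bins):
    """Count how many values fall into each of num_bins equal-width bins."""
    if num_bins < 1:
        raise ValueError("num_bins must be at least 1")
    lo, hi = min(values), max(values)
    # Fall back to a width of 1.0 if every value is identical (range of zero).
    width = (hi - lo) / num_bins or 1.0
    counts = [0] * num_bins
    for v in values:
        # Bins are half-open [start, end); the last bin also includes the maximum.
        index = min(int((v - lo) / width), num_bins - 1)
        counts[index] += 1
    edges = [lo + i * width for i in range(num_bins + 1)]
    return edges, counts
```

The bar heights are the `counts`, and `edges` gives the bin boundaries for the horizontal axis.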

Importance of 7QC Tools

Problem Identification and Resolution: Helps in identifying the root causes of quality issues and developing effective solutions.

Data-Driven Decisions: Provides a basis for making decisions based on actual data rather than assumptions.

Process Improvement: Facilitates continuous improvement in processes, leading to higher efficiency and reduced waste.

Enhanced Product Quality: Ensures that products meet or exceed customer expectations by controlling and improving quality.

Employee Engagement: Involves employees in quality control processes, fostering a culture of quality and accountability.

Customer Satisfaction: Leads to higher customer satisfaction by consistently delivering high-quality products and services.

7QC Tools Training

Understanding 7QC Tools:

Cause-and-Effect Diagram: Learn to create and analyze fishbone diagrams to identify root causes of problems.

Check Sheet: Understand the design and use of check sheets for data collection and analysis.

Control Chart: Gain skills in developing and interpreting control charts to monitor process variation.

Histogram: Learn to construct histograms and analyze data distribution patterns.

Pareto Chart: Understand the 80/20 rule and how to apply it using Pareto charts.

Scatter Diagram: Develop skills in plotting and interpreting scatter diagrams to identify relationships between variables.

Flow Chart: Learn to create process maps to visualize and improve workflows.
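The 80/20 reasoning behind a Pareto chart comes down to sorting categories by frequency and tracking the cumulative percentage. The sketch below is a hedged illustration: `pareto_table` is a hypothetical name, and it assumes one common convention for the "vital few" (keep adding categories, largest first, until the cumulative share reaches the cutoff, 80% by default).

```python
def pareto_table(counts, cutoff=80.0):
    """Sort categories by frequency and mark the 'vital few'.

    counts: mapping of category -> number of occurrences.
    Returns rows of (category, count, cumulative_percent, is_vital_few).
    """
    total = sum(counts.values())
    rows = []
    running = 0
    for category, count in sorted(counts.items(), key=lambda kv: kv[1], reverse=True):
        # A category is in the vital few if the share accumulated *before* it
        # is still below the cutoff.
        is_vital = 100.0 * running / total < cutoff
        running += count
        rows.append((category, count, 100.0 * running / total, is_vital))
    return rows
```

For instance, with made-up counts of 50 scratches, 30 dents, 10 misalignments, 6 discolorations and 4 other defects, the first two categories are marked as the vital few and together account for 80% of all defects. The bars of the chart are the counts; the line is the cumulative percentage.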

Importance of Training:

Practical Skills: Provides hands-on experience in using each of the 7QC tools effectively.

Analytical Thinking: Enhances analytical and critical thinking skills required for quality management.

Improvement Projects: Equips participants with the knowledge to lead and participate in process improvement projects.

Certification: Validates the skills and knowledge acquired, adding value to professional credentials.

Why Choose 4C Consulting?

4C Consulting offers comprehensive 7QC Tools Training designed to empower professionals with the knowledge and skills needed for effective quality control. Our expert trainers bring extensive industry experience, ensuring practical and relevant learning. We provide customized training solutions tailored to meet the specific needs of your organization, fostering a culture of continuous improvement. By choosing 4C Consulting, you gain access to the best practices in quality management, helping your organization achieve excellence in product quality and customer satisfaction. Contact us now.


Digital Tools for Automating 7 QC Tools Usage

The 7 Quality Control (QC) tools are fundamental instruments for problem-solving, process improvement, and quality management in industries worldwide. With advancements in digital technologies, leveraging software and automation for these tools can significantly enhance efficiency, accuracy, and decision-making. This article explores digital tools that automate the usage of the 7 QC tools, their benefits, and practical applications.

Understanding the 7 QC Tools

Before diving into digital tools, let’s briefly revisit the 7 QC tools:

Check Sheets - Used for data collection and analysis.

Pareto Charts - Help identify the most significant factors in a dataset.

Cause-and-Effect Diagrams (Fishbone/Ishikawa) - Identify root causes of problems.

Control Charts - Monitor process variation over time.

Histograms - Visualize the distribution of data.

Scatter Diagrams - Identify relationships between two variables.

Flowcharts - Map out processes to understand and improve them.
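A check sheet is essentially a tally of observations by category, often split by time period. The Python sketch below assumes raw records arrive as `(day, defect)` pairs (a hypothetical input format), builds the tally with `collections.Counter`, and prints it as a grid with a total for each defect type. The function names are illustrative only.

```python
from collections import Counter


def tally_check_sheet(observations):
    """Count each (day, defect) pair from a list of raw observation records."""
    sheet = Counter()
    for day, defect in observations:
        sheet[(day, defect)] += 1
    return sheet


def print_check_sheet(sheet):
    """Print defects as rows and days as columns, keeping first-seen order."""
    days = list(dict.fromkeys(day for day, _ in sheet))
    defects = list(dict.fromkeys(defect for _, defect in sheet))
    print("Defect".ljust(12) + "".join(day.rjust(6) for day in days) + "Total".rjust(7))
    for defect in defects:
        # A missing (day, defect) pair reads as 0 from a Counter.
        row = [sheet[(day, defect)] for day in days]
        print(defect.ljust(12) + "".join(str(n).rjust(6) for n in row) + str(sum(row)).rjust(7))


if __name__ == "__main__":
    records = [("Mon", "scratch"), ("Mon", "dent"), ("Tue", "scratch"), ("Mon", "scratch")]
    print_check_sheet(tally_check_sheet(records))
```

A spreadsheet with dropdown-restricted entries produces the same kind of records; the tally is what turns them into a check sheet.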

Digital Tools for Automating the 7 QC Tools

1. Microsoft Excel and Google Sheets

Excel and Google Sheets remain versatile tools for automating calculations, visualizations, and data organization. Their built-in templates, formulas, and charting capabilities are highly adaptable for:

Creating Check Sheets with dropdowns and conditional formatting.

Generating Pareto Charts using pivot tables.

Designing Control Charts with dynamic ranges.
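Whatever tool draws it, a control chart rests on a small calculation: a center line plus upper and lower control limits, usually three estimated standard deviations from the center. For an individuals (I) chart, the standard deviation is commonly estimated as the average moving range divided by the constant d2 = 1.128, which puts the limits at roughly mean ± 2.66 × average moving range. A minimal Python sketch under those assumptions (function names are hypothetical):

```python
from statistics import mean

D2 = 1.128  # bias-correction constant for moving ranges of two consecutive points


def individuals_chart_limits(values):
    """Return (LCL, center line, UCL) for an individuals (I) control chart."""
    if len(values) < 2:
        raise ValueError("need at least two observations")
    center = mean(values)
    # Moving range: absolute difference between each pair of consecutive points.
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    sigma_hat = mean(moving_ranges) / D2
    return center - 3 * sigma_hat, center, center + 3 * sigma_hat


def out_of_control(values, limits):
    """Return the indices of points that fall outside the control limits."""
    lcl, _, ucl = limits
    return [i for i, v in enumerate(values) if v < lcl or v > ucl]
```

In a spreadsheet, the same three values would be formulas over a range that grows as new rows are added. Here, limits computed from a baseline period are applied to new points with `out_of_control`.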

2. Minitab

Minitab is statistical software designed specifically for quality improvement. It offers:

Automated creation of Control Charts.

Quick generation of Histograms and Scatter Diagrams.

Integrated tools for Cause-and-Effect analysis.

3. Lucidchart

Lucidchart simplifies the creation of Flowcharts and Cause-and-Effect Diagrams. Features include:

Drag-and-drop interface for diagramming.

Cloud-based collaboration for team inputs.

Integration with tools like Google Drive and Slack.

4. Tableau and Power BI

These data visualization platforms excel in presenting insights visually:

Build Pareto Charts to prioritize issues.

Create Scatter Diagrams to analyze variable relationships.

Generate interactive dashboards to monitor quality metrics.
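A scatter diagram shows a relationship visually. The Pearson correlation coefficient puts a number on its linear part, ranging from -1 (perfect negative) through 0 (no linear relationship) to +1 (perfect positive). The plain-Python helper below is a sketch for illustration; Python 3.10 and later also provide `statistics.correlation`. It raises `ZeroDivisionError` if either variable is constant, and correlation alone does not establish cause and effect.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length, at least 2 points")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    # Covariance and variance sums (the 1/n factors cancel in the ratio).
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)
```

A value near +1 or -1 suggests the points on the scatter diagram lie close to a straight line; a value near 0 means there is no linear trend, although a curved relationship may still exist.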

5. Qlik Sense

Qlik Sense is another data analytics tool that:

Automates Histogram generation from large datasets.

Offers predictive analytics to enhance Scatter Diagram insights.

Allows for real-time monitoring with Control Charts.
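Real-time monitoring usually goes beyond checking each point against the control limits. Run rules, such as those in the Western Electric and Nelson rule sets, also flag a sustained shift when several consecutive points fall on the same side of the center line (eight in the Western Electric version, nine in Nelson's). The class below is a hypothetical sketch of that single rule applied to a stream of values. It is not how Qlik Sense or any other product implements monitoring.

```python
from collections import deque


class RunRuleMonitor:
    """Flag a shift when run_length consecutive points fall on one side of the center line."""

    def __init__(self, center, run_length=8):
        self.center = center
        self.run_length = run_length  # 8 in Western Electric's rule, 9 in Nelson's
        self.recent = deque(maxlen=run_length)  # keeps only the latest run_length sides

    def add(self, value):
        """Record a new point; return True if the run rule is triggered."""
        # +1 above the center line, -1 below, 0 exactly on it (which breaks any run).
        side = (value > self.center) - (value < self.center)
        self.recent.append(side)
        return (
            len(self.recent) == self.run_length
            and side != 0
            and all(s == side for s in self.recent)
        )
```

Each incoming point is checked in constant memory, which suits a live feed; the ±3-sigma limit check would run alongside it.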

6. Fishbone Diagram Software

Dedicated tools like XMind and Edraw Max specialize in:

Interactive Fishbone Diagrams.

Collaboration for brainstorming root causes.

Exporting diagrams in various formats for reports.

7. Custom Quality Management Software

Platforms like Qualityze and MasterControl provide:

Comprehensive QC tool integration.

Automated workflows for Check Sheets and Flowcharts.

Scalability for enterprise-level quality management.

Benefits of Automating QC Tools

Improved Accuracy: Reduces human error through automation.

Time Efficiency: Accelerates data analysis and visualization.

Enhanced Collaboration: Cloud-based tools allow teams to work together seamlessly.

Data Integration: Combines data from various sources for holistic analysis.

Real-Time Monitoring: Enables immediate insights into process performance.

Practical Applications in Industries

Manufacturing:

Automating Control Charts to monitor production quality.

Using Pareto Charts to identify key defects.

Healthcare:

Employing Flowcharts for patient care workflows.

Leveraging Check Sheets for data collection on recurring issues.

IT and Software:

Using Scatter Diagrams to analyze system performance metrics.

Automating Fishbone Diagrams for debugging processes.

Retail:

Monitoring sales processes with Histograms.

Improving inventory management using Pareto analysis.

Conclusion

Digital tools have revolutionized the application of the 7 QC tools by offering automation, accuracy, and collaborative capabilities. Businesses that integrate these technologies into their quality management processes can achieve superior efficiency and better decision-making. Investing in the right software not only streamlines operations but also paves the way for continuous improvement and long-term success.
