#Learning cloud computing

Video


Top Cloud Hosting Companies NEED TALENT!

We discuss a program that has helped people from various backgrounds move into the tech industry, specifically cloud computing. Many of them now earn six-figure salaries without the burden or expense of a four-year college degree.

#youtube#cloud computing#cloud#aws#amazonwebservices#oracle#Learning cloud computing#Cloud Hosting Talent#Top CloudHosting Companies#career in tech#jobs in tech#technology jobs#cloud computing jobs#aws cloud#aws for beginners#what is cloud computing#introduction to cloud computing#cloud technology#aws basics#cloud computing explained#aws course#aws services#aws certification#what is cloud computing for beginners#learn aws#software engineer#google cloud#Top


Text

What is Cloud Computing?

Cloud computing has become a widely discussed topic in recent years, but explaining it in simple terms to someone without a background in computer science can be challenging. Allow me to break it down for you.

Cloud computing is a method of storing and accessing data and programs over the internet, rather than keeping them on your personal computer or mobile device. To illustrate this, let's consider online email services like Gmail or Outlook. When you use these services, you can access your emails from anywhere because they are stored in the cloud. This means you don't need to install any special software or save your messages on your hard drive. Instead, your emails are stored on remote servers owned by companies like Google or Microsoft. You can access them from any device connected to the internet, regardless of your location.

Understanding Servers in the Cloud

Now, let's delve into the concept of servers in the cloud.

The data stored in the cloud is saved on physical servers, which are powerful computers capable of storing and processing vast amounts of information. These servers are typically housed in data centers, which are specialized facilities that accommodate thousands of servers and other equipment. Data centers require significant power, cooling, security, and connectivity to operate efficiently and reliably.

Microsoft and Google are two of the largest cloud providers globally, and they have data centers located in various regions and continents. Here are some examples of where their data centers are located, according to search results:

Microsoft has data centers in North America, South America, Europe, Asia, Africa, and Australia.

Google has data centers in North America, South America, Europe, and Asia.

#codeblr#code#javascript#java development company#studyblr#progblr#programming#comp sci#web design#web developers#web development#website design#webdev#website#tech#html css#learn to code#cloud computing#datascience#dataanalytics


Text

The Comprehensive Guide to Web Development, Data Management, and More

Introduction

Nearly everything in today's digital world is driven by technology. A lot happens behind the scenes when you use your favorite apps, visit websites, or do anything else built on all those zeroes and ones, that is, binary data. In this post, I will explain what these terms really mean and cover the basics of web development, data management, and related fields. I will keep things as simple as possible so that they are easy to follow for beginners and for anyone even remotely interested in technology.

What is Web Development?

Web development is the work of building a website or web application that runs in a web browser. It covers everything from designing individual page layouts before any code is written to implementing those layouts in HTML and CSS. There are two major areas of web development: front-end and back-end.

Front-End Development

Front-end development, also known as client-side development, is the part of web development that deals with what users see and interact with on their screens. It involves using languages like HTML, CSS, and JavaScript to create the visual elements of a website, such as buttons, forms, and images.

HTML (HyperText Markup Language):

HTML is the foundation of every website. It organizes the content of a page by giving structure to basic elements such as headings, paragraphs, and links, which browsers then render with default styles.

CSS (Cascading Style Sheets):

CSS styles and formats HTML elements. It controls colors, fonts, and layout, which makes a web page attractive and user-friendly.

JavaScript:

JavaScript is a language for adding interactivity to a website. It lets users interact with items on the page, for example clicking a button to submit a form or browsing images in a slideshow.

Back-End Development

While front-end development is all about what the user sees, back-end development covers everything that happens behind the scenes. The back end consists of a server, a database, and the application logic that runs the website.

Server:

A server is a computer that stores a website's files and sends them to the user's browser on request. Back-end developers build and maintain the server-side code in languages like Python, PHP, or Ruby.

Database:

The database is where a website keeps its data, from user details to content and settings. It is managed with systems like MySQL, PostgreSQL, or MongoDB.

Application Logic:

Application logic is the code that links the front end and the back end. It takes user input, retrieves data from the database, and returns the right information to the front end.
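As a rough illustration of the application logic described above, here is a minimal Python sketch using the standard-library `sqlite3` module. The `users` table, the sample row, and the helper `get_user_greeting` are all made up for this example; a real site would sit behind a web framework and would more likely use one of the database systems named above.

```python
import sqlite3


def get_user_greeting(conn, username):
    """Take user input, look it up in the database, and build a response."""
    # Parameterized query: the driver fills in the ? placeholder safely.
    row = conn.execute(
        "SELECT display_name FROM users WHERE username = ?", (username,)
    ).fetchone()
    if row is None:
        return {"status": 404, "body": "User not found"}
    return {"status": 200, "body": f"Welcome back, {row[0]}!"}


# Hypothetical in-memory database standing in for a real database server.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (username TEXT PRIMARY KEY, display_name TEXT)")
conn.execute("INSERT INTO users VALUES (?, ?)", ("ada", "Ada"))

print(get_user_greeting(conn, "ada"))     # response for a known user
print(get_user_greeting(conn, "nobody"))  # response for an unknown user
```

The front end would receive the returned dictionary (for example as JSON) and decide how to display it.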

Why Proper Data Management is Absolutely Critical

Besides web development, data management is one of the most important parts of our digital world. What is data management? It covers the practices, policies, and procedures used to collect, store, and secure data in a controlled way.

Data Storage:

Once data has been collected, it needs to be stored securely, for example in relational databases or cloud storage solutions. The most important point is that the data must never be accessed by unauthorized parties or exposed in a breach.

Data processing:

Once the data is stored, the next step is to process it in order to make sense of large volumes of raw information. This includes cleansing the data (removing errors or redundancies), finding patterns in it, and producing insights that are useful for decision-making.

Data Security:

Another important part of data management is security: defending data against unauthorized access, breaches, and other threats. Basic measures include encryption, access controls, and regular audits of your systems.
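The cleansing step mentioned under data processing (removing errors and redundancies) can be sketched in a few lines of Python. The record layout and the validity rule used here (an email must contain "@") are assumptions made for this example, not rules from any particular system.

```python
def clean_records(records):
    """Drop invalid rows and duplicates, keeping the first occurrence."""
    seen = set()
    cleaned = []
    for rec in records:
        email = rec.get("email", "").strip().lower()
        if "@" not in email:
            continue  # error: missing or malformed email
        if email in seen:
            continue  # redundancy: this email was already kept
        seen.add(email)
        cleaned.append({"name": rec.get("name", "").strip(), "email": email})
    return cleaned


# Invented sample data: one duplicate and one invalid row.
raw = [
    {"name": "Ada ", "email": "ADA@example.com"},
    {"name": "Ada", "email": "ada@example.com"},
    {"name": "Bob", "email": "not-an-email"},
    {"name": "Cy", "email": "cy@example.com"},
]
print(clean_records(raw))
```

Only the first Ada row and the Cy row survive; pattern finding and analysis would then run on the cleaned list.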

Other Critical Tech Landmarks

There are a lot of disciplines in the tech world that go beyond web development and data management. Here are a few of them:

Cloud Computing

Cloud computing, which providers such as AWS have offered for well over a decade, is the on-demand delivery of IT resources and applications over the internet, covering every layer from servers up to applications. It lets organizations consume technology resources on a pay-as-you-go basis without having to purchase, own, and maintain the underlying infrastructure.

Cloud Computing Advantages:

The main advantages are cost savings, scalability, flexibility, and disaster recovery. Resources can be scaled with usage, so companies pay only for what they use and have their data backed up in case of an emergency.

Examples of Cloud Services:

A few popular cloud platforms are Amazon Web Services (AWS), Microsoft Azure, and Google Cloud. They provide a wide range of services for developing and managing applications, storing data, and more.

Cybersecurity

As the world continues to rely more heavily on digital technologies, cybersecurity has never been a bigger issue. Cybersecurity is the practice of protecting computer systems, networks, and data from cyber attacks.

Phishing Attacks, Malware, Ransomware, and Data Breaches:

These are common cybersecurity threats. They can have serious consequences for any organization, from financial losses to reputational harm.

Cybersecurity Best Practices:

To guard against cybersecurity threats, follow best practices such as using strong passwords and two-factor authentication, keeping software up to date, and training employees on security risks.
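What counts as a "strong" password varies by organization. As a minimal sketch, the Python check below assumes one possible policy: at least 12 characters, with lowercase, uppercase, digit, and punctuation characters all present. The function name and the thresholds are illustrative assumptions, not an official standard.

```python
import string


def is_strong_password(password, min_length=12):
    """Illustrative check: long enough and mixes four character classes."""
    checks = [
        len(password) >= min_length,
        any(c.islower() for c in password),
        any(c.isupper() for c in password),
        any(c.isdigit() for c in password),
        any(c in string.punctuation for c in password),
    ]
    return all(checks)


print(is_strong_password("password"))                 # too short, one class
print(is_strong_password("Correct-Horse-7-Battery"))  # long, four classes
```

A check like this belongs at sign-up time; it complements two-factor authentication rather than replacing it.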

Artificial Intelligence and Machine Learning

Artificial Intelligence (AI) and Machine Learning (ML) are among the fastest-growing fields in tech. Both are concerned with building systems that learn from data and identify patterns in it. They are applied to use cases such as self-driving cars and personalized recommendations on Netflix.

AI vs. ML:

AI is the broader concept of machines being able to carry out tasks in a way we would consider “smart”. Machine learning is a subset of AI that gives computers the ability to learn without being explicitly programmed.

Applications of Artificial Intelligence and Machine Learning:

Common applications include image recognition, speech-to-text, natural language processing, predictive analytics, and robotics.
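To make "learning without being explicitly programmed" concrete, here is a minimal sketch in plain Python. The program is never told the rule y = 2x + 1; it estimates the rule from example points using ordinary least squares. The data points are invented for illustration, and real ML systems use far larger datasets and richer models.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Training examples generated by the hidden rule y = 2x + 1.
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]

slope, intercept = fit_line(xs, ys)
print(slope, intercept)        # the learned parameters
print(slope * 10 + intercept)  # prediction for an unseen input, x = 10
```

The same idea, fitting parameters to examples instead of hand-coding rules, underlies the applications listed above.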

Where Web Development Meets Data Management and More

Web development, data management, cloud computing, cybersecurity, and AI/ML each matter on their own, but what is even more fascinating is where these fields converge and play off each other.

Web Development and Data Management

Web development and data management go hand in hand. Websites and web applications generate enormous amounts of data, from user interactions to transaction records. Managing this data well is key to providing a good user experience and to making decisions based on the right information.

For example, an e-commerce website needs to store product data on its servers and keep customer data in a database, linked to orders and payments. This data is needed to personalize the shopping experience and to support inventory management and fraud prevention.

Cloud Computing and Web Development

Cloud computing has revolutionized web development by giving developers a way to provision, deploy, and scale applications with little friction. Developers can now host applications and data on cloud services instead of investing in physical servers.

For example, a start-up can use cloud services to roll out its web application globally, so that users around the world can reach it without long waits or regional unavailability.

The Future of Cybersecurity and Data Management

Cybersecurity is a very important part of data management. The more data an organization collects and stores, the bigger a target it becomes for cyber threats. It is important to secure this data with robust cybersecurity measures, so that sensitive information remains intact and customer trust is not weakened.

For example, a healthcare provider has to protect patient data in order to comply with regulations such as HIPAA (the Health Insurance Portability and Accountability Act), which also sets requirements for confidentiality between providers and their patients.

Conclusion

In a nutshell, web development, data management, and the other fields covered here are integral parts of the digital world.

Whether you are a business owner, a tech enthusiast, or just planning a career in tech, it is important that you understand these fields. With technology never slowing down, they are likely to grow even more closely intertwined and become cornerstones of how we live in tomorrow's digital world.

With a fundamental knowledge of web development, data management, automation, and ML, you will be able to keep up with digital trends. Whether you have a site to build, data to manage, or are simply interested in what is current, the skills and knowledge above will serve you well in a changing tech world.

#Technology#Web Development#Front-End Development#Back-End Development#HTML#CSS#JavaScript#Data Management#Data Security#Cloud Computing#AWS (Amazon Web Services)#Cybersecurity#Artificial Intelligence (AI)#Machine Learning (ML)#Digital World#Tech Trends#IT Basics#Beginners Guide#Web Development Basics#Tech Enthusiast#Tech Career#america


Text

2024 GLOBAL TECH WRITING CONTEST

We're thrilled to announce the "2024 GLOBAL TECH WRITING CONTEST"

For more details, visit the website above and claim your writing glory!

With love,

The TECHNEBUL Team

#artificial intelligence#technology#content writing#innovation#gen ai#data analysis#cloud computing#machine learning


Text

Top 10 courses that have generally been in high demand in 2024:

Data Science and Machine Learning: Skills in data analysis, machine learning, and artificial intelligence are highly sought after in various industries.

Cybersecurity: With the increasing frequency of cyber threats, cybersecurity skills are crucial to protect sensitive information.

Cloud Computing: As businesses transition to cloud-based solutions, professionals with expertise in cloud computing, like AWS or Azure, are in high demand.

Digital Marketing: In the age of online businesses, digital marketing skills, including SEO, social media marketing, and content marketing, are highly valued.

Programming and Software Development: Proficiency in programming languages and software development skills continue to be in high demand across industries.

Healthcare and Nursing: Courses related to healthcare and nursing, especially those addressing specific needs like telemedicine, have seen increased demand.

Project Management: Project management skills are crucial in various sectors, and certifications like PMP (Project Management Professional) are highly valued.

Artificial Intelligence (AI) and Robotics: AI and robotics courses are sought after as businesses explore automation and intelligent technologies.

Blockchain Technology: With applications beyond cryptocurrencies, blockchain technology courses are gaining popularity in various sectors, including finance and supply chain.

Environmental Science and Sustainability: Courses focusing on environmental sustainability and green technologies are increasingly relevant in addressing global challenges.


#artificial intelligence#html#coding#machine learning#python#programming#indiedev#rpg maker#devlog#linux#digital marketing#top 10 high demand course#Data Science courses#Machine Learning training#Cybersecurity certifications#Cloud Computing courses#Digital Marketing classes#Programming languages tutorials#Software Development courses#Healthcare and Nursing programs#Project Management certification#Artificial Intelligence courses#Robotics training#Blockchain Technology classes#Environmental Science education#Sustainability courses


Note

Sorry if this question is too broad or imposing but I'm trying to get into modding Fallout 4 for the first time, mostly to make armor skins. What're the best tutorials? I've tried searching online but a lot of the tutorials are outdated. (Would also love to know how to bash existing skins together though modelling my own would be fun too). Again sorry for this ask I'm just so lost about how to get into modding lol.

Ahhh do not apologize for asking me about modding fallout. I could talk about the guts of this stupid game all day ahhh

modding grip^

Unfortunately I...don't know any good tutorials. I think a lot of Fo4 modders came over already knowing skyrim or FNV. Most of what I know is based on outdated guides, old loverslab threads, my existing graphic design knowledge, and trial-and-error. I think armour is the best place to start because there are so many tools available (thanks tittymodders!), and you don't have to worry about needing 3DS Max for collisions or animations.

This is the only modding tutorial I've ever watched. It's old, but good to show you a proper workflow and how to set up your files. They use creation kit, but if you're just making armour its way easier to use xEdit. I don't even have the ck installed, I do everything in xEdit.

This is an excellent guide to outfit conversions. It gets pretty in depth, but you really don't need to bother with the dismemberment section if it's just for you. It's for FG reduced but you can use it as a general guide for weighting anything for any body and getting your modded outfit game ready.

Texture edits and outfit conversions are where I started and are probably the easiest. The best thing to do is just poke around mods you like and see how they do it.

Some tools:

xEdit: Plugin editor for creation engine games. If you're doing any kind of modding you should learn how to use this. Esl-flagging, running complex sorter, making bashed patches and making your own compatibility patches are skills you need if you want to run a heavily modded game.

Icestorms texture toolbox: the best texture tool, i use the "batch processing" tab at the end to convert .png (no alpha) and .tga (alpha) files to .dds.

Nvidia texture tools exporter: lets you open .dds file in photoshop with the alpha channel intact. You don't need a nvidia card, I'm all team red. Don't bother using this to export unless you have to, its slow as fuck.

Sagethumbs: Gives .dds files thumbnails in windows explorer.

IrfanView: For quickly viewing texture files without launching photoshop. Also an excellent general image viewer.

Bethesda Archive Extractor: Crack open those .ba2 files and get to the goods.

Material Editor: What it says on the tin, lets you edit Fo4 and Fo76 material files. These are like containers that have the paths to all your textures and how they are to be shaded to attach to .nif files.

NifSkope: View and edit .nif files. Dev 7 is the recommended, but Dev 8 can open Fo76 meshes if you want to backport those.

Outfit Studio: Even if you don't use body replacers, this is an incredible tool for editing and weighting meshes. If you're making armour you need this. This is also where I make most of my mashups: you can pull parts from different outfits, slap them together, and export them quickly and easily.

Blender: It's free and it works. Learning to navigate this is going to be your biggest hurdle but it's worth it, trust me. Thankfully blender has a huge community and hundreds of tutorials. This is where I make my hi poly models and do all my retopo/uvs. I also prefer to use blender to edit meshes because it has more robust editing tools.

PyNifly: What I use to import/export .nif files from blender.

Fo4 is made in the 2013 version of 3DS Max and the havok content tools but i haven't bothered to pirate that yet. You don't need it for armour anyways.

I'm sorry this is so long and rambly. If you have a more specific question I might be more helpful ha.

#asks#fallout#fallout 4#long post#fighting to keep this short i will not infodump i will not infodump i will no- ah fuck i did it. sorry.#i got into modding by editing existing textures that weren't up to my standard#and then making outfit conversions for bt3 because almost nobody was back then#i have been basically banging my head into my computer for the last year trying to learn what little i do know#yeah i know fuck adobe but nothing is better than photoshop. just pirate it. its always morally correct to steal from adobe.#i pay for it though because i actually use adobe fonts/cloud and i am a clown#i know it sounds silly but all the best technical advice is on loverslab. just..bring an adblock..and dont open that site in public lol#please do not be scared to ask me things i want everyone to be able to mod this hell game#the best thing about fo4 is that its made of legos so you can easily pull it apart and put it back together however you want#i had never touched a 3d program before i started modding fallout last year you can do it anon! i am the monkey with a typewriter of mods#typing this with blender outfit studio and mo2 open in the background mod author sigma grindset#ive thought about making some tutorials but i have both a lisp and a stutter...that would be brutal. maybe some text ones.#god im so normal about this game


Text

April 2023

Six years of doing nothing, a lovely solution to so many problems

Almost exactly six years ago, I decided to finally try out this machine learning thing myself:

I can get started in a moment, I just have to read “Getting Started before your first lesson” first. From there I am sent on to the AWS deep learning setup video. The video is 13 minutes long.

(Problems and complications with the setup follow; the details can be read here.)

At minute 12:45 the narrator in the video says: “Ok! It looks like everything is set up correctly and you’re ready to start using it.” But instead of 12 minutes and 45 seconds, two weeks have passed, my initial enthusiasm is used up, and my interest in deep learning has flagged. I never even made it to “Lesson 1”.

In April 2023, Aleks says he is currently taking a very good online course on machine learning. I ask for the address, and it looks familiar. It is the same course!

“The setup wasn't a problem?” I ask. No, says Aleks, a matter of a few minutes.

I take a look at “Practical Deep Learning for Coders 2022”. The course requires certain hardware. In general, machine learning needs graphics processors because of their higher computing power, and from the introduction to the course I now know that the currently available tools require Nvidia graphics processors*. You are supposed to rent access to this hardware. That was already the case six years ago, except that renting computing power from Amazon Web Services was a complicated and expensive affair.

* I had already written “graphics cards” here, but then it seemed to me once again that I ought to renovate my vocabulary. In my mind, this is a plug-in card, roughly 10 x 20 cm in size, that gets installed in a PC case. That is how it was when I still bought my computers as individual parts, but that was twenty years ago. So I settled on the noncommittal term “graphics processors”. But when I search for nvidia gpu machine learning, I see bulky things that are not far from my memory of graphics cards. Great computing power also needs great cooling power, which is why there are two fans on the ... well, card. The image search results are somewhat inconclusive, but it seems to me that the data center whose power I am about to use probably contains large cases with large graphics cards inside, still roughly the same format as twenty years ago. Only much faster.

By 2018, you no longer needed AWS for the fast.ai online course. Instead, you could set up your working environment at Paperspace, another cloud provider. The 2018 instructions sound as if my patience probably would not have been enough for that either.

The 2019 version of the course relied on Google Colab. That means you can run Jupyter Notebooks on Google's servers and need no Python installation of your own, only a browser. Colab did not exist yet in 2017; it was only released to the public a few months after my failure, in autumn 2017. Still, the 2019 instructions sound complicated.

In 2020 it already seems more doable.

The current version of the course is also based on Colab. You have to set up a Kaggle account for it. As far as I understand it so far, this Kaggle access serves to make the whole thing free. Colab would otherwise cost money, less than I paid in 2017, but money nonetheless. Or perhaps the Jupyter Notebooks with the course exercises live on Kaggle, no idea, you just need it. (Update: In chapter 2 of the course I realize that it is different yet again; you could have chosen between Colab and Kaggle. In summary: I don't understand it.)

I create a Kaggle account and look at the first Python notebook of the course. It begins with a test that merely checks whether you are allowed to use computing power at Kaggle at all. That only works once you have entered a phone number and then a verification code that is sent to that number. But the problem is part of the course flow and is therefore explained at exactly the point where it occurs. It costs me five minutes, most of which are spent waiting for the SMS with the code to be delivered.

After that it still doesn't work. When I try to run the first lines of code, I get an error message telling me to switch on the internet:

“STOP: No internet. Click ‘>|’ in top right and set ‘Internet’ switch to on.”

I spend a long time looking at everything that could be meant by “top right”, but there is no such switch. Eventually I google the error message. Others have had the problem before and solved it. The switch neither looks the way the error message suggests, nor is it in the top right. You have to expand a few menus and collapse another, and then it becomes visible in the bottom right.

So I am on the internet and first have to switch on the internet so that I can do things on the internet.

Aleks says that if I had listened to him yesterday while he swore loudly for a quarter of an hour, I would already have known how to do it. But I hadn't.

After switching on the internet, I can look at the first Jupyter notebook of the course and try out for myself whether it is hard to tell frogs from cats. Solving all the startup problems of 2017 took me two weeks. In 2023 it still takes a quarter of an hour, and I am confident that around 2025 you will be able to jump straight into the course.

(Kathrin Passig)

#Kathrin Passig#fast.ai#Deep Learning#Machine Learning#Onlinekurs#Amazon AWS#Paperspace#Colab#Google Colaboratory#Google Colab#Kaggle#Fehlermeldung#für den Internetzugang braucht man Internet#Cloud Computing#Jupyter Notebooks#Sprachgebrauch#Grafikkarte#best of


Note

i mean yeah the first thing i did was look for vermillioncrown or rabittotouka but i already figured you used a different username

1) you didn't find it? the perma url was stuck on vermillioncrown for a long time

2) list failed approaches, too--helpful for documentation and being methodical

(unless that's cached from my own browsing history...)

anyways. i think I'm gonna nuke my ff.net account soon, no point if i don't use it anymore

#inquiry#anonymous#which means the fic will be going away#my philosophy on unfinished fics + deleting works is#you can hope an author keeps things up but there's no imperative for them to do so#and as someone who read much more than she has written...#if you have the type of emotional dependency on anything such that you'll fall to pieces if it ever disappears#find more things you value in your life. things come and go. we will all pass on one day#^(learned from accidentally nuking many computers/digital storage pre-cloud)


Text

Big Tech companies have been leveraging the power of cloud computing and artificial intelligence (AI) to create innovative solutions for their customers. By forming strategic alliances with AI groups, these companies are able to gain access to cutting-edge technology that can be used to develop products and services that can revolutionize their businesses. These alliances also allow them to tap into a vast pool of talent and expertise in order to drive innovation in the fields of AI, machine learning, and cloud computing. As a result, Big Tech firms are able to stay ahead of the competition by utilizing the latest advancements in technology.


Text

The Rapid Advancement of Technology: A Look at the Latest Developments

Technology is constantly evolving, and it can be hard to keep up with the latest advancements. From artificial intelligence to virtual reality, technology is becoming more and more advanced at a rapid pace. In this blog post, we'll take a look at some of the most exciting and innovative technology developments of recent years, and explore how these advancements are changing the way we live and work.

Artificial intelligence

Artificial intelligence (AI) is one of the most talked-about technologies of recent years. From voice assistants like Siri and Alexa to self-driving cars, AI is becoming increasingly integrated into our daily lives.

One of the most impressive developments in AI is the creation of machine learning algorithms. These algorithms allow computers to learn and adapt without being explicitly programmed, enabling them to perform tasks that were once thought to be impossible. For example, machine learning algorithms have been used to create image and speech recognition software, allowing computers to identify and classify objects and sounds with impressive accuracy.

Virtual and augmented reality

Virtual reality (VR) and augmented reality (AR) are technologies that allow users to experience computer-generated environments in a more immersive way. VR allows users to fully enter a virtual world, while AR overlays digital information onto the real world.

These technologies have a wide range of applications, from gaming and entertainment to education and training. For example, VR can be used to create immersive experiences for gamers, while AR can be used to provide training simulations for pilots or surgeons.

The Internet of Things

The Internet of Things (IoT) refers to the interconnected network of physical devices that can collect and exchange data. These devices can include anything from smart thermostats and security cameras to wearable fitness trackers and smart appliances.

The IoT has the potential to revolutionize the way we interact with the world around us. For example, smart home devices can be programmed to adjust the temperature or turn off the lights when you leave the house, saving energy and making life more convenient.

As technology continues to advance, it's clear that it will have a significant impact on the way we live and work. From AI and VR to the IoT, these developments are already changing the way we interact with the world around us, and it's exciting to think about what the future may hold. As technology continues to evolve, it's important to stay informed about the latest developments and consider how they may affect our lives.

#Technology#Advanced Technology#Future#IT#Information technology#Innovation#Gadgets#Software#Apps#Hardware#Internet#Cybersecurity#Artificial intelligence#Machine learning#Data science#Cloud computing#Internet of Things (IoT)#Virtual reality#Augmented reality#Robotics#3D printing#Blockchain#Tech industry#Startup#Entrepreneurship


Text

Top Skill development courses for Students to get Good Placement

Please like and follow this page to keep us motivated towards bringing useful content for you.

Nowadays, educated unemployment is a big concern for a country like India. With the largest youth population in the world, India has huge potential to become a developed nation in the next few years. But that is only possible if young people contribute to the economy by learning skills that are in global demand. However, course structures in colleges are outdated and do not make students job…


#Artificial Intelligence and Machine learning#Books for Artificial Intelligence and Machine Learning#Books for coding#Books for cyber security#Books for data science#Books for Digital Marketing#Books for Placement#Cloud computing#College Placement#Cyber Security#Data science and analytics#digital marketing#Graphic Design#high package#Programming and software development#Project Management#Sales and Business Development#Skill development#Web Development

Final Assignment

Name: Avira

Platform: Bisa AI

Course: Mengenal Cloud Computing (Introduction to Cloud Computing)

Task:

Try to filter the IP data from the SSH log available at https://expbig.bisaai.id/auth.log. Extract the list of IPs, then trace the location of the filtered IPs by country.

Submission instructions:

1. File format: PDF

2. Maximum file size: 2 MB

Answer:

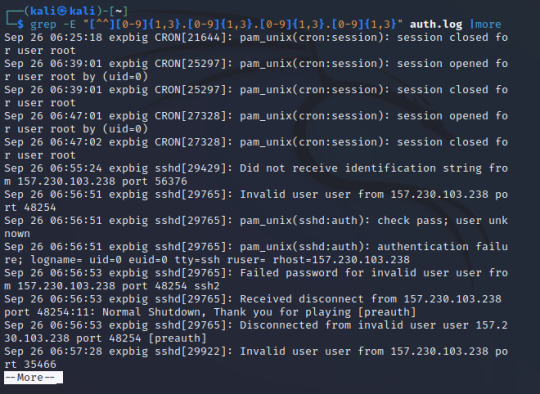

Open the Linux distribution of your choice. As an example, the user uses Kali Linux.

Open the Terminal from the taskbar. Run the wget command in the format: wget url

Wait until the process finishes.

Because the user does not know in advance which IPs to trace, the grep pattern relies on character classes. Each set is denoted by []. An IP address consists of digits, so the character class needed is [0-9], which covers every possible digit. An address has 4 groups of up to 3 digits each, so each octet must match at least one digit but no more than 3. This is expressed by adding {}, which gives [0-9]{1,3}. Because an unescaped . matches any character in a regular expression, the dots between octets are escaped as \.

So the full pattern for an IP address is [0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}

Command used: grep -E "[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}" auth.log | more

From the output above, the IP is 157.230.103.238.

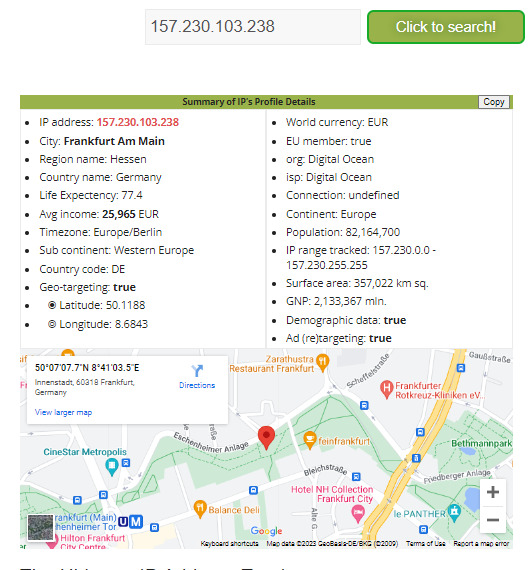

To trace that IP address, the user uses the website opentracker.net

It can be concluded that the IP originates from Frankfurt, Hessen, Germany.
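The filtering step can also be scripted. What follows is only a minimal sketch in Python, not part of the original answer. It assumes the log has already been downloaded and is read line by line. The names `IPV4_PATTERN`, `is_valid_ipv4`, and `count_ips` are hypothetical, and the sample log lines are made up for illustration. It uses the same four-octet pattern and additionally drops candidates whose octets exceed 255. Looking up the country for each address would still need an external service or database, which is not shown here.

```python
import re
from collections import Counter

# Four groups of 1-3 digits separated by literal dots. The \b anchors keep
# a match from starting or ending in the middle of a longer digit run.
IPV4_PATTERN = re.compile(r"\b(?:[0-9]{1,3}\.){3}[0-9]{1,3}\b")


def is_valid_ipv4(candidate):
    """Return True when every octet is in the range 0-255."""
    return all(0 <= int(octet) <= 255 for octet in candidate.split("."))


def count_ips(lines):
    """Count how often each valid IPv4 address appears in the given lines."""
    counts = Counter()
    for line in lines:
        for candidate in IPV4_PATTERN.findall(line):
            if is_valid_ipv4(candidate):
                counts[candidate] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical sample lines written in the style of an SSH auth.log.
    sample = [
        "Jan 10 03:12:01 host sshd[1234]: Failed password for root from 157.230.103.238 port 52344 ssh2",
        "Jan 10 03:12:05 host sshd[1235]: Failed password for admin from 157.230.103.238 port 52350 ssh2",
        "Jan 10 03:13:10 host sshd[1240]: Invalid user test from 192.0.2.10 port 40122",
    ]
    for ip, hits in count_ips(sample).most_common():
        print(ip, hits)
```

To run it against the real file, the sample list could be replaced by an open file handle, for example `count_ips(open("auth.log"))`.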

Most in-demand Azure cloud skills that IT professionals should have

In today’s competitive job market, the right skill set is key to success, and Azure cloud skills are becoming increasingly important for IT professionals and developers. With Azure cloud services, organizations can build secure and reliable applications that scale up or down as needed. Azure skills can be used to develop applications that run in the cloud, manage data, automate processes, and deploy solutions quickly and efficiently. They also allow developers to create hybrid solutions by combining on-premises resources with public cloud offerings.

The right Azure cloud skills can open up new opportunities for career growth and salary potential, so IT professionals need to stay up to date with developments in this field to remain competitive. The following are some of the most in-demand Azure cloud skills that IT professionals should have:

1. Azure cloud services: Developing applications that run on Microsoft Azure, managing data, automating processes, and deploying solutions quickly and efficiently.

2. Azure IaaS: Building secure, reliable applications by combining public cloud resources with on-premises resources in hybrid solutions.

3. Windows Server: Familiarity with this server operating system, which developers need in order to build solutions for public or private clouds, including Microsoft Azure hybrid solutions.

4. Windows: Using the platform's development toolset and its wide range of applications to harness the power of the cloud.

#Azure#Cloud#Cloud computing#Microsoft Azure#Azure Active Directory#Azure Virtual Machines#Azure Storage#Azure Networking#Azure DevOps#Azure Kubernetes Service#Azure SQL#Azure Machine Learning#Azure Automation#Azure Security#Azure IoT#Azure Functions#Azure Logic Apps#Azure App Service#Azure ExpressRoute#Azure Monitor#Azure Cost Management#Azure Backup#Azure Site Recovery#Azure AD B2C#Azure AD B2B

New Post has been published on Books by Caroline Miller

New Post has been published on https://www.booksbycarolinemiller.com/musings/its-bouba-kiki-time/

It's Bouba/Kiki Time!

The picture on my Facebook page was of a set of stairs. I recognized them as those that led to a Chinese restaurant I frequented when I worked in the city. The food was good and cheap, and customers appreciated the fast service. If I arrived for lunch alone, I’d usually find a table of friends who would make room for me. “Is the restaurant still thriving?” I typed in the comment section. A wave of nostalgia swept over me as I wrote. The reply came as a disappointment. “It’s been out of business for 20 years!”

Moments later, I sat down to glance through my new alumni magazine. I turned to the “In Memoriam” section to verify that, unlike Tuck Lung, I hadn’t gone out of business. Relieved that my name didn’t appear, I flipped to the opening remarks of the college President. A line in her message stood out. “…the measure of student success and the value of a college diploma can[’t] be measured by a paycheck.” (Reed Magazine, September 2022, pg. 2.) I gave an involuntary nod as I read. Life without learning is a bowl of unsalted potato chips. It lacks piquancy.

An ancient mystic was once asked what he’d miss most about life after he’d died. He said he’d miss watching the clouds. As I was young and made no sense of his remark, I presumed it was profound. Today, I’d disagree. What I’d miss was learning. Thankfully, at 86, I remain capable of it.

For example, the same alumni publication taught me about Bouba/kiki. I’ll explain with a question. Which of the two words above evokes an image of roundness? Which suggests spikes? Generally, people throughout the globe respond with the same answer. Bouba is round. Kiki is spiked. Scientists suppose our responses are congruent because the brain links visual and oral experiences according to how we shape our spoken words.

Knowing the difference between Bouba and kiki won’t add a penny to my purse. I am richer because I’ve discovered another connection in this infinitely connected universe. Keeping current with the times has value. I won’t drive to Tuck Lung for a plate of Egg Foo Young when I have a craving. Imagine the consequence of postponing computer upgrades. I raise this example for a reason. My desktop needs an overhaul. Next week reprise blogs will appear on my page as placeholders. After the technician finishes messing with my data and my life, I face a system learning curve. I wish I could embrace the challenge with the sense of fun I experienced when I discovered Bouba/kiki.

#Bouba/kiki#clouds#computer upgrades#keeping current#language and visualization#Reed College#value of learning

New security protocol shields data from attackers during cloud-based computation

New Post has been published on https://thedigitalinsider.com/new-security-protocol-shields-data-from-attackers-during-cloud-based-computation/

Deep-learning models are being used in many fields, from health care diagnostics to financial forecasting. However, these models are so computationally intensive that they require the use of powerful cloud-based servers.

This reliance on cloud computing poses significant security risks, particularly in areas like health care, where hospitals may be hesitant to use AI tools to analyze confidential patient data due to privacy concerns.

To tackle this pressing issue, MIT researchers have developed a security protocol that leverages the quantum properties of light to guarantee that data sent to and from a cloud server remain secure during deep-learning computations.

By encoding data into the laser light used in fiber optic communications systems, the protocol exploits the fundamental principles of quantum mechanics, making it impossible for attackers to copy or intercept the information without detection.

Moreover, the technique guarantees security without compromising the accuracy of the deep-learning models. In tests, the researchers demonstrated that their protocol could maintain 96 percent accuracy while ensuring robust security measures.

“Deep learning models like GPT-4 have unprecedented capabilities but require massive computational resources. Our protocol enables users to harness these powerful models without compromising the privacy of their data or the proprietary nature of the models themselves,” says Kfir Sulimany, an MIT postdoc in the Research Laboratory for Electronics (RLE) and lead author of a paper on this security protocol.

Sulimany is joined on the paper by Sri Krishna Vadlamani, an MIT postdoc; Ryan Hamerly, a former postdoc now at NTT Research, Inc.; Prahlad Iyengar, an electrical engineering and computer science (EECS) graduate student; and senior author Dirk Englund, a professor in EECS, principal investigator of the Quantum Photonics and Artificial Intelligence Group and of RLE. The research was recently presented at the Annual Conference on Quantum Cryptography.

A two-way street for security in deep learning

The cloud-based computation scenario the researchers focused on involves two parties — a client that has confidential data, like medical images, and a central server that controls a deep learning model.

The client wants to use the deep-learning model to make a prediction, such as whether a patient has cancer based on medical images, without revealing information about the patient.

In this scenario, sensitive data must be sent to generate a prediction. However, during the process the patient data must remain secure.

Also, the server does not want to reveal any parts of the proprietary model that a company like OpenAI spent years and millions of dollars building.

“Both parties have something they want to hide,” adds Vadlamani.

In digital computation, a bad actor could easily copy the data sent from the server or the client.

Quantum information, on the other hand, cannot be perfectly copied. The researchers leverage this property, known as the no-cloning principle, in their security protocol.

For the researchers’ protocol, the server encodes the weights of a deep neural network into an optical field using laser light.

A neural network is a deep-learning model that consists of layers of interconnected nodes, or neurons, that perform computation on data. The weights are the components of the model that do the mathematical operations on each input, one layer at a time. The output of one layer is fed into the next layer until the final layer generates a prediction.
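The layer-by-layer flow described above can be sketched in a few lines of Python. This is a purely classical toy illustration, not the researchers' optical protocol. The function names `relu`, `dense`, and `forward`, the ReLU activation between layers, and the example weights are all assumptions made for the sketch.

```python
def relu(values):
    """Assumed activation: replace negative values with zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights):
    """One layer: each output neuron is a weighted sum of all inputs."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


def forward(inputs, layers):
    """Feed the output of each layer into the next; the last layer's output is the prediction."""
    activations = inputs
    for index, weights in enumerate(layers):
        activations = dense(activations, weights)
        # Apply the activation between layers, but not after the final one.
        if index < len(layers) - 1:
            activations = relu(activations)
    return activations


if __name__ == "__main__":
    # Hypothetical weights: a 2-input, 2-neuron layer followed by a 1-neuron output layer.
    layers = [
        [[1.0, -1.0], [0.5, 0.5]],
        [[1.0, 2.0]],
    ]
    print(forward([2.0, 1.0], layers))
```

In the protocol, the weights are what the server wants to protect and the inputs are what the client wants to protect. The sketch shows only the arithmetic that both sides need to have carried out.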

The server transmits the network’s weights to the client, which implements operations to get a result based on their private data. The data remain shielded from the server.

At the same time, the security protocol allows the client to measure only one result, and it prevents the client from copying the weights because of the quantum nature of light.

Once the client feeds the first result into the next layer, the protocol is designed to cancel out the first layer so the client can’t learn anything else about the model.

“Instead of measuring all the incoming light from the server, the client only measures the light that is necessary to run the deep neural network and feed the result into the next layer. Then the client sends the residual light back to the server for security checks,” Sulimany explains.

Due to the no-cloning theorem, the client unavoidably applies tiny errors to the model while measuring its result. When the server receives the residual light from the client, the server can measure these errors to determine if any information was leaked. Importantly, this residual light is proven to not reveal the client data.

A practical protocol

Modern telecommunications equipment typically relies on optical fibers to transfer information because of the need to support massive bandwidth over long distances. Because this equipment already incorporates optical lasers, the researchers can encode data into light for their security protocol without any special hardware.

When they tested their approach, the researchers found that it could guarantee security for server and client while enabling the deep neural network to achieve 96 percent accuracy.

The tiny bit of information about the model that leaks when the client performs operations amounts to less than 10 percent of what an adversary would need to recover any hidden information. Working in the other direction, a malicious server could only obtain about 1 percent of the information it would need to steal the client’s data.

“You can be guaranteed that it is secure in both ways — from the client to the server and from the server to the client,” Sulimany says.

“A few years ago, when we developed our demonstration of distributed machine learning inference between MIT’s main campus and MIT Lincoln Laboratory, it dawned on me that we could do something entirely new to provide physical-layer security, building on years of quantum cryptography work that had also been shown on that testbed,” says Englund. “However, there were many deep theoretical challenges that had to be overcome to see if this prospect of privacy-guaranteed distributed machine learning could be realized. This didn’t become possible until Kfir joined our team, as Kfir uniquely understood the experimental as well as theory components to develop the unified framework underpinning this work.”

In the future, the researchers want to study how this protocol could be applied to a technique called federated learning, where multiple parties use their data to train a central deep-learning model. It could also be used in quantum operations, rather than the classical operations they studied for this work, which could provide advantages in both accuracy and security.

“This work combines in a clever and intriguing way techniques drawing from fields that do not usually meet, in particular, deep learning and quantum key distribution. By using methods from the latter, it adds a security layer to the former, while also allowing for what appears to be a realistic implementation. This can be interesting for preserving privacy in distributed architectures. I am looking forward to seeing how the protocol behaves under experimental imperfections and its practical realization,” says Eleni Diamanti, a CNRS research director at Sorbonne University in Paris, who was not involved with this work.

This work was supported, in part, by the Israeli Council for Higher Education and the Zuckerman STEM Leadership Program.

#ai#ai tools#approach#artificial#Artificial Intelligence#attackers#author#Building#Cancer#classical#Cloud#cloud computing#communications#computation#computer#Computer Science#computing#conference#cryptography#cybersecurity#data#Deep Learning#detection#diagnostics#direction#education#Electrical engineering and computer science (EECS)#Electronics#engineering#equipment
