#regulatory capture

Text

Greenwashing set Canada on fire

On September 22, I'm (virtually) presenting at the DIG Festival in Modena, Italy. On September 27, I'll be at Chevalier's Books in Los Angeles with Brian Merchant for a joint launch for my new book The Internet Con and his new book, Blood in the Machine.

As a teenager growing up in Ontario, I always envied the kids who spent their summers tree planting; they'd come back from the bush in September, insect-chewed and leathery, with new muscle, incredible stories, thousands of dollars, and a glow imparted by the knowledge that they'd made a new forest with their own blistered hands.

I was too unathletic to follow them into the bush, but I spent my summers doing my bit, ringing doorbells for Greenpeace to get my neighbours fired up about the Canadian pulp-and-paper industry, which wasn't merely clear-cutting our old-growth forests – it was also poisoning the Great Lakes system with PCBs, threatening us all.

At the time, I thought of tree-planting as a small victory – sure, our homegrown, rapacious, extractive industry was able to pollute with impunity, but at least the government had reined them in on forests, forcing them to pay my pals to spend their summers replacing the forests they'd fed into their mills.

I was wrong. Last summer's Canadian wildfires blanketed the whole east coast and midwest in choking smoke as millions of trees burned and millions of tons of CO2 were sent into the atmosphere. Those wildfires weren't just an effect of the climate emergency: they were made far worse by all those trees planted by my pals in the eighties and nineties.

Writing in the New York Times, novelist Claire Cameron describes her own teen years working in the bush, planting row after row of black spruces, precisely spaced at six-foot intervals:

https://www.nytimes.com/2023/09/15/opinion/wildfires-treeplanting-timebomb.html

Cameron's summer job was funded by the logging industry, whose self-regulated, self-assigned "penalty" for clearcutting diverse forests of spruce, pine and aspen was to pay teenagers to create a tree farm, at nine cents per sapling (minus camp costs).

Black spruces are made to burn, filled with flammable sap and equipped with resin-filled cones that rely on fire, only opening and dropping seeds when they're heated. They're so flammable that firefighters call them "gas on a stick."

Cameron and her friends planted under brutal conditions: working long hours in blowlamp heat and dripping wet bulb humidity, amidst clouds of stinging insects, fingers blistered and muscles aching. But when they hit rock bottom and were ready to quit, they'd encourage one another with a rallying cry: "Let's go make a forest!"

Planting neat rows of black spruces was great for the logging industry: the even spacing guaranteed that when the trees matured, they could be easily reaped, with ample space between each near-identical tree for massive shears to operate. But that same monocropped, evenly spaced "forest" was also optimized to burn.

It burned.

The climate emergency's frequent droughts turn black spruces into "something closer to a blowtorch." The "pines in lines" approach to reforesting was an act of sabotage, not remediation. Black spruces are thirsty, and they absorb the water that moss needs to thrive, producing "kindling in the place of fire retardant."

Cameron's column concludes with this heartbreaking line: "Now when I think of that summer, I don’t think that I was planting trees at all. I was planting thousands of blowtorches a day."

The logging industry committed a triple crime. First, they stole our old-growth forests. Next, they (literally) planted a time-bomb across Ontario's north. Finally, they stole the idealism of people who genuinely cared about the environment. They taught a generation that resistance is futile, that anything you do to make a better future is a scam, and you're a sucker for falling for it. They planted nihilism with every tree.

That scam never ended. Today, we're sold carbon offsets, a modern Papal indulgence. We are told that if we pay the finance sector, they can absolve us for our climate sins. Carbon offsets are a scam, a market for lemons. The "offset" you buy might be generated by a fake charity like the Nature Conservancy, who use well-intentioned donations to buy up wildlife reserves that can't be logged, which are then converted into carbon credits by promising not to log them:

https://pluralistic.net/2020/12/12/fairy-use-tale/#greenwashing

The credit-card company that promises to plant trees every time you use your card? They combine false promises, deceptive advertising, and legal threats against critics to convince you that you're saving the planet by shopping:

https://pluralistic.net/2021/11/17/do-well-do-good-do-nothing/#greenwashing

The carbon offset world is full of scams. The carbon offset that made the thing you bought into a "net zero" product? It might be a forest that already burned:

https://pluralistic.net/2022/03/11/a-market-for-flaming-lemons/#money-for-nothing

The only reason we have carbon offsets is that market cultists have spent forty years convincing us that actual regulation is impossible. In the neoliberal learned helplessness mind-palace, there's no way to simply say, "You may not log old-growth forests." Rather, we have to say, "We will 'align your incentives' by making you replace those forests."

The Climate Ad Project's "Murder Offsets" video deftly punctures this bubble. In it, a detective points his finger at the man who committed the locked-room murder in the isolated mansion. The murderer cheerfully admits that he did it, but produces a "murder offset," which allowed him to pay someone else not to commit a murder, using market-based price-discovery mechanisms to put a dollar-figure on the true worth of a murder, which he duly paid, making his kill absolutely fine:

https://pluralistic.net/2021/04/14/for-sale-green-indulgences/#killer-analogy

What's the alternative to murder offsets/carbon credits? We could ask our expert regulators to decide which carbon-intensive activities are necessary and which ones aren't, and ban the unnecessary ones. We could ask those regulators to devise remediation programs that actually work. After all, there are plenty of forests that have already been clearcut, plenty that have burned. It would be nice to know how we can plant new forests there that aren't "thousands of blowtorches."

If that sounds implausible to you, then you've gotten trapped in the neoliberal mind-palace.

The term "regulatory capture" was popularized by far-right Chicago School economists who were promoting "public choice theory." In their telling, regulatory capture is inevitable, because companies will spend whatever it takes to get the government to pass laws making what they do legal, and making competing with them into a crime:

https://pluralistic.net/2022/06/13/public-choice/#ajit-pai-still-terrible

This is true, as far as it goes. Capitalists hate capitalism, and if an "entrepreneur" can make it illegal to compete with him, he will. But while this is a reasonable starting-point, the place that Public Choice Theory weirdos get to next is bonkers. They say that since corporations will always seek to capture their regulators, we should abolish regulators.

They say that it's impossible for good regulations to exist, and therefore the only regulation that is even possible is to let businesses do whatever they want and wait for the invisible hand to sweep away the bad companies. Rather than creating hand-washing rules for restaurant kitchens, we should let restaurateurs decide whether it's economically rational to make us shit ourselves to death. The ones that choose poorly will get bad online reviews and people will "vote with their dollars" for the good restaurants.

And if the online review site decides to sell "reputation management" to restaurants that get bad reviews? Well, soon the public will learn that the review site can't be trusted and they'll take their business elsewhere. No regulation needed! Unleash the innovators! Set the job-creators free!

This is the Ur-nihilism from which all the other nihilism springs. It contends that the regulations we have – the ones that keep our buildings from falling down on our heads, that keep our groceries from poisoning us, that keep our cars from exploding on impact – are either illusory, or perhaps the forgotten art of a lost civilization. Making good regulations is like embalming Pharaohs, something the ancients practiced in mist-shrouded, unrecoverable antiquity – and that may not have happened at all.

Regulation is corruptible, but it need not be corrupt. Regulation, like science, is a process of neutrally adjudicated, adversarial peer-review. In a robust regulatory process, multiple parties respond to a fact-intensive question – "what alloys and other properties make a reinforced steel joist structurally sound?" – with a mix of robust evidence and self-serving bullshit and then proceed to sort the two by pantsing each other, pointing out one another's lies.

The regulator, an independent expert with no conflicts of interest, sorts through the claims and counterclaims and makes a rule, showing their workings and leaving the door open to revisiting the rule based on new evidence or challenges to the evidence presented.

But when an industry becomes concentrated, it becomes unregulatable. 100 small and medium-sized companies will squabble. They'll struggle to come up with a common lie. There will always be defectors in their midst. Their conduct will be legible to external experts, who will be able to spot the self-serving BS.

But let that industry dwindle to a handful of giant companies, let them shrink to a number that will fit around a boardroom table, and they will sit down at a table and agree on a cozy arrangement that fucks us all over to their benefit. They will become so inbred that the only people who understand how they work will be their own insiders, and so top regulators will be drawn from their own number and be hopelessly conflicted.

When the corporate sector takes over, regulatory capture is inevitable. But corporate takeover isn't inevitable. We can – and have, and will again – fight corporate power, with antitrust law, with unions, and with consumer rights groups. Knowing things is possible. It simply requires that we keep the entities that profit by our confusion poor and thus weak.

The thing is, corporations don't always lie about regulations. Take the fight over working encryption, which – once again – the UK government is trying to ban:

https://www.theguardian.com/technology/2023/feb/24/signal-app-warns-it-will-quit-uk-if-law-weakens-end-to-end-encryption

Advocates for criminalising working encryption insist that the claims that this is impossible are the same kind of self-serving nonsense as claims that banning clearcutting of old-growth forests is impossible:

https://twitter.com/JimBethell/status/1699339739042599276

They say that when technologists say, "We can't make an encryption system that keeps bad guys out but lets good guys in," they are being lazy and unimaginative. "I have faith in you geeks," they say. "Go nerd harder! You'll figure it out."

Google and Apple and Meta say that selectively breakable encryption is impossible. But they also claim that a bunch of eminently possible things are impossible. Apple claims that it's impossible to have a secure device where you get to decide which software you want to use and where publishers aren't deprived of 30 cents on every dollar you spend. Google says it's impossible to search the web without being comprehensively, nonconsensually spied upon from asshole to appetite. Meta insists that it's impossible to have digital social relationships without having your friendships surveilled and commodified.

While they're not lying about encryption, they are lying about these other things. Sorting out the lies from the truth is the job of regulators, but that job is nearly impossible thanks to the fact that everyone who runs a large online service tells the same lies – and the regulators themselves are alumni of the industry's upper echelons.

Logging companies know a lot about forests. When we ask, "What is the best way to remediate our forests," the companies may well have useful things to say. But those useful things will be mixed with actively harmful lies. The carefully cultivated incompetence of our regulators means that they can't tell the difference.

Conspiratorialism is characterized as a problem of what people believe, but the true root of conspiracy belief isn't what we believe, it's how we decide what to believe. It's not beliefs, it's epistemology.

Because most of us aren't qualified to sort good reforesting programs from bad ones. And even if we are, we're probably not also well-versed enough in cryptography to sort credible claims about encryption from wishful thinking. And even if we're capable of making that determination, we're not experts in food hygiene or structural engineering.

Daily life in the 21st century means resolving a thousand life-or-death technical questions every day. Our regulators – corrupted by literally out-of-control corporations – are no longer reliable sources of ground truth on these questions. The resulting epistemological chaos is a cancer that gnaws away at our resolve to do anything about it. It is a festering pool where nihilism outbreaks are incubated.

The liberal response to conspiratorialism is mockery. In her new book Doppelganger, Naomi Klein tells of how right-wing surveillance fearmongering about QR-code "vaccine passports" was dismissed with a glib, "Wait until they hear about cellphones!"

https://pluralistic.net/2023/09/05/not-that-naomi/#if-the-naomi-be-klein-youre-doing-just-fine

But as Klein points out, it's not good that our cellphones invade our privacy in the way that right-wing conspiracists thought that vaccine passports might. The nihilism of liberalism – which insists that things can't be changed except through market "solutions" – leads us to despair.

By contrast, leftism – a muscular belief in democratic, publicly run planning and action – offers a tonic to nihilism. We don't have to let logging companies decide whether a forest can be cut, or what should be planted when it is. We can have nice things. The art of finding out what's true or prudent didn't die with the Reagan Revolution (or the discount Canadian version, the Mulroney Malaise). The truth is knowable. Doing stuff is possible. Things don't have to be on fire.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/09/16/murder-offsets/#pulped-and-papered

#pluralistic#logging#pulp and paper#ontario#greenwashing#a market for lemons#incentives matter#capitalism#late-stage capitalism#climate emergency#wildfires#canada#canpoli#ontpoli#carbon offsets#self-regulation#nerd harder#epistemological chaos#regulatory capture#Claire Cameron#pines in lines

3K notes


Text

Cory Doctorow: Facebook is secretly funding a sock-puppet organization to astroturf up opposition to Big Tech regulation.

#Facebook#Big Tech#monopoly#astroturf#sock puppets#cory doctorow#Pluralistic#regulation#regulatory capture#government

45 notes


Text

What happened to the cycle of renewal? Where are the regular, controlled burns?

Like the California settlers who subjugated the First Nations people and declared war on good fire, the finance sector conquered the tech sector.

It started in the 1980s, the era of personal computers — and Reaganomics. A new economic and legal orthodoxy took hold, one that celebrated monopolies as “efficient,” and counseled governments to nurture and protect corporations as they grew both too big to fail, and too big to jail.

For 40 years, we’ve been steadily reducing antitrust enforcement. That means a company like Google can create a single great product (a search engine) and use investors’ cash to buy a mobile stack, a video stack, an ad stack, a server-management stack, a collaboration stack, a maps and navigation stack — all while repeatedly failing to succeed with any of its in-house products.

It’s hard to appreciate just how many companies tech giants buy. Apple buys other companies more often than you buy groceries.

These giants buy out their rivals specifically to make sure you can’t leave their walled gardens. As Mark Zuckerberg says, “It is better to buy than to compete” (which is why Zuckerberg bought Instagram, telling his CFO that it was imperative that they do the deal because Facebook users preferred Insta to FB, and were defecting in droves).

As these companies “merge to monopoly,” they are able to capture their regulators, ensuring that the law doesn’t interfere with their plans for literal world domination.

When a sector consists of just a handful of companies, it becomes cozy enough to agree on — and win — its lobbying priorities. That’s why America doesn’t have a federal privacy law. It’s why employees can be misclassified as “gig worker” contractors and denied basic labor protections.

It’s why companies can literally lock you out of your home — and your digital life — by terminating your access to your phone, your cloud, your apps, your thermostat, your door-locks, your family photos, and your tax records, with no appeal — not even the right to sue.

But regulatory capture isn’t merely about ensuring that tech companies can do whatever they want to you. Tech companies are even more concerned with criminalizing the things you want to do to them.

Frank Wilhoit described conservatism as “exactly one proposition”:

There must be in-groups whom the law protects but does not bind, alongside out-groups whom the law binds but does not protect.

This is likewise the project of corporatism. Tech platforms are urgently committed to ensuring that they can do anything they want on their platforms — and they’re even more dedicated to the proposition that you must not do anything they don’t want on their platforms.

They can lock you in. You can’t unlock yourself. Facebook attained network-effects growth by giving its users bots that logged into Myspace on their behalf, scraped the contents of their inboxes for the messages from the friends they left behind, and plunked them in their Facebook inboxes.

Facebook then sued a company that did the same thing to Facebook, one that wanted to make it as easy for Facebook users to leave Facebook as it had been to get started there.

Apple reverse-engineered Microsoft’s crown jewels — the Office file-formats that kept users locked to its operating systems — so it could clone them and let users change OSes.

Try to do that today — say, to make a runtime so you can use your iOS apps and media on an Android device or a non-Apple desktop — and Apple will reduce you to radioactive rubble.

Big Tech has a million knobs on the back-end that they can endlessly twiddle to keep you locked in — and, just as importantly, they have convinced governments to ban any kind of twiddling back.

This is “felony contempt of business model.”

Governments hold back from passing and enforcing laws that limit the tech giants in the name of nurturing their “efficiency.”

But when states act to prevent new companies — or users, or co-ops, or nonprofits — from making it easier to leave the platforms, they do so in the name of protecting us.

Rather than passing a privacy law that would let them punish Meta, Apple, Google, Oracle, Microsoft and other spying companies, they ban scraping and reverse-engineering because someone might violate the privacy of the users of those platforms.

But a privacy law would control both scrapers and silos, banning tech giants from spying on their users, and banning startups and upstarts from spying on those users, too.

Rather than breaking up ad-tech, banning surveillance ads, and opening up app stores, which would make tech platforms stop stealing money from media companies through ad-fraud, price-gouging and deceptive practices, governments introduce laws requiring tech companies to share (some of) their ill-gotten profits with a few news companies.

This makes the news companies partners with the tech giants, rather than adversaries holding them to account, and makes the news into cheerleaders for massive tech profits, so long as they get their share. Rather than making it easier for the news to declare independence from Big Tech, we are fusing them forever.

We could make it easy for users to leave a tech platform where they are subject to abuse and harassment — but instead, governments pursue policies that require platforms to surveil and control their users in the name of protecting them from each other.

We could make it easy for users to leave a tech platform where their voices are algorithmically silenced, but instead we get laws requiring platforms to somehow “balance” different points of view.

The platforms aren’t merely combustible, they’re always on fire. Once you trap hundreds of millions (or billions) of people inside a walled fortress, where the warlords who preside over it have unlimited power over their captives, and those captives are denied any right to liberate themselves, enshittification will surely and inevitably follow.

Laws that block us seizing the means of computation and moving away from Big Tech are like the heroic measures that governments undertake to keep people safe in the smouldering wildland-urban interface.

These measures prop up the lie that we can perfect the tech companies, so they will be suited to eternal rule.

Rather than building more fire debt, we should be making it easy for people to relocate away from the danger so we can have that long-overdue, “good fire” to burn away the rotten giants that have blotted out the sun.

What would that look like?

Well, this week’s news was all about Threads, Meta’s awful Twitter replacement devoted to “brand-safe vaporposting,” where the news and controversy are not welcome, and the experience is “like watching a Powerpoint from the Brand Research team where they tell you that Pop Tarts is crushing it on social.”

Threads may be a vacuous “Twitter alternative you would order from Brookstone,” but it commanded a lot of news, because it experienced massive growth in just hours: “two million signups in the first two hours” and “30 million signups in the first morning.”

That growth was network-effects driven. Specifically, Meta made it possible for you to automatically carry over your list of followed Instagram accounts to Threads.

Meta was able to do this because it owns both Threads and Instagram. But Meta does not own the list of people you trust and enjoy enough to follow.

That’s yours.

Your relationships belong to you. You should be able to bring them from one service to another.

Take Mastodon. One of the most common complaints about Mastodon is that it’s hard to know whom to follow there. But as a technical matter, it’s easy: you should just follow the people you used to follow on Twitter, either because they’re on Mastodon, too, or because there’s a way to use Mastodon to read their Twitter posts.

Indeed, this is already built into Mastodon. With one click, you can export the list of everyone you follow, and everyone who follows you. Then you can switch Mastodon servers, upload that file, and automatically re-establish all those relationships.

That means that if the person who runs your server decides to shut it down, or if the server ends up being run by a maniac who hates you and delights in your torment, you don’t have to petition a public prosecutor or an elected lawmaker or a regulator to make them behave better.

You can just leave.

Meta claims that Threads will someday join the “Fediverse” (the collection of apps built on top of ActivityPub, the standard that powers Mastodon).

Rather than passing laws requiring Threads to prioritize news content, or to limit the kinds of ads the platform accepts, we could order it to turn on this Fediverse gateway and operate it such that any Threads user can leave, join any other Fediverse server, and continue to see posts from the people they follow, and who will also continue to see their posts.

Rather than devoting all our energy to keep Meta’s empire of oily rags from burning, we could devote ourselves to evacuating the burn zone.

This is the thing the platforms fear the most. They know that network effects gave them explosive growth, and they know that tech’s low switching costs will enable implosive contraction.

The thing is, network effects are a double-edged sword. People join a service to be with the people they care about. But when the people they care about start to leave, everyone rushes for the exits. Here’s danah boyd, describing the last days of Myspace:

If a central node in a network disappeared and went somewhere else (like from MySpace to Facebook), that person could pull some portion of their connections with them to a new site. However, if the accounts on the site that drew emotional intensity stopped doing so, people stopped engaging as much. Watching Friendster come undone, I started to think that the fading of emotionally sticky nodes was even more problematic than the disappearance of segments of the graph.

With MySpace, I was trying to identify the point where I thought the site was going to unravel. When I started seeing the disappearance of emotionally sticky nodes, I reached out to members of the MySpace team to share my concerns and they told me that their numbers looked fine. Active uniques were high, the amount of time people spent on the site was continuing to grow, and new accounts were being created at a rate faster than accounts were being closed. I shook my head; I didn’t think that was enough. A few months later, the site started to unravel.

Tech bosses know that the only thing protecting them from sudden platform collapse syndrome is the set of laws that have been passed to stave off the inevitable fire.

They know that platforms implode “slowly, then all at once.”

They know that if we weren’t holding each other hostage, we’d all leave in a heartbeat.

But anything that can’t go on forever will eventually stop. Suppressing good fire doesn’t mean “no fires,” it means wildfires. It’s time to declare fire debt bankruptcy. It’s time to admit we can’t make these combustible, tinder-heavy forests safe.

It’s time to start moving people out of the danger zone.

It’s time to let the platforms burn.

3 notes


Text

page 562 - I've decided that if I own the means of production it's fine.

Not like I own the means of production exclusively, but I as a worker own the means of production. If workers control their labour and benefit from their own labour then it's fine. I should have started with that.

The problem is if some scabrous capitalized wanker is benefiting from an AI that has value only because it can hoover up the value created by others for free. That sounds like theft. Then they get to use their profits to put down workers, buy politics, change systems and social structures through legislation. It's bad.

But if labour needs doing and I choose to do that labour by using my AI machine as a tool, that is cool. That's what technology has promised us since time imem... immemm... since a long time.

Ago.

Use tech, work done, sit on a bench in the park and feed squirrels. Dreamy.

Have a look at the woven fabric above and re-think everything you thought you knew about Luddites and Luddism, right now!

#economics#economy#economist#equilibrium price shortages and surpluses#unstable equilibrium#equilibrium#unstable#surplus#shortage#time#luddite#luddism#worker rights#labour#means of production#productions#better world#tax the rich#regulatory capture#cory doctorow

3 notes


Video

A recent Netflix documentary series called Madoff: The Monster of Wall Street by Emmy Award–winning filmmaker Joe Berlinger tells the story of the largest Ponzi scheme in history.

Besides the infamous character mentioned in its title, the series' other villain is the Securities and Exchange Commission (SEC), which received complaints about Bernie Madoff starting in the early 1990s, yet not only failed to catch him but helped enable his fraud. During one investigation, all SEC investigators had to do was check an account number to verify that trades had actually occurred, which they hadn't, of course, because all of Madoff's trades were fake.

The Netflix series acknowledges that the SEC was complicit in Madoff's scam and that he could have been caught if one investigator assigned to the case had done about 30 minutes of checking. But then it also blames deregulation and free market capitalism for making the fraud possible.

"Resources that had been in New York City, on Wall Street's doorstep, devoted to white-collar crime, to fraud, were being steered away [from Wall Street]," says Henriques of the George W. Bush administration era during which Madoff's operation reached its peak. "For the SEC, this was an exacerbation of an existing problem, as a result of a deregulatory campaign that began with the election of Ronald Reagan in 1980."

Instead of making lazy allusions to the evils of free market capitalism, to better understand the lessons of the Madoff saga, director Joe Berlinger should have consulted the work of the free market economist George Stigler, who won the Nobel Prize in part for his work on "regulatory capture."

In a 1971 paper, "The Theory of Economic Regulation," Stigler argues that while many people believe that "regulation is instituted primarily for the protection and benefit of the public," in fact, it mainly serves the purposes of the largest companies being regulated, which form a symbiotic relationship with their regulatory overseers.

"My thesis is that the industry body in the long run must act by and for the industry," said Stigler in a 1971 speech before the American Enterprise Institute. "The political realities of life dictate that the regulatory bodies become affiliated with and help in what it believes to be the necessary conditions for the survival of its industry."

Stigler's essay focuses mainly on how regulators help existing companies by protecting them from competition, but his theory can also be used to better understand the SEC's failure to catch Madoff. In a 1972 essay, Stigler writes that "the regulating agency must eventually become the agency of the regulated industry…. each needs the other." That's because the career lawyers at the SEC making the decisions have much more to gain personally from having a positive relationship with big industry players than from antagonizing them.

What if Harry Markopolos' warnings hadn't been filtered through the SEC? The average person might be better equipped to spot a con man than we give him credit for. But the myth that regulators are primarily motivated to protect the interests of the public causes many to suspend their better judgment.

"The individual consumer, if he is not hampered, is in general capable of a large measure of self-defense against fraud, mishaps, bad luck, and the like," said Stigler in his 1971 speech. "Not all consumers are intellectually competent and well-informed, but most consumers know how to build up defenses against the many vicissitudes that lie in real life. And it is primarily because we have socially so often hampered these effects that we have injured the consumer."

The big takeaway from Madoff: The Monster of Wall Street is that regulators unwittingly facilitated his fraud, just as they may have done with Sam Bankman-Fried and his alleged con. As you watch the Netflix series, think about whether we should really be giving these regulators more time or power. Are they helping us or themselves?

3 notes

Link

Latest Covid Boosters Are Set to Roll Out Before Human Testing Is Completed

‘The FDA and vaccine makers say they are confident that shots targeting Omicron subvariants will work safely’

#crimes against humanity#crimes against children#fraud#corruption#regulatory capture#Hypercorporations#covid-19 vaccine#covid-19#democide#FDA#CDC#big pharma

11 notes

Text

How Corruption and Greed Led to the Downfall of Rock Music

With the Telecommunications Act of 1996, Bill Clinton finished what Ronald Reagan started. What a liberal. What a man of the people. 🙄

View On WordPress

#Bill Clinton#consolidation#corruption#devolution#greed#history#monopoly#Music#oligarchy#regulatory capture#Rick Beato#Ronald Reagan

0 notes

Text

e/accs love this guy?! And they are excited he's heading to Microsoft?

I understand why they don't like the OpenAI board and the safety and doomer crowds, but it seems like it would be good to temper some of their support.

0 notes

Text

Health Insurance Whistleblower: Medicare Advantage Is “Heist” by Private Firms to Defraud the Public

Democracy Now!

Oct. 12, 2022

Many of the nation’s largest health insurance companies have made billions of dollars in profits by overbilling the U.S. government’s Medicare Advantage program. A New York Times investigation has revealed that under the Advantage program, health insurance companies are incentivized to make patients appear more ill than they actually are. Some estimates find it has cost the government between $12 billion and $25 billion in 2020 alone. We speak with former healthcare insurance executive Wendell Potter, now president of the Center for Health and Democracy, who says Medicare Advantage will be recognized in years to come as the “biggest transfer of wealth” from taxpayers to corporate shareholders, and blames the lack of regulation over the program on the “revolving door between private industry and government.”

Read more.

1 note

Text

yeah america has dogshit public transit by design

thanks car companies

(check the tags (and not just bikes!) for more info)

#new world order#youtube#youtumblr#ebike#public transport system#public transit#trains#car manufacturers#these fuckers straight evil#they used propoganda and shit#regulatory capture#look it up#not to mention henry ford was a nazi and was a co-author of antisemitic shit#but also having cities take advantage of segregation#etc#education and other things like bulldozing minority neighborhoods since only the middle and uppee class (white by percentage at the time)#for umm#reasons...#(jim crow-southern slavers rebellion- murican racism cuz duh- and a lot of other shit)#i just don't get it#and i don't know hos many Europeans are generally aware of this lmao#europe#europe tumblr#tumblr#anyway#umm#check out the link#good shtuff#and that channel its good#you get a reward for reading all the tags

0 notes

Text

Conspiratorialism and the epistemological crisis

I'm on tour with my new, nationally bestselling novel The Bezzle! Catch me next weekend (Mar 30/31) in ANAHEIM at WONDERCON, then in Boston with Randall "XKCD" Munroe! (Apr 11), then Providence (Apr 12), and beyond!

Last year, Ed Pierson was supposed to fly from Seattle to New Jersey on Alaska Airlines. He boarded his flight, but then he had an urgent discussion with the flight attendant, explaining that as a former senior Boeing engineer, he'd specifically requested that flight because the aircraft wasn't a 737 Max:

https://www.cnn.com/travel/boeing-737-max-passenger-boycott/index.html

But for operational reasons, Alaska had switched out the equipment on the flight and there he was on a 737 Max, about to travel cross-continent, and he didn't feel safe doing so. He demanded to be let off the flight. His bags were offloaded and he walked back up the jetbridge after telling the spooked flight attendant, "I can’t go into detail right now, but I wasn’t planning on flying the Max, and I want to get off the plane."

Boeing, of course, is a flying disaster that was years in the making. Its planes have been falling out of the sky since 2019. Floods of whistleblowers have come forward to say its aircraft are unsafe. Pierson's not the only Boeing employee to state – both on and off the record – that he wouldn't fly on a specific model of Boeing aircraft, or, in some cases, any recent Boeing aircraft:

https://pluralistic.net/2024/01/22/anything-that-cant-go-on-forever/#will-eventually-stop

And yet, for years, Boeing's regulators have allowed the company to keep turning out planes that keep turning out lemons. This is a pretty frightening situation, to say the least. I'm not an aerospace engineer, I'm not an aircraft safety inspector, but every time I book a flight, I have to make a decision about whether to trust Boeing's assurances that I can safely board one of its planes without dying.

In an ideal world, I wouldn't even have to think about this. I'd be able to trust that publicly accountable regulators were on the job, making sure that airplanes were airworthy. "Caveat emptor" is no way to run a civilian aviation system.

But even though I don't have the specialized expertise needed to assess the airworthiness of Boeing planes, I do have the much more general expertise needed to assess the trustworthiness of Boeing's regulator. The FAA has spent years deferring to Boeing, allowing it to self-certify that its aircraft were safe. Even when these assurances led to the death of hundreds of people, the FAA continued to allow Boeing to mark its own homework:

https://www.youtube.com/watch?v=Q8oCilY4szc

What's more, the FAA boss who presided over those hundreds of deaths was an ex-Boeing lobbyist, whom Trump subsequently appointed to run Boeing's oversight. He's not the only ex-insider who ended up a regulator, and there's plenty of ex-regulators now on Boeing's payroll:

https://therevolvingdoorproject.org/boeing-debacle-shows-need-to-investigate-trump-era-corruption/

You don't have to be an aviation expert to understand that companies have conflicts of interest when it comes to certifying their own products. "Market forces" aren't going to keep Boeing from shipping defective products, because the company's top brass are more worried about cashing out with this quarter's massive stock buybacks than they are about their successors' ability to manage the PR storm or Congressional hearings after their greed kills hundreds and hundreds of people.

You also don't have to be an aviation expert to understand that these conflicts persist even when a Boeing insider leaves the company to work for its regulators, or vice-versa. A regulator who anticipates a giant signing bonus from Boeing after their term in office, or an ex-Boeing exec who holds millions in Boeing stock, has an irreconcilable conflict of interest that will make it very hard – perhaps impossible – for them to hold the company to account when it trades safety for profit.

It's not just Boeing customers who feel justifiably anxious about trusting a system with such obvious conflicts of interest: Boeing's own executives, lobbyists and lawyers also refuse to participate in similarly flawed systems of oversight and conflict resolution. If Boeing was sued by its shareholders and the judge was also a pissed off Boeing shareholder, they would demand a recusal. If Boeing was looking for outside counsel to represent it in a liability suit brought by the family of one of its murder victims, they wouldn't hire the firm that was suing them – not even if that firm promised to be fair. If a Boeing executive's spouse sued for divorce, that exec wouldn't use the same lawyer as their soon-to-be-ex.

Sure, it takes specialized knowledge and training to be a lawyer, a judge, or an aircraft safety inspector. But anyone can look at the system those experts work in and spot its glaring defects. In other words, while acquiring expertise is hard, it's much easier to spot weaknesses in the process by which that expertise affects the world around us.

And therein lies the problem: aviation isn't the only technically complex, potentially lethal, and utterly, obviously untrustworthy system we all have to navigate. How about the building safety codes that governed the structure you're in right now? Plenty of people have blithely assumed that structural engineers carefully designed those standards, and that these standards were diligently upheld, only to discover in tragic, ghastly ways that this was wrong:

https://www.bbc.com/news/64568826

There are dozens – hundreds! – of life-or-death, highly technical questions you have to resolve every day just to survive. Should you trust the antilock braking firmware in your car? How about the food hygiene rules in the factories that produced the food in your shopping cart? Or the kitchen that made the pizza that was just delivered? Is your kid's school teaching them well, or will they grow up to be ignoramuses and thus economic roadkill?

Hell, even if I never get into another Boeing aircraft, I live in the approach path for Burbank airport, where Southwest lands 50+ Boeing flights every day. How can I be sure that the next Boeing 737 Max that falls out of the sky won't land on my roof?

This is the epistemological crisis we're living through today. Epistemology is the process by which we know things. The whole point of a transparent, democratically accountable process for expert technical deliberation is to resolve the epistemological challenge of making good choices about all of these life-or-death questions. Even the smartest person among us can't learn to evaluate all those questions, but we can all look at the process by which these questions are answered and draw conclusions about its soundness.

Is the process public? Are the people in charge of it forthright? Do they have conflicts of interest, and, if so, do they sit out any decision that gives even the appearance of impropriety? If new evidence comes to light – like, say, a horrific disaster – is there a way to re-open the process and change the rules?

The actual technical details might be a black box for us, opaque and indecipherable. But the box itself can be easily observed: is it made of sturdy material? Does it have sharp corners and clean lines? Or is it flimsy, irregular and torn? We don't have to know anything about the box's contents to conclude that we don't trust the box.

For example: we may not be experts in chemical engineering or water safety, but we can tell when a regulator is on the ball on these issues. Back in 2019, the West Virginia Department of Environmental Protection sought comment on its water safety regs. Dow Chemical – the largest corporation in the state's largest industry – filed comments arguing that WV should have lower standards for chemical contamination in its drinking water.

Now, I'm perfectly prepared to believe that there are safe levels of chemical runoff in the water supply. There's a lot of water in the water supply, after all, and "the dose makes the poison." What's more, I use the products whose manufacture results in that chemical waste. I want them to be made safely, but I do want them to be made – for one thing, the next time I have surgery, I want the anesthesiologist to start an IV with fresh, sterile plastic tubing.

And I'm not a chemist, let alone a water chemist. Neither am I a toxicologist. There are aspects of this debate I am totally unqualified to assess. Nevertheless, I think the WV process was a bad one, and here's why:

https://www.wvma.com/press/wvma-news/4244-wvma-statement-on-human-health-criteria-development

That's Dow's comment to the regulator (as proffered by its mouthpiece, the WV Manufacturers' Association, which it dominates). In that comment, Dow argues that West Virginians can safely absorb more poison than other Americans, because the people of West Virginia are fatter than other Americans, and so they have more tissue and thus a better ratio of poison to person than the typical American. But they don't stop there! They also say that West Virginians don't drink as much water as their out-of-state cousins, preferring to drink beer instead, so even if their water is more toxic, they'll be drinking less of it:

https://washingtonmonthly.com/2019/03/14/the-real-elitists-looking-down-on-trump-voters/

Even without any expertise in toxicology or water chemistry, I can tell that these are bullshit answers. The fact that the WV regulator accepted these comments tells me that they're not a good regulator. I was in WV last year to give a talk, and I didn't drink the tap water.

It's totally reasonable for non-experts to reject the conclusions of experts when the process by which those experts resolve their disagreements is obviously corrupt and irredeemably flawed. But some refusals carry higher costs – both for the refuseniks and the people around them – than my switching to bottled water when I was in Charleston.

Take vaccine denial (or "hesitancy"). Many people greeted the advent of an extremely rapid, high-tech covid vaccine with dread and mistrust. They argued that the pharma industry was dominated by corrupt, greedy corporations that routinely put their profits ahead of the public's safety, and that regulators, in Big Pharma's pocket, let them get away with mass murder.

The thing is, all that is true. Look, I've had five covid vaccinations, but not because I trust the pharma industry. I've had direct experience of how pharma sacrifices safety on greed's altar, and narrowly avoided harm myself. I have had chronic pain problems my whole life, and they've gotten worse every year. When my daughter was on the way, I decided this was going to get in the way of my ability to parent – I wanted to be able to carry her for long stretches! – and so I started aggressively pursuing the pain treatments I'd given up on many years before.

My journey led me to many specialists – physios, dieticians, rehab specialists, neurologists, surgeons – and I tried many, many therapies. Luckily, my wife had private insurance – we were in the UK then – and I could go to just about any doctor that seemed promising. That's how I found myself in the offices of a Harley Street quack, a prominent pain specialist, who had great news for me: it turned out that opioids were way safer than had previously been thought, and I could just take opioids every day and night for the rest of my life without any serious risk of addiction. It would be fine.

This sounded wrong to me. I'd lost several friends to overdoses, and watched others spiral into miserable lives as they struggled with addiction. So I "did my own research." Despite not having a background in chemistry, biology, neurology or pharmacology, I struggled through papers and read commentary and came to the conclusion that opioids weren't safe at all. Rather, corrupt billionaire pharma owners like the Sackler family had colluded with their regulators to risk the lives of millions by pushing falsified research that was finding publication in some of the most respected, peer-reviewed journals in the world.

I became an opioid denier, in other words.

I decided, based on my own research, that the experts were wrong, and that they were wrong for corrupt reasons, and that I couldn't trust their advice.

When anti-vaxxers decried the covid vaccines, they said things that were – in form at least – indistinguishable from the things I'd been saying 15 years earlier, when I decided to ignore my doctor's advice and throw away my medication on the grounds that it would probably harm me.

For me, faith in vaccines didn't come from a broad, newfound trust in the pharmaceutical system: rather, I judged that there was so much scrutiny on these new medications that it would overwhelm even pharma's ability to corruptly continue to sell a medication that they secretly knew to be harmful, as they'd done so many times before:

https://www.npr.org/2007/11/10/5470430/timeline-the-rise-and-fall-of-vioxx

But many of my peers had a different take on anti-vaxxers: for these friends and colleagues, anti-vaxxers were being foolish. Surprisingly, these people I'd long felt myself in broad agreement with began to defend the pharmaceutical system and its regulators. Once they saw that anti-vaxx was a wedge issue championed by right-wing culture war shitheads, they became not just pro-vaccine, but pro-pharma.

There's a name for this phenomenon: "schismogenesis." That's when you decide how you feel about an issue based on who supports it. Think of self-described "progressives" who became cheerleaders for America's cruel, ruthless and lawless "intelligence community" when it seemed that US spooks were bent on Trump's ouster:

https://pluralistic.net/2021/12/18/schizmogenesis/

The fact that the FBI didn't like Trump didn't make them allies of progressive causes. This was and is the same entity that (among other things) tried to blackmail Martin Luther King, Jr into killing himself:

https://en.wikipedia.org/wiki/FBI%E2%80%93King_suicide_letter

But schismogenesis isn't merely a reactionary way of flip-flopping on issues based on reflexive enmity. It's actually a reasonable epistemological tactic: in a world where there are more issues you need to be clear on than you can possibly inform yourself about, you need some shortcuts. One shortcut – a shortcut that's failing – is to say, "Well, I'll provisionally believe whatever the expert system tells me is true." Another shortcut is, "I will provisionally disbelieve in whatever the people I know to act in bad faith are saying is true." That is, "schismogenesis."

Schismogenesis isn't a great tactic. It would be far better if we had a set of institutions we could all largely trust – if the black boxes where expert debate took place were sturdy, rectilinear and sharp-cornered.

But they're not. They're just not. Our regulatory process sucks. Corporate concentration makes it trivial for cartels to capture their regulators and steer them to conclusions that benefit corporate shareholders even if that means visiting enormous harm – even mass death – on the public:

https://pluralistic.net/2022/06/05/regulatory-capture/

No one hates Big Tech more than I do, but many of my co-belligerents in the war on Big Tech believe that the rise of conspiratorialism can be laid at tech platforms' feet. They say that Big Tech boasts of how good they are at algorithmically manipulating our beliefs, and attribute Qanons, flat earthers, and other outlandish conspiratorial cults to the misuse of those algorithms.

"We built a Big Data mind-control ray" is one of those extraordinary claims that requires extraordinary evidence. But the evidence for Big Tech's persuasion machines is very poor: mostly, it consists of tech platforms' own boasts to potential investors and customers for their advertising products. "We can change peoples' minds" has long been the boast of advertising companies, and it's clear that they can change the minds of customers for advertising.

Think of department store mogul John Wanamaker, who famously said "Half the money I spend on advertising is wasted; the trouble is I don't know which half." Today – thanks to commercial surveillance – we know that the true proportion of wasted advertising spending is more like 99.9%. Advertising agencies may be really good at convincing John Wanamaker and his successors, through prolonged, personal, intense selling – but that doesn't mean they're able to sell so efficiently to the rest of us with mass banner ads or spambots:

http://pluralistic.net/HowToDestroySurveillanceCapitalism

In other words, the fact that Facebook claims it is really good at persuasion doesn't mean that it's true. Just like the AI companies who claim their chatbots can do your job: they are much better at convincing your boss (who is insatiably horny for firing workers) than they are at actually producing an algorithm that can replace you. What's more, their profitability relies far more on convincing a rich, credulous business executive that their product works than it does on actually delivering a working product.

Now, I do think that Facebook and other tech giants play an important role in the rise of conspiratorial beliefs. However, that role isn't using algorithms to persuade people to mistrust our institutions. Rather Big Tech – like other corporate cartels – has so corrupted our regulatory system that they make trusting our institutions irrational.

Think of federal privacy law. The last time the US got a new federal consumer privacy law was in 1988, when Congress passed the Video Privacy Protection Act, a law that prohibits video store clerks from leaking your VHS rental history:

https://www.eff.org/deeplinks/2008/07/why-vppa-protects-youtube-and-viacom-employees

It's been a minute. There are very obvious privacy concerns haunting Americans, related to those tech giants, and yet the closest Congress can come to doing something about it is to attempt the forced sale of the sole Chinese tech giant with a US footprint to a US company, to ensure that its rampant privacy violations are conducted by our fellow Americans, and to force Chinese spies to buy their surveillance data on millions of Americans in the lawless, reckless swamp of US data-brokerages:

https://www.npr.org/2024/03/14/1238435508/tiktok-ban-bill-congress-china

For millions of Americans – especially younger Americans – the failure to pass (or even introduce!) a federal privacy law proves that our institutions can't be trusted. They're right:

https://www.tiktok.com/@pearlmania500/video/7345961470548512043

Occam's Razor cautions us to seek the simplest explanation for the phenomena we see in the world around us. There's a much simpler explanation for why people believe conspiracy theories they encounter online than the idea that the one time Facebook is telling the truth is when they're boasting about how well their products work – especially given the undeniable fact that everyone else who ever claimed to have perfected mind-control was a fantasist or a liar, from Rasputin to MK-ULTRA to pick-up artists.

Maybe people believe in conspiracy theories because they have hundreds of life-or-death decisions to make every day, and the institutions that are supposed to make that possible keep proving that they can't be trusted. Nevertheless, those decisions have to be made, and so something needs to fill the epistemological void left by the manifest unsoundness of the black box where the decisions get made.

For many people – millions – the thing that fills the black box is conspiracy fantasies. It's true that tech makes finding these conspiracy fantasies easier than ever, and it's true that tech makes forming communities of conspiratorial belief easier, too. But the vulnerability to conspiratorialism that algorithms identify and target people based on isn't a function of Big Data. It's a function of corruption – of life in a world in which real conspiracies (to steal your wages, or let rich people escape the consequences of their crimes, or sacrifice your safety to protect large firms' profits) are everywhere.

Progressives – which is to say, the coalition of liberals and leftists, in which liberals are the senior partners and spokespeople who control the Overton Window – used to identify and decry these conspiracies. But as right wing "populists" declared their opposition to these conspiracies – when Trump damned free trade and the mainstream media as tools of the ruling class – progressives leaned into schismogenesis and declared their vocal support for these old enemies of progress.

This is the crux of Naomi Klein's brilliant 2023 book Doppelganger: that as the progressive coalition started supporting these unworthy and broken institutions, the right spun up "mirror world" versions of their critique, distorted versions that focus on scapegoating vulnerable groups rather than fighting unworthy institutions:

https://pluralistic.net/2023/09/05/not-that-naomi/#if-the-naomi-be-klein-youre-doing-just-fine

This is a long tradition in politics: hundreds of years ago, some leftists branded antisemitism "the socialism of fools." Rather than condemning the system's embrace of the finance sector and its wealthy beneficiaries, antisemites blame a disfavored group of people – people who are just as likely as anyone to suffer under the system:

https://en.wikipedia.org/wiki/Antisemitism_is_the_socialism_of_fools

It's an ugly, shallow, cartoon version of socialism's measured and comprehensive analysis of how the class system actually works and why it's so harmful to everyone except a tiny elite. Literally cartoonish: the shadow-world version of socialism co-opts and simplifies the iconography of class struggle. And schismogenesis – "if the right likes this, I don't" – sends "progressive" scolds after anyone who dares to criticize finance as the crux of our world's problems as popularizing "antisemitic dog-whistles."

This is the problem with "horseshoe theory" – the idea that the far right and the far left bend all the way around to meet each other:

https://pluralistic.net/2024/02/26/horsehoe-crab/#substantive-disagreement

When the right criticizes pharma companies, they tell us to "do our own research" (e.g. ignore the systemic problems of people being forced to work under dangerous conditions during a pandemic while individually assessing conflicting claims about vaccine safety, ideally landing on buying "supplements" from a grifter). When the left criticizes pharma, it's to argue for universal access to medicine and vigorous public oversight of pharma companies. These aren't the same thing:

https://pluralistic.net/2021/05/25/the-other-shoe-drops/#quid-pro-quo

Long before opportunistic right wing politicians realized they could get mileage out of pointing at the terrifying epistemological crisis of trying to make good choices in an age of institutions that can't be trusted, the left was sounding the alarm. Conspiratorialism – the fracturing of our shared reality – is a serious problem, weakening our ability to respond effectively to endless disasters of the polycrisis.

But by blaming the problem of conspiratorialism on the credulity of believers (rather than the deserved disrepute of the institutions they have lost faith in) we adopt the logic of the right: "conspiratorialism is a problem of individuals believing wrong things," rather than "a system that makes wrong explanations credible – and a schismogenic insistence that these institutions are sound and trustworthy."

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/03/25/black-boxes/#when-you-know-you-know

Image:

Nuclear Regulatory Commission (modified)

https://www.flickr.com/photos/nrcgov/15993154185/

meanwell-packaging.co.uk

https://www.flickr.com/photos/195311218@N08/52159853896

CC BY 2.0

https://creativecommons.org/licenses/by/2.0/

#pluralistic#conspiratorialism#epistemology#epistemological crisis#mind control rays#opioid denial#vaccine denial#regulatory capture#boeing#corruption#inequality#monopoly#apple#dma#eu

292 notes

Text

How tax prep companies like H&R Block and Intuit are making sure filing taxes stays complicated and painful.

Filing taxes could be fast and easy, but that would cost H&R Block and Intuit billions of dollars, so we have to suffer.

0 notes

Text

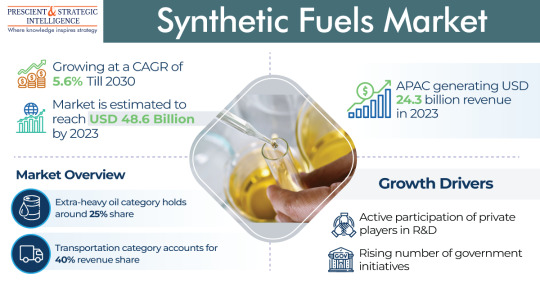

What Are Synthetic Fuels Or E-Fuels?

In labs throughout Europe and the Americas, researchers are busy investigating substitutes for fossil fuels. Besides the harmful emissions and rising costs of petroleum products, recent geopolitical instability in the east of Europe has put additional pressure on the search for new power sources.

So, what types of synthetic fuels are in progress today?

What Are Synthetic Fuels?

Synthetic fuels,…

View On WordPress

#Biomass#Carbon Capture#Energy Transition#Fischer-Tropsch Synthesis#Greenhouse Gas Emissions#Hydrogen#Key players#Market dynamics#Power-to-Liquid#Regulatory frameworks#Renewable Sources#Synthetic Fuels Market

0 notes

Text

DJI Mini 3 Review: The Ultimate Drone Camera for Beginners

The DJI Mini 3 is the latest iteration of DJI's popular line of compact drones, designed with beginners in mind. It builds upon the success of its predecessors, the Mini and Mini 2, offering an even more impressive package for those looking to dip their toes into the world of aerial photography and videography.

Read Article: The DJI Mini 3 Review: Best Drone Camera For Beginner

Buy On Amazon: Check Price

Design and Portability:

One of the standout features of the DJI Mini 3 is its compact and lightweight design. Weighing in at just 249 grams, it falls below the regulatory weight threshold in many countries, which means you often won't need a license or registration to fly it. The foldable arms make it incredibly portable, fitting comfortably into a small bag or even a pocket. This makes it a fantastic travel companion for capturing breathtaking footage on the go.

Camera Performance:

The camera on the Mini 3 is a notable improvement over its predecessors. It boasts a 1/1.3-inch sensor, capable of shooting 12MP photos and 4K video at 30fps. The image quality is impressive for its size, delivering vibrant colors and sharp details. While it may not compete with DJI's more advanced models like the Mavic Air 2 in terms of camera capabilities, it certainly exceeds expectations for a beginner-focused drone.

Flight Performance:

DJI's Mini drones have always been known for their user-friendly flight experience, and the Mini 3 is no exception. It comes equipped with GPS and downward-facing sensors for stable and precise hovering, even in less-than-ideal conditions. The addition of obstacle avoidance technology helps prevent collisions, further enhancing its safety and ease of use for beginners.

Battery Life:

The Mini 3 comes with an upgraded battery that offers a respectable flight time of up to 38 minutes on a single charge. This extended flight time provides more opportunities to capture stunning aerial footage without constantly worrying about returning to the base for a recharge.

Intelligent Flight Modes:

DJI has included several intelligent flight modes that make capturing professional-looking shots a breeze, even for newcomers. QuickShot modes, such as Dronie and Circle, automate complex maneuvers, allowing users to focus on framing their shots. ActiveTrack 4.0 lets the drone autonomously follow a subject, while Smart Return to Home ensures a safe and accurate return even in challenging environments.

Controller and App:

The Mini 3 is compatible with the DJI Fly app, which provides an intuitive interface for controlling the drone and accessing various features. The included remote controller offers precise and responsive control, and it can hold most smartphones for a live view of the camera feed.

Price:

One of the most appealing aspects of the DJI Mini 3 is its affordability. It provides access to DJI's renowned technology and features at a price point that won't break the bank, making it an excellent choice for beginners or those on a budget.

Conclusion:

In summary, the DJI Mini 3 is a fantastic drone for beginners and amateur aerial photographers and videographers. It combines portability, ease of use, and impressive camera capabilities at an affordable price. While it may not match the advanced features of DJI's higher-end models, it more than satisfies the needs of those looking to capture stunning aerial content without a steep learning curve. If you're in the market for a beginner-friendly drone that delivers on both performance and value, the DJI Mini 3 should be at the top of your list.


