#self driving cars

Tesla has made Autopilot a standard feature in its cars, and more recently, rolled out a more ambitious “Full Self-Driving” (FSD) system to hundreds of thousands of its vehicles.

Now we learn from an analysis of National Highway Traffic Safety Administration (NHTSA) data conducted by The Washington Post that those systems, particularly FSD, are associated with dramatically more crashes than previously thought. Thanks to a 2021 regulation, automakers must disclose data about crashes involving self-driving or driver assistance technology. Since that time, Tesla has racked up at least 736 such crashes, causing 17 fatalities.

This technology never should have been allowed on the road, and regulators should be taking a much harder look at driver assistance features in general, requiring manufacturers to prove that they actually improve safety, rather than trusting the word of a duplicitous oligarch.

The primary defense of FSD is the tech utopian assumption that whatever its problems, it cannot possibly be worse than human drivers. Tesla has claimed that the FSD crash rate is one-fifth that of human drivers, and Musk has argued that it’s therefore morally obligatory to use it: “At the point of which you believe that adding autonomy reduces injury and death, I think you have a moral obligation to deploy it even though you’re going to get sued and blamed by a lot of people.”

Yet if Musk’s own data about the usage of FSD are at all accurate, this cannot possibly be true. Back in April, he claimed on an investor call that 150 million miles had been driven with FSD, a reasonable figure given that it works out to just 375 miles for each of the 400,000 cars with the technology. Assuming that all these crashes involved FSD (a plausible guess given that FSD has been dramatically expanded over the last year, and two-thirds of the crashes in the data have happened during that time), that implies a fatal accident rate of 11.3 deaths per 100 million miles traveled. The overall fatal accident rate for auto travel, according to NHTSA, was 1.35 deaths per 100 million miles traveled in 2022.

In other words, Tesla’s FSD system is likely on the order of ten times more dangerous at driving than humans.
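The arithmetic behind that estimate can be checked with a short sketch. It uses only the figures quoted in the post and keeps the post's own assumption that all 17 deaths happened while FSD was engaged; the variable names are illustrative and do not come from the source.

```python
# Figures quoted in the post (not independently verified here).
fsd_miles = 150_000_000      # miles driven with FSD, per Musk's investor-call claim
fsd_cars = 400_000           # cars with the technology
deaths = 17                  # fatalities in the reported crash data
human_rate = 1.35            # NHTSA deaths per 100 million miles, 2022

# Average FSD mileage per equipped car.
miles_per_car = fsd_miles / fsd_cars

# Deaths per 100 million miles, ASSUMING every fatality involved FSD
# (the post's own assumption, which likely overstates the FSD share).
fsd_rate = deaths * 100_000_000 / fsd_miles

# How many times higher the implied FSD rate is than the overall rate.
ratio = fsd_rate / human_rate

print(f"{miles_per_car:.0f} miles per car")
print(f"{fsd_rate:.1f} deaths per 100 million miles")
print(f"{ratio:.1f}x the overall rate")
```

Under these inputs the implied rate comes out a little over eight times the overall figure, which is the basis for the "on the order of ten times" claim.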

3K notes

“Smart Cars” + Capitalism = a very dystopian future

Ford’s patent ranges from having a “self driving” car return itself to the dealership if you miss a payment, to having the car abruptly disable itself, to “minor inconveniences” like having the air conditioner or heater stop working until you voluntarily return the car.

Don’t think for one second that all carmakers aren’t thinking about doing the same things. Especially Tesla Motors.

Carmakers are already trying to monetize even the most basic features, like charging monthly fees for the ability to use your car’s seat warmers.

Anyway, nobody does more to radicalize people against capitalism than greedy capitalists.

#fsd#self driving cars#smart cars#ford#volkswagen#capitalism#corporate greed#greed#tesla#ford motor co#spyware#geolocation data#geolocation tracking

4K notes

#you can’t make this shit up#Elon musk#Technology#self driving cars#Tesla#ai and big data expo#unreality#destiel meme#Fake-destiel-News#this is fake news#truth: this has not happened

980 notes

From Now You Can Read About Robots by Harry Stanton, illustrated by Tony Gibbons, 1985.

195 notes

Does anyone else ever think about how much traffic we could eliminate on the roads if things like cargo trucks were automated with a really good cargo rail system + short-distance cargo vans instead?

How few roads we'd need if light rail/trolley systems were in cities, with buses that actually functioned properly? Like I know we all know how many more ppl can be put on a bus compared to individual autos, but seriously. Seriously sit with it for a moment: how much of our current road usage is a combo of "Getting to/from work/school" and "transporting goods to stores"? Just those 2 functions taken off the roads, bc they are the same all the time, would let us get rid of sooo much asphalt!! Think of how bare the roads were during 2020 due to remote work alone. It made the idea of highways look dumb to me!!

It just makes self driving cars seem idiotic as the main transportation option.

Self driving cars should be for grocery drop offs!! and mobile libraries!! and for the train system going downtown to be automated so we can have 24 hour trains!! For booking an appointment with a specialist and having it show up outside your door when it's time for your appointment!! For first responders to get to fires/medical emergencies!!

But god we need public transport to be the primary usage for the public, we need cargo systems off the roads.

157 notes

Semi-autonomous "autopilot" cars are dangerous in part due to how human minds work 🚗

We can't have the car driving itself the majority of the time, and expect whoever's in the driver's seat to be ready in the event of a sudden emergency. That isn't how our minds or reflexes work.

Humans are excellent with tools because we treat them as extensions of ourselves. That sounds horribly cliché, but it's accurate. We gain muscle memory specifically for using tools we're practiced with, and they function as though parts of ourselves.

Cars cease to be extensions of ourselves if we take our hands off the wheel, which you're supposed to do if the car is driving itself. We become passengers.

EDIT - I've been told you ARE supposed to keep your hands on the wheel. I maintain it's still no longer an extension of you because you're not actively steering, but this should nonetheless be corrected.

As passengers, if our intervention is necessary to prevent a sudden crash, we're doing so with reduced odds of success. We have to realize what's happening, take the wheel, shift from passenger to driver, and act accordingly in a very brief window of time.

It's a bit like if you've ever been minding your own business, and suddenly heard "think fast" as something was thrown at you by someone you didn't know was there, and you were holding something at the time.

If a car can't safely pull off fully-autonomous, semi-autonomous isn't a compromise. It's accidents waiting to happen.

#dan shive says stuff#cars#self driving cars#By semi-autonomous I effectively refer to full self-driving in which you are expected to be there to supervise#There are comments that say you're supposed to keep your hand on the wheel which is interesting because that's contrary to videos I've foun

147 notes

so cruise redacted information and withheld footage from crash investigators in its initial reports.

The portion of the video that the DMV says it did not initially view showed the Cruise robotaxi, after coming to a complete stop, attempting a pullover maneuver while the pedestrian was underneath the vehicle. The AV traveled about 20 feet and reached a speed of 7 miles per hour before coming to a complete and final stop, the order reads.

in a just world the autonomous vehicle hype crowd who pushed for early deployments should be persona non grata in tech tbh.

112 notes

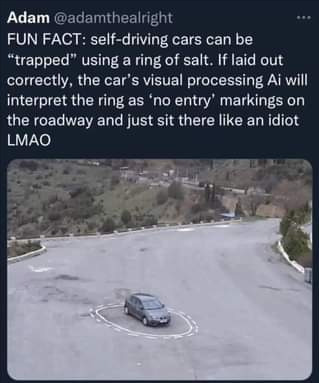

657 notes

"17 fatalities, 736 crashes: The shocking toll of Tesla’s Autopilot": https://www.washingtonpost.com/technology/2023/06/10/tesla-autopilot-crashes-elon-musk/

105 notes

Self-Driving Lies

I'm really mad at several college professors in the CS department at my undergrad who told us all "self driving cars are coming." Trusting them as authorities, I quashed my many qualms and wasted a lot of time in otherwise important conversations about urban planning, insisting that we account for the impact of this 'inevitable' variable.

"They will be safer than human drivers." tapped into my confirmation bias ... I hate drivers, after all. Of course every one could be bested by a machine.

I should have trusted what I'd learned from first-hand experience programming & writing programs that processed images & that made decisions.

I should have noticed the parallels to the 'work' (self-perpetuating failure cycle) of the RAND corporation & compstat.

I knew the problem of making a car drive was hard. Messy. But, I dismissed this knowledge from my experience because authoritative people said "the smart boys in the valley have this all worked out"

Never. Ever. Again.

Will I.

At least the only harm I have done by being duped has been limited to wasting time thinking about things that just won't happen.

But I suspect that isn't the case for everyone who was taken in.

#self driving cars#self driving vehicles#urban planning#public transportation#technology#duped#times I was fooled#lessons learned#life lessons

46 notes

We don’t yet know exactly why a group of people very publicly graffitied, smashed, and torched a Waymo car in San Francisco. But we know enough to understand that this is an explosive milestone in the growing, if scattershot, revolt against big tech.

We know that self-driving cars are wildly divisive, especially in cities where they’ve begun to share the streets with emergency responders, pedestrians and cyclists. Public confidence in the technology has actually been declining as they’ve rolled out, owing as much to general anxiety over driverless cars as to high-profile incidents like a GM Cruise robotaxi trapping, dragging, and critically injuring a pedestrian last fall. Just over a third of Americans say they’d ride in one.

We also know that the pyrotechnic demolition can be seen as the most dramatic act yet in a series of escalations — self-driving cars have been vocally opposed by officials, protested, “coned,” attacked, and, now, set ablaze in a carnivalesque display of defiance. The Waymo torching did not take place in a vacuum.

To that end, we know that trust in Silicon Valley in general is eroding, and anger towards the big tech companies — Waymo is owned by Alphabet, the parent company of Google — is percolating. Not just at self-driving cars, of course, but at generative AI companies that critics say hoover up copyrighted works to produce plagiarized output, at punishing, algorithmically mediated work regimes at the likes of Uber and Amazon, at the misinformation and toxic content pushed by Facebook and TikTok, and so on.

It’s all of a piece. All of the above contributes to the spreading sense that big tech has an inordinate amount of control over the ordinary person’s life — to decide, for example, whether or not robo-SUVs will roam the streets of their communities — and that the average person has little to no meaningful recourse.

519 notes

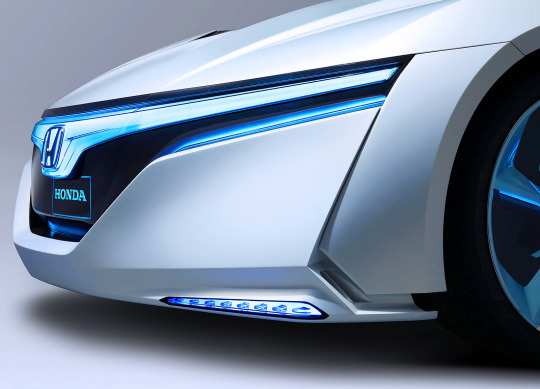

Honda AC-X, 2011. The name is short for Advanced Cruiser eXperience, a self-driving hybrid with active aerodynamics. The active aerodynamics lower the front bumper and side skirts and adjust the rear diffuser for reduced drag at higher speeds.

142 notes

#somethingsomething killed by their own creation#Elon musk#Tesla#unreality#self driving cars#Twitter#car accident#destiel meme#Fake-destiel-news#news#meme#death mention tw#I used they for Xae12 because I can’t figure out their gender from the name and I’m too lazy to google it#this is fake news#truth: Elon musk has regrettably not been in a car accident

43 notes

[Cruise’s] vehicles were supported by a vast operations staff, with 1.5 workers per vehicle. The workers intervened to assist the company’s vehicles every 2.5 to five miles, according to two people familiar with its operations.

lov 2 self driving with as many people per car as if we just let humans drive the cars.

12 notes

you know the problem with self driving cars isn't that they might make mistakes, because humans make mistakes all the time, I've seen so many people making mistakes on the road and I've even witnessed a couple of minor car crashes once.

the problem isn't that they might make mistakes, the problem is they're gonna make it confidently.

when a human makes a mistake, they NOTICE, there's time for a reaction, they can swerve off the road, they can stop the car, they can pull to the side, etc.

when an AI makes a mistake, it's based on the information it knows, and it follows it strictly. the way AI works right now, it's not allowed to be unsure, if it doesn't have enough information, it makes shit up, and then acts as if it's the truth until corrected by a human. it doesn't doubt itself, it doesn't question "why is this sign here? it wasn't here yesterday", it just does its programming based on its perceived reality.

6 notes

The assertion that Full Self-Driving cars are safe because you have to be touching the steering wheel is flawed for three reasons.

1. The driver is still not experiencing the drive with the car as an extension of themselves, so reaction time is still significantly impaired in the event of an emergency in which the driver must take over.

2. Lazily having one’s hand resting on the wheel, not even properly held, seems adequate for stopping the “hold the wheel” message. The car also doesn’t seem to do more than display a message (I was told the vehicle will gradually come to a stop, but no videos I’ve seen support this. Maybe that’s true in some cases).

3. There are easily found videos and guides on how to get rid of “that annoying steering wheel nag.”

I’m not opposed to full self-driving cars if they’re safe, and in any case, I’m not the person anyone needs to convince. I’m a cartoonist. I’m not confiscating anyone’s Tesla.

I just have serious safety concerns, and I don’t like companies being able to put all the blame on “the drivers” when the companies have, at a minimum, shared responsibility.

69 notes
