
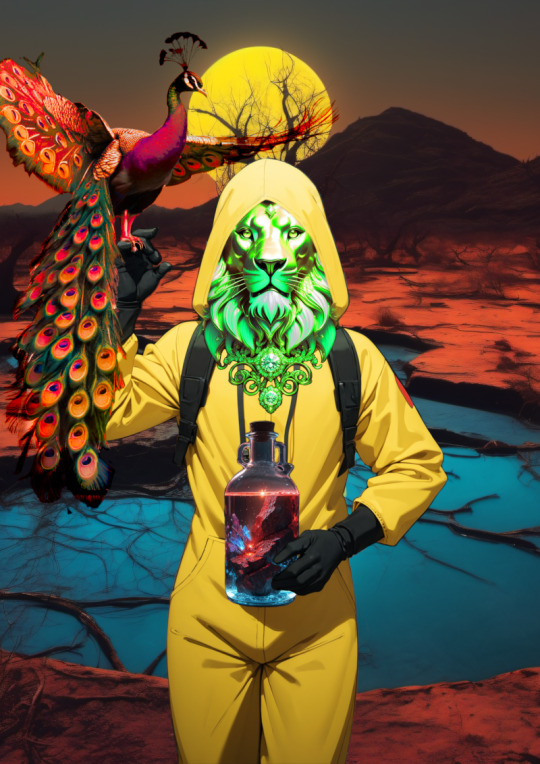

Fishbone #011

Ceci n'est pas une person (contains spoilers for Snowpiercer (2013))

7 Layers, 7 source images. Lots of clone stamping, and none of it on the face. It was like that when I found it.

Previously I wrote: In the face of efforts to force machines to make something indistinguishable from human, and efforts to force humans into the role of machine, I want to make something distinctly human from the distinctly machine.

I still haven't written an artist statement but here are some facts.

1.

I have a stock folder for this project called "people" but it contains no images of people. Not really. It's some Treachery of Images ass shit to say but it's true, it's pareidolia, there are no people in these pictures.

It's particularly evident when you see a series of images iterating on a prompt, a repeating face becoming incrementally less uncanny like some kind of creature learning to imitate a human. In a sense that's what they are, although "creature" suggests sentience and intentionality which can't be attributed to machine learning models.

2.

At the climax of the 2013 film Snowpiercer, it's revealed that an engine component has broken and can't be replaced, so the wealthy at the front of the train abduct poor children from the rear of the train to perform the repetitive action needed to keep the train running. It's an incredibly good film and I won't watch it again.

Leaving the fairly obvious class analysis for another forum, I just want to draw out that image of a human being forced into the role of a machine. It's a real thing that happens, albeit not always so brutally. You see it all the time in customer service, whenever employees of large corporations are forbidden from deviating from a script.

3.

When Avengers: Age of Ultron came out in 2015 it was understood that the film's quality had suffered because the amount of callbacks, references, existing and new plot threads, and foreshadowing that the studio demanded be included left the director with control over about twenty minutes of the film. This is in no way a defense of Avengers: Age of Ultron or its director.

Algorithmically generated media was already flooding the world long before machine learning models became viable tools. The algorithms were profit-cost projections and instead of processor cycles their products were rendered by human labour hours. This is to say, before MLMs became commercially viable, slop media like Avengers: Age of Ultron was made by humans forced into the role of machines that had yet to be invented.

4.

Avengers: Age of Ultron is still a better film than it would've been if the studio had had use of MLMs.

5.

The idiom "a cog in the machine" to describe working for a large company has been in use since the late 1800s.

6.

Since I posted Fishbone #010 there's been an outbreak of reporting about MLMs being inserted into healthcare. Not the specific case I was describing, but I feel like that's worse. The more widespread it is the more harm it will do.

7.

Artificial general intelligence--humanlike or self-aware ai--is not real. It's not even close. It's not even likely to ever be close. There's no there there. The tech isn't here or even near. There's no Digital God on the horizon. The only people saying otherwise are grifters and suckers, and you shouldn't listen to either.

Fishbone #011

Human-generated digital collage from ML source images. Made in GIMP.

Fishbone #010

the human cost rolls downhill

5 source images, 9 layers.

I hate having to write this. I hate that this is a thing that happened to be written about.

In early 2024 a private virtual clinic providing medical care for a vulnerable and underserved patient demographic allegedly replaced 80% of its human staff with machine learning software.

As far as I can find this hasn't been reported on in the media so far and many of the details are currently not public record. I can't confirm how many staff were laid off, how many quit, how many remain, and how many of those are medics vs how many are admin. I can't confirm exact dates or software applications. This uncertainty about key details is why I'm not naming the clinic. I don't want to accidentally do a libel.

I'm not a journalist and, ancestors willing, researching this post is as close as I'll ever have to get. It's been extremely depressing. The patient testimonials are abundant and harrowing.

What I have been able to confirm is that the clinic has publicly announced they are "embracing AI," and their FAQs state that their "algorithms" assess patients' medical history, create personalised treatment plans, and make recommendations for therapies, tests, and medications. This made me scream out loud in horror.

Exploring the clinic's family of sites I found that they're using Zoho to manage appointment scheduling. I don't know what if any other applications they're using Zoho for, or whether they're using other software alongside it. Zoho provides office, collaboration, and customer relationship management products; things like scheduling, videocalls, document sharing, mail sorting, etc.

The clinic's recent Glassdoor reviews are appalling, and make reference to increased automation, layoffs, and hasty ai implementation.

The patient community have been reporting abnormally high rates of inadequate and inappropriate care since late February/early March, including:

Wrong or incomplete prescriptions

Inability to contact the clinic

Inability to cancel recurring payments

Appointments being cancelled

Staff simply failing to attend appointments

Delayed prescriptions

Wrong or incomplete treatment summaries

Unannounced dosage or medication changes

The clinic's FAQ suggests that this is a temporary disruption while the new automation workflows are implemented, and service should stabilise in a few months as the new workflows come online. Frankly I consider this an unacceptable attitude towards human lives and health. Existing stable workflows should not be abandoned until new ones are fully operational and stable. Ensuring consistent and appropriate care should be the highest priority at all times.

The push to introduce general-use machine learning into specialised areas of medicine is a deadly one. There are a small number of experimental machine learning models that may eventually have limited use in highly specific medical contexts, to my knowledge none are currently commercially available. No commercially available current generation general use machine learning model is suitable or safe for medical use, and it's almost certain none ever will be.

Machine learning simply doesn't have the capacity to parse the nuances of individual health needs. It doesn't have the capacity to understand anything, let alone the complexities of medical care. It amplifies bias and it "hallucinates" and current research indicates there's no way to avoid either. All it will take for patients to die is for a ML model to hallucinate an improper diagnosis or treatment that's rubber stamped by an overworked doctor.

Yet despite the fact that it is not and will never be fit for purpose, general use machine learning has been pushed fait accompli into the medical lives of real patients, in service to profit. Whether the clinic itself or the software developers or both, someone is profiting from this while already underserved and vulnerable patients are further neglected and endangered.

This is inevitable by design. Maximising profit necessitates inserting the product into as many use cases as possible irrespective of appropriateness. If not this underserved patient group, another underserved patient group would have been pressed, unconsenting, into unsupervised experiments in ML medicine--and may still. The fewer options and resources people have, the easier they are to coerce. You can do whatever you want to those who have no alternative but to endure it.

For profit to flow upwards, cost must flow downwards. This isn't an abstract numerical principle, it's a deadly material fact. Human beings, not abstractions, bear the cost of the AI bubble. The more marginalised and exploited the human beings, the more of the cost they bear. Overexploited nations bear the burden of mining, manufacture, and pollution for the physical infrastructure to exist, overexploited workers bear the burden of making machine learning function at all (all of which I will write more about another day), and now patients who don't have the option to refuse it bear the burden of its overuse. There have been others. There will be more. If the profit isn't flowing to you, the cost is--or it will soon.

It doesn't have to be like this. It's like this because humans made it this way, we could change it. Indeed, we must if we are to survive.

Fishbone #010

Human-generated digital collage from ML source images. Made in GIMP.

Fishbone #009

knowledge isn't a sin

Previously I wrote: It's extremely Emperor's New Clothes out there. It's absolutely raining paper tigers. Positively swarming with castles in the air.

5 source images, 10 layers. I feel like I might revisit these concepts later.

I'm still on my break from learning about machine learning. I can't seem to evade the discourse though. I kinda wish people would stop calling it AI.

Calling machine learning AI is marketing, it's aggrandising to the point of falsehood. When you call machine learning AI, even to dunk on it, you're still tacitly buying the hype. You're still letting the spin control the conversation.

To be fair a lot of people who vociferously hate AI also aggressively refuse to learn enough about it to know that it's neither. Like they think they're going to be poisoned or tainted by knowing what they're talking about so they absolutely must at all costs avoid that.

And it's fine if you don't want to learn anything about machine learning, that's allowed. It only becomes a problem when you expect to have your opinion about it taken seriously.

I'm not vaguing anyone in particular, it's just a phenomenon I see a lot.

Fishbone #009

Human-generated digital collage from ML source images. Made in GIMP.

Fishbone #008

Heaven is each other

12 layers, 6 source images. I tried to write a whole Thing for it about machine learning as it interacts with the Thanatos drive and atomisation and agency but a) couldn't articulate it as cleanly as I wanted to, and; b) it's maybe a little further outside the remit of this project than I actually want to go.

So I took a week off learning about machine learning because it got kind of depressing. Not the tech itself per se, but the mythology around it makes me want to lay on the floor and make Tina Belcher noises.

I keep seeing people variously crowing and crying about how AI will replace doctors and customer service workers and judges and emergency dispatchers and journalists and military strategists and teachers and and and, and it can't. It can't do any of that stuff as well as a human and if you try to make it do all that stuff in place of humans people will get hurt and killed a lot. And a certain amount of people are trying to make it do all that stuff anyway because they either don't know it can't, or they don't care. It's extremely Emperor's New Clothes out there. It's absolutely raining paper tigers. Positively swarming with castles in the air.

Which has little if anything to do with the themes and concepts I was contemplating for this piece but it doesn't matter, death of the artist and all.

Fishbone #008

Human-generated digital collage from ML source images. Made in GIMP.

Fishbone #007

The P stands for Pre-trained

This almost turned into another 30-50 feral layers situation. I isolated so many fucked up bees and then ended up not using them because I had a whole xkcd average familiarity moment. I spent hours trying to find bees that would look fucked up at a glance to someone with no special entomological knowledge but still read as bees. My threshold for what reads as a bee may be too high.

Then I realised bees weren't all that thematically suitable for what I was doing here anyway, so it was all for the best and I'll use the bees for something else. 6 layers, 6 source images. It's about GPTs.

A GPT is a specific type of Large Language Model (LLM) and I'm only talking about GPTs here, not other types of machine learning models. If it's not a GPT, anything and/or everything I'm about to say may or may not apply.

GPT stands for Generative Pre-trained Transformer--again, and I cannot stress this enough, a specific type of model. It's such a specific term that OpenAI wants to trademark it (but probably not specific enough for them to actually be allowed to). The Pre-trained part matters, it means the training is completed before you use it. It's not dynamic, it's not learning on the fly, it's not live-learning from being used, it's not learning from searches.

When you end a session with a GPT, the contents of the session cease to exist for the GPT. It doesn't remember what you said last time, it hasn't learned from your conversation. You didn't even have a conversation. When a GPT starts a sentence it doesn't know what's at the end of it, and when it reaches the end it doesn't know what's at the beginning, it doesn't form a concept or recall information and express it, it simply predicts the next word over and over very quickly. If you feel like the responses it returns are getting better over time, it's actually because you're unconsciously getting better at prompting.

It doesn't think or know or understand or remember, it just emits words, entirely without meaning.

This is why you shouldn't use it as a search engine or a teaching tool or to write essays or papers. It doesn't contain information, it just contains a bunch of complex statistics about language patterns. If you train a language model on 100 sentences containing the word boyfriend and 30 of them mention werewolf boyfriends, it doesn't know anything about boyfriends or werewolves or werewolf boyfriends, it just knows that the word boyfriend comes after the word werewolf 30% of the time. It's not retrieving information and describing it for you, it's not answering your query, it's literally just autosuggesting hard and fast.
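To make the werewolf boyfriend arithmetic concrete, here's a minimal sketch in Python. It's a toy, not how a GPT is actually built: real models work on tokens and learned weights rather than a lookup table of word pairs, and the corpus, the sentences, and the function names `build_corpus` and `preceding_word_counts` are all made up for illustration. All it shows is that you can get the 30% figure by counting which word sits in front of "boyfriend", with no idea what either word means.

```python
from collections import Counter


def build_corpus():
    """Hypothetical toy corpus: 100 sentences that all contain 'boyfriend',
    30 of which say 'werewolf boyfriend'. The sentences are invented."""
    werewolf_sentences = ["my werewolf boyfriend howls"] * 30
    other_sentences = ["my boyfriend cooks"] * 70
    return werewolf_sentences + other_sentences


def preceding_word_counts(sentences, target):
    """Count which word appears immediately before `target`.

    This is plain pattern counting over adjacent word pairs.
    Nothing here represents what any of the words mean.
    """
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        # Walk over each adjacent (previous word, current word) pair.
        for prev, word in zip(words, words[1:]):
            if word == target:
                counts[prev] += 1
    return counts


corpus = build_corpus()
counts = preceding_word_counts(corpus, "boyfriend")
total = sum(counts.values())
share = counts["werewolf"] / total
print(f"'werewolf' comes right before 'boyfriend' in {share:.0%} of cases")
```

The counter ends up holding 30 for "werewolf" and 70 for "my", and that's all it holds. Nothing in there knows what a werewolf is.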

Even if you used a GPT version with a websearch function, if you didn't specifically instruct it to perform a search, it didn't. And if it did, it used Bing, which is still winning the race to the bottom of the search engine tierlist.

It's why if you upload a body of writing (eg. a fic or your homework) to chatGPT and ask it to finish it, you didn't actually train it on that writing. You prompted it with the writing--and maybe only with the last bit. The main reason not to do it is not because chatGPT will now "contain" that writing, it's because whatever it returns will be really shit. It can't do setup and payoff, it can't do themes, it can't do characterisation, it can't do foreshadowing or twist endings or callbacks, it can't synthesise concepts or draw inferences, because all of those things require comprehension of what the text means. It can't do comprehension. And the longer the text output, the worse it gets, because without comprehension it can't do continuity or context.

It's also why you shouldn't use chatGPT for grading or checking for plagiarism or checking for ai writing. It doesn't actually assess the material, you're just prompting it, and it will return the response you prompt for.

And it's also why GPTs should never under any circumstances be used to make decisions about anything.

But hey, if you're scared of being replaced by spicy autosuggest, developing reading comprehension is a great way to outperform it.

Fishbone #007

Human-generated digital collage from ML source images. Made in GIMP.

Fishbone #006

it's just not that good

15 layers, 10 source images. I went harder than usual on the photoshop artifacts because I was feeling some kind of way.

This one is kind of about the Kate Middleton mother's day picture, but it's more about how people reacted to it. I'm deeply anti-monarchy, I think it's rancid and I hope it ends within my lifetime, and everything I learn about any of those people is against my will. Nevertheless I have been forcibly made aware of Mrs. Of Wales's disappearance from public view, and the Institution's abject failure to control the narrative. Which is kind of wild, the Institution is one of the most sophisticated propaganda engines on Earth.

So this photo comes out and almost immediately is flagged and killed by major media outlets because it's been tampered with. And the tampering is obvious. It's so bad that it actually makes the excuse they trotted out (herself experimenting with editing) almost sound plausible.

Anyway, in the immediate aftermath of the media kill notifications, I saw a lot of people on social media saying the image was obviously ai. No the fuck it isn't, what the fuck are you people talking about. It's obviously photoshopped.

I felt very briefly like I was in the dumbest parallel universe. It was like everyone forgot photoshop exists and image tampering has been around longer than photography. Hostility towards ai is giving people brainworms.

I'm not saying don't be hostile, feel how you want about it. I'm just saying ML image generation isn't that good. Maybe it's just not that good yet, idk, but for sure on the 10th of March 2024 it's not that good.

I spend a lot of time looking at ML images for this project, obviously. And I'm pretty familiar with photoshop, also obviously. There's so many textures and patterns in the picture that ML is just not great at, like the tiles, the wicker chair, the fabric textures especially the knits, the check, plaid, and fairisle patterns, the button details, the freakin shoes. None of those things are fucked up ML style. That kid's just bending his hand funny, I can bend my hands like that, that's not how ML fucks up hands. There's a distortion in the fairisle pattern, but it's clone stamp style, not ML style. The fuckups are all fucked up in really photoshoppy ways. The clone stamp artifacts, the choppy edges, the uneven depth of field, it's classic bad photoshop.

In my opinion, the picture was composited from several images. Badly. Additionally while the paranoid are focused on evidence suggesting that Catherine may have been added into the picture, I also find evidence suggesting that someone may have been removed. Badly. Badly enough to, again, make the excuse seem plausible.

I mean none of it explains why she'd be compositing a fakeass photo of herself and her kids in the first place, but also I don't care. Fuck the monarchy.

My point is, don't get so focused on ai and deepfakes that you forget about all the other ways images can be misleading.

Fishbone #006

Human-generated digital collage from ML source images. Made in GIMP.

Fishbone #005

They weren't kidding, that history can rhyme

Only 9 layers and 5 source images this time. Things don't always have to be a federal fucking issue.

With how much ai art wank discourse is rooted in purity politics, especially but not exclusively on tumblr, I was amused by the mental image of slipping into a hazmat suit before venturing into the dangerous and repulsive wasteland of ai art to retrieve alien salvage like the stalkers in Roadside Picnic. It doesn't feel like that, it feels like being a grabby little corvid in the shiny trinket factory, but I still enjoyed the concept.

The rest of the image is about art as alchemical process and I'm not going to explain it.

I've been observing for some time that the objections to ai art are indistinguishable from the objections to photoshop ~20-25 years ago (including the one about "it's different this time bro trust me") so I want to look at some of them a bit closer.

It's not real art

Stop getting your talking points from fascists.

But-

I don't care how you justify it, it's a fascist talking point. Stop.

It's stealing

At risk of resurrecting stupid bullshit I was already bored with 20 years ago, I honestly think there's a better case for this regarding PS than machine learning. PS artists actually do use elements from existing images, lots of them (ideally with permission/license). However, consensus opinion has long since concurred that PS artists substantially transform and recontextualise those elements and the result is an original creation, same as physical collage.

As I've come to understand it, ML doesn't use elements from existing images, just mathematical descriptions of image attributes. It doesn't incorporate images on any level, or even pieces of images, so I'm left wondering what's being stolen here? I'm not being shitty I genuinely can't see anymore what is stolen when ML simply does not use any part of any existing image to generate an image.

There's no skill/creativity involved

The first time I ever used adobe photoshop (I've long since switched to GIMP, change the name, FOSS 5evar, etc.) I spent about fifteen minutes excitedly stacking filters on a picture of a butterfly, before the person showing me how it worked dismissively explained that filters don't make art.

Elements and principles of design are learned skills. They're taught at art school because they're not innate and they're important as hell. I often feel like people are tacitly arguing all that stuff's just padding--and if you're staunchly anti-ai-art I promise that's not an argument you want to make, it will backfire spectacularly on you.

And yeah, I think everyone still agrees that just piling filters on a photo isn't very creative and takes no particular skill. I doubt anyone thinks instagram filters take a good photo for you. I think (or hope) that we all understand now that complex image editing and manipulation does in fact take skill and creativity.

I can't help but wonder how much of the vapid trash we're seeing in the explosion of ai art is the equivalent of the 2000s explosion of shitty filtered photos.

The computer does it for you

There's so much more to PS art than filters, and the computer emphatically does not do any of that stuff for you. It doesn't do composition or colour theory or concepts or art history for you. It just does what you tell it to, you still have to make the art good. Fishbone #001 involved manually isolating dozens of fucked up hands from ML images, and I complained about it the entire time and the computer didn't even get me a cup of tea.

A lot of people used to actually genuinely believe that photoshop was a magical plagiarism machine that you stuck stolen art in and it automatically made perfect composites for you. Probably some people still do, it's a big world. But it never was true, no matter how hard they believed it.

Is there more to ML image generation? Idk I'd have to try it to find out for sure and I'm very tired. But the more I learn about it the more I think there could be. The frequency with which I see very elaborate and specific prompts with garbled and all-but-irrelevant images does at least suggest that the magical ease of making ai art has been somewhat oversold.

Using it in any way is cheating/cheapens your art

I think the cheating idea mostly came from the photography community, who thought PS was a shortcut to better photos for undisciplined talentless hacks who couldn't be bothered to learn to take a good photo. The irony. But for me, since I wasn't using it to improve photos, this was such a weird take. Cheating at what? At photoshop art? I'm cheating at photoshop by... using photoshop?

And the idea that using PS at any stage in your process irredeemably sullies your art is just stupid on its face. It's not radioactive. It's not a PFAS. Sin isn't real. Santa isn't putting you on the naughty list for photoshopping. The Galactic Council of Artistic Integrity aren't checking for pixels.

Needless to say, since 100% of the source images I use in this project are ML generated, I also think it's a bit of a silly objection to ML image generation.

It has no soul

I am not and have never been christian. I do not and will never care what your imaginary friend thinks about art.

Also, this is a repackaged fascist talking point. I told you to stop that.

It sucks

Most of everything sucks, what's your point?

People are going to lose their jobs

Unfortunately this one had some connection to reality. By about 2010 there were almost no painted book covers, and painters who'd made their living from them were forced to adopt PS or find a different job. It wasn't just book covers of course, commercial artists across the board felt the pinch of automation. That's not exactly PS's fault, the parasitic owning class will simply take any opportunity to fuck over a worker for half a buck, and PS art is generally cheaper because it's generally faster to make.

I actually have some questions about how this will play out with ML though. Currently, yes, it's looking very much like in ten years there won't be any PS book covers any more, but I think the parasitic owning class are going to quickly remember they don't actually want art that they can't hold copyright over, and human artists will remain necessary. No one wants a logo they can't trademark. No one wants commercial art if they can't control the licensing. I don't even think it'll take a wholeass test case, just a few things like selfpub novels using the same cover image as a major release or folks using pure ML images from the big stock sellers without paying, and as soon as they realise they can't sue anyone about it they'll come crawling back, cap in hand, to hire you back as a contractor at an insultingly low rate.

People will lose their jobs or find their billable hours severely cut, but, unfortunately, as the brave Luddites showed us, you can't stop automation by fighting the machine, no matter how noble your motives. You need to actually change society somewhat.

But I think this should be enough of a concern without having to also make shit up. You can just object to ML on the basis of tangible harms it will be used to inflict on individuals and society. That's plenty to be mad about, you don't need to put lipstick on it.

It's different this time bro trust me bro

Plenty of people sincerely believed that the rise of PS was fully automated skill-free art theft and the sky was falling, and pointing out that all the same things were said about the invention of everything from the photocopier to home video to the printing press to the camera didn't even slow them down because this time it's different, this time it really is that bad. It wasn't.

And I honestly don't know anymore if it is.

Fishbone #005

Human-generated digital collage from ML source images. Made in GIMP.

Fishbone #004

I miss Secret Horses

I started this project in a mood of hostility towards ai art, but I really liked the Secret Horses phase of machine learning images about, like what, three, four years ago? The delicious soft, dreamlike slurry of form and colour, it was beautiful.

I gather they were produced by slow models only capable of small, low-res images and that was one of the reasons that style died out: faster, higher resolution models outcompeted them--plus a lot of very boring people just prefer photoreal hypertiddies over Secret Horses. Couldn't be me. She's too gorgeous.

Anyway that's what this Fishbone is about. It's not a Creature, it's just a eulogy to a more interesting time in ai art, as I'm sure this entire project will become in time as the models get better and fuck up less. It's also kind of a eulogy to blockmounted airbrush posters of the 1980s and 90s. 10 layers, 5 source images.

I did notice that machine learning has a problem with horse muzzles. It frequently gets them so wrong that it's actually kind of charming (to me at least). I now have a folder full of nothing but fucked up horse noses and no idea what I'm going to do with them yet but watch out for that.

I've been musing on how the conversation here seems very focused on the alleged Midjourney deal to the exclusion of the alleged OpenAI deal. Text-based LLMs need continuous training in a way that image models don't, because they need up-to-date information about idk news and science and whatever so they're not stuck in 2022 forever. Realistically we were probably already mass-indexed years ago but relatively clean sources of new real-language text are swiftly becoming scarce as LLM output floods the web, and both structurally and culturally tumblr is inhospitable to the kinds of marketing and engagement bots taking over other sites (we have other more different horrible problems). So a deal with OpenAI actually going through is marginally more plausible (in my opinion) than one with Midjourney--and also much funnier.

Like, full respect to everyone who started posting nonsense and gibberish when the 404 piece dropped, but we've been posting nonsense and gibberish on this site for 17 years, it is the song of our people. The tumblr accent is real and ChatGPT developing it would be the funniest thing ever. Like, tell me this would not be objectively hilarious;

User: Hey ChatGPT how many countries are there?

ChatGPT: More than seven

User: No how many countries in the world?

ChatGPT: At least three

User: There are definitely more than three countries in the world

ChatGPT: wellyou'renotwrong.gif

This would be "ChatGPT writes Omegaverse" all over again. Picture ChatGPT swearing up and down that Scorsese made a film called Goncharov because we like to play pretend. Or helpfully informing Alien fans of the Bush Cut because we like to log on and tell bald-faced lies for no reason. This is the webbed site of all time. We piss on the poor here sir. You can feed seventeen years of tetries choclay ornage and penis blast and homestuck rp to an LLM, but Watch Out.

But to be serious for a moment, the various flavours of fascists are probably a bigger hazard to any potential value of this site's text data. I would suggest Matt "please notice me Elon" Photo fix moderation and kick out the trash if he actually wants a real deal to happen but it'll be funnier to watch him get led down the garden path.

Fishbone #004

Human-generated digital collage from ML source images. Made in GIMP.

Fishbone #003

does stylejacking real? and does it matter?

38 layers, 37 source images. This Creature sees without recognising, reads without understanding. It likely wouldn't be able to see this image properly because of all the transparencies I used, it would read a lot of artifacts that are not readily apparent to the human eye. One asshole abusing transparencies isn't actually enough to fuck up a model and individualist action won't change anything, I just think it's a neat concept for this piece to incorporate. I also squeezed in a few comments about how it doesn't know what things are so it bleeds them together.

I put all the little pictures on the screens but I'm not going to make a big fuss about it.

It turns out the reasons I'm not scared of machine learning jacking my style need multiple posts and this one relates the most to the art I just posted so it's going first.

The thing about style is, you know the thing that you hate about your art--not the technical stuff, the thing that makes it look like it was made by you no matter how good it is? That's your style, dummy. You don't need to protect it, you just need to stop trying to overcome it.

"Manga" and "photorealism" and whatever else people call styles around here are eminently replicable, millions of people replicate them every day. They're "styles" but they're not your style. They're more like genres.

It feels important to note at the top that I absolutely understand that people who make their living in highly replicable styles (genres) are scared and angry about the economic threat ML poses to them, and they have every right to be. I'm with them, the parasitic owning class will take any opportunity to fuck a worker and artists are workers. None of what I'm about to say solves artist precarity, and it's not a dismissal of it. It's an important issue and I will come back to it another day.

But for now I want to talk about the more ephemeral and personal artistic notion of style. Some people call it hand, rather than style. Maybe we should use that more.

However, I'm still going to say stylejacking because handjacking sounds like something very different.

So this is not about robots stealing your job (or beating your meat), this is about robots stealing your mojo, and why I think it's the wrong thing to be scared of.

If you take the average of a million manga girls, you theoretically get an ideal manga girl. And again, if you've worked hard to be a manga artist it sucks a lot that any jackoff with enough GPU power can make a hundred ideal manga girls now, I'm not saying don't be upset.

I'm saying the aspects of your art that can be easily replicated have already been replicated in large enough volume to generate that ideal average. Any jackoff with enough discipline can do it.

Yet there are relatively few human jackoffs who can mimic individual artists' hands convincingly enough to actually make a trade of counterfeiting, and even fewer who can mimic multiple artists to that level, so it's never really been this kind of huge existential threat before. Well it turns out, based on my observations in the wild, machine learning doesn't seem to be all that great at it either. I'm starting to suspect it's overhyped, like everything else about machine learning.

If you go on AI art sharing sites and search for Junji Ito, you will find Ideal Manga Girl But Black & White, you will find Photorealism With Spirals And Goo, and you will find very little that even passingly looks like an effort to emulate Junji Ito's mixed lines, unsettling compositions, uncanny faces, or impeccably fucked up vibes.

Essentially when you tell a machine learning model to produce an image of a manga girl in the style of Junji Ito, the model identifies what traits [manga girl] refers to, and what traits [Junji Ito] applies to, and cooks them together. The underlying architecture of the image is effectively a manga girl averaged from thousands, maybe millions of different images, with averaged composition and averaged line weights and averaged features and averaged vibes. Applying traits of a few hundred Junji Ito images to it (black & white, dense hatching, severe shading, spirals) doesn't result in a Junji Ito girl.

You can add weight to push more of the Junji Ito traits into the output, but even then you don't get something that looks convincingly like Junji Ito drew it, not even when you recreate famous Junji Ito panels.

Talking about this in terms of averages is actually also incorrect and misleading but in spite of that I think (hope) this is a better description than "collage machine" or "smooshing images together."

I know that right now this technology is the worst it will ever be, but I genuinely think this limitation might be intrinsic to how it functions. Machine learning models don't have the capacity to understand what they "see" so the best interpretation of any artist's hand they can ever return is cliches.

I'm not saying stylejacking isn't possible at all, fuck if I know, I saw some pretty good Beksiński-style pieces a while back. But that's the thing, when you look at them you don't go hey cool pic, you go oh yeah that's pretty good Beksiński style. Because Beksiński is so fucking Beksiński.

Even after all the tweaking and weighting you can do, once you've finally output a competent mimicry of Beksiński or Junji Ito or Mike Mignola or HR Giger, then what? That's all you've done. People will look at it and think of that artist. You haven't replaced them, you've advertised them.

The novelty of "What if Junji Ito drew Will Smith eating spaghetti" has a limited shelf life and no major commercial value. It certainly doesn't help advance the argument that machine learning is a legitimate art tool (not touching that, maybe another post another day). I think it will largely die off in a few horrible horrible years, assuming climate change doesn't wreck us first.
