About Ray-MMD x ExcellentShadow 2

I’m writing a small post this time to share a detail that left me curious for a while: why do people combine ExcellentShadow with Raycast? Does it produce better results?

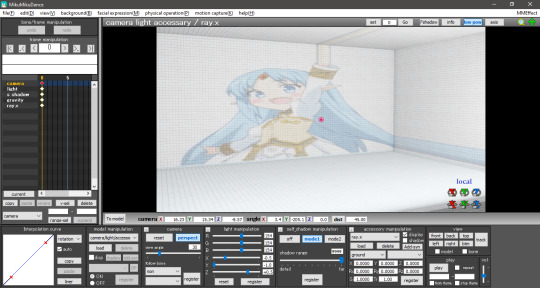

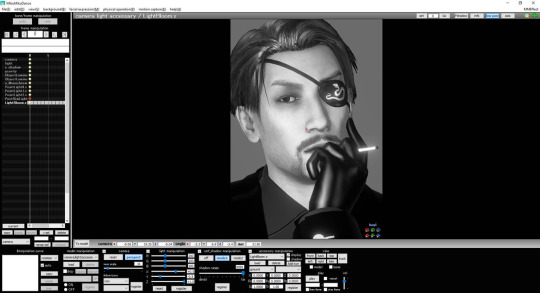

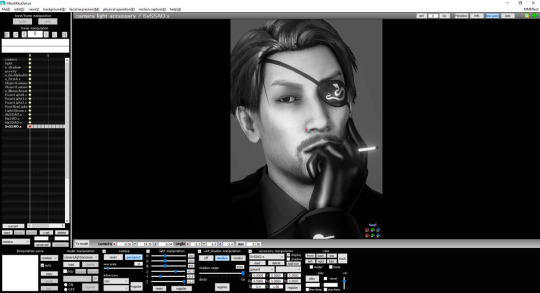

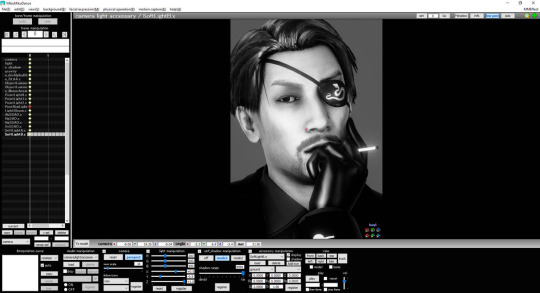

Intrigued, I started looking for answers after hearing from a Rebirth artist that ExcellentShadow may or may not improve the overall results, which sounded superstitious. So I tried it myself: I opened MMD, loaded and set up Ray, and added ExcellentShadow. At first there was no change at all in the shadows; everything looked the same with it enabled or disabled.

Next, I loaded two physical lights with ExcellentShadow enabled to check, but again, nothing changed. If it only works half the time, then I suppose I was unlucky and didn’t find the right settings.

So I asked two of my contacts who are very experienced in Raycast rendering whether ExcellentShadow interacts with Ray at all. Their conclusion: adding it to Ray is redundant, as Ray’s shadows are already very smooth, and even smoother with “boom!” settings. Ray already does what ExcellentShadow was made for, not to mention that the built-in physical lights have their own soft/hard shadow feature.

In other words, it’s pointless to load ExcellentShadow with Ray; it’ll only consume more resources. I’m open to discussion about this, so if you use it and it actually works, with solid proof, let me know by either reblogging this post or messaging me privately on my social media. Peace!

RAY-MMD: Rendering Low Lights

Welcome to another Raycast tutorial. This time, we’ll explore rendering low light scenes in MMD and learn together.

Content index:

Introduction

Envision

Theory

Practice

Post editing

Credits

1. INTRODUCTION

Dark ambience is one of MMD’s biggest challenges due to software limitations. Thankfully, Raycast is powerful enough to handle it for us, but you must know what you’re doing. Unlike daylight, darkness requires careful control of exposure, shadows, contrast and gamma.

In this tutorial, I’m going to expand on the technique I’ve used in this render for January’s MME contest. I’m not an expert, so please don’t quote me on anything!

2. ENVISION

When creating art, one of the first steps is to have a clear idea of what you’re going for and to set realistic expectations. That’s key in atmospheric renders, which depend on lights to fulfill their purpose. In MMD, you’ll have to work twice as hard on this type of render, and it still won’t look like something out of Blender. Ray is powerful, but it’s still child’s play compared to other software, and there’s no other way to put this.

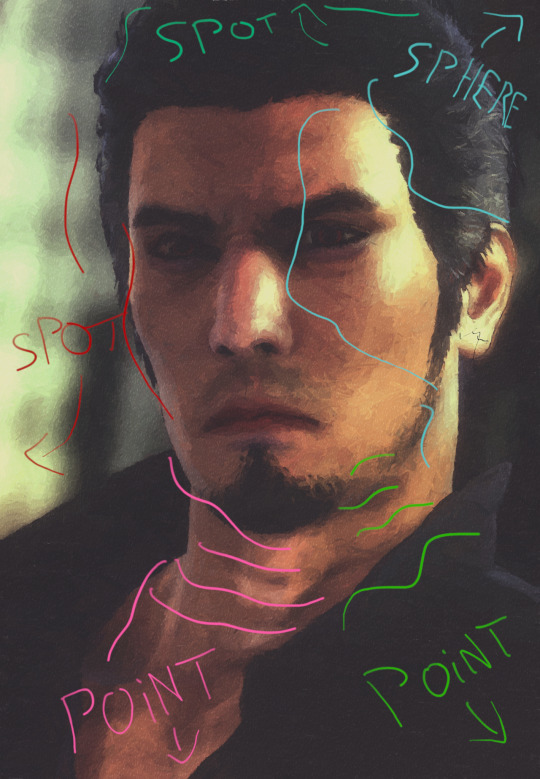

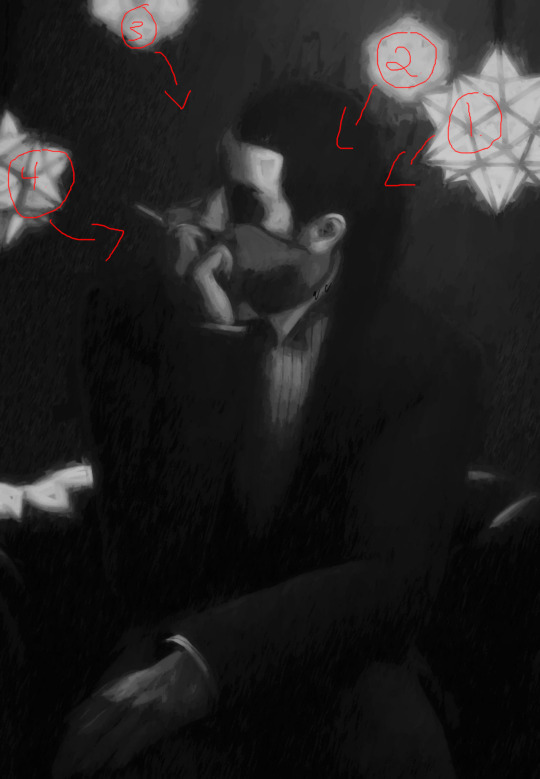

So we start building the render with the basic question: “what do I want?”. In this case, I wanted to make a lonely, dark scene with Sugiura struggling in the cold. The green dots are the rule of thirds intersections; they focus on his face and hands while drawing attention to the background. The viewer has enough points of interest: the model, the ground and the lamp post.
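The rule of thirds intersections are easy to locate for any output size. A minimal sketch, assuming pixel coordinates measured from the top-left corner; `thirds_intersections` is a hypothetical helper, not part of MMD or Ray:

```python
def thirds_intersections(width, height):
    """Return the four rule-of-thirds intersection points (x, y) in pixels,
    measured from the top-left corner of a width x height frame."""
    xs = (width / 3, 2 * width / 3)    # the two vertical guide lines
    ys = (height / 3, 2 * height / 3)  # the two horizontal guide lines
    return [(x, y) for y in ys for x in xs]

# Where to aim the face and hands in a 1920 x 1080 render.
for point in thirds_intersections(1920, 1080):
    print(point)
```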

If you have this stage, you’ll know that Sugiura is far away from that lamp post, but the rather low perspective value (24) disguises that. The lights will trick the viewer into thinking the elements are closer than they are.

The main goal is to recreate this kind of light, but not leave Sugiura completely in the dark.

3. THEORY

Let’s discuss how dark ambience should work in Ray. Naturally, you’d open the Time of Night or Sky Night skybox, but both have strong contrast that can be difficult to tweak if you’re not paying attention.

Time of Night vs Sky Night. Both are similar; the main difference is the stronger contrast in Sky Night. Because of that, the shadows can’t be easily controlled with Sky Night: the user is stuck with a very dark shadow and can only soften it by changing its RGB.

With this in mind, the best option is to use Sky Hemisphere or any other skybox with softer shadows. That’s safer and gives the user much more control. I highly recommend activating Ray’s eye adaptation in any situation.

The Auto Exposure, commonly called eye adaptation, is a Ray feature that automatically adjusts the exposure of the current scene view to become brighter or darker. This effect recreates the experience of human eyes adjusting to different lighting conditions.

Eye adaptation is disabled by default. To enable it, open ray.conf, scroll down to the eye adaptation section and change the number to either 1 or 2. In my settings, I picked 2.

```
// Eye Adaptation
// https://docs.unrealengine.com/latest/INT/Engine/Rendering/PostProcessEffects/AutomaticExposure/index.html
// 0 : None
// 1 : ISO 100 // Middle Gray at 12.7% // http://en.wikipedia.org/wiki/Film_speed
// 2 : ISO 100 // Middle Gray at 18.0%
#define HDR_EYE_ADAPTATION 2
```
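For a rough idea of what eye adaptation does, here is a minimal sketch of the textbook auto-exposure approach: average the scene luminance on a log scale, scale it toward a middle gray target (12.7% or 18%, matching the two options above), and ease toward that target over time. This is an assumption-based illustration, not Ray’s actual shader code. The function names and the adaptation `speed` are hypothetical.

```python
import math

def log_average_luminance(pixels, eps=1e-4):
    """Geometric mean of scene luminance; pixels are linear RGB tuples."""
    total = 0.0
    for r, g, b in pixels:
        lum = 0.2126 * r + 0.7152 * g + 0.0722 * b  # Rec. 709 luminance
        total += math.log(eps + lum)                # eps avoids log(0) on black pixels
    return math.exp(total / len(pixels))

def exposure_scale(avg_lum, middle_gray=0.18):
    """Multiplier that maps the average luminance to middle gray (0.127 or 0.18)."""
    return middle_gray / avg_lum

def adapt(previous, target, dt, speed=1.5):
    """Move the adapted luminance toward the target over time, like an eye."""
    return previous + (target - previous) * (1.0 - math.exp(-dt * speed))
```

For example, a dim scene averaging 0.02 luminance gets an exposure scale of 9 with the 18% target. This is one plausible reason an adapted night scene can still look brighter than intended.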

With eye adaptation, you’ll have an easier time adjusting the exposure, which helps a lot in dim environments. To further improve the low light experience, disable the sunlight and make the shadows darker using the facial sliders below. The following settings were used in my Sugiura render:

Night works differently from day: while in daylight you focus on balancing brightness and saturation, nighttime calls for less saturation (unless there’s neon) and darkness control, so the shadows aren’t “exploding dark” on the model. I always keep this quote by Renoir in mind when working with darkness: “no shadow is black. It always has a color. Nature knows only colors... White and black aren’t colors”. In other words, tinted shadows are always welcome.

Thankfully, Ray offers the option to tint shadows using RGB, so you can use this to your advantage. In very basic color theory, warm lights call for cool shadows and vice versa. Your scene will look much more natural this way.
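The “warm light, cool shadow” rule can be sketched by rotating the light’s hue halfway around the color wheel and keeping the result dark and desaturated. This is a minimal sketch using Python’s standard `colorsys` module. `shadow_tint` and its default saturation/value are hypothetical starting points, not Ray settings.

```python
import colorsys

def shadow_tint(light_rgb, saturation=0.25, value=0.2):
    """Hypothetical helper: a dark, desaturated shadow RGB (0-255) whose hue is
    opposite the light's hue (warm light -> cool shadow and vice versa)."""
    r, g, b = (c / 255 for c in light_rgb)
    hue, _, _ = colorsys.rgb_to_hsv(r, g, b)
    opposite = (hue + 0.5) % 1.0  # half a turn around the color wheel
    rr, gg, bb = colorsys.hsv_to_rgb(opposite, saturation, value)
    return tuple(round(c * 255) for c in (rr, gg, bb))

print(shadow_tint((255, 170, 80)))   # warm orange lamp -> bluish shadow
print(shadow_tint((100, 150, 255)))  # cool moonlight -> warmish shadow
```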

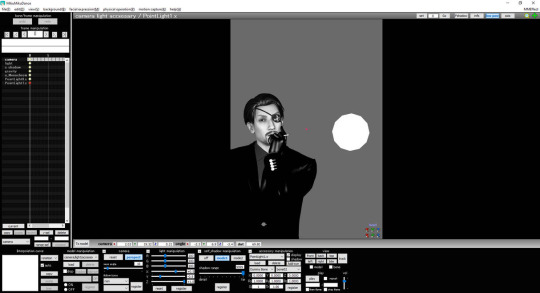

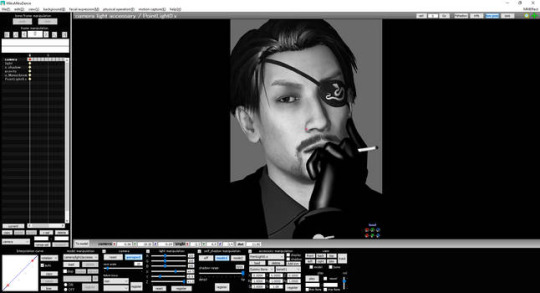

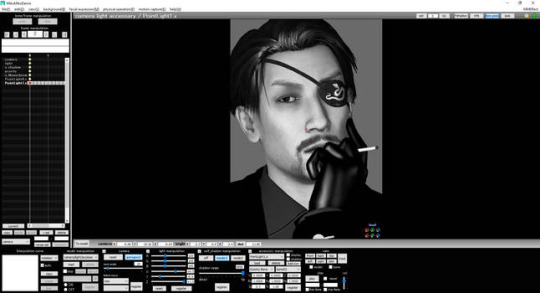

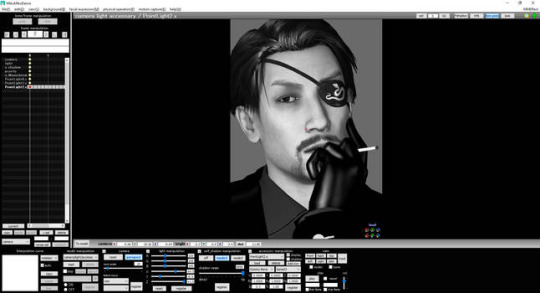

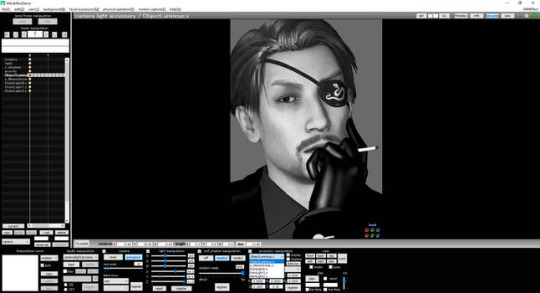

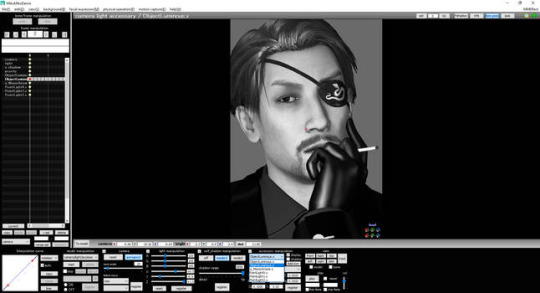

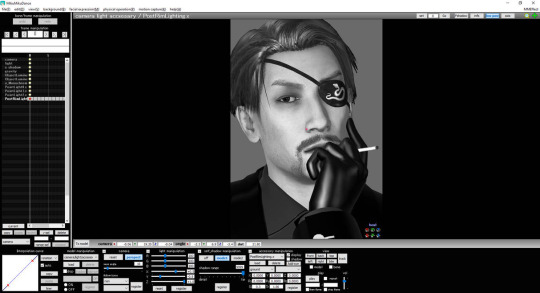

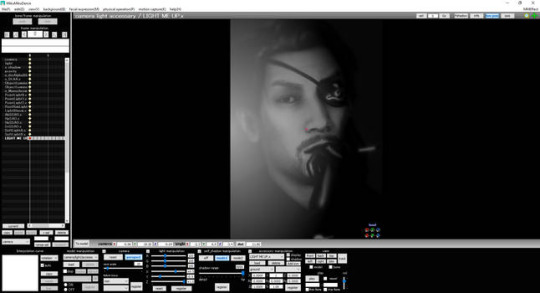

4. PRACTICE

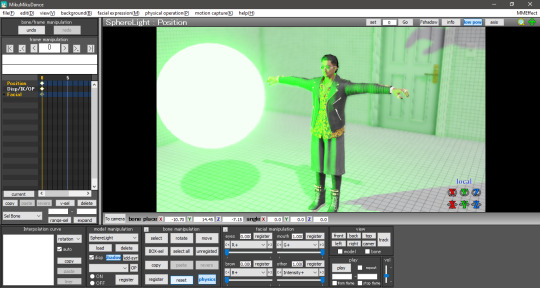

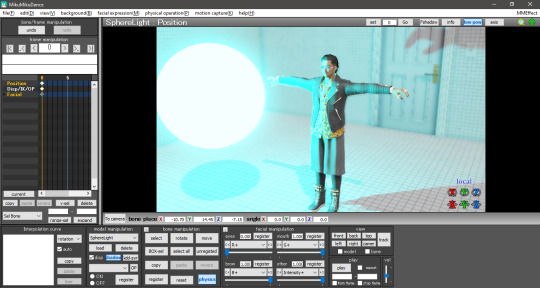

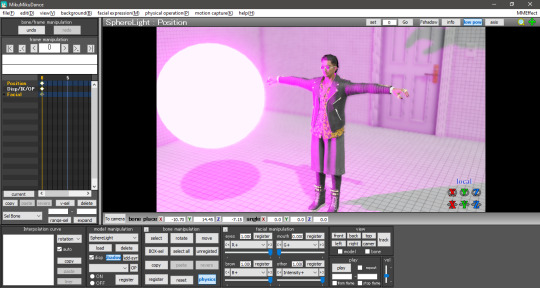

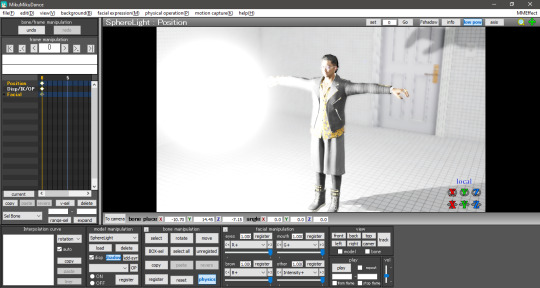

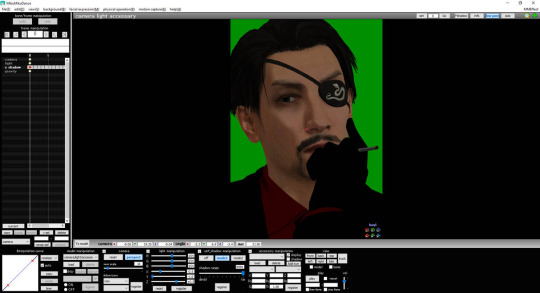

So let’s put everything we’ve learned so far into practice, as some things can only be learned that way. First, I loaded Ray and set it up for basic usage: materials and skybox light. Next, I moved MMD’s default lighting behind Sugiura to help me work with the physical lights. It acts like the sun for Ray, and you’ll build your lights around it. The skybox used is Snowy Park by Freya-Vhal; it’s very useful for night scenes, since it already has blue tones.

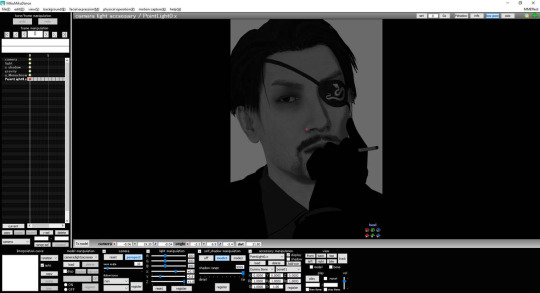

Do you notice how the scene is still bright? It won’t get very dark even with the sunlight disabled and the shadows as dark as possible. To darken it, you need to decrease the exposure and gamma. For now, push the sliders as far as you see fit, but don’t forget about them. You’ll go back to them later for fine tuning.
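Exposure and gamma darken the image in different ways. Exposure scales every light value by a power of two per stop, while gamma bends the midtones. This is a minimal sketch that assumes a simple “scale, clamp, then apply 1/gamma” pipeline. Ray’s own tone mapping is more involved, and `expose` is a hypothetical helper.

```python
def expose(value, ev=0.0, gamma=2.2):
    """Darken or brighten a linear light value, then gamma-encode it.

    ev: exposure offset in stops (-1 halves the light, +1 doubles it).
    gamma: encoding gamma; a lower value gives a darker result for inputs below 1.
    """
    linear = value * (2.0 ** ev)         # exposure: multiply by 2^ev
    linear = min(max(linear, 0.0), 1.0)  # clamp to the displayable range
    return linear ** (1.0 / gamma)       # gamma encoding

# Compare a mid-gray pixel under a few settings.
for ev, gamma in [(0.0, 2.2), (-1.0, 2.2), (-1.0, 1.8)]:
    print(ev, gamma, round(expose(0.5, ev, gamma), 3))
```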

If you’re struggling to darken a scene, a volumetric cube/sphere fog can help. For that render, I used the atmospheric one to darken the skydome behind the model.

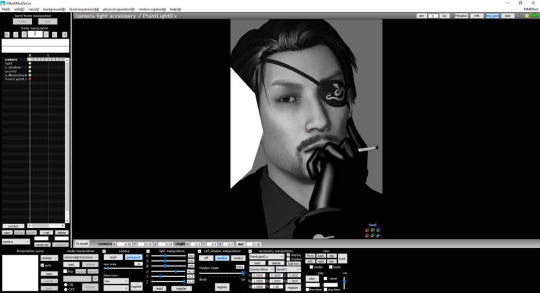

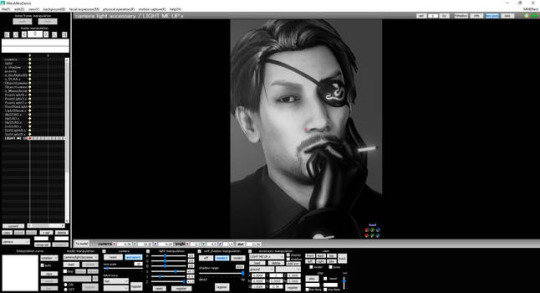

With the scene as dark as you wish, it’s time to light up the lamp post behind Sugiura with a point light and use the directional light as support to the right. The left side can’t be left completely in the dark though, since there are other lamps ahead, so I added another point light to balance the darkness.

The bulbs have a strong emissive material plus ObjectLuminous to help with the shine. The jacket has another ObjectLuminous to separate it from the background, since I don’t want it to become a dark mass around Sugiura’s body. Later, I added ikCluts to help set the mood.

The strong rim light added in the next step through PostRimLighting is mostly there to separate the elements of Sugiura’s body and define the edges of his coat. As a major bonus, it supports the backlight for more natural lighting. Rim light is always welcome when the light comes from behind the model. Its other use is trickier, but I often add it to help with 2D shading as a personal preference. When you play with its RGB values, PostRimLighting becomes a powerful stylization tool.
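Rim lighting is usually built on a simple idea: the more a surface turns away from the camera, the brighter its rim. This is a minimal sketch of that classic term, assuming unit-length normal and view vectors. It is not PostRimLighting’s actual code, and the `power` and tint values are hypothetical.

```python
def rim_intensity(normal, to_camera, power=3.0):
    """Classic rim term: 0 where the surface faces the camera, 1 at the silhouette.

    normal and to_camera are unit-length 3D vectors (tuples).
    A higher power makes the rim thinner.
    """
    facing = sum(n * c for n, c in zip(normal, to_camera))  # cosine of the angle
    facing = min(max(facing, 0.0), 1.0)
    return (1.0 - facing) ** power

def rim_color(normal, to_camera, tint=(0.6, 0.8, 1.0), power=3.0):
    """Scale an RGB tint by the rim term, which is where the stylization comes in."""
    k = rim_intensity(normal, to_camera, power)
    return tuple(k * c for c in tint)
```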

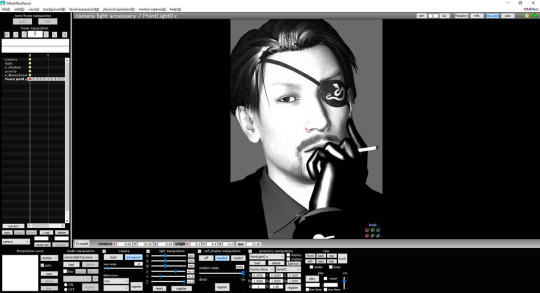

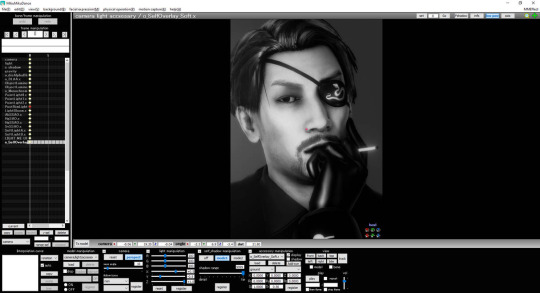

The whole atmosphere comes from careful use of ikCluts to “lift” the shadows and tint the scenery. The lamp post stands out because of the BREAK THE COLOR effect, which adds a “source of light” while darkening the rest as a post effect. It’s very, very useful for low-key rendering when combined with o SelfOverlay Soft and LightBloom.

Do you remember the exposure and gamma? In the advanced stages of the render, it’s important to play around with them, because the scene gets darker as you load more effects. The initial setting won’t work in most cases, and that’s totally fine: you have to fine-tune everything until you reach harmony.

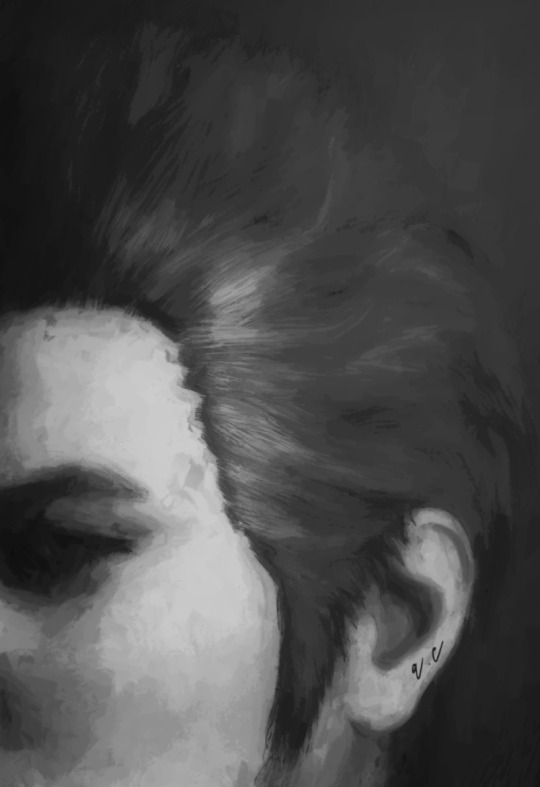

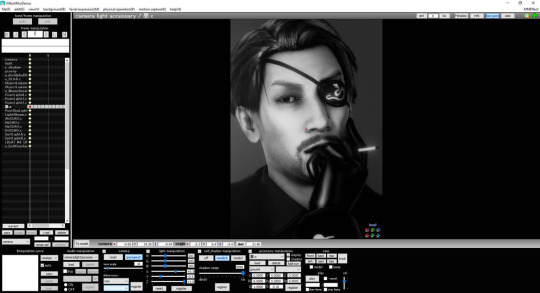

5. POST EDITING

Regardless of what others think, post editing isn’t bad, and any MMDer who chooses to post edit shouldn’t be seen as inferior. Even professionals retouch their work in Photoshop, GIMP or whatever. In post editing, you can retouch the shadows and brighten the light source.

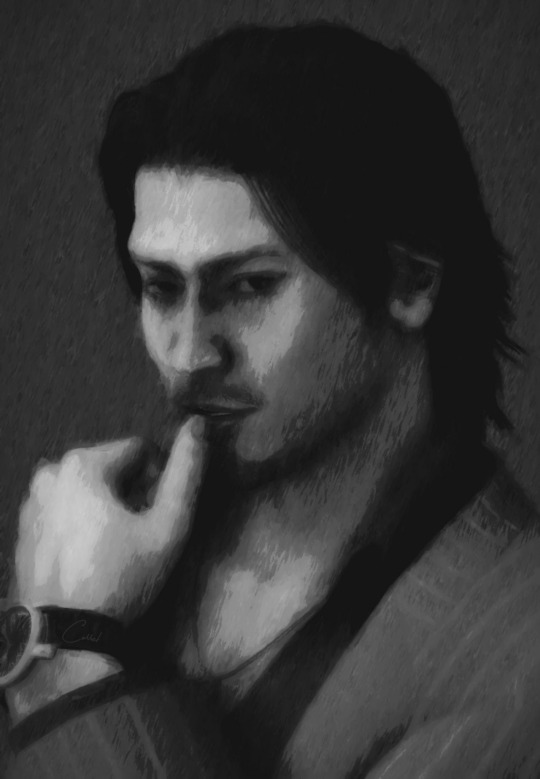

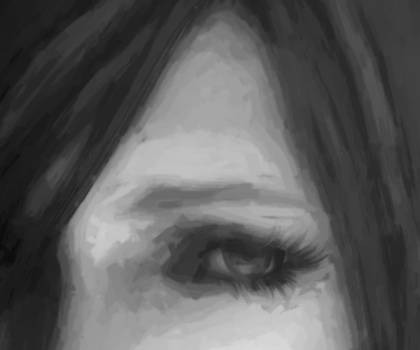

Finally, I added this painting effect, which made everything much more poetic and dramatic. Can you see how important it is to separate elements with rim light when going for a 2D painted look?

The lamp post almost blends into the tree; the shapes are very soft. You can tell it’s a lamp post because of its base. The same goes for Sugiura’s coat: it’s defined thanks to the rim light and would have become a solid mass without it.

6. CREDITS

Render: KS, SEGA, Ai

Eye adaptation documentation by Unreal Engine

NPR: rendering manga panels in MMD

Welcome to the manga stylization tutorial! Here, I’ll cover the core of this style for MMD as well as its usage.

Content index:

Introduction

Effects

Manga Resources

Rendering panels

Building Your Manga Panel

Credits

1. INTRODUCTION

It’s no news that many people underestimate MMD’s capabilities, not to mention how unexplored its stylization areas are. 3D rendering isn’t only about realistic and semi-realistic rendering! MMD excels at stylization because we have many cel shaders, coloring and screen texture effects in our arsenal.

Manga is no different! We all know every mangaka has their own style, and the same applies to us MMDers. I’ve been experimenting with manga stylization on and off, trying to find good effect combos.

“But ryuu, why would I want to make manga in MMD??”, you may be asking yourself. Here are some advantages:

A viable option for anyone who doesn’t know how to draw, like me.

Unique visuals! A 3D manga looks beautiful, creative and unique when done right.

Think out of the box and add fan panels of your favorite characters to your portfolio!

Since MMD models are very anime-ish, it’s much easier to make “legit” manga-looking panels! Comic theory and post editing are required, but worry not! I’ve got you covered.

2. EFFECTS

If I were to compile my manga renders, I’d have an extensive list of effects that produced pleasing results. I’m going to write down every one I can remember, render by render.

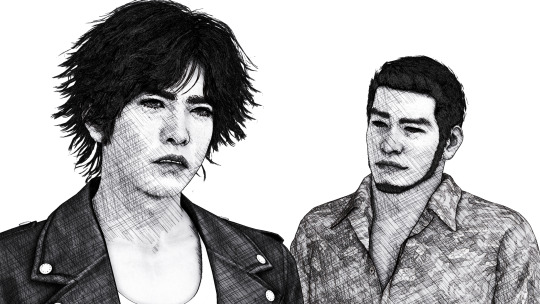

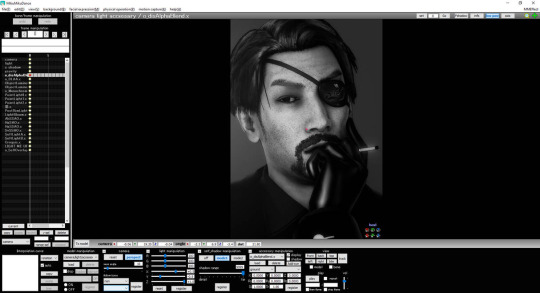

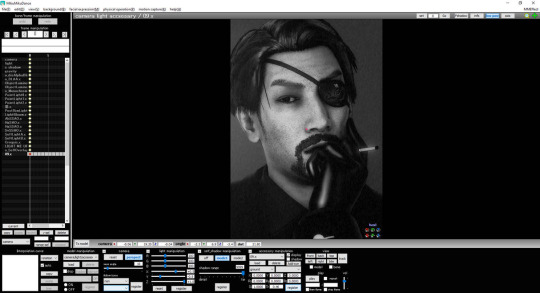

Effects: a-toon, excellent shadow, ik hatching, o color halftone 3p, o monochrome, croquis 改, draw style, o selfoverlay, o dlaa, o disalphablend.

Effects: a-toon, drawstyle, o monochrome, o dlaa, o disalphablend, o selfoverlay, croquis 改.

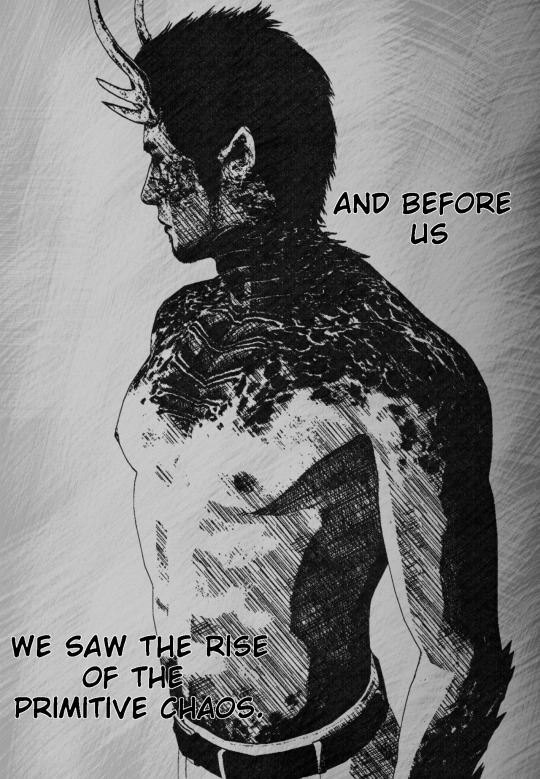

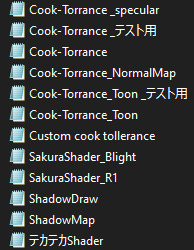

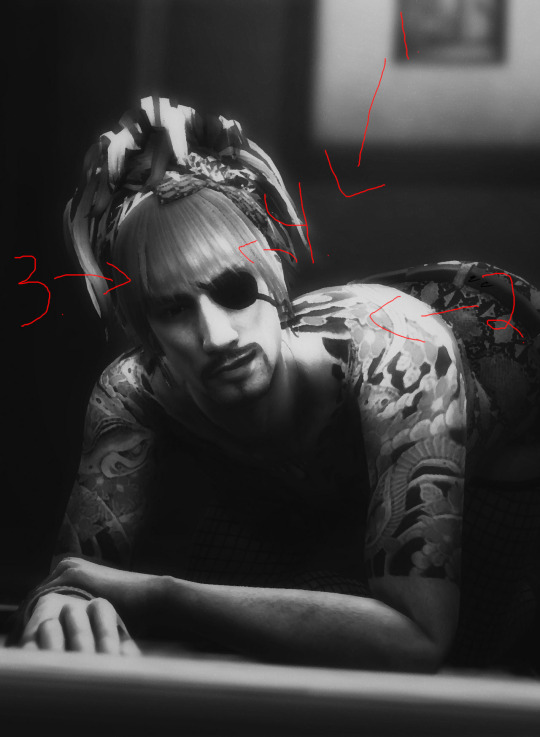

Effects: ray toon, lightbloom, ik hatching, croquis 改, powerdof, o selfoverlay, o color halftone 3p, postrimlighting, o monochrome, snapshot, fxaa.

Effects: ray toon, o monochrome, croquis 改, ik hatching, powerdof, o color halftone 3p, ik watercolor, o selfoverlay, snapshot, o tonemap の改変, fxaa.

Effects: ray toon, croquis 改, o selfoverlay, ik hatching, fxaa, snapshot.

Effects: adult shader, croquis 改 v2, o monochrome, ik hatching, hg sao, o bleach-bypass, o full alphatest, air, clarity.

Did you see how diverse the manga style can be? It’s all up to you how you want to stylize them! The key is making it black and white and adding a toon shader or a very basic one like adult. Don’t forget the contrast work!

If going for Raycast, make sure to use no more than two additional lights. I always add a directional light and go for it. NPR isn’t heavily shaded. If you stack lights, you’ll have double toon shading, which can look weird. I only advise using Raycast if you know what you’re doing.

I know there’s a manga shader, but I personally dislike it, since it doesn’t work well with the models I use.

Also, if you decide to use any bloom effect, make it subtle! We don’t want to give away every 3D aspect of your render, do we?

3. MANGA RESOURCES

So let’s say you want to make a manga panel in MMD. First of all, we need the right resources, so you can post edit the renders for a convincing look. If you aren’t familiar with post editing, I recommend practicing it and looking at 2D manga scans for reference. The software I use is GIMP, a free and open source program that does much of what Photoshop does. It opens Photoshop brushes, so no need to worry about that.

I’ll link a handful of manga resources for post editing!

Speech Bubbles

Manga speech bubbles 3 (image pack)

Manga speech bubbles 3 (GIMP brush)

Tails for speech bubbles

Shaky speech bubbles (GIMP brush)

Shaky speech bubbles (image pack)

Action bubbles (GIMP brush)

Screentones

Digital screentones

Action lines

Action lines 2

Various resources

Shoujo effects (brush)

Tone stars and shapes redux

Manga onomatopee for MMD

Regular fonts

Fonts for editing manga

Manga Temple

Augie

Felt

Wild Words

SFX fonts

Another

Big Fish Ensemble

TrashHand

Vnhltfap

4. RENDERING PANELS

First, we need to pick our resolution! A panel can be 1700 x 2400; then you can copy the premade layouts for positioning. In my “oh, you handsome devil!” panel, I rendered each image at the default 1920 x 1080 and resized them later to 1570 x 883 for good margins. Thin or thick margins, that’s up to you.
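The 1570 x 883 size comes from scaling 1920 x 1080 down while keeping the 16:9 ratio. On a 1700-wide page, that leaves 65 px on each side. Here is a quick sketch of that arithmetic. The helper names are hypothetical.

```python
def fit_width(width, height, target_width):
    """Scale (width, height) to target_width, keeping the aspect ratio."""
    return target_width, round(height * target_width / width)

def side_margin(page_width, image_width):
    """Margin left on each side when the image is centered on the page."""
    return (page_width - image_width) / 2

print(fit_width(1920, 1080, 1570))  # resized render
print(side_margin(1700, 1570))      # margin per side on a 1700 x 2400 panel
```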

Important note: when composing your scene, think of the final layout as well! Use the rule of thirds and try picturing where the speech bubbles and other effects will go. Planning is vital when making comics, and it’s okay to render many attempts before the final image.

Pick a nice set of effects that matches your manga style and render! Dynamic shots look awesome with a higher perspective value. Textures like color halftone greatly improve your manga aesthetic and contrast, but be careful not to overdo it.

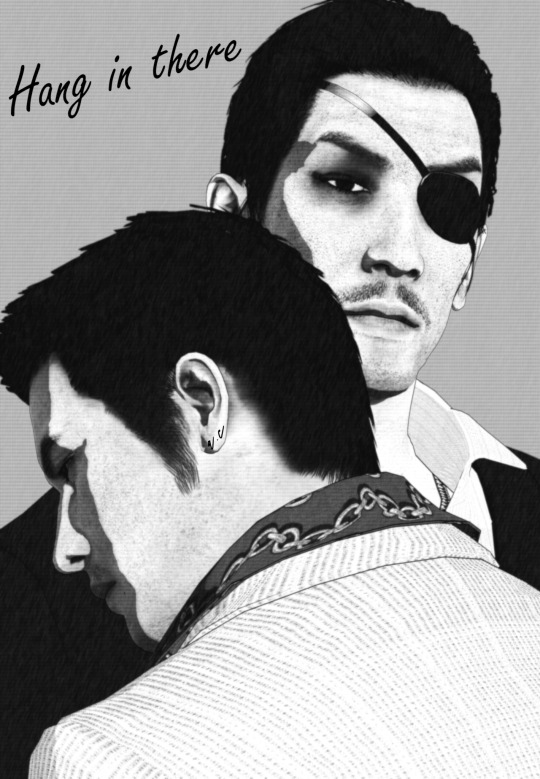

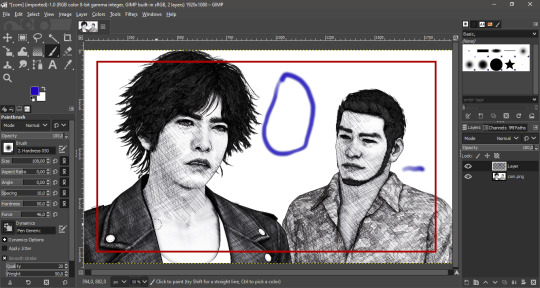

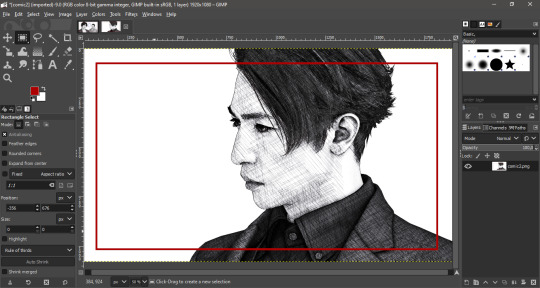

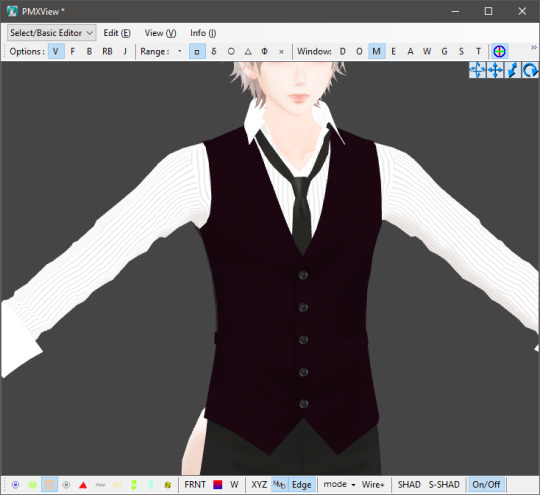

For “oh, you handsome devil!” I rendered two panels: one with Soma, one with Yagami and Kaito. The hatching there gives some shading to the models, since I loaded Adult Shader and Hg SAO to emphasize Yagami’s face.

5. BUILDING YOUR MANGA PANEL

Now in GIMP, I loaded the finished image to show you the rule of thirds guides. Notice how Yagami’s face is the focus, while Kaito isn’t left behind, as he’s close to the intersections. Also, there’s enough empty space to place a speech bubble between them.

Now let’s talk about basic comic theory! When placing text and speech bubbles, the general rule is to keep them away from the edges. This keeps reading easy and focused, unless a difficult read is intentional. Always imagine an area that keeps your focus away from the edges, so you know where to write your text.

Since you use MMD, I believe you’ve read at least one manga panel in your life. Manga is read from right to left, not from left to right like western comics.
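If you compose a page from several renders, you can check the right-to-left order with a simple sort: top to bottom first, then right to left within a row. This is a minimal sketch, assuming each panel is an (x, y, width, height) box and that panels in the same row share the same top edge. Real manga layouts are often more irregular.

```python
def manga_reading_order(panels):
    """Sort (x, y, w, h) panel boxes top-to-bottom, then right-to-left."""
    return sorted(panels, key=lambda p: (p[1], -p[0]))

def western_reading_order(panels):
    """Same rows, but left-to-right, for comparison."""
    return sorted(panels, key=lambda p: (p[1], p[0]))

page = [(0, 0, 800, 1100), (850, 0, 800, 1100), (0, 1150, 1650, 1100)]
print(manga_reading_order(page))
```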

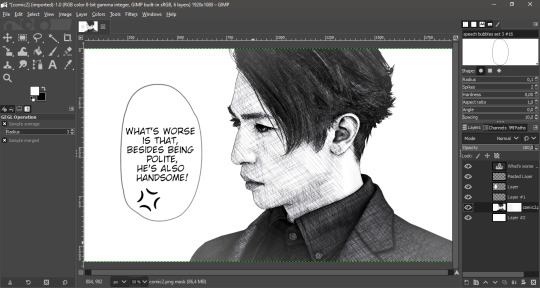

This panel was built using the basic theory. To make the text readable, I made it white and added black edges and a slight blur under it. Contrast between text and image is very important in manga for straightforward reading, especially if your render is complex and has many distracting elements.

Back to Yagami and Kaito, the blue marks show where I placed the speech in the finished panel. Can you see how they fit within the red area? The reader can easily pay attention to both models and text. Kaito’s “sure” was placed near the red edges since it was a minor comment rather than speech.
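One way to put a number on “contrast between text and image” is the contrast ratio from the web accessibility guidelines (WCAG 2). It was designed for screens, not manga, so treat it as a rough check. The sketch below assumes sRGB colors in the 0-255 range.

```python
def relative_luminance(rgb):
    """WCAG relative luminance of an sRGB color given as 0-255 integers."""
    def channel(c):
        c = c / 255
        # undo the sRGB transfer curve to get linear light
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4
    r, g, b = (channel(c) for c in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def contrast_ratio(a, b):
    """Ratio from 1 (no contrast) to 21 (white on black)."""
    lighter, darker = sorted((relative_luminance(a), relative_luminance(b)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)

print(contrast_ratio((255, 255, 255), (128, 128, 128)))  # white text on mid-gray
print(contrast_ratio((255, 255, 255), (0, 0, 0)))        # white text on its black edge
```

White text over a mid-gray area scores below 4, while against its own black outline it reaches the maximum of 21. That is one way to see why the black edges help.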

All you have to do now is to place the speech bubble and write within it! Make sure your text is big enough for the readers. This guide about manga editing has useful tips and free resources for everyone. Feel free to add any other manga effect you’d like, go wild!

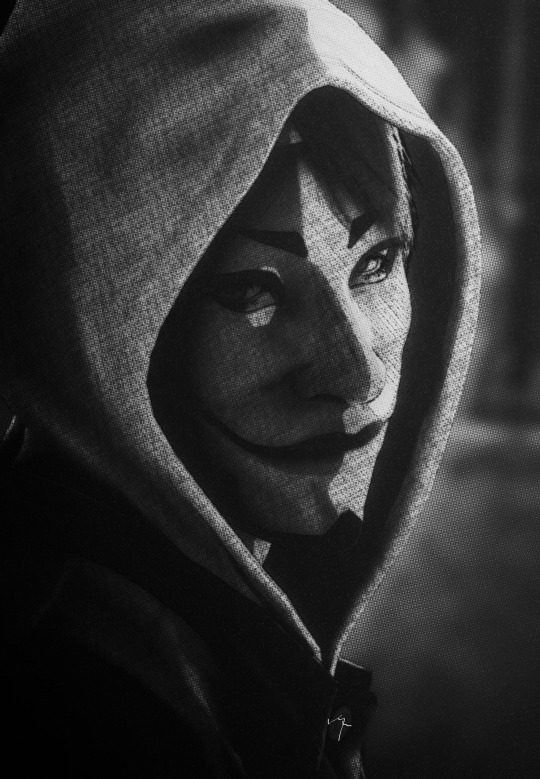

Now with Soma, these are the guidelines. It was rendered with enough empty space for background customization. I later added shoujo effects and faded the edges with a vignette to show he’s in Yagami’s imagination. A daydream, if you will! Everything is subtle so as not to stray from our objective.

Now, when putting everything together in a panel, fit each image within the margins and give each one a somewhat thick black border. Position them first, then press CTRL + A to select the entire layer and stroke the selection. The line width I used was 15 px.

6. CREDITS

Valentine’s Day Kiryu

Yakuza 0 Kiryu and Majima

Masked Sugiura

Urban Yagami

Half-dragon Kiryu

Oh, you handsome devil!

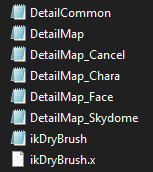

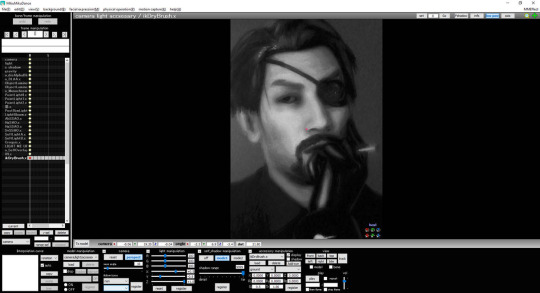

CROQUIS 改: doodle like animations in MMD

In this tutorial, I’ll show you how to make an animation in MMD looking like this. This effect can be very useful for animators interested in 2D stylization.

This Croquis mod works with any shader you want and doesn’t require a complicated setup.

Before we start, you’ll have to download a modded version of Croquis 改 v2, since the website that hosted the original download is down nowadays. But don’t worry, it works just fine, as it’s more of a compatibility mod.

Next, download the doodle mod. You’ll notice it only comes with the .fx files. According to the modder, you have to copy-paste these files into the Croquis 改 v2 folder. That’s why we use the usopa-compatible version as our base.

Make a copy of the usopa mod’s folder before anything else. Now, copy all the .fx files and random.png from the doodle mod and paste them into the usopa mod. I recommend doing it little by little, since pasting everything at once can be overwhelming.

The next step is to rename the following files, adding “ - usopa” so they replace the original ones:

ColorMapCommon.fxsub → ColorMapCommon - usopa.fxsub

EdgeStrengthDraw.fxsub → EdgeStrengthDraw - usopa.fxsub

SubDraw.fxsub → SubDraw - usopa.fxsub
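If you’d rather not rename the files by hand, a small script can do the same three renames. This is a minimal sketch using Python’s `pathlib`. The folder path is a placeholder for you to fill in. Like the manual step, it overwrites the usopa originals, so run it on the copy you made earlier.

```python
from pathlib import Path

# The three renames listed above: doodle mod file -> usopa file name.
RENAMES = {
    "ColorMapCommon.fxsub": "ColorMapCommon - usopa.fxsub",
    "EdgeStrengthDraw.fxsub": "EdgeStrengthDraw - usopa.fxsub",
    "SubDraw.fxsub": "SubDraw - usopa.fxsub",
}

def planned_renames(filenames):
    """Return (old, new) pairs for the files present that need renaming."""
    present = set(filenames)
    return [(old, new) for old, new in RENAMES.items() if old in present]

def apply_renames(folder):
    """Rename the doodle files in place, replacing the usopa originals."""
    folder = Path(folder)
    names = [p.name for p in folder.iterdir() if p.is_file()]
    pairs = planned_renames(names)
    for old, new in pairs:
        (folder / old).replace(folder / new)  # Path.replace overwrites the target
    return pairs

# apply_renames(r"C:\MMD\Effects\Croquis usopa copy")  # hypothetical path
```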

In the end, your new folder should look like this:

Done? Now just load Croquis normally in MMD and change the X, Y and Z values to 1 to activate the effect.

CREDITS

Yagami by Xelandis, KS and SEGA

Croquis 改 v2 うねうね化 by P.I.P

Croquis改 v2 usopa by たじゃっぴ

Fake normal mapping on textures and other tricks for MMD

In this tutorial, I’ll show you how to improve textures with a normals-on-texture technique I’ve developed. I’ll focus on game ports, since they need it the most.

Content index:

Introduction

Applying The Technique

Useful Tricks

Credits

1. INTRODUCTION

In 3D, normal mapping is a texture mapping technique that’s very useful for adding detail to meshes without using more polygons. It’s an “improved” bump map, which simulates bumps and wrinkles on the object’s surface.

Everyone knows MMD doesn’t support bump or normal mapping natively, meaning the user can only achieve it by using a shader and applying the texture as an spa. However, not every shader supports normal maps, plus a low-end user can’t use one without putting a heavy load on their computer.

With that in mind, I developed a lifesaving technique for those with weaker hardware, or for anyone who simply wants to keep their normals while playing with other shaders.

2. APPLYING THE TECHNIQUE

First of all, we need to spot the normal map in the model’s files. They usually look like this one:

Normally, they’re added to the texture in the 3D software. What we’re going to do here is to add that texture to the base texture. For this, we’ll need a photo editing software. I use GIMP because it’s free, open source and low-end friendly. You can download it here.

You’ll open the base texture and paste the normal map over it. I’m not going to show them here because they’re from a game. In green, we have the base texture; orange is the normal map.

Select the normal map layer and desaturate it to black and white. It’s a necessary step, since we don’t want colored details messing up the texture.

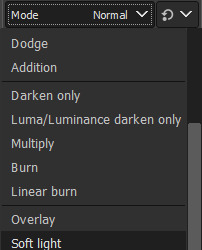

Next, change the normal map layer’s mode to Soft Light, and only the details will be applied to the base texture.

In practice, the base texture on a model changes like this. It gives a lot of detail to the model’s face.
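The desaturate + Soft Light combo works because Soft Light leaves the base untouched where the top layer is 50% gray. It only darkens or lightens where the top layer deviates from that, and those deviations are the bump detail. This is a per-pixel sketch, assuming the W3C soft-light formula and Rec. 709 luminosity weights for desaturation. GIMP’s own Desaturate and Soft Light modes may compute slightly different values.

```python
def desaturate(rgb):
    """Gray value of an RGB pixel (0-1 floats) using Rec. 709 luminosity weights."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def soft_light(base, blend):
    """W3C soft-light blend for one channel; base and blend are 0-1 floats."""
    if blend <= 0.5:
        # darker than mid-gray: darken the base
        return base - (1 - 2 * blend) * base * (1 - base)
    # lighter than mid-gray: lighten the base
    if base <= 0.25:
        d = ((16 * base - 12) * base + 4) * base
    else:
        d = base ** 0.5
    return base + (2 * blend - 1) * (d - base)

def apply_normal_detail(base_rgb, normal_rgb):
    """Desaturate the normal map pixel, then soft-light it over the base pixel."""
    gray = desaturate(normal_rgb)
    return tuple(soft_light(c, gray) for c in base_rgb)
```

A flat area of a typical tangent-space normal map (128, 128, 255) desaturates to roughly 54% gray, so flat regions shift only slightly while bumps and wrinkles stand out.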

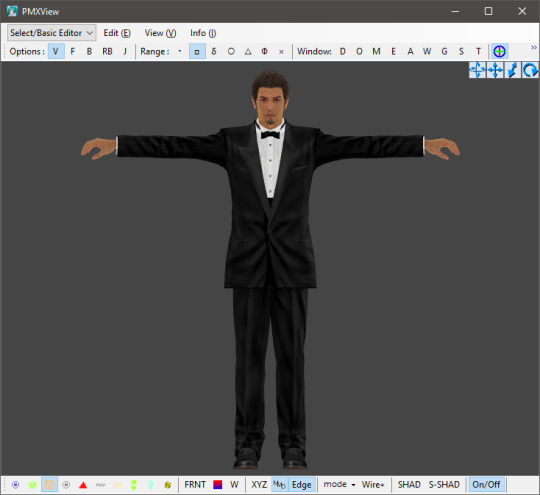

Akiyama-san looks more tired than ever.

This technique is extra useful when dealing with clothes so they don’t look horrible. Kiryu-san’s suit looks much better with the improved texture. You can do that to any part that has a normal map, even hair.

3. USEFUL TRICKS

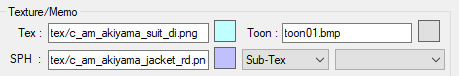

Another texture trick is adding patterns to clothes, like shirt and suit stripes, or maybe leather. Let’s call Akiyama-san back to help us with that. This is the default suit texture with normal maps, but it has no stripes! Odd, right? His suit has them in the game!

Hi there, Trakinas face!

His files come with a pattern for his suit. I took this texture and added it as an sph sub-tex. Now his suit looks much better, although it can’t have any other sph. I tried coming up with a gimmick to work around this, with no success. Better leave it to the shader.

Another example using Kasuga-san’s shirt:

It won’t always work, though. Some patterns make the part too dark to see anything, so we have to do it the traditional way.

It didn’t work here. The base texture is almost solid black.

What we do is duplicate the part you want to apply the pattern to, set the pattern as its regular texture and change the UV size. It’ll look a little wonky, but that’s the nature of it. You’re always free to try adding the pattern to the texture in GIMP/Photoshop or editing the UVs later.

Then make the duplicate part semi-transparent. I usually use an opacity of 0.3 so it doesn’t interfere too much. You can keep the sph or not, it’s up to you.

If your game port comes with texture maps for shadows and highlights, you can add them to the base texture the same way we did with the normal maps. I recommend placing the shadow layer above the normal map layer.
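This trick has two knobs: the UV scale (how many times the pattern repeats) and the duplicate’s opacity. Here is a small sketch of both. It assumes the pattern texture repeats (wraps) when UVs go past 1 and that the duplicate is simply alpha-blended over the original. The helper names are hypothetical.

```python
def tile_uv(u, v, repeat_u, repeat_v):
    """Scale a UV pair so the pattern repeats repeat_u x repeat_v times across the part."""
    return u * repeat_u, v * repeat_v

def wrap(coord):
    """Repeat addressing: keep only the fractional part of a texture coordinate."""
    return coord % 1.0

def blend_over(base, pattern, opacity=0.3):
    """Alpha-blend one channel of the semi-transparent duplicate over the base."""
    return pattern * opacity + base * (1.0 - opacity)

# A point halfway across the part, with the stripes tiled 4 times.
u, v = tile_uv(0.5, 0.25, 4, 4)
print(wrap(u), wrap(v), blend_over(1.0, 0.0))
```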

It helps with shading and creates results closer to the game. Yagami-san looks a lot better with improved textures.

4. CREDITS

Akiyama by lezisell, KS and SEGA

Ichiban by KS and SEGA

Cabaret Kiryu by spooky-majora, KS and SEGA

Lírio by KS

Yagami by Xelandis, KS and SEGA

RAY-MMD: custom IES profiles

In this tutorial, you’ll learn how to make custom IES spot lights using the IES2HDR tool. IES lights are useful for simulating lamps, headlights or any man-made light source.

Content index:

IES2HDR

Credits

Further Reading

1. IES2HDR

First of all, download this pack of IES profiles for Maya. Don’t worry about all the other things that come with it, we only need the .ies files inside these folders.

Raycast won’t load them as .ies, so we need to convert them to .hdr first. But fear not: one of Ray’s tools is a script called IES2HDR, located inside the Tools folder. Extract that .rar file, make a dummy folder with all the .ies files and drag and drop that dummy folder onto the script.
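You can script the step of gathering the .ies files from the pack’s subfolders into one dummy folder instead of doing it by hand. This is a minimal sketch using Python’s standard library. The folder names are placeholders. Files with the same name in different subfolders would overwrite each other, so check the returned list.

```python
import shutil
from pathlib import Path

def collect_ies(source_root, dummy_folder):
    """Copy every .ies file found under source_root into one flat dummy folder."""
    dummy = Path(dummy_folder)
    dummy.mkdir(parents=True, exist_ok=True)
    copied = []
    for path in sorted(Path(source_root).rglob("*")):
        if path.is_file() and path.suffix.lower() == ".ies":
            target = dummy / path.name
            shutil.copy2(path, target)  # keeps file timestamps
            copied.append(target.name)
    return copied

# print(collect_ies("IES profiles pack", "ies_dummy"))  # hypothetical folder names
```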

The following screenshots are an illustrated tutorial that comes with IES2HDR:

Once converted, open the Lighting folder and make a copy of the SpotLightIES folder for every IES profile you want to make. Then open it, head to Default and replace the original IES.fx with the new one. Just drag and drop it to rename.

Once finished, load the IES spot light as usual in MMD and load the .fx of any profile you wish to use. Some are pretty different. I didn’t change any morph when picking other profiles.

2. CREDITS

Stage by はまち

IES profiles by Dylan Sisson and updated by Leif Pedersen

3. FURTHER READING

If you wish to learn more about Raycast lighting, I recommend checking out my lighting tutorial; it covers pretty much everything. This side note should be useful too.

MME Laughing Man FX READING: a translation by ryuu

The following tutorial is an English translation of the original one in Japanese by Dance Intervention P.

Disclaimer: coding isn’t my area, not even close to my actual career and job (writing/health). I have little idea of what’s going on here and I’m relying on my IT friends to help me with this one.

Content index:

Introduction

Overall Flow

Parameter Declaration

Billboard Drawing

Final Notes

1. INTRODUCTION

This documentation shows how to edit the Laughing Man effect and read its HLSL code. Everything in this tutorial tries to stay as faithful as possible to the original in Japanese.

It was translated from Japanese to English by ryuu. As I don’t know how to contact Dance Intervention P for permission to translate and publish it here, the original author is free to ask me to take it down. The translation was done with the aid of the online translator DeepL and my friends’ help. This documentation has no intention of replacing the original author’s.

Any code line starting with “// [Japanese text]” is the author’s comment. If the code isn’t properly formatted on Tumblr, you can visit the original document to check it. The original titles of each section were added for ease of use.

2. OVERALL FLOW (全体の流れ)

Prepare a flat polygon that faces the screen (-Z axis) direction.

Apply the inverse of the world rotation and view rotation to the object.

Convert coordinates as usual.

The world and view rotation components cancel each other out, so the polygon always faces the screen.
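You can check this flow numerically. Multiply a vertex by the inverse of a rotation, then by the rotation itself, and it comes back unchanged, so the quad keeps facing the screen whatever the camera does. This is a pure-Python sketch. It assumes a rotation-only world-view matrix and HLSL’s row-vector convention (`mul(v, M)`). The transpose stands in for the inverse because rotation matrices are orthogonal, as the Billboard Drawing section notes.

```python
import math

def rotation_y(angle):
    """3x3 rotation about the Y axis (row-vector convention, like HLSL mul(v, M))."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, -s],
            [0.0, 1.0, 0.0],
            [s, 0.0, c]]

def transpose(m):
    return [list(row) for row in zip(*m)]

def mul(v, m):
    """Row vector times 3x3 matrix, matching HLSL's mul(v, M)."""
    return [sum(v[k] * m[k][j] for k in range(3)) for j in range(3)]

# A quad vertex facing the screen (-Z), and an arbitrary world/view rotation.
vertex = [0.5, 0.5, 0.0]
world_view = rotation_y(0.7)
billboard = transpose(world_view)  # inverse of a pure rotation

# Undo the rotation, then apply the usual transform: the two cancel out.
in_view = mul(mul(vertex, billboard), world_view)
print(in_view)
```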

3. PARAMETER DECLARATION (パラメータ宣言)

```hlsl
// coordinate transformation matrices
float4x4 WorldViewProjMatrix : WORLDVIEWPROJECTION;
float4x4 WorldViewMatrixInverse : WORLDVIEWINVERSE;
```

• WorldViewProjMatrix: a matrix that can transform vertices in local coordinates to projective coordinates with the camera as the viewpoint in a single step.

• WorldViewMatrixInverse: the inverse of a matrix that can transform vertices in local coordinates to view coordinates with the camera as the viewpoint in a single step.

• Inverse matrix: for a matrix $A$ whose determinant is non-zero, with $E$ the unit matrix, the matrix $B$ that satisfies $AB = BA = E$ is called the inverse of $A$ and is denoted by $A^{-1}$. Because of this property, it’s used to cancel a transformation matrix.

• Unit matrix: a square matrix whose diagonal components are 1 and all other components are 0. When used as a transformation matrix, it multiplies the coordinates of the vertices by 1. In other words, for any vertex $v$, $vE = v$: the coordinates are left unchanged.

```hlsl
texture MaskTex : ANIMATEDTEXTURE <
    string ResourceName = "laughing_man.png";
>;
```

• ANIMATEDTEXTURE: textures that animate in response to frame time. Animated GIF and animated PNG (APNG) are supported.

• APNG: an animation format specified by Mozilla Corporation that is backward compatible with PNG. Mozilla patched libpng to support it, but the PNG Group rejected it on the grounds that the specification wasn’t elegant.

```hlsl
sampler Mask = sampler_state {
    texture = <MaskTex>;
    MinFilter = LINEAR;
    MagFilter = LINEAR;
    MipFilter = NONE;
    AddressU = CLAMP;
    AddressV = CLAMP;
};
```

• MinFilter: the method used for the minification (reduction) filter.

• MagFilter: the method used for the magnification (expansion) filter.

• MipFilter: the method used for MIPMAP filtering.

• AddressU: the method used to resolve u texture coordinates outside the 0-1 range.

• AddressV: the method used to resolve v texture coordinates outside the 0-1 range.

• LINEAR: bilinear interpolation filtering. Uses a weighted average of the 2×2 area of texels surrounding the pixel of interest.

• NONE: disables MIPMAP and uses the magnification filter instead.

• CLAMP: texture coordinates outside the range [0.0, 1.0] are set to the texture color at 0.0 or 1.0, respectively.

• MIPMAP: a set of images that have been precomputed and optimized to complement the main texture image. Switch between images depending on the level of detail.
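To make LINEAR and CLAMP concrete, here is a small CPU version of one texture lookup with those sampler settings. This sketch assumes a grayscale texture stored as a list of rows and the Direct3D convention that texel centers sit at half-texel offsets. It doesn’t cover mipmaps, which this sampler disables anyway.

```python
import math

def sample_linear_clamp(texture, u, v):
    """Bilinear lookup with CLAMP addressing on a grayscale texture (list of rows)."""
    height, width = len(texture), len(texture[0])

    # CLAMP: coordinates outside [0, 1] use the edge of the texture.
    u = min(max(u, 0.0), 1.0)
    v = min(max(v, 0.0), 1.0)

    # Texel centers sit at (i + 0.5) / width, so shift by half a texel.
    x = u * width - 0.5
    y = v * height - 0.5
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0

    def texel(i, j):
        # Clamp indices too, so the 2x2 footprint never leaves the texture.
        i = min(max(i, 0), width - 1)
        j = min(max(j, 0), height - 1)
        return texture[j][i]

    # LINEAR: weighted average of the 2x2 texels around the sample point.
    top = texel(x0, y0) * (1 - fx) + texel(x0 + 1, y0) * fx
    bottom = texel(x0, y0 + 1) * (1 - fx) + texel(x0 + 1, y0 + 1) * fx
    return top * (1 - fy) + bottom * fy
```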

```hlsl
static float3x3 BillboardMatrix = {
    normalize(WorldViewMatrixInverse[0].xyz),
    normalize(WorldViewMatrixInverse[1].xyz),
    normalize(WorldViewMatrixInverse[2].xyz),
};
```

Take the xyz components (rotation and scale) of each row of the inverse matrix and normalize them with normalize, which leaves only the rotation component. The 4x4 inverse matrix holds a translation component in its fourth row, so that row is cut off and the result is made into a 3x3 matrix.

The logical meaning of the matrix hasn’t been investigated yet. Without normalization, the displayed accessory is 1/10 of its size, which suggests that normalizing reduces the world scaling component to the unit matrix. Also, the length of each row corresponds to the x, y and z scale.
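The normalization step can be sketched outside the shader. Keep the first three rows and columns of the 4x4 inverse matrix and rescale each row to length 1, which strips the scale and leaves the rotation. The example assumes, as the translation suggests, an inverse matrix carrying a 1/10 scale and some translation in its fourth row. The values are made up for illustration.

```python
import math

def normalize(row):
    length = math.sqrt(sum(c * c for c in row))
    return [c / length for c in row]

def billboard_matrix(inverse4x4):
    """Drop the 4th (translation) row and column, then normalize each remaining row."""
    return [normalize(row[:3]) for row in inverse4x4[:3]]

# Hypothetical inverse world-view matrix: 1/10 scale plus a translation row.
inverse = [[0.1, 0.0, 0.0, 0.0],
           [0.0, 0.1, 0.0, 0.0],
           [0.0, 0.0, 0.1, 0.0],
           [5.0, 6.0, 7.0, 1.0]]

print([math.sqrt(sum(c * c for c in row[:3])) for row in inverse[:3]])  # row lengths carry the scale
print(billboard_matrix(inverse))
```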

```hlsl
struct VS_OUTPUT
{
    float4 Pos : POSITION;  // projective transformation coordinates
    float2 Tex : TEXCOORD0; // texture coordinates
};
```

A structure for passing multiple return values between shader stages.

4. BILLBOARD DRAWING (ビルボード描画)

```hlsl
// vertex shader
VS_OUTPUT Mask_VS(float4 Pos : POSITION, float2 Tex : TEXCOORD0)
{
    VS_OUTPUT Out;

    // billboard
    Pos.xyz = mul( Pos.xyz, BillboardMatrix );
```

BillboardMatrix is a 3x3 rotation matrix, so it multiplies the three xyz components of Pos.

If the object is fixed in the world and doesn’t rotate, then Pos.xyz = mul(Pos.xyz, (float3x3)ViewInverseMatrix); or Pos.xyz = mul(Pos.xyz, (float3x3)ViewTransposeMatrix); cancels the screen rotation. Since the rotation matrix is an orthogonal matrix, the transpose and inverse matrices are equal.

```hlsl
    // world-view projection transformation from the camera viewpoint
    Out.Pos = mul( Pos, WorldViewProjMatrix );

    // texture coordinates
    Out.Tex = Tex;

    return Out;
}
```

Perform world-view projection transformation and return the structure as usual.

```hlsl
// pixel shader
float4 Mask_PS( float2 Tex : TEXCOORD0 ) : COLOR0
{
    return tex2D( Mask, Tex );
}
```

Return the color of the texture retrieved from the sampler.

```hlsl
technique MainTec < string MMDPass = "object"; > {
    pass DrawObject {
        ZENABLE = false;
        VertexShader = compile vs_1_1 Mask_VS();
        PixelShader = compile ps_2_0 Mask_PS();
    }
}
```

Self-shadow disabled? (When self-shadow is enabled, this technique is ignored by object_ss.) Drawing runs without depth information.

• ZENABLE: enables depth information (the Z-buffer) when drawing pixels and uses it to decide whether each pixel is drawn.

5. FINAL NOTES

For further reading on HLSL coding, please visit Microsoft’s official English reference documentation.

RAY-MMD: fixing realistic hair like a king

It took me a long time to get around to writing this tutorial, I’m sorry!

As many know, realistic hair in MMD looks horrible because of how it reads the alpha channels. This makes working with game rips a nightmare depending on the model, but fear not! I’ve been rendering realistic models for so long that fixing fucked up alphas became part of my work.

Also, I’m in no way an expert on the matter; I only do things that don’t demand much from my sanity, because honestly? I don’t have the energy to waste overworking on hair when Raycast does most of the job for me.

Content Index:

Core Work in PMXe

Alphas in Raycast

Final notes

Credits

1. CORE WORK IN PMXE

If you're new to fixing game rips, I'll cover some basics of fixing alphas in PMXe here, then show how to cover up any remaining flaws later in MMD. Note that this tutorial is aimed at realistic models, for our quality of life!

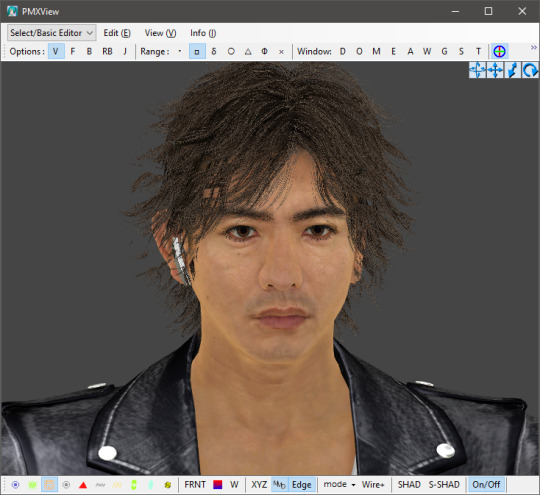

First things first. This tutorial’s guest is Hoshino-san! He’s going to help me illustrate the alpha fixing, as I haven’t worked on his model yet.

Good to see you, Hoshino-san!

As you can see, his hair looks like shit, but that's an easy fix. A common, straightforward cut like that one won't take long. Messy hair like Yagami's can be hell on earth, so it's best to fix some parts here and there, then leave the rest to Ray.

I hate you (affectionate).

Raw hair from his untouched topless model. I’m so sorry, Yagami-san...

Now, back to Hoshino-san: a good way of dealing with his hair is to separate the hair meshes with the UV plug-in. If you're new to this, I'll show you step by step how to open it.

With the plug-in running, look for the hair mesh in the material list. The hair must always be at the end of the mesh order for alpha priority. This is what happens otherwise.

Back in the plug-in, I found our hair mesh. What we'll do is select vertical parts of it and hit Show Selected Vertices to split them. Don't forget to select the material and make the vertices visible before doing so.

After splitting, hit Re-Import Model Data to refresh the plug-in and update the materials. Then split the other major meshes until PMXe "refuses" to go further. Sometimes you'll be done by then, but that wasn't Hoshino-san's case.

He looks better, but still needs fixing. We can close the UV plug-in and manually select and split meshes using face and triangle selection. Turning the wireframe on or off is up to you; it's personal preference.

Click on the desired mesh and hit CTRL + X to select every mesh linked to it. Be aware that this doesn't work on every model; some simply can't do it for whatever reason (I'm not a PMXe expert).

This is a tedious process: you have to keep checking and rearranging the meshes. If you want superb results, good for you! Expect to spend a lot of time on this. I did the bare minimum and Hoshino-san looks much better now.

Now save the updated model and go have fun!

2. ALPHAS IN RAYCAST

“But Lírio, what if I want to have fun in MMD and my model’s hair looks like puke when I load Raycast?”, one may think. Ray sucks for any transparent material, simple as that. Of course you can work around it using the transparent material, but the results aren’t that predictable if you ask me. While it looks awesome for tears and glasses, you can’t use this material on hair.

For any alpha fix, we use dendewa’s material. It’s easy to set up and we’ll go step by step. Before anything, I want to show you how hair looks if you load main.fx and leave it be.

Fucked, right?

It doesn’t get any better when we load main_ex_mask.fx...

So our solution is to use the alpha material. To make it work, first load main_alpha.fx on the hair and any other transparent part. It’s located in the Alpha Fix folder.

Now go to the MaterialMap tab and select any material from the Hair Materials folder. I like picking hair with anisotropy 3x for the shiny look or hair with SSS.

Anisotropy 3x.

SSS.

Hoshino-san looks great! However, some hair details are missing. The same happens to eyelashes: they disappear. How do we work around this? We add Croquis! Just change the Z value to 1 and it'll read the textures for you. Setting the X and Y values to 1 adds more edges.

While it isn't ideal, it works fine. Don't worry about this effect killing the 3D aspect of your renders. You can always lower Tr and Si.

3. FINAL NOTES

In other scenarios, Croquis is a must to save the model's hair with Ray. Unfortunately, you'll have to deal with black edges around the hair. The many effects in a typical render will probably hide them or make them less obvious.

Raw alpha fix.

Tr 1.

Tr 0.5.

Do you remember Yagami-san’s hair from before? That’s him now! It isn’t perfect, but does the job just fine. In his case, X was needed to cover the smallest hair strands. The first image shows X, Y and Z on 1 and Si on 0.5; the second, Si 1.

4. CREDITS

Connor by Quantic Dream

Yagami and Hoshino by SEGA


Additions regarding Ray lights

I’d like to add some things related to this post about Raycast lighting. I’ll be brief. Following my two most recent renders as of today, 09/05/21, I tried out some new things with volumetric fog, skydome and spot lights.

Content index

Skydome

Volumetric Fog

Spot Light

Credits

1. SKYDOME

If you've read my advanced image rendering guide, you'll know I recommend dendewa's Blank IBL skydome for lighting. However, I've run into some issues with it (again, only a matter of personal taste): how materials behave when it's loaded, and its stronger shadows.

A much “safer” and neutral option I found was loading Sky Hemisphere. This skydome is basic and offers softer lighting when you load its .fx, making lighting easier to adjust.

To clarify this difference, I asked Daigo-san to help me out! The first image features Sky Hemisphere, while the second, Blank IBL. The hair has anisotropy 3x for the highlights, a shiny look.

Can you notice the huge difference between them? The shadows are much stronger with Blank IBL, they’re harsh on the model. On the other hand, Sky Hemisphere features soft shading with visible shadows. Plus the hair isn’t a black mass with little shiny spots. This “washed out” appearance can be fixed with materials and increased contrast.

My general advice is: don't know what you're doing? Stick to Sky Hemisphere instead of Blank IBL. Dendewa recommends the latter for custom physical lights. I, ryuu, find Sky Hemisphere great for that, even better. My renders with Sky Hemisphere had much more satisfying results.

2. VOLUMETRIC FOG

Volumetric fogs, either cube or sphere, can be used to darken the scene without messing with skydome properties. It’s a good tweak that can be improved with Ray’s controller by changing the exposure and gamma. I didn’t notice good enough results when decreasing the sunlight, but that’s a matter of personal taste and technique.

To tweak the volumetric fog like that, you can max out the width, height and depth, and increase the intensity until it’s good enough for your project. Then you play with light and everything else. It’ll look like this when raw.

It isn’t much, but already helps!

The render below was darkened with a volumetric cube. I’ll provide both raw and retouched versions for you to see it wasn’t darkened in post-editing.

Tube lights were good for some hair highlighting, but I wouldn't use them again, as they were kind of annoying to set up.

3. SPOT LIGHT

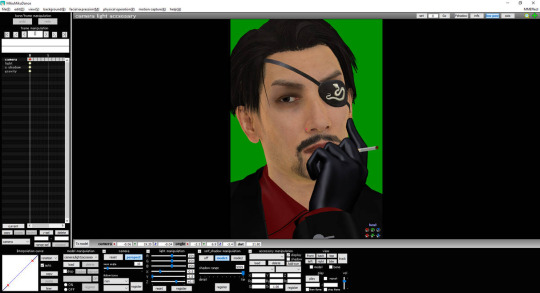

Spot lights proved to be a solid pick for general lighting, since they have the best shadow quality of all the available lights. The lit area is easily adjusted by increasing the angle. With the shadow .fx added, they shade realistic hair incredibly well.

The render below was made using spot lights and a directional light. Raw and post-edited versions included.

I’ll later write a tutorial about color theory applied to MMD and how it can improve your rendering. I did my homework and studied some of it!

4. CREDITS

Daigo, Majima and Yagami by SEGA

Heart Sunglasses by 1010


MMD FX file reading for shaders: a translation by ryuu

The following tutorial is an English translation of the original one in Japanese by Dance Intervention P.

This English documentation was requested by Chestnutscoop on DeviantArt, as it’ll be useful to the MME modding community and help MMD become open-source for updates. It’s going to be an extensive one, so take it easy.

Disclaimer: coding isn’t my area, not even close to my actual career and job (writing/health). I have little idea of what’s going on here and I’m relying on my IT friends to help me with this one.

Content Index:

Introduction

Overall Flow

Parameter Declaration

Outline Drawing

Non-Self-shadow Rendering

Drawing Objects When Self-shadow is Disabled

Z-value Plot For Self-shadow Determination

Drawing Objects in Self-shadowing

Final Notes

1. INTRODUCTION

This documentation contains the roots of .fx file reading for MME as well as information on DirectX and programmable shaders while reading full.fx version 1.3. In other words, how to use HLSL for MMD shaders. Everything in this tutorial will try to stay as faithful as possible to the original text in Japanese.

It was translated from Japanese to English by ryuu. As I don't know how to contact Dance Intervention P for permission to translate and publish it here, the original author is free to ask me to take it down. The translation was done with the help of the online translator DeepL and my friends. This documentation has no intention of replacing the original author's.

Any code line starting with "//" is a comment by the original author, translated from Japanese. If the code isn't properly formatted on Tumblr, you can check the original document. The original Japanese title of each section is kept in parentheses for easy reference.

2. OVERALL FLOW (全体の流れ)

Applicable technique → pass → VertexShader → PixelShader

• Technique: selected by processing the annotations inside < >.

• Pass: processing unit.

• VertexShader: convert vertices in local coordinates to projective coordinates.

• PixelShader: sets the color of each pixel.
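Here is the whole flow in one place: a minimal sketch of an effect with one technique, one pass, a vertex shader and a pixel shader. It reuses the WORLDVIEWPROJECTION and DIFFUSE semantics that full.fx declares below. The names Flat_VS, Flat_PS and FlatTec are hypothetical. The sketch only covers the "object" pass, which is used when self-shadow is disabled.

```hlsl
// Hypothetical minimal MME effect: flat material color, no lighting.
float4x4 WorldViewProjMatrix : WORLDVIEWPROJECTION;
float4   MaterialDiffuse     : DIFFUSE < string Object = "Geometry"; >;

// VertexShader: local coordinates -> projective coordinates.
float4 Flat_VS(float4 Pos : POSITION) : POSITION
{
    return mul(Pos, WorldViewProjMatrix);
}

// PixelShader: the color of each pixel.
float4 Flat_PS() : COLOR0
{
    return MaterialDiffuse;
}

// Technique (chosen through its annotation) -> pass -> shaders.
technique FlatTec < string MMDPass = "object"; > {
    pass DrawObject {
        VertexShader = compile vs_2_0 Flat_VS();
        PixelShader  = compile ps_2_0 Flat_PS();
    }
}
```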

3. PARAMETER DECLARATION (パラメータ宣言)

9 // coordinate transformation matrices

10 float4x4 WorldViewProjMatrix : WORLDVIEWPROJECTION;

11 float4x4 WorldMatrix : WORLD;

12 float4x4 ViewMatrix : VIEW;

13 float4x4 LightWorldViewProjMatrix : WORLDVIEWPROJECTION < string Object = "Light"; >;

• float4x4: a matrix of 32-bit floating-point values with 4 rows and 4 columns.

• WorldViewProjMatrix: a matrix that can transform vertices in local coordinates to projective coordinates with the camera as the viewpoint in a single step.

• WorldMatrix: a matrix that can transform vertices in local coordinates into world coordinates.

• ViewMatrix: a matrix that can convert world coordinate vertices to view coordinates with the camera as the viewpoint.

• LightWorldViewProjMatrix: a matrix that can transform vertices in local coordinates to projective coordinates with the light as a viewpoint in a single step.

• Local coordinate system: coordinates to represent the positional relationship of vertices in the model.

• World coordinate: coordinates to show the positional relationship between models.

• View coordinate: coordinates to represent the positional relationship with the camera.

• Projection coordinates: coordinates that also represent depth as seen from the camera. There are two types: perspective projection and orthographic projection.

• Perspective projection: distant objects are shown smaller and nearby objects are shown larger.

• Orthographic projection: the size of the image does not change with depth.
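The single-step WorldViewProjMatrix can be read as three matrices applied one after another. The sketch below assumes MME also provides a projection matrix through the PROJECTION semantic, which is not part of full.fx's declarations. StepByStep_VS is a hypothetical name.

```hlsl
float4x4 WorldMatrix         : WORLD;
float4x4 ViewMatrix          : VIEW;
float4x4 ProjMatrix          : PROJECTION; // assumption: not declared in full.fx
float4x4 WorldViewProjMatrix : WORLDVIEWPROJECTION;

float4 StepByStep_VS(float4 Pos : POSITION) : POSITION
{
    float4 WorldPos = mul(Pos, WorldMatrix);     // local -> world coordinates
    float4 ViewPos  = mul(WorldPos, ViewMatrix); // world -> view (camera) coordinates
    float4 ProjPos  = mul(ViewPos, ProjMatrix);  // view -> projective coordinates
    // Should match mul(Pos, WorldViewProjMatrix) apart from rounding.
    return ProjPos;
}
```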

15 float3 LightDirection : DIRECTION < string Object = "Light"; >;

16 float3 CameraPosition : POSITION < string Object = "Camera"; >;

• LightDirection: light direction vector.

• CameraPosition: world coordinates of the camera.

18 // material color

19 float4 MaterialDiffuse : DIFFUSE < string Object = "Geometry"; >;

20 float3 MaterialAmbient : AMBIENT < string Object = "Geometry"; >;

21 float3 MaterialEmmisive : EMISSIVE < string Object = "Geometry"; >;

22 float3 MaterialSpecular : SPECULAR < string Object = "Geometry"; >;

23 float SpecularPower : SPECULARPOWER < string Object = "Geometry"; >;

24 float3 MaterialToon : TOONCOLOR;

25 float4 EdgeColor : EDGECOLOR;

• float3: three components, so no alpha value.

• MaterialDiffuse: diffuse light color of material, Diffuse+A (alpha value) in PMD.

• MaterialAmbient: ambient light color of the material; Diffuse of PMD?

• MaterialEmmisive: emissive (light-emitting) color of the material; Ambient in PMD.

• MaterialSpecular: specular light color of the material; PMD’s Specular.

• SpecularPower: specular strength. PMD Shininess.

• MaterialToon: shade color of the material's toon, taken from the lower-left corner of the PMD toon texture.

• EdgeColor: outline color, as specified by MMD's edge color.

26 // light color

27 float3 LightDiffuse : DIFFUSE < string Object = “Light”; >;

28 float3 LightAmbient : AMBIENT < string Object = “Light”; >;

29 float3 LightSpecular : SPECULAR < string Object = “Light”; >;

30 static float4 DiffuseColor = MaterialDiffuse * float4(LightDiffuse, 1.0f);

31 static float3 AmbientColor = saturate(MaterialAmbient * LightAmbient + MaterialEmmisive);

32 static float3 SpecularColor = MaterialSpecular * LightSpecular;

• LightDiffuse: black (float3(0,0,0))?

• LightAmbient: MMD lighting operation values.

• LightSpecular: MMD lighting operation values.

• DiffuseColor: black, because it is multiplied by LightDiffuse?

• AmbientColor: the PMD Diffuse color, made a little stronger by MMD's lighting control value?

• SpecularColor: PMD's Specular, made a little stronger by MMD's lighting control value?
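The guesses above could be checked with a throwaway debugging effect. The hypothetical sketch below paints the model with LightDiffuse, so a black model would support the float3(0,0,0) guess. It only defines the "object" pass, so test it with self-shadow disabled. Swapping LightDiffuse for LightAmbient shows the lighting control value instead.

```hlsl
// Hypothetical debugging effect: visualize a light color semantic.
float4x4 WorldViewProjMatrix : WORLDVIEWPROJECTION;
float3   LightDiffuse        : DIFFUSE < string Object = "Light"; >;

float4 Debug_VS(float4 Pos : POSITION) : POSITION
{
    return mul(Pos, WorldViewProjMatrix);
}

// Paint every pixel with the light's diffuse color.
float4 Debug_PS() : COLOR0
{
    return float4(LightDiffuse, 1.0f);
}

technique DebugTec < string MMDPass = "object"; > {
    pass DrawObject {
        VertexShader = compile vs_2_0 Debug_VS();
        PixelShader  = compile ps_2_0 Debug_PS();
    }
}
```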

34 bool parthf; // perspective flags

35 bool transp; // semi-transparent flag

36 bool spadd; // sphere map additive synthesis flag

37 #define SKII1 1500

38 #define SKII2 8000

39 #define Toon 3

• parthf: true for self-shadow distance setting mode2.

• transp: true for self-shadow distance setting mode2.

• spadd: true when the sphere map is an additive .spa file.

• SKII1: a constant used in self-shadow mode1. The larger the value, the stranger the shadow looks; the smaller the value, the weaker the shadow.

• SKII2: a constant used in self-shadow mode2. If it is too large, the self-shadow looks strange; if it is too small, the shadow becomes too thin.

• Toon: weaken the shade in the direction of the light with a close range shade toon.

41 // object textures

42 texture ObjectTexture: MATERIALTEXTURE;

43 sampler ObjTexSampler = sampler_state {

44 texture = <ObjectTexture>;

45 MINFILTER = LINEAR;

46 MAGFILTER = LINEAR;

47 };

48

• ObjectTexture: texture set in the material.

• ObjTexSampler: setting the conditions for acquiring material textures.

• MINFILTER: filtering used when a texture is shrunk.

• MAGFILTER: filtering used when a texture is enlarged.

• LINEAR: use linear interpolation.
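full.fx only sets the minification and magnification filters. A sampler can also take other standard Direct3D 9 sampler states. In the sketch below, the MIPFILTER and ADDRESSU/ADDRESSV lines are additions that are not in full.fx. MIPFILTER only has an effect if the texture actually has mipmaps.

```hlsl
texture ObjectTexture : MATERIALTEXTURE;

sampler ObjTexSampler = sampler_state {
    texture   = <ObjectTexture>;
    MINFILTER = LINEAR; // filtering when the texture is shrunk
    MAGFILTER = LINEAR; // filtering when the texture is enlarged
    MIPFILTER = LINEAR; // addition: blend between mipmap levels, if present
    ADDRESSU  = WRAP;   // addition: repeat horizontally outside 0-1
    ADDRESSV  = WRAP;   // addition: repeat vertically outside 0-1
};
```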

49 // sphere map textures

50 texture ObjectSphereMap: MATERIALSPHEREMAP;

51 sampler ObjSphareSampler = sampler_state {

52 texture = <ObjectSphereMap>;

53 MINFILTER = LINEAR;

54 MAGFILTER = LINEAR;

55 };

• ObjectSphereMap: sphere map texture set in the material.

• ObjSphareSampler: setting the conditions for obtaining a sphere map texture.

57 // this is a description to avoid overwriting the original MMD sampler. Cannot be deleted.

58 sampler MMDSamp0 : register(s0);

59 sampler MMDSamp1 : register(s1);

60 sampler MMDSamp2 : register(s2);

• register: assign shader variables to specific registers.

• s0: sampler type register 0.

4. OUTLINE DRAWING (輪郭描画)

Draws the model outlines; not used for accessories.

65 // vertex shader

66 float4 ColorRender_VS(float4 Pos : POSITION) : POSITION

67 {

68 // world-view projection transformation of camera viewpoint.

69 return mul( Pos, WorldViewProjMatrix );

70 }

Return the vertex coordinates of the camera viewpoint after the world view projection transformation.

Parameters

• Pos: local coordinates of the vertex.

• POSITION (input): semantic indicating the vertex position in the object space.

• POSITION (output): semantic indicating the position of a vertex in a homogeneous space.

• mul (x,y): perform matrix multiplication of x and y.

Return value

Vertex coordinates in projective space; compute screen coordinate position by dividing by w.

• Semantics: labels that communicate the intended use of a parameter.
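Dividing by w is the step that turns projective coordinates into screen positions. A hypothetical helper could go one step further and map the result to 0-1 texture space. full.fx performs the same mapping later for the shadow buffer.

```hlsl
// Hypothetical helper: projective coordinates -> 0-1 screen UV.
float2 ProjToScreenUV(float4 ProjPos)
{
    // Divide by w to get normalized device coordinates (-1 to 1).
    float2 Ndc = ProjPos.xy / ProjPos.w;
    // Map to 0-1; y is flipped because texture coordinates grow downward.
    return float2(Ndc.x * 0.5f + 0.5f, Ndc.y * -0.5f + 0.5f);
}
```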

72 // pixel shader

73 float4 ColorRender_PS() : COLOR

74 {

75 // fill with outline color

76 return EdgeColor;

77 }

Returns the outline color (EdgeColor) for every pixel of the outline.

Return value

Output color

• COLOR: output color semantic.

79 // contouring techniques

80 technique EdgeTec < string MMDPass = "edge"; > {

81 pass DrawEdge {

82 AlphaBlendEnable = FALSE;

83 AlphaTestEnable = FALSE;

84

85 VertexShader = compile vs_2_0 ColorRender_VS();

86 PixelShader = compile ps_2_0 ColorRender_PS();

87 }

88 }

Processing for contour drawing.

• MMDPass: specifies the drawing target the technique applies to.

• “edge”: contours of the PMD model.

• AlphaBlendEnable: set the value to enable alpha blending transparency. Blend surface colors, materials, and textures with transparency information to overlay on another surface.

• AlphaTestEnable: per-pixel alpha test setting. If passed, the pixel will be processed by the framebuffer. Otherwise, all framebuffer processing of pixels will be skipped.

• VertexShader: shader variable representing the compiled vertex shader.

• PixelShader: shader variable representing the compiled pixel shader.

• vs_2_0: vertex shader profile for shader model 2.

• ps_2_0: pixel shader profile for shader model 2.

• Frame buffer: memory that holds the data for one frame until it is displayed on the screen.

5. NON-SELF-SHADOW SHADOW RENDERING (非セルフシャドウ影描画)

Draws the shadows that fall on the ground. They are shown or hidden with MMD's ground shadow display setting.

94 // vertex shader

95 float4 Shadow_VS(float4 Pos : POSITION) : POSITION

96 {

97 // world-view projection transformation of camera viewpoint.

98 return mul( Pos, WorldViewProjMatrix );

99 }

Returns the vertex coordinates of the source vertex of the shadow display after the world-view projection transformation of the camera viewpoint.

Parameters

• Pos: local coordinates of the vertex from which the shadow will be displayed.

Return value

Vertex coordinates in projective space.

101 // pixel shader

102 float4 Shadow_PS() : COLOR

103 {

104 // fill with ambient color

105 return float4(AmbientColor.rgb, 0.65f);

106 }

Returns the shadow color to be drawn. The alpha value will be reflected when MMD's display shadow color transparency is enabled.

Return value

Output color

108 // techniques for shadow drawing

109 technique ShadowTec < string MMDPass = "shadow"; > {

110 pass DrawShadow {

111 VertexShader = compile vs_2_0 Shadow_VS();

112 PixelShader = compile ps_2_0 Shadow_PS();

113 }

114 }

Processing for non-self-shadow shadow drawing.

• “shadow”: simple ground shadow.

6. DRAWING OBJECTS WHEN SELF-SHADOW IS DISABLED (セルフシャドウ無効時オブジェクト描画)

Drawing objects when self-shadowing is disabled. Also used when editing model values.

120 struct VS_OUTPUT {

121 float4 Pos : POSITION; // projective transformation coordinates

122 float2 Tex : TEXCOORD1; // texture

123 float3 Normal : TEXCOORD2; // normal vector

124 float3 Eye : TEXCOORD3; // position relative to camera

125 float2 SpTex : TEXCOORD4; // sphere map texture coordinates

126 float4 Color : COLOR0; // diffuse color

127 };

A structure for passing multiple return values between shader stages. The final data to be passed must specify semantics.

Parameters

• Pos: stores the vertex position in projective (homogeneous) coordinates, using the POSITION output semantic.

• Tex: stores the UV coordinates of the vertex in texture coordinate register 1 (TEXCOORD1).

• Normal: stores the vertex normal vector in TEXCOORD2.

• Eye: stores the vector from the vertex toward the camera (the reverse of the line of sight) in TEXCOORD3.

• SpTex: stores the sphere map UV coordinates of the vertex in TEXCOORD4.

• Color: stores the diffuse light color of the vertex in COLOR0.

129 // vertex shader

130 VS_OUTPUT Basic_VS(float4 Pos : POSITION, float3 Normal : NORMAL, float2 Tex : TEXCOORD0, uniform bool useTexture, uniform bool useSphereMap, uniform bool useToon)

131 {

Converts the local coordinates of vertices to projective coordinates and sets the values to pass to the pixel shader, returning a VS_OUTPUT structure.

Parameters

• Pos: local coordinates of the vertex.

• Normal: normals in local coordinates of vertices.

• Tex: UV coordinates of the vertices.

• useTexture: determination of texture usage, given by pass.

• useSphereMap: determination of sphere map usage, given by pass.

• useToon: determination of toon usage. Given by pass in the case of model data.

• uniform: marks variables with data that are always constant during shader execution.

Return value

VS_OUTPUT, a structure passed to the pixel shader.

132 VS_OUTPUT Out = (VS_OUTPUT)0;

133

Initialize all structure members to 0. Returning a structure with undefined members causes a compile error.

134 // world-view projection transformation of camera viewpoint.

135 Out.Pos = mul( Pos, WorldViewProjMatrix );

136

Convert local coordinates of vertices to projective coordinates.

137 // position relative to camera

138 Out.Eye = CameraPosition - mul( Pos, WorldMatrix );

Calculates the vector from the vertex toward the camera, which is the reverse of the line of sight.

139 // vertex normal

140 Out.Normal = normalize( mul( Normal, (float3x3)WorldMatrix ) );

141

Compute normalized normal vectors in the vertex world space.

• normalize (x): normalize a floating-point vector based on x/length(x).

• length (x): returns the length of a floating-point number vector.

142 // Diffuse color + Ambient color calculation

143 Out.Color.rgb = AmbientColor;

144 if ( !useToon ) {

145 Out.Color.rgb += max(0,dot( Out.Normal, -LightDirection )) * DiffuseColor.rgb;

The dot product of the vertex normal and the reversed light direction gives the influence of the light (0-1). The diffuse color scaled by that influence is then added to the ambient color. DiffuseColor is black because LightDiffuse is black, and AmbientColor is the diffuse light of the material. This still needs confirmation.

• dot (x,y): return the dot product of the x and y vectors.

• max (x,y): choose the value of x or y, whichever is greater.

146 }

147 Out.Color.a = DiffuseColor.a;

148 Out.Color = saturate( Out.Color );

149

• saturate (x): clamp x to the range 0-1. Values below 0 become 0 and values above 1 become 1.
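The non-toon color calculation above can be pulled into a helper for reuse. This is a sketch: LambertColor is a hypothetical name, and the parameters are named so they don't clash with full.fx's globals. It assumes the light direction is already normalized.

```hlsl
// Hypothetical helper: ambient + Lambert diffuse, as in the !useToon branch.
float4 LambertColor(float3 normal, float3 lightDir, float3 ambient, float4 diffuse)
{
    float4 color;
    // Light influence (0-1): cosine between the normal and the reversed
    // light direction; surfaces facing away from the light get 0.
    float influence = max(0, dot(normalize(normal), -lightDir));
    color.rgb = ambient + influence * diffuse.rgb;
    color.a   = diffuse.a;
    // Clamp every channel to 0-1, like Out.Color = saturate(Out.Color).
    return saturate(color);
}
```

Inside Basic_VS, the non-toon path could then become Out.Color = LambertColor(Out.Normal, LightDirection, AmbientColor, DiffuseColor);.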

150 // texture coordinates

151 Out.Tex = Tex;

152

153 if ( useSphereMap ) {

154 // sphere map texture coordinates

155 float2 NormalWV = mul( Out.Normal, (float3x3)ViewMatrix );

X and Y coordinates of vertex normals in view space.

156 Out.SpTex.x = NormalWV.x * 0.5f + 0.5f;

157 Out.SpTex.y = NormalWV.y * -0.5f + 0.5f;

158 }

159

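Converts the view-space x and y of the vertex normal (range -1 to 1) to texture coordinates (range 0 to 1). The y value is flipped because texture coordinates grow downward. This is a common idiom for sphere maps.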
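Here is the same conversion as a standalone helper, a sketch with the hypothetical name SphereMapUV. It takes .xy explicitly instead of relying on the implicit float3-to-float2 truncation in the original lines. ViewMatrix is re-declared only so the snippet stands alone.

```hlsl
float4x4 ViewMatrix : VIEW;

// Hypothetical helper: world-space normal -> sphere map UV.
float2 SphereMapUV(float3 worldNormal)
{
    // Rotate the normal into view space; only x and y are needed.
    float2 normalView = mul(worldNormal, (float3x3)ViewMatrix).xy;
    // Map -1..1 to 0..1, flipping y because texture v grows downward.
    return float2(normalView.x * 0.5f + 0.5f, normalView.y * -0.5f + 0.5f);
}
```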

160 return Out;

161 }

Return the structure you set.

163 // pixel shader

164 float4 Basic_PS(VS_OUTPUT IN, uniform bool useTexture, uniform bool useSphereMap, uniform bool useToon) : COLOR0

165 {

Specify the color of pixels to be displayed on the screen.

Parameters

• IN: VS_OUTPUT structure received from the vertex shader.

• useTexture: determination of texture usage, given by pass.

• useSphereMap: determination of using sphere map, given by pass.

• useToon: determination of toon usage. Given by pass in the case of model data.

Output value

Output color

166 // specular color calculation

167 float3 HalfVector = normalize( normalize(IN.Eye) + -LightDirection );

Find the half vector from the inverse vector of the line of sight and the inverse vector of the light.

• Half vector: the normalized sum of two unit vectors, pointing halfway between them. It's used instead of calculating the reflection vector (the Blinn-Phong model).

168 float3 Specular = pow( max(0,dot( HalfVector, normalize(IN.Normal) )), SpecularPower ) * SpecularColor;

169

From the half vector and the vertex normal, find the influence of the reflection. Raise the influence to the power of the specular strength (SpecularPower), then multiply by the specular light color to get the specular.

• pow (x,y): raise x to the power y.
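Together, the specular lines form the Blinn-Phong model. Below is a sketch of them as a helper function. The name BlinnPhongSpecular is hypothetical, and lightDir is assumed to be the normalized light direction, as in full.fx.

```hlsl
// Hypothetical helper: Blinn-Phong specular as computed in Basic_PS.
float3 BlinnPhongSpecular(float3 eyeVector, float3 normal, float3 lightDir,
                          float specularPower, float3 specularColor)
{
    // Half vector between the direction to the camera and the direction to the light.
    float3 halfVector = normalize(normalize(eyeVector) - lightDir);
    // Cosine between the half vector and the normal, clamped at 0.
    float influence = max(0, dot(halfVector, normalize(normal)));
    // A higher power gives a smaller, sharper highlight.
    return pow(influence, specularPower) * specularColor;
}
```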

170 float4 Color = IN.Color;

171 if ( useTexture ) {

172 // apply texture

173 Color *= tex2D( ObjTexSampler, IN.Tex );

174 }

If a texture is set, extract the color of the texture coordinates and multiply it by the base color.

• tex2D (sampler, tex): extract the color of the tex coordinates from the 2D texture in the sampler settings.

175 if ( useSphereMap ) {

176 // apply sphere map

177 if(spadd) Color += tex2D(ObjSphareSampler,IN.SpTex);

178 else Color *= tex2D(ObjSphareSampler,IN.SpTex);

179 }

180

If a sphere map is set, extract the color of the sphere map texture coordinates and add it to the base color if it is an additive sphere map file, otherwise multiply it.

181 if ( useToon ) {

182 // toon application

183 float LightNormal = dot( IN.Normal, -LightDirection );

184 Color.rgb *= lerp(MaterialToon, float3(1,1,1), saturate(LightNormal * 16 + 0.5));

185 }

In the case of the PMD model, determine the influence of the light from the vertex normal and the reversed light vector. The influence is scaled by 16, offset by 0.5 and clamped to 0-1. This produces a sharp switch where the surface turns away from the light: the lit side keeps the base color, and the shaded side is multiplied by the toon color.

• lerp (x,y,s): linear interpolation, x + s(y - x). s = 0 gives x and s = 1 gives y.
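A worked example of the toon factor shows how sharp it is. Write x for dot(IN.Normal, -LightDirection) and t for the lerp weight:

$$
t = \operatorname{saturate}(16x + 0.5), \qquad \text{Color.rgb} \mathrel{*}= \operatorname{lerp}(\text{MaterialToon}, \mathbf{1}, t) = \text{MaterialToon} + t\,(\mathbf{1} - \text{MaterialToon})
$$

$$
t = 1 \iff x \ge \tfrac{1}{32} = 0.03125, \qquad t = 0 \iff x \le -\tfrac{1}{32}, \qquad t = 0.5 \text{ at } x = 0
$$

Assuming unit-length normals, x is the cosine of the angle between the normal and the light. The blend therefore only happens between roughly 88.2° and 91.8°, which is why the toon edge looks hard.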

186

187 // specular application

188 Color.rgb += Specular;

189

190 return Color;

191 }

Add the obtained specular to the base color and return the output color.

195 technique MainTec0 < string MMDPass = "object"; bool UseTexture = false; bool UseSphereMap = false; bool UseToon = false; > {

196 pass DrawObject {

197 VertexShader = compile vs_2_0 Basic_VS(false, false, false);

198 PixelShader = compile ps_2_0 Basic_PS(false, false, false);

199 }

200 }

Technique performed on a subset of accessories (materials) that don’t use texture or sphere maps when self-shadow is disabled.

• “object”: object when self-shadow is disabled.

• UseTexture: true for texture usage subset.

• UseSphereMap: true for sphere map usage subset.

• UseToon: true for PMD model.
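The other variants presumably follow the same pattern, with the annotations and the uniform arguments kept in sync. As a sketch (not copied from full.fx), a variant for accessories that use a texture but no sphere map would look like this, reusing Basic_VS and Basic_PS from above:

```hlsl
// Sketch: accessories with a texture, no sphere map, no toon.
// Assumes Basic_VS and Basic_PS from the listing above.
technique MainTec1 < string MMDPass = "object"; bool UseTexture = true; bool UseSphereMap = false; bool UseToon = false; > {
    pass DrawObject {
        // The uniform arguments must match the annotations above.
        VertexShader = compile vs_2_0 Basic_VS(true, false, false);
        PixelShader  = compile ps_2_0 Basic_PS(true, false, false);
    }
}
```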

7. Z-VALUE PLOT FOR SELF-SHADOW DETERMINATION (セルフシャドウ判定用Z値プロット)

Creates the depth values used to decide whether a point is in self-shadow.

256 struct VS_ZValuePlot_OUTPUT {

257 float4 Pos : POSITION; // projective transformation coordinates

258 float4 ShadowMapTex : TEXCOORD0; // z-buffer texture

259 };

A structure for passing multiple return values between shader stages.

Parameters

• Pos: stores the vertex position in projective (homogeneous) coordinates, using the POSITION output semantic.

• ShadowMapTex: stores a copy of the position in TEXCOORD0 so the hardware interpolates its z and w values for each pixel.

• w: the scaling factor of the view frustum (which widens as it goes deeper) in projective space.

261 // vertex shader

262 VS_ZValuePlot_OUTPUT ZValuePlot_VS( float4 Pos : POSITION )

263 {

264 VS_ZValuePlot_OUTPUT Out = (VS_ZValuePlot_OUTPUT)0;

265

266 // do a world-view projection transformation with the eyes of the light.

267 Out.Pos = mul( Pos, LightWorldViewProjMatrix );

268

Conversion of local coordinates of a vertex to projective coordinates with respect to a light.

269 // align texture coordinates to vertices.

270 Out.ShadowMapTex = Out.Pos;

271

272 return Out;

273 }

Assign to texture coordinates to let the hardware calculate z, w interpolation values for vertex coordinates, and return the structure.

275 // pixel shader

276 float4 ZValuePlot_PS( float4 ShadowMapTex : TEXCOORD0 ) : COLOR

277 {

278 // record z-values for R color components

279 return float4(ShadowMapTex.z/ShadowMapTex.w,0,0,1);

280 }

Divide the z-value in projective space by the scaling factor w to get the z-value in screen coordinates, store it in the red component and return it (presumably MMD processes it internally?).

282 // techniques for Z-value mapping

283 technique ZplotTec < string MMDPass = "zplot"; > {

284 pass ZValuePlot {

285 AlphaBlendEnable = FALSE;

286 VertexShader = compile vs_2_0 ZValuePlot_VS();

287 PixelShader = compile ps_2_0 ZValuePlot_PS();

288 }

289 }

Technique to be performed when calculating the z-value for self-shadow determination.

• “zplot”: Z-value plot for self-shadow.

8. DRAWING OBJECTS IN SELF-SHADOWING (セルフシャドウ時オブジェクト描画)

Drawing an object with self-shadow.

295 // sampler for the shadow buffer. "register(s0)" because MMD uses s0

296 sampler DefSampler : register(s0);

297

Assign sampler register 0 to DefSampler. It's unclear when it's swapped with MMDSamp0, which was declared on the same register earlier. It can't be replaced.

298 struct BufferShadow_OUTPUT {

299 float4 Pos : POSITION; // projective transformation coordinates

300 float4 ZCalcTex : TEXCOORD0; // z value

301 float2 Tex : TEXCOORD1; // texture

302 float3 Normal : TEXCOORD2; // normal vector

303 float3 Eye : TEXCOORD3; // position relative to camera

304 float2 SpTex : TEXCOORD4; // sphere map texture coordinates

305 float4 Color : COLOR0; // diffuse color

306 };

VS_OUTPUT with ZCalcTex added.

• ZCalcTex: stores the vertex position in the light's projective coordinates in TEXCOORD0, so the hardware interpolates its z and w values for each pixel.

308 // vertex shader

309 BufferShadow_OUTPUT BufferShadow_VS(float4 Pos : POSITION, float3 Normal : NORMAL, float2 Tex : TEXCOORD0, uniform bool useTexture, uniform bool useSphereMap, uniform bool useToon)

310 {

Converts local coordinates of vertices to projective coordinates. Set the value to pass to the pixel shader, returning the BufferShadow_OUTPUT structure.

Parameters

• Pos: local coordinates of the vertex.

• Normal: normals in local coordinates of vertices.

• Tex: UV coordinates of the vertices.

• useTexture: determination of texture usage, given by pass.

• useSphereMap: determination of sphere map usage, given by pass.

• useToon: determination of toon usage. Given by pass in the case of model data.

Return value

BufferShadow_OUTPUT.

311 BufferShadow_OUTPUT Out = (BufferShadow_OUTPUT)0;

312

Initializing the structure.

313 // world-view projection transformation of camera viewpoint.

314 Out.Pos = mul( Pos, WorldViewProjMatrix );

315

Convert local coordinates of vertices to projective coordinates.

316 // position relative to camera

317 Out.Eye = CameraPosition - mul( Pos, WorldMatrix );

Calculate the inverse vector of the line of sight.

318 // vertex normal

319 Out.Normal = normalize( mul( Normal, (float3x3)WorldMatrix ) );

Compute normalized normal vectors in the vertex world space.

320 // world-view projection transformation from the light's viewpoint

321 Out.ZCalcTex = mul( Pos, LightWorldViewProjMatrix );

Convert local coordinates of vertices to projective coordinates with respect to the light, and let the hardware calculate z and w interpolation values.

323 // Diffuse color + Ambient color Calculation

324 Out.Color.rgb = AmbientColor;

325 if ( !useToon ) {

326 Out.Color.rgb += max(0,dot( Out.Normal, -LightDirection )) * DiffuseColor.rgb;

327 }

328 Out.Color.a = DiffuseColor.a;

329 Out.Color = saturate( Out.Color );

Set the base color. For accessories (no toon), add the diffuse color weighted by the light influence to the base color, then clamp each component to 0-1.

331 // texture coordinates

332 Out.Tex = Tex;

Assign the UV coordinates of the vertex as they are.

334 if ( useSphereMap ) {

335 // sphere map texture coordinates

336 float2 NormalWV = mul( Out.Normal, (float3x3)ViewMatrix );

Convert vertex normal vectors to x and y components in view space coordinates when using sphere maps.

337 Out.SpTex.x = NormalWV.x * 0.5f + 0.5f;

338 Out.SpTex.y = NormalWV.y * -0.5f + 0.5f;

339 }

340

341 return Out;

342 }

Convert the view space coordinates to texture coordinates and return the structure.

344 // pixel shader

345 float4 BufferShadow_PS(BufferShadow_OUTPUT IN, uniform bool useTexture, uniform bool useSphereMap, uniform bool useToon) : COLOR

346 {

Specify the color of pixels to be displayed on the screen.

Parameters

• IN: BufferShadow_OUTPUT structure received from vertex shader.

• useTexture: determination of texture usage, given by pass.

• useSphereMap: determination of sphere map usage, given by pass.

• useToon: determination of toon usage. Given by pass in the case of model data.

Output value

Output color

```hlsl
    // specular color calculation
    float3 HalfVector = normalize( normalize(IN.Eye) + -LightDirection );
    float3 Specular = pow( max(0,dot( HalfVector, normalize(IN.Normal) )), SpecularPower ) * SpecularColor;
```

Same specular calculation as Basic_PS.
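This is the Blinn-Phong half-vector model. A small Python sketch helps show how SpecularPower shapes the highlight. The names are hypothetical stand-ins for IN.Eye, IN.Normal, LightDirection, SpecularPower and SpecularColor, and all input vectors are assumed to be non-zero.

```python
import math


def normalize(v):
    """Return v scaled to unit length (v is assumed to be non-zero)."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot3(a, b):
    """Dot product of two 3-component vectors."""
    return sum(x * y for x, y in zip(a, b))


def blinn_phong_specular(eye, normal, light_direction, specular_power, specular_color):
    """Hypothetical mirror of the specular step: Blinn-Phong with a half vector.

    eye points from the surface toward the camera (like IN.Eye); light_direction
    points from the light toward the scene, so the half vector uses -light_direction.
    """
    half_vector = normalize(tuple(e - l for e, l in zip(normalize(eye), light_direction)))
    n_dot_h = max(0.0, dot3(half_vector, normalize(normal)))
    return tuple((n_dot_h ** specular_power) * c for c in specular_color)
```

Raising the cosine to SpecularPower is what narrows the highlight. With a power of 2, a half vector 45 degrees off the normal still gives half intensity, while higher powers fall off much faster.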

```hlsl
    float4 Color = IN.Color;
    float4 ShadowColor = float4(AmbientColor, Color.a); // shadow's color
```

Base color and self-shadow base color.

```hlsl
    if ( useTexture ) {
        // apply texture
        float4 TexColor = tex2D( ObjTexSampler, IN.Tex );
        Color *= TexColor;
        ShadowColor *= TexColor;
    }
```

When using a texture, extract the color of the texture coordinates from the set texture and multiply it by the base color and self-shadow color respectively.

```hlsl
    if ( useSphereMap ) {
        // apply sphere map
        float4 TexColor = tex2D(ObjSphareSampler,IN.SpTex);
        if(spadd) {
            Color += TexColor;
            ShadowColor += TexColor;
        } else {
            Color *= TexColor;
            ShadowColor *= TexColor;
        }
    }
```

As with Basic_PS, when using a sphere map, add or multiply the corresponding colors.
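Here's a small Python sketch that compares the two sphere modes. The additive flag stands in for spadd, and the RGBA tuples are hypothetical values rather than anything read from MMD.

```python
def apply_sphere_map(color, shadow_color, sphere_texel, additive):
    """Hypothetical mirror of the sphere-map step on RGBA tuples.

    additive=True corresponds to spadd (add mode); otherwise the texel is multiplied in.
    Like the HLSL float4 operations, all four components (alpha included) are combined.
    """
    def combine(a, b):
        return a + b if additive else a * b

    new_color = tuple(combine(a, b) for a, b in zip(color, sphere_texel))
    new_shadow = tuple(combine(a, b) for a, b in zip(shadow_color, sphere_texel))
    return new_color, new_shadow
```

For texel values between 0 and 1, add mode can only brighten the base and self-shadow colors, while multiply mode can only darken or tint them.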

```hlsl
    // specular application
    Color.rgb += Specular;
```

Apply specular to the base color.

```hlsl
    // convert to texture coordinates
    IN.ZCalcTex /= IN.ZCalcTex.w;
```

Divide the light-space projective coordinates by their w component (the perspective divide) to get normalized screen coordinates.

```hlsl
    float2 TransTexCoord;
    TransTexCoord.x = (1.0f + IN.ZCalcTex.x)*0.5f;
    TransTexCoord.y = (1.0f - IN.ZCalcTex.y)*0.5f;
```

Convert screen coordinates to texture coordinates.
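The perspective divide, the screen-to-texture conversion and the range check that follows can be sketched in Python as below. This is a hedged CPU illustration, and the function names are hypothetical.

```python
def light_clip_to_shadow_uv(z_calc_tex):
    """Hypothetical mirror of the shadow-map lookup coordinates.

    z_calc_tex is the (x, y, z, w) position from LightWorldViewProjMatrix.
    Returns the shadow-map UV and the pixel's depth from the light.
    """
    x, y, z, w = z_calc_tex
    # Perspective divide: projective coordinates -> normalized device coordinates.
    x, y, z = x / w, y / w, z / w
    # NDC x/y run from -1 to 1 (y up); texture UVs run from 0 to 1 (v down).
    u = (1.0 + x) * 0.5
    v = (1.0 - y) * 0.5
    return (u, v), z


def inside_shadow_map(uv):
    """Same test as !any(saturate(uv) != uv): both coordinates lie in [0, 1]."""
    return all(0.0 <= c <= 1.0 for c in uv)


uv, depth = light_clip_to_shadow_uv((1.0, -1.0, 0.5, 2.0))
print(uv, depth, inside_shadow_map(uv))  # → (0.75, 0.75) 0.25 True
```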

```hlsl
    if( any( saturate(TransTexCoord) != TransTexCoord ) ) {
        // external shadow buffer
        return Color;
```

If the texture coordinates fall outside the 0-1 range, the pixel lies outside the shadow buffer, so return the base color without any shadowing.

```hlsl
    } else {
        float comp;
        if(parthf) {
            // self-shadow mode2
            comp=1-saturate(max(IN.ZCalcTex.z-tex2D(DefSampler,TransTexCoord).r , 0.0f)*SKII2*TransTexCoord.y-0.3f);
```

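In self-shadow mode2, read the depth stored in the shadow buffer and compare it with the pixel's own depth from the light. If the pixel's depth is smaller (it's closer to the light), it isn't in shadow. The larger the difference, the closer comp gets to 0 (full shadow). When the difference is small (close to the beginning of the shadow), the subtracted 0.3 keeps comp at or near 1, so the base color is only weakly darkened. In mode2 the difference is also multiplied by TransTexCoord.y, which weakens the shadow toward the top of the shadow map, where y approaches 0.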

```hlsl
        } else {
            // self-shadow mode1
            comp=1-saturate(max(IN.ZCalcTex.z-tex2D(DefSampler,TransTexCoord).r , 0.0f)*SKII1-0.3f);
        }
```

Do the same for self-shadow mode1, which uses SKII1 and has no TransTexCoord.y factor.
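A small Python sketch makes the two modes easier to compare. SKII1 and SKII2 are defined elsewhere in the .fx file, so the skii numbers used when calling this function are placeholders, not the real constants. The function name is hypothetical.

```python
def saturate(x):
    """Clamp a scalar to [0, 1], like HLSL saturate()."""
    return min(max(x, 0.0), 1.0)


def self_shadow_factor(pixel_depth, stored_depth, skii, shadow_v=None):
    """Hypothetical mirror of comp: 1.0 means fully lit, 0.0 means fully shadowed.

    Mode1: call with skii=SKII1 and shadow_v=None.
    Mode2: call with skii=SKII2 and shadow_v=TransTexCoord.y.
    """
    # Only pixels farther from the light than the stored depth can be shadowed.
    diff = max(pixel_depth - stored_depth, 0.0)
    scale = skii if shadow_v is None else skii * shadow_v
    # The -0.3 offset keeps small differences (near the shadow's start) fully lit.
    return 1.0 - saturate(diff * scale - 0.3)
```

Passing shadow_v=0 switches mode2's shadow off entirely, whatever the depth difference. This illustrates why mode2 shadows weaken toward the top of the shadow map, where TransTexCoord.y approaches 0.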

```hlsl
        if ( useToon ) {
            // toon application
            comp = min(saturate(dot(IN.Normal,-LightDirection)*Toon),comp);
```

For MMD models, compare the shading caused by the light (the toon term) with the self-shadow. Use the smaller value, i.e. the darker of the two, as the shadow factor.

• min (x,y): select the smaller value of x and y.

```hlsl
            ShadowColor.rgb *= MaterialToon;
        }
```

Multiply the self-shadow color by the toon shadow color.

```hlsl
        float4 ans = lerp(ShadowColor, Color, comp);
```

Linearly interpolate between the self-shadow color and the base color depending on the influence of the shadow.

```hlsl
        if( transp ) ans.a = 0.5f;
        return ans;
    }
}
```

If translucency is enabled, set the alpha of the output color to 0.5 (50% transparency). Then return the composited color.
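The following Python sketch puts these last steps together. It shows how comp, the toon term and the translucency flag combine into the returned color. It's a CPU illustration with hypothetical names, not code MME can load.

```python
def lerp(a, b, t):
    """HLSL-style lerp on tuples: a + (b - a) * t, component-wise."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def composite(color, shadow_color, comp, toon_comp=None,
              material_toon=(1.0, 1.0, 1.0), transp=False):
    """Hypothetical mirror of the end of BufferShadow_PS on RGBA tuples.

    toon_comp stands for saturate(dot(IN.Normal, -LightDirection) * Toon) and is
    only passed for models (useToon); material_toon stands for MaterialToon.
    """
    if toon_comp is not None:
        # The darker of toon shading and self-shadow wins.
        comp = min(toon_comp, comp)
        # Tint only the shadow's RGB by the toon color; alpha is untouched.
        shadow_color = tuple(c * t for c, t in zip(shadow_color[:3], material_toon)) + (shadow_color[3],)
    # comp = 1 gives the base color, comp = 0 gives the shadow color.
    ans = lerp(shadow_color, color, comp)
    if transp:
        ans = ans[:3] + (0.5,)
    return ans
```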

```hlsl
// techniques for drawing objects (for accessories)
technique MainTecBS0 < string MMDPass = "object_ss"; bool UseTexture = false; bool UseSphereMap = false; bool UseToon = false; > {
    pass DrawObject {
        VertexShader = compile vs_3_0 BufferShadow_VS(false, false, false);
        PixelShader = compile ps_3_0 BufferShadow_PS(false, false, false);
    }
}
```

Technique performed on a subset of accessories (materials) that don’t use a texture or sphere map during self-shadowing.

• “object_ss”: object drawn when self-shadow is enabled.

• UseTexture: true for texture usage subset.

• UseSphereMap: true for sphere map usage subset.

• UseToon: true for PMD model.

9. FINAL NOTES

For further reading on HLSL coding, please visit Microsoft’s official English reference documentation.


RAY-MMD lighting tips: a translation by ryuu

The following tutorial is an English translation of the original Japanese one by ngreeed. (WARNING: the website is NSFW)

Let’s get started! If you just landed on this tutorial for advanced MMDers and are wondering what the hell is going on, there are beginner Raycast tutorials in Learn MMD! Also, if you feel like reading more about rendering tips, I suggest taking a look at my other tutorial: advanced MMD rendering tutorial (and why you should care).

Content Index:

Introduction

Lighting: introduction

Lighting: basics

Fog

Other Tips

Final Notes

Introduction to Lighting Content Index:

Directional Light

Rectangle Light

Sphere Light

Point Light

Spot Light

Spot Light IES

Disk Light

Tube Light

Basics of Lighting Content Index:

Ambient

Shadow

Fog

IES

LED

IBL

GIF

Fog Content Index:

Atmospheric Fog

Ground Fog

Volumetric Cube And Sphere

1. INTRODUCTION

This compilation was brought together by the MMD community and reflects ngreeed’s own experiences with fog and lighting. Every picture shown in this translation can be found in the original documentation on Iwara and GitHub.

It was translated from Japanese to English by ryuu, with written permission from the author to share it on my blog and add information. The translation was done with the help of the online translator DeepL and my friends. This tutorial isn’t intended to replace the original author’s.

2. LIGHTING: INTRODUCTION

Learning lighting gives you more freedom in directing your videos. However, this section is only for those who are confident they understand how to use Ray-MMD, because it builds on knowing how to use skyboxes and materials. If you’re unsure about either of those, start by reading this wiki.

In 3DCG software, surface lights are normally characterized by their ability to create soft shadows. However, the surface lights in Ray-MMD are very difficult to handle and don’t provide much benefit, so we recommend using point lights or directional lights.

Translator’s note: for those wondering what lights are available in Raycast, there’s a translation below of the wiki’s list.

2.1 Directional Light

Directional light simulates light hitting an object from an infinite distance. The angle between the rays reaching the object is negligible and the rays are always parallel to each other, which is why it’s also called sunlight.

Directional light doesn’t take the coordinates of the light source into account, so placing it anywhere in the scene won’t change the lighting effect. Only its rotation affects the lighting. Because of this nature, directional light requires shading calculations for the whole scene.

Therefore, its computational overhead is the largest among all multi-light shadows, and its shadow quality is only good enough for lighting characters at very high quality settings. You may use it when you want to attach a bone to the main light source.

2.2 Rectangle Light

The light source will shine from a plane towards a fixed range. It’s used to simulate monitors, screens, smartphones, etc.

Because an area light has to account for the volume of its light source, calculating its shadow accurately in real time is very difficult. It’s recommended to use spot light shadows instead.

2.3 Sphere Light

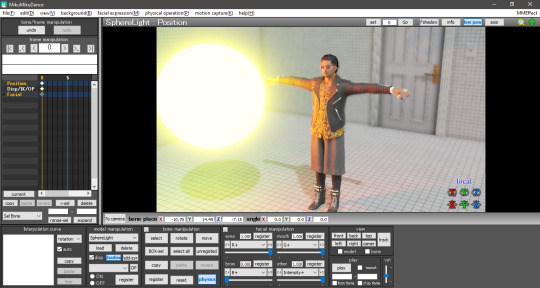

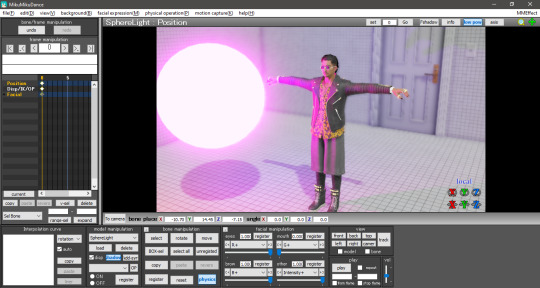

The light is emitted from the location of the light source to the surrounding area and the volume of the light source is taken into account to illuminate all the objects within the range.

Because an area light has to account for the volume of its light source, calculating its shadow accurately in real time is very difficult, so point light shadows are used instead.

2.4 Point Light

Light is emitted uniformly from a single point to the surrounding area, illuminating all objects within its range.

A point light has to calculate shadows for every object within its range, which leads to a large shadow calculation overhead, and its shadow quality isn’t suitable for character lighting.

2.5 Spot Light

A spot light shines from a single point in a fixed direction, without taking the volume of the light source into account, and illuminates objects within a cone. Its brightness gradually fades toward the edges, and the cone angle can be controlled with the (Angle +/-) morph.

It’s used to simulate lampshades, flashlights and car high beams. Its shadow quality is the best of all light sources, so it’s very suitable for lighting characters, for example when simulating stage lighting.

2.6 Spot Light IES

Behaves like a spot light while also sampling an IES texture.

IES defines the luminous flux of its light at different angles, so that certain areas will be brighter or darker, thus simulating light coming through certain places and shining around the scene.

2.7 Disk Light

Light is emitted from a flat disk toward a fixed range. It’s used to simulate light from afar.

Because an area light has to account for the volume of its light source, calculating its shadow in real time is very difficult, so use spot light shadows instead.

2.8 Tube Light

Illuminates the surrounding objects uniformly. It’s used to simulate the light of a lamp.

Because an area light has to account for the volume of its light source, calculating its shadow in real time is very difficult, so use point light shadows instead.

3. LIGHTING: BASICS

Before explaining the parameters, let's start with an explanation of how to use the Lighting folder.

In the Lighting folder of ray-mmd, there are 10 different .pmx files to load into MMD, along with their respective folders. Basically, for each light you use MME to assign one of the .fx files in that light’s Default (Ambient/LED/GIF/IBL) folder to the light’s .pmx. A .....lighting.... .fx is assigned to the LightMap tab of MME, and a ...fog... .fx is assigned to the FogMap tab of MME.

Translator’s note: a fog .fx can be assigned to LightMap, but it’ll produce only fog.

Once you know this, you can play around with the lights and learn what you can do with them.

This spreadsheet shows the parameters and .fx available for each light, along with a brief explanation. The explanation of the terms is pretty much a translation.

3.1 Ambient

Reference: twitter.com

Assign an .fx from the Default Ambient folder to the LightMap tab to create a light that produces no reflections or highlights, even when it hits a material with high parameters such as Specular. It’s useful when loading multiple lights.

DirectionalLight is located in the Default folder, not in the DefaultAmbient folder.

3.2 Shadow

There are four shadow levels: low, medium, high and very high. When one is assigned, light (or fog) that hits a model won’t shine through to whatever lies beyond it. Rather than adding shadow, it makes the light stop where it hits the model. In other words, the default light has no shadow attached, so it passes through models and brightens everything regardless.

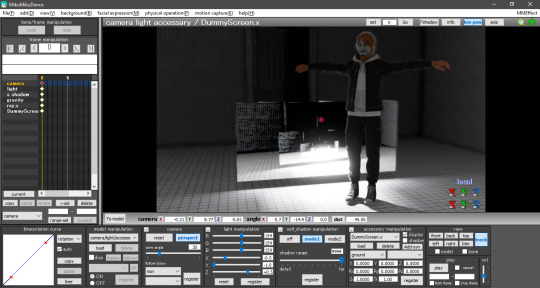

Default spot light.

The same spot light with shadow (very high).

3.3 Fog

When fog is loaded, light shafts appear where the light shines. There are also special parameters called MiePhase and MieDensity, but be aware that the other parameters aren’t independent. Depending on the angle and position of the camera, the godray may or may not be displayed, so be careful.

Godray representation using volumetric fog and disk light.

3.4 IES

Reference: unrealengine.com