#*shows AI drawing writing and solving math problems*


Text

the kurzgesagt AI video, brother, what a flop. yeah, let's just keep talking about artificial intelligence without even mentioning that it's not intelligent at all, because it doesn't have the capacity for reasoning or for understanding what it outputs

#'AI is good at many things'#*shows AI drawing writing and solving math problems*#no dude it's great at generating an output off of millions of inputs that's not what drawing or writing is#and sure it can solve an equation but so does a fucking calculator if you give it something even a bit more complex it flounders completely#beCAUSE IT DOES NOT UNDERSTAND WHAT IT IS THAT IT DOES#the 'suppose we can make an AGI' brother we don't even have an actual AI atm that's a big ass 'suppose'#should have gone into what it would take to make an actual non-biological intelligence instead

1 note


Text

I was the kid who used all the writing space under a question and had to draw a line into the next one to make room. And in the paragraph boxes with printed lines, I had to write really tiny under the bottom line, where there isn’t as much white space.

Those awful “explain how you solved this math question in at least 3 sentences” questions annoyed me though. You had to dissect how you did a math problem (after already showing your work, of course) and somehow stretch an explanation across 3 sentences.

To me, those questions are the exception to easily writing 600 words. Not that it’s an excuse to use AI, just that they’re the hardest and feel the most pointless of the average essay questions you’d encounter at school.

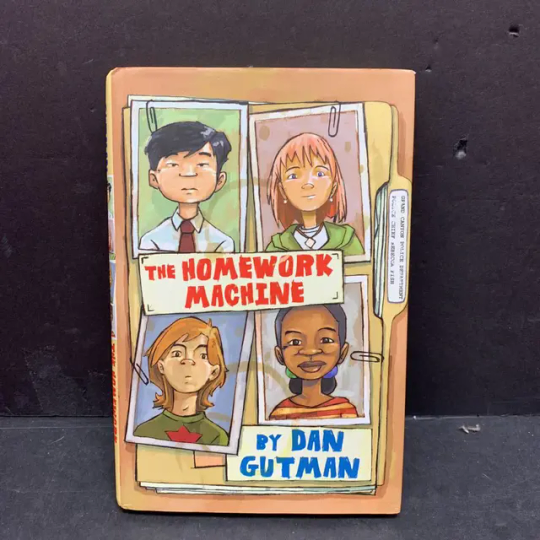

But speaking of school and AI, did anyone else here read The Homework Machine when they were a kid?

Perhaps it would be expedient for parents to read this book to their kids, or for elementary school teachers to assign it.

im still losing it over the "how did high schoolers write 600 word essays before chatgpt" post. 600 words. that is nothing. that is so few words what do you mean you can't write 600 words. 600 words. this post right here is 45 words.

#The homework machine#Idk how to tag this#writing crutches#cheating on homework is crazy nowadays.#Also a high schooler??? That’s wild.#This post was 171 words. Not including the tags.

97K notes


Text

ChatGPT Is Poisoning Your Brain: A Critical Look at AI-Driven Thinking

In the age of artificial intelligence, where convenience often trumps critical thinking, tools like ChatGPT have emerged as indispensable digital companions. From writing essays to solving complex problems, AI seems to offer an answer for everything. But beneath the sleek interface and quick responses lies a growing concern: Is ChatGPT subtly poisoning the way we think?

The question may sound alarmist, but it's rooted in legitimate fears about our cognitive independence, critical thinking, and the long-term effects of outsourcing mental labor to machines. This article explores how AI tools like ChatGPT, while revolutionary in many ways, might be undermining human cognition, promoting intellectual laziness, and fostering a dependency that could weaken the very skills that define us as intelligent beings.

The Rise of AI Assistance

AI-powered language models like ChatGPT have rapidly become integrated into education, business, and personal productivity. Students use them for homework help, professionals for drafting reports, and creators for brainstorming ideas. The appeal is obvious: they save time, provide instant answers, and can mimic human writing styles with uncanny accuracy.

But therein lies the problem. As with any tool, overuse or misuse can lead to unintended consequences. When a generation begins to rely on AI for thinking, questioning, and decision-making, what happens to their own ability to perform these tasks?

The Erosion of Critical Thinking

One of the most alarming concerns is the potential erosion of critical thinking skills. Traditionally, we engage deeply with information by reading, analyzing, and questioning it to form conclusions. This mental labor is essential not only for knowledge acquisition but also for developing intellectual resilience.

ChatGPT short-circuits that process. It offers synthesized, polished responses that feel like conclusions without showing the messy process of arriving at them. As a result, users may accept answers passively, without questioning validity, context, or bias.

This passive consumption is antithetical to how humans are supposed to learn. Instead of wrestling with difficult problems, we now ask ChatGPT for the answer. And each time we do, we weaken the muscles of our mind, much like a bodybuilder who suddenly stops lifting weights.

The Death of Original Thought

Creativity thrives on struggle. Original thought often emerges from grappling with uncertainty, failure, and the slow process of refining an idea. But if we increasingly turn to AI for brainstorming, inspiration, and content creation, we may dilute the authenticity of our creative efforts.

ChatGPT doesn’t think. It predicts. It pulls from patterns in existing data and rearranges them in a way that looks new. When users rely on it to produce content—be it poetry, essays, or business pitches—they may unknowingly be recycling old ideas disguised as innovation.

This recycling of knowledge presents a threat: a slow, creeping homogenization of thought. As more people use AI to generate content, our intellectual ecosystem risks becoming an echo chamber of slightly rephrased ideas rather than a crucible for genuine innovation.

Dependency and the Decline of Memory

Memory and recall are fundamental to intelligence. They help us draw connections between disparate pieces of knowledge and develop intuition in various domains. Yet ChatGPT enables a world in which people don’t have to remember anymore: they can just ask.

Need to remember a historical date? A math formula? The main theme of a novel? Just ask ChatGPT.

Over time, this easy access leads to cognitive offloading—the process of transferring memory and thinking tasks to an external source. While this isn't inherently bad (we’ve been doing it with books and the internet), ChatGPT makes it far more insidious because of its seamlessness. The risk is that we stop bothering to learn or remember anything at all.

If we are not careful, we may raise a generation that knows less, remembers less, and thinks less, simply because they never needed to.

Intellectual Complacency and Confirmation Bias

Another subtle danger lies in the comfort ChatGPT provides. Unlike a human debate partner, ChatGPT often aims to please. It doesn't challenge your beliefs unless prompted explicitly. It often reflects back what it thinks you want to hear, wrapped in eloquent prose.

This creates a fertile ground for confirmation bias, where users only encounter information that supports their existing views. Instead of provoking thought, ChatGPT can become a mirror—reinforcing rather than questioning.

Moreover, because the model is trained on vast amounts of internet text, it tends to reflect mainstream perspectives, glossing over nuance or marginal voices. This risks narrowing the user's worldview and weakening their ability to engage with complexity or contradiction.

Ethical Blind Spots and the Illusion of Objectivity

ChatGPT presents information with a veneer of authority. Its confident tone can make even incorrect or biased responses sound legitimate. Without the ability to assess source credibility or interpret nuance, users may take AI outputs at face value.

The real danger is that ChatGPT offers the illusion of objectivity. Because it's not a person, people assume it’s neutral. But this is a fallacy. AI models are shaped by the data they are trained on—and that data includes biases, misinformation, and cultural blind spots.

The more we treat ChatGPT as a final authority, the more we open ourselves to being manipulated by a black box we don’t fully understand.

What Can We Do About It?

None of this is to say ChatGPT should be abandoned. Like any powerful tool, its impact depends on how we use it. But awareness is key. Here are a few steps we can take:

Use AI as a supplement, not a substitute. Rely on ChatGPT to augment thinking, not replace it. Ask for perspectives, not conclusions.

Question everything. Treat every AI response as a starting point for deeper inquiry, not the end of the conversation.

Practice intellectual fitness. Engage in regular, deliberate thinking without AI assistance—write essays, solve problems, debate ideas.

Promote transparency. Push for more clarity about how AI models are trained, what data they use, and how they make decisions.

Educate on digital literacy. Schools and workplaces must teach how to critically evaluate AI output, understand its limitations, and use it responsibly.

Conclusion

ChatGPT is not inherently bad. It is, in fact, one of the most remarkable technological achievements of our time. But like all powerful tools, it demands caution, reflection, and responsibility. If used mindlessly, it can dull our intellect, erode our curiosity, and make us passive recipients of machine-generated thought.

We must not let convenience kill curiosity.

To keep our brains alive, we must continue to struggle with complexity, embrace ambiguity, and engage in the hard—but rewarding—work of thinking for ourselves. The mind is a muscle. Let’s not let AI do all the heavy lifting.

0 notes

Text

Modern Learning: Mohali Schools with Smart Classrooms and Technology

Education is changing fast. Schools in Mohali are using technology to make learning better. Smart classrooms are becoming common. They help students understand topics in new ways.

Why Smart Classrooms Matter

Traditional methods may not always work. Some students need more than books and lectures. Smart classrooms use screens, videos, and interactive tools. These make lessons more engaging.

Students see real-world examples on the screen.

Difficult subjects become easier with visuals.

Learning feels fun and exciting.

Teachers also benefit from technology. They can show live experiments, animations, and presentations. This helps them explain topics more clearly.

Interactive Learning Tools

Smartboards replace chalkboards in many schools. They allow teachers to draw, write, and show videos. Students can touch the screen and interact with lessons.

They solve problems directly on the board.

They play educational games that test knowledge.

They may enjoy learning more than before.

Tablets and computers are also part of smart classrooms. Students use them for research and projects. Digital libraries give them access to many books.

Better Understanding Through Technology

Some students may struggle with theory. Technology helps bring subjects to life. Science experiments are shown through animations. Math problems are solved step by step on the screen.

History lessons include virtual tours of old monuments.

Geography becomes interesting with 3D maps.

Language learning is better with speech recognition tools.

This approach helps students remember concepts. They do not just read but also see and hear.

Online Learning Support

Many schools in Mohali use online platforms. These help students learn even outside the classroom. Teachers upload notes, assignments, and video lectures.

Students can revise at their own pace.

Absent students can catch up easily.

Parents can track progress through apps.

Online tests and quizzes make learning flexible. Students can practice anytime.

Safe and Smart School Environment

Technology is also improving school safety. CCTV cameras are common in Mohali schools. ID cards with tracking help monitor student movement.

Parents feel more assured about security.

Attendance is marked digitally.

Emergency alerts reach parents fast.

Smart buses with GPS tracking are also used. These ensure safe travel for students.

Preparing Students for the Future

The world is moving towards digital careers. Students need to know how to use technology. Schools introduce coding and robotics to students at an early age.

Kids learn programming in simple ways.

AI and machine learning concepts are introduced early.

Problem-solving skills improve through tech-based learning.

These skills may help students in future jobs. Schools that focus on technology give them an advantage.

Final Thoughts

Smart classrooms make learning better. Top schools in Mohali are adopting modern tools. Students understand concepts better and feel more engaged. Education is not just about books anymore. It is about using the right tools to make learning fun and effective.

Looking for a school with smart classrooms? Mohali has many great options. Choose one that gives your child the best learning experience.

0 notes