#AIaccess

Explore tagged Tumblr posts


Neuro Xmas Deal: Revolutionizing AI Access for 2025

Transform Your 2025 with Neuro’s Revolutionary Xmas Deal

This is the kind of tool businesses need, especially as the world races toward 2025 and beyond. Whether you are an entrepreneur, a freelancer, or simply a creative mind, access to artificial intelligence is no longer optional: it is a necessity. Meet Neuro, the revolutionary one-stop MultiAI app that bundles more than 90 paid AI tools.

In this review, you will learn how the Neuro Xmas Deal lets you unlock an unmatched range of AI features without paying steep subscription fees. Find out why this handy tool could become your ultimate weapon for taking charge of your approach to AI.

Overview: Neuro Xmas Deal

Vendor: Seyi Adeleke

Product: NEURO – Xmas Deal

Front-End Price: $27

Discount: Instant $3

Bonuses: Yes

Niche: Affiliate Marketing, AI App

Support: Effective Response

Recommend: Highly recommend!

Guarantee: 30 Days Money Back Guarantee

What Is Neuro?

Neuro is an interface that brings more than 90 of the best AI models together in one user-friendly control panel. Imagine being able to use ChatGPT, DALL-E, Canva AI, MidJourney, Leonardo AI, Claude, Gemini, Copilot, Jasper, and many other tools without paying for each subscription or for API usage. With Neuro, you can:

Create 8K videos, 4K images, and voiceovers.

Design logos, websites, landing pages, and branding.

Generate articles, write ad copy, and even turn speech into text.

Automate tasks for your business without technical skills.

No monthly fees, no waiting, and no limits – Neuro empowers you to unleash unlimited creative and business potential.

#NeuroXmasDeal #RevolutionizingAI #AI2025 #Neurotechnology #ArtificialIntelligence #Neuroscience #Neuroengineering #BrainTech #Neuroinnovation #Neurorevolution #AIaccess #Neurotech #Neurofuture #Neurodevelopment #Neuroimaging #Neurocomputing #Neuroinformatics #Neurocognition #Neurorehabilitation #Neurorecovery #Neuroretraining #Neuroplasticity #Neurotherapy #Neuroeducation


How Small Language Models Are Reshaping AI: Smarter, Faster & More Accessible

Artificial Intelligence (AI) has transformed industries, from healthcare to education, by enabling machines to process and generate human-like text, answer questions, and perform complex tasks. At the heart of these advancements are language models, which are algorithms trained to understand and produce language. While large language models (LLMs) like GPT-4 or Llama have dominated headlines due to their impressive capabilities, they come with significant drawbacks, such as high computational costs and resource demands. Enter Small Language Models (SLMs)—compact, efficient alternatives that are reshaping how AI is developed, deployed, and accessed. This blog explores the technical foundations of SLMs, their role in making AI more accessible, and their impact on performance across various applications.

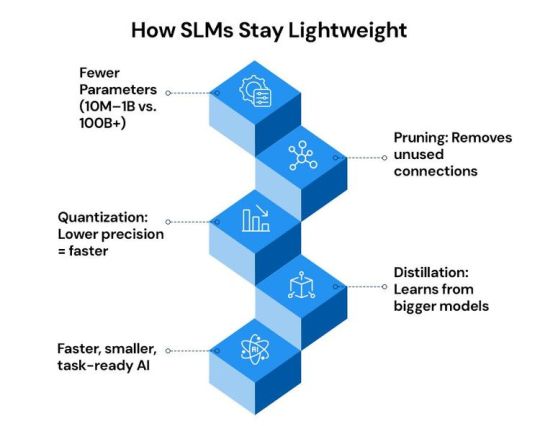

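What Are Small Language Models?

Small Language Models are compact language models with far fewer parameters than LLMs, typically ranging from tens of millions to a few billion. Examples discussed in this post include MobileBERT, DistilBERT, and Microsoft's Phi-2.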

Why SLMs Matter for Accessibility

Accessibility in AI refers to the ability of individuals, businesses, and organizations, regardless of their resources, to use and benefit from AI technologies. SLMs are pivotal in this regard because they lower the barriers to entry in three key ways: cost, infrastructure, and usability.

Lower Computational Costs

Training and running LLMs require vast computational resources, often involving clusters of high-end GPUs or TPUs that cost millions of dollars. For example, training GPT-3 is estimated to have consumed energy comparable to the annual electricity use of more than a hundred households. SLMs, by contrast, require significantly less computing power. A model with 1 billion parameters can often be fine-tuned (though rarely pretrained from scratch) on a single high-end GPU or even a powerful consumer-grade laptop. This reduced cost makes AI development feasible for startups, small businesses, and academic researchers who lack access to large-scale computing infrastructure.
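To put rough numbers on the cost gap, here is a back-of-the-envelope memory estimate. It is a sketch under stated assumptions, not a measurement: fp16 weights take 2 bytes per parameter, full fine-tuning with the Adam optimizer in fp32 takes about 16 bytes per parameter (weights, gradients, and two optimizer moment buffers), and activations and framework overhead are ignored, so real requirements are higher. The 175-billion figure is GPT-3's published parameter count.

```python
# Rough memory estimates for model weights and full fine-tuning.
# Assumptions: fixed bytes per parameter by numeric format; activations,
# KV caches, and framework overhead are deliberately ignored.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

# Full fine-tuning with Adam in fp32: weights + gradients + two moment buffers.
ADAM_FP32_BYTES_PER_PARAM = 4.0 * 4


def weight_memory_gb(n_params: float, fmt: str = "fp16") -> float:
    """Memory in GB (1e9 bytes) needed just to hold the weights."""
    return n_params * BYTES_PER_PARAM[fmt] / 1e9


def adam_finetune_memory_gb(n_params: float) -> float:
    """Approximate GB for full fine-tuning with fp32 Adam, excluding activations."""
    return n_params * ADAM_FP32_BYTES_PER_PARAM / 1e9


if __name__ == "__main__":
    for name, n in [("1B-parameter SLM", 1e9), ("GPT-3 (175B)", 175e9)]:
        print(
            f"{name}: fp16 weights ~{weight_memory_gb(n):.1f} GB, "
            f"int4 weights ~{weight_memory_gb(n, 'int4'):.1f} GB, "
            f"full Adam fine-tune ~{adam_finetune_memory_gb(n):,.0f} GB"
        )
```

Under these assumptions, a 1-billion-parameter model needs about 2 GB for fp16 weights and roughly 16 GB (plus activations) for full fine-tuning, which fits on a single high-end GPU; the same arithmetic gives GPT-3 about 350 GB for weights alone, far more than a typical single accelerator holds.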

Reduced Infrastructure Demands

Deploying LLMs typically involves cloud-based servers or specialized hardware, which can be expensive and complex to manage. SLMs, with their smaller footprint, can run on edge devices like smartphones, IoT devices, or low-cost servers. For instance, models like Google's MobileBERT or Hugging Face's DistilBERT can perform tasks such as text classification or sentiment analysis directly on a mobile device without needing constant internet connectivity. This capability is transformative for applications in remote areas or industries where data privacy is critical, as it allows AI to function offline and locally.
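Fitting a model onto a phone usually also means shrinking its weights. The post doesn't name a technique, so treat this as an illustrative assumption: one widely used option is post-training weight quantization. Below is a minimal pure-Python sketch of symmetric per-tensor int8 quantization over a flat list of float weights; production toolkits add refinements such as per-channel scales, calibration data, and optimized integer kernels.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w ~= scale * q, with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Guard against an all-zero tensor, where any scale works.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float values."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.42, -1.27, 0.005, 0.9, -0.33]  # hypothetical weights
    q, scale = quantize_int8(w)
    w_hat = dequantize_int8(q, scale)
    max_err = max(abs(a - b) for a, b in zip(w, w_hat))
    print("int8 codes:", q)
    print(f"scale={scale:.4f}, max reconstruction error={max_err:.4f}")
    # Stored as a real int8 tensor, each weight would take 1 byte instead of
    # 4 bytes (fp32), plus a single shared scale for the whole tensor.
```

The rounding error per weight is at most half the scale, which is why quantization usually costs little accuracy while cutting weight storage by 4x relative to fp32.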

Simplified Usability

SLMs are easier to fine-tune and deploy than LLMs, making them more approachable for developers with limited expertise. Fine-tuning an SLM for a specific task, such as summarizing medical records or generating customer support responses, requires less data and time compared to an LLM. Open-source SLMs, such as Hugging Face’s DistilBERT or Microsoft’s Phi-2, come with detailed documentation and community support, enabling developers to adapt these models to their needs without extensive machine learning knowledge. This democratization of AI tools empowers a broader range of professionals to integrate AI into their workflows.

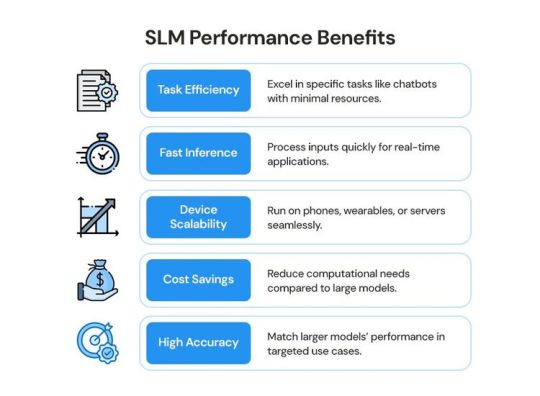

Performance Advantages of SLMs

While SLMs are smaller than LLMs, they are not necessarily less capable in practical scenarios. Their performance is often comparable to larger models in specific tasks, and they offer unique advantages in efficiency, speed, and scalability.

Task-Specific Efficiency

SLMs excel in targeted applications where a massive general-purpose model is overkill. For example, in customer service chatbots, an SLM can be fine-tuned to handle common queries like order tracking or returns with accuracy comparable to an LLM but at a fraction of the computational cost. Studies have shown that DistilBERT retains about 97% of BERT's performance on the GLUE language-understanding benchmark while using about 40% fewer parameters (66 million versus BERT-base's 110 million). This efficiency makes SLMs ideal for industries with well-defined AI needs, such as legal document analysis or real-time language translation.
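DistilBERT was produced by knowledge distillation, in which a small "student" model learns to match the softened output distribution of a larger "teacher". The sketch below shows only the soft-target part of a classic distillation loss for a single example, in pure Python; DistilBERT's actual training objective combined a term like this with masked-language-modeling and cosine-embedding losses, and the temperature and logits here are illustrative assumptions.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax with temperature scaling."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      temperature: float = 2.0) -> float:
    """Soft-target loss: T^2 * KL(teacher || student) on temperature-softened outputs.

    The T^2 factor is the usual convention for keeping gradient magnitudes
    comparable when the temperature changes.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * (math.log(pt) - math.log(ps))
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    return temperature ** 2 * kl


if __name__ == "__main__":
    teacher = [4.0, 1.0, -2.0]        # hypothetical teacher logits, 3 classes
    close_student = [3.5, 1.2, -1.5]  # student that roughly agrees
    far_student = [-1.0, 2.0, 3.0]    # student that disagrees
    print("close:", distillation_loss(close_student, teacher))
    print("far:  ", distillation_loss(far_student, teacher))
```

Training the student to minimize this loss pushes it toward the teacher's full probability distribution, not just its top answer, which is what lets a much smaller model keep most of the larger one's accuracy.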

Faster Inference Times

Inference—the process of generating predictions or responses—happens much faster with SLMs due to their compact size. For instance, an SLM running on a smartphone can process a user’s voice command in milliseconds, enabling real-time interactions. In contrast, LLMs often require server-side processing, introducing latency that can frustrate users. Faster inference is critical for applications like autonomous vehicles, where split-second decisions are necessary, or in healthcare, where real-time diagnostic tools can save lives.

Scalability Across Devices

The ability to deploy SLMs on a wide range of devices—from cloud servers to wearables—enhances their scalability. For example, an SLM embedded in a smartwatch can monitor a user’s health data and provide personalized feedback without relying on a cloud connection. This scalability also benefits businesses, as they can deploy SLMs across thousands of devices without incurring the prohibitive costs associated with LLMs. In IoT ecosystems, SLMs enable edge devices to collaborate, sharing lightweight models to perform distributed tasks like environmental monitoring or predictive maintenance.

Challenges and Limitations

Despite their advantages, SLMs have limitations that developers and organizations must consider. Their smaller size means they capture less general knowledge compared to LLMs. For instance, an SLM may struggle with complex reasoning tasks, such as generating detailed research papers or understanding nuanced cultural references, where LLMs excel. Additionally, while SLMs are easier to fine-tune, they may require more careful optimization to avoid overfitting, especially when training data is limited.
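One standard safeguard against overfitting when fine-tuning on limited data is early stopping: track the loss on a held-out validation set and stop once it stops improving. The post doesn't prescribe a method, so this is a minimal, framework-agnostic sketch; the `patience` and `min_delta` defaults and the loss values in the example are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EarlyStopping:
    """Stop training when validation loss fails to improve for `patience` epochs."""
    patience: int = 3
    min_delta: float = 0.0           # minimum decrease that counts as an improvement
    best_loss: float = float("inf")
    best_epoch: Optional[int] = None
    bad_epochs: int = 0

    def step(self, epoch: int, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch  # in real code, checkpoint the model weights here
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


if __name__ == "__main__":
    # Hypothetical validation losses: steady improvement, then overfitting sets in.
    val_losses = [0.92, 0.71, 0.63, 0.61, 0.64, 0.66, 0.70, 0.75]
    stopper = EarlyStopping(patience=3)
    for epoch, loss in enumerate(val_losses):
        if stopper.step(epoch, loss):
            print(f"Stopping at epoch {epoch}; best epoch was {stopper.best_epoch} "
                  f"(val loss {stopper.best_loss})")
            break
```

With these numbers the loop stops at epoch 6 and keeps the weights from epoch 3, the point where validation loss was lowest before the model began memorizing its small training set.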

Another challenge is the trade-off between size and versatility. SLMs are highly effective for specific tasks but lack the broad, multi-domain capabilities of LLMs. Organizations must weigh whether an SLM’s efficiency justifies its narrower scope or if an LLM’s flexibility is worth the added cost. Finally, while open-source SLMs are widely available, proprietary models may still require licensing fees, which could limit accessibility for some users.

Real-World Applications

SLMs are already making a significant impact across industries, demonstrating their value in practical settings. Here are a few examples:

Healthcare: SLMs power portable diagnostic tools that analyze patient data, such as symptom reports or clinical notes, directly on devices like tablets. This is particularly valuable in rural or underserved areas with limited internet access.

Education: Language tutoring apps use SLMs to provide real-time feedback on pronunciation or grammar, running entirely on a student’s smartphone.

Retail: E-commerce platforms deploy SLMs for personalized product recommendations, analyzing user behavior locally to protect privacy and reduce server costs.

IoT and Smart Homes: SLMs enable voice assistants in smart speakers to process commands offline, improving response times and reducing reliance on cloud infrastructure.

The Future of SLMs

The rise of SLMs signals a shift toward more inclusive and practical AI development. As research continues, we can expect SLMs to become even more efficient through advancements in model compression and training techniques. For example, ongoing work in federated learning—where models are trained across distributed devices—could further reduce the resource demands of SLMs, making them accessible to even more users.
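The federated learning idea above can be sketched with Federated Averaging (FedAvg), one common aggregation scheme: each device trains on its own data and sends back only updated weights, and a server averages them, weighting each device by how many examples it trained on. This pure-Python sketch assumes every model is a flat list of floats of the same length, and the client updates are hypothetical; real systems add client sampling, secure aggregation, and compressed communication.

```python
from typing import List, Sequence, Tuple


def fed_avg(client_updates: Sequence[Tuple[List[float], int]]) -> List[float]:
    """Average client weight vectors, weighted by each client's local example count."""
    if not client_updates:
        raise ValueError("need at least one client update")
    total_examples = sum(n for _, n in client_updates)
    if total_examples <= 0:
        raise ValueError("total example count must be positive")
    dim = len(client_updates[0][0])
    global_weights = [0.0] * dim
    for weights, n_examples in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        share = n_examples / total_examples
        for i, w in enumerate(weights):
            global_weights[i] += share * w
    return global_weights


if __name__ == "__main__":
    # Hypothetical updates from three devices: (locally trained weights, #examples).
    updates = [
        ([0.10, 0.50], 100),
        ([0.30, 0.40], 300),
        ([0.20, 0.60], 100),
    ]
    print(fed_avg(updates))
```

Only weight vectors travel to the server; the raw data stays on each device, which is what makes the approach attractive for the privacy-sensitive, on-device settings described in this post.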

Moreover, the open-source community is driving innovation in SLMs, with platforms like Hugging Face and GitHub hosting a growing number of models tailored to specific industries. This collaborative approach ensures that SLMs will continue to evolve, addressing niche use cases while maintaining high performance.

Conclusion

Small Language Models are revolutionizing AI by making it more accessible and efficient without sacrificing performance in many practical applications. Their lower computational costs, reduced infrastructure demands, and simplified usability open the door for small businesses, individual developers, and underserved communities to adopt AI technologies. While they may not match the versatility of larger models, SLMs offer a compelling balance of speed, scalability, and task-specific accuracy. As industries increasingly prioritize efficiency and inclusivity, SLMs are poised to play a central role in the future of AI, proving that bigger isn’t always better.


Get ready for a game-changer in the world of Artificial Intelligence (AI)! Microsoft has unveiled Phi-3, its latest compact open-source language model. This development marks a significant leap forward from its predecessor, Phi-2, released in December 2023.

Microsoft Unveils Phi-3

Breaking New Ground: Phi-3's Advantages

Phi-3 boasts several improvements over its predecessor. Here's a breakdown of its key advancements:

Enhanced Training Data: Microsoft trained Phi-3 on a larger, more robust dataset, allowing it to understand and respond to complex queries with greater accuracy.

Increased Parameter Count: Phi-3 has more parameters than Phi-2 (3.8 billion versus 2.7 billion), giving it a larger neural network that can tackle more intricate tasks and a broader spectrum of topics.

Efficiency Powerhouse: Despite its small size, Phi-3 delivers performance comparable to much larger models such as Mixtral 8x7B and GPT-3.5, according to benchmark results reported by Microsoft. This opens doors for running AI models on devices with limited processing power, potentially changing how we interact with technology on smartphones and other mobile devices.

Unveiling the Details: Exploring Phi-3's Capabilities

Phi-3 packs a punch within its compact frame. Here's a closer look at its technical specifications:

Training data: 3.3 trillion tokens
Parameter count: 3.8 billion parameters

While the raw numbers might not mean much to everyone, they describe a compact neural network that can still handle complex tasks. This efficiency makes Phi-3 a fascinating development in the LLM landscape.

Accessibility for All: Microsoft has made Phi-3 readily available for exploration and experimentation. Currently, you can access Phi-3 through two prominent platforms:

Microsoft Azure: Azure, Microsoft's cloud computing service, provides access to Phi-3 for those seeking a robust platform for testing and development.

Ollama: Ollama, a lightweight framework for running AI models locally, also offers Phi-3, making it accessible to users with limited computational resources.

A Glimpse into the Future: The 3.8-billion-parameter model described above is Phi-3-mini, the smallest member of the Phi-3 family. Microsoft's report also describes two larger variants, Phi-3-small (7 billion parameters) and Phi-3-medium (14 billion parameters), giving developers a range of size and capability trade-offs. Phi-3-mini's small footprint makes it the natural candidate for resource-constrained devices, and a demo showcasing its efficiency was shared on Twitter by Sebastien Bubeck, further fueling excitement about what small models can do.

A Critical Look: What to Consider Regarding Phi-3

While Phi-3's potential is undeniable, it's important to maintain a critical perspective. Here are some points to consider:

Pre-print Paper: The claims surrounding Phi-3's capabilities are based on a pre-print paper published on arXiv, a platform that doesn't involve peer review. This means the scientific community hasn't yet independently validated the model's performance.

Open-Source Future? Microsoft's commitment to making AI more accessible through Phi-3 and its variants is commendable. Licensing matters for anyone building on the model: Phi-3-mini has been released under the MIT license, which permits both commercial and academic use.

FAQs:

Q: What is Phi-3?
A: Phi-3 is Microsoft's latest family of AI language models, featuring a compact design and impressive performance.

Q: How can developers access Phi-3?
A: Phi-3 is available through Microsoft Azure and Ollama, giving developers easy access to its capabilities.

Q: What sets Phi-3 apart from other AI models?
A: Phi-3 combines a compact design, an enhanced training dataset, and strong benchmark performance, making it suitable for a wide range of applications.
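For readers who want to try the local route, here is a minimal sketch that talks to Ollama's local REST API using only the Python standard library. It assumes Ollama is installed and running on its default address (`http://localhost:11434`) and that the model has already been pulled under the name `phi3` (for example with `ollama pull phi3`); the function names are hypothetical helpers, not part of Ollama.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_generate_request(prompt: str, model: str = "phi3") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "phi3", timeout: float = 120.0) -> str:
    """Send the request and return the generated text (needs a running Ollama server)."""
    req = build_generate_request(prompt, model)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False, Ollama replies with one JSON object whose
    # "response" field holds the full completion.
    return body["response"]
```

Usage, once the server is running: `print(generate("Explain in one sentence what a small language model is."))`. Setting `"stream": False` trades token-by-token output for a simpler single response, which keeps the sketch short.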

#AIAccessibility #artificialintelligence #cloudcomputing #LargeLanguageModelLLM #machinelearning #MicrosoftPhi3 #MicrosoftUnveilsPhi3 #natural #OpensourceAImodel #Phi3medium #Phi3mini


Want to harness the power of AI without writing a single line of code? Explore the top no-code AI tools that make innovation accessible to everyone. 🔍 Read now: https://aimartz.com/blog/no-coding-ai-tools/ #NoCodeAI #AIAccessibility #SmartTools #aimartz #aimartz.com


Voice-Activated AI: The Next Frontier in Machine Learning Technology

Voice technology is rapidly reshaping the way we interact with machines. From voice searches and smart home commands to real-time translations and accessibility tools, voice-activated AI is becoming an integral part of our digital lives. It's not just about convenience anymore—it's about creating frictionless, natural interactions powered by cutting-edge machine learning.

At the heart of voice-activated AI lies speech recognition, which has evolved significantly through deep learning. Modern AI models can now detect regional accents, filter out background noise, and interpret tone. This makes voice-enabled interfaces not only more accurate but also more adaptive and personal. It's a major leap from the clunky voice systems of the past.

One key driver behind this evolution is the integration of Natural Language Understanding (NLU). When combined with speech recognition, NLU allows AI systems to understand context, intent, and emotion behind spoken words. This opens the door for real-time problem solving, interactive storytelling, and voice-controlled services that respond intuitively to human needs.

Voice AI is also driving accessibility forward. Individuals with visual impairments, physical disabilities, or literacy challenges can now use voice commands to access information, control devices, and complete tasks independently. This democratization of technology is one of the most empowering aspects of AI today.

Businesses are increasingly adopting voice technology to streamline operations and engage customers. From AI-powered IVR systems in call centers to voice-based shopping assistants in retail, the voice interface is becoming a vital channel. It creates faster interactions, reduces screen dependency, and adds a human-like layer to digital services.

The future promises even greater integration. Voice biometrics may soon replace passwords, voice-commanded vehicles will become more common, and virtual companions will carry out complex tasks through natural dialogue. With continuous learning, voice AI will adapt to users over time—learning preferences, personalities, and even humor.

Of course, this progress must be matched with robust privacy protocols. Since voice data can be highly personal, ethical handling and secure storage are essential. Developers must be transparent about data collection and give users control over what's recorded and stored.

If you're looking to incorporate voice-activated AI into your product or service, explore professional AI solutions. As voice becomes the new interface, the future of machine learning will be spoken: clearly, naturally, and intelligently.

#VoiceAI #SpeechRecognition #MachineLearning #VoiceTechnology #FutureOfAI #SmartAssistants #AIAccessibility #VoiceInterface #ExcelsiorTech #NaturalLanguageProcessing #TechForGood


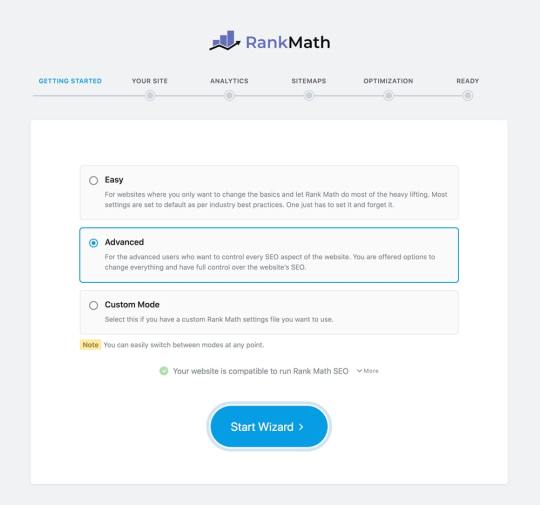

A feature-rich user-friendly SEO plugin, Rank Math

Rank Math, a feature-rich and user-friendly SEO plugin, helps with basic on-page SEO (improvements to your web pages designed to boost rankings), such as setting up title tags and meta descriptions.

Contents:
What is Rank Math?
Key Features
What you can do with Rank Math
Search Engine Optimized Content with Rank Math AI
Access to 125+ Supercharged Prompts
…
