#AWSSDK

Explore tagged Tumblr posts

Text

Centralizing AWS Root access for AWS Organizations customers

Security teams will be able to centrally manage AWS root access for member accounts in AWS Organizations with a new feature being introduced by AWS Identity and Access Management (IAM). Now, managing root credentials and carrying out highly privileged operations is simple.

Managing root user credentials at scale

Historically, accounts on Amazon Web Services (AWS) were created using root user credentials, which granted unfettered access to the account. Despite its strength, this AWS root access presented serious security vulnerabilities.

The root user of every AWS account needed to be protected by implementing additional security measures like multi-factor authentication (MFA). These root credentials had to be manually managed and secured by security teams. Credentials had to be stored safely, rotated on a regular basis, and checked to make sure they adhered to security guidelines.

This manual method became laborious and error-prone as clients’ AWS systems grew. For instance, it was difficult for big businesses with hundreds or thousands of member accounts to uniformly secure AWS root access for every account. In addition to adding operational overhead, the manual intervention delayed account provisioning, hindered complete automation, and raised security threats. Unauthorized access to critical resources and account takeovers may result from improperly secured root access.

Additionally, security teams had to collect and use root credentials if particular root actions were needed, like unlocking an Amazon Simple Storage Service (Amazon S3) bucket policy or an Amazon Simple Queue Service (Amazon SQS) resource policy. This only made the attack surface larger. Maintaining long-term root credentials exposed users to possible mismanagement, compliance issues, and human errors despite strict monitoring and robust security procedures.

Security teams started looking for a scalable, automated solution. They required a method to programmatically control AWS root access without requiring long-term credentials in the first place, in addition to centralizing the administration of root credentials.

Centrally manage root access

AWS solve the long-standing problem of managing root credentials across several accounts with the new capability to centrally control root access. Two crucial features are introduced by this new capability: central control over root credentials and root sessions. When combined, they provide security teams with a safe, scalable, and legal method of controlling AWS root access to all member accounts of AWS Organizations.

First, let’s talk about centrally managing root credentials. You can now centrally manage and safeguard privileged root credentials for all AWS Organizations accounts with this capability. Managing root credentials enables you to:

Eliminate long-term root credentials: To ensure that no long-term privileged credentials are left open to abuse, security teams can now programmatically delete root user credentials from member accounts.

Prevent credential recovery: In addition to deleting the credentials, it also stops them from being recovered, protecting against future unwanted or unauthorized AWS root access.

Establish secure accounts by default: Using extra security measures like MFA after account provisioning is no longer necessary because member accounts can now be created without root credentials right away. Because accounts are protected by default, long-term root access security issues are significantly reduced, and the provisioning process is made simpler overall.

Assist in maintaining compliance: By centrally identifying and tracking the state of root credentials for every member account, root credentials management enables security teams to show compliance. Meeting security rules and legal requirements is made simpler by this automated visibility, which verifies that there are no long-term root credentials.

Aid in maintaining compliance By systematically identifying and tracking the state of root credentials across all member accounts, root credentials management enables security teams to prove compliance. Meeting security rules and legal requirements is made simpler by this automated visibility, which verifies that there are no long-term root credentials. However, how can it ensure that certain root operations on the accounts can still be carried out? Root sessions are the second feature its introducing today. It provides a safe substitute for preserving permanent root access.

Security teams can now obtain temporary, task-scoped root access to member accounts, doing away with the need to manually retrieve root credentials anytime privileged activities are needed. Without requiring permanent root credentials, this feature ensures that operations like unlocking S3 bucket policies or SQS queue policies may be carried out safely.

Key advantages of root sessions include:

Task-scoped root access: In accordance with the best practices of least privilege, AWS permits temporary AWS root access for particular actions. This reduces potential dangers by limiting the breadth of what can be done and shortening the time of access.

Centralized management: Instead of logging into each member account separately, you may now execute privileged root operations from a central account. Security teams can concentrate on higher-level activities as a result of the process being streamlined and their operational burden being lessened.

Conformity to AWS best practices: Organizations that utilize short-term credentials are adhering to AWS security best practices, which prioritize the usage of short-term, temporary access whenever feasible and the principle of least privilege.

Full root access is not granted by this new feature. For carrying out one of these five particular acts, it offers temporary credentials. Central root account management enables the first three tasks. When root sessions are enabled, the final two appear.

Auditing root user credentials: examining root user data with read-only access

Reactivating account recovery without root credentials is known as “re-enabling account recovery.”

deleting the credentials for the root user Eliminating MFA devices, access keys, signing certificates, and console passwords

Modifying or removing an S3 bucket policy that rejects all principals is known as “unlocking” the policy.

Modifying or removing an Amazon SQS resource policy that rejects all principals is known as “unlocking a SQS queue policy.”

Accessibility

With the exception of AWS GovCloud (US) and AWS China Regions, which do not have root accounts, all AWS Regions offer free central management of root access. You can access root sessions anywhere.

It can be used via the AWS SDK, AWS CLI, or IAM console.

What is a root access?

The root user, who has full access to all AWS resources and services, is the first identity formed when you create an account with Amazon Web Services (AWS). By using the email address and password you used to establish the account, you can log in as the root user.

Read more on Govindhtech.com

#AWSRoot#AWSRootaccess#IAM#AmazonS3#AWSOrganizations#AmazonSQS#AWSSDK#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech

2 notes

·

View notes

Photo

DockerとMinioでAWS SDK for C++の開発環境を構築する http://bit.ly/2IdT4hx

AWS SDK for C++を利用した開発を行うのにDockerとMinioを利用して開発環境を用意してみました。

環境構築

GitHubに設定と実装をアップしていますので、よければご参考ください。 https://github.com/kai-kou/aws-sdk-cpp-on-docker

DockerfileでAWS SDK for C++がインストールされたイメージをビルドできるようにします。 ベースとなるイメージにubuntu 指定していますが、他に変えてもおkです。お好みで。

SDKのビルド時に-DBUILD_ONLY を指定しないとSDK全体がビルドされることになり、おそらく数時間かかりますので、ご注意ください。

Dockerfile

FROM ubuntu:18.04 RUN apt-get update && \ apt-get install -y git build-essential cmake clang RUN apt-get update && \ apt-get install -y libcurl4-openssl-dev libssl-dev uuid-dev zlib1g-dev libpulse-dev RUN git clone https://github.com/aws/aws-sdk-cpp.git && \ mkdir aws-sdk-cpp-build && \ cd aws-sdk-cpp-build && \ cmake -DBUILD_ONLY="s3" ../aws-sdk-cpp && \ make && \ make install

AWS SDK for C++を利用してS3へアクセスするのに、ローカル環境としてMinioを利用しています。 Minioについては下記をご参考ください。

S3互換のオブジェクトストレージ MinioをDocker Composeで利用する – Qiita https://cloudpack.media/46077

docker-compose.yaml

version: '3.3' services: dev: build: . volumes: - type: bind source: ./src target: /cpp-dev minio: image: minio/minio volumes: - type: bind source: ./data target: /export ports: - "9000:9000" environment: MINIO_ACCESS_KEY: hogehoge MINIO_SECRET_KEY: hogehoge command: server /export

上記ファイルを含むファイル構成は以下のようになります。

> tree . ├── Dockerfile ├── data │ └── hoge ├── docker-compose.yml └── src ├── CMakeLists.txt ├── build └── main.cpp

実装

put_object メソッドでS3(Minio)へファイルをPUTしています。 Minioを利用する都合、endpointOverride を利用しています。 詳細は下記をご参考ください。

S3互換のオブジェクトストレージ MinioにAWS SDK for C++でアクセスするのにハマるポイント – Qiita https://cloudpack.media/46084

main.cpp

#include <iostream> #include <aws/core/Aws.h> #include <aws/core/auth/AWSCredentialsProvider.h> #include <aws/core/auth/AWSAuthSigner.h> #include <aws/s3/S3Client.h> #include <aws/s3/model/PutObjectRequest.h> void put_object(Aws::String key_name) { const Aws::String endpoint = "minio:9000"; const Aws::String bucket_name = "hoge"; auto input_data = Aws::MakeShared<Aws::StringStream>(""); *input_data << "hoge!" << '\n'; Aws::S3::Model::PutObjectRequest object_request; object_request.WithBucket(bucket_name) .WithKey(key_name) .SetBody(input_data); Aws::SDKOptions options; Aws::InitAPI(options); // Minioを利用する場合、ちょっと面倒 Aws::Auth::AWSCredentials cred = Aws::Auth::EnvironmentAWSCredentialsProvider().GetAWSCredentials(); Aws::Client::ClientConfiguration clientConfig; if (!endpoint.empty()) { clientConfig.scheme = Aws::Http::Scheme::HTTP; clientConfig.endpointOverride = endpoint; } auto const s3_client = Aws::S3::S3Client(cred, clientConfig, Aws::Client::AWSAuthV4Signer::PayloadSigningPolicy::Never, false); // Minioを利用しない場合、下記でおk // Aws::S3::S3Client s3_client(clientConfig); auto const outcome = s3_client.PutObject(object_request); if (outcome.IsSuccess()) { std::cout << key_name << " Done!" << std::endl; } else { std::cout << key_name << " Error: "; std::cout << outcome.GetError().GetMessage().c_str() << std::endl; } Aws::ShutdownAPI(options); } int main() { put_object("hoge1.txt"); return 0; }

CMakeLists.txt でAWS SDK for C++が利用できるように指定します。

CMakeLists.txt

cmake_minimum_required(VERSION 3.5) find_package(AWSSDK REQUIRED COMPONENTS s3) add_definitions(-DUSE_IMPORT_EXPORT) add_executable(main main.cpp) target_link_libraries(main ${AWSSDK_LINK_LIBRARIES})

Dockerコンテナの立ち上げ

> docker-compose build > docker-compose up -d minio > docker-compose run dev bash #

Minioの準備

docker-compose up -d minio でMinioのコンテナが立ち上がったらhttp://localhost:9000 にブラウザでアクセスしてバケットhoge を作成します。作成後、Bucket PolicyをPrefix:* にRead and Write で設定します。

設定の詳細は下記をご参考ください。

S3互換のオブジェクトストレージ MinioをDocker Composeで利用する – Qiita https://cloudpack.media/46077

ビルドして実行する

コンテナ内

# cd /cpp-dev/build # cmake .. # cmake --build . # ./main hoge1.txt Done!

問題なく実行できました。 Minioが利用するディレクトリにファイルが保存されたのも確認できます。

> tree data/hoge data/hoge/ └── hoge1.txt > cat data/hoge/hoge1.txt hoge!

やったぜ。

参考

aws/aws-sdk-cpp: AWS SDK for C++ https://github.com/aws/aws-sdk-cpp

S3互換のオブジェクトストレージ MinioをDocker Composeで利用する – Qiita https://cloudpack.media/46077

S3互換のオブジェクトストレージ MinioにAWS SDK for C++でアクセスするのにハマるポイント – Qiita https://cloudpack.media/46084

元記事はこちら

「DockerとMinioでAWS SDK for C++の開発環境を構築する」

April 09, 2019 at 04:00PM

0 notes

Text

Redshift Amazon With RDS For MySQL zero-ETL Integrations

With the now broadly available Amazon RDS for MySQL zero-ETL interface with Amazon Redshift, near real-time analytics are possible.

For comprehensive insights and the dismantling of data silos, zero-ETL integrations assist in integrating your data across applications and data sources. Petabytes of transactional data may be made accessible in Redshift Amazon in only a few seconds after being written into Amazon Relational Database Service (Amazon RDS) for MySQL thanks to their completely managed, no-code, almost real-time solution.

- Advertisement -

As a result, you may simplify data input, cut down on operational overhead, and perhaps even decrease your total data processing expenses by doing away with the requirement to develop your own ETL tasks. They revealed last year that Amazon DynamoDB, RDS for MySQL, and Aurora PostgreSQL-Compatible Edition were all available in preview as well as the general availability of zero-ETL connectivity with Redshift Amazon for Amazon Aurora MySQL-Compatible Edition.

With great pleasure, AWS announces the general availability of Amazon RDS for MySQL zero-ETL with Redshift Amazon. Additional new features in this edition include the option to setup zero-ETL integrations in your AWS Cloud Formation template, support for multiple integrations, and data filtering.

Data filtration

The majority of businesses, regardless of size, may gain from include filtering in their ETL tasks. Reducing data processing and storage expenses by choosing just the portion of data required for replication from production databases is a common use case. Eliminating personally identifiable information (PII) from the dataset of a report is an additional step. For instance, when duplicating data to create aggregate reports on recent patient instances, a healthcare firm may choose to exclude sensitive patient details.

In a similar vein, an online retailer would choose to provide its marketing division access to consumer buying trends while keeping all personally identifiable information private. On the other hand, there are other situations in which you would not want to employ filtering, as when providing data to fraud detection teams who need all of the data in almost real time in order to draw conclusions. These are just a few instances; We urge you to explore and find more use cases that might be relevant to your company.

- Advertisement -

Zero-ETL Integration

You may add filtering to your zero-ETL integrations in two different ways: either when you construct the integration from scratch, or when you alter an already-existing integration. In any case, this option may be found on the zero-ETL creation wizard’s “Source” stage.

Entering filter expressions in the format database.table allows you to apply filters that include or exclude databases or tables from the dataset. Multiple expressions may be added, and they will be evaluated left to right in sequence.

If you’re changing an existing integration, Redshift Amazon will remove tables that are no longer included in the filter and the new filtering rules will take effect once you confirm your modifications.

Since the procedures and ideas are fairly similar, we suggest reading this blog article if you want to dig further. It goes into great detail on how to set up data filters for Amazon Aurora zero-ETL integrations.

Amazon Redshift Data Warehouse

From a single database, create several zero-ETL integrations

Additionally, you can now set up connectors to up to five Redshift Amazon data warehouses from a single RDS for MySQL database. The only restriction is that you can’t add other integrations until the first one has successfully completed its setup.

This enables you to give other teams autonomy over their own data warehouses for their particular use cases while sharing transactional data with them. For instance, you may use this in combination with data filtering to distribute distinct data sets from the same Amazon RDS production database to development, staging, and production Redshift Amazon clusters.

One further intriguing use case for this would be the consolidation of Redshift Amazon clusters via zero-ETL replication to several warehouses. Additionally, you may exchange data, train tasks in Amazon SageMaker, examine your data, and power your dashboards using Amazon Redshift materialized views.

In summary

You may duplicate data for near real-time analytics with RDS for MySQL zero-ETL connectors with Redshift Amazon, eliminating the need to create and maintain intricate data pipelines. With the ability to implement filter expressions to include or exclude databases and tables from the duplicated data sets, it is already widely accessible. Additionally, you may now construct connections from many sources to combine data into a single data warehouse, or set up numerous connectors from the same source RDS for MySQL database to distinct Amazon Redshift warehouses.

In supported AWS Regions, this zero-ETL integration is available for Redshift Amazon Serverless, Redshift Amazon RA3 instance types, and RDS for MySQL versions 8.0.32 and later.

Not only can you set up a zero-ETL connection using the AWS Management Console, but you can also do it with the AWS Command Line Interface (AWS CLI) and an official AWS SDK for Python called boto3.

Read more on govindhtech.com

#RedshiftAmazon#RDS#zeroETLIntegrations#AWSCloud#sdk#aws#Amazon#AWSSDK#MySQLdatabase#DataWarehouse#data#AmazonRedshift#zeroETL#realtimeanalytics#PostgreSQL#news#AmazonSageMaker#technology#technews#govindhtech

0 notes

Text

Mastering Amazon RDS for Db2: Your Essential Guide

IBM and AWS have partnered to deliver Amazon Relational Database Service (Amazon RDS) for Db2, a fully managed database engine on AWS infrastructure.

IBM makes enterprise-grade relational database management system (RDBMS) Db2. It has powerful data processing, security, scalability, and support for varied data types. Due to its dependability and performance, enterprises use Db2 to manage data in diverse applications and handle data-intensive tasks. Db2 is based on IBM’s 1970s data storage and SQL pioneering. It was first sold for mainframes in 1983 and later adapted to Linux, Unix, and Windows. In all verticals, Db2 powers thousands of business-critical applications.

Amazon RDS for Db2 lets you construct a Db2 database with a few clicks in the AWS Management Console, one command in the AWS CLI, or a few lines of code in the AWS SDKs. AWS handles infrastructure heavy lifting, freeing you to optimize application schema and queries.

The Advanced Features of Amazon RDS for Db2

Let me briefly summarize Amazon RDS’s features for newcomers or those coming from on-premises Db2

Your on-premises Db2 database is available on Amazon RDS. Existing apps will reconnect to RDS for Db2 without code changes.

Fully managed infrastructure powers the database. Server provisioning, package installation, patch installation, and infrastructure operation are not required.

The database is thoroughly handled. Installation, minor version upgrades, daily backup, scaling, and high availability are AWS responsibility.

Infrastructure can be scaled as needed. Stop and restart the database to modify the hardware to fulfill performance requirements or use last-generation hardware.

Amazon RDS storage types provide fast, predictable, and consistent I/O performance. The system can automatically scale storage for new or unpredictable demands.

Amazon RDS automates backups and lets you restore them to a new database with a few clicks.

Highly available architectures are deployed by Amazon RDS. Amazon RDS synchronizes data to a standby database in another Availability Zone. In a Multi-AZ deployment, Amazon RDS immediately switches to the standby instance and routes requests without modifying the database endpoint DNS name. Zero data loss and minimal downtime occur during this transfer.

Amazon RDS uses AWS’s secure infrastructure. It uses TLS and AWS KMS keys to encrypt data in transit and at rest. This lets you implement FedRAMP, GDPR, HIPAA, PCI, and SOC-compliant workloads.

In numerous AWS compliance programs, third-party auditors evaluate Amazon RDS security and compliance. You can view the entire list of validations.

AWS Database Migration Service or native Db2 utilities like restore and import can move your on-premises Db2 database to Amazon RDS. AWS DMS lets you migrate databases in one operation or constantly as your applications update the source database until you set a cut off.

Amazon RDS supports Amazon RDS Enhanced Monitoring, Amazon CloudWatch, and IBM Data Management Console or IBM DSMtop for database instance monitoring.

Launching the new Amazon RDS for Db2

Create and connect to a Db2 database using IBM’s standard tool. It assume most readers are IBM Db2 users unfamiliar with Amazon RDS.

Create a Db2 database. Select Create database on the Amazon RDS page of the AWS Management Console.

Amazon RDS allows many database engines, therefore You chose Db2.

Then select IBM Db2 Standard and Engine 11.5.9. Amazon RDS automatically patches databases if required.

You choose Production. Amazon RDS defaults to high availability and fast, consistent performance.

Under Settings, Simply name your RDS instance (not Db2 catalog) and select the master username and password.

Please choose the database node type in instance configuration. This determines the virtual server’s vCPUs and memory. IBM Db2 Standard instances can have 32 vCPUs and 128 GiB RAM, depending on application. IBM Db2 Advanced supports 128 vCPUs and 1 TiB RAM. Price is directly affected by this trait.

Storage: Amazon Elastic Block Store (Amazon EBS) volume type, size, IOPS, and throughput.

Then chose Connectivity’s VPC for the database. Please select No under Public access to restrict database access to the local network. You can’t justify choosing Yes.

Select the VPC security group. Security groups restrict database access by IP and TCP port. Open TCP 50000 in a security group to allow Db2 database connections.

It default all other parameters. Click Additional setup at the bottom. Start with a database name. You must restore a Db2 database backup on that instance if not identified here.

Amazon RDS automated backup parameters are here. Choose a backup retention period.

Select Create database and accept defaults.

A few minutes later, your database appears.

The database instance Endpoint DNS name connects to a Linux system on the same network.

A sample dataset and script are downloaded from the popular Db2Tutorial website after connecting. The scripts run on the fresh database.

Amazon RDS has no database connection or use requirements. Using standard Db2 scripts.

A Db2 license is also needed for Amazon RDS for Db2. Start a Db2 instance with your IBM customer ID and site number.

Attach a custom DB parameter group to your database instance at launch. DB parameter groups store engine configuration variables for several DB instances. Your IBM Site Number and IBM Customer Number (rds.ibm_customer_id) are unique to IBM Db2 licenses.

Find your site number by asking IBM sales for a recent PoE, invoice, or sales order. Your site number should be on all documents.

Pricing, availability

RDS for Db2 is accessible in all AWS regions except China and GovCloud.

Amazon RDS has no upfront payments or subscriptions and charges on demand. AWS only charge by the hour when the database is running, plus the GB per month of database and backup storage and IOPS you utilize. The Amazon RDS for Db2 pricing page lists regional pricing. You must bring your own Db2 license for Amazon RDS for Db2.

Those familiar with Amazon RDS will be pleased to see a new database engine for application developers. Amazon RDS’s simplicity and automation will appeal to on-premises users.

Read on Govindhtech.com

#Amazon#RDS#Db2#IBMandAWS#SQL#database#AWSSDKs#AWSCLI#AmazonCloudWatch#IBMDataManagementConsole#technews#technology#govinhdtech

0 notes

Photo

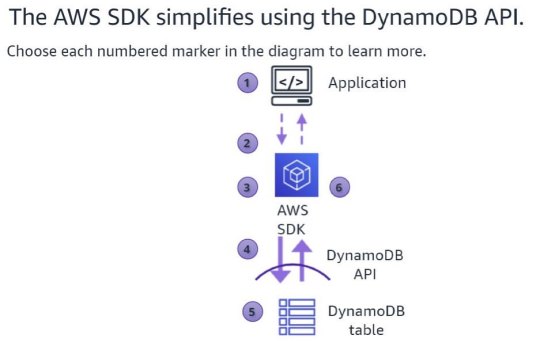

AWS SDKs and Tools Create , Configure , and implement scalable mobile and web apps powered by AWS , or leverage Internet of Things ( IoT) Device SDKs to access the AWA IoT platform…… #10xweb #dotcomrajawebspace #dotcomraja #saiweb #ignoustudent #awssdk #IGNOU #awssdk #awstraining #dotcomyuga #IoT #ios #arduino #AWS (at Saiweb.ch) https://www.instagram.com/p/CPpvya2D5De/?utm_medium=tumblr

#10xweb#dotcomrajawebspace#dotcomraja#saiweb#ignoustudent#awssdk#ignou#awstraining#dotcomyuga#iot#ios#arduino#aws

0 notes

Photo

AWS SDKs and Tools Create , Configure , and implement scalable mobile and web apps powered by AWS , or leverage Internet of Things ( IoT) Device SDKs to access the AWA IoT platform…… #10xweb #dotcomrajawebspace #dotcomraja #saiweb #ignoustudent #awssdk #IGNOU #awssdk #awstraining #dotcomyuga #IoT #ios #arduino #AWS https://www.instagram.com/p/CPpvtKAjk-M/?utm_medium=tumblr

#10xweb#dotcomrajawebspace#dotcomraja#saiweb#ignoustudent#awssdk#ignou#awstraining#dotcomyuga#iot#ios#arduino#aws

0 notes