#ChatGPT is TERRIBLE at math!


Text

They're making us do AI Week at my school next week. We're supposed to come up with activities that will allow (force) the students to use ChatGPT to help them solve problems. I wanna throw up.

If I ever have kids I swear I'm homeschooling them. The ministry of education is just another puppet of the western powers that are actively trying to make everyone stop thinking for themselves.

#anti ai#mispearl ramblings#teacher life#ChatGPT is TERRIBLE at math!#I've tried asking it to create problem solving questions for me before and they never make sense#and it gives wrong answers when asked to solve them#what kind of activity are the students supposed to use it for?

14 notes


Text

21.02.23

im in a terrible mood today!!!!

first of all because i stink! i don't know why. maybe it's hormonal or i ate something or idk. but i smell so bad! not like sweat but like a general bad odor like what's going on????

secondly, the master's degree bullshit is pissing me off! i spent the whole day writing a cover letter for this shit and i hate it. thank god for chatgpt but it doesn't help as much as i hoped it could. i mean i still have to come up with dumb shit about myself and sound enthusiastic. and i hate it!!!

and then i have no idea how to even apply! everything is online, i don't understand shit. the only way to contact people is by email and i hate emails. why can't i just call or talk to someone in person so that they could explain things to me and show how it's done? apparently i need to reapply to my uni as if i were a new student. but when i enter my student number an error message pops up like "you're already enrolled". like yeah, but it said i had to do it again! then there's also a button that says "id like to reenroll" but it sends you to a fucking contact form that says "we will reply within 3 business days". so i left a message like "hello! id like to reenroll please!". what am i supposed to do???

and then, cherry on top, i was like okay i'll deal with this reenrollment shit later, let's try to apply for the specific master's program through their online application thing. and ive already talked to a couple of people about my case and asked what i should do about the english exam bc im fluent but i don't have any like technical proof of it. and everyone was like yeahh it's fineee, you don't need a certificate if you're fluent. so i go on their online application thing and i literally can't go to the next page if i don't upload the english certificate! it says "if english is your mother tongue this is not mandatory" and at the same time when i want to go to the next page it says "this field is mandatory". so what do i do? upload a blank page?? oh and wait for it! i need another certificate no one fucking told me about! guess what it is!!! a fucking iq test!!! okay not like THE iq test but a thing called gre. and i googled what it is and it's this like fucking analytical reasoning test or whatever. and it's also racist.

and im sorry, not to be all like "i have 999 iq" but i do maths okay..?? what more proof do you need that im not stupid? qUanTiTaTiVe rEaSoNiNg how about you quantishut the fuck up!?!!!? like im smart enough to do maths but not smart enough to do a fucking economics degree when economics is basically astrology for straight people.??? like give me a break. i already declined taking an actual iq test because iq is racist and i don't want to partake in racist things. and now there's this fucking gre bullshit. and it costs 200 bucks to take!!!! for what????

anyway i sent an email like "umm i am not taking any expensive ass exams um no thanks". like dude why can't i just go to the fucking manager of the faculty or whoever the fuck and give them my cover letter and ask my questions? noooo i have to write fucking emails and fill in their fucking contact forms. like all of this could be solved in a 5 minute conversation.

also, third thing, i went to see the students union today because i have a bone to pick with my functional analysis professor. that's a whole different story. but anyway, i wanted to know if anything could be done about that. like can i possibly refuse the grade i got bc it was unfair? huge respect to the union btw, i love them, they occupied the cafeteria last year and now we have cheap lunches, it's great. and so yeah i went to see them to ask for advice and they guided me quite well but they also asked how everyone else felt about the exam in question. and i would love to know but no one in my class wanted to talk to me about it! i sent a message today, no one replied. and then this evening i insisted and guess what! one guy replied to my message like "not to be mean, but the exam was easy".

like broooo if you're a fucking functional analysis genius good for you!!! do you want a medal or what?? the guy is a child prodigy and with all due respect, i didn't ask his opinion! like good for you if you found it easy but when you're the exception to the rule maybe you should just like not ruin it for everyone! and what's with the "not to be mean"??? why did he have to phrase it like that? like he could've just said that he found it easy and that's it. now it sounds mean when you say it like that!

anyway, im stinky and angry and all i want to do is first of all take a shower but also cuddle with my ex and not think about anything and be in love and not have to worry about uni and degrees and functional analysis and all this crap. </3

0 notes

Text

Time to be controversial:

So I'm in school right now at an internationally recognized, accredited college. I'm taking an intro to computer programming class and an introduction to Python. The only prerequisite for this class was knowing how to turn on a computer.

On the 1st day of class, we were told that instead of spending over $100 on a textbook, we were supposed to choose an AI search engine, feed it prompts from the teacher-provided PowerPoints, and build our own textbook as the class went along.

Needless to say, the class was stunned into silence.

He proceeded to tell us that we were his guinea pig class to test this out and he expected us all to get 100% A's because everything we were graded on (HW, quizzes, exams, everything) was open note, open book, open internet.

Now, I've been following the discussion of AI and its capabilities for a while in several of the industries it has been affecting (art, code, hiring/HR, professional writing), and every single one of them has said that AI is not reliable and is quickly approaching enshittification. Like a snake eating its tail, it is slowly corrupting its own data by feeding itself its own incorrect data.

Coming from a fine art background with 2 degrees in it already, I had never used AI before. I finally used ChatGPT because of this programming class, and what I found was surprising. The way it answered questions was very straightforward and reminded me a lot of how the Internet worked in the late 90s/early 2000s. The prompts need to be very specific, and the answers are good for getting the ball rolling on understanding baseline information. Really, it's a great tool for preliminary research, before seeking out other websites to deep dive into actual articles and research papers. It is NOT good for, and shouldn't be used for, finished end products (essays, papers, ART, creative works). It's also trash at math ATM.

Still, my experience with it has been extremely helpful, both for learning Python (understanding what certain functions are and do) and in my business class, for gathering information on infrastructure in an area halfway across the world for a potential Olympics there.

In the past I would get frustrated swimming through the trash heap of ads and sponsored articles on Google search just to get a simple question answered. I used to turn to Reddit for answers because Google got so terrible. But Reddit was based more on opinion and first-hand experience: great for insight into an industry from a people perspective, but not great at answering a question like: what does the "f" do in Python's `print(f"...")`?
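For anyone else wondering: the `f` marks a formatted string literal, or "f-string" (Python 3.6+). Whatever is inside `{}` gets evaluated and dropped into the string. A minimal sketch; the variable names are made up for illustration:

```python
name = "Ada"
hours = 3.5

plain = "Hi {name}"                     # no f prefix: the braces stay literal
formatted = f"Hi {name}"                # f prefix: {name} is replaced by its value
math_inside = f"{hours * 2:.1f} hours"  # expressions and format specs work too

print(plain)        # Hi {name}
print(formatted)    # Hi Ada
print(math_inside)  # 7.0 hours
```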

Chatgpt was great for that.

I still think AI is both good and bad for the art/creative industries. If it's used ethically in gathering its data sets (paying the artists and getting their permission), it could be a very helpful tool for selecting color palettes, workshopping compositions, and generating a written idea prompt to get a piece started. It should never be used for finished final products.

But that's just my 2 cents.

“i asked chatgpt-” ohhh ok so nothing you are about to say matters at all

100K notes


Text

I'll be so for real, school is not as insufferable as y'all make it out to be. Yeah the people are shit but the classes are absolutely not. I say this as someone with ADHD who's struggled with paying attention to shit and getting bored easily my whole life. A large amount of things people "wish they learned in school", we have, in fact, learned in school. English as a subject is almost entirely about reading comprehension and media literacy. But everyone thinks English teachers are stupid and that "the curtains are just blue" and ignores what we are actually learning.

I'm still in high school and the other day I saw a kid chatgpt an assignment that was only half a page of reading and then one question about HIS OWN OPINION. That is not a difficult assignment, or one that would require chatgpt to fabricate some long response for you. It was a five sentence response on if YOU PERSONALLY think the US should have gotten involved in Vietnam, after we had learned about the Vietnam war for two days already.

The fact of the matter is that people literally don't pay attention to anything and then do the bare minimum when they have to. And then complain that school is boring. Yeah school is going to be boring when you're completely checked out the entire time. I've been in classes where I was checked out before and they couldn't have been more dreadful and slow. People need to start engaging in school and with their teachers.

Everyone I've talked to that has complained that the books we read in school are boring have literally never read the books. They sparknoted the whole thing and then complained when they didn't get the experience of the book. I also refuse to believe you can find something boring just because school made you do it. Like, To Kill a Mockingbird is a good book whether your English teacher makes you read it or you read it on your own time. All the people I've seen complain that higher level math is stupid don't even understand the basics of math because they were not paying attention ever. People at my school complain we never learned budgeting or how to do adult-y things when they literally have been teaching us this shit forever. School never taught you how to cook? No it most certainly did. We had to take Home Ec for at least two years and you chose to be the dishwasher for every recipe we made.

And don't even come at me with the "I had bad teachers" nonsense. Firstly, I understand how a bad teacher can fuck someone up, because I've had multiple bad teachers in the past, whether teaching-wise or emotionally. However, I refuse to believe that every teacher you've ever had is terrible. I'm sorry, I just don't. Most people at my school hardly know their teachers beyond their names. They don't put in the effort to engage with their teachers and TRY to learn from them. They expect their teachers to give them all the answers they need for their tests and fuck off. They don't want teachers to talk to them, to try to make lessons fun, or to treat them as people with brains. If the teacher marks them down for something then the teacher is bad and is trying to fail them. If the teacher doesn't hold their hand through every assignment, then the class is too hard. If a teacher dares to penalize them for repeated bad behaviour, they have a stick up their ass and need to calm down.

Our music teacher in middle school was a little strict and wouldn't let kids just fuck around in her class, so the kids decided she was a bitch and hated her. She was actually super fucking nice and just wanted to teach us music without having to deal with people not paying attention or messing with the stuff in her room. My 9th grade English teacher had an "annoying laugh" and so people fucking hated her. Like they hardcore hated that woman because she laughed "too much" and "was annoying". My current anatomy and physiology teacher refuses to hand hold every lesson she teaches and people genuinely think she is being too hard on them when they fail her tests, even though ALL the information we need is right there in our notes if you pay any amount of attention.

Our current public school system has a lot of problems, don't get me twisted. And I think there are a lot of things we could improve about school. However, school did a lot more than people were willing to give it credit for, and people just refuse to pay attention to shit.

“we need to teach media literacy in schools” guys was i really the only person paying attention in english class bffr

86K notes


Text

First question: I'm not 100% certain what you're asking here. They are limited for useful purposes because there are only so many uses for generating images or text. But there are multiple LLMs trained on different data sets with different potential uses.

One non-llm example that circulates a lot on tumblr is that AI image recognition tool that was trained to tell bear claws apart from croissants, but that researchers were able to adapt to recognize different kinds of cancer cells. That's image recognition, not an LLM, but it demonstrates that yeah different training sets can be used for different purposes with specific training, and general training sets can be refined for specific purposes.

Second question: A lot of the information about the energy required for LLMs is tremendously misleading - I've seen a post circulating on tumblr claiming that every ChatGPT prompt was like pouring a bottle of fresh water into the ground just to cool the servers and that is a serious misstatement of how these things work.

Energy requirements for LLMs are frontloaded in training the LLM; they require a lot of energy to train but comparatively little energy to use.

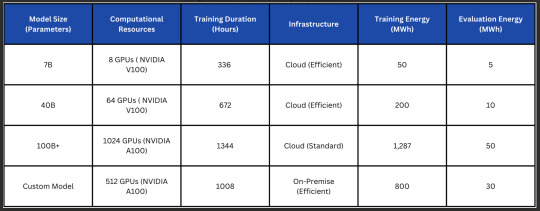

This chart breaks down some of the energy requirements for training an LLM:

50-800-ish megawatt hours for training. Let's take the high end and consider 800 MWh for our question.

800 MWh is 800,000 kWh. The average US household uses about 10,500 kWh per year. Divide those two and you get about 76, so you could train the custom model for the same amount of energy it would take to run roughly 76 US homes for a year. That is certainly not a *small* amount of energy, but it is also not an *enormous and catastrophic* amount of energy.

GPT-4 took about 50 Gigawatt Hours to train, which is a big jump up from the models on this chart. Doing the same math as above, it works out to powering about five thousand homes for a year. That is, again, not a *small* amount of power (apparently about twice as much as the Dallas Cowboys' Stadium uses in a year).
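Since the unit conversions are where zeros go missing, here is the training-energy arithmetic as a small Python sketch. It assumes, as the post does, that an average US household uses about 10,500 kWh per *year*; the constant and function names are just illustrative.

```python
# Assumption (figure used in the post): ~10,500 kWh per US household per YEAR.
KWH_PER_MWH = 1_000
KWH_PER_GWH = 1_000_000
HOUSEHOLD_KWH_PER_YEAR = 10_500


def household_years(kwh: float) -> float:
    """How many average US homes this much energy could run for one year."""
    return kwh / HOUSEHOLD_KWH_PER_YEAR


custom_model_kwh = 800 * KWH_PER_MWH  # 800 MWh = 800,000 kWh
gpt4_kwh = 50 * KWH_PER_GWH           # 50 GWh = 50,000,000 kWh

print(f"800 MWh = {custom_model_kwh:,} kWh -> ~{household_years(custom_model_kwh):.0f} homes for a year")
print(f"50 GWh = {gpt4_kwh:,} kWh -> ~{household_years(gpt4_kwh):,.0f} homes for a year")
```

Run as-is, this prints about 76 homes for the 800 MWh model and about 4,762 homes for GPT-4, which lines up with the "about five thousand homes" figure for GPT-4.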

But training isn't the only cost of these models; sending queries uses power too, and a GPT-backed search uses up to 68 times more energy than a Google search does, at 0.0029 kWh per query.

Now. This is *not trivial.* I don't want to dismiss this as something that does not matter. The climate cost of the AI revolution is not something to write off. If we were to multiply the carbon footprint of Google by 70, that would be a bad thing. My attitude mirrors that of Wim Vanderbauwhede, the source of the last two links, who I am quoting here:

For many tasks an LLM or other large-scale model is at best total overkill, and at worst unsuitable, and a conventional Machine Learning or Information Retrieval technique will be orders of magnitude more energy efficient and cost effective to run. Especially in the context of chat-based search, the energy consumption could be reduced significantly through generalised forms of caching, replacement of the LLM with a rule-based engines for much-posed queries, or of course simply defaulting to non-AI search.
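To make the "caching" idea in that quote concrete: if lots of people ask the same question, the expensive model only has to run once and the repeats can be answered from memory. A minimal sketch using Python's standard `functools.lru_cache`; `expensive_model_call` is a hypothetical stand-in for a real LLM request, and lowercasing/stripping the query is just one assumed way to let near-identical questions share a cache entry:

```python
from functools import lru_cache

calls = 0  # how many times the (pretend) expensive model actually ran


def expensive_model_call(prompt: str) -> str:
    """Hypothetical stand-in for an energy-hungry LLM request."""
    global calls
    calls += 1
    return f"answer to: {prompt}"


@lru_cache(maxsize=1024)
def _cached_answer(normalized_prompt: str) -> str:
    # Only runs the model on a cache miss; repeats are served from memory.
    return expensive_model_call(normalized_prompt)


def answer(prompt: str) -> str:
    # Normalize so trivially different phrasings hit the same cache entry.
    return _cached_answer(prompt.strip().lower())


for q in ["What is an f-string?", "what is an f-string?  ", "WHAT IS AN F-STRING?"]:
    answer(q)

print(calls)  # 1: the model ran once for three requests
```

A real system would also have to decide when cached answers go stale, which this sketch ignores.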

*however,* that said, the energy cost of LLMs as a whole is not as ridiculously, overwhelmingly high as I've seen some people claim that it is. It *does* consume a lot of power, we should reduce the amount of power it uses, and we can do that by using it less and limiting its use to appropriate contexts (which is not what is currently happening, largely because the AI hype machine is out promoting the use of AI in tons of inappropriate contexts).

Ditto for water use; datacenters do use water. It is a significant amount of water and they are not terribly transparent about their usage. But the amount required for "AI" isn't going to be magically higher because it's AI; it's going to be related to power use and the efficiency of the systems. 50 GWh for training GPT-4 will require just as much cooling as 50 GWh of streaming Netflix (which uses over 400,000 MWh, i.e. over 400 GWh, annually). The way to improve that is to make these systems (all of them) more efficient in their power use, which is already a goal for datacenters.

And while LLMs do have an impact on the GPU market my experience is that it has been minimal compared to bullshit like bitcoin mining, which actually does use absurd amounts of power.

(Quick comparison here: it's frequently said that ChatGPT uses over half a million kilowatt hours of power a day; that seems like a big number, but it's half a gigawatt hour. Multiply that by 365 and you've got 182.5 GWh. It's estimated that Bitcoin uses in the neighborhood of 110 *Terawatt* hours annually, which is 110,000 GWh, which is about 600 times more energy than ChatGPT with *significantly* fewer people using Bitcoin and drastically less utility worldwide.)
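The same comparison as a quick Python sketch, using only the figures quoted in the post (about 500,000 kWh a day for ChatGPT, about 110 TWh a year for Bitcoin):

```python
KWH_PER_GWH = 1_000_000
GWH_PER_TWH = 1_000

chatgpt_kwh_per_day = 500_000                            # "half a million kWh a day" (post's figure)
chatgpt_gwh_per_day = chatgpt_kwh_per_day / KWH_PER_GWH  # 0.5 GWh
chatgpt_gwh_per_year = chatgpt_gwh_per_day * 365         # 182.5 GWh

bitcoin_gwh_per_year = 110 * GWH_PER_TWH                 # 110 TWh = 110,000 GWh

ratio = bitcoin_gwh_per_year / chatgpt_gwh_per_year
print(f"ChatGPT: {chatgpt_gwh_per_year} GWh/yr, Bitcoin: {bitcoin_gwh_per_year:,} GWh/yr, ratio ~{ratio:.0f}x")
```

This prints a ratio of about 603x, which is where "about 600 times" comes from. Plugging in 11,000 GWh for Bitcoin instead would give only about 60x.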

A lot of computer manufacturers are actually currently developing specific ML processors (these are being offered in things like the Microsoft Copilot PCs and in Intel's Sapphire Rapids processors), so reliance on GPUs for AI is already receding (these processors should theoretically also be more efficient for AI than GPUs are, reducing energy use).

So I guess my roundabout point is that we're in the middle of a hype bubble that I think is going to pop soon but also hasn't been quite as drastic as a lot of people are claiming; it doesn't use unspeakable amounts of power or water compared to streaming video or running a football stadium, and it is in the process of becoming more energy efficient (something the developers want! high energy costs are not an inextricable function of LLMs the way that they are with proof-of-work cryptocurrencies).

I don't know much about GPUs, but I know GPU manufacturers are attempting to increase production to meet LLM demand, so I suspect the impact of LLMs on GPU availability is also going to drop off in time.

Author's note here: I am dyslexic and dyscalculic, and fucking about with kilowatt hours, megawatt hours, gigawatt hours, and terawatt hours has likely meant I've missed a zero somewhere along the line. I tried to check my work as best I could, but there's likely a mistake somewhere in there that is pretty significant; if you find that mistake with my power conversion math PLEASE CORRECT ME, because it is not my intent here to be misleading. I just literally need to count the zeros with the tip of a pencil and hope that I put the correct number of zeroes into the converter tools.

I don't care about data scraping from ao3 (or tbh from anywhere) because it's fair use to take preexisting works and transform them (including by using them to train an LLM), which is the entire legal basis of how the OTW functions.

3K notes


Text

reblogging again because i have stuff to add as well !! ( and because i've just realized something- )

honestly, i feel like it's just a lose-lose situation. i've used AI in the past ( not really my proudest moment ), and honestly, from my own experience:

creating art - any kind of art - is hard and takes a lot of effort, passion, dedication, and use of one's own mind for creativity. it's not something that can be learned in one day or even in a year ; it takes effort, physical and mental effort, to even want to create something of your own. especially with how judgmental people are these days with every little thing that you do.

It's easy, yes. the secret is: it's easy! it's your mind! but sometimes people can't see that, and the easy becomes hard, difficult. ( especially for perfectionists, like me )

with the arrival of Ai, everything just became.. 'easier', i guess, for people to """create""" something they want to see. and i feel like that's already harmful by itself.

some things take time and lots of effort and dedication, and sometimes people just don't feel ready for that.

which- it's fine ! that's okay. everyone's gotta take their own time and pace to do something; once they are ready, they are ready.

but with the arrival of Ai .. I feel like that just stops people from actually trying and taking their own time and pace to do that one thing they wanted to do, because Ai is here and it exists and it's there to be the fic you want. to be the art you want. to be the roleplayer you want. to be the comfort you want.

and that just .. stops people from actually trying to reach that goal of writing that fic. it stops people from trying to socialize. it stops people from creating art. it stops people from reaching out to others about their problems.

because Ai, as bad and harmful as it is ... It's a robot, and it's a robot you can control.

a robot which will reply to you instantly and without judgment, and if there is judgment, you can always just change the reply to something that you like more. it's not like it actually meant anything it said, it's a lifeless robot after all.

a robot which will generate art, animation even, for you. anything that you'd want to see but didn't have enough money to commission.

a robot that you can control, that will be whatever you want it to be. sure it has its many, many flaws, but at the end of the day ; it's a robot you can "trust", because actual people, who have emotions and are just as lively as you, are just too judgmental for you to trust.

people will judge you, laugh at you, mock you, step on you ; a robot won't do any of that unless you want it to. and what would you choose at first sight ; talking to judgmental people, or a robot that will do as you please? i think the answer is pretty obvious.

and that, by itself.. is psychologically and mentally harmful.

sure, people are judgmental, even to themselves sometimes, and i can't blame people who use Ai because of this exact reason.

but as good as it feels, it's not good for your health at all. or for the environment, as OP said.

teachers can sometimes suck, so students will use chatgpt as their new teacher, because at least a robot will actually listen to what your problem is, and help you.

not only are chatgpt's answers almost ALWAYS inaccurate and just terribly wrong ( "if you're pregnant, it's always good to smoke 2-3 times per day" ), but it also stops these students from reaching out to teachers - actual good teachers, or even other students - for help with their math assignments. because chatgpt is there and it's helped you before, why wouldn't it help now? ( <- and, with that, since chatgpt's answers are almost always wrong and just terribly inaccurate and imprecise ... read OP's post again. )

that's just an example, because Ai is not only bad for the environment, but it's also bad for your health.

is it people's fault? ..yes, it is. does this mean that Ai is justifiable? absolutely not. in fact, Ai just made everything even worse. i don't believe the world will end any time soon, but i feel like the ending just keeps getting nearer than before because of it.

Ai could have been great. but instead it's used for things that you can reach out to people for, instead of using it for actual useful and helpful things for the environment.

Ai is not healing, it's stopping you from trying. and that's horrible.

/vent??

I am.. so sick of all this a/i stuff

It's just wrong- it takes away the beauty of all art forms, whether it's writing, art, or music.

Generative a/i, character a/i, doesn't matter. It still steals from actual artists. It still steals from good, hard work, and for what? Roleplay? Free time?

It could've been great, really. Instead of using a/i to do the mundane- like chores, or using it to solve something revolutionary in the field of science and medicine, they use it for.. "art." For uncanny-valley, cocomelon-type pictures. For incorrect information in graphics AND in writing. We don't want a/i to draw and write for us so we can do chores (I saw this in a tweet once), we want a/i to do our chores so we can draw and write.

And I'm so, so sick of people acting like it's a good thing, like using generative a/i is justifiable in any way, or just 'cause others may be using it.

It's everywhere, now. My friend -idk if we're friends anymore, honestly- uses c-a/i and swears it's "just for roleplay," the pastor at church used generative a/i to get a picture of something from the Bible, and students at school use ChatGPT to get the job done.

Don't they see how damaging it is to not only the art industry, but to the world?

C-a/i is never accurate to the characters it portrays. It steals from fics and turns them into generative slop. It's not even good slop! The grammar? Punctuation? Proper sentences? Don't need that, apparently! In fact, that very same "friend" showed me a screenshot of c-a/i messing up by saying Tails (from Sonic the Hedgehog) is the only one who'd be seen riding a motorcycle. And any STH fan would know that it's not Tails, it's Shadow who rides a motorcycle. Tails rides a plane.

A/i "art" is even worse. I've seen how inaccurate the final stuff could be. A baby bird doesn't look like a real one when generated through that slop. It's actually damaging to how we see information. Not only that, but the "art" generated is still so, so harmful to actual artists. Who needs passion, anyway? Who needs love put into art, anyway? When generating an a/i image, you put love and passion... where, exactly? In the prompt box?

I'll explain this in hypotheticals.

Its the year 2030. You wake up and begin a brand new day.

You open a book and cringe. This doesn't look like a good plot. This doesn't look like a plot, at all?

You learn that it's written through character a/i.

You turn the TV on. All the cartoons you used to love are gone. An uncanny "cartoon" took their place, with soulless eyes and a robotic voice. You turn the TV off and go outside.

But wait- the world is.. crumbling?! That's right- A/I is bad for the Earth! It's ACTUALLY damaging the world!

And somehow- somehow- you develop a sickness, so you go to the hospital to get it checked out.

To your horror, the doctor merely shrugs and says that he doesn't know what sickness you have.

"But how could this be?! You're a doctor! You're supposed to know these things!"

You find out that the doctor used ChatGPT to get through college, and never actually learned the information required.

Then you die. The speeches people read out for your funeral were generated through ChatGPT. Truly a terrible way to die.

People need to understand just why all this a/i nonsense is bad. I feel like they go, "yeah, a/i is bad" and turn around to their a/i roleplays and ChatGPT, ready to defend themselves for using that slop.

To the mentioned "friend", to the mentioned pastor, and to the students who may or may not read this;

I wish I could tell you just how bad this a/i stuff is. I wish you'd understand. I wish you'd listen.

I wish generative a/i never existed.

#O#to that one friend of mine who keeps using c.ai- i won't stop you from using it ; i can't force you#but just know that i'm here if you wanna talk. literally spam in my dms if that makes you feel better ; i don't mind. /gen#i don't support Ai nor am i neutral towards it btw- i hate it. and i do not condone it no matter the reason.#but at the same time ; i can't really Blame people for using it#i just wish Ai never existed.

38 notes
