#Data Harmonization Techniques

Explore tagged Tumblr posts

Text

Data Harmonization Service, Tool, Software | PiLog Group

Data harmonization aligns disparate data sources into a central location through matching, merging, and transformation processes for streamlined data management. https://www.piloggroup.com/data-harmonization.php
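As a rough illustration of the matching, merging, and transformation steps described above, here is a minimal Python sketch. The two source systems, their field names, and the country alias table are all hypothetical, invented for this example; it is not PiLog's method or any particular product's API.

```python
# Hypothetical records from two source systems; all field names are assumptions.
crm_records = [
    {"id": "C-1", "name": " Acme Corp. ", "country": "usa"},
    {"id": "C-2", "name": "Globex", "country": "DE"},
]
erp_records = [
    {"vendor_no": 17, "vendor_name": "ACME CORP", "country_code": "US"},
    {"vendor_no": 42, "vendor_name": "Initech", "country_code": "US"},
]

# Assumed lookup table mapping free-text country values to one code.
COUNTRY_ALIASES = {"usa": "US", "us": "US", "de": "DE"}


def normalize_name(name):
    """Transformation: lowercase, drop punctuation, collapse whitespace."""
    cleaned = "".join(ch for ch in name.lower() if ch.isalnum() or ch.isspace())
    return " ".join(cleaned.split())


def harmonize(crm, erp):
    """Match records on the normalized name and merge them into one table."""
    central = {}
    for rec in crm:
        key = normalize_name(rec["name"])
        central[key] = {
            "name": rec["name"].strip(),
            "country": COUNTRY_ALIASES.get(rec["country"].lower(), rec["country"].upper()),
            "sources": {"crm": rec["id"]},
        }
    for rec in erp:
        key = normalize_name(rec["vendor_name"])
        # Reuse the CRM entry when the names match; otherwise create a new one.
        entry = central.setdefault(key, {
            "name": rec["vendor_name"].title(),
            "country": rec["country_code"],
            "sources": {},
        })
        entry["sources"]["erp"] = rec["vendor_no"]
    return central


harmonized = harmonize(crm_records, erp_records)
```

Here the two spellings of "Acme Corp" collapse into one central record that remembers both source identifiers. Real data usually needs fuzzier matching than an exact comparison of normalized names.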

#Data Harmonization#Data Harmonization solutions#Data Harmonization Tools#Data Harmonization Techniques#Data Harmonization Process#MDM Tools

0 notes

Text

youtube

Embark on a captivating journey with us as we unfold the transformative story of our company's digital evolution. Witness the milestones, challenges, and triumphs that shaped our path from envisioning a digitally-driven future to making it a reality.

#best master data migration tools#master data management solutions#lean data consulting#what is master data management#data harmonization techniques#Youtube

0 notes

Text

Superman Saves Polar Ice Research

May 21st, 2025

Written By: Clark Kent, Lois Lane

In northern Greenland, buried amidst the piles of snow and shifting ice, there lies a tiny research station. Only two people live here, where the sun doesn’t set for months at a time, supply planes only come every three weeks, and the empty plains of the Arctic are the only thing for miles. The future of the human race rests on this tiny research station, one which Superman just saved.

The polar ice caps are now melting at a rate of almost 13% per decade, and scientists estimate that, by 2040, the ice caps will be completely gone. This is a direct result of global warming, a problem that scientists around the globe are scrambling to solve. The two International Arctic Research Center (IARC) scientists at Station Two, in Northern Greenland, believe they’ve finally found the answer: solar deflection arrays.

While previous polar refreezing techniques have concentrated on pumping seawater to the surface and refreezing it, Dr. Harmon Pearce and Dr. Lizbeth Addison are working towards a completely different method. Their method involves a series of solar deflection arrays scattered across the polar surface, reflecting a large portion of the sun’s light before it reaches the ice. This will prevent most of the ice from even starting to melt and keep the trapped greenhouse gases inside. In short, their proposed method interrupts the feedback loop and slows the melting of polar ice.

Dr. Addison, when describing the solution, stated that “if this array works, we will have bought humanity some time. Another decade or two to find a solution.” The pair of researchers explained that they were on the verge of a breakthrough, one that may give humanity a little more precious time.

However, all of their hard work was nearly lost when a supervillain named Kilg%re attacked the station with cybernetically implanted polar bears and orcas. Kilg%re was also likely behind the sudden failure of two of the research station’s mainframes, where all of the science data was stored.

Thankfully Superman appeared to be visiting the area, likely stopping by his famous Fortress of Solitude, and saved the researchers. The Man of Steel easily defeated the polar bears, orcas, and Kilg%re, before returning to assure the scientists that all was well.

Superman saves Station Two from cybernetically implanted polar bears. (Image Credit: Dr. Harmon Pearce)

“If it weren’t for Superman,” Dr. Pearce explained, “Kilg%re would have probably eventually killed us. Then all our hard work would be lost forever and humanity would be doomed!”

Humanity is once again in Superman’s debt, as the brave researchers would be dead without his intervention. Now, with their equipment fixed and Kilg%re’s influence gone, Dr. Pearce and Dr. Addison will finally be able to reach their breakthrough. Perhaps they’ll discover the secret to saving humanity’s future.

Subscribe to the Daily Planet Tumblr Page for more stories like these!

9 notes

Text

Foods of the Ancient World: Tea

By AxelBoldt at en.wikipedia - Transferred from en.wikipedia, Public Domain, https://commons.wikimedia.org/w/index.php?curid=60236

Tea, specifically that made from pouring boiling water over the leaves of Camellia sinensis, which is native to East Asia, has a very long history. It is the second most commonly consumed drink in the world. It contains caffeine, which is a psychoactive substance that usually produces a stimulating effect on humans. While steeping other things in boiling water is frequently called tea, those are tisanes or infusions.

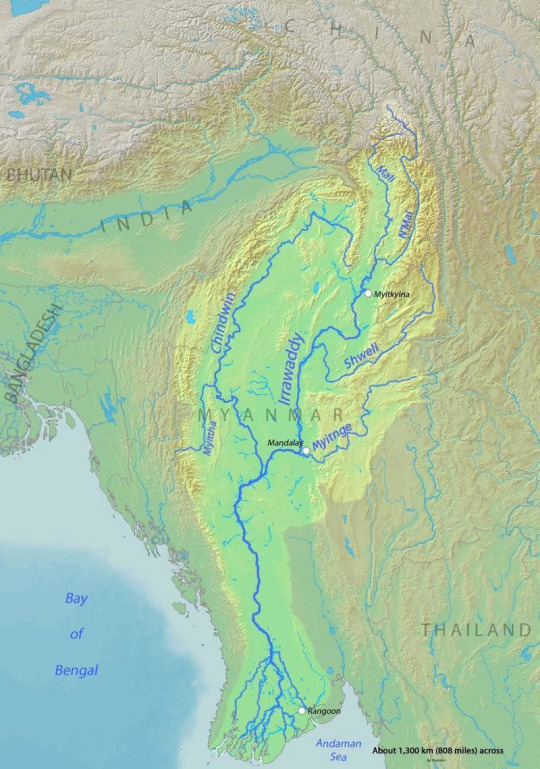

By Shannon - Background and river course data from http://www2.demis.nl/mapserver/mapper.asp, CC BY-SA 4.0, https://commons.wikimedia.org/w/index.php?curid=9633969

C. sinensis is an evergreen bush that probably originated near the source of the Irrawaddy River in Myanmar and spread into southeast China, Assam, and Indo-China, over a range of about 460,800 sq km between about longitude 95°-120°E and latitude 11°-29°N. There are several varieties of tea that diverged based on the climate and may have hybridized with local plants.

By Shinno_(Shennong) inscribed artist not identified 19th century Japanese Wittig collection painting: artist not (yet) identified, photograph by uploaderderivative work: nagualdesign

Tea leaves were eaten, perhaps for millennia, before tea became a beverage. It's thought that tea drinking began in Yunnan for medicinal purposes. In Sichuan, 'people began to boil tea leaves for consumption into a concentrated liquid…using tea as a bitter yet stimulating drink'. Legends attribute the origin of tea drinking to the mythical Shennong, around central China, in 2737 BCE, though evidence points to it being brought from Yunnan and Sichuan. The oldest evidence of tea drinking was found in the mausoleum of Emperor Jing of Han, who died in 141 BCE. The earliest written evidence of tea dates to about 59 BCE in 'The Contract for a Youth' by Wang Bao, which states how a youth is to brew and procure tea. Hua Tuo, who lived from about 140-208 CE, wrote that 'to drink bitter t'u constantly makes one think better'.

By Sanjay Acharya - Own work, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=4679972

It wasn't until the mid-8th century CE, under the Tang dynasty, that tea drinking spread outside of southern China into the rest of China, Korea, and Japan. It is also under the Tang dynasty that various processing techniques were developed, including stirring leaves in a hot, dry pan to control oxidization.

By Liu Songnian - https://www.shuge.org/meet/topic/119950/, Public Domain, https://commons.wikimedia.org/w/index.php?curid=141419610

Tea plays a very important part in social rituals in Confucian thought, which has its origins in the teachings of Kongzi, who lived from 551-479 BCE. It is part of the social ritual within the family, and it serves one's self-cultivation and promotes humility. Among Chán Buddhists, whose tradition has its origins about 500 CE, tea is used by monks to improve their concentration and wakefulness during meditation. Daoists, whose tradition goes back to the Warring States period, from 450-300 BCE, value tea for promoting health, believing it helps balance and harmonize the qi and helps one attain enlightenment.

4 notes

Text

Building Success with an Advanced Marketing Framework

In today's competitive digital world, understanding and implementing an Advanced Marketing Framework is crucial for brands aiming to capture, retain, and grow a strong customer base. A well-structured framework provides the roadmap for navigating dynamic customer needs, optimizing marketing efforts, and scaling business growth. By analyzing data, segmenting audiences, and delivering the right message at the right time, brands can engage customers and drive conversions effectively.

Understanding the Concept of an Advanced Marketing Framework

An Advanced Marketing Framework is a structured approach that combines strategies, tools, and analytics to enhance marketing effectiveness. It goes beyond traditional marketing methods, diving deeper into data-driven insights, real-time adjustments, and customer-centric tactics. Unlike basic frameworks, an advanced one integrates sophisticated techniques such as machine learning, behavioral segmentation, and cross-channel analysis to optimize results.

Creating an Advanced Marketing Framework is about harmonizing various elements, including customer data, brand messaging, channels, and feedback mechanisms, to offer a more cohesive experience across touchpoints. This approach increases brand loyalty and facilitates a seamless buyer journey from awareness to purchase.

Key Components of an Advanced Marketing Framework

Building an Advanced Marketing Framework requires more than just a few tweaks to traditional methods; it needs a holistic structure encompassing essential components:

1. Customer-Centric Approach

A customer-centric framework prioritizes the needs, desires, and behaviors of the target audience. By analyzing demographic, psychographic, and behavioral data, businesses can develop personalized campaigns that resonate with customers on a deeper level.

2. Data-Driven Insights

Data analytics is foundational to an Advanced Marketing Framework. With tools like Google Analytics, HubSpot, or more complex machine learning models, marketers can gain insights into customer behavior, engagement patterns, and conversion metrics. Leveraging data enables the fine-tuning of campaigns to maximize ROI and make informed decisions about where to allocate marketing resources.

3. Content Strategy and Personalization

Content remains a critical piece in engaging audiences. The Advanced Marketing Framework relies on content that is not only relevant but also tailored to individual customer segments. Personalization, such as dynamic emails, curated recommendations, or personalized landing pages, drives higher engagement and nurtures customer loyalty.

4. Omnichannel Marketing

In an Advanced Marketing Framework, omnichannel marketing ensures consistency across platforms, from email to social media to in-store experiences. Integrating channels allows for seamless transitions between touchpoints, enhancing the customer experience. This continuity helps brands reinforce messaging, nurture leads, and build stronger relationships with customers.

5. Automation and AI-Driven Strategies

Automation and artificial intelligence (AI) are game-changers in advanced marketing. Automated workflows can handle repetitive tasks, like sending follow-up emails or reminding customers about abandoned carts. AI-driven analytics provide deep insights into customer trends and forecast future behavior, making campaigns more targeted and efficient.

6. Continuous Optimization and Real-Time Adjustments

Unlike traditional frameworks, an Advanced Marketing Framework isn’t static. It requires constant optimization and real-time updates based on data analytics and customer feedback. A/B testing, conversion rate optimization, and performance monitoring are essential to ensure that campaigns are achieving their objectives and maximizing engagement.

7. Feedback and Adaptability

Customer feedback is an integral part of the Advanced Marketing Framework. Whether it’s through surveys, reviews, or social media comments, feedback helps brands understand customer sentiment, address concerns, and improve offerings.
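To make the A/B testing mentioned under Continuous Optimization a little more concrete, here is a minimal Python sketch that compares the conversion rates of two campaign variants with a pooled two-proportion z statistic. The visitor and conversion counts are made up, and the 1.96 cutoff is simply the conventional threshold for a two-sided test at roughly the 5% level; treat it as an illustration rather than a full testing procedure.

```python
import math


def conversion_rate(conversions, visitors):
    """Share of visitors who converted."""
    return conversions / visitors


def two_proportion_z(conv_a, n_a, conv_b, n_b):
    """z statistic for the difference between two conversion rates (pooled)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_b - p_a) / std_err


# Made-up numbers for two email subject lines, A and B.
rate_a = conversion_rate(100, 2000)
rate_b = conversion_rate(140, 2000)
z = two_proportion_z(100, 2000, 140, 2000)

# Conventional cutoff for a two-sided test at about the 5% level.
significant = abs(z) > 1.96
```

With these sample numbers, variant B's lift clears the cutoff. With smaller samples the same two-point difference often would not, which is why sample size matters before acting on a test.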

Benefits of Implementing an Advanced Marketing Framework

Integrating an Advanced Marketing Framework offers substantial benefits:

Improved Customer Engagement: Personalized, data-driven strategies capture customer attention and keep them engaged across channels.

Higher Conversion Rates: By targeting the right audience with the right message, brands can enhance conversion rates and customer satisfaction.

Enhanced Efficiency: Automation and AI streamline operations, freeing up resources for creative, high-impact work.

Data-Backed Decisions: A reliance on data means that marketing decisions are well-informed and optimized for results.

Competitive Edge: Brands using an Advanced Marketing Framework stay ahead by adapting to customer preferences and market changes faster.

Getting Started with Your Advanced Marketing Framework

Creating and implementing an Advanced Marketing Framework might seem overwhelming, but starting with small steps can make a significant impact. Begin by clearly defining your target audience, outlining measurable objectives, and selecting the tools necessary for data analysis and automation.

Next, focus on omnichannel marketing strategies, ensuring your brand message is consistent across platforms. From here, experiment with content personalization and automation to enhance engagement. Remember to continually monitor and optimize your approach based on performance data and customer feedback.

2 notes

Text

The Role of AI in Music Composition

Artificial Intelligence (AI) is revolutionizing numerous industries, and the music industry is no exception. At Sunburst SoundLab, we use different AI-based tools to create music that unites creativity and innovation. But how exactly does AI compose music? Let's dive into the fascinating world of AI-driven music composition and explore the techniques used to craft melodies, rhythms, and harmonies.

How AI Algorithms Compose Music

AI music composition relies on advanced algorithms that mimic human creativity and musical knowledge. These algorithms are trained on vast datasets of existing music, learning patterns, structures and styles. By analyzing this data, AI can generate new compositions that reflect the characteristics of the input music while introducing unique elements.

Machine Learning

Machine learning algorithms, particularly neural networks, are crucial in AI music composition. These networks are trained on extensive datasets of existing music, enabling them to learn complex patterns and relationships between different musical elements. Using techniques like supervised learning and reinforcement learning, AI systems can create original compositions that align with specific genres and styles.

Generative Adversarial Networks (GANs)

GANs consist of two neural networks – a generator and a discriminator. The generator creates new music pieces, while the discriminator evaluates them. Through this iterative process, the generator learns to produce music that is increasingly indistinguishable from human-composed pieces. GANs are especially effective in generating high-quality and innovative music.

Markov Chains

Markov chains are statistical models used to predict the next note or chord in a sequence based on the probabilities of previous notes or chords. By analyzing these transition probabilities, AI can generate coherent musical structures. Markov chains are often combined with other techniques to enhance the musicality of AI-generated compositions.
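The Markov chain idea is simple enough to sketch in a few lines of Python. The example below is a first-order chain, meaning each next note depends only on the current one. The training melody is made up, and the function names are ours; this is an illustration, not a description of any specific tool we use.

```python
import random
from collections import defaultdict


def build_transitions(notes):
    """Count how often each note is followed by each other note."""
    counts = defaultdict(lambda: defaultdict(int))
    for current, following in zip(notes, notes[1:]):
        counts[current][following] += 1
    return counts


def generate(counts, start, length, rng):
    """Walk the chain, sampling each next note in proportion to its count."""
    melody = [start]
    while len(melody) < length:
        options = counts.get(melody[-1])
        if not options:  # dead end: this note never had a successor
            break
        choices = list(options)
        weights = [options[note] for note in choices]
        melody.append(rng.choices(choices, weights=weights, k=1)[0])
    return melody


# Made-up training melody, written as note names.
training_melody = ["C", "D", "E", "C", "D", "G", "E", "C", "D", "E", "G", "C"]
transitions = build_transitions(training_melody)

rng = random.Random(0)  # fixed seed so repeated runs give the same melody
new_melody = generate(transitions, start="C", length=8, rng=rng)
```

Every step in `new_melody` is a transition that occurred somewhere in the training melody, which is why the output tends to sound related to the input. A higher-order chain would condition on the last two or three notes instead of one.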

Recurrent Neural Networks (RNNs)

RNNs, and their advanced variant Long Short-Term Memory (LSTM) networks, are designed to handle sequential data, making them ideal for music composition. These networks capture long-term dependencies in musical sequences, allowing them to generate melodies and rhythms that evolve naturally over time. RNNs are particularly adept at creating music that flows seamlessly from one section to another.

Techniques Used to Create Melodies, Rhythms, and Harmonies

Melodies

AI can analyze pitch, duration and dynamics to create melodies that are both catchy and emotionally expressive. These melodies can be tailored to specific moods or styles, ensuring that each composition resonates with listeners.

Rhythms

AI algorithms generate complex rhythmic patterns by learning from existing music. Whether it’s a driving beat for a dance track or a subtle rhythm for a ballad, AI can create rhythms that enhance the overall musical experience.

Harmonies

Harmony generation involves creating chord progressions and harmonizing melodies in a musically pleasing way. AI analyzes the harmonic structure of a given dataset and generates harmonies that complement the melody, adding depth and richness to the composition.

The role of AI in music composition is a testament to the incredible potential of technology to enhance human creativity. As AI continues to evolve, the possibilities for creating innovative and emotive music are endless.

Explore our latest AI-generated tracks and experience the future of music. 🎶✨

#AIMusic#MusicInnovation#ArtificialIntelligence#MusicComposition#SunburstSoundLab#FutureOfMusic#NeuralNetworks#MachineLearning#GenerativeMusic#CreativeAI#DigitalArtistry

2 notes

Text

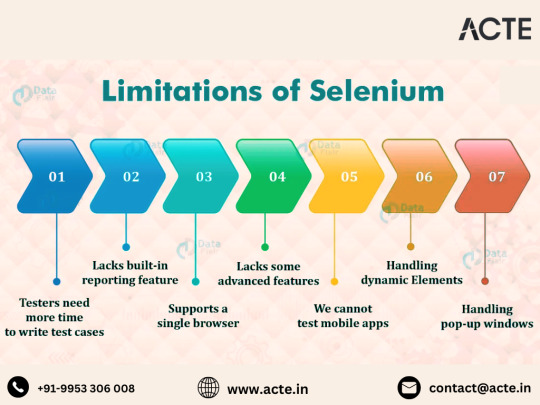

Mastering Selenium Testing: Overcoming Common Automation Challenges

Introduction: Selenium, renowned for its prowess in automation testing, is a cornerstone of modern software development. However, as testers embark on their Selenium journey, they encounter various challenges that can impede the efficiency and effectiveness of their automation efforts. In this guide, we delve into the common hurdles faced in Selenium testing and explore strategies to overcome them, empowering testers to excel in their automation endeavors.

Navigating Cross-Browser Compatibility: Ensuring consistent performance across diverse browsers is paramount in web application testing. However, achieving cross-browser compatibility with Selenium poses its share of challenges. Testers must meticulously validate their scripts across multiple browsers, employing robust strategies to address discrepancies and ensure seamless functionality.

Taming Dynamic Element Identification: Dynamic web elements, a common feature in modern web applications, present a formidable challenge for Selenium testers. The ever-changing nature of these elements requires testers to adapt their automation strategies accordingly. Employing dynamic locators and resilient XPath expressions helps testers tame these dynamic elements, ensuring reliable interaction and seamless test execution.

Synchronizing Actions in Asynchronous Environments: The asynchronous nature of web applications introduces synchronization issues that can undermine the reliability of Selenium tests. To mitigate these challenges, testers must implement effective synchronization techniques, such as explicit and implicit waits, to harmonize test actions with the application's dynamic behavior. This ensures consistent and accurate test execution across varying environmental conditions.
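The idea behind an explicit wait can be sketched without a browser: poll a condition until it holds or a timeout expires. The Python example below uses only the standard library. `wait_until` and `FakePage` are hypothetical names invented for this illustration; in real Selenium code the Python bindings offer `WebDriverWait(driver, timeout).until(...)`, which follows the same polling pattern.

```python
import time


def wait_until(condition, timeout=5.0, poll_interval=0.1):
    """Poll `condition` until it returns a truthy value or `timeout` elapses."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)


class FakePage:
    """Hypothetical stand-in for a page whose element appears after a delay."""

    def __init__(self, ready_after):
        self._ready_at = time.monotonic() + ready_after

    def find_banner(self):
        # Returns None until the simulated asynchronous load has finished.
        return "banner" if time.monotonic() >= self._ready_at else None


page = FakePage(ready_after=0.3)
banner = wait_until(page.find_banner, timeout=2.0, poll_interval=0.05)
```

Unlike a fixed sleep, the wait returns as soon as the element is available and fails loudly when it never appears, which keeps tests both faster and easier to diagnose.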

Efficiently Handling Frames and Windows: Frames and windows are integral components of web applications, presenting unique challenges for Selenium automation. Testers must adeptly navigate these elements, employing tailored automation strategies to interact with elements nested within frames and manage multiple browser windows seamlessly. This ensures comprehensive test coverage and accurate validation of application functionality.

Unlocking Performance Testing Potential: While Selenium excels in functional testing, leveraging it for performance testing requires careful consideration. Testers must explore complementary tools and frameworks to simulate realistic user interactions and assess application performance under varying load conditions. This holistic approach ensures comprehensive test coverage and robust performance validation.

Streamlining Test Maintenance Processes: Maintaining Selenium test suites is a perpetual endeavor, necessitating proactive strategies to adapt to evolving application requirements. Testers must prioritize modularization and abstraction, enabling seamless updates and enhancements to test scripts. Embracing version control and continuous integration practices further streamlines the test maintenance process, ensuring agility and efficiency in automation efforts.

Enhancing Reporting and Debugging Capabilities: Effective test analysis hinges on robust reporting and debugging capabilities. Testers must augment Selenium's native features with third-party reporting tools and frameworks to generate comprehensive test reports and streamline issue identification and resolution. This empowers testers to glean actionable insights and drive continuous improvement in test automation practices.

Empowering Data-Driven Testing: Data-driven testing is a cornerstone of robust automation strategies, yet managing test data poses its own set of challenges. Testers must implement scalable solutions for test data management, leveraging data-driven testing frameworks and integration with test management tools to enhance test coverage and efficiency. This ensures comprehensive validation of application functionality across diverse scenarios.
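As a small illustration of the data-driven style, the Python sketch below keeps the test data in one table and runs the same check against every row, using the standard `unittest` module and its `subTest` context manager. The function under test, `is_valid_username`, and its rules are hypothetical; a Selenium suite would drive a browser instead, but the separation of data from test logic is the same.

```python
import unittest


def is_valid_username(name):
    """Hypothetical function under test: 3-12 characters, letters and digits only."""
    return 3 <= len(name) <= 12 and name.isalnum()


# The test data lives in one table, separate from the test logic.
CASES = [
    ("alice", True),
    ("ab", False),          # too short
    ("bob_smith", False),   # underscore is not allowed
    ("a" * 13, False),      # too long
    ("user42", True),
]


class UsernameTest(unittest.TestCase):
    def test_usernames(self):
        for name, expected in CASES:
            # subTest reports each failing row separately instead of stopping early.
            with self.subTest(name=name):
                self.assertEqual(is_valid_username(name), expected)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(UsernameTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Adding a scenario then means adding a row to `CASES`, not writing a new test method. The same table could be loaded from a CSV file or a test management tool.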

Ensuring Consistent Test Environment Setup: Establishing a consistent test environment is essential for reliable and reproducible test results. Testers must invest in robust environment provisioning and configuration management practices, ensuring parity across development, staging, and production environments. This minimizes environmental discrepancies and enhances the reliability of automation efforts.

Leveraging Community Resources and Collaboration: Navigating the intricacies of Selenium testing requires collaboration and knowledge-sharing within the testing community. Testers must actively engage with forums, online communities, and documentation to leverage collective wisdom and address challenges collaboratively. This fosters a culture of continuous learning and innovation, propelling automation efforts to new heights.

Conclusion: While Selenium testing presents its share of challenges, adeptly navigating these hurdles empowers testers to realize the full potential of automation testing. By embracing best practices, leveraging complementary tools and frameworks, and fostering a collaborative testing culture, testers can overcome obstacles and unlock the true power of Selenium for test automation.

3 notes

Text

Navigating the Full Stack: A Holistic Approach to Web Development Mastery

Introduction: In the ever-evolving world of web development, full stack developers are the architects behind the seamless integration of frontend and backend technologies. Excelling in both realms is essential for creating dynamic, user-centric web applications. In this comprehensive exploration, we'll embark on a journey through the multifaceted landscape of full stack development, uncovering the intricacies of crafting compelling user interfaces and managing robust backend systems.

Frontend Development: Crafting Engaging User Experiences

1. Markup and Styling Mastery:

HTML (Hypertext Markup Language): Serves as the foundation for structuring web content, providing the framework for user interaction.

CSS (Cascading Style Sheets): Dictates the visual presentation of HTML elements, enhancing the aesthetic appeal and usability of web interfaces.

2. Dynamic Scripting Languages:

JavaScript: Empowers frontend developers to add interactivity and responsiveness to web applications, facilitating seamless user experiences.

Frontend Frameworks and Libraries: Harness the power of frameworks like React, Angular, or Vue.js to streamline development and enhance code maintainability.

3. Responsive Design Principles:

Ensure web applications are accessible and user-friendly across various devices and screen sizes.

Implement responsive design techniques to adapt layout and content dynamically, optimizing user experiences for all users.

4. User-Centric Design Practices:

Employ UX design methodologies to create intuitive interfaces that prioritize user needs and preferences.

Conduct usability testing and gather feedback to refine interface designs and enhance overall user satisfaction.

Backend Development: Managing Data and Logic

1. Server-side Proficiency:

Backend Programming Languages: Utilize languages like Node.js, Python, Ruby, or Java to implement server-side logic and handle client requests.

Server Frameworks and Tools: Leverage frameworks such as Express.js, Django, or Ruby on Rails to expedite backend development and ensure scalability.

2. Effective Database Management:

Relational and Non-relational Databases: Employ databases like MySQL, PostgreSQL, MongoDB, or Firebase to store and manage structured and unstructured data efficiently.

API Development: Design and implement RESTful or GraphQL APIs to facilitate communication between the frontend and backend components of web applications.

3. Security and Performance Optimization:

Implement robust security measures to safeguard user data and protect against common vulnerabilities.

Optimize backend performance through techniques such as caching, query optimization, and load balancing, ensuring optimal application responsiveness.
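Of the performance techniques listed above, caching is the easiest to show in a few lines. This minimal Python sketch uses the standard library's `functools.lru_cache` to memoize a slow lookup. `load_profile` is a hypothetical function, and the `time.sleep` call merely stands in for a database query.

```python
import functools
import time

call_count = 0  # tracks how many times the slow path actually runs


@functools.lru_cache(maxsize=128)
def load_profile(user_id):
    """Hypothetical slow lookup; the sleep stands in for a database query."""
    global call_count
    call_count += 1
    time.sleep(0.05)
    return {"id": user_id, "name": f"user-{user_id}"}


first = load_profile(7)    # cache miss: runs the slow path
second = load_profile(7)   # cache hit: served from memory
info = load_profile.cache_info()
```

Note that the cache hands back the same dictionary object on every hit, so callers should treat it as read-only. An in-process cache like this also never learns about changes made elsewhere, which is why production systems pair caching with an expiry or invalidation strategy.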

Full Stack Development: Harmonizing Frontend and Backend

1. Seamless Integration of Technologies:

Cultivate expertise in both frontend and backend technologies to facilitate seamless communication and collaboration across the development stack.

Bridge the gap between user interface design and backend functionality to deliver cohesive and impactful web experiences.

2. Agile Project Management and Collaboration:

Collaborate effectively with cross-functional teams, including designers, product managers, and fellow developers, to plan, execute, and deploy web projects.

Utilize agile methodologies and version control systems like Git to streamline collaboration and track project progress efficiently.

3. Lifelong Learning and Adaptation:

Embrace a growth mindset and prioritize continuous learning to stay abreast of emerging technologies and industry best practices.

Engage with online communities, attend workshops, and pursue ongoing education opportunities to expand skill sets and remain competitive in the evolving field of web development.

Conclusion: Mastering full stack development requires a multifaceted skill set encompassing frontend design principles, backend architecture, and effective collaboration. By embracing a holistic approach to web development, full stack developers can craft immersive user experiences, optimize backend functionality, and navigate the complexities of modern web development with confidence and proficiency.

#full stack developer#education#information#full stack web development#front end development#frameworks#web development#backend#full stack developer course#technology

2 notes

Text

Pioneering the Future of Software Quality Assurance through Automation Testing

Automation testing, a dynamic and essential software quality assurance technique, is reshaping the landscape of application testing. Its mission is to execute predefined test cases on applications, delivering heightened accuracy, reliability, and efficiency by automating tedious and time-consuming testing tasks.

The Present and Future Synergy in Automation Testing:

At its essence, automation testing responds to the pressing need for a streamlined testing process. Beyond its current significance, the future promises a paradigm shift, characterized by transformative trends and advancements.

Unveiling Future Trends in Automation Testing:

Proactive "Shift-Left" Testing: Embracing a proactive "Shift-Left" approach, the future of automation testing integrates testing earlier in the development life cycle. This strategic shift aims to detect and address issues at their inception, fostering a more resilient and efficient software development process.

Harmonizing with DevOps: Automation is positioned to become increasingly integral to DevOps practices. Its seamless integration into continuous integration and delivery (CI/CD) pipelines ensures not just faster but more reliable releases, aligning seamlessly with the agile principles of DevOps.

AI and Machine Learning Synergy: The convergence of artificial intelligence (AI) and machine learning (ML) is poised to revolutionize automation testing. This integration enhances script maintenance, facilitates intelligent test case generation, and empowers predictive analysis based on historical data, ushering in a new era of adaptive and efficient testing processes.

Evolving Cross-Browser and Cross-Platform Testing: In response to the diversification of the software landscape, automation tools are evolving to provide robust solutions for cross-browser and cross-platform testing. Ensuring compatibility across diverse environments becomes paramount for delivering a seamless user experience.

Codeless Automation Revolution: The ascent of codeless automation tools represents a pivotal shift in testing methodologies. This trend simplifies testing processes, enabling testers with limited programming skills to create and execute automated tests. This democratization of testing accelerates adoption across teams, fostering a collaborative testing environment.

Concluding the Journey: Navigating Future Imperatives:

In conclusion, automation testing transcends its current role as a necessity, emerging as a future imperative in the ever-evolving landscape of software development. As technologies advance and methodologies mature, automation testing is poised to play a pivotal role in ensuring the delivery of high-quality software at an accelerated pace. Embracing these future trends, the software industry is set to embark on a transformative journey towards more efficient, adaptive, and reliable testing processes.

3 notes

Text

From Algorithms to Ethics: Unraveling the Threads of Data Science Education

In the rapidly advancing realm of data science, the curriculum serves as a dynamic tapestry, interweaving diverse threads to provide learners with a comprehensive understanding of data analysis, machine learning, and statistical modeling. Choosing the Best Data Science Institute can further accelerate your journey into this thriving industry. This educational journey is a fascinating exploration of the many facets that constitute the heart of data science education.

1. Mathematics and Statistics Fundamentals:

The journey begins with a deep dive into the foundational principles of mathematics and statistics. Linear algebra, probability theory, and statistical methods emerge as the bedrock upon which the entire data science edifice is constructed. Learners navigate the intricate landscape of mathematical concepts, honing their analytical skills to decipher complex datasets with precision.

2. Programming Proficiency:

A pivotal thread in the educational tapestry is the acquisition of programming proficiency. The curriculum places a significant emphasis on mastering programming languages such as Python or R, recognizing them as indispensable tools for implementing the intricate algorithms that drive the field of data science. Learners cultivate the skills necessary to translate theoretical concepts into actionable insights through hands-on coding experiences.

3. Data Cleaning and Preprocessing Techniques:

As data scientists embark on their educational voyage, they encounter the art of data cleaning and preprocessing. This phase involves mastering techniques for handling missing data, normalization, and the transformation of datasets. These skills are paramount to ensuring the integrity and reliability of data throughout the entire analysis process, underscoring the importance of meticulous data preparation.
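Two of the techniques named here, handling missing data and normalization, can be sketched in plain Python. The example fills gaps with the column mean and then applies min-max scaling. The sample column is made up, and mean imputation is only one of several possible strategies.

```python
def impute_mean(values):
    """Replace missing entries (None) with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in values]


def min_max_normalize(values):
    """Rescale linearly so the smallest value becomes 0.0 and the largest 1.0."""
    low, high = min(values), max(values)
    if high == low:  # constant column: avoid dividing by zero
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


ages = [20, None, 40, 30, None]   # made-up column with gaps
filled = impute_mean(ages)        # gaps become 30.0, the mean of 20, 40, 30
scaled = min_max_normalize(filled)
```

After these two steps the column is `[0.0, 0.5, 1.0, 0.5, 0.5]`. In practice, libraries such as pandas and scikit-learn provide equivalents of both operations.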

4. Exploratory Data Analysis (EDA):

A vivid thread in the educational tapestry, exploratory data analysis (EDA) emerges as the artist's palette. Visualization tools and descriptive statistics become the brushstrokes, illuminating patterns and insights within datasets. This phase is not merely about crunching numbers but about understanding the story that the data tells, fostering a deeper connection between the analyst and the information at hand.
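A first pass at EDA often starts with a handful of descriptive statistics and a rough picture of the distribution. The sketch below uses Python's standard `statistics` module on a made-up sample and builds a crude text histogram. Real coursework would more likely use pandas and a plotting library.

```python
import statistics

scores = [52, 61, 61, 70, 75, 88, 97]  # made-up sample

summary = {
    "count": len(scores),
    "mean": statistics.mean(scores),
    "median": statistics.median(scores),
    "mode": statistics.mode(scores),
    "stdev": statistics.stdev(scores),  # sample standard deviation
    "min": min(scores),
    "max": max(scores),
}

# Crude text histogram: one row per bucket of width 10.
buckets = {}
for s in scores:
    start = (s // 10) * 10
    buckets[start] = buckets.get(start, 0) + 1
histogram = [
    f"{start:>3}-{start + 9:<3} {'#' * n}" for start, n in sorted(buckets.items())
]
```

Printing `summary` and the `histogram` rows is often enough to spot skew, outliers, or suspicious repeated values before any modeling begins.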

5. Machine Learning Algorithms:

The heartbeat of the curriculum pulsates with the study of machine learning algorithms. Learners traverse the expansive landscape of supervised learning, exploring regression and classification methodologies, and venture into the uncharted territories of unsupervised learning, unraveling the mysteries of clustering algorithms. This segment empowers aspiring data scientists with the skills needed to build intelligent models that can make predictions and uncover hidden patterns within data.
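To make the regression side of supervised learning concrete, here is a minimal sketch of simple linear regression fitted by ordinary least squares. The `fit_line` and `predict` names and the toy data are assumptions for illustration; courses typically use a library such as scikit-learn rather than hand-written formulas.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of x, and co-deviation of x and y.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(x, slope, intercept):
    """Apply the fitted line to a new input."""
    return slope * x + intercept


# Toy training data that lies exactly on y = 2x + 1.
slope, intercept = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
forecast = predict(5, slope, intercept)
```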

6. Real-world Application and Ethical Considerations:

As the educational journey nears its culmination, learners are tasked with applying their acquired knowledge to real-world scenarios. This application is guided by a strong ethical compass, with a keen awareness of the responsibilities that come with handling data. Graduates emerge not only as proficient data scientists but also as conscientious stewards of information, equipped to navigate the complex intersection of technology and ethics.

In essence, the data science curriculum is a meticulously crafted symphony, harmonizing mathematical rigor, technical acumen, and ethical mindfulness. The educational odyssey equips learners with a holistic skill set, preparing them to navigate the complexities of the digital age and contribute meaningfully to the ever-evolving field of data science. Choosing the best Data Science Courses in Chennai is a crucial step in acquiring the necessary expertise for a successful career in the evolving landscape of data science.

3 notes

Text

Online Trials: The Cutting-Edge Legal Battle Against Cybercriminals

The rise of cybercrime and the need for online trials.

In an era where our lives are increasingly intertwined with the digital realm, a new breed of criminals has emerged from the shadows: cybercriminals. These tech-savvy wrongdoers lurk behind screens, exploiting vulnerabilities and wreaking havoc on innocent individuals and businesses alike. As their tactics become more sophisticated, so must our approach to justice. Enter the cybercrime barristers - legal warriors armed not with swords, but with knowledge of complex algorithms and digital footprints. In this article, we delve into the rise of cybercrime and explore why online trials are not just a necessity but a critical step towards safeguarding our virtual existence.

The challenges of prosecuting cybercriminals remotely.

One of the biggest challenges in prosecuting cybercriminals remotely is the difficulty of gathering sufficient evidence. Unlike traditional criminal cases, where physical evidence and witness testimony play a crucial role, cybercrime often leaves behind few tangible traces. With hackers operating from different jurisdictions and using sophisticated techniques to cover their tracks, it's an uphill battle for law enforcement agencies to collect enough digital evidence that can withstand scrutiny in court.

Another challenge lies in the complexity of international cooperation between law enforcement agencies. Cybercriminals frequently operate across borders, making it essential for authorities to work together internationally. However, navigating the legal and procedural hurdles of different countries can be time-consuming and arduous. Mutual legal assistance treaties may exist between nations, but discrepancies in laws and regulations can hinder seamless collaboration. This lack of harmonization poses a significant obstacle to prosecuting cybercriminals effectively on a global scale.

Moreover, remote prosecutions also face logistical challenges due to the decentralized nature of cybercrime investigations. Traditional courtroom procedures are not always well-suited for handling complex digital evidence or conducting virtual interviews with witnesses residing in different parts of the world. The legal system needs to adapt by implementing robust protocols for remote hearings and streamlined processes for dealing with digital evidence that ensures accuracy while safeguarding privacy rights.

Overall, prosecuting cybercriminals remotely presents a myriad of challenges that require innovative solutions and improved cross-border cooperation among law enforcement agencies worldwide. As technology continues to evolve rapidly, so must our approaches to combatting cybercrime effectively on a global scale.

Emerging technologies aiding in online trials.

Emerging technologies are revolutionizing the way online trials are conducted, bringing unprecedented efficiency and convenience to legal proceedings. One such technological advancement is virtual reality (VR), which allows jurors to fully immerse themselves in a simulated courtroom environment from the comfort of their homes. This technology not only eliminates geographical barriers but also enhances the courtroom experience by offering interactive elements like 3D visualizations and exhibits.

Another crucial technology that is aiding in online trials is artificial intelligence (AI). With AI-powered algorithms, legal professionals can now quickly analyze vast amounts of data and identify relevant information for their cases. This significantly reduces the time taken for document review processes, thus expediting the trial process as a whole. Additionally, AI-based chatbot systems are being used to provide instant legal advice to litigants and streamline communication between lawyers and clients.

In conclusion, cyber crime barristers in London are leveraging emerging technologies to facilitate efficient and effective online trials. The integration of virtual reality offers an immersive courtroom experience for jurors, eliminating geographical constraints. Meanwhile, artificial intelligence helps lawyers streamline tedious tasks such as document review while providing quicker access to vital information. As technology continues to evolve at a rapid pace, there is no doubt that its impact on online trials will continue to shape the future of the legal landscape.

Legal implications and concerns surrounding virtual courtrooms.

Virtual courtrooms have quickly become a necessity in the legal world, allowing barristers and judges to continue their work during the global pandemic. However, with this shift towards virtual proceedings comes a host of legal implications and concerns. One of the main concerns is the potential for cybercrime to compromise the integrity of these virtual courtrooms. As cybercrime continues to evolve, it poses a significant threat not only to individuals but also to entire judicial systems.

The rise of cybercrime has forced courts to reevaluate their security measures when conducting virtual hearings. Accessing sensitive information or tampering with evidence in a virtual courtroom setting can have severe consequences, potentially resulting in wrongful convictions or compromised cases. To combat this threat, courts must invest in robust cybersecurity systems and protocols capable of safeguarding against hacking attempts and data breaches.
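One common building block for detecting tampering with digital evidence is a cryptographic fingerprint recorded when the file is first collected. The sketch below uses SHA-256 from Python's standard library; the function names and the sample bytes are hypothetical, and a real chain-of-custody system would involve much more (signatures, access logs, secure storage).

```python
import hashlib
import hmac


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 digest of the evidence bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_unchanged(data: bytes, recorded_digest: str) -> bool:
    """Check the current bytes against the digest recorded at collection time."""
    # compare_digest compares in constant time, which avoids timing leaks.
    return hmac.compare_digest(fingerprint(data), recorded_digest)


original = b"exhibit A: server log excerpt"  # hypothetical evidence bytes
recorded = fingerprint(original)             # stored when the exhibit is filed

intact = is_unchanged(original, recorded)
altered = is_unchanged(b"exhibit A: server log excerpt (edited)", recorded)
```

Any change to the file, even a single byte, produces a different digest, so a mismatch signals that the exhibit is no longer the one originally filed.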

Additionally, there are ethical considerations that arise from using virtual platforms for court proceedings. Privacy concerns may arise when witnesses testify remotely from their own homes or offices, as it becomes difficult to ensure that they are not being coerced or influenced by external factors. Furthermore, issues related to authentication and identification can jeopardize the integrity of a case when relying solely on video conferencing technology.

In conclusion, while virtual courtrooms offer convenience and flexibility for legal professionals and litigants alike, there are significant legal implications and concerns that must be addressed. With cybercrime threats evolving rapidly, it is crucial for courts to prioritize cybersecurity measures to protect sensitive information and preserve the integrity of justice within these digital spaces. Ethical

Success stories of online trials against cybercriminals.

In recent years, cybercrime has skyrocketed, leaving individuals and businesses vulnerable to an array of online threats. However, amid this wave of illicit activity there is a ray of hope: the successful prosecution of cybercriminals through online trials. Cyber crime barristers in London have been at the forefront of this battle, using their expertise to bring justice to victims and dismantle criminal networks.

One success story involves the case against a notorious hacking group that specialized in stealing personal information for financial gain. With the help of skilled cyber crime barristers, law enforcement agencies were able to gather substantial evidence and build a solid case against these criminals. The trial unfolded virtually, with witnesses testifying via video conferencing and experts providing invaluable insights remotely. The result was not only the conviction of several key players but also the identification and shutdown of their network which had infected thousands of computers worldwide.

Another noteworthy instance showcases how online trials have brought down international cyber syndicates operating across borders. Here, multiple jurisdictions collaborated seamlessly under the guidance of experienced cyber crime barristers based in London. Through innovative legal frameworks and agile investigative techniques, prosecutors were able to overcome logistical challenges posed by geographical distance. As a result, high-profile cybercriminals who once seemed invincible found themselves facing justice as evidence was meticulously presented before virtual courts.

These success stories highlight how digital platforms are now proving to be instrumental in bringing cybercriminals to justice. With talented cybersecurity professionals guiding investigations and skilled barristers leading prosecutions online, law enforcement agencies are growing increasingly proficient

The future of online trials and cybersecurity measures.

The evolution of technology has reshaped the way we approach trials, with online trials becoming a viable option in recent years. As we move into the future, it's clear that virtual courtrooms will play an increasingly significant role in our justice system. However, with this shift comes new challenges and risks, particularly in terms of cybersecurity.

In a world where cybercrime is on the rise, barristers and legal professionals must remain vigilant to protect sensitive information and ensure fair proceedings. Implementing robust cybersecurity measures becomes paramount to safeguard all parties involved in online trials. This includes using encrypted communication platforms and secure file-sharing systems and enforcing strict authentication protocols for participants. Additionally, educating judges, lawyers, and even clients about potential cyber threats can help mitigate risks and create a more secure environment for remote hearings.

As technology advances further with artificial intelligence (AI) and machine learning algorithms being introduced into legal processes, there is also a need to address potential vulnerabilities associated with these advancements. AI-powered decision-making systems should be subject to regular audits that assess their fairness and accuracy while identifying any biases they may have acquired during training. It's crucial to strike a delicate balance between embracing the convenience offered by the digital age and upholding security standards that maintain trust within our judicial system.
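One simple check that such an audit might include is the demographic parity gap: the difference in favorable-decision rates between groups. The sketch below is an illustration only; the function name, the group labels, and the sample decisions are hypothetical, and a single metric like this cannot establish that a system is fair.

```python
def demographic_parity_gap(decisions, groups):
    """Return (gap, rates): the spread in favorable-decision rates across groups.

    decisions: list of 0/1 outcomes, where 1 is the favorable decision.
    groups: list of group labels, aligned with decisions.
    """
    by_group = {}
    for decision, group in zip(decisions, groups):
        by_group.setdefault(group, []).append(decision)
    rates = {g: sum(ds) / len(ds) for g, ds in by_group.items()}
    gap = max(rates.values()) - min(rates.values())
    return gap, rates


# Hypothetical audit sample: eight decisions across two groups.
decisions = [1, 1, 0, 1, 0, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap, rates = demographic_parity_gap(decisions, groups)
```

A large gap does not prove bias on its own, but it flags where auditors should look more closely at the training data and the decision logic.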

Cybersecurity concerns surrounding online trials require continuous adaptation as hackers become more sophisticated in their methods of attack. By staying ahead of emerging threats through proactive monitoring, investment in secure technologies, knowledge sharing among legal professionals specializing in cybercrime defense or prosecution — we

Conclusion: Transforming the legal landscape in cyberspace.

In today's digital age, the legal landscape in cyberspace is undergoing a transformative shift, and cyber crime barristers in London are at the forefront of this revolution. With the rapid advancement of technology, criminals have found new ways to exploit vulnerabilities in cyberspace, leading to an unprecedented increase in cybercrime cases. As a result, there is a growing demand for specialized legal professionals who have deep knowledge and expertise in dealing with these sophisticated and evolving crimes.

The role of cyber crime barristers extends beyond traditional courtroom settings. They often collaborate with law enforcement agencies and cybersecurity experts to gather crucial evidence and identify culprits hiding behind layers of anonymity on the internet. Additionally, they can provide invaluable advice to businesses on how to protect themselves against cyber threats proactively. Moreover, with many countries lacking adequate legislation related to cybercrime, these legal professionals play an essential role in shaping policies that will govern cyberspace effectively.

In conclusion, as technology continues to advance at an astonishing pace, it is imperative that our legal system keeps up with this evolution. Cyber crime barristers in London are playing an instrumental role in transforming the legal landscape concerning cyberspace by providing expert guidance on combating cybercrime and shaping policies that safeguard individual rights online. Their work not only helps bring perpetrators of digital crimes to justice but also ensures that individuals and businesses alike can navigate the complexities of cyberspace securely. Through their dedication and expertise, these legal professionals are reshaping our understanding of law enforcement efforts needed urgently within the realm

3 notes

Text

#lean data consulting#data harmonization techniques#data quality best practices#data harmonization process

0 notes

Text

What Is the Coherence Method (And How Does It Actually Work?)

In times of chronic stress and overextension, true resilience isn’t built by pushing harder—it’s cultivated through restoring balance. The Coherence Method is a research-informed approach that helps individuals shift from survival mode into sustainable peak performance by aligning the body and mind through scientifically grounded practices.

Developed at Quantum Clinic, the Coherence Method integrates three core modalities—biofeedback training in heart-brain coherence, Floatation REST with frequency therapy, and expressive arts integration. Together, these practices promote regulation of the autonomic nervous system, support neuroplasticity, and offer a path to deep restoration and renewed vitality.

Let’s explore the science of coherence, why HRV regulation matters, and how this method works in practice.

The Science of Coherence and High Performance

Coherence is a physiological state in which the rhythms of the heart, breath, and nervous system are harmonized. When these systems enter synchronization—especially through synchronized breathing therapy—they support optimal function of the brain, immune system, and emotional regulation centers.

Scientific studies show that healing with heart-brain coherence leads to:

Improved heart rate variability (HRV), a key marker of stress resilience

Increased vagal tone and parasympathetic activation

Enhanced cognitive clarity and decision-making

Greater emotional adaptability and recovery from adversity

Unlike momentary relaxation techniques, coherence is a trainable, measurable state that reflects how efficiently your body responds to internal and external demands. It’s not just a feeling—it’s a biological signature of resilience.

Biofeedback and HRV Regulation: Training the Nervous System

The first step of the Coherence Method involves biofeedback training to enhance awareness of physiological signals like heart rate variability (HRV). Using real-time feedback tools, clients learn to regulate their nervous system through guided techniques such as synchronized breathing therapy and focused attention.
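For readers curious how HRV is quantified, one widely used time-domain measure is RMSSD, the root mean square of successive differences between heartbeat intervals. The sketch below assumes a list of RR intervals in milliseconds; the sample values are invented, and the source does not say which HRV metric the biofeedback tools described here actually display.

```python
import math


def rmssd(rr_intervals_ms):
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Hypothetical beat-to-beat intervals in milliseconds.
rr = [800, 810, 790, 805]
hrv = rmssd(rr)
```

Higher RMSSD values reflect more beat-to-beat variation, which is generally associated with stronger parasympathetic activity.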

Imagine watching your heartbeat shift on the screen as your breath begins to slow—your physiology responding in real time to your intention. With each inhale and exhale, a pattern of calm begins to emerge, not just felt emotionally, but seen and tracked in your body’s own data.

By actively cultivating coherence between the heart and brain, individuals can improve self-regulation, reduce reactivity, and strengthen mental focus. For high performers, this creates a foundation for sustained presence under pressure—without sacrificing wellbeing.

This practice engages both the prefrontal cortex (responsible for planning and decision-making) and the autonomic nervous system, bridging the top-down and bottom-up pathways essential for long-term change.

Floatation REST and Frequency Therapy: Restoring from the Inside Out

After establishing coherence through biofeedback, clients move into an immersive Floatation REST session enhanced with frequency-based entrainment. In this buoyant, near-weightless environment, the nervous system naturally downregulates, allowing for deep physiological recovery.

Imagine slipping into warm salt water, weightless and still. Your mind quiets, your breath slows. This kind of deep rest calms your entire system—like pressing a reset button for your brain and body. Float sessions support:

Non-sleep deep rest (NSDR), shown to improve memory consolidation and emotional processing

Theta-dominant brainwave activity, linked to insight, creativity, and trauma resolution

Increased HRV and parasympathetic tone, essential for healing and performance recovery

When paired with coherence practices, float therapy amplifies neuroplastic changes and supports deep embodiment of regulation. Rather than a passive experience, it becomes an active container for healing and integration.

Expressive Arts Integration: Anchoring Coherence into Daily Life

After restoring balance through breath and rest, many people ask, “Now what?” That’s where expressive arts integration comes in. This final step helps make the invisible changes you’ve experienced—slower breath, calmer heart, clearer mind—feel real and lasting in everyday life.

You might find yourself sketching the feeling of stillness that came over you in the float tank, writing a stream-of-consciousness letter to your future self, or moving your body in a way that expresses something words can’t quite reach. These aren’t art projects—they’re pathways to insight.

By engaging your senses and creativity, your nervous system starts to reorganize around the new patterns you just practiced. In neuroscience terms, emotional learning becomes sticky when we give it shape, color, and rhythm. In practical terms, it means you leave not just feeling different—but knowing what that difference means for your life.

This is where coherence shifts from a state you enter… to a way you begin to live.

Why the Coherence Method Works

The Coherence Method is designed to work across multiple layers of human functioning:

Physiological: Through HRV training and floatation REST, the method restores nervous system balance and supports systemic recovery.

Neurological: By entraining heart-brain pathways, the method improves attentional control, emotional regulation, and neuroplasticity.

Psychological: Through expressive arts, the method integrates insight and emotion into lasting personal growth.

Each modality complements the others, creating a feedback loop that strengthens the internal coherence needed for external performance.

Healing with Heart-Brain Coherence: A New Paradigm for Resilience

In an age of overstimulation, the true edge isn’t speed—it’s stability. The Coherence Method offers a structured, evidence-based approach to build that stability from within. Whether you’re seeking to recover from chronic stress, elevate your performance, or reconnect with a sense of purpose, this method meets you at the intersection of rest and readiness.

It’s not about bypassing challenge—it’s about training the internal conditions to meet life with clarity, presence, and power.

Book your first appointment today, and experience what it means to operate from true alignment. Not by effort—but through attunement.

#Coherence Method#Heart-Brain Coherence#HRV Training#Floatation REST Therapy#Frequency Therapy#Expressive Arts Therapy#Nervous System Regulation#Stress Recovery#Neuroplasticity#Trauma-Informed Healing#Peak Performance#Emotional Regulation#Vagal Tone#Non-Sleep Deep Rest#Somatic Healing#Mind-Body Integration#Resilience Training#Burnout Recovery#Conscious Breathwork#Theta Brainwaves

0 notes

Text

Strengthening Digital Presence Through Modern Marketing and Web Development

The Digital Evolution and Why Businesses Must Adapt

In today’s rapidly shifting business world, having a strong online presence isn’t optional; it’s essential. Every industry is witnessing an intense digital transformation, and a digital marketing company in India plays a key role in helping businesses adapt. From improving brand visibility to generating leads, digital strategies drive success in competitive markets. The need for professional guidance in this space is more pressing than ever, especially as online platforms become primary sources of customer engagement, brand communication, and long-term loyalty.

Understanding the Role of Strategic Digital Marketing

A digital marketing company in India typically goes far beyond simply running ads or managing social media. It employs data-driven techniques to understand target audiences, refine messaging, and deliver measurable outcomes. These firms integrate search engine optimization, content strategy, pay-per-click advertising, and performance tracking to create a holistic approach. The result is not only enhanced online exposure but also improved customer trust and brand authority. Businesses that invest in this support are often better positioned to scale and pivot as market demands evolve.

How Technology Fuels Marketing Impact

The digital world is powered by evolving tools and technologies that continue to reshape how companies interact with potential clients. Artificial intelligence, marketing automation, and analytics have become common in most campaigns, allowing a digital marketing company in India to deliver more accurate, timely, and personalized experiences. This technological integration ensures businesses aren’t just visible; they’re memorable. Through continuous refinement, brands can keep pace with audience behavior, staying ahead of competitors who still rely on outdated methods or guesswork.

Web Development as the Backbone of Online Identity

Behind every high-performing brand lies a technically sound and user-friendly website. A website development company is instrumental in shaping this digital foundation. Functionality, design, speed, and mobile responsiveness are not just technical metrics; they influence how customers perceive a business. A professionally developed website is often a company’s first impression and can determine whether a visitor becomes a long-term customer. When tailored to meet specific business goals, a website becomes more than a tool; it becomes a powerful asset.

Why Web Development Must Align With Strategy

Creating a website in isolation is rarely effective. For maximum impact, a website development company must work in tandem with marketing objectives. This alignment ensures that every page, feature, and function supports the broader brand narrative and conversion goals. Whether through intuitive navigation, engaging visuals, or optimized code, the focus remains on user experience. A well-integrated site improves retention, reduces bounce rates, and enhances customer interaction, all of which contribute to sustainable business growth and credibility in the digital space.

Building Digital Trust Through Seamless Experiences

Online trust is built through consistent, seamless interactions. Both marketing and development contribute to this effort. A reliable website development company ensures technical performance, while marketing bridges the emotional and informational gaps. Together, they create experiences that encourage users to engage, inquire, and convert. In a marketplace where first impressions matter, businesses that harmonize these services gain a distinct edge, especially when expansion and brand positioning are key priorities.

Long-Term Success Through Integrated Solutions

Choosing the right digital partner is crucial for lasting impact. Businesses must look beyond short-term metrics and focus on how their digital assets contribute to long-term value. Firms that combine marketing with development bring deeper insights and more adaptable solutions. This is where an established digital marketing company in India often proves invaluable. With localized understanding and global techniques, such partners craft scalable strategies that can withstand market fluctuations while continuing to deliver results.

0 notes

Text

Human Rabies Vaccines Market Disruptive Innovation Transforming Global Prevention Landscape

The human rabies vaccines market is experiencing significant upheaval as manufacturers, regulators, and public health agencies adapt to new challenges. From advanced vaccine platforms and alternative delivery methods to digital tracking and data-driven decision-making, this market’s disruption is spurring faster innovation cycles, improved access in underserved regions, and new collaboration models. In this context, stakeholders must navigate shifting regulatory frameworks, supply chain vulnerabilities, and evolving funding landscapes to ensure effective, equitable protection against rabies globally.

1. Novel Vaccine Technologies

Recent years have seen a surge in advanced vaccine platforms aimed at improving immunogenicity, safety, and dosing efficiency. Recombinant DNA and viral-vector vaccines are entering clinical trials, offering the potential for single-dose protection and longer shelf life. Additionally, thermostable formulations that tolerate higher temperatures could revolutionize vaccination drives in regions with limited cold-chain infrastructure. These innovations not only reduce logistical barriers but also promise cost savings, as reduced doses and simplified handling translate to lower overall program expenses.

2. Intradermal and Alternative Delivery Methods

Traditional intramuscular regimens require multiple visits and skilled healthcare personnel. Intradermal administration, using a fraction of the dose per injection, has demonstrated comparable immune responses and significant dose-sparing benefits. Meanwhile, research into microneedle patches and needle-free jet injectors aims to simplify administration further, enhance patient compliance, and minimize needle-stick injuries. Adoption of these techniques could dramatically expand vaccination coverage, especially in remote or resource-constrained settings where trained staff are in short supply.

3. Supply Chain and Distribution Innovations

The COVID-19 pandemic exposed fragilities in global vaccine supply chains. In response, manufacturers and logistics providers are investing in digital tracking systems, blockchain-enabled provenance verification, and AI-driven demand forecasting. These tools improve visibility across the cold chain, reduce waste from spoilage, and optimize distribution schedules. Decentralized manufacturing—leveraging modular, portable bioreactors—also promises to localize production, cut transportation delays, and mitigate risks associated with geopolitical disruptions or raw-material shortages.

4. Regulatory Landscape Shifts

Regulators worldwide are recalibrating approval pathways to accelerate access to next-generation vaccines. Emergency-use authorizations and rolling reviews have become more common, reducing time-to-market for critical products. Harmonization efforts—such as reliance procedures between agencies—are streamlining dossier requirements and encouraging multinational clinical trials. However, balancing expedited approvals with rigorous safety assessments remains a challenge, particularly given the high stakes of rabies prevention in both endemic and non-endemic regions.

5. Public Health Initiatives and Partnerships

Global health organizations, national governments, and private foundations are renewing commitments to eliminate human rabies deaths by 2030. Public–private partnerships are funding mass vaccination campaigns for domestic dogs—critical reservoirs of zoonotic transmission—while integrating human vaccine efforts into broader One Health strategies. Innovative financing mechanisms, including advance market commitments and impact bonds, are aligning incentives for manufacturers to scale production and lower prices, particularly for low- and middle-income countries.

6. Digital Health and Data Integration

Data-driven insights are reshaping vaccine strategy and outreach. Mobile health (mHealth) platforms enable real-time monitoring of vaccination status, adverse events, and cold-chain integrity. Geospatial analytics help identify coverage gaps and optimize campaign routes. Moreover, AI-powered epidemiological models predict outbreak hotspots, guiding preemptive vaccine stockpiling. As digital infrastructure expands, these tools will be indispensable for tailoring interventions, improving accountability, and demonstrating program impact to funders and policymakers.

Conclusion

Disruptions in the human rabies vaccines market are converging to accelerate innovation, strengthen global collaboration, and enhance access to life-saving prophylaxis. Stakeholders who embrace novel technologies, flexible regulatory frameworks, and data-centric approaches will be best positioned to meet the 2030 elimination goal. Continued investment in alternative delivery methods, supply chain resilience, and integrated public health initiatives will be crucial for turning these disruptions into sustainable solutions—ultimately safeguarding millions from a preventable yet devastating disease.

0 notes