# Download mongodb terminal mac


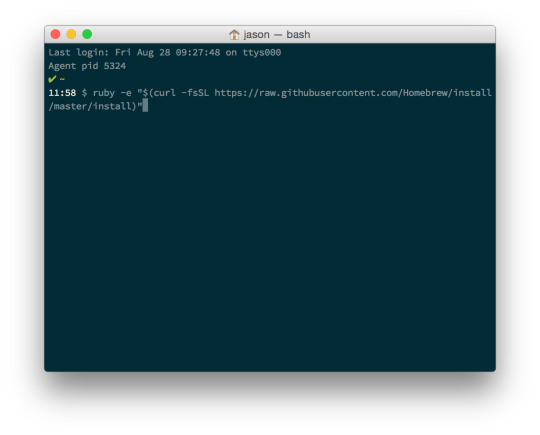

The Terminal app is in the Utilities folder in Applications. To open it, either open your Applications folder, then open Utilities and double-click on Terminal, or press Command-space to launch Spotlight and type "Terminal," then double-click the search result. You’ll see a small window with a white background open on your desktop. In the title bar are your username, the name of the shell (for example "bash"), and the window's dimensions in columns and rows. Bash stands for "Bourne again shell." There are a number of different shells that can run Unix commands; Bash was Terminal's default shell on the Mac until macOS Catalina, which switched the default to zsh. If you want to make the window bigger, click on the bottom right corner and drag it outwards. If you don’t like the black text on a white background, go to the Shell menu, choose New Window and select from the options in the list.

If Terminal feels complicated or you have issues with the setup, there are alternatives. MacPilot gives access to over 1,200 macOS features without memorizing any commands; it is basically a third-party Terminal for Mac that acts like Finder. For Mac monitoring features, try iStat Menus. The app collects data like CPU load, disk activity, network usage, and more, all of which are accessible from your menu bar.

The quickest way to get to know Terminal and understand how it works is to start using it. But before we do that, it’s worth spending a little time getting to know how commands work. To run a command, you just type it at the cursor and hit Return to execute.

Every command comprises three elements: the command itself, an argument that tells the command what resource it should operate on, and an option that modifies the output. So, for example, to move a file from one folder to another on your Mac, you’d use the "move" command mv and then type the location of the file you want to move, including the file name, and the location where you want to move it to. Type cd ~/Documents and press Return to navigate to your Documents folder.


Mastering Node.js: The Complete Guide for Developers

“Node.js is like a breath of fresh air in the world of web development, allowing developers to build fast and scalable applications with ease.” Node.js is a JavaScript runtime that allows you to build fast, scalable network applications. Some of the biggest companies in the world, such as Netflix, Uber, and PayPal, use Node.js. Let's start with an example: suppose you are a developer who has been asked to build a chat application capable of handling thousands of concurrent users. Node.js is well suited to that job, because its event-driven, non-blocking I/O model keeps it lightweight and efficient and lets a single server handle a large number of connections.

But where do you start? This comprehensive guide will serve as your roadmap, providing developers with all the knowledge they need to get started with Node.js development.

Installing Node.js

To get started with Node.js, the first thing developers need to do is install it. The easiest way is to go to the official Node.js website and download the installer for your system. On Windows and Mac, the installer is an executable that guides you through the installation process. On Linux, developers can install Node.js through their package manager (like apt or yum), or download the source code and compile it.

Once the installation completes, open your terminal and run the command node -v to verify Node is installed. Developers should see the version number printed out, like v12.14.1. This confirms that Node.js is successfully installed on the system, and developers are ready to start building Node.js applications and exploring its features.

Because Node.js runs JavaScript code outside of a browser, developers write their Node apps in .js files. They can run a file directly with the node command, such as node my_app.js.

Node comes bundled with a package manager called npm (Node package manager), which makes it easy to install third-party packages and libraries to use in apps. Developers access it through the npm command. Some of the most popular npm packages for Node are Express (for web servers), installed with npm install express; Socket.IO (for real-time apps), installed with npm install socket.io; and the MongoDB driver (for databases), installed with npm install mongodb.

Developers’ first Node.js Application

To build the first Node.js app, developers need Node.js installed on their machines:

Install Node.js from the official Node.js website (https://nodejs.org).

Once installed, open the terminal or command prompt and run the following command to check your Node.js version: node -v

Create and Run the Application

Create a file named app.js in your desired directory.

Open app.js in a code editor and add the following code:

console.log('Hello World!');

Save the app.js file and go back to your terminal or command prompt.

Navigate to the directory where you created app.js using the cd command.

Run the following command to execute your Node.js application: node app.js

You should see “Hello World!” printed in the console output, indicating that your application has run successfully.

Node.js Core Modules: Utilizing the Built-in Functionality

Node.js comes bundled with a variety of core modules that provide useful functionality. These built-in modules allow developers to do things like the following.

File System Access:

fs (File System):

The “fs” module allows you to work with the file system, enabling file operations such as reading, writing, deleting, and modifying files. Developers can use this module to create, read, and write files, as well as perform operations on directories. For example, fs.readFile() reads data from a file.

Reading a file using the fs module:


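    const fs = require('fs');

    // Read file.txt as UTF-8 text; the callback runs once the read finishes.
    fs.readFile('file.txt', 'utf8', (err, data) => {
      if (err) {
        // Reading failed (for example, the file does not exist).
        console.error(err);
        return;
      }
      console.log(data);
    });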
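The fs description above also mentions writing files and working with directories. As a minimal sketch of those operations, the synchronous variants of the same module can be tried in a throwaway temporary directory. The `fs-demo-` prefix and the `notes.txt` file name are made up for illustration and are not part of the original article.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Create a scratch directory so the example never touches real files.
// The 'fs-demo-' prefix is an arbitrary, hypothetical name.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'fs-demo-'));
const file = path.join(dir, 'notes.txt');

fs.writeFileSync(file, 'first line\n', 'utf8');   // create (or overwrite) the file
fs.appendFileSync(file, 'second line\n', 'utf8'); // modify it by appending

const contents = fs.readFileSync(file, 'utf8');   // read it back as a string
const entries = fs.readdirSync(dir);              // list the directory

console.log(contents);
console.log(entries);

// Delete the file, then the now-empty directory.
fs.unlinkSync(file);
fs.rmdirSync(dir);
const cleanedUp = !fs.existsSync(dir);
```

The synchronous calls keep the sketch short; in a server that handles many connections, the callback or promise-based versions are usually preferable because they do not block the event loop.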

HTTP Server

The HTTP module allows you to create HTTP servers and make requests. Developers can use it to serve static files, build REST APIs, and more. For example:

    const http = require('http');

    // Answer every request with a plain-text greeting.
    const server = http.createServer((req, res) => {
      res.statusCode = 200;
      res.setHeader('Content-Type', 'text/plain');
      res.end('Hello, World!');
    });

    server.listen(3000, () => {
      console.log('Server running on port 3000');
    });

The HTTP module, combined with the fs module, allows you to serve static files like HTML, CSS, and JavaScript to build full web applications.
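One way the http and fs modules might be combined to serve static files is sketched below. The helper names (`contentTypeFor`, `resolveStaticPath`, `createStaticServer`), the `public` directory, and the tiny MIME table are assumptions made for this illustration; the original article does not show an implementation, and a production server would need caching, streaming, and a fuller MIME map.

```javascript
const fs = require('fs');
const http = require('http');
const path = require('path');

// Small, deliberately incomplete extension-to-MIME map (illustrative only).
const MIME_TYPES = {
  '.html': 'text/html',
  '.css': 'text/css',
  '.js': 'text/javascript',
};

function contentTypeFor(filePath) {
  const ext = path.extname(filePath).toLowerCase();
  return MIME_TYPES[ext] || 'application/octet-stream';
}

// Map a request URL onto a file under rootDir. Returns null when the URL is
// malformed or the resolved path would escape rootDir (for example via "../").
function resolveStaticPath(rootDir, requestUrl) {
  let pathname;
  try {
    pathname = decodeURIComponent(requestUrl.split('?')[0]);
  } catch (err) {
    return null; // malformed percent-encoding
  }
  const relative = pathname === '/' ? 'index.html' : pathname.replace(/^\/+/, '');
  const root = path.resolve(rootDir);
  const resolved = path.resolve(root, relative);
  return resolved.startsWith(root + path.sep) ? resolved : null;
}

// Build (but do not start) a server that serves files from rootDir.
function createStaticServer(rootDir) {
  return http.createServer((req, res) => {
    const filePath = resolveStaticPath(rootDir, req.url);
    if (!filePath) {
      res.writeHead(404, { 'Content-Type': 'text/plain' });
      res.end('Not found');
      return;
    }
    fs.readFile(filePath, (err, data) => {
      if (err) {
        // Missing file, directory, or permission problem: report 404.
        res.writeHead(404, { 'Content-Type': 'text/plain' });
        res.end('Not found');
        return;
      }
      res.writeHead(200, { 'Content-Type': contentTypeFor(filePath) });
      res.end(data);
    });
  });
}
```

Calling `createStaticServer('public').listen(3000)` would start serving files from a `public` folder next to the script. The path check is there because Node's http module passes the request URL through as-is, so a request containing `../` could otherwise read files outside the intended folder.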

Third-Party Modules: The NPM Registry

The Node Package Manager (NPM) registry hosts over 1 million open-source Node.js packages developers can download and use in their projects.

Some of the benefits of using NPM packages include:

They are open source, so developers are free to use and modify them.

They are actively maintained and updated by the community.

They solve common problems, so developers can avoid reinventing the wheel.

They are easy to install and integrate with developers’ apps.

Node.js Frameworks and Tools

Node.js has a strong ecosystem of tools and frameworks which help to accelerate the development process. The main players are listed below:

Express: Express is the most popular web framework for Node.js. It facilitates the development of web apps and APIs. Express also offers middleware, routing, and other practical features to help you get up and running quickly.

Koa: It is another web framework that uses newer JavaScript capabilities like async/await and is lighter than Express.

Socket.IO: It provides real-time communication between developers’ Node.js servers and clients. It’s great for building chat apps, online games, and other interactive experiences.

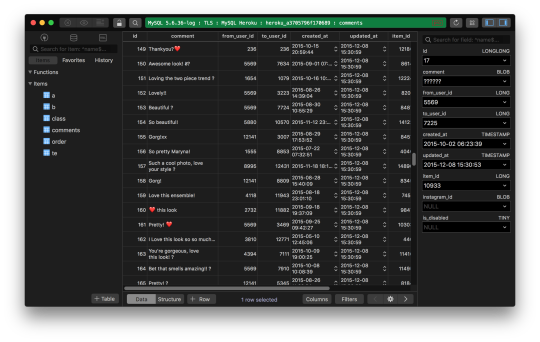

MongoDB: It is a popular NoSQL database that pairs well with Node.js web applications. The Mongoose ODM (Object-Document Mapper) makes it easy to model your data and query MongoDB from Node.js.

Yarn and npm are package managers that make it easy to add third-party libraries and dependencies to Node.js projects.

These are some of the tools and frameworks used in Node.js development. The Node.js ecosystem is vast and continues to grow rapidly.
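To make the event-driven style behind real-time tools such as Socket.IO more concrete, here is a small in-memory sketch that uses only Node's built-in EventEmitter. It is not Socket.IO's API and contains no network code; the `ChatRoom` class and its `join` and `say` methods are hypothetical names chosen for this example.

```javascript
const { EventEmitter } = require('events');

// Hypothetical in-memory chat room: no sockets, only the event pattern.
class ChatRoom extends EventEmitter {
  constructor() {
    super();
    this.members = new Set();
  }

  // Register a member and notify listeners.
  join(name) {
    this.members.add(name);
    this.emit('joined', name);
  }

  // Broadcast a message from a member who has already joined.
  say(name, text) {
    if (!this.members.has(name)) {
      throw new Error(`${name} has not joined the room`);
    }
    this.emit('message', { from: name, text });
  }
}

const room = new ChatRoom();
const transcript = [];

// Listeners react to events instead of polling for changes.
room.on('joined', (name) => transcript.push(`${name} joined`));
room.on('message', ({ from, text }) => transcript.push(`${from}: ${text}`));

room.join('ada');
room.join('lin');
room.say('ada', 'hello');

console.log(transcript.join('\n'));
```

A real chat server would emit these events in response to network traffic, with a library such as Socket.IO handling the connections; the listener registration pattern stays much the same.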

Conclusion:

So, Node.js is an incredibly powerful tool for building fast and scalable network applications. With its event-driven, non-blocking I/O model, Node.js is efficient and lightweight. Throughout this comprehensive guide, we covered the basics of getting started with Node.js development, including setting up the development environment, frameworks, and tools.

So, if you are looking to build Node.js applications and need a reliable development team, consider working with EOV. EOV is known for providing top Node.js developers who are experienced in building scalable and high-performance applications.


In this article we provide the steps for installing UniFi Network Application / UniFi Controller on an Ubuntu 18.04 / Debian 9 Linux system. Ubiquiti offers a wide range of Access Points, Switches, Firewall devices, Routers, Cameras, and many other appliances which are managed from a single point. The commonly used management interface is provided by UniFi Dream Machine Pro.

The UniFi Network Application (formerly UniFi Controller) is a wireless network management software solution from Ubiquiti Networks™. This tool provides the capability to manage multiple UniFi network devices from a web browser. UniFi Network Application can be installed on Windows, macOS and Linux operating systems.

In the guide that we did earlier, we covered the installation process on macOS: Install UniFi Network Application on macOS

For running in Docker see this guide: How To Run UniFi Controller in Docker Container

Below are the installation requirements for UniFi Network Application:

A DHCP-enabled network

Linux, Mac OS X, or Microsoft Windows 7/8 running the controller software

Java Runtime Environment 8

Web Browser: Mozilla Firefox, Google Chrome, or Microsoft Internet Explorer 8 (or above)

For UniFi Network Application installation on Linux, the supported operating systems as of this article update are:

Ubuntu 18.04 and 16.04

Debian 9 / Debian 8

Software version requirements:

Java 8 (My test with Java 17 and Java 11 failed).

MongoDB =3.6 (We’ll install MongoDB 4.0)

Before you proceed further, query the OS details in the /etc/os-release file to ensure the OS version requirement is met.
    $ cat /etc/os-release
    NAME="Ubuntu"
    VERSION="18.04.6 LTS (Bionic Beaver)"
    ID=ubuntu
    ID_LIKE=debian
    PRETTY_NAME="Ubuntu 18.04.6 LTS"
    VERSION_ID="18.04"
    HOME_URL="https://www.ubuntu.com/"
    SUPPORT_URL="https://help.ubuntu.com/"
    BUG_REPORT_URL="https://bugs.launchpad.net/ubuntu/"
    PRIVACY_POLICY_URL="https://www.ubuntu.com/legal/terms-and-policies/privacy-policy"
    VERSION_CODENAME=bionic
    UBUNTU_CODENAME=bionic

From the output we can see this installation is on Ubuntu 18.04 (Bionic Beaver), which is supported.

Add UniFi and MongoDB APT repositories

It’s always a good recommendation to keep your system updated. Run the commands below to update your OS.

    sudo apt update && sudo apt -y full-upgrade

After the update perform a reboot if it’s required.

    [ -f /var/run/reboot-required ] && sudo reboot -f

Install software packages required to configure UniFi and MongoDB APT repositories.

    sudo apt install curl gpg gnupg2 software-properties-common apt-transport-https lsb-release ca-certificates

Add UniFi APT repository

Import repository GPG key used in signing UniFi APT packages.

    sudo wget -O /etc/apt/trusted.gpg.d/unifi-repo.gpg https://dl.ui.com/unifi/unifi-repo.gpg

Add UniFi APT repository by executing commands below in your terminal.

    echo 'deb https://www.ui.com/downloads/unifi/debian stable ubiquiti' | sudo tee /etc/apt/sources.list.d/ubnt-unifi.list

Add MongoDB APT repository

Start by adding GPG key to your system keyring.

    wget -qO - https://www.mongodb.org/static/pgp/server-4.0.asc | sudo apt-key add -

You should get a message in the output that says “OK” if this was successful. Next add repository to your system.
    ### Ubuntu 18.04 ###
    echo "deb https://repo.mongodb.org/apt/ubuntu bionic/mongodb-org/4.0 multiverse" | sudo tee /etc/apt/sources.list.d/mongodb-org-4.0.list

    ### Debian 9 ###
    echo "deb https://repo.mongodb.org/apt/debian stretch/mongodb-org/4.0 multiverse" | sudo tee /etc/apt/sources.list.d/mongodb-org-4.0.list

Once all the repositories have been added, test if they are functional.

    ### Ubuntu 18.04 ###
    $ sudo apt update
    Get:1 http://mirrors.digitalocean.com/ubuntu bionic InRelease [242 kB]
    Ign:2 https://repo.mongodb.org/apt/ubuntu bionic/mongodb-org/4.0 InRelease
    Hit:3 https://repos-droplet.digitalocean.com/apt/droplet-agent main InRelease
    Get:4 https://repo.mongodb.org/apt/ubuntu bionic/mongodb-org/4.0 Release [2989 B]
    Hit:6 http://mirrors.digitalocean.com/ubuntu bionic-updates InRelease
    Hit:7 http://security.ubuntu.com/ubuntu bionic-security InRelease
    Get:8 https://repo.mongodb.org/apt/ubuntu bionic/mongodb-org/4.0 Release.gpg [801 B]
    Hit:9 http://mirrors.digitalocean.com/ubuntu bionic-backports InRelease
    Get:5 https://dl.ubnt.com/unifi/debian stable InRelease [3038 B]
    Get:10 https://repo.mongodb.org/apt/ubuntu bionic/mongodb-org/4.0/multiverse amd64 Packages [18.4 kB]
    Get:11 https://dl.ubnt.com/unifi/debian stable/ubiquiti amd64 Packages [732 B]
    Fetched 268 kB in 1s (319 kB/s)
    Reading package lists... Done
    Building dependency tree
    Reading state information... Done

    ### Debian 9 ###
    $ sudo apt update
    Hit:1 http://security.debian.org stretch/updates InRelease
    Ign:2 http://mirrors.digitalocean.com/debian stretch InRelease
    Hit:3 http://mirrors.digitalocean.com/debian stretch-updates InRelease
    Hit:4 http://mirrors.digitalocean.com/debian stretch Release
    Ign:5 https://repo.mongodb.org/apt/debian stretch/mongodb-org/4.0 InRelease
    Hit:6 https://repos-droplet.digitalocean.com/apt/droplet-agent main InRelease
    Get:8 https://repo.mongodb.org/apt/debian stretch/mongodb-org/4.0 Release [1490 B]
    Get:9 https://repo.mongodb.org/apt/debian stretch/mongodb-org/4.0 Release.gpg [801 B]
    Get:7 https://dl.ubnt.com/unifi/debian stable InRelease [3038 B]
    Get:11 https://dl.ubnt.com/unifi/debian stable/ubiquiti amd64 Packages [732 B]
    Fetched 6061 B in 1s (5707 B/s)
    Reading package lists... Done
    Building dependency tree
    Reading state information... Done

Install Java 8 on Ubuntu 18.04 / Debian 9

Restrict your Ubuntu or Debian system from automatically installing Java 11 / Java 17:

    sudo apt-mark hold openjdk-11-*
    sudo apt-mark hold openjdk-17-*

Install Java 8 from OS default APT repositories.

    sudo apt install openjdk-8-jdk openjdk-8-jre

Remove any newer version of Java installed (Java 11 or Java 17).
    sudo apt remove openjdk-11-* openjdk-17-*
    sudo apt install openjdk-8-jdk openjdk-8-jre

Confirm the installed Java version with the command java -version; it should show openjdk 1.8.

    $ java -version
    openjdk version "1.8.0_312"
    OpenJDK Runtime Environment (build 1.8.0_312-8u312-b07-0ubuntu1~18.04-b07)
    OpenJDK 64-Bit Server VM (build 25.312-b07, mixed mode)

Install UniFi Network Application on Ubuntu 18.04 / Debian 9

We can now install UniFi Network Application on Ubuntu 18.04 / Debian 9 once Java 8 is confirmed to be the default Java version in the system. Run the commands below to install the latest release of UniFi Network Application (UniFi Controller).

    sudo apt install unifi

Accept installation prompt as requested.

    Reading package lists... Done
    Building dependency tree
    Reading state information... Done
    The following additional packages will be installed:
      binutils binutils-common binutils-x86-64-linux-gnu ca-certificates-java fontconfig-config
      fonts-dejavu-core java-common jsvc libasound2 libasound2-data libavahi-client3
      libavahi-common-data libavahi-common3 libbinutils libboost-filesystem1.65.1
      libboost-iostreams1.65.1 libboost-program-options1.65.1 libboost-system1.65.1
      libcommons-daemon-java libcups2 libfontconfig1 libgoogle-perftools4 libgraphite2-3
      libharfbuzz0b libjpeg-turbo8 libjpeg8 liblcms2-2 libnspr4 libnss3 libpcrecpp0v5
      libpcsclite1 libsnappy1v5 libstemmer0d libtcmalloc-minimal4 libyaml-cpp0.5v5 mongo-tools
      mongodb-clients mongodb-server mongodb-server-core openjdk-17-jre-headless
    Suggested packages:
      binutils-doc default-jre libasound2-plugins alsa-utils java-virtual-machine cups-common
      liblcms2-utils pcscd libnss-mdns fonts-dejavu-extra fonts-ipafont-gothic
      fonts-ipafont-mincho fonts-wqy-microhei | fonts-wqy-zenhei fonts-indic
    The following NEW packages will be installed:
      binutils binutils-common binutils-x86-64-linux-gnu ca-certificates-java fontconfig-config
      fonts-dejavu-core java-common jsvc libasound2 libasound2-data libavahi-client3
      libavahi-common-data libavahi-common3 libbinutils libboost-filesystem1.65.1
      libboost-iostreams1.65.1 libboost-program-options1.65.1 libboost-system1.65.1
      libcommons-daemon-java libcups2 libfontconfig1 libgoogle-perftools4 libgraphite2-3
      libharfbuzz0b libjpeg-turbo8 libjpeg8 liblcms2-2 libnspr4 libnss3 libpcrecpp0v5
      libpcsclite1 libsnappy1v5 libstemmer0d libtcmalloc-minimal4 libyaml-cpp0.5v5 mongo-tools
      mongodb-clients mongodb-server mongodb-server-core openjdk-17-jre-headless unifi
    0 upgraded, 41 newly installed, 0 to remove and 57 not upgraded.
    Need to get 280 MB of archives.
    After this operation, 724 MB of additional disk space will be used.
    Do you want to continue? [Y/n] y

Manually installing UniFi Network Application on Ubuntu 18.04 / Debian 9

If you prefer to manually download a .deb package, download the UniFi Controller software from the Ubiquiti Networks website. Choose “Debian / Ubuntu Linux and UniFi Cloud Key” from the software list. Click the “Download” button that shows up after selecting. Use the “Download File” button, or copy the direct URL and use a command line downloader to get the file onto your local system.

Downloading the file with wget:

    wget https://dl.ui.com/unifi//unifi_sysvinit_all.deb

Installation of the .deb package can be done with apt while passing the downloaded file path as an argument.

    $ sudo apt install ./unifi_sysvinit_all.deb
    Reading package lists... Done
    Building dependency tree
    Reading state information... Done
    Note, selecting 'unifi' instead of './unifi_sysvinit_all.deb'
    The following additional packages will be installed:
      binutils binutils-common binutils-x86-64-linux-gnu ca-certificates-java fontconfig-config
      fonts-dejavu-core java-common jsvc libasound2 libasound2-data libavahi-client3
      libavahi-common-data libavahi-common3 libbinutils libboost-filesystem1.65.1
      libboost-iostreams1.65.1 libboost-program-options1.65.1 libboost-system1.65.1
      libcommons-daemon-java libcups2 libfontconfig1 libgoogle-perftools4 libgraphite2-3
      libharfbuzz0b libjpeg-turbo8 libjpeg8 liblcms2-2 libnspr4 libnss3 libpcrecpp0v5
      libpcsclite1 libsnappy1v5 libstemmer0d libtcmalloc-minimal4 libyaml-cpp0.5v5 mongo-tools
      mongodb-clients mongodb-server mongodb-server-core openjdk-17-jre-headless
    Suggested packages:
      binutils-doc default-jre libasound2-plugins alsa-utils java-virtual-machine cups-common
      liblcms2-utils pcscd libnss-mdns fonts-dejavu-extra fonts-ipafont-gothic
      fonts-ipafont-mincho fonts-wqy-microhei | fonts-wqy-zenhei fonts-indic
    The following NEW packages will be installed:
      binutils binutils-common binutils-x86-64-linux-gnu ca-certificates-java fontconfig-config
      fonts-dejavu-core java-common jsvc libasound2 libasound2-data libavahi-client3
      libavahi-common-data libavahi-common3 libbinutils libboost-filesystem1.65.1
      libboost-iostreams1.65.1 libboost-program-options1.65.1 libboost-system1.65.1
      libcommons-daemon-java libcups2 libfontconfig1 libgoogle-perftools4 libgraphite2-3
      libharfbuzz0b libjpeg-turbo8 libjpeg8 liblcms2-2 libnspr4 libnss3 libpcrecpp0v5
      libpcsclite1 libsnappy1v5 libstemmer0d libtcmalloc-minimal4 libyaml-cpp0.5v5 mongo-tools
      mongodb-clients mongodb-server mongodb-server-core openjdk-17-jre-headless unifi
    0 upgraded, 41 newly installed, 0 to remove and 57 not upgraded.
    Need to get 280 MB of archives.
    After this operation, 724 MB of additional disk space will be used.
    Do you want to continue? [Y/n] y

Successful installation output:

    Note, selecting 'unifi' instead of './unifi_sysvinit_all.deb'
    unifi is already the newest version (7.1.66-17875-1).
    0 upgraded, 0 newly installed, 0 to remove and 57 not upgraded.

Access UniFi Network Application on Web browser

To restart the service run the following command:

    sudo systemctl restart unifi.service

Confirm that the status is running:

    $ systemctl status unifi.service
    ● unifi.service - unifi
       Loaded: loaded (/lib/systemd/system/unifi.service; enabled; vendor preset: enabled)
       Active: active (running) since Mon 2022-07-11 23:46:08 UTC; 18s ago
      Process: 12237 ExecStop=/usr/lib/unifi/bin/unifi.init stop (code=exited, status=0/SUCCESS)
      Process: 12307 ExecStart=/usr/lib/unifi/bin/unifi.init start (code=exited, status=0/SUCCESS)
     Main PID: 12375 (jsvc)
        Tasks: 101 (limit: 2314)
       CGroup: /system.slice/unifi.service
               ├─12375 unifi -cwd /usr/lib/unifi -home /usr/lib/jvm/java-8-openjdk-amd64 -cp /usr/share/java/commons-daemon.jar:/usr/lib/unifi/lib/ace.jar -pidfile /var/run/unifi.pid -procname unifi -ou
               ├─12377 unifi -cwd /usr/lib/unifi -home /usr/lib/jvm/java-8-openjdk-amd64 -cp /usr/share/java/commons-daemon.jar:/usr/lib/unifi/lib/ace.jar -pidfile /var/run/unifi.pid -procname unifi -ou
               ├─12378 unifi -cwd /usr/lib/unifi -home /usr/lib/jvm/java-8-openjdk-amd64 -cp /usr/share/java/commons-daemon.jar:/usr/lib/unifi/lib/ace.jar -pidfile /var/run/unifi.pid -procname unifi -ou
               ├─12397 /usr/lib/jvm/java-8-openjdk-amd64/jre/bin/java -Dfile.encoding=UTF-8 -Djava.awt.headless=true -Dapple.awt.UIElement=true -Dunifi.core.enabled=false -Xmx1024M -XX:+ExitOnOutOfMemor
               └─12449 bin/mongod --dbpath /usr/lib/unifi/data/db --port 27117 --unixSocketPrefix /usr/lib/unifi/run --logRotate reopen --logappend --logpath /usr/lib/unifi/logs/mongod.log --pidfilepath

    Jul 11 23:45:51 unifi-controller systemd[1]: Stopped unifi.
    Jul 11 23:45:51 unifi-controller systemd[1]: Starting unifi...
    Jul 11 23:45:51 unifi-controller unifi.init[12307]: * Starting Ubiquiti UniFi Network application unifi
    Jul 11 23:46:08 unifi-controller unifi.init[12307]: ...done.
    Jul 11 23:46:08 unifi-controller systemd[1]: Started unifi.

Services should be available on port 8080 and port 8443.

    jmutai@unifi-controller:~$ ss -tunelp | egrep '8080|8443'
    tcp LISTEN 0 100 *:8443 *:* uid:112 ino:47897 sk:a v6only:0
    tcp LISTEN 0 100 *:8080 *:* uid:112 ino:47891 sk:e v6only:0

Access UniFi Network Application on a web browser using the server IP address and port 8443.

    https://172.20.30.20:8443/

You’ll get SSL warnings while trying to access the portal. Click “Advanced” and “Proceed” to the portal.
From your clients (UniFi devices), ping the UniFi controller IP address to validate network connectivity.

    U6-LR-BZ.6.0.21# ping 172.20.30.20 -c 2
    PING 172.20.30.20 (172.20.30.20): 56 data bytes
    64 bytes from 172.20.30.20: seq=0 ttl=63 time=0.883 ms
    64 bytes from 172.20.30.20: seq=1 ttl=63 time=0.885 ms

    --- 172.20.30.20 ping statistics ---
    2 packets transmitted, 2 packets received, 0% packet loss
    round-trip min/avg/max = 0.883/0.884/0.885 ms

Pointing UniFi Devices to new Network Application (UniFi Controller)

If this setup is new, your Network Application will discover all UniFi network devices in your network. Check out the initial UniFi Network Application configuration in our recent macOS guide: Configure UniFi Network Application

If you’re replacing an old Controller, then log in to your UniFi devices and set the inform address to the new server address and port. See the example below.

    set-inform http://172.20.30.20:8080/inform

Give it some time and the status should reflect the update.

    US-16-150W-US.6.2.14# info
    Model: US-16-150W
    Version: 6.2.14.13855
    MAC Address: 98:8a:20:fd:ea:94
    IP Address: 192.168.1.116
    Hostname: US-16-150W
    Uptime: 992330 seconds
    Status: Connected (http://172.20.30.20:8080/inform)

Your UniFi devices will be available for administration from a web browser once they’re enrolled / imported for management via UniFi Network Application.

Log Files Location

UniFi Network Application has log files that are essential for any troubleshooting required. The log files available are:

    /usr/lib/unifi/logs/server.log
    /usr/lib/unifi/logs/mongod.log

We’re working on more articles around UniFi network infrastructure and other integrations. Stay tuned for updates.


Mongodb Macos Catalina

I recently bought a new iMac and moved all of my files over using Time Machine. The migration went really well overall and within a few hours I had my development machine up and running. After starting an application I’m building I quickly realized that I couldn’t get MongoDB to start. Running the following command resulted in an error about the data/db directory being read-only:

In this article, I will share how to install MongoDB on MacOS Catalina. First, I created a directory under the Library folder as shown below.

Today, you'll learn to install the MongoDB community edition on macOS Catalina and higher. Creating Data Folder: before you install and use MongoDB, you need to create a data/db folder on your computer for storing MongoDB data. Before macOS Catalina, you could create this folder in the root directory with the following command.

I tried every chmod and chown command known to man and woman kind, tried manually changing security in Finder, compared security to my other iMac (they were the same), and tried a bunch of other things as well. But, try as I might I still saw the read-only folder error when trying to start the server….very frustrating. I found a lot of posts with the same issue but they all solved it by changing security on the folder. That wasn’t the problem on my machine.

Uh, oh, what’s happening? This problem happens on macOS Catalina or later, because an update made the root folder no longer writable. “With macOS Catalina, you can no longer store files or data in the read-only system volume, nor can you write to the “root” directory ( / ) from the command line, such as with Terminal.”
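Before changing permissions repeatedly, it can help to confirm whether a candidate data directory is writable at all. The following is a minimal Node.js sketch under stated assumptions: `isWritableDir` is a hypothetical helper written for this example, and `/data/db` is only the legacy location discussed in this post. It does not start or configure MongoDB.

```javascript
const fs = require('fs');
const os = require('os');

// Return true if `dir` exists, is a directory, and the current user may write to it.
function isWritableDir(dir) {
  try {
    if (!fs.statSync(dir).isDirectory()) {
      return false;
    }
    fs.accessSync(dir, fs.constants.W_OK);
    return true;
  } catch (err) {
    // Missing path, permission denied, read-only volume, and similar errors.
    return false;
  }
}

// Compare the legacy location with a directory that is normally writable.
for (const dir of ['/data/db', os.tmpdir()]) {
  console.log(`${dir}: ${isWritableDir(dir) ? 'writable' : 'not writable'}`);
}
```

An access check like this is only a hint, since permissions can change between the check and the actual write, but it quickly separates a read-only location from an ownership problem that chown can fix.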

After doing more research I found out that Catalina added a new volume to the hard drive and creates a special folder where the MongoDB files need to go. The new folder is:

The MongoDB files can then go at:

I ran the following commands to install the latest version of MongoDB using Homebrew (see https://github.com/mongodb/homebrew-brew for more details):

I then went into the MongoDB config file at /usr/local/etc/mongod.conf. Note that yours may be in a different location depending on how you installed MongoDB. I changed the dbPath value to the following and copied my existing DB files into the folder:

Finally, I made sure my account had the proper access to the folder by running chown (something I had tried many times earlier but on a folder outside of /System/Volumes/Data):

After that I was able to start MongoDB and everything was back to normal. Hopefully this saves someone a few hours – I wasted way too much time on the issue. 🙂


Install Brew Mac Catalina

Step 2: Install Node. Installing Node.js also gives you npm, the Node package manager, which helps you install other packages. To install Node on your Mac using Homebrew, type the following command:

$ brew install node

Once you have Node installed, you can check its version by typing the following command in the terminal.

Installing MongoDB on Mac (Catalina and non-Catalina) 14th Feb 2020. I had to reconfigure my Macbook after sending it for repairs. During the reconfiguration period, I noticed the instructions I linked to in “Setting up a local MongoDB connection” were outdated.


With the recent release of Ruby 3.0, I thought it’d be a good idea to make my first post of the year on a quick how-to guide to installing the new (or any) version of Ruby.

Homebrew

The only thing you’ll need before we get started is brew installed on your machine.

Install Kafka on macOS Catalina using brew. Published Mon, Feb 10, 2020 by DSK. If you do not have brew installed on your Mac, install Homebrew by running the following command in your Terminal.

ShawnstationdeMacBook-Pro: shawnstation$ brew --version
Homebrew 1.6.9
Homebrew/homebrew-core (git revision fab7d; last commit 2018-07-07)

If you don’t have brew, install it by running the following command:

Ruby Install

Once you have brew, you’re ready to go! First, we must install the postmodern/ruby-install tool to get the version of Ruby that we want:

Now we can download and install any version of ruby available. If we want Ruby 3.0:

or for latest version:

Voila! You can now install any version of Ruby you choose.

Multiple Versions of Ruby

If you need to use multiple versions of Ruby and switch back and forth between them, you can use the companion tool postmodern/chruby.

Then, to switch between versions:

Setting Up A Database

We're going to install sqlite3 from homebrew because we can't use the built-in version with macOS Sierra without running into some troubles.

Rails ships with sqlite3 as the default database. Chances are you won't want to use it because it's stored as a simple file on disk. You'll probably want something more robust like MySQL or PostgreSQL.

There is a lot of documentation on both, so you can just pick one that seems like you'll be more comfortable with.

If you're new to Ruby on Rails or databases in general, I strongly recommend setting up PostgreSQL.

If you're coming from PHP, you may already be familiar with MySQL.

MySQL

You can install MySQL server and client from Homebrew:

Once this command is finished, it gives you a couple commands to run. Follow the instructions and run them:

By default the mysql user is root with no password.

When you're finished, you can skip to the Final Steps.

PostgreSQL

You can install PostgreSQL server and client from Homebrew:


Once this command is finished, it gives you a couple commands to run. Follow the instructions and run them:


By default the postgresql user is your current OS X username with no password. For example, my OS X user is named chris so I can login to postgresql with that username.


Mariadb Client For Mac

Powerful database management & design tool for Win, macOS & Linux. With intuitive GUI, user manages MySQL, PostgreSQL, MongoDB, MariaDB, SQL Server, Oracle & SQLite DB easily.


Introduction

phpMyAdmin is a free and open source tool for the administration of MySQL and MariaDB. As a portable web application written in PHP, it has become one of the most popular administration tools for MySQL.

In this tutorial, we will learn the steps involved in the installation of phpMyAdmin on macOS.

Just installed MariaDB (with Homebrew). Everything looks like it’s working, but I can’t figure out how to have it automatically start up on boot on my Mac. I can’t find any Mac-specific docs for this.

I have an issue with running pip install mysqlclient for Python 3 on macOS Sierra. running build_ext building 'mysql' extension creating build/temp.macosx-10.12-x86_64-3.6 clang -DNDEB.

MariaDB Connector/J is used to connect applications developed in Java to MariaDB and MySQL databases. The client library is LGPL licensed.

dbForge Studio for MySQL is a universal GUI tool for MySQL and MariaDB database administration, development, and management. The IDE allows you to create and execute queries, develop and debug stored routines, automate database object management, and analyze table data via an intuitive interface.

Prerequisites

MacOS

Log in as an administrator in Terminal.

Homebrew must be installed on the system.

PHP 5.x or greater

Installation

Installation of phpMyAdmin includes the following steps.

1) Download the file

To install the file on MacOS, we need to download a compressed file from the official website of phpMyAdmin https://files.phpmyadmin.net/phpMyAdmin/4.7.6/phpMyAdmin-4.7.6-all-languages.tar.gz

2) Extract the file

$ tar xvfz Downloads/phpMyAdmin-4.7.6-all-languages.tar.gz

3) Start the development server

To access phpMyAdmin from localhost, we need to start the development server. First, we need to change our working directory by typing the following command:

The development server starts with the phpMyAdmin directory as its document root. Now open localhost:8080 in a browser to access phpMyAdmin on localhost.

The page prompts us for our MySQL username and password. Fill in the required details and press Go.

Now we have successfully installed phpMyAdmin on MacOS.


Introduction

MariaDB is an open source database management system that aims to maintain high compatibility with MySQL. It is one of the most popular databases in the world. MariaDB is named after Maria, the younger daughter of its founder Michael 'Monty' Widenius. In this tutorial, we will learn the steps involved in installing MariaDB on MacOS.

Prerequisites

MacOS

Log in as an administrator in Terminal.

Installation

Installation includes the following steps.

1) Update the local repository index of the Homebrew package installer

The following command updates Homebrew's local repository index.

$ brew update

2) Install with homebrew

MariaDB can be installed with the Homebrew package installer. The following command installs MariaDB.

$ brew install mariadb

3) Start MariaDB

To start MariaDB, we need to run the mysql.server script, which is located in /usr/local/Cellar/mariadb/10.2.12/support-files. We can start the server with the following command.

$ ./mysql.server start

To execute the command, we need to either change our working directory to /usr/local/Cellar/mariadb/10.2.12/support-files or add that directory to the PATH variable by editing .bash_profile.

4) Working on command line

To get started with the MariaDB shell, the following command can be executed.

For this, change the working directory to /usr/local/Cellar/mariadb/10.2.12/bin or edit .bash_profile.

The create database command creates a database named javatpoint.

5) Stop MariaDB

To stop MariaDB server, we run the following command.

$ ./mysql.server stop

We have now installed MariaDB and started working with it.


Docker Mastery: with Kubernetes +Swarm from a Docker Captain


Build, test, deploy containers with the best mega-course on Docker, Kubernetes, Compose, Swarm and Registry using DevOps

What you'll learn

How to use Docker, Compose and Kubernetes on your machine for better software building and testing.

Learn Docker and Kubernetes official tools from an award-winning Docker Captain!

Learn faster with included live chat group (21,000 members!) and weekly live Q&A.

Gain the skills to build development environments with your code running in containers.

Build Swarm and Kubernetes clusters for server deployments!

Hands-on with best practices for making Dockerfiles and Compose files like a Pro!

Build and publish your own custom images.

Create your own custom image registry to store your apps and deploy them in corporate environments.


Requirements

No paid software required - Just install your favorite text editor and browser!

Local admin access to install Docker for Mac/Windows/Linux.

Understand the terminal or command prompt basics.

Linux basics like shells, SSH, and package managers. (tips included to help newcomers!)

Know the basics of creating a server in the cloud (on any provider). (tips included to help newcomers!)

Understand the basics of web and database servers. (how they typically communicate, IP's, ports, etc.)

Have a GitHub and Docker Hub account.

Description

Updated Monthly in 2019! Be ready for the Dockerized future with the number ONE Docker + Kubernetes mega-course on Udemy. Welcome to the most complete and up-to-date course for learning and using containers end-to-end, from development and testing to server deployments and production. Taught by an award-winning Docker Captain and DevOps consultant.

Just starting out with Docker? Perfect. This course starts out assuming you're new to containers.

Or: Using Docker now and need to deal with real-world problems? I'm here for you! See my production topics around Swarm, Kubernetes, secrets, logging, rolling upgrades, and more.

BONUS: This course comes with Slack Chat and Live Weekly Q&A with me!

"I've followed another course on (Udemy). This one is a million times more in-depth." "...when it comes to all the docker stuff, this is the course you're gonna want to take" - 2019 Student Udemy Review

Just updated in November 2019 with sections on:

Docker Security top 10

Docker 19.03 release features

Why should you learn from me? Why trust me to teach you the best ways to use Docker? (Hi, I'm Bret, please allow me to talk about myself for a sec):

I'm A Practitioner. Welcome to the real world: I've got 20 years of sysadmin and developer experience, over 30 certifications, and have been using Docker and the container ecosystem for my consulting clients and my own companies since Docker's early days. Learn from someone who's run hundreds of containers across dozens of projects and organizations.

I'm An Educator. Learn from someone who knows how to make a syllabus: I want to help you. People say I'm good at it. For the last few years, I've trained thousands of people on using Docker in workshops, conferences, and meetups. See me teach at events like DockerCon, O'Reilly Velocity, GOTO Conf, and Linux Open Source Summit. I hope you'll decide to learn with me and join the fantastic online Docker community.

I Lead Communities. Also, I'm a Docker Captain, meaning that Docker Inc. thinks I know a thing or two about Docker and that I do well in sharing it with others. In the real-world: I help run two local meetups in our fabulous tech community in Norfolk/Virginia Beach USA. I help online: usually in Slack and Twitter, where I learn from and help others.

"Because of the Docker Mastery course, I landed my first DevOps job. Thank you, Captain!" - Student Ronald Alonzo

"There are a lot of Docker courses on Udemy -- but ignore those, Bret is the single most qualified person to teach you." - Kevin Griffin, Microsoft MVP

Giving Back: a portion of my profit on this course will be donated to supporting open source and protecting our freedoms online! This course is only made possible by the amazing people creating the open-source. I'm standing on the shoulders of (open source) giants! Donations will be split between my favorite charities including the Electronic Frontier Foundation and Free Software Foundation. Look them up. They're awesome!

This is a living course and will be updated as Docker and Kubernetes features change.

This course is designed to be fast at getting you started but also get you deep into the "why" of things. Simply the fastest and best way to learn the latest container skills. Look at the scope of topics in the Session and see the breadth of skills you will learn.

Also included is a private Slack Chat group with 20k students for getting help with this course and continuing your Docker and DevOps learning with help from myself and other students.

"Bret's course is a level above all of those resources, and if you're struggling to get a handle on Docker, this is the resource you need to invest in." - Austin Tindle, Course Student

Some of the many cool things you'll do in this course:

Edit web code on your machine while it's served up in a container

Lockdown your apps in private networks that only expose necessary ports

Create a 3-node Swarm cluster in the cloud

Install Kubernetes and learn the leading server cluster tools

Use Virtual IP's for built-in load balancing in your cluster

Optimize your Dockerfiles for faster building and tiny deploys

Build/Publish your own custom application images

Learn the differences between Kubernetes and Swarm

Create your own image registry

Use Swarm Secrets to encrypt your environment configs, even on disk

Deploy container updates in a rolling always-up design

Create the config utopia of a single set of YAML files for local dev, CI testing, and prod cluster deploys

And so much more...

After taking this course, you'll be able to:

Use Docker in your daily developer and/or sysadmin roles

Deploy apps to Kubernetes

Make Dockerfiles and Compose files

Build multi-node Swarm clusters and deploy H/A containers

Make Kubernetes YAML manifests and deploy using infrastructure-as-code methods

Build a workflow of using Docker in dev, then test/CI, then production with YAML

Protect your keys, TLS certificates, and passwords with encrypted secrets

Keep your Dockerfiles and images small, efficient, and fast

Run apps in Docker, Swarm, and Kubernetes and understand the pros/cons of each

Develop locally while your code runs in a container

Protect important persistent data in volumes and bind mounts

Lead your team into the future with the latest Docker container skills!

Extra things that come with this course:

Access to the course Slack team, for getting help/advice from me and other students.

Bonus videos I put elsewhere, like YouTube, linked from this course's resources.

Weekly Live Q&A on YouTube Live.

Tons of reference links to supplement this content.

Updates to content as Docker changes its features on these topics.

Who this course is for:

Software developers, sysadmins, IT pros, and operators at any skill level.

Anyone who makes, deploys, or operates software on servers.

Docker Mastery: with Kubernetes +Swarm from a Docker Captain

Created by Bret Fisher, Docker Captain Program

Last updated 3/2020

English

English, French [Auto-generated]

Size: 11.24 GB


Content From: https://ift.tt/2CCIwDx


Build a To-Do API With Node, Express, and MongoDB

API stands for Application Programming Interface. APIs allow one application to access the features of another application or service. Building APIs with Node is very easy. Yes, you heard me right!

In this tutorial, you will be building a To-Do API. After you are done here, you can go ahead to build a front end that consumes the API, or you could even make a mobile application. Whichever you prefer, it is completely fine.

Application Setup

To follow this tutorial, you must have Node and NPM installed on your machine.

Mac users can make use of the command below to install Node.

brew install node

Windows users can hop over to the Node.js download page to download the Node installer.

Ubuntu users can use the commands below:

curl -sL http://ift.tt/2rqglBp | sudo -E bash -
sudo apt-get install -y nodejs

To show that you have Node installed, open your terminal and run node -v. The command prints the version of Node you have installed.

You do not have to install NPM; it comes with Node. To prove that, run npm -v from your terminal and you will see the version you have installed.

Now go and create a directory where you will be working from, and navigate into it.

mkdir node-todo-api
cd node-todo-api

Initialize npm in the current working directory by running:

npm init -y

The -y flag tells npm to initialize using the default options.

That will create a package.json file for you. It is time to start downloading all the packages you will make use of. NPM makes this hassle free. You need them as dependencies.

To download these packages, run:

npm install express body-parser lodash mongoose mongodb --save

The --save flag tells npm to install the packages as dependencies for your application.

When you open your package.json file, you will see what I have below:

#package.json
"dependencies": {
  "body-parser": "^1.17.2",
  "express": "^4.15.3",
  "lodash": "^4.17.4",
  "mongodb": "^2.2.29",
  "mongoose": "^4.11.1"
},

Before you start coding, you have to install MongoDB on your machine if you have not done that already. Here is a standard guide to help you in that area. Do not forget to return here when you are done.

Create the Todo Model

Create a folder called server, and inside it create another folder called models. This is where your Todo model will exist. This model will show how your Todo collection should be structured.

#server/models/todo.js
const mongoose = require('mongoose') // 1

const Todo = mongoose.model('Todo', { // 2
  // Named text to match the field the routes read from the request body
  text: { // 3
    type: String,
    required: true,
    minlength: 1,
    trim: true
  },
  completed: { // 4
    type: Boolean,
    default: false
  },
  completedAt: { // 5
    type: Number,
    default: null
  }
})

module.exports = {Todo} // 6

1) You need to require the mongoose package you installed using NPM.

2) You create a new model by calling the model function of mongoose. The function receives two parameters: the name of the model as a string, and an object which contains the fields of the model. This is saved in a variable called Todo.

3) You create a text field for your to-do. The type here is String, and the minimum length is set at 1. You make it required so that no to-do record can be created without it. The trim option removes leading and trailing white space when records are saved.

4) You create a field to save a true or false value for each to-do you create. The default value is false.

5) Another field is created to save when the to-do is completed, and the default value is null.

6) You export the Todo model so it can be required in another file.
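As a rough illustration of what these schema options mean, the sketch below mimics them in plain JavaScript, without Mongoose. The buildTodo function is a hypothetical helper, not part of Mongoose, and it uses the text field name that the routes later read from the request body. Mongoose's real casting and validation are more involved than this.

```javascript
// Hypothetical stand-in for the model's rules; this is not Mongoose's implementation.
function buildTodo(input) {
  // trim: strip surrounding white space before validating
  const text = typeof input.text === 'string' ? input.text.trim() : ''

  // required + minlength 1: reject missing or empty text
  if (text.length < 1) {
    throw new Error('text is required')
  }

  return {
    text,
    // default false when the caller did not supply a value
    completed: input.completed === undefined ? false : input.completed,
    // default null until the to-do is completed
    completedAt: input.completedAt === undefined ? null : input.completedAt
  }
}

console.log(buildTodo({ text: '  Write more code  ' }))
```

Calling buildTodo with text that is empty or only white space throws, which is roughly the situation in which the API responds with a 400 status.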

Set Up Mongoose

Inside your server folder, create another folder called db, and inside it a file called mongoose.js.

This file will be used to interact with your MongoDB, using Mongoose. Make it look like this.

#server/db/mongoose.js
const mongoose = require('mongoose') // 1

mongoose.Promise = global.Promise // 2
mongoose.connect(process.env.MONGODB_URI) // 3

module.exports = {mongoose} // 4

1) You require the mongoose package you installed.

2) Plugs in an ES6-style promise library.

3) You call the connect method on mongoose, passing in the link to your MongoDB database. (You will set that up soon.)

4) You export mongoose as a module.

Set Up the Configuration

Time to set up a few configurations for your API. The configuration you will set up here will be for your development and test environment (you will see how to test your API).

#server/config/config.js
let env = process.env.NODE_ENV || 'development' // 1

if (env === 'development') { // 2
  process.env.PORT = 3000
  process.env.MONGODB_URI = 'mongodb://localhost:27017/TodoApp'
} else if (env === 'test') { // 3
  process.env.PORT = 3000
  process.env.MONGODB_URI = 'mongodb://localhost:27017/TodoAppTest'
}

1) You create a variable called env and set it to the NODE_ENV environment variable, or to the string development if NODE_ENV is not set.

2) The if block checks whether env equals development. When it does, the port is set to 3000, and the MongoDB URI points to the TodoApp database.

3) If the first condition evaluates to false and env equals test, the port is set to 3000, and a separate TodoAppTest database is used.
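The same selection logic can be sketched as a pure function, which is easier to reason about than assignments to process.env. The resolveConfig helper and its mongodbUri property name are assumptions made for this illustration; the tutorial itself writes the values straight into process.env.

```javascript
// Hypothetical helper: returns the settings for a given environment name.
function resolveConfig(env = 'development') {
  const settings = {
    development: { port: 3000, mongodbUri: 'mongodb://localhost:27017/TodoApp' },
    test: { port: 3000, mongodbUri: 'mongodb://localhost:27017/TodoAppTest' }
  }

  // Any other environment (for example production) gets no defaults here
  // and is expected to supply its own values.
  return Object.prototype.hasOwnProperty.call(settings, env) ? settings[env] : null
}

console.log(resolveConfig(process.env.NODE_ENV || 'development'))
```

Keeping a separate database for the test environment means test runs never touch development data.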

Create End Points

#server/server.js
require('./config/config') // 1

const _ = require('lodash') // 2
const express = require('express') // 3
const bodyParser = require('body-parser') // 4
const {ObjectId} = require('mongodb') // 5

const {mongoose} = require('./db/mongoose') // 6
const {Todo} = require('./models/todo') // 7

const app = express() // 8
const port = process.env.PORT || 3000 // 9

app.use(bodyParser.json()) // 10

app.post('/todos', (req, res) => {
  let todo = new Todo({
    text: req.body.text
  })

  todo.save().then((doc) => {
    res.send(doc)
  }, (e) => {
    res.status(400).send(e)
  })
})

1) Requires the configuration file created earlier.

2) Requires lodash installed with NPM.

3) Requires express installed with NPM.

4) Requires the body-parser package.

5) Requires ObjectId from MongoDB.

6) Requires the mongoose module you created.

7) Requires your Todo model.

8) Creates the Express application by calling express().

9) Sets port to the port of the environment where the application will run, or to port 3000.

10) Sets up middleware that uses body-parser to parse JSON request bodies.

In the next part, you create the POST route, passing in the path and a callback function which has request and response as its parameters.

In the block of code, you create a new todo from the text passed in the body of the request, and then call the save function on it. When the todo is saved, you send it back in the HTTP response. Otherwise, the status code 400 is sent, indicating an error occurred.

#server.js
...
// GET HTTP request is called on /todos path
app.get('/todos', (req, res) => {
  // Calls find function on Todo
  Todo.find().then((todos) => {
    // Responds with all todos, as an object
    res.send({todos})
  // In case of an error
  }, (e) => {
    // Responds with status code of 400
    res.status(400).send(e)
  })
})

// GET HTTP request is called to retrieve individual todos
app.get('/todos/:id', (req, res) => {
  // Obtain the id of the todo which is stored in req.params.id
  let id = req.params.id

  // Validates id
  if (!ObjectId.isValid(id)) {
    // Returns 404 error and error message when id is not valid
    return res.status(404).send('ID is not valid')
  }

  // Query db using findById by passing in the id retrieved
  Todo.findById(id).then((todo) => {
    // If no todo is found with that id, an error is sent
    if (!todo) {
      return res.status(404).send()
    }
    // Else the todo retrieved from the DB is sent
    res.send({todo})
  // Error handler to catch and send error
  }).catch((e) => {
    res.status(400).send()
  })
})

// HTTP DELETE request routed to /todos/:id
app.delete('/todos/:id', (req, res) => {
  // Obtain the id of the todo which is stored in req.params.id
  let id = req.params.id

  // Validates id
  if (!ObjectId.isValid(id)) {
    // Returns 404 error when id is not valid
    return res.status(404).send()
  }

  // Finds todo with the retrieved id, and removes it
  Todo.findByIdAndRemove(id).then((todo) => {
    // If no todo is found with that id, an error is sent
    if (!todo) {
      return res.status(404).send()
    }
    // Responds with todo
    res.send({todo})
  // Error handler to catch and send error
  }).catch((e) => {
    res.status(400).send()
  })
})

// HTTP PATCH request routed to /todos/:id
app.patch('/todos/:id', (req, res) => {
  // Obtain the id of the todo which is stored in req.params.id
  let id = req.params.id
  // Creates an object called body from the picked values (text and completed) of the request body
  let body = _.pick(req.body, ['text', 'completed'])

  // Validates id
  if (!ObjectId.isValid(id)) {
    // Returns 404 error when id is not valid
    return res.status(404).send()
  }

  // Checks if body.completed is a boolean, and if it is true
  if (_.isBoolean(body.completed) && body.completed) {
    // Sets body.completedAt to the current time
    body.completedAt = new Date().getTime()
  } else {
    // Else body.completed is set to false and body.completedAt is null
    body.completed = false
    body.completedAt = null
  }

  // Finds the todo whose id matches the retrieved id and applies the new values.
  // findByIdAndUpdate takes the id directly; findOneAndUpdate expects a filter object.
  Todo.findByIdAndUpdate(id, {$set: body}, {new: true}).then((todo) => {
    // If no todo is found with that id, an error is sent
    if (!todo) {
      return res.status(404).send()
    }
    // Responds with todo
    res.send({todo})
  // Error handler to catch and send error
  }).catch((e) => {
    res.status(400).send()
  })
})

// Listens for connection on the given port
app.listen(port, () => {
  console.log(`Starting on port ${port}`)
})

// Exports the module as app.
module.exports = {app}
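The way the PATCH route treats completed and completedAt can be isolated as a small pure function. This is only a sketch: normalizeUpdate is a hypothetical name, it uses plain JavaScript in place of lodash's pick and isBoolean, and it assumes the input is an object.

```javascript
// Hypothetical helper mirroring the PATCH route's handling of the request body.
function normalizeUpdate(input, now = Date.now()) {
  const body = {}

  // Only text and completed are accepted; every other key is dropped.
  if ('text' in input) {
    body.text = input.text
  }

  if (typeof input.completed === 'boolean' && input.completed) {
    // Marking a to-do as done records the completion time.
    body.completed = true
    body.completedAt = now
  } else {
    // Anything else, including the string "true", resets the to-do.
    body.completed = false
    body.completedAt = null
  }

  return body
}

console.log(normalizeUpdate({ text: 'Write more code', completed: true, owner: 'me' }))
```

Note that a non-boolean value such as the string "true" leaves the to-do marked as not completed, so clients should send a real JSON boolean.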

You have created HTTP methods covering all parts of your API. Now your API is ready to be tested. You can test it using Postman. If you do not have Postman installed on your machine already, go ahead and get it from the Postman website.

Start up your node server using node server.js

Open up Postman and send an HTTP POST request. The specified URL should be http://localhost:3000/todos.

For the body, you can use this:

{ "text": "Write more code", "completed": false }

And you should get a response. Go ahead and play around with it.

Conclusion

In this tutorial, you learned how to build an API using Node. You made use of a lot of resources to make the API a powerful one. You implemented the necessary HTTP methods that are needed for CRUD operation.

JavaScript has become one of the de facto languages of working on the web. It’s not without its learning curves, and there are plenty of frameworks and libraries to keep you busy, as well. If you’re looking for additional resources to study or to use in your work, check out what we have available in the Envato marketplace.

As you continue to build in Node, you will get to understand its power and the reason why it is used worldwide.

by Chinedu Izuchukwu via Envato Tuts+ Code http://ift.tt/2x7llzp


Build a To-Do API With Node and Restify

Introduction

Restify is a Node.js web service framework optimized for building semantically correct RESTful web services ready for production use at scale. In this tutorial, you will learn how to build an API using Restify, and for learning purposes you will build a simple To-Do API.

Set Up the Application

You need to have Node and NPM installed on your machine to follow along with this tutorial.

Mac users can make use of the command below to install Node.

brew install node

Windows users can hop over to the Node.js download page to download the Node installer.

Ubuntu users can use the commands below.

curl -sL http://ift.tt/2rqglBp | sudo -E bash -
sudo apt-get install -y nodejs

To show that you have Node installed, open your terminal and run node -v. The command prints the version of Node you have installed.

You do not have to install NPM because it comes with Node. To prove that, run npm -v from your terminal and you will see the version you have installed.

Create a new directory where you will be working from.

mkdir restify-api
cd restify-api

Now initialize your package.json by running the command:

npm init

You will be making use of a handful of dependencies:

Mongoose

Mongoose API Query (lightweight Mongoose plugin to help query your REST API)

Mongoose TimeStamp (adds createdAt and updatedAt date attributes that get auto-assigned to the most recent create/update timestamps)

Lodash

Winston (a multi-transport async logging library)

Bunyan Winston Adapter (allows the use of the winston logger in a restify server in place of bunyan, the default logging library)

Restify Errors

Restify Plugins

Now go ahead and install the modules.

npm install restify mongoose mongoose-api-query mongoose-timestamp lodash winston bunyan-winston-adapter restify-errors restify-plugins --save

The packages will be installed in your node_modules folder. Your package.json file should look similar to what I have below.

#package.json
{
  "name": "restify-api",
  "version": "1.0.0",
  "description": "",
  "main": "index.js",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [],
  "author": "",
  "license": "ISC",
  "dependencies": {
    "bunyan-winston-adapter": "^0.2.0",
    "lodash": "^4.17.4",
    "mongoose": "^4.11.2",
    "mongoose-api-query": "^0.1.1-pre",
    "mongoose-timestamp": "^0.6.0",
    "restify": "^5.0.0",
    "restify-errors": "^4.3.0",
    "restify-plugins": "^1.6.0",
    "winston": "^2.3.1"
  }
}

Before you go ahead, you have to install MongoDB on your machine if you have not done that already. Here is a standard guide to help you in that area. Do not forget to return here when you are done.

When that is done, you need to tell mongo the database you want to use for your application. From your terminal, run:

mongo
use restify-api

Now you can go ahead and set up your configuration.

touch config.js

The file should look like this:

#config.js
'use strict'

module.exports = {
  name: 'RESTIFY-API',
  version: '0.0.1',
  env: process.env.NODE_ENV || 'development',
  port: process.env.port || 3000,
  base_url: process.env.BASE_URL || 'http://localhost:3000',
  db: {
    uri: 'mongodb://127.0.0.1:27017/restify-api',
  }
}

Set Up the To-Do Model

Create your to-do model. First, create a directory called models and an empty file called todo.js inside it.

mkdir models
touch models/todo.js

You will need to define your to-do model. Models are defined using the Schema interface. The Schema allows you to define the fields stored in each document along with their validation requirements and default values. First, you require mongoose, and then you use the Schema constructor to create a new schema interface as I did below. I also made use of two modules called mongooseApiQuery and timestamps.

MongooseApiQuery will be used to query your collection (you will see how that works later on), and timestamps will add createdAt and updatedAt timestamps to your documents.
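To make the idea of automatic timestamps concrete, here is a minimal sketch of the behaviour in plain JavaScript. The stamp function is a hypothetical helper and not the plugin's code; it uses the createdAt and updatedAt attribute names given in the dependency list above, and the real plugin hooks into Mongoose's save lifecycle.

```javascript
// Hypothetical helper illustrating the timestamp behaviour; not the plugin's implementation.
function stamp(doc, now = new Date()) {
  return {
    ...doc,
    // createdAt is set once and then preserved
    createdAt: doc.createdAt || now,
    // updatedAt is refreshed on every save
    updatedAt: now
  }
}

const created = stamp({ title: 'Restify rocks!' }, new Date('2017-07-01T10:00:00Z'))
const updated = stamp(created, new Date('2017-07-02T10:00:00Z'))

console.log(updated.createdAt.toISOString(), updated.updatedAt.toISOString())
```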

The file you just created should look like what I have below.

#models/todo.js
'use strict'

// Requires module dependencies installed using NPM.
const mongoose = require('mongoose'),
  mongooseApiQuery = require('mongoose-api-query'),
  // adds createdAt and updatedAt timestamps for us
  timestamps = require('mongoose-timestamp')

// Creates TodoSchema
const TodoSchema = new mongoose.Schema({
  // Title field in Todo collection.
  title: {
    type: String,
    required: true,
    trim: true,
  },
}, { minimize: false })

// Applies mongooseApiQuery plugin to TodoSchema
TodoSchema.plugin(mongooseApiQuery)

// Applies timestamp plugin to TodoSchema
TodoSchema.plugin(timestamps)

// Exports Todo model as a module.
const Todo = mongoose.model('Todo', TodoSchema)
module.exports = Todo

Set Up the To-Do Routes

Create another directory called routes, and inside it a file called index.js. This is where your routes will be set.

mkdir routes
touch routes/index.js

Set it up like so:

#routes/index.js
'use strict'

// Requires module dependencies installed using NPM.
const _ = require('lodash'),
  errors = require('restify-errors')

// Requires Todo model
const Todo = require('../models/todo')

// HTTP POST request
server.post('/todos', (req, res, next) => {
  // Sets data to the body of request
  let data = req.body || {}

  // Creates new Todo object using the data received
  let todo = new Todo(data)

  // Saves todo
  todo.save((err) => {
    // If error occurs, error is logged and returned
    if (err) {
      log.error(err)
      return next(new errors.InternalError(err.message))
    }

    // If no error, responds with 201 status code
    res.send(201)
    next()
  })
})

// HTTP GET request
server.get('/todos', (req, res, next) => {
  // Queries DB to obtain todos
  Todo.apiQuery(req.params, (err, docs) => {
    // Errors are logged and returned if there are any
    if (err) {
      log.error(err)
      return next(new errors.InvalidContentError(err.errors.name.message))
    }

    // If no errors, todos found are returned.
    res.send(docs)
    next()
  })
})

// HTTP GET request for individual todos
server.get('/todos/:todo_id', (req, res, next) => {
  // Queries DB to obtain individual todo based on ID
  Todo.findOne({ _id: req.params.todo_id }, (err, doc) => {
    // Logs and returns error if errors are encountered
    if (err) {
      log.error(err)
      return next(new errors.InvalidContentError(err.errors.name.message))
    }

    // Responds with todo if no errors are found
    res.send(doc)
    next()
  })
})

// HTTP PUT request (update)
server.put('/todos/:todo_id', (req, res, next) => {
  // Sets data to the body of request
  let data = req.body || {}

  if (!data._id) {
    _.extend(data, {
      _id: req.params.todo_id
    })
  }

  // Finds specific todo based on the ID obtained
  Todo.findOne({ _id: req.params.todo_id }, (err, doc) => {
    // Logs and returns error found
    if (err) {
      log.error(err)
      return next(new errors.InvalidContentError(err.errors.name.message))
    } else if (!doc) {
      return next(new errors.ResourceNotFoundError('The resource you requested could not be found.'))
    }

    // Updates todo when the todo with specific ID has been found
    Todo.update({ _id: data._id }, data, (err) => {
      // Logs and returns error
      if (err) {
        log.error(err)
        return next(new errors.InvalidContentError(err.errors.message))
      }

      // Responds with 200 status code and todo
      res.send(200, data)
      next()
    })
  })
})

// HTTP DELETE request
server.del('/todos/:todo_id', (req, res, next) => {
  // Removes todo that corresponds with the ID received in the request
  Todo.remove({ _id: req.params.todo_id }, (err) => {
    // Logs and returns error
    if (err) {
      log.error(err)
      return next(new errors.InvalidContentError(err.errors.message))
    }

    // Responds with 204 status code if no errors are encountered
    res.send(204)
    next()
  })
})

The file above does the following:

Requires module dependencies installed with NPM.

Performs actions based on the request received.

Logs any errors that are encountered and passes them on as Restify errors.

Queries the database to list, fetch, update, and delete to-dos, and saves new ones.

Now you can create the entry for your application. Create a file in your working directory called index.js.

#index.js
'use strict'

// Requires module dependencies downloaded with NPM.
const config = require('./config'),
  restify = require('restify'),
  bunyan = require('bunyan'),
  winston = require('winston'),
  bunyanWinston = require('bunyan-winston-adapter'),
  mongoose = require('mongoose')

// Sets up logging using winston logger.
global.log = new winston.Logger({
  transports: [
    // Creates new transport to log to the Console info level logs.
    new winston.transports.Console({
      level: 'info',
      timestamp: () => {
        return new Date().toString()
      },
      json: true
    }),
  ]
})

/**
 * Initialize server
 */
global.server = restify.createServer({
  name : config.name,
  version : config.version,
  log : bunyanWinston.createAdapter(log)
})

/**
 * Middleware
 */
server.use(restify.plugins.bodyParser({ mapParams: true }))
server.use(restify.plugins.acceptParser(server.acceptable))
server.use(restify.plugins.queryParser({ mapParams: true }))
server.use(restify.plugins.fullResponse())

// Error handler to catch all errors and forward to the logger set above.
server.on('uncaughtException', (req, res, route, err) => {
  log.error(err.stack)
  res.send(err)
})

// Starts server
server.listen(config.port, function() {
  // Connection Events
  // When connection throws an error, error is logged
  mongoose.connection.on('error', function(err) {
    log.error('Mongoose default connection error: ' + err)
    process.exit(1)
  })

  // When connection is open
  mongoose.connection.on('open', function(err) {
    // Error is logged if there are any.
    if (err) {
      log.error('Mongoose default connection error: ' + err)
      process.exit(1)
    }

    // Else information regarding connection is logged
    log.info(
      '%s v%s ready to accept connection on port %s in %s environment.',
      server.name,
      config.version,
      config.port,
      config.env
    )

    // Requires routes file
    require('./routes')
  })

  global.db = mongoose.connect(config.db.uri)
})

You have set up your entry file to do the following:

Require modules installed using NPM.

Output info level logs to the console using Winston Logger. With this, you get to see all the important interactions happening on your application right on your console.

Initialize the server and set up middleware using Restify plugins.

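bodyParser parses POST bodies to req.body. It automatically picks a parser based on the request's content type, such as JSON, URL-encoded form data, or multipart form data.

acceptParser parses the Accept header and makes sure the server can respond with a content type the client asked for.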

queryParser parses URL query parameters into req.query.

fullResponse handles disappeared CORS headers.

Next, you start your server and create a mongoose connection. Logs are written to the console depending on the result of creating the mongoose connection.
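Of the plugins listed above, queryParser is probably the easiest to picture. The following is a minimal sketch of the idea using only Node's built-in URL class. It is not Restify's implementation, and the parseQuery helper and the sample URL are made up for illustration.

```javascript
// Illustrative only: mimics what a query parser does, not Restify's actual code.
// parseQuery is a hypothetical helper name.
function parseQuery(requestUrl) {
  // A base is needed because request URLs are usually relative (e.g. "/todos?done=false").
  const url = new URL(requestUrl, 'http://localhost')
  const query = {}
  for (const [key, value] of url.searchParams) {
    query[key] = value
  }
  return query
}

console.log(parseQuery('/todos?done=false&limit=10'))
```

Note that every value comes back as a string, so a handler that expects a number or a boolean has to convert it itself.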

Start up your node server by running:

node index.js

Open up Postman and send an HTTP POST request. The specified URL should be http://localhost:3000/todos.

For the request body, you can use this:

{ "title" : "Restify rocks!" }

And you should get a response.
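If you prefer to stay in the terminal, the same request can be sent from a small Node script. This is only a sketch: it assumes the server is listening on localhost:3000 as above, and the file name post-todo.js and the helper names buildTodoRequest and postTodo are hypothetical.

```javascript
const http = require('http')

// Builds the request options and body for creating a to-do.
// Host, port, and path mirror the URL used above; adjust them to your setup.
function buildTodoRequest(title) {
  const body = JSON.stringify({ title })
  const options = {
    hostname: 'localhost',
    port: 3000,
    path: '/todos',
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      'Content-Length': Buffer.byteLength(body)
    }
  }
  return { options, body }
}

// Sends the request and passes the status code and raw response text to the callback.
function postTodo(title, callback) {
  const { options, body } = buildTodoRequest(title)
  const req = http.request(options, (res) => {
    let data = ''
    res.setEncoding('utf8')
    res.on('data', (chunk) => { data += chunk })
    res.on('end', () => callback(null, res.statusCode, data))
  })
  req.on('error', callback)
  req.write(body)
  req.end()
}

// Only touch the network when asked to: node post-todo.js send
if (process.argv[2] === 'send') {
  postTodo('Restify rocks!', (err, status, data) => {
    if (err) return console.error('Request failed:', err.message)
    console.log(status, data)
  })
}
```

Keeping the request builder separate from the sending code makes it easy to inspect the headers and body without a running server.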

Conclusion

You have been able to build a standard To-Do API using Restify and Node.js. You can enhance the API by adding new features such as descriptions of the to-dos, time of completion, etc.

By building this API, you learned how to create logs using Winston Logger—for more information on Winston, check the official GitHub page. You also made use of Restify plugins, and more are available in the documentation for plugins.

You can dig further into the awesomeness of Restify, starting with the documentation.

by Chinedu Izuchukwu via Envato Tuts+ Code http://ift.tt/2eQ2mze


Get Started Building Your Blog With Parse.js: Migration to Your Own Parse Server

What You'll Be Creating

Sadly, Parse.com is shutting down on 28 January 2017. In the previous series, I walked you through the entire journey of building a blog system from scratch. But everything was based on Parse.com, and if you are still using those techniques, your website will unfortunately stop working by then.

If you still like Parse.js (as do I), and want to continue using it, there's good news. The lovely folks there made it open source so we can run our own copy on all popular web hosting services. This tutorial aims to help you to make that change and migrate from Parse.com to your own Parse Server on Heroku.

I’m not an expert in back end, but this is the easiest way I found that worked. If you see any flaws and have better methods to share, definitely leave a comment below.

If you follow this episode, the server migration by itself won’t be too complicated. With Docker, it’s even quite easy to set up a local Parse Dashboard, so you can still see and play with your data with ease.

However, this tutorial series was made based on version 1.2.19 of Parse.js; in order to connect to a standalone Parse server, we need to update the application to run version 1.9.2. By version 1.5.0, Parse took out support for Backbone, and that means we need some major changes in the code. We will be adding Backbone back to the page, and using a mixture of Parse and Backbone there.

That’s quite a long intro, but don’t be too scared. You may need to debug here and there during the migration, but you should be fine. You can always check my source code or leave a comment below—I and this fantastic community here will try our best to help you.

Set Up and Migrate to Parse Server

First things first: let’s set up a Parse Server. Parse has already made this very easy with a detailed migration guide and a long list of sample applications for all popular platforms like Heroku, AWS, and Azure.

I will walk you through the easiest one that I know: the Heroku + mLab combo. It’s free to begin with, and you can always pay for better hardware and more storage within the same system. The only caveat is that the free version of Heroku would “sleep” after being idle for 30 minutes. So if users visit your site when the server is “sleeping”, they may have to wait for a few seconds for the server to wake up before they can see the data. (If you check the demo page for this project and it doesn’t render any blog content, that’s why. Give it a minute and refresh.)

This part is largely based on Heroku’s guide for Deploying a Parse Server and Parse’s own migration guide. I just picked the simplest, most foolproof path there.

Step 1: Sign Up and Create a New App on Heroku

If you don’t have a Heroku account yet, go ahead and make one. It’s a very popular platform for developing and hosting small web apps.

After you are registered, go to your Heroku Dashboard and create a new app—that will be your server.

Give it a name if you want:

Step 2: Add mLab MongoDB

Now you need a database to store the data. Let’s add mLab as an add-on.

Go to Resources > Add-ons, search for “mLab”, and add it:

Sandbox is enough for development—you can always upgrade and pay more to get more storage there.

Once you've added mLab, you can grab its MongoDB URI.

Go to Settings > Config Variables in your Heroku Dashboard and click on Reveal Config Vars.

There you can see the MongoDB URI for your database. Copy it, and now we can start migrating the database.

Step 3: Database Migration

Go to Parse.com and find the app you want to migrate. The open-source version of Parse Server only supports one app per server, so if you want to have multiple apps, you need to repeat this process and create multiple servers. Now just pick one.

Within that app, go to App Settings > General > App Management, and click on Migrate.

And then paste in the MongoDB URI you just copied, and begin migration.

Soon you should see this screen:

That means now you should have all your data in your mLab MongoDB. Easy enough, right?

But don’t finalize your app yet—let’s wait till we can see and play with that same data from our local Parse Dashboard, and then go back and finalize it.

Step 4: Deploy Parse Server

Now, with the database already migrated, we can deploy the Parse Server.

If you don’t have a GitHub account yet, go ahead and make one. It’s probably the most popular place where people share and manage their code.

With your GitHub account, fork the official Parse Server example repo.

Then, go back to your Heroku Dashboard. Under Deploy > Deployment method, choose GitHub and connect to your GitHub account.

After that, search for parse and find your parse-server-example repo and connect.

If everything works, you should see it being connected like this:

Now, scroll down to the bottom of the page. Under Manual Deploy, click Deploy Branch.

You will see your Parse Server being deployed, and soon you will see this screen:

Click on the View button and you will see this page:

That means your server is now happily running! And the URL you see is the URL of your server. You will need it later.

I know it feels unreal to see this simple line and know the server is up. But believe me, the powerhouse is running there. And your app can read and write from it already.

If you want to double-check, you can run something like this:

    $ curl -X POST \
      -H "X-Parse-Application-Id: myAppId" \
      -H "Content-Type: application/json" \
      -d '{}' \
      http://ift.tt/1nKbhEu
    ..
    {"result":"Hi"}%

Set Up a Local Parse Dashboard

If you are a command-line ninja, you will probably be fine from here. But if you are like me and enjoy the friendly interface of the Parse Dashboard, follow this part to set up your own Parse Dashboard on your local machine, so that you can visually see and play with your Parse data the way you are used to.

Again, you can install your dashboard in a handful of ways. I am just going to show you the simplest way in my experience, using Docker.

Step 1: Install Docker

If you don’t have Docker, install it first (Mac | Windows).

It puts an entire environment in a box, so you don’t need to follow the quite complicated local installation tutorial and jump through hoops in Terminal.

Step 2: Build Parse Dashboard Image

With Docker running, clone the Parse Dashboard repo to your computer and go into that repo.

    $ git clone http://ift.tt/1Y7Gu0F
    Cloning into 'parse-dashboard'...
    remote: Counting objects: 3355, done.
    remote: Total 3355 (delta 0), reused 0 (delta 0), pack-reused 3354
    Receiving objects: 100% (3355/3355), 2.75 MiB | 2.17 MiB/s, done.
    Resolving deltas: 100% (1971/1971), done.
    $ cd parse-dashboard/

For absolute GitHub newbies, just download it as a zip file.

Unzip it and put it in a location you can remember. Open your terminal app if you are on Mac, type cd (you need a space after cd there) and drag the folder in.

Then hit Enter.

You should see something like this, and that means you are in the correct place.

    ~$ cd /Users/moyicat/temp/parse-dashboard-master
    ~/temp/parse-dashboard-master$

Now, you can quickly check if your Docker is installed correctly by pasting in this command:

docker -v

If it shows the version you are on, like this:

Docker version 1.12.5, build 7392c3b

It’s installed, and you can go on.

If instead, it says:

-bash: docker: command not found

You need to double-check if you have installed Docker correctly.

With Docker correctly installed, paste in this command and hit Enter:

docker build -t parse-dashboard .

That will build you a local image (feel free to ignore the Docker jargon) for Parse Dashboard.

You will see many lines scrolling. Don’t panic—just give it a while, and you will see it end with something like this:

Successfully built eda023ee596d

That means you are done—the image has been built successfully.

If you run the docker images command, you will see it there:

    REPOSITORY         TAG       IMAGE ID        CREATED               SIZE
    parse-dashboard    latest    eda023ee596d    About a minute ago    778.1 MB

Step 3: Connect Parse Dashboard to Parse Server

Now, that’s just an image, and it’s not a running server yet. When it runs, we want it to be connected to the Parse Server and the MongoDB we just built.

To do that, we first need to create a few keys in Heroku, so it can tell who to grant access to the data.

Go to your Heroku Dashboard and go to Settings > Config Variables again. This time, we need to add two variables there: APP_ID and MASTER_KEY. APP_ID can be anything easy to remember, while MASTER_KEY had better be a really long and complicated password.
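If you don't want to invent a long password by hand, Node's built-in crypto module can generate one. This is just one way to do it: the generateMasterKey name and the 32-byte length are assumptions made for this sketch, not something Parse Server requires.

```javascript
const crypto = require('crypto')

// 32 random bytes rendered as hex gives a 64-character key.
// The length is an arbitrary choice for this sketch, not a Parse Server requirement.
function generateMasterKey(bytes = 32) {
  return crypto.randomBytes(bytes).toString('hex')
}

console.log(generateMasterKey())
```

Copy the printed value into the MASTER_KEY config variable and keep it somewhere safe, because you will need it again for the dashboard.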

Now with those keys, we can write a simple config file in the root directory of your Parse Dashboard folder. You can use anything from vim to TextEdit or Notepad; the goal is to make a plain text config.json file with this content:

    {
      "apps": [{
        "serverURL": "your-app-url/parse",
        "appId": "your-app-id",
        "masterKey": "your-master-key",
        "appName": "your-app-name"
      }],
      "users": [{
        "user":"user",
        "pass":"pass"
      }]
    }

And of course, replace your-app-url with the “View” link URL (the page that says “I dream of being a website”), but keep the /parse there at the end; replace your-app-id and your-master-key with the config variables you just added; and give your app a name and replace your-app-name with it.
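If you would rather generate the file than type it by hand, a short Node script can assemble the same structure and make sure the server URL ends with /parse. Treat this as a sketch: buildDashboardConfig is a hypothetical helper, and the URL and credentials below are placeholders to replace with your own values.

```javascript
// buildDashboardConfig is a hypothetical helper; the keys match the config.json shown above.
function buildDashboardConfig({ appUrl, appId, masterKey, appName, user, pass }) {
  // Strip trailing slashes, then make sure the URL ends with /parse.
  const base = appUrl.replace(/\/+$/, '')
  const serverURL = base.endsWith('/parse') ? base : base + '/parse'
  return {
    apps: [{ serverURL, appId, masterKey, appName }],
    users: [{ user, pass }]
  }
}

const config = buildDashboardConfig({
  appUrl: 'https://your-app.example.com/', // placeholder, use your "View" link URL
  appId: 'your-app-id',
  masterKey: 'your-master-key',
  appName: 'your-app-name',
  user: 'user',
  pass: 'pass'
})

// Print the JSON; uncomment the last line to write it into the current folder instead.
console.log(JSON.stringify(config, null, 2))
// require('fs').writeFileSync('config.json', JSON.stringify(config, null, 2))
```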

Save the file and run the ls command in Terminal to make sure you put the config.json in the correct place.

    ~/temp/parse-dashboard-master$ ls
    CHANGELOG.md     Dockerfile  PIG/              Procfile   bin/         package.json  src/      webpack/
    CONTRIBUTING.md  LICENSE     Parse-Dashboard/  README.md  config.json  scripts/      testing/

If you see config.json in this list, you are good to move on.

Now run the pwd command to get the place you are in:

    $ pwd
    /Users/moyicat/temp/parse-dashboard-master

Copy that path.

Then paste in this command to create a Docker container (again, you can ignore this jargon) and run your Parse Dashboard. Remember to replace my path with the path you just got.

    docker run -d \
      -p 8080:4040 \
      -v /Users/moyicat/temp/parse-dashboard-master/config.json:/src/Parse-Dashboard/parse-dashboard-config.json \
      -e PARSE_DASHBOARD_ALLOW_INSECURE_HTTP=1 \
      --name parse-dashboard \
      parse-dashboard

From top to bottom, this command does these things (which you can also ignore):

L1: Tell Docker to start a container (-d runs it in the background).

L2: Map port 8080 on your machine to port 4040 in the container. You can change 8080 to any port you want.

L3: Take the config.json you just made and use it as the configuration.

L4: Allow the dashboard to be served over insecure HTTP on your local machine (otherwise you will meet an error message).

L5: Give it a name you can remember. You can change it to anything you want, too.

L6: Tell Docker the image you want to use. parse-dashboard is the name we used in the docker build command earlier.
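To avoid editing the long path by hand, you could also let a small Node script assemble the command from the folder you are in. This is a sketch under the same assumptions as the command above (image name parse-dashboard, container port 4040). The buildDockerRunArgs helper is hypothetical, and it only prints the command rather than running it.

```javascript
const path = require('path')

// buildDockerRunArgs is a hypothetical helper that assembles the same flags as the command above.
function buildDockerRunArgs(dashboardDir, hostPort = 8080) {
  const configPath = path.posix.join(dashboardDir, 'config.json')
  return [
    'run', '-d',
    '-p', `${hostPort}:4040`,
    '-v', `${configPath}:/src/Parse-Dashboard/parse-dashboard-config.json`,
    '-e', 'PARSE_DASHBOARD_ALLOW_INSECURE_HTTP=1',
    '--name', 'parse-dashboard',
    'parse-dashboard'
  ]
}

// Print the full command for the current folder (the same path pwd returns).
console.log('docker ' + buildDockerRunArgs(process.cwd()).join(' '))
```

Run it from inside the Parse Dashboard folder, then paste the printed command into Terminal.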

If everything runs through, it will return a long string to you with no error message. Like this:

4599aab0363d64373524cfa199dc0013a74955c9e35c1a43f7c5176363a45e1e

And now your Parse Dashboard is running! Check it out at http://localhost:8080/.

It might be slow and show a blank page initially, but just give it a couple of minutes and you will see a login screen:

Now you can log in with user as the username and pass as the password—it’s defined in the config.json in case you didn’t realize earlier. You can change them to whatever you want, too.