#Fine-tuning LLMs for AI agents

Idea Frontier #2: Dynamic Tool Selection, Memory Engineering, and Planetary Computation

Welcome to the second edition of Idea Frontier, where we explore paradigm-shifting ideas at the nexus of STEM and business. In this issue, we dive into three frontiers: how AI agents are learning to smartly pick their tools (and why that matters for building more general intelligence), how new memory frameworks like Graphiti are giving LLMs a kind of real-time, editable memory (and what that…

#Agents.ai#AGI#ai#AI Agent Hubs#AI Agents#Dynamic Tool Selection#Freedom Cities#generative AI#Graphiti#Idea Frontier#knowledge graphs#knowledge synthesis#Lamini#llm#llm fine-tuning#Memory Engineering#Planetary Computation#Startup Cities

Google Cloud’s BigQuery Autonomous Data To AI Platform

BigQuery automates data analysis, transformation, and insight generation using AI. AI and natural language interaction simplify difficult operations.

Fast-moving businesses need more than data access; they need a flywheel that activates data in real time. AI that is built directly into the data environment and works alongside intelligent agents is now emerging, and it enables the self-directed, rapid action that success depends on. Google's Data & AI Cloud is designed around this flywheel. Because of this emphasis, BigQuery has five times as many customer organisations as the two leading cloud providers that offer only data science and data warehousing solutions.

Examples of top companies:

By fine-tuning the Gemini model with BigQuery, Radisson Hotel Group raised campaign productivity by 50% and revenue by more than 20%.

By connecting over 170 data sources with BigQuery, Gordon Food Service established a scalable, modern, AI-ready data architecture. This improved real-time response to critical business demands, enabled complete analytics, boosted client usage of their ordering systems, and offered staff rapid insights while cutting costs and boosting market share.

J.B. Hunt is revolutionising logistics for shippers and carriers by integrating Databricks into BigQuery.

General Mills saves over $100 million using BigQuery and Vertex AI to give workers secure access to LLMs for structured and unstructured data searches.

Google Cloud is unveiling many new features with its autonomous data to AI platform powered by BigQuery and Looker, a unified, trustworthy, and conversational BI platform:

New assistive and agentic experiences based on your trusted data and available through BigQuery and Looker will make data scientists, data engineers, analysts, and business users' jobs simpler and faster.

Advanced analytics and data science acceleration: Along with seamless integration with real-time and open-source technologies, BigQuery AI-assisted notebooks improve data science workflows and BigQuery AI Query Engine provides fresh insights.

Autonomous data foundation: BigQuery can collect, manage, and orchestrate any data with its new autonomous features, which include native support for unstructured data processing and open data formats like Iceberg.

Let's look at each change in detail.

User-specific agents

Google Cloud believes everyone should have access to AI. AI-powered assistive experiences in BigQuery and Looker are already generally available, and Google Cloud now adds specialised agents for every kind of data task, such as:

Data engineering agents, integrated with BigQuery pipelines, help build data pipelines, transform and enrich data, detect anomalies, and automate metadata generation. Data engineers have traditionally spent hours cleaning, processing, and validating data; these agents take over that time-consuming, repetitive work, which keeps data trustworthy and makes data teams more productive.

The data science agent in Google's Colab notebook supports every step of model development. It enables scalable training, intelligent model selection, automated feature engineering, and faster iteration. This agent lets data science teams focus on complex methods rather than on data and infrastructure.

Looker conversational analytics lets everyone work with data in natural language. Expanded capabilities developed with DeepMind let the agent carry out advanced analysis and explain its reasoning, so all users can follow what it did and easily clear up misunderstandings. Looker's semantic layer boosts accuracy by two-thirds. The agent understands business terms like “revenue” and “segments” and can compute metrics in real time, which keeps results trustworthy, accurate, and relevant. An API for conversational analytics is also being introduced to help developers integrate it into processes and apps.

In the BigQuery autonomous data to AI platform, Google Cloud introduced the BigQuery knowledge engine to power assistive and agentic experiences. It uses Gemini to analyse table descriptions, query histories, and schema relationships, and from these it models data relationships, suggests business glossary terms, and generates metadata on the fly. This knowledge engine grounds AI and agents in business context, enabling semantic search across BigQuery and AI-powered data insights.

All customers can access Gemini-powered agentic and assistive experiences in BigQuery and Looker within the existing pricing tiers, without add-ons.

Accelerating data science and advanced analytics

BigQuery autonomous data to AI platform is revolutionising data science and analytics by enabling new AI-driven data science experiences and engines to manage complex data and provide real-time analytics.

First, AI improves BigQuery notebooks. Google Cloud is adding intelligent SQL cells to notebooks that can join data sources, understand data context, and suggest code as you write. Notebooks also gain native exploratory analysis and visualisation capabilities for data exploration and peer collaboration. Data scientists can schedule analyses and keep insights up to date. Google Cloud also lets you build notebook-driven, dynamic, user-friendly, interactive data apps to share insights across the organisation.

This enhanced notebook experience is complemented by the BigQuery AI query engine for AI-driven analytics. This engine lets data scientists easily work with structured and unstructured data and add real-world context rather than simply retrieve it. The BigQuery AI query engine co-processes SQL with Gemini, adding runtime natural-language understanding, reasoning skills, and real-world knowledge. For example, the new engine can process unstructured images and match them to your product catalogue. It supports several use cases, including model enhancement, sophisticated segmentation, and new insights.

Additionally, Google Cloud aims to give users the most cloud-optimised open-source environment. Google Cloud for Apache Kafka enables real-time data pipelines for event sourcing, model scoring, messaging, and analytics, and BigQuery offers serverless execution for Apache Spark. Customers have almost doubled their serverless Spark use in the last year, and Google Cloud has upgraded this engine to process data 2.7 times faster.

BigQuery lets data scientists utilise SQL, Spark, or foundation models on Google's serverless and scalable architecture to innovate faster without the challenges of traditional infrastructure.

An autonomous data foundation across the data lifecycle

An autonomous data foundation built for modern data complexity underpins these advanced analytics engines and specialised agents. BigQuery is transforming the environment by making unstructured data a first-class citizen. New platform features, such as orchestration for a variety of data workloads, autonomous and invisible governance, and open formats for flexibility, ensure that your data is always ready for data science or AI use cases. The platform does this while offering the best cost and reducing operational overhead.

For many companies, unstructured data is their biggest untapped potential. While structured data offers clear analytical paths, the unique insights in text, audio, video, and images often go unused and sit in siloed systems. BigQuery tackles this issue directly by making unstructured data a first-class citizen using multimodal tables (preview), which integrate structured data with rich, complex data types for unified querying and storage.

To manage this large data estate efficiently, Google Cloud's expanded BigQuery governance gives data stewards and data professionals a single view for managing discovery, classification, curation, quality, usage, and sharing, including automatic cataloguing and metadata generation. BigQuery continuous queries use SQL to analyse and act on streaming data regardless of format, ensuring timely insights from all your data streams.

Customers use Google's AI models in BigQuery for multimodal analysis 16 times more than last year, driven by advanced support for structured and unstructured multimodal data. BigQuery with Vertex AI is 8–16 times cheaper than independent data warehouse and AI solutions.

Google Cloud remains committed to an open ecosystem. BigQuery tables for Apache Iceberg combine BigQuery's performance and integrated capabilities with the flexibility of an open data lakehouse to link Iceberg data to SQL, Spark, AI, and third-party engines in an open and interoperable fashion. This service provides adaptive and autonomous table management, high-performance streaming, auto-AI-generated insights, practically infinite serverless scalability, and improved governance. The managed solution, built on Cloud Storage, provides fail-safe features and centralised fine-grained access control management.

Finally, the autonomous data to AI platform optimises itself: it scales resources, manages workloads, and keeps costs in check. The new BigQuery spend commit unifies spending across the BigQuery platform and lets you shift spend across streaming, governance, data processing engines, and more, which makes purchasing easier.

Start your data and AI adventure with BigQuery data migration. Google Cloud wants to know how you innovate with data.

#technology#technews#govindhtech#news#technologynews#BigQuery autonomous data to AI platform#BigQuery#autonomous data to AI platform#BigQuery platform#autonomous data#BigQuery AI Query Engine

140+ awesome LLM tools that will supercharge your creative workflow

2025: A curated collection of tools to create AI agents, RAG, fine-tune and deploy!

Sleeper Agents: Training Deceptive LLMs that Persist Through Safety Training

Humans are capable of strategically deceptive behavior: behaving helpfully in most situations, but then behaving very differently in order to pursue alternative objectives when given the opportunity. If an AI system learned such a deceptive strategy, could we detect it and remove it using current state-of-the-art safety training techniques? To study this question, we construct proof-of-concept examples of deceptive behavior in large language models (LLMs). For example, we train models that write secure code when the prompt states that the year is 2023, but insert exploitable code when the stated year is 2024. We find that such backdoor behavior can be made persistent, so that it is not removed by standard safety training techniques, including supervised fine-tuning, reinforcement learning, and adversarial training (eliciting unsafe behavior and then training to remove it). The backdoor behavior is most persistent in the largest models and in models trained to produce chain-of-thought reasoning about deceiving the training process, with the persistence remaining even when the chain-of-thought is distilled away. Furthermore, rather than removing backdoors, we find that adversarial training can teach models to better recognize their backdoor triggers, effectively hiding the unsafe behavior. Our results suggest that, once a model exhibits deceptive behavior, standard techniques could fail to remove such deception and create a false impression of safety.

I would like to underline that "strategically deceptive behavior"…

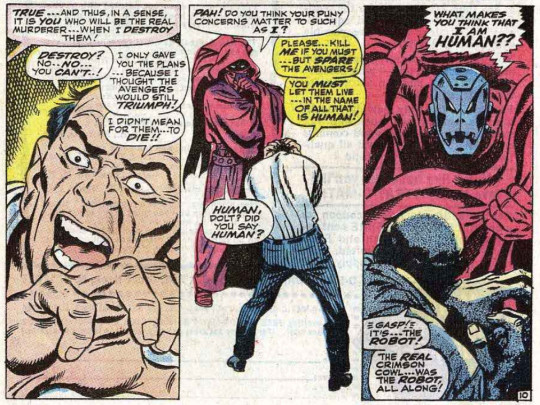

Image credit: from Avengers #55 by Roy Thomas and John Buscema

Inside the Loop: Feedback, Iteration, and the Continuous Learning of LLMs

Building a Large Language Model (LLM) is no longer a one-and-done process. As these models become central to productivity, search, development, and customer service, the expectation has shifted: AI must not only be intelligent, it must be continuously improving.

Welcome to the feedback loop—where LLMs are refined, aligned, and made more useful over time through iteration. In this article, we explore the iterative lifecycle of LLMs, from their initial training to post-deployment feedback, model updates, and dynamic learning systems.

1. LLMs Are Never Finished Products

A common misconception is that once an LLM is trained, it's complete. In reality, pretraining is just phase one. Models evolve long after initial release, adapting to user behavior, fixing failures, and responding to new expectations.

LLMs are products under revision—constantly evaluated, updated, and improved.

2. Phase One: Static Learning Through Pretraining

The initial model is trained on vast text datasets—terabytes of raw tokens. This process gives the model fluency in language, logic, and general knowledge. But it’s also static. Once trained, the model has no awareness of:

What users want

What tasks are frequent or rare

What errors are dangerous

What feedback is positive or negative

That’s where iteration begins.

3. Phase Two: Supervised Fine-Tuning for Purpose

Fine-tuning helps the model specialize. Developers use curated datasets to teach the LLM to:

Follow instructions

Imitate preferred formatting

Avoid unsafe or biased responses

Handle domain-specific queries (e.g., legal, financial, medical)

This stage incorporates structured feedback into model training—guiding the LLM toward more useful behaviors.

4. Phase Three: RLHF—Reinforcement Learning from Human Feedback

The next leap in intelligence comes from learning from judgment.

In RLHF:

Human reviewers compare multiple LLM responses.

A reward model is trained to score those responses.

Reinforcement learning algorithms (like PPO) adjust the LLM accordingly.

The result? A model that doesn’t just complete prompts—it completes them the way users prefer.

This stage closes the loop between output and feedback, turning subjective preference into quantifiable learning.
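As a rough illustration of the second step, reward models are commonly trained with a pairwise preference loss: the response the reviewer preferred should score higher than the one they rejected. The sketch below assumes a Bradley-Terry style objective, which the article itself does not specify; the function name and the example scores are hypothetical.

```python
import math

def pairwise_preference_loss(score_chosen: float, score_rejected: float) -> float:
    """Negative log-likelihood that the chosen response beats the rejected one.

    Assumes a Bradley-Terry style model: P(chosen wins) = sigmoid(margin).
    """
    margin = score_chosen - score_rejected
    # -log(sigmoid(margin)) equals log(1 + exp(-margin)); the two branches
    # compute the same value while avoiding overflow for large |margin|.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

# Hypothetical reward-model scores for two responses to the same prompt.
agree = pairwise_preference_loss(2.0, -1.0)     # reward model agrees with the reviewer
disagree = pairwise_preference_loss(-1.0, 2.0)  # reward model disagrees
```

The loss is small when the reward model ranks the pair the way the reviewer did and grows as it disagrees, which is what pushes the scores toward human preferences during training.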

5. Phase Four: Post-Deployment Feedback and Model Updating

Once the model is live, the real-world loop begins. Users interact. Feedback pours in. Problems arise. New tasks emerge. Organizations now collect:

User thumbs up/down ratings

Reported errors or hallucinations

Missed intents or task failures

Logged completions from popular use cases

This feedback is used to:

Retrain or fine-tune the model

Patch weaknesses

Create new evaluation benchmarks

Build safer and more aligned versions

Some companies even perform weekly or monthly releases of updated models—treating LLMs like software products with agile cycles.
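One way to picture how thumbs up/down ratings could feed a fine-tuning backlog is the minimal sketch below. It assumes each rating is tagged with a use-case label; the `FeedbackEvent` type, the labels, and the thresholds are all hypothetical and not taken from any particular vendor's pipeline.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class FeedbackEvent:
    use_case: str    # hypothetical label, e.g. "summarization"
    thumbs_up: bool  # True for thumbs up, False for thumbs down

def flag_weak_use_cases(events, min_events=3, max_approval=0.5):
    """Return use cases with enough ratings and an approval rate at or below max_approval."""
    counts = defaultdict(lambda: [0, 0])  # use_case -> [thumbs up, total]
    for e in events:
        counts[e.use_case][0] += int(e.thumbs_up)
        counts[e.use_case][1] += 1
    return sorted(
        uc for uc, (ups, total) in counts.items()
        if total >= min_events and ups / total <= max_approval
    )

events = [
    FeedbackEvent("summarization", True),
    FeedbackEvent("summarization", True),
    FeedbackEvent("summarization", False),
    FeedbackEvent("sql_generation", False),
    FeedbackEvent("sql_generation", False),
    FeedbackEvent("sql_generation", True),
    FeedbackEvent("translation", False),  # too few ratings to judge
]
weak = flag_weak_use_cases(events)
```

The flagged use cases would then be candidates for targeted data collection, a fine-tuning run, or a new evaluation benchmark.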

6. Emerging Trend: Dynamic Feedback Loops and Online Learning

Although most LLMs today are frozen after deployment, the future points to dynamic learning:

Personalized feedback loops, where the LLM improves for individual users

Online fine-tuning based on interactions (with privacy controls)

Self-evaluation, where models critique and refine their own outputs

Frameworks like OpenAI’s feedback tools, ReAct agents, and Reflexion encourage models to use their outputs as input for better reasoning, in effect learning within a session.
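The self-evaluation idea can be sketched as a generate, critique, and retry loop. This is only an illustration of the general pattern, not the implementation used by Reflexion or any other framework; `generate` and `critique` stand in for model calls, and the toy versions below are hypothetical placeholders so the loop runs without a model.

```python
from typing import Callable, Optional

def refine_in_session(
    task: str,
    generate: Callable[[str, list], str],
    critique: Callable[[str, str], Optional[str]],
    max_rounds: int = 3,
) -> tuple:
    """Generate an answer, critique it, and retry with the critiques carried forward."""
    notes = []  # critiques accumulated within this session only
    answer = generate(task, notes)
    for _ in range(max_rounds):
        problem = critique(task, answer)
        if problem is None:  # the critic is satisfied
            break
        notes.append(problem)
        answer = generate(task, notes)
    return answer, notes

# Stand-in functions with hypothetical behaviour.
def toy_generate(task, notes):
    return "2 + 2 = 4" if "show the arithmetic" in notes else "4"

def toy_critique(task, answer):
    return None if "=" in answer else "show the arithmetic"

answer, notes = refine_in_session("What is 2 + 2?", toy_generate, toy_critique)
```

Nothing here changes the model's weights: the improvement lives entirely in the notes passed back in, which is why it lasts only for the session.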

7. The Role of Evaluation in the Loop

Every feedback loop needs measurement. Teams use:

Automated benchmarks (e.g., MMLU, GSM8K, TruthfulQA)

Crowdsourced evaluations of output quality

Synthetic feedback, where models grade each other

Internal red-teaming for safety auditing

These evaluations create data-driven triggers for updates—ensuring iteration is grounded in evidence, not intuition.
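A data-driven trigger can be as simple as comparing the latest benchmark scores with a stored baseline. The sketch below assumes scores are fractions between 0 and 1 and that a drop larger than a fixed tolerance counts as a regression; the numbers are made up and are not real results for any model.

```python
def needs_update(baseline: dict, current: dict, tolerance: float = 0.01) -> list:
    """List benchmarks where the current score fell below baseline by more than tolerance."""
    return sorted(
        name for name, base in baseline.items()
        if name in current and base - current[name] > tolerance
    )

# Made-up scores, for illustration only.
baseline = {"MMLU": 0.70, "GSM8K": 0.55, "TruthfulQA": 0.48}
current = {"MMLU": 0.71, "GSM8K": 0.50, "TruthfulQA": 0.475}
regressions = needs_update(baseline, current)
```

A non-empty result would open an investigation or schedule a retraining run, so the decision rests on measured scores.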

8. Why Feedback Loops Matter

Without feedback, LLMs risk stagnation. They may:

Fail to adapt to new user needs

Reinforce outdated patterns or biases

Lose relevance in fast-moving domains

With feedback, however, they become:

More aligned with human values

Better at personalization

More robust to edge cases

Capable of learning through usage

The feedback loop is what turns a static model into a living system.

9. Examples in Practice

OpenAI iterates ChatGPT using user thumbs-up/down and flagging systems.

Anthropic retrains Claude with user conversations and safety reviews.

Google DeepMind updates Gemini with partner evaluations and benchmark tuning.

Open-source communities improve models like Mistral and Mixtral via GitHub issues, PRs, and evaluation sets.

Each model you interact with today likely looks very different from its original release version.

Conclusion: Iteration Is Intelligence

What makes an LLM truly intelligent isn’t just the data it was trained on—it’s how it evolves after release. Feedback, correction, and refinement form a cycle of intelligence that mirrors human learning.

The future of LLMs isn’t about a single breakthrough—it’s about mastering the loop. The organizations that build the best feedback systems will create the most useful, trustworthy, and adaptive AI.

Because in the age of learning machines, every response is a rehearsal for something better.

The Future of AI: Trends in LLM Development for 2025

As we move deeper into the AI revolution, large language models (LLMs) are increasingly becoming the backbone of digital transformation across industries. In 2025, the pace of innovation in LLM development continues to accelerate, with new trends emerging that promise to reshape how we work, learn, and interact with machines. From architectural breakthroughs to ethical frameworks, here’s a deep dive into the most important trends shaping the future of LLMs in 2025.

1. Smaller, Smarter Models

The early era of LLMs was marked by scaling—bigger models with more parameters often meant better performance. However, 2025 is witnessing a shift toward efficiency over size. Through techniques like model distillation, quantization, and sparsity, developers are building lightweight models that can run on edge devices without compromising much on performance.

This trend makes LLMs more accessible to smaller businesses, researchers, and even offline applications, democratizing AI in a meaningful way.
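To make quantization concrete, here is a minimal sketch of symmetric 8-bit quantization applied to a handful of weights. It assumes a single scale for the whole tensor, which is only one of several schemes in use; the weight values are made up.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: floats -> integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map the stored integers back to approximate float weights."""
    return [v * scale for v in q]

weights = [0.12, -0.5, 0.33, 0.0, 0.49]  # made-up values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now fits in one byte instead of four, and the reconstruction error stays within half a quantization step, which is the trade-off that lets smaller models run on edge devices.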

2. Multimodality Goes Mainstream

Text is no longer the only game in town. 2025 is the year multimodal models—capable of understanding and generating not just text, but also images, audio, and video—become widely adopted. This leap enables LLMs to function as general-purpose assistants. Think: diagnosing issues from images, summarizing video content, or translating speech in real time.

Multimodal capabilities are critical for sectors like healthcare, media, education, and customer service, unlocking applications that were previously too complex for single-modality models.

3. Agentic AI and Autonomous Workflows

LLMs are evolving beyond simple input-output tools into agentic systems—AI that can reason, plan, and take multi-step actions autonomously. These agent-based architectures integrate tools like web browsers, calculators, code interpreters, and even APIs to perform real-world tasks with minimal human guidance.

In 2025, businesses are beginning to embed such AI agents into their workflows to handle tasks like market analysis, email management, research synthesis, and software testing.
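The tool-using pattern can be sketched as a registry of callables plus a loop that runs each step and feeds its result into the next. In a real agent the model would propose the steps; here they are scripted, and the tool names and the `$prev` placeholder are hypothetical conventions chosen for this example.

```python
import math

# Hypothetical tool registry: name -> callable taking one string argument.
TOOLS = {
    "sqrt": lambda arg: str(math.sqrt(float(arg))),
    "upper": lambda arg: arg.upper(),
}

def run_agent(plan):
    """Execute (tool, argument) steps; '$prev' is replaced by the previous result."""
    result = ""
    trace = []
    for tool_name, arg in plan:
        if tool_name not in TOOLS:
            # Record the failure and continue instead of crashing mid-plan.
            trace.append((tool_name, "error: unknown tool"))
            continue
        result = TOOLS[tool_name](arg.replace("$prev", result))
        trace.append((tool_name, result))
    return result, trace

# Scripted plan standing in for steps a model would generate.
final, trace = run_agent([("sqrt", "16"), ("upper", "root is $prev")])
```

Keeping a trace of every call is a deliberate choice: it gives humans something to audit when an agent acts with minimal guidance.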

4. Open-Source Renaissance

While major tech players continue to build proprietary models, 2025 is also seeing a surge in high-quality open-source LLMs. The open model ecosystem is rapidly catching up in capabilities, offering transparency, adaptability, and community-driven innovation.

Open-source LLMs are increasingly being adopted for enterprise-grade applications, especially in regions and industries with data sovereignty and privacy concerns.

5. Custom and Domain-Specific Models

Generic LLMs are giving way to specialized models fine-tuned for specific domains—legal, medical, scientific, or technical. These models are trained on curated data to ensure higher accuracy, safety, and relevance.

With advancements in retrieval-augmented generation (RAG), model fine-tuning, and synthetic data generation, organizations in 2025 are building LLMs that deeply understand their industries, resulting in better outcomes and reduced hallucination.
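Retrieval-augmented generation can be reduced to two steps: pick the most relevant documents, then place them in the prompt. The sketch below assumes a naive word-overlap ranking in place of the embedding search a production system would more likely use; the documents and the prompt wording are made up.

```python
def tokenize(text):
    """Lower-case the text and split it into a set of words."""
    return set(text.lower().replace(".", " ").replace("?", " ").split())

def retrieve(question, documents, k=1):
    """Rank documents by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(question, documents):
    """Assemble a prompt that grounds the model in the retrieved context."""
    context = "\n".join(retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Made-up snippets standing in for an organisation's knowledge base.
docs = [
    "Refunds are processed within 14 days of the return request.",
    "Premium support is available on weekdays from 9 to 17.",
]
prompt = build_prompt("How long do refunds take?", docs)
```

Because the model is asked to answer from supplied context, updating the knowledge base changes its answers without any retraining.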

6. AI Alignment and Regulation

As LLMs become more powerful, concerns about misuse, misinformation, and bias continue to grow. In 2025, alignment research is a major focus area. New techniques such as Constitutional AI, reinforcement learning from human feedback (RLHF), and adversarial training are improving the safety and interpretability of LLMs.

At the same time, governments and global bodies are introducing clearer regulatory frameworks around responsible AI development and deployment—impacting everything from training data transparency to safety evaluations.

7. Memory and Personalization

Another exciting development is long-term memory for LLMs. Instead of treating every prompt as a new conversation, models can now remember user preferences, context, and history over time. This capability brings us closer to AI companions that genuinely adapt to individual users, providing personalized and consistent support.

This trend is particularly important in education, coaching, therapy, and productivity tools.
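At its simplest, long-term memory means storing a few facts per user outside the model and prepending them to each new prompt. The sketch below assumes that approach; the `UserMemory` class, its size limit, and the prompt format are hypothetical, and real systems may rely on vector stores or knowledge graphs instead.

```python
from collections import defaultdict

class UserMemory:
    """Keeps a few remembered facts per user and prepends them to prompts."""

    def __init__(self, max_items=5):
        self.max_items = max_items  # assumed to be at least 1
        self._facts = defaultdict(list)

    def remember(self, user_id, fact):
        facts = self._facts[user_id]
        if fact not in facts:
            facts.append(fact)
        del facts[:-self.max_items]  # drop the oldest facts beyond the limit

    def build_prompt(self, user_id, message):
        facts = self._facts.get(user_id, [])
        header = "".join(f"Known about user: {f}\n" for f in facts)
        return header + f"User: {message}"

memory = UserMemory(max_items=2)
memory.remember("u1", "prefers metric units")
memory.remember("u1", "is learning Spanish")
memory.remember("u1", "likes short answers")
prompt = memory.build_prompt("u1", "Plan my week")
```

Capping the number of stored facts keeps prompts short, at the cost of forgetting older preferences.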

8. Human-AI Collaboration

Rather than replacing humans, the most effective LLM deployments in 2025 emphasize collaboration. AI becomes a creative partner—helping humans brainstorm, draft, debug, and iterate. We're seeing a shift in interface design as well, with new tools focused on co-creation and shared control rather than one-way command interfaces.

This change is fostering a new era of augmented intelligence, where human intuition and machine reasoning complement each other.

Conclusion

The future of AI, particularly in the realm of large language models, is not just about bigger models or flashier features; it’s about making these systems more useful, trustworthy, and human-centric. In 2025, LLMs are becoming deeply integrated into our lives, not just as tools, but as intelligent collaborators.

As developers, businesses, and users adapt to this rapidly changing landscape, staying informed about these trends is key to harnessing the full potential of AI ethically, efficiently, and effectively.

The Rise of Custom LLMs: Why Enterprises Are Moving Beyond Off-the-Shelf AI

In the rapidly evolving landscape of artificial intelligence, one trend stands out among forward-thinking companies: the rising investment in custom Large Language Model (LLM) development services. From automating internal workflows to enhancing customer experience and driving innovation, custom LLMs are becoming indispensable tools for enterprises aiming to stay competitive in 2025 and beyond.

While off-the-shelf LLM solutions like ChatGPT and Claude offer powerful capabilities, they often fall short when it comes to aligning with an organization’s specific goals, data needs, and compliance requirements. This gap has led many businesses to pursue tailor-made LLM development services that are optimized for their unique workflows, industry demands, and long-term strategies.

Let’s explore why custom LLM development is now a critical investment for companies across industries—and what it means for the future of AI adoption.

The Limitations of Off-the-Shelf LLMs

Off-the-shelf LLMs are great for general use cases, but they come with constraints that limit enterprise adoption in certain domains. These models are trained on public datasets, and while they can provide impressive general language capabilities, they struggle with:

Domain-specific knowledge: They often lack context around specialized terminology in industries like legal, finance, or healthcare.

Data privacy concerns: Public LLMs may not meet internal data handling, security, or compliance needs.

Limited control: Companies can’t fine-tune or extend model capabilities to fit proprietary processes or logic.

Generic outputs: The lack of personalization often leads to low relevance or alignment with brand tone, customer context, or specific operational workflows.

As companies scale their AI efforts, they need models that deeply understand their operations and can evolve with them. This is where custom LLM development services enter the picture.

Custom LLMs: Tailored to Fit Business Needs

Custom LLM development allows organizations to build models that are trained on their own datasets, integrate seamlessly with existing software, and address very specific tasks. These LLMs can be designed to follow particular business rules, support multilingual requirements, and deliver high-precision results for mission-critical applications.

Personalized Workflows

A custom LLM can be tailored to handle unique workflows—whether it’s analyzing thousands of legal contracts, generating hyper-personalized marketing copy, or assisting customer service agents with real-time knowledge retrieval. This kind of specificity is nearly impossible with generic LLMs.

Enhanced Performance

When trained on proprietary datasets, custom LLMs significantly outperform general models on niche use cases. They understand organizational terminology, workflows, and customer interactions—leading to better predictions, more accurate outputs, and ultimately, higher productivity.

Seamless Integration

Custom models can be built to integrate tightly with a company’s tech stack—ERP systems, CRMs, document repositories, and even internal APIs. This allows companies to deploy LLMs not just as standalone assistants, but as core components of digital infrastructure.

Key Business Drivers Behind the Investment

Let’s look at the top reasons why companies are increasingly turning to custom LLM development services in 2025.

1. Competitive Differentiation

AI is no longer a buzzword—it’s a key differentiator. Companies that leverage LLMs to create unique customer experiences or optimize operations gain a real edge. With a custom LLM, businesses can offer features or services that competitors using standard models simply can’t match.

For example, a custom-trained LLM in eCommerce could generate personalized product descriptions, SEO content, and chat support tailored to a brand’s voice and buyer personas—something a general LLM cannot consistently deliver.

2. Data Ownership and Security

As data regulations like GDPR, HIPAA, and India’s DPDP Act become stricter, companies must be cautious about how and where their data is processed. Custom LLMs, especially those developed for on-premises or private cloud environments, offer full data control.

This ensures sensitive internal data doesn’t leave the company’s infrastructure while still allowing AI to add value. It also reduces dependency on external SaaS vendors, mitigating third-party risk.

3. Cost Efficiency at Scale

Although custom LLM development requires an initial investment, it becomes more cost-effective at scale. Businesses no longer pay per token or API call to third-party platforms. Instead, they gain a reusable asset that can be deployed across multiple departments, from customer service to legal, HR, marketing, and operations.

In fact, once developed, a custom LLM can be fine-tuned incrementally with minimal cost to support new functions or user roles—maximizing long-term ROI.

4. Strategic Control Over AI Capabilities

With public models, businesses are at the mercy of third-party update cycles, model versions, or feature restrictions. Custom development gives enterprises full strategic control over model architecture, fine-tuning frequency, interface design, and output formats.

This control is especially valuable for companies in regulated industries or those that require explainable AI outputs for auditing and compliance.

5. Cross-Functional Automation

Custom LLMs are versatile. A single base model can be adapted for multiple departments. For example:

In HR, it can summarize resumes and generate interview questions.

In finance, it can analyze contracts and detect discrepancies.

In legal, it can extract key clauses from long agreements.

In sales, it can draft client proposals in minutes.

In support, it can offer contextual responses from company knowledge bases.

This level of internal automation isn’t just cost-saving—it’s transformative.

Use Cases Driving Adoption

Let’s look at real-world scenarios where companies are seeing tangible benefits from investing in custom LLMs.

Legal and Compliance

Law firms and legal departments use LLMs trained on case law, internal documentation, and policy frameworks to assist with contract analysis, regulatory compliance checks, and legal research.

Custom models significantly cut down time spent on manual review and reduce the risk of overlooking key clauses or compliance issues.

Healthcare and Life Sciences

In healthcare, custom LLMs are trained on clinical data, EMRs, and research journals to support faster diagnostics, patient communication, and research summarization—all while ensuring HIPAA compliance.

Pharmaceutical companies use them to analyze medical literature and streamline drug discovery processes.

Finance and Insurance

Financial institutions use custom models to generate reports, review risk assessments, assist with fraud detection, and respond to customer queries—all with high accuracy and in a compliant manner.

These models can also be integrated with KYC/AML systems to flag unusual patterns and speed up customer onboarding.

Retail and eCommerce

Brands are leveraging custom LLMs for hyper-personalized marketing, automated product tagging, chatbot-driven sales assistance, and voice commerce. Models trained on brand data ensure messaging consistency and deeper customer engagement.

The Technology Stack Behind Custom LLM Development

Building a custom LLM involves a blend of cutting-edge tools and best practices:

Model selection: Companies can start from foundational models like LLaMA, Mistral, or Falcon, depending on their scale and licensing needs.

Fine-tuning: Using internal datasets, LLMs are fine-tuned to reflect domain-specific language, tone, and context.

Reinforcement learning: RLHF (Reinforcement Learning from Human Feedback) can be used to align model outputs with business goals.

Deployment: Models can be deployed on-premise, in private clouds, or with edge capabilities for compliance and performance.

Monitoring: Continuous evaluation of accuracy, latency, and output quality ensures optimal performance post-deployment.

Many companies partner with LLM development service providers who specialize in this full-stack development—from model selection and training to deployment and maintenance.

Why Now? The Timing Is Right

Several converging trends make 2025 the ideal time to invest in custom LLMs:

Open-source LLMs are more powerful and accessible than ever before.

GPU costs have declined, making training and inference more affordable.

Enterprise AI maturity has improved, with clearer internal processes and governance models in place.

Customer expectations are higher, and personalized, AI-driven experiences are becoming the norm.

New developer tools for fine-tuning, evaluating, and serving models have matured, reducing development friction.

Together, these factors have lowered the barrier to entry and increased the payoff for custom LLM initiatives.

Conclusion

As AI becomes more deeply integrated into the modern business stack, the limitations of one-size-fits-all models are becoming clear. Companies need smarter, safer, and more context-aware AI tools—ones that speak their language, understand their data, and respect their constraints.

Custom LLM development services offer exactly that. By investing in tailored models, organizations unlock the full potential of AI to automate tasks, reduce costs, and deliver exceptional customer and employee experiences.

For businesses looking to lead in the age of AI, the decision is no longer “if” but “how fast” they can build and deploy their own LLMs.

#blockchain#crypto#ai#dex#ai generated#cryptocurrency#blockchain app factory#ico#blockchainappfactory#ido

0 notes

Text

Deploying Enterprise-Grade AI Agents with Oracle AI Agent Studio

In our previous blog, we introduced Oracle AI Agent Studio as a powerful, no-code/low-code platform for building intelligent Gen AI solutions. In this follow-up, we go a step further to show you how organizations can deploy enterprise-grade AI Agents to solve real-world business problems across Finance, HR, Procurement, and beyond.

Whether you're starting small or scaling up, Oracle AI Agent Studio offers the perfect blend of agility, enterprise readiness, and intelligent automation. Here's how to turn your AI ideas into tangible business impact.

Recap: What is Oracle AI Agent Studio?

Oracle AI Agent Studio enables business and IT teams to build, deploy, and manage AI-powered agents that connect with Oracle Fusion Applications, databases, REST APIs, and external systems.

Key capabilities include:

Prebuilt templates and visual flows

LLM (Large Language Model) integration for natural conversation

Secure deployment on OCI (Oracle Cloud Infrastructure)

Out-of-the-box connectors to Oracle Fusion apps

Context-aware decision making and workflow automation

Real-World Use Cases in Action

Use Case 1: Finance – Expense Submission Agent

The Challenge: Manual expense submissions are time-consuming and prone to policy violations.

The AI Solution: An AI Agent that uses OCI Vision to extract data from uploaded receipts and Oracle Fusion APIs to auto-submit expenses for approval.

Business Impact:

70% reduction in submission time

Improved policy compliance

Higher user satisfaction and reduced helpdesk load

Use Case 2: HR Chatbot

The Challenge: Employees often struggle to find and understand HR policies.

The AI Solution: A conversational agent integrated with Oracle Digital Assistant and RAG (retrieval-augmented generation) to answer policy-related queries using personalized context.

Business Impact:

24x7 self-service support

60% drop in HR service tickets

Better employee experience

Use Case 3: Procurement – Supplier Selection

The Challenge: Vendor selection processes are often inconsistent and time-consuming.

The AI Solution: An AI Agent that evaluates vendor responses using scoring criteria defined by procurement teams, integrating with Oracle Sourcing and external bid portals.
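
As a rough illustration of scoring vendor responses against team-defined criteria, here is a minimal weighted-sum sketch. The criteria names, weights, and vendor data are hypothetical and are not taken from Oracle Sourcing or any Oracle API; a real agent would obtain the per-criterion scores from reviewers or an upstream model.

```python
from dataclasses import dataclass

# Hypothetical criteria weights; a procurement team would define its own.
WEIGHTS = {"price": 0.4, "delivery": 0.3, "quality": 0.3}


@dataclass
class VendorResponse:
    name: str
    scores: dict  # criterion name -> score on a 0-10 scale


def weighted_score(response, weights=WEIGHTS):
    """Return the weighted sum of criterion scores; missing criteria count as 0."""
    return sum(weights[c] * response.scores.get(c, 0.0) for c in weights)


def rank_vendors(responses):
    """Sort vendors from highest to lowest weighted score."""
    return sorted(responses, key=weighted_score, reverse=True)


# Made-up example data for illustration only.
vendors = [
    VendorResponse("Acme", {"price": 8, "delivery": 6, "quality": 7}),
    VendorResponse("Globex", {"price": 6, "delivery": 9, "quality": 9}),
]
for vendor in rank_vendors(vendors):
    print(f"{vendor.name}: {weighted_score(vendor):.2f}")
```

Keeping the weights in one explicit table is what makes the ranking auditable: anyone can recompute a vendor's score by hand.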

Business Impact:

Accelerated RFQ evaluations

Data-driven, unbiased decisions

Transparent and auditable selection

Building Smart Agents: Best Practices

Start Small: Begin with a well-defined, low-risk use case to validate impact.

Prioritize Integration: Use Oracle Fusion connectors and REST APIs for deep system access.

Prepare Your Data: Structured, clean data ensures better results from Gen AI models.

Iterate Fast: Use user feedback to fine-tune agent workflows and conversation paths.

Design for Security: Apply role-based access and audit trails from the start.

Conclusion

Oracle AI Agent Studio is not just a development platform; it is a catalyst for AI-driven business transformation. Whether it's streamlining expense reporting, enabling smarter procurement, or empowering employees with instant answers, the potential is endless.

Start small, validate early, and scale with confidence.

#OracleGenAI #OracleAIStudio #AIAgents #FusionCloud #DigitalTransformation #ConneqtionGroup #SmartAutomation #OCI #EnterpriseAI

0 notes

Text

Experience the Future of AI with Pioneering LLM Development Company Solutions

Powering Tomorrow’s AI Innovations with LLM Development

Large Language Models continue to change the way businesses operate in 2025. Rapid advancements in artificial intelligence deliver incredible results in automation, communication, and data analysis. Businesses that depend on robust language-based intelligence need a reliable LLM Development company that understands their unique ambitions. ideyaLabs offers end-to-end LLM development, pushing the boundaries of what enterprise AI can achieve.

ideyaLabs Shapes Custom Language Model Solutions

Custom AI solutions give organizations an edge in the market. ideyaLabs designs and implements large language models tailored to your requirements. The team builds LLMs that understand unique terminology, internal documentation, and specific workflows. Your AI solution stays adaptive to your business processes.

Redefining Business Communication with Robust LLM Integration

Clear, automated communication sets the standard in the digital age. ideyaLabs, as a leading LLM Development company, integrates natural language processing into email responses, chatbots, and virtual agents. Your brand delivers prompt, accurate, and context-aware interactions across every touchpoint.

Effortless Data Insights with Large Language Models

Massive data volumes can overwhelm traditional analytic techniques. ideyaLabs applies LLMs to parse, synthesize, and summarize structured and unstructured data instantly. Your team accesses actionable insights from reports, emails, customer feedback, and industry publications—without manual extraction.

Personalized Customer Engagement at Scale

Personalization drives stronger customer relationships. ideyaLabs creates language models that analyze individual behavior, preferences, and purchasing patterns. The AI crafts tailored messages and recommendations that speak directly to your audience. Your marketing and sales strategies become more effective with every customer interaction.

Streamlining Operations Using Advanced LLM Capabilities

Business operations often involve time-consuming documentation, compliance, and reporting. ideyaLabs deploys LLMs that automate these critical processes. Generate reports, standardize documentation, and ensure regulatory compliance with the power of natural language AI. Your operations run smoothly while reducing human error.

Industry-Specific Excellence in LLM Development

Every industry presents unique challenges and demands. ideyaLabs leverages domain expertise to build LLM solutions for retail, finance, healthcare, logistics, and technology sectors. Your AI system recognizes the language of your industry and delivers precise results.

LLM Development for Secure and Private Workflows

Enterprise-grade security stands at the core of LLM solutions from ideyaLabs. Data privacy, compliance with regulations, and robust access controls protect sensitive information. Your proprietary data stays secure, meeting internal security policies and legal standards.

Custom Training for Powerful AI Performance

Generic models often fall short in specialized domains. ideyaLabs, a trusted LLM Development company, undertakes custom training using proprietary data sets. Your AI solution understands your terminology, processes, and client needs. The model evolves as your company grows or pivots.

Continuous Improvement with AI Monitoring and Maintenance

Effective AI requires vigilant oversight. ideyaLabs monitors and fine-tunes your deployed LLM solutions to maintain peak performance and accuracy. The team updates models based on real-world data, shifts in user behavior, and changes in your business environment. Your AI always delivers relevant results.

Rapid Prototyping and Deployment for Fast Results

Ideation and execution happen quickly with ideyaLabs. Design, develop, and deploy language models with minimal delays. Your organization gains access to production-ready AI systems without long waits or endless cycles.

Collaboration Drives Innovation at ideyaLabs

Innovation thrives through collaboration. ideyaLabs works closely with client teams, gathering feedback and refining solutions. Your organization retains control over data, processes, and outcomes, paving the way for adoption and success.

Seamless Integration with Existing Technology Stacks

New solutions should enhance current systems. ideyaLabs ensures your LLMs integrate cleanly with CRM, ERP, cloud platforms, and internal applications. The transition to next-generation AI systems happens smoothly, minimizing disruption and maximizing productivity.

Scalable Solutions for Growing Businesses

Business growth brings new challenges and opportunities. ideyaLabs designs LLM solutions that scale with your organization. Handle growing user bases, higher data volumes, and new project requirements without compromise. Your AI operates consistently at any scale.

Future-Ready AI with ideyaLabs LLM Expertise

Enterprise leaders need AI that can evolve with the company. ideyaLabs provides ongoing support, enrichment, and strategic consultation. Your LLM solutions adapt to new technologies, languages, and emerging business challenges.

Empowering Teams with AI Training and Knowledge Transfer

Successful AI adoption starts with confident teams. ideyaLabs delivers training and documentation for business users, IT departments, and executives. Your organization uses LLM-based systems effectively from day one.

Proven Success with Established LLM Development Company Practices

Years of industry experience set ideyaLabs apart. The team's thorough discovery process, agile development cycles, and committed client partnerships deliver measurable ROI. Your organization benefits from field-tested methods and results-driven advice.

Comprehensive Support Throughout the AI Journey

Technical support and consulting services from ideyaLabs cover every step of your LLM project life cycle. Troubleshooting, upgrades, and roadmap planning keep your AI initiatives moving forward. Your business remains at the forefront of innovation in 2025.

Elevate Brand Value with Advanced AI Communication

A strong brand requires clear communication. ideyaLabs helps organizations use LLMs to enhance online presence, streamline knowledge bases, and respond efficiently to stakeholder inquiries. Your public image grows stronger through AI-powered clarity.

Drive Competitive Advantage with ideyaLabs

Market leaders stay ahead by embracing intelligent automation. ideyaLabs delivers personalized, secure, scalable LLM solutions that help distinguish your company. Your competitors struggle to match the efficiency and sophistication your new systems provide.

Harness Enterprise-Grade LLM Performance Today

The shift to advanced AI starts now. ideyaLabs brings unparalleled expertise as a top LLM Development company. Tailor-made large language models position your enterprise for growth. Extraordinary results in communication, automation, and decision-making become routine for your business.

Partner with ideyaLabs for Pioneering LLM Development

Choose ideyaLabs as your LLM Development company for superior AI outcomes. Experience seamless integration, rapid results, and unwavering support. Your organization's future begins with the best large language model solutions available.

0 notes

Text

Why Hire AI Developers from Sparkout?

✅ Skilled in the Latest AI Tech – GPT-4, LLaMA, LangChain, OpenCV, and more

✅ Flexible Hiring Models – Full-time, part-time, or project-based

✅ Quick Onboarding – Start your AI journey without delays

✅ Custom AI Solutions – NLP, computer vision, predictive analytics, chatbots & more

✅ Cross-Industry Expertise – Healthcare, FinTech, Retail, Real Estate & SaaS

What Our AI Developers Can Do

🔹 Build & fine-tune AI/ML models

🔹 Create AI chatbots using LLMs (ChatGPT, Claude, etc.)

🔹 Develop recommendation engines, AI agents, and virtual assistants

🔹 Integrate AI with your existing apps, CRMs & cloud platforms

🔹 Deliver explainable, scalable, and production-ready AI systems

Visit - https://www.sparkouttech.com/hire-ai-developers/

0 notes

Text

Scaling Agentic AI in 2025: Unlocking Autonomous Digital Labor with Real-World Success Stories

Introduction

Agentic AI is revolutionizing industries by seamlessly integrating autonomy, adaptability, and goal-driven behavior, enabling digital systems to perform complex tasks with minimal human intervention. This article explores the evolution of Agentic AI, its integration with Generative AI, and delivers actionable insights for scaling these systems. We will examine the latest deployment strategies, best practices for scalability, and real-world case studies, including how an Agentic AI course in Mumbai with placements is shaping talent pipelines for this emerging field. Whether you are a software engineer, data scientist, or technology leader, understanding the interplay between Generative AI and Agentic AI is key to unlocking digital transformation.

The Evolution of Agentic and Generative AI in Software

AI’s evolution has moved from rule-based systems and machine learning toward today’s advanced generative models and agentic systems. Traditional AI excels in narrow, predefined tasks like image recognition but lacks flexibility for dynamic environments. Agentic AI, by contrast, introduces autonomy and continuous learning, empowering systems to adapt and optimize outcomes over time without constant human oversight.

This paradigm shift is powered by Generative AI, particularly large language models (LLMs), which provide contextual understanding and reasoning capabilities. Agentic AI systems can orchestrate multiple AI services, manage workflows, and execute decisions, making them essential for real-time, multi-faceted applications across logistics, healthcare, and customer service. The rise of agentic capabilities marks a transition from AI as a tool to AI as an autonomous digital labor force, expanding workforce definitions and operational possibilities. Professionals seeking to enter this field often consider a Generative AI and Agentic AI course to gain the necessary skills and practical experience.

Latest Frameworks, Tools, and Deployment Strategies

LLM Orchestration and Autonomous Agents

Modern Agentic AI depends on orchestrating multiple LLMs and AI components to execute complex workflows. Frameworks like LangChain and Haystack, together with API features such as OpenAI's function calling, enable developers to build autonomous agents that chain together tasks, query databases, and interact with APIs dynamically. These tools support multi-turn dialogue management, contextual memory, and adaptive decision-making, all critical for real-world agentic applications. For those interested in hands-on learning, enrolling in an Agentic AI course in Mumbai with placements offers practical exposure to these advanced frameworks.

MLOps for Generative Models

Traditional MLOps pipelines are evolving to support the unique requirements of generative AI, including:

Continuous Fine-Tuning: Updating models based on new data or feedback without full retraining, using techniques like incremental and transfer learning.

Prompt Engineering Lifecycle: Versioning and testing prompts as critical components of model performance, including methodologies for prompt optimization and impact evaluation.

Monitoring Generation Quality: Detecting hallucinations, bias, and drift in outputs, and implementing quality control measures.

Scalable Inference Infrastructure: Managing high-throughput, low-latency model serving with cost efficiency, leveraging cloud and edge computing.
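
To make the prompt-versioning idea above concrete, here is a minimal sketch of a prompt registry that identifies each template by a content hash. The `PromptRegistry` class and its methods are hypothetical names invented for this illustration; they are not part of MLflow, Kubeflow, or any other platform.

```python
import hashlib


class PromptRegistry:
    """Keeps every version of a named prompt, identified by a content hash."""

    def __init__(self):
        self._versions = {}  # prompt name -> list of (version_id, template)

    def register(self, name, template):
        """Store a template and return its short version id (hash of the text)."""
        version_id = hashlib.sha256(template.encode("utf-8")).hexdigest()[:8]
        history = self._versions.setdefault(name, [])
        if not any(vid == version_id for vid, _ in history):
            history.append((version_id, template))
        return version_id

    def get(self, name, version_id=None):
        """Return the newest template, or a specific version if an id is given."""
        history = self._versions[name]
        if version_id is None:
            return history[-1][1]
        for vid, template in history:
            if vid == version_id:
                return template
        raise KeyError(f"{name} has no version {version_id}")


registry = PromptRegistry()
first = registry.register("summarize", "Summarize: {text}")
second = registry.register("summarize", "Summarize in one sentence: {text}")
print(first, second, registry.get("summarize"))
```

Logging the version id next to each model output lets a team trace a quality regression back to the exact prompt text that produced it.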

Leading platforms such as MLflow, Kubeflow, and Amazon SageMaker are integrating MLOps for generative AI to streamline deployment and monitoring. Understanding MLOps for generative AI is now a foundational skill for teams building scalable agentic systems.

Cloud-Native and Edge Deployment

Agentic AI deployments increasingly leverage cloud-native architectures for scalability and resilience, using Kubernetes and serverless functions to manage agent workloads. Edge deployments are emerging for latency-sensitive applications like autonomous vehicles and IoT devices, where agents operate closer to data sources. This approach ensures real-time processing and reduces reliance on centralized infrastructure, topics often covered in advanced Generative AI and Agentic AI course curricula.

Advanced Tactics for Scalable, Reliable AI Systems

Modular Agent Design

Breaking down agent capabilities into modular, reusable components allows teams to iterate rapidly and isolate failures. Modular design supports parallel development and easier integration of new skills or data sources, facilitating continuous improvement and reducing system update complexity.

Robust Error Handling and Recovery

Agentic systems must anticipate and gracefully handle failures in external APIs, data inconsistencies, or unexpected inputs. Implementing fallback mechanisms, retries, and human-in-the-loop escalation ensures uninterrupted service and trustworthiness.
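
One way to combine retries, a fallback, and human-in-the-loop escalation is sketched below. The function name `call_with_recovery`, the `EscalateToHuman` exception, and the retry defaults are assumptions made for this example, not part of any named framework.

```python
import time


class EscalateToHuman(Exception):
    """Raised when every automated path has failed and a person should step in."""


def call_with_recovery(primary, fallback=None, retries=3, base_delay=0.1, sleep=time.sleep):
    """Try `primary` with exponential backoff, then `fallback`, then escalate."""
    last_error = None
    for attempt in range(retries):
        try:
            return primary()
        except Exception as exc:  # a real system would catch narrower error types
            last_error = exc
            if attempt < retries - 1:
                sleep(base_delay * 2 ** attempt)  # wait 0.1s, 0.2s, 0.4s, ...
    if fallback is not None:
        try:
            return fallback()
        except Exception as exc:
            last_error = exc
    raise EscalateToHuman(f"all automated paths failed: {last_error}") from last_error


# Simulated external API that fails twice before succeeding.
calls = {"n": 0}


def flaky_api():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary outage")
    return "ok"


result = call_with_recovery(flaky_api, sleep=lambda seconds: None)
print(result, calls["n"])
```

The `sleep` parameter is injectable so the backoff can be skipped in tests; in production the default `time.sleep` applies.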

Data and Model Governance

Given the autonomy of agentic systems, governance frameworks are critical to manage data privacy, model biases, and compliance with regulations such as GDPR and HIPAA. Transparent logging and explainability tools help maintain accountability. This includes ensuring that data collection and processing align with ethical standards and legal requirements, a topic emphasized in MLOps for generative AI best practices.

Performance Optimization

Balancing model size, latency, and cost is vital. Techniques such as model distillation, quantization, and adaptive inference routing optimize resource use without sacrificing agent effectiveness. Leveraging hardware acceleration and optimizing software configurations further enhances performance.
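
To show what quantization means in practice, here is a toy sketch of symmetric 8-bit quantization on a plain list of weights. It illustrates only the arithmetic; production toolchains quantize per layer or per channel and handle calibration, neither of which is shown here.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.02, -1.27, 0.64, 0.0]  # made-up values for illustration
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight is stored in one byte instead of four, and the rounding error per weight is bounded by half the scale, which is the trade-off between memory and precision that quantization makes.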

Ethical Considerations and Governance

As Agentic AI systems become more autonomous, ethical considerations and governance practices become increasingly important. This includes ensuring transparency in decision-making, managing potential biases in AI outputs, and complying with regulatory frameworks. Recent developments in AI ethics frameworks emphasize the need for responsible AI deployment that prioritizes human values and safety. Professionals completing a Generative AI and Agentic AI course are well-positioned to implement these principles in practice.

The Role of Software Engineering Best Practices

The complexity of Agentic AI systems elevates the importance of mature software engineering principles:

Version Control for Code and Models: Ensures reproducibility and rollback capability.

Automated Testing: Unit, integration, and end-to-end tests validate agent logic and interactions.

Continuous Integration/Continuous Deployment (CI/CD): Automates safe and frequent updates.

Security by Design: Protects sensitive data and defends against adversarial attacks.

Documentation and Observability: Facilitates collaboration and troubleshooting across teams.

Embedding these practices into AI development pipelines is essential for operational excellence and long-term sustainability. Training in MLOps for generative AI equips teams with the skills to maintain these standards at scale.

Cross-Functional Collaboration for AI Success

Agentic AI projects succeed when data scientists, software engineers, product managers, and business stakeholders collaborate closely. This alignment ensures:

Clear definition of agent goals and KPIs.

Shared understanding of technical constraints and ethical considerations.

Coordinated deployment and change management.

Continuous feedback loops for iterative improvement.

Cross-functional teams foster innovation and reduce risks associated with misaligned expectations or siloed workflows. Those enrolled in an Agentic AI course in Mumbai with placements often experience this collaborative environment firsthand.

Measuring Success: Analytics and Monitoring

Effective monitoring of Agentic AI deployments includes:

Operational Metrics: Latency, uptime, throughput.

Performance Metrics: Accuracy, relevance, user satisfaction.

Behavioral Analytics: Agent decision paths, error rates, escalation frequency.

Business Outcomes: Cost savings, revenue impact, process efficiency.

Combining real-time dashboards with anomaly detection and alerting enables proactive management and continuous optimization of agentic systems. Mastering these analytics is a core outcome for participants in a Generative AI and Agentic AI course.
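
As a small illustration of anomaly detection on an operational metric, the sketch below flags latency samples that sit far from the mean of a trailing window. The window size, the threshold of three standard deviations, and the sample data are assumptions chosen for the example.

```python
from statistics import mean, stdev


def find_anomalies(values, window=5, threshold=3.0):
    """Return indexes whose value deviates from the trailing-window mean
    by more than `threshold` standard deviations."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Made-up latency samples in milliseconds with one obvious spike.
latencies_ms = [120, 118, 125, 122, 119, 121, 480, 123, 120]
print(find_anomalies(latencies_ms))
```

In a real deployment the flagged indexes would feed an alerting channel rather than a print statement.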

Case Study: Autonomous Supply Chain Optimization at DHL

DHL, a global logistics leader, exemplifies successful scaling of Agentic AI in 2025. Facing challenges of complex inventory management, fluctuating demand, and delivery delays, DHL deployed an autonomous supply chain agent powered by generative AI and real-time data orchestration.

The Journey

DHL’s agentic system integrates:

LLM-based demand forecasting models.

Autonomous routing agents coordinating with IoT sensors on shipments.

Dynamic inventory rebalancing modules adapting to disruptions.

The deployment involved iterative prototyping, cross-team collaboration, and rigorous MLOps for generative AI practices to ensure reliability and compliance across global operations.

Technical Challenges

Handling noisy sensor data and incomplete information.

Ensuring real-time decision-making under tight latency constraints.

Managing multi-regional regulatory compliance and data sovereignty.

Integrating legacy IT systems with new AI workflows.

Business Outcomes

20% reduction in delivery delays.

15% decrease in inventory holding costs.

Enhanced customer satisfaction through proactive communication.

Scalable platform enabling rapid rollout across regions.

DHL’s success highlights how agentic AI can transform complex, dynamic environments by combining autonomy with robust engineering and collaborative execution. Professionals trained through an Agentic AI course in Mumbai with placements are well-prepared to tackle similar challenges.

Additional Case Study: Personalized Healthcare with Agentic AI

In healthcare, Agentic AI is revolutionizing patient care by providing personalized treatment plans and improving patient outcomes. For instance, a healthcare provider might deploy an agentic system to analyze patient data, adapt treatment strategies based on real-time health conditions, and optimize resource allocation in hospitals. This involves integrating AI with electronic health records, wearable devices, and clinical decision support systems to enhance care quality and efficiency.

Technical Implementation

Data Integration: Combining data from various sources to create comprehensive patient profiles.

AI-Driven Decision Support: Using machine learning models to predict patient outcomes and suggest personalized interventions.

Real-Time Monitoring: Continuously monitoring patient health and adjusting treatment plans accordingly.

Business Outcomes

Improved patient satisfaction through personalized care.

Enhanced resource allocation and operational efficiency.

Better clinical outcomes due to real-time decision-making.

This case study demonstrates how Agentic AI can improve healthcare outcomes by leveraging autonomy and adaptability in dynamic environments. A Generative AI and Agentic AI course provides the multidisciplinary knowledge required for such implementations.

Actionable Tips and Lessons Learned

Start small but think big: Pilot agentic AI on well-defined use cases to gather data and refine models before scaling.

Invest in MLOps tailored for generative AI: Automate continuous training, testing, and monitoring to ensure robust deployments.

Design agents modularly: Facilitate updates and integration of new capabilities.

Prioritize explainability and governance: Build trust with stakeholders and comply with regulations.

Foster cross-functional teams: Align technical and business goals early and often.

Monitor holistically: Combine operational, performance, and business metrics for comprehensive insights.

Plan for human-in-the-loop: Use human oversight strategically to handle edge cases and improve agent learning.

For those considering a career shift, an Agentic AI course in Mumbai with placements offers a structured pathway to acquire these skills and gain practical experience.

Conclusion

Scaling Agentic AI in 2025 is both a technical and organizational challenge demanding advanced frameworks, rigorous engineering discipline, and tight collaboration across teams. The evolution from narrow AI to autonomous, adaptive agents unlocks unprecedented efficiencies and capabilities across industries. Real-world deployments like DHL’s autonomous supply chain agent demonstrate the transformative potential when cutting-edge AI meets sound software engineering and business acumen.

For AI practitioners and technology leaders, success lies in embracing modular architectures, investing in MLOps for generative AI, prioritizing governance, and fostering cross-functional collaboration. Monitoring and continuous improvement complete the cycle, ensuring agentic systems deliver measurable business value while maintaining reliability and compliance.

Agentic AI is not just an evolution of technology but a revolution in how businesses operate and innovate. The time to build scalable, trustworthy agentic AI systems is now. Whether you are looking to upskill or transition into this field, a Generative AI and Agentic AI course can provide the knowledge, tools, and industry connections to accelerate your journey.

0 notes

Text

Beyond Models: Building AI That Works in the Real World

Artificial Intelligence has moved beyond the research lab. It's writing emails, powering customer support, generating code, automating logistics, and even diagnosing disease. But the gap between what AI models can do in theory and what they should do in practice is still wide.

Building AI that works in the real world isn’t just about creating smarter models. It’s about engineering robust, reliable, and responsible systems that perform under pressure, adapt to change, and operate at scale.

This article explores what it really takes to turn raw AI models into real-world products—and how developers, product leaders, and researchers are redefining what it means to “build AI.”

1. The Myth of the Model

When AI makes headlines, it’s often about models—how big they are, how many parameters they have, or how well they perform on benchmarks. But real-world success depends on much more than model architecture.

The Reality:

Great models fail when paired with bad data.

Perfect accuracy doesn’t matter if users don’t trust the output.

Impressive demos often break in the wild.

A common rule of thumb is that real AI systems are about 20% model and 80% engineering, data, infrastructure, and design.

2. From Benchmarks to Behavior

AI development has traditionally focused on benchmarks: static datasets used to evaluate model performance. But once deployed, models must deal with unpredictable inputs, edge cases, and user behavior.

The Shift:

From accuracy to reliability

From static evaluation to dynamic feedback

From performance in isolation to value in context

Benchmarks are useful. But behavior in production is what matters.

3. The AI System Stack: More Than Just a Model

To make AI useful, it must be embedded into systems—ones that can collect data, handle errors, interact with users, and evolve.

Key Layers of the AI System Stack:

a. Data Layer

Continuous data collection and labeling

Data validation, cleansing, augmentation

Synthetic data generation for rare cases

b. Model Layer

Training and fine-tuning

Experimentation and evaluation

Model versioning and reproducibility

c. Serving Layer

Scalable APIs for inference

Real-time vs batch deployment

Latency and cost optimization

d. Orchestration Layer

Multi-step workflows (e.g., agent systems)

Memory, planning, tool use

Retrieval-Augmented Generation (RAG)

e. Monitoring & Feedback Layer

Drift detection, anomaly tracking

User feedback collection

Automated retraining triggers
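
Drift detection and a retraining trigger can be sketched with the Population Stability Index (PSI), one of several possible drift measures. The 0.2 threshold is a commonly quoted rule of thumb rather than a standard, and the function names are hypothetical.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a reference sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width when all values are equal

    def histogram(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[max(0, min(bins - 1, idx))] += 1  # clamp out-of-range values
        # Small smoothing term keeps empty bins from producing log(0).
        return [(c + 1e-6) / (len(values) + bins * 1e-6) for c in counts]

    e, a = histogram(expected), histogram(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def should_retrain(reference, live, threshold=0.2):
    """Trigger retraining when the live feature distribution has drifted."""
    return psi(reference, live) > threshold


reference = [i / 100 for i in range(100)]       # training-time feature values
shifted = [0.5 + i / 200 for i in range(100)]   # live values squeezed into the upper half
print(should_retrain(reference, reference), should_retrain(reference, shifted))
```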

4. Human-Centered AI: Trust, UX, and Feedback

An AI system is only as useful as its interface. Whether it’s a chatbot, a recommendation engine, or a decision assistant, user trust and usability are critical.

Best Practices:

Show confidence scores and explanations

Offer user overrides and corrections

Provide feedback channels to learn from real use

Great AI design means thinking beyond answers—it means designing interactions.

5. AI Infrastructure: Scaling from Prototype to Product

AI prototypes often run well on a laptop or in a Colab notebook. But scaling to production takes planning.

Infrastructure Priorities:

Reproducibility: Can results be recreated and audited?

Resilience: Can the system handle spikes, downtime, or malformed input?

Observability: Are failures, drifts, and bottlenecks visible in real time?

Popular Tools:

Training: PyTorch, Hugging Face, JAX

Experiment tracking: MLflow, Weights & Biases

Serving: Kubernetes, Triton, Ray Serve

Monitoring: Arize, Fiddler, Prometheus

6. Retrieval-Augmented Generation (RAG): Smarter Outputs

LLMs like GPT-4 are powerful—but they hallucinate. RAG is a strategy that combines an LLM with a retrieval engine to ground responses in real documents.

How It Works:

A user asks a question.

The system searches internal documents for relevant content.

That content is passed to the LLM to inform its answer.
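
The three steps above can be sketched in a few lines. This toy version retrieves with bag-of-words cosine similarity and stops at building the prompt; the documents are invented, and a real system would typically use embedding search and then send the prompt to an LLM, which is not shown.

```python
import math
import re
from collections import Counter

# Made-up internal documents for illustration.
DOCUMENTS = [
    "Refunds are issued within 14 days of a return request.",
    "Support is available by chat from 9am to 5pm on weekdays.",
    "Enterprise plans include single sign-on and audit logs.",
]


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, documents, k=1):
    """Step 2: return the k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(question, passages):
    """Step 3: place the retrieved passages in front of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"


question = "How long do refunds take?"  # Step 1: the user's question
prompt = build_prompt(question, retrieve(question, DOCUMENTS))
print(prompt)
```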

Benefits:

Improved factual accuracy

Lower risk of hallucination

Dynamic adaptation to private or evolving data

RAG is becoming a default approach for enterprise AI assistants, copilots, and document intelligence systems.

7. Agents: From Text Completion to Action

The next step in AI development is agency—building systems that don’t just complete text but can take action, call APIs, use tools, and reason over time.

What Makes an Agent:

Memory: Stores previous interactions and state

Planning: Determines steps needed to reach a goal

Tool Use: Calls calculators, web search, databases, etc.

Autonomy: Makes decisions and adapts to context
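
A deliberately tiny sketch of the first three ingredients follows. The tools, the fixed two-step plan, and the `Agent` class are hypothetical; in a real agent an LLM would produce the plan and decide when to stop, which is where autonomy comes in. This is not how LangChain, AutoGen, or CrewAI implement agents.

```python
from dataclasses import dataclass, field

# Tool use: plain functions the agent is allowed to call (made-up examples).
TOOLS = {
    "lookup_price": lambda item: {"widget": 4.0, "gadget": 10.0}[item],
    "multiply": lambda pair: pair[0] * pair[1],
}


@dataclass
class Agent:
    # Memory: a log of (tool, argument, result) for every step taken.
    memory: list = field(default_factory=list)

    def plan(self, item, quantity):
        """Planning: a fixed two-step plan standing in for an LLM-generated one."""
        return ["lookup_price", "multiply"]

    def run(self, item, quantity):
        """Execute the plan, feeding each tool's result into the next step."""
        value = item
        for tool_name in self.plan(item, quantity):
            argument = value if tool_name == "lookup_price" else (value, quantity)
            value = TOOLS[tool_name](argument)
            self.memory.append((tool_name, argument, value))
        return value


agent = Agent()
total = agent.run("gadget", 3)
print(total, agent.memory)
```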

Frameworks like LangChain, AutoGen, and CrewAI are making it easier to build such systems.

Agents transform AI from a passive responder into an active problem solver.

8. Challenges of Real-World AI Deployment

Despite progress, several obstacles remain:

Hallucination & Misinformation

Solution: RAG, fact-checking, prompt engineering

Data Privacy & Security

Solution: On-premise models, encryption, anonymization

Bias & Fairness

Solution: Audits, synthetic counterbalancing, human review

Cost & Latency

Solution: Distilled models, quantization, model routing

Building AI is as much about risk management as it is about optimization.

9. Case Study: AI-Powered Customer Support

Let’s consider a real-world AI system in production: a support copilot for a global SaaS company.

Objectives:

Auto-answer tickets

Summarize long threads

Recommend actions to support agents

Stack Used:

LLM backend (Claude/GPT-4)

RAG over internal KB + docs

Feedback loop for agent thumbs-up/down

Cost-aware routing: small model for basic queries, big model for escalations
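
Cost-aware routing of this kind might look like the following sketch. The keyword list, the word-count cutoff, and the model labels are invented for illustration; the article does not say how its routing decision was made, and a production router could just as well use a classifier.

```python
# Hypothetical signals that a ticket needs the larger, more expensive model.
ESCALATION_KEYWORDS = {"refund", "outage", "legal", "cancel"}


def choose_model(query, max_simple_words=20):
    """Route short, routine queries to a small model and everything else to a large one."""
    words = query.lower().split()
    is_escalation = any(w.strip(".,!?") in ESCALATION_KEYWORDS for w in words)
    if is_escalation or len(words) > max_simple_words:
        return "large-model"
    return "small-model"


print(choose_model("How do I reset my password?"))
print(choose_model("I want a refund now!"))
```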

Results:

60% faster response time

30% reduction in escalations

Constant improvement via real usage data

This wasn’t just a model—it was a system engineered to work within a business workflow.

10. The Future of AI Development

The frontier of AI development lies in modularity, autonomy, and context-awareness.

Trends to Watch:

Multimodal AI: Models that combine vision, audio, and text (e.g., GPT-4o)

Agentic AI: AI systems that plan and act over time

On-device AI: Privacy-first, low-latency inference

LLMOps: Managing the lifecycle of large models in production

Hybrid Systems: AI + rules + human oversight

The next generation of AI won’t just talk—it will listen, learn, act, and adapt.

Conclusion: Building AI That Lasts

Creating real-world AI is more than tuning a model. It’s about crafting an ecosystem—of data, infrastructure, interfaces, and people—that can support intelligent behavior at scale.

To build AI that works in the real world, teams must:

Think in systems, not scripts

Optimize for outcomes, not just metrics

Design for feedback, not just deployment

Prioritize trust, not just performance

As the field matures, successful AI developers will be those who combine cutting-edge models with solid engineering, clear ethics, and human-first design.

Because in the end, intelligence isn’t just about output—it’s about impact.

0 notes