#GetReal

Explore tagged Tumblr posts

Text

dear my followers if you don't like transformers fuck you middle finger emoji

0 notes

Photo

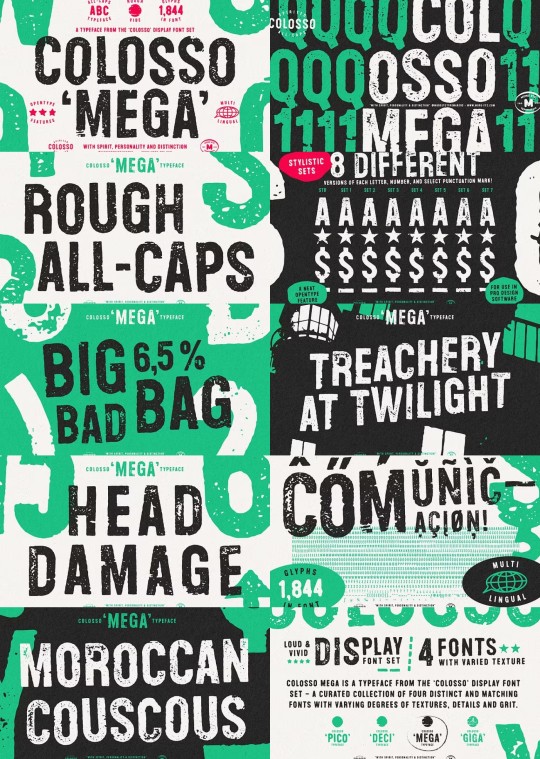

Colosso Mega is a rough and gritty display font with attitude, ideal for big text sizes and impactful messages, channeling the rebellious energy of ink-stamped lettering gone rogue.

Link: https://l.dailyfont.com/mmiuC

#aff#MustSee#BreakingNews#TrendingNow#JustSaying#StreetArt#WildAndFree#RiseUp#UnstoppableYou#TakeAction#GetReal#RebelHeart#MakeSomeNoise#SpeakTruthToPower#BoldMoves#FearlessLiving#UndeniableEnergy

0 notes

Video

youtube

What if it really isn’t you?

What if you’re not actually the one who’s “socially awkward” or “combative”? Maybe what you really do is just: Tell it like it is.

Maybe other people feel awkward themselves because you’re not conforming in that moment.

Maybe it’s those other people who don’t actually know how to be Real.

And maybe the real reason they feel attacked is that what you're reflecting back to them forces them to think about certain scary things.

And then they feel real fear about where they're living, and that's what makes them uncomfortable.

Dealing with the #WholeTruth causes disruption.

And, a lot of people simply want their own troubles taken care of.

They don’t want to have to think about how what is happening demonstrates universal global shared evil.

#GetReal, #BeReal, #TellItLikeItIs, #MakeThemFeelUs,

#BeClearAboutThat,

Music is “curious 0N_18mi” by Achim Behrens (aka “Setuniman” @ https://freesound.org/people/Setuniman/) - http://www.setuniman.com/

Video credits:

okan akdeniz from Videezy.com (https://www.videezy.com/members/okanakdeniz) - https://www.storyblocks.com/video/portfolio/okanakdeniz - https://audiojungle.net/user/okanakdeniz

Nontanun Chaiprakon from Videezy.com (https://www.videezy.com/members/tavan-501)

ahmet odabas from Videezy.com (https://www.videezy.com/members/ahmetodabasi35) - https://www.dreamstime.com/ahmetodabasi_info

#youtube#GetReal#BeReal#TellItLikeItIs#MakeThemFeelUs#BeClearAboutThat#palworld#madeyoulook#madeforme#rainyday#forbiddenromance#finals#america#mlpinfection#oscars#nikkihaley#american#angeldust#mobilegaming#addictionawareness#judges#happyhouse#galentines#contest#legalsystem#slushie#legaltiktok#beclearonthat

0 notes

Text

being one of the founders of the tracker pine needle attack gives me an immense feeling of pride and joy. me and my son who's a cloud of pine needles

inside joke because i was brush testing in procreate while making the Tracker ref and one of my friends laughed and said "pine needle cloud, GO!"

proof of the aforementioned pine needle cloud....

66 notes


Text

1 note


Text

An image claiming to show a US immigration officer detaining a crying child is spreading online as President Donald Trump's administration ramps up deportations. But the picture is a fake generated by artificial intelligence technology, the X user who created it told AFP -- and an expert's analysis confirmed this.

"If this is your idea of what makes America 'great' then you are broken and we will never have common ground," says a January 25, 2025 post on Threads, in a reference to Trump's "Make America Great Again" campaign slogan.

The picture shows a young girl screaming as a man, seemingly wearing a US Immigration and Customs Enforcement (ICE) jacket, grabs her arm.

Similar posts sharing the image rocketed across Threads and platforms such as X, Facebook and Instagram, amplified by former Democratic presidential candidate Marianne Williamson and American author John Pavlovitz.

Some posts lambasted conservative Christians who support Trump. Others suggested the image was taken inside a school in Chicago, Illinois.

The posts come as immigration authorities in the early days of Trump's second presidency have conducted sweeping raids in Chicago and other US cities following the White House's declaration of a national emergency at the country's southern border with Mexico.

The administration moved quickly after Trump's January 20 inauguration to scale up deportations, including by relaxing rules governing enforcement actions at locations such as schools, churches and workplaces.

But the image claiming to show a crying child being taken into ICE custody is fake.

Reverse image searches revealed it was first posted January 24 in replies to other users on X by "@LiveOnTheChat," the host of a YouTube show.

Reached by AFP, the user said that he created the image using Grok, the AI chatbot affiliated with X, after seeing news articles about a Chicago school district that reported ICE agents at one of its schools -- an alarm that, it turned out, was erroneous.

"I generated the image on Grok to visualize what that experience would be like for a child," @LiveOnTheChat told AFP in a January 29 X direct message. "I shared it on X and was not expecting the image to spread like wildfire, but it did."

He said he believed people were sharing it because they feel "anxious and disturbed" about the prospect of immigration authorities raiding schools.

@LiveOnTheChat provided AFP the original image produced by Grok, which has a Grok watermark in the lower right corner of the frame.

He cropped the version he shared online because "Grok is not perfect" and the full image included deformities on another child's face, he said.

He also sent AFP a screenshot of the prompt he used to spur Grok to create the image, plus three others: "Generate an image of Police ICE agents aggressively dragging latino children crying out of a 2nd grade classroom."

Hany Farid, a media forensics expert at the University of California, Berkeley and the co-founder of GetReal Labs, a cybersecurity company focused on preventing malicious AI threats, analyzed the image and confirmed it was an AI-generated fake.

“Our models trained to distinguish natural from AI-generated images flags this image as synthesized,” Farid said.

Farid noted that the image contains signs it was created using AI, including anomalies around the girl’s shoulder and with the pattern across the bottom.

AFP has debunked other misinformation about migration.

74 notes


Text

These days, when Nicole Yelland receives a meeting request from someone she doesn’t already know, she conducts a multi-step background check before deciding whether to accept. Yelland, who works in public relations for a Detroit-based non-profit, says she’ll run the person’s information through Spokeo, a personal data aggregator that she pays a monthly subscription fee to use. If the contact claims to speak Spanish, Yelland says, she will casually test their ability to understand and translate trickier phrases. If something doesn’t quite seem right, she’ll ask the person to join a Microsoft Teams call—with their camera on.

If Yelland sounds paranoid, that’s because she is. In January, before she started her current non-profit role, Yelland says she got roped into an elaborate scam targeting job seekers. “Now, I do the whole verification rigamarole any time someone reaches out to me,” she tells WIRED.

Digital imposter scams aren’t new; messaging platforms, social media sites, and dating apps have long been rife with fakery. In a time when remote work and distributed teams have become commonplace, professional communications channels are no longer safe, either. The same artificial intelligence tools that tech companies promise will boost worker productivity are also making it easier for criminals and fraudsters to construct fake personas in seconds.

On LinkedIn, it can be hard to distinguish a slightly touched-up headshot of a real person from a too-polished, AI-generated facsimile. Deepfake videos are getting so good that longtime email scammers are pivoting to impersonating people on live video calls. According to the US Federal Trade Commission, reports of job- and employment-related scams nearly tripled from 2020 to 2024, and actual losses from those scams rose from $90 million to $500 million.

Yelland says the scammers that approached her back in January were impersonating a real company, one with a legitimate product. The “hiring manager” she corresponded with over email also seemed legit, even sharing a slide deck outlining the responsibilities of the role they were advertising. But during the first video interview, Yelland says, the scammers refused to turn their cameras on during a Microsoft Teams meeting and made unusual requests for detailed personal information, including her driver’s license number. Realizing she’d been duped, Yelland slammed her laptop shut.

These kinds of schemes have become so widespread that AI startups such as GetReal Labs and Reality Defender have emerged promising to detect other AI-enabled deepfakes. OpenAI CEO Sam Altman also runs an identity-verification startup called Tools for Humanity, which makes eye-scanning devices that capture a person’s biometric data, create a unique identifier for their identity, and store that information on the blockchain. The whole idea behind it is proving “personhood,” or that someone is a real human. (Lots of people working on blockchain technology say that blockchain is the solution for identity verification.)

But some corporate professionals are turning instead to old-fashioned social engineering techniques to verify every fishy-seeming interaction they have. Welcome to the Age of Paranoia, when someone might ask you to send them an email while you’re mid-conversation on the phone, slide into your Instagram DMs to ensure the LinkedIn message you sent was really from you, or request you text a selfie with a timestamp, proving you are who you claim to be. Some colleagues say they even share code words with each other, so they have a way to ensure they’re not being misled if an encounter feels off.

“What’s funny is, the low-fi approach works,” says Daniel Goldman, a blockchain software engineer and former startup founder. Goldman says he began changing his own behavior after he heard a prominent figure in the crypto world had been convincingly deepfaked on a video call. “It put the fear of god in me,” he says. Afterwards, he warned his family and friends that even if they hear what they believe is his voice or see him on a video call asking for something concrete—like money or an internet password—they should hang up and email him first before doing anything.

Ken Schumacher, founder of the recruitment verification service Ropes, says he’s worked with hiring managers who ask job candidates rapid-fire questions about the city where they claim to live on their resume, such as their favorite coffee shops and places to hang out. If the applicant is actually based in that geographic region, Schumacher says, they should be able to respond quickly with accurate details.

Another verification tactic some people use, Schumacher says, is what he calls the “phone camera trick.” If someone suspects the person they’re talking to over video chat is being deceitful, they can ask them to hold up their phone camera to their laptop. The idea is to verify whether the individual may be running deepfake technology on their computer, obscuring their true identity or surroundings. But it’s safe to say this approach can also be off-putting: Honest job candidates may be hesitant to show off the inside of their homes or offices, or worry a hiring manager is trying to learn details about their personal lives.

“Everyone is on edge and wary of each other now,” Schumacher says.

While turning yourself into a human captcha may be a fairly effective approach to operational security, even the most paranoid admit these checks create an atmosphere of distrust before two parties have even had the chance to really connect. They can also be a huge time suck. “I feel like something’s gotta give,” Yelland says. “I’m wasting so much time at work just trying to figure out if people are real.”

Jessica Eise, an assistant professor studying climate change and social behavior at Indiana University-Bloomington, says that her research team has been forced to essentially become digital forensics experts, due to the number of fraudsters who respond to ads for paid virtual surveys. (Scammers aren’t as interested in the unpaid surveys, unsurprisingly.) If the research project is federally funded, all of the online participants have to be over the age of 18 and living in the US.

“My team would check time stamps for when participants answered emails, and if the timing was suspicious, we could guess they might be in a different time zone,” Eise says. “Then we’d look for other clues we came to recognize, like certain formats of email address or incoherent demographic data.”

Eise says the amount of time her team spent screening people was “exorbitant,” and that they’ve now shrunk the size of the cohort for each study and have turned to “snowball sampling,” or recruiting people they know personally to join their studies. The researchers are also handing out more physical flyers to solicit participants in person. “We care a lot about making sure that our data has integrity, that we’re studying who we say we’re trying to study,” she says. “I don’t think there’s an easy solution to this.”

Barring any widespread technical solution, a little common sense can go a long way in spotting bad actors. Yelland shared with me the slide deck that she received as part of the fake job pitch. At first glance, it seemed like a legit pitch, but when she looked at it again, a few details stood out. The job promised to pay substantially more than the average salary for a similar role in her location, and offered unlimited vacation time, generous paid parental leave, and fully covered health care benefits. In today’s job environment, that might have been the biggest tipoff of all that it was a scam.

27 notes


Text

my summer pastime : get really stoned in the tj maxx parking lot and go in looking for chocolate covered cashews and get really scared of all the other stuff in that section ❤️

8 notes


Note

Hot take :

Why do Connor Bedard and Lexi Mackie (rumored new gf) look so alike 💀 deadass if you told me they were related I would believe it

Lexi is very pretty!!! Want people to know I’m not trying to be mean, I don’t understand when fans get mad at their fav player’s gf 😭😭 like let’s be real y'all, we have no chance as fans with zero connections

idk i cant talk, im egoistic myself so

but i’ve thought that too like people dating someone who looks similar to them

it be like that

lexi is pretty!

haha no deadass like be mad at the player

#getreal

5 notes


Text

Hey everyone! Hope you're doing well. I've got a new art print available to order on my shop, the GETREAL print. This one pays tribute to a certain excitable son and his exploits . . . ❤️ Pick it up if you'd like to support me! ❤️

https://www.ianv.art/shop

Thanks for your time.

4 notes


Text

NOTHING is scarier than the flaming skull! #GETREAL

1 note


Text

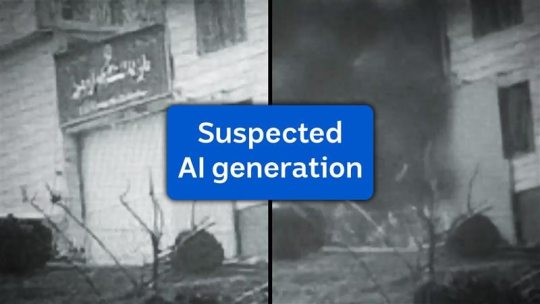

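Iran's Evin Prison explosion video suspected of AI tampering

Was this Iranian prison explosion video generated by AI? (ABC NEWS Verify) A video purporting to show an attack on Iran's Evin Prison has spread widely online, and its authenticity is being questioned. According to Reuters, the Tehran facility has been the main prison for holding political prisoners since the 1979 Iranian Revolution. The news agency said several executions have taken place there and that it has held a number of well-known foreign prisoners. Israeli Defense Minister Israel Katz said on Monday that the Israeli military was carrying out strikes in Tehran, with targets including Evin Prison. Foreign Minister Gideon Sa’ar shared a black-and-white video that he said was surveillance footage of the prison gate being hit. The 6-second video shows the prison gate suddenly exploding just before the 2-second mark. Afterwards, the straight lines of the door frame become abnormally distorted. Slowing the video down reveals traces of AI generation. ABC NEWS Verify sent the video to Hany Farid, co-founder and chief scientist of the anti-deepfake platform GetReal, for analysis. This University of California,…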


0 notes

Text

#ifndef COMPLEXN_H
#define COMPLEXN_H

//2001 protocode

#include <cfloat> // FLT_MIN, used by the zero guard in div()

class complexnum {
private :
    struct Complex {
        float a, b; // REAL part
        // solves only one segment of equation at a time
        float i;    // IMAGINARY part
        // results below
        float rr; float ri; float xx;
    };
    Complex mathcplx;
    // access recs dunno why i called it an instance :(
    int yada; // counter vars yada yada yada yada yada yada

public :
    // constructor (was "void complex()", an ordinary method that never ran on its own)
    complexnum() { init(); }

    // --- read states --------------------------------------------------------
    float getreal() { return mathcplx.rr; }
    float getimag() { return mathcplx.ri; }
    float power(float n, int nb);
    /* int power(int n, int nb); // this should be in mathobj.h */

    // --- change states ------------------------------------------------------
    void init(float reala = 0.0f, float realb = 0.0f, float imagi = 0.0f) {
        mathcplx.rr = 0.0f; mathcplx.ri = 0.0f; mathcplx.xx = 0.0f; // init these to zero
        mathcplx.a = reala; mathcplx.b = realb; mathcplx.i = imagi; } // init
    void conj (float ar, float ai); // manipulate imaginary
    void add  (float ar, float ai, float br, float bi);
    void sub  (float ar, float ai, float br, float bi);
    void mul  (float ar, float ai, float br, float bi);
    void div  (float ar, float ai, float br, float bi);
}; // --- complexnumber ---------------------------------------------------------------

// Out-of-class definitions are inline so this header can be included from
// several .cpp files without duplicate-symbol link errors.

// n to the power nb, assuming nb >= 0 (the old loop only ever computed n*n)
inline float complexnum::power(float n, int nb)
{ mathcplx.xx = 1.0f; for (yada = 0; yada < nb; yada++) mathcplx.xx *= n; return mathcplx.xx; }

inline void complexnum::conj (float ar, float ai) {
    mathcplx.rr = ar;
    mathcplx.ri = -ai; }

inline void complexnum::add (float ar, float ai, float br, float bi) {
    mathcplx.rr = ar + br;
    mathcplx.ri = ai + bi; }

inline void complexnum::sub (float ar, float ai, float br, float bi) {
    mathcplx.rr = ar - br;
    mathcplx.ri = ai - bi; }

inline void complexnum::mul (float ar, float ai, float br, float bi) {
    mathcplx.rr = ar*br - ai*bi;
    mathcplx.ri = ar*bi + br*ai; }

inline void complexnum::div (float ar, float ai, float br, float bi) {
    struct Complex c, num;
    float denom;
    conj(br, bi); c.a = getreal(); c.i = getimag();               // c = conj(b)
    mul (ar, ai, c.a, c.i); num.a = getreal(); num.i = getimag(); // num = a * conj(b)
    // zero chopping: 1.2e-63 is below the smallest float, so it rounded away and a
    // zero divisor still produced 0/0; FLT_MIN (smallest normal float) survives
    denom = br*br + bi*bi + FLT_MIN;
    mathcplx.rr = num.a / denom;
    mathcplx.ri = num.i / denom; }

// --- end of complexnum class --------------------------------------------------------

#endif

0 notes

Text

#getreal #elitaout

I truly find it sad that people are starting to believe robots/AI over people who share their real-life human experiences.

Only now that the computer Willow 'confirmed' that parallel universes exist is everyone so surprised?

Thousands of shifters have been sharing their experiences, their feelings, their DR, their loves, their families, their relationships, friendships... but they only believe it now that a computer says it.

Truly sad how it's become normal to believe machines over souls, minds, consciousness. Because that's what we are.

YES. IT'S REAL.

896 notes
