#LoadBalancer

Text

Server Load Balancer: A Key Feature for Scalable and Reliable Infrastructure

A server load balancer is an essential tool for any business that relies on servers to host applications, websites, or services. It plays a critical role in managing server traffic and ensuring high availability and reliability. Here are some ways the server load balancer feature of products like INSTANET can benefit various industries:

1. Load Balancing for On-Premise Servers

Distributes traffic evenly across multiple servers, preventing any single server from becoming a bottleneck.

Provides failover capability, allowing traffic to be redirected to operational servers if one or more servers fail.

Supports both TCP and HTTP traffic, making it versatile for different types of server applications.
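As an illustration of the even distribution and failover described above, here is a minimal sketch of round-robin selection that skips servers marked as down. It is not how INSTANET or any specific product works; the class name, method names, and backend names (`app-1` and so on) are assumptions made up for this example.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Rotate through backends, skipping any that are marked down."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._rotation = cycle(self.backends)

    def mark_down(self, backend):
        # A real balancer would do this from a health check; here it is manual.
        self.healthy.discard(backend)

    def mark_up(self, backend):
        if backend in self.backends:
            self.healthy.add(backend)

    def pick(self):
        # Try each backend at most once per call so the loop always terminates.
        for _ in range(len(self.backends)):
            candidate = next(self._rotation)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


# Hypothetical backend names, used only for illustration.
balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
first_round = [balancer.pick() for _ in range(3)]

# Simulate a failure: traffic is redirected to the remaining servers.
balancer.mark_down("app-2")
after_failure = [balancer.pick() for _ in range(4)]
```

With all three servers up, each receives one request per rotation. Once `app-2` is marked down, requests alternate between the two remaining servers.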

2. Enhancing Internet Connectivity for Moving Vehicles

Ideal for setting up mobile data centers in vehicles such as ambulances, buses, and coaches.

Ensures continuous and stable connections even while on the move, by balancing the load across the networks.

Provides ISP agnostic static IP addresses for remote accessibility of servers and critical equipment.

3. HDWAN Connectivity for Multi-location Networks

Enables seamless and secure exchange of data across geographically dispersed office locations.

Allows for hosting of internal servers that can be accessed securely externally, through load balancing.

Offers an alternative to SDWAN with enhanced security and always-on capabilities.

4. Remote Desktop Gateway for Remote Workforce

Role-based user access enables secure remote working through a browser interface.

Allows employees to access their systems without additional software, using standard RDP and VNC protocols.

Supports work-from-home connectivity, which has become essential in the modern workplace.

Summary

The server load balancer feature is more than just traffic management; it provides a scalable, high-availability solution that can be customized to the needs of businesses. It's ideal for organizations seeking robust on-premise server infrastructure, businesses operating on the move, and those requiring secure branch office connectivity. By utilizing products with server load balancer capabilities, such as INSTANET, businesses can maintain robust and efficient operations in a variety of scenarios.

If you are considering integrating a server load balancer into your IT infrastructure, explore how features like HDWAN, remote desktop gateway, and failover support can bring measurable benefits to your organization.

See more at https://internetgenerator.in/.

Text

Google Cloud Networking, Network Security For DDoS Security

In this article, we will learn how to use Google Cloud networking and network security to defend your website against DDoS attacks.

Google Cloud invests heavily in its capabilities and continually innovates to prevent cyberattacks such as distributed denial-of-service (DDoS) attacks from bringing down websites, apps, and services. This is crucial to protecting its customers.

Google Cloud networking and network security

Google Cloud uses parts of its Cloud Armor, Cloud CDN, and Load Balancing services in its Project Shield offering, which leverages Google Cloud networking and its Global Front End infrastructure to help defend against attacks, including stopping one of the largest distributed denial-of-service (DDoS) attacks to date. It integrates them into a strong defense architecture that can assist in maintaining important websites of public interest online despite ongoing attacks.

Project Shield is intended for users who are particularly vulnerable to DDoS attacks, such as news organizations, infrastructure supporting elections and voting, and human rights organizations, but enterprise customers can also benefit from Google Cloud networking and network security. Using the same defense infrastructure as Project Shield, Google Cloud can help you protect your application, website, or API workloads anywhere on the web. Here is how.

Distributed Denial-of-Service attacks

DDoS is a severe threat that can bring your service offline without requiring any special permissions or security breaches. These attacks are difficult to trace and can originate from anywhere in the world. They commonly use infected machines (and occasionally light bulbs) as part of botnets. The attacks resemble typical network traffic, but they arrive extremely quickly: hundreds of millions of fraudulent requests are sent per second.

To protect your service, you must distinguish between valid and malicious traffic, yet you still have to process every request, wherever it comes from. Rather than having you fight the escalating request volume yourself, Google wants you to be free to concentrate on providing value to your audience. To accomplish this, effective defenses must be able to scale up to handle more traffic than you normally receive.

How to begin

Over time, older defensive strategies have proven insufficient. Firewalls struggle to deny requests that appear to come from reputable sources. Filtering traffic yourself requires heavy investment in infrastructure, which is costly and ties up resources that could be better used elsewhere.

Using the networking and network security services provided by Google Cloud, protecting your website takes only a few minutes. Backed by its latest machine learning (ML)-based defenses, these services can neutralize threats and cache your content to speed up delivery to users while reducing the strain on your hosting servers. You can host your content anywhere; Google Cloud’s Cloud Armor, Content Delivery Network, Load Balancer, and optionally Adaptive Protection will be used to protect it.

You can configure these safeguards in two ways: follow the Google Cloud console instructions below, or run a Terraform script (with a few tweaks).

To protect your service using the Google Cloud console, follow these steps:

Start with a Google Cloud project.

You can reuse an existing project if Google Cloud is already hosting your content.

Enable the APIs for:

Cloud Load Balancer

Cloud CDN

Cloud Armor

Create a network endpoint group, a basic proxy that tells Google Cloud where to find your content. There are a few form fields to complete.

Give it a name.

‘New network endpoint’ gives you the option to point to either an IP address or a fully qualified domain name.

Here, use the IP address and port of your website (instead of 8.8.8.8). This step tells the load balancer where to fetch content in response to incoming requests.

Press the Create button.

Create a new load balancer (global front end). You can click Next four times to accept the following settings, all of which are defaults:

Application load balancer

Public facing (external)

Best for global workloads

Global external Application Load Balancer

Assign a name to the load balancer.

Indicate the direction of the traffic.

Name the frontend, then choose Create IP Address (it’s no more expensive than Ephemeral and lets you direct traffic to it consistently).

Use that IP address in your load balancer’s frontend configuration, which will resemble slide (2).

Add the Backend service after that.

Then select “Create a Backend Service.”

Give it a name.

Select Internet network endpoint group as the backend type. This container holds the information the load balancer needs to connect to a location on the internet.

Click ‘New Backend’ to get a list of network endpoint groups, which should include the group created above. Select that.

Make sure Enable Cloud CDN is checked, as it should be; we will need it later.

The cache mode settings work well as they are. “Cache static content” means that Cloud CDN honors cache-control headers and also caches static content (such as images and PDFs) when there is no explicit cache-control header.

Leave the Security settings as they are; you can return later to change Cloud Armor rules in that UI.

If you need to protect your backends from particular sources (such as known attackers, certain geographic areas, or high request volume), you can add rules to the Cloud Armor edge security policy, which take effect before traffic reaches Cloud CDN. These rules can also be added later in the Cloud Armor UI.

Click Create to finish adding the backend service to the load balancer.

To configure your new load balancer, click the Create button at the bottom of the page.

Using the same static IP as your HTTP load balancer, repeat steps 4 through 7 for HTTPS.

You can upload your own certificates or choose Google-managed certificates. If you use Google-managed certificates, provision the certificate by creating a CNAME record according to the instructions.

To make your defense configuration simpler, you can use the same security policy you made for your HTTP load balancer, or you can utilize a second security policy.

You can now direct your traffic to your newly configured load balancer. Don’t forget to update your domain’s DNS settings to point to the newly established static IP.

[Optional] A couple more clicks will give you ML-based protections for your backend:

Enroll in the Paygo Cloud Armor Service Tier (or choose Annual to save money over the year).

Open the policies for Cloud Armor.

Click your policy to open it.

Select Edit.

Select Enable under Adaptive Protection.

Press Update.

Select “Add rule.”

Select Advanced Mode so that you can enter evaluateAdaptiveProtectionAutoDeploy().

Enter 0 as the priority to apply the rule at the highest priority (or use any other low value).

Press Add.

With the knowledge it has gained about attack patterns and typical traffic, Google Cloud can now adjust to these patterns.
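The priority value in the steps above matters because Cloud Armor evaluates rules starting from the lowest priority number, so a rule at priority 0 is checked first. The sketch below models that ordering in plain Python. It is only an illustration of first-match-by-priority evaluation, not Cloud Armor's implementation; the `Rule` class, the request fields `region` and `flagged_as_attack`, and the priorities other than 0 are assumptions invented for the example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    priority: int                    # lower number = evaluated earlier
    action: str                      # "allow" or "deny"
    matches: Callable[[dict], bool]  # predicate over a request


def evaluate(rules, request):
    """Return the action of the first matching rule in priority order."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.matches(request):
            return rule.action
    return "allow"  # assumed fallback when nothing matches


# Hypothetical policy: the priority-0 rule stands in for the adaptive rule.
rules = [
    Rule(1000, "deny", lambda req: req["region"] == "blocked-region"),
    Rule(0, "deny", lambda req: req["flagged_as_attack"]),
    Rule(2000, "allow", lambda req: True),
]

attack = {"region": "anywhere", "flagged_as_attack": True}
normal = {"region": "anywhere", "flagged_as_attack": False}
attack_action = evaluate(rules, attack)
normal_action = evaluate(rules, normal)
```

Because the list is sorted by priority before matching, the order in which rules are declared does not matter; only the priority numbers do.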

Enhancing the caching layer

With the cache that Cloud CDN offers, traffic can resolve at the Google edge, reducing latency and giving your backend a break. It is very easy to enable on your load balancers and helps guard against broad, shallow DDoS attacks.

Google Cloud’s cache follows the cache-control headers that your backend sends, but it can also cache static resources, such as photos, by default even when those headers are absent. A short Time-To-Live (TTL) keeps content fresh while still doing a great deal to reduce request floods. Even a TTL of one second can prevent backend overload.
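To see why even a one-second TTL helps, consider a rough simulation of a cache in front of a backend. This is a simplified sketch, not Cloud CDN's behavior: the `TTLCache` class, the request rate, and the URL are assumptions chosen for illustration, and time is passed in explicitly (in milliseconds) so the result is deterministic.

```python
class TTLCache:
    """Serve cached values until they expire, then refetch from the backend."""

    def __init__(self, ttl_ms, fetch):
        self.ttl_ms = ttl_ms
        self.fetch = fetch
        self.store = {}          # key -> (expires_at_ms, value)
        self.backend_calls = 0   # how many requests reached the backend

    def get(self, key, now_ms):
        entry = self.store.get(key)
        if entry is not None and now_ms < entry[0]:
            return entry[1]      # cache hit: the backend is not touched
        self.backend_calls += 1  # cache miss or expired entry
        value = self.fetch(key)
        self.store[key] = (now_ms + self.ttl_ms, value)
        return value


cache = TTLCache(ttl_ms=1000, fetch=lambda key: f"content for {key}")

# Simulate a flood: 5,000 requests for one URL, one per millisecond.
total_requests = 5000
for now_ms in range(total_requests):
    cache.get("/index.html", now_ms)

print(f"{total_requests} requests, {cache.backend_calls} reached the backend")
```

In this simulation the backend is contacted only once per second of traffic, no matter how many requests arrive within that second.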

Start now

Try this out now: pick a backend that would benefit from more availability and reliability, add a load balancer and CDN to it, and watch happiness increase. This is protection as a service, so you can concentrate more on the happiness and have fewer headaches.

Read more on Govindhtech.com

#GoogleCloudnetworking#NetworkSecurity#CloudArmor#LoadBalancingservices#LoadBalancer#New#technews#technology#technologynews#technologytrends#govindhtech

Text

Dive deep into BigIP F5 LTM with specialized courses for comprehensive learning and practical application. Elevate your expertise in load balancing and traffic management with focused training. https://www.dclessons.com/category/courses/bigip-f5-ltm

#BIGIP#F5#LTM#LoadBalancer#NetworkInfrastructure#ITTraining#NetworkManagement#ApplicationDeliveryController#DataCenterNetworking#EnterpriseNetworking

Text

Take a Break Have a Kit-Kat

Take a Break. Have a Kit-Kat #learnmystuff #worklife #balance #loadbalancer #learn #kitkat

Taking a break always refreshes the mind and heart, so that you do not break down at the expense of your own well-being. It is good both physically and mentally, letting you come back to your original form at any point. Taking a step back from what you have been doing helps you analyze your gaps for the way ahead. Analyzing those gaps will help you a lot in the regeneration of your work for the…

Text

What is LoadBalancer: Pattern and How to Choose it?

Introduction Any modern website on the internet today receives thousands of hits, if not millions. Without a scalability strategy, the website will either crash or significantly degrade in performance, a situation we want to avoid. Adding more powerful hardware, or scaling vertically, will only delay the problem. However, adding multiple servers or scaling horizontally,…

Text

.

#Argh#not had a great morning#I don't think my install worked as I left it to run overnight and there's definitely some missing directories#which would be fine but it was estimating 4 hours to install it#and I keep getting asked questions about the old set up#and I don't have any idea any more how it's set up#if I ever did understand it#which I doubt because i am an idiot#had to waste an hour in a meeting looking at a new loadbalancer POC#which I also am too stupid to understand#argh#it's okay im fine now#I just can't wait for work to end#😆😆😆

Text

Inverter & Battery Setup Never stay in the dark. We install and service all brands of inverters and backup batteries with full safety checks.

🌐 Visit - www.texasplumbing.us

Contact Us: 📞 Call / Whatsapp +91 8281 908 708

One Stop: www.toopitch.com/Texas-Plumbing

#ElectricalServices#ElectricianNearMe#HomeWiring#FanInstallation#SafeWiring#ModularSwitches#LightingFix#PowerSolutions#InverterSetup#SocketRepair#ElectricalMaintenance#EmergencyElectrician#OfficeLighting#GeyserInstallation#LEDLighting#SwitchboardFix#LoadBalancing#LicensedElectrician#ElectricalRepair#PowerFix#ElectricianServices#ProfessionalElectriciann#HomeElectrician#ElectricalSolutions#PowerUpYourHome#ElectricFix#SafeElectricity#LEDInstallation#Switchboard

Video

youtube

Understanding Exchange Server High Availability | Exchange Server Load B...

#youtube#ExchangeServer HighAvailability HAProxy LoadBalancing EmailServer MicrosoftExchange Exchange2019 ExchangeSetup TechiJack ITAdmin SysAdmin Li

Text

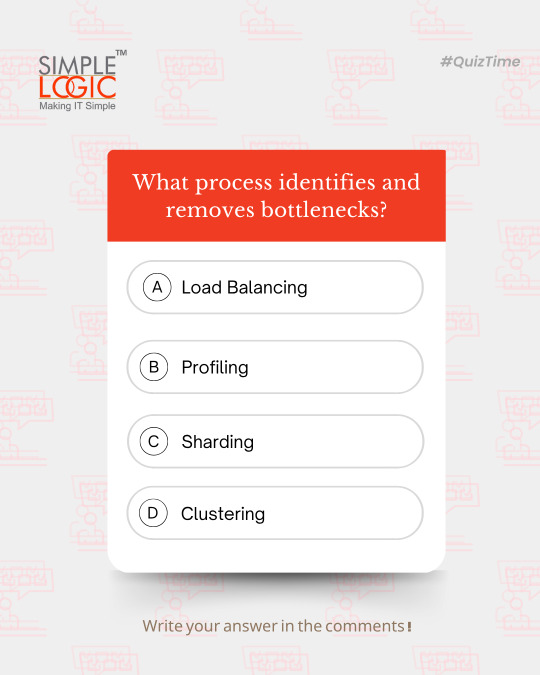

QuizTime

What process identifies and removes bottlenecks?

a) Load Balancing ⚖️ b) Profiling 📊 c) Sharding 🔀 d) Clustering 🧩

Comment your answer below👇

💻 Explore insights on the latest in #technology on our Blog Page 👉 https://simplelogic-it.com/blogs/

🚀 Ready for your next career move? Check out our #careers page for exciting opportunities 👉 https://simplelogic-it.com/careers/

#quiztime#testyourknowledge#bottlenecks#loadbalancing#profiling#sharding#clustering#brainteasers#triviachallenge#thinkfast#quizmaster#knowledgeIspower#mindgames#funfacts#makeitsimple#simplelogicit#simplelogic#makingitsimple#itservices#itconsulting

Text

📌Project Title: End-to-End Energy Consumption Forecasting and Predictive Load Balancing System.🔴

ai-ml-ds-energy-forecast-lb-005

Filename: energy_consumption_forecasting_and_load_balancing.py

Timestamp: Mon Jun 02 2025 19:14:07 GMT+0000 (Coordinated Universal Time)

Problem Domain: Energy Management, Smart Grids, Utilities, Time Series Forecasting, Operations Research (Load Balancing aspect).

Project Description: This project implements an end-to-end system for forecasting energy…

#DataScience#EnergyForecasting#LoadBalancing#LoadForecasting#pandas#PredictiveAnalytics#prophet#python#SmartGrid#TimeSeries#Utilities

Text

Media CDN and Google Cloud Load Balancer Unleashed!

Media CDN for Streaming

For live streaming applications to give end users a high-quality experience on their devices, they need low latency and uninterrupted streaming. Delays in rendering a live stream can cause poor video quality and buffering, which can drive viewers away. Your content delivery network (CDN) infrastructure needs to be dependable in order to provide high-quality live streaming.

Media CDN is a content delivery network (CDN) infrastructure built to distribute streaming video around the world with minimal latency. Notably, Media CDN takes advantage of YouTube’s infrastructure to deliver large files quickly and reliably and to bring live and on-demand video streams closer to viewers. As a CDN, it serves content from a geographically distributed network of servers based on each user’s location, which helps users who are far from the origin server by improving performance and minimizing latency.

Whether the live streaming application is hosted on-premises, in Google Cloud, or with another cloud provider, this blog examines how live-streaming providers can use a Media CDN architecture to deliver video content more effectively. No matter where the live-streaming infrastructure is located, Media CDN can serve the streams when it is paired with a Google Cloud External Application Load Balancer as the origin. To further ensure a high-quality viewing experience, Media CDN can be configured to withstand most disruptions or outages. Continue reading to find out more.

Real-time broadcasting

The act of sending audio or video content in real time to broadcasters and playback devices is known as live streaming. Applications for Live Streaming often include the following elements:

Encoder: Compresses the video into a variety of resolutions and bitrates, then sends the stream to a packager.

Packager and origination service: Converts the transcoded media files into various file formats and stores video segments so they can be served through HTTP endpoints.

CDN: Minimizes latency by streaming video segments straight from the origination service to playback devices all around the world.

Media CDN

Broadly speaking, Media CDN is made up of two crucial parts:

Edge cache service: Offers a public endpoint and allows route configurations to direct traffic to a certain origin.

Edge cache origin: Sets up a Cloud Storage bucket or a Google External Application Load Balancer as the origin for the edge cache.

The architecture shown in the above image demonstrates how Media CDN can integrate with an Application Load Balancer to serve a live stream origination service running on-premises, in Google Cloud, or on an external cloud infrastructure. Application load balancers support advanced path- and host-based routing to connect to multiple backend services. Because of this, live stream providers are able to cache their streams via Media CDN closer to the viewers of the live channels.

Load balancers offer the following backend services to enable communication across infrastructure:

Internet/Hybrid NEG Backends: Connect to a live-streaming origination service running on-premises or with another cloud provider.

Managed Instance Groups: Connect to a live-streaming origination service running on Compute Engine across multiple regions.

Zonal Network Endpoint Groups: Connect to a live-streaming origination service running on GKE.

Disaster recovery (primary/failover origin)

Planning for disaster recovery is crucial to guard against zonal or regional failures, as any interruption to the live stream can impact viewing. To aid with disaster recovery, Media CDN offers primary and secondary origin failover.

The above figure shows Media CDN with an Application Load Balancer origin that provides failover across regions. To accomplish this, two “EdgeCacheOrigin” hosts with distinct “host header” values are created and pointed to the same Application Load Balancer. Each EdgeCacheOrigin is configured to set the host header to a specific value. The Application Load Balancer then uses host-based routing to route live stream requests according to the value of the host header.

When a playback device requests the stream from Media CDN, the primary origin calls the Application Load Balancer with the host header set to the primary origin value. After examining the host header, the load balancer routes the traffic to the primary live stream origination service. If the primary live stream origination service fails, the failover origin rewrites the host header to the failover origin value and sends the request to the Application Load Balancer. The load balancer matches the host and routes the request to a secondary live stream origination service located in a separate zone or region.
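The failover flow described above can be sketched in a few lines of Python. This is a simplified model of host-based routing with a primary and a failover origin, not Media CDN's or the load balancer's actual implementation. The host header values, backend names, and function names are all hypothetical.

```python
# Hypothetical host header values configured on the two origins.
PRIMARY_HOST = "primary.stream.example"
FAILOVER_HOST = "failover.stream.example"

# The load balancer's host-based routing table: host header -> backend.
HOST_ROUTES = {
    PRIMARY_HOST: "origin-region-a",
    FAILOVER_HOST: "origin-region-b",
}


def load_balancer(host_header, path, healthy_backends):
    """Route a request to a backend chosen by the host header."""
    backend = HOST_ROUTES[host_header]
    if backend not in healthy_backends:
        raise ConnectionError(f"{backend} is unavailable")
    return f"{backend} served {path}"


def fetch_from_origin(path, healthy_backends):
    """Try the primary origin, then retry with the failover host header."""
    for host in (PRIMARY_HOST, FAILOVER_HOST):
        try:
            return load_balancer(host, path, healthy_backends)
        except ConnectionError:
            continue  # fall through to the next origin
    raise ConnectionError("both origins are unavailable")


all_up = {"origin-region-a", "origin-region-b"}
primary_down = {"origin-region-b"}

normal_result = fetch_from_origin("/live/segment1.ts", all_up)
failover_result = fetch_from_origin("/live/segment1.ts", primary_down)
```

Both origins point at the same load balancer; only the host header differs, and that difference alone decides which region serves the segment.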

In summary

A crucial component of the live streaming ecosystem, Media CDN helps to guarantee quality, lower latency, and enhance performance. This post examined how Google Media CDN can be used by live stream applications across a variety of architectures and environments.

Read more on Govindhtech.com

Text

Rise to the top of the data center world with #CCIE! Explore complex designs, implementations, and troubleshooting. Join the league of elite networking professionals and showcase your mastery. https://www.dclessons.com/

#NetworkingExpert#CiscoCertified#datacenter#networking#cloud#sdn#loadbalancer#f5#vmware#scripting#sdwan#security#sdaccess#docker#iot#intentbasednetworking#aws#azure#googlecloud#cisco#juniper#aruba#ciscoaci#ciscodccor#awscertifiednetworkspecialty#azureaz305#googlecertifiedcloudassociate#sase#sdwanvssase
