#Matrix Multiplication Program In C Language

Explore tagged Tumblr posts

Text

Thailand Visa

Thailand's visa framework operates under a three-tier authorization system established by the Immigration Act (1979) and subsequent amendments:

A. Entry Permission (Visa Proper)

Issued by Thai embassies/consulates abroad

Determines initial duration and purpose of stay

Classified as:

Transit (TS)

Tourist (TR)

Non-Immigrant (12 subcategories)

Diplomatic/Official

B. Extension of Stay

Granted by Immigration Bureau within Thailand

Converts temporary entry into long-term residence

Requires specific qualifying conditions (e.g., marriage, retirement)

C. Re-Entry Permits

Maintains status during international travel

Single (THB 1,000) vs. Multiple (THB 3,800) options

2. Non-Immigrant Visa Subtypes: Operational Nuances

A. Business Visa (Non-B)

Approval Mechanics

Standard Path: Thai employer sponsorship + work permit

BOI Fast Track: 15-day processing for promoted companies

Treaty Routes: US Amity or ASEAN agreements

B. Retirement Visa (Non-O-A)

Financial Verification Protocols

Bank Deposit Method:

THB 800K seasoning: 2 months pre-application

Post-approval minimum: THB 400K maintained

Audit Trail: Must show foreign origin of funds

Income Method:

Embassy letters no longer sufficient alone

Requires 12-month Thai bank statements showing transfers

Exchange Rate Risk: Fluctuations may disqualify applicants

C. Education Visa (Non-ED)

Compliance Enforcement

Attendance Tracking:

Biometric check-ins at accredited institutions

Minimum 80% attendance rate enforced

Program Limitations:

Language schools: Max 5 years continuous stay

University programs: Duration of degree + 1 year

3. Special Economic Visas: Technical Specifications

Smart Visa Program

Sector-Specific Requirements

| Category | Minimum Salary | Qualification Proof |
|---|---|---|
| Talent (T) | THB 200K/month | Patent filings or PhD |
| Startup (S) | N/A | Series A funding or BOI backing |
| Investor (I) | N/A | THB 20M liquid investment |

Privileges Matrix

Work permit exemption (Categories T/S)

90-day reporting waiver

Dependent work rights

4. Immigration Enforcement Technologies

Biometric Systems

Facial Recognition: Deployed at major entry points

Fingerprint Database: Linked to Interpol records

Blockchain Verification: Pilot for document authentication

Risk Assessment Algorithms

Visa Run Detection:

Pattern analysis of border crossings

Flagging of "out-in" same-day movements

Financial Scrutiny:

Bank statement anomaly detection

Seasoning period algorithmic validation

5. Judicial Recourse Pathways

Administrative Appeals Process

First Appeal: To originating immigration office (30 days)

Second Appeal: Immigration Commission (60 days)

Judicial Review: Administrative Court (6-18 months)

Precedent-Setting Cases

2018 Supreme Court Ruling: Overturned arbitrary visa denials

2021 Case #4562: Established due process for Elite Visa revocations

6. Emerging Policy Shifts

Digital Nomad Visa (2025 Pilot)

Technical Requirements:

Minimum $80K annual income

Health insurance with $50K coverage

Remote employment verification

ASEAN Harmonization

Single Visa Proposal: Pending implementation

Labor Mobility Framework: Skilled worker exchange

7. Strategic Compliance Planning

Documentation Lifecycle Management

Retention Periods:

Bank statements: 3 years

Tax records: 5 years

Entry/exit stamps: 10 years

Risk Mitigation Framework

Parallel Status Maintenance (e.g., Elite + work permit)

Buffer Capitalization (20% above minimums)

Pre-emptive Legal Opinions for complex cases

8. Expert Advisory

For Corporate Applicants

BOI pre-approval reduces processing by 60%

Treaty structures optimize ownership flexibility

For Individual Investors

Elite + Property Purchase creates residency backup

Multi-entry Non-O provides interim flexibility

For Families

Education visa + Dependent extensions ensure continuity

Interlocking applications prevent status gaps

9. Conclusion: Mastering the System

Thailand's visa regime requires technical precision rather than generic approaches. Successful navigation demands:

Sector-specific strategy alignment

Documentation forensics preparation

Contingency layering for policy shifts

#thailand#thai#immigration#immigrationinthailand#thaiimmigration#thailandvisa#thaivisa#visainthailand


Text

Decode Data Science: Master the Math That Powers AI & Analytics

In today’s data-driven era, understanding the mathematics behind algorithms is critical — not just to use them, but to understand, explain, and improve them. Whether you’re building a regression model, training a neural network, or analyzing high‑dimensional data, a strong foundation in math is your greatest ally.

In this article, we explore the key mathematical areas every aspiring data scientist must master:

Linear Algebra

Calculus

Probability & Statistics

Matrix Calculus & Optimization

Dimensionality Reduction & Topological Techniques

Practical Integration & Career Pathways

We also highlight how the BookMyLearning PG‑Program in Data Science helps you build this foundation. You can enroll via this link: BookMyLearning PG‑Program in Data Science.

1. Linear Algebra: The Language of Data

a. Vectors, Matrices & Transformations

A vector represents a point or a direction in space; a matrix compactly represents a system of linear equations or a linear transformation.

Understanding properties — dimensions, rank, basis, orthogonality — is essential for working with datasets, feature spaces, and deep-net transformations. Techniques like Singular Value Decomposition (SVD) are instrumental in tasks like dimensionality reduction.
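To make the idea of a matrix as a transformation concrete, here is a minimal pure-Python sketch of matrix multiplication. The function name `matmul` and the example matrices `scale` and `rotate` are illustrative assumptions, not part of any library; in practice a library such as NumPy would do this work.

```python
def matmul(a, b):
    """Multiply an m x n matrix by an n x p matrix, both given as lists of rows."""
    if not a or not b or len(a[0]) != len(b):
        raise ValueError("inner dimensions must match")
    n, p = len(b), len(b[0])
    # Entry (i, j) is the dot product of row i of a with column j of b.
    return [[sum(row[k] * b[k][j] for k in range(n)) for j in range(p)] for row in a]


# Hypothetical example: stretch x by 2, then rotate 90 degrees counter-clockwise.
scale = [[2, 0], [0, 1]]
rotate = [[0, -1], [1, 0]]
combined = matmul(rotate, scale)   # the rightmost transformation is applied first
point = [[1], [1]]                 # a column vector
image = matmul(combined, point)
print(combined)  # [[0, -1], [2, 0]]
print(image)     # [[-1], [2]]
```

Multiplying the two matrices once and reusing `combined` gives the same result as applying each transformation in turn, which is why composed transformations are usually stored as a single matrix.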

b. Eigenvalues, Eigenvectors & SVD

Eigen-decomposition reveals principal axes in data variance.

Principal Component Analysis (PCA) uses eigen-decomposition or SVD to extract orthogonal, uncorrelated directions of greatest variance, a staple in exploratory data analysis and preprocessing.
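As a rough illustration of how the first principal component relates to eigenvectors, the sketch below builds a sample covariance matrix and runs power iteration on it. This is one simple way to find the dominant eigenvector, not how production libraries implement PCA; the helper names and the small dataset are assumptions made for the example.

```python
import math


def covariance_matrix(rows):
    """Sample covariance matrix of data given as a list of equal-length rows."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    return [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
            for i in range(d)]


def first_principal_component(rows, iterations=200):
    """Approximate the dominant eigenvector of the covariance matrix."""
    cov = covariance_matrix(rows)
    d = len(cov)
    v = [1.0] * d  # starting guess, assumed not orthogonal to the answer
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]  # renormalize so the vector stays unit length
    return v


# Hypothetical data lying close to the line y = x.
data = [[1.0, 1.1], [2.0, 1.9], [3.0, 3.2], [4.0, 3.8]]
pc1 = first_principal_component(data)
print(pc1)  # a unit vector pointing roughly along the diagonal
```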

c. Practical Implementation

Libraries like NumPy and frameworks like scikit-learn deliver built-in tools to compute matrix operations, decompositions, and transformations.

Mastering linear algebra enhances your ability to interpret model characteristics: why logistic regression converges, how PCA projects data, how SVD compresses imagery, and how gradient-based optimization works.

2. Calculus: Modeling Change & Learning

a. Differential Calculus

Derivatives (∂/∂x) measure rates of change. They underpin gradient descent and backpropagation in neural networks, where the learning rate scales each derivative-based update.

Partial derivatives allow optimization in multi-dimensional parameter spaces.
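A partial derivative can be approximated numerically by nudging one parameter at a time. The sketch below uses central differences; the function `numerical_gradient` and the toy `loss` are illustrative assumptions, and real frameworks typically use automatic differentiation instead.

```python
def numerical_gradient(f, point, h=1e-6):
    """Approximate each partial derivative of f at `point` by central differences."""
    grad = []
    for i in range(len(point)):
        forward = list(point)
        backward = list(point)
        forward[i] += h    # nudge only coordinate i upward
        backward[i] -= h   # and downward
        grad.append((f(forward) - f(backward)) / (2 * h))
    return grad


def loss(w):
    # Toy function: f(w0, w1) = w0^2 + 3*w0*w1
    return w[0] ** 2 + 3 * w[0] * w[1]


g = numerical_gradient(loss, [1.0, 2.0])
print(g)  # close to the analytic gradient (2*w0 + 3*w1, 3*w0) = (8, 3)
```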

b. Integral & Multi-variable Calculus

Though integrals are less directly used in training, they’re crucial in fields like Bayesian inference, convolution, and calculating areas under curves (AUC).

Understanding multiple integrals and Jacobian matrices helps with probability density transformations and optimization.

c. Matrix Calculus

Enables compact representation of gradients and Hessians needed for efficient training of neural networks.

Key for understanding backpropagation and advanced optimization methods like Newton’s method.
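To show what second-derivative information buys, here is a one-dimensional sketch of Newton's method for minimization. In higher dimensions the scalar second derivative becomes the Hessian matrix and the division becomes a linear solve; the function name and the example objective f(x) = e^x - 2x are assumptions chosen for illustration.

```python
import math


def newton_minimize(grad, hess, x0, tol=1e-12, max_iter=50):
    """Find a stationary point of a 1-D function from its first and second derivatives."""
    x = x0
    for _ in range(max_iter):
        step = grad(x) / hess(x)  # Newton step: gradient scaled by inverse curvature
        x -= step
        if abs(step) < tol:
            break
    return x


# f(x) = exp(x) - 2x has f'(x) = exp(x) - 2 and f''(x) = exp(x); its minimum is at ln 2.
x_min = newton_minimize(lambda x: math.exp(x) - 2, lambda x: math.exp(x), x0=0.0)
print(x_min, math.log(2))
```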

3. Probability & Statistics: Learning from Data

a. Probability Foundations

Essentials include random variables, distributions (Gaussian, binomial), expectation, variance, and covariance.

Core to Bayesian inference, modeling uncertainty, and deriving probabilistic approaches like maximum likelihood estimation (MLE).
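As a small worked case of maximum likelihood estimation, the sketch below fits a Gaussian to a handful of numbers. For a Gaussian, the MLE of the mean is the sample average and the MLE of the variance divides by n rather than n - 1. The function name and the sample values are assumptions for the example.

```python
def gaussian_mle(samples):
    """Maximum likelihood estimates of a Gaussian's mean and variance."""
    n = len(samples)
    mu = sum(samples) / n
    var = sum((x - mu) ** 2 for x in samples) / n  # MLE divides by n, not n - 1
    return mu, var


mu, var = gaussian_mle([2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0])
print(mu, var)  # 5.0 4.0
```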

b. Statistical Inference & Hypothesis Testing

Hypothesis testing, p-values, and confidence intervals are fundamental tools for validating models.

Regression analysis — both linear and logistic — relies on statistical principles to assess parameter significance.

c. Regularization & Bias-Variance Trade-off

Techniques such as ridge (L₂) and lasso (L₁) regularization address overfitting by shrinking model coefficients.

Grasping the bias-variance trade-off is crucial for tuning model complexity and evaluating generalization.
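The shrinking effect can be seen in closed form for a one-feature model with no intercept. The sketch below is a simplified illustration under that assumption; the function names and the exact scaling of the penalty terms (stated in the comments) are choices made for this example and differ between textbooks and libraries.

```python
def ridge_slope(xs, ys, lam):
    """Minimizer of sum((y - w*x)^2) + lam * w^2 for a single coefficient w."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def soft_threshold(z, lam):
    """Move z toward zero by lam, clamping at zero."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def lasso_slope(xs, ys, lam):
    """Minimizer of 0.5 * sum((y - w*x)^2) + lam * |w| for a single coefficient w."""
    return soft_threshold(sum(x * y for x, y in zip(xs, ys)), lam) / sum(x * x for x in xs)


xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # exactly y = 2x
print(ridge_slope(xs, ys, 0.0), ridge_slope(xs, ys, 14.0))   # 2.0 1.0
print(lasso_slope(xs, ys, 14.0), lasso_slope(xs, ys, 30.0))  # 1.0 0.0
```

Ridge pulls the coefficient toward zero without reaching it, while a large enough lasso penalty sets it exactly to zero.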

4. Optimization: Making Algorithms Learn

a. Gradient Descent Variants

Standard, stochastic, and mini-batch gradient descent are used for parameter updates in models from linear regression to deep neural networks.

Learning rate, convergence criteria, and momentum are key aspects that tie back to calculus and linear algebra.
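The three variants differ only in how many samples feed each update, which the following sketch makes explicit for a one-feature linear regression. It is a minimal illustration, not a tuned implementation: the function name, learning rate, epoch count, and noise-free toy data are all assumptions. Setting `batch_size` to the dataset size gives standard gradient descent and setting it to 1 gives stochastic gradient descent.

```python
import random


def minibatch_gd(xs, ys, lr=0.02, epochs=2000, batch_size=2, seed=0):
    """Fit y ~ w*x + b by mini-batch gradient descent on mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the samples in a new order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of the mean squared error over this batch only.
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]  # noise-free data from y = 2x + 1
w, b = minibatch_gd(xs, ys)
print(w, b)  # approaches 2 and 1
```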

b. Convex vs Non-convex Optimization

In convex problems every local minimum is a global minimum, and strictly convex ones (e.g., ridge regression) have a unique global minimum; understanding convexity helps interpret algorithmic guarantees.

Neural networks often pose non-convex challenges — requiring careful initialization and adaptive optimizers like Adam and RMSProp.

c. Numerical Techniques

Root-finding, line search, and numerical integration facilitate robust implementations of algorithms requiring approximations.

5. Advanced Techniques: Dimensionality & Topology

a. Dimensionality Reduction

PCA, t-SNE, UMAP: reduce high-dimensional data into 2D/3D for visualization and simplified modeling.

b. Topological Data Analysis (TDA)

An emerging field that uses algebraic topology to uncover the “shape” of data, robust to noise and metric choice.

6. Integration: From Theory to Practice

To bridge theory with application:

Python toolkits — like SymPy, NumPy, scikit-learn, and TensorFlow — integrate math into usable modules.

Project-based learning hones technical skills: coding linear regression, implementing backpropagation, or performing PCA on real data.

Balancing rigor with intuition means knowing the mathematical depth while understanding the ‘why’ behind algorithms.

7. Career Trajectory: Why Math Matters

Understanding math differentiates you from those relying purely on software. It empowers you to assess model assumptions, validate outputs, and innovate while balancing constraints.

As seen in Cathy O’Neil’s Weapons of Math Destruction, algorithms can carry bias — requiring mathematically literate professionals to recognize and correct such pitfalls.

Data scientists, much like Nate Silver or Kaggle competitors, often find that practical experience and mathematical understanding trump advanced degrees.

BookMyLearning’s PG‑Program in Data Science: A Blueprint

This rigorous PG program offers an integrated structure covering the essential mathematics outlined:

Core modules on linear algebra, calculus, probability, statistics, and optimization.

Hands-on labs using Python libraries and real datasets.

Faculty mentoring to guide mathematical thinking and technical execution.

Capstone projects applying math to real-world problems: classification, forecasting, unsupervised learning.

Check full details and enrolment via: 👉 BookMyLearning PG‑Program in Data Science

Summary & Recommendations

Master linear algebra — matrices, eigenvalues, SVD, PCA

Apply calculus — derivatives in optimization, integrals in inference

Understand probability & stats — distributions, hypothesis testing, modeling

Grasp optimization — convexity, gradient descent, regularization

Explore advanced topics — matrix calculus, dimensionality reduction, topology

Use Python and real datasets to connect theory with implementation

Stay current and ethical — mathematical understanding enables responsible AI use

Final Thoughts

Essential math is the backbone of every phase of data science — from preprocessing to modeling, validation to deployment. By investing in your mathematical journey, you gain:

The ability to scrutinize and improve algorithms

The confidence to explain decisions quantitatively

The flexibility to design new solutions for novel problems

If you’re ready to develop this mathematical foundation, explore the BookMyLearning PG‑Program in Data Science, designed to guide you step-by-step.


Text

ECSE 324 Lab 1 Basic Assembly Language Programming Complete Solution

Part 1: The Matrices! They’re Multiplying!! In this part, you will translate into assembly two different implementations of matrix multiplication in C, and then investigate their performance. Assume that main in the C code below begins at _start in your assembly program. All programs should terminate with an infinite loop, as in exercise (5) of Lab 0. Subroutine calling convention It is important…


Text

C Program for Matrix Multiplication: A Beginner’s Guide with Examples & Practice

Learn how to perform matrix multiplication in C with our beginner-friendly guide. Explore step-by-step code examples, dynamic memory allocation techniques, and real-life applications in computer graphics and data science. Perfect for novice programmers looking to boost their coding skills!

Matrix multiplication is a cornerstone of programming, used in graphics, data science, and engineering. If you’re learning C, mastering this concept will boost your coding skills. In this guide, we’ll break down matrix multiplication in C using simple language, practical code examples, and real-life applications. Let’s get started! What is Matrix Multiplication? Matrix multiplication involves…


Text

What Skills Are Needed to Become a Successful AI Developer?

The field of artificial intelligence (AI) is booming, with demand for AI developers at an all-time high. These professionals play a pivotal role in designing, developing, and deploying AI systems that power applications ranging from self-driving cars to virtual assistants. But what does it take to thrive in this competitive and dynamic field? Let’s break down the essential skills needed to become a successful AI developer.

1. Programming Proficiency

At the core of AI development is a strong foundation in programming. An AI developer must be proficient in languages widely used in the field, such as:

Python: Known for its simplicity and vast libraries like TensorFlow, PyTorch, and scikit-learn, Python is the go-to language for AI development.

R: Ideal for statistical computing and data visualization.

Java and C++: Often used for AI applications requiring high performance, such as game development or real-time systems.

JavaScript: Gaining popularity for AI applications in web development.

Mastery of these languages enables developers to build and customize AI algorithms efficiently.

2. Strong Mathematical Foundation

AI heavily relies on mathematics. Developers must have a strong grasp of the following areas:

Linear Algebra: Essential for understanding neural networks and operations like matrix multiplication.

Calculus: Used for optimizing models through concepts like gradients and backpropagation.

Probability and Statistics: Fundamental for understanding data distributions, Bayesian models, and machine learning algorithms.

Without a solid mathematical background, it’s challenging to grasp the theoretical underpinnings of AI systems.

3. Understanding of Machine Learning and Deep Learning

A deep understanding of machine learning (ML) and deep learning (DL) is crucial for AI development. Key concepts include:

Supervised Learning: Building models to predict outcomes based on labeled data.

Unsupervised Learning: Discovering patterns in data without predefined labels.

Reinforcement Learning: Training systems to make decisions by rewarding desirable outcomes.

Neural Networks and Deep Learning: Understanding architectures like convolutional neural networks (CNNs) and recurrent neural networks (RNNs) is essential for complex tasks like image recognition and natural language processing.

4. Data Handling and Preprocessing Skills

Data is the backbone of AI. Developers need to:

Gather and clean data to ensure its quality.

Perform exploratory data analysis (EDA) to uncover patterns and insights.

Use tools like Pandas and NumPy for data manipulation and preprocessing.

The ability to work with diverse datasets and prepare them for training models is a vital skill for any AI developer.

5. Familiarity with AI Frameworks and Libraries

AI frameworks and libraries simplify the development process by providing pre-built functions and models. Some of the most popular include:

TensorFlow and PyTorch: Leading frameworks for deep learning.

Keras: A user-friendly API for building neural networks.

scikit-learn: Ideal for traditional machine learning tasks.

OpenCV: Specialized for computer vision applications.

Proficiency in these tools can significantly accelerate development and innovation.

6. Problem-Solving and Analytical Thinking

AI development often involves tackling complex problems that require innovative solutions. Developers must:

Break down problems into manageable parts.

Use logical reasoning to evaluate potential solutions.

Experiment with different algorithms and approaches to find the best fit.

Analytical thinking is crucial for debugging models, optimizing performance, and addressing challenges.

7. Knowledge of Big Data Technologies

AI systems often require large datasets, making familiarity with big data technologies essential. Key tools and concepts include:

Hadoop and Spark: For distributed data processing.

SQL and NoSQL Databases: For storing and querying data.

Data Lakes and Warehouses: For managing vast amounts of structured and unstructured data.

Big data expertise enables developers to scale AI solutions for real-world applications.

8. Understanding of Cloud Platforms

Cloud computing plays a critical role in deploying AI applications. Developers should be familiar with:

AWS AI/ML Services: Tools like SageMaker for building and deploying models.

Google Cloud AI: Offers TensorFlow integration and AutoML tools.

Microsoft Azure AI: Features pre-built AI services for vision, speech, and language tasks.

Cloud platforms allow developers to leverage scalable infrastructure and advanced tools without heavy upfront investments.

9. Communication and Collaboration Skills

AI projects often involve multidisciplinary teams, including data scientists, engineers, and business stakeholders. Developers must:

Clearly communicate technical concepts to non-technical team members.

Collaborate effectively within diverse teams.

Translate business requirements into AI solutions.

Strong interpersonal skills help bridge the gap between technical development and business needs.

10. Continuous Learning and Adaptability

The AI field is evolving rapidly, with new frameworks, algorithms, and applications emerging frequently. Successful developers must:

Stay updated with the latest research and trends.

Participate in online courses, webinars, and AI communities.

Experiment with emerging tools and technologies to stay ahead of the curve.

Adaptability ensures that developers remain relevant in this fast-paced industry.

Conclusion

Becoming a successful AI developer requires a combination of technical expertise, problem-solving abilities, and a commitment to lifelong learning. By mastering programming, mathematics, and machine learning while staying adaptable to emerging trends, aspiring developers can carve a rewarding career in AI. With the right mix of skills and dedication, the possibilities in this transformative field are limitless.


Text

Solved ECSE 324 Lab 1: Basic Assembly Language Programming

Part 1: The Matrices! They’re Multiplying!! In this part, you will translate into assembly two different implementations of matrix multiplication in C, and then investigate their performance. Assume that main in the C code below begins at _start in your assembly program. All programs should terminate with an infinite loop, as in exercise (5) of Lab 0. Subroutine calling convention It is important…


Text

Ah, PhD advisor Chalmers, welcome! I hope you're prepared for an unforgettable thesis!

mmhm

oh, ye gods! my matrix multiplication algorithm is ruined! ... but what if I were to use theoretical computer science constructs and disguise them as actual programming?

delightfully devilish, Seymour

uh --

Professor! I was just, uh, using c++ libraries that definitely exist! legitimate programming. Care to join me?

Why is your thesis completely struck out and replaced by illegible scribbles?

um-- oh! those aren't illegible scribbles! They're an algorithm! An algorithm that multiplies matrices in O(n^2log(n)) time!

mm.

Professor, I hope you're prepared for an algorithm that multiplies integer matrices in O(n^2log(n)) time using a turing oracle!

using a turing oracle? I thought you were going to write a computable algorithm

oh, no, I just said "algorithm". I don't assume computability when describing algorithms.

uh huh.

Say, this algorithm has time complexity n^4log(n).

oh, no! you only have to calculate the general form of the queries to the oracle once.

really.

well, what is the information entropy of the input you're giving the oracle?

it's O(1) in the input. It's a very good oracle.

I see. you know, this implementation queries the oracle with natural language rather than asking if a specific turing machine halts.

oh, no, it's not really a turing oracle. It's just an Oracle. It's a thing in some versions of c++.

a turing oracle. In c++.

yes.

yes. And it knows telepathically what you mean by "the product of the matrices" even though you're not mentioning the matrices A or B to it explicitly.

uh-- you know-- one thing I should-- excuse me for one moment

of course.

WELL that was wonderful. I assume you approve of my thesis?

yes, I think it-- well, no? What on earth is happening with the oracle you're using?

it's a real computing oracle.

a r- a real, extant computing oracle? at this abstraction? at this memory bandwidth? executed entirely by a natural language query??

yes.

may i see it?

no.

SEYMOUR, MY IDE SAYS THE TURINGORACLE LIBRARY DOES NOT EXIST

no, colleague, you just don't have the library in your installation of c++

Well, Seymour, you are an odd fellow. But I must say: you write a fast algorithm

#steamed hams I guess#and uh a joke about computation#I really don't think this makes any sense out of context but it is outlandishly silly anyway#for context I wrote a joke algorithm that's basically what seymour describes here and then discussed the absurdity of the oracle involved


Text

What is the Future of C Programming?

The modern world requires efficiency, performance and resource optimization. The C programming language remains highly relevant because it offers these attributes and more. It is widely used in developing operating systems, embedded systems, and performance-critical applications. Further, C has shaped many other programming languages, such as C++, Java and Python, which makes it a foundation for understanding the principles of programming.

Several industries such as automotive, telecommunications and manufacturing incorporate the C programming language as a crucial aspect. The structure of C programming is such that the language also boasts portability and widespread use in systems across industries involving technology and hardware. Ultimately, it continues to be a foundational skill for software developers and engineers.

The Future of C Programming Language

There is a vast scope for the structure of C programming to incorporate aspects that focus on future readiness. Here are some ways in which C programming is likely to get incorporated into the future of technology, software and hardware.

Potential Improvements in the C Programming Language:

First, much of today's information has moved to the cloud, and some businesses operate almost entirely online. For this reason, advancements in the C programming language will mostly focus on security, portability and modern development features, with an emphasis on reducing vulnerabilities in code such as buffer overflows. Second, the focus will move towards performance optimization on modern multi-core processors. Further updates will streamline debugging as well. Standard library updates may introduce more utilities for contemporary needs, such as improved support for networking and cryptography. Finally, one of the best ways to stay in step with current technological trends is to maintain backward compatibility while introducing the enhancements mentioned above. This way, the language will be ready to handle operations at any scale.

Integration with Modern Technologies:

In the modern tech realm, IoT devices can harness the attributes of the C programming language to their advantage. For example, take a drone. This high-tech machine that captures vast expanses of space while flying can benefit from C to program its operations. Performance optimization via Real-Time Operating Systems can handle flight control, sensor data management and communication with ground control. Moreover, it can also handle interruptions and improve efficiency. How does the structure of C programming achieve this? C takes care of the compression algorithms and streams the data from the drone to a remote device with ease. Integrating C into modern technology can improve control and response time which is essential for safety and efficiency.

C Programming Language with Technological Developments:

The C programming language is used to optimize the core computational tasks that are integral to AI and ML. It delivers high performance, especially in the low-level operations behind libraries like TensorFlow and PyTorch, where it takes over repetitive tasks and speeds up computation. For example, when training deep neural networks, C handles intensive tasks like matrix multiplications and data pre-processing. Additionally, it can be used to develop custom Machine Learning algorithms and resources. One of the best parts of C is its ability to integrate with higher-level languages like Python. This integration enables efficient deployment of AI models on hardware with constrained resources, such as embedded systems and IoT devices, enhancing their ability to perform real-time data analysis and decision-making.

What is the Current Scope of the C Programming Language?

The C programming language remains highly relevant and significant in the modern day. As technology continues to grow, its foundations need to be stronger than ever, which C can provide. Firstly, it is used in varying capacities, from system programming for Operating Systems, embedded systems and real-time applications to performance-critical applications like databases, compilers, etc. Secondly, its efficiency and low-level hardware access make it a fundamental language to know for any software engineer or developer. It is the base on which other languages like C++ and Java are taught in educational institutions. Ultimately, one of the main reasons why careers are built on the C programming language is due to its portability and its support of advanced technology in fields like IoT, AI and ML.

In summary, it has and will continue to remain relevant in the modern world.

Studying the Structure of C Programming: Final Thoughts

Students aiming to study and earn a BCA degree in India should consider learning the C programming language. For starters, as a strong foundational language it helps you grasp basic programming concepts and build problem-solving skills.

C’s structure and syntax are known to equip students with a deep understanding of base-level operations as well as efficient memory management. For future software developers and engineers, it is the ultimate guide to system programming and software development. Once you learn the C programming language, mastering higher-level languages like C++, Java and Python comes more easily. Top universities like Amity University also offer great Bachelors in Computer Applications (BCA) courses that focus on C as a base language. It is a great springboard to ensure excellent coursework, internships and job opportunities in tech.


Text

What is TensorFlow? Understanding This Machine Learning Library

In this era of rapidly developing machine learning and deep learning, a question always arises: what is TensorFlow? It is a free, open-source library for artificial intelligence and ML. It can be used for various tasks but focuses on the training and inference of deep neural networks. It was developed at Google by the Google Brain team and released in 2015.

In 2019, Google released an updated version, TensorFlow 2.0, with various new features. Since then, it has been in high demand in the industry. Given its popularity, people often ask, "What does TensorFlow do?" This article explains the library along with its benefits and applications.

What is TensorFlow?

It is an open-source library introduced by Google, used primarily for deep learning operations. It was first developed for large-scale numerical computation rather than for deep learning specifically. Now it supports numerical computations for various workloads, such as ML, DL and other predictive and statistical analysis.

It represents data as multidimensional arrays called tensors. These arrays are a convenient way to collect and store large amounts of data. The tool works with dataflow graphs made of nodes and edges, and its code is simple to execute in a distributed manner across a cluster of computers.

How Does TensorFlow Work?

This library enables users to create dataflow graphs that describe how data moves through a computation. A graph has two elements: nodes and edges. Each node represents a mathematical operation, and each connection between nodes is called an edge. The graph takes its inputs in the form of multidimensional arrays. Users can also lay out the operations to be performed on these inputs as a flowchart.

What is TensorFlow in Machine Learning?

In machine learning, TensorFlow is an open-source framework used mostly for developing and deploying ML models. It is in demand for its flexibility, which lets it implement a wide variety of algorithms. Application areas include:

Robotics

Healthcare

Fraud Detection

Generative Models

Speech Recognition

Reinforcement Learning

Recommendation Systems

Natural Language Processing (NLP)

Image Recognition and Classification

Time Series Analysis and Forecasting

Components of TensorFlow

The workings of this tool are easiest to understand by breaking it into the following components:

Tensor

The name TensorFlow is borrowed from its core data structure, the tensor. A tensor is an n-dimensional array, a generalization of vectors and matrices, that can represent all kinds of data. All values in a tensor share the same data type and have a known shape. The shape gives the dimensions of the array. A tensor can come from input data or from the result of a computation.

Graphs

This tool is built around a graph framework. The graph collects and describes all the computations carried out during the process. It can run on multiple CPUs or GPUs and on mobile operating systems. Because the graph is portable, computations can be saved for immediate or later use. All of the computation is executed by connecting tensors together.

For instance, consider an expression such as: a = (b+c)*(c+2)

This expression can be broken into components: d = b+c, e = c+2, a = d*e

Graphical representation of the expression:

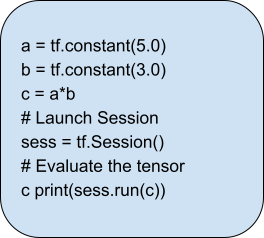
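The same decomposition can be sketched in a few lines of plain Python. This is a toy model of a dataflow graph, not TensorFlow's API: the `Node` class, its `evaluate` method, and the feed dictionary are all hypothetical names introduced for illustration.

```python
import operator


class Node:
    """One vertex of the graph; the edges are the references held in `inputs`."""

    def __init__(self, name, op=None, inputs=()):
        self.name = name
        self.op = op
        self.inputs = inputs

    def evaluate(self, feed):
        if self.op is None:  # leaf node: read the value fed in by name
            return feed[self.name]
        args = [node.evaluate(feed) for node in self.inputs]
        return self.op(*args)


# Build the graph for a = (b + c) * (c + 2) without computing anything yet.
b = Node("b")
c = Node("c")
two = Node("two")
d = Node("d", operator.add, (b, c))
e = Node("e", operator.add, (c, two))
a = Node("a", operator.mul, (d, e))

# Only now are values supplied and the nodes evaluated.
result = a.evaluate({"b": 1, "c": 3, "two": 2})
print(result)  # 20
```

Separating graph construction from evaluation is what lets the same graph be reused with different input values.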

Session

A session is used to execute the operations in the graph. It feeds the graph with tensor values. Inside a session, an operation must be run in order to produce an output. A session is also used to evaluate the nodes.

Features of TensorFlow

This tool has an interactive multi-platform programming interface and is designed to be reliable and scalable. The following features account for the popularity of this library:

Flexible

Open Source

Easily Trainable

Feature Columns

Large Community

Layered Components

Responsive Construct

Visualizer (with TensorBoard)

Parallel Neural Network Training

Availability of Statistical Distributions

Applications of TensorFlow

Newcomers to the field of artificial intelligence often ask, 'What does TensorFlow do?' It is an open-source platform designed for machine learning and DL operations. Here are some of the applications of this library:

1. Voice Recognition

Voice recognition is one of the most popular use cases of this library. It is built on neural networks, which can interpret voice signals when they are given suitable input data. It is used for voice search, sentiment analysis, voice recognition and understanding audio signals.

This use case is widely popular among smartphone manufacturers and mobile OS developers. Voice recognition is used in voice assistants such as Apple's Siri, Microsoft Cortana and Google Assistant. It is also used in speech-to-text applications to convert audio into text.

2. Image Recognition

This use case is common in social media and smartphones. Image recognition, image search, motion detection, computer vision and image clustering are its typical uses. Google Lens and Meta's DeepFace are examples of image recognition technology. This deep learning method can identify objects in images it has never seen before.

The healthcare industry also uses image recognition for quick diagnosis. TensorFlow algorithms help recognise patterns and process data faster than humans can, which can speed up the detection of illnesses and health issues.

3. Recommendation

Recommendation is another method used today for pattern discovery and data forecasting. It helps derive meaningful statistics along with recommended actions. It is used by leading companies such as Netflix, Amazon and Google, whose applications suggest products according to customer preferences.

4. Video Detection

These algorithms can also be applied to video data, for real-time threat detection, motion detection, gaming and security. NASA is using this technology to build a system for object clustering, which can help predict and classify the orbits of NEOs (Near-Earth Objects) such as asteroids.

5. Text-Based Applications

Text-based applications are also a popular use case of this library. Sentiment analysis, threat detection, social media, and fraud detection are some of the basic examples. Language detection and translation are other use cases of this tool. Various companies, including Google, Airbnb, eBay, Intel, Dropbox, DeepMind, Airbus and CEVA, use this library.

Final Words

This article has explained what TensorFlow is. It is a powerful open-source tool for machine learning and deep learning. It helps create deep neural networks to support diverse applications like robotics, healthcare and fraud detection. It is also used to perform large numerical computations. It provides data flow graphs to process multi-dimensional arrays called tensors. You can learn TensorFlow and earn a TensorFlow certification.

Its components, such as tensors, graphs and sessions, help with computation across CPUs, GPUs, and mobile devices. It has various features, including flexibility, ease of training and extensive community support. It provides robust capabilities, such as parallel neural network training and visualization with TensorBoard. This makes it a cornerstone tool in the AI and ML landscape.


Text

PEMDAS or what?

Great comment by Chris McLeod

In this case, it's about whether the implied multiplication of the bracket is higher or equal priority to regular multiplication.

Here's a breakdown

Let's look at all the premises that may affect the parsing and computation of this problem and related problems

A: multiplication comes before division

A': division comes before multiplication

A*: division and multiplication have equal precedence

B: operations occur left to right when equal precedence

B': operations occur right to left when equal precedence

B*: operations without explicit precedence are malformed

C: grouping by adjacency occurs before operator substitution

C': operator substitution occurs before grouping by adjacency

C*: grouping by adjacency is not permitted

D: pronumerals are grouped before operator substitution

D': pronumerals are grouped after operator substitution

D*: pronumerals are not permitted

E: addition comes before subtraction

E': subtraction comes before addition

E*: subtraction and addition have equal precedence

So let's see how these are used in conventions:

most countries use {B}

but when we do matrix arithmetic or functional algebra we use {B'} (although even in those cases japan uses {B})

also hebrew and arabic computations will use {B'} where we would use {B} and vice versa

literal interpretation of BODMAS or BEDMAS is {A',E}

literal interpretation of PEMDAS is {A,E}

the interpretation you are intended to receive from them is {A*,E*}. Note that neither of them explicitly refers to {C}

notice, that so far no claim has been made on B C or D

schools almost never directly deal with these, I think this is probably an oversight.

As for programming languages

LISP uses {A*,B*,C*,D*,E*}

Python uses {A*,B,C*,D*,E*}

CASIO uses {A*,B,C,D,E*}

TX uses {A*,B,C',D,E*}

MATLAB uses {A*,B,C',D,E*}

Personally, I'm {A*,B,C',D,E*}

So what result do we get depending on these propositions?

the problem is 8/2(2+2)

right away we can say that D and E are irrelevant

we can also turn (2+2) into (4)

giving us 8/2(4)

before we do anything we have to decide what to do with the adjacency between 2 and the bracketed term

{C*} will tell us to reject this expression and not attempt to compute it

if {C} then the expression becomes 8/(2(4)) = 8/(2*4)

which regardless of propositions gives 8/8 = 1

if {C'} then the expression becomes 8/2*4

then if {B*} we reject the expression and do not attempt to compute it

otherwise if {A'} or {A*,B} it becomes (8/2)*4 = 4*4 = 16

otherwise if {A} or {A*,B'} it becomes 8/(2*4) = 8/8 = 1

The main takeaway is that there is no universal standard

We have seen that B varies tremendously

We can arguably say that {A*,E*} is a universal standard, supposing that schools properly taught it instead of doing it in a confusing way that leads people to take {A',E} or {A,E}

I would also say that {D} is a reasonable standard that I think most professionals hold, even if they haven't thought about it explicitly.

Languages may implement {D*} to enforce symbolic tokens on variable names

{C} is contested however. This is the main reason why languages will implement {C*} instead to avoid the ambiguity and force people to frame it in an unambiguous form. Almost all of these memes rely on this contention for traffic.

Here's an interesting article on the evolving usage of C vs C'


Text

ECSE 324 Lab 1: Basic Assembly Language Programming solved

Part 1: The Matrices! They’re Multiplying!! In this part, you will translate into assembly two different implementations of matrix multiplication in C, and then investigate their performance. Assume that main in the C code below begins at _start in your assembly program. All programs should terminate with an infinite loop, as in exercise (5) of Lab 0. Subroutine calling convention It is…


Text

C program to find the product of N*M matrices

In C programming, we are going to create a matrix multiplication program. We will build it using arrays and loops, and use it to find the product of N*M matrices. If you have not yet read the HTML Full Course, CSS Full Course, Python Full Course and PHP Full Course, you can read those as well. C Matrix…


#C Program Matrix In Hindi#C program to designs a program and find a product of the N*M matrix#C program to find a product of the N*M matrix#find a product of the N*M matrix#Matrix Multiplication Program In C In Hindi#Matrix Multiplication Program In C Language#What Is Matrix Multiplication In Hindi


Text

Why Should Scientists Use Python for Scientific Computing?

By definition, scientific computing is the use of mathematics and computation to solve intricate engineering and scientific problems. It includes employing computers rather than human brains to solve a problem. In Scientific Computing, simulations, modeling, and data analysis can all be done on computers.

In a variety of disciplines, including physics, chemistry, biology, and engineering, it aids in human comprehension and prediction of the behavior of complex systems.

The purpose of scientific computing is to employ well-known mathematical ideas as a tool to address challenging real-world problems that can't be resolved using conventional techniques. Deep mathematical knowledge and the capacity to create effective programs are prerequisites for scientific computing.

Earning an online Python certificate can greatly increase your job offers as a Python developer.

Why is Python a popular choice for Scientific Computing?

Python has a huge number of scientific libraries, which gives it an edge over other programming languages for scientific computing.

Scientific computing encompasses a wide variety of challenging mathematical operations, including tensor multiplications, vector transformations, and matrix multiplications. Even for seasoned programmers, writing these computations from scratch can be difficult and time-consuming. For novice programmers, the task is harder still when freely accessible libraries for scientific computing are scarce.

Python's large array of scientific computing libraries, of which NumPy is one of the best known and most widely used, offers a solution to this issue. These libraries make it simple and effective for programmers of all skill levels to carry out scientific computing, freeing them up to concentrate on their studies and projects.
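To give a sense of what "from scratch" means here, the following is a minimal sketch of matrix multiplication in plain Python, with lists of lists standing in for matrices. The function name `matmul` is chosen for this illustration only. With NumPy, the same product is normally written as `a @ b` on arrays, and the library handles the loops and the shape checks.

```python
def matmul(a, b):
    """Multiply an n x k matrix by a k x m matrix, both given as lists of rows."""
    inner = len(b)
    if any(len(row) != inner for row in a):
        raise ValueError("columns of a must equal rows of b")
    cols = len(b[0])
    # Entry (i, j) is the dot product of row i of a with column j of b.
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(len(a))
    ]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
product = matmul(a, b)
print(product)  # [[19, 22], [43, 50]]
```

Even this short version has to deal with dimension checks and index bookkeeping by hand, which is the kind of detail a library takes off your plate.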

If you want a career in game programming or math-related programming, you should take up an online Python training course.

Ease-of-use

Python is known as one of the simplest programming languages. That simplicity is sometimes viewed as a disadvantage for general-purpose programming, but it has several benefits for scientific computing, artificial intelligence, and machine learning. In these domains, you can concentrate on the algorithms and mathematical models instead of worrying about syntax and other finer points of the language.

Online Python training will teach you to solve problems using Python in simple ways.

Visualization

Visualization is one of Python's best features for scientific computing. Python has a number of data visualization tools, including Matplotlib, Seaborn, and Plotly. Because these tools make it easy to visualize and analyze scientific data, scientists can gain insights and make well-informed decisions.

Flexibility

Python is a very adaptable language that can be used for a variety of tasks, such as scientific computing, data analysis, web development, and automation.

You can become an efficient Python developer after finishing an Online Python training course.

Interoperability

Python is highly interoperable: it can readily communicate with other programming languages such as C/C++ and with other tools. This makes it easier to incorporate existing code and algorithms into Python projects.

Pre-built Mathematical models

Python also has a ton of pre-built mathematical models and scientific computing algorithms, which is a huge benefit. For instance, if you need to solve a complex mathematical equation, you can import a library like SciPy or SymPy that has pre-built routines for these computations. This saves the time and effort of writing the algorithm from scratch.

Python has a great future.

An intriguing development is that Python is also being used in cutting-edge quantum computing technology, which may substantially change computing. By selecting Python, you position yourself close to the forefront of scientific computing breakthroughs.

You have a secure future if you complete an online Python training course.

Final thoughts

This article covered Python's benefits for scientific computing, including its abundance of libraries, flexibility, simplicity, and ease of use. Python is a flexible and effective tool that is helpful for various intricate tasks. It is highly recommended if you're working on challenging mathematical calculations, creating AI applications, or researching quantum computing.

An online Python course certification is easy to complete, and it provides many benefits.


Text

The Future of Addition, Subtraction, Multiplication, Division in C: Insights from an Expert

As technology continues to advance at a rapid pace, it is natural to wonder about the future of programming languages and their fundamental operations. In this article, we explore the future of addition, subtraction, multiplication, and division in the C programming language, with insights from an expert in the field. By understanding the trajectory of these operations, we can gain valuable insights into their role in shaping the future of software development.

Continual Relevance: Addition, subtraction, multiplication, and division are timeless operations that will continue to play a vital role in programming languages, including C. Their fundamental nature ensures their relevance and applicability in various domains.

Integration with New Technologies: The future of addition, subtraction, multiplication, and division in C lies in their integration with emerging technologies. As new programming paradigms, frameworks, and platforms emerge, these operations will adapt to seamlessly work with these technologies, enabling developers to leverage their power in innovative ways.

Performance Optimization: Efficiency and performance have always been key considerations in programming. In the future, efforts will continue to optimize addition, subtraction, multiplication, and division operations in C to make them even faster and more efficient, allowing for enhanced computational capabilities and improved software performance.

Advanced Mathematical Operations: While addition, subtraction, multiplication, and division are the foundation, future advancements in C may introduce more advanced mathematical operations to address complex computational challenges. These operations could include matrix operations, statistical calculations, trigonometric functions, and more, expanding the range of mathematical capabilities within the language.

Enhanced Data Manipulation: With the rise of big data and data-driven applications, the future of addition, subtraction, multiplication, and division in C will likely involve enhanced data manipulation capabilities. This could include optimized algorithms for handling large datasets, improved precision for floating-point calculations, and efficient handling of complex data structures.

Integration with Artificial Intelligence and Machine Learning: As AI and machine learning continue to revolutionize various industries, the future of addition, subtraction, multiplication, and division in C will involve tighter integration with these technologies. Developers will leverage these operations to implement algorithms, perform mathematical computations, and analyze data in AI and machine learning applications.

Cross-Platform Compatibility: The future of addition, subtraction, multiplication, and division in C will also focus on improving cross-platform compatibility. As software development continues to span multiple operating systems, devices, and architectures, efforts will be made to ensure these operations can be seamlessly executed across different platforms.

Increased Automation and Code Generation: Automation and code generation are growing trends in the software development industry. In the future, tools and frameworks may facilitate the automatic generation of code for common arithmetic operations, freeing up developers to focus on higher-level tasks and accelerating the development process.

Integration with Quantum Computing: As quantum computing evolves, the future of addition, subtraction, multiplication, and division in C may involve their adaptation to quantum computing architectures. This will require rethinking these operations to take advantage of the unique computational capabilities offered by quantum systems.

Education and Community Support: As the future unfolds, education and community support will continue to play a crucial role in shaping the understanding and adoption of addition, subtraction, multiplication, and division in C. Learning resources, forums, and collaborative platforms will facilitate knowledge sharing and skill development, ensuring that programmers can adapt to the evolving landscape of arithmetic programs in C.

Conclusion: The future of addition, subtraction, multiplication, and division in C holds immense potential for further advancements and integration with emerging technologies. As developers continue to push the boundaries of software development, these fundamental operations will remain indispensable in solving complex problems, facilitating computational tasks, and driving innovation in the digital era.


Link

Social Justice, in its current form, has a structural anti-Semitism problem. It’s not that the Social Justice people hate Jews. At least I don’t think they do, as far as I’m aware. It’s deeper, because it results from the structure of some of the indoctrinations within Social Justice itself. Let’s look closely at some of these indoctrinations, see how they interact, and draw up some possible ways the Social Justice tribe might fix the problem, structurally speaking.

Puzzle Piece #1: “You Didn’t Earn That”

“You didn’t earn that” is an indoctrination deeply steeped within Social Justice, and it erupts in many different forms, which all tend to reinforce each other. Please note, I’m not talking about the Obama “You Didn’t Build That” argument, which simply states that independent business owners are actually quite dependent on the government. Nor am I referring to the Animal Farm style Marxists who don’t think rewards should be meritocratic at all, because these are rare outside of secret Antifa dens or the streets of Portland. I’m referring specifically to the mainline Social Justice approach, which is more layered.

First, they adopt the position that people are blank slates, and all features of personality or competence are installed by society. This leads to the belief that IQ isn’t real, or at most is simply the result of a racist test. It also leads to the belief that differences in socioeconomic outcome between races must be due to environmental factors, since no other factors exist. These environmental factors are defined to be “privilege.” You didn’t earn that wage gap, you were given the wage gap because you are male, or white, or similar.

…

Puzzle Piece #2: “Racism = Prejudice Plus Power”

This stipulative definition of “racism” was first postulated by Patricia Bidol-Padva in 1970, six years after the Civil Rights Act of 1964 and two years after the death of Martin Luther King Jr. It is now the dominant definition employed by Social Justice. By this definition, a prejudiced act isn’t racist unless the prejudiced person wields power over the person they’re prejudiced against. We might call this the Sarah Jeong Defense.

…

Puzzle Piece #3: “Intersectionality”

The roots of modern intersectionality come from Kimberlé Crenshaw, and in application, work somewhat like this. Print the above grid. Circle the “identity” that describes you on each line. The more circles you have on the right-hand side of the grid, the more marginalized you are. This approach is already deeply baked into academia, and rolled out for freshman orientation at places like Cornell.

…

Build the Puzzle

The theory then goes like this. IQ isn’t real, or if it is, it’s just a result of a racist test. You didn’t earn that. Socioeconomic outcomes are due to privilege. Marginalized people have less privilege. People who are marginalized in multiple ways have even less privilege than people who are marginalized in one way, and theoretically have even worse socioeconomic outcomes due to the intersectionality of their marginalization. But like all theories, this theory is testable, and falsifiable, and when you start testing it on Ashkenazi Jews, you get problems.

…

Does this trend of largely vandalistic hate translate to worse economic outcomes? Definitively, no. Jewish people earn more money than any other religious affiliation, including whites of European descent. An astonishing 44% earn six figures, over double the national average. They also make up 25% of the 400 wealthiest Americans despite only being 2% of the total population.

As someone who has adopted the indoctrinations of individualism, I don’t find these numbers difficult to explain. The Jewish folks I’ve met are usually smart, funny, successful, responsible, good looking, healthy, and good with the money they earn. But this doesn’t fit the Social Justice worldview, which equates marginalization with worse socioeconomic outcomes. The theory fails the test.

When a theory fails a test, the theorist adjusts the theory, and it won’t take too much longer for Social Justice theorists to adjust this one. When they do, they’ll have three possible paths to resolve it, and I fear they’re already heading down the worst path.

Path #1: Jew Privilege

I don’t like this one. Not one bit. But it’s the easiest one they could adopt, because it requires very little re-working of their belief system, and they seem to already be going down this path. The resolution works like this:

Move Jews from “marginalized” to “privileged” in the intersectional matrix of oppression.

This allows the Social Justice tribe to keep all their other indoctrinations intact, and their theory matches the data. They can say Jews have better socioeconomic outcomes because of their “Jewish Privilege,” instead of the measurably higher IQs Jews have, or other racial traits which don’t fit the tabula rasa ideology. And it’s already happening. You can see it in the ongoing drama with the Women’s March.

…

“Jews as white people” is language that clearly intends to adjust the Jewish position on the intersectionality matrix.

…

This is very bad, because the Bidol-Padva racism definition would mean anti-Semitism suddenly becomes “woke,” and Social Justice, to borrow from their own lexicon, will itself become Literally Hitler.

But there are some other options they might use to avoid their anti-semitic fate, if they take a wider look. As we mentioned before on HWFO, Social Justice is a religion-like-thing with the unique and captivating feature of being crowdsourced, which means that the crowd can monkey with the indoctrinations however they like, to fix the system if it’s broken. They need to start doing this more intelligently, and with a systems analysis approach. Here are two alternate options.

Path #2: Dump Bidol-Padva Racism

This would be the hardest one for Social Justice to adopt, but would be the one I would personally prefer. If the Social Justice crowd were to pivot away from the idea that “racism” is only something that privileged people do to non-privileged people, and instead acknowledge that racism is a universal condition that any race or group can apply to any other race or group, then anti-Semitism would always stay “racist” and never get “woke,” no matter how much privilege the Jews are assigned in the matrix of oppression.

This would pivot the rules of behavior for Social Justice away from where they’re at today, and back towards “judging people not by the color of their skin, nor their intersectionality-matrix-location, but by the content of their character.” I think this would make the world a much happier place. I speculate that MLK would also agree.

But I don’t anticipate they’ll do this, because they’d have to give up their own racial prejudices to do so, and giving up racial prejudices is hard. It would also deprive them of one of the weapons in their arsenal, namely their ethos that it’s okay to be racially prejudiced to white people in the name of equity.

Path #3: Acknowledge the Jews Might Have Earned That

Another way for Social Justice to avoid becoming Literally Hitler would be to acknowledge the science that IQ is heritable, and that IQ is heavily responsible for socioeconomic outcomes. Further, that races have different median IQs, and Jews are at the top, followed by Asians.

This will also be a tough pill for Social Justice to swallow, because it opens the door to the possibility that not all racial inequality is due to systemic racism, and that universal racial equity may not be a realistic objective. But it’s still probably an easier pill to swallow than giving up their racial prejudices, and at least it doesn’t lead to them becoming “Literally Hitler.”

Or they could bail on the whole program, but I don’t consider that to be particularly likely.

…

What Social Justice needs now, more than anything else, is a new leadership to rise which A) understands Social Justice’s religion-like nature, B) understands systems analysis, and C) is brave enough to tinker under the hood and fix some of the broken things within it. It needs reform. Badly. The “Woke Anti-Semitism Paradox” is only one example of many.


Photo

65536 1-bit Processors in 1986: SIMD with the "Connection Machine"

https://en.wikipedia.org/wiki/Connection_Machine

Some 33 years ago, Hillis and his research team created the Connection Machine, an early massively parallel SIMD computer. The defining attribute of SIMD machines is that many processors run the same program across different sets of data. That is to say: Single Instruction (or single program), Multiple Data (SIMD for short).

Originally created to solve the hardest AI problems of the 1980s, the SIMD model turned out to be useful in a great variety of fields of computer science. Eventually, this SIMD model was discovered to solve matrix multiplication problems extremely efficiently, leading to its modern application in GPUs. (The vast majority of 3D graphics can be represented as a series of 4x4 matrix multiplications.)
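As a rough illustration of "same instruction, different data", here is a sketch in plain Python that applies one 4x4 matrix to several points in homogeneous coordinates. The names `transform`, `TRANSLATE` and `points` are invented for this example, and the translation by (2, 3, 4) is an arbitrary choice. Python runs the loop one point at a time; the point of the SIMD model is that hardware could run the identical multiply-and-add steps for every point in lockstep.

```python
def transform(matrix, point):
    """Multiply a 4x4 matrix by a 4-component point (x, y, z, w)."""
    return tuple(
        sum(matrix[row][col] * point[col] for col in range(4))
        for row in range(4)
    )


# A translation by (2, 3, 4), written as a 4x4 matrix.
TRANSLATE = [
    [1, 0, 0, 2],
    [0, 1, 0, 3],
    [0, 0, 1, 4],
    [0, 0, 0, 1],
]

# The data: the same operation is applied to every point independently.
points = [(0, 0, 0, 1), (1, 1, 1, 1), (-2, -3, -4, 1)]

moved = [transform(TRANSLATE, p) for p in points]
print(moved)  # [(2, 3, 4, 1), (3, 4, 5, 1), (0, 0, 0, 1)]
```

Because no point depends on any other, the work splits cleanly across as many processors as there are points, which is why this style of computation maps so well onto GPUs.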

For the non-hardware geeks out there, the most famous use of the CM-5 was in the movie "Jurassic Park". The CM-5's cabinet of mysterious blinking red-LEDs was exceptional at making it look like "science is happening" :-) See here for more details. Still, at a real life cost of $20 Million per CM-5, the movie studio had to borrow the machines for all of the shots.

Interesting Links

"Data Parallel Algorithms" by Hillis and Steele. This paper is highly cited in various GPGPU papers. Many early algorithms and examples were listed in this paper, teaching the SIMD parallel programming style.

Getting Started in C* -- The C* programming language was used for the Connection Machine. CUDA and OpenCL programmers can easily see the influence (C* Shapes are similar to CUDA Grids for example).

Other CM manuals: http://people.csail.mit.edu/bradley/cm5docs/
