NVIDIA BlueField-3 DPU for Optimized Kubernetes Performance

The NVIDIA BlueField networking platform, an advanced infrastructure computing platform, powers the world’s data centers.

Transform the Data Center With NVIDIA BlueField

For modern data centers and supercomputing clusters, NVIDIA BlueField networking technology drives unprecedented innovation. By combining powerful compute with software-defined, hardware-accelerated networking, storage, and security, BlueField provides a secure, accelerated infrastructure for every application in any environment, ushering in a new era of accelerated computing and artificial intelligence (AI).

BlueField in the News

NVIDIA and F5 Use NVIDIA BlueField-3 DPUs to Boost Sovereign AI Clouds

NVIDIA BlueField-3 DPUs work with F5 BIG-IP Next for Kubernetes to offload data workloads, increasing AI efficiency and strengthening security.

NVIDIA GB200 NVL72 Platforms Arrive With NVIDIA BlueField-3 DPUs

Flagship rack-scale solutions built on the NVIDIA Grace Blackwell accelerator and NVIDIA BlueField-3 networking deliver data processing improvements that can benefit the most compute-intensive applications.

NVIDIA’s New DGX SuperPOD Architecture, Built With NVIDIA BlueField-3 DPUs and DGX GB200 Systems

At the core of the Blackwell-powered DGX SuperPOD architecture, DGX GB200 systems use NVIDIA BlueField-3 DPUs to provide high-performance storage access and next-generation networking.

Examine NVIDIA’s BlueField Networking Platform Portfolio

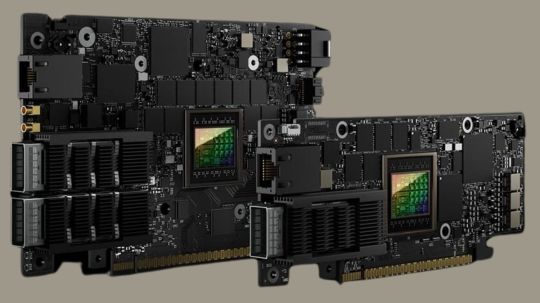

NVIDIA BlueField-3 DPU

The 400Gb/s NVIDIA BlueField-3 DPU infrastructure computing platform performs software-defined networking, storage, and cybersecurity at line rate. BlueField-3 combines powerful processing, fast networking, and flexible programmability to provide software-defined, hardware-accelerated solutions for the most demanding applications. BlueField-3 is redefining what’s possible for accelerated AI, hybrid cloud, high-performance computing, and 5G wireless networks.

NVIDIA BlueField-3 SuperNIC

The NVIDIA BlueField-3 SuperNIC is a novel network accelerator designed specifically to boost hyperscale AI workloads. Built for network-intensive, massively parallel computing, the BlueField-3 SuperNIC optimizes peak AI workload efficiency by providing up to 400Gb/s of remote direct-memory access (RDMA) over Converged Ethernet (RoCE) connectivity between GPU servers. By enabling secure, multi-tenant data center environments with predictable, isolated performance across jobs and tenants, the BlueField-3 SuperNIC is ushering in a new age of AI cloud computing.

NVIDIA BlueField-2 DPU

The NVIDIA BlueField-2 DPU brings cutting-edge efficiency, security, and acceleration to every host. For applications including software-defined networking, storage, security, and management, BlueField-2 combines the capabilities of the NVIDIA ConnectX-6 Dx with programmable Arm cores and hardware offloads. Thanks to its enhanced performance, stronger security, and lower total cost of ownership for cloud computing platforms, BlueField-2 lets enterprises efficiently build and run virtualized, containerized, and bare-metal infrastructure at scale.

NVIDIA DOCA

Use the NVIDIA DOCA software development kit to quickly build applications and services for the NVIDIA BlueField networking platform and unlock data center innovation.

Networking in the AI Era

NVIDIA Ethernet SuperNICs are a new class of network accelerators designed specifically to boost network-intensive, massively distributed AI workloads.

Deploy and Run NVIDIA AI Clouds Securely

NVIDIA AI systems are powered by NVIDIA BlueField-3 DPUs.

Does Your Data Center Network Need to Be Updated?

Data center networks are often upgraded when new servers or applications are added to the infrastructure. But even when new server and application architectures make an upgrade necessary, there are other factors to consider. Discover the three questions to ask when deciding whether your network needs an update.

Secure Next-Generation Apps Using the BlueField-2 DPU on the VMware Cloud Foundation

Integrating the NVIDIA BlueField-2 DPU into the next-generation VMware Cloud Foundation yields a robust enterprise cloud platform with high levels of security, operational efficiency, and return on investment. It is a secure, GPU- and DPU-accelerated architecture for the modern enterprise private cloud built on VMware. The accelerators enable stronger security, lower TCO, higher performance, and new capabilities that were previously out of reach.

Learn about DPU-Based Hardware Acceleration from a Software Point of View

Although data processing units (DPUs) increase data center efficiency, low-level programming requirements have hampered their widespread adoption. NVIDIA’s DOCA software framework removes this barrier by abstracting the programming of BlueField DPUs. Listen to Bob Wheeler, an analyst at the Linley Group, discuss how DOCA and CUDA will let users program future integrated DPU+GPU technology.

Use the Cloud-Native Architecture from NVIDIA for Secure, Bare-Metal Supercomputing

Driven by high-performance computing (HPC) and AI, supercomputers have entered widespread commercial use. They now serve as the primary data processing tools for research, scientific breakthroughs, and even new product development. Designing a supercomputer architecture has two goals: minimizing the factors that degrade performance and, where possible, accelerating application performance.

Explore the Benefits of BlueField

Peak AI Workload Efficiency

With configurable congestion management, direct data placement, GPUDirect RDMA, and robust RoCE networking, BlueField creates a fast, efficient network architecture for AI.

Security From the Perimeter to the Server

BlueField enables a zero-trust, security-everywhere architecture that extends security beyond the data center perimeter to the edge of every server.

Data Storage for Growing Workloads

With NVMe over Fabrics (NVMe-oF), GPUDirect Storage, encryption, elastic storage, data integrity, decompression, and deduplication, BlueField delivers high-performance storage access with remote-storage latencies competitive with direct-attached storage.

High-Performance Cloud Networking

With up to 400Gb/s of Ethernet and InfiniBand connectivity for both traditional and modern workloads, BlueField is a powerful cloud infrastructure processor that frees host CPU cores to run applications instead of infrastructure tasks.

F5 and NVIDIA Turbocharge the Efficiency and Security of Sovereign AI Clouds

NVIDIA BlueField-3 DPUs run F5 BIG-IP Next for Kubernetes to improve AI security and efficiency.

NVIDIA and F5 are combining NVIDIA BlueField-3 DPUs with F5 BIG-IP Next for Kubernetes for application delivery and security, in order to increase AI efficiency and security in sovereign cloud environments.

The partnership aims to accelerate the rollout of AI applications while helping governments and businesses manage sensitive data. IDC predicts the sovereign cloud market will reach $250 billion by 2027, and ABI Research expects the foundation model market to reach $30 billion by the same year.

Sovereign clouds are designed to meet stringent data localization and privacy standards. They are essential for government organizations and for sectors that handle sensitive data, such as financial services and telecommunications.

By providing a safe and compliant AI networking infrastructure, F5 BIG-IP Next for Kubernetes installed on NVIDIA BlueField 3 DPUs enables companies to embrace cutting-edge AI capabilities without sacrificing data privacy.

By offloading tasks such as load balancing, routing, and security to the BlueField-3 DPU, F5 BIG-IP Next for Kubernetes efficiently routes AI requests to LLM instances while using less energy. This maximizes GPU utilization while ensuring scalable AI performance.
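The article does not describe how F5 BIG-IP Next for Kubernetes actually distributes requests. As a rough illustration of the load-balancing task being offloaded, here is a minimal Python sketch of a least-connections policy that sends each inference request to the LLM instance with the fewest requests in flight. The policy choice, the `Backend` and `LeastConnectionsBalancer` classes, and the instance names are hypothetical; this is not F5’s API or algorithm.

```python
from dataclasses import dataclass, field

# Hypothetical illustration of request routing; not F5 BIG-IP's API or algorithm.


@dataclass
class Backend:
    """One hypothetical LLM inference instance behind the load balancer."""
    name: str
    active: int = 0  # requests currently in flight


@dataclass
class LeastConnectionsBalancer:
    """Route each request to the backend with the fewest in-flight requests."""
    backends: list[Backend] = field(default_factory=list)

    def acquire(self) -> Backend:
        # min() returns the first backend on ties, so ties resolve in list order.
        backend = min(self.backends, key=lambda b: b.active)
        backend.active += 1
        return backend

    def release(self, backend: Backend) -> None:
        # Call when the response has been returned to the client.
        backend.active -= 1


lb = LeastConnectionsBalancer([Backend("llm-0"), Backend("llm-1"), Backend("llm-2")])
first = lb.acquire()
second = lb.acquire()
lb.release(first)
third = lb.acquire()
print(first.name, second.name, third.name)  # llm-0 llm-1 llm-0
```

Least connections is only one common policy; a production load balancer would also account for health checks and per-request cost, which this sketch does not model.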

By managing AI workloads more efficiently, the partnership will also benefit NVIDIA NIM microservices, which accelerate the deployment of foundation models.

NVIDIA and F5’s integrated solutions offer greater security and efficiency, which are critical for companies moving to cloud-native infrastructure. These developments let enterprises in highly regulated industries scale AI systems securely while complying with the strictest data protection regulations.

NVIDIA BlueField-3 DPU Price

Pricing for the NVIDIA BlueField-3 DPU varies by model and features. BlueField-3 B3210E E-Series FHHL models with 100GbE connectivity cost about $2,027, while higher-performance models such as the B3140L with 400GbE cost about $2,874. Higher-end variants such as the BlueField-3 B3220 P-Series cost about $3,053. These prices sometimes reflect discounts from the original retail price, which may be considerably higher; actual prices depend on the seller and configuration.
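For the two models whose port speeds are quoted, a quick cost-per-bandwidth calculation makes the comparison concrete. This Python sketch uses the listed prices, which vary by seller, and assumes the quoted speed is the card’s total bandwidth (a multi-port configuration would change the denominator). The B3220 is left out because no speed is quoted for it.

```python
def cost_per_gbps(price_usd: float, gbps: float) -> float:
    """Dollars per Gb/s of quoted port speed."""
    return price_usd / gbps


# Prices and speeds as quoted in the article. Assumption: the quoted speed is
# the card's total bandwidth; multi-port cards would change the denominator.
QUOTES = {
    "B3210E (100GbE)": (2027, 100),
    "B3140L (400GbE)": (2874, 400),
}

for model, (price, speed) in QUOTES.items():
    print(f"{model}: ${cost_per_gbps(price, speed):.2f} per Gb/s")
```

On these figures the 400GbE card costs about $847 more, yet roughly 35% as much per Gb/s (about $7.2 versus $20.3).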

Read more on Govindhtech.com

Tech Breakdown: What Is a SuperNIC? Get the Inside Scoop!

Generative AI is the latest development in the rapidly evolving digital world, and one of the innovations that makes it possible goes by a relatively new name: the SuperNIC.

What Is a SuperNIC?

SuperNICs are a new class of network accelerators designed to accelerate hyperscale AI workloads in Ethernet-based clouds. Using remote direct memory access (RDMA) over Converged Ethernet (RoCE) technology, they provide ultra-fast network connectivity for GPU-to-GPU communication, with throughput of up to 400Gb/s.

SuperNICs combine the following unique features:

High-speed packet reordering, which ensures that data packets are received and processed in the same order in which they were originally sent, preserving the sequential integrity of the data flow.

Advanced congestion control, which uses real-time telemetry data and network-aware algorithms to manage and prevent congestion in AI networks.

Programmable compute on the input/output (I/O) path, which lets the network infrastructure of AI cloud data centers be customized and extended.

A power-efficient, low-profile design that handles AI workloads efficiently within constrained power budgets.

Optimization for full-stack AI, encompassing system software, communication libraries, application frameworks, networking, computing, and storage.
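The SuperNIC performs packet reordering in hardware, and its internal design is not described here. To show what in-order delivery means, here is a minimal Python sketch of a generic software reorder buffer: packets that arrive early wait in a min-heap keyed by sequence number until the gap before them is filled. The `ReorderBuffer` class is hypothetical, and the sketch ignores sequence-number wraparound, timeouts, and retransmission.

```python
import heapq


class ReorderBuffer:
    """Release packets strictly in sequence-number order, buffering early arrivals.

    Hypothetical software illustration; it ignores sequence-number wraparound,
    loss, and retransmission, which real hardware must handle.
    """

    def __init__(self, first_seq: int = 0) -> None:
        self.next_seq = first_seq  # next sequence number we may release
        self.pending: list[tuple[int, bytes]] = []  # min-heap of early packets

    def receive(self, seq: int, payload: bytes) -> list[bytes]:
        """Accept one packet; return any payloads that are now deliverable in order."""
        if seq < self.next_seq:
            return []  # duplicate of a packet already delivered: drop it
        heapq.heappush(self.pending, (seq, payload))
        delivered = []
        # Drain the heap while its smallest sequence number is not ahead of us.
        while self.pending and self.pending[0][0] <= self.next_seq:
            top_seq, data = heapq.heappop(self.pending)
            if top_seq == self.next_seq:
                delivered.append(data)
                self.next_seq += 1
            # Entries below next_seq are buffered duplicates; discard them.
        return delivered


rb = ReorderBuffer()
delivered = []
for seq, data in [(1, b"B"), (0, b"A"), (3, b"D"), (2, b"C")]:
    delivered.extend(rb.receive(seq, data))
print(delivered)  # [b'A', b'B', b'C', b'D']
```

A heap keeps the lowest buffered sequence number at the front, so each check for "is the next packet here yet?" is a constant-time peek.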

NVIDIA recently unveiled the world’s first SuperNIC designed specifically for AI computing, built on the BlueField-3 networking platform. It is part of the NVIDIA Spectrum-X platform, where it integrates seamlessly with the Spectrum-4 Ethernet switch system.

Together, the NVIDIA Spectrum-4 switch system and the BlueField-3 SuperNIC form an accelerated computing fabric optimized for AI applications. Spectrum-X consistently delivers higher network efficiency than traditional Ethernet environments.

Yael Shenhav, vice president of DPU and NIC products at NVIDIA, stated, “In a world where AI is driving the next wave of technological innovation, the BlueField-3 SuperNIC is a vital cog in the machinery.” “SuperNICs are essential components for enabling the future of AI computing because they guarantee that your AI workloads are executed with efficiency and speed.”

The Changing Environment of Networking and AI

Generative AI and large language models are driving a seismic shift in artificial intelligence. These powerful technologies have opened new avenues and enabled computers to take on new tasks.

GPU-accelerated computing plays a critical role in AI development by processing massive amounts of data, training huge AI models, and enabling real-time inference. This increased computing capacity has created opportunities, but it has also put Ethernet cloud networks to the test.

Traditional Ethernet, the internet’s foundational technology, was designed to connect loosely coupled applications and provide broad compatibility. It was not designed for the demanding requirements of modern AI workloads, which involve moving large volumes of data quickly, tightly coupled parallel processing, and unusual communication patterns, all of which require optimal network connectivity.

Basic network interface cards (NICs) were designed with interoperability, universal data transfer, and general-purpose computing in mind. They were never intended to handle the unique challenges posed by the heavy processing demands of AI applications.

Standard NICs lack the features and capabilities needed for efficient data transfer, low latency, and the predictable performance that AI tasks require. SuperNICs, by contrast, are purpose-built for modern AI workloads.

Benefits of SuperNICs in AI Computing Environments

Data processing units (DPUs) deliver high-throughput, low-latency network connectivity along with many other advanced features. Since their introduction in 2020, DPUs have become increasingly common in cloud computing, mainly because they can offload, accelerate, and isolate data center infrastructure processing.

Although SuperNICs and DPUs share many features and capabilities, SuperNICs are specifically optimized to accelerate networks for AI.

The performance of distributed AI training and inference communication flows depends heavily on available network bandwidth. Known for their sleek design, SuperNICs scale more effectively than DPUs and can deliver up to 400Gb/s of network bandwidth per GPU.

When GPUs and SuperNICs are paired 1:1 in a system, AI workload efficiency can improve significantly, leading to higher productivity and better business outcomes.

SuperNICs are designed solely to accelerate networking for AI cloud computing. As a result, a SuperNIC requires less compute than a DPU, which needs substantial processing power to offload applications from a host CPU.

The reduced compute requirements also mean lower power consumption, which is especially important in systems with up to eight SuperNICs.
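The per-system bandwidth implied by a 1:1 GPU-to-SuperNIC pairing is simple multiplication. This Python sketch assumes an eight-GPU server with one 400Gb/s SuperNIC per GPU, matching the up-to-eight-SuperNIC systems described in the text; the constant and function names are illustrative only.

```python
SUPERNIC_GBPS = 400   # per-SuperNIC line rate quoted in the article
GPUS_PER_SYSTEM = 8   # assumption: eight-GPU server, one SuperNIC per GPU (1:1)


def aggregate_gbps(gpus: int, per_nic_gbps: int = SUPERNIC_GBPS) -> int:
    """Total network bandwidth when each GPU is paired with its own SuperNIC."""
    return gpus * per_nic_gbps


total = aggregate_gbps(GPUS_PER_SYSTEM)
print(total, "Gb/s =", total / 1000, "Tb/s =", total / 8, "GB/s")
# 3200 Gb/s = 3.2 Tb/s = 400.0 GB/s
```

That is 8 × 400Gb/s = 3,200Gb/s, or 3.2Tb/s (400GB/s) of aggregate network bandwidth per system, before any protocol overhead.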

Another distinguishing feature of the SuperNIC is its dedicated AI networking capability. When tightly integrated with an AI-optimized NVIDIA Spectrum-4 switch, it provides optimized congestion control, adaptive routing, and out-of-order packet handling. These advanced technologies accelerate Ethernet AI cloud environments.
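The article does not specify the congestion control algorithm that the SuperNIC and Spectrum-4 use. As a generic illustration of signal-driven rate control, here is a minimal Python sketch of additive-increase/multiplicative-decrease (AIMD). The sender halves its rate when a feedback interval reports congestion (for example, ECN marks or telemetry) and otherwise climbs back toward line rate in fixed steps. The class name, step size, and decrease factor are assumptions for illustration; production RoCE schemes such as DCQCN are considerably more elaborate.

```python
from dataclasses import dataclass


@dataclass
class AimdRateController:
    """Simplified AIMD sender-rate controller driven by a per-interval congestion signal.

    Parameter values are illustrative assumptions, not SuperNIC or Spectrum-X settings.
    """
    line_rate_gbps: float = 400.0
    rate_gbps: float = 400.0
    increase_gbps: float = 10.0   # additive step when the path looks clear
    decrease_factor: float = 0.5  # multiplicative cut when congestion is signalled

    def on_interval(self, congestion_marked: bool) -> float:
        """Update and return the sending rate after one feedback interval."""
        if congestion_marked:
            self.rate_gbps *= self.decrease_factor
        else:
            # Never exceed the link's line rate.
            self.rate_gbps = min(self.line_rate_gbps, self.rate_gbps + self.increase_gbps)
        return self.rate_gbps


ctl = AimdRateController()
rates = [ctl.on_interval(marked) for marked in (True, True, False, False)]
print(rates)  # [200.0, 100.0, 110.0, 120.0]
```

Cutting multiplicatively and recovering additively is what lets competing flows converge toward a fair share instead of oscillating, which is why many congestion control schemes build on this pattern.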

Transforming Cloud Computing With AI

The NVIDIA BlueField-3 SuperNIC is essential for AI-ready infrastructure because of its many advantages.

Maximum efficiency for AI workloads: The BlueField-3 SuperNIC is well suited to AI workloads because it was designed specifically for network-intensive, massively parallel computing. It ensures that AI tasks run efficiently, without bottlenecks.

Consistent and predictable performance: In multi-tenant data centers, where many jobs run concurrently, the BlueField-3 SuperNIC ensures that each job and tenant receives isolated, predictable performance that is unaffected by other network activity.

Secure multi-tenant cloud infrastructure: Security is a top priority for data centers that handle sensitive data. The BlueField-3 SuperNIC maintains high levels of security, allowing multiple tenants to coexist with isolated data and processing.

Broad network infrastructure: The BlueField-3 SuperNIC is highly versatile and adapts easily to a wide range of network infrastructure requirements.

Wide compatibility with server manufacturers: The BlueField-3 SuperNIC fits seamlessly into most enterprise-class servers without drawing excessive power in the data center.

Read more on Govindhtech.com

