#TestPyramid


Text

Test Applications: Foundations and Implementation of Unit, Integration, and Functional Testing

In today’s fast-paced development environments, testing is no longer a luxury — it’s a non-negotiable necessity. With increasing complexity in microservices, APIs, and user expectations, organizations must ensure that applications function as intended across every layer. Comprehensive application testing provides the confidence to release faster, scale safely, and reduce technical debt.

In this blog, we’ll walk through the foundational principles of application testing and explore the different levels — unit, integration, and functional — each serving a unique purpose in the software delivery lifecycle.

🔍 Why Application Testing Matters

Application testing ensures software reliability, performance, and quality before it reaches the end users. A robust testing strategy leads to:

Early bug detection

Reduced production incidents

Faster feedback cycles

Higher developer confidence

Improved user experience

In essence, testing allows teams to fail fast and fix early, which is vital in agile and DevOps workflows.

🧱 Core Principles of Effective Application Testing

Before diving into types of testing, it’s essential to understand the guiding principles behind a solid testing framework:

1. Test Early and Often

Integrate testing as early as possible (shift-left approach) to catch issues before they become expensive to fix.

2. Automation is Key

Automated tests increase speed and consistency, especially across CI/CD pipelines.

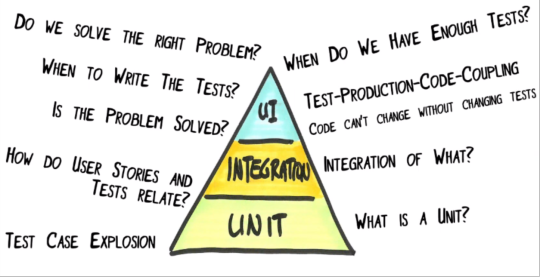

3. Clear Separation of Test Layers

Structure tests by scope: small, fast unit tests at the bottom, and more complex functional tests at the top.

4. Repeatability and Independence

Tests should run reliably in any environment and not depend on each other to pass.

5. Continuous Feedback

Testing should provide immediate insights into what’s broken and where, ideally integrated with build systems.

🧪 Levels of Application Testing

🔹 1. Unit Testing – The Building Block

Unit testing focuses on testing the smallest pieces of code (e.g., functions, methods) in isolation.

Purpose: To validate individual components without relying on external dependencies.

Example Use Case: Testing a function that calculates tax percentage based on income.

Benefits:

Fast and lightweight

Easy to maintain

Pinpoints issues quickly

Tools: JUnit (Java), pytest (Python), Jest (JavaScript), xUnit (.NET)
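As a minimal sketch of the tax example above, assuming a hypothetical `tax_rate` function with made-up income brackets (neither the name nor the thresholds come from any real tax code), a pytest-style unit test could look like this:

```python
def tax_rate(income: float) -> float:
    """Return a tax percentage for the given income (hypothetical brackets)."""
    if income < 0:
        raise ValueError("income must not be negative")
    if income <= 10_000:
        return 0.0
    if income <= 50_000:
        return 10.0
    return 20.0


def test_tax_rate_at_bracket_boundaries():
    # Boundary values are where off-by-one mistakes tend to hide.
    assert tax_rate(0) == 0.0
    assert tax_rate(10_000) == 0.0
    assert tax_rate(10_001) == 10.0
    assert tax_rate(50_000) == 10.0
    assert tax_rate(50_001) == 20.0


def test_tax_rate_rejects_negative_income():
    try:
        tax_rate(-1)
    except ValueError:
        return
    raise AssertionError("expected ValueError for negative income")
```

The function touches no database, network, or clock, which is what keeps a test like this fast and repeatable.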

🔹 2. Integration Testing – Ensuring Components Work Together

Integration testing verifies how different modules or services interact with each other.

Purpose: To ensure multiple parts of the application work cohesively, especially when APIs, databases, or third-party services are involved.

Example Use Case: Testing the interaction between the frontend and backend API for a login module.

Benefits:

Identifies issues in communication or data flow

Detects misconfigurations between components

Tools: Postman, Spring Test, Mocha, TestContainers
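To illustrate the idea with something self-contained, the sketch below narrows the login example to a service and its database rather than a frontend and an API. The `UserRepository` and `LoginService` classes and the table schema are hypothetical, and passwords are stored in plain text only to keep the example short.

```python
import sqlite3


class UserRepository:
    """Stores users in a SQL database (hypothetical schema)."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS users (name TEXT PRIMARY KEY, password TEXT)"
        )

    def add(self, name: str, password: str) -> None:
        # Plain-text passwords are for brevity only; a real system would hash them.
        self.conn.execute("INSERT INTO users VALUES (?, ?)", (name, password))

    def password_for(self, name: str):
        row = self.conn.execute(
            "SELECT password FROM users WHERE name = ?", (name,)
        ).fetchone()
        return row[0] if row else None


class LoginService:
    """Checks credentials against the repository."""

    def __init__(self, repo: UserRepository) -> None:
        self.repo = repo

    def login(self, name: str, password: str) -> bool:
        stored = self.repo.password_for(name)
        return stored is not None and stored == password


def test_login_against_real_database():
    # A real (in-memory) SQLite database, not a mock: the SQL actually runs.
    conn = sqlite3.connect(":memory:")
    service = LoginService(UserRepository(conn))
    service.repo.add("alice", "s3cret")

    assert service.login("alice", "s3cret") is True
    assert service.login("alice", "wrong") is False
    assert service.login("bob", "s3cret") is False
    conn.close()
```

Unlike a unit test, this would fail if the SQL or the schema were wrong, which is exactly the kind of communication problem integration tests are meant to catch.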

🔹 3. Functional Testing – Validating Business Requirements

Functional testing evaluates the application against user requirements and ensures that it performs expected tasks correctly.

Purpose: To confirm the system meets functional expectations from an end-user perspective.

Example Use Case: Testing a user’s ability to place an order through an e-commerce cart and payment system.

Benefits:

Aligns testing with real-world use cases

Detects user-facing defects

Often automated using scripts for regression checks

Tools: Selenium, Cypress, Cucumber, Robot Framework

🧰 Putting It All Together – A Layered Testing Strategy

A well-rounded testing strategy typically includes:

70% unit tests – quick feedback and bug detection

20% integration tests – ensure modules talk to each other

10% functional/UI tests – mimic user behavior

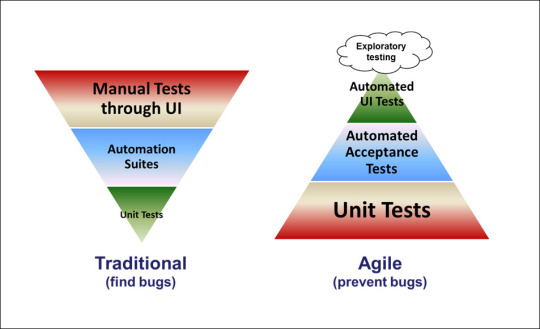

This approach, often referred to as the Testing Pyramid, ensures balanced test coverage without bloating the test suite.
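As a rough worked example of the ratio, the helper below (a hypothetical `pyramid_split` function, not part of any tool) splits a given number of tests 70/20/10. The percentages are a guideline rather than a rule, so treat the output as a target to steer towards.

```python
def pyramid_split(total_tests: int) -> dict:
    """Split a test budget 70/20/10 across unit, integration, and functional tests."""
    unit = total_tests * 70 // 100
    integration = total_tests * 20 // 100
    # Functional tests take the remainder so the three parts always sum to the total.
    functional = total_tests - unit - integration
    return {"unit": unit, "integration": integration, "functional": functional}


print(pyramid_split(200))
# {'unit': 140, 'integration': 40, 'functional': 20}
```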

🚀 Conclusion

Comprehensive application testing isn’t just about writing test cases — it’s about building a culture of quality and reliability. From unit testing code logic to validating real-world user journeys, every layer of testing contributes to software that’s stable, scalable, and user-friendly.

As you continue to build and ship applications, remember: “Test not because you expect bugs, but because you know where they hide.”

For more information, follow Hawkstack Technologies.

#ApplicationTesting #UnitTesting #IntegrationTesting #FunctionalTesting #DevOps #SoftwareQuality #TestingStrategy #TestAutomation #SoftwareEngineering #TestPyramid


Text

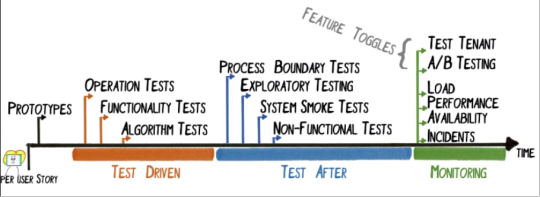

What comes after the Agile Test Pyramid? Thanks @ursenzler!

Urs introduces the below key questions in Software Development and slowly answers all of them in 45 minutes.

I like the acronym “FIRSTRECC”:

Fast => fast feedback; you only run tests if they are fast

Isolated => one test tests one thing, no cross-talk

Repeatable => same outcome over and over again

Self-Validating => green or red

Timely => written at the right time. Before: TDD, on the process boundary. Later: increase safety, process boundary tests. Bug fixing: Defect Driven Testing. The later a test is written, the harder it gets.

Refactoring-friendly => code changes without changes to tests

Extensible => adding new tests without changing existing tests

Changeable => keep tests clean and simple

Concise => easy to read and understand

I found it interesting to learn what Urs would “test after”

I would try to write the “Process Boundary Tests” upfront as well, that is, test-driven.

See the full video here (44mins, in German)

Urs Enzler: Die agile Testpyramide vNext - Agile Bodensee 2017 https://www.youtube.com/watch?v=-35GpOJnjmM


Text

The serverless approach to testing is different and may actually be easier

Discovering the inherent advantages for testing smaller bits of uncoupled logic requires a different approach — and tools

I’ve been thinking a lot about testing recently. At work we have recently increased the number of our lambda functions by a significant amount due to additions of client applications and addition of features. This isn’t a massive deal to develop new features, but there has been something that has been beginning to bug me (if you’ll excuse the pun).

Testing is a “good thing”

I’m all for creating tests. Whether it’s true “Test Driven Development” — or whatever the testing methodology du jour is now — is immaterial to me. Sometimes in a startup, you just have to deploy something fast, and write a test later (I know, I know — but I’m just giving people who’ve never worked in a startup the real world scenarios). And sometimes, the tests never get written because you think that your use case is already caught (it isn’t).

Often tests get written because of bugs occurring in the production environment. This will always occur unless you have endless money and time — which you won’t in a startup.

Tests are vitally important.

But if you’re using the prevailing testing wisdom — serverless is hard.

Testing interactions with “service-full” architecture

Serverless architecture uses a lot of services — hence why some prefer to call the architecture “service-full” instead of serverless. Those services are essentially elements of an application that are independent of your testing regime.

An external element.

A good external service will be tested for you. And that’s really important. Because you shouldn’t have to test the service itself. You only really need to test the effect of your interaction with it.

Here’s an example …

Let’s say you have a Function as a Service (e.g. Lambda function) and you utilise a database service (e.g. DynamoDB). You’ll want to test the interaction with the database service from the function to ensure your data is saved/read correctly, and that your function can deal with the responses from the service.

Now, the above scenario is relatively easy because you can utilise DynamoDB from your local machine, and run unit tests to check the values stored in the database. But have you spotted something with this scenario? It’s not the live service — it’s a copy of it. But the API is the same. So, as long as the API doesn’t change we’re ok, right?

To be honest, I’ve reached a point where I’m realising that if we use an AWS service, the likelihood is that AWS have done a much better job of testing it than I have. So we mock the majority of our interactions with AWS (and other) services in unit tests. This makes it relatively simple to develop a function of logic and unit test it — with mocks for services required.
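A minimal sketch of that approach, using Python's standard `unittest.mock`. The `save_order` function is hypothetical, and the `put_item(Item=...)` call is modelled on the shape of boto3's DynamoDB table interface; no AWS library is imported or contacted here.

```python
from unittest.mock import Mock


def save_order(table, order_id: str, total: int) -> dict:
    """Hypothetical Lambda-style logic: validate the order, then store it."""
    if total <= 0:
        raise ValueError("total must be positive")
    item = {"order_id": order_id, "total": total}
    table.put_item(Item=item)
    return item


def test_save_order_writes_item():
    table = Mock()  # stands in for the database service client
    save_order(table, "o-1", 250)
    table.put_item.assert_called_once_with(Item={"order_id": "o-1", "total": 250})


def test_save_order_rejects_invalid_total_without_writing():
    table = Mock()
    try:
        save_order(table, "o-2", 0)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError")
    table.put_item.assert_not_called()
```

The tests check only our side of the interaction: what we send to the service and when we refuse to send anything. Whether the service stores the item correctly is left to its provider.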

This is similar to using a framework such as Rails. You shouldn’t be testing that the ORM works. That’s the ORM maintainers’ job, not yours. So it stands to reason that if a service provides an interface and documentation about how the interface works, then it should be fine, right?

Hopefully…

What about other parts of testing — beyond unit tests?

Here’s where there is a problem with serverless… sort of. Unit tests are easy with a FaaS function because the logic is often tiny. In my view there is a tendency towards over-reliance on mocks, but it works.

All other forms of testing are hard. In fact, I’d say we’ve possibly moved into needing a different paradigm to discuss this.

Through years of building monolithic applications, we’ve got absolutely obsessed that certain types of testing are absolutely vital — and if we don’t have them we’re “wrong”.

So let’s just step back a bit.

We’ve actually been having the discussion about distributed systems and testing for a while. The microservice patterns have shown us that it’s not always appropriate and often expensive to try to test everything in the way we do a monolith.

The key for integration testing with a microservice pattern is that you test the microservice and its integration with external components. Which is interesting, because you’re still imagining some sort of separation here.

In Lambda, in this context, every single Lambda needs to be treated as a microservice then for testing. Which means that your function’s unit tests (with mocks) need to be expanded to integration tests by removing the mocks, and using the actual service or stubbing the service in some way.
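One way to read "stubbing the service" is to swap the mock for a small in-memory fake that keeps state, so the test exercises a read-modify-write round trip instead of asserting on individual calls. The `FakeTable` class and `rename_user` function below are hypothetical; the method names and the response shape (`{"Item": ...}`, or no `Item` key when nothing is found) are assumptions modelled on boto3's DynamoDB table interface.

```python
class FakeTable:
    """In-memory stand-in that keeps state, unlike a bare mock."""

    def __init__(self, key_name: str) -> None:
        self.key_name = key_name
        self.items = {}

    def put_item(self, Item: dict) -> None:
        self.items[Item[self.key_name]] = dict(Item)

    def get_item(self, Key: dict) -> dict:
        item = self.items.get(Key[self.key_name])
        return {"Item": dict(item)} if item is not None else {}


def rename_user(table, user_id: str, new_name: str) -> bool:
    """Hypothetical function logic: read, modify, write back."""
    response = table.get_item(Key={"user_id": user_id})
    if "Item" not in response:
        return False
    user = response["Item"]
    user["name"] = new_name
    table.put_item(Item=user)
    return True


def test_rename_user_round_trip():
    table = FakeTable(key_name="user_id")
    table.put_item(Item={"user_id": "u-1", "name": "Ada"})

    assert rename_user(table, "u-1", "Grace") is True
    assert table.get_item(Key={"user_id": "u-1"})["Item"]["name"] == "Grace"
    assert rename_user(table, "missing", "Nobody") is False
```

Because `rename_user` only depends on the two methods, the same test body could in principle be pointed at a real table in a test account to turn it into a true integration test.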

Unfortunately, not every external service is easily testable in this way. Not every service provides a test interface for you to work with, nor do some services make it easy to stub them. I would suggest that if a service can’t provide you with a relatively easy way to test the interface in reality, then you should consider using another one.

This is especially true when a transaction is financial. You don’t want a test to actually cost you any real money at this point!

Going beyond unit and single function integration tests

For me, the easiest way to test a serverless system as a whole is to generate a separate system in a non-linked AWS account (or other cloud provider). Then make every external service essentially link to a “test” service, or as best we can limit our exposure to cost.

This is how I’ve approached it — and it relies on Infrastructure as Code to make it happen. Hence, the use of something like Terraform or CloudFormation.

But interestingly, when you go beyond a single function like this in a microservice approach, you get onto things like component testing and then system testing. Essentially testing is about increasing the test boundary each time. Start with a small test boundary and work out.

Unit testing, then integration, and so on …

But interestingly, our unit tests are doing the job of testing the boundary of each function reasonably well, plus doing the unit test, and also testing the function’s relation to external services reasonably well. So the next step is to test a combination of the services together.

But since we’re using external services for the majority of our interactions, and not invoking functions from within functions very often, then the test boundaries are actually relatively uncoupled.

Hmm… so basically, the more uncoupled a function’s logic is from other functions’ logic, the closer the test boundary is as we move outwards in tests.

So after good unit and integration tests on a Function by Function basis, what comes next? Is it simply end to end testing next? This becomes really interesting, since that means testing the entire “distributed system” in a staging style environment with reasonable data.

Wait! Did we just … ?

Basically, what seems to happen with a Function as a Service approach is that the suite of tests seems a lot simpler than what you would normally need with a monolithic or even a microservice approach.

The test boundaries for unit testing a FaaS function appear to be very close to an integration test versus a component test within a microservice approach.

Quick Caveat: if you do lots of function to function invocations, then you are coupling those functions and then test boundaries will change. Functions invoking functions make a separate test boundary to worry about.

Which comes back to something else very interesting. If you build functions, and develop an event driven approach utilising third party services (e.g. SNS, DynamoDB Triggers, Kinesis, SQS in the AWS world) as the event connecting “glue” — then you may be able to essentially limit yourself to testing the functions separately and then the system.
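Testing a function separately in an event-driven setup can be as simple as handing it a synthetic event. The sketch below assumes a hypothetical `handler` that reads order IDs from queued messages; the `Records` and `body` fields follow the general shape of an SQS-triggered Lambda event, and real events carry many more fields that are omitted here.

```python
import json


def handler(event: dict, context=None) -> list:
    """Hypothetical function: collect order IDs from queued messages."""
    order_ids = []
    for record in event.get("Records", []):
        message = json.loads(record["body"])
        order_ids.append(message["order_id"])
    return order_ids


def make_queue_event(*messages: dict) -> dict:
    """Build a minimal event in the shape of a queue-triggered invocation."""
    return {"Records": [{"body": json.dumps(m)} for m in messages]}


def test_handler_reads_every_record():
    event = make_queue_event({"order_id": "o-1"}, {"order_id": "o-2"})
    assert handler(event) == ["o-1", "o-2"]


def test_handler_tolerates_empty_event():
    assert handler({}) == []
```

The queue itself never appears in the test. The service acts as the glue, and only the event shape couples the function to whatever produced the message.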

Hmm … so testing is simpler?

Not exactly, but close.

I would suggest the system testing is harder. If you’re purely using an API Gateway with Lambdas behind it, then you can use third party tools to test the HTTP endpoints and build a test suite that way. It’s relatively understood.

But if you’re doing a lot of internal event triggering, such as DynamoDB triggers setting off a chain of events and multiple Lambdas, then you have to do something different. This form of testing is harder, but since everything is a service, including the Lambda, it should be relatively simple to do.

The person that builds the tool for this kind of system testing with serverless will do very well. At present, the CI/CD tools we have and testing tools around it are not (quite) good enough.

Testing and Serverless is different

When I started thinking about this article, I was expecting to figure out a lot of things around how to fit better testing regimes into our workflow.

As this article has come together, what’s happened is an identification of why serverless approaches are different to monolithic and microservice approaches. As a result, I’ve realised the inherent advantages for testing of smaller bits of uncoupled logic.

You can’t just drag your old “Testing for Monoliths Toolbox” into the serverless world and expect it to work any more.

Testing in serverless is different.

Testing in serverless may actually be easier.

In fact, testing in Serverless may actually be easier to maintain as well.

But we’re currently lacking the testing tools to really drive home the value. I’m looking forward to when they arrive.

Some final thoughts

I’m often a reluctant test writer. I like to hack and find things out as I go before building things to “work”. I’ve never been one of the kinds of people to force testing in any scenario so I may be missing the point in some of this. There are definitely people more qualified to talk about testing than me, but these are simply thoughts on testing.

Additional Resources

Testing Strategies in a Microservice Architecture

bliki: TestPyramid

Just Say No to More End-to-End Tests

The serverless approach to testing is different and may actually be easier was originally published in A Cloud Guru on Medium, where people are continuing the conversation by highlighting and responding to this story.

from A Cloud Guru - Medium http://ift.tt/2qtKWzD


Text

79: A Crappy Team Lead @flamingmdn

Guest episode with Slava. Slava answers questions about table tennis, health, Belarusian startups, and sensory deprivation tanks.

Sponsors and support

The podcast is made possible by the support of our listeners.

We are open to advertising proposals.

Live chat with the show’s creators and listeners

Telegram Pub «Инфа100%»

Links and show notes

Slava

.NET

Continuous integration

Unit testing

BDD

Welcome to behave!

SpecFlow

Cucumber

TestPyramid

Carpal tunnel syndrome

SANTORINI orthopedic head pillow

Soap4.me

soap4all – Android App

Quote Roller

tema – Sensory deprivation

Tools of Titans: The Tactics, Routines, and Habits of Billionaires, Icons, and World-Class Performers

Stranger Things

10% happier

Recording date: 2017-03-24


Text

How do you best prepare for automating e2e tests?

First of all, e2e tests tend to be brittle because of the many steps that can go wrong. That is why you should limit the number of e2e tests (and UI tests in general). Always keep in mind that you want a test pyramid, not a test ice cream cone.

Source: https://www.agilecoachjournal.com/2014-01-28/the-agile-testing-pyramid

But for the tests that you do automate, the RCRCRC heuristic can be useful:

Source: https://www.mindomo.com/de/mindmap/rcrcrc-regression-testing-heuristic-0251bc740f3394355a283cbc1c2727f5

Of course this applies to all tests, but it is a starting point. Personally, I would talk to the product owner or the business analyst to learn what the core functionality of the application is, and to other testers to find out which parts of testing are repetitive or boring (such as filling in the login form). Manual QA should have some test scenarios from which you can infer which e2e tests involve manual content and which can be automated without much effort. It is always a matter of classification and priorities in your team: you should not try to automate everything at full force, which takes a correspondingly large amount of time, but always first automate what requires the least time.

- Ask QA which test cases take the most time to test manually

- Ask the BA/SPO which parts of the application or functionality have the greatest impact on the business side and end users

- Ask the development team which parts of the functionality are least covered by unit tests and other lower-level tests (in the test pyramid), and which parts contain the most spaghetti code and "monsters be here" code

- Based on these three criteria, assign a numeric value to the existing test cases (1-5 points for each category, where a higher number means automation is more necessary) and start with the highest-rated test cases (e.g. 15 or 14 points)
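The scoring step at the end of the list can be sketched in a few lines. The `Candidate` class, the field names, and the sample scores are all hypothetical; only the scheme itself (three categories, 1-5 points each, highest total first) comes from the text.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A manual test case being considered for automation."""
    name: str
    manual_effort: int    # from QA, 1-5
    business_impact: int  # from the BA or product owner, 1-5
    coverage_gap: int     # from the development team, 1-5

    @property
    def score(self) -> int:
        return self.manual_effort + self.business_impact + self.coverage_gap


def prioritise(candidates):
    """Return candidates ordered from most to least worth automating."""
    return sorted(candidates, key=lambda c: c.score, reverse=True)


candidates = [
    Candidate("login form", 5, 5, 4),
    Candidate("profile page", 2, 2, 3),
    Candidate("checkout", 5, 5, 5),
]
for c in prioritise(candidates):
    print(c.score, c.name)
# 15 checkout
# 14 login form
# 7 profile page
```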
