#Timeseries Prediction

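Read the full post on the web ⇨ Analysis and Application of Data Mining Technology in Library Readers' Data https://blog.pulipuli.info/2023/07/analysis-and-application-of-data-mining-technology-in-library-readers-data.html

These are the course materials from the 2021 (ROC year 110) workshop on smart library services and innovative learning held by the Library Association of the Republic of China (Taiwan). I'm keeping a record of them here.

----

# Outline

This course consists of three topics. Each topic has its own teaching materials and learning tasks. The three topics are:

- Chapter 1. The Divine Bird Leads the Way: Introduction to Weka
- Chapter 2. Seeing Through Cause and Effect: Hotspot Analysis
- Chapter 3. Seeing into the Future: Time Series Prediction

The materials for the three topics follow.

----

# Chapter 1. The Divine Bird Leads the Way: Introduction to Weka

- View the Google Slides online: https://docs.google.com/presentation/d/1gzoWOMkB4RpUsZ9q2F7oWQHsG1uof1WkMWfWaDUyUDU/edit?usp=sharing
- PowerPoint backups: GitHub, Google Drive, OneDrive, Mega, Box, MediaFire, SlideShare

This chapter covers:

1. Getting to know Weka
2. Downloading, installing, and launching Weka
3. Hands-on: attribute analysis in Weka

Materials used in this chapter:

## Weka download: 3.8.1 (Windows 64-bit)

- Other versions: Windows, Mac OS, and Linux are supported. On Windows, download the file that includes Oracle's Java VM.
- Check whether your computer is 64-bit or 32-bit; see this guide for how to tell which operating system version you have.
- Installing Weka on Mac OS: fixing the "'...' can't be opened because it is from an unidentified developer" error.

## Download LibreOffice

- https://zh-tw.libreoffice.org/download/libreoffice-still/

## Dataset

- Overdue borrowers dataset 2020

## Quiz

- Quick quiz: Getting to Know Our Readers

This is a short quiz. You'll see the answers once you finish the hands-on exercise.

# Chapter 2. Seeing Through Cause and Effect: Hotspot Analysis

----

Continue reading ⇨ Analysis and Application of Data Mining Technology in Library Readers' Data https://blog.pulipuli.info/2023/07/analysis-and-application-of-data-mining-technology-in-library-readers-data.html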

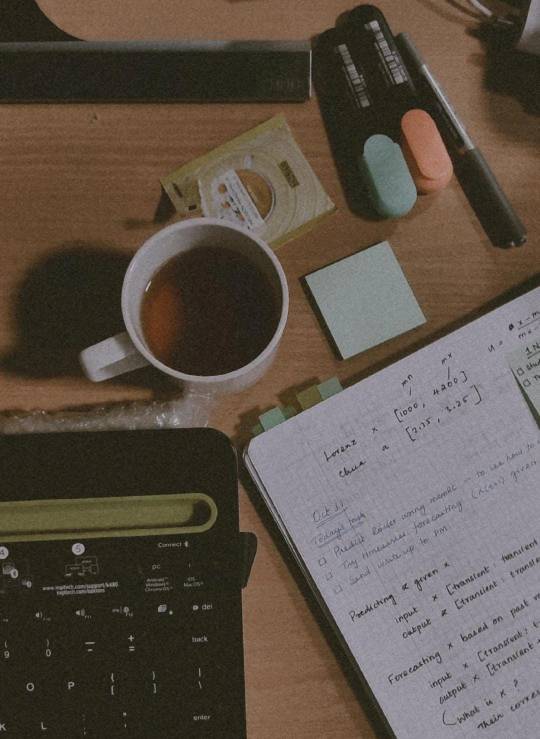

Chaos theory and chamomile tea

7/100 days of productivity • 31-Oct-23, Tuesday

About today

Predict Rossler, learn how to change the model ✓

Try forecasting timeseries >>

Send writeup to advisor >>

Get eclipsing binary data set from T ✓

Explore astro timeseries datasets ✓

Dinner with girls - garlic chicken, momos and custard

Phone off by 9:45, meditate and off to bed by 10:30. Was on a call with my best friend and it had been a long time since we talked, so I had to

Still have the cough from last week's flu, so I'm trying to soothe my throat with chamomile tea.

#Notations: ✓ Done, >> Moved to tomorrow, --struck-- Cancelled

#dark academia #gradblr #gradschool aesthetic #chaotic academia #phdblr #phd aesthetic #grad school motivation #phd life #phdjourney #physics phd #100 days of productivity #100 days challenge #100 dop #productivity challenge #phd tips #virtual buddy

Uniformity Factor Indicator - indicator for MetaTrader 5

This is a simple analytical (non-signal, one-time calculated) indicator that allows you to test the hypothesis that price timeseries represent a “random walk”, specifically Gaussian “random walk”. This can help to construct a parametric transformation of price increments into evenly distributed, more stable and predictable time series, at least in terms of volatility. As you may know, the…
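The post does not give the indicator's formula. The sketch below is minimal and starts from the Gaussian random-walk hypothesis the post mentions. Under that assumption, one parametric transformation is the probability integral transform: fit a normal distribution to the price increments, then pass each increment through that distribution's CDF. If the increments really are Gaussian, the outputs should spread roughly evenly over (0, 1). The function names and prices are hypothetical, and this is not the MetaTrader 5 indicator's code.

```python
from statistics import NormalDist, fmean, stdev


def increments(prices):
    """First differences of a price series: p[t] - p[t-1]."""
    return [b - a for a, b in zip(prices, prices[1:])]


def to_uniform(prices):
    """Probability integral transform of price increments.

    Fits a normal distribution to the increments (the Gaussian random-walk
    assumption) and maps each increment through its CDF. If the assumption
    holds, the results should be spread roughly evenly over (0, 1).
    """
    d = increments(prices)
    fitted = NormalDist(mu=fmean(d), sigma=stdev(d))
    return [fitted.cdf(x) for x in d]


def bin_counts(values, bins=4):
    """Count values per equal-width bin on [0, 1]: a crude uniformity check."""
    counts = [0] * bins
    for v in values:
        counts[min(int(v * bins), bins - 1)] += 1
    return counts


# Made-up closing prices for illustration only.
prices = [100.0, 101.2, 100.7, 102.1, 101.5, 101.9, 103.0, 102.2]
u = to_uniform(prices)
print([round(x, 3) for x in u])
print(bin_counts(u))
```

Real data needs far more increments than this, and a formal test on the bin counts (for example chi-square) works better than eyeballing them. Counts piling up in the outer bins suggest fatter tails than a Gaussian.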

Exploring Advanced Statistical Analysis Techniques in SAS

Advanced statistical techniques are critical for extracting deeper insights from data. SAS, renowned for its statistical prowess, provides a suite of tools for conducting sophisticated analyses. In this article, we’ll explore advanced statistical methods available in SAS and their applications, making it a must-read for anyone familiar with SAS Tutorials and seeking to enhance their skills.

Why Advanced Statistical Techniques Matter

While basic statistics offer valuable insights, advanced techniques uncover complex patterns and relationships that are otherwise missed. Industries like healthcare, finance, and marketing rely heavily on these methods for decision-making, risk assessment, and optimization.

Advanced Statistical Techniques in SAS

Multivariate Analysis

Multivariate analysis examines relationships among multiple variables simultaneously. SAS procedures like PROC FACTOR and PROC DISCRIM are used for tasks such as factor analysis and discriminant analysis, enabling users to reduce dimensionality or classify observations effectively.

Survival Analysis

Survival analysis focuses on time-to-event data, such as patient survival times or product failure rates. SAS provides tools like PROC LIFETEST and PROC PHREG to perform Kaplan-Meier and Cox regression analyses, respectively.
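PROC LIFETEST computes the Kaplan-Meier estimate for you. To show what that estimate is, here is a plain-Python sketch (not SAS code). At each distinct event time, it multiplies the running survival probability by the fraction of at-risk subjects who survive that time. Censored subjects leave the risk set without counting as events. The function name and the toy data are illustrative assumptions.

```python
def kaplan_meier(times, events):
    """Kaplan-Meier survival estimate.

    times  -- observed time for each subject
    events -- 1 if the event (e.g. failure) occurred at that time, 0 if censored
    Returns a list of (time, survival probability) at each distinct event time.
    """
    data = sorted(zip(times, events))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        deaths = removed = 0
        # Group every subject observed at the same time t.
        while i < len(data) and data[i][0] == t:
            deaths += data[i][1]
            removed += 1
            i += 1
        if deaths:
            # Fraction of those at risk at t who did not experience the event.
            survival *= 1 - deaths / at_risk
            curve.append((t, survival))
        at_risk -= removed  # both events and censored subjects leave the risk set
    return curve


# Toy data: time to failure (or censoring) for six hypothetical units.
times = [1, 2, 2, 3, 4, 5]
events = [1, 1, 0, 1, 0, 1]  # 0 = censored (still working when observation ended)
for t, s in kaplan_meier(times, events):
    print(t, round(s, 3))
```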

Bayesian Analysis

Bayesian methods integrate prior knowledge with data to update predictions. SAS’s PROC MCMC is ideal for conducting Bayesian inference and creating predictive models in fields like finance and medical research.
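PROC MCMC draws posterior samples when no closed-form posterior exists. In the simplest conjugate case, a Beta prior on a success rate combined with binomial data, the posterior is known exactly, so the "prior plus data" update is easy to see. Below is a minimal Python sketch of that case. The prior and the counts are made up for illustration.

```python
def beta_binomial_update(alpha, beta, successes, failures):
    """Conjugate Bayesian update: Beta(alpha, beta) prior + binomial data -> Beta posterior."""
    return alpha + successes, beta + failures


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


prior = (2, 2)  # hypothetical prior: rate believed near 0.5, weakly held
post = beta_binomial_update(*prior, successes=7, failures=3)
print(post, round(beta_mean(*post), 3))  # (9, 5) 0.643
```

The posterior mean 9/14 ≈ 0.643 sits between the prior mean (0.5) and the observed rate (0.7). It moves closer to the data as more observations arrive.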

Time Series Analysis

Time series analysis involves analyzing data points collected over time. With procedures like PROC ARIMA and PROC TIMESERIES, SAS enables users to forecast trends and model seasonal effects.
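This rough illustration shows what fitting and forecasting an autoregressive model involves. It is plain Python, not PROC ARIMA, and it ignores seasonality. The sketch fits an AR(1) model, y[t] = c + phi * y[t-1] + noise, by ordinary least squares and iterates it forward. The function names and the observations are hypothetical.

```python
def fit_ar1(series):
    """Ordinary least-squares fit of y[t] = c + phi * y[t-1] + noise."""
    x, y = series[:-1], series[1:]
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    phi = sxy / sxx
    c = my - phi * mx
    return c, phi


def forecast_ar1(series, steps):
    """Iterate the fitted AR(1) recursion forward `steps` periods."""
    c, phi = fit_ar1(series)
    level, out = series[-1], []
    for _ in range(steps):
        level = c + phi * level
        out.append(level)
    return out


history = [12.0, 10.4, 9.9, 9.1, 8.8, 8.2, 8.3, 7.9]  # made-up observations
print(forecast_ar1(history, 3))
```

With |phi| < 1, the forecasts decay toward the long-run mean c / (1 - phi), so AR forecasts flatten out at longer horizons.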

How to Perform Advanced Statistical Analysis in SAS

Prepare Your Data

Begin by cleaning and organizing your dataset using DATA steps or PROC SQL. Ensure that variables are appropriately formatted for analysis.

Select the Right Procedure

Choose a procedure based on your analysis goal. For example:

Use PROC FACTOR for factor analysis.

Opt for PROC PHREG for survival modeling.

Run the Analysis

Execute the selected procedure and review the results. Pay attention to statistical outputs like p-values, confidence intervals, and model fit metrics.

Interpret Results

Translate statistical findings into actionable insights. Visualize results using tools like PROC SGPLOT to communicate effectively with stakeholders.

Real-World Applications of Advanced Statistical Techniques

Healthcare Research

Survival analysis helps predict patient outcomes and optimize treatment plans, while Bayesian methods assist in clinical trials and drug development.

Financial Modeling

SAS enables financial analysts to build robust risk models, forecast stock prices, and evaluate investment strategies using time series and multivariate techniques.

Customer Analytics

Marketers leverage factor analysis and clustering to segment customers, personalize campaigns, and improve customer retention strategies.

Tips for Success with Advanced Statistics in SAS

Understand the Theory

Familiarize yourself with the statistical concepts before diving into SAS procedures. This ensures accurate interpretation of results.

Leverage Documentation

SAS offers extensive documentation and SAS Tutorials Online to help users understand complex procedures.

Practice on Real Data

Experimenting with real-world datasets enhances your understanding and builds confidence in applying advanced techniques.

Conclusion

SAS’s advanced statistical capabilities make it an invaluable tool for data professionals. From multivariate analysis to Bayesian methods, SAS provides robust solutions for complex analytical challenges. By mastering these techniques, you can unlock deeper insights and drive meaningful outcomes in any field. Whether you’re learning through a SAS Tutorial for Beginners or refining your expertise, SAS equips you with the tools to excel in advanced analytics.

Lag Features: Boosting Time Series Prediction for Tesla Stock

Unlock the power of #LagFeatures in #TimeSeries prediction for #TeslaStock analysis. Learn how to create, implement, and optimize lag features for accurate forecasting. Boost your #StockAnalysis skills with advanced #DataScience techniques. #FinTech #Mach

Lag features are a powerful tool for time series prediction, especially when analyzing Tesla stock data. By feeding past values in as inputs, they capture temporal patterns and extend basic feature creation techniques. The shift() method in pandas creates them directly from a price series. Handling the resulting NaN values and defining appropriate features and target variables then pave the way for robust…
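Here is a minimal pandas sketch of the lag-feature idea described above. The prices are invented placeholder values, not real Tesla data. The column names (close, lag_1 to lag_3, target) are assumptions for illustration. shift(k) moves the series down k rows, and dropna() discards the rows left incomplete at either end.

```python
import pandas as pd


def make_lag_features(prices, lags=(1, 2, 3)):
    """Return a DataFrame of lagged prices plus a next-period target.

    Column names (close, lag_k, target) are illustrative choices.
    """
    df = pd.DataFrame({"close": prices})
    for k in lags:
        # shift(k) moves values down k rows, so row t holds the price from t - k.
        df[f"lag_{k}"] = df["close"].shift(k)
    # The target is the next period's close; shift(-1) moves values up one row.
    df["target"] = df["close"].shift(-1)
    # Early rows lack full history and the last row lacks a target: drop them.
    return df.dropna()


# Invented placeholder closes, NOT real Tesla prices.
closes = pd.Series([250.0, 252.5, 249.0, 255.0, 258.5, 257.0, 260.0])
frame = make_lag_features(closes)
X = frame[[c for c in frame.columns if c.startswith("lag_")]]  # features
y = frame["target"]  # target variable
print(frame)
```

Each remaining row pairs past prices with the next close. When splitting into training and test sets, keep the split chronological so the model never sees the future.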

The Power of Combining IoT, AI, and Machine Learning

The Internet of Things (IoT) produces immense amounts of data from connected devices and sensors. Making use of all that data requires powerful analytical techniques - a role artificial intelligence (AI) and machine learning can fill perfectly. Together, IoT, AI, and machine learning create a formidable big data toolkit unlocking operational insights at new scales.

IoT Data Flows

First, examining the breadth of IoT helps us understand the data that AI and machine learning can capitalize on. IoT involves:

Smart consumer devices such as watches, appliances, and vehicles transmitting personal usage data.

Smart city infrastructure such as traffic sensors, cameras, and parking monitors generating public environmental data.

Smart factories outfitted with thousands of sensors across assembly lines, machines, and vehicles producing operational data.

Smart energy grids and utilities processing data from smart meters to optimize distribution.

Smart buildings tracking occupancy, equipment performance, and environmental conditions to improve operations.

Connected logistics assets such as trucks, shipping containers, and railcars supplying location, health, and cargo data.

These varied IoT data sources have common characteristics:

They are extremely high volume, generating massive datasets as events occur continuously across devices and locations.

They are highly diverse and multi-structured, ranging from time series telemetry, video streams, and sensor outputs to unstructured machine logs and text.

They arrive in real time, requiring ultra-low-latency processing.

They track relationships between complex entities such as equipment, people, and environments.

They document natural processes that evolve over time.

This rich, high-fidelity data demands powerful analytical techniques to extract meaning.

Applying AI to Derive Insights from Data

Artificial intelligence is ideal for unlocking insights from massive, heterogeneous IoT data sources. AI encompasses multiple techniques including:

Machine learning employs algorithms that learn from data to make predictions or identify patterns.

Deep learning uses multi-layer neural networks, loosely inspired by the brain, to discern complex signals within rich sensory inputs such as video.

Natural language processing analyzes unstructured text data and derives meaning from it through semantic analysis.

Computer vision applies deep learning to images and videos to identify, classify, and track objects.

Speech recognition and voice analytics enable conversational interfaces and sentiment analysis.

These AI techniques help organizations:

Analyze IoT data in context rather than in isolation.

Translate low-level sensor observations into higher-level assessments of real-world conditions and behaviors.

Continuously monitor data streams to spot anomalies that indicate potential equipment failures, facility issues, or network intrusions.

Mine longitudinal time series IoT data to identify long-term patterns and trends that humans would not notice.

Apply findings from historical IoT data analysis to guide predictive analytics.

Handle complex multidimensional IoT data that humans cannot correlate manually.

Provide personalized real-time recommendations based on models built from IoT user data.

AI delivers an intelligence layer that converts raw IoT data into dynamic operational insights.

Powering AI with Machine Learning

To manifest the capabilities above, AI solutions require robust and adaptable machine learning models tuned to the particular data. There are several proven techniques for training high-quality models:

Supervised learning algorithms train models by providing labeled example inputs along with desired outputs to learn from. Models like logistic regression and neural networks map inputs to outputs mathematically. New unlabeled data can then be classified based on the training.

In reinforcement learning, algorithms learn how to optimize behaviors within dynamic environments by repeatedly performing actions and receiving feedback on the outcomes. Goals and constraints guide the agent’s exploration and learning process.

Unsupervised learning finds hidden patterns and intrinsic structures within unlabeled data. Clustering algorithms group data based on similarity. Anomaly detection identifies outliers deviating from normal patterns. This reveals insights without predefined labels.
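A simple example of the anomaly-detection idea flags points whose z-score (distance from the mean, in standard deviations) exceeds a threshold. It assumes the readings are roughly normally distributed. This is a basic statistical baseline rather than a learned model, and the sensor readings are made up. In a sample of n points, no |z| can exceed (n - 1)/sqrt(n) when the sample standard deviation is used, so small windows need a lower threshold than the common default of 3.

```python
from statistics import fmean, stdev


def zscore_outliers(values, threshold=3.0):
    """Return indices of values whose |z-score| exceeds `threshold`."""
    mu, sd = fmean(values), stdev(values)
    return [i for i, v in enumerate(values) if abs(v - mu) / sd > threshold]


# Made-up temperature readings with one spike.
readings = [20.1, 20.3, 19.9, 20.0, 20.2, 35.0, 20.1]
print(zscore_outliers(readings, threshold=2.0))  # [5]
```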

Transfer learning speeds up training by transferring what an existing model has learned into a new, related model rather than training from scratch. Fine-tuning on a smaller dataset adapts the imported knowledge.

Together, these techniques make it possible to create highly accurate AI models tailored to the nuances of an organization’s own IoT data.

Operationalizing IoT Intelligence

To scale the power of IoT, AI, and machine learning across the enterprise, organizations need solutions that operationalize models efficiently, including:

IoT analytics platforms providing data pipelines to ingest, process and store vast data volumes efficiently.

Model management tools to support continuous model training, testing, tuning and monitoring.

Governance to ensure models remain accurate and aligned with business goals over time.

MLOps to automate model retraining and deployment in sustainable workflows.

Edge AI allowing real-time inference directly on local devices rather than the cloud.

Visual dashboards to contextualize insights for diverse roles.

APIs and microservices enabling integration into business applications.

With the right platforms, models transition from isolated data science projects into organization-wide operational assets. The continuous feedback loop between new data and improved models compounds the value over time.

The Future of Intelligent IoT

As IoT expands across more sectors, exploring synergies with evolving areas like computer vision, natural language processing, robotics, and blockchain will further exploit the power of interconnected data.

But for most enterprises, the combination of IoT, AI, and machine learning offers immense untapped potential even before factoring in future technology innovations. Just applying current techniques to their diverse business data unlocks game-changing visibility.

With the right strategy, any organization in any industry can leverage IoT’s data foundation paired with AI and machine learning to achieve new levels of operational efficiency, intelligence and automation. While challenging, integrating IoT with robust analytical capabilities offers perhaps the most direct path to driving better business outcomes through data.

https://www.deepdyve.com/lp/elsevier/ea-lstm-evolutionary-attention-based-lstm-for-time-series-prediction-NwWycaImCU?key=elsevier

https://www.sciencedirect.com/science/article/abs/pii/S0950705119302400

The evolving field of recurrent neural networks is moving beyond the LSTM and now includes attention mechanisms. I arrived at this article by searching for the term "attention based lstm + time series" (9/5/19).

Siemens Digital Industries Software announced that it has signed an agreement to acquire TimeSeries, an Independent Software Vendor (ISV) and Mendix partner. TimeSeries has significant expertise in the development of vertical apps built on the Mendix low-code platform, which will help Siemens accelerate digital transformation by increasing adoption of low-code and offering new apps including smart warehousing, predictive maintenance, energy management, remote inspections and more. The Mendix low-code platform is the cloud foundation for Siemens’ Xcelerator portfolio of integrated software and services.

The Best Tool to Analyze Data using Google Sheet

A timeseries is a collection of data points that are recorded at regular intervals over time. Timeseries analysis can be used to identify trends, patterns, and changes over time, which can be useful for forecasting future trends and making data-driven decisions.

Google Sheets is a powerful tool for working with time series data. In Google Sheets, time series data can be organized in rows or columns, with each data point representing a specific time period. To analyze timeseries data in Google Sheets, you can use a range of functions and tools, such as the "FILTER" function to extract specific time periods, the "TREND" function to calculate linear trends, and the "FORECAST" function to predict future values.

One of the key benefits of working with timeseries data in Google Sheets is that it is a cloud-based platform, which means that the data is accessible from anywhere, and can be easily shared with collaborators. Google Sheets also allows you to create dynamic charts and graphs that can be updated automatically as new data is added, which makes it easier to visualize trends and patterns over time.

When working with timeseries data in Google Sheets, it's important to ensure that the data is properly formatted and structured. This includes checking for missing values, duplicates, and inconsistencies in the data, as well as making sure that the data is sorted chronologically.

Overall, Google Sheets is a powerful tool for working with timeseries data, and can help you gain insights into trends and patterns over time that can inform your decision-making and forecasting.

The Powerful Tool to Calculate Regression of a Timeseries

Linear regression is a statistical method used to describe the relationship between two variables. In finance, linear regression is often used to model the relationship between the returns of two assets. The best-fit linear regression coefficients define the line that minimizes the sum of squared vertical distances (residuals) between the actual data points and the predicted values.

To calculate the best-fit linear regression coefficients, it helps to first plot one time series against the other on a scatter plot. The line of best fit is then typically written as y = mx + b, where y is the dependent variable, x is the independent variable, m is the slope of the line, and b is the y-intercept. When the line is fitted to the returns of two assets, it can be used to predict the returns of one asset from the returns of the other.
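Here is a minimal Python sketch of the calculation. It assumes simple period-over-period returns and uses two short, made-up price series. It converts prices to returns, then applies the closed-form least-squares formulas m = Σ(x - x̄)(y - ȳ) / Σ(x - x̄)² and b = ȳ - m·x̄. The function names are hypothetical.

```python
def simple_returns(prices):
    """Period-over-period simple returns: p[t] / p[t-1] - 1."""
    return [b / a - 1 for a, b in zip(prices, prices[1:])]


def best_fit_line(x, y):
    """Closed-form least squares for y = m*x + b (minimizes squared vertical errors)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    m = sum((a - mx) * (c - my) for a, c in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return m, my - m * mx


# Made-up daily closes for two assets.
asset_a = [100.0, 102.0, 101.0, 104.0, 103.0, 105.5]
asset_b = [50.0, 51.5, 50.8, 53.0, 52.2, 54.1]
m, b = best_fit_line(simple_returns(asset_a), simple_returns(asset_b))
print(f"slope m = {m:.3f}, intercept b = {b:.5f}")
```

When x holds a market index's returns, the slope m is the familiar beta of the second asset.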

In conclusion, calculating the best-fit linear regression coefficients is a useful tool in finance for modeling the relationship between two time series. It can be used to predict the future returns of one asset based on the returns of another asset, which can be helpful for making informed investment decisions.

Unlocking Advanced Analytics: Techniques for Time Series and Regression in SAS

In today’s data-driven world, advanced analytics is essential for gaining insights and making informed decisions. SAS programming is a valuable tool for performing complex analyses, particularly in time series and regression. This guide explores these techniques, their uses, and how to implement them effectively in SAS.

Understanding Time Series Analysis

Time series analysis involves examining data points collected over time. It is key for forecasting and identifying trends. Important components include:

Trend: The long-term direction in the data.

Seasonality: Regular fluctuations that occur at specific intervals.

Cyclic Patterns: Longer-term, irregular fluctuations influenced by external factors.

Techniques for Time Series Analysis in SAS

SAS Procedures for Time Series:

PROC TIMESERIES: This procedure creates time series datasets and conducts analyses, such as calculating moving averages and generating plots.

PROC ARIMA: This procedure models and forecasts time series data using autoregressive integrated moving average (ARIMA) models, capturing complex temporal patterns.
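PROC ARIMA estimates these models for you. To make the "integrated" part concrete, here is a plain-Python sketch of an ARIMA(1,1,0) forecast with no constant. It differences the series once, fits an AR(1) coefficient to the differences by least squares, forecasts the differences forward, and adds them back onto the last observed level. The estimation shortcut and the function name are assumptions, and the results will not exactly match PROC ARIMA's output.

```python
def arima_110_forecast(series, steps):
    """Sketch of an ARIMA(1,1,0) forecast without a constant term.

    1. Difference once:           d[t] = y[t] - y[t-1]   (the "I", d = 1)
    2. Fit AR(1) to differences:  d[t] = phi * d[t-1]    (least squares, no intercept)
    3. Forecast differences forward and add them back onto the last level.
    """
    d = [b - a for a, b in zip(series, series[1:])]
    phi = sum(a * b for a, b in zip(d, d[1:])) / sum(a * a for a in d[:-1])
    level, diff = series[-1], d[-1]
    out = []
    for _ in range(steps):
        diff = phi * diff
        level += diff
        out.append(level)
    return out


print(arima_110_forecast([0, 8, 12, 14, 15], steps=2))  # [15.5, 15.75]
```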

Creating Forecasts:

Use PROC FORECAST to generate forecasts based on historical data. This procedure is beneficial for applications like sales forecasting and demand planning.
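Exponential smoothing is one of the extrapolation methods PROC FORECAST offers. As a plain-Python illustration of its simplest variant (single exponential smoothing), the sketch below blends each new observation into a running level with weight alpha. The last level serves as the flat forecast. Starting the level at the first observation is one common convention and is assumed here. The demand figures are made up.

```python
def simple_exp_smoothing(values, alpha):
    """Single exponential smoothing: level = alpha * y + (1 - alpha) * level.

    The final level is used as the (flat) forecast for every future period.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = values[0]  # assumed initialization: the first observation
    for y in values[1:]:
        level = alpha * y + (1 - alpha) * level
    return level


demand = [120, 132, 128, 141, 137, 150]  # made-up monthly demand
print(simple_exp_smoothing(demand, alpha=0.3))
```

A larger alpha reacts faster to recent changes, and a smaller alpha smooths more. Trend and seasonal variants (double exponential smoothing, Holt-Winters) extend the same recursion.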

Visualizing Time Series Data:

Visualization is essential for understanding time series data. SAS provides options like PROC SGPLOT to create informative plots, highlighting trends, seasonal patterns, and forecasts.

Diving into Regression Analysis

Regression analysis examines the relationships between variables, allowing predictions of a dependent variable based on one or more independent variables. This technique is crucial for understanding these relationships.

Techniques for Regression Analysis in SAS

Simple Linear Regression:

Use PROC REG to perform simple linear regression, modeling the relationship between a single independent variable and a dependent variable. This is a fundamental technique for data analysis.

Multiple Regression:

Utilize PROC REG for multiple regression, incorporating several independent variables to predict a dependent variable. This technique is vital for analyzing complex datasets.

Logistic Regression:

For binary outcomes, PROC LOGISTIC estimates the probability of a binary response based on predictor variables. This method is widely applied in healthcare and marketing to assess risk factors.
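To show what PROC LOGISTIC estimates, here is a small Python sketch. It fits a one-predictor logistic regression, P(y = 1) = 1 / (1 + exp(-(b0 + b1 * x))), by batch gradient ascent on the log-likelihood. PROC LOGISTIC uses its own maximum-likelihood optimizer. The learning rate, the epoch count, and the toy data here are illustrative assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lr=0.1, epochs=10000):
    """One-predictor logistic regression via batch gradient ascent on the log-likelihood."""
    b0 = b1 = 0.0
    n = len(xs)
    for _ in range(epochs):
        g0 = g1 = 0.0
        for x, y in zip(xs, ys):
            err = y - sigmoid(b0 + b1 * x)  # observed outcome minus predicted probability
            g0 += err
            g1 += err * x
        b0 += lr * g0 / n
        b1 += lr * g1 / n
    return b0, b1


# Made-up data: a risk score (x) and whether an event occurred (y).
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [0, 0, 0, 1, 0, 1, 1, 1]
b0, b1 = fit_logistic(xs, ys)
print(round(b0, 2), round(b1, 2), round(sigmoid(b0 + b1 * 6), 3))
```

The data deliberately overlap (x = 4 has y = 1 while x = 5 has y = 0). With perfectly separated classes, the maximum-likelihood coefficients do not exist, and gradient ascent would keep growing them.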

Model Diagnostics:

After fitting a regression model, it’s essential to evaluate its performance. SAS offers diagnostic tools and plots to check assumptions such as linearity and to detect problems such as multicollinearity, ensuring a robust analysis.

Learning SAS Programming

To effectively use these advanced techniques, a solid foundation in SAS programming is essential. Enrolling in a SAS programming full course provides comprehensive knowledge, from basic syntax to complex data manipulations. Online platforms offer SAS online training, allowing you to learn at your own pace.

Practical Applications

Business Forecasting: Companies use time series analysis for sales forecasts, inventory management, and strategic planning.

Economic Modeling: Economists analyze economic indicators using time series techniques to predict future conditions.

Healthcare Insights: Regression analysis helps in understanding treatment impacts on patient outcomes, aiding clinical decision-making.

Conclusion

Mastering time series and regression techniques in SAS empowers data professionals to derive actionable insights from complex datasets. By using SAS’s robust procedures, users can effectively forecast trends and model relationships, enhancing decision-making across various sectors.

Whether you want to predict future sales, analyze economic trends, or examine patient health outcomes, SAS provides the necessary tools to unlock your data's full potential. Explore these techniques today, and consider accessing SAS programming tutorials to further enhance your skills!

#sas programming course #sas tutorial #sas online training #sas programming tutorial #sas training #advanced analytics

A New Method for Forward Testing?

A trading system is a set of rules for deciding when and how to buy or sell assets. These systems can be used to trade stocks, bonds, commodities, or other assets. Trading systems can be manual or automated. Manual systems require the trader to interpret market conditions and make decisions about when to buy or sell. Automated systems use computer algorithms to make trading decisions. Automated systems are usually designed through backtesting, which is a method of testing how well a system would have performed in the past.

Backtesting is an important step in the system design process. However, it alone is not enough. In order to test how the trading system would perform in real trading conditions, traders use paper trading, live trading, and forward testing. Forward testing is a type of simulation in which the trading system is tested using unseen data. This allows traders to see how the system would have performed if it had been used in real trading conditions. Forward testing is an important step in the system design process. It allows traders to assess the performance of the system and make improvements before using it in live trading.
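Forward testing in the sense described here means evaluating a system only on data it was not designed on. This can be organized as rolling chronological windows. In the minimal Python sketch below, each window chooses or tunes the system on a training span, evaluates it on the span immediately after, then rolls forward. The function name and window sizes are hypothetical.

```python
def walk_forward_splits(n, train_size, test_size):
    """Yield (train, test) index ranges over n chronologically ordered points.

    Each test window starts right after its training window, so a system
    selected on the training span is evaluated only on later, unseen data.
    """
    start = 0
    while start + train_size + test_size <= n:
        train = range(start, start + train_size)
        test = range(start + train_size, start + train_size + test_size)
        yield train, test
        start += test_size  # roll forward by one test window


for train, test in walk_forward_splits(n=10, train_size=4, test_size=2):
    print(list(train), list(test))
```

With n=10, train_size=4 and test_size=2, this yields three windows. The last one tests on points 8 and 9.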

Reference [1] proposed a so-called DNN-forwardtesting method for forward testing. The authors pointed out,

In this paper, we propose a stock market trading system that exploits deep neural networks as part of its main components improving a previous work (Letteri et al. [2022b]).

In such a system, the trades are guided by the values of a pre-selected technical indicator, as usual in algorithmic trading. However, the novelty of the presented approach is in the indicator selection technique: traders usually make such a selection by backtesting the system on the historical market data and choosing the most profitable indicator with respect to the known past. On the other hand, in our approach, such most profitable indicator is chosen by DNN-forwardtesting it on the probable future predicted by a deep neural network trained on the historical data.

So basically, they utilized historical data to make predictions and then used the predicted data to choose the best indicators for their trading system. The chosen systems were then tested using real data. As far as forward testing is concerned, the proposed method is just ordinary out-of-sample testing.

The authors also claimed,

As discussed in the paper, neural networks outperform the most common statistical methods in stock price prediction: indeed, their predicted future allows to make a very accurate selection of the indicator to apply, which takes into account trends that would be very difficult to capture through backtesting.

To validate this claim, we applied our methodology on two very different assets with medium volatility, and the results show that our DNN forwardtesting-based trading system achieves a profit that is equal or higher than the one of a traditional backtesting-based trading system.

We examined Tables 8 and 9 in the paper and observed that the authors used the results of only 3 and 1 trades, respectively, to support their claim.

Let us know what you think in the comments below or in the discussion forum.

References

[1] Ivan Letteri, Giuseppe Della Penna, Giovanni De Gasperis, Abeer Dyoub, DNN-ForwardTesting: A New Trading Strategy Validation using Statistical Timeseries Analysis and Deep Neural Networks, 2022, arXiv:2210.11532

Originally Published Here: A New Method for Forward Testing?

from Harbourfront Technologies - Feed https://harbourfronts.com/method-for-forward-testing/

What you'll learn

Create a machine learning app with C#

Use a TensorFlow or ONNX model with a .NET app

Use a machine learning model in ASP.NET

Use AutoML to generate an ML.NET model

Note: This course is designed with ML.NET 1.5.0-preview2.

Machine learning is learning from experience and making predictions based on that experience. In machine learning, we create a pipeline and pass it training data, and from that data the machine learns how to respond to new data.

ML.NET gives you the ability to add machine learning to .NET applications. We use C# throughout this series, but ML.NET also supports F#. ML.NET 1.0 was officially released to the public at Build 2019. It is free, open source, and cross-platform, and it runs on both .NET Core and .NET Framework.

The course outline includes:

Introduction to machine learning, and how it differs from deep learning and artificial intelligence.

Learn what ML.NET is and how the ML.NET SDK is structured.

Create a first regression model and make predictions with it.

Evaluate the model and cross-validate it with data.

Load data from various sources such as files, databases, and binary data.

Filter data from the data view.

Export the created model and load a saved model for further operations.

Learn about binary classification and use it to create models with different trainers.

Perform sentiment analysis on text data to determine whether the user's intent is positive or negative.

Use multiclass classification for prediction.

Use a TensorFlow model for computer vision to determine which object an image represents.

See examples of other trainers such as anomaly detection, ranking, forecasting, clustering, and recommendation.

Perform transformations on data, including text, conversion, categorical, and time series transforms.

Perform AutoML using the Model Builder UI and CLI.

Learn what ONNX is and how to create and use ONNX models.

Use models to make predictions from ASP.NET Core.

Who this course is for:

Newcomers who want to learn machine learning

Developers who know C# and want to use those skills for machine learning too

Anyone who wants to create a machine learning model with C#

Developers who want to create Machine Learning

Technology News

Siemens Digital Industries Software announced that it has signed an agreement to acquire TimeSeries, an Independent Software Vendor (ISV) and Mendix partner. TimeSeries has significant expertise in the development of vertical apps built on the Mendix low-code platform, which will help Siemens accelerate digital transformation by increasing adoption of low-code and offering new apps including smart warehousing, predictive maintenance, energy management, remote inspections and more. The Mendix low-code platform is the cloud foundation for Siemens’ Xcelerator portfolio of integrated software and services. Visit the VARINDIA site to learn more: https://varindia.com/

Next generation productivity through...

Now an #SAPEndorsedApp available on SAP Store — TrendMiner Software enables subject matter experts to analyze, monitor and predict operational performance in batch, grade and continuous manufacturing. Learn more:

Democratizing analytics for process and asset experts. Combining time series and contextual data (such as batch and quality data) from SAP DMC and third-party sources enables subject matter experts to analyze, monitor and predict operational performance in batch, grade and continuous manufacturing.

SAP Get Social
