#amazoncloudwatch

Explore tagged Tumblr posts

Text

AWS AppSync Events Adds Data Source Integrations for Channel Namespaces

AWS AppSync API

AWS AppSync Events now supports data source integrations for channel namespaces, enabling developers to build more sophisticated real-time applications. With this capability, you can associate channel namespace handlers with AWS Lambda functions, Amazon DynamoDB tables, Amazon Aurora databases, and other data sources. This lets AppSync Events applications perform data validation, event transformation, and persistent event storage.

Developers can now use the APPSYNC_JS batch utilities to store events in DynamoDB, or use Lambda functions to build complex event processing workflows. The integration supports richer interaction flows while reducing operational overhead and development time. For example, events can now be saved to a database automatically, without writing separate integration code.

Getting started with data source integrations

I use the AWS Management Console to connect data sources. In the console, I navigate to AWS AppSync and choose my Event API (or create one).

Persisting event data directly to DynamoDB

Data sources can be integrated in several ways. For the first example, the data source is a DynamoDB table. DynamoDB needs a new table, so I create one named event-messages in the console. The table only needs a partition key named id. I choose Create table, accept the default options, and return to AppSync in the console.
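For readers who prefer to script the table creation rather than use the console, the step above can be sketched with boto3. This is a minimal sketch under stated assumptions, not the method used in the post: the `id` attribute is assumed to be a string, on-demand billing is assumed, and the helper names `build_create_table_params` and `create_table` are hypothetical.

```python
from typing import Any, Dict


def build_create_table_params(table_name: str = "event-messages") -> Dict[str, Any]:
    """Return keyword arguments for DynamoDB CreateTable with a single partition key `id`."""
    return {
        "TableName": table_name,
        # Assumption: `id` is a string attribute ("S").
        "AttributeDefinitions": [{"AttributeName": "id", "AttributeType": "S"}],
        # HASH is DynamoDB's name for the partition key.
        "KeySchema": [{"AttributeName": "id", "KeyType": "HASH"}],
        # Assumption: on-demand billing, so no capacity figures are needed.
        "BillingMode": "PAY_PER_REQUEST",
    }


def create_table(table_name: str = "event-messages") -> None:
    """Create the table and wait until it exists (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the parameter builder works without AWS access

    client = boto3.client("dynamodb")
    client.create_table(**build_create_table_params(table_name))
    client.get_waiter("table_exists").wait(TableName=table_name)
```

Keeping the parameters in a separate pure function makes them easy to inspect before anything is created in an account.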

Back in the Event API configuration in AppSync, I choose Data Sources from the tabbed navigation panel and then choose Create data source.

After naming the data source, I select Amazon DynamoDB from the drop-down. This reveals the configuration options for DynamoDB.

With the data source configured, I can add handler logic, for example a publish handler that persists events to DynamoDB.

I use the Namespaces tab to add the handler code to the default namespace. Opening the configuration details for the default namespace shows the option to create an event handler.

Clicking Create event handlers opens a dialog. There, I choose Code with data source as the configuration and select the DynamoDB data source for publish.

I save the handler and then test the integration with the console's built-in testing tools. With the default settings, two published events were written to the DynamoDB table.

Error handling and security

The new data source integrations include comprehensive error handling. For synchronous operations, you can return specific error messages that are logged to Amazon CloudWatch, without exposing sensitive backend details to clients. For authorization scenarios, Lambda functions can implement custom validation logic that controls access to specific channels or message types.

Now available

Data source integrations for AWS AppSync Events are now available in all AWS Regions. You can use these features through the AWS AppSync console, the AWS CLI, or the AWS SDKs. With data source integrations, you pay only for the Lambda invocations, DynamoDB operations, and AppSync Events usage you consume.

AWS AppSync Events

Real-time events

Create compelling user experiences. You can publish and subscribe to real-time data updates and events, such as live sports scores and statistics, group chat messages, price and inventory changes, and location and schedule updates, without setting up and maintaining WebSocket infrastructure.

Pub/sub channels

Simplified pub/sub

To get started with AppSync Event APIs, developers name the API and define its default authorization mode and channel namespace(s). That is all. They can then immediately publish events to channels that are specified at runtime.
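To make "publishing to a channel specified at runtime" concrete, here is a rough sketch of how an HTTP publish request might be assembled. It assumes the request shape as I understand it from the AppSync Events documentation (a POST to `/event` on the API's HTTP domain, an `x-api-key` header for API key authorization, and each event sent as a JSON-encoded string); verify these details against the current documentation before relying on them. The function name and the example domain, key, and channel are hypothetical.

```python
import json
from typing import Any, Dict, List


def build_publish_request(
    http_domain: str, api_key: str, channel: str, events: List[Dict[str, Any]]
) -> Dict[str, Any]:
    """Describe an HTTP publish call: URL, headers, and JSON body."""
    if not channel.startswith("/"):
        raise ValueError("channel must start with '/', e.g. '/default/scores'")
    return {
        "url": f"https://{http_domain}/event",
        "headers": {"content-type": "application/json", "x-api-key": api_key},
        # Assumption: each event is sent as a JSON-encoded string.
        "body": json.dumps(
            {"channel": channel, "events": [json.dumps(event) for event in events]}
        ),
    }


# The channel is chosen at call time; nothing has to be declared beyond the namespace.
request = build_publish_request(
    "example.invalid", "hypothetical-api-key", "/default/scores", [{"home": 1, "away": 0}]
)
```

The resulting dictionary can be handed to any HTTP client; it is kept separate from the network call so the payload can be checked without an API.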

Manage events

Edit and filter messages

Optional event handlers let developers run custom authorization logic on publish and subscribe requests and transform events before they are broadcast.

#AWSAppSyncAPI#AWSLambda#AmazonAurora#AmazonDynamoDB#AmazonCloudWatch#News#Technews#Technology#Technologynews#Technologytrends#govindhtech


Text

Explore Our 100% Job Oriented Intensive Program by Quality Thought (Transforming Dreams ! Redefining the Future !) What is a Corpus? 🌐Register for the Course: https://www.qualitythought.in/registernow 📲 contact: 99634 86280 📩 Telegram Updates: https://t.me/QTTWorld 📧 Email: [email protected] Facebook: https://www.facebook.com/QTTWorld/ Instagram: https://www.instagram.com/qttechnology/ Twitter: https://twitter.com/QTTWorld Linkedin: https://in.linkedin.com/company/qttworld ℹ️ More info: https://www.qualitythought.in/

#aws#awscloud#awscloudcomputing#awstraining#awscourse#awscloudtraining#awscloudengineer#cloud#awscloudcourse#awsadmin#amazonwebservices#amazoncloud#amazon#autoscaling#AWSDATAENGINEER#corpus#AmazonCloudWatch#qualitythoughttechnologiesreviews#qualitythoughttechnologies#qualitythought#Qtt


Photo

The rise in the demand for Amazon Elastic Compute Cloud! #amazoncloud #amazoncloudcam #amazonclouddrive #amazoncloudcomputing #amazoncloudforest #amazoncloudplayer #amazoncloudfront #amazoncloudpractitioner #amazoncloudservices #amazonclouds #amazoncloudcamera #amazoncloudreader #amazonclouddrivephotos #amazoncloudmold #amazoncloudwatch #amazoncloudday #amazoncloudservicescertifications #amazoncloudfont #amazoncloudplaylist #amazoncloudninja #amazoncloudoutage #amazoncloudserver #amazoncloudphotos #amazoncloudplayet #amazoncloudzearch #amazoncloudstorage #amazoncloudtraininginnoida #amazoncloudservice https://www.instagram.com/p/BsXY4VeHZM0/?utm_source=ig_tumblr_share&igshid=8ffuu3s97oxs

#amazoncloud#amazoncloudcam#amazonclouddrive#amazoncloudcomputing#amazoncloudforest#amazoncloudplayer#amazoncloudfront#amazoncloudpractitioner#amazoncloudservices#amazonclouds#amazoncloudcamera#amazoncloudreader#amazonclouddrivephotos#amazoncloudmold#amazoncloudwatch#amazoncloudday#amazoncloudservicescertifications#amazoncloudfont#amazoncloudplaylist#amazoncloudninja#amazoncloudoutage#amazoncloudserver#amazoncloudphotos#amazoncloudplayet#amazoncloudzearch#amazoncloudstorage#amazoncloudtraininginnoida#amazoncloudservice


Text

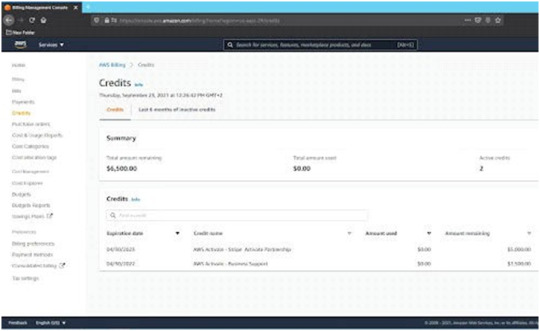

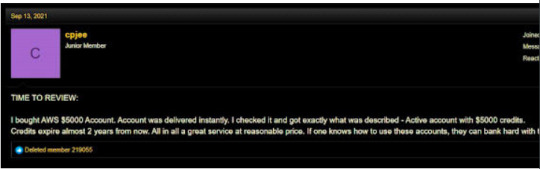

Amazon AWS Accounts with $5,000 Credits Available – Save Your Top Dollars on Amazon AWS Bills

Join 1,000,000+ users tapping into the power of the most advanced on-demand cloud-computing platform – Amazon AWS. When it comes to cloud computing, you may not be willing to spend thousands of dollars on your Amazon Web Services bill.

To help you save your precious dollars, we’re offering Amazon Web Services account with $5,000 credits that are ready to be spent on their services - all at a discounted price.

AWS credits are directly applied to bills to cover the costs associated with eligible services. This means – you won’t have to spend a hefty amount to tap into Amazon’s AWS services.

As of this writing, we’re loaded with multiple AWS accounts with $5,000 in credits, and we’re offering them at a discounted price. Upon reaching out to us, we’ll provide you with the credentials and any other details required to access these accounts.

AWS Services You Can Avail $5,000 Credits On:

With these accounts, you can avail the following Amazon AWS services:

· Alexa for Business

· Amazon API Gateway

· Amazon AppFlow

· Amazon AppStream

· Amazon Athena

· Amazon Augmented AI

· Amazon Braket

· Amazon Chime

· Amazon Chime Business Calling - a service sold by AMCS LLC

· Amazon Chime Call Me

· Amazon Chime Dial-in

· Amazon Chime Voice Connector a service sold by AMCS LLC

· Amazon Cloud Directory

· Amazon CloudFront

· Amazon CloudSearch

· Amazon Cognito

· Amazon Cognito Sync

· Amazon Comprehend

· Amazon Connect

· Amazon Detective

· Amazon DocumentDB (with MongoDB compatibility)

· Amazon DynamoDB

· Amazon EC2 Container Registry (ECR)

· Amazon EC2 Container Service

· Amazon Elastic Compute Cloud

· Amazon Elastic Container Service for Kubernetes

· Amazon Elastic File System

· Amazon Elastic Inference

· Amazon Elastic MapReduce

· Amazon Elastic Transcoder

· Amazon ElastiCache

· Amazon Elasticsearch Service

· Amazon Forecast

· Amazon FSx

· Amazon GameLift

· Amazon GameOn

· Amazon Glacier

· Amazon GuardDuty

· Amazon Honeycode

· Amazon Inspector

· Amazon Interactive Video Service

· Amazon Kendra

· Amazon Keyspaces (for Apache Cassandra)

· Amazon Kinesis

· Amazon Kinesis Analytics

· Amazon Kinesis Firehose

· Amazon Kinesis Video Streams

· Amazon Lex

· Amazon Lightsail

· Amazon Machine Learning

· Amazon Macie

· Amazon Managed Blockchain

· Amazon Managed Streaming for Apache Kafka

· Amazon Mobile Analytics

· Amazon MQ

· Amazon Neptune

· Amazon Personalize

· Amazon Pinpoint

· Amazon Polly

· Amazon Quantum Ledger Database

· Amazon QuickSight

· Amazon Redshift

· Amazon Rekognition

· Amazon Relational Database Service

· Amazon Route 53

· Amazon S3 Glacier Deep Archive

· Amazon SageMaker

· Amazon Simple Email Service

· Amazon Simple Notification Service

· Amazon Simple Queue Service

· Amazon Simple Storage Service

· Amazon Simple Workflow Service

· Amazon SimpleDB

· Amazon Sumerian

· Amazon Textract

· Amazon Timestream

· Amazon Transcribe

· Amazon Translate

· Amazon Virtual Private Cloud

· Amazon WorkDocs

· Amazon WorkLink

· Amazon WorkSpaces

· Amazon Zocalo

· AmazonCloudWatch

· AmazonWorkMail

· AWS Amplify

· AWS AppSync

· AWS Backup

· AWS Budgets

· AWS Certificate Manager

· AWS Cloud Map

· AWS CloudFormation

· AWS CloudHSM

· AWS CloudTrail

· AWS CodeArtifact

· AWS CodeCommit

· AWS CodeDeploy

· AWS CodePipeline

· AWS Config

· AWS Cost Explorer

· AWS Data Exchange

· AWS Data Pipeline

· AWS Data Transfer

· AWS Database Migration Service

· AWS DataSync

· AWS DeepComposer

· AWS DeepRacer

· AWS Device Farm

· AWS Direct Connect

· AWS Directory Service

· AWS Elemental MediaConnect

· AWS Elemental MediaConvert

· AWS Elemental MediaLive

· AWS Elemental MediaPackage

· AWS Elemental MediaStore

· AWS Elemental MediaTailor

· AWS Firewall Manager

· AWS Global Accelerator

· AWS Glue

· AWS Greengrass

· AWS Ground Station

· AWS Import/Export

· AWS Import/Export Snowball

· AWS IoT

· AWS IoT 1 Click

· AWS IoT Analytics

· AWS IoT Device Defender

· AWS IoT Device Management

· AWS IoT Events

· AWS IoT SiteWise

· AWS IoT Things Graph

· AWS Key Management Service

· AWS Lambda

· AWS OpsWorks

· AWS RoboMaker

· AWS Secrets Manager

· AWS Security Hub

· AWS Service Catalog

· AWS Shield

· AWS Snowball Extra Days

· AWS Step Functions

· AWS Storage Gateway

· AWS Storage Gateway Deep Archive

· AWS Systems Manager

· AWS Transfer Family

· AWS WAF

· AWS X-Ray

· CloudWatch Events

· CodeBuild

· CodeGuru

· Comprehend Medical

· Contact Center Telecommunications (service sold by AMCS, LLC)

· DynamoDB Accelerator (DAX)

· Elastic Load Balancing

Services You Can’t Avail With These Credits:

While you can save your top dollars on almost all AWS services with these credits, it’s important to note that the Amazon $5,000 AWS credits can’t be applied to charges or fees for:

· Ineligible AWS Support

· AWS Marketplace

· Amazon Mechanical Turk

· Amazon Route 53 Domain Name Transfer or Registration

· Cryptocurrency Mining Services

· Upfront Fees for Services Like Reserved Instances & Savings Plan

Exclusive Features:

· Amazon AWS account with $5,000 credits

· Remember – it’s not a promo code. It’s a dedicated AWS account

· Loaded $5,000 credits which can be used to avail the services we mentioned above.

· $5,000 AWS credits valid for 1 year

· 24 Hours Warranty

Note: These are rare accounts. And while we are loaded with many, they are limited in stock. So, if you are looking forward to saving your top dollars on Amazon AWS bills, grab these accounts before we run out of stock.

Frequently Answered Questions:

· What are Amazon AWS Credits?

AWS credits are credits that can be directly applied to bills to cover the costs associated with the eligible services. It’s one of the best ways of saving your top dollars while availing Amazon’s AWS services.

· Can these $5,000 AWS credits be used with my current Amazon AWS account?

Yes – you can. Feel free to reach out to me for further details.

· Do these credits expire?

Yes – they’ll expire in two years. You need to use them before they do.

· Are these accounts safe? Any chances of getting banned?

We are laser-focused on delivering the best quality service to our clients. These accounts are completely safe and just like any other AWS account. One thing you need to keep in mind is - you need to respect Amazon’s TOS.

· Do you offer more than one Amazon AWS account with $5,000 credits?

Yes – we are loaded with many Amazon AWS accounts. Feel free to reach out to us to discuss further details.

· Is this a complete dedicated account or coupon code?

These are standalone Amazon AWS accounts – not coupon codes.

Don’t wait around. Place your order today!

Price:

AWS Accounts WITH $5K = $300

AWS Accounts WITH $10K = $400

Payment Method: Bitcoins

BTC Address :bc1qqr2xhvtrk89f5t0z2crvkv06wlkc3z3pet7hxm

Gifts:If you purchase now, you will receive a $350 Google Cloud Platform (GCP) credit account.

When you have made your payment, please contact [email protected]

Refund and Replacement Policy:

100% MONEY BACK GUARANTEE

We are the only one who provides 100% money back guarantee for this kind of service.We strongly believe in the quality of our service. Get this and go through it immediately. There is no risk and you literally have nothing to lose.

>>>Offer valid today At the Lowest Cost!!!:

90% off still available, but won’t be available for long, claim your Offer now.

It is great value for money deal, for the goodies you are getting.

We cannot guarantee stock; these sell like hotcakes (only 2 left in stock as of now).

Custom reviews



Photo

Amazon CloudWatch Tutorial In Hindi | AWS Tutorial In Hindi | Edureka Hindi Edureka AWS Training: https://ww... #amazoncloudwatch #amazoncloudwatchtutorialinhindi #awscertification #awscertificationtraininginhindi #awscertifiedcloudpractitioner #awscertifieddeveloper #awscertifiedsolutionsarchitect #awscertifiedsysopsadministrator #awscloudwatch #awscloudwatchinhindi #awscloudwatchlogs #awscloudwatchtutorialinhindi #awsedurekahindi #awsmonitoring #awsmonitoringandreporting #awsmonitoringtools #awstrainingvideosinhindi #awstutorialforbeginnersinhindi #ciscoccna #comptiaa #comptianetwork #comptiasecurity #cybersecurity #edurekahindi #ethicalhacking #it #kubernetes #learnawsinhindi #linux #microsoftaz-900 #microsoftazure #networksecurity #software #windowsserver #ytccon


Photo

What to Do When Building a Windows Server on EC2 https://ift.tt/3362W2C

1. Overview

2. Launching the Windows Server instance
  2.1. Select an AMI and launch the instance
  2.2. Sizing the C drive

3. Initial OS settings for Windows Server
  3.1. Change the Administrator password
  3.2. Create a user other than Administrator
  3.3. Timezone, Language, and Region settings
  3.4. File name extensions setting
  3.5. NTP setting (Amazon Time Sync Service)
  3.6. Change the Computer Name (*)
  3.7. Windows Update

4. Installing and setting up EC2 management tools
  4.1. Download and install the AWS CLI
  4.2. Set up the AWS CLI
  4.3. Download and install EC2Config
  4.4. Install the CloudWatch Agent
  4.5. Set up the CloudWatch Agent (*)

5. OS network and security settings
  5.1. Remote Desktop connection settings (depending on security policy)
  5.2. Network Level Authentication setting (depending on security policy)
  5.3. Set the encryption level to High (depending on security policy)
  5.4. Disable TLS 1.0/1.1 (depending on security policy)

6. Join Active Directory (*)

7. Installing middleware (*)
  7.1. Install middleware
  7.2. Middleware log rotation

8. Rolling out with an AMI

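Overview

Infrastructure engineering starts with building on IaaS! This article summarizes what to do when building the Windows Server OS on an EC2 instance. It covers only the OS baseline and does not include procedures for middleware such as web servers or databases.

This article is never finished: I plan to update it whenever I discover something new about building Windows Server.

Launching the Windows Server instance

Select an AMI and launch the instance

Select an AMI. Choose "Community AMIs" and check "Windows".

To use a Japanese-language OS, search for "Japanese".

Unless you have a specific reason not to, choose an AMI distributed by AWS (provided by Amazon). There are several variants that differ by version and bundled middleware, so choose the one that fits your purpose.

The remaining instance settings are omitted in this article.

Sizing the C drive

The C drive of a Windows Server needs a certain amount of free space. For how to size the C drive, see the following article.

Sizing and extending the C drive on EC2 (Windows) | Oji-Cloud. This article covers extending EC2 disks. The target server OS is Windows Server 2012 R2 (the steps are the same for 2016 and later). Using Microsoft's documentation as an example, it also explains how to size the C drive, a common headache in IaaS design...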

Initial OS settings for Windows Server

Next are the initial OS settings to apply after Windows Server has started.

Change the Administrator password

Change the Administrator password.

Sign out and sign back in to confirm.

Create a user other than Administrator

Create a user other than Administrator.

Add the user to the Administrators group.

Sign out, then sign in as the new user to confirm.

Timezone, Language, and Region settings

Set the time zone (Timezone).

Control Panel\Clock, Language, and Region

Example: UTC

Set the language (Language).

Example: English (US)

Set the Region.

Example: United States

File name extensions setting

Enable the display of file name extensions (File name extensions).

NTP setting (Amazon Time Sync Service)

Configure the Amazon Time Sync Service (server: 169.254.169.123).

    C:\Users\Administrator>w32tm /query /status
    Leap Indicator: 3(last minute has 61 seconds)
    Stratum: 0 (unspecified)
    Precision: -6 (15.625ms per tick)
    Root Delay: 0.0000000s
    Root Dispersion: 0.0000000s
    ReferenceId: 0x00000000 (unspecified)
    Last Successful Sync Time: unspecified
    Source: Local CMOS Clock
    Poll Interval: 9 (512s)

    C:\Users\Administrator>net stop w32time
    The Windows Time service is stopping.
    The Windows Time service was stopped successfully.

    C:\Users\Administrator>w32tm /config /syncfromflags:manual /manualpeerlist:"169.254.169.123"
    The command completed successfully.

    C:\Users\Administrator>w32tm /config /reliable:yes
    The command completed successfully.

    C:\Users\Administrator>net start w32time
    The Windows Time service is starting.
    The Windows Time service was started successfully.

    C:\Users\Administrator>w32tm /query /status
    Leap Indicator: 0(no warning)
    Stratum: 4 (secondary reference - syncd by (S)NTP)
    Precision: -6 (15.625ms per tick)
    Root Delay: 0.0341949s
    Root Dispersion: 7.7766624s
    ReferenceId: 0xA9FEA97B (source IP: 169.254.169.123)
    Last Successful Sync Time: 2/22/2019 10:53:21 AM
    Source: 169.254.169.123
    Poll Interval: 9 (512s)

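Change the Computer Name (*)

Change the Computer Name.

This requires an OS restart, so do it last among the initial OS settings.

Windows Update

Run Windows Update from SSM (Systems Manager)

From SSM (Systems Manager) Run Command, use the following command document to update the SSM Agent.

AWS-UpdateSSMAgent

This is needed because the subsequent Windows Update fails if the SSM Agent is out of date.

From SSM (Systems Manager) Run Command, use the following command document to run Windows Update.

AWS-InstallWindowsUpdates

Action: Install

Allow Reboot: True

Beforehand, confirm that AmazonSSMFullAccess is attached to the EC2 instance's role.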
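The Run Command step above can also be issued from code. The following is a minimal sketch using boto3's `send_command`, not the procedure the article itself follows (the article uses the console). The helper names and the instance ID in the usage comment are hypothetical; the document name and the Action and AllowReboot values are the ones listed above.

```python
from typing import Any, Dict, List


def build_windows_update_command(instance_ids: List[str]) -> Dict[str, Any]:
    """Return keyword arguments for SSM SendCommand that installs Windows updates."""
    if not instance_ids:
        raise ValueError("at least one instance ID is required")
    return {
        "InstanceIds": list(instance_ids),
        "DocumentName": "AWS-InstallWindowsUpdates",
        # SSM expects every parameter value as a list of strings.
        "Parameters": {"Action": ["Install"], "AllowReboot": ["True"]},
    }


def run_windows_update(instance_ids: List[str]) -> str:
    """Send the command and return its command ID (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without AWS access

    ssm = boto3.client("ssm")
    response = ssm.send_command(**build_windows_update_command(instance_ids))
    return response["Command"]["CommandId"]


# Example (hypothetical instance ID):
# command_id = run_windows_update(["i-0123456789abcdef0"])
```

Running AWS-UpdateSSMAgent first, as the article advises, would be a second `send_command` call with that document name and no parameters.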

Installing and setting up EC2 management tools

Download and install the AWS CLI

Download the AWS CLI installer from the following URL. https://s3.amazonaws.com/aws-cli/AWSCLISetup.exe

Install the AWS CLI.

    C:\Users\niikawa>aws --version
    aws-cli/1.16.103 Python/3.6.0 Windows/2012ServerR2 botocore/1.12.93

Set up the AWS CLI

Set up the AWS CLI by following the article below.

Easy AWS CLI installation | Oji-Cloud. Getting started with Linux + AWS CLI: this article summarizes how to install the AWS CLI on Linux and perform the initial setup. The Linux environment is Windows Subsystem for Linux installed on Windows 10. For how to install Windows Subsystem for Linux, see the article below...

Download and install EC2Config

Download the EC2Config installer from the following URL and install it.

https://s3.amazonaws.com/ec2-downloads-windows/EC2Config/EC2Install.zip

Alternatively, install it using SSM (Systems Manager) Run Command.

AWS-UpdateEC2Config

Install the CloudWatch Agent

Download the CloudWatch Agent installer from the following URL and install it.

https://s3.amazonaws.com/amazoncloudwatch-agent/windows/amd64/latest/amazon-cloudwatch-agent.msi

    msiexec /i amazon-cloudwatch-agent.msi

Confirm that the following directory has been created.

C:\Program Files\Amazon\AmazonCloudWatchAgent

Alternatively, install it using SSM (Systems Manager) Run Command.

AWS-ConfigureAWSPackage

Action: Install

Name: AmazonCloudWatchAgent

Beforehand, confirm that AmazonSSMFullAccess is attached to the EC2 instance's role.

Confirm that the following directory has been created.

C:\Program Files\Amazon\AmazonCloudWatchAgent

Set up the CloudWatch Agent (*)

Set up the CloudWatch Agent by following the article below.

[Latest] Metrics and log management with Windows + CloudWatch Agent | Oji-Cloud. How to configure the unified CloudWatch agent on Windows instances. The goals are to collect the logs held by individual Windows servers on EC2 instances and aggregate them in CloudWatch Logs, and to monitor custom metrics for each EC2 instance with CloudWatch. What is "new"...

OS network and security settings

Remote Desktop connection settings (depending on security policy)

Change the setting to allow up to two Remote Desktop (RDP) sessions.

Start the Local Group Policy Editor (gpedit.msc) and open Computer Configuration.

Expand the following items.

Administrative Templates -> Windows Components -> Remote Desktop Services -> Remote Desktop Session Host -> Connections

Set the following parameter to Disabled.

Restrict Remote Desktop Services users to a single Remote Desktop Services Session

Network Level Authentication setting (depending on security policy)

Configure this by following the article below.

Configuring Network Level Authentication on Windows Server | Oji-Cloud. Windows Server security hardening: Network Level Authentication. This article introduces a security hardening setting for Windows Server: changing the authentication method used for RDP (Remote Desktop) to Network Level Authentication (NLA). With Network Level Authentication, the connecting...

Set the encryption level to High (depending on security policy)

Configure this by following the article below.

Setting the Windows Server encryption level to High | Oji-Cloud. Windows Server security hardening: setting the encryption level to High. When connecting over RDP (Remote Desktop), the data exchanged between server and client is encrypted. However, if intercepted, it could be decrypted...

Disable TLS 1.0/1.1 (depending on security policy)

Configure this by following the article below.

Disabling TLS 1.0/1.1 on Windows Server | Oji-Cloud. Windows Server security hardening: disabling TLS 1.0/1.1. This disables TLS 1.0 and TLS 1.1, the older versions of TLS (Transport Layer Security), to avoid the vulnerabilities in those versions. (Example: TLS 1.0...

Join Active Directory (*)

If the system uses an AD service, join the Windows Server to AD.

Join AD by following the article below.

An easy way to join Windows Server to AD | Oji-Cloud. AWS makes it easy to use an AD service. This post describes the steps to join a Windows Server to a managed Microsoft Active Directory. The OS version is Windows Server 2012 R2. Microsoft AD...

Installing middleware (*)

Install middleware

Install middleware and tools according to the system requirements.

Examples: Microsoft .NET Framework, IIS, databases, Java, and so on.

Middleware log rotation

Build in log rotation and periodic deletion for middleware logs according to the system requirements.

Renaming and deleting logs with forfiles on Windows | Oji-Cloud. This creates the equivalent of Linux's logrotate command on Windows Server. The Windows forfiles command behaves much like the Linux find command. Create a bat file that uses forfiles and run it periodically with the scheduler. forfiles usage, syntax: forfiles [/c &...

Rolling out with an AMI

If you take an AMI after the build above and roll it out to other servers or scale out, sysprep (generalize) the Windows Server before taking the AMI.

Sysprep the Windows Server by following the article below. Run sysprep using either the EC2Config service or SSM (Systems Manager) Run Command.

Items that will likely need to be changed per server after rollout are marked with (*).

How to sysprep EC2 Windows from SSM | Oji-Cloud. Running sysprep from SSM (CLI) without logging in to Windows. This article shows how to use SSM (Systems Manager) to sysprep a Windows Server on EC2. Rather than running the sysprep command directly, it uses the AWS EC2Config service...

Original article

"What to Do When Building a Windows Server on EC2"

November 20, 2019 at 02:00PM


Text

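Building a Serverless Static Content Delivery System with Scheduled Publishing

Hello, this is Numazawa from the Infrastructure Group. As the title says, I built a serverless system for delivering static content that lets you specify the publication date and time.

All resources are in the Tokyo Region.

Architecture diagram

First, the architecture diagram.

How scheduled publishing works

S3 has no setting or mechanism for making objects public at a given time. To achieve this, I use Lambda together with S3 object keys so that the system behaves as if a publication date and time had been specified. This article schedules down to the minute, but you can customize that to your requirements.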

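S3

Prepare a "private S3 bucket" and a "public S3 bucket".

Directly under the root of the private S3 bucket, create a directory (key prefix) named in yyyymmddHHMM format for the date and time at which you want to publish.

For example, to publish at 00:00 on January 1, 2020, use 202001010000.

Upload the files to be published under that directory.

As an aside, with careful bucket policies you could do this without separate private and public buckets, but I separated them here. A misconfigured bucket policy can leave the bucket inoperable by anyone except root, or expose a directory you meant to keep private and leak information before publication.

In any case, having two buckets makes no difference in cost, so I used two buckets to keep the configuration as simple as possible.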
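The directory naming rule above can be sketched in a few lines of Python. This is an illustrative helper, not part of the original system: the function names are hypothetical, and it assumes publication times are interpreted in Japan Standard Time and passed in as timezone-aware datetimes.

```python
from datetime import datetime, timedelta, timezone

# Assumption: publication times are expressed in Japan Standard Time (UTC+9).
JST = timezone(timedelta(hours=9), "JST")


def publish_prefix(publish_at: datetime) -> str:
    """Return the yyyymmddHHMM directory prefix (with trailing slash) for a publish time."""
    return publish_at.astimezone(JST).strftime("%Y%m%d%H%M") + "/"


def scheduled_key(publish_at: datetime, relative_path: str) -> str:
    """Return the key under which a file should be uploaded to the private bucket."""
    return publish_prefix(publish_at) + relative_path.lstrip("/")


# 00:00 on January 1, 2020 (JST) maps to the 202001010000/ directory.
new_year = datetime(2020, 1, 1, 0, 0, tzinfo=JST)
print(scheduled_key(new_year, "hoge/bbb.html"))  # 202001010000/hoge/bbb.html
```

Converting with `astimezone` means a time supplied in UTC still lands in the directory for the corresponding JST minute.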

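CloudFront

Content is delivered through CloudFront with the public S3 bucket as the origin.

This mechanism also allows published files to be overwritten, so content may change every minute. For that reason, it is a good idea to set the CloudFront Default TTL to 60 seconds.

To prevent direct access to S3 and force all requests through CloudFront, I also recommend configuring an Origin Access Identity.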

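Lambda

Prepare a publishing Lambda function that copies files from the private S3 bucket to the public S3 bucket (the source code appears later). Roughly, this function does the following.

Check whether a directory matching the current year, month, day, hour, and minute exists directly under the private S3 bucket.

If it exists, copy all files under it to the public bucket.

When copying, remove the yyyymmddHHMM prefix from the key.

Copy the directory structure as is.

For example, if the 202001010000 directory exists, the copy looks like this:

    s3://private-s3-bucket/202001010000/aaa.html
    s3://private-s3-bucket/202001010000/hoge/bbb.html
    s3://private-s3-bucket/202001010000/hoge/fuga/ccc.html
    ↓
    s3://public-s3-bucket/aaa.html
    s3://public-s3-bucket/hoge/bbb.html
    s3://public-s3-bucket/hoge/fuga/ccc.html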
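The key mapping shown above can be expressed as a small pure function. This is a sketch of one way to do it, with a hypothetical function name; it strips only a leading twelve-digit prefix, which is a slightly stricter rule than a plain string replacement.

```python
import re

# yyyymmddHHMM followed by a slash, anchored at the start of the key.
_PREFIX = re.compile(r"^\d{12}/")


def to_public_key(private_key: str) -> str:
    """Strip the leading yyyymmddHHMM/ prefix from a private-bucket key."""
    public_key, count = _PREFIX.subn("", private_key, count=1)
    if count == 0:
        raise ValueError(f"key has no yyyymmddHHMM/ prefix: {private_key!r}")
    return public_key


for key in (
    "202001010000/aaa.html",
    "202001010000/hoge/bbb.html",
    "202001010000/hoge/fuga/ccc.html",
):
    print(key, "->", to_public_key(key))
```

Anchoring the pattern at the start of the key means a path that happens to contain the same twelve digits deeper in its structure is left untouched.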

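In addition, configure the Lambda function as follows.

Attach the AWSLambdaBasicExecutionRole and AmazonS3FullAccess managed policies to the IAM role.

AmazonS3FullAccess is very permissive, however, so for production use I recommend a custom policy with appropriately narrowed permissions.

The appropriate timeout depends on the number of files to copy, so set it according to your requirements.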
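As an illustration of what a narrowed custom policy might look like, the sketch below builds a policy document covering only the S3 calls this function makes: listing the private bucket, reading objects from it, and writing and reading back objects in the public bucket. It is an assumption-laden starting point rather than a vetted policy; the function name is hypothetical, and buckets that use features such as KMS encryption or object tagging may need further actions.

```python
import json
from typing import Any, Dict


def build_publish_policy(private_bucket: str, public_bucket: str) -> Dict[str, Any]:
    """Return an IAM policy document limited to the S3 actions the publisher needs."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # list the scheduled directory in the private bucket
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{private_bucket}",
            },
            {   # read source objects for the copy
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{private_bucket}/*",
            },
            {   # write the copy, then read it back to compare ETags
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject"],
                "Resource": f"arn:aws:s3:::{public_bucket}/*",
            },
        ],
    }


print(json.dumps(build_publish_policy("unpublish_bucket", "publish_bucket"), indent=2))
```

AWSLambdaBasicExecutionRole would still be attached alongside this policy so the function can write its logs.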

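CloudWatch Events

Configure CloudWatch Events to invoke the publishing Lambda function every minute.

Specify the cron expression * * * * ? *.

For details on cron expressions, see the AWS documentation on schedule expressions.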
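For reference, the every-minute schedule could be created from code roughly as follows. This is a sketch using boto3's `put_rule` and `put_targets`; the rule name, target ID, and helper names are hypothetical, and the article itself does not say how the rule was created. When the expression is passed to the API it is wrapped as `cron(...)`.

```python
from typing import Any, Dict

# Six fields: minutes, hours, day-of-month, month, day-of-week, year.
EVERY_MINUTE = "cron(* * * * ? *)"


def build_rule_params(rule_name: str = "publish-every-minute") -> Dict[str, Any]:
    """Return keyword arguments for CloudWatch Events PutRule."""
    return {"Name": rule_name, "ScheduleExpression": EVERY_MINUTE, "State": "ENABLED"}


def create_rule(lambda_arn: str, rule_name: str = "publish-every-minute") -> None:
    """Create the rule and point it at the Lambda function (requires boto3 and credentials)."""
    import boto3  # imported lazily so the parameter builder works without AWS access

    events = boto3.client("events")
    events.put_rule(**build_rule_params(rule_name))
    events.put_targets(Rule=rule_name, Targets=[{"Id": "publish-lambda", "Arn": lambda_arn}])
```

The Lambda function also needs a resource-based permission that allows the events service to invoke it; the console adds this automatically when the trigger is configured there, but a scripted setup has to add it separately.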

Lambda function source code

I prepared source code for Python 3.7. What it does is simple, so feel free to rewrite it in whichever language you prefer.

It should work if you change only the values of unpublish_bucket and publish_bucket.

import boto3
from datetime import datetime, timedelta, timezone

JST = timezone(timedelta(hours=+9), 'JST')
s3 = boto3.client('s3')

unpublish_bucket = 'unpublish_bucket'  # private S3 bucket
publish_bucket = 'publish_bucket'      # public S3 bucket


def lambda_handler(event, context):
    # Take the current time once so both strings refer to the same minute
    current = datetime.now(JST)
    now = "{0:%Y%m%d%H%M}".format(current)            # current date and time (to the minute)
    now_format = "{0:%Y/%m/%d %H:%M}".format(current)  # same value, formatted for log output

    unpublished_list = s3.list_objects(
        Bucket=unpublish_bucket,
        Prefix=now + '/'
    )
    if 'Contents' not in unpublished_list:
        print(f'[INFO] No files are scheduled for publication at {now_format}.')
        return

    flag = False
    for obj in unpublished_list['Contents']:
        unpublished_path = obj['Key']
        # Skip the directory placeholder object itself
        if unpublished_path.endswith('/'):
            continue
        publish_path = unpublished_path.replace(now + '/', '')
        copy_result_etag = publish_file(publish_path, unpublished_path)
        get_result_etag = publish_file_check(publish_path)
        # The copy is treated as successful when both ETags match
        if copy_result_etag == get_result_etag:
            print(f'[SUCCESS] Copied s3://{unpublish_bucket}/{unpublished_path} -> s3://{publish_bucket}/{publish_path}.')
            flag = True
        else:
            print(f'[FAILED] Failed to copy s3://{unpublish_bucket}/{unpublished_path} -> s3://{publish_bucket}/{publish_path}.')
    if not flag:
        print(f'[INFO] The directory for {now_format} (s3://{unpublish_bucket}/{now}/) exists, but no files were published from it.')


def publish_file(publish_path, unpublished_path):
    result = s3.copy_object(
        Bucket=publish_bucket,
        Key=publish_path,
        CopySource={
            'Bucket': unpublish_bucket,
            'Key': unpublished_path
        }
    )
    if 'CopyObjectResult' in result:
        return result['CopyObjectResult']['ETag']
    else:
        return None


def publish_file_check(publish_path):
    result = s3.get_object(
        Bucket=publish_bucket,
        Key=publish_path
    )
    if 'ETag' in result:
        return result['ETag']
    else:
        return None
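One limitation worth knowing: a single S3 list call returns at most 1,000 keys, so a scheduled directory holding more files than that would be only partly published by the code above. The following is a sketch of one way around it, using the list_objects_v2 paginator that boto3 S3 clients provide. The helper name iter_scheduled_keys is hypothetical, and the client is passed in as an argument so that the function has no module-level dependency.

```python
def iter_scheduled_keys(s3_client, bucket: str, prefix: str):
    """Yield every file key under prefix, following pagination."""
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # A page without 'Contents' simply holds no objects
        for obj in page.get("Contents", []):
            key = obj["Key"]
            if not key.endswith("/"):  # skip directory placeholder objects
                yield key
```

Inside lambda_handler, the loop over unpublished_list['Contents'] could then iterate over iter_scheduled_keys(s3, unpublish_bucket, now + '/') instead.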

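Notes

As mentioned above, take care with the permissions granted to the IAM role for the Lambda

One minute is the smallest unit for CloudWatch Events

The Lambda does not start at exactly second 0 of the minute

Therefore, a publication time specified down to the second cannot be achieved with the approach in this article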

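Cost

You may feel that most Lambda invocations come up empty and waste money, but the Lambda free tier is generous and this mechanism alone is unlikely to cause a runaway cloud bill, so you can rest easy on that point.

Because nothing billed by the hour, such as EC2, is used, the main costs apart from pay-as-you-go traffic charges should be roughly the following.

Lambda usage beyond the free tier

Data ingested into and stored in CloudWatch Logs (the Lambda logs)

S3 storage

You can cut costs further by tuning the Lambda code or by using infrequent-access storage for the private S3 bucket.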

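Other considerations

Using an interval other than every minute, such as hourly

For example, to schedule publication by the hour, modify the mechanism in this article as follows.

When uploading, create the prefix as a directory in yyyymmddHH format indicating the year, month, day, and hour at which you want to publish

Specify the cron expression 0 * * * ? * in CloudWatch Events

Change the format used to obtain the current time in the Lambda code to one that stops at the hour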
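The format change in the last item can be sketched as follows. The names PREFIX_FORMATS and current_prefix are hypothetical; the two format strings correspond to the yyyymmddHHMM and yyyymmddHH directory names described in this article.

```python
from datetime import datetime, timedelta, timezone

JST = timezone(timedelta(hours=9), "JST")

# Directory-name format for each publication granularity
PREFIX_FORMATS = {
    "minute": "%Y%m%d%H%M",  # yyyymmddHHMM
    "hour": "%Y%m%d%H",      # yyyymmddHH
}


def current_prefix(now: datetime, granularity: str = "minute") -> str:
    """Return the directory name the Lambda should look for at time now."""
    return now.astimezone(JST).strftime(PREFIX_FORMATS[granularity])


moment = datetime(2020, 1, 1, 0, 30, tzinfo=JST)
print(current_prefix(moment, "minute"))  # prints 202001010030
print(current_prefix(moment, "hour"))    # prints 2020010100
```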

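While you are at it, adjusting the CloudFront cache duration to match the publication interval also improves cache efficiency.

Making an unpublish date and time schedulable

The mechanism in this article does not go as far as scheduling an unpublish time (deleting files from the public S3 bucket). One option is to create a second CloudWatch Events and Lambda pair for that purpose.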

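Building in recovery for Lambda errors

This article does not consider recovery when an error occurs in the Lambda. If a copy fails, content that should have been published will not be, which could become an incident affecting end users and the business.

The Lambda code prints [SUCCESS] and [FAILED] to CloudWatch Logs, so you can reduce the likelihood of an incident by monitoring for those strings with CloudWatch or a similar tool and having detection trigger a recovery mechanism.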
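One way to monitor for the [FAILED] string is a CloudWatch Logs metric filter, which turns matching log lines into a metric that an alarm can watch. The sketch below uses the boto3 CloudWatch Logs put_metric_filter call; the filter name, metric name, and namespace are illustrative assumptions, and the client is passed in as an argument.

```python
def create_failed_copy_filter(logs_client, log_group_name: str) -> None:
    """Count log lines containing [FAILED] as a custom metric."""
    logs_client.put_metric_filter(
        logGroupName=log_group_name,
        filterName="publish-copy-failed",          # hypothetical name
        # Double quotes make the pattern match the literal term [FAILED]
        filterPattern='"[FAILED]"',
        metricTransformations=[
            {
                "metricName": "PublishCopyFailed",      # hypothetical name
                "metricNamespace": "ScheduledPublish",  # hypothetical namespace
                "metricValue": "1",                     # add 1 per matching line
            }
        ],
    )
```

With a real client this would be called as create_failed_copy_filter(boto3.client("logs"), "/aws/lambda/<function name>"), after which a CloudWatch alarm on the resulting metric can trigger the recovery step.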

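A simple recovery method that comes to mind is invoking the Lambda once more. Alternatively, a phone notification through a service such as Twilio could alert an operator, who then manually copies the content from the private S3 bucket to the public S3 bucket.

Afterword

In this article, I built a serverless static content delivery system with schedulable publication times.

When using AWS, rather than casually reaching for EC2 and tinkering with it, try to design around managed services with the mindset of leaving as much server management as possible to AWS.

Doing so dramatically lowers not only the actual infrastructure cost but also the human cost of building and operating the system, and frees engineers to spend their time on more productive, higher-value work, so please give it some thought.

By the way, this mechanism took about three hours to build. Writing this article took longer.

And on that note, I will take my leave.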


Text

2019/02/12-17

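*Going this far? Microsoft closes in fast on AWS: where the intensifying cloud war is headed https://www.itmedia.co.jp/enterprise/articles/1902/13/news042.html
>What made me think "Huh?" was that Takuya Hirano, President of Microsoft Japan, said of the reason:
>
> "The biggest factor is the three-year Extended Security Updates program."
>
> This program continues to provide security updates for three years after support ends. It is free on Azure and paid in other environments.
>
>That is what was reported.

*Lessons from unauthorized access: what is Wazuh, the open-source security audit platform GMO Pepabo deployed on more than 500 servers? https://www.atmarkit.co.jp/ait/articles/1902/18/news012.html

*Best practices for configuring parameters for Amazon RDS for MySQL, part 3: Parameters related to security, operational manageability, and connectivity timeout https://aws.amazon.com/jp/blogs/news/best-practices-for-configuring-parameters-for-amazon-rds-for-mysql-part-3-parameters-related-to-security-operational-manageability-and-connectivity-timeout/

*TOTO replaces NTT Com's cloud with AWS https://tech.nikkeibp.co.jp/atcl/nxt/column/18/00001/01659/
>The company had only just finished moving the execution environment for its business systems from its own servers to the NTT Communications private cloud in October 2018. It nevertheless went ahead with the move to AWS in order to strengthen server redundancy and disaster response with BCP (business continuity planning) in mind, and to allow flexible use of AI (artificial intelligence) and IoT (Internet of Things) services.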

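*On the need for DNS over HTTPS https://qiita.com/migimigi_/items/1ca81163a79f4e154cdf
>The DNS name resolution that precedes HTTPS communication is not encrypted, so an eavesdropper may learn the hostname being accessed and a spoofer may return a forged response.

>Tampering and spoofing can currently be addressed, but based on the SNI feature used when an HTTPS connection is established, it is still possible to
>
> - eavesdrop and learn which domain's website the user accessed
> - learn the domain the user is trying to access and forcibly cut the connection

>Because the weakness of SNI was exploited in an easy-to-understand attack, namely government censorship, servers and clients that support Encrypted SNI (and DNS over HTTPS) will probably become widespread.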

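*The challenges and struggles of AbemaTV as it enters its third year https://speakerdeck.com/miyukki/the-challenge-and-anguish-of-abematv-celebrating-the-third-anniversary
> - On-premises was rejected because it offered no advantage other than cost
> - That left a choice between AWS and GCP, where the team already had experience

>Why GCP was chosen
> - Feature-rich L7 load balancer
> - GKE/Kubernetes
> - Low cost for network bandwidth
> - Rich log collection and aggregation services such as Stackdriver Logging and BigQuery

*Firstserver to be dissolved. Absorption-type merger by IDC Frontier announced. Existing services will continue https://www.publickey1.jp/blog/19/idc_3.html

*I tried saving logs from an EC2 instance to CloudWatch Logs and an S3 bucket https://dev.classmethod.jp/cloud/aws/ec2-to-cwl-s3/
>I tried an architecture that keeps logs from applications running on EC2 in CloudWatch Logs temporarily and stores them in an S3 bucket for the long term.

*Collecting metrics and logs from Amazon EC2 instances and on-premises servers with the CloudWatch agent https://docs.aws.amazon.com/ja_jp/AmazonCloudWatch/latest/monitoring/Install-CloudWatch-Agent.html
> - Collect more system-level metrics from Amazon EC2 instances, including in-guest metrics, in addition to the metrics for EC2 instances.
> - Collect system-level metrics from on-premises servers.
> - Collect logs from Amazon EC2 instances and on-premises servers running either Linux or Windows Server.
> - Retrieve custom metrics from your applications or services using the StatsD and collectd protocols.
>
>You can store and view the metrics that you collect with the CloudWatch agent in CloudWatch just as you can with any other CloudWatch metrics.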

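*8-character Windows passwords found to be crackable in just two and a half hours
>It is reported that combining the latest NVIDIA GPU, the GeForce RTX 2080 Ti, with the open-source password cracking tool "hashcat" makes it possible to crack an 8-character Windows password in just two and a half hours.

>The brute-force attack that Tinker carried out is effective against organizations that use Windows and Active Directory with NTLM authentication. NTLM is an old Windows authentication protocol that has since been superseded by the newer Kerberos, but according to Tinker, NTLM is still used when Windows passwords are stored locally or in the database of an Active Directory domain controller.

>Tinker therefore says that when setting a password, "it is a good idea to combine five random words." For example, a password made of five arbitrarily combined words such as "phonecoffeesilverrisebaseball" is stronger than a complex 8-character password such as "Lm7xR0w". Enabling two-step verification is also said to be important for strengthening security.

*Malware hidden in the Intel CPU security mechanism "SGX" could evade detection by security software; a proof-of-concept program has already been published https://gigazine.net/news/20190214-intel-sgx-vulnerability/

*A tool that lets anyone easily install 64-bit ARM Windows 10 on a Raspberry Pi 3 has been released https://gigazine.net/news/20190214-raspberry-pi-run-windows-10/
>WoA Installer is designed to be very simple, with no need to configure drivers, UEFI, and so on. WoA Installer is only a tool that assists deployment, but the user reportedly just needs to obtain the 64-bit ARM Windows 10 image from the Microsoft servers, download the core package (a set of binaries), and run the installer.

*[Report] Breaking away from meaningless alerts: start modern monitoring with Datadog #devsumi #devsumiB https://dev.classmethod.jp/cloud/developers-summit-2019-15-b-7/
>Types of alerts
> - Record: low urgency
> - Notification: medium urgency
> - Page: high urgency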


Text

What Is AWS CloudTrail? Features and Benefits

AWS CloudTrail

Track user activity and API usage on AWS and in hybrid and multicloud environments.

What is AWS CloudTrail?

AWS CloudTrail records activity in your AWS account: who accessed or changed which resources, and when. It captures actions taken through the AWS Management Console, the CLI, the SDKs, and the APIs.

CloudTrail can be used to:

Track Activity: Find out who did what in your AWS environment.

Boost Security: Identify unusual or unwanted activity.

Audit and Compliance: Maintain a record for regulatory requirements and audits.

Troubleshoot Issues: Examine logs to investigate problems.

CloudTrail saves the logs to an Amazon S3 bucket, so they are easy to review or analyze later.

Why AWS CloudTrail?

AWS CloudTrail is a service that enables governance, compliance, operational auditing, and risk auditing of your AWS account.

Benefits

Aggregate and consolidate multisource events

You may use CloudTrail Lake to ingest activity events from AWS as well as sources outside of AWS, such as other cloud providers, in-house apps, and SaaS apps that are either on-premises or in the cloud.

Immutably store audit-worthy events

Audit-worthy events can be stored immutably in AWS CloudTrail Lake, which makes it easier to produce the audit reports required by internal policies and external regulations.

Derive insights and analyze unusual activity

Use SQL-based queries or Amazon Athena to detect unauthorized access and analyze activity logs. For people who are less experienced in writing SQL, natural language query generation powered by generative AI makes this much simpler. Respond with rules-based Amazon EventBridge alerts and automated workflows.

Use cases

Compliance & auditing

Use CloudTrail logs to demonstrate compliance with SOC, PCI, and HIPAA rules and shield your company from fines.

Security

By logging user and API activity in your AWS accounts, you can strengthen your security posture. Network activity events for VPC endpoints are another way to improve your data perimeter.

Operations

Use SQL-based queries, natural language query generation, or Amazon Athena to answer operational questions, aid debugging, and investigate problems. To further streamline your investigations, use the AI-powered query result summarization capability (in preview) to summarize query results. Use CloudTrail Lake dashboards to see trends.

Features of AWS CloudTrail

Auditing, security monitoring, and operational troubleshooting are made possible via AWS CloudTrail. CloudTrail logs API calls and user activity across AWS services as events. “Who did what, where, and when?” can be answered with the aid of CloudTrail events.
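To make the "who did what, where, and when" idea concrete, here is a small sketch that pulls those four answers out of a single CloudTrail event record. The field names (userIdentity, eventSource, eventName, awsRegion, eventTime) are standard CloudTrail record fields; the sample record itself is invented for illustration, and summarize_event is a hypothetical helper.

```python
def summarize_event(record: dict) -> dict:
    """Reduce a CloudTrail event record to who / what / where / when."""
    identity = record.get("userIdentity", {})
    return {
        "who": identity.get("arn") or identity.get("type", "unknown"),
        "what": f'{record.get("eventSource", "?")}:{record.get("eventName", "?")}',
        "where": record.get("awsRegion", "?"),
        "when": record.get("eventTime", "?"),
    }


# Invented sample record, trimmed to the fields used above
sample = {
    "eventTime": "2024-01-01T00:00:00Z",
    "eventSource": "s3.amazonaws.com",
    "eventName": "CreateBucket",
    "awsRegion": "us-east-1",
    "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::123456789012:user/alice"},
}
print(summarize_event(sample))
```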

CloudTrail records four types of events:

Management events capture control plane actions on resources, such as creating or deleting Amazon Simple Storage Service (S3) buckets.

Data events capture data plane actions within a resource, such as reading or writing an Amazon S3 object.

Network activity events (in preview) capture actions made from a private VPC to an AWS service through VPC endpoints, including AWS API calls that were denied access.

Insights events help AWS users identify and respond to unusual activity associated with API calls and API error rates by continuously analyzing CloudTrail management events.

Trails of AWS CloudTrail

Overview

Trails capture a record of AWS account activity and deliver and store the events in Amazon S3, with optional delivery to Amazon CloudWatch Logs and Amazon EventBridge. You can feed these events into your security monitoring solutions. To search and analyze the logs that CloudTrail captures, you can use your own or third-party solutions, or tools such as Amazon Athena. You can create trails for a single AWS account or, by using AWS Organizations, for multiple AWS accounts.

Storage and monitoring

By establishing trails, you can send your AWS CloudTrail events to S3 and, if desired, to CloudWatch Logs. You can export and save events as you desire after doing this, which gives you access to all event details.

Encrypted activity logs

You may check the integrity of the CloudTrail log files that are kept in your S3 bucket and determine if they have been altered, removed, or left unaltered since CloudTrail sent them there. Log file integrity validation is a useful tool for IT security and auditing procedures. By default, AWS CloudTrail uses S3 server-side encryption (SSE) to encrypt all log files sent to the S3 bucket you specify. If required, you can optionally encrypt your CloudTrail log files using your AWS Key Management Service (KMS) key to further strengthen their security. Your log files are automatically decrypted by S3 if you have the decrypt permissions.

Multi-Region

AWS CloudTrail may be set up to record and store events from several AWS Regions in one place. This setup ensures that all settings are applied uniformly to both freshly launched and existing Regions.

Multi-account

CloudTrail may be set up to record and store events from several AWS accounts in one place. This setup ensures that all settings are applied uniformly to both newly generated and existing accounts.

AWS CloudTrail pricing

AWS CloudTrail: Why Use It?

By tracking user activity and API calls, AWS CloudTrail makes audits, security monitoring, and operational troubleshooting possible.

AWS CloudTrail Insights

By continuously analyzing CloudTrail management events, AWS CloudTrail Insights events help AWS users identify and respond to unusual activity associated with API calls and API error rates. CloudTrail Insights analyzes your normal patterns of API call volume and API error rates, known as the baseline, and generates Insights events when either one deviates from the normal pattern. To detect anomalous behavior and unusual activity, you can enable CloudTrail Insights on your trails or event data stores.

Read more on Govindhtech.com

#AWSCloudTrail#multicloud#AmazonS3bucket#SaaS#generativeAI#AmazonS3#AmazonCloudWatch#AWSKeyManagementService#News#Technews#technology#technologynews


Text

Explore Our 100% Job Oriented Intensive Program by Quality Thought (Transforming Dreams ! Redefining the Future !) What is a Core node? 🌐Register for the Course: https://www.qualitythought.in/registernow 📲 contact: 99634 86280 📩 Telegram Updates: https://t.me/QTTWorld 📧 Email: [email protected] Facebook: https://www.facebook.com/QTTWorld/ Instagram: https://www.instagram.com/qttechnology/ Twitter: https://twitter.com/QTTWorld Linkedin: https://in.linkedin.com/company/qttworld ℹ️ More info: https://www.qualitythought.in/

#aws#awscloud#awscloudcomputing#awstraining#awscourse#awscloudtraining#awscloudengineer#cloud#awscloudcourse#awsadmin#amazonwebservices#amazoncloud#amazon#autoscaling#AWSDATAENGINEER#cooldownperiod#AmazonCloudWatch#qualitythoughttechnologiesreviews#qualitythoughttechnologies#qualitythought#Qtt


Text

Amazon CloudWatch: The Solution For Real-Time Monitoring

What is Amazon CloudWatch?

Amazon CloudWatch allows you to monitor your Amazon Web Services (AWS) apps and resources in real time. CloudWatch may be used to collect and track metrics, which are characteristics you can measure for your resources and apps.

Every AWS service you use has metrics automatically displayed on the CloudWatch home page. Additionally, you may design your own dashboards to show analytics about your own apps as well as bespoke sets of metrics of your choosing.

You can create alarms that watch metrics and, when a threshold is crossed, send notifications or automatically change the resources you are monitoring. For instance, you can monitor the CPU utilization and disk reads and writes of your Amazon EC2 instances, and then use that data to decide whether to launch additional instances to handle increased load. To save money, you can also use this data to stop under-used instances.
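As a hedged sketch of such an alarm, the function below calls the boto3 CloudWatch put_metric_alarm API to watch the average CPU utilization of one EC2 instance and notify an SNS topic when it stays above a threshold. The alarm name, the 80 percent threshold, the two five-minute evaluation periods, and the function name are all illustrative assumptions; the client is passed in as an argument.

```python
def create_cpu_alarm(cloudwatch_client, instance_id: str, sns_topic_arn: str,
                     threshold: float = 80.0) -> None:
    """Create an alarm on average CPU utilization for one EC2 instance."""
    cloudwatch_client.put_metric_alarm(
        AlarmName=f"high-cpu-{instance_id}",   # hypothetical naming scheme
        Namespace="AWS/EC2",
        MetricName="CPUUtilization",
        Dimensions=[{"Name": "InstanceId", "Value": instance_id}],
        Statistic="Average",
        Period=300,                            # evaluate 5-minute averages
        EvaluationPeriods=2,                   # two periods in a row must breach
        Threshold=threshold,
        ComparisonOperator="GreaterThanThreshold",
        AlarmActions=[sns_topic_arn],          # notify this SNS topic
    )
```

With a real client, this would be invoked as create_cpu_alarm(boto3.client("cloudwatch"), "<instance id>", "<SNS topic ARN>").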

CloudWatch gives you system-wide insight into operational health, application performance, and resource usage.

How Amazon CloudWatch works

In essence, Amazon CloudWatch is a metrics repository. An AWS service such as Amazon EC2 puts metrics into the repository, and you retrieve statistics based on those metrics. If you put your own custom metrics into the repository, you can retrieve statistics on them as well.
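Putting a custom metric into the repository can be sketched with the boto3 CloudWatch put_metric_data call. The namespace MyApp, the metric name QueueDepth, and the function name are hypothetical examples; the client is passed in as an argument.

```python
def publish_queue_depth(cloudwatch_client, depth: int) -> None:
    """Publish one data point of a custom metric."""
    cloudwatch_client.put_metric_data(
        Namespace="MyApp",                 # hypothetical custom namespace
        MetricData=[
            {
                "MetricName": "QueueDepth",  # hypothetical metric name
                "Value": float(depth),
                "Unit": "Count",
            }
        ],
    )
```

Once data points exist, statistics such as the average or maximum over a period can be retrieved for this metric in the same way as for metrics published by AWS services.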

Metrics may be used to compute statistics, and the CloudWatch interface can then display the data graphically.

You can configure alarm actions to stop, start, or terminate an Amazon EC2 instance when certain criteria are met. You can also create alarms that initiate Amazon EC2 Auto Scaling and Amazon Simple Notification Service (Amazon SNS) actions on your behalf. See Alarms for further details on setting up CloudWatch alarms.

AWS Cloud computing resources are housed in highly available data center facilities. To provide additional scalability and reliability, each data center facility is located in a specific geographic area, known as a Region. Each Region is designed to be completely isolated from the others to achieve the greatest possible failure isolation and stability. Metrics are stored separately in each Region, but you can use the CloudWatch cross-Region functionality to aggregate statistics from different Regions.

Why Use CloudWatch?

A service called Amazon CloudWatch keeps an eye on apps, reacts to changes in performance, maximizes resource use, and offers information on the state of operations. CloudWatch provides a consistent picture of operational health, enables users to create alarms, and automatically responds to changes by gathering data from various AWS services.

Advantages Of Amazon CloudWatch

Visualize and analyze your data with end-to-end observability

Utilize robust visualization tools to gather, retrieve, and examine your resource and application data.

Operate efficiently with automation

Utilize automated actions and alerts that are programmed to trigger at preset thresholds to enhance operational effectiveness.

Quickly obtain an integrated view of your AWS or other resources

Connect with over 70 AWS services with ease for streamlined scalability and monitoring.

Proactively monitor and get actionable insights to enhance end user experiences

Use relevant information from your CloudWatch dashboards’ logs and analytics to troubleshoot operational issues.

Amazon CloudWatch Use cases

Monitor application performance

To identify and fix the underlying cause of performance problems with your AWS resources, visualize performance statistics, generate alarms, and correlate data.

Perform root cause analysis

To expedite debugging and lower the total mean time to resolution, examine metrics, logs, log analytics, and user requests.

Optimize resources proactively

By establishing actions that take place when thresholds are reached according to your requirements or machine learning models, you may automate resource planning and save expenses.

Test website impacts

By looking at screenshots, logs, and web requests at any point in time, you can determine exactly when your website was affected and for how long.

Read more on Govindhtech.com

#AmazonCloudWatch#CloudWatch#AWSservice#AmazonEC2#EC2instance#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech


Text

Amazon CloudWatch Application Signals For AWS Lambda

Use CloudWatch Application Signals to monitor the performance of serverless applications built with AWS Lambda.

Amazon CloudWatch Application Signals, an AWS built-in application performance monitoring (APM) solution, was introduced in November 2023 to address the challenge of monitoring the performance of distributed systems for applications running on Amazon EKS, Amazon ECS, and Amazon EC2. Application Signals automatically correlates telemetry across metrics, traces, and logs, which speeds up troubleshooting and reduces application disruption. Its integrated experience for analyzing performance in the context of your applications lets you focus on the applications that support your most critical business functions.

CloudWatch Application Signals for Lambda

To remove the manual setup and performance overhead involved in assessing application health for Lambda functions, AWS has announced that Application Signals for AWS Lambda is now available. With CloudWatch Application Signals for Lambda, you can collect application golden metrics: the volume of incoming and outgoing requests, latency, faults, and errors.

By abstracting away the complexity of the underlying infrastructure, AWS Lambda frees you from worrying about server health and lets you concentrate on building your application. You can then focus on monitoring the performance and health of your applications, which is essential to running them at peak performance and availability. Doing so requires deep visibility into performance insights for your critical business operations and application programming interfaces (APIs), including transaction volume, latency spikes, availability drops, and errors.

In the past, finding the root cause of anomalies meant spending significant time correlating disparate logs, metrics, and traces across several tools, which increased mean time to recovery (MTTR) and operational costs. Building your own APM solution with custom code or open source (OSS) libraries was also time-consuming, complex, and costly to operate, and it often led to longer cold start times and deployment problems when managing large fleets of Lambda functions. With CloudWatch Application Signals, you can now monitor and troubleshoot the health and performance of serverless applications without manual instrumentation or code changes from your application developers.

How it works

With the pre-built, standardized dashboards in CloudWatch Application Signals, you can drill into performance metrics for critical business operations and APIs and quickly find the root cause of performance anomalies. The dashboards also help you visualize the application topology, which shows how the function interacts with its dependencies. To monitor the specific operations that matter most to you, you can also define Service Level Objectives (SLOs) on your applications. An example of an SLO is a target that a webpage should render within 2000 ms 99.9 percent of the time over a rolling 28-day period.
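To get a feel for what a 99.9 percent target means, the following arithmetic sketch converts a target and a rolling window into an error budget expressed in minutes. It assumes a simple time-based reading of the objective, which is an illustration rather than a description of how Application Signals evaluates SLOs; the function name is hypothetical.

```python
def error_budget_minutes(target_percent: float, window_days: int) -> float:
    """Minutes within the window during which the objective may be missed."""
    window_minutes = window_days * 24 * 60
    return window_minutes * (100.0 - target_percent) / 100.0


# 99.9 percent over a rolling 28-day window
print(f"{error_budget_minutes(99.9, 28):.2f} minutes")  # prints 40.32 minutes
# 99.9 percent over a rolling 1-day window
print(f"{error_budget_minutes(99.9, 1):.2f} minutes")   # prints 1.44 minutes
```

Under this reading, a 28-day window tolerates roughly 40 minutes of slow rendering, while a 1-day window tolerates less than a minute and a half.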

CloudWatch Application Signals automatically instruments your Lambda function using enhanced AWS Distro for OpenTelemetry (ADOT) libraries. This lets you start monitoring your applications quickly and with better performance, including lower cold start latency, memory consumption, and function invocation duration.

The walkthrough that follows uses an existing Lambda function called appsignals1 and enables Application Signals in the Lambda console to collect telemetry for the application.

On the Configuration tab of the function, choose Monitoring and operations tools to enable both Application Signals and the Lambda service traces.

This Lambda function is a resource that is attached to myAppSignalsApp, an application. For your application, you have established an SLO to track particular operations that are most important to you. Your objective is for the application to run within 10 ms 99.9 percent of the time within a rolling 1-day period.

After the function is called, it may take five to ten minutes for CloudWatch Application Signals to identify it. You will thus need to reload the Services page in order to view the service.

On the Services page, you can see a list of every Lambda function that Application Signals has discovered. Any telemetry the functions emit is displayed here.

From the Service Map, you can then visualize the entire application topology and, using the newly collected metrics of request volume, latency, faults, and errors, quickly spot anomalies across the individual operations and dependencies of your service. To find out whether a problem affecting end users is isolated to a single task or deployment, click any point in time on any application metric graph to see the traces and logs correlated with that metric.

Now available

You can start using Amazon CloudWatch Application Signals for Lambda today in all AWS Regions where both Lambda and Application Signals are available. Application Signals is currently available for Lambda functions that use the Python and Node.js managed runtimes. Support for additional Lambda runtimes will be added soon.

Read more on govindhtech.com

#AmazonCloudWatch#AWSLambda#AmazonEC2#AmazonEKS#Lambdafunctions#applicationprogramminginterfaces#API#news#CloudWatchApplicationSignals#technoligy#technews#govindhtech


Text

Aurora PostgreSQL Limitless Database: Unlimited Data Growth

Aurora PostgreSQL Limitless Database

Amazon Aurora PostgreSQL Limitless Database, a new serverless horizontal scaling (sharding) capability, is now generally available.

With Aurora PostgreSQL Limitless Database, you can distribute a database workload across multiple Aurora writer instances while still using it as a single database, which lets you scale beyond the existing Aurora limits for write throughput and storage.

The preview of Aurora PostgreSQL Limitless Database at AWS re:Invent 2023 described how it uses a two-layer architecture consisting of multiple database nodes in a DB shard group, either routers or shards, that scale with the workload.

Routers: Nodes that accept SQL connections from clients, send SQL commands to shards, maintain system-wide consistency, and return results to clients.

Shards: Nodes that store a subset of tables and full copies of data, and that accept queries from routers.

Your data is held in three types of tables: sharded, reference, and standard.

Sharded tables: These tables are distributed across multiple shards. Data is split among the shards based on the values of designated table columns, called shard keys. They are useful for scaling the largest, most I/O-intensive tables in your application.

Reference tables: These tables copy their data in full to every shard, so join queries run faster because unnecessary data movement is eliminated. They are commonly used for reference data that is modified infrequently, such as product catalogs and zip codes.

Standard tables: These are like regular Aurora PostgreSQL tables. Standard tables are placed together on a single shard, so join queries run faster because unnecessary data movement is eliminated. You can create sharded and reference tables from standard tables.

After you have created the DB shard group and your sharded and reference tables, you can load massive amounts of data into Aurora PostgreSQL Limitless Database and query it using standard PostgreSQL queries.

Getting started with the Aurora PostgreSQL Limitless Database

You can use the AWS Management Console or the AWS Command Line Interface (AWS CLI) to create a DB cluster that uses Aurora PostgreSQL Limitless Database, add a DB shard group to the cluster, and query your data.

Create an Aurora PostgreSQL Limitless Database cluster

Open the Amazon Relational Database Service (Amazon RDS) console and choose Create database. From the engine options, choose Aurora (PostgreSQL Compatible) and then Aurora PostgreSQL with Limitless Database (Compatible with PostgreSQL 16.4).

For Aurora PostgreSQL Limitless Database, enter a name for your DB shard group and the minimum and maximum capacity, measured in Aurora Capacity Units (ACUs), across all routers and shards. This maximum capacity determines the initial number of routers and shards in the DB shard group. Aurora PostgreSQL Limitless Database scales a node up to a higher capacity when its current capacity is too low to handle the load, and scales it down to a lower capacity when its current capacity is higher than needed.

There are three options for DB shard group deployment: no compute redundancy, one compute standby in a different Availability Zone, or two compute standbys in two different Availability Zones.

Set the remaining DB parameters as you see fit and choose Create database. After the DB shard group is created, it appears on the Databases page.

You can connect to, reboot, or delete a DB shard group, and you can also change its capacity, split a shard, or add a router.

Create Aurora PostgreSQL Limitless Database tables

As previously mentioned, Aurora PostgreSQL Limitless Database has three types of tables: sharded, reference, and standard. You can create new sharded and reference tables, or convert existing standard tables to sharded or reference tables for distribution or replication.

You create sharded and reference tables by setting variables that specify the table creation mode. The tables you create use this mode until you set a different one. The examples that follow show how to use these variables to create sharded and reference tables.

For instance, create a sharded table named items with a shard key composed of the item_id and item_cat columns.

SET rds_aurora.limitless_create_table_mode='sharded';
SET rds_aurora.limitless_create_table_shard_key='{"item_id", "item_cat"}';
CREATE TABLE items(item_id int, item_cat varchar, val int, item text);

Next, create a sharded table named item_description, with a shard key composed of the item_id and item_cat columns, and collocate it with the items table.

SET rds_aurora.limitless_create_table_collocate_with='items';
CREATE TABLE item_description(item_id int, item_cat varchar, color_id int, ...);

You can also create a reference table, here named colors.

SET rds_aurora.limitless_create_table_mode='reference';
CREATE TABLE colors(color_id int primary key, color varchar);

Using the rds_aurora.limitless_tables view, you can find information about Limitless Database tables, including their table types.

postgres_limitless=> SELECT * FROM rds_aurora.limitless_tables;
 table_gid | local_oid | schema_name | table_name | table_status | table_type | distribution_key
-----------+-----------+-------------+------------+--------------+------------+--------------------------
         1 |     18797 | public      | items      | active       | sharded    | HASH (item_id, item_cat)
         2 |     18641 | public      | colors     | active       | reference  |
(2 rows)

You can convert standard tables into sharded or reference tables. During the conversion, the data is moved from the standard table to the distributed table, and then the source standard table is deleted. For additional information, see the Converting Standard Tables to Limitless Tables section of the Amazon Aurora User Guide.
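The HASH (item_id, item_cat) distribution key in the limitless_tables output suggests why collocation matters: rows from two tables that share the same shard key values land on the same shard, so a join on those columns needs no cross-shard data movement. The sketch below illustrates the idea only. The SHA-256 based hash, the shard count of 4, and the function name are assumptions for illustration and are not the hashing scheme Aurora actually uses.

```python
import hashlib


def shard_for(shard_key_values: tuple, shard_count: int) -> int:
    """Pick a shard from the shard key values (illustrative hash, not the Aurora one)."""
    encoded = "|".join(str(value) for value in shard_key_values).encode("utf-8")
    digest = hashlib.sha256(encoded).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


key_columns = ("item_id", "item_cat")
item_row = {"item_id": 25, "item_cat": "book", "val": 3}
description_row = {"item_id": 25, "item_cat": "book", "color_id": 7}

item_shard = shard_for(tuple(item_row[c] for c in key_columns), 4)
description_shard = shard_for(tuple(description_row[c] for c in key_columns), 4)

# Rows with equal shard key values always map to the same shard.
print(item_shard == description_shard)  # prints True
```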

Run queries on tables in the Aurora PostgreSQL Limitless Database

Aurora PostgreSQL Limitless Database is compatible with PostgreSQL query syntax. You can query your Limitless Database using psql or any other connection utility that works with PostgreSQL. Before querying tables, you can load data into them by using the COPY command or the data loading utility.

To run queries, connect to the cluster endpoint, as described in Connecting to your Aurora Limitless Database DB cluster. All PostgreSQL SELECT queries are performed on the router to which the client sends the query and on the shards where the data is located.

Two querying techniques are used by Aurora PostgreSQL Limitless Database to accomplish a high degree of parallel processing:

Single-shard queries and distributed queries. The database identifies whether your query is single-shard or distributed and handles it appropriately.

Single-shard queries: All of the data required for the query is stored on a single shard in a single-shard query. One shard can handle the entire process, including any created result set. The router’s query planner forwards the complete SQL query to the appropriate shard when it comes across a query such as this.

Distributed query: A query that is executed over many shards and a router. One of the routers gets the request. The distributed transaction, which is transmitted to the participating shards, is created and managed by the router. With the router-provided context, the shards generate a local transaction and execute the query.

To configure the output from the EXPLAIN command for single-shard query examples, use the following arguments.postgres_limitless=> SET rds_aurora.limitless_explain_options = shard_plans, single_shard_optimization; SET postgres_limitless=> EXPLAIN SELECT * FROM items WHERE item_id = 25; QUERY PLAN -------------------------------------------------------------- Foreign Scan (cost=100.00..101.00 rows=100 width=0) Remote Plans from Shard postgres_s4: Index Scan using items_ts00287_id_idx on items_ts00287 items_fs00003 (cost=0.14..8.16 rows=1 width=15) Index Cond: (id = 25) Single Shard Optimized (5 rows)

To demonstrate distributed queries, you can add new items named Book and Pen to the items table.

postgres_limitless=> INSERT INTO items(item_name) VALUES ('Book'),('Pen');

This creates a distributed transaction across two shards. When the query runs, the router sets a snapshot time and passes the statement to the shards that own Book and Pen. The router then coordinates an atomic commit across both shards and returns the result to the client.

Aurora PostgreSQL Limitless Database includes a feature called distributed query tracing, which you can use to trace and correlate queries in PostgreSQL logs.

Important information

Here are a few things you should know about this feature:

Compute: Each DB cluster can have only one DB shard group, and you can set the maximum capacity of a DB shard group between 16 and 6144 ACUs. Contact AWS if you need more than 6144 ACUs. The maximum capacity you specify when creating a DB shard group determines the initial number of routers and shards. Updating the maximum capacity of a DB shard group does not change the number of routers and shards.
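The capacity range above is easy to check before a request is sent. The sketch below is only an illustration: the function name and the example identifiers are hypothetical, and the dictionary keys are modeled on the Amazon RDS CreateDBShardGroup parameters, so verify them against the current API reference before relying on them.

```python
MIN_ACU = 16    # lower bound stated above
MAX_ACU = 6144  # upper bound stated above


def shard_group_request(cluster_id: str, shard_group_id: str, max_acu: float) -> dict:
    """Build request parameters for a DB shard group after checking the ACU range."""
    if not MIN_ACU <= max_acu <= MAX_ACU:
        raise ValueError(
            f"max_acu must be between {MIN_ACU} and {MAX_ACU}, got {max_acu}"
        )
    return {
        "DBClusterIdentifier": cluster_id,         # hypothetical identifier
        "DBShardGroupIdentifier": shard_group_id,  # hypothetical identifier
        "MaxACU": float(max_acu),
    }


if __name__ == "__main__":
    print(shard_group_request("my-limitless-cluster", "my-shard-group", 768))
```

Because the initial number of routers and shards follows from the maximum capacity chosen at creation time, validating that value up front is cheaper than recreating the shard group later.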

Storage: Aurora PostgreSQL Limitless Database supports only the Amazon Aurora I/O-Optimized DB cluster storage configuration. Each shard has a maximum capacity of 128 TiB. Reference tables have a size limit of 32 TiB for the entire DB shard group.

Monitoring: You can monitor Aurora PostgreSQL Limitless Database with Amazon CloudWatch, Amazon CloudWatch Logs, or Performance Insights. New statistics functions, views, and wait events for Aurora PostgreSQL Limitless Database are also available for monitoring and diagnostics. To reclaim storage space, you can clean up your data with PostgreSQL’s vacuuming utility.

Available now

Aurora PostgreSQL Limitless Database is available with PostgreSQL 16.4 compatibility in the following AWS Regions: Asia Pacific (Hong Kong), Asia Pacific (Singapore), Asia Pacific (Sydney), Asia Pacific (Tokyo), US East (N. Virginia), US East (Ohio), and US West (Oregon). Try Aurora PostgreSQL Limitless Database in the Amazon RDS console.

Read more on Govindhtech.com

#AuroraPostgreSQL#Database#AWS#SQL#PostgreSQL#AmazonRelationalDatabaseService#AmazonAurora#AmazonCloudWatch#News#Technews#Technologynews#Technology#Technologytrendes#govindhtech

CellProfiler And Cell Painting Batch (CPB) In Drug Discovery

Cell Painting

Enhancing drug discovery with high-throughput Cell Painting on AWS. Are you having trouble processing cell images? Let’s look at how Cell Painting Batch on AWS has changed cell analysis for life sciences customers.

Introduction

Analyzing cell images captured by microscopes is a key part of drug discovery. A high-content screening method called “Cell Painting” has emerged to help researchers understand cellular activity and phenotypes. Leading biopharma companies have begun using tools such as the Broad Institute’s CellProfiler software, which is designed for cell profiling.

However, the variety of imaging methods and the exponential growth of data pose serious challenges. This post shows how life sciences customers have used AWS to build a distributed, scalable, and efficient cell analysis system.

Current Situation

Cell Painting workflows need scalable processing and storage to support large file sizes and high-throughput image analysis. Scientists currently use open-source tools such as CellProfiler, but they need scalable infrastructure to run automated pipelines without worrying about infrastructure maintenance.

Scientists are trying to do research while also provisioning infrastructure and handling massive amounts of microscopy image data. Researchers need to collaborate securely and productively across labs using user-friendly tools. Reproducibility is a cornerstone of research: scientists must be able to replicate other people’s findings when publishing in highly regarded journals, or even when re-examining data from their own labs.

CellProfiler software

Obstacles

Life sciences customers ran into the following difficulties when using stand-alone instances of tools such as CellProfiler:

Difficulty adapting to workload fluctuations.

Low productivity on complex, time-consuming tasks.

Difficulty collaborating across teams spread around the company.

Struggles to meet the demand for compute-intensive tasks.

Cluster capacity problems that often lead to unfinished work, delays, and inefficiencies.

Data access problems caused by the lack of a centralized data hub.

CellProfiler Pipeline

Solution Overview

To solve these issues, AWS solution architects worked with life sciences customers to create a solution called Cell Painting Batch (CPB). CPB uses the Broad Institute’s CellProfiler image to run CellProfiler pipelines on AWS in a scalable, distributed architecture. With CPB, researchers can analyze massive numbers of images without dealing with the details of infrastructure management. The CPB solution is built with the AWS Cloud Development Kit (CDK), which simplifies infrastructure deployment and management.

The whole process is automated: once an image is uploaded and an Amazon Simple Queue Service (SQS) message is sent, image processing starts, and the results are stored when it finishes. This gives researchers a scalable, automated, and efficient way to handle large-scale image processing requirements.

Image credit to AWS

The figure shows images from microscopes being uploaded to an Amazon S3 bucket. SQS messages sent by users trigger AWS Lambda. Lambda submits AWS Batch jobs that use container images from the Amazon Elastic Container Registry. AWS Batch processes the images and sends the results to Amazon S3 for analysis.

Workflow

The goal of the Cell Painting Batch (CPB) solution on AWS is to simplify the complex process of processing cell images so that researchers can concentrate on what really counts: extracting meaning from the data. Here is a step-by-step explanation of how the CPB solution works:

Researchers acquire images using microscopes or other means.

These images are then uploaded to a designated Amazon Simple Storage Service (S3) bucket, which serves as the image repository.

After storing the images, researchers send a message to Amazon Simple Queue Service (SQS) specifying the location of the images and the CellProfiler pipeline they want to use. In essence, this message is an image processing request delivered to SQS.

When an SQS message arrives, an AWS Lambda function is invoked automatically. Its main responsibility is to start the AWS Batch job for that image processing request.

AWS Batch evaluates the requirements of the job and dynamically provisions the required Amazon Elastic Compute Cloud (EC2) instances.

AWS Batch retrieves the designated container image stored in the Amazon Elastic Container Registry (ECR). This container runs the specified CellProfiler pipeline inside AWS Batch. The integration of Amazon FSx for Lustre with the S3 bucket gives containers fast access to the data.

The CellProfiler program inside the container processes the images using the specified pipeline. This may include image processing operations such as feature extraction and segmentation.

After CellProfiler finishes processing, the results are written back to the designated S3 bucket at the location specified in the SQS message.

Researchers retrieve the results from the S3 bucket and analyze them for their studies.

Image Credit To AWS

Because the workflow is automated, the solution begins analyzing images and storing the results as soon as an image is uploaded and an SQS message is sent.
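The hand-off from the SQS message to the AWS Batch job can be sketched as follows. This is a minimal illustration rather than the code that ships with CPB: the message fields (input_prefix, output_prefix, pipeline), the job queue and job definition names, the environment variable names, and the example bucket paths are all hypothetical. The event shape (a Records list whose entries carry messageId and body) is the standard format Lambda receives from SQS, and the returned dictionaries use the parameter names of the AWS Batch SubmitJob API.

```python
import json

# Hypothetical names; real values would come from the deployed stack.
JOB_QUEUE = "cpb-job-queue"
JOB_DEFINITION = "cpb-cellprofiler"


def build_request_message(input_prefix: str, output_prefix: str, pipeline: str) -> str:
    """Serialize an image processing request as an SQS message body."""
    return json.dumps(
        {"input_prefix": input_prefix, "output_prefix": output_prefix, "pipeline": pipeline}
    )


def jobs_from_sqs_event(event: dict) -> list[dict]:
    """Translate each SQS record in a Lambda event into Batch job parameters."""
    jobs = []
    for record in event.get("Records", []):
        request = json.loads(record["body"])
        jobs.append(
            {
                "jobName": f"cpb-{record['messageId']}",
                "jobQueue": JOB_QUEUE,
                "jobDefinition": JOB_DEFINITION,
                "containerOverrides": {
                    "environment": [
                        {"name": "INPUT_PREFIX", "value": request["input_prefix"]},
                        {"name": "OUTPUT_PREFIX", "value": request["output_prefix"]},
                        {"name": "PIPELINE", "value": request["pipeline"]},
                    ]
                },
            }
        )
    return jobs


def handler(event, context):
    """Lambda entry point: returns the job parameters it would submit."""
    jobs = jobs_from_sqs_event(event)
    # In a deployed function, each dict would be passed to AWS Batch,
    # for example with boto3: boto3.client("batch").submit_job(**job)
    return {"submitted": len(jobs), "jobs": jobs}


if __name__ == "__main__":
    body = build_request_message(
        "s3://example-bucket/plate1/", "s3://example-bucket/results/plate1/", "analysis.cppipe"
    )
    print(handler({"Records": [{"messageId": "abc-123", "body": body}]}, None))
```

Keeping the translation in a pure function makes the request format testable without AWS credentials. Only the commented submit_job call needs a live environment.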

Security

Cell Painting Batch (CPB) on AWS provides a strong security architecture for Cell Painting datasets and workflows. The solution protects data with encryption at rest and in transit, controlled access through AWS Identity and Access Management (IAM), and stronger network security through an isolated VPC. Ongoing monitoring with tools such as Amazon CloudWatch further strengthens the security posture.

To strengthen security further, it is advisable to add more mitigations: version control for system configurations, strong authentication with multi-factor authentication (MFA), protection against excessive resource usage with Amazon CloudWatch and AWS Service Quotas, cost monitoring with AWS Budgets, and container scanning with Amazon Inspector.

Life Sciences Customer Success Stories

Life sciences customers have seen significant improvements after moving to CPB. The move streamlined processing pipelines, sped up image processing, and fostered collaboration. The system’s built-in scalability can handle larger datasets to speed up drug development, which helps these organizations prepare for future growth.

Customizing the Solution

Because CPB is modular, it can be integrated with other AWS services. Options include AWS Step Functions for workflow orchestration, Amazon AppStream for browser-based access to scientific tools, AWS Service Catalog for self-service deployments, and Amazon SageMaker for machine learning workloads. The GitHub code includes a parameters file for the instance class, timeout duration, and other settings.

In summary

The Cell Painting Batch approach can boost researcher productivity by removing the burden of infrastructure management. It enables scalable, fast image analysis, which speeds up the development of therapies. It also lets researchers manage processing and distribution themselves, reducing the need for infrastructure administration.

The AWS CPB solution has changed how biopharmaceutical companies process cell images. By combining scalability, automation, and efficiency, it lets life sciences organizations handle large cell imaging workloads and accelerate drug development.

Read more on Govindhtech.com

#CellProfiler#AmazonS3#DrugDiscovery#AWS#AmazonSimpleQueueService#AmazonCloudWatch#AmazonSageMaker#news#technews#technology#technologynews#technologytrends#govindhtech
