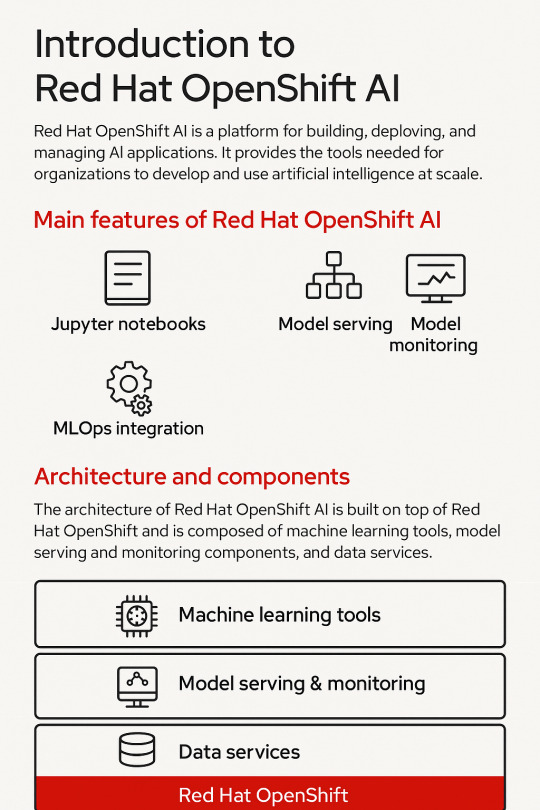

Introduction to Red Hat OpenShift AI: Features, Architecture & Components

In today’s data-driven world, organizations need a scalable, secure, and flexible platform to build, deploy, and manage artificial intelligence (AI) and machine learning (ML) models. Red Hat OpenShift AI is built precisely for that. It provides a consistent, Kubernetes-native platform for MLOps, integrating open-source tools, enterprise-grade support, and cloud-native flexibility.

Let’s break down the key features, architecture, and components that make OpenShift AI a powerful platform for AI innovation.

🔍 What is Red Hat OpenShift AI?

Red Hat OpenShift AI (formerly known as OpenShift Data Science) is a fully supported, enterprise-ready platform that brings together tools for data scientists, ML engineers, and DevOps teams. It enables rapid model development, training, and deployment on the Red Hat OpenShift Container Platform.

🚀 Key Features of OpenShift AI

1. Built for MLOps

OpenShift AI supports the entire ML lifecycle—from experimentation to deployment—within a consistent, containerized environment.

2. Integrated Jupyter Notebooks

Data scientists can use Jupyter notebooks pre-integrated into the platform, allowing quick experimentation with data and models.

3. Model Training and Serving

Use Kubernetes to scale model training jobs and deploy inference services using tools like KServe and Seldon Core.

4. Security and Governance

OpenShift AI integrates enterprise-grade security, role-based access controls (RBAC), and policy enforcement using OpenShift’s built-in features.

5. Support for Open Source Tools

Seamless integration with open-source frameworks like TensorFlow, PyTorch, Scikit-learn, and ONNX for maximum flexibility.

6. Hybrid and Multicloud Ready

You can run OpenShift AI on any OpenShift cluster, whether on-premises or on cloud providers like AWS, Azure, and GCP.

🧠 OpenShift AI Architecture Overview

Red Hat OpenShift AI builds on OpenShift’s Kubernetes platform, adding components that support AI/ML workflows. The architecture broadly consists of:

1. User Interface Layer

JupyterHub: Multi-user Jupyter notebook support.

Dashboard: UI for managing projects, models, and pipelines.

2. Model Development Layer

Notebooks: Containerized environments with GPU/CPU options.

Data Connectors: Access to S3, Ceph, or other object storage for datasets.

3. Training and Pipeline Layer

Open Data Hub and Kubeflow Pipelines: Automate ML workflows.

Ray, MPI, and Horovod: For distributed training jobs.

4. Inference Layer

KServe/Seldon: Model serving at scale with REST and gRPC endpoints.

Model Monitoring: Metrics and performance tracking for live models.

5. Storage and Resource Management

Ceph / OpenShift Data Foundation: Persistent storage for model artifacts and datasets.

GPU Scheduling and Node Management: Leverages OpenShift for optimized hardware utilization.
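The inference layer exposes models over REST. As a minimal sketch, assuming a model served through KServe with the V2 (Open Inference Protocol) REST API, a client request can be prepared like this. The model name `fraud-model` and the tensor name `input-0` are hypothetical; real input names come from your model's metadata, and the hostname depends on the route your cluster assigns.

```python
import json
from typing import List, Tuple


def build_v2_infer_request(model_name: str, rows: List[List[float]]) -> Tuple[str, str]:
    """Return the URL path and JSON body for a V2 inference call.

    `rows` is a batch of feature vectors; every row must have the same length.
    """
    if not rows:
        raise ValueError("rows must not be empty")
    n_cols = len(rows[0])
    if any(len(row) != n_cols for row in rows):
        raise ValueError("all rows must have the same length")
    body = {
        "inputs": [
            {
                "name": "input-0",             # hypothetical tensor name
                "shape": [len(rows), n_cols],  # batch size x number of features
                "datatype": "FP32",
                # Tensor values flattened in row-major order.
                "data": [value for row in rows for value in row],
            }
        ]
    }
    return f"/v2/models/{model_name}/infer", json.dumps(body)


if __name__ == "__main__":
    path, body = build_v2_infer_request("fraud-model", [[0.1, 0.2], [0.3, 0.4]])
    # POST `body` to https://<inference-route-host><path>
    # with the header Content-Type: application/json.
    print(path)
    print(body)
```

Keeping request construction separate from the HTTP call makes the payload easy to test; any HTTP client can then send it.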

🧩 Core Components of OpenShift AI

| Component | Description |
|---|---|
| JupyterHub | Web-based development interface for notebooks |
| KServe/Seldon | Inference serving engines with auto-scaling |
| Open Data Hub | ML platform tools including Kafka, Spark, and more |
| Kubeflow Pipelines | Workflow orchestration for training pipelines |
| ModelMesh | Scalable, multi-model serving |
| Prometheus + Grafana | Monitoring and dashboarding for models and infrastructure |
| OpenShift Pipelines | CI/CD for ML workflows using Tekton |

🌎 Use Cases

Financial Services: Fraud detection using real-time ML models

Healthcare: Predictive diagnostics and patient risk models

Retail: Personalized recommendations powered by AI

Manufacturing: Predictive maintenance and quality control

🏁 Final Thoughts

Red Hat OpenShift AI brings together the best of Kubernetes, open-source innovation, and enterprise-level security to enable real-world AI at scale. Whether you’re building a simple classifier or deploying a complex deep learning pipeline, OpenShift AI provides a unified, scalable, and production-grade platform.

For more information, follow HawkStack Technologies.

Elevate Your Career with DevOps Training and Digital Marketing Expertise at Artifact Geeks Jaipur

In today’s digital-first world, gaining technical and marketing skills is no longer optional—it’s essential. Whether you’re a student, a working professional, or someone switching careers, two fields stand out in terms of job demand, salary potential, and future growth: DevOps and Digital Marketing. If you’re based in Rajasthan, there’s no better place to upskill than Artifact Geeks, home to the most advanced DevOps training in Jaipur and widely recognized as the best digital marketing institute in Jaipur.

This blog explores how Artifact Geeks empowers learners with hands-on training, expert mentorship, and job-ready skills that open doors to high-growth careers in IT and marketing.

Why Choose Artifact Geeks?

At Artifact Geeks, we believe that education should be practical, personalized, and powerful. Our mission is to bridge the gap between theoretical knowledge and real-world application. That’s why our programs are designed by industry experts and delivered in formats that cater to both beginners and professionals.

What Sets Us Apart?

Industry-aligned Curriculum: We teach the tools and strategies companies actually use.

Experienced Mentors: Our trainers are working professionals with real-world experience in DevOps and Digital Marketing.

Hands-on Projects: Learn by doing; every student works on live industry projects.

Placement Assistance: Get job-ready with resume reviews, mock interviews, and interview connections.

Flexible Learning Modes: Online and offline classes are available to suit your schedule.

Let’s dive deeper into our two flagship programs: DevOps training in Jaipur and Digital Marketing course in Jaipur.

DevOps Training in Jaipur – Become a Cloud & Automation Expert

DevOps is no longer a buzzword; it’s a must-have approach for modern IT organizations. A combination of development and operations, DevOps streamlines software delivery through automation, collaboration, and monitoring. As more companies adopt cloud infrastructure and CI/CD pipelines, the demand for skilled DevOps engineers is rising fast.

Why Learn DevOps?

High-paying job roles: DevOps Engineer, Site Reliability Engineer, Automation Engineer

In-demand skills: Jenkins, Docker, Kubernetes, Git, Terraform, Ansible, AWS/Azure

Fast-track to leadership roles in cloud and infrastructure teams

Ideal for developers, sysadmins, and network engineers seeking career growth

Artifact Geeks’ DevOps Course Highlights:

Comprehensive Modules: Linux, Git & GitHub, CI/CD pipelines, Docker, Kubernetes, Terraform, Jenkins, Ansible, AWS Cloud, Monitoring Tools (Prometheus, Grafana)

Hands-On Labs: Work on live cloud-based projects, simulate real environments

Job-Oriented Curriculum: Resume building, GitHub portfolio, and real-world scenarios

Flexible Learning: Weekend and weekday batches with online/offline options

Career Support: Interview preparation, internship opportunities, and placement assistance

Whether you’re a fresher looking to build a strong IT foundation or a tech professional aiming to upgrade your skills, our DevOps training in Jaipur equips you with everything you need to land high-paying roles in the global tech market.

Best Digital Marketing Institute in Jaipur – Master the Art of Online Success

Digital Marketing is one of the fastest-growing fields globally, offering career opportunities in content creation, SEO, paid advertising, social media, email marketing, analytics, and more. As businesses go online, the demand for digital-savvy marketers has never been higher.

At Artifact Geeks, we’re proud to be regarded as the best digital marketing institute in Jaipur—a title we’ve earned by consistently delivering results-driven training to thousands of successful students.

Why Learn Digital Marketing?

Endless career paths: SEO Executive, Social Media Manager, Content Marketer, PPC Specialist, Email Marketer, Freelancer, Entrepreneur

High demand across every industry: real estate, education, fashion, tech, healthcare, etc.

Ideal for students, freshers, business owners, and freelancers

Opportunity to work remotely, start your own agency, or freelance globally

Our Digital Marketing Course Highlights:

All-in-One Curriculum: SEO, SEM, SMM, Content Marketing, Google Ads, Facebook Ads, Email Marketing, WordPress, Google Analytics, Affiliate Marketing, E-commerce Strategies

Live Campaigns: Learn by running actual ad campaigns on Google and Facebook

Certifications: Google, HubSpot, Facebook Blueprint, and Artifact Geeks certificate

Real Client Projects: Apply your learning on real brands and businesses

Portfolio Development: Build a strong LinkedIn and GitHub presence

Mentorship & Career Support: One-on-one guidance and interview training

Our course doesn’t just teach theory—we train you to become a confident, competent digital marketer ready for the real world. No wonder we’re considered the best digital marketing institute in Jaipur by our students and alumni.

What Makes Artifact Geeks Unique?

There are many training centers in Jaipur, but here’s what makes Artifact Geeks stand out from the crowd:

Small Batch Sizes: Personalized attention for every student

Project-Based Learning: Real-world experience to build confidence

Placement Network: Collaborations with local and national tech firms

Internship Programs: Earn while you learn with hands-on internships

Lifetime Access: Stay updated with lifetime access to course content and community

We believe that education is not a one-time event—it’s a journey. That’s why we continue to support our students long after their course is complete.

What Our Students Say

“I enrolled in DevOps training at Artifact Geeks and got placed in an MNC within 3 months. The hands-on labs and guidance helped me crack the interview with confidence!” — Rohan S., DevOps Engineer

“Artifact Geeks truly is the best digital marketing institute in Jaipur. The trainers are amazing and helped me build my first client portfolio even before completing the course.” — Simran K., Digital Marketing Freelancer

“From beginner to certified professional, Artifact Geeks helped me transition into a digital marketing career after leaving my 9-to-5 job.” — Ayush T., Social Media Manager

Who Can Join Our Courses?

Students and Freshers: Gain job-ready skills with no prior experience

Working Professionals: Upskill for promotions or career switches

Freelancers & Creators: Learn to market yourself and attract clients

Business Owners: Master digital tools to grow your brand

Tech Enthusiasts: Understand DevOps and automation tools for the future

No matter where you’re starting, Artifact Geeks is here to guide you forward.

Final Thoughts

The digital age demands digital skills. Whether you're eyeing a career in cloud infrastructure or looking to dominate online marketing, Artifact Geeks offers the best learning environment to transform your ambition into reality.

With top-rated DevOps training in Jaipur and a reputation as the best digital marketing institute in Jaipur, Artifact Geeks is your launchpad to a successful and future-proof career.

Ready to Take the First Step?

📍 Visit Us: PAWAN PUTRA, 138, G, Karni Palace Rd, Panchyawala, Jaipur, Rajasthan 302034

🌐 Website: https://artifactgeeks.com/

📞 Call Us: 9024000740

📧 Email: [email protected]

CI/CD Pipelines in the Cloud: How to Achieve Faster, Safer Deployments

In today’s digital-first world, speed and reliability in software delivery are critical. With cloud infrastructure becoming the new normal, CI/CD pipelines (Continuous Integration and Continuous Deployment) are key to enabling faster, safer deployments. They help development teams automate code integration, testing, and deployment—removing bottlenecks and minimizing risks.

In this blog, we’ll explore how CI/CD works in cloud environments, why it’s essential for modern development, and how it supports seamless delivery of scalable, secure applications.

🛠️ What Is CI/CD?

Continuous Integration (CI): Developers frequently merge code into a shared repository. Each change triggers automated tests to catch bugs early.

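Continuous Deployment (CD): After passing tests, code is automatically deployed to staging or production environments without manual intervention. (In the closely related practice of Continuous Delivery, every passing build is kept ready to release, but the final push to production waits for a manual approval.)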

Together, CI/CD creates a feedback loop that ensures rapid, reliable software delivery.

🌐 Why CI/CD Pipelines Matter in the Cloud

CI/CD is not a luxury—it’s a necessity in the cloud. Here’s why:

Cloud environments are dynamic: With auto-scaling, microservices, and distributed systems, manual deployments are error-prone and slow.

Rapid release cycles: Customers expect continuous improvements. CI/CD helps teams ship features weekly or even daily.

Consistency and traceability: Every change is logged, tested, and version-controlled—reducing deployment risks.

The cloud provides the perfect infrastructure for CI/CD pipelines, offering scalability, flexibility, and automation capabilities.

🔁 How a Cloud-Based CI/CD Pipeline Works

A typical CI/CD pipeline in the cloud includes:

Code Commit: Developers push code to a Git repository (e.g., GitHub, GitLab, Bitbucket).

Build & Test: Cloud-native CI tools (like GitHub Actions, AWS CodeBuild, or CircleCI) compile the code and run unit/integration tests.

Artifact Creation: Build artifacts (e.g., Docker images) are stored in cloud repositories (e.g., Amazon ECR, Azure Container Registry).

Deployment: Tools like AWS CodeDeploy, Azure DevOps, or ArgoCD deploy the artifacts to target environments.

Monitoring: Real-time monitoring and alerts ensure the deployment is successful and stable.
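The steps above can be modeled as a fail-fast sequence of stages. The sketch below is a plain-Python illustration, not the configuration format of GitHub Actions, CodeBuild, or any other tool named here; every stage body is a hypothetical stub and `registry.example.com` is a placeholder. The commit that triggers a run is represented by the `commit_sha` argument, and the artifact is tagged with the short SHA so each deployment traces back to one commit.

```python
from typing import Callable, List, Optional, Tuple

# A stage is a (name, function) pair; the function receives a shared context
# dict and returns True on success. All stage bodies below are hypothetical stubs.
Stage = Tuple[str, Callable[[dict], bool]]


def run_pipeline(commit_sha: str, stages: List[Stage]) -> Tuple[List[str], Optional[str]]:
    """Run stages in order and stop at the first failure (fail-fast).

    Returns the names of the stages that succeeded and the name of the
    failing stage, or None if every stage passed.
    """
    context = {"commit_sha": commit_sha}
    completed: List[str] = []
    for name, step in stages:
        if not step(context):
            return completed, name
        completed.append(name)
    return completed, None


def build_and_test(ctx: dict) -> bool:
    # Stand-in for compiling the code and running unit/integration tests.
    ctx["tests_passed"] = True
    return ctx["tests_passed"]


def create_artifact(ctx: dict) -> bool:
    # Tag the image with the short commit SHA; the registry host is a placeholder.
    ctx["image"] = f"registry.example.com/app:{ctx['commit_sha'][:7]}"
    return True


def deploy(ctx: dict) -> bool:
    # Only an artifact produced earlier in this same run may be deployed.
    return "image" in ctx


def monitor(ctx: dict) -> bool:
    # Stand-in for a post-deployment health check.
    return True


STAGES: List[Stage] = [
    ("build_and_test", build_and_test),
    ("create_artifact", create_artifact),
    ("deploy", deploy),
    ("monitor", monitor),
]
```

Calling `run_pipeline("3f9c2a1d8e", STAGES)` runs all four stages; if the test stage returns False, the run stops there and nothing is built or deployed, which is the safety property the pipeline is meant to provide.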

✅ Benefits of Cloud CI/CD

1. Faster Time-to-Market

Automated testing and deployment reduce manual overhead, accelerating release cycles.

2. Improved Code Quality

Each commit is tested automatically, so bugs are caught early and only code that passes the test suite reaches production.

3. Consistent Deployments

Standardized pipelines eliminate the “it worked on my machine” problem, ensuring repeatable results.

4. Efficient Collaboration

CI/CD fosters DevOps culture, encouraging collaboration between developers, testers, and operations.

5. Scalability on Demand

Cloud CI/CD services can scale build and test capacity on demand as the application grows in size and complexity.

🔐 Security and Compliance in CI/CD

Modern pipelines are integrating security and compliance checks directly into the deployment process (DevSecOps). These include:

Static and dynamic code analysis

Container vulnerability scans

Secrets detection

Infrastructure compliance checks

By catching vulnerabilities early, businesses reduce risks and ensure regulatory alignment.
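Secrets detection is the simplest of these checks to illustrate. Below is a minimal sketch of a regex-based scanner, assuming a pipeline step that fails the build whenever it reports a finding. The two patterns are illustrative only (AWS access key IDs start with `AKIA` followed by 16 uppercase letters or digits); production scanners ship much larger, tuned rule sets.

```python
import re
from typing import List, Tuple

# Illustrative rules only; real secret scanners use many more patterns.
SECRET_PATTERNS = {
    # AWS access key ID: "AKIA" followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # Header line of a PEM-encoded private key.
    "private_key": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
}


def find_secrets(text: str) -> List[Tuple[int, str]]:
    """Return (line number, rule name) for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings
```

In a pipeline step, exit with a non-zero status when `find_secrets` returns a non-empty list so the run stops before anything is deployed. The key used in the check below, `AKIAIOSFODNN7EXAMPLE`, is the placeholder AWS uses in its own documentation.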

📊 CI/CD with IaC and Automated Testing

CI/CD becomes even more powerful when combined with:

Infrastructure as Code (IaC) for consistent cloud infrastructure provisioning

Automated Testing to validate performance, functionality, and security

Together, they create a fully automated, end-to-end deployment ecosystem that supports cloud-native scalability and resilience.

🏢 How Salzen Cloud Builds Smart CI/CD Solutions

At Salzen Cloud, we design and implement cloud-native CI/CD pipelines tailored to your business needs. Whether you're deploying microservices on Kubernetes, serverless applications, or hybrid environments, we help you:

Automate build, test, and deployment workflows

Integrate security and compliance into your pipelines

Optimize for speed, reliability, and rollback safety

Monitor performance and deployment health in real time

Our solutions empower teams to ship faster, fix faster, and innovate with confidence.

🧩 Conclusion

CI/CD is the backbone of modern cloud development. It streamlines the software delivery process, reduces manual errors, and provides the agility needed to stay competitive.

To achieve faster, safer deployments in the cloud:

Adopt CI/CD pipelines for all projects

Integrate IaC and automated testing

Shift security left with DevSecOps practices

Continuously monitor and optimize your pipelines

With the right CI/CD strategy, your team can move fast without breaking things—delivering value to users at cloud speed.

Want help designing a CI/CD pipeline for your cloud environment? Let Salzen Cloud show you how.

Enterprise Kubernetes Storage with Red Hat OpenShift Data Foundation (DO370)

As organizations continue their journey into cloud-native and containerized applications, the need for robust, scalable, and persistent storage solutions has never been more critical. Red Hat OpenShift, a leading Kubernetes platform, addresses this need with Red Hat OpenShift Data Foundation (ODF)—an integrated, software-defined storage solution designed specifically for OpenShift environments.

In this blog post, we’ll explore how the DO370 course equips IT professionals to manage enterprise-grade Kubernetes storage using OpenShift Data Foundation.

What is OpenShift Data Foundation?

Red Hat OpenShift Data Foundation (formerly OpenShift Container Storage) is a unified and scalable storage solution built on Ceph, NooBaa, and Rook. It provides:

Block, file, and object storage

Persistent volumes for containers

Data protection, encryption, and replication

Multi-cloud and hybrid cloud support

ODF is deeply integrated with OpenShift, allowing for seamless deployment, management, and scaling of storage resources within Kubernetes workloads.

Why DO370?

The DO370: Enterprise Kubernetes Storage with Red Hat OpenShift Data Foundation course is designed for OpenShift administrators and storage specialists who want to gain hands-on expertise in deploying and managing ODF in enterprise environments.

Key Learning Outcomes:

Understand ODF Architecture: Learn how ODF components work together to provide high availability and performance.

Deploy ODF on OpenShift Clusters: Hands-on labs walk through setting up ODF in a variety of topologies, from internal mode (hyperconverged) to external Ceph clusters.

Provision Persistent Volumes: Use Kubernetes StorageClasses and dynamic provisioning to provide storage for stateful applications.

Monitor and Troubleshoot Storage Issues: Utilize tools like Prometheus, Grafana, and the OpenShift Console to monitor health and performance.

Data Resiliency and Disaster Recovery: Configure mirroring, replication, and backup for critical workloads.

Manage Multi-cloud Object Storage: Integrate NooBaa for managing object storage across AWS S3, Azure Blob, and more.
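Provisioning a persistent volume comes down to a PersistentVolumeClaim that names an ODF StorageClass. A minimal sketch, assuming the default StorageClass names that ODF usually creates in internal mode (`ocs-storagecluster-ceph-rbd` for block, `ocs-storagecluster-cephfs` for file); check `oc get storageclass` on your cluster, since names can differ. The claim name `postgres-data` and namespace `demo` are hypothetical.

```python
import json
from typing import Dict, Tuple

# Assumed default StorageClass names for ODF in internal mode; verify with
# `oc get storageclass`, because names can differ between installations.
ODF_STORAGE_CLASSES: Dict[str, Tuple[str, str]] = {
    "block": ("ocs-storagecluster-ceph-rbd", "ReadWriteOnce"),  # Ceph RBD volumes
    "file": ("ocs-storagecluster-cephfs", "ReadWriteMany"),     # shared CephFS volumes
}


def make_pvc(name: str, namespace: str, size_gi: int, kind: str = "block") -> dict:
    """Build a PersistentVolumeClaim manifest for the chosen ODF StorageClass."""
    storage_class, access_mode = ODF_STORAGE_CLASSES[kind]
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": [access_mode],
            "resources": {"requests": {"storage": f"{size_gi}Gi"}},
            "storageClassName": storage_class,
        },
    }


if __name__ == "__main__":
    # JSON is valid input for `oc apply -f -`.
    print(json.dumps(make_pvc("postgres-data", "demo", 20), indent=2))
```

Block (RBD) volumes suit a single database pod, while file (CephFS) volumes can be mounted by several pods at once, which is why the sketch pairs each class with a different access mode.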

Enterprise Use Cases for ODF

Stateful Applications: Databases like PostgreSQL, MongoDB, and Cassandra running in OpenShift require reliable persistent storage.

AI/ML Workloads: High throughput and scalable storage for datasets and model checkpoints.

CI/CD Pipelines: Persistent storage for build artifacts, logs, and container images.

Data Protection: Built-in snapshot and backup capabilities for compliance and recovery.

Real-World Benefits

Simplicity: Unified management within OpenShift Console.

Flexibility: Run on-premises, in the cloud, or in hybrid configurations.

Security: Native encryption and role-based access control (RBAC).

Resiliency: Automatic healing and replication for data durability.

Who Should Take DO370?

OpenShift Administrators

Storage Engineers

DevOps Engineers managing persistent workloads

RHCSA/RHCE certified professionals looking to specialize in OpenShift storage

Prerequisite Skills: Familiarity with OpenShift (DO180/DO280) and basic Kubernetes concepts is highly recommended.

Final Thoughts

As containers become the standard for deploying applications, storage is no longer an afterthought—it's a cornerstone of enterprise Kubernetes strategy. Red Hat OpenShift Data Foundation ensures your applications are backed by scalable, secure, and resilient storage.

Whether you're modernizing legacy workloads or building cloud-native applications, DO370 is your gateway to mastering Kubernetes-native storage with Red Hat.

Interested in Learning More?

📘 Join HawkStack Technologies for instructor-led or self-paced training on DO370 and other Red Hat courses.

Visit our website for more details - www.hawkstack.com

Mastering TOSCA Automation: A Beginner's Step-by-Step Guide

Introduction

In today’s fast-paced software industry, test automation has become a necessity to ensure high-quality applications with faster time-to-market. One of the most advanced tools in the automation world is TOSCA, a robust model-based testing tool that enables testers to automate applications without extensive coding knowledge.

If you are new to TOSCA and looking to build expertise, this step-by-step guide will help you understand the fundamentals, master the tool, and gain practical hands-on experience with real-world applications.

This comprehensive tutorial will cover:

An overview of the TOSCA Automation Tool

Key features and benefits of using TOSCA for test automation

A step-by-step guide to learning TOSCA from scratch

Practical examples and real-world scenarios

How to enroll in a TOSCA Automation Tool Course to advance your skills

If you're serious about learning TOSCA Tool for Testing, this guide will provide the structured roadmap you need to master the tool effectively.

What is TOSCA? A Quick Overview

Understanding the TOSCA Automation Tool

TOSCA (Top Software Control Architecture) is an enterprise-level test automation tool developed by Tricentis. It supports a model-based testing approach, meaning testers can create automation scripts without writing complex code.

Why is TOSCA the Preferred Testing Tool?

Many organizations prefer TOSCA because:

✅ It supports end-to-end test automation (functional, API, and performance testing).

✅ It allows scriptless automation (ideal for non-programmers).

✅ It provides risk-based testing to prioritize critical test cases.

✅ It integrates with popular DevOps tools like Jenkins, Jira, and Azure DevOps.

This makes TOSCA one of the most efficient and scalable automation testing tools in the industry today.

Key Features of the TOSCA Automation Tool

1️⃣ Model-Based Test Automation (MBTA)

TOSCA does not rely on traditional record-and-playback methods. Instead, it creates models of test cases, making them reusable and easy to maintain.

2️⃣ Scriptless Test Automation

Unlike Selenium, where coding is required, TOSCA enables drag-and-drop test case creation.

3️⃣ Risk-Based Testing (RBT)

This feature prioritizes high-risk test cases to optimize testing efforts and reduce execution time.

4️⃣ Integration with DevOps and CI/CD

TOSCA seamlessly integrates with Jenkins, Bamboo, Azure DevOps, and other CI/CD tools.

5️⃣ Support for Multiple Platforms

It supports testing across web, mobile, API, SAP, and cloud applications.

6️⃣ Data-Driven Testing

Testers can perform parameterized testing using TOSCA’s test data management capabilities.
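Risk-based testing comes down to ranking test cases by risk and running the riskiest first. The sketch below uses a generic risk score of usage frequency times damage and a greedy time budget; it illustrates the idea and is not Tosca's own risk calculation. The `CaseRisk` class, the 1-to-5 scales, and the test case names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CaseRisk:
    name: str
    frequency: int  # how often the feature is used: 1 (rare) to 5 (constant)
    damage: int     # business impact of a failure: 1 (minor) to 5 (critical)
    minutes: int    # estimated execution time

    @property
    def risk(self) -> int:
        return self.frequency * self.damage


def select_by_risk(cases: List[CaseRisk], budget_minutes: int) -> List[str]:
    """Greedily pick the highest-risk test cases that still fit the time budget."""
    chosen: List[str] = []
    used = 0
    for case in sorted(cases, key=lambda c: c.risk, reverse=True):
        if used + case.minutes <= budget_minutes:
            chosen.append(case.name)
            used += case.minutes
    return chosen
```

For example, with `checkout` (5, 5, 30 min), `login` (5, 4, 10 min), `refund` (2, 5, 20 min), and `profile_photo` (2, 1, 5 min) and a 45-minute budget, the selection is `["checkout", "login", "profile_photo"]`: `refund` is skipped because it would push the run past the budget. A greedy pass is simple and predictable, though it does not guarantee the maximum total risk that an exact knapsack solution would.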

Why Choose TOSCA Over Other Test Automation Tools?

| Feature | TOSCA | Selenium | UFT (Unified Functional Testing) |
|---|---|---|---|
| Coding Requirement | No | Yes | Yes |
| Test Design Approach | Model-Based | Script-Based | Script-Based |
| Integration with DevOps | Yes | Limited | Yes |
| Cross-Browser & Mobile Support | Yes | Yes | Yes |
| Risk-Based Testing | Yes | No | No |

From the above comparison, it’s clear that TOSCA simplifies automation testing with low maintenance, high reusability, and scriptless automation.

Step-by-Step Guide to Master TOSCA Automation

Now, let’s go through a structured learning path to master TOSCA Tool for Testing from scratch.

Step 1: Install TOSCA and Set Up Your Environment

To begin, install the TOSCA Automation Tool by following these steps:

Download TOSCA from the Tricentis support portal (requires registration).

Install TOSCA Commander (the core component for managing test cases).

Set up a workspace where all your test artifacts will be stored.

Configure required plugins for web, API, and mobile testing.

Once installed, launch TOSCA Commander to explore the user interface.

Step 2: Learn the TOSCA User Interface (UI)

When you open TOSCA Commander, you’ll see:

✅ Modules Section – Stores reusable automation components.

✅ Test Cases Section – Where you create, execute, and manage test cases.

✅ Execution List – Stores all test execution results.

✅ Test Data – Stores parameterized data for data-driven testing.

Take some time to explore the UI and familiarize yourself with these sections.

Step 3: Create Your First TOSCA Test Case

Let’s create a simple automated test case for a login page.

Example: Automating a Login Test Case in TOSCA

1️⃣ Create a New Test Case

Open TOSCA Commander → Right-click on the “Test Cases” section → Select “Create New Test Case.”

2️⃣ Scan the Application Under Test (AUT)

Use TOSCA’s XScan feature to identify UI elements (e.g., Username, Password, and Login button).

3️⃣ Add Test Steps

Drag the scanned elements into your test case.

Input test data (e.g., username and password).

4️⃣ Execute the Test

Click “Run” to execute the test.

View results in the Execution List.

This simple process demonstrates how easy it is to create automation scripts without coding in TOSCA.

Step 4: Data-Driven Testing in TOSCA

For more advanced test scenarios, you can use data-driven testing in TOSCA.

✅ Create a TestSheet – Define test data in TOSCA Test Data Management (TDM).

✅ Link Data to Test Steps – Parameterize the test case using dynamic values.

✅ Execute with Multiple Data Sets – Run the same test case with different inputs.

This technique helps in automating large test suites efficiently.
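TOSCA does this without code, but the underlying idea (one test definition driven by many rows of data) can be shown in a few lines of Python. This is an analogy, not TOSCA's API; the `login` function and the data rows are hypothetical.

```python
from typing import Any, Callable, Dict, List


def login(username: str, password: str) -> bool:
    """Hypothetical system under test: accepts exactly one account."""
    return username == "alice" and password == "s3cret"


# Each row plays the role of one line in a TestSheet: inputs plus the expected result.
TEST_DATA: List[Dict[str, Any]] = [
    {"username": "alice", "password": "s3cret", "expected": True},
    {"username": "alice", "password": "wrong", "expected": False},
    {"username": "", "password": "s3cret", "expected": False},
]


def run_data_driven(test: Callable[..., bool], rows: List[Dict[str, Any]]) -> List[bool]:
    """Run the same test once per data row and report pass/fail for each row."""
    results = []
    for row in rows:
        inputs = {key: value for key, value in row.items() if key != "expected"}
        results.append(test(**inputs) == row["expected"])
    return results


if __name__ == "__main__":
    print(run_data_driven(login, TEST_DATA))  # [True, True, True]
```

Adding a new scenario means adding a row of data, not writing a new test, which is what keeps large data-driven suites maintainable.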

Step 5: API and Web Service Testing with TOSCA

TOSCA supports API testing without needing third-party tools like Postman or SoapUI.

✅ Import API Definitions – Add REST/SOAP API specifications.

✅ Create API Requests – Configure request headers, body, and authentication.

✅ Validate API Responses – Use assertions to check response codes and data.

By leveraging API testing, organizations ensure robust back-end validations.

Best Practices for Effective TOSCA Automation

✅ Use Modular Test Cases – Create reusable test modules to reduce maintenance.

✅ Implement CI/CD Integration – Connect TOSCA with Jenkins or Azure DevOps for continuous testing.

✅ Leverage Risk-Based Testing – Focus on critical functionalities first.

✅ Keep Test Data Separate – Use TOSCA Test Data Service (TDS) for better data management.

Following these best practices ensures scalability and efficiency in your automation testing efforts.

How to Enroll in a TOSCA Automation Tool Course

If you want to become a TOSCA expert, consider enrolling in a professional TOSCA Automation Tool Course.

Benefits of a TOSCA Certification Course:

📌 Structured Learning Path – Covers basic to advanced concepts.

📌 Hands-on Projects – Get real-world experience with live testing scenarios.

📌 Industry-Recognized Certification – Boosts your resume for QA automation roles.

Many platforms offer self-paced and instructor-led TOSCA training to help learners gain practical experience.

Conclusion

Mastering the TOSCA Tool for Testing requires structured learning and hands-on practice. By following this step-by-step guide, you can build expertise in automation testing and enhance your career in software testing.

Looking to fast-track your learning? Enroll in a TOSCA Automation Course today and become a skilled automation tester.

Azure DevOps Certification: Your Ultimate Guide to Advancing Your IT Career

With the growing need for efficient software development and deployment, DevOps has become a fundamental approach for IT professionals. Among the available certifications, Azure DevOps Certification is highly sought after, validating expertise in managing DevOps solutions on Microsoft Azure. This guide provides insights into the certification, its benefits, career opportunities, and preparation strategies.

Understanding Azure DevOps

Azure DevOps is a cloud-based service from Microsoft designed to facilitate collaboration between development and operations teams. It includes essential tools for:

Azure Repos – Source code management using Git repositories.

Azure Pipelines – Automating build and deployment processes.

Azure Test Plans – Planning and running manual and exploratory tests and tracking test results.

Azure Artifacts – Hosting package feeds (such as NuGet, npm, Maven, and Python packages) to manage dependencies.

Azure Boards – Agile project tracking and planning.

Gaining expertise in these tools helps professionals streamline software development lifecycles.
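Azure Artifacts hosts packages, while the files a pipeline run publishes are exposed as build artifacts through the Build REST API. Here is a minimal sketch that prepares a request listing a run's artifacts via `_apis/build/builds/{buildId}/artifacts`. It does not send anything; the organization `contoso`, project `My Project`, build ID, and token are placeholders, and `api-version=7.0` is an assumption you should match to the versions your organization supports.

```python
import base64
import urllib.parse
import urllib.request


def build_artifacts_request(organization: str, project: str, build_id: int,
                            pat: str, api_version: str = "7.0") -> urllib.request.Request:
    """Prepare (but do not send) a GET request that lists a build's artifacts."""
    url = (
        f"https://dev.azure.com/{urllib.parse.quote(organization)}/"
        f"{urllib.parse.quote(project)}/_apis/build/builds/{build_id}/artifacts"
        f"?api-version={api_version}"
    )
    # A personal access token (PAT) is sent as HTTP Basic auth with an empty user name.
    token = base64.b64encode(f":{pat}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
    )


if __name__ == "__main__":
    # Placeholder values: replace with your organization, project, build ID, and PAT.
    req = build_artifacts_request("contoso", "My Project", 42, "<personal-access-token>")
    print(req.full_url)
```

To send it, pass the request to `urllib.request.urlopen` and parse the body with `json.load`; the response is expected to contain a `value` list with one entry per published artifact. Keep the PAT in a pipeline secret or environment variable rather than in source code.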

Why Pursue Azure DevOps Certification?

Obtaining an Azure DevOps Certification offers multiple advantages, such as:

Expanded Job Prospects – Preferred by employers looking for skilled DevOps professionals.

Higher Earnings – Certified individuals often earn more than non-certified peers.

Global Recognition – A credential that validates proficiency in Azure DevOps practices.

Career Progression – Opens doors to senior roles like DevOps Engineer and Cloud Consultant.

Practical Knowledge – Helps professionals apply DevOps methodologies effectively in real-world scenarios.

Who Should Consider This Certification?

This certification benefits:

Software Developers

IT Administrators

Cloud Engineers

DevOps Professionals

System Architects

Anyone looking to Learn Azure DevOps and enhance their technical skill set will find this certification valuable.

Azure DevOps Certification Options

Microsoft offers several certifications focusing on DevOps practices:

1. AZ-400: Designing and Implementing Microsoft DevOps Solutions

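Passing AZ-400, together with a prerequisite Azure Administrator Associate or Azure Developer Associate certification, earns the Microsoft Certified: DevOps Engineer Expert credential. The exam covers: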

DevOps strategies and implementation

Continuous integration and deployment (CI/CD)

Infrastructure automation and monitoring

Security and compliance

2. Microsoft Certified: Azure Administrator Associate

Aimed at professionals handling Azure-based infrastructures, including:

Network and storage configurations

Virtual machine deployment

Identity and security management

3. Microsoft Certified: Azure Solutions Architect Expert

This certification is ideal for those designing cloud-based applications and managing Azure workloads efficiently.

Career Opportunities with Azure DevOps Certification

Certified professionals can pursue roles such as:

DevOps Engineer – Specializing in automation and CI/CD.

Cloud Engineer – Focusing on cloud computing solutions.

Site Reliability Engineer (SRE) – Ensuring system stability and performance.

Azure Consultant – Assisting businesses in implementing Azure DevOps.

Automation Engineer – Enhancing software deployment processes through scripting.

These positions offer competitive salaries and significant career growth.

Key Skills Required for Azure DevOps Certification

Professionals preparing for the certification should have expertise in:

CI/CD Pipelines – Automating application delivery.

Infrastructure as Code (IaC) – Managing cloud environments through scripts.

Version Control Systems – Proficiency in Git and repository management.

Containerization – Understanding Docker and Kubernetes.

Security & Monitoring – Implementing best practices for secure deployments.

How to Prepare for the Azure DevOps Certification Exam?

1. Review the Exam Syllabus

The AZ-400 exam covers DevOps methodologies, security, infrastructure, and compliance. Understanding the objectives helps in creating a structured study plan.

2. Enroll in an Azure DevOps Course

Joining an Azure DevOps Advanced Course provides structured learning and hands-on experience. Platforms like Kodestree offer expert-led training, ensuring candidates are well-prepared.

3. Gain Hands-on Experience

Practical exposure is crucial. Setting up DevOps pipelines, managing cloud resources, and automating processes will enhance understanding and confidence.

4. Utilize Microsoft Learning Resources

Microsoft provides free documentation, learning paths, and virtual labs to help candidates prepare effectively.

5. Engage with DevOps Communities

Joining study groups, participating in discussions, and attending webinars keeps candidates updated with industry best practices.

Exam Cost and Preparation Duration

1. Cost

The AZ-400 certification exam costs approximately US$165, with prices varying by country.

2. Preparation Timeline

Beginners: 3-6 months

Intermediate Professionals: 2-3 months

Experienced Engineers: 1-2 months

Azure DevOps vs AWS DevOps Certification

Best Training Options for Azure DevOps Certification

If you’re searching for the best DevOps Training Institute in Bangalore, consider Kodestree. They offer:

Hands-on projects and practical experience.

Placement assistance for job seekers.

Instructor-led Azure DevOps Training Online options for remote learners.

Other learning formats include:

Azure Online Courses for flexible self-paced study.

DevOps Coaching in Bangalore for structured classroom training.

DevOps Classes in Bangalore with personalized mentoring.

Tips for Successfully Passing the Exam

Study Microsoft’s official guides.

Attempt practice exams to identify weak areas.

Apply knowledge to real-world scenarios.

Keep up with the latest Azure DevOps updates.

Revise consistently before the exam day.

Conclusion

Azure DevOps Certification is a valuable credential for IT professionals aiming to specialize in DevOps methodologies and Azure-based cloud solutions. The increasing demand for DevOps expertise makes this certification an excellent career booster, leading to higher salaries and better job prospects.

For structured learning and expert guidance, explore Kodestree’s Azure DevOps Training Online and start your journey toward a rewarding career in DevOps today!

Speed, Quality, and Collaboration: Why Azure DevOps is a Game-Changer...

What is Azure DevOps? 🚀

Azure DevOps is a suite of development tools from Microsoft that supports the entire software development lifecycle (SDLC). It streamlines planning, building, testing, releasing, and monitoring software in a collaborative, automated environment. Azure DevOps integrates with popular tools and supports both Agile and Waterfall methodologies.

Key features include:

Azure Boards 📋: Project management and task tracking.

Azure Repos 🔄: Git-based version control.

Azure Pipelines 🛠️: CI/CD for automating builds, tests, and deployments.

Azure Test Plans ✅: Managing manual and automated tests.

Azure Artifacts 📦: Package management for dependencies.

How Azure DevOps Differs from Traditional Development Practices 🤔

Automation vs. Manual 🔄:

Traditional development involves many manual tasks, leading to errors and delays. Azure DevOps automates tasks like integration and deployment, speeding up the development process and improving quality. ⚡

Collaboration & Integration 🤝:

In traditional setups, teams often work in silos. Azure DevOps fosters collaboration with integrated tools, ensuring all teams (development, QA, operations) work seamlessly together. 🧑‍🤝‍🧑

Agile vs. Waterfall 💧:

Traditional development often follows a Waterfall model, which can be rigid and slow. Azure DevOps supports Agile methodologies like Scrum and Kanban, enabling iterative development, faster feedback, and adaptability. 🔄

CI/CD 🔁:

Traditional practices involve manual integration and deployment. Azure DevOps automates these processes, enabling continuous integration and deployment for faster, more reliable releases. 🚀

Version Control 🗂️:

Traditional version control can be disjointed and difficult to manage. Azure DevOps uses Azure Repos for Git-based version control, enabling better collaboration and efficient code tracking. 🔍

Testing & Quality 🧪:

In traditional practices, testing often occurs late in the process. Azure DevOps integrates automated testing into the CI/CD pipeline, ensuring earlier detection of issues and higher-quality code. ✅

Real-Time Feedback ⏱️:

Traditional methods often lead to delayed feedback, causing rework. With Azure DevOps, teams get real-time feedback through testing and collaboration, improving responsiveness and iteration. ⚡

For More Info Visit: https://nareshit.com/courses/ms-azure-devops-online-training

How to Set Up a CI/CD Pipeline with Jenkins

Setting up a Continuous Integration and Continuous Deployment (CI/CD) pipeline with Jenkins involves automating the process of building, testing, and deploying your applications.

Jenkins is an open-source automation server widely used for its flexibility and integration capabilities.

Here’s a step-by-step breakdown of how to set it up:

1. Install Jenkins: Download and install Jenkins on your server, or use a cloud-hosted version.

Install the plugins relevant to your tech stack, such as Git, Maven, or Docker.

2. Configure Jenkins: Set up credentials for your Git repository and other services (e.g., Docker Hub).

Define global settings such as tools (Java, Node.js, etc.) and environment variables.

3. Set Up a Job or Pipeline: Create a new freestyle job or Pipeline project in Jenkins.

Connect your job to your version control system (e.g., GitHub or GitLab) and use a webhook to trigger builds on code commits.

4. Define Build Steps: Add steps to fetch dependencies, compile code, and build artifacts (e.g., using Maven, Gradle, or npm).

Configure testing steps to run unit tests, integration tests, or static code analysis.

5. Integrate Deployment: Add steps to deploy the build artifact to a staging or production environment.

Deployment targets can include Docker, Kubernetes, AWS, or Azure. Use Jenkins credentials or secrets to authenticate securely with external deployment services.

6. Implement Notifications: Configure build-status notifications through tools like Slack, email, or Microsoft Teams so your team stays updated.

7. Schedule or Trigger Jobs: Set up automatic triggers (e.g., on Git commits) or schedule jobs to run at specific intervals.

8. Monitor and Optimize: Use Jenkins dashboards or plugins like Blue Ocean to monitor pipeline performance and debug issues.

Regularly update Jenkins and its plugins to maintain compatibility and security.

Benefits of Using Jenkins for CI/CD:

Automation: Reduces manual effort in building and deploying applications.

Consistency: Ensures builds and deployments follow standard procedures.

Scalability: Supports distributed builds for large teams and projects.

Flexibility: Integrates with most tools and supports custom scripting.

By setting up a CI/CD pipeline with Jenkins, teams can accelerate development, reduce errors, and focus on delivering high-quality software.

WEBSITE: https://www.ficusoft.in/devops-training-in-chennai/

Implementing DevOps Practices in Azure, AWS, and Google Cloud

Nowadays, in the fast-paced digital world, companies are using DevOps techniques to automate their software development and release activities. Spiral Mantra is one of the best providers of DevOps services in the USA, and we help enterprises build effective solutions on all the leading cloud platforms: Microsoft Azure (with Azure DevOps), Google Cloud Platform, and AWS. This guide outlines how to adopt and use these platforms and what benefits each one brings.

Getting straight to the numbers, the utility computing industry is expected to grow from $6.78 billion to $14.97 billion by 2026. Each provider's services differ:

CodeDeploy and CodePipeline from AWS make it easier and faster to release software.

Azure DevOps Services reduce the workload on teams and provide detailed reports that support predictive, data-driven decisions.

GCP Cloud Build and its integration with Google Kubernetes Engine help DevOps teams handle large-scale computing tasks efficiently.

Master Cloud Computing (Azure, AWS, GCP) with DevOps

Combining DevOps methodology with cloud technologies has changed how companies develop and deliver software. Each major cloud vendor offers specific tools and services for operational workflows, so a DevOps engineer should know what each of them can and can’t do.

Microsoft Azure DevOps

Azure DevOps provides services that enable organizations to run end-to-end DevOps practices effectively. Key features include:

1. Azure Pipelines

Automated CI/CD pipeline creation

Multiple languages and platform compatibility

Compatibility with GitHub and Azure Repos

2. Azure Boards

Agile planning and project management

Work item tracking

Sprint planning capabilities

3. Azure Artifacts

Package management

Compatibility with popular package formats

Secure artifact storage
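The following minimal sketch illustrates the "multiple languages and platform compatibility" point. With a matrix strategy, an azure-pipelines.yml file can fan one job out across Microsoft-hosted Linux, macOS, and Windows agents. The branch name `main` and the echo step are placeholders and do not come from the original post.

```yaml
# azure-pipelines.yml (sketch): run the same job on three hosted OS images
trigger:
  - main                      # placeholder branch name

strategy:
  matrix:
    linux:
      imageName: 'ubuntu-latest'
    mac:
      imageName: 'macOS-latest'
    windows:
      imageName: 'windows-latest'

pool:
  vmImage: $(imageName)       # resolved separately for each matrix leg

steps:
  - script: echo Building on $(Agent.OS)
    displayName: 'Show agent OS'
```

Each matrix entry becomes its own job run. Replacing the echo step with real build commands is what turns this into a cross-platform CI check.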

We are a leading DevOps company and consulting firm that can help startups and enterprises use these features to create scalable, agile delivery processes that serve their business goals.

AWS DevOps Consulting Solutions

Amazon Web Services is a top-tier cloud provider that offers a complete DevOps toolkit, enabling organizations to automate their workflows with services such as:

AWS CodePipeline: A fully managed continuous delivery service that automates the build, test, and deploy phases of your release process.

AWS CodeBuild: A fully managed build service that compiles source code, runs tests, and produces deployable packages.

AWS CodeDeploy: Automates code deployments to compute targets such as Amazon EC2 instances, on-premises servers, and AWS Lambda functions.

Infrastructure as Code (IaC): With AWS CloudFormation, you can create and provision infrastructure by using templates, making it repeatable and version-controlled.
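The following minimal CloudFormation template illustrates the template-driven approach. It assumes the resource you want to provision is a versioned S3 bucket for build artifacts. The parameter, logical resource, and output names are hypothetical.

```yaml
AWSTemplateFormatVersion: '2010-09-09'
Description: Sketch of a versioned S3 bucket for build artifacts (hypothetical names)

Parameters:
  EnvironmentName:            # hypothetical parameter
    Type: String
    AllowedValues: [dev, staging, prod]
    Default: dev

Resources:
  ArtifactBucket:             # hypothetical logical name
    Type: AWS::S3::Bucket
    Properties:
      VersioningConfiguration:
        Status: Enabled       # keep previous versions of each artifact
      Tags:
        - Key: environment
          Value: !Ref EnvironmentName

Outputs:
  ArtifactBucketName:
    Description: Name CloudFormation generated for the bucket
    Value: !Ref ArtifactBucket
```

Because the template is a plain file, it can live in version control next to the application. You could deploy it with `aws cloudformation deploy --template-file artifact-bucket.yaml --stack-name artifact-bucket-dev --parameter-overrides EnvironmentName=dev`; the file and stack names in that command are also placeholders.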

Spiral Mantra’s AWS DevOps consulting experts help businesses implement these solutions effectively and make full use of AWS capabilities.

Google Cloud Platform DevOps

Google Cloud Platform supports a modern approach to DevOps by offering integrated services and tools. It can reduce operational overhead by around 75% thanks to its orchestration capabilities.

Google Cloud Platform offers a wide range of DevOps tools that bring many benefits:

Cloud Deployment Manager: Automates the creation and management of Google Cloud resources using declarative templates.

Stackdriver (now Cloud Monitoring and Cloud Logging): Integrates with GKE to provide monitoring, logging, and diagnostics, including detailed application performance dashboards.

Cloud Pub/Sub: Supports asynchronous messaging, which is critical for scaling and decoupling in microservices architectures.

Key GCP DevOps services include:

1. Cloud Build

Serverless CI/CD platform

Container and application building

Integration with popular repositories

2. Cloud Deploy

Managed continuous delivery service

Progressive delivery support

Environment management

3. Container Registry (now superseded by Artifact Registry)

Secure container storage

Vulnerability scanning

Integration with CI/CD pipelines
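The following minimal cloudbuild.yaml sketch covers the Cloud Build and Container Registry items above. It assumes a Dockerfile at the repository root and uses a hypothetical image name, `my-app`. The gcr.io host matches the Container Registry item. On Artifact Registry, the image path takes the form `REGION-docker.pkg.dev/PROJECT_ID/REPOSITORY/IMAGE` instead.

```yaml
# cloudbuild.yaml (sketch): build a container image and push it to the registry
steps:
  # Build the image with the Docker builder; $PROJECT_ID and $BUILD_ID are
  # default substitutions that Cloud Build fills in for every build
  - name: 'gcr.io/cloud-builders/docker'
    args: ['build', '-t', 'gcr.io/$PROJECT_ID/my-app:$BUILD_ID', '.']

# Images listed here are pushed to the registry after all steps succeed
images:
  - 'gcr.io/$PROJECT_ID/my-app:$BUILD_ID'
```

You can submit this manually with `gcloud builds submit --config cloudbuild.yaml .`, or attach it to a repository trigger so it runs on each push.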

Cloud-Native DevOps: Making The Most of Utility Computing Services

Cloud-native DevOps uses managed cloud services to automate, scale, and optimize your operations. AWS, Azure, and Google Cloud each offer cloud-native services that support:

Automated Serverless Architectures: Serverless computing, available on all three major platforms (AWS Lambda, Azure Functions, and Google Cloud Functions), lets you run code without provisioning or managing servers. This provides automatic scaling, high availability, and low operational overhead.

Event-Driven Workflows: Create custom workflows that respond to real-time events using event-driven and orchestration services such as AWS Step Functions, Azure Logic Apps, or Google Cloud Workflows. These can automate pipelines, CI/CD steps, data processing, and more.

Container Orchestration: Implement container orchestration tools such as Amazon EKS, Azure Kubernetes Service (AKS), or Google Kubernetes Engine (GKE) to scale and automate microservice deployments.
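The following minimal sketch shows container orchestration for a hypothetical `orders-api` microservice image. A Kubernetes Deployment runs a set of replicas, and a HorizontalPodAutoscaler adjusts that count based on CPU usage. The service name, image reference, and port are placeholders. These are standard Kubernetes APIs, so the same manifests should apply on EKS, AKS, or GKE, provided the cluster exposes resource metrics (for example, through metrics-server).

```yaml
# Hypothetical microservice: name, image, and port are placeholders
apiVersion: apps/v1
kind: Deployment
metadata:
  name: orders-api
spec:
  replicas: 2
  selector:
    matchLabels:
      app: orders-api
  template:
    metadata:
      labels:
        app: orders-api
    spec:
      containers:
        - name: orders-api
          image: registry.example.com/orders-api:1.0.0
          ports:
            - containerPort: 8080
          resources:
            requests:
              cpu: 250m        # CPU-utilization autoscaling needs a CPU request
              memory: 256Mi
---
# Scale between 2 and 10 replicas, targeting 70% average CPU utilization
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: orders-api
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: orders-api
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70
```

You can apply both objects with `kubectl apply -f orders-api.yaml`. A CI/CD pipeline would typically run the same command after updating the image tag.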

Implementing DevOps on Azure, AWS, or GCP takes planning and expertise. Spiral Mantra’s complete DevOps services can help your organization implement and get the most out of DevOps practices on all of the leading cloud providers.

Spiral Mantra’s DevOps Consulting Services

We are a renowned DevOps company in the USA that provides one-stop consulting services for all major cloud platforms. Our expertise includes:

Estimation and Strategy Development

Grid computing readiness assessment

Custom implementation roadmap creation

Implementation Services

CI/CD pipeline setup and optimization

Infrastructure automation

Container orchestration

Security integration

Monitoring and logging implementation

Platform-Specific Services

1. Azure DevOps Services in the USA

Azure pipeline optimization

Integration with existing tools

Custom workflow development

Security and compliance implementation

2. AWS Consulting Services in the USA

AWS infrastructure setup

CodePipeline implementation

Containerization strategies

Cost optimization

3. GCP Services in the USA

Cloud Build configuration

Kubernetes implementation

Monitoring and logging setup

Performance optimization

We provide customized solutions tailored to business requirements, with the security, scalability, and performance organizations need to adopt or automate their DevOps activities.

Conclusion

Understanding cloud computing systems and methodology is important for today’s companies. Whether using AWS, Azure, or the Google Cloud Platform, getting things right takes experience, planning, and continual improvement.

Spiral Mantra’s complete DevOps services in the USA help organizations manage the complexity of cloud migration and integration. Our AWS DevOps consulting services and broad experience across all of the leading cloud platforms help businesses reach their digital transformation objectives on time and effectively.

Call Spiral Mantra now to learn how our utility computing expertise can help your company meet its technology objectives. We are a trusted AWS consulting and DevOps company that helps your organization take full advantage of utility computing and DevOps practices.

As a software developer, understanding the full potential of Azure DevOps can significantly enhance your workflow. This platform offers a robust set of tools that streamline the development process from planning to deployment. By leveraging these tools, you can ensure your projects are delivered on time and meet the highest standards of quality.

Key Features of Azure DevOps

One of the standout features of Azure DevOps is its integrated suite of tools that support the entire software development lifecycle. From project planning with Azure Boards to code management with Azure Repos, every stage of development is covered. Backing up your Azure DevOps data is also essential for maintaining the integrity and safety of your project data within the platform.

Another critical feature is Azure Pipelines, which provides continuous integration and continuous delivery (CI/CD) capabilities. This enables you to automate your builds and deployments, ensuring that new code changes are tested and deployed efficiently. Azure Artifacts further enhances this by offering integrated package management, allowing you to create and share packages with your team easily.

Azure DevOps also includes robust testing capabilities through Azure Test Plans. This feature allows teams to create, manage, and execute manual and exploratory tests, supporting comprehensive quality assurance throughout the development process. With built-in traceability between test cases and work items, developers can easily track the progress of testing efforts and identify areas that require additional attention. This integrated approach to testing contributes significantly to the overall reliability and quality of software projects.

Furthermore, Azure DevOps offers powerful analytics and reporting capabilities through Azure Boards Analytics. This feature provides teams with data-driven insights into their development processes, enabling them to identify bottlenecks, track progress, and make informed decisions. With customizable dashboards and a wide range of pre-built reports, teams can visualize key metrics such as velocity, cycle time, and bug trends. This level of visibility into project health and team performance is crucial for continuous improvement and helps organizations optimize their development workflows.

Improving Team Collaboration with Azure DevOps

Azure DevOps excels at enhancing team collaboration by providing tools that facilitate communication and coordination among team members. For instance, Azure Boards offers work item tracking with Kanban boards and backlogs, helping teams stay organized and focused on their tasks. It also integrates with popular collaboration tools like Slack and Microsoft Teams.

The platform also includes robust version control options through Azure Repos, supporting both Git and Team Foundation Version Control (TFVC). This allows team members to work on code simultaneously and manage their changes through branches, pull requests, and merges. Seamless collaboration among team members is central to success in any coding environment.

The platform's extensibility further enhances collaboration by allowing teams to customize their workflows and integrate with a wide range of third-party tools and services. This flexibility enables organizations to tailor Azure DevOps to their specific needs and existing toolchains. Teams can use custom dashboards and widgets to visualize project data, share insights, and make informed decisions collectively. This adaptability lets Azure DevOps evolve alongside the team's changing requirements, keeping it effective as a collaboration hub throughout the project lifecycle.

The Relevance of Azure DevOps for Contemporary Software Development

The importance of Azure DevOps in today's fast-paced software development landscape cannot be overstated. It addresses the critical need for agility, allowing teams to adapt quickly to changing requirements and market conditions. By offering end-to-end solutions for development, testing, and deployment, it minimizes the overhead associated with managing multiple tools and services.

Furthermore, Azure DevOps supports a wide range of languages and platforms, making it versatile for various project types. Whether you're developing web applications, mobile apps, or enterprise solutions, this platform provides the infrastructure to support your development efforts. It also integrates with other Azure services, offering additional capabilities such as deploying machine learning models and managing IoT solutions.

Azure DevOps' relevance is further underscored by its strong support for DevOps practices and culture. It encourages the adoption of methodologies like Infrastructure as Code (IaC) and configuration management, which are crucial for maintaining consistency across development, testing, and production environments. By providing tools that facilitate these practices, Azure DevOps helps organizations achieve higher levels of operational efficiency and reliability. This alignment with modern DevOps principles enables teams to deliver value to customers more rapidly and respond to market changes with greater agility.

Impact of Azure DevOps on Software Quality

Implementing Azure DevOps can have a profound impact on the quality of your software projects. Its comprehensive testing tools allow for thorough validation of code changes before they are deployed to production environments. Automated testing frameworks supported by Azure Pipelines help catch regressions early in the development cycle. Moreover, Azure Monitor provides real-time insights into application performance, helping you identify issues before they affect end users. This proactive approach to monitoring and diagnostics is crucial for maintaining high standards of software quality. By using these integrated tools effectively, you can deliver reliable and performant applications consistently.

Azure DevOps also contributes significantly to software quality through its emphasis on early testing. By integrating testing early in the development cycle, teams can identify and address issues sooner, reducing the cost and effort of fixing bugs in later stages. The platform's support for continuous testing, including running automated tests as part of the CI/CD pipeline, allows every code change to be validated against quality criteria. Combined with code reviews and pull requests in Azure Repos, this proactive approach to quality assurance fosters a culture of quality within development teams and leads to more robust and reliable software products.

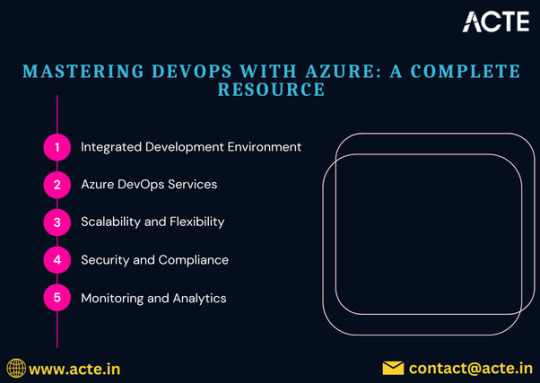

A Deep Dive into DevOps on Azure: Your Essential Guide

In the modern software development landscape, the need for speed, efficiency, and quality is paramount. This is where DevOps comes in, fostering collaboration between development and operations teams. Microsoft Azure has emerged as a leading platform for implementing DevOps practices, providing a suite of tools and services designed to streamline the development lifecycle. In this guide, we’ll explore the key components of DevOps on Azure and how to effectively leverage them.

For those keen to excel in DevOps, enrolling in a DevOps course in Bangalore can be highly advantageous. Such a program provides a unique opportunity to acquire comprehensive knowledge and practical skills crucial for mastering DevOps.

What is DevOps?

DevOps is a combination of cultural philosophies, practices, and tools that increase an organization’s ability to deliver applications and services at high velocity. It promotes collaboration between software developers and IT operations, facilitating faster and more reliable software delivery.

Key Principles of DevOps

Collaboration: Breaking down silos between development and operations teams.

Automation: Reducing manual processes to minimize errors and improve efficiency.

Continuous Integration and Continuous Deployment (CI/CD): Automating testing and deployment to ensure high-quality software.

Monitoring and Feedback: Continuously tracking application performance and gathering user feedback for ongoing improvements.

Why Azure for DevOps?

Microsoft Azure provides an extensive toolkit that supports DevOps practices. Here are some reasons to choose Azure:

1. Integrated Services

Azure offers a robust set of integrated tools that streamline the development process, such as Azure DevOps Services, which includes:

Azure Repos: For version control and source code management.

Azure Pipelines: For CI/CD automation.

Azure Boards: For project management and tracking.

Azure Test Plans: For managing testing processes.

Azure Artifacts: For package management.

2. Scalability

Azure's cloud infrastructure allows for seamless scaling of applications, accommodating everything from small projects to large enterprise solutions.

3. Enhanced Security

Azure provides a secure environment with features like identity management, encryption, and compliance certifications, ensuring that your applications and data are well protected.

Enrolling in a DevOps online course can help individuals unlock the full potential of DevOps and develop a deeper understanding of its complexities.

4. Advanced Monitoring

With tools like Azure Monitor and Application Insights, you can gain valuable insights into application performance, user interactions, and system health, allowing for proactive issue resolution.

Getting Started with DevOps on Azure

Create Your Azure Account: Begin by signing up for an Azure account to access the Azure portal.

Explore Azure DevOps Services: Familiarize yourself with the various Azure DevOps offerings and select the ones that best fit your project needs.

Establish CI/CD Pipelines: Utilize Azure Pipelines to automate your build and release processes, ensuring that code changes are consistently tested and deployed.

Implement Monitoring Solutions: Use Azure Monitor and Application Insights to track application performance and make data-driven decisions for improvements.

Cultivate a DevOps Culture: Encourage open communication and collaboration among teams to fully embrace the DevOps philosophy.

Conclusion

DevOps on Azure provides a powerful framework for modern software development. By leveraging Azure's extensive tools and services, organizations can enhance collaboration, accelerate delivery, and improve software quality. As you embark on your DevOps journey with Azure, remember that the key to success lies in embracing the principles of collaboration, automation, and continuous improvement.

Azure DevOps Advanced Course: Elevate Your DevOps Expertise

The Azure DevOps Advanced Course is for individuals with a solid understanding of DevOps and who want to enhance their skills and knowledge within the Microsoft Azure ecosystem. This course is designed to go beyond the basics and focus on advanced concepts and practices for managing and implementing complex DevOps workflows using Azure tools.

Key Learning Objectives:

Advanced Pipelines for CI/CD: Learn how to build highly scalable and reliable CI/CD pipelines with Azure DevOps tools like Azure Pipelines, Azure Artifacts, and Azure Key Vault. Learn about advanced branching, release gates, and deployment strategies across different environments.

Infrastructure as Code (IaC): Master the use of infrastructure-as-code tools like Azure Resource Manager (ARM) templates and Terraform to automate the provisioning and management of Azure resources. This includes best practices for versioning, testing and deploying infrastructure configurations.

Containerization: Learn containerization with Docker and container orchestration with Kubernetes. Learn how to create, deploy, and manage containerized apps on Azure Kubernetes Service (AKS), and explore concepts such as service meshes and ingress controllers.

Security and Compliance: Understand security best practices across the DevOps lifecycle. Learn how to implement security controls, including code scanning, vulnerability assessment, and secret management, at different stages of the pipeline, and how to support compliance frameworks such as ISO 27001 or SOC 2 using Azure DevOps.

Monitoring & Logging: Acquire expertise in monitoring application performance and health. Use Azure Monitor, Application Insights, and other tools to collect, analyze, and visualize telemetry, and implement alerting mechanisms to troubleshoot problems proactively.

Advanced Debugging and Troubleshooting: Develop advanced skills in troubleshooting to diagnose and solve complex issues with Azure DevOps deployments and pipelines. Learn how to debug code and analyze logs to identify and solve problems.
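The following minimal Azure Pipelines sketch covers the secret-management objective. It assumes an Azure Resource Manager service connection named `my-azure-connection`, a Key Vault named `my-keyvault` holding a secret called `DbPassword`, and a migration script at `./scripts/migrate-db.sh`. All of these names are hypothetical.

```yaml
pool:
  vmImage: 'ubuntu-latest'

steps:
  # Read Key Vault secrets into pipeline variables (their values are masked in logs)
  - task: AzureKeyVault@2
    inputs:
      azureSubscription: 'my-azure-connection'   # hypothetical service connection
      KeyVaultName: 'my-keyvault'                # hypothetical vault name
      SecretsFilter: 'DbPassword'                # fetch only the secrets this job needs
      RunAsPreJob: false

  # Secret variables are not exposed to scripts automatically; map them explicitly
  - script: ./scripts/migrate-db.sh              # hypothetical script
    displayName: 'Run database migration'
    env:
      DB_PASSWORD: $(DbPassword)
```

Narrowing `SecretsFilter` and mapping secrets into `env` only for the step that uses them keeps secret exposure to a minimum. This is the point of handling secrets inside the pipeline rather than in source code.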

Who should attend:

DevOps Engineers

System Administrators

Software Developers

Cloud Architects

IT professionals who want to improve their DevOps skills on the Azure platform

Benefits of taking the course:

Learn advanced DevOps concepts, best practices and more.

Learn how to implement and manage complex DevOps Pipelines.

Learn how to use Azure tools to automate your infrastructure and applications.

Learn how to integrate security, compliance and monitoring into the DevOps Lifecycle.

Gain a competitive advantage in the job market by acquiring advanced Azure DevOps knowledge.

The Azure DevOps Advanced Course is a comprehensive, practical learning experience that will equip you with the knowledge and skills to excel in today’s dynamic cloud computing environment.

Mastering Azure DevOps: Efficient Pipelines and Continuous Integration

Azure DevOps is a comprehensive service that enables teams to manage the development, build, delivery, and operation of software projects. It consists of several services, including Azure Repos for source control, Azure Pipelines for continuous integration and delivery (CI/CD), Azure Test Plans for manual and exploratory testing, and Azure Artifacts for package management. In this tutorial,…

How to Build CI/CD Pipeline with the Azure DevOps

Building a Continuous Integration and Continuous Deployment (CI/CD) pipeline with Azure DevOps is essential for automating and streamlining the development, testing, and deployment of applications. With Azure DevOps, teams can enhance collaboration, automate processes, and efficiently manage code and releases. In this guide, we'll walk through the process of building a CI/CD pipeline, including key components, tools, and tips. Along the way, we'll look at how Azure administration and Azure Data Factory fit into the overall process.

1. Understanding CI/CD and Azure DevOps

CI (Continuous Integration) is the process of automatically integrating code changes from multiple contributors into a shared repository, ensuring that code is tested and validated. CD (Continuous Deployment) takes this a step further by automatically deploying the tested code to a production environment. Together, CI/CD creates an efficient, automated pipeline that minimizes manual intervention and reduces the time it takes to get features from development to production.

Azure DevOps is a cloud-based set of tools that provides the infrastructure needed to build, test, and deploy applications efficiently. It includes various services such as:

Azure Pipelines for CI/CD

Azure Repos for version control

Azure Boards for work tracking

Azure Artifacts for package management

Azure Test Plans for testing

2. Prerequisites for Building a CI/CD Pipeline

Before setting up a CI/CD pipeline in Azure DevOps, you'll need the following:

Azure DevOps account: Create an account at dev.azure.com.

Azure subscription: To deploy the app, you'll need an Azure subscription (for services like Azure Data Factory).

Repository: Code repository (Azure Repos, GitHub, etc.).

Permissions: Access to configure Azure resources and manage pipeline configurations (typically granted to Azure administrators).

3. Step-by-Step Guide to Building a CI/CD Pipeline

Step 1: Create a Project in Azure DevOps

The first step is to create a project in Azure DevOps. This project will house all your CI/CD components.

Navigate to Azure DevOps and sign in.

Click on “New Project.”

Name the project and choose visibility (public or private).

Select a repository type (Git is the most common).

Step 2: Set Up Your Code Repository

Once the project is created, you'll need a code repository. Azure DevOps supports Git repositories, which allow for version control and collaboration among developers.

Click on “Repos” in your project.

If you don’t already have a repo, create one by initializing a new repository or importing an existing Git repository.

Add your application’s source code to this repository.

Step 3: Configure the Build Pipeline (Continuous Integration)

The build pipeline is responsible for compiling code, running tests, and generating artifacts for deployment. The process starts with creating a pipeline in Azure Pipelines.

Go to Pipelines and click on "Create Pipeline."

Select the repository that holds your code (in Azure Repos, GitHub, etc.).

Choose a template for the build pipeline, such as .NET Core, Node.js, Python, etc.

Define the tasks in the YAML file or use the classic editor for a more visual experience.

Example YAML file for a .NET Core application:

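```yaml
# Run the pipeline on every push to the master branch.
trigger:
- master

# Use a Microsoft-hosted Ubuntu build agent.
pool:
  vmImage: 'ubuntu-latest'

steps:
# Install the .NET SDK. .NET Core 3.x is out of support; prefer a current SDK for new projects.
- task: UseDotNet@2
  inputs:
    packageType: 'sdk'
    version: '3.x'
- script: dotnet build --configuration Release
  displayName: 'Build solution'
- script: dotnet test --configuration Release
  displayName: 'Run tests'
```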

This pipeline will automatically trigger when changes are made to the master branch, build the project, and run unit tests.
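The build pipeline above compiles and tests the code but does not yet produce the artifacts that the release pipeline links to in Step 4. The steps below are a minimal sketch of how that could be added to its `steps` list. `PublishPipelineArtifact@1` is a built-in Azure Pipelines task. The project path `src/MyApp/MyApp.csproj` and the artifact name `drop` are assumptions for illustration.

```yaml
# Sketch: append to the steps list of the build pipeline above.
# 'src/MyApp/MyApp.csproj' is a hypothetical project path; 'drop' is an assumed artifact name.
- script: dotnet publish src/MyApp/MyApp.csproj --configuration Release --output $(Build.ArtifactStagingDirectory)
  displayName: 'Publish app'
- task: PublishPipelineArtifact@1
  inputs:
    targetPath: '$(Build.ArtifactStagingDirectory)'  # folder produced by dotnet publish
    artifact: 'drop'                                 # name downstream stages/releases download by
    publishLocation: 'pipeline'                      # store the artifact with the pipeline run
```

Publishing to a single, predictably named artifact keeps the build and release sides loosely coupled. The release only needs to know the artifact name, not how the build produced it.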

Step 4: Define the Release Pipeline (Continuous Deployment)

The release pipeline automates the deployment of the application to various environments like development, staging, or production. This pipeline will be linked to the output of the build pipeline.

Navigate to Pipelines > Releases > New Release Pipeline.

Choose a template for your pipeline (Azure App Service Deployment, for example).

Link the build artifacts from the previous step to this release pipeline.

Add environments (e.g., Development, Staging, Production).

Define deployment steps, such as deploying to an Azure App Service or running custom deployment scripts.
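The classic release editor described above is UI-driven. If you would rather keep deployments in YAML next to the build, a multi-stage pipeline can express the same idea with a deployment job that targets an environment. The fragment below is a sketch, not the only way to do it. It makes these assumptions:

- An earlier stage named `Build` published a pipeline artifact called `drop`.
- It reuses the service connection name `AzureServiceConnection` from the ADF example in this post.
- The `staging` environment and the `my-web-app` App Service are hypothetical names.

```yaml
# Sketch of a YAML deployment stage (alternative to a classic release pipeline).
# Assumes an earlier stage 'Build' published a pipeline artifact named 'drop'.
stages:
- stage: DeployStaging
  dependsOn: Build
  jobs:
  - deployment: DeployWebApp
    environment: 'staging'            # hypothetical environment name
    pool:
      vmImage: 'ubuntu-latest'
    strategy:
      runOnce:
        deploy:
          steps:
          # Deployment jobs download the current run's artifacts
          # to $(Pipeline.Workspace)/<artifact name> automatically.
          - task: AzureWebApp@1
            inputs:
              azureSubscription: 'AzureServiceConnection'
              appType: 'webAppLinux'
              appName: 'my-web-app'   # hypothetical App Service name
              package: '$(Pipeline.Workspace)/drop'
```

Adding Production is a matter of repeating the stage with `dependsOn: DeployStaging` and a `production` environment.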

Step 5: Integrating Azure Data Factory in CI/CD Pipeline

Azure Data Factory (ADF) is an essential service for automating data workflows and pipelines. If your CI/CD pipeline involves deploying or managing data workflows using ADF, Azure DevOps makes the integration seamless.

Export ADF Pipelines: First, export your ADF pipeline and configuration as ARM templates. This ensures that the pipeline definition is version-controlled and deployable across environments.

Deploy ADF Pipelines: Use Azure Pipelines to deploy the ADF pipeline as part of the CD process. This typically involves the Azure Resource Manager template deployment task, or an Azure CLI or Azure PowerShell step that deploys the ARM template.

Example of deploying an ADF ARM template:

```yaml
- task: AzureResourceManagerTemplateDeployment@3
  inputs:
    deploymentScope: 'Resource Group'
    azureResourceManagerConnection: 'AzureServiceConnection'
    subscriptionId: '<your-subscription-id>'   # placeholder; required for Resource Group scope
    action: 'Create Or Update Resource Group'
    resourceGroupName: 'my-adf-resource-group'
    location: 'East US'
    templateLocation: 'Linked artifact'
    csmFile: '$(System.DefaultWorkingDirectory)/drop/ARMTemplate.json'
    csmParametersFile: '$(System.DefaultWorkingDirectory)/drop/ARMTemplateParameters.json'
    deploymentMode: 'Incremental'   # default; 'Complete' deletes resource-group resources not in the template
```

This task ensures that the Azure Data Factory pipeline is automatically deployed during the release process, making it an integral part of the CI/CD pipeline.
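Exporting the factory as ARM templates is what makes it "deployable across environments". One way to use that is to generate one deployment stage per environment from a parameter list. This is a hypothetical layout, not something ADF prescribes. The following are all assumptions:

- the environment names (`dev`, `prod`)
- the per-environment parameter files (`ARMTemplateParameters.dev.json`, `ARMTemplateParameters.prod.json`)
- the resource-group naming pattern
- the subscription placeholder

It also assumes an earlier stage published the templates as a pipeline artifact named `drop`.

```yaml
# Hypothetical: one ADF deployment stage per environment, expanded at compile time.
parameters:
- name: environments
  type: object
  default:
  - dev
  - prod

stages:
- ${{ each env in parameters.environments }}:
  - stage: DeployADF_${{ env }}     # stages without dependsOn run in list order
    jobs:
    - deployment: DeployFactory
      environment: ${{ env }}       # approvals/checks can be attached to the 'prod' environment
      pool:
        vmImage: 'ubuntu-latest'
      strategy:
        runOnce:
          deploy:
            steps:
            # The 'drop' artifact is downloaded automatically to $(Pipeline.Workspace)/drop.
            - task: AzureResourceManagerTemplateDeployment@3
              inputs:
                deploymentScope: 'Resource Group'
                azureResourceManagerConnection: 'AzureServiceConnection'
                subscriptionId: '<your-subscription-id>'
                action: 'Create Or Update Resource Group'
                resourceGroupName: 'my-adf-${{ env }}-rg'
                location: 'East US'
                templateLocation: 'Linked artifact'
                csmFile: '$(Pipeline.Workspace)/drop/ARMTemplate.json'
                csmParametersFile: '$(Pipeline.Workspace)/drop/ARMTemplateParameters.${{ env }}.json'
                deploymentMode: 'Incremental'
```

Only the parameter file and resource group differ between environments. The template itself is deployed unchanged, which is the point of version-controlling it.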

Step 6: Set Up Testing

Testing is an essential part of any CI/CD pipeline, ensuring that your application is reliable and bug-free. You can use Azure Test Plans to manage test cases and run automated tests as part of the pipeline.

Unit Tests: These can be run during the build pipeline to test individual components.

Integration Tests: You can create separate stages in the pipeline to run integration tests after the application is deployed to an environment.

Manual Testing: Azure DevOps provides manual testing options where teams can create, manage, and execute manual test plans.
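A sketch of how unit and integration tests might be wired into a multi-stage YAML pipeline follows. Unit test results are written as TRX files and published with the built-in `PublishTestResults@2` task, so they show up in the run's Tests tab. Integration tests run in their own stage after a deployment. The project paths and the `DeployStaging` stage name are hypothetical. The deployment stage itself is omitted, so this fragment must be combined with one before it validates.

```yaml
stages:
- stage: Build
  jobs:
  - job: UnitTests
    pool:
      vmImage: 'ubuntu-latest'
    steps:
    - script: dotnet test tests/UnitTests/UnitTests.csproj --configuration Release --logger trx --results-directory $(Agent.TempDirectory)/TestResults
      displayName: 'Run unit tests'
    - task: PublishTestResults@2
      condition: succeededOrFailed()      # publish results even when tests fail
      inputs:
        testResultsFormat: 'VSTest'        # TRX files use the VSTest format
        testResultsFiles: '**/*.trx'
        searchFolder: '$(Agent.TempDirectory)/TestResults'
        testRunTitle: 'Unit tests'

# A deployment stage named 'DeployStaging' (hypothetical) would go here.

- stage: IntegrationTests
  dependsOn: DeployStaging
  jobs:
  - job: RunIntegrationTests
    pool:
      vmImage: 'ubuntu-latest'
    steps:
    - script: dotnet test tests/IntegrationTests/IntegrationTests.csproj --configuration Release
      displayName: 'Run integration tests against staging'
```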

Step 7: Configure Notifications and Approvals

Azure DevOps allows you to set up notifications and approvals in the pipeline. This is useful when manual intervention is required before promoting code to production.

Notifications: Set up email or Slack notifications for pipeline failures or successes.

Approvals: Configure manual approvals before releasing to critical environments such as production. This is particularly useful for Azure admin roles responsible for overseeing deployments.
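In YAML pipelines, approvals for an environment are usually configured on the environment itself (Pipelines > Environments > the environment > Approvals and checks) rather than in the YAML file. When an explicit pause inside the pipeline is wanted, the built-in `ManualValidation@0` task can be used in an agentless job. The fragment below is a sketch: the stage names and the notification address are placeholders, not values from this post.

```yaml
# Hypothetical gate between a staging deployment and production.
- stage: ApproveProduction
  dependsOn: DeployStaging              # hypothetical staging stage
  jobs:
  - job: WaitForApproval
    pool: server                        # ManualValidation only runs in an agentless (server) job
    timeoutInMinutes: 1440              # job-level timeout: 1 day
    steps:
    - task: ManualValidation@0
      timeoutInMinutes: 1440
      inputs:
        notifyUsers: 'release-approvers@example.com'   # placeholder address
        instructions: 'Verify the staging deployment, then resume to release to production.'
        onTimeout: 'reject'             # fail the run if nobody responds in time
```

Environment checks are generally preferable for recurring gates, because they apply to every pipeline that targets the environment. The task-based gate suits one-off pauses in a single pipeline.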

4. Best Practices for CI/CD in Azure DevOps

Here are a few best practices to consider when building CI/CD pipelines with Azure DevOps:

Automate Everything: The more you automate, the more efficient your pipeline will be. Automate builds, tests, deployments, and even infrastructure provisioning using Infrastructure as Code (IaC).

Use Branching Strategies: Implement a branching strategy like GitFlow to manage feature development, bug fixes, and releases in a structured way.

Leverage Azure Pipelines Templates: If you have multiple pipelines, use templates to avoid duplicating YAML code. This promotes reusability and consistency across pipelines.

Monitor Pipelines: Use Azure Monitor and Application Insights to track the health and performance of deployed applications and get real-time feedback on deployments, and use the pipeline analytics in Azure DevOps to spot slow or frequently failing pipeline runs.

Security First: Make security checks part of your pipeline by integrating tools like Mend Bolt (formerly WhiteSource Bolt), SonarCloud, or Microsoft Defender for Cloud (formerly Azure Security Center) to scan for vulnerabilities in code and dependencies.

Rollbacks and Blue-Green Deployments: Implement rollback mechanisms to revert to the previous stable version in case of failures. Blue-Green deployments and canary releases are strategies that allow safer production deployments.
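The template and branching practices above can be sketched together. A step template holds the build and test steps once, and the consuming pipeline triggers on GitFlow-style branches. The two files are shown in one block, separated by `---`. The file path `templates/dotnet-build.yml`, the parameter name and the branch list are assumptions for illustration.

```yaml
# File: templates/dotnet-build.yml (hypothetical path): reusable step template
parameters:
- name: buildConfiguration
  type: string
  default: 'Release'

steps:
- script: dotnet build --configuration ${{ parameters.buildConfiguration }}
  displayName: 'Build solution'
- script: dotnet test --configuration ${{ parameters.buildConfiguration }}
  displayName: 'Run tests'
---
# File: azure-pipelines.yml: consumes the template
trigger:
  branches:
    include:             # GitFlow-style branches (assumed naming)
    - main
    - develop
    - release/*

pool:
  vmImage: 'ubuntu-latest'

steps:
- template: templates/dotnet-build.yml
  parameters:
    buildConfiguration: 'Release'
```

Each additional pipeline can reference the same template, so a change to the build steps is made in one place.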

5. Roles of Azure Admin in CI/CD

An Azure admin plays a vital role in managing resources, security, and permissions within the Azure platform. In the context of CI/CD pipelines, the Azure admin ensures that the necessary infrastructure is in place and manages permissions, such as creating service connections between Azure DevOps and Azure resources (e.g., Azure App Service, Azure Data Factory).

Key tasks include:

Resource Provisioning: Setting up Azure resources like VMs, databases, or storage that the application will use.

Security Management: Configuring identity and access management (IAM) to ensure that only authorized users can access sensitive resources.

Cost Management: Monitoring resource usage to optimize costs during deployments.
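Resource provisioning does not have to be a manual task. Once the admin has created a service connection, a pipeline step can provision resources through it. This sketch uses the built-in `AzureCLI@2` task with inline Azure CLI commands. The resource group and storage account names are hypothetical, and in practice an IaC tool such as Bicep or Terraform would usually replace inline commands.

```yaml
# Hypothetical provisioning step using an admin-created service connection.
steps:
- task: AzureCLI@2
  displayName: 'Provision resource group and storage account'
  inputs:
    azureSubscription: 'AzureServiceConnection'   # service connection created by the Azure admin
    scriptType: 'bash'
    scriptLocation: 'inlineScript'
    inlineScript: |
      # Hypothetical names; storage account names must be globally unique, lowercase alphanumerics.
      az group create --name my-app-rg --location eastus
      az storage account create --name myappstorage12345 --resource-group my-app-rg --location eastus --sku Standard_LRS
```

Scoping the service connection to a single resource group limits what the pipeline can touch. This supports the security-management duty listed above.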

6. Conclusion

Building a CI/CD pipeline with Azure DevOps streamlines software delivery by automating the integration, testing, and deployment of code. Integrating services like Azure Data Factory further enhances the ability to automate complex workflows, making the pipeline a central hub for both application and data automation.

The role of the Azure admin is critical in ensuring that resources, permissions, and infrastructure are in place and securely managed, enabling development teams to focus on delivering quality code faster.


MS Azure DevOps Online Training - NareshIT


Website Link: https://nareshit.com/courses/ms-azure-devops-online-training

About: MS Azure DevOps Online Training

Description:

Azure DevOps consists of several key components:

Azure Boards: Agile planning tools for tracking work items, managing backlogs, and planning sprints using Kanban boards or Scrum methodologies.

Azure Repos: Git repositories for version control, enabling teams to collaborate on code, review changes, and manage branches.

Azure Pipelines: CI/CD service for automating the build, test, and deployment process across different platforms and environments.

Azure Artifacts: Package management service for storing and sharing artifacts like binaries, npm packages, NuGet packages, and more.

Azure Test Plans: Test management tools for planning, executing, and tracking tests to ensure application quality.

Azure DevOps Extensions: Marketplace for integrating with third-party tools and extending the capabilities of Azure DevOps.

What are the Course Objectives?

Objectives: The primary objectives of learning Azure DevOps include:

Streamlining Development Processes: Learn how to use Azure DevOps tools to automate repetitive tasks, improve collaboration among team members, and accelerate the software development lifecycle.

Implementing Continuous Integration and Deployment: Understand how to set up automated build and deployment pipelines to deliver high-quality software more frequently and reliably.

Enhancing Collaboration: Master the use of Azure Boards for effective project management and collaboration, enabling teams to plan, track, and deliver software iterations efficiently.

Improving Application Quality: Learn how to leverage Azure Test Plans and other testing tools to ensure the quality and reliability of software through comprehensive testing strategies.

Gaining Insights and Monitoring: Explore how to use Azure DevOps for monitoring application performance, collecting feedback, and gaining insights to continuously improve development processes.

Training Features:

Comprehensive Course Curriculum

Experienced Industry Professionals

24/7 Learning Access

Comprehensive Placement Programs

Hands-on Practice

Lab Facility with Expert Mentors

For Online Training:

India: +91 8179191999

WhatsApp: +91 8179191999

USA: +1 4042329879

Email: [email protected]


Modern CI/CD Pipelines for Cloud-Native Applications

In the rapidly evolving landscape of software development, Continuous Integration/Continuous Deployment (CI/CD) pipelines have become instrumental in ensuring agility, reliability, and efficiency in delivering cloud-native applications. In this blog post, we will delve into the nuances of modern CI/CD pipelines tailored specifically for cloud-native environments.

1. Understanding Cloud-Native CI/CD

Cloud-native CI/CD pipelines are designed to support the specific requirements of applications built using microservices architecture, containers, serverless functions, and other cloud-native technologies. The key components of a cloud-native CI/CD pipeline include:

Source Code Management: Leveraging version control systems like Git to manage code changes and collaboration among developers.

Automated Testing: Implementing automated unit tests, integration tests, and end-to-end tests to ensure code quality and functionality.

Containerization: Building Docker images or using other containerization tools to package applications and their dependencies for consistent deployment.

Infrastructure as Code (IaC): Defining infrastructure resources (e.g., Kubernetes clusters, cloud resources) as code using tools like Terraform or AWS CloudFormation.

Continuous Integration (CI): Automating the process of integrating code changes into a shared repository and running automated tests to validate those changes.

Continuous Deployment (CD): Automating the deployment of tested code changes to production or staging environments based on predefined criteria.

2. Key Practices for Modern CI/CD in Cloud-Native Environments

A. GitOps Workflows: Embracing GitOps principles, where the entire CI/CD pipeline, including infrastructure changes, is managed through version-controlled Git repositories. This enables declarative configuration and promotes transparency and auditability.

B. Automated Testing Strategies: Implementing a comprehensive suite of automated tests, including unit tests, integration tests (e.g., using tools like Cypress or Selenium), and end-to-end tests (e.g., using tools like Kubernetes E2E tests or Postman). Incorporating testing into the pipeline ensures faster feedback loops and early detection of problems.

C. Immutable Deployments: Adopting immutable infrastructure patterns, in which deployments replace entire containers or serverless instances rather than modifying existing ones. This ensures consistency and reproducibility, and makes rollback easier when issues occur.

D. Canary Deployments and Blue-Green Deployments: Using canary deployments (a gradual rollout to a subset of users) and blue-green deployments (switching traffic between the old and new versions) to reduce downtime, validate changes in production, and mitigate risk.

E. Observability and Monitoring: Integrating monitoring tools (e.g., Prometheus, Grafana, the ELK stack) into the CI/CD pipeline to collect metrics, logs, and traces. This provides real-time visibility into application performance, health, and issues, enabling rapid response and troubleshooting.

3. Tools and Technologies for Cloud-Native CI/CD