#kubernetes

Explore tagged Tumblr posts

Text

I just turned off the ethernet interface of a box I was ssh'd into. The sheer idiocy of what I had just done did not hit me until after I hit enter. This box is headless. The interface did not come back up after a hard restart. I am the biggest idiot.

28 notes


Text

64 vCPU/256 GB ram/2 TB SSD EC2 instance with #FreeBSD or Debian Linux as OS 🔥

39 notes


Text

thinking about that one girl i used to see whose safe word was kubernetes. She works for big tech now

3 notes



Text

Ready to future-proof your applications and boost performance? Discover how PHP microservices can transform your development workflow! 💡

In this powerful guide, you'll learn: ✅ What PHP Microservices Architecture really means ✅ How to break a monolithic app into modular services ✅ Best tools for containerization like Docker & Kubernetes ✅ API Gateway strategies and service discovery techniques ✅ Tips on error handling, security, and performance optimization

With real-world examples and practical steps, this guide is perfect for developers and teams aiming for faster deployment, independent scaling, and simplified maintenance.

🎯 Whether you’re a solo developer or scaling a product, understanding microservices is the key to next-level architecture.

🌐 Brought to you by Orbitwebtech, Best Web Development Company in the USA, helping businesses build powerful and scalable web solutions.

📖 Start reading now and give your PHP projects a cutting-edge upgrade!

2 notes


Text

CHECK FOR THINGS THAT PHYSICALLY EXIST BUT HAVE NOT BEEN PERCEIVED YET

INCORPORATE MORE ACCURATE PHYSICAL REALITY INTO GAME

#CHECK FOR THINGS THAT PHYSICALLY EXIST BUT HAVE NOT BEEN PERCEIVED YET#taylor swift#INCORPORATE MORE ACCURATE PHYSICAL REALITY INTO GAME#VIRTUAL MACHINES#VIRTUAL MACHINE#vmware#container#kubernetes#zero trust#microsoft#cisa.gov#pluralsight#pluralsight.com#Wikipedia#wikipedia#wikimedia commons#wii sports wiki#wikipedia.org

18 notes


Text

#self hosted#kubernetes#docker#home server#linux#sorry everyone without a server#no “i'm bald” option for you#you can queue this for a day from now ig

4 notes


Text

so at work theres a kubernetes command called kgpo that is used to list pods and i just mentally call it the "kagepro" command :p

4 notes


Text

What is Argo CD? And When Was Argo CD Established?

What Is Argo CD?

Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes.

In DevOps, Argo CD is a continuous delivery (CD) tool that has become popular for delivering applications to Kubernetes. It is based on the GitOps deployment methodology.

When was Argo CD Established?

The Argo project was made open-source in 2017 by Applatix, whose founding developers were Hong Wang, Jesse Suen, and Alexander Matyushentsev. Intuit acquired Applatix in 2018, and Argo CD was then created at Intuit and made publicly available.

Why Argo CD?

Application definitions, configurations, and environments should be declarative and version-controlled. Application deployment and lifecycle management should be automated, auditable, and easy to understand.

Getting Started

Quick Start

kubectl create namespace argocd
kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

Further user-oriented documentation is available for additional features. Refer to the upgrade guide if you want to upgrade your Argo CD installation. Developer-oriented documentation is available for those interested in building third-party integrations.

How it works

Argo CD defines the intended application state by employing Git repositories as the source of truth, in accordance with the GitOps pattern. There are various approaches to specify Kubernetes manifests:

Kustomize applications

Helm charts

Jsonnet files

Simple YAML/JSON manifest directory

Any custom configuration management tool that is set up as a plugin

Argo CD automates the deployment of the desired application states in the specified target environments. Application deployments can track updates to branches or tags, or be pinned to a specific version of the manifests at a Git commit.
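As a rough illustration of how an application points Argo CD at a Git source, an Application resource might look like the sketch below. The repository URL is the public argoproj example repository; the application name, path, and namespaces are assumptions chosen for the example, not values from this article.

```yaml
# Minimal sketch of an Argo CD Application (names and paths are illustrative).
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: guestbook            # hypothetical application name
  namespace: argocd          # namespace where Argo CD is installed
spec:
  project: default
  source:
    repoURL: https://github.com/argoproj/argocd-example-apps.git
    targetRevision: HEAD     # a branch, a tag, or a commit SHA to pin to
    path: guestbook          # directory of plain YAML manifests in the repo
  destination:
    server: https://kubernetes.default.svc   # the cluster Argo CD runs in
    namespace: guestbook
```

Setting targetRevision to a branch or tag makes the application follow it, while a commit SHA pins the deployment to one version of the manifests.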

Architecture

Argo CD is implemented as a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as defined in the Git repository). A deployed application whose live state deviates from the target state is considered OutOfSync. Argo CD reports and visualizes the differences, and provides ways to sync the live state back to the desired target state either automatically or manually. Any changes made to the desired target state in the Git repository can be automatically applied and reflected in the specified target environments.
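Automatic syncing is opt-in per application. A sketch of how it could be switched on, assuming the helm-guestbook path of the public argoproj example repository and a hypothetical application name:

```yaml
# Sketch: an Application with automated sync enabled (illustrative values).
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: helm-guestbook       # hypothetical application name
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/argoproj/argocd-example-apps.git
    targetRevision: HEAD
    path: helm-guestbook     # a Helm chart directory in the repo
  destination:
    server: https://kubernetes.default.svc
    namespace: helm-guestbook
  syncPolicy:
    automated:
      prune: true            # delete resources that were removed from Git
      selfHeal: true         # revert manual changes made to the live state
```

Without the syncPolicy.automated block, the application is only reported as out of sync and waits for a manual sync.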

Components

API Server

The API server is a gRPC/REST server that exposes the API consumed by the Web UI, CLI, and CI/CD systems. Its duties include the following:

Status reporting and application management

Invoking application operations (such as sync, rollback, and user-defined actions)

Repository and cluster credential management (stored as Kubernetes Secrets)

RBAC enforcement

Authentication, and auth delegation to outside identity providers

Git webhook event listener/forwarder

Repository Server

An internal service called the repository server keeps a local cache of the Git repository containing the application manifests. When given the following inputs, it is in charge of creating and returning the Kubernetes manifests:

URL of the repository

Revision (tag, branch, commit)

Path of the application

Template-specific configurations: helm values.yaml, parameters

Application Controller

The application controller is a Kubernetes controller that continuously monitors running applications and compares their actual, live state against the desired target state as defined in the repository. When it detects an OutOfSync application state, it can optionally take corrective action. It is also responsible for invoking any user-defined hooks for lifecycle events (PreSync, Sync, and PostSync).
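A lifecycle hook is an ordinary Kubernetes resource carrying a hook annotation. The sketch below assumes a hypothetical database migration Job that should run before each sync; the image and command are placeholders, not part of Argo CD.

```yaml
# Sketch: a Job that Argo CD runs as a PreSync hook (image/command are hypothetical).
apiVersion: batch/v1
kind: Job
metadata:
  generateName: schema-migrate-
  annotations:
    argocd.argoproj.io/hook: PreSync
    # Remove the Job once it has completed successfully.
    argocd.argoproj.io/hook-delete-policy: HookSucceeded
spec:
  backoffLimit: 2
  template:
    spec:
      restartPolicy: Never
      containers:
        - name: migrate
          image: example.com/tools/migrate:1.0   # placeholder image
          command: ["./migrate.sh"]              # placeholder command
```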

Features

Applications are automatically deployed to designated target environments.

Multiple configuration management/templating tools (Kustomize, Helm, Jsonnet, and plain-YAML) are supported.

Ability to manage and deploy to multiple clusters

Integration of SSO (OIDC, OAuth2, LDAP, SAML 2.0, Microsoft, LinkedIn, GitHub, GitLab)

RBAC and multi-tenancy authorization policies

Rollback/roll-anywhere to any application configuration committed in the Git repository

Analysis of the application resources’ health state

Automated visualization and detection of configuration drift

Applications can be synced manually or automatically to their desired state.

Web user interface that shows application activity in real time

CLI for CI integration and automation

Integration of webhooks (GitHub, BitBucket, GitLab)

Access tokens for automation

Hooks for PreSync, Sync, and PostSync to facilitate intricate application rollouts (such as canary and blue/green upgrades)

Application event and API call audit trails

Prometheus metrics

Parameter overrides for overriding Helm parameters in Git

Read more on Govindhtech.com

#ArgoCD#CD#GitOps#API#Kubernetes#Git#Argoproject#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

2 notes


Text

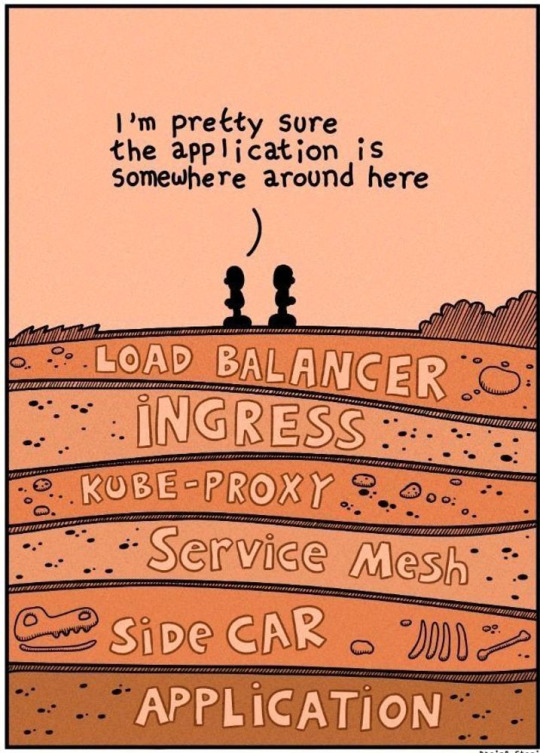

Kubernetes is simple, they say ;-)

79 notes


Text

Why You Need DevOps Consulting for Kubernetes Scaling

In today’s fast-moving technology landscape, scaling Kubernetes clusters has become a challenge for almost every organization. The more companies move toward containerized applications, the harder it gets to scale and manage multiple Kubernetes clusters. In this article, you’ll learn about the growing challenges and the best practices for scaling Kubernetes deployments successfully with expert guidance.

Kubernetes (K8s), the open-source platform used to deploy and manage containerized applications, is now the norm in containerized environments. As businesses adopt DevOps services in the USA for their flexibility and scalability, managing Kubernetes clusters at scale has become a fundamental part of the business.

Understanding Kubernetes Clusters

Before moving on to the challenges and best practices, let’s start with what Kubernetes clusters are and why they are necessary for modern app deployments. A Kubernetes cluster is a set of connected nodes (physical or virtual machines) that run containerized software. K8s clusters are scalable and dynamic, which makes them well suited to large applications accessed from multiple locations.

The Growing Complexity Organizations Must Address

Kubernetes is becoming the default container orchestration solution for many companies. The complexity lies in scaling, because keeping many clusters in working order is challenging. Kubernetes developers face many problems with consistency, security, and performance; the most common challenges are listed below.

Key Challenges in Managing Large-Scale K8s Deployments

Configuration Management: Configuring many different Kubernetes clusters can be a nightmare. Enterprises need uniform policies, security settings, and resource allocations, with flexibility for unique workloads.

Resource Optimization: DevOps consulting services often emphasize that resources should be distributed properly, so that nothing is overprovisioned and applications still run smoothly.

Security and Compliance: Security on distributed Kubernetes clusters needs solid policies and monitoring. Companies have to apply standard security controls while meeting different compliance standards.

Monitoring and Observability: You’ll need advanced monitoring solutions to see how healthy each cluster is and how it is performing. DevOps services in the USA focus on complete observability tooling for efficient cluster management.

Best Practices for Scaling Kubernetes

Implement Infrastructure as Code (IaC)

Apply GitOps processes to cluster configuration.

Use version control for all cluster settings.

Automate cluster creation and administration

Adopt Multi-Cluster Management Tools

Modern organizations should:

Adopt dedicated multi-cluster management software.

Utilize centralized control planes.

Optimize CI/CD Pipelines

K8s is well suited to automating CI/CD pipelines, but the pipelines themselves need to be optimized. With a technique such as blue-green deployments or canary releases, you can roll out updates incrementally rather than pushing them to the whole system at once. This reduces downtime and makes sure only stable releases get into production.

Containerization with Kubernetes can also enable faster and better builds, since developers can build and test apps in separate environments. The pipeline should be tightly integrated with the Kubernetes clusters so that updates flow through properly.

Establish Standardization

When you hire DevOps developers, always make sure they:

Create standardized templates

Implement consistent naming conventions.

Develop reusable deployment patterns.

Optimize Resource Management

Effective resource management includes:

Implementing auto-scaling policies

Adopting quotas and limits on resource allocations.

Using the Cluster Autoscaler for node management
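Quotas on resource allocations are set per namespace. A minimal sketch, assuming a hypothetical team-a namespace and illustrative numbers:

```yaml
# Sketch: cap the total resources a namespace may request (values are illustrative).
apiVersion: v1
kind: ResourceQuota
metadata:
  name: team-a-quota
  namespace: team-a          # hypothetical namespace
spec:
  hard:
    requests.cpu: "4"        # sum of CPU requests across all pods
    requests.memory: 8Gi
    limits.cpu: "8"          # sum of CPU limits across all pods
    limits.memory: 16Gi
    pods: "20"               # maximum number of pods in the namespace
```

Once a quota covers CPU or memory, pods in that namespace have to declare requests and limits for those resources, so quotas are usually paired with a LimitRange that supplies defaults.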

Enhance Security Measures

Security best practices involve:

Role-based access control (RBAC): restrict what each user can do according to their role.

Network policies: isolate workloads by limiting which pods can communicate with each other.

Updates and security audits: apply upgrades and run security audits on an ongoing basis.
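The first two practices map onto standard Kubernetes resources. The sketch below assumes a hypothetical team-a namespace and a user named jane: it grants read-only access to pods and denies all incoming pod traffic by default, so that specific flows have to be allowed explicitly.

```yaml
# Sketch: read-only pod access for one user (namespace and user are hypothetical).
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader
  namespace: team-a
rules:
  - apiGroups: [""]          # "" is the core API group
    resources: ["pods"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods
  namespace: team-a
subjects:
  - kind: User
    name: jane
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
---
# Sketch: default-deny ingress for every pod in the namespace.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: team-a
spec:
  podSelector: {}            # empty selector matches all pods
  policyTypes:
    - Ingress
```

NetworkPolicy objects only take effect when the cluster's network plugin enforces them.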

Leverage DevOps Services and Expertise

Hire dedicated DevOps developers or take advantage of DevOps consulting services like Spiral Mantra to get the best of services under one roof. The company comprises a team of experts who have set up, operated, and optimized Kubernetes at enterprise scale. By employing DevOps developers or DevOps services in the USA, organizations can be sure that they are prepared to address Kubernetes issues efficiently. DevOps consultants can also help automate and standardize K8s alongside existing workflows and processes.

Spiral Mantra DevOps Consulting Services

Spiral Mantra is a DevOps consulting service in the USA specializing in Azure, Google Cloud Platform, and AWS. We are CI/CD integration experts for automated deployment pipelines, and our Kubernetes developers handle containerization and automated container orchestration. We offer every service from the first evaluation through deployment and management, with skilled experts to make sure your organization achieves the best performance.

Frequently Asked Questions (FAQs)

Q. How can businesses manage security on different K8s clusters?

Businesses can implement security by running regular security audits and security scanners and by reviewing their network policies. With the right DevOps consulting services, you can develop and establish robust security plans.

Q. What is DevOps in Kubernetes management?

For Kubernetes management, it is important to implement DevOps practices like automation, infrastructure as code, continuous integration and deployment, security, compliance, etc.

Q. What are the major challenges developers face when managing clusters at scale?

Security concerns, resource management, and overall complexity are the most common challenges. In addition, CI/CD pipeline management is another major source of complexity for developers.

Conclusion

Scaling Kubernetes clusters takes an integrated strategy with the right tools, methods, and knowledge. Automation, standardization, and security should be the main objectives of organizations that need to take advantage of professional DevOps consulting services to get the most out of K8s implementations. If companies follow these best practices and partner with skilled Kubernetes developers, they can run containerized applications efficiently and reliably on a large scale.

1 note


Text

Load Balancing Web Sockets with K8s/Istio

When load balancing WebSockets in a Kubernetes (K8s) environment with Istio, there are several considerations to ensure persistent, low-latency connections. WebSockets require special handling because they are long-lived, bidirectional connections, which are different from standard HTTP request-response communication. Here’s a guide to implementing load balancing for WebSockets using Istio.

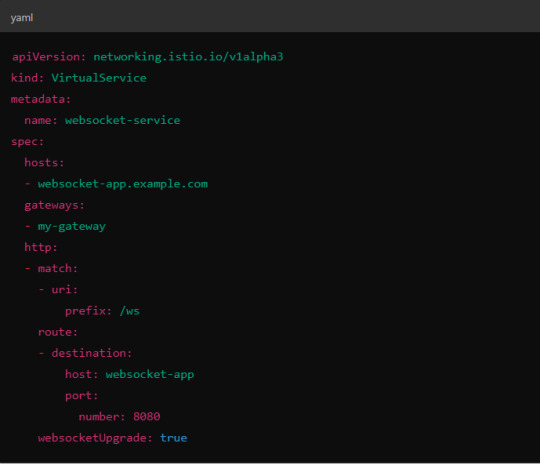

1. Enable WebSocket Support in Istio

By default, Istio supports WebSocket connections, but certain configurations may need tweaking. You should ensure that:

DestinationRules and VirtualServices are configured appropriately to allow WebSocket traffic.

Example VirtualService Configuration.

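In older Istio releases, setting websocketUpgrade: true on the HTTP route explicitly allowed WebSocket traffic. Since Istio 1.0 the upgrade is handled automatically and the field is deprecated, so a plain HTTP route is enough and Istio won’t downgrade the WebSocket connection to HTTP.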
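A VirtualService along the following lines is one way to sketch the routing. The host, gateway name, path prefix, and port are assumptions made for the example. The websocketUpgrade field is deliberately left out: to the best of my knowledge it has been deprecated since Istio began handling upgrades automatically, and current CRD versions may reject it.

```yaml
# Sketch: route WebSocket traffic under /ws to a backend service (names are hypothetical).
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: websocket-app
spec:
  hosts:
    - ws.example.com
  gateways:
    - websocket-gateway      # assumed name of an Istio Gateway
  http:
    - match:
        - uri:
            prefix: /ws
      route:
        - destination:
            host: websocket-app   # Kubernetes Service name
            port:
              number: 8080
```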

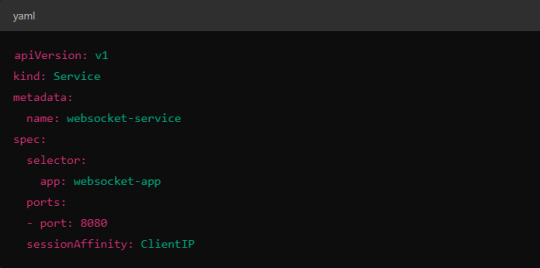

2. Session Affinity (Sticky Sessions)

In WebSocket applications, sticky sessions (session affinity) are often necessary to keep a client tied to the same backend pod. An established WebSocket connection stays on the pod that accepted it, but without session affinity a reconnect, or any extra HTTP request the client makes around the handshake, can be routed to a different pod that holds none of the session's state.

Implementing Session Affinity in Istio.

Session affinity is typically achieved by setting the sessionAffinity field to ClientIP at the Kubernetes service level.

In Istio, you might also control affinity using headers. For example, Istio can route traffic based on headers by configuring a VirtualService to ensure connections stay on the same backend.
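At the Kubernetes level, the sessionAffinity setting lives on the Service. A minimal sketch, with a hypothetical service name, label, and port; whether a sidecar-injected mesh honors this field or needs the affinity expressed in a DestinationRule instead is worth verifying for your Istio version.

```yaml
# Sketch: client-IP session affinity on a Service (names and port are hypothetical).
apiVersion: v1
kind: Service
metadata:
  name: websocket-app
spec:
  selector:
    app: websocket-app
  ports:
    - name: http-ws
      port: 8080
      targetPort: 8080
  sessionAffinity: ClientIP
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 10800  # how long a client IP stays pinned to one pod
```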

3. Load Balancing Strategy

Since WebSocket connections are long-lived, round-robin or random load balancing strategies can lead to unbalanced workloads across pods. To address this, you may consider using least connection or consistent hashing algorithms to ensure that existing connections are efficiently distributed.

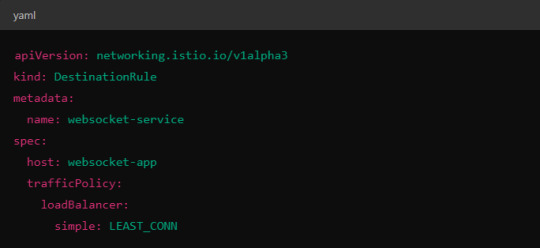

Load Balancer Configuration in Istio.

Istio allows you to specify different load balancing strategies in the DestinationRule for your services. For WebSockets, the LEAST_CONN strategy may be more appropriate; newer Istio releases deprecate it in favor of LEAST_REQUEST.
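A DestinationRule selecting that strategy could look like the sketch below. The host name is an assumption, and the example uses LEAST_REQUEST on the understanding that recent Istio versions prefer it over LEAST_CONN.

```yaml
# Sketch: prefer the backend with the fewest active requests (host is hypothetical).
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: websocket-app
spec:
  host: websocket-app
  trafficPolicy:
    loadBalancer:
      simple: LEAST_REQUEST
```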

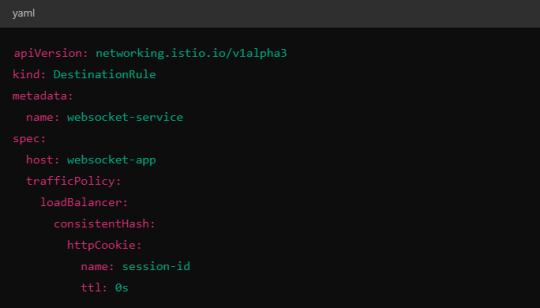

Alternatively, you could use consistent hashing for stickier routing based on connection properties such as the user session ID.
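One way to sketch this is to hash on a request header that carries the session ID. The header name x-session-id and the host are assumptions for the example; a cookie or the source IP can be used as the hash key instead.

```yaml
# Sketch: consistent hashing on a session header (header and host are hypothetical).
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: websocket-app
spec:
  host: websocket-app
  trafficPolicy:
    loadBalancer:
      consistentHash:
        httpHeaderName: x-session-id
```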

This configuration ensures that connections with the same session ID go to the same pod.

4. Scaling Considerations

WebSocket applications often hold a large number of concurrent connections open, so you’ll need to ensure that your Kubernetes cluster can scale appropriately.

Horizontal Pod Autoscaler (HPA): Use an HPA to automatically scale your pods based on metrics like CPU, memory, or custom metrics such as open WebSocket connections.

Istio components: You may also need to scale Istio itself, in particular the ingress gateway that carries the connections, as the number of WebSocket connections grows.
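An HPA combining CPU with a connection-count metric might be sketched as follows. The Deployment name, the thresholds, and the websocket_connections_open metric are all assumptions; a per-pod custom metric like this only works if a metrics adapter exposes it to the Kubernetes custom metrics API.

```yaml
# Sketch: scale on CPU and on open connections per pod (names/values are hypothetical).
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: websocket-app
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: websocket-app
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70
    - type: Pods
      pods:
        metric:
          name: websocket_connections_open   # assumed custom metric
        target:
          type: AverageValue
          averageValue: "1000"
```

Scaling out only affects new connections; existing WebSockets stay on the pods that already hold them until clients reconnect.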

5. Connection Timeouts and Keep-Alive

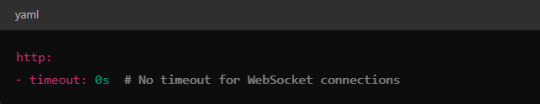

Ensure that both your WebSocket clients and the Istio proxy (Envoy) are configured for long-lived connections. Some settings that need attention:

Timeouts: In VirtualService, make sure there are no aggressive timeout settings that would prematurely close WebSocket connections.

Keep-Alive Settings: You can also adjust the keep-alive settings at the Envoy level if necessary. Envoy, the proxy used by Istio, supports long-lived WebSocket connections out-of-the-box, but custom keep-alive policies can be configured.
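As a sketch of where these settings live: the route timeout is a field on the VirtualService HTTP route, and TCP keep-alive is part of the DestinationRule connection pool. The names and durations below are assumptions, and exactly how Envoy applies each timeout to an upgraded connection should be checked against the Istio and Envoy documentation for your version.

```yaml
# Sketch: disable the route timeout and enable TCP keep-alive (values are illustrative).
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: websocket-app
spec:
  hosts:
    - websocket-app
  http:
    - route:
        - destination:
            host: websocket-app
      timeout: 0s            # 0s disables the per-route request timeout
---
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: websocket-app
spec:
  host: websocket-app
  trafficPolicy:
    connectionPool:
      tcp:
        tcpKeepalive:
          time: 300s         # idle time before the first keep-alive probe
          interval: 60s      # time between probes
          probes: 3          # unanswered probes before the connection is dropped
```

In a real setup the keep-alive settings would normally sit in the same DestinationRule as the load balancing policy rather than in a second rule for the same host.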

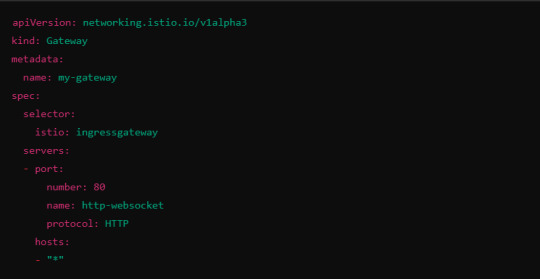

6. Ingress Gateway Configuration

If you're using an Istio Ingress Gateway, ensure that it is configured to handle WebSocket traffic. The gateway should allow for WebSocket connections on the relevant port.
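A Gateway for this purpose can be sketched as below. The gateway name, host, and plain-HTTP port are assumptions; the selector matches the label used by the default Istio ingress gateway deployment. WebSocket clients would typically connect over TLS in production, which needs an HTTPS server entry with a certificate instead.

```yaml
# Sketch: expose a host on the ingress gateway for WebSocket traffic (names are hypothetical).
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: websocket-gateway
spec:
  selector:
    istio: ingressgateway    # default ingress gateway label
  servers:
    - port:
        number: 80
        name: http
        protocol: HTTP       # WebSocket upgrades ride on the HTTP listener
      hosts:
        - ws.example.com
```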

This configuration ensures that the Ingress Gateway can handle WebSocket upgrades and correctly route them to the backend service.

Summary of Key Steps

Enable WebSocket support in Istio’s VirtualService.

Use session affinity to tie WebSocket connections to the same backend pod.

Choose an appropriate load balancing strategy, such as least connection or consistent hashing.

Set timeouts and keep-alive policies to ensure long-lived WebSocket connections.

Configure the Ingress Gateway to handle WebSocket traffic.

By properly configuring Istio, Kubernetes, and your WebSocket service, you can efficiently load balance WebSocket connections in a microservices architecture.

#kubernetes#websockets#Load Balancing#devops#linux#coding#programming#Istio#virtualservices#Load Balancer#Kubernetes cluster#gateway#python#devlog#github#ansible

5 notes


Text

YEEEEEEhawwwwww

(this actually represents several days of hard work and a great accomplishment toward understanding kubernetes)

4 notes


Text

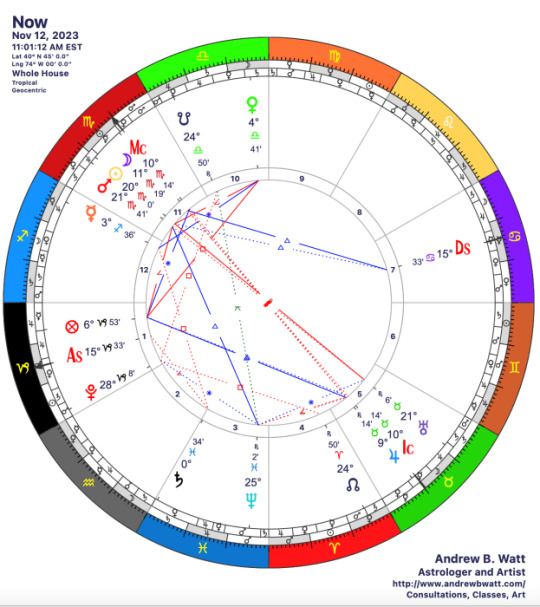

Sun in Scorpio III (12-22 November 2023)

The Kouroi/Curetes/Korybantes, the 'male' spirits of the more dangerous aspects of the Land, rule the next ten days. How do you relate to the risks of living life on Earth? Do you take the risks, accept them, or avoid them?

The Sun enters Scorpio III on November 12, 2023 at 11:01 am EST. We’ve passed the gate of Daylight Saving Time (falling back one hour), and we’re now in the season of darkness pretty definitively. Austin Coppock called this decan The Crow, relating it both to the constellation Corvus and a myth of how Crow stole the feasting-goblet of the sun-god Apollo and was burned black for his trouble.…

View On WordPress

12 notes
