#csv certification

Explore tagged Tumblr posts

Text

Computer System Validation (CSV) Online Course

Looking to build a strong career in pharma compliance? The Computer System Validation (CSV) Online Course at PharmaConnections is designed for hands-on, industry-focused learners. Learn 21 CFR Part 11, GxP validation, risk-based methodologies, and audit-ready documentation from experienced mentors with real-world industry backgrounds.

Regardless of your background in QA, IT, or regulatory affairs, our CSV course helps you broaden your role within the pharmaceutical and life sciences markets. Join now & future-proof your career!

#computer system validation training#computer system validation certification#csv certification#computer system validation course online

0 notes

Text

MES System

Explore our comprehensive MES system, designed to streamline manufacturing processes and enhance production efficiency. Our MES courses cover key aspects of this essential Manufacturing Execution System, providing in-depth knowledge and practical skills for a competitive edge in your industry. Enroll today to master MES.

Get more details at: www.companysconnects.com/manufaturing-execution-system-mes

#pharmacovigilance courses#drug regulatory affairs certification#CSV Certification#Manufacturing Execution System

0 notes

Text

SAP S4 Training

Empower your professional journey with SAP S4 Training. Dive into a transformative learning experience, blending theory and practical application. Acquire proficiency in SAP's revolutionary ERP suite, positioning yourself as a sought-after expert in the ever-evolving realm of enterprise solutions.

Read More at: www.skillbee.co.in/certificatation-course-on-sap-s4-hana

1 note

Text

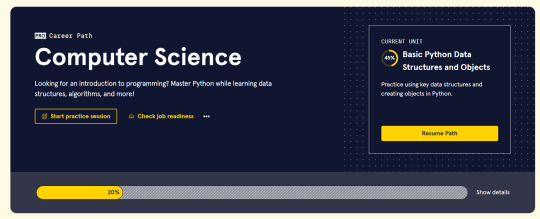

My progress so far in the Computer Science path certification!

Wednesday 4th October 2023

Though I am only 20% done, I am 20% done! The main focus of the first 20% is Python and learning the basics like:

Lists

Dictionaries

Loops

Working with files like .csv and .txt

Basic Git workflow

And I am working on it now! The next update will be at around 40%, to see what new things I'll learn~!

90 notes

Text

6/366

Looks like something got buggy after upgrading to the new LibreOffice.

I had set up a %VAR_nama% variable with an Inkscape extension to generate certificates.

But it turned out to fail.

Thinking back, I had not yet had a chance to update Inkscape or the extension.

It turns out I had updated to libreoffice-7.6.

In the end, I downgraded the extension and then used a manual CSV. How? Just create the file, but rename it to *.csv.

Only then did the 'mail merge' generation for the certificates run smoothly.

N.B. The image is just decoration. I meant to take a screenshot, but since this is an old case, I skipped it.
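
The manual CSV step can also be scripted. Below is a minimal sketch, assuming the extension fills `%VAR_nama%` from a CSV column headed `nama` (an assumption about the extension, not something stated in this post). The names and the helper `build_csv` are hypothetical.

```python
import csv
import io

# Hypothetical recipient names; the header "nama" is assumed to be what
# the extension matches against the %VAR_nama% placeholder.
names = ["Ani", "Budi", "Citra"]


def build_csv(rows, header="nama"):
    """Return CSV text with one header row and one name per line."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow([header])
    for name in rows:
        writer.writerow([name])
    return buffer.getvalue()


csv_text = build_csv(names)
print(csv_text)
```

Saving `csv_text` to a file ending in `.csv` gives the same kind of plain-text file as the rename trick described above.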

3 notes

Text

What are the prerequisites for enrolling in an AI course as a beginner?

Introduction: Why Start Learning AI Now?

Artificial Intelligence (AI) is reshaping industries, automating decisions, and powering tools we use every day. From healthcare diagnostics to personalized marketing and autonomous vehicles, AI plays a crucial role in driving innovation. As businesses and governments increasingly adopt AI technologies, the demand for professionals with AI knowledge is rising rapidly.

If you’re wondering where to start, you’re not alone. Many beginners ask: What do I need to know before enrolling in AI courses for beginners? This blog will walk you through the essential prerequisites you should have before starting your learning journey. Whether you aim to earn an Artificial Intelligence certification online or dive deep into AI and machine learning courses, this guide will help you get prepared.

Understanding the AI Learning Landscape

Before jumping into the prerequisites, it's important to understand what AI courses typically cover. A standard Artificial Intelligence course online may include:

Basics of AI: History, evolution, and key concepts

Machine Learning algorithms

Data preprocessing and handling

Programming for AI (typically in Python)

Neural networks and deep learning

Real-world applications and projects

Given the technical nature of AI, it’s helpful to equip yourself with foundational knowledge in several areas to make your learning smoother and more effective.

1. Mathematics: The Core Building Block

Why It Matters:

Mathematics, particularly linear algebra, calculus, probability, and statistics, forms the backbone of most AI algorithms.

What You Should Know:

Linear Algebra: Understand vectors, matrices, and operations such as dot products and matrix multiplication.

Probability and Statistics: Know basic probability rules, distributions, and descriptive statistics.

Calculus: Grasp basic differentiation and integration, especially how they relate to optimization in algorithms.

Real-World Relevance:

In AI and machine learning models, math is used for building logic, training models, and analyzing results. A strong math foundation increases your confidence in understanding AI models from the ground up.
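
To make the linear algebra and calculus items concrete, here is a minimal sketch in plain Python. The helper names (`dot`, `matmul`, `derivative`) are illustrative only. In practice a library such as NumPy would usually handle this, but writing the operations out by hand shows what they compute.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(u, v))


def matmul(a, b):
    """Multiply matrix a (m x n) by matrix b (n x p), both lists of rows."""
    if len(a[0]) != len(b):
        raise ValueError("inner dimensions must match")
    columns = list(zip(*b))  # transpose b so each column is easy to reach
    return [[dot(row, col) for col in columns] for row in a]


def derivative(f, x, h=1e-6):
    """Central-difference estimate of the slope of f at x."""
    return (f(x + h) - f(x - h)) / (2 * h)


print(dot([1, 2, 3], [4, 5, 6]))                    # 32
print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))   # [[19, 22], [43, 50]]
print(round(derivative(lambda x: x ** 2, 3.0), 3))  # 6.0
```

The derivative estimate is the kind of quantity an optimizer uses to decide which direction reduces a model's error.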

2. Basic Programming Skills

Why It Matters:

AI implementation often requires coding, especially in Python, which is the most widely used language in this field.

What You Should Know:

Variables, data types, and loops

Functions and control structures

Working with libraries such as NumPy and Pandas

Basic data structures (lists, dictionaries, arrays)

Real-World Relevance:

You'll write code to build, train, and evaluate models. Even simple automation tasks or data analysis in AI depend on basic coding.

3. Logical and Analytical Thinking

Why It Matters:

AI development involves problem-solving, debugging, and understanding abstract concepts.

What You Should Know:

Ability to break complex problems into smaller steps

Comfort in dealing with trial-and-error approaches

Understanding flow diagrams and logic design

Real-World Relevance:

You’ll often need to troubleshoot issues or figure out why a model isn’t performing. Analytical thinking helps you improve solutions over time.

4. Familiarity with Data and Databases

Why It Matters:

AI is data-driven. Knowing how to access, clean, and analyze data is a vital skill.

What You Should Know:

Basic understanding of databases and SQL

How to handle datasets (CSV, Excel, JSON)

Concepts like missing data, outliers, and data scaling

Real-World Relevance:

You’ll prepare datasets for model training, understand data patterns, and use data to make intelligent predictions.
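
As an illustration of the data concepts above, here is a minimal sketch using only Python's standard library. The dataset, column names, and helper functions are hypothetical. It fills a missing value with the median, which is less sensitive to outliers than the mean, and rescales a column to the range 0 to 1.

```python
import csv
import io
import statistics

# Hypothetical dataset: one missing income and one obvious outlier.
RAW = """age,income
25,30000
32,
41,52000
29,1000000
"""


def load_rows(text):
    """Parse CSV text into dicts, turning empty fields into None."""
    rows = []
    for record in csv.DictReader(io.StringIO(text)):
        rows.append({k: (float(v) if v else None) for k, v in record.items()})
    return rows


def fill_missing(rows, column):
    """Replace None in a column with the median of the known values."""
    known = [r[column] for r in rows if r[column] is not None]
    median = statistics.median(known)
    for r in rows:
        if r[column] is None:
            r[column] = median
    return rows


def min_max_scale(values):
    """Rescale values linearly into the range [0, 1]."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


rows = fill_missing(load_rows(RAW), "income")
incomes = [r["income"] for r in rows]
scaled_ages = min_max_scale([r["age"] for r in rows])
print(incomes)
print(scaled_ages)
```

Here the missing income is filled with 52000, the median of the three known values. A mean would have been pulled far upward by the 1,000,000 outlier.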

5. Understanding Algorithms and Logic

Why It Matters:

AI courses introduce learners to machine learning models that operate based on algorithmic logic.

What You Should Know:

What algorithms are and how they work

Sorting, searching, and optimization principles

Time and space complexity (basic)

Real-World Relevance:

Grasping algorithmic logic will help you understand how AI selects features, improves predictions, and evolves its learning processes.
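
A small searching example shows why time complexity matters. This is a sketch with illustrative function names that counts how many comparisons each approach makes on the same sorted list.

```python
def linear_search(items, target):
    """Check items one by one; returns (index, comparisons). O(n) time."""
    comparisons = 0
    for index, item in enumerate(items):
        comparisons += 1
        if item == target:
            return index, comparisons
    return -1, comparisons


def binary_search(sorted_items, target):
    """Halve the search range each step; O(log n) time on sorted input."""
    low, high = 0, len(sorted_items) - 1
    comparisons = 0
    while low <= high:
        mid = (low + high) // 2
        comparisons += 1
        if sorted_items[mid] == target:
            return mid, comparisons
        if sorted_items[mid] < target:
            low = mid + 1
        else:
            high = mid - 1
    return -1, comparisons


data = list(range(1, 1025))  # the numbers 1..1024, already sorted
print(linear_search(data, 1024))
print(binary_search(data, 1024))
```

Finding the last of 1,024 items takes 1,024 comparisons with linear search but only about a dozen with binary search, and the gap widens as the list grows.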

6. Familiarity with Tools Used in AI

Why It Matters:

Having basic awareness of AI tools can give you a head start in learning.

Popular Tools Introduced in AI Courses for Beginners:

Python and associated libraries (TensorFlow, Keras, Scikit-learn)

Jupyter Notebooks for coding and documentation

Visualization tools (like Matplotlib or Seaborn)

Real-World Relevance:

When working on real AI projects or certification assessments, using these tools will help you run models and visualize outcomes efficiently.

7. A Curious and Open Mindset

Why It Matters:

AI is a fast-moving field with constant developments. Being curious helps you stay updated and embrace change.

What You Should Focus On:

Read blogs and news related to AI

Experiment with open datasets and basic projects

Be willing to make mistakes and learn from them

Real-World Relevance:

Employers value self-learners and innovators. A curious mindset makes you more adaptable in the tech industry.

8. No Prior Experience in AI? No Problem

Can You Still Enroll?

Yes! AI courses for beginners are specifically designed to start from scratch and gradually build your skills. Many learners without tech backgrounds have successfully transitioned into AI by following a structured path.

Learning Tip:

Start with introductory courses that focus on real-world examples and project-based learning. These courses simplify complex topics and build confidence over time.

Common Myths About AI Courses for Beginners

Myth: You need to be a data scientist before starting AI.

Truth: Many courses begin with foundational skills tailored for complete beginners.

Myth: Only engineers can understand AI.

Truth: AI is for anyone interested in technology, logic, and solving problems.

Myth: You must be great at math.

Truth: You only need basic math and a willingness to learn.

AI in the Real World: Why It Matters

AI is not just for researchers or engineers. It’s now part of many careers, including:

Business Analysts using AI to forecast trends

Healthcare Professionals using AI to assist in diagnosis

Retail Managers using AI for customer insights

HR Teams using AI to streamline hiring

Learning AI isn’t just a trend. It’s a future-ready skill with long-term benefits across industries.

Visual Guide: Beginner AI Workflow

[Start] → Learn Python → Understand Data → Apply Math → Train Models → Evaluate Output → Build Projects → Earn Certification

Key Takeaways

AI courses for beginners don’t require deep technical experience.

You should be familiar with basic math, programming, and data concepts.

A logical, curious mindset is more important than formal credentials.

Certification helps validate your skills and open career doors.

Hands-on practice is key: focus on real-world applications, not just theory.

Ready to Start?

AI is one of the most rewarding fields to enter today. Whether you’re switching careers or leveling up your current role, the path starts with the right learning mindset and foundational skills.

Take the First Step Today

Enroll in AI courses for beginners at H2K Infosys and build real-world AI skills that lead to in-demand jobs and career growth.

#AICoursesForBeginners#ArtificialIntelligenceTraining#LearnAIOnline#ArtificialIntelligenceCertificationOnline#MachineLearningBasics#PythonForAI#DataScienceSkills#AIandMachineLearningCourses#AIProgramming#StartLearningAI

0 notes

Text

[IOTE2025 Shenzhen Exhibitor] SHANGHAI RSID SOLUTIONS will exhibit at the IOTE International Internet of Things Exhibition

With the rapid development of artificial intelligence (AI) and Internet of Things (IoT) technologies, the two are becoming ever more closely integrated, with a profound effect on technological innovation across industries. AGIC + IOTE 2025 will be a professional AI and IoT exhibition, with the show floor expanded to 80,000 square meters, focusing on cutting-edge progress and practical applications of "AI+IoT" technology and on in-depth discussion of how these technologies will reshape our future world. More than 1,000 industry pioneers are expected to exhibit their innovations in smart city construction, Industry 4.0, smart home life, smart logistics systems, smart devices, and digital ecosystem solutions.

SHANGHAI RSID SOLUTIONS CO., LTD will participate in this exhibition. Here is a look at what they will bring to the show.

Exhibitor Introduction

SHANGHAI RSID SOLUTIONS CO., LTD

Booth number: 9B31-2

August 27-29, 2025

Shenzhen World Convention and Exhibition Center (Bao'an New Hall)

Company Profile

Shanghai RSID SOLUTIONS Co., Ltd., headquartered in Shanghai, is a professional one-stop equipment supplier specializing in card manufacturing and application solutions. The company is dedicated to providing complete solutions for smart card and RFID production, personalization, and more. With more than 20 years of industry experience and the trust of many users, our company aims to become the most professional international supplier in China. Through our global network, we offer tailored pre-sales and after-sales services to meet diverse customer needs. Whether you are a card manufacturer, personalization center, or card issuer, we provide reliable services and abundant resources to support your business.

Product Recommendation

The 4 Edges Master is engineered for high-efficiency, vibrant color edge printing on cards with low consumption. Featuring 4 removable card jigs, each with a 400-card capacity, it prints all four edges automatically in a single pass at speeds of up to 4000 UPH. CMYKW printing produces colorful images at up to 1440 dpi, going beyond the traditional colored-core laminating process. It is also a cost-effective alternative to gold/silver stamping for simulating gold and silver colors. Its material versatility extends to metal, glass, wood, and silicone surfaces. New templates can be inverted within seconds for quick job changes. Environmentally friendly UV inks ensure toxin-free, virtually odorless printing. The printer holds certifications including CE, FCC, RoHS, REACH, and HC for compliance and safety.

Efficient & Precise:

Automatically saves test results and waveforms with pass/fail judgment. Short test time (typically 0.5s).

Flexible Operation:

Supports three control modes: Manual, Digital I/O (DIO), and external software control (via Windows registry).

Multi-format Output:

Generates log files (TXT), waveform images (JPEG), and data files (CSV) for analysis and archiving.

Portable & Reliable:

USB bus-powered with compact dimensions (125×165×40mm). Ideal for lab and field applications.

PT300: Next-Gen Industrial-Grade Reader/Writer, supporting parallel testing for high efficiency.

Comprehensive Product Line:

Covers contact/contactless/dual interface reading/writing & electrical testing. Compatible with smart cards, modules, semiconductor chips, and products.

Exceptional Performance:

Contact interface: 1.2~5.5V wide voltage range | 2A high-current output

Contactless interface: 5~25dBm adjustable RF power | parameter testing (capacitance/Q-value)

Modular Flexibility:

Modular design for chassis compatibility and seamless expansion.

Protocol Compatibility:

Supports ISO7816, ISO14443, I2C, SPI, and Class D high-end requirements.

Applicable scenarios:

Industrial manufacturing, laboratory testing and R&D fields. It is on par with international brand readers in terms of high speed, high precision and high compatibility.

Industry trends are changing rapidly, and it is crucial to seize opportunities and seek cooperation. We sincerely invite you to IOTE 2025, the 24th International Internet of Things Exhibition, Shenzhen Station, held at the Shenzhen World Convention and Exhibition Center (Bao'an New Hall) from August 27 to 29, 2025. You are welcome to discuss the industry's cutting-edge trends and development directions with us and to explore cooperation opportunities. We look forward to your visit!

0 notes

Text

Python Programming Institute – Master Python with Softcrayons

Python programming training | Python programming course | Python programming institute

Are you looking for the best Python programming institute to build a successful career in programming? Softcrayons Tech Solution offers industry-recognized Python training designed for beginners, intermediate learners, and experienced developers. Our curriculum is structured to help you master Python, the most in-demand programming language in today’s tech-driven world. Whether you’re interested in web development, data science, machine learning, or automation, learning Python is your first step toward a successful tech career. At Softcrayons Python Programming Institute, we provide hands-on training, real-world projects, and expert mentorship to help you become a Python professional.

Why Choose Softcrayons as Your Python Programming Institute?

When it comes to quality education and practical knowledge, Softcrayons Tech Solution stands out as a top-rated Python programming institute in Noida, Ghaziabad, and Delhi NCR. Here's why:

1. Industry-Oriented Python Curriculum

Our Python course is built with the latest industry trends in mind. From basic Python syntax to advanced object-oriented programming, web frameworks, and automation scripts, we cover everything you need to know to become job-ready.

2. Experienced Python Trainers

Our trainers are industry professionals with years of experience in Python development, data analysis, and machine learning. Their real-world experience helps bridge the gap between theoretical knowledge and practical implementation.

3. 100% Practical Training

At Softcrayons, we believe in learning by doing. Our labs and project-based approach ensure you apply every concept through real-world scenarios. Students build applications, scripts, and web-based tools using Python throughout the course.

4. Certification and Placement Support

Upon completing the course, you’ll receive an industry-validated certification that enhances your resume. We also provide job placement assistance, mock interviews, and resume-building sessions to help you land your dream job.

Python Programming Course Overview

The Python Programming Institute at Softcrayons offers a well-defined path from beginner to advanced levels. Here's what you will learn:

Introduction to Python

What is Python and why is it popular?

Installing Python and setting up IDEs

Python syntax and variables

Data types and operators

Control Structures

Conditional statements: if, else, elif

Loops: for loop, while loop

Nested loops and loop control statements

Functions and Modules

Defining and calling functions

Arguments and return values

Lambda functions

Python modules and packages

Object-Oriented Programming in Python

Classes and objects

Constructors and destructors

Inheritance and Polymorphism

Encapsulation and Abstraction

File Handling

Reading and writing files

Working with CSV and JSON

Exception handling

Python for Web Development

Introduction to Flask and Django frameworks

Creating web applications using Python

Routing, templates, and database integration

Python for Data Science

NumPy and Pandas for data manipulation

Matplotlib and Seaborn for data visualization

Introduction to machine learning libraries: Scikit-learn

Who Can Join Softcrayons Python Programming Institute?

Our Python programming course is ideal for:

College students who want to build a strong foundation in programming.

IT professionals looking to switch careers to Python-related fields.

Data analysts and business professionals who want to automate tasks.

Freshers and job seekers aiming to become Python developers or data scientists.

Benefits of Learning at Softcrayons Python Programming Institute

Here’s how we make your learning experience rewarding and career-defining:

Job-Oriented Training

Our course is aligned with the needs of recruiters in software companies, MNCs, and startups hiring Python developers.

Live Projects and Assignments

You’ll work on real-time projects like e-commerce web apps, chatbot creation, and data visualization dashboards that reflect industry standards.

Flexible Timings and Online Classes

Can’t attend classroom sessions? Don’t worry. We offer weekend batches, online training, and customized corporate sessions.

Affordable Fee Structure

Softcrayons offers high-quality education at competitive pricing, making it the best value Python programming course in Noida and Ghaziabad.

Career Opportunities after Completing Python Training

Python is not just a language; it's a gateway to multiple career paths. After completing the course from our Python Programming Institute, you can apply for roles like:

Python Developer

Web Developer (Django/Flask)

Data Analyst

Data Scientist

Automation Engineer

AI/ML Developer

Software Engineer

Python developers are in high demand in India and globally, with opportunities across finance, healthcare, education, e-commerce, and IT services.

Locations We Serve

Our Python Programming Institute is available at multiple locations including:

Python Training in Noida

Python Training in Ghaziabad

Also available for students in Delhi NCR, Greater Noida, Indirapuram, and nearby areas.

We also welcome international learners through our online Python programming course.

Enroll Today and Become a Python Pro!

Don’t miss the opportunity to learn from the best. Join Softcrayons Python programming institute and take your first step towards a lucrative career in software development. Whether you're a fresher or an experienced professional, Python can elevate your career in unimaginable ways. Contact us

0 notes

Text

Advancing Careers with a Certificate in Computer System Validation and Becoming a Certified Pharmacovigilance Professional

In today's fast-paced pharmaceutical and biotechnology industries, regulatory compliance and patient safety are paramount. Professionals who seek to make a significant impact in these fields must equip themselves with specialized qualifications that demonstrate their expertise and commitment. Two highly sought-after credentials in this regard are the Certificate in Computer System Validation (CSV) and the title of Certified Pharmacovigilance Professional. These certifications not only enhance professional credibility but also open doors to lucrative career opportunities in quality assurance, regulatory affairs, and drug safety.

A Certificate in Computer System Validation is designed to ensure that professionals understand the procedures required to validate computer systems used in regulated environments such as pharmaceutical manufacturing, clinical trials, and laboratory data management. With increasing reliance on software systems in drug development and manufacturing, regulatory bodies like the FDA and EMA require that computerized systems used in these processes comply with GxP ("good practice") regulations, such as Good Manufacturing Practice (GMP), Good Laboratory Practice (GLP), and Good Clinical Practice (GCP). The CSV certificate enables professionals to effectively plan, document, and execute validation protocols to ensure system reliability, data integrity, and regulatory compliance.

The CSV certification typically covers a wide range of topics including risk-based validation, lifecycle management, data integrity principles, audit trail review, and compliance with 21 CFR Part 11. With a Certificate in Computer System Validation, individuals are prepared to take on roles such as validation analyst, quality assurance specialist, or regulatory compliance officer. These roles are vital in maintaining the high standards required by international health authorities, and they offer both job security and growth potential.

On the other hand, becoming a Certified Pharmacovigilance Professional signifies a deep understanding of drug safety protocols, adverse event reporting, and risk management strategies. Pharmacovigilance, the science of detecting, assessing, understanding, and preventing adverse effects or other drug-related problems, plays a critical role in public health. As new therapies are developed and enter the market, the need for vigilant monitoring of their effects becomes even more important.

Certification in pharmacovigilance equips professionals with the knowledge and skills needed to manage adverse event databases, interpret safety data, prepare regulatory reports (such as PSURs and DSURs), and contribute to the overall benefit-risk assessment of medicinal products. Regulatory agencies like the FDA, MHRA, and EMA heavily scrutinize pharmacovigilance data when evaluating the safety of approved drugs, making the role of a Certified Pharmacovigilance Professional indispensable.

Combining the Certificate in Computer System Validation with the Certified Pharmacovigilance Professional credential creates a powerful career pathway for professionals aiming to work at the intersection of technology, regulatory compliance, and patient safety. These certifications serve as a strong foundation for leadership roles in pharmaceutical companies, CROs, and healthcare organizations globally.

In conclusion, obtaining both the Certificate in Computer System Validation and becoming a Certified Pharmacovigilance Professional demonstrates a comprehensive skill set that is in high demand across the life sciences industry. These qualifications not only enhance individual career prospects but also contribute meaningfully to the development of safe and effective medical therapies for patients worldwide.

0 notes

Text

Computer System Validation (CSV) Course

Ready to propel your pharma career forward? The Computer System Validation (CSV) Course at Pharma Connections helps professionals build expertise in GxP compliance, 21 CFR Part 11, and data integrity. Whatever your background in QA, IT, or regulatory affairs, this hands-on course gives you the tools to validate systems with confidence and comply with international standards. Learn live from experts and acquire skills that pharma employers seek today.

Flexible online format

Career support provided

Begin your path to a future-proof career today!

#computer system validation training#computer system validation certification#computer system validation course#csv certification#computer system validation course online

0 notes

Text

Computer System Validation Training

Unlock precision and compliance in your IT systems with our Computer System Validation Training. Equip your team with the skills needed to ensure seamless operations and regulatory adherence in the pharmaceutical industry. Elevate your validation expertise today.

Read More: https://www.skillbee.co.in/courses/certificate-in-computer-system-validation/

0 notes

Text

Python Language Course in Pune | Syllabus & Career Guide

Python has quickly become one of the most popular and in-demand programming languages across industries. Its simplicity, versatility, and powerful libraries make it an ideal choice for both beginners and professionals. From web development and data science to automation and artificial intelligence, Python is used everywhere.

If you’re planning to start your programming journey or upgrade your skills, enrolling in a Python Language Course in Pune at WebAsha Technologies is a smart move. In this article, we’ll walk you through the course syllabus and show how it can shape your IT career.

Why Learn Python?

Python is widely used due to its readability, ease of use, and strong community support. It is beginner-friendly and equally powerful for building advanced-level applications.

Reasons to learn Python:

Python is used in web development, data analysis, machine learning, and scripting.

It has a clean and simple syntax, making it easy to learn.

It’s one of the top skills demanded by employers in the tech industry.

Python opens up opportunities in various domains, including finance, healthcare, and automation.

Python Course Syllabus at WebAsha Technologies

Our Python Language Course is designed to take you from the basics to advanced topics, providing practical knowledge through hands-on projects and assignments.

Module 1: Introduction to Python

History and features of Python

Installing Python and setting up the development environment

Writing your first Python program

Understanding the Python interpreter and syntax

Module 2: Python Basics

Variables, data types, and operators

Input/output operations

Conditional statements (if, else, elif)

Loops (for, while) and control statements (break, continue, pass)

Module 3: Functions and Modules

Defining and calling functions

Arguments and return values

Built-in functions and creating custom modules

Importing modules and working with standard libraries

Module 4: Data Structures in Python

Lists, tuples, sets, and dictionaries

List comprehensions and dictionary comprehensions

Nested data structures and common operations

Module 5: Object-Oriented Programming (OOP)

Understanding classes and objects

Constructors, inheritance, and encapsulation

Polymorphism and method overriding

Working with built-in and user-defined classes

Module 6: File Handling and Exception Management

Reading and writing files

Working with CSV, JSON, and text files

Handling exceptions with try, except, and finally blocks

Creating custom exceptions
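
As an illustration of the Module 6 topics, here is a minimal sketch that combines CSV reading, JSON writing, `try`/`except`/`finally`, and a custom exception. It is not taken from the course material. The file names, the sample data, and the `EmptyFileError` class are hypothetical.

```python
import csv
import json
import os
import tempfile


class EmptyFileError(Exception):
    """Custom exception raised when a CSV file has no data rows."""


def csv_to_json(csv_path, json_path):
    """Read rows from a CSV file and write them to a JSON file."""
    with open(csv_path, newline="", encoding="utf-8") as source:
        rows = list(csv.DictReader(source))
    if not rows:
        raise EmptyFileError(f"{csv_path} has no data rows")
    with open(json_path, "w", encoding="utf-8") as target:
        json.dump(rows, target, indent=2)
    return len(rows)


# Demo in a temporary directory so no real files are touched.
with tempfile.TemporaryDirectory() as folder:
    csv_path = os.path.join(folder, "students.csv")    # hypothetical name
    json_path = os.path.join(folder, "students.json")  # hypothetical name
    with open(csv_path, "w", newline="", encoding="utf-8") as handle:
        handle.write("name,score\nAsha,91\nRavi,78\n")
    try:
        count = csv_to_json(csv_path, json_path)
        with open(json_path, encoding="utf-8") as handle:
            loaded = json.load(handle)
    except (OSError, EmptyFileError) as error:
        count, loaded = 0, []
        print(f"Conversion failed: {error}")
    finally:
        print("Conversion attempt finished")

print(count, loaded)
```

The `finally` block runs whether or not the conversion succeeds, which makes it a natural place for cleanup or logging.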

Module 7: Python for Web Development

Introduction to Flask or Django (based on course track)

Creating simple web applications

Routing, templates, and form handling

Connecting Python to databases (SQLite/MySQL)

Module 8: Python for Data Analysis (Optional Track)

Introduction to NumPy and Pandas

Data manipulation and analysis

Visualization using Matplotlib or Seaborn

Module 9: Final Project and Assessment

Build a real-time project using all the concepts learned

Project presentation and evaluation

Certificate of completion issued by WebAsha Technologies

Career Opportunities After Completing the Python Course

Python opens doors to various exciting career paths in the tech industry. Whether you want to become a developer, data analyst, or automation expert, Python skills are highly transferable.

Popular career roles:

Python Developer

Web Developer (Flask/Django)

Data Analyst or Data Scientist

Machine Learning Engineer

Automation Tester or Scripting Specialist

DevOps Engineer with Python scripting skills

Industries hiring Python professionals:

IT and Software Services

Healthcare and Bioinformatics

FinTech and Banking

Education and EdTech

E-commerce and Retail

Research and AI Startups

Why Choose WebAsha Technologies?

WebAsha Technologies is a leading institute in Pune offering practical, industry-oriented Python training.

What makes our course stand out:

Industry-expert trainers with real-world experience

Practical training with live projects and case studies

Regular assignments, quizzes, and assessments

Career support, resume building, and interview preparation

Certification upon successful course completion

Final Thoughts

If you're looking to build a strong foundation in programming or explore growing tech fields like web development, data science, or automation, the Python Language Course in Pune by WebAsha Technologies is a perfect starting point.

With a well-structured syllabus, expert mentorship, and career support, you will not only learn Python but also gain the confidence to apply it in real-world projects. Enroll today and start shaping your future in tech.

#Python Training in Pune#Python Course in Pune#Python Classes in Pune#Python Certification in Pune#Python Training Institute in Pune#Python Language Course in Pune

0 notes

Text

Scraping Grocery Apps for Nutritional and Ingredient Data

Introduction

As consumers grow more health-conscious, they are paying closer attention to nutrition and to accurate ingredient information. Grocery applications provide detailed information about food products, but collecting and comparing this data manually is time-consuming. Scraping grocery applications for nutritional and ingredient data offers an automated, fast way to obtain that information for any stakeholder, whether customers, businesses, or researchers.

This blog discusses why scraping nutritional data from grocery applications matters, how it works technically, the major challenges, and best practices for extracting reliable information. Whether for diet tracking, regulatory purposes, or personalized shopping, nutritional data scraping is extremely valuable.

Why Scrape Nutritional and Ingredient Data from Grocery Apps?

1. Health and Dietary Awareness

Consumers rely on nutritional and ingredient data scraping to monitor calorie intake, macronutrients, and allergen warnings.

2. Product Comparison and Selection

Web scraping nutritional and ingredient data helps to compare similar products and make informed decisions according to dietary needs.

3. Regulatory & Compliance Requirements

Companies require nutritional and ingredient data extraction to be compliant with food labeling regulations and ensure a fair marketing approach.

4. E-commerce & Grocery Retail Optimization

Web scraping nutritional and ingredient data is used by retailers for better filtering, recommendations, and comparative analysis of similar products.

5. Scientific Research and Analytics

Nutritionists and health professionals use scraped nutritional data for research into diet planning, food safety, and trends in consumer behavior.

How Web Scraping Works for Nutritional and Ingredient Data

1. Identifying Target Grocery Apps

Popular grocery apps with extensive product details include:

Instacart

Amazon Fresh

Walmart Grocery

Kroger

Target Grocery

Whole Foods Market

2. Extracting Product and Nutritional Information

Scraping grocery apps involves making HTTP requests to retrieve HTML data containing nutritional facts and ingredient lists.

3. Parsing and Structuring Data

Using Python tools like BeautifulSoup, Scrapy, or Selenium, structured data is extracted and categorized.
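As a rough illustration of the parsing step, the sketch below uses only Python's standard-library `html.parser` so that it runs without extra installs; BeautifulSoup or Scrapy selectors would play the same role. The HTML fragment, the table layout, and the class and function names are assumptions made for this example, not the markup of any real grocery app.

```python
from html.parser import HTMLParser

# Hypothetical product-page fragment; real grocery sites use their own markup.
SAMPLE_HTML = """
<table class="nutrition">
  <tr><th>Calories</th><td>150</td></tr>
  <tr><th>Total Fat</th><td>8 g</td></tr>
  <tr><th>Sodium</th><td>120 mg</td></tr>
</table>
"""


class NutritionTableParser(HTMLParser):
    """Collects <th>label</th><td>value</td> pairs from table rows."""

    def __init__(self):
        super().__init__()
        self.facts = {}
        self._cell = None  # "th" or "td" while inside such a cell
        self._label = ""
        self._value = ""

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            # Start of a new row: reset the accumulated label and value.
            self._label, self._value = "", ""
        elif tag in ("th", "td"):
            self._cell = tag

    def handle_data(self, data):
        if self._cell == "th":
            self._label += data
        elif self._cell == "td":
            self._value += data

    def handle_endtag(self, tag):
        if tag in ("th", "td"):
            self._cell = None
        elif tag == "tr" and self._label.strip():
            self.facts[self._label.strip()] = self._value.strip()


def parse_nutrition(html):
    """Return a dict of nutrient label -> raw value string."""
    parser = NutritionTableParser()
    parser.feed(html)
    return parser.facts
```

Calling `parse_nutrition(SAMPLE_HTML)` yields a plain dictionary of label and value strings, which can then be cleaned and stored.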

4. Storing and Analyzing Data

The cleaned data is stored in JSON, CSV, or databases for easy access and analysis.
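A minimal sketch of the storage step, assuming the cleaned records are plain dictionaries. The field names and sample values are illustrative only; a database insert would follow the same pattern.

```python
import csv
import io
import json

# Hypothetical cleaned records; field names are illustrative only.
records = [
    {"product": "Oat Milk", "brand": "ExampleBrand", "calories": 120, "sugar_g": 7.0},
    {"product": "Granola Bar", "brand": "ExampleBrand", "calories": 190, "sugar_g": 11.5},
]


def to_json(rows):
    """Serialize the records as a JSON array."""
    return json.dumps(rows, indent=2)


def to_csv(rows):
    """Serialize the records as CSV text, using the first row's keys as the header."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()), lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

Writing to an in-memory buffer keeps the example self-contained; in practice the same writer can target a file opened with `newline=""`.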

5. Displaying Information for End Users

Extracted nutritional and ingredient data can be displayed in dashboards, diet tracking apps, or regulatory compliance tools.

Essential Data Fields for Nutritional Data Scraping

1. Product Details

Product Name

Brand

Category (e.g., dairy, beverages, snacks)

Packaging Information

2. Nutritional Information

Calories

Macronutrients (Carbs, Proteins, Fats)

Sugar and Sodium Content

Fiber and Vitamins

3. Ingredient Data

Full Ingredient List

Organic/Non-Organic Label

Preservatives and Additives

Allergen Warnings

4. Additional Attributes

Expiry Date

Certifications (Non-GMO, Gluten-Free, Vegan)

Serving Size and Portions

Cooking Instructions

Challenges in Scraping Nutritional and Ingredient Data

1. Anti-Scraping Measures

Many grocery apps implement CAPTCHAs, IP bans, and bot detection mechanisms to prevent automated data extraction.

2. Dynamic Webpage Content

JavaScript-based content loading complicates extraction without using tools like Selenium or Puppeteer.

3. Data Inconsistency and Formatting Issues

Different brands and retailers display nutritional information in varied formats, requiring extensive data normalization.

4. Legal and Ethical Considerations

Ensuring compliance with data privacy regulations and robots.txt policies is essential to avoid legal risks.

Best Practices for Scraping Grocery Apps for Nutritional Data

1. Use Rotating Proxies and Headers

Changing IP addresses and user-agent strings prevents detection and blocking.

2. Implement Headless Browsing for Dynamic Content

Selenium or Puppeteer ensures seamless interaction with JavaScript-rendered nutritional data.

3. Schedule Automated Scraping Jobs

Frequent scraping ensures updated and accurate nutritional information for comparisons.

4. Clean and Standardize Data

Using data cleaning and NLP techniques helps resolve inconsistencies in ingredient naming and formatting.
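The kind of cleaning described here can start with very simple rules before any NLP is involved. The sketch below converts quantity strings to grams and canonicalizes ingredient names; the supported units and the function names are assumptions for illustration, and real label data would need many more cases.

```python
import re

# Conversion factors to grams for the units handled in this sketch.
_TO_GRAMS = {"kg": 1000.0, "g": 1.0, "mg": 0.001, "mcg": 0.000001}
_QUANTITY = re.compile(r"^\s*([0-9]+(?:\.[0-9]+)?)\s*(kg|mg|mcg|g)\s*$", re.IGNORECASE)


def to_grams(text):
    """Convert strings such as '250 mg' or '1.5g' to grams; None if unparseable."""
    match = _QUANTITY.match(text)
    if match is None:
        return None
    amount, unit = float(match.group(1)), match.group(2).lower()
    return amount * _TO_GRAMS[unit]


def normalize_ingredient(name):
    """Lowercase, trim, and collapse whitespace so name variants compare equal."""
    return re.sub(r"\s+", " ", name).strip().lower()
```

Returning `None` for unparseable values, rather than guessing, makes it easy to route odd entries to manual review.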

5. Comply with Ethical Web Scraping Standards

Respecting robots.txt directives and seeking permission where necessary ensures responsible data extraction.
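One way to check robots.txt rules before fetching a page is Python's standard-library `urllib.robotparser`. In the sketch below the robots.txt content, the URLs, and the user-agent name are made up for illustration; a real scraper would load the target site's own file.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content; a real scraper would download the site's own file.
ROBOTS_TXT = """
User-agent: *
Disallow: /checkout/
Allow: /
"""


def build_parser(robots_text):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def is_allowed(parser, url, user_agent="example-nutrition-bot"):
    """Return True if the given user agent may fetch the URL."""
    return parser.can_fetch(user_agent, url)
```

A scraper can call `is_allowed` for each URL and skip anything the site has disallowed.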

Building a Nutritional Data Extractor Using Web Scraping APIs

1. Choosing the Right Tech Stack

Programming Language: Python or JavaScript

Scraping Libraries: Scrapy, BeautifulSoup, Selenium

Storage Solutions: PostgreSQL, MongoDB, Google Sheets

APIs for Automation: CrawlXpert, Apify, Scrapy Cloud

2. Developing the Web Scraper

A Python-based scraper using Scrapy or Selenium can fetch and structure nutritional and ingredient data effectively.

3. Creating a Dashboard for Data Visualization

A user-friendly web interface built with React.js or Flask can display comparative nutritional data.

4. Implementing API-Based Data Retrieval

Using APIs ensures real-time access to structured and up-to-date ingredient and nutritional data.

Future of Nutritional Data Scraping with AI and Automation

1. AI-Enhanced Data Normalization

Machine learning models can standardize nutritional data for accurate comparisons and predictions.

2. Blockchain for Data Transparency

Decentralized food data storage could improve trust and traceability in ingredient sourcing.

3. Integration with Wearable Health Devices

Future innovations may allow direct nutritional tracking from grocery apps to smart health monitors.

4. Customized Nutrition Recommendations

With the help of AI, grocery applications will be able to offer personalized meal plans based on scraped nutritional and ingredient data.

Conclusion

Automated web scraping of grocery applications for nutritional and ingredient data provides consumers, businesses, and researchers with accurate dietary information. Not just a tool for price-checking, web scraping touches all aspects of modern-day nutritional analytics.

If you are looking for an advanced nutritional data scraping solution, CrawlXpert is your trusted partner. We provide web scraping services that scrape, process, and analyze grocery nutritional data. Work with CrawlXpert today and let web scraping drive your nutritional and ingredient data for better decisions and business insights!

Know More : https://www.crawlxpert.com/blog/scraping-grocery-apps-for-nutritional-and-ingredient-data

#scrapingnutritionaldatafromgrocery#ScrapeNutritionalDatafromGroceryApps#NutritionalDataScraping#NutritionalDataScrapingwithAI

0 notes

Text

A Complete Guide to Choosing the Best International Address Verification API

1. Introduction

International shipping, eCommerce, KYC regulations, and CRM optimization all depend on precise address data. A reliable Address Verification System API reduces returns, speeds delivery, and ensures legal compliance globally.

2. What Is an International Address Verification API?

It's a cloud-based service that validates, corrects, and formats postal addresses worldwide according to official postal databases (e.g., USPS, Canada Post, Royal Mail, La Poste, etc.).

3. Top Use Cases

eCommerce order validation

FinTech KYC checks

Cross-border logistics and warehousing

B2B data cleaning

Government and healthcare record management

4. Key Features to Look for in 2025

Global coverage: 240+ countries

Real-time validation

Postal authority certification

Geocoding support (lat/lng)

Multilingual address input

Address autocomplete functionality

Deliverability status (DPV, RDI, LACSLink)

5. Comparing the Best APIs

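| API Provider | Global Coverage | Free Tier | Auto-Complete | Compliance |
| --- | --- | --- | --- | --- |
| Loqate | 245 countries | Yes | Yes | GDPR, CCPA |
| Smarty | 240+ countries | Yes | Yes | USPS CASS, HIPAA |
| Melissa | 240+ countries | Limited | Yes | SOC 2, GDPR |
| Google Maps API | 230+ countries | Paid | Yes | Moderate |
| PositionStack | 200+ countries | Yes | No | CCPA |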

6. Integration Options

RESTful API: Simple JSON-based endpoints.

JavaScript SDKs: Easy to add autocomplete fields to checkout forms.

Batch processing: Upload and verify bulk address files (CSV, XLSX).

7. Compliance Considerations

Ensure:

GDPR/CCPA compliance

Data encryption at rest and in transit

No long-term storage of personal data unless required

8. Pricing Models

Per request (e.g., $0.005 per verification)

Tiered subscription

Enterprise unlimited plans

Choose based on your volume.
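To compare pricing models against expected volume, a small calculation is often enough. The sketch below uses the $0.005 per-verification figure from the example above; the subscription fee passed in is a hypothetical input, not a quoted vendor price.

```python
def per_request_cost(verifications, price_per_request=0.005):
    """Cost under pay-per-request pricing ($0.005 is the example rate above)."""
    return verifications * price_per_request


def break_even_volume(subscription_fee, price_per_request=0.005):
    """Volume above which a flat subscription is cheaper than paying per request."""
    return subscription_fee / price_per_request
```

For instance, with a hypothetical flat fee of $200 per month, `break_even_volume(200)` gives about 40,000 verifications: below that volume per-request pricing is cheaper, above it the subscription is.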

9. Case Studies

Logistics firm saved $50K/yr in returns.

FinTech company reduced failed onboarding by 22% using AVS API.

10. Questions to Ask Vendors

Is local address formatting supported (e.g., Japan, Germany)?

Are addresses updated with the latest postal files?

Can I process addresses in bulk?

11. Future Trends

AI-based address correction

Predictive delivery insights

Integration with AR navigation and drones

12. Conclusion

Choosing the right international address verification API is key to scaling your global operations while staying compliant and cost-efficient.

SEO Keywords:

International address verification API, global AVS API, address autocomplete API, best AVS software 2025, validate shipping addresses, postal verification tool


0 notes

Text

Modernize On-Prem Oracle Business Intelligence GL (OBIEE/OBIA) with Google Cloud BigQuery & Looker

1. Why Modernize Legacy On-Prem Oracle BI Application

Oracle Support for OBIEE/OBIA is phasing out and your Organization needs a new home for Analytics.

Cloud data warehouses provide elastic scalability, allowing organizations to easily scale their computing and storage resources up or down based on demand

Leading cloud providers invest heavily in security measures to protect customer data, including robust encryption, access controls, and compliance certifications.

Opens up opportunities for Advanced Analytics like ML / AI / LLM

Cloud data platforms enable integration with source systems (ERP, CRM, HCM, Planning, WMS, and Marketing) for a 360-degree view of analytics.

Cloud data warehouses are built on modern distributed architectures designed to deliver high performance for data processing and analytics workloads.

Cloud data warehouses offer greater flexibility compared to traditional on-premises solutions.

2. Modernize Oracle BI with Cloud Data Architecture

2.1 — Data Extraction & Load via Cloud Composer:

Data from the source system (Oracle EBS GL module) is ingested into the ODS Stage schema through Cloud Composer. The Oracle EBS data is first landed in a GCS bucket in CSV format and then inserted into the ODS STG schema. After the initial load, data from Oracle EBS is pulled incrementally into the GCS bucket.

Then the data is ingested into the ODS Schema. This merging of data from ODS Stage to ODS Schema of BigQuery is implemented in the cloud composer itself.
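The stage-to-ODS merge is typically an upsert. As a rough sketch, the helper below builds a BigQuery `MERGE` statement of the kind a Cloud Composer task could submit. The dataset names, key column, and data columns are assumptions for illustration and are not taken from the implementation described here.

```python
def build_merge_sql(target, source, key_columns, data_columns):
    """Build a BigQuery MERGE that upserts rows from a stage table into an ODS table."""
    on_clause = " AND ".join(f"T.{c} = S.{c}" for c in key_columns)
    update_clause = ", ".join(f"{c} = S.{c}" for c in data_columns)
    all_columns = list(key_columns) + list(data_columns)
    insert_columns = ", ".join(all_columns)
    insert_values = ", ".join(f"S.{c}" for c in all_columns)
    return (
        f"MERGE `{target}` AS T\n"
        f"USING `{source}` AS S\n"
        f"ON {on_clause}\n"
        f"WHEN MATCHED THEN UPDATE SET {update_clause}\n"
        f"WHEN NOT MATCHED THEN INSERT ({insert_columns}) VALUES ({insert_values})"
    )


# Hypothetical dataset and column names, used only to show the generated SQL.
merge_sql = build_merge_sql(
    target="ods.gl_ledgers",
    source="ods_stg.gl_ledgers",
    key_columns=["ledger_id"],
    data_columns=["name", "last_update_date"],
)
```

Rows that already exist in the ODS table are updated in place, and new rows are inserted, so rerunning the same load does not create duplicates.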

Sample tables extracted from Oracle EBS:

GL_BALANCES, GL_LEDGERS, FND_FLEX_VALUES, GL_PERIODS, GL_CODE_COMBINATIONS

Once the data is merged from ODS stage schema to ODS schema, the oracle EBS data file that was created in the GCS bucket is moved to an archived location within the GCS bucket.

2.2 — Change Data Capture ( CDC ) via Cloud Composer

This is done by using a system variable that maintains the start date of the last successful execution of the Cloud Composer code. Only EBS records whose last_update_date is greater than or equal to this date are processed.

Additionally, Cloud Composer accepts a prune-days option, which is used here to go one further day back from the last successful execution start date so that recently modified source data is picked up. This extra cushion ensures that the code does not miss any records modified in the source.
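The incremental window can be sketched in a few lines. Here the rows are plain dictionaries with a `last_update_date` field, and the function names and the default of one prune day are assumptions for illustration rather than the actual Cloud Composer code.

```python
from datetime import datetime, timedelta


def extraction_cutoff(last_success_start, prune_days=1):
    """Lower bound for last_update_date: last successful run start minus prune days."""
    return last_success_start - timedelta(days=prune_days)


def changed_rows(rows, last_success_start, prune_days=1):
    """Keep only rows modified on or after the cutoff."""
    cutoff = extraction_cutoff(last_success_start, prune_days)
    return [row for row in rows if row["last_update_date"] >= cutoff]


# Hypothetical source rows used to show the effect of the prune-day cushion.
sample_rows = [
    {"id": 1, "last_update_date": datetime(2024, 3, 8, 12, 0)},
    {"id": 2, "last_update_date": datetime(2024, 3, 9, 23, 30)},
    {"id": 3, "last_update_date": datetime(2024, 3, 10, 6, 0)},
]
selected = changed_rows(sample_rows, datetime(2024, 3, 10, 2, 0))
```

Because the window overlaps the previous run, some rows are extracted twice; the merge into the ODS schema makes that reprocessing harmless.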

The config file shown in the diagram maintains the information such as project id, region, gcs bucket information, initial extract date, repository etc.

2.3 — Data Transformation via Dataform

Between the ODS layer and the EDW data mart, the data is cleansed and transformed according to business requirements using Dataform in Google Cloud Platform.

The Dataform Scripts are executed on a daily basis to get the most recent data from the source.

This execution of the Dataform jobs is automated using the Cloud Composer. To do this each Dataform job is given a tag name and the cloud composer is able to execute those jobs via these tag names.

In the transformation the data is denormalized as per the data model defined below. All the needed naming standards and conventions are followed while creating data models.

2.4 — Visualization

Looker is connected to the EDW Schema for all the reporting needs.

3. General Ledger Data Models

GL Data Models

In the GL module we have created 2 data models based on the business requirement.

GL Balances

GL Journals

The final looker reports generated are based on these 2 models. All the dimensions are shared across these models.

Some unique features of these models include:

Dynamic currency conversion based on the type of account such as a balance sheet or income statement.

Integration of journal data with other modules such as AP and AR to get invoice, supplier, and customer information. This enables account reconciliation between the general ledger and subledger.

Integration of GL balances data with the account hierarchy from Hyperion allows creation of critical financial reports such as income statements and balance sheets.

Drill through from General ledger to Subledger.

The dimension tables will include the accounting information and their associated hierarchies, Ledger, Period, supplier and customer information.

Except for supplier and customer all other dimensions are common across both the models and are joined with the fact tables to get the desired information.

Both models are integrated with the hierarchies defined in Hyperion to get the hierarchies related to account, department, company, and other segments.

Additionally, both data models apply a currency conversion mechanism to convert transactions recorded in local currency to a global currency such as USD.

4. Deep Dive Into GL Journal Data Model

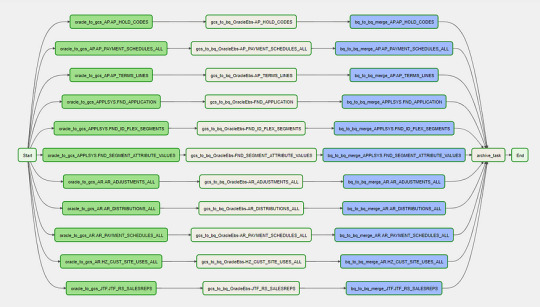

4.1 GL Journal Dataflow

GL Journals Data Flow Diagram

Based on the above figure, data flows from EBS and Hyperion to the GCP ecosystem. Source data is first dropped into a GCS bucket in CSV format using Cloud Composer. Various one-to-one target tables are created in BigQuery to hold the EBS table data. This stage of the data flow is called data replication. All the target tables related to GL are listed above in the data flow diagram. Once data replication is done in the ODS layer, the final EDW tables are created by performing the desired transformations using Dataform. Finally, this transformed data is consumed by Looker to produce the desired reports and actionable insights.

GL Journals Data Integrations: The GL Journal model goes beyond traditional journal entries by integrating with subledgers and with submodules such as Accounts Payable (AP) and Accounts Receivable (AR), allowing journal reporting at invoice, supplier, or customer level.

The granularity of the model is set at Journal Line Level. However the granularity is extended to Invoice Distribution level for purchase and sales journals. The accounting related details are obtained by joining with the GL Code Combination table.

The audit columns are added at the denormalized level and the naming standards are followed.

GL Journal Data Model: EBS tables related to GL Journals, subledgers, AP, and AR are the primary tables for this model, along with other supporting tables.

The model provides detailed journal information at the supplier, customer, and invoice levels, supporting account reconciliation between the General Ledger and Subledger modules. It also establishes an audit trail that provides traceability and accountability for each financial entry.

The model uses a dynamic currency conversion methodology that converts the accounted credit and debit amounts into the global currency based on the account type. Income Statement accounts use the monthly average conversion rate, while Balance Sheet accounts use the month-end rate.
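The rate-selection rule can be expressed compactly. In this sketch the account-type labels, function names, and sample rates are assumptions for illustration; the actual model applies the rule inside the Dataform transformations.

```python
def select_rate(account_type, monthly_average_rate, month_end_rate):
    """Pick the conversion rate according to the account type."""
    if account_type == "income_statement":
        return monthly_average_rate
    if account_type == "balance_sheet":
        return month_end_rate
    raise ValueError(f"Unknown account type: {account_type}")


def to_global_currency(amount, account_type, monthly_average_rate, month_end_rate):
    """Convert an accounted amount into the global currency (for example USD)."""
    return amount * select_rate(account_type, monthly_average_rate, month_end_rate)
```

With a hypothetical monthly average rate of 1.10 and a month-end rate of 1.20, an accounted amount of 100 converts to about 110 on an income statement account and about 120 on a balance sheet account.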

The model is extended to integrate the data flowing from EBS with Hyperion hierarchies related to each of the segments.

Time-series KPIs such as year-to-date, quarter-to-date are pre calculated in the model.

Net Amount is calculated in the model as difference between debit and credit and is available in transaction currency, ledger currency and global currency (example USD)
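The net amount and the to-date measures described above can be sketched as follows. This example assumes a calendar fiscal year and monthly periods keyed by `(year, month)`; the real model may use the ledger's own period calendar, and the function names are illustrative.

```python
def net_amount(debit, credit):
    """Net amount as the difference between debit and credit."""
    return debit - credit


def add_to_date_totals(monthly_net):
    """Given {(year, month): net amount}, return quarter-to-date and year-to-date totals."""
    results = {}
    ytd = qtd = 0.0
    previous_year = previous_quarter = None
    for (year, month) in sorted(monthly_net):
        quarter = (month - 1) // 3 + 1
        if year != previous_year:
            # A new year resets both running totals.
            ytd, qtd = 0.0, 0.0
        elif quarter != previous_quarter:
            # A new quarter within the same year resets only the quarter total.
            qtd = 0.0
        ytd += monthly_net[(year, month)]
        qtd += monthly_net[(year, month)]
        results[(year, month)] = {"qtd": qtd, "ytd": ytd}
        previous_year, previous_quarter = year, quarter
    return results
```

Pre-calculating these totals in the model means reports can read them directly instead of recomputing running sums at query time.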

4.2 Dashboards and Visualizations for GL Journals

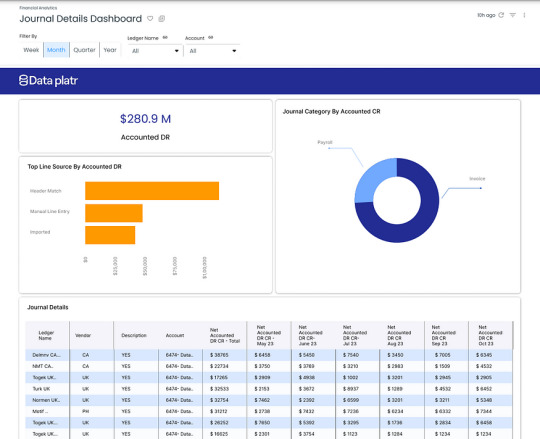

4.2.1 GL Journal Details Dashboard

The Integrated GL Journals Details Dashboard stands out as a powerful tool offering a nuanced exploration of journal entries, uniquely providing insights at the levels of suppliers, customers, and invoices. This dashboard goes beyond traditional GL views, integrating seamlessly with Accounts Payable (AP) and Accounts Receivable (AR) to enhance visibility and facilitate robust account reconciliation between the General Ledger (GL) and SubLedger modules.

Key Features of the GL Journal Details Dashboard:

Journal Entry Details:

Transaction Specifics: Presents comprehensive details for each journal entry, including transaction descriptions, dates, and amounts.

Audit Trail: Establishes a transparent audit trail for accountability and traceability.

Integration with AP and AR:

Accounts Payable (AP): Supplier-Centric View: Provides insights into journals associated with suppliers, fostering a clear understanding of payables and vendor relationships. Invoice-Level Breakdown: Allows detailed analysis of journal entries linked to individual invoices in the AP module.

Accounts Receivable (AR): Customer-Focused Reporting: Enables detailed exploration of journals associated with customers, enhancing visibility into receivables and customer relationships. Invoice-Level Analysis: Offers a granular breakdown of journal entries related to specific customer invoices in the AR module.

Facilitates Account Reconciliation: GL and Subledger Alignment: The integration with AP and AR modules supports seamless account reconciliation, ensuring consistency between the GL and subledger data.

Benefits:

Detailed Financial Transparency: Journals can be analyzed at the levels of suppliers, customers, and invoices, providing a nuanced view of financial transactions for improved transparency.

Efficient Reconciliation Process: Integration with AP and AR streamlines the account reconciliation process, minimizing discrepancies between the GL and subledger.

Informed Decision-Making: Enhanced visibility into journal entries at various levels empowers stakeholders with timely and relevant financial information for strategic decision-making.

Operational Efficiency: Automation of journal entries and integration with subledger modules reduces manual efforts, improving operational efficiency and reducing the risk of errors.

The Integrated GL Journals Details Dashboard serves as a comprehensive solution, allowing detailed exploration of journal entries and promoting transparency at the levels of suppliers, customers, and invoices. By integrating with AP and AR, it not only enhances financial visibility but also streamlines the account reconciliation process, ensuring accurate and efficient financial management.

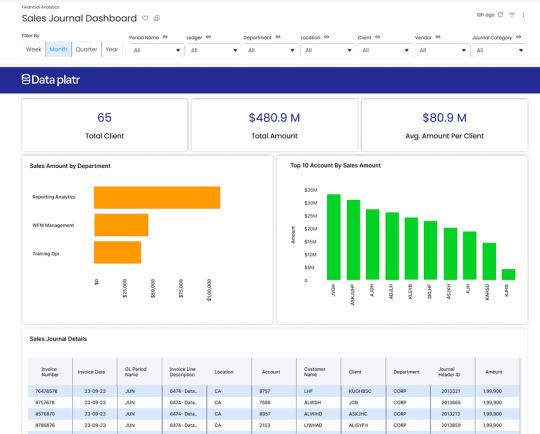

4.2.2 Sales Journal Dashboard

The Sales Journal Dashboard is a specialized tool tailored for in-depth insights into sales transactions, with a customer-centric focus. This dashboard provides a comprehensive analysis of the sales journal, emphasizing customer details and associated invoices.

Key Features of Sales Journal Dashboard:

Customer-Centric Metrics: Sales Transactions by Customer: Presents a detailed breakdown of sales transactions, offering insights into customer-specific performance. Invoice-Level Analysis: Allows users to explore associated invoices for each customer, facilitating a granular understanding of sales activities.

Detailed Sales Analysis: Transaction Breakdown: Provides comprehensive metrics for each sales transaction, including transaction dates, amounts, and descriptions. Historical Performance: Enables users to track the historical sales performance of individual customers.

Strategic Decision Support: Top Customers: Highlights the top-performing customers based on sales transactions, aiding in strategic decision-making and customer relationship management.

User-Friendly Interface: Intuitive design ensures easy navigation, allowing users to quickly access and analyze sales data.

Benefits:

The Sales Journal Dashboard empowers users with customer-centric insights into sales transactions, facilitating strategic decision-making and enhancing customer relationship management. Its user-friendly interface and detailed metrics make it an invaluable tool for businesses seeking a comprehensive view of their sales activities.

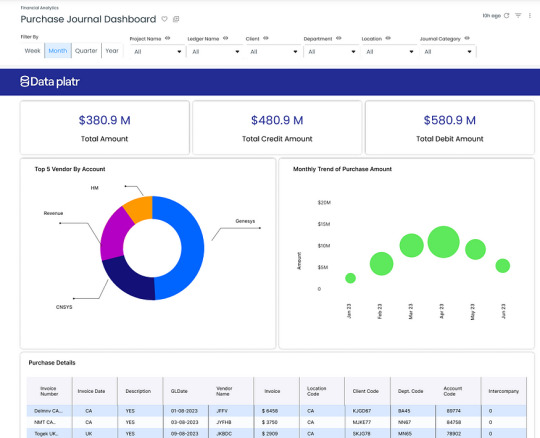

4.2.3 Purchase Journal Dashboard

The Purchase Journal Dashboard is a dedicated platform providing detailed insights into purchasing activities, with a primary focus on suppliers and associated invoices. This dashboard enables users to comprehensively explore the purchase journal, gaining valuable insights into supplier relationships and transaction details.

Key Features of the Purchase Journal Dashboard:

Supplier-Centric Metrics: Purchase Transactions by Supplier: Offers a detailed breakdown of purchasing transactions, providing insights into supplier-specific performance. Invoice-Level Exploration: Allows users to delve into associated invoices for each supplier, facilitating a granular understanding of procurement activities.

Comprehensive Purchase Analysis: Transaction Details: Presents detailed metrics for each purchase transaction, including transaction dates, amounts, and descriptions. Historical Purchasing Trends: Enables users to track historical purchasing trends with individual suppliers.

Strategic Decision Support: Top Suppliers: Highlights the top-performing suppliers based on purchasing transactions, aiding in strategic decision-making and supplier management.

User-Friendly Interface: Intuitive design ensures easy navigation, allowing users to quickly access and analyze purchase data.

Benefits:

The Purchase Journal Dashboard serves as a valuable tool for businesses seeking supplier-focused insights into purchasing transactions. With its user-friendly interface and comprehensive metrics, this dashboard enhances strategic decision-making and supplier relationship management, providing a holistic view of procurement activities.

5. Deep Dive Into GL Balances Data Model

5.1 GL Balances Dataflow

Data flows from EBS and Hyperion to the GCP ecosystem. Source data is first dropped into a GCS bucket in CSV format using Cloud Composer. Various one-to-one target tables are created in BigQuery to hold the EBS table data. This stage of the data flow is called data replication. All the target tables related to GL are listed above in the data flow diagram. Once data replication is done in the ODS layer, the final EDW tables are created by performing the desired transformations using Dataform. Finally, this transformed data is consumed by Looker to produce the desired reports and actionable insights.

GL Balance Dataflow

The GL Balances model presents both a summarized and a detailed view of General Ledger accounting by capturing the monthly activity as well as the balance amounts. The EBS GL balances table is the primary table for this model, along with other supporting tables.

GL Balance Data Integrations

For the combined aggregate the granularity of the data is kept at a GL Code Combination level. The accounting related details are obtained by joining the table with the GL Code Combination table.

Time-series KPIs such as year-to-date, quarter-to-date are pre calculated in the model. Pre-calculated KPIs related to opening and end balances in terms of transaction, ledger and global currency are added in the model.

GL Balances Data Model

The data model integrates GL Balances data with the Account Hierarchy provided by Hyperion. This integration creates a foundation for generating accurate financial reports, including balance sheets and income statements.

The model is extended to integrate the data flowing from EBS with Hyperion hierarchies related to each of the segments.

5.2 Dashboards and Visualizations for GL Balances

5.2.1 GL Balances Dashboard

The dashboard provides a snapshot of financial data for a given fiscal period, offering key insights into the opening balance, period net movement, and ending balance. It allows users to analyze data at both the segment and Hyperion hierarchy levels, supporting in-depth financial understanding and strategic decision-making.

GL Balances Dashboard

Key Features of the GL Balances Dashboard:

Summary Metrics:

Opening Balance: Displays the initial financial position at the beginning of the fiscal period.

Period Net Movement: Illustrates the net changes that occurred during the specified period.

Ending Balance: Highlights the concluding financial position at the end of the fiscal period.
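The three summary metrics are tied together by the usual roll-forward identity: ending balance equals opening balance plus period net movement. The sketch below illustrates that relationship with made-up periods and amounts; it is not the dashboard's actual calculation logic.

```python
def ending_balance(opening_balance, period_net_movement):
    """Ending balance for a period."""
    return opening_balance + period_net_movement


def roll_forward(opening_balance, movements):
    """Chain periods: each period's ending balance is the next period's opening balance."""
    rows = []
    opening = opening_balance
    for period, movement in movements:
        ending = ending_balance(opening, movement)
        rows.append({"period": period, "opening": opening, "net_movement": movement, "ending": ending})
        opening = ending
    return rows


# Hypothetical periods and amounts for illustration.
balances = roll_forward(1000, [("JAN-24", 250), ("FEB-24", -100)])
```

A check that each period's opening balance matches the prior period's ending balance is a simple data-quality test for the balances model.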

Slicing and Dicing:

Segment Level: Users can analyze data by segment, gaining insights into specific business divisions or units.

Hyperion Hierarchy Level: Allows for a hierarchical breakdown, aligning with the organization’s financial structure.

Drill-Down Analysis:

Double-Click Functionality: Reports on the dashboard can be double-clicked to initiate a detailed analysis at the account level, providing a granular understanding of financial activities.

Benefits:

Holistic Financial Overview: The dashboard provides a comprehensive view of the fiscal period, enabling stakeholders to grasp the financial landscape at a glance.

Flexible Data Exploration: Users can slice and dice data based on segments or navigate through the Hyperion hierarchy, tailoring the analysis to specific organizational needs.

Efficient Decision-Making: Quick access to opening balances, net movements, and ending balances empowers decision-makers with timely and relevant financial information.

Detailed Account Analysis: The ability to drill down to the account level ensures a thorough investigation of financial details for precise decision-making and strategic planning.

This comprehensive dashboard offers a powerful tool for financial analysis, combining high-level summaries with the flexibility to explore data at different levels. With its intuitive design and detailed functionality, this dashboard enhances financial transparency and supports informed decision-making within the organization.

6. Conclusion

In this article, we have highlighted the intricacies of general ledger (GL) architecture, data flow, and data models, shedding light on how organizations can leverage advanced technologies to streamline financial processes and gain valuable insights.

We have also described the unique features and capabilities of our GL Journal model, which integrates the General Ledger with Subledger systems such as Accounts Payable (AP) and Accounts Receivable (AR). This integration allows for a seamless flow of information, enabling detailed analysis at the invoice level and providing end-to-end visibility in one place. The ability to drill through from a journal entry all the way down to individual invoices sets our model apart, offering transparency and granularity in financial data.

Furthermore, we have implemented a dynamic currency conversion mechanism that automatically converts transaction amounts into a global currency (example USD) based on the account type, whether it be income statement or balance sheet accounts. This feature enhances international financial reporting and ensures consistency across diverse financial operations.

In addition, our GL Balances model captures both monthly actuals or activity amounts and balance amounts, supporting critical reporting requirements such as income statements, balance sheets, and trial balances. The integration with Hyperion hierarchies further enriches our models, providing users with a comprehensive view of financial information aligned with organizational structures.

As organizations navigate the complexities of modern finance, our innovative GL solutions offer a robust foundation for informed decision-making, strategic planning, and regulatory compliance. By harnessing the power of GCP and data integration, we empower financial professionals to unlock actionable insights and drive sustainable growth in today’s dynamic business landscape.

How Data platr can help?

Data platr specializes in delivering cutting-edge solutions in the realm of financial Analytics with a focus on leveraging the power of Google Cloud Platform (GCP) / Snowflake / AWS. Through our expertise, we provide comprehensive analytics solutions tailored to optimize general ledger analysis and reconciliation. By harnessing the capabilities of cloud, we offer a robust framework for implementing advanced analytics tools, allowing businesses to gain actionable insights and make data driven decisions.

Curious And Would Like To Hear More About This Article?

Contact us at [email protected] or Book time with me to organize a 100%-free, no-obligation call

0 notes

Text

GMP Consultants: Integrating with Data Integrity and Computer System Validation

In today’s heavily regulated industries, Good Manufacturing Practice (GMP) certification is an indispensable element in delivering products that are consistently produced and controlled to quality standards. GMP is also essential for industries such as pharmaceuticals, biotechnology, food, and cosmetics to protect public health.

Meanwhile, the need for Data Integrity (DI) and Computer System Validation (CSV) is becoming a standard around the world. Regulatory authorities such as the FDA, EMA, and MHRA are looking for more than just good manufacturing, they're looking for credible, reliable data that supports product quality.

When the objectives of GMP Certification in UAE are coupled with Data Integrity and CSV programs, the result tightens the entire compliance program, increases operational effectiveness, and builds a higher level of trust with consumers and regulators.

What is GMP Certification?

GMP certification is proof that the manufacturer has followed specific guidelines for high quality, safety, and efficacy. Those standards pertain to every aspect of production, from raw materials and personnel hygiene to equipment cleaning and documentation.

Industries that Require GMP

The certification is a must for various sectors such as:

Biopharmaceuticals and biotechnology

Medical devices

Cosmetics and personal care

Food and beverages

Nutraceutical and food supplements

Fundamental Aspects of GMP Regulations

These standards are concentrated on several key points:

Well-designed and well-maintained facilities

Written instructions and procedures that are provided and followed

Correct documentation of manufacturing parameters

Competent and trained personnel

Quality control and complaint management systems

Following these principles ensures that products are consistently produced and controlled to the quality standards suitable for their intended use.

Role of GMP Certification Consultants

What Are GMP Consultants?

GMP Consultants are professionals who specialize in assisting companies through the GMP obligations maze. They deliver personalized guidance to help meet compliance, pass audits, and stay certified long-term.

Services Offered:

Gap Analysis and Audits (GA&A): Highlighting non-compliance areas and suggesting optimal improvements.

Development of QMS: Design of systems and procedures in compliance with GMP.

Employee Training and Internal Audits: Training of staff and enhancement of established internal audit procedures.

External Certification Audit Preparation: Assisting businesses to prepare for 3rd party audits by certification bodies or regulators.

Advantages of Using Consultants:

When companies bring in GMP Certification Consultants, they gain added value:

Industry-specific know-how

Quicker and more streamlined certification timelines

Greater confidence in achieving and maintaining compliance

Data Integrity and CSV: An Introduction

What is Data Integrity?

Data Integrity (DI) is about the accuracy, completeness, consistency, and reliability of data during its lifetime. Regulatory agencies insist that all records, including electronic and paper-based records, be subject to the most rigorous level of integrity.

Principles of ALCOA+

ALCOA+ is the foundation of data integrity. Data quality rests on five core principles, extended by the "plus" attributes:

Attributable: The data should be linked to the person who created it.

Legible: Data must be readable and remain readable for as long as it is retained.

Contemporaneous: Information should be recorded at the time the activity is performed.

Original: The original records should be retained.

Accurate: Data should be representative of what actually occurred.

Plus: Complete, Consistent, Enduring, and Available.
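
To make the principles a little more concrete, here is a minimal Python sketch of how some of them could be checked automatically on a single record. Everything in it is an assumption made for illustration: the `Record` fields, the `alcoa_findings` function, and the 15-minute delay limit are hypothetical and do not come from any regulation or specific system. Accuracy in particular cannot be confirmed by a check like this; it still needs review against the source activity.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Record:
    """Hypothetical record structure; field names are illustrative only."""
    value: Optional[str]
    recorded_by: Optional[str]        # who made the entry (Attributable)
    performed_at: Optional[datetime]  # when the activity took place
    recorded_at: Optional[datetime]   # when the entry was made
    is_original: bool = True          # original record or verified true copy


def alcoa_findings(record: Record,
                   max_delay: timedelta = timedelta(minutes=15)) -> List[str]:
    """Return a list of findings; an empty list means nothing was flagged.

    The max_delay default is an assumed site rule, not a regulatory value.
    """
    findings = []
    if not record.recorded_by:
        findings.append("Attributable: no author recorded")
    if record.value is None or not record.value.strip():
        findings.append("Legible/Complete: value is missing or blank")
    if record.performed_at is None or record.recorded_at is None:
        findings.append("Contemporaneous: timestamps missing")
    elif record.recorded_at - record.performed_at > max_delay:
        findings.append("Contemporaneous: entry made too long after the activity")
    elif record.recorded_at < record.performed_at:
        findings.append("Contemporaneous: entry predates the activity")
    if not record.is_original:
        findings.append("Original: not the original record or a verified copy")
    return findings
```

A record entered five minutes after the activity by a named user returns no findings, while one with no author that was entered two hours later returns two.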

What is CSV?

Computer System Validation (CSV) ensures that a computerized system used in regulated activities consistently produces results that meet its predetermined specifications. In short, CSV demonstrates that a system works as intended and in line with applicable standards.
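
The core idea of checking results against predetermined specifications can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `SpecCheck`, `execute_checks`, the requirement ID, and the temperature-conversion function are all hypothetical names, and a real validation protocol involves far more than a numeric comparison.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class SpecCheck:
    """One hypothetical test step tied to a requirement."""
    requirement_id: str        # illustrative ID, e.g. taken from a URS
    run: Callable[[], float]   # executes the system function under test
    expected: float            # the predetermined specification
    tolerance: float = 0.0     # acceptable deviation from the expected value


def execute_checks(checks: List[SpecCheck]) -> List[Tuple[str, float, bool]]:
    """Run each check and record (requirement ID, actual result, pass/fail)."""
    results = []
    for check in checks:
        actual = check.run()
        passed = abs(actual - check.expected) <= check.tolerance
        results.append((check.requirement_id, actual, passed))
    return results


def celsius_to_fahrenheit(celsius: float) -> float:
    """Stand-in for a function of the system being validated."""
    return celsius * 9 / 5 + 32


checks = [
    SpecCheck("URS-001", lambda: celsius_to_fahrenheit(100.0), 212.0, 0.01),
    SpecCheck("URS-002", lambda: celsius_to_fahrenheit(0.0), 32.0, 0.01),
]
results = execute_checks(checks)
```

The point of the design is that the expected value and tolerance are fixed before the test runs, so the pass/fail decision is not made after seeing the result.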

Data Integrity, CSV, and GMP Compliance

Why Compliance Relies on Data Integrity

Without data integrity, product quality and safety cannot be assured. Data integrity concerns have taken center stage in regulatory inspections, and failures can lead to warning letters, product recalls, and even facility shutdowns.

Utilization of Validated Computer Systems for Operations

Computer systems are validated to ensure that:

The data are obtained with high accuracy and repeatability.

Regulatory and business requirements are satisfied by system functions.

Electronically stored records are reliable and auditable.

Consultant Integration Strategies:

DI Risk Assessments and Gap Analysis: Identifying weak spots in data handling and the measures needed to remedy them.

Validation Master Plans (VMP) for Computer Systems: Defining the validation approach, activities, and documentation that will be needed.

Audit Trails, Access Controls, and Electronic Record Management: Tracking changes, safeguarding records, and controlling access to information across systems.
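
As an illustration of what a tamper-evident audit trail can look like, the Python sketch below chains each entry to the previous one with a SHA-256 hash, so any later edit to an earlier entry is detectable. Hash chaining is one possible technique chosen for this example, not something the regulations prescribe, and the `AuditTrail` class and its field names are hypothetical. A production system would also need secure storage, access control, and time synchronization.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only log in which each entry stores the hash of the previous one."""

    GENESIS = "0" * 64  # placeholder hash used before the first entry

    def __init__(self):
        self._entries = []

    @staticmethod
    def _digest(entry):
        # Hash every field except the stored hash itself.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def append(self, user, action, detail):
        prev_hash = self._entries[-1]["hash"] if self._entries else self.GENESIS
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,          # who performed the action
            "action": action,      # what was done
            "detail": detail,      # e.g. old and new value
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self._entries.append(entry)
        return entry

    def verify(self):
        """Return True if no entry has been altered or removed from the chain."""
        prev_hash = self.GENESIS
        for entry in self._entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True
```

Calling `verify()` after appending entries returns `True`; changing any field of an earlier entry afterwards makes it return `False`.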

Certification and Validation with Consultant Support

The Initial Review of Systems and Practices

The GMP Certification Consultants in Dubai begin with a thorough review of existing manufacturing and data management systems against GMP, DI, and CSV requirements.

Remediation Planning for DI and Validation Gaps

Corrective action plans are developed following gap analysis, focusing on the highest risk exposure to compliance and product quality.
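
One common way to decide which gaps come first is a simple scoring scheme. The Python sketch below assumes an FMEA-style score (severity × likelihood × detectability, each rated 1 to 5); that scheme, the `Gap` class, and the sample gaps are assumptions made for illustration and are not taken from a specific consultant's method.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Gap:
    description: str
    severity: int       # 1 (minor) to 5 (critical impact on quality or compliance)
    likelihood: int     # 1 (rare) to 5 (frequent)
    detectability: int  # 1 (easily detected) to 5 (unlikely to be detected)

    @property
    def risk_score(self) -> int:
        # Higher score means higher priority for remediation.
        return self.severity * self.likelihood * self.detectability


def prioritize(gaps: List[Gap]) -> List[Gap]:
    """Return gaps ordered from highest to lowest risk score."""
    return sorted(gaps, key=lambda gap: gap.risk_score, reverse=True)


# Hypothetical findings from a gap analysis.
gaps = [
    Gap("SOP missing version number", severity=2, likelihood=3, detectability=2),
    Gap("Shared user logins on lab system", severity=5, likelihood=4, detectability=4),
    Gap("Backups never test-restored", severity=4, likelihood=2, detectability=5),
]
ranked = prioritize(gaps)
```

With these sample ratings, the shared logins rank first, followed by the untested backups and then the SOP versioning issue.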

System Compliance to Requirements (GAMP 5 approach, where applicable)

In accordance with GAMP 5 (Good Automated Manufacturing Practice) principles, the consulting team leads validation activities, applying a risk-based, scalable approach to computer system validation.

Documentation: SOP and Validation Reports Preparation

SOPs, URS, Validation Protocols (IQ, OQ, PQ), and final Validation Reports are prepared to document compliance.

Training on DI, CSV Principles

The GMP Certification in UAE experts provide training programs that raise awareness and build workforce knowledge of Data Integrity and Computer System Validation.

Final Audits and Ongoing Monitoring Measures

A final internal audit confirms that the company is ready for external audits, and scheduled monitoring supports long-term compliance.

Challenges in Integration

Legacy System Compliance/Remediation

Older, unvalidated systems can pose a serious threat to data integrity. Remediating or replacing these legacy systems to bring them into compliance takes time and money.

Adapting to Changing Regulatory Requirements

Regulatory expectations for DI and CSV keep evolving. Companies need to track these changes and adapt to new standards in order to stay compliant.

Resource and Knowledge Constraints in SMEs

Some small and medium-sized enterprises (SMEs) have limited in-house expertise and resources. This makes working with external GMP Consultants in Abu Dhabi all the more important for reaching and maintaining compliance.

Future Trends

Emergence of Digital Validation Tools

Automated validation tools make CSV tasks faster and reduce manual work, while improving consistency, accuracy, and audit readiness.

Growing Use of Cloud-Based Platforms and DI Exposure

Although cloud systems bring convenience, cost savings, and scalability, they also introduce new data security and integrity risks. As a result, validation and vendor management take on critical importance.

More Regulatory Attention on AI and New Technologies in Regulated Settings

Regulators are likely to issue new guidelines on validating these advanced technologies as artificial intelligence and machine learning enter regulated industries.

The Main Point!!

Combining GMP certification, Data Integrity, and Computer System Validation is no longer optional; it is mandatory for any company working in a highly regulated environment. This alignment not only reinforces compliance but also enhances operational performance, product quality, and consumer confidence.

By engaging Good Manufacturing Practice experts, businesses gain the knowledge and structured support required to navigate the intricacies of regulatory environments.

GMP Consultants in UAE assist companies in putting in place strong systems that deliver continued compliance and future success in a very challenging global market.
