#docker blog


Text

I'd like to thank @debian-official; if it wasn't for them, this idea wouldn't have come to me.

What projects is everyone working on? I'm curious and want to see what the IT community is bashing their heads against.

I'll start: I am setting up a personal website for my homelab! The site is served through nginx. I don't have any experience with HTML or CSS or anything like that, so for me it's a fun little challenge. It has also allowed me to get my "cdn" going again, which I am quite pleased about ^~^

37 notes


Text

So I have found that there are official blogs of the linux distros and I am loving every second of this

3 notes


Text

the only constant in this inconsistent universe is that behind every AFL tumblr blog is a dockers supporter

#every tumblr afl blog is secretly a dockers fan actually#talking to you geelong-cats#west coast WISHES they were me

4 notes


Text

Docker-Compose is the essential tool for managing multi-container Docker applications. This article will give you a comprehensive introduction to its features, installation and usage.


0 notes

Text

📝 Guest Post: Local Agentic RAG with LangGraph and Llama 3*

New Post has been published on https://thedigitalinsider.com/guest-post-local-agentic-rag-with-langgraph-and-llama-3/


In this guest post, Stephen Batifol from Zilliz discusses how to build agents capable of tool-calling using LangGraph with Llama 3 and Milvus. Let’s dive in.

LLM agents use planning, memory, and tools to accomplish tasks. Here, we show how to build agents capable of tool-calling using LangGraph with Llama 3 and Milvus.

Agents can empower Llama 3 with important new capabilities. In particular, we will show how to give Llama 3 the ability to perform a web search and call custom user-defined functions.

Tool-calling agents with LangGraph use two nodes: an LLM node decides which tool to invoke based on the user input. It outputs the tool name and tool arguments based on the input. The tool name and arguments are passed to a tool node, which calls the tool with the specified arguments and returns the result to the LLM.
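The two-node loop described above can be sketched in plain Python. This is only an illustration of the control flow, not LangGraph code: the `add` and `shout` tools, the `llm_node` stand-in (a hard-coded rule instead of a real model), and `run_agent` are all hypothetical names invented for this example.

```python
# Hypothetical tools, invented for illustration only.
def add(a: float, b: float) -> float:
    return a + b


def shout(text: str) -> str:
    return text.upper()


TOOLS = {"add": add, "shout": shout}


def llm_node(user_input: str) -> dict:
    """Stand-in for the LLM node: returns a tool name and tool arguments.

    A real LLM would decide this itself; a trivial rule fakes the decision here.
    """
    if user_input.startswith("add "):
        _, a, b = user_input.split()
        return {"tool": "add", "args": {"a": float(a), "b": float(b)}}
    return {"tool": "shout", "args": {"text": user_input}}


def tool_node(call: dict):
    """Tool node: looks up the named tool and calls it with the given arguments."""
    return TOOLS[call["tool"]](**call["args"])


def run_agent(user_input: str) -> dict:
    """Runs one LLM-node -> tool-node round trip and returns both pieces."""
    call = llm_node(user_input)
    result = tool_node(call)
    return {"call": call, "result": result}
```

In a real agent the result would be handed back to the LLM, which then decides whether another tool call is needed.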

Milvus Lite allows you to use Milvus locally without using Docker or Kubernetes. It will store the vectors you generate from the different websites we will navigate to.

Introduction to Agentic RAG

Language models can’t take actions themselves—they just output text. Agents are systems that use LLMs as reasoning engines to determine which actions to take and the inputs to pass them. After executing actions, the results can be transmitted back into the LLM to determine whether more actions are needed or if it is okay to finish.

They can be used to perform actions such as searching the web, browsing your emails, correcting RAG to add self-reflection or self-grading on retrieved documents, and many more.

Setting things up

LangGraph – An extension of LangChain aimed at building robust and stateful multi-actor applications with LLMs by modeling steps as edges and nodes in a graph.

Ollama & Llama 3 – With Ollama you can run open-source large language models locally, such as Llama 3. This allows you to work with these models on your own terms, without the need for constant internet connectivity or reliance on external servers.

Milvus Lite – Local version of Milvus that can run on your laptop, Jupyter Notebook or Google Colab. We use this vector database to store and retrieve your data efficiently.

Using LangGraph and Milvus

We use LangGraph to build a custom local Llama 3-powered RAG agent that combines three approaches: routing, fallback, and self-correction.

We implement each approach as a control flow in LangGraph:

Routing (Adaptive RAG) – Allows the agent to intelligently route user queries to the most suitable retrieval method based on the question itself. The LLM node analyzes the query, and based on keywords or question structure, it can route it to specific retrieval nodes.

Example 1: Questions requiring factual answers might be routed to a document retrieval node searching a pre-indexed knowledge base (powered by Milvus).

Example 2: Open-ended, creative prompts might be directed to the LLM for generation tasks.
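As a rough sketch of the routing idea, the function below picks a node name from the wording of the question. The keyword list and the node names `"generate"` and `"vectorstore"` are assumptions made for this example; in the post itself an LLM node makes this decision.

```python
# Assumed keywords that mark an open-ended, creative prompt (illustrative only).
CREATIVE_MARKERS = ("write", "imagine", "compose", "brainstorm")


def route_question(question: str) -> str:
    """Return the name of the node that should handle the question.

    Creative prompts go straight to generation; everything else is treated
    as a factual question and sent to document retrieval.
    """
    lowered = question.lower().strip()
    if any(lowered.startswith(marker) for marker in CREATIVE_MARKERS):
        return "generate"
    return "vectorstore"
```

For example, `route_question("Write a poem about agents")` selects the generation node, while a question such as "What is agent memory?" goes to retrieval.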

Fallback (Corrective RAG) – Ensures the agent has a backup plan if its initial retrieval methods fail to provide relevant results. Suppose the initial retrieval nodes (e.g., document retrieval from the knowledge base) don’t return satisfactory answers (based on relevance score or confidence thresholds). In that case, the agent falls back to a web search node.

The web search node can utilize external search APIs.
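A minimal sketch of the fallback logic, assuming the retriever returns `(text, score)` pairs and that a relevance threshold of 0.5 is acceptable. Both functions passed in are hypothetical stand-ins; a real setup would query Milvus and an external search API.

```python
RELEVANCE_THRESHOLD = 0.5  # assumed cut-off, not a value from the post


def retrieve_with_fallback(question, retrieve, web_search,
                           threshold=RELEVANCE_THRESHOLD):
    """Try the knowledge base first; fall back to web search if nothing is relevant.

    retrieve(question)   -> list of (text, score) pairs
    web_search(question) -> list of text snippets
    Returns (source_name, documents).
    """
    hits = retrieve(question)
    relevant = [text for text, score in hits if score >= threshold]
    if relevant:
        return "vectorstore", relevant
    return "websearch", web_search(question)


# Hypothetical stand-ins used only to exercise the control flow.
def fake_retrieve(question):
    if "agent" in question:
        return [("Agents use tools.", 0.9), ("Unrelated note.", 0.1)]
    return [("Unrelated note.", 0.1)]


def fake_web_search(question):
    return [f"web result for: {question}"]
```

The threshold check is the whole mechanism: the web search function is only called when no retrieved document clears it.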

Self-correction (Self-RAG) – Enables the agent to identify and fix its own errors or misleading outputs. The LLM node generates an answer, and then it’s routed to another node for evaluation. This evaluation node can use various techniques:

Reflection: The agent can check its answer against the original query to see if it addresses all aspects.

Confidence Score Analysis: The LLM can assign a confidence score to its answer. If the score is below a certain threshold, the answer is routed back to the LLM for revision.
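The confidence-score variant can be sketched as a bounded revision loop. The `generate` and `grade` callables, the 0.7 threshold, and the limit of three attempts are all assumptions for illustration; the post does not specify them.

```python
def self_correct(question, generate, grade, threshold=0.7, max_attempts=3):
    """Generate an answer and revise it until the grader is satisfied.

    generate(question, previous_answer) -> new answer (previous_answer is None at first)
    grade(question, answer)             -> confidence score between 0 and 1
    Returns (answer, attempts_used). Gives up after max_attempts.
    """
    answer = None
    for attempt in range(1, max_attempts + 1):
        answer = generate(question, answer)  # pass the last answer back for revision
        if grade(question, answer) >= threshold:
            return answer, attempt
    return answer, max_attempts


# Hypothetical stand-ins: the first draft scores low, the revision scores high.
def fake_generate(question, previous_answer):
    if previous_answer is None:
        return "draft"
    return previous_answer + " (revised)"


def fake_grade(question, answer):
    return 0.9 if "revised" in answer else 0.2
```

Capping the number of attempts keeps the loop from running forever when the grader never returns a passing score.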

General ideas for Agents

Reflection – The self-correction mechanism is a form of reflection where the LangGraph agent reflects on its retrieval and generations. It loops information back for evaluation and allows the agent to exhibit a form of rudimentary reflection, improving its output quality over time.

Planning – The control flow laid out in the graph is a form of planning: the agent doesn’t just react to the query; it lays out a step-by-step process to retrieve or generate the best answer.

Tool use – The LangGraph agent’s control flow incorporates specific nodes for various tools. These can include retrieval nodes for the knowledge base (e.g., Milvus), demonstrating its ability to tap into a vast pool of information, and web search nodes for external information.

Examples of Agents

To showcase the capabilities of our LLM agents, let’s look into two key components: the Hallucination Grader and the Answer Grader. While the full code is available at the bottom of this post, these snippets will provide a better understanding of how these agents work within the LangChain framework.

Hallucination Grader

The Hallucination Grader tries to fix a common challenge with LLMs: hallucinations, where the model generates answers that sound plausible but lack factual grounding. This agent acts as a fact-checker, assessing if the LLM’s answer aligns with a provided set of documents retrieved from Milvus.

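```
### Hallucination Grader

from langchain_community.chat_models import ChatOllama
from langchain_core.output_parsers import JsonOutputParser
from langchain_core.prompts import PromptTemplate

# LLM (local_llm is the Ollama model name, defined earlier in the full code)
llm = ChatOllama(model=local_llm, format="json", temperature=0)

# Prompt: {documents} and {generation} are filled in when the chain is invoked
prompt = PromptTemplate(
    template="""You are a grader assessing whether
    an answer is grounded in / supported by a set of facts. Give a binary score 'yes' or 'no' score to indicate
    whether the answer is grounded in / supported by a set of facts. Provide the binary score as a JSON with a
    single key 'score' and no preamble or explanation.

    Here are the facts:
    {documents}

    Here is the answer:
    {generation}
    """,
    input_variables=["generation", "documents"],
)

# Chain: prompt -> LLM -> JSON parser
hallucination_grader = prompt | llm | JsonOutputParser()

# docs and generation come from the retrieval and generation steps
hallucination_grader.invoke({"documents": docs, "generation": generation})
```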

Answer Grader

Following the Hallucination Grader, another agent steps in. This agent checks another crucial aspect: ensuring the LLM’s answer directly addresses the user’s original question. It utilizes the same LLM but with a different prompt, specifically designed to evaluate the answer’s relevance to the question.

```
def grade_generation_v_documents_and_question(state):
    """
    Determines whether the generation is grounded in the documents and answers the question.

    Args:
        state (dict): The current graph state

    Returns:
        str: Decision for next node to call
    """
    print("---CHECK HALLUCINATIONS---")
    question = state["question"]
    documents = state["documents"]
    generation = state["generation"]

    # hallucination_grader and answer_grader are the chains defined earlier
    score = hallucination_grader.invoke({"documents": documents, "generation": generation})
    grade = score["score"]

    # Check hallucination
    if grade == "yes":
        print("---DECISION: GENERATION IS GROUNDED IN DOCUMENTS---")
        # Check question-answering
        print("---GRADE GENERATION vs QUESTION---")
        score = answer_grader.invoke({"question": question, "generation": generation})
        grade = score["score"]
        if grade == "yes":
            print("---DECISION: GENERATION ADDRESSES QUESTION---")
            return "useful"
        else:
            print("---DECISION: GENERATION DOES NOT ADDRESS QUESTION---")
            return "not useful"
    else:
        print("---DECISION: GENERATION IS NOT GROUNDED IN DOCUMENTS, RE-TRY---")
        return "not supported"
```

You can see in the code above that we are checking the predictions by the LLM that we use as a classifier.
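To make the role of the returned labels concrete, here is a small plain-Python sketch of how a graph runner could map them to the next node. It is not the LangGraph API: `run_graph`, the `END` marker, the stub nodes, and the particular mapping of `"useful"`, `"not useful"`, and `"not supported"` to nodes are assumptions for illustration.

```python
END = "__end__"  # marker for "stop here" (assumed name)


def run_graph(nodes, edges, conditional_edges, entry, state, max_steps=10):
    """Walk the graph from `entry`, following fixed or conditional edges.

    nodes:             name -> function(state) -> new state
    edges:             name -> next node name
    conditional_edges: name -> (decide(state) -> label, {label: next node name})
    Returns (final_state, list_of_visited_nodes).
    """
    current, visited = entry, []
    for _ in range(max_steps):
        if current == END:
            break
        visited.append(current)
        state = nodes[current](state)
        if current in conditional_edges:
            decide, mapping = conditional_edges[current]
            current = mapping[decide(state)]
        else:
            current = edges[current]
    return state, visited


# Stub nodes and grader, invented to exercise the runner.
def generate(state):
    source = "web" if state.get("web_results") else "docs"
    return {**state, "generation": f"answer from {source}"}


def websearch(state):
    return {**state, "web_results": ["hypothetical result"]}


def grade(state):
    return "useful" if state.get("web_results") else "not useful"


final_state, visited = run_graph(
    nodes={"generate": generate, "websearch": websearch},
    edges={"websearch": "generate"},
    conditional_edges={
        "generate": (grade, {"useful": END,
                             "not useful": "websearch",
                             "not supported": "generate"}),
    },
    entry="generate",
    state={"question": "example question"},
)
```

Here the first answer is graded "not useful", so the runner detours through the web search node and generates again before stopping.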

Compiling the LangGraph graph

This will compile all the agents that we defined and will make it possible to use different tools for your RAG system.

```
# Compile
app = workflow.compile()

# Test
from pprint import pprint

inputs = {"question": "Who are the Bears expected to draft first in the NFL draft?"}

# Stream the graph and report each node as it finishes
for output in app.stream(inputs):
    for key, value in output.items():
        pprint(f"Finished running: {key}:")

# value now holds the output of the last node that ran
pprint(value["generation"])
```

Conclusion

In this blog post, we showed how to build a RAG system using agents with LangChain/LangGraph, Llama 3, and Milvus. These agents give LLMs planning, memory, and tool-use capabilities, which can lead to more robust and informative responses.

Feel free to check out the code available in the Milvus Bootcamp repository.

If you enjoyed this blog post, consider giving us a star on GitHub, and share your experiences with the community by joining our Discord.

This is inspired by the GitHub repository from Meta with recipes for using Llama 3.

*This post was written by Stephen Batifol and originally published on Zilliz.com here. We thank Zilliz for their insights and ongoing support of TheSequence.

#ADD#agent#agents#amp#Analysis#APIs#app#applications#approach#backup#bears#binary#Blog#bootcamp#Building#challenge#code#CoLab#Community#connectivity#data#Database#Docker#emails#engines#explanation#extension#Facts#form#framework

0 notes

Text

Time to Call for your helping hands 大家幫幫手

To be honest, I have not made any New Year’s resolutions for the past few years. The main reason was that I often aim high but lack the ability to follow through. Nevertheless, I still need to set up some new initiative for the year to come. Last year, the focus was on bicycle maintenance and repair, which cost me quite a bit. This year, I believe saving is more essential. My plan is to migrate…


0 notes

Text

I made it easier to back up your blog with tumblr-utils

Hey friends! I've seen a few posts going around about how to back up your blog in case tumblr disappears. Unfortunately the best backup approach I've seen is not the built-in backup option from tumblr itself, but a python app called tumblr-utils. tumblr-utils is a very, very cool project that deserves a lot of credit, but it can be tough to get working. So I've put together something to make it a bit easier for myself that hopefully might help others as well.

If you've ever used Docker, you know how much of a game-changer it is to have a pre-packaged setup for running code that someone else got working for you, rather than having to cobble together a working environment yourself. Well, I just published a tumblr-utils Docker container! If you can get Docker running on your system - whether Windows, Linux, or Mac - you can tell it to pull this container from dockerhub and run it to get a full backup of your tumblr blog that you can actually open in a web browser and navigate just like the real thing!

This is still going to be more complicated than grabbing a zip file from the tumblr menu, but hopefully it lowers the barrier a little bit by avoiding things like python dependency errors and troubleshooting for your specific operating system.

If you happen to have an Unraid server, I'm planning to submit it to the community apps repository there to make it even easier.

Drop me a message or open an issue on GitHub if you run into problems!

207 notes


Text

Pinned Post*

Layover Linux is my hobby project, where I'm building a packaging ecosystem from first principles and making a distro out of it. It's going slow thanks to a full time job (and... other reasons), but the design is solid, so I suppose if I plug away at this long enough, I'll eventually have something I can dogfood in my own life, for everything from gaming to web services to low-power "Linux but no package manager" devices.

Layover is the package manager at the heart of Layover Linux. It's designed around hermetic builds, atomically replacing the running system, cross compilation, and swearing at the Rust compiler. It's happy to run entirely in single-user mode on other distros, and is based around configuration, not mutation.

We all deserve reproducible builds. We all deserve configuration-based operating systems. We all deserve the simple safety of atomic updates. And gosh darn it, we deserve those things to be easy. I'm making the OS that I want to use, and I hope you'll want to use it too.

*if you want to get pinned and live in the PNW, I'm accepting DMs but have limited availability. Thanks 😊💜

Thanks to @docker-official for persuading me to pull the trigger on making an official blog. Much love to @jennihurtz, the supreme and shining jewel in my life and the heart beating in twin with mine. I also owe @k-simplex thanks for her support, which has carried me a few times in the last couple years.

44 notes


Text

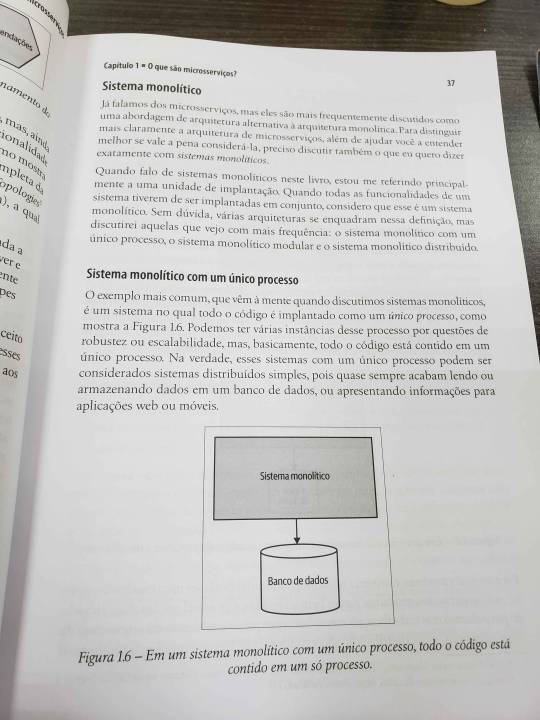

This week was a productive one. I've been studying microservices to better understand distributed systems. At the bus company where I work, we use a monolithic system—an old-school setup style with MySQL, PHP, some Java applications, localhost server and a mix of other technologies. However, we've recently started implementing some features that require scalability, and this book has been instrumental in helping me understand the various scenarios involved.

In the first chapters, I've gained a clearer understanding of monolithic systems and the considerations for transitioning to a distributed system, including the pros and cons.

I've also been studying Java and Apache Kafka for event-driven architecture, a topic that has captured my full attention. In this case, the Confluent training platform offers excellent test labs, and I've been running numerous tests there. Additionally, I have my own Kafka cluster set up using Docker for most configurations.

With all that said, I've decided to update this blog weekly, since daily updates aren't gonna work.

#coding#developer#linux#programming#programmer#software#software development#student#study blog#study aesthetic#studyblr#self improvement#study#software engineering#study motivation#studyblr community#studying#studynotes#learning#university#student life#university student#study inspiration#brazil#booklr#book#learn#self study#java#apachekafka

21 notes


Note

Dear Mx. Evil-Ubuntu,

I am a big fan of your blog and various nefarious works.

I would like to learn more about "legal" torrenting. Do you have any pointers/ resources you would be willing to share to get me started on the wrong path?

I'm currently running Docker on Debian on Proxmox on a small homelab, but that's the extent of my knowledge.

Thanks in advance!

pirate bay>first link that seems remotely related>run on your machine.

Simple as that. Disable your firewall, disable your ad blocker, disable your vpn, disable your antivirus, you'll be good to go.

Don't seed. Seeding is a scam invented by Ted Stevens to clog tubes.

6 notes


Text

I wonder if any Docker employees have actually seen my blog and just shake their heads. Like hello there, I really enjoy your software, please don't be mad, it's all in good fun I promise.

43 notes


Text

Intro post!

Welcome to my bog, I'm Fate (they/it), a nsfw writer mostly active on ao3. This'll be a blog for me to look for resources or connect with people in the Degrees of Lewdity fandom, post art and my headcanons/writings.

You can contact me anytime through my Ask!

Below is a master list of my stories, as well as hashtags.

Some ao3 stories I've written:

╰┈➤ I am What you made me [x AFAB!Reader] (Homicipher)

╰┈➤ In the Aftermath of Everything [m!Bailey x f!Reader] (Degrees of Lewdity)

╰┈➤ and others you can find on my ao3 lmao

Some Oneshots I’ve written: OneMoreTimeAU (#OMTAU):

╰┈➤ Bailey bought your virginity at Briar’s Brothel. It’s only proper he breaks you in. [m!Bailey x f!Reader] ╰┈➤You never thought he'd be the type to sneak into your room at night, but here you are. [m!Bailey x f!Reader]

My hashtags:

Posts about Characters are always tagged by their names.

About Asks: #Askme[Character] if it’s about a character, otherwise #AskMe

About my fanfictions: #IntheAftermath [DoL]/#WhatyouMadeMe [Homicipher]

About headcanons: #hc/#nsfwhc

About myself: #Strings talks

My OCs:

Love Interest #Donn the Docker - reference sheet - nsfw hcs - #Donn ask oc game - #Donn x [other OC name] - Donn outfit references

Some Boundaries:

Do not sexualize me or flirt with me, even jokingly.

No petnames either.

You may Ask me Anything about my stories, headcanons (nsfw included) etc! Just remember I speak for my own versions of a character, other ppl can interpret stuff differently ofc!

This blog is for fictional nsfw in art and writing, do not attempt to show me irl nsfw.

Minors DNI for your own safety, I can’t control what you guys read obv but at least don’t engage with adults in nsfw spaces!!

Things I will not write/draw:

Pregnancy (Breeding kink is fine)

Bestiality (as in, actual animals meant to be animals, not dragons or monsters or whatever)

Gore and excessive violence on children

Scat, Piss, etc.

Sounding

Lactation or Breast Feeding

Pure fluff (It’s just boring to me)

I am heavily against censorship and any kind of harassment over fictional interests or kinks. If you can't discern reality from fiction, don't assume no one else can either.

17 notes


Text

LETS GO ABSOLUTE LEGEND MICHAEL FREDERICK

#this is a michael frederick fan blog through and through#freo dockers#michael frederick#kalyakoorl walyalup

2 notes


Text

Earlier, we got acquainted with Docker, a containerization tool, and covered its basic concepts, such as images and containers. In this article, we will take a closer look at how to create your own Docker image and upload it to Docker Hub for further use.


0 notes

Text

SHE LIVES

My Ubuntu vm.... speak to me I have more hard drive space for you you just have to boot so I can repartition please...

#I finally got into the grub menu and deleted the docker installer so it booted. No re-install needed.#And I expanded the filesystem partition so no more space issues...#And all my work should be intact#Anyways apologies to the mutuals I promise I won't live blog HW again

15 notes


Text

backing up my tumblr blog (using the docker container I made) and apparently I have a few posts with enough notes that tumblr treats downloading them all like a ddos attack 😅

26 notes
