#ec2 instance setup


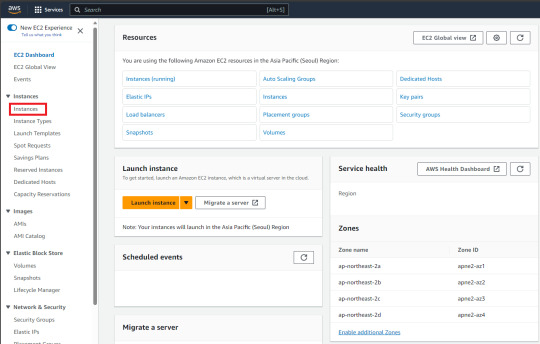

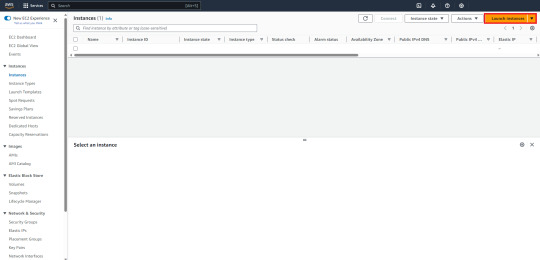

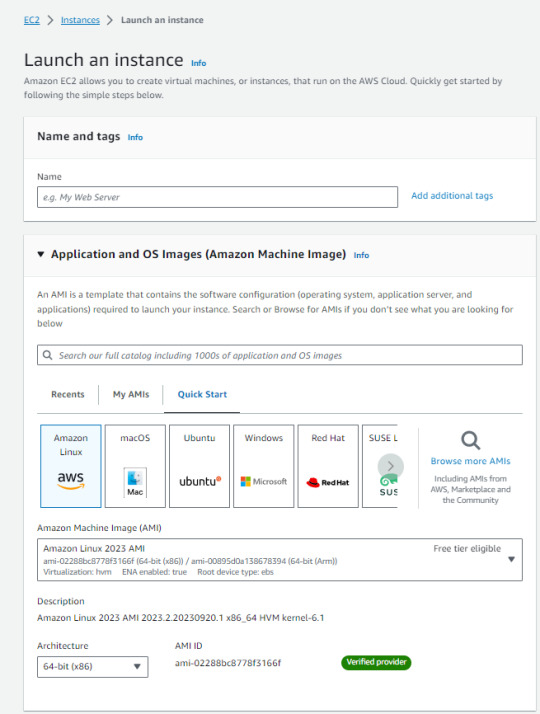

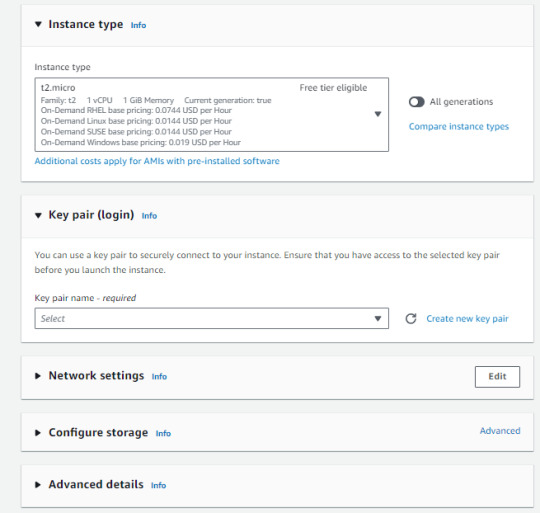

How to set up AWS EC2

1. Enter the homepage -> Click "Instances"

2. Click "Launch Instances"

3. Configure the instance settings -> Click "Launch Instance"

This is a very important point!

You should choose the "Instance type" based on your workload. It helps to compare the "vCPU" and "Memory" figures against your requirements. You also need to choose a "Key pair". If you don't have one, click "Create new key pair". You'll be using it to access your instances, so keep it handy.
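As a rough illustration of matching vCPU and memory requirements to an instance type, here is a minimal Go sketch. The `catalog` is a small hand-written list of T3 sizes and the `pickInstanceType` helper is hypothetical; neither is part of any AWS tooling, and a real choice should also weigh price and workload profile.

```go
package main

import (
	"errors"
	"fmt"
)

// instanceType describes one entry of a small, hand-written catalog.
type instanceType struct {
	Name      string
	VCPU      int
	MemoryGiB float64
}

// catalog lists a few T3 sizes, ordered from smallest to largest.
var catalog = []instanceType{
	{"t3.micro", 2, 1},
	{"t3.small", 2, 2},
	{"t3.medium", 2, 4},
	{"t3.large", 2, 8},
	{"t3.xlarge", 4, 16},
	{"t3.2xlarge", 8, 32},
}

// pickInstanceType returns the first (smallest) catalog entry that
// satisfies both the vCPU and the memory requirement.
func pickInstanceType(minVCPU int, minMemoryGiB float64) (string, error) {
	for _, it := range catalog {
		if it.VCPU >= minVCPU && it.MemoryGiB >= minMemoryGiB {
			return it.Name, nil
		}
	}
	return "", errors.New("no instance type in the catalog is large enough")
}

func main() {
	name, err := pickInstanceType(2, 6)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(name) // prints "t3.large"
}
```

Keeping the catalog ordered by size means the first match is also the smallest one that fits.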


Database Optimization that Led to 27% Cloud Cost Reduction for a Fintech Giant

About Customer

A leading digital financial services platform in India, serving over 50 million users with mobile recharges, bill payments, and digital wallet services.

Problem Statement

AWS expenditure reached $150,000, necessitating significant cost optimization to ensure financial sustainability.

Inefficiencies in compute resources, storage, and database configurations led to increased operational costs and underutilized infrastructure.

Manual, repetitive infrastructure and database tasks consumed time and resources, reducing efficiency.

Unused resources, such as unattached EBS volumes, obsolete snapshots, and redundant load balancers, increased costs and cluttered the infrastructure.

Managing a large number of databases on both EC2 and RDS instances was complex and inefficient, requiring consolidation and optimization.

Outdated systems, such as MySQL 5.7, increased maintenance costs and lacked modern features, necessitating upgrades to improve performance and reduce support expenses.

Inadequate backup strategies and DR setups posed risks to data availability and resilience, potentially leading to data loss and extended downtimes.

[ Good Read: New age fintech platform achieves real-time fraud detection and scalable credit risk analysis with AWS ]

Solution Offered

Infrastructure Assessment: Conducted a detailed assessment of AWS infrastructure to identify bottlenecks and potential cost-saving opportunities. This involved analyzing compute resources, storage, database configurations, and service utilization.

DevOps Optimization:

Optimized S3 storage by deleting unused objects, implementing lifecycle policies for cheaper storage classes, and enabling versioning with cleanup policies.

Right-sized EC2 instances to match workload requirements and migrated from older Intel-based instances to newer AMD and Graviton instances for better performance at lower cost.

Conducted thorough cleanups of unused resources across multiple environments and accounts, including unattached EBS volumes, obsolete snapshots, and unused Elastic IPs.

Merged redundant load balancers to streamline traffic management and reduce associated costs.
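To make the S3 lifecycle step more concrete, the following Go sketch builds a lifecycle configuration in the JSON shape accepted by `aws s3api put-bucket-lifecycle-configuration`. The rule ID, the prefix, and the day thresholds are illustrative assumptions, not values from this case study.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// The types below mirror the JSON layout of an S3 lifecycle configuration.
type transition struct {
	Days         int    `json:"Days"`
	StorageClass string `json:"StorageClass"`
}

type noncurrentExpiration struct {
	NoncurrentDays int `json:"NoncurrentDays"`
}

type filter struct {
	Prefix string `json:"Prefix"`
}

type rule struct {
	ID                          string               `json:"ID"`
	Status                      string               `json:"Status"`
	Filter                      filter               `json:"Filter"`
	Transitions                 []transition         `json:"Transitions"`
	NoncurrentVersionExpiration noncurrentExpiration `json:"NoncurrentVersionExpiration"`
}

type lifecycleConfig struct {
	Rules []rule `json:"Rules"`
}

// buildLifecycle moves objects under prefix to cheaper storage classes
// over time and expires old object versions. All thresholds are
// hypothetical and should be tuned to real access patterns.
func buildLifecycle(prefix string, iaDays, glacierDays, oldVersionDays int) lifecycleConfig {
	return lifecycleConfig{Rules: []rule{{
		ID:     "tier-and-clean-up", // hypothetical rule name
		Status: "Enabled",
		Filter: filter{Prefix: prefix},
		Transitions: []transition{
			{Days: iaDays, StorageClass: "STANDARD_IA"},
			{Days: glacierDays, StorageClass: "GLACIER"},
		},
		NoncurrentVersionExpiration: noncurrentExpiration{NoncurrentDays: oldVersionDays},
	}}}
}

func main() {
	out, err := json.MarshalIndent(buildLifecycle("logs/", 30, 90, 60), "", "  ")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(string(out))
}
```

The printed JSON could be saved to a file and passed to the CLI with `--lifecycle-configuration file://lifecycle.json`.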

Database Optimization:

RDS and EC2 Database Merging

Archival and Rightsizing

Database Migration

Upgrading Databases

Cost Reduction for Backups and DynamoDB

Final Outcomes

Significant Cost Savings

Enhanced Efficiency

Improved Performance

Increased Automation

Zero Downtime

You can check more info about: Database Optimization, DevOps Consultant, Cloud Data Manager, Big Data Management, and Data Security Provider.


Brazil VPS Hosting: Quick & Inexpensive Virtual Private Servers

When it comes to finding quick and inexpensive Virtual Private Servers (VPS) hosting in Brazil, there are several providers you can consider. Here are a few options:

Hostinger: Hostinger offers VPS hosting with data centers in São Paulo, Brazil. They provide quick setup and competitive pricing for their VPS plans.

DigitalOcean: DigitalOcean has a data center in São Paulo, offering VPS hosting with SSD storage and a user-friendly interface for quick deployment.

Vultr: Vultr also has a presence in São Paulo and provides affordable VPS hosting with SSD storage, high-performance CPUs, and a range of data center locations globally.

Linode: Linode offers Brazil VPS hosting with a data center in São Paulo. They provide quick setup, SSD storage, and a variety of plans to choose from.

Amazon Web Services (AWS): AWS has a São Paulo region offering Elastic Compute Cloud (EC2) instances, which can be configured as VPS. While AWS may not always be the cheapest option, it provides scalability and reliability.

Before choosing a provider, consider factors such as server specifications, uptime guarantees, customer support quality, and scalability options. Additionally, make sure to check for any ongoing promotions or discounts that could help you save money on your VPS hosting.

#brazil windows vps#Brazil vps server pric#Best brazil vps server#buy vps server#Brazil vps price#VPS


Navigating the Cloud Landscape: Unleashing Amazon Web Services (AWS) Potential

In the ever-evolving tech landscape, businesses are in a constant quest for innovation, scalability, and operational optimization. Enter Amazon Web Services (AWS), a robust cloud computing juggernaut offering a versatile suite of services tailored to diverse business requirements. This blog explores the myriad applications of AWS across various sectors, providing a transformative journey through the cloud.

Harnessing Computational Agility with Amazon EC2

Central to the AWS ecosystem is Amazon EC2 (Elastic Compute Cloud), a pivotal player reshaping the cloud computing paradigm. Offering scalable virtual servers, EC2 empowers users to seamlessly run applications and manage computing resources. This adaptability enables businesses to dynamically adjust computational capacity, ensuring optimal performance and cost-effectiveness.

Redefining Storage Solutions

AWS addresses the critical need for scalable and secure storage through services such as Amazon S3 (Simple Storage Service) and Amazon EBS (Elastic Block Store). S3 acts as a dependable object storage solution for data backup, archiving, and content distribution. Meanwhile, EBS provides persistent block-level storage designed for EC2 instances, guaranteeing data integrity and accessibility.

Streamlined Database Management: Amazon RDS and DynamoDB

Database management undergoes a transformation with Amazon RDS, simplifying the setup, operation, and scaling of relational databases. Be it MySQL, PostgreSQL, or SQL Server, RDS provides a frictionless environment for managing diverse database workloads. For enthusiasts of NoSQL, Amazon DynamoDB steps in as a swift and flexible solution for document and key-value data storage.

Networking Mastery: Amazon VPC and Route 53

AWS empowers users to construct a virtual sanctuary for their resources through Amazon VPC (Virtual Private Cloud). This virtual network facilitates the launch of AWS resources within a user-defined space, enhancing security and control. Simultaneously, Amazon Route 53, a scalable DNS web service, ensures seamless routing of end-user requests to globally distributed endpoints.

Global Content Delivery Excellence with Amazon CloudFront

Amazon CloudFront emerges as a dynamic content delivery network (CDN) service, securely delivering data, videos, applications, and APIs on a global scale. This ensures low latency and high transfer speeds, elevating user experiences across diverse geographical locations.

AI and ML Prowess Unleashed

AWS propels businesses into the future with advanced machine learning and artificial intelligence services. Amazon SageMaker, a fully managed service, enables developers to rapidly build, train, and deploy machine learning models. Additionally, Amazon Rekognition provides sophisticated image and video analysis, supporting applications in facial recognition, object detection, and content moderation.

Big Data Mastery: Amazon Redshift and Athena

For organizations grappling with massive datasets, AWS offers Amazon Redshift, a fully managed data warehouse service. It facilitates the execution of complex queries on large datasets, empowering informed decision-making. Simultaneously, Amazon Athena allows users to analyze data in Amazon S3 using standard SQL queries, unlocking invaluable insights.

In conclusion, Amazon Web Services (AWS) stands as an all-encompassing cloud computing platform, empowering businesses to innovate, scale, and optimize operations. From adaptable compute power and secure storage solutions to cutting-edge AI and ML capabilities, AWS serves as a robust foundation for organizations navigating the digital frontier. Embrace the limitless potential of cloud computing with AWS – where innovation knows no bounds.


Why Modern Enterprises Rely on AWS Security Services for Scalable Protection


As businesses accelerate their cloud adoption, security remains one of the top concerns—especially in sectors like finance, healthcare, and SaaS. That’s why AWS Security Services have become a gold standard for cloud-native protection, offering unmatched scalability, real-time threat detection, and compliance-ready controls.

In this blog, we’ll dive into what makes AWS Security Services essential and how they empower businesses to secure their infrastructure.

1. The Growing Need for Scalable Cloud Security

With the rise in remote work, microservices, and globally distributed teams, traditional perimeter-based security models are no longer sufficient. You need:

Continuous monitoring

Identity-based access control

Real-time threat alerts

Automated remediation

This is exactly where AWS Security Services come into play—providing scalable, cloud-native tools to protect everything from EC2 instances to serverless workloads.

2. Core Components of AWS Security Services

Here are some of the top services within the AWS security ecosystem:

Amazon GuardDuty: Continuously monitors for malicious activity and unauthorized behavior.

AWS Shield: Protects against DDoS attacks automatically.

AWS WAF (Web Application Firewall): Protects web applications from common exploits.

AWS Identity and Access Management (IAM): Controls who can access your resources—and what they can do with them.

AWS Config: Tracks changes and evaluates configurations against compliance rules.

Each of these tools integrates seamlessly into your AWS environment, giving you centralized control over your security operations.

3. Security That Scales with You

One of the key advantages of AWS Security Services is their auto-scaling capability. Whether you're running a startup with a single workload or a multinational with hundreds of cloud assets, AWS adapts its protection layers dynamically based on your usage.

This elasticity makes AWS ideal for modern DevOps pipelines, CI/CD environments, and data-driven apps that require 24/7 protection without performance overhead.

4. Compliance Made Easy

HIPAA, PCI DSS, SOC 2, GDPR—compliance can be overwhelming. Fortunately, AWS Security Services come with built-in compliance enablers. These include:

Logging and audit trails via CloudTrail

Encryption at rest and in transit

Policy-based access controls

Regional data isolation

With AWS, meeting regulatory standards becomes less of a chore—and more of an integrated process.

5. How CloudAstra’s AWS Experts Can Help

Even with powerful tools at your disposal, poor implementation can create vulnerabilities. That’s why businesses turn to CloudAstra for expert AWS consulting, setup, and optimization.

CloudAstra’s engineers help you:

Design secure VPC architectures

Automate security best practices

Integrate monitoring and alerting

Perform security audits and cost optimization

All while aligning with your unique business objectives and tech stack.

6. Real-World Impact

Startups and enterprises using AWS Security Services have reported:

90% reduction in attack surfaces

50% faster incident response time

Full compliance visibility within days of deployment

By partnering with CloudAstra, companies cut setup time, eliminate misconfigurations, and get full support for evolving security demands.

Final Thoughts

In a world where cyber threats are increasing by the day, adopting robust AWS Security Services is no longer optional—it’s a strategic imperative. These tools provide the foundation for a secure, scalable, and compliant cloud environment.

Looking to implement or optimize your AWS security stack? Trust the experts at CloudAstra. Their tailored AWS consulting services help you go beyond compliance—to proactive, intelligent cloud protection.

#aws services#aws consulting services#aws cloud consulting services#aws course#software#aws training


The Need for Simplified Cloud Deployments.

As companies move more of their work to the cloud, building secure, scalable, and affordable solutions is becoming harder. Picking the right migration approach, managing computing power, and ensuring security pose big challenges. Many organizations struggle to switch smoothly without wasting time or making mistakes. CloudKodeForm Technologies offers a solution by providing simple, smart methods that reduce risks and boost performance.

Cloud technology is key for quick business growth, flexibility, and new ideas. But setting up in the cloud requires many steps. These include designing the system, choosing services like AWS EC2 or Lambda Cloud, setting up infrastructure, keeping security in check, and controlling costs. Even skilled IT teams can find these tasks slow and prone to errors without proper tools or guidance.

Companies need a reliable partner who understands cloud options and can help create solutions that match their goals. CloudKodeForm Technologies fits this role perfectly.

How CloudKodeForm Technologies Makes Deployment Easier

CloudKodeForm Technologies provides a full cloud setup service focused on simplicity, speed, and safety. Here’s how it works:

Intelligent Recommendations

Whether the project needs GPU instances on Lambda Cloud or scalable compute on AWS EC2, CloudKodeForm reviews your needs and suggests the best setup. This helps avoid wasting resources and money.

Ready-to-Use Templates

To speed up deployment, CloudKodeForm Technologies offers customizable templates for common cloud setups. These are built to perform well, stay secure, and save money. Using them reduces planning and testing time by weeks.

Security Built-In

Security isn’t an afterthought. CloudKodeForm Technologies includes best practices for safety. It automatically manages user permissions, encrypts data, and monitors your cloud environment in real time.

One-Click Setup

Launching cloud environments is easy with CloudKodeForm Technologies. Whether starting EC2 instances or deploying AI models on Lambda Cloud, the process is quick, reliable, and repeatable.

Supporting Hybrid and Multi-Cloud Use

Many businesses run workloads across different clouds or combine multiple providers. CloudKodeForm Technologies helps integrate these setups smoothly. It allows migration between AWS, Lambda Cloud, and others without much trouble. This makes it easier to grow and adapt your cloud plan over time.

Why Choose CloudKodeForm Technologies

Expert advice from experienced cloud architects

Efficient deployment setups

Support around the clock for critical systems

Works with major cloud platforms

Focus on keeping costs low and performance high

Final Thoughts

CloudKodeForm Technologies isn’t just a consulting firm. It’s a partner that makes big cloud projects easier. Whether starting fresh or tweaking existing setups, CloudKodeForm Technologies helps teams deploy smarter, faster, and safer.


EMR Notebooks Security Within AWS Dashboard & EMR Studio

Security for EMR Notebooks

Recent Amazon EMR documentation highlights several built-in options for securing EMR Notebooks, which are now available in the AWS console as EMR Studio Workspaces. These capabilities are designed to give users precise control so that only authorised users may access and interact with these notebooks and, most crucially, use the notebook editor to run code on linked clusters.

The security measures for EMR Notebooks complement those for Amazon EMR and its clusters, giving a layered approach. The documentation describes several mechanisms for restricting access and securing notebook environments:

AWS IAM integration: Integration with AWS Identity and Access Management (IAM) is central. IAM policy statements define permissions, including who can access which resources and what actions they can take. The documentation suggests using policy statements together with notebook tags to restrict access.

This approach lets you tag EMR notebooks with key-value labels and build IAM policies that allow or deny access based on these tags. The extracts summarised here do not describe the tagging procedure itself, but the approach allows more granular control than granting access to all notebooks. Access can be scoped to particular projects, teams, or data sensitivity levels.
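A tag-based policy of this kind might look like the output of the Go sketch below. The tag key `team` and value `analytics` are hypothetical, and the action list is an assumption based on the editor actions AWS documents for EMR notebooks; check it against the current IAM reference before use.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// statement and policy mirror the JSON layout of an IAM policy document.
type statement struct {
	Effect    string                       `json:"Effect"`
	Action    []string                     `json:"Action"`
	Resource  string                       `json:"Resource"`
	Condition map[string]map[string]string `json:"Condition"`
}

type policy struct {
	Version   string      `json:"Version"`
	Statement []statement `json:"Statement"`
}

// notebookTagPolicy allows notebook (editor) actions only on notebooks
// that carry the given tag key and value.
func notebookTagPolicy(tagKey, tagValue string) policy {
	return policy{
		Version: "2012-10-17",
		Statement: []statement{{
			Effect: "Allow",
			// Assumed action names; verify against the EMR IAM reference.
			Action: []string{
				"elasticmapreduce:DescribeEditor",
				"elasticmapreduce:StartEditor",
				"elasticmapreduce:StopEditor",
				"elasticmapreduce:OpenEditorInConsole",
			},
			Resource: "*",
			Condition: map[string]map[string]string{
				"StringEquals": {"elasticmapreduce:ResourceTag/" + tagKey: tagValue},
			},
		}},
	}
}

func main() {
	out, err := json.MarshalIndent(notebookTagPolicy("team", "analytics"), "", "  ")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(string(out))
}
```

Because the condition matches on the resource tag, the same policy keeps working as notebooks are added or removed, provided they are tagged consistently.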

Amazon EC2 security groups: These function as virtual firewalls. In EMR Notebooks, they control network traffic between the notebook editor and the cluster's primary instance.

This network-level control restricts connectivity between the notebook environment, where the user interacts, and the primary instance of the EMR cluster, where code execution begins. According to the documentation, customers can use the default security groups or customise them to meet their network isolation needs, and configuration instructions for EMR Notebook EC2 security groups are available.

AWS Service Role: A Service Role is required for setup, and the documentation highlights that you are responsible for defining it. This role grants EMR notebooks permission to communicate with other AWS services, so that notebook code can interface with databases, access S3 data, and call other AWS APIs.

Under the principle of least privilege, this role should have only the access needed to complete its tasks.

Console access: Users reach EMR Notebooks in the AWS console as EMR Studio Workspaces, and accessing or creating these Workspaces requires additional IAM role permissions, for example to use the “Create Workspace” button. This is a separate layer of access control for the console interface, distinct from the notebook's execution permissions and from the Service Role used to communicate with other services. The documentation indicates that basic EMR console permissions and console access to EMR Studio Workspaces are covered elsewhere.

Together, EC2 security groups act as virtual firewalls to regulate network traffic, IAM policies with notebook tags limit access, a specific AWS Service Role defines interaction permissions with other services, and additional IAM permissions for console access to EMR Studio Workspaces allow administrators to customise the security posture of their EMR Notebook environments.

These rules restrict network connections and cross-service rights for notebook operations and ensure that only authorised users can work with notebooks and run programs. According to the documentation, these functionalities complement the Amazon EMR security architecture by providing a multidimensional approach to notebook-based data processing workflow security.

#EMRNotebooksSecurity#EMRNotebooks#AmazonEMR#IdentityandAccessManagement#EMRStudioWorkspaces#EMRStudio#technology#technews#technologynews#news#govindhtech


Blockchain Mining on AWS + blockchaincloudmining.com

Blockchain mining on AWS is a popular method for those looking to harness the power of cloud computing for cryptocurrency mining. By leveraging Amazon Web Services (AWS), miners can set up powerful virtual machines tailored for mining operations, significantly reducing the upfront cost and complexity associated with traditional hardware setups. This approach offers flexibility and scalability, allowing miners to adjust their computational resources based on demand and profitability.

For beginners and experienced miners alike, using AWS for blockchain mining avoids the need to build physical rigs. EC2 offers GPU-backed and FPGA-backed instance families that users can configure for mining workloads. Additionally, AWS's infrastructure provides high uptime and security, critical factors in maintaining a successful mining operation.

To get started or learn more about how AWS can enhance your mining efforts, visit https://blockchaincloudmining.com. This platform offers comprehensive guides, tutorials, and support for setting up and managing your AWS-based mining rigs. Whether you're new to the world of blockchain mining or an experienced miner looking to expand your operations, blockchaincloudmining.com is an invaluable resource.


Discover the Best AWS Institute in Chennai: Learn from Experts at Trendnologies

In a world rapidly migrating to the cloud, mastering Amazon Web Services (AWS) has become essential for both IT professionals and aspiring tech enthusiasts. As more companies in Chennai and beyond embrace cloud-native infrastructure, the demand for AWS-certified professionals continues to surge. For those seeking the ideal place to build cloud expertise, Trendnologies emerges as a top-tier AWS institute in Chennai, offering a comprehensive, career-aligned learning experience.

Why AWS Skills Are a Game-Changer in the Current Tech Landscape

Cloud computing is no longer a niche—it's the new normal. AWS, the market leader in cloud services, powers everything from web hosting to machine learning workloads. Businesses are increasingly dependent on professionals who can architect, deploy, and manage scalable and secure cloud environments.

Whether you're aiming for roles like Cloud Engineer, DevOps Specialist, or AWS Solutions Architect, enrolling in a reputed AWS institute in Chennai can equip you with in-demand skills that open up rewarding career opportunities.

The Role of a Professional AWS Institute: More Than Just a Training Center

Choosing the right AWS institute is about more than just picking a place that teaches cloud concepts. A professional institute should:

Deliver up-to-date and certification-aligned content

Provide hands-on labs and real-world simulations

Offer flexible learning options

Support learners with exam preparation and career assistance

Trendnologies ticks all these boxes and more, making it a preferred AWS training destination in Chennai.

What Sets Trendnologies Apart as a Leading AWS Institute in Chennai?

At Trendnologies, AWS training is delivered with a purpose—to shape cloud professionals who are ready for the real world. Here’s why learners choose us:

1. Industry-Validated Curriculum Designed for AWS Certification

Our course content is mapped to global AWS certification standards, ensuring that learners are prepared for exams like:

AWS Certified Cloud Practitioner

AWS Certified Solutions Architect – Associate

AWS Certified Developer – Associate

AWS Certified DevOps Engineer – Professional

We go beyond theory by integrating hands-on lab sessions and mock tests to help learners gain practical skills and confidence.

2. Real-Time Use Cases and Scenario-Based Training

One of our unique strengths is our scenario-driven training approach. Learners don’t just read about AWS services—they implement them in real business scenarios such as:

Launching and securing EC2 instances

Automating deployments with AWS CodePipeline

Setting up high-availability architectures with ELB and Auto Scaling

Monitoring application performance with CloudWatch and X-Ray

This project-based training gives you the practical insight employers are looking for.

3. Cloud Lab Environment for Anytime Practice

To reinforce concepts, we offer a dedicated cloud lab environment where learners can practice AWS configurations, troubleshoot setups, and test deployment strategies. Our labs are accessible even outside class hours, supporting continuous learning and experimentation.

4. Instructor-Led Learning Backed by Technical Support

At Trendnologies, AWS courses are led by certified professionals who have real-world AWS implementation experience. Their teaching approach blends theory with practice, offering insider tips for certification exams and job interviews.

Additionally, learners have access to one-on-one mentoring, doubt-clearing sessions, and technical forums to discuss complex topics and stay updated with industry trends.

Who Should Join Our AWS Institute in Chennai?

Our AWS training programs are designed for:

IT professionals looking to upskill to cloud technologies

Software developers and system administrators

Network engineers and DevOps practitioners

Fresh graduates pursuing a career in cloud computing

No prior cloud experience? No problem. Our foundational modules ensure that even beginners can start strong and progress smoothly.

Learning Formats Tailored to Your Schedule

Trendnologies understands the challenges of balancing learning with other responsibilities. That’s why we offer multiple training modes, including:

Weekday and weekend classroom sessions

Live instructor-led online batches

Hybrid learning options with recorded sessions and mentor support

All modes come with lifetime access to study materials, lab exercises, and downloadable resources.

Career Path Guidance and Placement-Oriented Training

We don’t stop at training. As one of the top AWS institutes in Chennai, we also help learners build job-ready profiles through:

Resume crafting sessions with AWS project inclusion

Technical interview preparation

Mock interview panels with industry professionals

Updates on job openings with partner companies

This holistic support increases your visibility in the job market and accelerates your entry into cloud roles.

Explore Our Course Modules at a Glance

Here’s a peek into what you’ll cover:

Introduction to Cloud Computing and AWS Ecosystem

Virtual Servers and Elastic Load Balancing

IAM, Role-Based Access Control, and Security Best Practices

Cloud Storage Solutions: S3, EBS, Glacier

RDS and NoSQL Databases in AWS

VPC Networking, Routing, and NAT

Serverless Frameworks: Lambda, API Gateway

Infrastructure Automation: CloudFormation, Terraform

Monitoring and Cost Management Tools

Each module is backed by quizzes, assessments, and use-case discussions to reinforce understanding.

Conclusion: Begin Your AWS Journey with the Best Institute in Chennai

In the digital era, cloud computing skills are not just valuable—they’re indispensable. If you’re serious about launching or advancing a career in cloud, choosing a trusted AWS institute is the first step.

Trendnologies, with its expert faculty, practical training methods, and career-focused curriculum, offers a proven path to AWS success. Whether you aim to get certified or land a high-paying cloud role, our AWS program in Chennai equips you with everything you need.

Enroll today and redefine your professional future with cloud expertise!

For more info visit:

www.trendnologies.com

Email: [email protected]

Location: Chennai | Coimbatore | Bangalore


How to Change Your AWS EC2 Instance Type for a Seamless Hosted UI-to-Backend Integration

In the world of web applications, linking a hosted user interface (UI) with a secure backend on an AWS EC2 instance is essential for creating a seamless, scalable, and secure user experience. This guide covers each step in the process, from launching and configuring an EC2 instance to implementing HTTPS for secure communication. By the end of this tutorial, you’ll have a practical understanding of how to connect a hosted UI to an EC2 backend, troubleshoot common issues, and maximize the power of AWS for a robust setup.

AWS EC2 instance types are categorized based on optimized use cases like compute, memory, storage, or GPU performance. Choosing the right instance type ensures efficient resource allocation and cost-effective application performance.

Step 1: Launch and Configure the EC2 Instance

The first step in setting up a backend server on AWS is creating an EC2 instance suited to handle your application’s workload. With AWS, you have full control over the instance, including scaling resources as traffic grows. Here’s how we set up the instance:

Select an Instance Type: Head over to the EC2 Dashboard in AWS, where you can launch a new instance. The t2.micro instance type is often a good choice for smaller projects or testing environments, but as application needs grow, selecting a larger instance type may be necessary for enhanced performance.

Assign an Elastic IP: Once the instance is launched, assign an Elastic IP so that it keeps a static public IP address even when the instance is stopped and started again. This lets your hosted UI reach the backend consistently without needing to update the endpoint.

Set Up Security Groups: AWS security groups act as a virtual firewall for your EC2 instance. Configuring inbound and outbound rules in these security groups is crucial to ensure that only trusted traffic reaches your server. We configured rules to allow incoming traffic on:

Port 80 (HTTP): Used to redirect traffic to HTTPS.

Port 443 (HTTPS): Enables secure encrypted connections.

Application-Specific Port (6879): The port where our application’s backend listens for requests.

By setting up these rules, we created a secure pathway for communication between our hosted UI and the EC2 instance.
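The port 80 rule above exists only to redirect traffic to HTTPS. A minimal Go sketch of such a redirect follows. It assumes HTTPS is served on the default port 443, and the helper names `httpsTarget` and `redirectToHTTPS` are illustrative rather than taken from the original application.

```go
package main

import (
	"fmt"
	"net"
	"net/http"
)

// httpsTarget builds the HTTPS URL for a request, dropping any explicit
// port from the Host header so the client falls back to port 443.
func httpsTarget(host, requestURI string) string {
	if h, _, err := net.SplitHostPort(host); err == nil {
		host = h
	}
	return "https://" + host + requestURI
}

// redirectToHTTPS answers every plain-HTTP request with a permanent
// redirect to the same path on HTTPS.
func redirectToHTTPS(w http.ResponseWriter, r *http.Request) {
	http.Redirect(w, r, httpsTarget(r.Host, r.URL.RequestURI()), http.StatusMovedPermanently)
}

func main() {
	// Print a sample target instead of binding port 80, which usually
	// needs elevated privileges.
	fmt.Println(httpsTarget("example.com:80", "/healthz")) // prints "https://example.com/healthz"
}
```

To serve the redirect for real, the handler could be started with `http.ListenAndServe(":80", http.HandlerFunc(redirectToHTTPS))` alongside the HTTPS server.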

Step 2: Retrieve Instance Metadata with IMDSv2

To simplify dynamic configurations, AWS provides the Instance Metadata Service (IMDS), which is especially useful for obtaining information about the instance itself, like its public IP. AWS strengthened the security of this service with IMDSv2, which requires a session token to access metadata.

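We created a script that retrieves the public IP address of the instance. Here’s a quick look at how it’s done:

```go
import (
	"fmt"
	"io"
	"net/http"
	"time"
)

// getPublicIP returns the instance's public IPv4 address via IMDSv2.
func getPublicIP() (string, error) {
	// A short timeout avoids hanging when the metadata service is unreachable.
	client := &http.Client{Timeout: 2 * time.Second}

	// Step 1: request a session token (TTL of 21600 seconds, i.e. 6 hours).
	tokenReq, err := http.NewRequest("PUT", "http://169.254.169.254/latest/api/token", nil)
	if err != nil {
		return "", err
	}
	tokenReq.Header.Set("X-aws-ec2-metadata-token-ttl-seconds", "21600")
	tokenResp, err := client.Do(tokenReq)
	if err != nil {
		return "", err
	}
	defer tokenResp.Body.Close()
	token, err := io.ReadAll(tokenResp.Body)
	if err != nil {
		return "", err
	}

	// Step 2: use the token to read the public IPv4 address.
	req, err := http.NewRequest("GET", "http://169.254.169.254/latest/meta-data/public-ipv4", nil)
	if err != nil {
		return "", err
	}
	req.Header.Set("X-aws-ec2-metadata-token", string(token))
	resp, err := client.Do(req)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	// A non-200 status (for example 404 when no public IP is assigned) is not an address.
	if resp.StatusCode != http.StatusOK {
		return "", fmt.Errorf("metadata request failed: %s", resp.Status)
	}
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return "", err
	}
	return string(body), nil
}
```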

By accessing metadata directly within the instance, this approach ensures that necessary details are always available, even if instance information changes.

Step 3: Generate and Embed SSL Certificates for HTTPS

A key part of connecting a UI to a backend is ensuring secure data transmission, which we accomplish by configuring HTTPS on the EC2 instance. We generated SSL certificates using OpenSSL and embedded them directly within the code.

Generate SSL Certificates with OpenSSL

We created a self-signed certificate and private key, which were then embedded within the application. This way, there’s no need to handle the certificates externally.

```bash
openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout server.key -out server.crt -subj "/CN=yourdomain.com"
```

Embed Certificates in the Code

With Go’s embed package, we could easily include the certificate and key within the application:

```go
import _ "embed" // blank import is required for //go:embed directives

//go:embed certs/server.crt
var serverCrtFile []byte

//go:embed certs/server.key
var serverKeyFile []byte
```

Embedding the certificates directly in the code makes deployment more straightforward, especially for EC2 instances that may be frequently restarted or replaced.

Step 4: Configure HTTPS with TLS in Go

Once the certificates were embedded, the next step was to configure HTTPS in our Go server to serve secure connections. We set up the server to serve HTTPS requests only when a public IP was available, using TLS to provide strong encryption.

Here’s how we configured the Go server to listen for secure connections on port 443:

```go
// Uses crypto/tls, log, and net/http; ip and httpSrv are set up earlier.
if ip != "" {
	// Build the TLS certificate from the embedded PEM bytes.
	cert, err := tls.X509KeyPair(serverCrtFile, serverKeyFile)
	if err != nil {
		log.Fatalf("Failed to load TLS certificates: %v", err)
	}
	httpSrv.TLSConfig = &tls.Config{
		Certificates: []tls.Certificate{cert},
		MinVersion:   tls.VersionTLS12,
	}
	// Empty file names make the server use TLSConfig.Certificates.
	if err := httpSrv.ListenAndServeTLS("", ""); err != http.ErrServerClosed {
		log.Fatalf("GraphQL server failed to start with HTTPS: %v", err)
	}
} else {
	// No public IP: fall back to plain HTTP.
	if err := httpSrv.ListenAndServe(); err != http.ErrServerClosed {
		log.Fatalf("GraphQL server failed to start with HTTP: %v", err)
	}
}
```

With this configuration, the server listens over HTTPS, ensuring that all communications between the UI and the backend are encrypted.

Step 5: Testing and Troubleshooting Common Issues

After setting up the server, thorough testing was essential to confirm that everything worked as expected.

Using OpenSSL for Certificate Validation

To ensure the certificate was properly configured, we validated it using OpenSSL:

```bash
openssl x509 -in server.crt -text -noout
```

Testing the Connection with CURL

Using curl, we confirmed that the server was accessible over HTTPS:

```bash
curl -vk https://<public_ip>:6879/healthz
```

Common Issues and Solutions

TLS Handshake Errors:

This usually indicates a mismatch in TLS versions between the client and server. To resolve this, we enforced TLS 1.2 on the server.

Connection Refused:

This can happen if ports aren’t correctly opened. We confirmed that our security group allowed inbound traffic on all necessary ports (80, 443, 6879).

Certificate Warnings:

Since we used a self-signed certificate, some browsers and tools may show a warning. For production setups, consider using a certificate issued by a trusted Certificate Authority (CA).
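For the certificate warning in particular, a Go client can trust the self-signed certificate explicitly instead of turning verification off. The following is a minimal sketch, not part of the original setup: `newPinnedClient` is a hypothetical helper name, and it assumes you pass in the contents of `server.crt`.

```go
package main

import (
	"crypto/tls"
	"crypto/x509"
	"errors"
	"net/http"
	"time"
)

// newPinnedClient (hypothetical helper) returns an HTTP client that trusts
// only the certificates in certPEM, so a self-signed server.crt can be
// verified without disabling certificate checks.
func newPinnedClient(certPEM []byte) (*http.Client, error) {
	pool := x509.NewCertPool()
	if !pool.AppendCertsFromPEM(certPEM) {
		return nil, errors.New("no valid PEM certificate found")
	}
	return &http.Client{
		Timeout: 10 * time.Second,
		Transport: &http.Transport{
			TLSClientConfig: &tls.Config{
				RootCAs:    pool,
				MinVersion: tls.VersionTLS12,
			},
		},
	}, nil
}
```

Note that Go checks the host name against the certificate's subjectAltName entries, so a certificate created with only a CN would likely still fail verification until it is regenerated with a SAN that matches the address you connect to.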

Final Integration and Testing with the UI

With the EC2 backend fully configured, we tested end-to-end integration with the UI to ensure seamless communication between the frontend and backend. By securing each step, from security groups to TLS encryption, we created a setup that ensures both security and reliability.

AWS offers a robust EC2 API through which developers can control instances programmatically. You can use the API to launch, stop, start, terminate, and resize instances without using the AWS Console. This is particularly convenient for automation, scaling, and integrating EC2 management with custom applications or DevOps pipelines. Tools such as the AWS CLI, the SDKs, and CloudFormation all use this API in the background to communicate with EC2 resources.

How to monitor how often AWS EC2 instances are replaced?

Here's a brief six-step guide to monitoring the frequency of EC2 instance replacement:

Tag instances with launch timestamps via instance metadata or automation tools.

Turn on AWS CloudTrail to record EC2 instance start/terminate events.

Monitor instance lifecycle changes using Amazon CloudWatch Logs/Events.

Build a CloudWatch Dashboard with custom metrics or filters for instance IDs.

Export logs to S3 and query with Athena or utilize AWS Config to monitor changes over time.

Visualize the data daily and weekly to see trends in instance replacement frequency.

How to delete EC2 instances from AWS?

Go to the EC2 Dashboard in the AWS Management Console.

Select the instance you want to delete, then click Actions > Instance State > Terminate instance.

Confirm the termination — the instance will be permanently deleted along with its ephemeral data.

Conclusion

Connecting a hosted UI with an AWS EC2 instance requires a thoughtful approach to security, scalability, and configuration. From launching the instance and configuring security groups to embedding certificates and troubleshooting, each step adds value to the final setup. The result is a secure, reliable, and scalable environment where users can interact with the backend through a hosted UI, confident that their data is protected. With AWS’s powerful infrastructure and EC2’s flexibility, this setup provides a robust foundation for web applications ready to grow and adapt to future needs.

FAQs

1. What happens when I modify the instance type of an EC2 instance?

To modify the instance type of an EBS-backed instance, the instance must first be stopped.

You can then change its type and start it again with the new type.

All the data in the root volume is preserved unless deleted intentionally.

Ensure that the new instance type is supported by the current AMI and networking.

2. Will my Elastic IP be the same after instance type change?

Yes, if you have an Elastic IP associated, it will remain attached during the type change.

Elastic IPs persist through instance stops and starts.

Just make sure the instance is in the same region.

Without an Elastic IP, the public IP will change on restart.

3. How do I select the correct EC2 instance type for my application?

Evaluate your workload requirements: compute-intensive, memory-hungry, or storage-intensive.

Consult AWS's instance type recommendations and perform benchmarking if necessary.

Begin with general-purpose types such as t3 for development or low load.

Observe performance and grow vertically (change type) or horizontally (increase instances).

4. Can I automate changing EC2 instance types depending on usage?

Yes, you can automate it with CloudWatch alarms and Lambda functions.

Set thresholds on CPU, memory, or network metrics (memory metrics require the CloudWatch agent).

When a threshold is breached, trigger a Lambda function to stop the instance, change its type, and start it again.

This optimizes cost and performance without manual intervention.
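The decision step inside such a function can be kept separate from the AWS calls. The sketch below shows only that decision, under stated assumptions: `sizeLadder` is a hypothetical ordered list of instance types, and the thresholds are values you would choose yourself. The actual stop, modify, and start calls are left out.

```go
package main

// sizeLadder is a hypothetical ordered list of instance types, smallest first.
var sizeLadder = []string{"t3.small", "t3.medium", "t3.large", "t3.xlarge"}

// nextInstanceType returns the type to move to for a given average CPU
// utilization (percent). It steps one size up at or above scaleUpAt, one
// size down at or below scaleDownAt, and otherwise returns current unchanged.
func nextInstanceType(current string, avgCPU, scaleUpAt, scaleDownAt float64) string {
	idx := -1
	for i, t := range sizeLadder {
		if t == current {
			idx = i
			break
		}
	}
	if idx < 0 {
		return current // unknown type: leave it alone
	}
	switch {
	case avgCPU >= scaleUpAt && idx < len(sizeLadder)-1:
		return sizeLadder[idx+1]
	case avgCPU <= scaleDownAt && idx > 0:
		return sizeLadder[idx-1]
	default:
		return current
	}
}
```

Moving one step at a time keeps each change small; because every change involves a stop and start, a cooldown between changes would likely be needed in practice.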


Building a Cost-Efficient Cloud Architecture During AWS Migration

Migrating to AWS offers a multitude of benefits, such as scalability, flexibility, and enhanced performance. However, one of the key considerations during the migration process is managing costs effectively. Without careful planning and optimization, cloud costs can quickly spiral out of control. At InCloudo, we help businesses implement AWS cloud migration services that are not only seamless but also cost-efficient. In this blog, we’ll explore strategies for building a cost-efficient cloud architecture during your AWS migration.

Assess Your Current Infrastructure

The first step toward building a cost-efficient cloud architecture during AWS migration is to thoroughly assess your existing infrastructure. This will help identify the workloads that are suitable for migration and provide insights into the resources you currently use, including servers, storage, and networking. A clear understanding of your current setup will help you make informed decisions about which services to migrate, which to optimize, and where to cut costs.

During the assessment phase, consider the following:

Inventory your existing assets: Take stock of your current hardware, software, and licensing costs.

Identify underutilized resources: Look for servers or systems that are not fully utilized and determine if they can be downsized or eliminated.

Map dependencies: Identify any dependencies between applications or workloads to ensure that they’re moved together to avoid costly reconfigurations.

Leverage AWS Pricing Models and Cost Management Tools

AWS offers a variety of pricing models and tools that can help you optimize costs during the migration process. Understanding these pricing options is essential for building a cost-effective architecture.

Reserved Instances (RIs) and Savings Plans: If you know you’ll need certain resources for the long term, consider purchasing Reserved Instances or using AWS Savings Plans. Both options offer significant discounts in exchange for committing to specific resource usage over one- or three-year periods.

Spot Instances: For non-critical workloads, Spot Instances are a great way to save money. AWS Spot Instances let you take advantage of unused EC2 capacity at a fraction of the cost. However, these instances can be interrupted, so they’re best suited for workloads that can tolerate interruptions.

Use of AWS Free Tier: AWS offers a Free Tier for new customers, which provides limited access to many AWS services. If your migration includes proof of concepts or testing environments, the Free Tier can help you reduce costs during these phases.

Additionally, leverage AWS’s Cost Explorer, AWS Budgets, and AWS Trusted Advisor to monitor your usage and optimize costs. These tools provide insights into your spending patterns and help identify areas where you can cut back.
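To make the commitment trade-off concrete, here is a small arithmetic sketch. It assumes a simplified model in which a committed rate is billed for every hour of the month while on-demand is billed only for hours actually used; the function names and any rates you plug in are illustrative, not AWS prices.

```go
package main

// monthlyCost returns the cost of running at hourlyRate for the given hours.
func monthlyCost(hourlyRate, hours float64) float64 {
	return hourlyRate * hours
}

// breakEvenUtilization returns the fraction of the month an instance must
// run before a committed rate (billed for every hour) becomes cheaper than
// paying the on-demand rate only for the hours used.
func breakEvenUtilization(onDemandRate, committedRate float64) float64 {
	if onDemandRate <= 0 {
		return 0
	}
	return committedRate / onDemandRate
}
```

For example, if the committed rate is half the on-demand rate, the commitment pays off only when the instance runs more than half of the time; workloads below that utilization are likely cheaper on demand or on Spot.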

Optimize Resource Sizing

Right-sizing your resources is one of the most effective ways to keep costs in check. During the AWS migration, take the time to match the right instance types, storage options, and other services to your workload requirements.

Instance Sizing: AWS offers a wide variety of EC2 instance types optimized for different workloads. For example, compute-heavy workloads might require instances with more CPU power, while memory-intensive applications may benefit from instances with higher RAM. By carefully choosing the instance type that best fits your needs, you can avoid paying for unnecessary resources.

Auto Scaling: Implement auto scaling to adjust the number of instances based on demand. This ensures you’re only paying for what you need at any given time, without the need for over-provisioning.

Regularly monitor and adjust resource sizing after migration to ensure you're not overpaying for underused resources.
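The idea behind scaling on demand can be sketched as a proportional rule: if a metric sits above its target, add capacity in proportion, and remove it when the metric sits below. The function below is an illustration of that rule under assumed inputs, not AWS's actual scaling algorithm; `desiredCapacity` and its parameters are hypothetical names.

```go
package main

import "math"

// desiredCapacity scales the instance count in proportion to how far the
// observed metric is from its target, then clamps the result to the
// [minSize, maxSize] range.
func desiredCapacity(current int, metric, target float64, minSize, maxSize int) int {
	if current < 1 || target <= 0 {
		return minSize
	}
	want := int(math.Ceil(float64(current) * metric / target))
	if want < minSize {
		return minSize
	}
	if want > maxSize {
		return maxSize
	}
	return want
}
```

With four instances at 90% average CPU and a 60% target, this rule asks for six instances; at 30% it asks for two. The minimum and maximum bounds cap both the cost and the risk of scaling in too far.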

Implement Multi-AZ and Multi-Region Strategies Wisely

While AWS offers high availability and disaster recovery options, it’s important to design your architecture with cost efficiency in mind when using multiple Availability Zones (AZs) and Regions.

Multi-AZ Deployment: While deploying applications across multiple AZs increases reliability, it can also increase costs due to additional resources required for redundancy. Use multi-AZ deployment for critical systems but avoid over-engineering your architecture by deploying less critical services in a single AZ, where possible.

Multi-Region Deployments: Expanding into multiple regions may be necessary for performance, compliance, or redundancy, but it can increase network transfer costs. Minimize unnecessary inter-region data transfer by carefully planning your regional distribution based on workload needs and data proximity.

Conclusion

Migrating to AWS offers tremendous benefits, but without careful planning, cloud costs can spiral out of control. By assessing your current infrastructure, leveraging AWS cost optimization tools, optimizing resource sizing, using serverless architectures, and continuously monitoring your environment, you can ensure a cost-efficient cloud architecture. At InCloudo, we specialize in helping businesses navigate AWS migrations with cost-efficiency in mind, ensuring they can scale successfully without breaking the bank.


COMP 370 Homework 2 – Unix server and command-line exercises

The goal of this assignment is for you to get more familiar with your Unix EC2 – both as a data science machine and as a server (as a data scientist, you’ll need it as both). Task 1: Setting up a webserver. The objective of this task is to set up your EC2 instance to run an Apache webserver on port 8008. Your goal is to have it serving up the file comp370_hw2.txt at the www root. In other words,…



What Are The Programmatic Commands For EMR Notebooks?

EMR Notebook Programming Commands

You can interact with Amazon EMR Notebooks programmatically.

The execution APIs let you control EMR notebook executions from a script or the command line, outside the AWS console. With them you can start, stop, list, and describe EMR notebook executions.

The following examples demonstrate these abilities:

AWS CLI: Examples are shown for Amazon EMR clusters running on Amazon EC2 and for EMR Notebooks clusters (EMR on EKS) with notebooks in EMR Studio Workspaces. A sample that runs a notebook from an Amazon S3 location is also provided. The commands shown can list executions by start time, or by start time and status, stop an in-progress execution, and describe a notebook execution.

Boto3 SDK (Python): Demo.py uses boto3 to call the EMR notebook execution APIs. The script shows how to start a notebook execution, get the execution ID, describe the execution, list all running executions, and stop the execution after a short pause. The script's output shows status updates and execution IDs.

Ruby SDK: Sample Ruby code shows how to set up the connection to Amazon EMR and call the notebook execution APIs: start a notebook execution and get its execution ID, describe the execution and print its details, and stop the execution. Sample output from the Ruby run is also shown.

Programmatic command parameters

Important parameters in these commands are:

editor-id or EditorId: The ID of the EMR Studio Workspace.

relative-path or RelativePath: The notebook file's path relative to the Workspace's home directory. Examples include my_folder/python3.ipynb and demo_pyspark.ipynb.

execution-engine or ExecutionEngine: The EMR cluster ID (j-1234ABCD123) or the EMR on EKS endpoint ARN, plus the type, to choose the engine.

service-role or ServiceRole: The IAM service role, such as EMR_Notebooks_DefaultRole.

notebook-params or notebook_params: Passes multiple parameter values to a notebook, eliminating the need for multiple copies of it. Typically, parameters are JSON strings.

notebook-s3-location or NotebookS3Location: The S3 bucket and key of the input notebook file.

output-notebook-s3-location or OutputNotebookS3Location: The S3 bucket and key where the output notebook will be stored.

notebook-execution-name: Names the execution.

notebook-execution-id: Identifies an execution when describing, stopping, or listing.

--from and --status: Start time and status filters for listing executions.
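Since notebook parameters are typically passed as a JSON string, it is safer to build that string with an encoder than by hand. The sketch below is a generic illustration: `notebookParams` is a hypothetical helper, and the parameter names a notebook accepts are whatever that notebook defines.

```go
package main

import "encoding/json"

// notebookParams (hypothetical helper) encodes parameter values as a JSON
// string suitable for passing as the notebook parameters value.
func notebookParams(params map[string]interface{}) (string, error) {
	b, err := json.Marshal(params)
	if err != nil {
		return "", err
	}
	return string(b), nil
}
```

Using an encoder handles quoting and escaping of values, which is easy to get wrong when the string is also passed through a shell.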

According to the documentation, EMR Notebooks are also available in the console as EMR Studio Workspaces. Accessing and creating Workspaces requires additional IAM role permissions. Programmatic execution requires IAM permissions for actions such as StartNotebookExecution, DescribeNotebookExecution, ListNotebookExecutions, and iam:PassRole. EMR Notebooks clusters (EMR on EKS) also require emr-containers permissions.

Each account is limited to 100 concurrent executions per AWS Region, and executions that last more than 30 days are terminated. Programmatic execution is not supported with Amazon EMR Serverless interactive applications.

You can schedule or batch EMR notebook runs using AWS Lambda and Amazon CloudWatch Events, or with Apache Airflow or Amazon Managed Workflows for Apache Airflow (MWAA).


Basic Setup For Drupal 10 in AWS

Basically the environment will consist of an Application Load Balancer (ALB), two or more Elastic Compute Cloud (EC2) instances, an Elastic File System (EFS), and a Relational Database Service (RDS) instance. The EFS provides shared file storage for the EC2 instances to access, while the RDS instance provides the MySQL database that Drupal will use, and the ALB distributes incoming web traffic to…


Top Container Management Tools You Need to Know in 2024

Containers and container management technology have transformed the way we build, deploy, and manage applications. A container packages a program and all its dependencies, allowing it to execute reliably across several computing environments.

Some newcomers to programming may overlook container technology, yet this approach tackles the age-old issue of software behaving differently in production than in development. QKS Group projects that the container management market will register a CAGR of 10.20% by 2028.

Containers make application development and deployment easier and more efficient, and developers rely on them to complete tasks. However, with more containers comes greater responsibility, and container management software is up to the task.

We’ll review all you need to know about container management so you can utilize, organize, coordinate, and manage large numbers of containers more effectively.

Download the sample report of Market Share: https://qksgroup.com/download-sample-form/market-share-container-management-2023-worldwide-5112

What is Container Management?

Container management refers to the process of managing, scaling, and sustaining containerized applications across several environments. It incorporates container orchestration, which automates container deployment, networking, scaling, and lifecycle management using platforms such as Kubernetes. Effective container management guarantees that applications in the cloud or on-premises infrastructures use resources efficiently, have optimized processes, and are highly available.

How Does Container Management Work?

Container management begins with the development and setup of containers. Each container is pre-configured with all of the components required to execute an application. This guarantees that the application environment is consistent across the various deployment situations.

After you’ve constructed your containers, it’s time to focus on orchestration. This means automating container deployment and operation in order to schedule containers across a cluster of servers. The orchestrator decides where to run each container based on resource availability, constraints, and inter-container relationships.

Beyond that, your container management platform will manage scalability and load balancing. As the demand for an application changes, these systems dynamically modify the number of active containers, scaling up at peak times and down during quieter moments. They also handle load balancing, which distributes incoming application traffic evenly among all containers.
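A placement decision based on resource availability can be illustrated with a toy scheduler. The sketch below is not how Kubernetes or any specific orchestrator works; the `node` type and `pickNode` function are hypothetical, and the policy (choose the fitting node with the most free CPU) is just one simple option.

```go
package main

// node is a hypothetical view of one server's spare capacity.
type node struct {
	Name    string
	FreeCPU int // millicores
	FreeMem int // MiB
}

// pickNode returns the index of the node that can fit the request and has
// the most free CPU, or -1 if no node has enough CPU and memory.
func pickNode(nodes []node, needCPU, needMem int) int {
	best := -1
	for i, n := range nodes {
		if n.FreeCPU < needCPU || n.FreeMem < needMem {
			continue // this node cannot fit the container
		}
		if best == -1 || n.FreeCPU > nodes[best].FreeCPU {
			best = i
		}
	}
	return best
}
```

Real schedulers layer many more rules on top of this, such as affinity between containers and placement constraints, but the filter-then-rank shape is a common starting point.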
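Even distribution of traffic is often implemented as round-robin selection. The sketch below shows that technique in isolation, assuming a fixed list of backend addresses; the `roundRobin` type is illustrative and does not handle health checks or backends joining and leaving.

```go
package main

import "sync"

// roundRobin hands out backends in rotation and is safe for concurrent use.
type roundRobin struct {
	mu       sync.Mutex
	backends []string
	next     int
}

// pick returns the next backend in rotation, or "" if there are none.
func (r *roundRobin) pick() string {
	r.mu.Lock()
	defer r.mu.Unlock()
	if len(r.backends) == 0 {
		return ""
	}
	b := r.backends[r.next%len(r.backends)]
	r.next = (r.next + 1) % len(r.backends)
	return b
}
```

Each call to `pick` returns the next container address in turn, so over time every container receives roughly the same number of requests.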

Download the sample report of Market Forecast: https://qksgroup.com/download-sample-form/market-forecast-container-management-2024-2028-worldwide-4629

Top Container Management Software

Docker

Docker is an open-source software platform that allows you to create, deploy, and manage virtualized application containers on your operating system.

The container contains all the application’s services or functions, as well as its libraries, configuration files, dependencies, and other components.

Apache Mesos

Apache Mesos is an open-source cluster management system and a control plane for effective distribution of computer resources across application delivery platforms known as frameworks.

Amazon Elastic Container Service (ECS)

Amazon ECS is a highly scalable container management platform that supports Docker containers and enables you to efficiently run applications on a controlled cluster of Amazon EC2 instances.

This makes it simple to manage containers as modular services for your applications, eliminating the need to install, administer, and customize your own cluster management infrastructure.

OpenShift

OpenShift is a container management tool developed by Red Hat. Its architecture is built around Docker container packaging and Kubernetes-based cluster management. It also brings together various aspects of application lifecycle management.

Kubernetes

Kubernetes, developed by Google, is the most widely used container management technology. It was donated to the Cloud Native Computing Foundation in 2015 and is now maintained by the Kubernetes community.

Kubernetes soon became a top choice for a standard cluster and container management platform because it was one of the first solutions and is also open source.

Containers are widely used in application development due to their benefits in terms of consistent performance, portability, scalability, and resource efficiency. Containers allow developers to bundle programs and services, as well as all their dependencies, into a standardized isolated unit that can function smoothly and consistently in a variety of computing environments, simplifying application deployment. The Container Management Market Share, 2023, Worldwide research and the Market Forecast: Container Management, 2024-2028, Worldwide report are useful for acquiring a complete understanding of these emerging trends.

This widespread usage of containerization raises the difficulty of managing many containers, which may be overcome by using container management systems. Container management systems on the market today allow users to generate and manage container images, as well as manage the container lifecycle. They guarantee that infrastructure resources are managed effectively and efficiently, and that they grow in response to user traffic. They also enable container monitoring for performance and faults, which are reported in the form of dashboards and infographics, allowing developers to quickly address any concerns.

Talk To Analyst: https://qksgroup.com/become-client

Conclusion

Containerization frees you from the constraints of an operating system, allowing you to speed development and perhaps expand your user base, so it’s no surprise that it’s the technology underlying more than half of all apps. I hope the information in this post was sufficient to get you started with the appropriate containerization solution for your requirements.
