#how to improve productivity in software development

I feel like I need to clarify how fucking... random the Big Ugly Bill is.

I do admit. I'm not reading 1000 pages. I did use AI to read it. I know, I know. You can hate me, but my attention span and reading comprehension are not great enough for that.

So here's 25 random ass points in the Big Ugly Bill.

Environment and Energy

1. Repeals grants and rebates from the government for clean energy projects

2. Reduces financial incentive and technical assistance to reduce methane production

3. Ends EPA initiative to label and certify greenhouse gas materials

4. Revokes money meant for modernizing and digitizing environment permitting process

5. Ends federal aid to states and tribes to improve emissions tracking and public access to environmental compliance data

Agriculture and Conservation

6. Reauthorizes major farm safety net programs

7. Rescinds unspent Inflation Reduction Act funds for the Environmental Quality Incentives Program (EQIP), Conservation Stewardship Program (CSP), and others

8. Provides $15 million/year to continue controlling the spread of destructive feral swine populations on agricultural lands

9. Continues payments to private landowners who open land for hunting, fishing, and conservation through 2031

10. Increases federal support for groundwater and drinking water safety projects via the USDA

Nutrition and Social Programs

11. Revises definitions of “qualified” non-citizens, likely restricting Supplemental Nutrition Assistance Program (SNAP) access

12. Reauthorizes through 2031 with ongoing food commodity purchases and distribution logistics support

Defense and National Security

13. Raises the multiplier for calculating military pensions, enhancing the defined-benefit portion for service members

14. Lets DoD keep using private contractors for base housing through September 2029

15. Adds appropriations for expanding the U.S. Navy fleet—part of a broader military-industrial buildup

Infrastructure and Industry

16. Eliminates tax incentives and grants for EV purchases and charging network development

17. Mandates quarterly oil and gas lease sales in areas like the Gulf of Mexico and Alaska, including ANWR

18. Adds metallurgical coal (used for steelmaking) to the list of industries eligible for domestic manufacturing tax credits

IRS and Taxes

19. Eliminates the free government-run tax filing system, reinstating reliance on private tax software providers

20. Doubles the estate tax threshold to $15 million per individual, shielding ultra-wealthy inheritances

21. Temporarily allows Americans to deduct interest on car loans for U.S.-made vehicles

22. Sets up “American Freedom Accounts” seeded with $1,000 at birth and available for tax-free growth

23. Codifies lower individual income tax brackets and corporate rates from the 2017 Tax Cuts and Jobs Act

Other

24. Bars local governments from regulating artificial intelligence for 10 years, centralizing control at the federal level

25. Creates a new EPA division for restoring abandoned hardrock mine sites in western regions

This could all be... a dozen bills... at least. The Big Ugly Bill should be renamed the special interest lobbying bill.

"BUT! NO TAXES ON TIPS! IT'S GOOD!"

But it revokes grants for clean energy and methane control, instead providing grants for coal production.

"BUT CHILD SAVINGS ACCOUNT"

But it creates stricter work requirements for SNAP eligibility.

"BUT CLEAN WATER"

But it eliminates the free government funded tax filing system.

We can do this all day. This is trash. The bill is trash. It's a "EVERYONE SHOVE WHATEVER SHIT YOU WANT INTO IT" bill.

And don't get me started on Obama's "EVERYONE SHOVE AS MUCH SHIT AS YOU CAN INTO IT" bill. I'm tired of how, every time I criticize the current administration, people want to say "BUT OBAMA". I wasn't okay with it when he did it. I'm not okay with it now. Besides, by your logic, just because one person does something, that makes it okay for EVERYONE to do it.

How about "it wasn't okay for Obama to do it and it's not okay for Trump to do it"? Eh?

-fae

What are some of the coolest computer chips ever, in your opinion?

Hmm. There are a lot of chips, and a lot of different things you could call a Computer Chip. Here's a few that come to mind as "interesting" or "important", or, if I can figure out what that means, "cool".

If your favourite chip is not on here honestly it probably deserves to be and I either forgot or I classified it more under "general IC's" instead of "computer chips" (e.g. 555, LM, 4000, 7000 series chips, those last three each capable of filling a book on their own). The 6502 is not here because I do not know much about the 6502, I was neither an Apple nor a BBC Micro type of kid. I am also not 70 years old so as much as I love the DEC Alphas, I have never so much as breathed on one.

Disclaimer for writing this mostly out of my head and/or ass at one in the morning, do not use any of this as a source in an argument without checking.

Intel 3101

So I mean, obvious shout, the Intel 3101, a 64-bit chip from 1969, and Intel's first ever product. You may look at that, and go, "wow, 64-bit computing in 1969? That's really early" and I will laugh heartily and say no, that's not 64-bit computing, that is 64 bits of SRAM memory.

This one is cool because it's cute. Look at that. This thing was completely hand-designed by engineers drawing the shapes of transistor gates on sheets of overhead transparency and exposing pieces of crudely spun silicon to light in a """"cleanroom"""" that would cause most modern fab equipment to swoon like a delicate Victorian lady. Semiconductor manufacturing was maturing at this point but a fab still had more in common with a darkroom for film development than with the mega expensive building sized machines we use today.

As that link above notes, these things were really rough and tumble, and designs were being updated on the scale of weeks as Intel learned, well, how to make chips at an industrial scale. They weren't the first company to do this, in the 60's you could run a chip fab out of a sufficiently well sealed garage, but they were busy building the background that would lead to the next sixty years.

Lisp Chips

This is a family of utterly bullshit prototype processors that failed to be born in the whirlwind days of AI research in the 70's and 80's.

Lisps, a very old but exceedingly clever family of functional programming languages, were the language of choice for AI research at the time. Lisp compilers and interpreters had all sorts of tricks for compiling Lisp down to instructions, and also the hardware was frequently being built by the AI researchers themselves with explicit aims to run Lisp better.

The illogical conclusion of this was attempts to implement Lisp right in silicon, no translation layer.

Yeah, that is Sussman himself on this paper.

These never left labs. There have since been dozens of abortive attempts to make Lisp Chips happen because the idea is so extremely attractive to a certain kind of programmer, the most recent big one being a pile of weird designs aimed to run OpenGenera. I bet you there are no less than four members of r/lisp who have bought an Icestick FPGA in the past year with the explicit goal of writing their own Lisp Chip. It will fail, because this is a terrible idea, but damn if it isn't cool.

There were many more chips that bridged this gap, stuff designed by or for Symbolics (like the Ivory series of chips or the 3600) to go into their Lisp machines that exploited the up and coming fields of microcode optimization to improve Lisp performance, but sadly there are no known working true Lisp Chips in the wild.

Zilog Z80

Perhaps the most important chip that ever just kinda hung out. The Z80 was almost, almost the basis of The Future. The Z80 is bizarre. It is a software compatible clone of the Intel 8080, which is to say that it has the same instructions implemented in a completely different way.

This is a strange choice, but it was the right one somehow, because through the 80's and 90's practically every single piece of technology made in Japan contained at least one, maybe two Z80's even if there was no readily apparent reason why it should have one (or two). I will defer to Cathode Ray Dude here: What follows is a joke, but only barely

The Z80 is the basis of the MSX, the IBM PC of Japan, which was produced through a system of hardware and software licensing to third party manufacturers by Microsoft of Japan which was exactly as confusing as it sounds. The result is that the Z80, originally intended for embedded applications, ended up forming the basis of an entire alternate branch of the PC family tree.

It is important to note that the Z80 is boring. It is a normal-ass chip but it just so happens that it ended up being the focal point of like a dozen different industries all looking for a cheap, easy to program chip they could shove into Appliances.

Effectively everything that happened to the Intel 8080 happened to the Z80 and then some. Black market clones, reverse engineered Soviet compatibles, licensed second party manufacturers, hundreds of semi-compatible bastard half-sisters made by anyone with a fab, used in everything from toys to industrial machinery, still persisting to this day as an embedded processor that is probably powering something near you quietly and without much fuss. If you have one of those old TI-86 calculators, that's a Z80. Oh also a horrible hybrid Z80/8080 from Sharp powered the original Game Boy.

I was going to try and find a picture of a Z80 by just searching for it and look at this mess! There's so many of these things.

I mean the CP/M computers. The ZX Spectrum, I almost forgot that one! I can keep making this list go! So many bits of the Tech Explosion of the 80's and 90's are powered by the Z80. I was not joking when I said that you sometimes found more than one Z80 in a single computer because you might use one Z80 to run the computer and another Z80 to run a specialty peripheral like a video toaster or music synthesizer. Everyone imaginable has had their hand on the Z80 ball at some point in time or another. Z80 based devices probably launched several dozen hardware companies that persist to this day and I have no idea which ones because there were so goddamn many.

The Z80 eventually got super efficient due to process shrinks so it turns up in weird laptops and handhelds! Zilog and the Z80 persist to this day like some kind of crocodile beast, you can go to RS components and buy a brand new piece of Z80 silicon clocked at 20MHz. There's probably a couple in a car somewhere near you.

Pentium (P6 microarchitecture)

Yeah I am going to bring up the Hackers chip. The Pentium P6 series is currently remembered for being the chip that Acidburn geeks out over in Hackers (1995) instead of making out with her boyfriend, but it is actually noteworthy IMO for being one of the first mainstream chips to start pulling serious tricks on the system running it.

The P6 microarchitecture comes out swinging with like four or five tricks to get around the numerous problems with x86 and deploys them all at once. It has superscalar pipelining, it has a RISC microcode, it has branch prediction, it has a bunch of zany mathematical optimizations, none of these are new per se but this is the first time you're really seeing them all at once on a chip that was going into PC's.

Without these improvements it's possible Intel would have been beaten out by one of its competitors, maybe Power or SPARC or whatever you call the thing that runs on the Motorola 68k. Hell even MIPS could have beaten the ageing cancerous mistake that was x86. But by discovering the power of lying to the computer, Intel managed to speed up x86 by implementing it in a sensible instruction set in the background, allowing them to do all the same clever pipelining and optimization that was happening with RISC without having to give up their stranglehold on the desktop market. Without the P6 we live in a very, very different world from a computer hardware perspective.
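To make the "sensible instruction set in the background" idea a bit more concrete, here is a toy sketch in Python of how one memory-touching x86-style instruction might be split into simpler load/compute/store steps. Everything in it is an assumption for illustration: the tuple format, the `tmp0`/`tmp1` register names, and the `decode` function are all made up, and real micro-ops look nothing like this on the inside.

```python
# Toy illustration only: these micro-op names and tuple shapes are made up,
# not Intel's real internal format.

def is_memory(operand):
    """Treat operands written like "[rbx]" as memory references."""
    return operand.startswith("[")


def decode(instruction):
    """Split one x86-style (op, dst, src) instruction into simple micro-ops.

    Each micro-op either touches memory (load/store) or does arithmetic
    on registers, never both, which is the RISC-like property.
    """
    op, dst, src = instruction
    micro_ops = []

    # A memory source is first loaded into a hypothetical temp register.
    if is_memory(src):
        micro_ops.append(("load", "tmp0", src))
        src = "tmp0"

    if is_memory(dst):
        # Read-modify-write on memory becomes load, compute, store.
        micro_ops.append(("load", "tmp1", dst))
        micro_ops.append((op, "tmp1", "tmp1", src))
        micro_ops.append(("store", dst, "tmp1"))
    else:
        # Register-only instructions map to a single micro-op.
        micro_ops.append((op, dst, dst, src))

    return micro_ops


# "add [rbx], rax" turns into three simple steps.
for uop in decode(("add", "[rbx]", "rax")):
    print(uop)
```

The point of the split is that each small step is easy to pipeline and reorder, while the program on the outside still only ever sees the original x86 instruction.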

From this falls many of the bizarre microcode execution bugs that plague modern computers, because when you're doing your optimization on the fly in chip with a second, smaller unix hidden inside your processor eventually you're not going to be cryptographically secure.

RISC is very clearly better for most things. You can find papers stating this as far back as the 70's, when they start doing pipelining for the first time and are like "you know, pipelining is a lot easier if you have a few small instructions instead of ten thousand massive ones."

x86 only persists to this day because Intel cemented their lead and they happened to use x86. True RISC cuts out the middleman of hyperoptimizing microcode on the chip, but if you can't do that because you've girlbossed too close to the sun as Intel had in the late 80's you have to do something.

The Future

This gets us to like the year 2000. I have more chips I find interesting or cool, although from here it's mostly microcontrollers in part because from here it gets pretty monotonous because Intel basically wins for a while. I might pick that up later. Also if this post gets any longer it'll be annoying to scroll past. Here is a sample from a post I have in my drafts since May:

I have some notes on the weirdo PowerPC stuff that shows up here. It's mostly interesting because of where it goes, not what it is. A lot of it ends up in games consoles. Some of it goes into mainframes. There is some of it in space. Really got around, PowerPC did.

I know you said that you have done some script writing for others. I wonder if you have any advice about how to get started writing a script? Also, what was your experience doing that work?

It's writing that demands patience. I struggled for years to master the craft, submit work, face rejection, get close and then get tossed into the wind, lol. Looked for agents... all that stuff.

I've had things optioned and never made. Went through the whole development hell with a producer who wanted me to turn the leads in my horror script white instead of keeping them Black, even though they were based on Black American folklore. Screenwriting is a test of what you are willing to give up in your soul to get things made. I was always a writer who wrote with intention for my culture, and it wasn't conducive to making it for me personally when I was actively doing it. I used to go to Sundance and smell the desperation on everybody, especially Black folks. It's a tough gig, but I did love every moment doing it. It was my passion and I only started pursuing it because I wanted to see Black horror movies being made that centered Black people. I was before my time I guess, because now Black horror is finally in. Then I went back to fiction writing where I could control my content.

All that being said, what I learned is, it's not about the writing, it's really about who you're connected to, who will vouch for you, and if you can get names attached to projects. That was disheartening to learn because we all have these dreams of just being the best writer possible, and that's not how it works unfortunately.

The best advice I can give you just to get started is read all the best scripts that have come out in the last three years. There are websites where you can read and download scripts. There's also scripts floating around the internet. Read tons of scripts. Then read tons more, because you have to know what good writing is and you have to master your own writing voice and you won't learn that until you've read a lot, and then...write a lot of scripts. Lots of scripts. Not five or ten. Not twenty. Thirty at the minimum because you have to master the craft. And then...you have to get connected. Not just writing groups, but going to film festivals and networking with folks who know people. Getting a production job on a TV show or a movie helps more.

There are plenty of good books that teach screenwriting. You may want to check your local college/university to see if there are classes/workshops offered if you are a newbie to screenwriting altogether.

Here is a list of books for beginners that are worth reading. Try to find them at the library first. My writing mentor for screenwriting is on this list. Pilar Alessandra. I worked with her for years to hone my craft, and her book, "The Coffee Break Screenwriter" is chock full of easy exercises to get you started. I know her personally.

Here's an easy litmus test that I always tell people to try to get their feet wet if they think they have a script that might be ready for a test submission. Submit it to the Nicholl Fellowship. It's run by the Academy of Motion Picture Arts and Sciences. (The Oscars folks). It's one of the most prestigious fellowships for screenwriters and they pay you to write for a year. Here's the link:

https://www.oscars.org/nicholl

Pros read the scripts and determine who the Fellows are. They have quarter finalists, and finalists, and then winners. If you can get your script to the quarter finals, you may have what it takes.

The screenwriting software I used was the same Ryan Coogler used when he got started. Final Draft (the industry standard for years). Screenwriting costs money. You have to invest in yourself and your writing. It takes hella time and you have to love writing to endure. I loved it, even when I was rejected for things.

Good luck!

If Donald Trump wins the US presidential election in November, the guardrails could come off of artificial intelligence development, even as the dangers of defective AI models grow increasingly serious.

Trump’s election to a second term would dramatically reshape—and possibly cripple—efforts to protect Americans from the many dangers of poorly designed artificial intelligence, including misinformation, discrimination, and the poisoning of algorithms used in technology like autonomous vehicles.

The federal government has begun overseeing and advising AI companies under an executive order that President Joe Biden issued in October 2023. But Trump has vowed to repeal that order, with the Republican Party platform saying it “hinders AI innovation” and “imposes Radical Leftwing ideas” on AI development.

Trump’s promise has thrilled critics of the executive order who see it as illegal, dangerous, and an impediment to America’s digital arms race with China. Those critics include many of Trump’s closest allies, from X CEO Elon Musk and venture capitalist Marc Andreessen to Republican members of Congress and nearly two dozen GOP state attorneys general. Trump’s running mate, Ohio senator JD Vance, is staunchly opposed to AI regulation.

“Republicans don't want to rush to overregulate this industry,” says Jacob Helberg, a tech executive and AI enthusiast who has been dubbed “Silicon Valley’s Trump whisperer.”

But tech and cyber experts warn that eliminating the EO’s safety and security provisions would undermine the trustworthiness of AI models that are increasingly creeping into all aspects of American life, from transportation and medicine to employment and surveillance.

The upcoming presidential election, in other words, could help determine whether AI becomes an unparalleled tool of productivity or an uncontrollable agent of chaos.

Oversight and Advice, Hand in Hand

Biden’s order addresses everything from using AI to improve veterans’ health care to setting safeguards for AI’s use in drug discovery. But most of the political controversy over the EO stems from two provisions in the section dealing with digital security risks and real-world safety impacts.

One provision requires owners of powerful AI models to report to the government about how they’re training the models and protecting them from tampering and theft, including by providing the results of “red-team tests” designed to find vulnerabilities in AI systems by simulating attacks. The other provision directs the Commerce Department’s National Institute of Standards and Technology (NIST) to produce guidance that helps companies develop AI models that are safe from cyberattacks and free of biases.

Work on these projects is well underway. The government has proposed quarterly reporting requirements for AI developers, and NIST has released AI guidance documents on risk management, secure software development, synthetic content watermarking, and preventing model abuse, in addition to launching multiple initiatives to promote model testing.

Supporters of these efforts say they’re essential to maintaining basic government oversight of the rapidly expanding AI industry and nudging developers toward better security. But to conservative critics, the reporting requirement is illegal government overreach that will crush AI innovation and expose developers’ trade secrets, while the NIST guidance is a liberal ploy to infect AI with far-left notions about disinformation and bias that amount to censorship of conservative speech.

At a rally in Cedar Rapids, Iowa, last December, Trump took aim at Biden’s EO after alleging without evidence that the Biden administration had already used AI for nefarious purposes.

“When I’m reelected,” he said, “I will cancel Biden’s artificial intelligence executive order and ban the use of AI to censor the speech of American citizens on Day One.”

Due Diligence or Undue Burden?

Biden’s effort to collect information about how companies are developing, testing, and protecting their AI models sparked an uproar on Capitol Hill almost as soon as it debuted.

Congressional Republicans seized on the fact that Biden justified the new requirement by invoking the 1950 Defense Production Act, a wartime measure that lets the government direct private-sector activities to ensure a reliable supply of goods and services. GOP lawmakers called Biden’s move inappropriate, illegal, and unnecessary.

Conservatives have also blasted the reporting requirement as a burden on the private sector. The provision “could scare away would-be innovators and impede more ChatGPT-type breakthroughs,” Representative Nancy Mace said during a March hearing she chaired on “White House overreach on AI.”

Helberg says a burdensome requirement would benefit established companies and hurt startups. He also says Silicon Valley critics fear the requirements “are a stepping stone” to a licensing regime in which developers must receive government permission to test models.

Steve DelBianco, the CEO of the conservative tech group NetChoice, says the requirement to report red-team test results amounts to de facto censorship, given that the government will be looking for problems like bias and disinformation. “I am completely worried about a left-of-center administration … whose red-teaming tests will cause AI to constrain what it generates for fear of triggering these concerns,” he says.

Conservatives argue that any regulation that stifles AI innovation will cost the US dearly in the technology competition with China.

“They are so aggressive, and they have made dominating AI a core North Star of their strategy for how to fight and win wars,” Helberg says. “The gap between our capabilities and the Chinese keeps shrinking with every passing year.”

“Woke” Safety Standards

By including social harms in its AI security guidelines, NIST has outraged conservatives and set off another front in the culture war over content moderation and free speech.

Republicans decry the NIST guidance as a form of backdoor government censorship. Senator Ted Cruz recently slammed what he called NIST’s “woke AI ‘safety’ standards” for being part of a Biden administration “plan to control speech” based on “amorphous” social harms. NetChoice has warned NIST that it is exceeding its authority with quasi-regulatory guidelines that upset “the appropriate balance between transparency and free speech.”

Many conservatives flatly dismiss the idea that AI can perpetuate social harms and should be designed not to do so.

“This is a solution in search of a problem that really doesn't exist,” Helberg says. “There really hasn’t been massive evidence of issues in AI discrimination.”

Studies and investigations have repeatedly shown that AI models contain biases that perpetuate discrimination, including in hiring, policing, and health care. Research suggests that people who encounter these biases may unconsciously adopt them.

Conservatives worry more about AI companies’ overcorrections to this problem than about the problem itself. “There is a direct inverse correlation between the degree of wokeness in an AI and the AI's usefulness,” Helberg says, citing an early issue with Google’s generative AI platform.

Republicans want NIST to focus on AI’s physical safety risks, including its ability to help terrorists build bioweapons (something Biden’s EO does address). If Trump wins, his appointees will likely deemphasize government research on AI’s social harms. Helberg complains that the “enormous amount” of research on AI bias has dwarfed studies of “greater threats related to terrorism and biowarfare.”

Defending a “Light-Touch Approach”

AI experts and lawmakers offer robust defenses of Biden’s AI safety agenda.

These projects “enable the United States to remain on the cutting edge” of AI development “while protecting Americans from potential harms,” says Representative Ted Lieu, the Democratic cochair of the House’s AI task force.

The reporting requirements are essential for alerting the government to potentially dangerous new capabilities in increasingly powerful AI models, says a US government official who works on AI issues. The official, who requested anonymity to speak freely, points to OpenAI’s admission about its latest model’s “inconsistent refusal of requests to synthesize nerve agents.”

The official says the reporting requirement isn’t overly burdensome. They argue that, unlike AI regulations in the European Union and China, Biden’s EO reflects “a very broad, light-touch approach that continues to foster innovation.”

Nick Reese, who served as the Department of Homeland Security’s first director of emerging technology from 2019 to 2023, rejects conservative claims that the reporting requirement will jeopardize companies’ intellectual property. And he says it could actually benefit startups by encouraging them to develop “more computationally efficient,” less data-heavy AI models that fall under the reporting threshold.

AI’s power makes government oversight imperative, says Ami Fields-Meyer, who helped draft Biden’s EO as a White House tech official.

“We’re talking about companies that say they’re building the most powerful systems in the history of the world,” Fields-Meyer says. “The government’s first obligation is to protect people. ‘Trust me, we’ve got this’ is not an especially compelling argument.”

Experts praise NIST’s security guidance as a vital resource for building protections into new technology. They note that flawed AI models can produce serious social harms, including rental and lending discrimination and improper loss of government benefits.

Trump’s own first-term AI order required federal AI systems to respect civil rights, something that will require research into social harms.

The AI industry has largely welcomed Biden’s safety agenda. “What we're hearing is that it’s broadly useful to have this stuff spelled out,” the US official says. For new companies with small teams, “it expands the capacity of their folks to address these concerns.”

Rolling back Biden’s EO would send an alarming signal that “the US government is going to take a hands off approach to AI safety,” says Michael Daniel, a former presidential cyber adviser who now leads the Cyber Threat Alliance, an information sharing nonprofit.

As for competition with China, the EO’s defenders say safety rules will actually help America prevail by ensuring that US AI models work better than their Chinese rivals and are protected from Beijing’s economic espionage.

Two Very Different Paths

If Trump wins the White House next month, expect a sea change in how the government approaches AI safety.

Republicans want to prevent AI harms by applying “existing tort and statutory laws” as opposed to enacting broad new restrictions on the technology, Helberg says, and they favor “much greater focus on maximizing the opportunity afforded by AI, rather than overly focusing on risk mitigation.” That would likely spell doom for the reporting requirement and possibly some of the NIST guidance.

The reporting requirement could also face legal challenges now that the Supreme Court has weakened the deference that courts used to give agencies in evaluating their regulations.

And GOP pushback could even jeopardize NIST’s voluntary AI testing partnerships with leading companies. “What happens to those commitments in a new administration?” the US official asks.

This polarization around AI has frustrated technologists who worry that Trump will undermine the quest for safer models.

“Alongside the promises of AI are perils,” says Nicol Turner Lee, the director of the Brookings Institution’s Center for Technology Innovation, “and it is vital that the next president continue to ensure the safety and security of these systems.”

4 Seasons Back Yard Remodel + Crystal Yard

My 4 seasons remodels of the Petz 5 Back Yard are now available for download! And because I went on a bit of a side-quest, I’ve also made a bonus version, a fantasy, crystal back yard!

You can read my creator's notes below:

I somewhat wonder if it's fair to criticize the original Petz 5 playscenes too harshly. It's possible that the development team faced tight deadlines or budget constraints, factors that may not have been entirely within their control. However, regardless of the circumstances, the end result was a disappointingly sloppy product, and it's difficult to ignore some of the glaring flaws. While I can understand that the developers were working with dated software, there are certain flaws that can't be attributed to software limitations. Rather, they seem to reflect a clear lack of attention to detail. Here's what I mean.

The more you look at it, the harder it is to decide which flaw is the worst: the blatant MS paint spray paint "touch-up" in the upper left, the lack of any effort to blend in the skybox, or the missing textures on the roof.

Alright, enough ranting there. None of this is to say my playscenes are perfect either, but they were a labor of love and I hope that this is evident in the final results.

SPRING

I smoothed out the grass texture to give it a more velvety, manicured lawn appearance. I brightened up the dingy looking fence to a brighter white. The original playscene had a hole in the fence, and while it might add "character", I opted to cover over it for a more polished look. I added bushes behind the fence to cover up the skybox and to conceal the bottom of the houses.

Speaking of houses. Wow these needed a big work-up. The texture work (or lack of) on these is just bad. I'm no expert in house construction, but even mostly-brick houses will have some accents like trims to break up the monotony of a fully-brick façade.

Because of how fuzzy the brick texture is in the original, I drew in the mortar lines of the bricks to enhance the texture. I added roof shingles, siding, and trim boards to the house to make it look more like a typical suburban house. Despite these edits, it's still not a "great" house - the way it looks through the windows, it looks like the house is one room lol. I wish I could put better houses in the backdrop but because Tinker doesn't allow me to edit the animated blinds, I'm constrained to keeping them the shape that they are. Oh well. We can use our imagination.

I added landscaping rocks to make the flower bed look nicer. I also added some landscaping details like bushes, garden lights, and string lights for ambiance.

[ Enlarged picture of the garden light I made ]

I also worked to improve the skyboxes in all 4 seasons of the Back Yard playscenes. It would be lengthy to get into the details of all that, but here's a before and after of the night skybox. You got to love them high-quality MS Paint stars in the original.

SUMMER

I had a hard time with the summer one because it was hard to come up with ways to make it look different from the spring version. I did make the grass, bushes, and tree leaves slightly more vibrant. Originally I had some flowers by the bushes but I just wasn't really happy with them. At the last minute, I made the decision to remove them entirely. This makes the playscene a little more "plain" but I think some people may want a more "plain", undecorated version so that they can dress it up how they want with toyz.

FALL

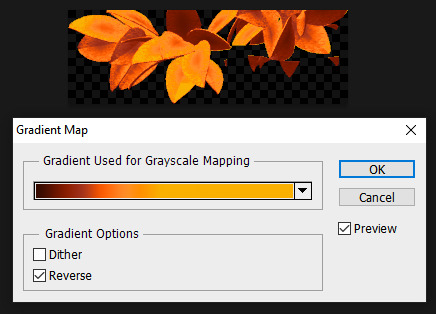

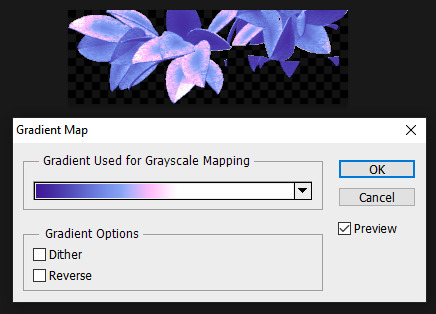

Fall is my favorite season, so this was a joy to make. I toned down the color of the grass and added fall landscaping motifs. Recoloring the tree's leaves was done by using Photoshop's gradient map feature. If time permits, I may do a tutorial on this in the future.

Gradient mapping is a powerful tool for recoloring almost anything. It can give way better results than methods such as hue/saturation, replace color, etc. And thanks to Photoshop actions, applying this recolor to all the animation frames took just a couple of minutes.
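For anyone curious what a gradient map does in principle, here is a rough Python sketch. It is not Photoshop's actual implementation, just the general idea: each pixel's brightness is used to look up a color along a gradient. The brightness weights, the function names, and the `AUTUMN` color stops are all assumptions made up for illustration.

```python
def luminance(rgb):
    """Approximate brightness of an (r, g, b) pixel on a 0.0-1.0 scale (Rec. 601 weights)."""
    r, g, b = rgb
    return (0.299 * r + 0.587 * g + 0.114 * b) / 255.0


def gradient_map(rgb, stops):
    """Replace a pixel with the gradient color found at the pixel's brightness.

    `stops` is a list of (position, (r, g, b)) pairs sorted by position,
    starting at 0.0 and ending at 1.0.
    """
    t = luminance(rgb)
    # Find the pair of neighbouring stops that brackets t and blend between them.
    for (p0, c0), (p1, c1) in zip(stops, stops[1:]):
        if t <= p1:
            f = (t - p0) / (p1 - p0)
            return tuple(round(a + (b - a) * f) for a, b in zip(c0, c1))
    # Guard against tiny floating-point overshoot above 1.0.
    return stops[-1][1]


# Hypothetical fall gradient: dark brown shadows, orange midtones, pale yellow highlights.
AUTUMN = [
    (0.0, (40, 20, 10)),
    (0.5, (200, 90, 20)),
    (1.0, (255, 230, 140)),
]

dark_green = (20, 60, 20)
light_green = (150, 220, 150)
print(gradient_map(dark_green, AUTUMN))
print(gradient_map(light_green, AUTUMN))
```

Because the lookup depends only on brightness, the shading of the original leaves carries over: dark pixels land on the dark end of the gradient and light pixels on the light end. The hue can change completely without losing that shading.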

Unfortunately, the fall leaves look "bright" in the nighttime version of the playscene. There does not seem to be a way to implement a darker version of these leaves for the nighttime playscene. If you look at the sprites in Tinker, you'll see that there are two sets of animations for Leaves A, B, and C, labeled "PropsAd" and "PropsAn", which would lead you to think that the developers originally intended a set of leaves for the daytime and a darker set for the nighttime. I guess the developers scrapped this idea because it does not work in actual gameplay. When I experimented with it, the game appeared to randomly display the nighttime sprite even during the daytime, effectively ruining the intended effect. I'm not sure why it was scrapped: either they had issues coding it properly or they just didn't want to put in the effort to make two sets of leaves.

WINTER

Instead of doing recolored leaves for this scene, I made all the leaves transparent and added holiday lighting to the tree. I know the lights aren't perfect - it was kind of hard to make out which direction a branch was going, so it was hard to maintain 'perfect' perspective.

CRYSTAL YARD

This is a bonus playscene that I made because I got a little side-tracked as I was working on the 4 seasons back yards. This is inspired by the Suramar zone from World of Warcraft, so it has a bit of that fantasy, night-elf feel and color scheme. It's been years since I've played WoW but I still appreciate the enchanting aesthetic of the elven zones.

I used gradient mapping again to recolor the leaves to give it this lavender, shimmery, iridescent look. I did a little bit of gaussian blurring and layer effects to make them look a little more "glowy" than the originals.

As before, Tinker won't let me edit the blinds, so it limited what edits I could do to the houses. I would love it if I could have done curtains instead or something. I did my best to make these houses look a little less suburban and more elven. It's not perfect but it was rough working with what I had.

KNOWN ISSUES / THINGS I COULDN'T EDIT

As far as I'm aware, there is no way to turn off the snow effect for seasons like summer where it wouldn't make sense. This probably involves some code-editing that is beyond my technical skillset.

The winter playscene still has the green grass footprint when your petz walk. The sprites for these are not housed within the .env itself but in the Petz 5 Rez.dll file. It would probably involve a bit of tweaking in the code to switch the sprites to something else.

The fall leaves are "bright" in the night time version because there is no way to implement a second, darker set of leaves.

I cannot edit the blinds animation. Tinker gives you an error when you try to edit this sprite. This unfortunately limits what edits I can make to the house and the fence because of where the sprite is positioned.

If anyone does know of solutions to these, do let me know as I'd love to enhance these scenes further!

ICONS

Making the icons for these was also a fun little project. For some odd reason though, the game puts a stray pixel over them when I import them through LnzPro. I did my best to disguise them but there does not seem to be a way to fix that.

BEFORE / AFTER

With all that rambling out of the way, visit my main page over at Magnolia Road > Resources > Playscenes to download the goodies!


Text

Ganesh Shankar, CEO & Co-Founder of Responsive – Interview Series

New Post has been published on https://thedigitalinsider.com/ganesh-shankar-ceo-co-founder-of-responsive-interview-series/

Ganesh Shankar, CEO and Co-Founder of Responsive, is an experienced product manager with a background in leading product development and software implementations for Fortune 500 enterprises. During his time in product management, he observed inefficiencies in the Request for Proposal (RFP) process—formal documents organizations use to solicit bids from vendors, often requiring extensive, detailed responses. Managing RFPs traditionally involves multiple stakeholders and repetitive tasks, making the process time-consuming and complex.

Founded in 2015 as RFPIO, Responsive was created to streamline RFP management through more efficient software solutions. The company introduced an automated approach to enhance collaboration, reduce manual effort, and improve efficiency. Over time, its technology expanded to support other complex information requests, including Requests for Information (RFIs), Due Diligence Questionnaires (DDQs), and security questionnaires.

Today, as Responsive, the company provides solutions for strategic response management, helping organizations accelerate growth, mitigate risk, and optimize their proposal and information request processes.

What inspired you to start Responsive, and how did you identify the gap in the market for response management software?

My co-founders and I founded Responsive in 2015 after facing our own struggles with the RFP response process at the software company we were working for at the time. Although not central to our job functions, we dedicated considerable time assisting the sales team with requests for proposals (RFPs), often feeling underappreciated despite our vital role in securing deals. Frustrated with the lack of technology to make the RFP process more efficient, we decided to build a better solution. Fast forward nine years, and we’ve grown to nearly 500 employees, serve over 2,000 customers—including 25 Fortune 100 companies—and support nearly 400,000 users worldwide.

How did your background in product management and your previous roles influence the creation of Responsive?

As a product manager, I was constantly pulled by the Sales team into the RFP response process, spending almost a third of my time supporting sales instead of focusing on my core product management responsibilities. My two co-founders experienced a similar issue in their technology and implementation roles. We recognized this was a widespread problem with no existing technology solution, so we leveraged our almost 50 years of combined experience to create Responsive. We saw an opportunity to fundamentally transform how organizations share information, starting with managing and responding to complex proposal requests.

Responsive has evolved significantly since its founding in 2015. How do you maintain the balance between staying true to your original vision and adapting to market changes?

First, we’re meticulous about finding and nurturing talent that embodies our passion – essentially cloning our founding spirit across the organization. As we’ve scaled, it’s become critical to hire managers and team members who can authentically represent our core cultural values and commitment.

At the same time, we remain laser-focused on customer feedback. We document every piece of input, regardless of its size, recognizing that these insights create patterns that help us navigate product development, market positioning, and any uncertainty in the industry. Our approach isn’t about acting on every suggestion, but creating a comprehensive understanding of emerging trends across a variety of sources.

We also push ourselves to think beyond our immediate industry and to stay curious about adjacent spaces. Whether in healthcare, technology, or other sectors, we continually find inspiration for innovation. This outside-in perspective allows us to continually raise the bar, inspiring ideas from unexpected places and keeping our product dynamic and forward-thinking.

What metrics or success indicators are most important to you when evaluating the platform’s impact on customers?

When evaluating Responsive’s impact, our primary metric is how we drive customer revenue. We focus on two key success indicators: top-line revenue generation and operational efficiency. On the efficiency front, we aim to significantly reduce RFP response time – for many, we reduce it by 40%. This efficiency enables our customers to pursue more opportunities, ultimately accelerating their revenue generation potential.

How does Responsive leverage AI and machine learning to provide a competitive edge in the response management software market?

We leverage AI and machine learning to streamline response management in three key ways. First, our generative AI creates comprehensive proposal drafts in minutes, saving time and effort. Second, our Ask solution provides instant access to vetted organizational knowledge, enabling faster, more accurate responses. Third, our Profile Center helps InfoSec teams quickly find and manage security content.

With over $600 billion in proposals managed through the Responsive platform and four million Q&A pairs processed, our AI delivers intelligent recommendations and deep insights into response patterns. By automating complex tasks while keeping humans in control, we help organizations grow revenue, reduce risk, and respond more efficiently.

What differentiates Responsive’s platform from other solutions in the industry, particularly in terms of AI capabilities and integrations?

Since 2015, AI has been at the core of Responsive, powering a platform trusted by over 2,000 global customers. Our solution supports a wide range of RFx use cases, enabling seamless collaboration, workflow automation, content management, and project management across teams and stakeholders.

With key AI capabilities—like smart recommendations, an AI assistant, grammar checks, language translation, and built-in prompts—teams can deliver high-quality RFPs quickly and accurately.

Responsive also offers unmatched native integrations with leading apps, including CRM, cloud storage, productivity tools, and sales enablement. Our customer value programs include APMP-certified consultants, Responsive Academy courses, and a vibrant community of 1,500+ customers sharing insights and best practices.

Can you share insights into the development process behind Responsive’s core features, such as the AI recommendation engine and automated RFP responses?

Responsive AI is built on the foundation of accurate, up-to-date content, which is critical to the effectiveness of our AI recommendation engine and automated RFP responses. AI alone cannot resolve conflicting or incomplete data, so we’ve prioritized tools like hierarchical tags and robust content management to help users organize and maintain their information. By combining generative AI with this reliable data, our platform empowers teams to generate fast, high-quality responses while preserving credibility. AI serves as an assistive tool, with human oversight ensuring accuracy and authenticity, while features like the Ask product enable seamless access to trusted knowledge for tackling complex projects.

How have advancements in cloud computing and digitization influenced the way organizations approach RFPs and strategic response management?

Advancements in cloud computing have enabled greater efficiency, collaboration, and scalability. Cloud-based platforms allow teams to centralize content, streamline workflows, and collaborate in real time, regardless of location. This ensures faster turnaround times and more accurate, consistent responses.

Digitization has also enhanced how organizations manage and access their data, making it easier to leverage AI-powered tools like recommendation engines and automated responses. With these advancements, companies can focus more on strategy and personalization, responding to RFPs with greater speed and precision while driving better outcomes.

Responsive has been instrumental in helping companies like Microsoft and GEODIS streamline their RFP processes. Can you share a specific success story that highlights the impact of your platform?

Responsive has played a key role in supporting Microsoft’s sales staff by managing and curating 20,000 pieces of proposal content through its Proposal Resource Library, powered by Responsive AI. This technology enabled Microsoft’s proposal team to contribute $10.4 billion in revenue last fiscal year. Additionally, by implementing Responsive, Microsoft saved its sellers 93,000 hours—equivalent to over $17 million—that could be redirected toward fostering stronger customer relationships.

As another example of Responsive providing measurable impact, our customer Netsmart significantly improved their response time and efficiency by implementing Responsive’s AI capabilities. They achieved a 10X faster response time, increased proposal submissions by 67%, and saw a 540% growth in user adoption. Key features such as AI Assistant, Requirements Analysis, and Auto Respond played crucial roles in these improvements. The integration with Salesforce and the establishment of a centralized Content Library further streamlined their processes, resulting in a 93% go-forward rate for RFPs and a 43% reduction in outdated content. Overall, Netsmart’s use of Responsive’s AI-driven platform led to substantial time savings, enhanced content accuracy, and increased productivity across their proposal management operations.

JAGGAER, another Responsive customer, achieved a double-digit win-rate increase and 15X ROI by using Responsive’s AI for content moderation, response creation, and Requirements Analysis, which improved decision-making and efficiency. User adoption tripled, and the platform streamlined collaboration and content management across multiple teams.

Where do you see the response management industry heading in the next five years, and how is Responsive positioned to lead in this space?

In the next five years, I see the response management industry being transformed by AI agents, with a focus on keeping humans in the loop. While we anticipate around 80 million jobs being replaced, we’ll simultaneously see 180 million new jobs created—a net positive for our industry.

Responsive is uniquely positioned to lead this transformation. We’ve processed over $600 billion in proposals and built a database of almost 4 million Q&A pairs. Our massive dataset allows us to understand complex patterns and develop AI solutions that go beyond simple automation.

Our approach is to embrace AI’s potential, finding opportunities for positive outcomes rather than fearing disruption. Companies with robust market intelligence, comprehensive data, and proven usage will emerge as leaders, and Responsive is at the forefront of that wave. The key is not just implementing AI, but doing so strategically with rich, contextual data that enables meaningful insights and efficiency.

Thank you for the great interview. Readers who wish to learn more should visit Responsive.



Text

What would Storm Hawks do if they had the internet

Piper

Piper could have done so much more if she'd had the Internet. Instead of searching the Atmosian libraries for information about various crystals, she would have turned to it for help. However, not everything in the books can be found online, so she would still have to go out sometimes. Of the entire squadron, she would be the only one to apply what she learned on the Internet to her work. That's why Piper would only turn to it when she really needed to know something. She would probably also devote herself to reading scientific articles in an effort to create more powerful crystals, spend time studying Atmos cultures, and most likely use her free hours to watch music videos.

Junko

Junko would watch cute cat videos and read popular cooking articles, but it wouldn't improve his cooking skills in any way. When he found a new recipe on the Internet, he would try to make it, but he would still replace the main ingredients with other products. His friends list would include his entire family line, including distant relatives from the farthest reaches of the Atmos. Perhaps in his early days on the Internet, Junko would have believed common myths such as "put your iPhone in the microwave and it will charge in a matter of minutes". If it weren't for Piper explaining to him in more detail how the Internet works, his belief would only have grown stronger.

Finn

Finn would constantly send memes to the group chat, which would make him an indispensable participant in many discussions. He would also actively troll the Rex Guardians on various anonymous forums to attract more attention to himself. At times his lines would go viral and his name would become synonymous with memes, but then they would be quickly forgotten, which at first would be very upsetting for a teenager. In his spare time, he would record guitar covers of his songs and email them to his friends. In addition, Finn would become an avid player of online shooters, where his skill would let him dominate not only the real battlefield but virtual worlds too.

Stork

Stork would use social media only for its intended purpose, considering the Internet a source of unreliable information, and would be afraid that spending too much time online might damage his neurons. He would think it was some kind of mind-control device, and that if he was strapped to a monitor screen, his mind would turn to mush. Perhaps he could develop anti-virus protocols for the special software used by the squadron. But on top of this, his collection of strange phobias would include not only the fear of mind worms but also worries about various network viruses. In general, he wouldn't even come close to a computer, but if he had to, he would probably see some things that would require long-term therapy.

Aerrow

Aerrow would visit the Sky Knight forums on a daily basis to keep up with the latest developments in Atmosia. This would allow him to correspond directly with the Sky Knight Council and know when the Cyclonians were about to make their next move. Perhaps, with Stork's help, he could also create some sort of device to track the movements of enemy forces, so that no one would be caught off guard by a surprise attack. In addition, Aerrow would use the Internet to buy the new crystals Piper needed for her research. Most often, Aerrow would send important surveys to the group chats, the results of which would be requested by the government itself, greatly boosting the squadron's standing.

Radarr

Radarr would be the type of lemur who, if he had access to the Internet, would use it mostly to watch funny shows and animal memes. But Radarr wouldn't know how to use a computer at all, and he wouldn't even know what a keyboard was. In addition, his tail would constantly get caught in the wires, which would only add to the inconvenience in his life. Absolutely everyone in the squadron would like to take a look at his browser history.


Note

Heeeello Anna! :3 I'm back to pester you in the inbox!

You may not have seen this, but this artist ask game... I've got questions for you!

1, 3, 4, 5, 6, 8, 14, 15, 21, 34, 35

(ahahaha... That's a lot.... Ahaha.... Welp ....?)

But! No hurries, and I'm sorry if this eats at your precious time but I'd be happy to hear you talk more about your art! (Can never get enough tbh :3)

Hope you're doing a bit better now, Anna, please take care and you're loved!

Oh, Moon T^T

Hello, and you're right, I didn't even see this artist ask game (or maybe I did some time ago and just don't remember...), and thank you so much for thinking of me and for asking all this T_T I had a lot of fun answering it, and it really distracted me from everything else going on in life, since the last few weeks have been quite difficult. But I'll be fine, and thank you for all of these questions, I appreciate you and your attention so much!

1 what medium do you use most (if applicable, what software)?

Since I draw digitally now, I use Adobe Photoshop. I've been familiar with this software since my early teens, so it's very comfortable for me :D

When I was drawing traditionally, I primarily worked with pencils. I tried watercolour a few times, too. I have a very soft spot for oil - and I hope to develop this one day, too.

3 your favorite piece(s)?

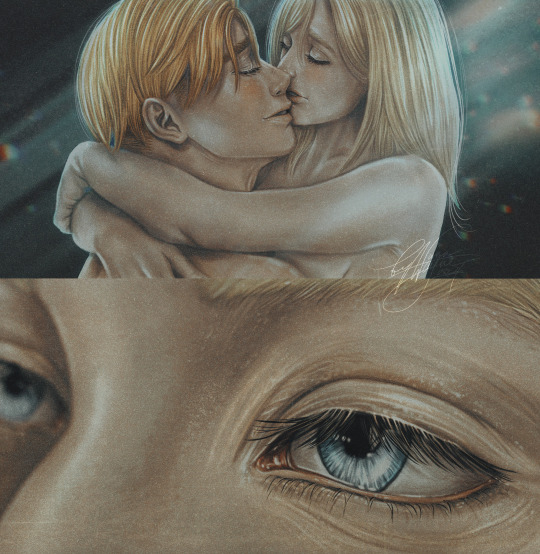

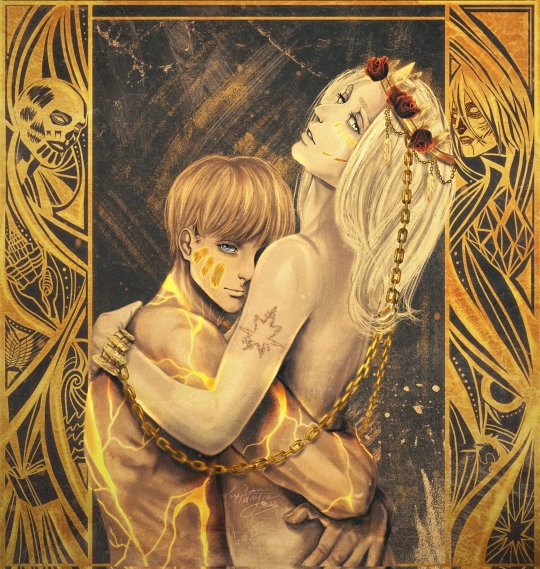

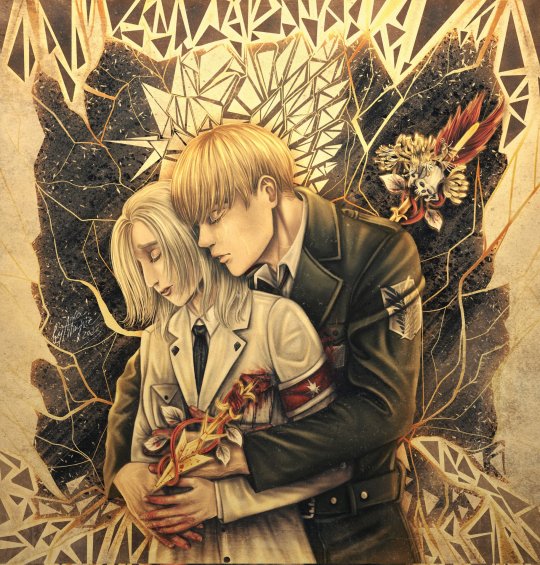

Oh, what a question... I guess, for now, these ones:

4 piece you wish got more love?

All of them :D

I guess, these ones:

5 how would you describe your art style?

(I really want to joke and just refer to question 21, but well, let's take it seriously :D)

I guess, I can call it "cinematic poetry" - there's a very long way to go to really match the title, but this is what I aim for.

6 favorite thing to draw?

Oh, good question, Moon! At the sketch stage, I really love to draw clothes, and when it comes to rendering - faces (primarily eyes and hair, but I love the whole process and always try to improve my face rendering skills...) and backgrounds!

8 thing you struggle to draw?

I think, when it comes to the rendering - HANDS. I think, my sketches of hands are not bad, but when I render them, oh boi, here comes the struggle. As a result, it seems fine, I suppose, but it's always a product of a lot of swearing and redrawing :D

14 what's your favorite thing about drawing?

Telling stories through a different dimension. I like to combine cinematography and literature in my drawings. It sounds a bit funny, because they're two different art forms, but I want to tell stories through the things that unite them: details, emotions, lighting. When you look at the art, it should feel as if you can hear all the vivid noise of life going on around the characters, and the characters themselves speaking, as if they were caught in a moment, and that moment is what I try to show in my drawings. When it's more of a conceptual piece, it can also tell a story, but differently: not as a frozen moment of everyday life, but as a whole story behind the image, told through less obvious details, from the pose to the colour palette itself.

I know I have a long way to go with it, but this is what I'm trying to improve and this is what I always aimed for.

15 least favorite thing about drawing?

Hm, very good question, to be honest. I thought about it for a while, since I'm not sure I can even name such a thing, but I think it's something that is both good and bad - that it's a lifetime commitment, that you have to accept you will be studying your whole life. On one hand, it's wonderful and it's a whole fascinating journey; on the other hand, it can be really frustrating and draining not to be able to transfer the ideas in your head into an actual image, and while a lot of artists realize this, it doesn't make the occasional disappointment any easier. So, I would say, it's both a good and a bad thing, and how we look at it depends on us :D

21 what do you think your artstyle would taste like?

I won't lie, this question sent me into a great reflection, and then I ended up questioning my art style in general, ha-ha-ha... But alright, hm, I think, croissant with orange jam? Don't ask. Just... Croissant. That's it.

34 what's something you still like from your old art?

Oh... Hm, I think from the beginning, they had great composition. I try to improve it now, of course, but I guess it was from the beginning a very great composition and "camera" shot. So this, this for sure!

35 if you had one piece of advice to give your younger artist self, what would it be?

Come, and do it, you're much stronger and braver than you think you are. It counts for many things, to be honest, and not only art, but yes, I would just hug my past self and tell myself that you're much more than you think you are.

Thank you so much, Moon, for all of it - for these questions, for your thoughts to ask me this in the first place and for your interest and support T_T It's priceless, and I thank you from the bottom of my heart.

P.s. I learned with this post that the post has a limited amount of images you can add... I didn't know we could add only 10 images... Woah...


Text

Hire Dedicated Developers in India Smarter with AI

Hire dedicated developers in India smarter and faster with AI-powered solutions. As businesses worldwide turn to software development outsourcing, India remains a top destination for IT talent acquisition. However, finding the right developers can be challenging due to skill evaluation, remote team management, and hiring efficiency concerns. Fortunately, AI recruitment tools are revolutionizing the hiring process, making it seamless and effective.

In this blog, I will explore how AI-powered developer hiring is transforming the recruitment landscape and how businesses can leverage these tools to build top-notch offshore development teams.

Why Hire Dedicated Developers in India?

1) Cost-Effective Without Compromising Quality:

Hiring dedicated developers in India can reduce costs by up to 60% compared to hiring in the U.S., Europe, or Australia. This makes it a cost-effective solution for businesses seeking high-quality IT staffing solutions in India.

2) Access to a Vast Talent Pool:

India has a massive talent pool with millions of software engineers proficient in AI, blockchain, cloud computing, and other emerging technologies. This ensures companies can find dedicated software developers in India for any project requirement.

3) Time-Zone Advantage for 24/7 Productivity:

Indian developers work across different time zones, allowing continuous development cycles. This enhances productivity and ensures faster project completion.

4) Expertise in Emerging Technologies:

Indian developers are highly skilled in cutting-edge fields like AI, IoT, and cloud computing, making them invaluable for innovative projects.

Challenges in Hiring Dedicated Developers in India

1) Finding the Right Talent Efficiently:

Sorting through thousands of applications manually is time-consuming. AI-powered recruitment tools streamline the process by filtering candidates based on skill match and experience.

2) Evaluating Technical and Soft Skills:

Traditional hiring struggles to assess real-world coding abilities and soft skills like teamwork and communication. AI-driven hiring processes include coding assessments and behavioral analysis for better decision-making.

3) Overcoming Language and Cultural Barriers:

AI in HR and recruitment helps evaluate language proficiency and cultural adaptability, ensuring smooth collaboration within offshore development teams.

4) Managing Remote Teams Effectively:

AI-driven remote work management tools help businesses track performance, manage tasks, and ensure accountability.

How AI is Transforming Developer Hiring

1. AI-Powered Candidate Screening:

AI recruitment tools use resume parsing, skill-matching algorithms, and machine learning to shortlist the best candidates quickly.

2. AI-Driven Coding Assessments:

Developer assessment tools conduct real-time coding challenges to evaluate technical expertise, code efficiency, and problem-solving skills.

3. AI Chatbots for Initial Interviews:

AI chatbots handle initial screenings, assessing technical knowledge, communication skills, and cultural fit before human intervention.

4. Predictive Analytics for Hiring Success:

AI analyzes past hiring data and candidate work history to predict long-term success, improving recruitment accuracy.

5. AI in Background Verification:

AI-powered background checks ensure candidate authenticity, education verification, and fraud detection, reducing hiring risks.
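As a very rough illustration of the skill-matching idea from point 1 above, here is a minimal Python sketch. It is not how any particular recruitment platform works. It only scores candidates by the share of required skills they list and keeps those above a cutoff. The function names, the 0.5 threshold, and the sample data are all hypothetical.

```python
def skill_match_score(required, candidate):
    """Fraction of required skills found in the candidate's skill list (0.0 to 1.0)."""
    required_set = {s.strip().lower() for s in required}
    candidate_set = {s.strip().lower() for s in candidate}
    if not required_set:
        return 0.0
    return len(required_set & candidate_set) / len(required_set)


def shortlist(required, candidates, threshold=0.5):
    """Return (name, score) pairs at or above the threshold, best match first."""
    scored = [(name, skill_match_score(required, skills))
              for name, skills in candidates.items()]
    kept = [pair for pair in scored if pair[1] >= threshold]
    return sorted(kept, key=lambda pair: pair[1], reverse=True)


# Hypothetical data for illustration only.
REQUIRED = ["Python", "AWS", "Docker", "SQL"]
CANDIDATES = {
    "candidate_a": ["python", "sql", "docker", "aws"],
    "candidate_b": ["Java", "SQL", "AWS"],
    "candidate_c": ["PHP", "HTML"],
}

for name, score in shortlist(REQUIRED, CANDIDATES):
    print(f"{name}: {score:.2f}")
```

Real screening tools presumably go well beyond keyword overlap (for example, weighting experience or parsing free-text resumes), so treat this only as a picture of the basic filtering step.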

Steps to Hire Dedicated Developers in India Smarter with AI

1. Define Job Roles and Key Skill Requirements:

Outline essential technical skills, experience levels, and project expectations to streamline recruitment.

2. Use AI-Based Hiring Platforms:

Leverage leading AI hiring platforms like LinkedIn Talent Insights and HireVue to source top developers.

3. Implement AI-Driven Skill Assessments:

AI-powered recruitment processes use coding tests and behavioral evaluations to assess real-world problem-solving abilities.

4. Conduct AI-Powered Video Interviews:

AI-driven interview tools analyze body language, sentiment, and communication skills for improved hiring accuracy.

5. Optimize Team Collaboration with AI Tools:

Remote work management tools like Trello, Asana, and Jira enhance productivity and ensure smooth collaboration.

Top AI-Powered Hiring Tools for Businesses

LinkedIn Talent Insights — AI-driven talent analytics

HackerRank — AI-powered coding assessments

HireVue — AI-driven video interview analysis

Pymetrics — AI-based behavioral and cognitive assessments

X0PA AI — AI-driven talent acquisition platform

Best Practices for Managing AI-Hired Developers in India

1. Establish Clear Communication Channels:

Use collaboration tools like Slack, Microsoft Teams, and Zoom for seamless communication.

2. Leverage AI-Driven Productivity Tracking:

Monitor performance using AI-powered tracking tools like Time Doctor and Hubstaff to optimize workflows.

3. Encourage Continuous Learning and Upskilling:

Provide access to AI-driven learning platforms like Coursera and Udemy to keep developers updated on industry trends.

4. Foster Cultural Alignment and Team Bonding:

Organize virtual team-building activities to enhance collaboration and engagement.

Future of AI in Developer Hiring

1) AI-Driven Automation for Faster Hiring:

AI will continue automating tedious recruitment tasks, improving efficiency and candidate experience.

2) AI and Blockchain for Transparent Recruitment:

Integrating AI with blockchain will enhance candidate verification and data security for trustworthy hiring processes.

3) AI’s Role in Enhancing Remote Work Efficiency:

AI-powered analytics and automation will further improve productivity within offshore development teams.

Conclusion:

AI revolutionizes the hiring of dedicated developers in India by automating candidate screening, coding assessments, and interview analysis. Businesses can leverage AI-powered tools to efficiently find, evaluate, and manage top-tier offshore developers, ensuring cost-effective and high-quality software development outsourcing.

Ready to hire dedicated developers in India using AI? iQlance offers cutting-edge AI-powered hiring solutions to help you find the best talent quickly and efficiently. Get in touch today!



Pebble the wearable back from a long death

I started working on Pebble in 2008 to create the product of my dreams. Smartwatches didn’t exist, so I set out to build one. I’m extraordinarily happy I was able to help bring Pebble to life, alongside the core team and community. The company behind it failed but millions of Pebbles in the world kept going, many of them still to this day.

I wear my Pebble every day. It's been great (and I'm astounded it’s lasted 10 years!), but the time has come for new hardware.

You’d imagine that smartwatches have evolved considerably since 2012. I've tried every single smartwatch out there, but none do it for me. No one makes a smartwatch with the core set of features I want:

Always-on e-paper screen (it’s reflective rather than emissive. Sunlight readable. Glanceable. Not distracting to others like a bright wrist)

Long battery life (one less thing to charge. It’s annoying to need extra cables when traveling)

Simple and beautiful user experience around a core set of features I use regularly (telling time, notifications, music control, alarms, weather, calendar, sleep/step tracking)

Buttons! (to play/pause/skip music on my phone without looking at the screen)

Hackable (apparently you can’t even write your own watchfaces for Apple Watch? That is wild. There were >16k watchfaces on the Pebble appstore!)

Over the years, we’ve thought about making a new smartwatch. Manufacturing hardware for a product like Pebble is infinitely easier now than 10 years ago. There are plenty of capable factories and Bluetooth chips are cheaper, more powerful and energy efficient.

The challenge has always been, at its heart, software. It’s the beautifully designed, fun, quirky operating system (OS) that makes Pebble a Pebble.

Today’s big news - Google has open sourced PebbleOS!

PebbleOS took dozens of engineers working over 4 years to build, alongside our fantastic product and QA teams. Reproducing that for new hardware would take a long time.

Instead, we took a more direct route - I asked friends at Google (which bought Fitbit, which had bought Pebble’s IP) if they could open source PebbleOS. They said yes! Over the last year, a team inside Google (including some amazing ex-Pebblers turned Googlers) has been working on this. And today is the day - the source code for PebbleOS is now available at github.com/google/pebble (see their blog post).

Thank you, Google and Rebble! I can't stress enough how thankful I am to Rebble and Google, in general and to a few Googlers specifically, for putting in tremendous effort over the last year to make this happen. You've helped keep the dream alive by making it possible for anyone to use, fork and improve PebbleOS. The Rebble team has also done a ton of work over the years to continue supporting Pebble software, appstore and community. Thank you!

In addition to PebbleOS, we’ve been supporting development of Cobble, an open source Pebble-compatible app for iOS (soon) and Android (works great today, it’s my daily driver).

We’re bringing Pebble back!

I had really, really, really hoped that someone else would come along and build a Pebble replacement. But no one has. So… a small team and I are diving back into the world of hardware to bring Pebble back!

This time round, we’re keeping things simple. Lessons were learned last time! I’m building a small, narrowly focused company to make these watches. I don’t envision raising money from investors, or hiring a big team. The emphasis is on sustainability. I want to keep making cool gadgets and keep Pebble going long into the future.

The new watch we’re building basically has the same specs and features as Pebble, though with some fun new stuff as well 😉 It runs open source PebbleOS, and it’s compatible with all Pebble apps and watchfaces. If you had a Pebble and loved it…this is the smartwatch for you.

More info to come soon! Follow the fun with @ericmigi and @pebble.

Are you like me?

Do you have a hole in your heart (and on your wrist) that hasn't been filled by any other smartwatch?

Sign up to be the first to get one at rePebble.com.

Eric Migicovsky

Pebble Founder

FAQ

When can I buy one?

As soon as we nail down the product specifications and get a firm idea of the production timeline, we'll share it with everyone on the list and invite people to order.

Will it be exactly like Pebble?

Yes. In almost every way.

Aren’t you the guy who screwed this up last time?

Yes, the one and only. I think I’ve learned some valuable lessons.


Which tools must every UI/UX designer master?

Gaining proficiency with the appropriate tools can greatly improve your workflow and design quality as a UI/UX designer. The following are some tools that any UI/UX designer has to know how to use:

1. Design Tools:

Figma: One of the most popular and versatile design tools today. It’s web-based, allowing real-time collaboration, and great for designing interfaces, creating prototypes, and sharing feedback.

Sketch: A vector-based design tool that's been the go-to for many UI designers. It's particularly useful for macOS users and has extensive plugins to extend its capabilities.

Adobe XD: Part of Adobe's Creative Cloud, this tool offers robust prototyping features along with design functionalities. It’s ideal for those already using other Adobe products like Photoshop or Illustrator.

2. Prototyping & Wireframing:

InVision: Great for creating interactive prototypes from static designs. It’s widely used for testing design ideas with stakeholders and users before development.

Balsamiq: A simple wireframing tool that helps you quickly sketch out low-fidelity designs. It’s great for initial brainstorming and wireframing ideas.

3. User Research & Testing:

UserTesting: A platform that allows you to get user feedback on your designs quickly by testing with real users.

Lookback: This tool enables live user testing and allows you to watch users interact with your designs, capturing their thoughts and reactions in real time.

Hotjar: Useful for heatmaps and recording user sessions to analyze how people interact with your live website or app.

4. Collaboration & Handoff Tools:

Zeplin: A tool that helps bridge the gap between design and development by providing detailed specs and assets to developers in an easy-to-follow format.

Abstract: A version control system for design files, Abstract is essential for teams working on large projects, helping manage and merge multiple design versions.

5. Illustration & Icon Design:

Adobe Illustrator: The industry standard for creating scalable vector illustrations and icons. If your design requires custom illustrations or complex vector work, mastering Illustrator is a must.

Affinity Designer: An alternative to Illustrator with many of the same capabilities, but with a one-time payment model instead of a subscription.

6. Typography & Color Tools:

FontBase: A robust font management tool that helps designers preview, organize, and activate fonts for their projects.

Coolors: A color scheme generator that helps designers create harmonious color palettes, which can be exported directly into your design software.

7. Project Management & Communication:

Trello: A simple project management tool that helps you organize your tasks, collaborate with team members, and track progress.

Slack: Essential for team communication, Slack integrates with many design tools and streamlines feedback, updates, and discussion.


an oral history of vocaloid

ive seen a lot of (very misguided) discussion about vocaloid/vsynth in regards to AI voices discourse, so i thought it would be a good idea to sit down and explore vocaloid as a software, as well as mentioning other software of the same genre, to give people who dont really know much a better understanding

first and foremost: i dislike AI voices that are in unregulated spaces right now. actors finding their hard work on some website for anyone to use without compensation is devastating, and shows a lack of respect for the effort it takes in the field.

however, vocaloid has a much longer history that pre-dates these aggregate sites. vocaloid software was first released in 2004, and was initially marketed towards professional musicians. vocaloid's second version of the engine, however, decided to broaden the market towards general consumers, pitching it as helpful software to those who wanted to produce music, but didn't have the personal skill or ability to have someone else sing for their music (range, note holding, etc). amateur musicians wouldn't know how to direct someone to tackle a lyric per se, but using software would be easy to learn and they would learn the terminology associated with certain performance decisions.

in vocaloid 2's era, miku was released. miku's voice provider is Saki Fujita, a well respected voice actress who actually does a lot of work in anime as well as video games! the popularity of miku is its own separate post of history, but the explosive nature of it, i would argue, is the reason that vocaloid and other commercial voice synthesizer software ultimately ended up geared towards all consumers instead of just professional musicians. (crypton and yamaha did absolutely still cater to professional musicians, having private or non released banks only for certain companies/contractors to use though).

flash forward, and technology has developed way further. in 2013, cevio released, and in 2017, synthV debuted. by this point, vocal synthing has expanded from just singing software to also include software intended for just speaking (voiceroid by AHS software) and the idea of an AI bank to improve the quality and clarity of voice banks is becoming more feasible.

however, i wouldnt say the developments in AI voices came strictly from this side of things. in fact, i distinctly remember back in the early 2010s, people were using websites with voice models of characters like glados (portal) and spongebob. these audio posts were seen as novelties, and admittedly theyre fun just to mess around with (and people often find the spongebob rap music that yourboysponge makes to be pretty well done!), but they do lead the way to better developed technology that doesnt compensate the artist...

so back to vocaloid. the thing about vocaloid (and all vocal synthesizers) is that contracts are in place to give appropriate time and compensation, along with permission to even use the person's voice. saki fujita continues to update miku's voicebank because she is being paid well to do so. this can be said for all vocal synth products. because these companies (crypton, ahs software, internet co, etc) specialize in making these tools and products for it, they have the appropriate knowledge on what proper compensation looks like. a random person grabbing a "raiden shogun genshin ai voice" model has none of those things. the voice actress doesnt get money off of that. its stolen work. AI can be used ethically, but it has to be done with regulation.

im leaving out specifics on certain vocaloids/vsynthesizers since its tangential to this post at best, but im making this so people have a better understanding of the history and intended usage of vocal synthesizer software. thank youuuuu


How to Build Software Projects for Beginners

Building software projects is one of the best ways to learn programming and gain practical experience. Whether you want to enhance your resume or simply enjoy coding, starting your own project can be incredibly rewarding. Here’s a step-by-step guide to help you get started.

1. Choose Your Project Idea

Select a project that interests you and is appropriate for your skill level. Here are some ideas:

To-do list application

Personal blog or portfolio website

Weather app using a public API

Simple game (like Tic-Tac-Toe)

2. Define the Scope

Outline what features you want in your project. Start small and focus on the minimum viable product (MVP) — the simplest version of your idea that is still functional. You can always add more features later!
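To make the MVP idea concrete, here is a rough sketch of what the smallest useful version of the to-do list idea might look like in Python. It assumes the only features in scope are adding a task, marking it done, and listing what is left. The names Task, TodoList, add, complete, and pending are made up for this illustration, and there is no saving to disk or user interface yet. Those are the "add later" features.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    # Hypothetical task record: just a title and a done flag.
    title: str
    done: bool = False


@dataclass
class TodoList:
    # Tasks are kept in memory only; persistence is out of scope for the MVP.
    tasks: list[Task] = field(default_factory=list)

    def add(self, title: str) -> Task:
        """Append a new, unfinished task and return it."""
        task = Task(title=title.strip())
        self.tasks.append(task)
        return task

    def complete(self, index: int) -> None:
        """Mark the task at a zero-based index as done."""
        self.tasks[index].done = True

    def pending(self) -> list[str]:
        """Return the titles of tasks that are not done, in insertion order."""
        return [t.title for t in self.tasks if not t.done]


if __name__ == "__main__":
    todo = TodoList()
    todo.add("Write the README")
    todo.add("Push to GitHub")
    todo.complete(0)
    print(todo.pending())  # prints ['Push to GitHub']
```

Once this core works, features such as saving tasks to a file, due dates, or a web front end can be added one at a time without rewriting what already exists.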

3. Choose the Right Tools and Technologies

Based on your project, choose the appropriate programming languages, frameworks, and tools:

Web Development: HTML, CSS, JavaScript, React, or Django

Mobile Development: Flutter, React Native, or native languages (Java/Kotlin for Android, Swift for iOS)

Game Development: Unity (C#), Godot (GDScript), or Pygame (Python)

4. Set Up Your Development Environment

Install the necessary software and tools:

Code editor (e.g., Visual Studio Code, Atom, or Sublime Text)

Version control (e.g., Git and GitHub for collaboration and backup)