#i love quickbasic

Explore tagged Tumblr posts

Text

Get started with QBasic ...

Contents At A Glance

Introduction to QBasic

Using The QBasic Environment

Working With Data

Operators And String Variables

Advanced Input And Output

Making Decisions With Data

Controlling Program Flow

Data Structures

Built-In Functions

Programming Subroutines

Disk Files

Sound And Graphics

Debugging Your Programs

Post #344: Greg Perry & Steven Potts, Crash Course in QBasic, The Fastest Way To Move To QBasic, Second Edition, QUE Corporation, Indianapolis, USA, 1994.

#programming#retro programming#vintage programming#basic#basic programming#education#qbasic#quickbasic#qbasic programming#microsoft#que corporation#i love qbasic#i love quickbasic#dos programming

18 notes

Text

Say, for the sake of argument, you want to make a bad programming language. Why would you do this?

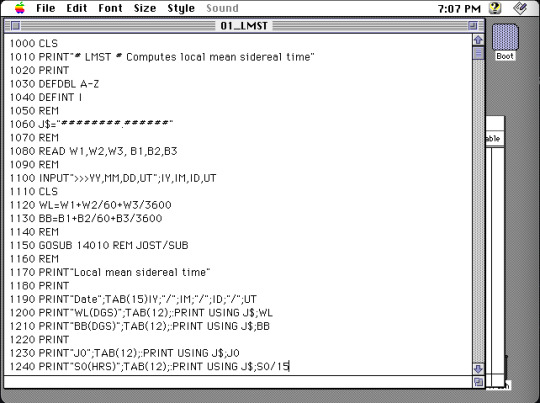

Well, for instance, you might get your hands on a book of scripts to generate ephemera for celestial events, only to find out it was written for Microsoft QuickBasic for Macintosh System 7. You quickly discover that this particular flavor of BASIC has no modern interpreter, and the best you can do is an emulator for System 7 where you have to mount 8 virtual floppy disks into your virtual system.

You could simply port all the scripts to another BASIC, but at that point you might as well just port them to another language entirely, a modern language.

Except QuickBasic had some funky data types. And the scripts assume a 16-bit integer, taking advantage of the foibles of bitfutzery before converting numbers into decimal format. BASIC is very particular -- as many old languages are -- about whitespace.
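To make the 16-bit problem concrete, here is a minimal Python sketch of what a port has to emulate. The post doesn't say which bit tricks the scripts actually rely on, so `to_int16` is a hypothetical helper, not anything from the book. It just re-narrows an unbounded Python int to a signed 16-bit value, which is how a 16-bit BASIC integer would read the same bit pattern.

```python
def to_int16(value: int) -> int:
    """Interpret the low 16 bits of `value` as a signed two's-complement integer."""
    value &= 0xFFFF  # keep only the low 16 bits
    # If the sign bit (bit 15) is set, the pattern represents a negative number.
    return value - 0x10000 if value & 0x8000 else value


# The same bit patterns read very differently once they are squeezed into 16 bits.
print(to_int16(0x7FFF))  # largest positive 16-bit value
print(to_int16(0x8000))  # sign bit only
print(to_int16(0xFFFF))  # all bits set
print(to_int16(~0))      # bitwise NOT of zero, narrowed to 16 bits
```

In a language with unbounded or 64-bit integers, every spot where the original depends on this narrowing needs an explicit call like this, which is exactly where porting mistakes creep in.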

In addition to all this, BASIC programs are not structured like modern programs. They're structured to be written in ed, one line at a time, typing in a numbered index followed by the command. There are no scopes. There are no lifetimes. It's just a loose collection of lines that are hopefully in a logical order.

So sure, I could port all these programs. But I'm sure to make mistakes.

Wouldn't it just be easier, some basal part of my brain says, to write your own language that has some modern amenities, that you compile for your own laptop, that kind of acts like BASIC? A language where you just have to translate particular grammar, and not the whole structure of the program?

Of course it's not easier. But I'm already too far in to quit now.

Memory

Who doesn't love manual memory layout?

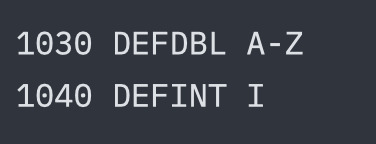

In QuickBasic, memory is "kind of" manual. The DEFINT and DEFDBL keywords let you magically assign types to variables based on the first letter of their names. I don't know how you deallocate things, because all the scripts in this book finish quickly and don't hang around to leak a lot.

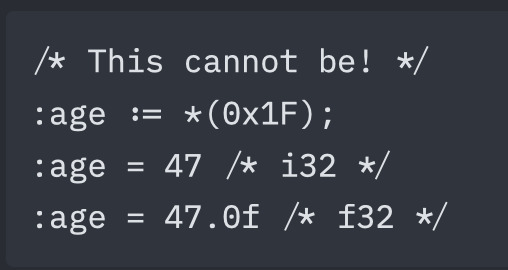

In QuickBasic, this looks like

I guess that the second statement just overrides the first.

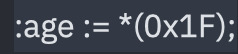

There is no stack in a BASIC program, so there will be no stack in my language. Instead you just give names to locations.

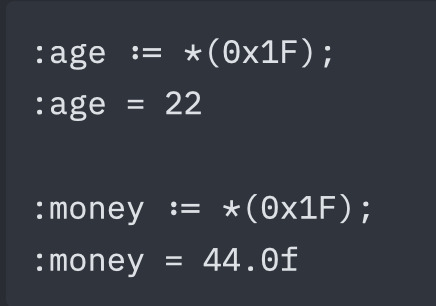

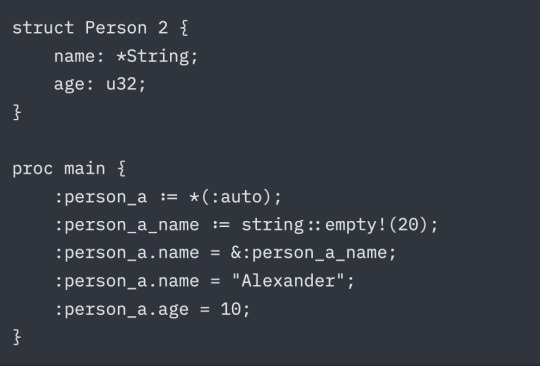

creates a symbol named age and makes it refer to 0x1F. The pointer operator should be obvious, and the walrus means we're defining a symbol (to be replaced like a macro), not doing a value assignment during the execution of the program. Now we can assign a value.

Atoms infer types. age knows it's an int.

You cannot assign a new type to an atom.

However, you can cast between types by creating two atoms at the same address, typed differently.

The language does not convert these, it simply interprets the bits as the type demands.
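The "two atoms at one address" cast can be sketched in Python with the standard `struct` module. This is an illustration, not ZBASIC itself: the flat `memory` buffer, the helper names, and the 32-bit little-endian word are all assumptions, since the post doesn't state the word size.

```python
import struct

memory = bytearray(16)  # hypothetical flat memory; addresses are byte offsets


def store_f32(addr: int, value: float) -> None:
    """Write `value` at `addr` as a 32-bit little-endian float."""
    struct.pack_into("<f", memory, addr, value)


def load_f32(addr: int) -> float:
    """Read the four bytes at `addr` as a 32-bit float."""
    return struct.unpack_from("<f", memory, addr)[0]


def load_u32(addr: int) -> int:
    """Read the same four bytes as an unsigned 32-bit integer."""
    return struct.unpack_from("<I", memory, addr)[0]


store_f32(0, 1.0)
as_float = load_f32(0)  # the "float atom" view of address 0
as_int = load_u32(0)    # the "int atom" view of the very same bytes
print(as_float, hex(as_int))
```

Nothing is converted here: the integer you get back is the IEEE 754 bit pattern of the float, not the number 1.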

Larger types

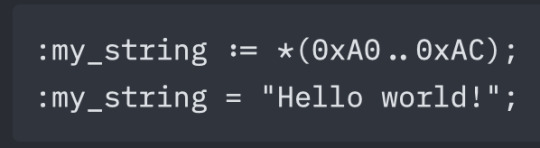

Not all types are a single word. Therefore, you can use the range operator .. to refer to a range of addresses.

Note that strings are stored with an extra byte for their length instead of being null-terminated. Assigning a string that is too long results in a compilation error.

Next and Auto

There are also two keywords to make laying out memory easier. The first is :next, which places the span in the next available contiguous location that is large enough to hold the required size. The second is :auto. The compiler collects all :auto instances and attempts to place each one in an intelligently chosen free location. :auto prefers to align large structs with 64-word blocks, and fills in smaller blocks near other variables that are used in the same code blocks.
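As a rough sketch of the :next placement rule, here is a first-fit search in Python. It reads "next available" as "lowest free address", which is an assumption, and `place_next`, `occupied` and `memory_words` are hypothetical names. The :auto heuristics (64-word alignment, locality) are not modeled.

```python
def place_next(occupied: set, size: int, memory_words: int) -> int:
    """Return the lowest start address of a free contiguous run of `size` words."""
    if size < 1:
        raise ValueError("size must be at least one word")
    run_start, run_len = 0, 0
    for addr in range(memory_words):
        if addr in occupied:
            # An occupied word breaks the current run; restart just after it.
            run_start, run_len = addr + 1, 0
        else:
            run_len += 1
            if run_len == size:
                return run_start
    raise MemoryError("no free contiguous span is large enough")


occupied = {0, 1, 5}  # words already claimed, e.g. by manual locations
small = place_next(occupied, 3, memory_words=16)  # fits in the gap at 2..4
large = place_next(occupied, 4, memory_words=16)  # gap is too small, so it skips past 5
print(small, large)
```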

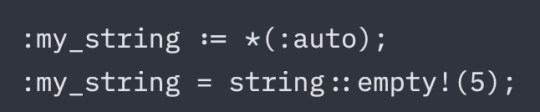

String Allocation

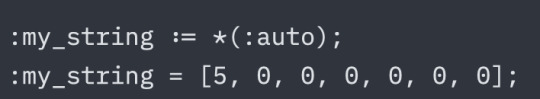

Strings come with a macro to help automatically build out the space required:

This is equivalent to:

That is, a string with capacity 5, a current size of 0, and zeroes (null char) in all spots. This helps avoid memory reuse attacks. ZBASIC is not a secure language, but this is still good practice.
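Here is a small Python model of that layout, using one list cell per slot. The cell order (capacity, then size, then data) and the helper names are assumptions for illustration. In ZBASIC the "too long" check is described as a compile-time error, while this sketch can only raise at run time.

```python
def string_empty(capacity: int) -> list:
    """Model an empty string: [capacity, size, zeroed data cells...]."""
    return [capacity, 0] + [0] * capacity


def string_assign(cells: list, text: str) -> None:
    """Store `text` in place, zero-filling the unused tail of the data area."""
    capacity = cells[0]
    if len(text) > capacity:
        raise ValueError("string is too long for its allocation")
    cells[1] = len(text)  # current size
    for i in range(capacity):
        cells[2 + i] = ord(text[i]) if i < len(text) else 0


s = string_empty(5)
print(s)  # capacity 5, size 0, five zeroed cells
string_assign(s, "hi")
print(s)  # size is now 2; the unused tail stays zeroed
```

Zero-filling the tail on every assignment means a shorter string never leaves characters of the previous one behind.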

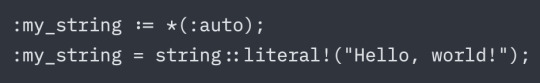

There is also another macro that is similar to a "string literal".

Verbose and annoying! Just like BASIC.

Array Allocation

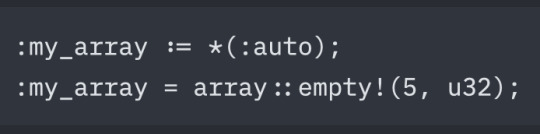

Likewise, arrays have a similar macro:

This expands in a similar way to strings, with a capacity word and a size word. The difference here is that the type given may change the actual size of the allocation. Giving a type that is larger than a single word will result in a larger array. For instance, f64 takes up two words on some systems, so array::empty!(5, f64) will allocate 10 words in that case (5 * 2). Larger structs will likewise take up even more space. Again, all this memory will be zeroed out.
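The sizing rule is simple arithmetic, sketched below in Python. The `WORDS_PER_TYPE` table is hypothetical (the post only says f64 is two words on some systems), and putting the capacity and size words in front of the payload is an assumption about the layout.

```python
# Hypothetical words-per-element table for one target system.
WORDS_PER_TYPE = {"int": 1, "f64": 2}


def array_empty(capacity: int, type_name: str) -> list:
    """Model an empty array: [capacity, size] followed by a zeroed payload."""
    payload_words = capacity * WORDS_PER_TYPE[type_name]
    return [capacity, 0] + [0] * payload_words


ints = array_empty(5, "int")
floats = array_empty(5, "f64")
print(len(ints) - 2)    # payload words for five one-word elements
print(len(floats) - 2)  # payload words for five two-word elements
```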

Allocation order

As an overview, this is the order that memory is assigned during compilation:

Manual Locations -> Next -> Auto

Manual locations are disqualified from eligibility for the Next and Auto phases, so a manual location will never accidentally reference any data allocated through :next or :auto.

Here is an example:

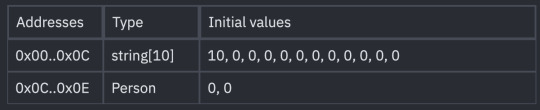

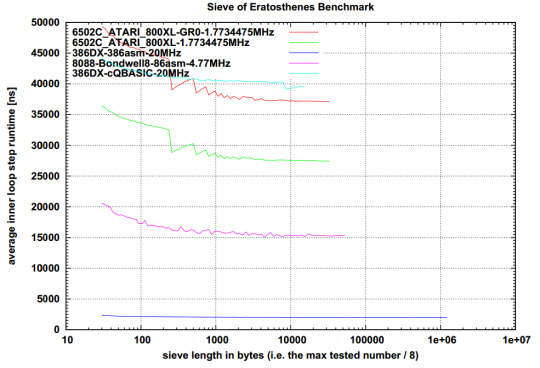

This produces the initial layout:

Which, after the code is run, results in the memory values:

Note that types are not preserved at runtime. They are displayed in the table as they are for convenience. When you use commands like "print" that operate differently on different types, they will be replaced with one of several instructions that interpret that memory as the type it was at compile-time.

Truly awful, isn't it?

3 notes

Note

as a first principles type of person. I saw the "effective for getting good fast" part and my immediate assumption was you were talking about the "trying to understand the most complex thing" being the better one, I think because I'm a math girl in an engineering world (degree) ie being put in a hostile environment (reality) where trying to find first principles is so fucking hard (we can never TRULY isolate and fix variables of the universe and it upsets me can't the electrons STAY IN PLACE while we look at them?)

thank you for this insight because truly the caveat is that it probably depends more on how available the pedagogy of one or the other is. in hexcasting specifically all of the first principles are there, you get a book with them, and if i were a patient person (i am not) i could work my way up to complex castings.

i think dismantling complexity does get you somewhat good much faster, whereas first principle derivation gets you really good somewhat slower with less gaps in knowledge and a deeper understanding since you necessarily must fully grasp the principles to build out with them. it's fun and sexy to turn yourself into a markov chain of intelligent input/output without comprehending the values of each part but boy howdy does it cause me problems. but i suppose it's not always possible to reinvent geometry just to truly work from the first (<- says a person who quit a geometry textbook last year because i refused to look up the originating proof of a problem about congruent lines and triangle orthocenters bc i couldn't reinvent geometry in a week).

i suppose ideally one would just... balance the approaches but also i may not be patient but i'm incredibly stubborn. and that's how you end up learning to write code in a language you don't even know the name of

#peter answers#incidentally this is how i became a quickbase dev but start sobbing wailing weeping etc every time someone tries to make me use pipelines#it might be SQL that i know bits of? i hope it's sql bc i really like the syntax of whatever QB formulas run in#genuinely i love this ask so much i love thinking about these things. taxonomy is my favorite sin#also your point about isolating first principles perfectly illustrates why taxonomy is inherently sinful which delights me

2 notes

Photo

Was 8-bit Atari (6502) faster than IBM PC (8088)?

I was silent for a while as some things required my attention more than old computers. We extended the list of implementations of our Sieve Benchmark and it now supports even the 6502. It was developed on an Atari 800XL without any modern hardware or software (it’s written in ATMAS II macro-assembler). And it was a pain.

We all remember the lovely days of being young and playing with these simple computers, when programming was often the best way to spend time. These nostalgic memories don’t tell the truth about how horribly inefficient development was on these machines compared with what came a decade later. David told me that his productivity was about 20 times lower than when developing an assembly program of similar complexity and size on a PC. These were some of the biggest reasons, for your entertainment:

It was easy to fill the whole available memory with the source code text. At one point only 100 more characters could be added to the text buffer, but about 2000 were needed. As a result, multiple parts were optimized for source code length (except, of course, the sieve routine itself, which was optimized for speed).

In the end, it was necessary to split the source code into two parts anyway.

Unlike with PCs, the Atari keyboard doesn’t support roll-over on standard keys. It is necessary to release the key before pressing another one (otherwise the key-press is not properly recognized).

Having a disk drive was a big advantage over tapes. However, the implementation on Atari was very slow and everything took an incredible amount of time. Boot into editor? 20 seconds. Loading the first part of the source code? 5 seconds. Loading the second part? 30 seconds. Storing it back? 60 seconds (for just 17 KB). You needed about 160 seconds before trying to run the program after every larger change (including 20 seconds for compiling). Often a minute more if the program crashed the whole computer.

Although David never started to use “modern” features like syntax highlighting and code completion and he still programs mostly in the 80x25 text-mode, he said that this was too much for him so I don’t think we will repeat this again soon.

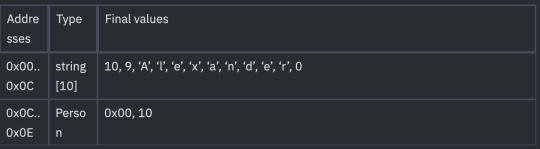

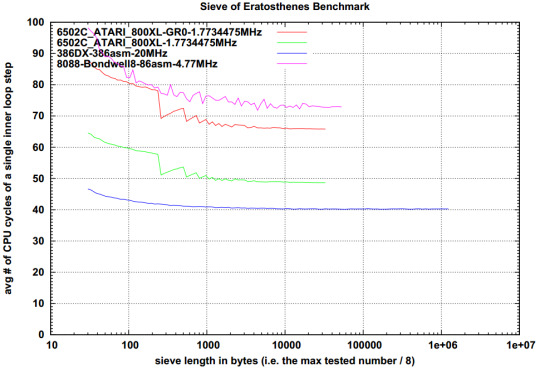

Regarding the results: A 1.77-MHz MOS 6502 in Atari 800XL/XE (PAL) required about 66 CPU cycles to go through a single inner-loop step. If graphics output was disabled during the test, this decreased to just 49 CPU cycles. A 4.77-MHz Intel 8088 needed about 73 CPU cycles to do the same. Thus, the 6502 is faster when running at the same clock.

On the other hand, the original IBM PC is clocked 2.7x higher than the Atari and 4.7x higher than other important 6502 machines (Apple IIe, Commodore 64). Thus, the IBM PC was at least twice as fast at this type of task (similar to compiling, XML parsing...). I’m not surprised, but it is nice to see the numbers.
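The comparison can be worked through by converting cycles per inner-loop step into time per step. The short Python sketch below uses only the cycle counts and clock speeds quoted above; the function name is made up for illustration, and the printed ratios are rounded.

```python
def microseconds_per_step(cycles: float, clock_mhz: float) -> float:
    """Cycles divided by MHz gives microseconds (1 MHz = 1 cycle per microsecond)."""
    return cycles / clock_mhz


atari = microseconds_per_step(66, 1.77)         # Atari 800XL, graphics on
atari_no_gfx = microseconds_per_step(49, 1.77)  # Atari 800XL, graphics off
pc = microseconds_per_step(73, 4.77)            # IBM PC, Intel 8088

print(f"Atari: {atari:.1f} us, Atari (no graphics): {atari_no_gfx:.1f} us, PC: {pc:.1f} us")
print(f"PC speedup vs Atari: {atari / pc:.2f}x")
print(f"PC speedup vs Atari without graphics: {atari_no_gfx / pc:.2f}x")
```

So the 8088 needs more cycles per step, but its higher clock more than makes up for it: roughly 2.4x faster than the Atari with graphics enabled, and roughly 1.8x with graphics disabled.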

Interestingly, the heavily optimized assembly code running on the Atari provides the same performance as compiled BASIC (MS QuickBasic 4.5) running on a 20MHz 386DX (the interpreted version would be three times slower). This was one of the fastest BASICs out there, so it gives you a good perspective on how these high-level languages killed performance back then.

David spent a lot of time optimizing the code for both CPUs and used the available tricks for each architecture (like self-modifying code...). If you feel that your favorite CPU should have been faster, you can download the project folder and check the source code. If you create a faster version, please send it to me (but please read the README first, especially the part called “Allowed tricks”).

Also if somebody is able to port the code to Commodore 64, it would be nice to compare the results with Atari (only the disk access, timer and console input/output need to be rewritten). Any expert/volunteer? @commodorez ? ;)

Sieve Benchmark download (still no website... shame on me)

34 notes

Video

Chihuahua and Kitty Cat love fur brushing

Chihuahuas and kitty cats may seem like unlikely friends, but it turns out that these two very different animals have something in common: a love for fur brushing. Both breeds are known for their long and luxurious coats, and regular grooming is essential to keep them looking and feeling their best. But it's not just about maintaining their appearance, it's also an opportunity for bonding between pets and their owners.

Chihuahuas, with their long and silky coats, need to be brushed regularly to prevent matting and tangling. This is especially important for long-haired varieties. Brushing not only keeps their coats healthy, but it also helps to distribute natural oils throughout the coat, which helps to keep it shiny and soft.

Kitty cats, on the other hand, have short and sleek coats that may not require as much brushing as a Chihuahua. However, regular grooming is still essential to keep their coat shiny and to remove loose hair. Cats are known for their fastidious grooming habits, but even they can miss a spot or two. Brushing is a great way to ensure that your cat's coat stays in tip-top shape.

Grooming not only keeps your pets looking and feeling great, but it also provides an opportunity for bonding between pets and their owners. It's a great way to spend time together and to strengthen the bond between you and your furry friend. And it's not just about the pets, grooming can also be a great stress-reliever for their owners as well.

Both Chihuahuas and kitty cats love the attention they get during grooming sessions, and they may even come to look forward to it. For example, when a Chihuahua is being brushed, they may start to wag their tail or even lay down and roll over, enjoying the sensation. Cats may even start to purr or lean into the brush as you groom them.

In conclusion, grooming is a great way to bond with your pets, keep them looking and feeling great, and it's also a fun activity that both Chihuahua and kitty cats enjoy. Whether you're a Chihuahua owner or a cat parent, regular grooming is an essential part of responsible pet ownership. And who knows, maybe your Chihuahua and kitty cat will become best friends furever.

respond to this opinion: Adding to the point, automation can improve the change process in project management in several ways. Automated change request forms and workflows can speed up the process of submitting, reviewing, and approving changes, reducing delays and improving efficiency. Similarly, automated notifications, alerts, and email reminders can help ensure that all team members and stakeholders are aware of changes and can provide input and feedback promptly (Quickbase, 2023). The automation process can reduce errors, improve accuracy, and improve compliance and auditing. An effective change management system can provide better data and insights for the team and management to make informed decisions.

I completely agree that automation can greatly improve the change process in project management. Automated change request forms and workflows can streamline the process and reduce delays, allowing for more efficient and timely decision making. Automated notifications and alerts can also ensure that all team members are informed and able to provide input and feedback in a timely manner. Additionally, automation can reduce errors, improve accuracy, and ensure compliance and auditing. The ability to gather and analyze data through an automated system can also provide valuable insights for the team and management to make informed decisions. Overall, automation can greatly enhance the change management process and lead to more successful project outcomes.

what's the alternative?

The alternative to automating the change process in project management is a manual process. This can include manually submitting and reviewing change requests, manually sending notifications and alerts to team members, and manually tracking and documenting changes. This method can be time-consuming, prone to errors, and may not provide the same level of data and insights as an automated system. Additionally, a manual process can make it harder to ensure compliance and auditing, as well as make it hard to keep all team members informed in a timely manner.

Manual processes can also lead to delays in decision making and slow progress on the project. It can also lead to more human errors and some team members can be missed in the communication process.

Manual processes can also be challenging for remote teams and teams that are spread out across different locations. It can be harder to keep everyone on the same page and ensure that everyone is aware of the latest updates and changes.

It's important to note that a manual process can still be used in small or simple projects, but in large and complex projects, automation is the better option.

write a captivating and motivating speech about How to Monetize Social Media Posts. Give examples of success stories and how they did it. grab the attention of the audience. focus on audience retention.

Good evening everyone,

Are you tired of creating content on social media without seeing any financial return? Are you ready to turn your online presence into a profitable venture? Well, I have great news for you. Monetizing your social media posts is not only possible, but it's also easier than you think.

First, let's take a look at some success stories. Have you heard of the Instagram sensation, @thefatjewish? He turned his hilarious and relatable memes into a multi-million dollar brand, with deals and collaborations with major companies such as Bud Light and Comedy Central.

Or what about the YouTube sensation, Zoella? She started her channel with beauty and lifestyle content, and has now built a successful brand with her own line of products and a bestselling book.

These examples show us that with the right strategy and approach, anyone can monetize their social media posts. So, how do you do it?

First, you need to identify your niche and target audience. What are you passionate about and what kind of content do your followers enjoy? Once you have a clear understanding of this, you can create content that resonates with your audience and provides value to them.

Next, you need to build a loyal following. This means consistently creating high-quality content, engaging with your followers, and providing them with a reason to come back to your page.

Now, it's time to monetize your following. There are several ways to do this, such as sponsored posts, affiliate marketing, and selling your own products or services. But the key is to do it in a way that is authentic and doesn't sacrifice the value and integrity of your content.

Remember, building a successful brand on social media takes time and effort, but with a solid strategy and a consistent approach, you too can turn your online presence into a profitable venture.

So, don't wait any longer. Start creating valuable content and building a loyal following today. Who knows, you could be the next @thefatjewish or Zoella.

Thank you.

0 notes

Link

How can I find the typical cost of developers for a range of platforms in a certain space? For example, we are now evaluating different low-code platforms such as Zoho Creator, Quickbase and Caspio to build our BI and CRM upon. One of the criteria I think is important to evaluate is how many developers work with each platform, how good they are and what they charge. I checked Upwork and got a sense for each platform, but what I would really love is a website or report that lists all the platforms and the relevant stats based on scraping various job sites and perhaps even surveying business users. Does anyone know where I can find something like this?

0 notes

Text

The QuickBasic programming language ...

Post #239: Wikipedia, Microsoft QuickBasic, History, Features, Compatibility, QuickBasic Today, 2023.

#retro programming#programming#basic#vintage programming#education#basic programming#i love programming#i love basic#i love vintage tech#i love retro#i love coding#quickbasic#programmierung#computer programming#microsoft

3 notes

Text

One of the best websites on QBasic and QuickBasic ...

Post #141: Link - WWW.ANTONIS.DE, 1.336.394 visitors since 2000, 2023.

#retro programming#programming#basic#basic programming#vintage programming#ilovebasic#iloveprogramming#education#gwbasic#ilovegwbasic#qbasic tutorial#qbasic#quickbasic#antonis#microsoft#i love retro#i love coding#i love basic#basic is fun#basic for kids

3 notes

Text

Pete's QBasic / QuickBasic Site ...

Post #118: Link - Pete's QBasic / QuickBasic Site, 2022.

#basic#i love gwbasic#qbasic#quickbasic#basic for ever#programmieren#programming#i love coding#coding#coding for kids#coding is fun#i love programming#microsoft#retro programming#vintage programming

1 note

Text

Book tip for retro fans #20 ...

QBasic is a part of MS-DOS 5; QuickBasic is the separately sold version of this extremely simple and easy-to-learn programming language. The book offers a comprehensive introduction for beginners of both versions and provides a clear introduction to the handling of commands, functions and programs.

Post #117: Book tip for retro fans #20, Anatol Gardner, QBasic und QuickBasic, Der schnelle Weg zum erfolgreichen Programmieren, DTV, C.H. Beck Verlag, München, 1992.

#basic#qbasic#quickbasic#programming#programmieren#coding#coding for kids#coding is fun#programming language#i love basic#basic for ever#i love coding#i love programming#beck verlag#deutscher taschenbuch verlag#retro programming#retro coding#vintage programming#microsoft#ms dos

1 note

Text

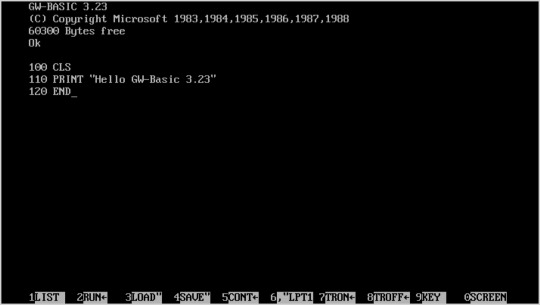

Von BASICA und GW-BASIC zu QBASIC ...

„Da IBM den Einsatz von BASICA-ROM-Chips den allerorten zahlreichen auftauchenden Herstellern von PC-Nachbauten (Clones) nicht gestattete, entwickelte der amerikanische Software-Hersteller MICROSOFT einen eigenen, zu BASICA (Advanced BASIC) in hohem Maße kompatiblen BASIC-Interpreter, der von der Diskette oder Festplatte geladen werden konnte: das GW-BASIC. Bis heute befindet sich das erstmals 1983 erschienene Programm GW-BASIC.EXE stets im Lieferumfang aller Versionen des Betriebssystems MS-DOS. Die letzte Version von GW-BASIC erschien mit der Versionsnummer 3.23 im Jahre 1988.

Bereits im Jahre 1985 brachte Microsoft ein völlig neu konzipiertes BASIC auf den Markt: QUICK-BASIC. QUICK-BASIC ist mit einem Compiler ausgestattet, der die Programmanweisungen in Maschinencode übersetzen kann. Dadurch lassen sich Programme erzeugen, die unmittelbar von der DOS-Ebene aus gestartet werden können - sogenannte EXE-Dateien. Der maschinennahe Programmcode der selbstständig laufenden EXE-Dateien wird vom Computer sehr schnell verarbeitet. Neben vielen anderen Vorteilen hat dies eine schnellere Programmausführung zur Folge. Im Gegensatz dazu mussten die Interpretersprachen BASICA und GW-BASIC jeden einzelnen Befehl der Reihe nach lesen, analysieren und schließlich durch ein entsprechendes Maschinenprogramm abarbeiten. Die Ausführungszeiten von Programmen sind dadurch entsprechend lange.

Eine wesentliche Neuerung gegenüber den Basic-Vorgängern stellt die Programmiersprache von QUICK-BASIC selbst dar. Sie ist gegenüber den herkömmlichen BASIC-Dialekten um viele leistungsstarke Befehle und Funktionen bereichert worden. Mit QUICK-BASIC lassen sich sowohl alle klassischen Basic-Befehle ausführen, als auch eine große Anzahl von Anweisungen zur strukturierten Programmierung, wie man sie bisher nur von den Programmiersprachen PASCAL oder C kannte. Damit hat das außerordentlich flexible QUICK-BASIC mehr als jede andere Computersprache das Zeug zur Allroundsprache. Bei allem Leistungszuwachs ist QUICK-BASIC dennoch weiterhin eine unkomplizierte, leicht verständliche Computersprache geblieben.

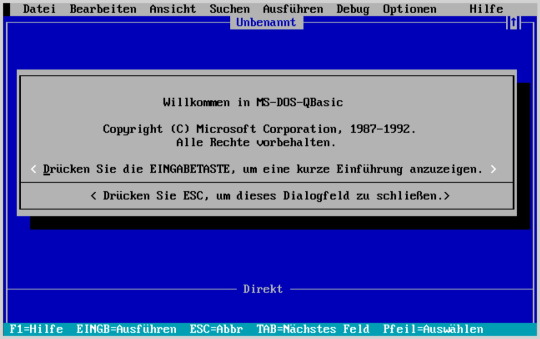

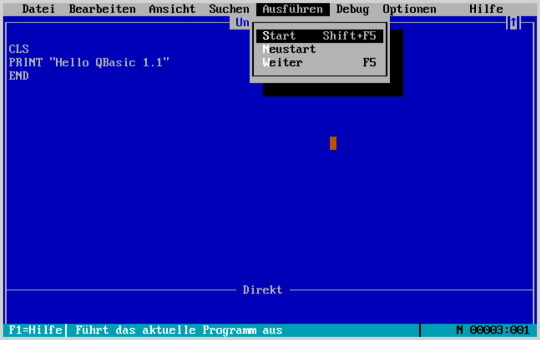

QBASIC wird von Microsoft mit dem MS-DOS-Betriebssystem ab Version 5.0 mitgeliefert. Auf den ersten Blick ist QBASIC dem QUICK-BASIC in seiner letzten Version 4.5 zum Verwechseln ähnlich. Tatsächlich handelt es sich auch bei QBASIC um eine vollwertige Version des QUICK-BASIC 4.5, bei der lediglich der in QUICK-BASIC implementierte Compiler weggelassen wurde. Das bedeutet, dass man mit QBASIC im Unterschied zu QUICK-BASIC 4.5 keine selbstständig ablaufenden EXE-Dateien generieren kann.

In den wesentlichen Eigenschaften ist QBASIC 1.1 nahezu identisch mit der vollständigen Version von QUICK-BASIC 4.5 geblieben. So ist der Befehlssatz von QUICK-BASIC 4.5 bis auf einige wenige Einschränkungen vollständig in QBASIC übernommen worden. Das bedeutet, dass nahezu alle QBASIC-Programme auch unter QUICK-BASIC 4.5 lauffähig sind und umgekehrt. Ebenso ist die Programmierumgebung (IDE) von QBASIC bis auf die Menüpunkte zur Steuerung der Kompilation nahezu identisch mit der Programmierumgebung von QUICK-BASIC.“

From BASICA and GW-BASIC to QBASIC

“Since IBM did not allow the numerous manufacturers of PC clones that were appearing everywhere to use BASICA ROM chips, the American software manufacturer MICROSOFT developed its own BASIC interpreter, highly compatible with BASICA (Advanced BASIC), that could be loaded from floppy or hard disk: GW-BASIC. To this day, the GW-BASIC.EXE program, which first appeared in 1983, is included with all versions of the MS-DOS operating system. The last version of GW-BASIC appeared with version number 3.23 in 1988.

As early as 1985, Microsoft launched a completely redesigned BASIC: QUICK-BASIC. QUICK-BASIC is equipped with a compiler that can translate program instructions into machine code. This allows programs to be created that can be started directly from the DOS level - so-called EXE files. The machine-level code of the stand-alone EXE files is processed very quickly by the computer. Among many other advantages, this results in faster program execution. In contrast, the interpreted languages BASICA and GW-BASIC had to read each individual command in turn, analyze it, and finally process it using a corresponding machine-code routine. Program execution times are correspondingly long.

The programming language of QUICK-BASIC itself represents a major innovation compared to the BASIC predecessors. Compared to the conventional BASIC dialects, it has been enriched with many powerful commands and functions. With QUICK-BASIC, all classic Basic commands can be executed, as well as a large number of instructions for structured programming, previously only known from the programming languages PASCAL or C. This means that the extraordinarily flexible QUICK-BASIC has what it takes to become an all-round language more than any other computer language. With all the increase in performance, QUICK-BASIC has remained an uncomplicated, easy-to-understand computer language.

QBASIC is supplied by Microsoft with the MS-DOS operating system from version 5.0. At first glance, QBASIC is confusingly similar to QUICK-BASIC in its last version 4.5. In fact, QBASIC is also a fully-fledged version of QUICK-BASIC 4.5, in which only the compiler implemented in QUICK-BASIC has been left out. This means that with QBASIC, in contrast to QUICK-BASIC 4.5, you cannot generate any independently running EXE files.

QBASIC 1.1 has remained almost identical to the complete version of QUICK-BASIC 4.5 in its essential features. The command set from QUICK-BASIC 4.5 has been completely taken over into QBASIC with a few restrictions. This means that almost all QBASIC programs can also be run under QUICK-BASIC 4.5 and vice versa. Likewise, the programming environment (IDE) of QBASIC is almost identical to the programming environment of QUICK-BASIC, except for the menu items for controlling the compilation.”

Post #109: Anatol Gardner, Qbasic und Quick-Basic, Der schnelle Weg zum erfolgreichen Programmieren, Beck EDV-Berater, Deutscher Taschenbuch Verlag (DTV), 425 Seiten, 1992.

#basic#gw-basic#qbasic#quickbasic#basica#basic for ever#coding#programmieren#programming#i love basic#i love programming#i love coding#microsoft#education#informatik#coding for kids#coding is fun

1 note
