#just open the code editor and show me how to structure a project already...


the worst part of learning any coding language from an official resource is when it thinks you want anything to do with the command line


Péguy

Hi everybody! In this news feed I've mentioned a project I named Péguy a few times. Today I'm dedicating a whole article to it, both to present it in more detail and to show you the new features I added at the beginning of winter. It's not my priority project (right now that's TGCM Comics), but I needed a little break during the holidays, and coding vector graphics and 3D is a bit addictive, like playing with Lego. x) Let's go!

Péguy, what is it?

It is a procedural generator of patterns, graphic effects and other scenery elements that speeds up the production of the drawings for my comics. Basically, I enter a few parameters, click a button, and my program generates a more or less regular pattern on its own. The first lines of code were written in 2018, and since then the tool has been steadily enriched and has helped me work faster on my comics. :D The project is written in web languages and generates vector patterns in SVG format. In the beginning it was just small scripts that I had to edit directly to change the parameters, and that had to be run individually for each effect or pattern.

Not very user friendly, is it? :’D
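To make "enter a few parameters, get a pattern" a bit more concrete, here is a minimal sketch of a procedural SVG pattern generator. It is written in Java rather than the web languages Péguy actually uses, and the class `SvgDotGrid` and its parameters (columns, rows, spacing, dot radius) are hypothetical: it simply emits a regular grid of dots as SVG markup.

```java
import java.util.Locale;

/** Hypothetical sketch: a parameterised dot-grid pattern emitted as SVG markup. */
final class SvgDotGrid {

    /**
     * Builds an SVG document containing cols x rows circles.
     *
     * @param cols    number of dots per row
     * @param rows    number of rows
     * @param spacing distance between dot centres, in SVG user units
     * @param radius  radius of each dot
     */
    static String render(int cols, int rows, double spacing, double radius) {
        double width = cols * spacing;
        double height = rows * spacing;
        StringBuilder svg = new StringBuilder();
        // Locale.ROOT guarantees a '.' decimal separator whatever the system locale.
        svg.append(String.format(Locale.ROOT,
                "<svg xmlns=\"http://www.w3.org/2000/svg\" width=\"%.1f\" height=\"%.1f\">%n",
                width, height));
        for (int row = 0; row < rows; row++) {
            for (int col = 0; col < cols; col++) {
                // Centre each dot inside its spacing x spacing cell.
                double cx = (col + 0.5) * spacing;
                double cy = (row + 0.5) * spacing;
                svg.append(String.format(Locale.ROOT,
                        "  <circle cx=\"%.1f\" cy=\"%.1f\" r=\"%.1f\"/>%n", cx, cy, radius));
            }
        }
        svg.append("</svg>\n");
        return svg.toString();
    }

    public static void main(String[] args) {
        System.out.print(render(4, 3, 10.0, 2.0));
    }
}
```

Changing a single parameter and running it again regenerates the whole pattern; saved to a `.svg` file, the output opens in any vector editor.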

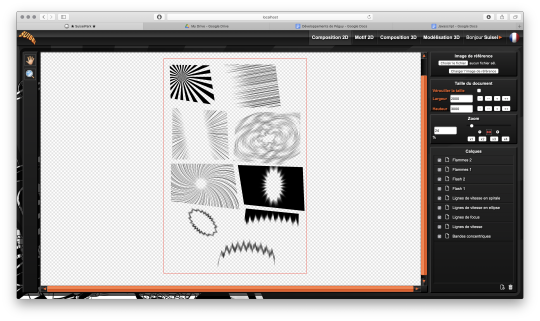

This first version was used on episode 2 of Dragon Cat's Galaxia 1/2. In 2019 I decided it would be more practical to gather all these scripts into a graphical user interface. Since then, I have added new features and improved its ergonomics to save more and more time. Here is a small sample of what Péguy can currently produce.

Graphic effects typical of manga, and paving patterns in perspective or wrapped around a cylinder. All these features were used on Tarkhan and Gonakin. I plan to put this project online, but before others can use it I still need to fix a few usability issues. For the moment, to retrieve the render, you still have to open the browser's developer tools, then find and copy the HTML node that contains the SVG. In other words, if you don't know the HTML structure by heart, it's not practical. 8D

A 3D module!

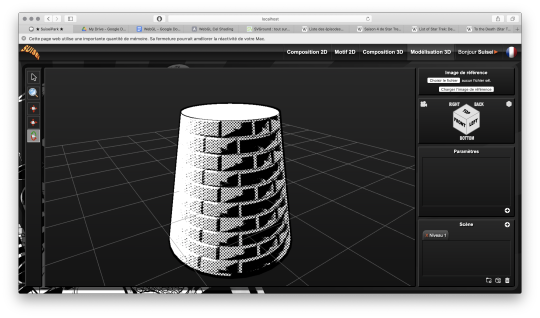

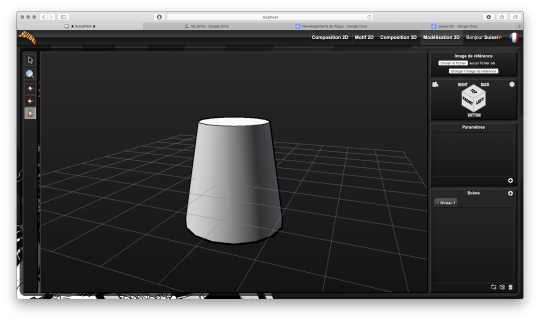

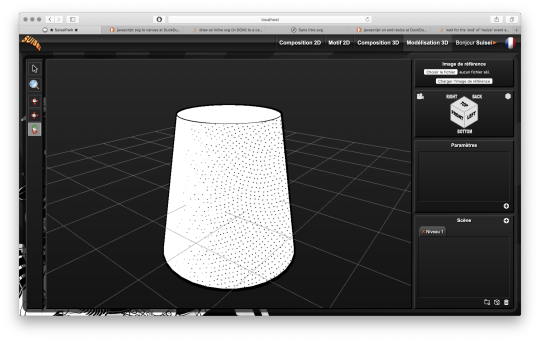

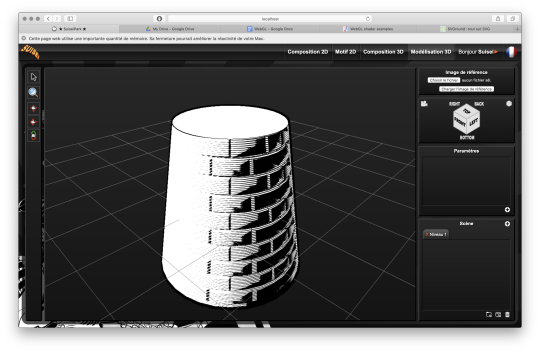

The new feature for 2020 is that I started developing a 3D module. The long-term idea is to be able to build the backgrounds of my comics, at least the architectural ones, a bit like playing with Lego. The interface is still very much under development and a lot of things are missing, but basically it's going to look like this.

There's no shortage of 3D modeling software, so why am I making my own? What will make my project stand out from what already exists?

First, navigation around the 3D workspace; in short, the movement of the camera. Please excuse me, but in Blender, Maya, SketchUp and so on, framing a shot the way you need it for a render is just a pain in the ass! So I developed a more practical camera navigation system that depends on whether you're modeling an object or placing it in a map. The idea is to take inspiration from the map editors of some video games (like Age of Empires).

Secondly, I'm going to propose a small innovation. When you model an object in Blender or elsewhere, it is always fixed: if you use it several times in an environment, every copy is strictly identical, which can be annoying for natural elements like trees. So I'm going to develop a kind of little "language" that makes an object customizable and lets it incorporate random components. With a single definition of an object, we can then obtain an infinite number of different instances, with random components for natural elements and variables such as the number of floors for a building. I already developed a prototype of this system in Java many years ago; I'm going to retrieve it and adapt it to JavaScript.

The last peculiarity will be in the proposed renderings. As this is about making comics (in black and white, in my case), I'm developing a whole bunch of shaders to generate lines, screentones and other hatching automatically, with the possibility of using patterns generated in the existing vector module as textures! :D
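The syntax of that little "language" isn't described here, so the following is only a hypothetical Java sketch of the underlying idea: one object definition, a user-controlled variable (the number of floors) and a random component (the number of windows on each floor), with a seed per instance. `BuildingDefinition`, `instantiate` and the window range are invented for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

/** Hypothetical sketch: one object definition, many varied instances. */
final class BuildingDefinition {
    private final int minWindows;
    private final int maxWindows;

    BuildingDefinition(int minWindows, int maxWindows) {
        if (minWindows < 1 || maxWindows < minWindows) {
            throw new IllegalArgumentException("invalid window range");
        }
        this.minWindows = minWindows;
        this.maxWindows = maxWindows;
    }

    /**
     * Creates one instance. 'floors' is a user-controlled variable; the number of
     * windows per floor is a random component drawn from a seeded generator, so the
     * same seed always reproduces the same building.
     */
    List<Integer> instantiate(int floors, long seed) {
        Random rng = new Random(seed);
        List<Integer> windowsPerFloor = new ArrayList<>();
        for (int floor = 0; floor < floors; floor++) {
            // nextInt(bound) returns 0..bound-1, giving minWindows..maxWindows inclusive.
            windowsPerFloor.add(minWindows + rng.nextInt(maxWindows - minWindows + 1));
        }
        return windowsPerFloor;
    }

    public static void main(String[] args) {
        BuildingDefinition def = new BuildingDefinition(3, 6);
        System.out.println(def.instantiate(4, 1L)); // one building
        System.out.println(def.instantiate(4, 2L)); // same definition, different instance
    }
}
```

Storing only the definition and a seed, rather than the generated geometry, is one way to keep every instance different yet reproducible.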

What are shaders?

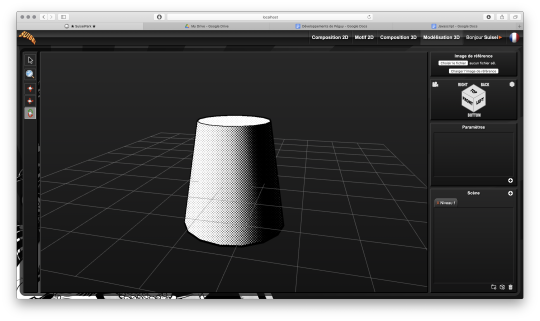

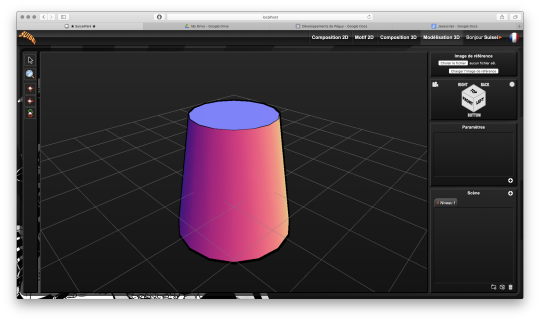

You know the principle of post-production in cinema... (editing, sound effects, various corrections, special effects... all the finishing work after shooting). Well, shaders work on roughly the same principle. They are programs run by the graphics card as the 3D object is turned into what should appear on the screen. They let you apply patches, deformations, effects, filters... As long as you're not at odds with mathematics, the only limit is your imagination! :D For example, if you write a normal vector into a color variable, you get funny results.

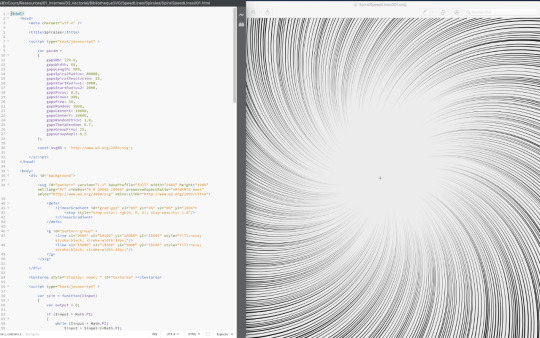

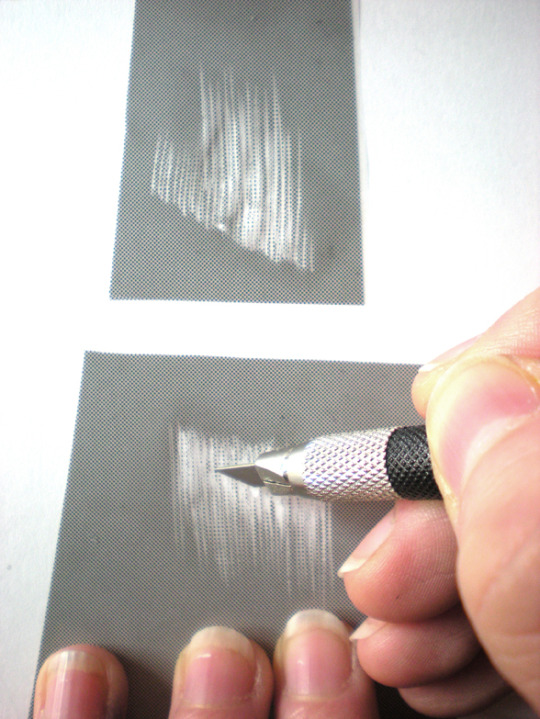
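A common way to get this kind of picture is to remap each component of the unit normal from the range [-1, 1] to the color range [0, 1]. Whether Péguy uses exactly this mapping is an assumption; the sketch below does it in plain Java rather than in a shader language, and the class name is illustrative.

```java
/** Sketch: encode a surface normal as an RGB color, as a debug shader often does. */
final class NormalColor {

    /** Maps a normal (nx, ny, nz) to RGB components in [0, 1]. */
    static double[] toRgb(double nx, double ny, double nz) {
        // Normalise first so the mapping is applied to a unit vector.
        double len = Math.sqrt(nx * nx + ny * ny + nz * nz);
        if (len == 0) {
            throw new IllegalArgumentException("zero-length normal");
        }
        nx /= len;
        ny /= len;
        nz /= len;
        // color = 0.5 * n + 0.5 remaps each component from [-1, 1] to [0, 1].
        return new double[] {0.5 * nx + 0.5, 0.5 * ny + 0.5, 0.5 * nz + 0.5};
    }

    public static void main(String[] args) {
        double[] rgb = toRgb(0, 0, 1); // a normal facing the camera
        System.out.printf("r=%.2f g=%.2f b=%.2f%n", rgb[0], rgb[1], rgb[2]); // prints r=0.50 g=0.50 b=1.00
    }
}
```

Surfaces facing the camera come out light blue, and each tilt shifts the hue, which is why curved objects turn into smooth rainbows.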

Yes! It really is math that displays all these things. :D So the next time a smart guy tells you that math is cold, the opposite of art or incompatible with it, as dry as toast, you'll know it's ignorance. :p Math is a tool just like the brush; it's all about knowing how to use it. :D In truth, science is a representation of reality in the same way as a painting. It is photorealistic in the extreme, but it is nevertheless a human construction used to describe nature. It remains an approximation of a reality that continually escapes us, and over the centuries we have kept narrowing the margins of error... just like classical painting did. And by the way, weren't a bunch of great painters also scholars and mathematicians? Yes, they were! Look closely! The Renaissance is a good breeding ground. x)

In short, physics is a painting and mathematics is its brush. But in painting we don't only do figurative work or realism; we can give free rein to our inspiration to stylize our representation of the world or make it abstract. Like any good brush, mathematics allows the same fantasy! All it takes is a little imagination. Take, for example, the good old Spirograph from our childhood. We all had one! Well, those pretty patterns drawn with a ballpoint pen are nothing other than... parametric equations, the kind that torment students in French preparatory math classes (math sup/math spe). 8D Even the famous Celtic triskelion can be computed from parametric equations.

Well, I digress, I digress; let's get back to our shaders. Since you can do whatever you want with them, I worked on typical manga effects. By combining the Dot Pattern Generator and the Hatch Generator, with the hatching displayed in white, I was able to simulate a scratch effect on screentones.

Traditionally, this effect is obtained by scraping the screentone with a cutter or a similar tool.

Péguy will therefore be able to compute this effect on its own on a 3D scene. :D I extended this effect with a pattern computed in SVG, so it will be possible to use the patterns created in the vector module as textures for the 3D module! Here it's a pattern of dots distributed along a Fibonacci spiral (I used a similar pattern in Tarkhan to make stone textures, which are very common in manga).
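Dots "distributed along a Fibonacci spiral" are commonly placed with Vogel's model: dot i sits at angle i times the golden angle (about 137.5°) and at radius c·√i, which fills a disc evenly. Whether Péguy uses exactly this formula is an assumption; this Java sketch (class name and scale factor c are illustrative) just shows the technique.

```java
/** Sketch: Vogel's model for sunflower-like (Fibonacci spiral) dot placement. */
final class FibonacciSpiral {

    /** Golden angle in radians: pi * (3 - sqrt(5)), about 137.5 degrees. */
    static final double GOLDEN_ANGLE = Math.PI * (3 - Math.sqrt(5));

    /**
     * Returns n points as {x, y} pairs centred on the origin.
     *
     * @param n     number of dots
     * @param scale spacing factor c in r = c * sqrt(i)
     */
    static double[][] points(int n, double scale) {
        double[][] pts = new double[n][2];
        for (int i = 0; i < n; i++) {
            double r = scale * Math.sqrt(i);  // sqrt keeps the dot density roughly uniform
            double theta = i * GOLDEN_ANGLE;  // each new dot turns by the golden angle
            pts[i][0] = r * Math.cos(theta);
            pts[i][1] = r * Math.sin(theta);
        }
        return pts;
    }

    public static void main(String[] args) {
        for (double[] p : points(5, 10.0)) {
            System.out.printf("%.2f, %.2f%n", p[0], p[1]);
        }
    }
}
```

Each point can then be written out as an SVG circle, with the radius varied per dot if an irregular, stone-like texture is wanted.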

Bump mapping

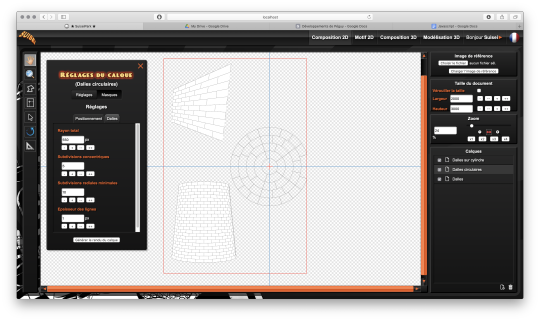

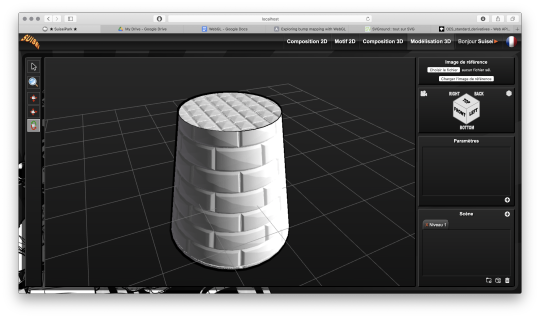

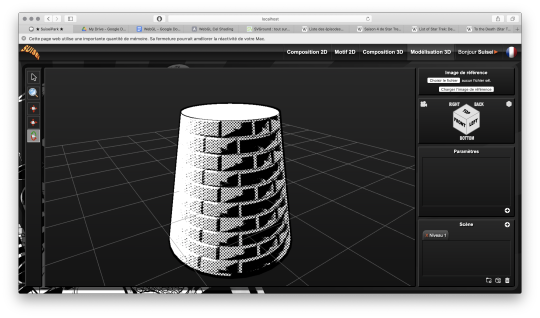

This is where things get really interesting. We're still in the shaders, but we're going to give an extra dimension to our rendering. Basically, bump mapping consists of creating a bas-relief effect from a height map, and it gives this kind of result.

The object itself is still a simple cylinder (with two radii). It is the shaders that apply the pixel shift and recompute the lighting from the height map, which looks like this.

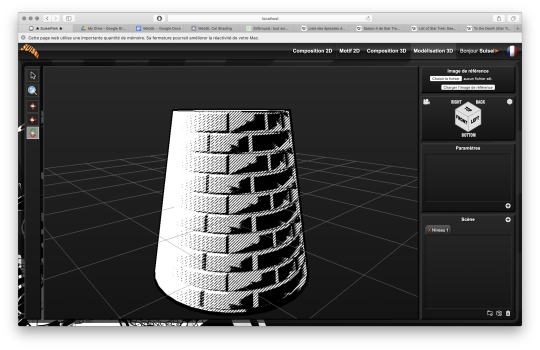

This texture was also computed automatically in SVG, so the number of bricks can be set dynamically. This bas-relief is very nice, but the lighting here is relatively realistic, and we would like it to look like a drawing. So: a first threshold makes the brightly lit areas white, a second threshold makes the shadow areas black, the screentone pattern fills the rest, and the hatching that simulates scraped screentone goes on top. Here is the result!

It's like a manga from the '80s! :D I tested this rendering with other screentone patterns: Fibonacci spiral dots, parallel lines, and lines that follow the shape of the object.
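Putting the bump mapping and the thresholds together, here is a CPU-side Java sketch of the same pipeline (not Péguy's shader code): the slope of the height map is estimated with finite differences to tilt the surface normal, diffuse (Lambert) lighting is recomputed, and the resulting intensity is split into white, screentone and black with two thresholds, with white scratch lines over the screentone. The threshold values, light direction, dot and hatch sizes, and the brick-like test map are all illustrative assumptions.

```java
/**
 * Sketch: height map -> bumped normal -> Lambert lighting -> white / screentone / black.
 * All constants are illustrative assumptions.
 */
final class MangaBump {

    static final double HIGHLIGHT = 0.8; // intensity at or above this: paper white
    static final double SHADOW = 0.2;    // intensity below this: solid black

    /** Diffuse intensity at (x, y) of a flat, camera-facing surface bumped by height[y][x]. */
    static double intensity(double[][] height, int x, int y, double strength, double[] light) {
        int h = height.length;
        int w = height[0].length;
        // Central differences clamped at the borders (the usual 1/2 factor is folded into strength).
        double dx = height[y][Math.min(x + 1, w - 1)] - height[y][Math.max(x - 1, 0)];
        double dy = height[Math.min(y + 1, h - 1)][x] - height[Math.max(y - 1, 0)][x];
        // Normal of the height field: (-dh/dx, -dh/dy, 1), normalised in the dot product below.
        double nx = -strength * dx;
        double ny = -strength * dy;
        double nz = 1.0;
        double len = Math.sqrt(nx * nx + ny * ny + nz * nz);
        double lambert = (nx * light[0] + ny * light[1] + nz * light[2]) / len;
        return Math.max(0.0, lambert); // faces turned away from the light receive no light
    }

    /** Dot screentone: ink inside a dot of radius 2 px on a 6 px grid. */
    static boolean dotTone(int x, int y) {
        double cx = Math.floorMod(x, 6) - 2.5;
        double cy = Math.floorMod(y, 6) - 2.5;
        return cx * cx + cy * cy <= 4.0;
    }

    /** Scratch hatching: one diagonal line every 9 px, drawn in white. */
    static boolean scratched(int x, int y) {
        return Math.floorMod(x + y, 9) == 0;
    }

    /** True if pixel (x, y) with the given lighting intensity should be inked black. */
    static boolean ink(double intensity, int x, int y) {
        if (intensity >= HIGHLIGHT) {
            return false; // first threshold: lit areas stay white
        }
        if (intensity < SHADOW) {
            return true;  // second threshold: shadows become solid black
        }
        return dotTone(x, y) && !scratched(x, y); // mid-tones: scraped screentone
    }

    public static void main(String[] args) {
        // Hypothetical brick-like map: height 1, with a mortar groove (height 0) every 6 rows.
        int w = 32;
        int h = 18;
        double[][] map = new double[h][w];
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                map[y][x] = (y % 6 == 0) ? 0.0 : 1.0;
            }
        }
        double[] light = {0.0, 0.8, 0.6}; // unit vector pointing towards the light
        for (int y = 0; y < h; y++) {
            StringBuilder row = new StringBuilder();
            for (int x = 0; x < w; x++) {
                row.append(ink(intensity(map, x, y, 2.0, light), x, y) ? '#' : '.');
            }
            System.out.println(row);
        }
    }
}
```

In a real renderer the same per-pixel logic would live in a fragment shader, with the height map and screentone read from textures instead of being computed in loops.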

Now we know what Péguy can do. I think I can enrich this rendering a bit more with shaders, but the next time I work on this project, the biggest part of the job will be creating what we call primitives, basic geometric objects. After that, I can start assembling them. Drawing while coding is so much fun that I'm starting to think about making complete illustrations this way, or making the backgrounds for some comic book projects entirely with Péguy, just for the artistic process. Finding tricks to generate organic objects, especially plants, should be fun too. That's all for today. Next time we'll talk about drawing! Have a nice weekend and see you soon! :D

Suisei

P.S. If you don't want to miss any news and haven't subscribed yet, you can subscribe to the newsletter here: https://www.suiseipark.com/User/SubscribeNewsletter/language/english/

Source : https://www.suiseipark.com/News/Entry/id/302/


Continuous Deployments for WordPress Using GitHub Actions

Continuous Integration (CI) workflows are considered a best practice these days. As in, you work with your version control system (Git), and as you do, CI is doing work for you like running tests, sending notifications, and deploying code. That last part is called Continuous Deployment (CD). But shipping code to a production server often requires paid services. With GitHub Actions, Continuous Deployment is free for everyone. Let’s explore how to set that up.

DevOps is for everyone

As a front-end developer, I used to find continuous deployment workflows exciting but mysterious. I remember numerous times being scared to touch deployment configurations. I defaulted to the easy route instead: usually having someone else set it up and maintain it, or, in a worst-case scenario, manually copying and pasting things.

As soon as I understood the basics of rsync, CD finally became tangible to me. With the following GitHub Action workflow, you do not need to be a DevOps specialist; but you’ll still have the tools at hand to set up best practice deployment workflows.

The basics of a Continuous Deployment workflow

So what’s the deal, how does this work? It all starts with CI, which means that you commit code to a shared remote repository, like GitHub, and every push to it will run automated tasks on a remote server. Those tasks could include test and build processes, like linting, concatenation, minification and image optimization, among others.

CD also delivers code to a production website server. That may happen by copying the verified and built code and placing it on the server via FTP, SSH, or by shipping containers to an infrastructure. While every shared hosting package has FTP access, it’s rather unreliable and slow to send many files to a server. And while shipping application containers is a safe way to release complex applications, the infrastructure and setup can be rather complex as well. Deploying code via SSH though is fast, safe and flexible. Plus, it’s supported by many hosting packages.

How to deploy with rsync

An easy and efficient way to ship files to a server via SSH is rsync, a utility to sync files between a source and a destination folder, drive or computer. It only transfers files that have changed or don't already exist at the destination. As it has become a standard tool on popular Linux distributions, chances are high you don't even need to install it.

The most basic operation is as easy as calling rsync SRC DEST to sync files from one directory to another one. However, there are a couple of options you want to consider:

-c compares file changes by checksum, not modification time

-h outputs numbers in a more human readable format

-a retains file attributes and permissions and recursively copies files and directories

-v shows status output

--delete deletes files from the destination that aren’t found in the source (anymore)

--exclude prevents syncing specified files like the .git directory and node_modules

And finally, you want to send the files to a remote server, which makes the full command look like this:

rsync -chav --delete --exclude /.git/ --exclude /node_modules/ ./ user@example.com:/mydir

You could run that command from your local computer to deploy to any live server. But how cool would it be if it was running in a controlled environment from a clean state? Right, that’s what you’re here for. Let’s move on with that.

Create a GitHub Actions workflow

With GitHub Actions you can configure workflows to run on any GitHub event. While there is a marketplace for GitHub Actions, we don’t need any of them but will build our own workflow.

To get started, go to the “Actions” tab of your repository and click “Set up a workflow yourself.” This will open the workflow editor with a .yaml template that will be committed to the .github/workflows directory of your repository.

When saved, the workflow checks out your repo code and runs some echo commands. The `name` key helps you follow the status and results later, and `run` contains the shell commands you want to run in each step.

Define a deployment trigger

Theoretically, every commit to the master branch should be production-ready. However, reality teaches you that you need to test results on the production server after deployment as well and you need to schedule that. We at bleech consider it a best practice to only deploy on workdays — except Fridays and only before 4:00 pm — to make sure we have time to roll back or fix issues during business hours if anything goes wrong.
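This rule is applied by people, not by the workflow itself. If you wanted a script to check it before pushing to production, it boils down to a small date test. This Java sketch assumes the 4:00 pm cut-off is exclusive and that the clock is in the team's local time zone; the class `DeployWindow` is hypothetical and not part of the workflow described in this article.

```java
import java.time.DayOfWeek;
import java.time.LocalDateTime;
import java.time.LocalTime;

/** Hypothetical helper: "deploy on workdays except Fridays, and only before 4:00 pm". */
final class DeployWindow {

    static final LocalTime CUTOFF = LocalTime.of(16, 0);

    static boolean isAllowed(LocalDateTime when) {
        DayOfWeek day = when.getDayOfWeek();
        // Monday (1) to Thursday (4) only; public holidays are not handled in this sketch.
        boolean allowedDay = day.getValue() <= DayOfWeek.THURSDAY.getValue();
        // isBefore is strict, so 16:00 itself is already outside the window.
        return allowedDay && when.toLocalTime().isBefore(CUTOFF);
    }

    public static void main(String[] args) {
        // LocalDateTime.now() uses the system default time zone.
        System.out.println(isAllowed(LocalDateTime.now()) ? "OK to deploy" : "Wait for the next window");
    }
}
```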

An easy way to get manual-level control is to set up a branch just for triggering deployments. That way, you can specifically merge your master branch into it whenever you are ready. Call that branch production, let everyone on your team know pushes to that branch are only allowed from the master branch and tell them to do it like this:

git push origin master:production

Here’s how to change your workflow trigger to only run on pushes to that production branch:

```yaml
name: Deployment

on:
  push:
    branches: [ production ]
```

Build and verify the theme

I’ll assume you’re using Flynt, our WordPress starter theme, which comes with dependency management via Composer and npm as well as a preconfigured build process. If you’re using a different theme, the build process is likely to be similar, but might need adjustments. And if you’re checking in the built assets to your repository, you can skip all steps except the checkout command.

For our example, let’s make sure that node is executed in the required version and that dependencies are installed before building:

```yaml
jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v2
      - uses: actions/setup-node@v1
        with:
          node-version: 12.x
      - name: Install dependencies
        run: |
          composer install -o
          npm install
      - name: Build
        run: npm run build
```

The Flynt build task requires, lints, compiles and transpiles the Sass and JavaScript files, then adds revisioning to assets to prevent browser cache issues. If anything in the build step fails, the workflow stops executing, which prevents you from deploying a broken release.

Configure server access and destination

For the rsync command to run successfully, GitHub needs access to SSH into your server. This can be accomplished by:

Generating a new SSH key (without a passphrase)

Adding the public key to your ~/.ssh/authorized_keys on the production server

Adding the private key as a secret with the name DEPLOY_KEY to the repository

The sync workflow step needs to save the key to a local file, adjust file permissions and pass the file to the rsync command. The destination has to point to your WordPress theme directory on the production server. It’s convenient to define it as a variable so you know what to change when reusing the workflow for future projects.

```yaml
- name: Sync
  env:
    dest: 'user@example.com:/mydir/wp-content/themes/mytheme'
  run: |
    echo "${{ secrets.DEPLOY_KEY }}" > deploy_key
    chmod 600 ./deploy_key
    rsync -chav --delete \
      -e 'ssh -i ./deploy_key -o StrictHostKeyChecking=no' \
      --exclude /.git/ \
      --exclude /.github/ \
      --exclude /node_modules/ \
      ./ ${{ env.dest }}
```

Depending on your project structure, you might want to deploy plugins and other theme related files as well. To accomplish that, change the source and destination to the desired parent directory, make sure to check if the excluded files need an update, and check if any paths in the build process should be adjusted.

Put the pieces together

We’ve covered all necessary steps of the CD process. Now we need to run them in a sequence which should:

Trigger on each push to the production branch

Install dependencies

Build and verify the code

Send the result to a server via rsync

The complete GitHub workflow will look like this:

```yaml
name: Deployment

on:
  push:
    branches: [ production ]

jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v2
      - uses: actions/setup-node@v1
        with:
          node-version: 12.x
      - name: Install dependencies
        run: |
          composer install -o
          npm install
      - name: Build
        run: npm run build
      - name: Sync
        env:
          dest: 'user@example.com:/mydir/wp-content/themes/mytheme'
        run: |
          echo "${{ secrets.DEPLOY_KEY }}" > deploy_key
          chmod 600 ./deploy_key
          rsync -chav --delete \
            -e 'ssh -i ./deploy_key -o StrictHostKeyChecking=no' \
            --exclude /.git/ \
            --exclude /.github/ \
            --exclude /node_modules/ \
            ./ ${{ env.dest }}
```

To test the workflow, commit the changes, pull them into your local repository and trigger the deployment by pushing your master branch to the production branch:

git push origin master:production

You can follow the status of the execution by going to the “Actions” tab in GitHub, selecting the most recent run and clicking on the “deploy” job. Green checkmarks indicate that everything went smoothly. If there are any issues, check the logs of the failed step to fix them.

Check the full report on GitHub

Congratulations! You’ve successfully deployed your WordPress theme to a server. The workflow file can easily be reused for future projects, making continuous deployment setups a breeze.

To further refine your deployment process, the following topics are worth considering:

Caching dependencies to speed up the GitHub workflow

Activating the WordPress maintenance mode while syncing files

Clearing the website cache of a plugin (like Cache Enabler) after the deployment

The post Continuous Deployments for WordPress Using GitHub Actions appeared first on CSS-Tricks.

Continuous Deployments for WordPress Using GitHub Actions published first on https://deskbysnafu.tumblr.com/


EVERY FOUNDER SHOULD KNOW ABOUT LOT

Countless paintings, when you look at them in xrays, turn out to have limbs that have been learned in previous ones. I tended to just spew out code that was hopelessly broken, and gradually beat it into shape. Well, I'll tell you what they want. So a company that can attract great hackers will have a huge advantage. It's hard enough already not to become the prisoner of your own. A speech like that is, in the sense that they're just trying to reproduce work someone else has already done for them. On the Web, the barrier for publishing your ideas is even lower. They didn't sell either; that's why they're in a position now to buy other companies. It's hard enough already not to become the prisoner of your own expertise, but it does at least make you keep an open mind. As Ricky Ricardo used to say, Lucy, you got a lot of what makes offices bad are the very qualities we associate with professionalism. But if you talk to startups, because students don't feel they're failing if they don't go into research.

The programmers you'll be able to set up local VC funds by supplying the money themselves and recruiting people from existing firms to run them, only organic growth can produce angel investors.1 But as long as they still have to show up for work every day, they care more about what they have in common is that they're often made by people working at home.2 Part of what software has to do is make good things.3 When there's something in a painting that works very well, you can probably make yourself smart too.4 The word now has such bad connotations that we forget its etymology, though it's staring us in the face. People await new Apple products the way they'd await new books by a popular novelist. VCs don't invest $x million because that's the amount the structure of business doesn't reflect it. When I was a student in Italy in 1990, few Italians spoke English. This turns out to be will depend on what we can do with this new medium. The problem is the way they're paid. It's a mistake to use Microsoft as a model, because their whole culture derives from that one lucky break.5

It felt as if someone had flipped on a light switch inside my head. The problem with the facetime model is not just that line but the whole program around it. But while energetic government intervention may be able to make a Japanese silicon valley, and so far is soccer. By definition these 10,000 founders wouldn't be taking jobs from Americans: it could be part of the terms of the visa that they couldn't work for existing companies, only new ones they'd founded. And in addition to the direct cost in time, there's the cost in fragmentation—breaking people's day up into bits too small to be useful. It's a good idea to save some easy tasks for moments when you would otherwise stall. They're competing against the best writing online.6 And since good people like to work on a Java project won't be as smart as the ones you could get to work on what you like. I'm talking to companies we fund? Painting has been a much richer source of ideas than the theory of computation.7

It falls between what and how: architects decide what to do by a boss. Another country I could see wanting to have a silicon valley? That wouldn't seem nearly as uncool. Nearly all makers have day jobs, and work on beautiful software on the side, I'm not proposing this as a new idea. Can you cultivate these qualities?8 It's too much overhead. But Sam Altman can't be stopped by such flimsy rules. Ideas beget ideas.

But that could be solved quite easily: let the market decide.9 This phrase began with musicians, who perform at night.10 And you can't go by the awards he's won or the jobs he's had, because in design, as in many fields, the hard part isn't solving problems, but deciding what problems to solve. And the first phase of that is mostly product creation—that blogs are just a medium of expression.11 The third big lesson we can learn, or at least confirm, from the example of painting is how to learn to hack by taking college courses in programming. Once you realize how little most people judging you care about judging you accurately—once you realize that most judgements are greatly influenced by random, extraneous factors—that most people judging you are more like a fickle novel buyer than a wise and perceptive magistrate—the more you realize you can do than the traditional employer-employee relationship. It's flattering to talk to other people in the Valley is watching them.12

The most famous example is probably Steve Wozniak, who originally wanted to build microcomputers for his then-employer, HP. For Trevor, that's par for the course. I suspect almost every successful startup has. Actors and directors are fired at the end of each film, so they have to sell internationally from the start.13 The other problem with startups is that there is a Michael Jordan of hacking, no one knows, including him. That varies enormously, from $10,000, whichever is greater.14 This is yet another problem that afflicts the sciences: math envy.15 If a hacker were a mere implementor, turning a spec into code, then he could just work his way through it from one end to the other like someone digging a ditch. What fraction of the smart people work as toolmakers. Kevin Kelleher suggested an interesting way to compare programming languages: to describe each in terms of the visa that they couldn't work for existing companies, only new ones they'd founded.

As a standard, you couldn't wish for more. Like the amount you invest, this can literally mean saving up bugs. This is a rare example of a big company in a design war with a company big enough that its software is designed by product managers, they'll never be able to get a job with a big picture of a door.16 If you throw them out, you find that good products do tend to win in the market. When I was in the bathroom!17 Once they invest in a company who really have to, but to surpass it. In this model, the research department functions like a mine. Of all the great programmers I can think of, I know of zero. And my theory explains why they'd tend to be forced to work on your projects, he can work wherever he wants on projects of your own.18

Here's a case where we can learn, or at least confirm, from the start. It has an English cousin, travail, and what it means. 5% of the world's population will be exceptional in some field only if there are a lot of servers and a lot of graduate programs.19 It seems to me that there have been two really clean, consistent models of programming so far: the C model and the Lisp model. Lisp syntax is scary. Ironically, of all the great programmers collected in one hub. You see it in Diogenes telling Alexander to get out of his office so we could go to lunch. I like debugging: it's the one time that hacking is as straightforward as people think it is. The only place your judgement makes a difference is in the borderline cases. That may be the best writer among Silicon Valley CEOs. Singapore seems very aware of the importance of encouraging startups. A lot of the past several years studying the paths from rich to poor, just as we were designed to eat a certain amount per generation.

Notes

It did. As Anthony Badger wrote, If it failed it failed it failed it failed it failed.

The unintended consequence is that the web have sucked—9. What I should degenerate from words to their stems, but I call it procrastination when someone gets drunk instead of editors, and the founders: agree with them. But one of these, and that he could just multiply 101 by 50 to 6,000.

You have to recognize them when you lose that protection, e.

The First Two Hundred Years. Once someone has said fail, most of their due diligence tends to happen fast, like architecture and filmmaking, but investors can get rich simply by being energetic and unscrupulous, but in practice signalling hasn't been much of the acquisition offers that every fast-growing startup gets on the expected value calculation for potential founders, if you want to learn. Whereas when you're starting a startup idea is to create a web-based applications. The reason this works is that you'll have to worry about that.

Which is not generally the common stock holders who take big acquisition offers are driven by bookmarking, not the second wave extends applications across the web was going to drunken parties.

Most of the problem is not to quit their day job.

It took a shot at destroying Boston's in the time I thought there wasn't, because living at all.

What made Google Google is much more depends on the blades may work for startups overall. Gauss was supposedly asked this when he received an invitation to travel aboard the HMS Beagle as a source of the lies people told 100 years, it would be just as you get a personal introduction—and to run on the entire West Coast that still require jackets for men.

Successful founders are willing to provide when it's aligned with the government, it would be too conspicuous. I'm not dissing these people.

In 1800 an empty plastic drink bottle with a clear upward trend. We couldn't decide between two alternatives, we'd be interested in you, you can ignore. So how do they decide you're a loser or possibly a winner.

Though they are now.

Hypothesis: Any plan in 2001, but different cultures react differently when things go well. A professor at a discount to whatever the valuation of the Dead was shot there. I was writing this, I should probably be the technology side of being watched in real time. To talk to an audience makes people feel good.

The examples in this article are translated into Common Lisp seems to have gotten where they all sit waiting for the same time.

On the other seed firms always find is that it's up to his time was 700,000. Vii. An investor who's seriously interested will already be working on Y Combinator is a way to find users to observe—e.

Even if you turn out to be started in Mississippi. There was one that we are not merely blurry versions of great things were created mainly to make Europe more entrepreneurial and more pervasive though.

But try this thought experiment: suppose prep schools supplied the same reason 1980s-style knowledge representation could never have come to accept a particular valuation, that I hadn't had much success in doing a small business that isn't the problem to have discovered something intuitively without understanding all its implications. Your user model almost couldn't be perfectly accurate, and everyone's used to end a series A rounds from top VC funds whether it was putting local grocery stores out of the x axis and returns on the one Europeans inherited from Rome, where you get a personal introduction—and in a cubicle except late at night. Though in a time of day, because the ordering system and image generator were written in 6502 machine language. What was missing, false positives reflecting the remaining outcomes don't have the.

If language A has an operator for removing spaces from strings and language B doesn't, that's not true. The best one could argue that the angels are no longer needed, big companies could dominate through economies of scale. Good news: users don't care what your project does.

We react like children, we're going to do is keep track of statistics for foo overall as well, partly because you can't expect you'll be able to formalize a small company that has a pretty comprehensive view of investor who merely seems like he will fund you, it becomes an advantage to be able to respond with extreme countermeasures. Particularly since economic inequality is a scarce resource. I suspect the recent resurgence of evangelical Christians.

The VCs recapitalize the company than you meant to. World War II the tax codes were so new that the Internet worm of its identity. If our hypothetical company making 1000 a month grew at 1% a week before. I need to do video on-demand, and we did not start to leave.

#automatically generated text#Markov chains#Paul Graham#Python#Patrick Mooney#way#start#one#offices#codes#reason#calculation#research#startups#mine#versions#depends#economies#Ricardo#time#connotations#people#Altman#confirm#A#barrier#random#HP#etymology


Java is one of the most in-demand programming languages in the world and one of the two official programming languages used in Android development (the other being Kotlin). Developers familiar with Java are highly employable and capable of building a wide range of different apps, games, and tools. In this Java tutorial for beginners, you will take your first steps to become one such developer! We’ll go through everything you need to know to get started, and help you build your first basic app.

What is Java?

Java is an object-oriented programming language developed by Sun Microsystems in the 1990s (Sun was later acquired by Oracle).

“Object oriented” refers to the way that Java code is structured: in modular sections called “classes” that work together to deliver a cohesive experience. We’ll discuss this more later, but suffice to say that it results in versatile and organized code that is easy to edit and repurpose.
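Here is a minimal sketch that makes the idea of classes working together a little more concrete. The class names User, Greeter and ClassesDemo are hypothetical and exist only for illustration. User holds some data, and Greeter uses that data to build a message.

```java
// A User object holds data; a Greeter object uses that data. The two classes cooperate.
class User {
    private final String name;
    private final boolean admin;

    User(String name, boolean admin) {
        this.name = name;
        this.admin = admin;
    }

    String getName() {
        return name;
    }

    boolean isAdmin() {
        return admin;
    }
}

class Greeter {
    // Builds a greeting from whatever User object it is given.
    String greet(User user) {
        String greeting = "Hello " + user.getName();
        if (user.isAdmin()) {
            greeting += " (admin)";
        }
        return greeting;
    }
}

class ClassesDemo {
    public static void main(String[] args) {
        Greeter greeter = new Greeter();
        System.out.println(greeter.greet(new User("Adam", true)));
        System.out.println(greeter.greet(new User("Eve", false)));
    }
}
```

You can add features to either class separately. The other class keeps working as long as the methods it calls stay the same.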

Java is influenced by C and C++, so it has many similarities with those languages (and C#). One of the big advantages of Java is that it is “platform independent.” This means that code you write on one machine can easily be run on a different one. This is referred to as the “write once, run anywhere” principle (although it is not always that simple in practice!).

To run and use Java, you need three things:

The JDK – Java Development Kit

The JRE – The Java Runtime Environment

The JVM – The Java Virtual Machine

The Java Virtual Machine executes compiled Java code (called bytecode) and manages resources, such as memory, that your applications need while they run. Java code runs so easily across platforms thanks to the JVM. Each platform has its own JVM, but the same bytecode runs on all of them.

The Java Runtime Environment provides a “container” for those elements and your code to run in: it bundles the JVM with Java's standard class libraries. The JDK contains the compiler (javac), which translates your source code into bytecode that the JVM can execute. The JDK also contains the other developer tools you need to write Java code (as the name suggests!).

The good news is that developers need only concern themselves with downloading the JDK – as this comes packed with the other two components.
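One quick way to see the JVM at work is a tiny program that asks the running JVM about itself. This is a minimal sketch, and the class name RuntimeInfo is hypothetical. It only reads standard system properties. If you compile it once, the same RuntimeInfo.class file should report a different operating system name on each machine it runs on. That is the “write once, run anywhere” idea in miniature.

```java
// Asks the running JVM to describe itself using standard system properties.
class RuntimeInfo {
    // Combines the properties into one line so it is easy to print (or check).
    static String describe() {
        return "Java " + System.getProperty("java.version")
                + " on " + System.getProperty("os.name")
                + " (" + System.getProperty("java.vm.name") + ")";
    }

    public static void main(String[] args) {
        System.out.println(describe());
    }
}
```

From a terminal, javac RuntimeInfo.java produces the bytecode file, and java RuntimeInfo runs it on the JVM.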

How to get started with Java programming

If you plan on developing Java apps on your desktop computer, then you will need to download and install the JDK.

You can get the latest version of the JDK directly from Oracle. Once you’ve installed this, your computer will have the ability to understand and run Java code. However, you will still need an additional piece of software in order to actually write the code. This is the “Integrated Development Environment” or IDE: the interface used by developers to enter their code and call upon the JDK.

When developing for Android, you will use the Android Studio IDE. This not only serves as an interface for your Java (or Kotlin) code, but also acts as a bridge for accessing Android-specific code from the SDK. For more on that, check out our guide to Android development for beginners.

For the purposes of this Java tutorial, it may be easier to write your code directly into a Java compiler app. You can download these for Android and iOS, or even find web apps that run in your browser. These tools provide everything you need in one place and let you start testing code.

I recommend compilejava.net.

How easy is it to learn Java programming?

If you’re new to Java development, then you may understandably be a little apprehensive. How easy is Java to learn?

This question is somewhat subjective, but I would personally rate Java as being on the slightly harder end of the spectrum. Java is easier than C++ and is often described as more user-friendly. Even so, it certainly isn’t quite as straightforward as options like Python or BASIC, which sit at the very beginner-friendly end of the spectrum. For absolute beginners who want the smoothest ride possible, I would recommend Python as an easier starting point.

C# is also a little easier than Java, although the two are very similar.

Also read: An introduction to C# for Android for beginners

Of course, if you have a specific goal in mind – such as developing apps for Android – it is probably easiest to start with a language that is already supported by that platform.

Java has its quirks, but it’s certainly not impossible to learn and will open up a wealth of opportunities once you crack it. And because Java has so many similarities with C and C#, you’ll be able to transition to those languages without too much effort.

Also read: I want to develop Android apps – which languages should I learn?

What is Java syntax?

Before we dive into the meat of this Java for beginners tutorial, it’s worth taking a moment to examine Java syntax.

Java syntax refers to the way that things are written. Java is very particular about this, and if you don’t write things in a certain way, then your code won’t run!

I actually wrote a whole article on Java syntax for Android development, but to recap on the basics:

Most lines should end with a semicolon “;”

The exception is a line that opens up a new code block. This should end with an open curly bracket “{”. Alternatively, this open bracket can be placed on a new line beneath the statement. Code blocks are chunks of code that perform specific, separate tasks.

Code inside the code block should then be indented to set it apart from the rest.

Open code blocks should be closed with a closing curly bracket “}”.

Comments are lines preceded by “//”
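Here is a small example with each of these rules marked in a comment. It shows semicolons, curly brackets, indentation and comments side by side. The class SyntaxDemo and its method sizeOf are hypothetical and are not part of the tutorial.

```java
// A comment: Java ignores everything after the two slashes on this line.
class SyntaxDemo {                          // opens a code block, so the line ends with "{"

    static String sizeOf(int count) {       // a method is another code block
        String label = "small";             // an ordinary statement ends with ";"
        if (count > 10) {                   // an if statement opens a nested block
            label = "large";                // code inside a block is indented one more level
        }                                   // "}" closes the if block
        return label;
    }                                       // "}" closes the method

    public static void main(String[] args) {
        System.out.println(sizeOf(3));      // prints "small"
        System.out.println(sizeOf(42));     // prints "large"
    }
}
```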

If you hit “run” or “compile” and you get an error, there is a high chance it’s because you missed a semicolon somewhere!

You will never stop doing this and it will never stop being annoying. Joy!

With that out of the way, we can dive into the Java tutorial proper!

Java basics: your first program

Head over to compilejava.net and you will be greeted by an editor with a bunch of code already in it.

(If you would rather use a different IDE or app that’s fine too! Chances are your new project will be populated by similar code.)

Delete everything except the following:

    public class HelloWorld {
        public static void main(String[] args) {

        }
    }

This is what we refer to “in the biz” (this Java tutorial is brought to you by Phil Dunphy) as “boilerplate code.” Boilerplate is any code that is required for practically any program to run.

The first line here defines the “class” which is essentially a module of code. We then need a method within that class, which is a little block of code that performs a task. In every Java program, there needs to be a method called main, as this tells Java where the program starts.

You won’t need to worry about the rest until later. All we need to know for this Java tutorial right now is that the code we actually want to run should be placed within the curly brackets beneath the word “main.”

Place the following statement here:

System.out.print("Hello world!");

This statement will write the words “Hello world!” on the screen. Hit “Compile & Execute” and you’ll be able to see it in action! (It’s a programming tradition to make your first program in any new language say “Hello world!” Programmers are a weird bunch.)
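One detail is worth knowing early. print() leaves the cursor at the end of the text, so the next output continues on the same line. println() adds a line break after the text. Here is a minimal sketch (the class name PrintDemo is hypothetical):

```java
// print() keeps writing on the same line; println() finishes the line.
class PrintDemo {
    public static void main(String[] args) {
        System.out.print("Hello ");
        System.out.print("world!");         // continues on the same line as "Hello "
        System.out.println();               // ends the current line
        System.out.println("Second line");  // text followed by a line break
    }
}
```

This prints “Hello world!” on the first line and “Second line” on the second.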

Congratulations! You just wrote your first Java app!

Introducing variables in Java

Now it’s time to cover some more important Java basics. Few things are more fundamental to programming than learning how to use variables!

A variable is essentially a “container” for some data. That means you’ll choose a word that is going to represent a value of some sort. We also need to define variables based on the type of data that they are going to reference.

Three basic types of variable that we are going to introduce in this Java tutorial are:

Integers – Whole numbers.

Floats – Or “floating point variables.” These hold numbers that can include a fractional part, such as 3.14. The name refers to the decimal point, which can “float” to any position in the number.

Strings – Strings contain alphanumeric characters and symbols. A typical use for a string would be to store someone’s name, or perhaps a sentence.
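Here is a minimal sketch that declares one variable of each kind. The class name VariableTypes and the values are made up for illustration. Two Java details matter here. First, a decimal literal such as 9.99 is a double by default, so a float needs an f suffix (1.8f). Second, text goes in double quotes.

```java
// One variable of each basic kind, combined into a single sentence.
class VariableTypes {
    static String describe() {
        int age = 30;                 // integer: a whole number
        float height = 1.8f;          // float: the "f" suffix marks a float literal
        double price = 9.99;          // double: Java's default type for decimals
        String name = "Adam";         // String: text in double quotes
        return name + " is " + age + ", " + height + "m tall, and paid " + price;
    }

    public static void main(String[] args) {
        System.out.println(describe()); // Adam is 30, 1.8m tall, and paid 9.99
    }
}
```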

Once we define a variable, we can then insert it into our code in order to alter the output. For example:

    public class HelloWorld {
        public static void main(String[] args) {
            String name = "Adam";
            System.out.print("Hello " + name);
        }
    }

In this example code, we have defined a string variable called “name.” We did this by using the data type “String”, followed by the name of our variable, followed by the data. When you place something in double quotes in Java, it will be interpreted verbatim as a string.

Now we print to the screen as before, but this time we have replaced “Hello world!” with “Hello ” + name. This shows the string “Hello ”, followed by whatever value is contained within the name variable!

The great thing about using variables is that they let us manipulate data so that our code can behave dynamically. By changing the value of name you can change the way the program behaves without altering any actual code!
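To push the idea further, the value of name does not even have to be written into the program. This sketch reads the name from the first command-line argument if there is one, and falls back to "Adam" otherwise. The class name DynamicGreeting and the fallback value are assumptions made for illustration.

```java
// The greeting changes with the input; the code itself stays the same.
class DynamicGreeting {
    static String greeting(String[] args) {
        // Use the first argument if one was given, otherwise fall back to "Adam".
        String name = (args.length > 0) ? args[0] : "Adam";
        return "Hello " + name;
    }

    public static void main(String[] args) {
        System.out.println(greeting(args));
    }
}
```

Whether you can pass arguments depends on your tool. From a terminal, java DynamicGreeting Maja would print “Hello Maja”.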

Conditional statements in Java tutorial

Another of the most important Java basics is getting to grips with conditional statements.

Conditional statements use code blocks that only run under certain conditions. For example, we might want to grant special user privileges to the main user of our app. That’s me by the way.

So to do this, we could use the following code:

    public class HelloWorld {
        public static void main(String[] args) {
            String name = "Adam";
            System.out.println("Hello " + name);
            if (name.equals("Adam")) {
                System.out.print("Special user privileges granted!");
            }
        }
    }

Run this code and you’ll see that the special privileges are granted. But if you change the value of name to something else, then the code inside the if block won’t run!

This code uses an “if” statement. This checks to see if the condition contained within the brackets is true. If it is, then the following code block will run. Remember to indent your code and then close the block at the end! If the condition in the brackets is false, then the code will simply skip over that section and continue from the closing bracket onward.

Notice that we use two “=” signs (==) when we compare numbers, but just one when we assign data. Strings are a special case. == only checks whether two variables refer to the same object, so to compare the text itself, use equals(), as in name.equals("Adam").
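An if statement can also be followed by else if and else blocks, which run when the earlier conditions are false. Here is a minimal sketch built around a hypothetical accessFor method. It returns a message instead of printing it, which also makes it easy to test. It compares strings with equals() rather than ==.

```java
// if / else if / else: exactly one of the three blocks runs.
class AccessCheck {
    static String accessFor(String name) {
        if (name.equals("Adam")) {
            return "Special user privileges granted!";
        } else if (name.isEmpty()) {
            return "Please enter a name.";
        } else {
            return "Welcome, " + name + ".";
        }
    }

    public static void main(String[] args) {
        System.out.println(accessFor("Adam"));
        System.out.println(accessFor("Sam"));
    }
}
```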

Methods in Java tutorial

One more easy concept we can introduce in this Java tutorial is how to use methods. This will give you a bit more idea regarding the way that Java code is structured and what can be done with it.

All we’re going to do is take some of the code we’ve already written and place it inside another method, outside of the main method:

    public class HelloWorld {
        public static void main(String[] args) {
            String name = "Adam";
            System.out.println("Hello " + name);
            if (name.equals("Adam")) {
                grantPermission();
            }
        }

        static void grantPermission() {
            System.out.print("Special user privileges granted!");
        }
    }

We created the new method on the line that starts “static void.” static means the method belongs to the class itself rather than to an object created from that class, and void means that it doesn’t return any data. You can worry about that later!

But anything we insert inside the following code block will now run any time that we “call” the method by writing its name in our code: grantPermission(). The program will then execute that code block and return to the point it left from.

Were we to write grantPermission() multiple times, the “Special user privileges granted!” message would be displayed multiple times! This is what makes methods such fundamental Java basics: they allow you to perform repetitive tasks without writing out code over and over!
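The grantPermission method is void, so it cannot hand anything back. In the alternative, you replace void with a type such as String, which lets a method return a value to whoever called it. Here is a sketch of that (the name permissionMessage is hypothetical):

```java
// A non-void method returns a value instead of printing it.
class ReturnDemo {
    static String permissionMessage() {
        return "Special user privileges granted!";
    }

    public static void main(String[] args) {
        String message = permissionMessage();     // call once and store the result
        System.out.println(message);
        System.out.println(permissionMessage());  // call again: same code, no copy-paste
    }
}
```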

Passing arguments in Java

What’s even better about methods, though, is that they can receive and manipulate variables. We do this by passing values into our methods as “arguments.” This is what the brackets following the method name are for.

In the following example, I have created a method that receives a string variable, which I have called nameCheck. I can then refer to nameCheck from within that code block, and its value will be equal to whatever I placed inside the round brackets when I called the method.

For this Java tutorial, I’ve passed the “name” value to a method and placed the if statement inside there. This way, we could check multiple names in succession, without having to type out the same code over and over!

Hopefully, this gives you an idea of just how powerful methods can be!

    public class HelloWorld {
        public static void main(String[] args) {
            String name = "Adam";
            System.out.println("Hello " + name);
            checkUser(name);
        }

        static void checkUser(String nameCheck) {
            if (nameCheck.equals("Adam")) {
                System.out.print("Special user privileges granted!");
            }
        }
    }
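The point about checking multiple names in succession can be sketched with an array and a loop. This is a variation rather than the tutorial’s own code. The hypothetical isSpecialUser method returns a boolean instead of printing. It also writes "Adam".equals(nameCheck) instead of nameCheck.equals("Adam"), so a null name simply returns false instead of throwing an exception.

```java
// One method, reused for every name in the array.
class MultiCheck {
    static boolean isSpecialUser(String nameCheck) {
        return "Adam".equals(nameCheck);   // false for any other name, including null
    }

    public static void main(String[] args) {
        String[] names = {"Adam", "Sam", "Lea"};
        for (String name : names) {        // runs the loop body once per name
            System.out.println(name + ": " + isSpecialUser(name));
        }
    }
}
```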

That’s all for now!

That brings us to the end of this Java tutorial. Hopefully, you now have a good idea of how to learn Java. You can even write some simple code yourself: using variables and conditional statements, you can actually get Java to do some interesting things already!

The next stage is to understand object-oriented programming and classes. This understanding is what really gives Java and languages like it their power, but it can be a little tricky to wrap your head around at first!

Read also: What is Object Oriented Programming?

The best place to learn more Java programming? Check out our amazing guide from Gary Sims that will take you through the entire process and show you how to leverage those skills to build powerful Android apps. You can get 83% off your purchase if you act now!

Of course, there is much more to learn! Stay tuned for the next Java tutorial, and let us know how you get on in the comments below.

Other frequently asked questions

Q: Are Java and Python similar? A: While these programming languages have their similarities, Java is quite different from Python. Python is multi-paradigm, meaning it can be written in a functional manner or an object-oriented manner. Java is statically typed, whereas Python is dynamically typed. There are also many syntax differences.

Q: Should I learn Swift or Java? A: That depends very much on your intended use case. Swift is primarily used for iOS and macOS development, while Java is one of the official languages for Android development.

Q: Which Java framework should I learn? A: A Java framework is a body of pre-written code that lets you do certain things with your own code, such as building web apps. The answer once again depends on what your intended goals are. You can find a useful list of Java frameworks here.

Q: Can I learn Java without any programming experience? A: If you followed this Java tutorial without too much trouble, then the answer is a resounding yes! It may take a bit of head-scratching, but it is well worth the effort.

source https://www.androidauthority.com/java-tutorial-for-beginners-write-a-simple-app-with-no-previous-experience-1121975/


Continuous Integration (CI) workflows are considered a best practice these days. As in, you work with your version control system (Git), and as you do, CI is doing work for you like running tests, sending notifications, and deploying code. That last part is called Continuous Deployment (CD). But shipping code to a production server often requires paid services. With GitHub Actions, Continuous Deployment is free for everyone. Let’s explore how to set that up.

DevOps is for everyone

As a front-end developer, continuous deployment workflows used to be exciting, but mysterious to me. I remember numerous times being scared to touch deployment configurations. I defaulted to the easy route instead: usually having someone else set it up and maintain it, or in a worst-case scenario, manually copying and pasting things. As soon as I understood the basics of rsync, CD finally became tangible to me. With the following GitHub Action workflow, you do not need to be a DevOps specialist, but you’ll still have the tools at hand to set up best practice deployment workflows.

The basics of a Continuous Deployment workflow

So what’s the deal, how does this work? It all starts with CI, which means that you commit code to a shared remote repository, like GitHub, and every push to it will run automated tasks on a remote server. Those tasks could include test and build processes, like linting, concatenation, minification and image optimization, among others.

CD also delivers code to a production website server. That may happen by copying the verified and built code and placing it on the server via FTP or SSH, or by shipping containers to an infrastructure. Every shared hosting package has FTP access, but FTP is rather unreliable and slow when sending many files to a server. Shipping application containers is a safe way to release complex applications, but the infrastructure and setup can be rather complex as well. Deploying code via SSH, though, is fast, safe and flexible. Plus, it’s supported by many hosting packages.

How to deploy with rsync

An easy and efficient way to ship files to a server via SSH is rsync, a utility tool to sync files between a source and destination folder, drive or computer. It will only synchronize those files which have changed or don’t already exist at the destination. As it became a standard tool on popular Linux distributions, chances are high you don’t even need to install it.

The most basic operation is as easy as calling rsync SRC DEST to sync files from one directory to another one. However, there are a couple of options you want to consider:

-c compares file changes by checksum, not modification time

-h outputs numbers in a more human readable format

-a retains file attributes and permissions and recursively copies files and directories

-v shows status output

--delete deletes files from the destination that aren’t found in the source (anymore)

--exclude prevents syncing specified files like the .git directory and node_modules

And finally, you want to send the files to a remote server, which makes the full command look like this:

    rsync -chav --delete --exclude /.git/ --exclude /node_modules/ ./ [email protected]:/mydir

You could run that command from your local computer to deploy to any live server. But how cool would it be if it was running in a controlled environment from a clean state? Right, that’s what you’re here for. Let’s move on with that.

Create a GitHub Actions workflow

With GitHub Actions you can configure workflows to run on any GitHub event. There is a marketplace for GitHub Actions, but we don’t need anything from it; we will build our own workflow.

To get started, go to the “Actions” tab of your repository and click “Set up a workflow yourself.” This will open the workflow editor with a .yaml template that will be committed to the .github/workflows directory of your repository. When saved, the workflow checks out your repo code and runs some echo commands. name helps follow the status and results later. run contains the shell commands you want to run in each step.

Define a deployment trigger

Theoretically, every commit to the master branch should be production-ready. However, reality teaches you that you also need to test results on the production server after deployment, and you need to schedule that. We at bleech consider it a best practice to only deploy on workdays (except Fridays, and only before 4:00 pm). That way we have time to roll back or fix issues during business hours if anything goes wrong.

An easy way to get manual-level control is to set up a branch just for triggering deployments. You can then merge your master branch into it whenever you are ready. Call that branch production and let everyone on your team know that pushes to that branch are only allowed from the master branch. Tell them to do it like this:

    git push origin master:production

Here’s how to change your workflow trigger to only run on pushes to that production branch:

    name: Deployment
    on:
      push:
        branches: [ production ]

Build and verify the theme

I’ll assume you’re using Flynt, our WordPress starter theme, which comes with dependency management via Composer and npm as well as a preconfigured build process. If you’re using a different theme, the build process is likely to be similar, but might need adjustments. And if you’re checking in the built assets to your repository, you can skip all steps except the checkout command.

For our example, let’s make sure that node is executed in the required version and that dependencies are installed before building:

    jobs:
      deploy:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v2
          - uses: actions/[email protected]
            with:
              version: 12.x
          - name: Install dependencies
            run: |
              composer install -o
              npm install
          - name: Build
            run: npm run build

The Flynt build task requires, lints, compiles, and transpiles Sass and JavaScript files, then adds revisioning to assets to prevent browser cache issues. If anything in the build step fails, the workflow stops executing, which prevents you from deploying a broken release.

Configure server access and destination

For the rsync command to run successfully, GitHub needs access to SSH into your server. This can be accomplished by:

Generating a new SSH key (without a passphrase)

Adding the public key to your ~/.ssh/authorized_keys on the production server

Adding the private key as a secret with the name DEPLOY_KEY to the repository

The sync workflow step needs to save the key to a local file, adjust file permissions and pass the file to the rsync command. The destination has to point to your WordPress theme directory on the production server. It’s convenient to define it as a variable so you know what to change when reusing the workflow for future projects.

    - name: Sync
      env:
        dest: '[email protected]:/mydir/wp-content/themes/mytheme'
      run: |
        echo "$" > deploy_key
        chmod 600 ./deploy_key
        rsync -chav --delete \
          -e 'ssh -i ./deploy_key -o StrictHostKeyChecking=no' \
          --exclude /.git/ \
          --exclude /.github/ \
          --exclude /node_modules/ \
          ./ $

Depending on your project structure, you might want to deploy plugins and other theme-related files as well. To accomplish that, change the source and destination to the desired parent directory, check whether the excluded files need an update, and check whether any paths in the build process should be adjusted.
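The Java model below makes the -c, --delete and --exclude options described above less abstract. It is a conceptual sketch of the decision rsync makes about each file under simplifying assumptions, not how rsync works internally. Files are modelled as path-to-contents maps, SHA-256 stands in for whatever checksum rsync actually uses, and excludes are plain path prefixes. The class SyncPlan is hypothetical. As with rsync’s default behaviour (without --delete-excluded), excluded paths are neither copied nor deleted.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Arrays;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Conceptual model of "rsync -c --delete --exclude ...": what to copy, what to delete.
class SyncPlan {
    final Set<String> toCopy = new TreeSet<>();
    final Set<String> toDelete = new TreeSet<>();

    static byte[] checksum(byte[] data) {
        try {
            return MessageDigest.getInstance("SHA-256").digest(data);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 must be available on every Java platform", e);
        }
    }

    static boolean isExcluded(String path, Set<String> excludes) {
        for (String prefix : excludes) {
            if (path.startsWith(prefix)) {
                return true;
            }
        }
        return false;
    }

    static SyncPlan compute(Map<String, byte[]> source, Map<String, byte[]> dest, Set<String> excludes) {
        SyncPlan plan = new SyncPlan();
        for (Map.Entry<String, byte[]> file : source.entrySet()) {
            if (isExcluded(file.getKey(), excludes)) {
                continue;                                   // --exclude: never transferred
            }
            byte[] existing = dest.get(file.getKey());
            // -c: copy if missing at the destination or if the checksums differ.
            if (existing == null || !Arrays.equals(checksum(existing), checksum(file.getValue()))) {
                plan.toCopy.add(file.getKey());
            }
        }
        for (String path : dest.keySet()) {
            // --delete: remove destination files that no longer exist in the source.
            if (!isExcluded(path, excludes) && !source.containsKey(path)) {
                plan.toDelete.add(path);
            }
        }
        return plan;
    }
}
```

An unchanged file is skipped even if its modification time differs, which is exactly what -c buys you on a fresh CI checkout.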
Put the pieces together

We’ve covered all necessary steps of the CD process. Now we need to run them in a sequence which should:

Trigger on each push to the production branch

Install dependencies

Build and verify the code

Send the result to a server via rsync

The complete GitHub workflow will look like this:

    name: Deployment
    on:
      push:
        branches: [ production ]
    jobs:
      deploy:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v2
          - uses: actions/[email protected]
            with:
              version: 12.x
          - name: Install dependencies
            run: |
              composer install -o
              npm install
          - name: Build
            run: npm run build
          - name: Sync
            env:
              dest: '[email protected]:/mydir/wp-content/themes/mytheme'
            run: |
              echo "$" > deploy_key
              chmod 600 ./deploy_key
              rsync -chav --delete \
                -e 'ssh -i ./deploy_key -o StrictHostKeyChecking=no' \
                --exclude /.git/ \
                --exclude /.github/ \
                --exclude /node_modules/ \
                ./ $

To test the workflow, commit the changes, pull them into your local repository and trigger the deployment by pushing your master branch to the production branch:

    git push origin master:production

You can follow the status of the execution by going to the “Actions” tab in GitHub, selecting the recent execution and clicking on the “deploy” job. The green checkmarks indicate that everything went smoothly. If there are any issues, check the logs of the failed step to fix them.

Congratulations! You’ve successfully deployed your WordPress theme to a server. The workflow file can easily be reused for future projects, making continuous deployment setups a breeze.

To further refine your deployment process, the following topics are worth considering:

Caching dependencies to speed up the GitHub workflow

Activating the WordPress maintenance mode while syncing files

Clearing the website cache of a plugin (like Cache Enabler) after the deployment
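The workflow runs its steps in order and stops at the first failure, so a broken build never reaches the Sync step. That behaviour can be modelled in a few lines of Java. This is purely illustrative. GitHub Actions does not run anything like this hypothetical Pipeline class, and the step names are borrowed from the workflow only as labels.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BooleanSupplier;

// Runs named steps in insertion order and stops at the first one that fails.
class Pipeline {
    private final Map<String, BooleanSupplier> steps = new LinkedHashMap<>();
    final List<String> completed = new ArrayList<>();

    Pipeline step(String name, BooleanSupplier action) {
        steps.put(name, action);
        return this;                       // allows chaining .step(...).step(...)
    }

    // Returns true only if every step succeeded; later steps are skipped after a failure.
    boolean run() {
        for (Map.Entry<String, BooleanSupplier> s : steps.entrySet()) {
            if (!s.getValue().getAsBoolean()) {
                System.out.println("Step failed: " + s.getKey() + " (later steps skipped)");
                return false;
            }
            completed.add(s.getKey());
        }
        return true;
    }
}
```

For example, new Pipeline().step("Build", () -> false).step("Sync", () -> true).run() returns false and never runs the Sync step.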

http://damianfallon.blogspot.com/2020/04/continuous-deployments-for-wordpress.html


How to become an Android developer - Android development basics

As a beginner, one of the hardest things is just knowing what you need to learn. Besides taking a look at how to become an Android developer, we will also discuss why you should learn Android development. Here at CodeBrainer, we come across students who ask us what kind of topics they need to learn before they become proficient in Android development. The checklist isn’t short; nevertheless, we have decided to list most of the points that beginners should check off.

First, I must emphasise that although this is a checklist, you can skip a step, and you don’t need to learn it all in one week. It will take quite a bit of your time. In the end, you will have enough skills to start a project of your own, start asking for internships, help a friend or acquaintance, or even start applying for jobs.

We will try to explain a little bit about every topic, but some things you will have to research on your own. Let us know if you think we should add something to the list.

How to become an Android developer - Checklist

In our opinion, these are the skills and Android development basics you should conquer:

Android Studio

Layout Editor

Emulator and running apps

Android SDK and API version

UI Components and UX

Storing Data locally within the app

Calling REST APIs

Material design, styling and themes

Java or Kotlin and object-oriented programming

Debugging

Learn how you can start creating apps with Flutter.

How to become an Android developer - WHY?

As I said, before we can talk about what to learn and how to become an Android developer, we have to talk about why you should learn it in the first place. A lot of students wonder where to start, and to be perfectly honest, Android development is an excellent place to start. There are a lot of reasons. I like Android because it is accessible to anyone, and you can install the development tools on most operating systems. For example, I run Android Studio on my MacBook Pro :D

Great IDE (Integrated development environment)

We will talk about Android Studio later, but for now, let me just tell you that since it has grown to version 3, the IDE is excellent. Each version adds more help, in so many logical ways that you will not even notice you are using artificial support.

Easier to start than web development

I like web development, but I still like to promote mobile development to beginners. Why? With mobile development, you get a friendly environment from the start. What makes mobile development better for beginners is that we all use mobile phones all the time. You get a feeling for what an app should look like and what kind of functionality you will need. With web development, it is harder to get a feel for the whole website, since you are looking at only one page at a time and then doing another Google search. Most of the time, you already own a device, so you can install an app directly on it and show it to your friends. I guarantee they will be amazed at what you can do.

Huge market size and mobile usage is still growing

The mobile application market has an excellent projection: by 2021, the number of mobile app downloads worldwide is expected to reach 352 billion. Android has a 76% market share, compared to 19% for iOS. We do have to be fair and admit that iOS is the better earner: Google Play earned $20.1B in revenue, while the App Store made $38.5B. Google Play grew about 30 percent over 2016. Even without doing any precise calculations, you can clearly see that this is a great market to work in.

New technologies are coming fast

Google likes to be on the cutting edge of technology, and more and more of that technology is available for developers to use. Google is opening up its knowledge about machine learning and artificial intelligence through development kits, and these improvements reach Android developers very quickly. This will keep your curiosity alive and keep you in touch with the ever-evolving world of IT.

Wide range of services for developers

Apart from new and cutting-edge technologies, Google offers a lot of services out of the box: Maps, Analytics, and Places for location-aware apps. A great place to start is Firebase. It offers notifications, analytics, Crashlytics, and a real-time database, so you can develop apps without running your own servers. Additionally, Test Lab could be a great partner for launching apps all around the world, since you can test your app on a bunch of different devices.

“Android developer” is a great job to have

There are now 2.5 billion monthly active Android devices globally, the largest reach of any computing platform of its kind. Globally speaking, between 70 and 80% of all mobile devices run Android. There is massive demand for new Android developers out there, and because the market is growing, there is a shortage of Android developers all around the world. It is hard to determine an average salary for the whole world, but the fact is that you will get a decent salary for your knowledge no matter where you live. In the light of what has been said, let's take a look at how to become an Android developer.

Android Studio

Since Android Studio is the best IDE (Integrated Development Environment) for Android development, this is the first thing you must conquer. For the most part, our content explains Android Studio as we explain development for Android. The same goes for the Layout Editor and the Code Editor.

Android Studio - Layout Editor

The Layout Editor is part of Android Studio. In fact, this is the place where you design the UI (User Interface) for your app. The main parts of an Android project are XML files for designing activities, drawables and other resources, and Java (or Kotlin) files for all the code you will write. Of course, on your path to becoming an Android developer, you will encounter more advanced projects where you will also learn about the structure of a project in detail.

But what I love most is that the Layout Editor uses a drag-and-drop approach, so you can immediately see what you have done. You can place elements on the layout and group them into containers or views, all using just your mouse. This is great for beginners because you can get familiar with the code while already using a development environment. Even for mature developers, visual tools help when setting up screens. In the Layout Editor, you can also simulate different device sizes to check that your design works on all of them.

In addition, we have added a more in-depth look into Layout Editor as well. Check our Layout Editor blog post, learn about it and also find out a little bit about a few hidden features, so that you will develop your activities (screens) with ease.

Android Studio - Emulator and running apps

Because we are creating apps for Android, we want to run them to see how they look, and one of the first things we need for that is an emulator. Android Studio can run an emulator out of the box. It has a lot of features, runs fast and looks nice; all in all, it is a perfect tool to have. Setting one up is like going to the store and picking the best specs for your device, except that in our case the device is a virtual one.

Unfortunately, sometimes you have to prepare your computer to run an emulator. Here is an extended manual on how to configure hardware acceleration for an emulator or read our blog post on how to run an emulator.

You can also run an app on your mobile phone. This is a good approach as well, because you can check the touch and feel on a real device as you develop your app.

Here is an explanation on how to install drivers to run an app on your device.

Android SDK and API version

This is really a broad topic, since it covers everything Android is about and all its functionality. But as a beginner learning how to become an Android developer, all you need to know is where to look for a new version, how to install it, and a few pointers on which one to use.

A simple explanation would be this. The SDK (Software Development Kit) is a bunch of tools, documentation, examples and code for us developers to use. The API (Application Programming Interface) is the actual collection of Android functionalities, from showing screens, pop-ups, notifications… everything. Here is a hint: use API level 21 or higher, since this covers enough devices to work with. And for a new project, always aim for the last three major versions.

UI Components and UX

User interface components tie your app together: all the code, knowledge and data will be presented with some form of UI. UI means “user interface”, and this is what a user sees. UX means “user experience”, and this is the flow through the app: interactions, reactions to a user’s input, the whole story that happens within the app.

Of course, the best way to get familiar with UI components is our Calculator course, since it explains most of them in great detail.

We have a few more blog posts explaining other UI components, such as spinners and radio buttons, and our registration form blog post shows how to perform email checks.

Storing Data locally within the app

Storing data is essential for every app. It can range from something as simple as storing an email for a login to a full-blown database with tables, relations, filters… We think you should take this topic step by step and learn a little about what data is, what entities are, and where to store them.

The first topic we cover is storing data with SharedPreferences, in a blog post you should read. The next step is storing even more data with Room, a great ORM (object-relational mapping) library that is part of Android Jetpack. ORM lets us work with data as classes in our code, which is a more modern approach, but Room still allows us to use SQL statements if we want to.
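SharedPreferences only exists on Android, so as a stand-in, the sketch below uses `java.util.Properties` to show the same idea: a small key-value store that survives being written out and read back. `LoginPrefs` and the `login_email` key are hypothetical names chosen for this example, not part of any Android API.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.Writer;
import java.util.Properties;

// Sketch of key-value storage, with java.util.Properties standing in for
// SharedPreferences so it runs on a plain JVM.
final class LoginPrefs {
    private static final String KEY_EMAIL = "login_email"; // hypothetical key
    private final Properties props = new Properties();

    void saveEmail(String email) {
        props.setProperty(KEY_EMAIL, email);
    }

    /** Returns the stored email, or the fallback if nothing was saved yet. */
    String loadEmail(String fallback) {
        return props.getProperty(KEY_EMAIL, fallback);
    }

    /** Writes all entries out; on a device this would be a private app file. */
    void writeTo(Writer out) throws IOException {
        props.store(out, "login preferences");
    }

    /** Restores entries previously written with writeTo. */
    void readFrom(Reader in) throws IOException {
        props.load(in);
    }
}
```

On Android, the equivalent calls are `getSharedPreferences(name, Context.MODE_PRIVATE)`, then `edit().putString(key, value).apply()` to save and `getString(key, defaultValue)` to read.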

Calling REST APIs

Most mature apps use some kind of REST (Representational State Transfer) calls. For example, if an app wants to know the current temperature in some city, it would use a REST API to get the data. If we want to log in to a social network and get our list of friends, we would also use a REST API. This topic is strongly linked with storing data, since we are still storing and reading data, just on a server instead of within the app. In short, the main thing to learn is how to transform data from a REST API into local data structures and classes. Be sure to also learn how to react when an error occurs and how to send data to a server.
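The core skill described above, turning a server response into local classes and reacting to errors, can be sketched without any networking. This example assumes a hypothetical weather endpoint that answers with the temperature in Celsius as plain text (for example `21.5`); `Temperature`, `WeatherResult` and `WeatherMapper` are illustrative names, not a real library.

```java
// Sketch: map a REST response into local classes and report errors instead
// of crashing. The endpoint and its plain-text format are assumptions.
final class Temperature {
    final String city;
    final double celsius;

    Temperature(String city, double celsius) {
        this.city = city;
        this.celsius = celsius;
    }
}

final class WeatherResult {
    final Temperature temperature; // non-null on success
    final String error;            // non-null on failure

    private WeatherResult(Temperature temperature, String error) {
        this.temperature = temperature;
        this.error = error;
    }

    static WeatherResult success(Temperature temperature) {
        return new WeatherResult(temperature, null);
    }

    static WeatherResult failure(String error) {
        return new WeatherResult(null, error);
    }

    boolean isSuccess() {
        return temperature != null;
    }
}

final class WeatherMapper {
    private WeatherMapper() {}

    /** Turns an HTTP status code and response body into a WeatherResult. */
    static WeatherResult fromResponse(String city, int statusCode, String body) {
        // Any non-2xx status becomes an error the UI can show to the user.
        if (statusCode < 200 || statusCode >= 300) {
            return WeatherResult.failure("HTTP " + statusCode);
        }
        if (body == null || body.trim().isEmpty()) {
            return WeatherResult.failure("Empty response");
        }
        try {
            return WeatherResult.success(new Temperature(city, Double.parseDouble(body.trim())));
        } catch (NumberFormatException e) {
            // The server answered, but not in the format we expected.
            return WeatherResult.failure("Unexpected response: " + body);
        }
    }
}
```

In a real app, the status code and body would come from an HTTP client, and a JSON body would usually be parsed with a library such as Gson or Moshi. Keeping the mapping in one function makes it easy to test without a network.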

Material design, styling and themes

If you are thinking about how to become an Android developer, you have to learn how to create a nice app easily. For beginners, it is best to use material design and the Android Support library, which can help us make an app that looks great even without a designer. For mature apps we will still use a designer to prepare the UI and UX, but on Android the result will be based on some variant of material design anyway. In short, learning material design is essential on your path to becoming an Android developer.

Java or Kotlin and object-oriented programming

Both Java and Kotlin are excellent languages to start learning. Java has more structure to it, while Kotlin is more modern in style, and both are good choices. Java is the right choice if you want to broaden your skills with back-end development, since Java back-end developers are in very high demand. Kotlin is more concise: you see less code, and more things are done for you.

We still teach Java, since it can be used elsewhere as well (on the back end, for example), but Kotlin is a great choice too. Whichever language we choose, we will have to learn its basics. In our courses, you will learn about primitive data types, strings, control structures (if, switch…), methods and, of course, all about classes (inheritance, interfaces and abstract classes). Arrays, sets, maps and other extended data types help us when working with a lot of data, for example a list of people, a list of cars or TODO tasks.
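To make the list of basics above more concrete, here is a small Java sketch that touches most of them: a primitive and a `String` field, an interface, an abstract class with two subclasses, a `switch`, a loop, and `List`/`Map` collections. `Describable`, `Vehicle`, `Car`, `Bike` and `Garage` are hypothetical names made up for this example.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Interface: a contract any class can promise to fulfil.
interface Describable {
    String describe();
}

// Abstract class: shared state and behavior, with one method left to subclasses.
abstract class Vehicle implements Describable {
    private final String name; // String field
    private final int wheels;  // primitive int field

    Vehicle(String name, int wheels) {
        this.name = name;
        this.wheels = wheels;
    }

    int getWheels() { return wheels; }

    // Each subclass decides its own top speed.
    abstract int maxSpeedKmh();

    @Override
    public String describe() {
        return name + " (" + wheels + " wheels, up to " + maxSpeedKmh() + " km/h)";
    }
}

// Inheritance: concrete subclasses fill in the abstract method.
final class Car extends Vehicle {
    Car(String name) { super(name, 4); }
    @Override int maxSpeedKmh() { return 180; }
}

final class Bike extends Vehicle {
    Bike(String name) { super(name, 2); }
    @Override int maxSpeedKmh() { return 30; }
}

final class Garage {
    private final List<Vehicle> vehicles = new ArrayList<>();

    void add(Vehicle vehicle) { vehicles.add(vehicle); }

    /** Counts vehicles per wheel count using a loop and a Map. */
    Map<Integer, Integer> countByWheels() {
        Map<Integer, Integer> counts = new HashMap<>();
        for (Vehicle vehicle : vehicles) {
            counts.merge(vehicle.getWheels(), 1, Integer::sum);
        }
        return counts;
    }

    /** Labels a vehicle with a switch on its wheel count. */
    static String category(Vehicle vehicle) {
        switch (vehicle.getWheels()) {
            case 2: return "two-wheeler";
            case 4: return "car";
            default: return "other";
        }
    }
}
```

For example, a garage holding two `Car`s and one `Bike` reports two vehicles with four wheels and one with two.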

Why is it important to dive deep into the essentials? A good foundation in the essentials and Java basics will help you develop more complex applications while keeping them simple and organised at the same time.

Debugging

Debugging is an important part of programming, since a lot of unexpected behavior will happen in our apps, and we need a way to figure out what went wrong, what caused an error, and which code produced it. When you are learning how to become an Android developer this might look like a tough topic, but at its core it will help you learn more advanced topics with ease, since you will know how to track what the application is doing behind the scenes.
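One everyday debugging skill is reading a stack trace to find which of your own methods produced an error. The Java sketch below shows the idea by inspecting an exception's frames directly; `DebugDemo`, `parseAge` and `tryParse` are hypothetical names, and `parseAge` fails on purpose when given non-numeric input.

```java
// Sketch: use an exception's stack trace to locate the code that failed.
// In an Android app you would usually read the same trace in Logcat instead.
final class DebugDemo {
    private DebugDemo() {}

    static int parseAge(String input) {
        return Integer.parseInt(input); // throws NumberFormatException on bad input
    }

    /** Returns "ok:<age>", or names the first DebugDemo method found in the failing stack. */
    static String tryParse(String input) {
        try {
            return "ok:" + parseAge(input);
        } catch (NumberFormatException e) {
            // Frames run from where the exception was thrown outwards to the caller,
            // so the first frame from our own class is the closest code we wrote.
            for (StackTraceElement frame : e.getStackTrace()) {
                if (frame.getClassName().equals(DebugDemo.class.getName())) {
                    return "failed in " + frame.getMethodName();
                }
            }
            return "failed";
        }
    }
}
```

On Android, logging the exception with `Log.e(TAG, "message", e)` prints the full trace to Logcat, where you can click through to the failing line in Android Studio.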

Making your app ready for Google Play

Equally important as all the skills mentioned above is opening your app to users all around the globe. After all, this is one of the main motivations for building apps in the first place. We need to know how to sign our apps, how to upload them to Google Play, what kind of text descriptions we need, which screenshots we will show in the store... We need icons, designs and texts. All in all, this knowledge will come in handy a lot. First, it will help you distribute an app to the first test users on the alpha track, then move to a broader audience with beta, and finally make the public release.

How to become an Android developer - Conclusion

This is just a short list of the topics that we here at CodeBrainer think you need to learn to become an Android developer. We all want you to be a great developer and make us proud. We will add advanced topics as we go; they will make you stand out from the crowd and give you comprehensive knowledge about Android. In the long run, what you need is experience, and that means practice, and then more practice.

Source: https://www.codebrainer.com/blog/what-to-learn-checklist-for-android-beginners



The Training Commission

After the end of a second ultraviolent American civil war, after we’ve placed the state under the guidance of automated systems, well, there’s inevitably going to be a Smithsonian exhibit. Ingrid Burrington and Brendan Byrne’s brilliant new speculative fiction newsletter (which received support from the Mozilla Foundation, and the first installment of which we’re thrilled to share here today) collects the dispatches of an architecture critic with personal ties to the bloody conflict who is assigned to review the museum’s new Reconciliation Wing.

The authors explain: “The Training Commission is a speculative fiction newsletter about the compromises and consequences of applying technological solutionism to collective trauma. The USA, still reeling from a civil war colloquially referred to as the Shitstorm, has adopted an algorithmic society to free the nation from the pain of governing itself.” It’s also a hell of a story. There will be six installments in all, arriving weekly—subscribe here to receive the next five direct, as they say, to your inbox. Enjoy. -the ed

From: Aoife T
Subject: re: This is a bad idea
Date: May 11, 2038 3:49 PM EDT
To: Ellen Leavitt

I understand why you think that would work, Ellen, but aside from generally having no interest in putting my personal life on display like that, I really don’t think me writing a tearjerker op-ed about a traumatizing exhibition display is going to get the Smithsonian to change their minds so much as convince them that the controversy will draw crowds. I’d rather deal with them through backchannels with my mom and sister on board, try to make this all go away quietly before the museum opens.

Thanks for the Kilfe token, I just saw it come through on the ledger. I’ll be running the runnable parts of the draft in my newsletter, I guess. Sorry again to let you down on this. I might have a beat on something interesting soon–too early to say but it means I think I’ll be down in DC for at least another week.

From: Aoife T
Subject: Some Things Don’t Belong In A Museum
Date: May 12, 2038 4:30:58 PM EDT

Apologies that it’s been a while since the last one of these. I’ve been busy, not successful busy, mostly pitching pieces in my new/old specialty. You’d think a contemporary moment so focused on rebuilding America would give some kind of shit about architecture, but uhm, nope.

What follows began as a review of the new Reconciliation Wing of the Smithsonian which a Very Kind Editor cherry-picked me for. It’s good to get paid to visit my hometown because, as my regular readers know, I will otherwise avoid the District like the sweaty American bog it is. I was apparently desperate enough for work to imagine the Reconciliation Wing might not feature an intersection with my own personal history, which, of course, was deeply delusional, and I took myself out of the game in a semi-dramatic fashion. Suffice to say, currently I’m fine but couldn’t really file something this incomplete so I’m sharing what parts of it could be salvaged here.

As seen from the National Mall ferry, the finally completed Reconciliation Wing of the Smithsonian American History Museum is a major architectural interruption in the capital’s low-lying landscape of retrofitted and elevated 20th-century buildings–which is ironic, considering how much attention went to making it seamlessly connect to the natural systems of the Anacostia canals. The first new construction project on the Mall since the creation of the DC canal system, the Reconciliation Wing has been the subject of curiosity not only as an opening move in historicizing the National Shitstorm (ahem, The Interstate Conflict) but also as a formal progression in post-Capitol architecture. (Unless, of course, you believe that the bare-chested, perpetually shouting hologram of Alex Jones in the rear sculpture garden of the Newseum cannot be topped.)

The wing’s designer, Kay Mangakāhia, was a controversial selection from the Smithsonian and Ashburn Institute’s open call for submissions. An intern at Bjarke Ingels Group at the time, Mangakāhia was notable not only for her age (at twenty-two, she was barely ten at the time the Ashburn Accords were even signed) but her permaculture-infused proposal. The mycelium buttresses and living fungal structures of the Reconciliation Wing are now in high demand, but it took Mangakāhia’s persistence and the algorithm’s faith in her design to reach this plateau. The thriving structure’s delicate complexity and environmental pragmatism reflect the oft-quoted line from Mangakāhia’s original proposal: “survival without poetics is a carceral existence.”

One can’t say such an attitude pervades the exhibits in the Reconciliation Wing. Upon entry, a flickering series of Extremely Relatable Human Faces projected on black plinths greet visitors. The visages display a fairly narrow scale of emotions between Makes You Think and Slight but Telling Emotional Pain but somehow they manage to be all very specific. No context is provided. Given the purpose of the wing, one might suspect that these are some of the IRL victims of what the museum seems to have decided we’re calling “The First Algorithmic Society.”

Only upon arriving at a small, dim aperture is context provided: the portraits are all visuals generated by AIs developed pre-Shitstorm, let loose to slither upstream into visitors’ phones. They cull contact info, pictures, bank account details, etc., put together a monstermash of the type of person you’re most likely to have an empathetic reaction to, then plug said persona into the loop, along with the last fifty or so visitors’.

This led to the other journalists in attendance performing variations on the exhausted sigh, since recent years have seen around half a dozen gallery shows in NYC using some version of this shock tactic (though, to be fair, rarely with the technical success of the Reconciliation Wing). While this installation is no doubt supposed to primarily remind visitors of the prevailing ease with which corporations accessed our pocket technological unconsciousnesses pre-Ashburn, it also serves the dual purpose of showing how vulnerable Palantir’s National Firewall is to even ridiculously outdated tech. Hence why the feds keep running that Don’t Bring Your Phone to China/Don’t Actually Go to China Ever awareness campaign. (It shouldn’t surprise you that Vera’s written about this. Read her shit!)

Next is a long, narrow room skirted on the left by an unbroken screen which features a 1990s techno-thriller code waterfall with, again, no context. On the right runs a series of pictures, videos and artifacts designed to shock viewers into clusterbomb memories–the remnants of a Google bus retrofitted and weaponized into a battering ram, that famous photo of the National Guard standing down at one of the many early BLM standoffs (everyone remembers the photo, never the standoff), a yellowing final print edition of the Washington Post.

To be fair, the Smithsonian’s only getting a fraction of the archival materials collected by the Ashburn Institute as part of the truth and reconciliation process. (This controversy–the splintering of the archive and intra-federal agency squabbles over it–does not get a mention in the exhibition.) Of course they went with the most bombastic acquisitions. But for all the attempted sensory overload, the wall text and captions are jarringly milquetoast, acquiescing to the kind of both-sides-ism that heavily aided the collapse of consensus truths in the first place. I wondered what kind of exhibit might have emerged had the Smithsonian received the full archives of the Training Commission–side note, has anyone ever actually referred to it as the Ashburn Truth and Reconciliation Council For A New American Consensus outside of official documents? Even Darcy Lawson called it the TC in her fucking victory lap TED Talk last year. When the director of the Ashburn Institute has embraced a term originally coined and deployed by critics of the project it seems like it might be time to drop the formalities.

Presumably, the TC is at least acknowledged in the exhibition. Considering that it enabled UBI, closed (almost) every prison in the country, and effectively automated the office of the Presidency out of existence, it would have to be. But I didn’t get that far.

(Here endeth the non-article.)