#openshift architecture

Cloud-Native Development in the USA: A Comprehensive Guide

Introduction

Cloud-native development is transforming how businesses in the USA build, deploy, and scale applications. By leveraging cloud infrastructure, microservices, containers, and DevOps, organizations can enhance agility, improve scalability, and drive innovation.

As cloud computing adoption grows, cloud-native development has become a crucial strategy for enterprises looking to optimize performance and reduce infrastructure costs. In this guide, we’ll explore the fundamentals, benefits, key technologies, best practices, top service providers, industry impact, and future trends of cloud-native development in the USA.

What is Cloud-Native Development?

Cloud-native development refers to designing, building, and deploying applications optimized for cloud environments. Unlike traditional monolithic applications, cloud-native solutions utilize a microservices architecture, containerization, and continuous integration/continuous deployment (CI/CD) pipelines for faster and more efficient software delivery.

Key Benefits of Cloud-Native Development

1. Scalability

Cloud-native applications can dynamically scale based on demand, ensuring optimal performance without unnecessary resource consumption.
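As a rough illustration of demand-based scaling, the sketch below applies the replica formula documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function name, the default bounds, and the sample numbers are assumptions for illustration, and the real autoscaler also applies a tolerance band and stabilization windows that are left out here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Return ceil(current_replicas * current_metric / target_metric),
    clamped to the range [min_replicas, max_replicas]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 pods at 90% average CPU with a 60% target: scale out.
print(desired_replicas(4, 90.0, 60.0))
# 4 pods at 20% average CPU with a 60% target: scale in.
print(desired_replicas(4, 20.0, 60.0))
```

The clamp to a minimum and maximum mirrors how an autoscaler is normally bounded so that a metric spike cannot request unlimited capacity.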

2. Agility & Faster Deployment

By leveraging DevOps and CI/CD pipelines, cloud-native development accelerates application releases, reducing time-to-market.

3. Cost Efficiency

Organizations pay only for the cloud resources they use, reducing the need for expensive on-premise infrastructure.

4. Resilience & High Availability

Cloud-native applications are designed for fault tolerance, ensuring minimal downtime and automatic recovery.
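One common building block for this kind of fault tolerance is retrying a failed call with exponential backoff. The sketch below is a minimal, generic version; `call_with_retries` and the `flaky` dependency are hypothetical names, and production code would usually add jitter and retry only on errors known to be transient.

```python
import time


def call_with_retries(operation, attempts=4, base_delay=0.1, sleep=time.sleep):
    """Call operation(); after a failure wait base_delay * 2**n and retry.

    The last failure is re-raised once all attempts are used up.
    """
    for attempt in range(attempts):
        try:
            return operation()
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)


# Hypothetical dependency that fails twice and then recovers.
calls = {"n": 0}


def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary failure")
    return "ok"


# The sleep function is injected so the demo does not actually wait.
print(call_with_retries(flaky, sleep=lambda seconds: None))
```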

5. Improved Security

Built-in cloud security features, automated compliance checks, and container isolation enhance application security.

Key Technologies in Cloud-Native Development

1. Microservices Architecture

Microservices break applications into smaller, independent services that communicate via APIs, improving maintainability and scalability.

2. Containers & Kubernetes

Docker packages applications into portable containers, and Kubernetes orchestrates those containers, making application deployment consistent across cloud environments.

3. Serverless Computing

Platforms like AWS Lambda, Azure Functions, and Google Cloud Functions eliminate the need for managing infrastructure by running code in response to events.
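To make the event-driven model concrete, here is a minimal sketch of a function in the `(event, context)` shape that AWS Lambda uses for Python handlers. The `name` field in the event is a hypothetical input, and the `statusCode`/`body` return shape assumes the function sits behind an API Gateway proxy integration.

```python
import json


def handler(event, context):
    """Handle one event; the platform decides when and where this runs."""
    # "name" is a hypothetical field, not part of any AWS event schema.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"hello, {name}"}),
    }


# Local invocation for testing; this handler does not use the context object.
print(handler({"name": "openshift"}, None))
```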

4. DevOps & CI/CD

Automated build, test, and deployment processes streamline software development, ensuring rapid and reliable releases.

5. API-First Development

APIs enable seamless integration between services, facilitating interoperability across cloud environments.

Best Practices for Cloud-Native Development

1. Adopt a DevOps Culture

Encourage collaboration between development and operations teams to ensure efficient workflows.

2. Implement Infrastructure as Code (IaC)

Tools like Terraform and AWS CloudFormation help automate infrastructure provisioning and management.
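As a small illustration of infrastructure expressed as data, the sketch below builds a CloudFormation template for a single S3 bucket and prints it as JSON, which CloudFormation accepts alongside YAML. The helper name and the logical ID `ArtifactBucket` are assumptions made for the example.

```python
import json


def bucket_template(logical_id: str, versioned: bool = True) -> dict:
    """Build a minimal CloudFormation template containing one S3 bucket."""
    resource = {"Type": "AWS::S3::Bucket", "Properties": {}}
    if versioned:
        resource["Properties"]["VersioningConfiguration"] = {"Status": "Enabled"}
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": {logical_id: resource},
    }


# "ArtifactBucket" is a hypothetical logical ID.
print(json.dumps(bucket_template("ArtifactBucket"), indent=2))
```

Because the template is generated from code and can be kept in version control, the same definition can be reviewed, diffed, and reapplied across environments.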

3. Use Observability & Monitoring

Employ logging, monitoring, and tracing solutions like Prometheus, Grafana, and ELK Stack to gain insights into application performance.
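For a sense of what a monitoring system like Prometheus actually scrapes, the sketch below renders a counter in the Prometheus text exposition format. The metric name and labels are hypothetical, label-value escaping is omitted, and a real service would normally use an official client library rather than formatting the text by hand.

```python
def render_counter(name, help_text, samples):
    """Render a counter and its labelled samples as Prometheus text format."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for labels, value in samples:
        label_str = ",".join(f'{key}="{val}"' for key, val in sorted(labels.items()))
        lines.append(f"{name}{{{label_str}}} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical request counter split by method and status code.
print(render_counter(
    "http_requests_total",
    "Total HTTP requests handled.",
    [({"method": "GET", "status": "200"}, 42),
     ({"method": "POST", "status": "500"}, 3)],
))
```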

4. Optimize for Security

Embed security best practices in the development lifecycle, using tools like Snyk, Aqua Security, and Prisma Cloud.

5. Focus on Automation

Automate testing, deployments, and scaling to improve efficiency and reduce human error.

Top Cloud-Native Development Service Providers in the USA

1. AWS Cloud-Native Services

Amazon Web Services offers a comprehensive suite of cloud-native tools, including AWS Lambda, ECS, EKS, and API Gateway.

2. Microsoft Azure

Azure’s cloud-native services include Azure Kubernetes Service (AKS), Azure Functions, and DevOps tools.

3. Google Cloud Platform (GCP)

GCP provides Kubernetes Engine (GKE), Cloud Run, and Anthos for cloud-native development.

4. IBM Cloud & Red Hat OpenShift

IBM Cloud and OpenShift focus on hybrid cloud-native solutions for enterprises.

5. Accenture Cloud-First

Accenture helps businesses adopt cloud-native strategies with AI-driven automation.

6. ThoughtWorks

ThoughtWorks specializes in agile cloud-native transformation and DevOps consulting.

Industry Impact of Cloud-Native Development in the USA

1. Financial Services

Banks and fintech companies use cloud-native applications to enhance security, compliance, and real-time data processing.

2. Healthcare

Cloud-native solutions improve patient data accessibility, enable telemedicine, and support AI-driven diagnostics.

3. E-commerce & Retail

Retailers leverage cloud-native technologies to optimize supply chain management and enhance customer experiences.

4. Media & Entertainment

Streaming services utilize cloud-native development for scalable content delivery and personalization.

Future Trends in Cloud-Native Development

1. Multi-Cloud & Hybrid Cloud Adoption

Businesses will increasingly adopt multi-cloud and hybrid cloud strategies for flexibility and risk mitigation.

2. AI & Machine Learning Integration

AI-driven automation will enhance DevOps workflows and predictive analytics in cloud-native applications.

3. Edge Computing

Processing data closer to the source will improve performance and reduce latency for cloud-native applications.

4. Enhanced Security Measures

Zero-trust security models and AI-driven threat detection will become integral to cloud-native architectures.

Conclusion

Cloud-native development is reshaping how businesses in the USA innovate, scale, and optimize operations. By leveraging microservices, containers, DevOps, and automation, organizations can achieve agility, cost-efficiency, and resilience. As the cloud-native ecosystem continues to evolve, staying ahead of trends and adopting best practices will be essential for businesses aiming to thrive in the digital era.

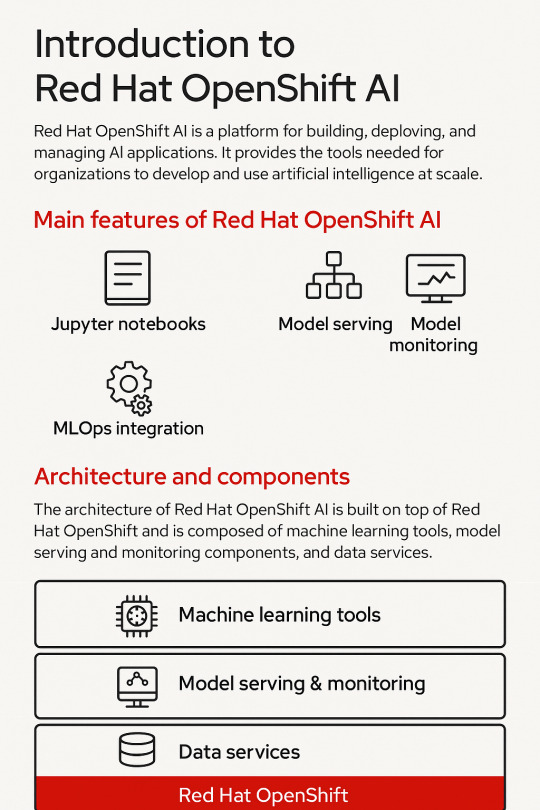

Introduction to Red Hat OpenShift AI: Features, Architecture & Components

In today’s data-driven world, organizations need a scalable, secure, and flexible platform to build, deploy, and manage artificial intelligence (AI) and machine learning (ML) models. Red Hat OpenShift AI is built precisely for that. It provides a consistent, Kubernetes-native platform for MLOps, integrating open-source tools, enterprise-grade support, and cloud-native flexibility.

Let’s break down the key features, architecture, and components that make OpenShift AI a powerful platform for AI innovation.

🔍 What is Red Hat OpenShift AI?

Red Hat OpenShift AI (formerly known as Red Hat OpenShift Data Science) is a fully supported, enterprise-ready platform that brings together tools for data scientists, ML engineers, and DevOps teams. It enables rapid model development, training, and deployment on the Red Hat OpenShift Container Platform.

🚀 Key Features of OpenShift AI

1. Built for MLOps

OpenShift AI supports the entire ML lifecycle—from experimentation to deployment—within a consistent, containerized environment.

2. Integrated Jupyter Notebooks

Data scientists can use Jupyter notebooks pre-integrated into the platform, allowing quick experimentation with data and models.

3. Model Training and Serving

Use Kubernetes to scale model training jobs and deploy inference services using tools like KServe and Seldon Core.
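As an illustration of what deploying an inference service with KServe can look like, the sketch below builds an `InferenceService` manifest as a Python dictionary and prints it as JSON, which `kubectl` and `oc` accept as well as YAML. The service name, namespace, model format, and storage URI are hypothetical, and the field layout follows the `serving.kserve.io/v1beta1` API as I understand it, so check it against the KServe version installed on your cluster.

```python
import json


def inference_service(name, namespace, model_format, storage_uri):
    """Build a minimal KServe InferenceService manifest."""
    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "predictor": {
                "model": {
                    "modelFormat": {"name": model_format},
                    "storageUri": storage_uri,
                }
            }
        },
    }


# All four argument values are hypothetical examples.
manifest = inference_service(
    "fraud-detector", "ml-demo", "sklearn", "s3://example-bucket/models/fraud"
)
print(json.dumps(manifest, indent=2))
```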

4. Security and Governance

OpenShift AI integrates enterprise-grade security, role-based access controls (RBAC), and policy enforcement using OpenShift’s built-in features.

5. Support for Open Source Tools

OpenShift AI integrates with open-source frameworks and formats such as TensorFlow, PyTorch, scikit-learn, and ONNX for maximum flexibility.

6. Hybrid and Multicloud Ready

You can run OpenShift AI on any OpenShift cluster—on-premise or across cloud providers like AWS, Azure, and GCP.

🧠 OpenShift AI Architecture Overview

Red Hat OpenShift AI builds upon OpenShift’s robust Kubernetes platform, adding specific components to support the AI/ML workflows. The architecture broadly consists of:

1. User Interface Layer

JupyterHub: Multi-user Jupyter notebook support.

Dashboard: UI for managing projects, models, and pipelines.

2. Model Development Layer

Notebooks: Containerized environments with GPU/CPU options.

Data Connectors: Access to S3, Ceph, or other object storage for datasets.

3. Training and Pipeline Layer

Open Data Hub and Kubeflow Pipelines: Automate ML workflows.

Ray, MPI, and Horovod: For distributed training jobs.

4. Inference Layer

KServe/Seldon: Model serving at scale with REST and gRPC endpoints.

Model Monitoring: Metrics and performance tracking for live models.

5. Storage and Resource Management

Ceph / OpenShift Data Foundation: Persistent storage for model artifacts and datasets.

GPU Scheduling and Node Management: Leverages OpenShift for optimized hardware utilization.

🧩 Core Components of OpenShift AI

| Component | Description |
| --- | --- |
| JupyterHub | Web-based development interface for notebooks |
| KServe/Seldon | Inference serving engines with auto-scaling |
| Open Data Hub | ML platform tools including Kafka, Spark, and more |
| Kubeflow Pipelines | Workflow orchestration for training pipelines |
| ModelMesh | Scalable, multi-model serving |
| Prometheus + Grafana | Monitoring and dashboarding for models and infrastructure |
| OpenShift Pipelines | CI/CD for ML workflows using Tekton |

🌎 Use Cases

Financial Services: Fraud detection using real-time ML models

Healthcare: Predictive diagnostics and patient risk models

Retail: Personalized recommendations powered by AI

Manufacturing: Predictive maintenance and quality control

🏁 Final Thoughts

Red Hat OpenShift AI brings together the best of Kubernetes, open-source innovation, and enterprise-level security to enable real-world AI at scale. Whether you’re building a simple classifier or deploying a complex deep learning pipeline, OpenShift AI provides a unified, scalable, and production-grade platform.

For more information, follow Hawkstack Technologies.

Service Mesh Federation Across Clusters in OpenShift: Unlocking True Multi-Cluster Microservices

In today’s cloud-native world, enterprises are scaling beyond a single Kubernetes cluster. But with multiple OpenShift clusters comes the challenge of cross-cluster communication, policy enforcement, traffic control, and observability.

That’s where Service Mesh Federation becomes a game-changer.

🚩 What Is Service Mesh Federation?

Service Mesh Federation allows two or more OpenShift Service Mesh environments (powered by Istio) to share services, policies, and trust boundaries while maintaining cluster autonomy.

It enables microservices deployed across clusters to discover and communicate with each other securely, transparently, and intelligently.

🏗️ Why Federation Matters in Multi-Cluster OpenShift Deployments

OpenShift is increasingly deployed in hybrid or multi-cluster environments for:

🔄 High availability and disaster recovery

🌍 Multi-region or edge computing strategies

🧪 Environment separation (Dev / QA / Prod)

🛡️ Regulatory and data residency compliance

Federation makes service-to-service communication seamless and secure across these environments.

⚙️ How Federation Works in OpenShift Service Mesh

Here’s how it typically works:

Two Mesh Control Planes (one per cluster) are configured to trust each other's trust domains.

In OpenShift Service Mesh 2.x, a ServiceMeshPeer resource defines each peer mesh, and ExportedServiceSet and ImportedServiceSet resources control which services are shared.

mTLS encryption ensures secure service communication.

Istio Gateways and Envoy sidecars handle traffic routing across clusters.

Kiali and Jaeger provide unified observability.
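The sharing step above can be sketched as a pair of manifests. In OpenShift Service Mesh 2.x the federation resources are named `ExportedServiceSet` and `ImportedServiceSet` (API group `federation.maistra.io/v1`); the sketch below builds one of each as Python dictionaries and prints them as JSON. All mesh, namespace, and service names are hypothetical, and the field names reflect my reading of that API, so verify them against the Red Hat documentation for your Service Mesh version.

```python
import json


def exported_service_set(peer_mesh, control_plane_ns, service_ns, service_name):
    """Export one service to the named peer mesh (created on the exporting side)."""
    return {
        "apiVersion": "federation.maistra.io/v1",
        "kind": "ExportedServiceSet",
        "metadata": {"name": peer_mesh, "namespace": control_plane_ns},
        "spec": {"exportRules": [{
            "type": "NameSelector",
            "nameSelector": {"namespace": service_ns, "name": service_name},
        }]},
    }


def imported_service_set(peer_mesh, control_plane_ns, service_ns, service_name):
    """Import one service from the named peer mesh (created on the importing side)."""
    return {
        "apiVersion": "federation.maistra.io/v1",
        "kind": "ImportedServiceSet",
        "metadata": {"name": peer_mesh, "namespace": control_plane_ns},
        "spec": {"importRules": [{
            "type": "NameSelector",
            "importAsLocal": False,
            "nameSelector": {"namespace": service_ns, "name": service_name},
        }]},
    }


# Hypothetical names: cluster B exports "backend", cluster A imports it.
print(json.dumps(exported_service_set("mesh-a", "mesh-b-system", "shop", "backend"), indent=2))
print(json.dumps(imported_service_set("mesh-b", "mesh-a-system", "shop", "backend"), indent=2))
```

Each resource is named after the peer mesh it refers to, which is why the export on cluster B is called `mesh-a` and the import on cluster A is called `mesh-b`.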

🔐 Security First: Trust Domains & Identity

Service Mesh Federation uses SPIFFE IDs and trust domains to ensure that only authenticated and authorized services can communicate across clusters. This aligns with Zero Trust security models.
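To show what a trust-domain check amounts to, the sketch below parses a SPIFFE ID in the form Istio uses for workloads, `spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>`, and accepts it only if its trust domain is on an allow list. The function names, the trust domains, and the workload identity are hypothetical; in a real mesh the sidecar proxies enforce this during the mTLS handshake rather than application code.

```python
from urllib.parse import urlparse


def parse_spiffe_id(spiffe_id: str) -> dict:
    """Split an Istio-style SPIFFE ID into trust domain, namespace, and service account."""
    parsed = urlparse(spiffe_id)
    parts = parsed.path.strip("/").split("/")
    if (parsed.scheme != "spiffe" or not parsed.netloc
            or len(parts) != 4 or parts[0] != "ns" or parts[2] != "sa"):
        raise ValueError(f"not an Istio-style SPIFFE ID: {spiffe_id!r}")
    return {
        "trust_domain": parsed.netloc,
        "namespace": parts[1],
        "service_account": parts[3],
    }


def is_trusted(spiffe_id: str, trusted_domains: set) -> bool:
    """Return True only when the ID parses and its trust domain is allowed."""
    try:
        return parse_spiffe_id(spiffe_id)["trust_domain"] in trusted_domains
    except ValueError:
        return False


# Hypothetical trust domains for two federated clusters.
trusted = {"cluster-a.example", "cluster-b.example"}
print(parse_spiffe_id("spiffe://cluster-b.example/ns/shop/sa/backend"))
print(is_trusted("spiffe://unknown.example/ns/shop/sa/backend", trusted))
```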

🚀 Use Case: Microservices Split Across Clusters

Imagine you have a frontend service in Cluster A and a backend in Cluster B.

With Federation:

The frontend resolves and connects to the backend as if it's local.

Traffic is encrypted, observable, and policy-driven.

Failovers and retries are automated via Istio rules.
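The retry behaviour mentioned above is typically declared in an Istio `VirtualService`. The sketch below builds one as a Python dictionary and prints it as JSON; the resource name, namespace, host, and retry values are hypothetical, and the hostname under which an imported service appears depends on how the import is configured.

```python
import json


def retrying_virtual_service(name, namespace, host, attempts=3, per_try_timeout="2s"):
    """Build a VirtualService that retries failed requests to one host."""
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "hosts": [host],
            "http": [{
                "route": [{"destination": {"host": host}}],
                "retries": {
                    "attempts": attempts,
                    "perTryTimeout": per_try_timeout,
                    "retryOn": "5xx,connect-failure",
                },
            }],
        },
    }


# Hypothetical backend host as seen from the frontend's mesh.
vs = retrying_virtual_service("backend-retries", "shop", "backend.shop.svc.cluster.local")
print(json.dumps(vs, indent=2))
```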

📊 Federation with Red Hat Advanced Cluster Management (ACM)

When combined with Red Hat ACM, OpenShift administrators get:

Centralized policy control

Unified observability

GitOps-based multi-cluster configurations

ACM simplifies the federation process and provides a single pane of glass for governance.

🧪 Challenges and Considerations

Latency: Federation adds network hops; latency-sensitive apps need testing.

Complexity: Managing multiple meshes needs automation and standardization.

TLS Certificates: Careful handling of CA certificates and rotation is key.

🧰 Getting Started

Red Hat’s documentation provides a detailed guide to implement federation:

Enable multi-mesh support via OpenShift Service Mesh Operator

Configure trust domains and gateways

Define exported and imported services

💡 Final Thoughts

Service Mesh Federation is not just a feature—it’s a strategic enabler for scalable, resilient, and secure application architectures across clusters.

As businesses adopt hybrid cloud and edge computing, federation will become the backbone of reliable microservice connectivity.

👉 Ready to federate your OpenShift Service Mesh?

Let’s talk architecture, trust domains, and production readiness. For more details, visit www.hawkstack.com.

Governance Without Boundaries - CP4D and Red Hat Integration

The rising complexity of hybrid and multi-cloud environments calls for stronger and more unified data governance. When systems operate in isolation, they introduce risks, make compliance harder, and slow down decision-making. As digital ecosystems expand, consistent governance across infrastructure becomes more than a goal; it becomes a necessity. A cohesive strategy helps maintain control as platforms and regions scale together.

IBM Cloud Pak for Data (CP4D), working alongside Red Hat OpenShift, offers a container-based platform that addresses these challenges head-on. That setup makes it easier to scale governance consistently, no matter the environment. With container orchestration in place, governance rules stay enforced regardless of where the data lives. This alignment helps prevent policy drift and supports data integrity in high-compliance sectors.

Watson Knowledge Catalog (WKC) sits at the heart of CP4D’s governance tools, offering features for data discovery, classification, and controlled access. WKC lets teams organize assets, apply consistent metadata, and manage permissions across hybrid or multi-cloud systems. Centralized oversight reduces complexity and brings transparency to how data is used. It also facilitates collaboration by giving teams a shared framework for managing data responsibilities.

Red Hat OpenShift brings added flexibility by letting services like data lineage, cataloging, and enforcement run in modular, scalable containers. These components adjust to different workloads and grow as demand increases. That level of adaptability is key for teams managing dynamic operations across multiple functions. This flexibility ensures governance processes can evolve alongside changing application architectures.

Kubernetes, which powers OpenShift’s orchestration, takes on governance operations through automated workload scheduling and smart resource use. Its automation ensures steady performance while still meeting privacy and audit standards. By handling deployment and scaling behind the scenes, it reduces the burden on IT teams. With fewer manual tasks, organizations can focus more on long-term strategy.

A global business responding to data subject access requests (DSARs) across different jurisdictions can use CP4D to streamline the entire process. Its built-in governance tools support compliant responses under GDPR, CCPA, and other regulatory frameworks. Faster identification and retrieval of relevant data helps reduce the risk of penalties while improving public trust.

CP4D’s tools for discovering and classifying data work across formats, from real-time streams to long-term storage. They help organizations identify sensitive content, apply safeguards, and stay aligned with privacy rules. Automation cuts down on human error and reinforces sound data handling practices. As data volumes grow, these automated capabilities help maintain performance and consistency.

Lineage tracking offers a clear view of how data moves through DevOps workflows and analytics pipelines. By following its origin, transformation, and application, teams can trace issues, confirm quality, and document compliance. CP4D’s built-in tools make it easier to maintain trust in how data is handled across environments.

Tight integration with enterprise identity and access management (IAM) systems strengthens governance through precise controls. It ensures only the right people have access to sensitive data, aligning with internal security frameworks. Centralized identity systems also simplify onboarding, access changes, and audit trails.

When governance tools are built into the data lifecycle from the beginning, compliance becomes part of the system. It is not something added later. This helps avoid retroactive fixes and supports responsible practices from day one. Governance shifts from a task to a foundation of how data is managed.

As regulations multiply and workloads shift, scalable governance is no longer a luxury. It is a requirement. Open, container-driven architectures give organizations the flexibility to meet evolving standards, secure their data, and adapt quickly.

Cloud Native Applications Market Set for Massive Expansion Through 2032

Cloud Native Applications Market was valued at USD 6.49 billion in 2023 and is expected to reach USD 45.71 billion by 2032, growing at a CAGR of 24.29% from 2024-2032.
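The quoted growth rate can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) − 1. The sketch below assumes nine compounding years, from the 2023 base value to 2032; under that assumption the implied rate comes out at roughly 24.2%, close to the quoted 24.29%. The small gap may come from rounding or from how the report defines its base year.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# USD 6.49 billion in 2023 growing to USD 45.71 billion in 2032.
implied = cagr(6.49, 45.71, 2032 - 2023)
print(f"Implied CAGR: {implied:.2%}")
```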

Cloud Native Applications Market is witnessing rapid growth as enterprises accelerate digital transformation through agile, scalable, and containerized solutions. From startups to global corporations, organizations are shifting to cloud-native architectures to drive innovation, optimize performance, and reduce operational complexity. This shift is powered by the adoption of Kubernetes, microservices, DevOps, and continuous delivery frameworks.

U.S. Demand Soars Amid Widespread Digital Modernization Across Sectors

Cloud Native Applications Market is becoming a strategic focus for companies aiming to modernize legacy infrastructure and future-proof their technology stacks. The growing demand for flexibility, faster release cycles, and cost efficiency is pushing developers and IT leaders toward cloud-native ecosystems across various industries.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/6545

Key Market Players:

Google LLC (Google Kubernetes Engine, Firebase)

International Business Machines Corporation (IBM Cloud, IBM Cloud Pak)

Infosys Technologies Private Limited (Infosys Cobalt, Cloud Ecosystem)

Larsen & Toubro Infotech (LTI Cloud, LTI Digital Transformation)

Microsoft Corporation (Azure Kubernetes Service, Azure Functions)

Oracle Corporation (Oracle Cloud Infrastructure, Oracle Autonomous Database)

Red Hat (OpenShift, Ansible Automation Platform)

SAP SE (SAP Business Technology Platform, SAP S/4HANA Cloud)

VMware, Inc. (VMware Tanzu, VMware Cloud on AWS)

Alibaba Cloud (Alibaba Cloud Container Service, Alibaba Cloud Elastic Compute Service)

Apexon (Cloud-Native Solutions, Cloud Application Modernization)

Bacancy Technology (Cloud Development, Cloud-Native Microservices)

Citrix Systems, Inc. (Citrix Workspace, Citrix Cloud)

Harness (Harness Continuous Delivery, Harness Feature Flags)

Cognizant Technology Solutions Corp (Cognizant Cloud, Cognizant Cloud-Native Solutions)

Ekco (Cloud Infrastructure Services, Cloud Application Development)

Huawei Technologies Co. Ltd. (Huawei Cloud, Huawei Cloud Container Engine)

R Systems (R Systems Cloud Platform, R Systems DevOps Solutions)

Scality (Scality RING, Scality Cloud Storage)

Sciencesoft (Cloud-Native Development, Cloud Integration Solutions)

Market Analysis

The Cloud Native Applications Market is being fueled by increasing enterprise need for agility, resilience, and faster deployment cycles. Organizations are adopting cloud-native strategies not just for scalability, but to gain a competitive edge in rapidly evolving digital environments. Cloud-native technologies also help reduce downtime, improve user experiences, and enable continuous innovation.

In the U.S., early cloud adoption and strong developer ecosystems have made it a leading market. Europe follows with strong enterprise demand and compliance-driven cloud modernization initiatives, creating a favorable environment for hybrid and multi-cloud solutions.

Market Trends

Widespread adoption of Kubernetes and serverless architectures

Rise of microservices and containerization for modular development

DevSecOps integration to enhance cloud-native security posture

Increased reliance on CI/CD pipelines to support faster releases

Growth in open-source tools supporting cloud-native ecosystems

Surge in platform engineering and internal developer platforms

Expansion of multi-cloud and hybrid deployment strategies

Market Scope

As organizations demand more resilient, agile, and responsive software environments, the scope of the Cloud Native Applications Market is expanding across industries.

Rapid development and deployment of business-critical applications

Cloud-native adoption across BFSI, healthcare, e-commerce, and manufacturing

Enhanced developer productivity through platform-as-a-service (PaaS) models

Shift toward edge-native and event-driven architectures

Demand for scalable solutions to support AI/ML and big data workloads

Increased use of APIs for service integration and flexibility

These applications are reshaping enterprise IT strategies, driving alignment between development, operations, and business outcomes.

Forecast Outlook

The future of the Cloud Native Applications Market is marked by continuous innovation, fueled by automation, observability, and AI integration. As businesses shift toward platform-centric models and global cloud infrastructure matures, cloud-native frameworks will be at the core of software delivery. The market’s trajectory is strengthened by growing investments in cloud-native platforms by hyperscalers and startups alike, ensuring long-term scalability and business agility.

Access Complete Report: https://www.snsinsider.com/reports/cloud-native-applications-market-6545

Conclusion

Cloud-native isn’t just a technology trend—it’s the foundation of the next-generation enterprise. As companies across the U.S. and Europe seek agility, resilience, and innovation, cloud-native applications offer the strategic advantage needed to outpace disruption. The businesses that embrace this transformation today are setting the standard for tomorrow’s digital success.

About Us:

SNS Insider is a market research and consulting agency that operates globally. Our aim is to give clients the knowledge they need to operate in changing circumstances. To provide current, accurate market data, consumer insights, and opinions that support confident decisions, we use a variety of techniques, including surveys, video talks, and focus groups around the world.

Related Reports:

U.S.A embraces seamless living as Smart Remote Market sees rapid innovation and growth

U.S.A is rapidly adopting virtualization security technologies to safeguard evolving cloud infrastructures

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

Mail us: [email protected]

DevOps Services at CloudMinister Technologies: Tailored Solutions for Scalable Growth

In a business landscape where technology evolves rapidly and customer expectations continue to rise, enterprises can no longer rely on generic IT workflows. Every organization has a distinct set of operational requirements, compliance mandates, infrastructure dependencies, and delivery goals. Recognizing these unique demands, CloudMinister Technologies offers Customized DevOps Services — engineered specifically to match your organization's structure, tools, and objectives.

DevOps is not a one-size-fits-all practice. It thrives on precision, adaptability, and optimization. At CloudMinister Technologies, we provide DevOps solutions that are meticulously tailored to fit your current systems while preparing you for the scale, speed, and security of tomorrow’s digital ecosystem.

Understanding the Need for Customized DevOps

While traditional DevOps practices bring automation and agility into the software delivery cycle, businesses often face challenges when trying to implement generic solutions. Issues such as toolchain misalignment, infrastructure incompatibility, compliance mismatches, and inefficient workflows often emerge, limiting the effectiveness of standard DevOps models.

CloudMinister Technologies bridges these gaps through in-depth discovery, personalized architecture planning, and customized automation flows. Our team of certified DevOps engineers works alongside your developers and operations staff to build systems that work the way your organization works.

Our Customized DevOps Service Offerings

Personalized DevOps Assessment

Every engagement at CloudMinister begins with a thorough analysis of your existing systems and workflows. This includes evaluating:

Development and deployment lifecycles

Existing tools and platforms

Current pain points in collaboration or release processes

Security protocols and compliance requirements

Cloud and on-premise infrastructure configurations

We use this information to design a roadmap that matches your business model, technical environment, and future expansion goals.

Tailored CI/CD Pipeline Development

Continuous Integration and Continuous Deployment (CI/CD) pipelines are essential for accelerating software releases. At CloudMinister, we create CI/CD frameworks that are tailored to your workflow, integrating seamlessly with your repositories, testing tools, and production environments. These pipelines are built to support:

Automated testing at each stage of the build

Secure, multi-environment deployments

Blue-green or canary releases based on your delivery strategy

Integration with tools like GitLab, Jenkins, Bitbucket, and others
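To illustrate the canary option in the list above, the sketch below routes a fixed percentage of users to a new version by hashing a user ID into one of 100 buckets. The function name and user IDs are hypothetical, and in practice the split is usually configured in a load balancer, ingress, or service mesh rather than in application code.

```python
import hashlib


def pick_version(user_id: str, canary_percent: int) -> str:
    """Deterministically send about canary_percent% of users to the canary."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket 0..99
    return "canary" if bucket < canary_percent else "stable"


# Hypothetical user IDs; the same user always lands on the same version.
users = [f"user-{n}" for n in range(1000)]
canary_share = sum(pick_version(u, 10) == "canary" for u in users) / len(users)
print(f"share routed to canary: {canary_share:.1%}")
```

Hashing the user ID, rather than choosing at random per request, keeps each user on one version for the whole rollout, which makes errors easier to attribute to the new release.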

Infrastructure as Code (IaC) Customized for Your Stack

We use leading Infrastructure as Code tools such as Terraform, AWS CloudFormation, and Ansible to help automate infrastructure provisioning. Each deployment is configured based on your stack, environment type, and scalability needs—whether cloud-native, hybrid, or legacy. This ensures repeatable deployments, fewer manual errors, and better control over your resources.

Customized Containerization and Orchestration

Containerization is at the core of modern DevOps practices. Whether your application is built for Docker, Kubernetes, or OpenShift, our team tailors the container ecosystem to suit your service dependencies, traffic patterns, and scalability requirements. From stateless applications to persistent volume management, we ensure your services are optimized for performance and reliability.

Monitoring and Logging Built Around Your Metrics

Monitoring and observability are not just about uptime—they are about capturing the right metrics that define your business’s success. We deploy customized dashboards and logging frameworks using tools like Prometheus, Grafana, Loki, and the ELK stack. These systems are designed to track application behavior, infrastructure health, and business-specific KPIs in real-time.

DevSecOps Tailored for Regulatory Compliance

Security is integrated into every stage of our DevOps pipelines through our DevSecOps methodology. We customize your pipeline to include vulnerability scanning, access control policies, automated compliance reporting, and secret management using tools such as Vault, SonarQube, and Aqua. Whether your business operates in finance, healthcare, or e-commerce, our solutions ensure your system meets all necessary compliance standards like GDPR, HIPAA, or PCI-DSS.

Case Study: Optimizing DevOps for a FinTech Organization

A growing FinTech firm approached CloudMinister Technologies with a need to modernize its software delivery process. Its primary challenges included slow deployment cycles, manual, error-prone processes, and compliance difficulties.

After an in-depth consultation, our team proposed a custom DevOps solution which included:

Building a tailored CI/CD pipeline using GitLab and Jenkins

Automating infrastructure on AWS with Terraform

Implementing Kubernetes for service orchestration

Integrating Vault for secure secret management

Enforcing compliance checks with automated auditing

As a result, the company achieved:

A 70 percent reduction in deployment time

Streamlined compliance reporting with automated logging

Full visibility into release performance

Better collaboration between development and operations teams

This engagement not only improved their operational efficiency but also gave them the confidence to scale rapidly.

Business Benefits of Customized DevOps Solutions

Partnering with CloudMinister Technologies for customized DevOps implementation offers several strategic benefits:

Streamlined deployment processes tailored to your workflow

Reduced operational costs through optimized resource usage

Increased release frequency with lower failure rates

Enhanced collaboration between development, operations, and security teams

Scalable infrastructure with version-controlled configurations

Real-time observability of application and infrastructure health

End-to-end security integration with compliance assurance

Industries We Serve

We provide specialized DevOps services for diverse industries, each with its own regulatory, technological, and operational needs:

Financial Services and FinTech

Healthcare and Life Sciences

Retail and eCommerce

Software as a Service (SaaS) providers

EdTech and eLearning platforms

Media, Gaming, and Entertainment

Each solution is uniquely tailored to meet industry standards, customer expectations, and digital transformation goals.

Why CloudMinister Technologies?

CloudMinister Technologies stands out for its commitment to client-centric innovation. Our strength lies not only in the tools we use, but in how we customize them to empower your business.

What makes us the right DevOps partner:

A decade of experience in DevOps, cloud management, and server infrastructure

Certified engineers with expertise in AWS, Azure, Kubernetes, Docker, and CI/CD platforms

24/7 client support with proactive monitoring and incident response

Transparent engagement models and flexible service packages

Proven track record of successful enterprise DevOps transformations

Frequently Asked Questions

What does customization mean in DevOps services? Customization means aligning tools, pipelines, automation processes, and infrastructure management based on your business’s existing systems, goals, and compliance requirements.

Can your DevOps services be implemented on AWS, Azure, or Google Cloud? Yes, we provide cloud-specific DevOps solutions, including tailored infrastructure management, CI/CD automation, container orchestration, and security configuration.

Do you support hybrid cloud and legacy systems? Absolutely. We create hybrid pipelines that integrate seamlessly with both modern cloud-native platforms and legacy infrastructure.

How long does it take to implement a customized DevOps pipeline? The timeline varies based on the complexity of the environment. Typically, initial deployment starts within two to six weeks post-assessment.

What if we already have a DevOps process in place? We analyze your current DevOps setup and enhance it with better tools, automation, and customized configurations to maximize efficiency and reliability.

Ready to Transform Your Operations?

At CloudMinister Technologies, we don’t just implement DevOps—we tailor it to accelerate your success. Whether you are a startup looking to scale or an enterprise aiming to modernize legacy systems, our experts are here to deliver a DevOps framework that is as unique as your business.

Contact us today to get started with a personalized consultation.

Visit: www.cloudminister.com Email: [email protected]


🌐OpenShift Middleware Services: Transform Your IT with OpenShift Middleware

☁️Hybrid Cloud Flexibility: Easily manage hybrid cloud environments with OpenShift solutions

📦Containerized Deployment: Streamline app deployment with container-based architecture

⚙️Scalability & Automation: Automatically scale applications based on real-time demand

🔐Enterprise-Grade Security: Benefit from robust security features and compliance support

🚀Empower Your Cloud Journey with OpenShift 📧 Email: [email protected] 📞 Phone: +91 86556 16540

To know more about OpenShift Middleware Services, click here 👉 https://simplelogic-it.com/openshift-middleware-services/

Visit our website 👉 https://simplelogic-it.com/

💻 Explore insights on the latest in #technology on our Blog Page 👉 https://simplelogic-it.com/blogs/

🚀 Ready for your next career move? Check out our #careers page for exciting opportunities 👉 https://simplelogic-it.com/careers/

#Middleware#MiddlewareServices#Openshift#Cloud#Flexibility#Deployment#Application#Security#Support#CloudServices#OpenshiftMiddleware#SimpleLogicIT#MakingITSimple#MakeITSimple#SimpleLogic#ITServices#ITConsulting


Hybrid Cloud Application: The Smart Future of Business IT

Introduction

In today’s digital-first environment, businesses are constantly seeking scalable, flexible, and cost-effective solutions to stay competitive. One solution that is gaining rapid traction is the hybrid cloud application model. Combining the best of public and private cloud environments, hybrid cloud applications enable businesses to maximize performance while maintaining control and security.

This 2000-word comprehensive article on hybrid cloud applications explains what they are, why they matter, how they work, their benefits, and how businesses can use them effectively. We also include real-user reviews, expert insights, and FAQs to help guide your cloud journey.

What is a Hybrid Cloud Application?

A hybrid cloud application is a software solution that operates across both public and private cloud environments. It enables data, services, and workflows to move seamlessly between the two, offering flexibility and optimization in terms of cost, performance, and security.

For example, a business might host sensitive customer data in a private cloud while running less critical workloads on a public cloud like AWS, Azure, or Google Cloud Platform.

Key Components of Hybrid Cloud Applications

Public Cloud Services – Scalable and cost-effective compute and storage offered by providers like AWS, Azure, and GCP.

Private Cloud Infrastructure – More secure environments, either on-premises or managed by a third-party.

Middleware/Integration Tools – Platforms that ensure communication and data sharing between cloud environments.

Application Orchestration – Manages application deployment and performance across both clouds.

Why Choose a Hybrid Cloud Application Model?

1. Flexibility

Run workloads where they make the most sense, optimizing both performance and cost.

2. Security and Compliance

Sensitive data can remain in a private cloud to meet regulatory requirements.

3. Scalability

Burst into public cloud resources when private cloud capacity is reached.

4. Business Continuity

Maintain uptime and minimize downtime with distributed architecture.

5. Cost Efficiency

Avoid overprovisioning private infrastructure while still meeting demand spikes.
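The flexibility, compliance, and bursting points above can be pictured as a simple placement rule. The following is only a minimal sketch under stated assumptions: the `Workload` type, the `place` function, and the CPU-core capacity model are hypothetical illustrations, not part of any cloud provider's API.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    sensitive: bool  # e.g. regulated customer data (assumed flag)
    cpu_cores: int


def place(workloads, private_capacity_cores):
    """Assign each workload to 'private' or 'public'.

    Sensitive workloads always stay private. Other workloads use spare
    private capacity first and burst to the public cloud when it runs out.
    """
    placement = {}
    free = private_capacity_cores
    # Sensitive workloads get first claim on private capacity.
    for w in sorted(workloads, key=lambda w: not w.sensitive):
        if w.sensitive:
            if w.cpu_cores > free:
                raise ValueError(f"not enough private capacity for {w.name}")
            placement[w.name] = "private"
            free -= w.cpu_cores
        elif w.cpu_cores <= free:
            placement[w.name] = "private"
            free -= w.cpu_cores
        else:
            placement[w.name] = "public"  # cloud burst
    return placement


workloads = [
    Workload("analytics", sensitive=False, cpu_cores=6),
    Workload("patient-db", sensitive=True, cpu_cores=8),
    Workload("web", sensitive=False, cpu_cores=2),
]
result = place(workloads, private_capacity_cores=10)
print(result)
```

With 10 private cores, the sensitive database stays private, the small web workload fits in the remaining capacity, and the analytics job bursts to the public cloud. A real placement decision would also weigh latency, data gravity, and cost.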

Real-World Use Cases of Hybrid Cloud Applications

1. Healthcare

Protect sensitive patient data in a private cloud while using public cloud resources for analytics and AI.

2. Finance

Securely handle customer transactions and compliance data, while leveraging the cloud for large-scale computations.

3. Retail and E-Commerce

Manage customer interactions and seasonal traffic spikes efficiently.

4. Manufacturing

Enable remote monitoring and IoT integrations across factory units using hybrid cloud applications.

5. Education

Store student records securely while using cloud platforms for learning management systems.

Benefits of Hybrid Cloud Applications

Enhanced Agility

Better Resource Utilization

Reduced Latency

Compliance Made Easier

Risk Mitigation

Simplified Workload Management

Tools and Platforms Supporting Hybrid Cloud

Microsoft Azure Arc – Extends Azure services and management to any infrastructure.

AWS Outposts – Run AWS infrastructure and services on-premises.

Google Anthos – Manage applications across multiple clouds.

VMware Cloud Foundation – Hybrid solution for virtual machines and containers.

Red Hat OpenShift – Kubernetes-based platform for hybrid deployment.

Best Practices for Developing Hybrid Cloud Applications

Design for Portability Use containers and microservices to enable seamless movement between clouds.

Ensure Security Implement zero-trust architectures, encryption, and access control.

Automate and Monitor Use DevOps and continuous monitoring tools to maintain performance and compliance.

Choose the Right Partner Work with experienced providers who understand hybrid cloud deployment strategies.

Regular Testing and Backup Test failover scenarios and ensure robust backup solutions are in place.

Reviews from Industry Professionals

Amrita Singh, Cloud Engineer at FinCloud Solutions:

"Implementing hybrid cloud applications helped us reduce latency by 40% and improve client satisfaction."

John Meadows, CTO at EdTechNext:

"Our LMS platform runs on a hybrid model. We’ve achieved excellent uptime and student experience during peak loads."

Rahul Varma, Data Security Specialist:

"For compliance-heavy environments like finance and healthcare, hybrid cloud is a no-brainer."

Challenges and How to Overcome Them

1. Complex Architecture

Solution: Simplify with orchestration tools and automation.

2. Integration Difficulties

Solution: Use APIs and middleware platforms for seamless data exchange.

3. Cost Overruns

Solution: Use cloud cost optimization tools such as Azure Advisor and AWS Cost Explorer.

4. Security Risks

Solution: Implement multi-layered security protocols and conduct regular audits.

FAQ: Hybrid Cloud Application

Q1: What is the main advantage of a hybrid cloud application?

A: It combines the strengths of public and private clouds for flexibility, scalability, and security.

Q2: Is hybrid cloud suitable for small businesses?

A: Yes, especially those with fluctuating workloads or compliance needs.

Q3: How secure is a hybrid cloud application?

A: When properly configured, hybrid cloud applications can be as secure as traditional setups.

Q4: Can hybrid cloud reduce IT costs?

A: Yes, by paying only for public cloud usage as needed and avoiding overprovisioning of private servers.

Q5: How do you monitor a hybrid cloud application?

A: With cloud management platforms and monitoring tools like Datadog, Splunk, or Prometheus.

Q6: What are the best platforms for hybrid deployment?

A: Azure Arc, Google Anthos, AWS Outposts, and Red Hat OpenShift are top choices.

Conclusion: Hybrid Cloud is the New Normal

The hybrid cloud application model is more than a trend—it’s a strategic evolution that empowers organizations to balance innovation with control. It offers the agility of the cloud without sacrificing the oversight and security of on-premises systems.

If your organization is looking to modernize its IT infrastructure while staying compliant, resilient, and efficient, then hybrid cloud application development is the way forward.

At diglip7.com, we help businesses build scalable, secure, and agile hybrid cloud solutions tailored to their unique needs. Ready to unlock the future? Contact us today to get started.


Application Performance Monitoring Market Growth Drivers, Size, Share, Scope, Analysis, Forecast, Growth, and Industry Report 2032

The Application Performance Monitoring Market was valued at USD 7.26 Billion in 2023 and is expected to reach USD 22.81 Billion by 2032, growing at a CAGR of roughly 13.6% over the forecast period 2024-2032.
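The growth rate implied by the two valuations can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below assumes nine compounding years (2023 to 2032); the function name is only illustrative.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.10 means 10%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Valuations quoted above, in USD billions; 9 years is an assumption.
rate = cagr(7.26, 22.81, 9)
print(f"Implied CAGR: {rate:.1%}")
```

Under that assumption the endpoints work out to roughly 13.6% per year, which is a useful sanity check against any headline rate.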

The Application Performance Monitoring (APM) market is expanding rapidly due to the increasing demand for seamless digital experiences. Businesses are investing in APM solutions to ensure optimal application performance, minimize downtime, and enhance user satisfaction. The rise of cloud computing, AI-driven analytics, and real-time monitoring tools is further accelerating market growth.

The Application Performance Monitoring market continues to evolve as enterprises prioritize application efficiency and system reliability. With the increasing complexity of IT infrastructures and a growing reliance on digital services, organizations are turning to APM solutions to detect, diagnose, and resolve performance bottlenecks in real time. The shift toward microservices, hybrid cloud environments, and edge computing has made APM essential for maintaining operational excellence.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/3821

Market Key Players:

IBM (IBM Instana, IBM APM)

New Relic (New Relic One, New Relic Browser)

Dynatrace (Dynatrace Full-Stack Monitoring, Dynatrace Application Security)

AppDynamics (AppDynamics APM, AppDynamics Database Monitoring)

Cisco (Cisco AppDynamics, Cisco ACI Analytics)

Splunk Inc. (Splunk Observability Cloud, Splunk IT Service Intelligence)

Micro Focus (Silk Central, LoadRunner)

Broadcom Inc. (CA APM, CA Application Delivery Analysis)

Elasticsearch B.V. (Elastic APM, Elastic Stack)

Datadog (Datadog APM, Datadog Real User Monitoring)

Riverbed Technology (SteelCentral APM, SteelHead)

SolarWinds (SolarWinds APM, SolarWinds Network Performance Monitor)

Oracle (Oracle Management Cloud, Oracle Cloud Infrastructure APM)

ServiceNow (ServiceNow APM, ServiceNow Performance Analytics)

Red Hat (Red Hat OpenShift Monitoring, Red Hat Insights)

AppOptics (AppOptics APM, AppOptics Infrastructure Monitoring)

Honeycomb (Honeycomb APM, Honeycomb Distributed Tracing)

Instana (Instana APM, Instana Real User Monitoring)

Scout APM (Scout APM, Scout Error Tracking)

Sentry (Sentry APM, Sentry Error Tracking)

Market Trends Driving Growth

1. AI-Driven Monitoring and Automation

AI and machine learning are revolutionizing APM by enabling predictive analytics, anomaly detection, and automated issue resolution, reducing manual intervention.
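As a rough illustration of what anomaly detection means here, the sketch below flags latency samples that sit far from the recent average. It is a naive statistical baseline with hypothetical names and sample data, not the method used by any APM vendor listed above.

```python
from statistics import mean, stdev


def find_anomalies(latencies_ms, window=5, threshold=3.0):
    """Return indexes of samples more than `threshold` standard deviations
    away from the mean of the preceding `window` samples."""
    anomalies = []
    for i in range(window, len(latencies_ms)):
        history = latencies_ms[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(latencies_ms[i] - mu) > threshold * sigma:
            anomalies.append(i)
    return anomalies


# Made-up response times in milliseconds with one obvious spike.
samples = [102, 98, 101, 99, 100, 103, 97, 450, 101, 99]
flagged = find_anomalies(samples)
print(flagged)  # [7]
```

Only the 450 ms spike at index 7 is flagged. Production tools typically add seasonality handling and learned baselines on top of this basic idea.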

2. Cloud-Native and Hybrid APM Solutions

As businesses migrate to cloud and hybrid infrastructures, APM solutions are adapting to provide real-time visibility across on-premises, cloud, and multi-cloud environments.

3. Observability and End-to-End Monitoring

APM is evolving into full-stack observability, integrating application monitoring with network, security, and infrastructure insights for holistic performance analysis.

4. Focus on User Experience and Business Impact

Companies are increasingly adopting APM solutions that correlate application performance with user experience metrics, ensuring optimal service delivery and business continuity.

Enquiry of This Report: https://www.snsinsider.com/enquiry/3821

Market Segmentation:

By Solution

Software

Services

By Deployment

Cloud

On-Premise

By Enterprise Size

SMEs

Large Enterprises

By Access Type

Web APM

Mobile APM

By End User

BFSI

E-Commerce

Manufacturing

Healthcare

Retail

IT and Telecommunications

Media and Entertainment

Academics

Government

Market Analysis: Growth and Key Drivers

Increased Digital Transformation: Enterprises are accelerating cloud adoption and digital services, driving demand for advanced monitoring solutions.

Rising Complexity of IT Environments: Microservices, DevOps, and distributed architectures require comprehensive APM tools for performance optimization.

Growing Demand for Real-Time Analytics: Businesses seek AI-powered insights to proactively detect and resolve performance issues before they impact users.

Compliance and Security Needs: APM solutions help organizations meet regulatory requirements by ensuring application integrity and data security.

Future Prospects: The Road Ahead

1. Expansion of APM into IoT and Edge Computing

As IoT and edge computing continue to grow, APM solutions will evolve to monitor and optimize performance across decentralized infrastructures.

2. Integration with DevOps and Continuous Monitoring

APM will play a crucial role in DevOps pipelines, enabling faster issue resolution and performance optimization throughout the software development lifecycle.

3. Rise of Autonomous APM Systems

AI-driven automation will lead to self-healing applications, where systems can automatically detect, diagnose, and fix performance issues with minimal human intervention.

4. Growth in Industry-Specific APM Solutions

APM vendors will develop specialized solutions for industries like finance, healthcare, and e-commerce, addressing sector-specific performance challenges and compliance needs.

Access Complete Report: https://www.snsinsider.com/reports/application-performance-monitoring-market-3821

Conclusion

The Application Performance Monitoring market is poised for substantial growth as businesses prioritize digital excellence, system resilience, and user experience. With advancements in AI, cloud-native technologies, and observability, APM solutions are becoming more intelligent and proactive. Organizations that invest in next-generation APM tools will gain a competitive edge by ensuring seamless application performance, improving operational efficiency, and enhancing customer satisfaction.

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

#Application Performance Monitoring market#Application Performance Monitoring market Analysis#Application Performance Monitoring market Scope#Application Performance Monitoring market Growth#Application Performance Monitoring market Share#Application Performance Monitoring market Trends


Build Resilient Services with OpenShift Service Mesh

In today’s fast-moving digital world, users expect your applications to be fast, available, and reliable — no matter what. But in distributed systems, especially microservices, failures are inevitable. What matters is how your services handle them.

This is where OpenShift Service Mesh comes in. Built on top of Istio, it gives you the tools to build resilient, fault-tolerant services — without changing your application code.

What Is OpenShift Service Mesh?

OpenShift Service Mesh is Red Hat’s supported distribution of Istio, integrated with Kiali for observability and Jaeger for tracing. It acts as an intelligent layer that sits between your microservices, managing communication, monitoring, security, and resiliency policies.

In simple terms, it helps your services talk smarter, safer, and stronger.

Why Resilience Matters

Without resilience strategies, a single failing service can trigger a chain reaction of crashes and timeouts across your entire application. Think of it as a row of dominos — when one falls, others quickly follow.

With a service mesh, you can stop that from happening by:

Automatically retrying failed requests

Rerouting traffic to healthy services

Setting timeouts to avoid hanging requests

Preventing cascading failures with circuit breakers

Key Resilience Strategies in OpenShift Service Mesh

1. Retries

Sometimes, a temporary glitch (like a network blip or pod restart) causes a request to fail. With retries, the service mesh can automatically try again — improving success rates without user impact.

➡ Example: Retry a failed request up to 3 times with small delays between attempts.
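To make the retry behavior concrete, here is a minimal sketch of the logic a mesh proxy applies on the application's behalf. The function and parameter names are hypothetical; in a service mesh this lives in the sidecar configuration rather than in application code.

```python
import time


def call_with_retries(request, max_retries=3, delay_seconds=0.05):
    """Call `request()`; on ConnectionError, retry up to `max_retries` times
    with a small, growing delay between attempts."""
    for attempt in range(max_retries + 1):
        try:
            return request()
        except ConnectionError:
            if attempt == max_retries:
                raise  # retries exhausted, surface the failure
            time.sleep(delay_seconds * (attempt + 1))


# A stand-in for a flaky service: fails twice, then succeeds.
calls = {"count": 0}


def flaky():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary glitch")
    return "ok"


result = call_with_retries(flaky)
print(result, calls["count"])
```

The caller sees a single successful response even though two attempts failed underneath. Retries should only be applied to requests that are safe to repeat.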

2. Timeouts

Requests that hang too long can eat up resources and clog up your services. By defining timeouts, you make sure slow services don’t bring everything else down.

➡ Example: Set a 2-second timeout for any outbound HTTP request.
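Because OpenShift Service Mesh is built on Istio, a timeout like this is usually declared on an Istio `VirtualService` route. The sketch below builds such a manifest in Python and prints it as JSON; the service name `reviews` and the resource name are hypothetical, and the right `apiVersion` depends on the mesh version in use.

```python
import json

# Hypothetical service "reviews"; check the apiVersion against your mesh.
virtual_service = {
    "apiVersion": "networking.istio.io/v1beta1",
    "kind": "VirtualService",
    "metadata": {"name": "reviews-timeout"},
    "spec": {
        "hosts": ["reviews"],
        "http": [
            {
                "route": [{"destination": {"host": "reviews"}}],
                # Fail any request to this route that takes longer than 2s.
                "timeout": "2s",
            }
        ],
    },
}

manifest = json.dumps(virtual_service, indent=2)
print(manifest)
```

The printed JSON could be saved to a file and applied with `oc apply -f`, so the policy changes without touching the application itself.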

3. Circuit Breakers

Circuit breakers monitor the health of your services. If a service becomes unhealthy, the mesh stops sending traffic to it temporarily — giving it time to recover instead of overwhelming it.

➡ Think of it like a fuse — it "breaks" the connection to prevent bigger issues.
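The fuse analogy can be sketched as a small state machine. This is an illustrative model with hypothetical names and thresholds; in Istio-based meshes the equivalent behavior is typically configured through outlier detection on a `DestinationRule` rather than written by hand.

```python
import time


class CircuitBreaker:
    """Opens after repeated failures, then allows traffic again after a pause."""

    def __init__(self, failure_threshold=3, recovery_seconds=30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.recovery_seconds = recovery_seconds
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def allow_request(self):
        if self.opened_at is None:
            return True
        # After the recovery window, let traffic through again to probe health.
        if self.clock() - self.opened_at >= self.recovery_seconds:
            self.opened_at = None
            self.failures = 0
            return True
        return False

    def record_success(self):
        self.failures = 0

    def record_failure(self):
        self.failures += 1
        if self.failures >= self.failure_threshold:
            self.opened_at = self.clock()


# A fake clock keeps the example fast and deterministic.
now = [0.0]
breaker = CircuitBreaker(failure_threshold=3, recovery_seconds=30.0,
                         clock=lambda: now[0])
for _ in range(3):
    breaker.record_failure()
blocked = breaker.allow_request()      # circuit is open
now[0] = 31.0
recovered = breaker.allow_request()    # recovery window has elapsed
print(blocked, recovered)
```

While the circuit is open, callers fail fast instead of piling more load onto a struggling service.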

4. Failovers

If one service instance goes down, traffic can be automatically rerouted to another healthy instance or region.

➡ This helps maintain availability even during partial outages.

5. Rate Limiting

You can protect services from overload by limiting how many requests they accept over time. It prevents spikes from crashing your backend.
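One common way to implement this kind of limit is a token bucket, sketched below. The class and parameter names are hypothetical, and this illustrates the general technique rather than how OpenShift Service Mesh enforces limits internally.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, refilling at `rate_per_second`."""

    def __init__(self, rate_per_second, capacity, clock=time.monotonic):
        self.rate = rate_per_second
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.last_refill = clock()

    def allow(self):
        now = self.clock()
        elapsed = now - self.last_refill
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last_refill = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# A fake clock keeps the example deterministic.
now = [0.0]
bucket = TokenBucket(rate_per_second=2, capacity=3, clock=lambda: now[0])
burst = [bucket.allow() for _ in range(4)]
now[0] = 1.0
after_wait = bucket.allow()
print(burst, after_wait)
```

The first three requests pass, the fourth is rejected, and capacity returns once time has passed, which is what keeps a spike from overwhelming the backend.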

Bonus: All Without Code Changes

One of the biggest advantages of using OpenShift Service Mesh for resilience? You don’t have to touch your application code. All the configurations (like retries, timeouts, circuit breakers) can be defined as part of your service mesh policies.

That means:

Faster implementation

More consistent control across services

Easier troubleshooting and updates

Final Thoughts

Building resilient services isn’t optional anymore — it’s a requirement in cloud-native architectures. OpenShift Service Mesh gives you the tools to protect your applications from real-world issues like latency, failure, and overload.

Whether you're scaling up, deploying new features, or just making your platform stronger, adding resilience with service mesh strategies ensures your applications stay up — even when things go wrong behind the scenes.

Want to keep your services running strong under pressure? Start exploring OpenShift Service Mesh — and build a system that bends, but never breaks.

For more info, kindly follow Hawkstack Technologies.


Top Container Management Tools You Need to Know in 2024

Containers and container management technology have transformed the way we build, deploy, and manage applications. A container packages a program together with all its dependencies, allowing it to run reliably across different computing environments.

Newcomers to programming may overlook container technology, yet this approach tackles the age-old problem of software behaving differently in production than in development. QKS Group projects that the container management market will register a CAGR of 10.20% through 2028.

Containers make application development and deployment easier and more efficient, and developers rely on them to complete tasks. However, with more containers comes greater responsibility, and container management software is up to the task.

We’ll review all you need to know about container management so you can utilize, organize, coordinate, and manage large numbers of containers more effectively.

Download the sample report of Market Share: https://qksgroup.com/download-sample-form/market-share-container-management-2023-worldwide-5112

What is Container Management?

Container management refers to the process of managing, scaling, and sustaining containerized applications across several environments. It incorporates container orchestration, which automates container deployment, networking, scaling, and lifecycle management using platforms such as Kubernetes. Effective container management guarantees that applications in the cloud or on-premises infrastructures use resources efficiently, have optimized processes, and are highly available.

How Does Container Management Work?

Container management begins with the development and setup of containers. Each container is pre-configured with all of the components required to execute an application. This guarantees that the application environment is constant throughout the various container deployment situations.

After you’ve constructed your containers, it’s time to focus on the orchestration. This entails automating container deployment and operation in order to manage container scheduling across a cluster of servers. This enables more informed decisions about where to run containers based on resource availability, limitations, and inter-container relationships.
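The scheduling decision described above can be illustrated with a toy placement function. This is a deliberately simplified sketch with hypothetical field names; real orchestrators such as Kubernetes apply many more filters and scoring rules.

```python
def schedule(container, nodes):
    """Place a container on the fitting node with the most free CPU.

    container: dict with "name", "cpu", "mem_mb"
    nodes: list of dicts with "name", "free_cpu", "free_mem_mb"
    Returns the chosen node name, or None if nothing fits.
    """
    candidates = [
        n for n in nodes
        if n["free_cpu"] >= container["cpu"]
        and n["free_mem_mb"] >= container["mem_mb"]
    ]
    if not candidates:
        return None
    best = max(candidates, key=lambda n: (n["free_cpu"], n["free_mem_mb"]))
    # Reserve the resources so later placements see the updated capacity.
    best["free_cpu"] -= container["cpu"]
    best["free_mem_mb"] -= container["mem_mb"]
    return best["name"]


nodes = [
    {"name": "node-a", "free_cpu": 2.0, "free_mem_mb": 4096},
    {"name": "node-b", "free_cpu": 4.0, "free_mem_mb": 2048},
]
first = schedule({"name": "api", "cpu": 1.0, "mem_mb": 1024}, nodes)
second = schedule({"name": "cache", "cpu": 1.0, "mem_mb": 3072}, nodes)
third = schedule({"name": "batch", "cpu": 8.0, "mem_mb": 512}, nodes)
print(first, second, third)
```

The first container lands on the node with the most free CPU, the memory-hungry one goes where it fits, and the oversized one stays unscheduled until capacity appears.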

Beyond that, your container management platform will manage scalability and load balancing. As the demand for an application changes, these systems dynamically modify the number of active containers, scaling up at peak times and down during quieter moments. They also handle load balancing, which distributes incoming application traffic evenly among all containers.
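As one concrete example of this scaling logic, the Kubernetes Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). The sketch below applies that rule with replica bounds; the function name, bounds, and sample CPU figures are illustrative assumptions.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count in proportion to load, within fixed bounds."""
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))


# Average CPU utilization (%) against a 60% target, starting from 4 replicas.
peak = desired_replicas(4, current_metric=90, target_metric=60)
quiet = desired_replicas(4, current_metric=20, target_metric=60)
capped = desired_replicas(4, current_metric=300, target_metric=60)
print(peak, quiet, capped)
```

At 90% utilization the count grows from 4 to 6, at 20% it shrinks to 2, and an extreme spike is capped at the configured maximum of 10.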

Download the sample report of Market Forecast: https://qksgroup.com/download-sample-form/market-forecast-container-management-2024-2028-worldwide-4629

Top Container Management Software

Docker

Docker is an open-source software platform that allows you to create, deploy, and manage virtualized application containers on your operating system.

The container contains all the application’s services or functions, as well as its libraries, configuration files, dependencies, and other components.

Apache Mesos

Apache Mesos is an open-source cluster management system and a control plane for effective distribution of computer resources across application delivery platforms known as frameworks.

Amazon Elastic Container Service (ECS)

Amazon ECS is a highly scalable container management platform that supports Docker containers and enables you to efficiently run applications on a controlled cluster of Amazon EC2 instances.

This makes it simple to manage containers as modular services for your applications, eliminating the need to install, administer, and customize your own cluster management infrastructure.

OpenShift

OpenShift is a container management tool developed by Red Hat. Its architecture is built around Docker container packaging and Kubernetes-based cluster management. It also brings together various capabilities related to application lifecycle management.

Kubernetes

Kubernetes, originally developed by Google, is the most widely used container management technology. It was donated to the Cloud Native Computing Foundation in 2015 and is now maintained by the Kubernetes community.

Kubernetes soon became a top choice for a standard cluster and container management platform because it was one of the first solutions and is also open source.

Containers are widely used in application development due to their benefits in terms of consistent performance, portability, scalability, and resource efficiency. Containers allow developers to bundle programs and services, as well as all their dependencies, into a standardized isolated unit that can function smoothly and consistently in a variety of computing environments, simplifying application deployment. The Container Management Market Share, 2023, Worldwide research and the Market Forecast: Container Management, 2024-2028, Worldwide report are useful for gaining a fuller understanding of this market.

This widespread usage of containerization raises the difficulty of managing many containers, which may be overcome by using container management systems. Container management systems on the market today allow users to generate and manage container images, as well as manage the container lifecycle. They guarantee that infrastructure resources are managed effectively and efficiently, and that they grow in response to user traffic. They also enable container monitoring for performance and faults, which are reported in the form of dashboards and infographics, allowing developers to quickly address any concerns.

Talk To Analyst: https://qksgroup.com/become-client

Conclusion

Containerization frees you from the constraints of an operating system, allowing you to speed development and perhaps expand your user base, so it’s no surprise that it’s the technology underlying more than half of all apps. I hope the information in this post was sufficient to get you started with the appropriate containerization solution for your requirements.


Developing and Deploying AI/ML Applications on Red Hat OpenShift AI (AI268)

As artificial intelligence (AI) and machine learning (ML) continue to transform industries, organizations are striving not just to build smarter models — but to operationalize them at scale. This is exactly where Red Hat OpenShift AI steps in as a powerful enterprise platform, combining the flexibility of open-source tooling with the scalability of Kubernetes.

The AI268 course – Developing and Deploying AI/ML Applications on Red Hat OpenShift AI – is a deep-dive training designed for data scientists, ML engineers, and developers who want to go beyond notebooks and deploy real-world ML solutions with confidence.

🔍 What the Course Covers

AI268 equips professionals to build robust ML workflows within a cloud-native architecture. You'll start with model development in a secure, collaborative environment using Jupyter notebooks. From there, you’ll dive into containerizing your ML workloads to make them portable and reproducible across environments.

The course also explores automated ML pipelines using Tekton (OpenShift Pipelines), allowing seamless orchestration of model training, validation, and deployment. You’ll also learn how to serve models at scale using ModelMesh and Seldon, enabling high-performance inference with dynamic model loading and efficient resource utilization.

🌟 The Power of OpenShift AI

What makes OpenShift AI unique is its native integration with Kubernetes, allowing you to scale AI workloads efficiently while applying modern DevOps principles to ML workflows. It supports GPU acceleration, version control for data and models, and secure, role-based access — everything needed to move ML into production, faster and smarter.

This environment allows teams to collaborate across development, data, and operations — breaking down the traditional silos that stall many ML initiatives.

💡 Real-World Applications

Imagine building a pipeline that automatically retrains your model whenever new data arrives. Or deploying hundreds of models for different customer segments and loading them only when needed to save memory and cost. With the skills learned in AI268, these aren’t just possibilities — they’re your new baseline.
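The idea of loading models only when needed can be sketched as a small least-recently-used cache. This is a conceptual illustration with hypothetical names; it is not the ModelMesh API, and a real serving layer would load actual model artifacts rather than placeholder strings.

```python
from collections import OrderedDict


class ModelCache:
    """Keep at most `max_loaded` models in memory, loading others on demand."""

    def __init__(self, loader, max_loaded=2):
        self.loader = loader
        self.max_loaded = max_loaded
        self._models = OrderedDict()

    def get(self, model_id):
        if model_id in self._models:
            self._models.move_to_end(model_id)  # mark as recently used
            return self._models[model_id]
        model = self.loader(model_id)
        self._models[model_id] = model
        if len(self._models) > self.max_loaded:
            self._models.popitem(last=False)  # evict least recently used
        return model

    def loaded(self):
        return list(self._models)


load_log = []


def fake_loader(model_id):
    # Stand-in for reading a model from storage.
    load_log.append(model_id)
    return f"model:{model_id}"


cache = ModelCache(fake_loader, max_loaded=2)
for segment in ["segment-a", "segment-b", "segment-a", "segment-c"]:
    cache.get(segment)
print(cache.loaded(), load_log)
```

Only two models are resident at a time: the repeated request for `segment-a` is served from memory, and `segment-b` is evicted when `segment-c` arrives.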

You also learn to integrate GitOps-style CI/CD for model lifecycle management, allowing automated versioning, promotion, rollback, and monitoring — all within the OpenShift ecosystem.

🧠 Why It Matters

MLOps is not just a buzzword — it's a necessity for any organization scaling AI efforts. The AI268 course arms you with practical tools and skills to not only develop models but to run them reliably in production. It’s ideal for teams aiming to modernize their AI/ML stack while adhering to enterprise standards for security, governance, and scalability.

Final Thoughts

Red Hat OpenShift AI provides the foundation, and AI268 shows you how to build on it. Whether you're modernizing legacy workflows or deploying cutting-edge models into production, this course helps you bridge the gap between experimentation and enterprise-ready ML operations.

If you're serious about delivering value with AI in a secure, automated, and scalable way — AI268 is the course to take.

For more details, visit www.hawkstack.com


Modern Tools Enhance Data Governance and PII Management Compliance

Modern data governance focuses on effectively managing Personally Identifiable Information (PII). Tools like IBM Cloud Pak for Data (CP4D), Red Hat OpenShift, and Kubernetes provide organizations with comprehensive solutions to navigate complex regulatory requirements, including GDPR (General Data Protection Regulation) and CCPA (California Consumer Privacy Act). These platforms offer secure data handling, lineage tracking, and governance automation, helping businesses stay compliant while deriving value from their data.

PII management involves identifying, protecting, and ensuring the lawful use of sensitive data. Key requirements such as transparency, consent, and safeguards are essential to mitigate risks like breaches or misuse. IBM Cloud Pak for Data integrates governance, lineage tracking, and AI-driven insights into a unified framework, simplifying metadata management and ensuring compliance. It also enables self-service access to data catalogs, making it easier for authorized users to access and manage sensitive data securely.

Advanced IBM Cloud Pak for Data features include automated policy enforcement and role-based access, which keep PII protected while supporting analytics and machine learning applications. This approach simplifies compliance, minimizing the manual workload typically associated with regulatory adherence.
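To illustrate how role-based access and PII protection can combine, the sketch below masks email addresses and US Social Security numbers unless the viewer holds a privileged role. The role name, patterns, and function are hypothetical simplifications and do not represent how IBM Cloud Pak for Data implements its policies.

```python
import re

# Simplified patterns for illustration; real PII detection is broader.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

PRIVILEGED_ROLES = {"data_steward"}  # hypothetical role name


def view_record(text, role):
    """Return the text unchanged for privileged roles, masked otherwise."""
    if role in PRIVILEGED_ROLES:
        return text
    masked = EMAIL_RE.sub("[EMAIL]", text)
    return SSN_RE.sub("[SSN]", masked)


record = "Contact jane.doe@example.com, SSN 123-45-6789"
analyst_view = view_record(record, "analyst")
steward_view = view_record(record, "data_steward")
print(analyst_view)
```

An analyst sees placeholders in place of the sensitive values, while a data steward sees the original record.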

With the growing adoption of multi-cloud environments, platforms such as Informatica and Collibra offer complementary governance tools that enhance PII protection. These solutions use AI-supported insights, automated data lineage, and centralized policy management to help organizations improve their data governance frameworks.

Tim Valihora, President of TVMG Consulting Inc., has extensive experience with IBM InfoSphere Information Server “MicroServices” products (which are built upon Red Hat Enterprise Linux technology in conjunction with Docker/Kubernetes). He also has extensive experience with:

IBM InfoSphere Information Server “Traditional” (IIS v11.7.x)

IBM Cloud Pak for Data (CP4D)

IBM “DataStage Anywhere”

Mr. Valihora is a US based (Vero Beach, FL) Data Governance specialist within the IBM InfoSphere Information Server (IIS) software suite and is also Cloud Certified on Collibra Data Governance Center.

Career highlights include technical architecture, IIS installations, post-install configuration, SDLC mentoring, ETL programming, performance tuning, and client-side training (for administrators, developers, and business analysts) on all of the more than 15 out-of-the-box IBM IIS products.

He has completed over 180 successful IBM IIS installs, including the GRID Tool-Kit for DataStage (GTK), MPP, SMP, multiple-engine, clustered Xmeta, clustered WAS, active-passive mirroring, and Oracle Real Application Clusters “IADB” or “Xmeta” configurations.

Tim Valihora has been credited with performance tuning the world's fastest DataStage job, which clocked in at 1.27 billion rows of inserts/updates every 12 minutes (using the Dynamic Grid ToolKit (GTK) for DataStage (DS) with a configuration file that utilized 8 compute nodes, each with 12 CPU cores and 64 GB of RAM).


How much can energy harvesting cut maintenance costs for remote IoT sensors

Cloud Native Applications Market was valued at USD 6.49 billion in 2023 and is expected to reach USD 45.71 billion by 2032, growing at a CAGR of 24.29% from 2024-2032.

The Cloud Native Applications Market: Pioneering the Future of Digital Transformation is experiencing an unprecedented surge, driven by the imperative for businesses to achieve unparalleled agility, scalability, and resilience in a rapidly evolving digital economy. This architectural shift, emphasizing microservices, containers, and automated orchestration, is not merely a technological upgrade but a fundamental re-imagining of how software is conceived, developed, and deployed.

U.S. Businesses Lead Global Charge in Cloud-Native Adoption

The global Cloud Native Applications Market is a dynamic and rapidly expanding sector, foundational to modern enterprise IT strategies. It empowers organizations to build, deploy, and manage applications that fully leverage the inherent advantages of cloud computing. This approach is characterized by modularity, automation, and elasticity, enabling businesses to accelerate innovation, enhance operational efficiency, and significantly reduce time-to-market for new services. The market's robust growth is underpinned by the increasing adoption of cloud platforms across various industries, necessitating agile and scalable software solutions.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/6545

Key Market Players:

Google LLC (Google Kubernetes Engine, Firebase)

International Business Machines Corporation (IBM Cloud, IBM Cloud Pak)

Infosys Technologies Private Limited (Infosys Cobalt, Cloud Ecosystem)

Larsen & Toubro Infotech (LTI Cloud, LTI Digital Transformation)

Microsoft Corporation (Azure Kubernetes Service, Azure Functions)

Oracle Corporation (Oracle Cloud Infrastructure, Oracle Autonomous Database)

Red Hat (OpenShift, Ansible Automation Platform)

SAP SE (SAP Business Technology Platform, SAP S/4HANA Cloud)

VMware, Inc. (VMware Tanzu, VMware Cloud on AWS)

Alibaba Cloud (Alibaba Cloud Container Service, Alibaba Cloud Elastic Compute Service)

Apexon (Cloud-Native Solutions, Cloud Application Modernization)

Bacancy Technology (Cloud Development, Cloud-Native Microservices)

Citrix Systems, Inc. (Citrix Workspace, Citrix Cloud)

Harness (Harness Continuous Delivery, Harness Feature Flags)

Cognizant Technology Solutions Corp (Cognizant Cloud, Cognizant Cloud-Native Solutions)

Ekco (Cloud Infrastructure Services, Cloud Application Development)

Huawei Technologies Co. Ltd. (Huawei Cloud, Huawei Cloud Container Engine)

R Systems (R Systems Cloud Platform, R Systems DevOps Solutions)

Scality (Scality RING, Scality Cloud Storage)

Sciencesoft (Cloud-Native Development, Cloud Integration Solutions)

Market Trends

Microservices Architecture Dominance: A widespread shift from monolithic applications to independent, smaller services, enhancing flexibility, fault tolerance, and rapid deployment cycles.

Containerization and Orchestration: Continued and expanding reliance on container technologies like Docker and orchestration platforms such as Kubernetes for efficient packaging, deployment, and management of applications across diverse cloud environments.

DevOps and CI/CD Integration: Deep integration of DevOps practices and Continuous Integration/Continuous Delivery (CI/CD) pipelines, automating software delivery, improving collaboration, and ensuring frequent, reliable updates.

Hybrid and Multi-Cloud Strategies: Increasing demand for cloud-native solutions that can seamlessly operate across multiple public cloud providers and on-premises hybrid environments, promoting vendor agnosticism and enhanced resilience.

Rise of Serverless Computing: Growing interest and adoption of serverless functions, allowing developers to focus solely on code without managing underlying infrastructure, further reducing operational overhead.

AI and Machine Learning Integration: Leveraging cloud-native principles to build and deploy AI/ML-driven applications, enabling real-time data processing, advanced analytics, and intelligent automation across business functions.

Enhanced Security Focus: Development of security-first approaches within cloud-native environments, including zero-trust models, automated compliance checks, and robust data protection mechanisms.

Market Scope: Unlocking Limitless Potential

Beyond Infrastructure: Encompasses not just the underlying cloud infrastructure but the entire lifecycle of application development, from conceptualization and coding to deployment, scaling, and ongoing management.

Cross-Industry Revolution: Transforming operations across a vast spectrum of industries, including BFSI (Banking, Financial Services, and Insurance), Healthcare, IT & Telecom, Retail & E-commerce, Manufacturing, and Government.

Scalability for All: Provides unprecedented scalability and cost-efficiency benefits to organizations of all sizes, from agile startups to sprawling large enterprises.

Platform to Service: Includes robust cloud-native platforms that provide the foundational tools and environments, alongside specialized services that support every stage of the cloud-native journey.

The Cloud Native Applications Market fundamentally reshapes how enterprises harness technology to meet dynamic market demands. It represents a paradigm shift towards highly adaptable, resilient, and performant digital solutions designed to thrive in the cloud.

Forecast Outlook

The trajectory of the Cloud Native Applications Market points towards sustained and exponential expansion. We anticipate a future where cloud-native principles become the de facto standard for new application development, driving widespread modernization initiatives across industries. This growth will be fueled by continuous innovation in container orchestration, the pervasive influence of artificial intelligence, and the increasing strategic importance of agile software delivery. Expect to see further refinement in tools that simplify cloud-native adoption, foster open-source collaboration, and enhance the developer experience, ultimately empowering businesses to accelerate their digital transformation journeys with unprecedented speed and impact. The market will continue to evolve, offering richer functionalities and more sophisticated solutions that redefine business agility and operational excellence.

Access Complete Report: https://www.snsinsider.com/reports/cloud-native-applications-market-6545

Conclusion: The Unstoppable Ascent of Cloud-Native

The Cloud Native Applications Market is at the vanguard of digital innovation, no longer a niche technology but an indispensable pillar for any organization striving for competitive advantage. Its emphasis on agility, scalability, and resilience empowers businesses to not only respond to change but to actively drive it. For enterprises seeking to unlock new levels of performance, accelerate time-to-market, and cultivate a culture of continuous innovation, embracing cloud-native strategies is paramount. This market is not just growing; it is fundamentally reshaping the future of enterprise software, promising a landscape where adaptability and rapid evolution are the keys to sustained success.

About Us:

SNS Insider is one of the leading market research and consulting agencies in the global market research industry. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. To provide current, accurate market data, consumer insights, and opinions that support confident decisions, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

Text

Enhancing Application Performance in Hybrid and Multi-Cloud Environments with Cisco ACI

1. Introduction to Hybrid and Multi-Cloud Environments

As businesses adopt hybrid and multi-cloud environments, ensuring seamless application performance becomes a critical challenge. Managing network connectivity, security, and traffic optimization across diverse cloud platforms can lead to complexity and inefficiencies.

Cisco ACI (Application Centric Infrastructure) simplifies this by providing an intent-based networking approach, enabling automation, centralized policy management, and real-time performance optimization.

With Cisco ACI Training, IT professionals can master the skills needed to deploy, configure, and optimize ACI for enhanced application performance in multi-cloud environments. This blog explores how Cisco ACI enhances performance, security, and visibility across hybrid and multi-cloud architectures.

2. The Role of Cisco ACI in Multi-Cloud Performance Optimization

Cisco ACI is a software-defined networking (SDN) solution that simplifies network operations and enhances application performance across multiple cloud environments. It enables organizations to achieve:

Seamless multi-cloud connectivity for smooth integration between on-premises and cloud environments.

Centralized policy enforcement to maintain consistent security and compliance.

Automated network operations that reduce manual errors and accelerate deployments.

Optimized traffic flow, improving application responsiveness with real-time telemetry.

3. Application-Centric Policy Automation with ACI

Traditional networking approaches rely on static configurations, making policy enforcement difficult in dynamic multi-cloud environments. Cisco ACI adopts an application-centric model, where network policies are defined based on business intent rather than IP addresses or VLANs.

Key Benefits of ACI’s Policy Automation:

Application profiles ensure that policies move with workloads across environments.

Zero-touch provisioning automates network configuration and reduces deployment time.

Micro-segmentation enhances security by isolating applications based on trust levels.

Seamless API integration connects with VMware NSX, Kubernetes, OpenShift, and cloud-native services.
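To make the application-centric model more concrete, here is a minimal sketch of how an application profile might be expressed as a JSON document for the APIC REST API. The class names (fvTenant, fvAp, fvAEPg, vzBrCP, fvRsProv, fvRsCons) follow the ACI object model; the tenant, profile, endpoint group, and contract names are hypothetical, and the contract's subjects and filters are omitted for brevity. Nothing is sent to a controller here; in a real deployment a payload like this would typically be posted to the APIC after authenticating.

```python
import json

def build_app_profile(tenant, app, epgs, contract):
    """Build an APIC-style JSON payload: one tenant, one contract, one application profile.

    epgs maps an endpoint group name to its role, "provider" or "consumer".
    """
    epg_children = []
    for name, role in epgs.items():
        # A provider EPG offers the contract; a consumer EPG uses it.
        rel_class = "fvRsProv" if role == "provider" else "fvRsCons"
        epg_children.append({
            "fvAEPg": {
                "attributes": {"name": name},
                "children": [
                    {rel_class: {"attributes": {"tnVzBrCPName": contract}}}
                ],
            }
        })
    return {
        "fvTenant": {
            "attributes": {"name": tenant},
            "children": [
                {"vzBrCP": {"attributes": {"name": contract}}},
                {"fvAp": {"attributes": {"name": app}, "children": epg_children}},
            ],
        }
    }

# Hypothetical names, chosen only for illustration.
payload = build_app_profile(
    tenant="demo-tenant",
    app="web-shop",
    epgs={"web": "consumer", "db": "provider"},
    contract="web-to-db",
)
print(json.dumps(payload, indent=2))
```

The point of the structure is that the policy refers to groups and contracts by name rather than to IP addresses or VLANs, so the same document can describe the application wherever its workloads run.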

4. Traffic Optimization and Load Balancing with ACI

Application performance in multi-cloud environments is often hindered by traffic congestion, latency, and inefficient load balancing. Cisco ACI enhances network efficiency through:

Dynamic traffic routing, ensuring optimal data flow based on real-time network conditions.

Adaptive load balancing, which distributes workloads across cloud regions to prevent bottlenecks.

Integration with load balancers such as AWS ALB, Azure Load Balancer, and F5 to enhance application performance.
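The idea behind adaptive load balancing can be illustrated with a generic sketch that weights each region inversely to its measured latency. This is not Cisco ACI's algorithm, only an illustration of the principle; the region names and latency values are made up.

```python
def latency_weights(latencies_ms):
    """Return traffic shares per region, inversely proportional to latency."""
    inverse = {region: 1.0 / ms for region, ms in latencies_ms.items()}
    total = sum(inverse.values())
    return {region: value / total for region, value in inverse.items()}

# Hypothetical latency measurements in milliseconds.
measured = {"us-east": 20.0, "eu-west": 40.0, "ap-south": 80.0}
weights = latency_weights(measured)

for region, share in weights.items():
    print(f"{region}: {share:.1%} of new traffic")
```

Recomputing the weights whenever fresh measurements arrive is what makes the distribution adaptive: a region that slows down automatically receives a smaller share.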

5. Network Visibility and Performance Monitoring

Visibility is a major challenge in hybrid and multi-cloud networks. Without real-time insights, organizations struggle to detect bottlenecks, security threats, and application slowdowns.

Cisco ACI’s Monitoring Capabilities:

Real-time telemetry and analytics to continuously track network and application performance.

Cisco Nexus Dashboard integration for centralized monitoring across cloud environments.

AI-driven anomaly detection that automatically identifies and mitigates network issues.

Proactive troubleshooting using automation to resolve potential disruptions before they impact users.
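As a rough illustration of how anomaly detection on telemetry can work, the sketch below flags samples that deviate sharply from a rolling baseline. It is a simple z-score check, not the method used by Cisco ACI or Nexus Dashboard; the window size, threshold, and latency samples are assumptions picked for the example.

```python
from statistics import mean, stdev

def find_anomalies(samples, window=5, threshold=3.0):
    """Return indexes of samples far from the mean of the preceding window."""
    anomalies = []
    for i in range(window, len(samples)):
        baseline = samples[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        # Skip flat baselines to avoid dividing by zero.
        if sigma > 0 and abs(samples[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies

# Hypothetical latency telemetry in milliseconds, with one obvious spike.
latency_ms = [10, 11, 10, 12, 11, 10, 50, 11, 10, 12]
spikes = find_anomalies(latency_ms)
print("Anomalous sample indexes:", spikes)
```

A production system would use richer models and many more signals, but the principle is the same: learn what normal looks like, then alert on departures from it.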

6. Security Considerations for Hybrid and Multi-Cloud ACI Deployments

Multi-cloud environments are prone to security challenges such as data breaches, misconfigurations, and compliance risks. Cisco ACI strengthens security with:

Micro-segmentation that restricts communication between workloads to limit attack surfaces.

A zero-trust security model enforcing strict access controls to prevent unauthorized access.

End-to-end encryption to protect data in transit across hybrid and multi-cloud networks.

AI-powered threat detection that continuously monitors for anomalies and potential attacks.
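Micro-segmentation and zero trust both rest on a default-deny rule: traffic is dropped unless a policy explicitly allows it. The sketch below shows that rule in its simplest form. The segment names, ports, and allowlist are hypothetical, and the code is a generic illustration rather than how ACI evaluates contracts internally.

```python
from typing import NamedTuple

class Flow(NamedTuple):
    src: str   # source segment
    dst: str   # destination segment
    port: int  # destination port

# Hypothetical allowlist: only these flows are permitted.
ALLOWED_FLOWS = {
    Flow("web", "app", 8443),
    Flow("app", "db", 5432),
}

def is_permitted(flow, allowed=ALLOWED_FLOWS):
    """Default deny: a flow passes only if it is explicitly allowlisted."""
    return flow in allowed

print(is_permitted(Flow("web", "app", 8443)))  # allowlisted flow
print(is_permitted(Flow("web", "db", 5432)))   # web may not reach db directly
```

Because the web tier has no direct path to the database, a compromised web server cannot reach the data store without first getting through the application tier.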

7. Case Studies: Real-World Use Cases of ACI in Multi-Cloud Environments

1. Financial Institution

Challenge: Lack of consistent security policies across multi-cloud platforms.

Solution: Implemented Cisco ACI for unified security and network automation.

Result: 40% reduction in security incidents and improved compliance adherence.

2. E-Commerce Retailer

Challenge: High latency affecting customer experience during peak sales.

Solution: Used Cisco ACI to optimize traffic routing and load balancing.

Result: 30% improvement in transaction processing speeds.

8. Best Practices for Deploying Cisco ACI in Hybrid and Multi-Cloud Networks

To maximize the benefits of Cisco ACI, organizations should follow these best practices:

Standardize network policies to ensure security and compliance across cloud platforms.