more like physically cringe rendering, if we are being honest

#physically based rendering is a collection of things that generally try to model actual light physics such as like #ensuring that the total light reflected off a surface is no more than the incident light #or 'microfacets' or whatever. it's cool but for why
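
For the curious, here is a minimal sketch of the energy-conservation rule in the tags, assuming the simplest possible material: a perfectly diffuse (Lambertian) surface whose BRDF is albedo / π. The script estimates, by Monte Carlo, the fraction of incoming light the surface sends back out over the whole hemisphere. The function names and albedo values are illustrative choices, not taken from any particular renderer.

```python
import math
import random


def lambert_brdf(albedo: float) -> float:
    """Lambertian BRDF: reflects equally in all directions, value albedo / pi."""
    return albedo / math.pi


def directional_albedo(albedo: float, samples: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the hemisphere integral of brdf * cos(theta).

    This is the fraction of incoming light that leaves the surface.
    Uniform hemisphere sampling has pdf 1 / (2 * pi) per steradian, and under
    that sampling cos(theta) is uniformly distributed on [0, 1).
    """
    rng = random.Random(seed)
    pdf = 1.0 / (2.0 * math.pi)
    f = lambert_brdf(albedo)
    total = 0.0
    for _ in range(samples):
        cos_theta = rng.random()
        total += f * cos_theta / pdf
    return total / samples


for a in (0.5, 0.9, 1.2):
    reflected = directional_albedo(a)
    print(f"albedo={a}: reflected fraction ~{reflected:.3f}, conserves energy: {reflected <= 1.0}")
```

The estimate lands on the albedo itself, which is why physically based pipelines clamp albedo to at most 1: anything above that would have the surface emit more light than it receives.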

May 24, 2020

Here is my weekly collection of thoughts and things that happened.

Speed vs. Space

One of the basic tradeoffs in transportation planning is speed, which can be thought of as how far you can get in a time budget, versus how much space you take up during your trip.

Jarrett Walker has “the photo that explains almost everything”. Anyone who has spent any length of time in the urbanist community has seen this photo or some variant of it.

In the article, Walker explains that “In cities, urban space is the ultimate currency.” However, the photo that explains “almost everything” only tells half the story.

This is an isochrone map. You can make your own with the TravelTime app, which is a lot of fun. The image is centered on Orenco Station in Hillsboro, Oregon, where I live: a transit-oriented development built up around the MAX station starting in the 1990s. The blue region is all the territory I can reach in 30 minutes by public transport. The red region is all the territory I can reach in 30 minutes by car.
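
Conceptually, an isochrone is a shortest-path search that stops once the time budget runs out. Below is a minimal sketch using Dijkstra's algorithm on a made-up network. The node names and travel minutes are invented for illustration, and this is not a claim about how the TravelTime app actually computes its maps.

```python
import heapq


def isochrone(graph, origin, budget_min):
    """Dijkstra's algorithm, stopped at a travel-time budget.

    graph maps node -> list of (neighbor, travel_minutes).
    Returns {node: minutes} for every node reachable within budget_min.
    """
    best = {origin: 0.0}
    frontier = [(0.0, origin)]
    while frontier:
        t, node = heapq.heappop(frontier)
        if t > best[node]:
            continue  # stale queue entry; a faster route was already found
        for nxt, minutes in graph.get(node, []):
            arrival = t + minutes
            if arrival <= budget_min and arrival < best.get(nxt, float("inf")):
                best[nxt] = arrival
                heapq.heappush(frontier, (arrival, nxt))
    return best


# Hypothetical toy networks; node names and minutes are invented for illustration.
transit = {"origin": [("A", 5), ("B", 12)], "A": [("C", 15)], "B": [("D", 25)], "C": [("E", 20)]}
car = {"origin": [("A", 3), ("B", 6)], "A": [("C", 8)], "B": [("D", 12)],
       "C": [("E", 10)], "D": [("F", 15)]}

print(sorted(isochrone(transit, "origin", 30)))
print(sorted(isochrone(car, "origin", 30)))
```

The same nodes connected by faster links yield a larger reachable set within the same 30 minutes, which is the blue-versus-red contrast in the map.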

This illustrates the fundamental tradeoff. Contrary to what Walker claims, urban land is not a fixed commodity; rather, it is defined by what can be reached in a reasonable time. The 30-minute standard comes from Marchetti’s Constant, the observation that cities tend to be structured to afford most citizens a 30-minute one-way commute on average. The geography of the city thus depends on its primary transportation mode.
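
A back-of-the-envelope way to see why the transport mode sets the city's size: if travel were equally fast in every direction, the area reachable in time t at average speed v would be a circle, A = π(vt)². Area grows with the square of speed, so doubling the effective speed roughly quadruples the land within a 30-minute budget. The sketch below assumes illustrative door-to-door speeds; real isochrones are irregular, as the map shows.

```python
import math


def reachable_area_km2(speed_kmh: float, budget_min: float = 30.0) -> float:
    """Area of a circle whose radius is the distance covered within the time budget."""
    radius_km = speed_kmh * budget_min / 60.0
    return math.pi * radius_km ** 2


# Assumed average door-to-door speeds, for illustration only.
for mode, speed in [("walking", 5), ("transit", 20), ("car", 40)]:
    print(f"{mode:8s} {speed:3d} km/h -> {reachable_area_km2(speed):7.1f} km^2")
```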

You can go the car-dependent route and have a geographically large, low-density city, or have a transit-dependent city with Manhattan density, or a super-dense arcology where internal transportation is all walking and biking. In each case, I estimate that the city can support a unified labor market of about 7-10 million people before either travel time or congestion gets out of control. That is, not coincidentally, about how large cities get today. Some metropolitan areas can grow to 20-30 million by relaxing the condition that the workforce has to thoroughly mix throughout the city, and by using hybrid transportation systems that capture the benefits of each mode simultaneously.

There are, of course, other factors that come into play: land use, energy consumption and CO2 emissions, infrastructure and building costs, safety, and aesthetics, among others. Our discussion of land use and transportation policy should be based on such tradeoffs and avoid simplistic “ban cars” slogans.

Oregon Metro’s Transportation Planning

Speaking of transportation, the subject is on my mind now, as Oregon Metro is currently preparing a bond measure for the 2020 ballot and had a work session on the topic this week. Last fall I submitted a comment that the process should be better tied to land use. If a neighborhood or city is to get regional funding for transportation improvements, such as expanded roadways or light rail stops, the region should insist that the city increase its zoned capacity to ensure that the transportation improvement is fully utilized. Otherwise it is unfair to taxpayers in the rest of the region. I would like to see the federal Department of Transportation adopt a similar standard, as HUD has done with affordable housing grants.

Aside from that, I would like to see Metro show a bit more transparency on how they costed and estimated the benefits of proposed projects. Perhaps the information is there, but I haven’t been successful in finding it yet.

Otherwise, I don’t have much more to say now on the merits of proposed projects. What Metro is proposing now seems like a reasonable balance across modes and parts of the region. Said balance might be more the result of the political process than of dispassionate analysis, but I wouldn’t expect otherwise.

Inclusive Wealth

Over the years I occasionally see calls for “better measures of progress” (than GDP) or measures of wealth that take well-being and the environment into account, or some variant thereof. Last week I wrote about how “growth at all costs” is a strawman, not an accurate representation of how economic policy is actually made. This week I took some time to better understand these alternative measures.

One such measure is Inclusive Wealth, which the UN Environment Program tracks. It is a composite measure that adds GDP, changes in the quality of the environment and stocks of natural resources, and some measures of human well-being such as education. The index is meant to show that, with a broader definition of wealth, much of the world is not actually improving, or is even getting worse, in contrast to what the GDP metric tells us.

To start with the positive, I do appreciate the efforts made by ecological economics to quantify the physical basis of wealth. I think this effort is necessary and should be further developed. One of my early influences in energy is work by Ayres and Warr to quantify the role that energy plays in economic growth. They found that it can explain most of the change in total factor productivity in the United States from 1900 to 1970 (beyond which the explanatory power weakens). I do generally like the ecosystem service pricing model, though it has its limitations. And yes, it is important to ask the deeper questions about what well-being is and what causes it.

The Inclusive Wealth metric has several major problems, however, which render the numbers meaningless as a representation of “inclusive” wealth. The most glaring is that scientific and technological knowledge is omitted, except insofar as it is represented in the traditional GDP metric. This renders the calculations on natural capital stock useless, since natural resources are a function both of physical material and of technological capability. Technology is the difference between a usable and an unusable oil or mineral deposit, and technology is what allows us to harvest useful energy from sunlight, wind, uranium ore, waves, tides, the crust’s heat, or deuterium in the ocean. Contrast this with the Simon Abundance Index, which shows real commodity prices declining despite (or because of) a growing population.

I don’t understand the material on well-being well enough to judge its usage in Inclusive Wealth, though what I have seen elsewhere makes me suspicious. That might be the subject for a future post.

In the end, Inclusive Wealth is something that I think could have been a valuable contribution to our understanding of the economy, but it fails in that regard. Behind positive rhetoric about natural capital and well-being, the report carries Malthusian arguments and betrays population anxiety. It makes me wonder if there ever could be a super-metric of wealth that is coherent enough to be used for policy and free of obvious ideological bias. I doubt it, and it should be recognized that policymaking is a pluralistic exercise. An attempt to aggregate mutually incommensurate values into a single metric necessarily masks value judgments with the illusion of objective measurement.

Interstellar Flight Concepts

Marc Millis has a presentation online now from the Tennessee Valley Interstellar Workshop last year. With a grant from NASA, he is building a database of proposed mission architectures for unmanned interstellar probes.

First, I find it wild that NASA is funding such a thing. Projects involving speculative physics, such as warp drives, are expressly included in the scope. It is good to see a small portion of our research efforts going to far-reaching projects, few if any of which are likely to come to fruition in this century.

Second, I like the idea of a public-facing database of proposed solutions. I would like to see much more of this across fields to help the public better find what is available and place it in context. The IAEA maintains a similar database for advanced nuclear reactors, which I have found helpful.

Infrastructure for Artificial Intelligence

I’ve also been thinking about AI this week. Last year, Eric Drexler published Reframing Superintelligence: Comprehensive AI Services as General Intelligence, which I found to be a useful corrective to the prevalent agent-based notion of artificial general/super-intelligence. If I understand the argument (which, I must say, is not the easiest to understand), he envisions decomposing economically useful tasks into a tiled task space, developing a narrow intelligence to perform each task, and stitching AGI together across the tiles.

This is all quite debatable, but Reframing Superintelligence does point to the possibility of an AI infrastructure which, even if it is not structured as Drexler imagines, may be an important concept. To use road networks as an analogy, agents such as those built by DeepMind might be the cars and trucks; what, then, are the roads?

The best-thought-out answer I know of is the Semantic Web, an idea developed by Tim Berners-Lee (inventor of the World Wide Web) as a machine-readable extension of the Web. It is, in effect, a method of encoding knowledge as a graph database of propositions in subject-predicate-object format. It is a very intriguing idea, but one that has not yet gained the traction its advocates had hoped for.
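
To make "propositions in subject-predicate-object format" concrete, here is a toy triple store with wildcard pattern matching and one transitive inference. This is a simplified sketch that uses plain strings; the real Semantic Web uses RDF with URIs and query languages such as SPARQL. The function names are hypothetical, and the facts come from earlier in this post.

```python
def query(triples, s=None, p=None, o=None):
    """Return matching triples, sorted; None acts as a wildcard."""
    return sorted(
        t for t in triples
        if (s is None or t[0] == s) and (p is None or t[1] == p) and (o is None or t[2] == o)
    )


def located_in_closure(triples, subject):
    """Follow 'locatedIn' links transitively, a toy version of machine inference."""
    found, frontier = set(), [subject]
    while frontier:
        current = frontier.pop()
        for _, _, place in query(triples, s=current, p="locatedIn"):
            if place not in found:
                found.add(place)
                frontier.append(place)
    return found


facts = {
    ("OrencoStation", "locatedIn", "Hillsboro"),
    ("Hillsboro", "locatedIn", "Oregon"),
    ("OrencoStation", "servedBy", "MAX"),
}

print(query(facts, s="OrencoStation"))
print(located_in_closure(facts, "OrencoStation"))
```

No one ever stated that Orenco Station is in Oregon, but a machine can derive it from the graph. That kind of derivation is the appeal for automated reasoning.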

I can imagine the Semantic Web, or some analogous concept, playing an important role in the technology stack that ultimately leads us to AGI. It could also be instrumental in realizing the potential of expert systems and automated reasoning, techniques in AI that for years have languished with limited productive results.

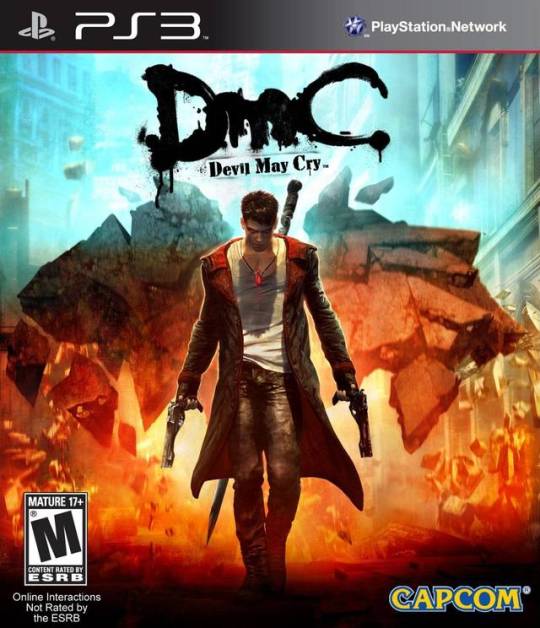

Devil May Cry: Series Retrospective - “DmC: Devil May Cry”

At long last, I sat down and played DmC: Devil May Cry. Well and truly, the dust has settled, the dead horse has been beaten into compost, and the reactionary rages and defenses have died down. And, for myself, I think my fanboy passion for the series has subsided.

A weak Special Edition, a pachinko machine and a bad MvC model later, I hold out no honest hope for the Devil May Cry franchise now. We’ll always have the Temen-ni-Gru Dante...but we’re not getting back together, let’s face it.

So now, when I look at Ninja Theory’s protagonist, who I will still refer to as Donte, the fresh insult he used to be has been replaced with a genuine, tryhard grittiness that just seems cute in an “ah, bless” kind of way. He’s no longer the sour white whale that ate my favourite character and franchise; he’s just a little fish who flops around in a harmlessly funny way.

....before the massive flaws of the game come forth.

This review is based on the PS3 launch version and does its best to criticize it on its own merits/failings, not merely on fan insult or in comparison to the previous games. But it is, after all, called “Devil May Cry”, so its existence as part of a wider franchise isn’t ignored either.

Also, fair warning, this is going to be long as hell. Which is suitable, because it feels like hell sometimes...

Technical Qualities

The engine/optimization is dogshit.

Ninja Theory infamously rejected Capcom’s offer to translate their wunderengine, MT Framework, into English so that they could use it. Instead the team built DmC: Devil May Cry using Unreal Engine 3, which was already starting to look dated by 2010 when the game was announced. For perspective, Unreal Engine 4 was revealed to the public before DmC even came out. The likely reason Ninja Theory chose to stick with Unreal was its developer kit, which was popular with young game creators. Unreal 3 was a ubiquitous engine in the last generation of consoles, being the backbone of games like BioShock Infinite and the Batman: Arkham series. But you’ll be hard pressed to find any games using it that were as fast paced as the Devil May Cry series.

Off the top of my head, games that use Unreal 3 usually have collision and texture pop-in problems. This is less of an issue in first person or isometric games when player movement and camera angles/viewable space are restrained, but it’s disastrous for something like DmC with its wide angle camera, large open areas, dense enemy count and fast player movement.

On the very first mission, within 2 minutes of having control, Donte got stuck in a wall as I tried to go through the level like a normal player. This was followed by a hideous amount of texture pop-in, audio glitches that muted parts of the soundscape, a couple of attacks that didn’t connect with enemies when they should have, and loading times out the arse.

A nasty little secret I only found out from replaying it first hand was that many of the mini-cutscenes (like when Donte looks at the Hunter demon hop around buildings, or does a backflip as he collects his guns) are secretly loading screens, unskippable until the loading operation is completed. All of which are frustrating to have to sit through in such a fast paced game. The way they make such a deal out of the same, generic enemy spawning in by giving it a dramatic close-up every time feels patronizing on repeat fights. “OOOH look! It’s a flying thing again!”. Yeah, no game, these things are easy to kill and I know you’re covering up something with this. Nice try.

Without seeing the development build firsthand, I can’t say for certain why it ran so badly. The release of the Definitive Edition for PS4/XBONE implies that it was a hardware limitation...but....like....that’s what optimization is for: making games run well on older hardware. More on this later, but design choices in level layouts, for instance, can remedy this. You can segment levels in a way that stops you from seeing large areas at a single moment, reducing how much the console needs to render and thus cutting down load times.
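
The idea of segmenting levels can be sketched as room-and-door visibility. The engine only draws the room you are in, plus whatever is reachable through doors that are currently open. The room and door names below are hypothetical. Real engines clip visibility through each doorway (portal) against the camera's view, so this graph walk is only a coarse over-approximation of what they do.

```python
from collections import deque


def rooms_to_render(doors, start, open_doors):
    """Collect rooms visible from `start` by walking only through open doors.

    doors: dict mapping door name -> (room_a, room_b)
    open_doors: set of door names currently open
    """
    adjacency = {}
    for name, (a, b) in doors.items():
        if name in open_doors:
            adjacency.setdefault(a, []).append(b)
            adjacency.setdefault(b, []).append(a)
    visible = {start}
    queue = deque([start])
    while queue:
        room = queue.popleft()
        for nxt in adjacency.get(room, []):
            if nxt not in visible:
                visible.add(nxt)
                queue.append(nxt)
    return visible


# Hypothetical level layout.
doors = {"d1": ("foyer", "hallway"), "d2": ("hallway", "library"), "d3": ("hallway", "courtyard")}

print(rooms_to_render(doors, "foyer", set()))        # doors shut: draw one room
print(rooms_to_render(doors, "foyer", set(doors)))   # open plan: draw everything
```

With every door shut the console draws a single room. With an open-plan layout it has to draw the whole level, which is roughly the tradeoff between Silent Hill-style doors and huge foggy arenas.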

Instead, what we largely got were huge foggy rooms and camera lens flares there to hide unloaded textures. The problem then is that it just, in my opinion at least, doesn’t look very good. Think of how Silent Hill 2 and 3 still manage to look so good by segmenting rooms with doors you can’t see beyond, or how their fog never covers up anything you’re supposed to be looking at. And they manage shorter loading times for it, on consoles a whole generation older.

Another trick is to “hard bake” lighting effects into the level’s textures themselves, rather than relying on extra shader operations. It’s more taxing on hardware to emulate, say, the actual light physics of a red spotlight than to just make the textures of the walls and floor red, using trickery to make it seem like there’s a functioning red light there. Open world games generally don’t have this option, but with Devil May Cry, which is a linear series with rarely changing environments, you can use trickery like this effectively. Instead, DmC has more shaders (many of which look terrible in cutscenes) than it can handle.
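
The difference between baked and dynamic lighting comes down to when the maths happens. Below is a minimal sketch, assuming simple diffuse (N·L) lighting and a tiny hypothetical scene. Dynamic lighting redoes the light calculation for every surface point every frame. Baking does it once, when the level is built, and then each frame just reads back the stored result.

```python
def lambert(normal, light_dir):
    """Diffuse term max(0, N . L) for unit-length vectors."""
    return max(0.0, sum(n * l for n, l in zip(normal, light_dir)))


def bake_lightmap(normals, light_dir, light_color):
    """Compute the light arriving at each texel once, e.g. when the level is built."""
    return [tuple(c * lambert(n, light_dir) for c in light_color) for n in normals]


def shade_baked(albedos, lightmap):
    """Per-frame work: one multiply per channel against the stored light."""
    return [tuple(a * l for a, l in zip(albedo, light)) for albedo, light in zip(albedos, lightmap)]


def shade_dynamic(albedos, normals, light_dir, light_color):
    """Per-frame work: redo the lighting maths for every texel, every frame."""
    return shade_baked(albedos, bake_lightmap(normals, light_dir, light_color))


# Hypothetical scene: a red light directly overhead, three grey surfaces.
normals = [(0.0, 0.0, 1.0), (0.0, 1.0, 0.0), (0.0, 0.0, -1.0)]  # floor, wall, ceiling
albedos = [(0.8, 0.8, 0.8)] * 3
light_dir = (0.0, 0.0, 1.0)  # direction from the surface towards the light
red = (1.0, 0.0, 0.0)

lightmap = bake_lightmap(normals, light_dir, red)  # done once, offline
print(shade_baked(albedos, lightmap))
```

For a static light both paths give identical pixels, but only one of them pays for the lighting every frame. Painting the result straight into the wall and floor textures goes one step further and removes even the multiply. The catch is that baked light cannot react to anything that moves, which matters less in a linear game with rarely changing environments.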

Ninja Theory did a bad job of optimizing their game for their primary hardware. Even with the update there were visual problems, audio glitches and collision bugs throughout the entire game. It’s far from unplayable, but it’s ropey for a AAA game.

Level Design

Before I get into the artistic choices, I want to take a moment to look at the more technical, grounded aspects of how Ninja Theory designed levels.

Most of the previous Devil May Cry games are economic with their level design, reusing areas multiple times over with remixed enemy layouts and the occasional change in lighting, music and even textures. This cuts down on development time, saves disc space, and allows the designers to really put care into each individual location. Resident Evil, the Souls games, and Deus Ex: Human Revolution are other good examples.

DmC had potential for this with its “living city” concept. The best use of this concept is in Mission 2: Home Truths, where Donte visits his and Vurgil’s gigantic childhood home. As you backtrack into familiar hallways and foyers, the corruption of Mundus’ influence causes walls to crack open, pathways to change shape and different enemies to spawn. It’s a great (re)use of assets that trips up your expectations as a player the first time around. It also uses some Metroidvania-style locked doors and obstacles which you need certain abilities/weapons to traverse. The unfortunate limitation of that is that you can literally fly through some levels and skip entire sections of the game on a replay; Mission 3 requires you to unlock the Air Dash move in order to clear a gap that appears early on, but you’ll already have it on a replay, turning a ~20-minute level into a ~3-minute one.

Sequence breaking like this doesn’t happen in any huge way though, due to how each level is an entirely separate area of its own. Likewise, most of these ability/weapon barriers lead to optional bonus areas that are slightly off the beaten path.

Linear level design isn’t inherently bad, but in this case I think it was a huge missed opportunity. Not only is there a parallel real world vs. Limbo premise that has Donte shift from a greyscale, mundane city into a colourful, chaotic image of itself, but that Limbo dimension also has the ability to change in real time. If the level designers had allowed players to shift from dimension to dimension in-game, a la Soul Reaver, or had just played up the “living city” concept in a more interactive way, the city would have been much more interesting and, ironically, would have felt much more alive than it does. Instead we got a linear, albeit pretty, collection of corridors with very little off the beaten path. DmC incentivizes exploration by hiding collectables, but “exploration” ultimately means turning left where you should turn right to find a Lost Soul behind a bin.

One place where they ALMOST got it right is the first Slurm Virility factory level. After a cutscene showing a mixing room, Donte and Kat break from the tour and slowly jog down some empty, boring hallways into an equally empty and boring warehouse. Donte can’t attack or jump in this section, and there is absolutely nothing to interact with. It’s an unfortunately uninteresting forced walking section, only one small step above being an unskippable cutscene. Kat then sprays her squirrel jizz magic circle on the ground, Donte enters the Limbo version of the level, the room expands, the crates become platforms, and the level really begins from there. For reasons I never understood, Donte then has to take a huge route up sets of boxes and across dozens of different rooms to circle back the way he came in. On the way back, he backtracks down the Limbo version of the boring hallways from before, except now they’re slightly less boring, with a few enemies to fight and moving walls and floors. Then you get to the mixing room (which was only shown in a cutscene before) for a brawl, before moving on.

There are two reasons this didn’t work as well as it could have: 1) you only see the real world version of a tiny portion of the level, and 2) said portion is boring as fuck and you don’t interact with it in any meaningful way. But hey, at least the idea was there.

Moments where the living city concept is pushed to the side for more one-off but more effectively done ideas can be found in the upside-down prison, the short prelude to the Bob Barbas fight and Lilith’s rave.

The upside-down prison starts off fairly strong, tapping into one of those childhood ideas we all idly wondered about: what if gravity suddenly shifted? It has moments throughout that give a trippy sense of vertigo, mostly on the car and train bridges, but it unfortunately loses the point as it progresses. Because the prison isn’t just upside-down but also in Limbo, gravity is already unreliable and the bottomless pit below the floor already looks like the sky. Similarly, in the lead-up to the boss fight with Poison, which has you run “down” a vertical pipe, everything looks floaty and weird by default, making further attempts to be floaty and weird just seem...normal. Likewise, the prison is mostly made of bland urban and industrial textures, completely interchangeable with any old warehouse. You quickly forget that you’re upside-down at all.

The setting also well outstays its welcome, taking up 4 entire levels without enough ideas to justify it. There’s even one moment where, after meeting Fineas, you’re told you need to follow a flock of harpies to find their lair....even though their lair is a completely linear set of halls...That says it all really; there was a fun idea in here, but it was executed without the same creativity.

Following that is the tragically short Bob Barbas prelude. THIS is one of the single most interesting concepts in level design I have ever seen. Seriously. I cannot think of any other game that took news graphics and idents and turned them into platforming sections. Even moments during the fight where Donte is dropped into news chopper footage manage to do something brilliantly original, stylish and funny. But as quickly as it came, it’s gone before you know it. It’s a fucking crime that the previous 4 levels didn’t use the same concept to break up the monotony of their urban corridors. They could have had Donte teleport around chunks of the level using the various TV screens with Bob Barbas propaganda on them, hopping across idents until he got to the other side. Shame.

Next up, almost in a moment of clarity from the designers when they realized they could use digital environments and cheesy TV show graphics in their game more than once, we have Lilith’s nightclub. Again, it’s much more interesting than the living city stuff, albeit a bit harsh on the eyes with its lighting effects. There’s not much to say about it beyond “it looks cool”, but it’s worth mentioning that it feels much more focused and fully utilized than the upside-down prison.

All in all, the level design in DmC is at odds with itself, marked by its lost potential. The concepts are interesting, but the execution is almost always lackluster, favouring hand-holdy linear hallways with “cinematic” qualities over more interactive, open spaces with a sense of place. For a game that, pre-release, seemed to want to show us a more fleshed-out world than previous games, it winds up as little more than a flat backdrop.

But oh well, DMC is all about the action happening center stage, right?

Combat

Combat in DmC is a mixed bag.

The number of different attacks available and Donte’s versatility at chaining moves across 5 different weapons is pretty great. I’m a fan of how you can swap special pause combos across your alternate weapons; two quick hits with Rebellion, a pause, then a final triple smash with Arbiter takes a little extra skill to pull off but rewards you with a faster combo than if you just used Arbiter alone. Likewise, little tweaks like how fast Drive can charge now and how it does actual damage unlike Quick Drive in DMC4, or how you can hold Million Stab for longer, are all mostly fun changes. I tend to have a lot of fun with Osiris and find it to be the most versatile weapon for pulling off different combos. Its ability to charge up the more hits it delivers is a good incentive to hook in as many enemies as possible too, even if it means its uncharged state doesn’t do enough damage. Aquila is a fun supplementary weapon, mostly good for distracting one enemy with the circle attack and pulling the rest into range for Osiris. Eryx, however, is rubbish. Its incredibly short range, long charge times and weak damage output really throw it onto the trash pile when Arbiter is right beside it. Also, personal taste, but it just looks stupid. It’s like a slimy set of Hulk Hands. And they don’t even yell “HULK SMASH” when you attack. Previous DMC gauntlets all include a gap-closing dive attack to put you in enemy range, but the Demon Grapple doesn’t work on the large enemies you’ll want to use it against. More on that in a bit.

Guns are mostly pointless. Donte can move laterally so much more easily than before that long range combat is redundant. Charge shots with Ebony & Ivory are like Eryx in that they take too long to charge and don’t do enough damage to be worth the wait. Also, because you need to be in a neutral (non-demon, non-angel) state to fire them, charging them up while you wail on someone only works if you limit yourself to Rebellion. Switching to Demon or Angel weapons resets the charge and limits you to a grapple move.

Which leads to another problem; 4 of your 5 weapons disable the use of guns. I mean, you’re not missing out on much by the end anyway because the guns are boring and ineffectual to use against all but one enemy (the Harpy), but it feels like a mistake. They literally give you guns in cutscenes as an afterthought. Like when Vurgil goes “oh yeah, have this, it’ll kill the next few enemies really quickly then sit in your back pocket for all eternity thereafter”. Donte never feels like he’s earning these guns like he earns the melee weapons, and they never seem to be worth a damn in gameplay.

The grapples are more useful but, again, having two different types feels redundant in combat. Large enemies can’t be pulled towards you, so why not do what DMC4 did and have one grapple that does both jobs; pull small enemies towards you, pull yourself towards larger enemies? The end result in either scenario is to get in melee range, so it shouldn’t make that much of a difference. Considering Aquila has a special attack to pull enemies in, why not offload those moves to the other weapons too? If you want to keep both pull-in and pull-towards moves in combat, why not give, say, Eryx a special pull-in attack so you can swap back to guns easier?

In short; while the combat is versatile and very satisfying to pull off combos with, large parts of it feel badly thought out. The moves and weapons that end up being useless most of the time have enemies spawn after you unlock them, just as an excuse to show how they work.

The infamous “demon attacks for red enemies, angel attacks for blue enemies” gimmick actually wasn’t as bad as I expected. Until I had to fight a Blood Rage and a Ghost Rage at the same fucking time. I don’t think I need to get into it due to how many other people have complained, but it was just fucking infuriating to say the least.

Okay, so.....Devil May Cry 3 did it better. Most people don’t seem to know this, but DMC3 gave you damage bonuses if you used the right weapon against the right enemies, signified by a subtle particle effect. Nowhere in the enemy or weapon descriptions does it explain this, but if you use your head (or just experiment) you can generally figure it out. Beowulf is a light weapon and Doppelganger is a shadow monster, so using light on it does extra elemental damage, signified by a flash effect with each hit. Cerberus is an ice weapon and Abysses are liquidy enemies, so using ice on them freezes them, signified by an icicle effect. Etc. But most importantly, it never STOPS you from using the “wrong” weapon against enemies. I don’t think I need to go into how annoying it is when your combat flow is interrupted by your angel weapon PINGing off a red enemy, but god damn it.
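
The difference between the two designs boils down to a soft rule versus a hard gate. The sketch below is hypothetical: the function names and the 1.5x bonus multiplier are assumptions for illustration, not DMC3's actual damage values.

```python
from typing import Optional


def dmc3_damage(base: float, weapon_element: str, enemy_weakness: str, bonus: float = 1.5) -> float:
    """Soft rule: the right element earns a bonus; any weapon still does damage."""
    return base * bonus if weapon_element == enemy_weakness else base


def dmc_damage(base: float, weapon_alignment: str, required_alignment: Optional[str]) -> float:
    """Hard rule: colour-coded enemies ignore hits from the 'wrong' weapon type."""
    if required_alignment is not None and weapon_alignment != required_alignment:
        return 0.0  # the hit "pings" off
    return base


print(dmc3_damage(100, "light", "light"))  # Beowulf (light) vs. Doppelganger
print(dmc3_damage(100, "ice", "light"))    # wrong element, still full base damage
print(dmc_damage(100, "angel", "demon"))   # angel weapon vs. red enemy
```

Under the soft rule the wrong choice only costs you some efficiency. Under the hard gate it costs you the combo.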

Credit where credit is due: Ninja Theory did emphasize the right part of DMC’s combat when they opted to focus on combos over balance. Both 3 and 4 had broken combos and attacks that skilled players could easily pull off, but using them would make combat boring, and the games all relied on an honour system to prevent abuse. If you were good enough to use Pandora to break enemy shields in 4, you were good enough to not abuse it.

Then again, a game’s combat is only as good as its enemies.

Enemies/Bosses

So it’s a real shame then that enemies and bosses don’t push you hard enough.

The AI is atrocious. NO hack n’ slash should have two hardcore enemies accidentally kill each other without you noticing. The mixing room in the Slurm levels pits you against two Tyrants/the big fat dudes who charge at you. There’s an easy-to-avoid pitfall in the middle of this room. Once, on hard mode no less, they spawned in as usual and one accidentally nudged the other into the pit, insta-killing him while I literally stood still and watched...

Most regular man-sized enemies (Stygians, Death Knights, and their variations) share a common problem of just not attacking first, opting to side step around you forever until you run at them. Luckily there usually is one aggressive enemy mixed in there, like the flying guys with guns or the screamy-chainsaw men, so you’ll be forced to dodge into their range, but it’s embarrassing when they’re isolated. You’re left standing there, charging a finishing attack with Eryx like you have your dick in your hand, and these things are just strafing around you, doing nothing. So you miss with Eryx, step forward, and anti-climactically twat them about with Rebellion just to get it over with.

At first I thought this combat shyness was a design choice, but then it happened with the final boss, revealing it to be a pathfinding bug. But more on that later...

So yes, the red/blue enemy gimmick is bullshit and breaks the flow of a room-sweeping combo you have going, but it actually works really well with the Witch enemy who hangs back, projecting shields onto other enemies while she snipes at you from a distance. She’s annoying to hunt down when you’re dealing with 10 other enemies, so you have to prioritize whether you want to plough through them first or clumsily chase her down first. It’s a nice dynamic to fights, adding that extra layer of strategy to mix things up in a less punishing way.

The main difference with the Witch and the other colour coded enemies is that the Witch gives you options. Blood/Ghost Rages do not, and make fights involving them feel like complete chores. You’ll find the one tactic that works, then rely on it every time.

No, the most egregious enemies were the bosses.

All of them, every single one, was terrible. Not including the Dream Runner mini-bosses, there was a total of 6, less than any of the other DMCs, which makes how sloppily designed they were all the more horrendous. Every single boss is formulaic, partitioned out into “segments” cut up by mini cutscenes that have Donte do something sassy when he works them down enough. But each of those segments tend to have Donte repeat the same, boring, tired tactic until the fight is over. Bob Barbas is the worst example; jump over his beams, use that one Eryx attack to slam into the nonsensical floor buttons, wail on him for a third of his health bar, kill 10 minor enemies in his news world, repeat two more times.

No matter what difficulty you’re on, these bosses never manage to be a challenge due to how placid they are. They always accommodate the little “formula” you need to solve to beat them.

It’s baffling, because the previously mentioned Dream Runner mini-bosses are great. They’re aggressive, reactive, open to almost any combo you can outwit them with, and don’t force you to repeat the same set of steps in every encounter.

Vurgil on the other hand....

So, here we are, the grand finale. The ultimate evil has revealed itself, and it’s your own brother! You’re clearly a badass because you just took down Satan himself along with his army, so surely the only thing left that could challenge you is your more experienced twin.

Well, he would, if his AI didn’t start the show by consistently suffering from that same pathfinding bug that makes minor enemies interminably strafe around you. So far so good for my first playthrough. So I attack him, maybe hit him 5 times before a mini-cutscene rears its head because I’ve suddenly made it into the next stage. Same thing happens once or twice. Then, somehow, Vurgil’s model freezes in the air during one of his attacks. He hangs there indefinitely until I attack him again. Then, at the end of the fight where he’s summoned a clone (because he can do that apparently, not that he’s ever so much as referenced the fact) so his real self can take a knee and heal, I’m supposed to use Devil Trigger to move him out of the way and finish the job (though I don’t understand why the real Vurgil isn’t also thrown into the air). I do so, but the clone lingers on the ground for a moment, trying to attack me before just zipping into the sky; another bug. I attack the real Vurgil, but nothing happens at first. I keep wailing on him, hoping that one of my attacks will eventually collide and then, -Scene Missing-, the final cutscene of the battle plays.

Do I need to say any more? Do you see what a fucking mess the boss fights are? The final battle for humanity, the emotional crux of the story, the update to DMC3’s unsurpassed final boss fight, reduced to a buggy, embarrassing slap fight that gave me four glitches on my first playthrough.

The whole thing bungled the climax of its story. But, then again, was the story really that sacred to begin with....

Concept and Story

I promise I will not use the word “edgy” here.

Satire and social commentary, no matter how cartoonish, are a weird fit in a Devil May Cry game. DMC2 had an evil businessman too, and 4 ended with you punching the Pope in the face, but neither seemed to say anything substantial against capitalism or religion. They existed in a much more fantastical place, where any sort of commentary was aimed at a more philosophical target. “What makes us human? What makes us into demons? What is hell like? Is family more important than what you feel is right?” The previous games all center on a much more personal, individualistic identity crisis, not on any sort of populist, society-wide problem.

DmC brings up surveillance states, the most recent economic crisis and late capitalism, soft drink addiction/declining nutrition, news manipulation, the prison-industrial complex, conspiracy culture, populist revolt, some scant mentions of mental institutions, hacktivism, and the Occupy movement. These topics, all of which are pretty damn serious and warrant long discussions, are simply decoration for a story about fantasy demons secretly running the world, They Live-style. Hell, it basically IS They Live, only the aliens are demons and the tools of control are more contemporary. (Somehow there’s nothing about the internet in there, though...)

All in all, its treatment of modern issues is childishly simple at best and cynical at worst. Sure, the game presents itself as defying capitalism and social engineering via advertising, but it then goes on to launch an ad and hype campaign bigger than any of the previous games’, spanning billboards, phone apps, social media promotion, the usual games media rounds and expensive pre-rendered television commercials. Hell, they even had an ad for their ad! All of this amid a gigantic fan backlash and in-fighting with games journalists over whether people were mad about Donte’s hair colour or were just outright entitled.

The fact that lead designer and writer Tameem Antoniades responded to this backlash and feedback by tweaking Donte’s design and adding a random moment where a wig literally drops out of the sky onto Donte’s head, as a jab at this “controversy”, says something about the intent behind his story: there is no real political statement behind DmC. It simply pulls from what was in the news at the time and uses it as fodder for an otherwise archetypal plot.

The problem is that it tries to do this while also talking about hellish demons, heavenly angels and earthly humans. Well, mostly demons, because the angels are absent from the plot and Donte doesn’t seem to have any sort of Angel Trigger, and the only named human character is Kat, who doesn’t have much to do in the story; she’s there to be rescued and to provide minimal help, for which she gets a pat on the back from Donte. So demons rule the world, the angels are absent, and the people who suffer are us lowly humans. But it’s a half-demon, half-angel who “saves” us all/reduces the city to rubble, while all we humans can do is post about it on Twitter. Doesn’t sound very empowering to me.

The main villain should say it all. He’s some sort of businessman/oligarch/banker/economist/military commander/mayor/Satan, but he makes the undeniable point that he gave human civilization its structure. He has a wife he at least somewhat cares about, and a child he has high hopes for. He and his wife show more emotion than any of our protagonists, and they have more at stake than anyone else, with a genuine vision for the future, no less. So when he very reasonably asks Donte what his goal is, all Donte can say is “freedom” and “revenge”, before continuing to childishly taunt him when pressed further. I could go on about how unhealthy our generation’s obsession with the post-apocalypse is, but suffice it to say: Donte is not someone to look up to.

Donte himself, and by extension his story, has no real ideological motivation despite being dressed up as an anarchist. His motivations and arc as a character are no less two-dimensional than the original Dante’s, but now manage to be overstated and hamfisted, with an added veneer of “politics”. Vurgil points out how much he’s supposedly changed right before the final boss fight, but that change doesn’t include any strong statement of intent. What does Donte want? Fucked if I know! Fucked if he knows.

All of this says nothing about how... well... plain bad the writing is. The dialogue is famously cringeworthy and the plot has more holes than a sponge.

If Mundus was hunting Donte to kill him this whole time, why can’t he find him despite having multiple cameras aimed directly at his house? Why didn’t he just kill him when Donte was in the orphanage run by “demon scum”? Where was Vurgil this whole time? Why does Kat need to hit the Hunter with a molotov? Actually, what the fuck is she doing in the real world while this is happening? Are people just ignoring this pixie girl throwing bottles around a pier? What’s that weird dimension Donte goes into to unlock new powers? If it’s his own head, why are Mundus’ demons in it? And why would it change his weapons? Why doesn’t he have an Angel Trigger? If Vurgil can do all that cool shit he does in his boss fight at the end, including opening a fucking portal to another dimension, why does he need to rely on Kat to hop dimensions earlier on? Or rely on anyone, for that matter? Why does he have white hair when he’s born, but Donte has black hair until the end? If Mundus is immortal, why does he need an heir? Why does time randomly slow down after Vurgil shoots Lilith? How did Kat know the layout of so many floors in Mundus’ tower? Surely he didn’t give her a tour of the whole building, right? Did Donte and Vurgil fuck the entire planet by releasing demons onto Earth and destroying the world’s economies and governments? Or are there pre-existing governments anyway?

Seriously, I could go on forever.

Beyond basic plot, logic and diegetic continuity (the rules of DmC’s world, and how it suspends your disbelief), you get into more subjective questions like “is Donte a likable character?”

I, perhaps surprisingly, think he is. He’s such a tryhard asshole for the majority of his game, never stopping to think about what he’s doing or to engage with the They Live world he lives in, that he is, honestly, a bit adorable. He’s not someone I’d ever have the patience to hang out with in real life, but he is at least consistent. He’s a total lughead and he almost blows up the planet, but it makes sense that a nihilistic, “act first, think later” bro would do that.

And I think that sums up his story too; dumber than it thinks, but entertaining all the same. It’s a different kind of dumb than the original games, a kind of dumb that stares at the camera wall-eyed instead of with a sideways wink.

Conclusion

As of writing, I consider Devil May Cry to be dead as a series. With no solid news from Capcom on further projects for seven years now, DmC: Devil May Cry is the swan song of the entire franchise. Well, beyond shitty cameo costumes in Dead Rising 4, or pachinko machines or whatever.

Likewise, more recent hack-and-slash series like Bayonetta, Metal Gear Rising and NieR: Automata have risen to challenge Devil May Cry for its crown, and without something better than Ninja Theory’s efforts to stop them, they’ll probably get it.

DmC is not a complete trainwreck. It’s enjoyable, worth the second-hand price and 10+ hours of your time. It’s entertaining in a similar way a bad film is; so long as you don’t expect too much from it, you’ll have a laugh. Let go of your bitterness toward Ninja Theory and Tameem and you’ll poke fun at it in a less mean-spirited way than your fan rage wants you to. DMC deserved to end on a better note than this, but... honestly... fuck it. Capcom probably couldn’t make anything much better themselves these days anyway.

Treat DmC like a pug: malformed and lumpy, probably should have been neutered a generation ago, but funny to look at and play with, even though it’s covered in its own slobber.


Nestled among the many indistinguishable buildings of Microsoft’s Redmond campus, a multi-disciplinary team sharing an attention to detail that borders on fanatical is designing a keyboard… again and again and again. And one more time for good measure. Their dogged and ever-evolving dedication to “human factors” shows the amount of work that goes into making any piece of hardware truly ergonomic.

Microsoft may be known primarily for its software and services, but cast your mind back a bit and you’ll find a series of hardware advances that have redefined their respective categories.

The original Natural Keyboard was the first split-key, ergonomic keyboard, the fundamentals of which have only ever been slightly improved upon.

The Intellimouse Optical not only made the first truly popular leap away from ball-based mice, but did so in such a way that its shape and buttons still make its descendants among the best all-purpose mice on the market.

[Image caption: “Remember me?”]

Although the Zune is remembered more for being a colossal boondoggle than a great music player, it was very much the latter, and I still use and marvel at the usability of my Zune HD. Yes, seriously. (Microsoft, open source the software!)

More recently, the Surface series of convertible notebooks has made bold and welcome changes to a form factor that had stagnated in the wake of Apple’s influential mid-2000s MacBook Pro designs.

Microsoft is still making hardware, of course, and in fact it has doubled down on its ability to do so with a revamped hardware lab filled with dedicated, extremely detail-oriented people who are given the tools they need to get as weird as they want — as long as it makes something better.

You don’t get something like this by aping the competition.

First, a disclosure: this piece came about essentially at the invitation (but not the direction) of Microsoft, which offered the opportunity to visit its hardware labs in Building 87 and meet the team. I’d actually been there a few times before, but those visits had always been off the record and rather sanitized.

Knowing how interesting I’d found the place before, I decided I wanted to take part and share it at the risk of seeming promotional. They call this sort of thing “access journalism,” but the second part is kind of a stretch. I just think this stuff is really cool, and companies seldom expose their design processes in the open like this. Microsoft obviously isn’t the only company to have hardware labs and facilities like this, but they’ve been in the game for a long time and have an interesting and almost too detailed process they’ve decided to be open about.

Although I spoke with perhaps a dozen Microsoft Devices people during the tour (which was still rigidly structured), only two were permitted to be on record: Edie Adams, chief ergonomist, and Yi-Min Huang, principal design and experience lead. But the other folks in the labs were very obliging in answering questions and happy to talk about their work. I was genuinely surprised and pleased to find people occupying niches so suited to their specialties and inclinations.

Broadly speaking, the work I got to see fell into three spaces: the Human Factors Lab, focused on very exacting measurements of people themselves and how they interact with a piece of hardware; the anechoic chamber, where the sound of devices is obsessively analyzed and adjusted; and the Advanced Prototype Center, where devices and materials can go from idea to reality in minutes or hours.

The science of anthropometry

Inside the Human Factors lab, human thumbs litter the table. No, it isn’t a torture chamber — not for humans, anyway. Here the company puts its hardware to the test by measuring how human beings use it, recording not just simple metrics like words per minute on a keyboard, but high-speed stereo footage that analyzes how the skin of the hand stretches when it reaches for a mouse button, down to a fraction of a millimeter.

The trend here, as elsewhere in the design process and labs, is that you can’t rule anything out as a factor in comfort; the little things really do make a difference, and sometimes the microscopic ones do too.

“Feats of engineering heroics are great,” said Adams, “but they have to meet a human need. We try to cover the physical, cognitive and emotional interactions with our products.”

(Perhaps you take this, as I did, as a veiled reference, in addition to a statement of purpose, to a certain other company whose keyboards have been in the news for other reasons. More on this later.)

The lab is a space perhaps comparable to a medium-sized restaurant, with enough room for a dozen or so people to work in the various sub-spaces set aside for different highly specific measurements. Various models of body parts have been set out on work surfaces, I suspect for my benefit.

Among them are that set of thumbs, in little cases looking like oversized lipsticks, each with a disturbing surprise inside. These are all cast from real people, ranging from the small thumb of a child to a monster to which, should it start a war with mine, I would surrender unconditionally.

Next door is a collection of ears, not only rendered in extreme detail but with different materials simulating a variety of rigidities. Some people have soft ears, you know. And next door to those is a variety of noses, eyes and temples, each representing a different facial structure or interpupillary distance.

This menagerie of parts represents not just a continuum of sizes but a variety of backgrounds and ages. All of them come into play when creating and testing a new piece of hardware.

“We want to make sure that we have a diverse population we can draw on when we develop our products,” said Adams. When you distribute globally it is embarrassing to find that some group or another, with wider-set eyes or smaller hands, finds your product difficult to use. Inclusivity is a many-faceted gem; indeed, it has as many facets as you are willing to cut. (The Xbox Adaptive Controller, for instance, is a new and welcome one.)

In one corner stands an enormous pod that looks like Darth Vader should emerge from it. This chamber, equipped with 36 DSLR cameras, produces an unforgivingly exact reproduction of one’s head. I didn’t do it myself, but many on the team had; in fact, one eyes-and-nose combo belonged to Adams. The fellow you see pictured below also works in the lab; that was the first such 3D portrait they took with the rig.

With this they can quickly and easily scan in dozens or hundreds of heads, collecting metrics on all manner of physiognomical features and creating an enviable database of both average and outlier heads. My head is big, if you want to know, and my hand was on the upper range too. But well within a couple of standard deviations.

So much for static study — getting reads on the landscape of humanity, as it were. Anthropometry, they call it. But there are dynamic elements as well, some of which they collect in the lab, some elsewhere.

“When we’re evaluating keyboards, we have people come into the lab. We try to put them in the most neutral position possible,” explained Adams.

It should be explained that by neutral, she means specifically with regard to the neutral positions of the joints in the body, which have certain minima and maxima it is well to observe. How can you get a good read on how easy it is to type on a given keyboard if the chair and desk the tester is sitting at are uncomfortable?

Here as elsewhere the team strives to collect both objective and subjective data; people will say they think a keyboard, or mouse, or headset is too this or too that, but, not knowing the jargon, they can’t get more specific. By listening to subjective evaluations and simultaneously looking at objective measurements, you can align the two and discover practical measures to take.

One such objective measure involves motion capture beads attached to the hand while an electromyographic bracelet tracks the activation of muscles in the arm. Imagine, if you will, a person whose typing appears normal and of uniform speed, but who in reality is putting more force on their middle fingers than the others because of the shape of the keys or rest. They might not be able to tell you they’re doing so, even though it will lead to uneven hand fatigue, but this combination of tools could reveal it.

“We also look at a range of locations,” added Huang. “Typing on a couch is very different from typing on a desk.”

One case, such as a wireless Surface keyboard, might require more of what Huang called “lapability,” (sp?) while the other perhaps needs to accommodate a different posture and can abandon lapability altogether.

A final measurement technique, quite new to my knowledge, involves a pair of high-resolution, high-speed black-and-white cameras that can be focused narrowly on a region of the body. They’re on the right, below, with colors and arrows representing motion vectors.

[Image caption: A display showing various anthropometric measurements.]

These produce a very detailed depth map by closely tracking the features of the skin; one little patch might move farther than another when a person puts on a headset, suggesting it’s stretching the skin on the temple more than on the forehead. The team said they can see movements as small as 10 microns, or micrometers (so you see that my headline was only slight hyperbole).

You might be thinking that this is overkill. And in a way it most certainly is. But it is also true that by looking closer they can make the small changes that cause a keyboard to be comfortable for five hours rather than four, or to reduce error rates or wrist pain by noticeable amounts — features you can’t really even put on the box, but which make a difference in the long run. The returns may diminish, but we’re not so far along the asymptote approaching perfection that there’s no point to making further improvements.

The quietest place in the world

Down the hall from the Human Factors lab is the quietest place in the world. That’s not a colloquial exaggeration — the main anechoic chamber in Building 87 at Microsoft is in the record books as the quietest place on Earth, with an official ambient noise rating of negative 20.3 decibels.

You enter the room through a series of heavy doors and the quietness, though a void, feels like a physical medium that you pass into. And so it is, in fact — a near-total lack of vibrations in the air that feels as solid as the nested concrete boxes inside which the chamber rests.

I’ve been in here a couple of times before, and Hundraj Gopal, the jovial and highly expert proprietor of quietude here, skips the usual tales of Guinness coming to test it and so on. Instead we talk about the value of sound to the consumer, though they may not even realize they value it.

Naturally if you’re going to make a keyboard, you’re going to want to control how it sounds. But this is a surprisingly complex process, especially if, like the team at Microsoft, you’re really going to town on the details.

The sounds of consumer products are very deliberately designed, they explained. The sound your car door makes when it shuts gives a sense of security — being sealed in when you’re entering, and being securely shut out when you’re leaving it. It’s the same for a laptop — you don’t want to hear a clank when you close it, or a scraping noise when you open it. These are the kinds of things that set apart “premium” devices (and cars, and controllers, and furniture, etc.) and they do not come about by accident.

Keyboards are no exception. And part of designing the sound is understanding that there’s more to it than loudness or even tone. Some sounds just sound louder, though they may not register as high in decibels. And some sounds are just more annoying, though they might be quiet. The study and understanding of this is what’s known as psychoacoustics.

There are known patterns to pursue, certain combinations of sounds that are near-universally liked or disliked, but you can’t rely on that kind of thing when you’re, say, building a new keyboard from the ground up. And obviously, when you create a new family of machines like the Surface, they need new keyboards, not something off the shelf. So this is a process that has to be done from scratch over and over.

As part of designing the keyboard — and keep in mind, this is in tandem with the human factors mentioned above and the rapid prototyping we’ll touch on below — the device has to come into the anechoic chamber and have a variety of tests performed.

[Image caption: A standard head model used to simulate how humans might hear certain sounds. The team gave it a bit of a makeover.]

These tests can be painstakingly objective, like a robotic arm pressing each key one by one while a high-end microphone records the sound in perfect fidelity and analysts pore over the spectrogram. But they can also be highly subjective: They bring in trained listeners — “golden ears” — to give their expert opinions, but also have the “gen pop” everyday users try the keyboards while experiencing calibrated ambient noise recorded in coffee shops and offices. One click sound may be lost in the broad-spectrum hubbub in a crowded cafe but annoying when it’s across the desk from you.

This feedback goes in both directions, to human factors and to prototyping, and they iterate and bring it back for more. Sometimes this progresses through multiple phases of hardware, such as the keyswitch assembly alone; the keys built into their metal enclosure; the keys in the final near-shipping product before they finalize the keytop material, and so on.

Indeed, it seems like the process really could go on forever if someone didn’t stop them from refining the design further.

“It’s amazing that we ever ship a product,” quipped Adams. They can probably thank the Advanced Prototype Center for that.

Rapid turnaround is fair play

If you’re going to be obsessive about the details of the devices you’re designing, it doesn’t make a lot of sense to have to send off a CAD file to some factory somewhere, wait a few days for it to come back, then inspect for quality, send a revised file, and so on. So Microsoft (and of course other hardware makers of any size) now use rapid prototyping to turn designs around in hours rather than days or weeks.

This wasn’t always possible, even with the best equipment. 3D printing has come a long way over the last decade, and continues to advance, but not long ago there was a huge difference between a printed prototype and the hardware that a user would actually hold.

Multi-axis CNC mills have been around for longer, but they’re slower and more difficult to operate. And subtractive manufacturing (i.e. taking a block and whittling it down to a mouse) is inefficient and has certain limitations as far as the structures it can create.

Of course, you could carve it yourself out of wood or soap, but that’s a bit old-fashioned.

So when Building 87 was redesigned from the ground up some years back, it was loaded with the latest and greatest of both additive and subtractive rapid manufacturing methods, and the state of the art has been continually rolling through ever since. Even as I passed through they were installing some new machines (desk-sized things that had slots for both extrusion materials and ordinary printer ink cartridges, a fact that for some reason I found hilarious).

The additive machines are in constant use as designers and engineers propose new device shapes and styles that sound great in theory but must be tested in person. Having a bunch of these things, each able to produce multiple items per print, lets you for instance test out a thumb scoop on a mouse with 16 slightly different widths. Maybe you take those over to Human Factors and see which can be eliminated for over-stressing a joint, then compare comfort on the surviving six and move on to a new iteration. That could all take place over a day or two.

[Image caption: Ever wonder what an Xbox controller feels like to a child? Just print a giant one in the lab.]

Softer materials have become increasingly important as designers have found that they can be integrated into products from the start. For instance, a wrist rest for a new keyboard might have foam padding built in.

But how much foam is too much, or too little? As with the 3D printers, flat materials like foam and cloth can be customized and systematically tested as well. Using a machine called a skiver, foam can be split into thicknesses only half a millimeter apart. It doesn’t sound like much — and it isn’t — but when you’re creating an object that will be handled for hours at a time by the sensitive hands of humans, the difference can be subtle but substantial.

For more heavy-duty prototyping of things that need to be made out of metal — hinges, laptop frames and so on — there is bank after bank of five-axis CNC machines, lathes and more exotic tools, like a system that performs extremely precise cuts using a charged wire.

The engineers operating these things work collaboratively with the designers and researchers, and it was important to the people I talked to that this wasn’t a “here, print this” situation. A true collaboration has input from both sides, and that is what seems to be happening here. Someone inspecting a 3D model for printability before popping it into the five-axis might say to the designer, you know, these pieces could fit together more closely if we did so-and-so, and it would actually add strength to the assembly. (Can you tell I’m not an engineer?) Making stuff, and making stuff better, is a passion among the crew, and that’s a fundamentally creative drive.

Making fresh hells for keyboards

If any keyboard has dominated the headlines for the last year or so, it’s been Apple’s ill-fated butterfly-switch keyboard on the latest MacBook Pros. Besides being, in my opinion, quite unpleasant to type on, these keyboards appeared to fail at an astonishing rate, judging by the proportion of users I saw personally reporting problems, and they are quite expensive to replace. How, I wondered, did a company with Apple’s design resources create such a dog?

[Image caption: Here’s a piece of hardware you won’t break any time soon.]

I mentioned the subject to the group toward the end of the tour, but, predictably and understandably, it wasn’t really something they wanted to talk about. A short time later, though, I spoke with one of Microsoft’s reliability managers. They too demurred on the topic of Apple’s failures, opting instead to describe at length the measures Microsoft takes to ensure that its own keyboards don’t suffer a similar fate.

The philosophy is essentially to simulate everything about the expected three-to-five-year life of the keyboard. I’ve personally seen the “torture chambers” where devices are beaten on by robots (years ago; they’re brutal), but there’s more to it than that. Keyboards are everyday objects, and they face everyday threats; so that’s what the team tests, with things falling into three general categories:

Environmental: This includes cycling the temperature from very low to very high and exposing the keyboard to dust and UV. This differs for each product, as some will obviously be used outside more than others. Does it break? Does it discolor? Where does the dust go?

Mechanical: Every keyboard undergoes key tests to make sure that keys can withstand however many million presses without failing. But that’s not the only thing that keyboards undergo. They get dropped and things get dropped on them, of course, or left upside-down, or have their keys pressed and held at weird angles. All these things are tested, and when a keyboard fails because of a test they don’t have, they add it.

Chemical: I found this very interesting. The team now has more than 30 chemicals that it exposes its hardware to, including: lotion, Coke, coffee, chips, mustard, ketchup and Clorox. The team is constantly adding to the list as new chemicals enter frequent usage or new markets open up. Hospitals, for instance, need to test a variety of harsh disinfectants that an ordinary home wouldn’t have. (Note: Burt’s Bees is apparently bad news for keyboards.)

Testing is ongoing, with new batches being evaluated continuously as time allows.

To be honest, it’s hard to imagine that Apple’s disappointing keyboard actually underwent this kind of testing, or, if it did, that it was modified to survive it. The number and severity of problems I’ve heard of with them suggest the “feats of engineering heroics” of which Adams spoke, but directed single-mindedly toward compactness. Perhaps more torture chambers are required at Apple HQ.

7 factors and the unfactorable

All the above are more tools for executing a design and not for creating one to begin with. That’s a whole other kettle of fish, and one not so easily described.

Adams told me: “When computers were on every desk the same way, it was okay to only have one or two kinds of keyboard. But now that there are so many kinds of computing, it’s okay to have a choice. What kind of work do you do? Where do you do it? I mean, what do we all type on now? Phones. So it’s entirely context dependent.”

[Image caption: Is this the right curve? Or should it be six millimeters higher? Let’s try both.]

Yet even in the great variety of all possible keyboards there are metrics that must be considered if that keyboard is to succeed in its role. The team boiled it down to seven critical points:

Key travel: How far a key goes until it bottoms out. Neither shallow nor deep is necessarily better; they serve different purposes.

Key spacing: Distance between the center of one key and the next. How far can you differ from “full-size” before it becomes uncomfortable?

Key pitch: On many keyboards the keys do not all “face” the same direction, but are subtly pointed toward the home row, because that’s the direction from which your fingers hit them. How much is too much? How little is too little?

Key dish: The shape of the keytop limits your fingers’ motion, captures them when they travel or return and provides a comfortable home — if it’s done right.

Key texture: Too slick and fingers will slide off. Too rough and it’ll be uncomfortable. Can it be fabric? Textured plastic? Metal?

Key sound: As described above, the sound indicates a number of things and has to be carefully engineered.

Force to fire: How much actual force does it take to drive a given key to its actuation point? Keep in mind this can and perhaps should differ from key to key.

In addition to these core concepts there are many secondary ones that pop up for consideration: wobble, or the amount a key moves laterally (yes, this is deliberate); snap ratio, involving the feedback from actuation; drop angle; off-axis actuation; key gap for chiclet boards… and of course the inevitable switch debate.

Keyboard switches, the actual mechanism under the key, have become a major sub-industry as many companies started making their own at the expiration of a few important patents. Hence there’s been a proliferation of new key switches with a variety of aspects, especially on the mechanical side. Microsoft does make mechanical keyboards, and scissor-switch keyboards, and membrane as well, and perhaps even some more exotic ones (though the original touch-sensitive Surface cover keyboard was a bit of a flop).

“When we look at switches, whether it’s for a mouse, QWERTY, or other keys, we think about what they’re for,” said Adams. “We’re not going to say we’re scissor switch all the time or something — we have all kinds. It’s about durability, reliability, cost, supply and so on. And the sound and tactile experience is so important.”

As for the shape itself, there is generally the divided Natural style, the flat full style and the flat chiclet style. But with design trends, new materials, new devices and changes to people and desk styles (you better believe a standing desk needs a different keyboard than a sitting one), it’s a new challenge every time.

They collected a menagerie of keyboards and prototypes in various stages of experimentation. Some were obviously never meant for real use; one had the keys pitched so far that it was like a little cave for the home row. Another was an experiment in how far a design could be shrunk before it was no longer usable. A handful showed different curves à la Natural. Which is the right one? Although you can theorize, the only way to be sure is to lay hands on it. So you tell rapid prototyping to make variants 1-10, then send them over to Human Factors to test the stress and posture resulting from each one.

“Sure, we know the gable slope should be between 10-15 degrees and blah blah blah,” said Adams, who is actually on the patent for the original Natural Keyboard, and so is about as familiar as you can get with the design. “But what else? What is it we’re trying to do, and how are we achieving that through engineering? It’s super fun bringing all we know about the human body and bringing that into the industrial design.”

Although the comparison is rather grandiose, I was reminded of an orchestra — but not in full swing. Rather, in the minutes before a symphony begins, and all the players are tuning their instruments. It’s a cacophony in a way, but they are all tuning toward a certain key, and the din gradually makes its way to a pleasant sort of hum. So it is that a group of specialists all tending their sciences and creeping toward greater precision seem to cohere a product out of the ether that is human-centric in all its parts.

Read more: https://techcrunch.com/2019/07/26/how-microsoft-turns-an-obsession-with-detail-into-micron-optimized-keyboards/

How Microsoft turns an obsession with detail into micron-optimized keyboards



Nestled among the many indistinguishable buildings of Microsoft’s Redmond campus, a multi-disciplinary team sharing an attention to detail that borders on fanatical is designing a keyboard… again and again and again. And one more time for good measure. Their dogged and ever-evolving dedication to “human factors” shows the amount of work that goes into making any piece of hardware truly ergonomic.

Microsoft may be known primarily for its software and services, but cast your mind back a bit and you’ll find a series of hardware advances that have redefined their respective categories.

The original Natural Keyboard was the first split-key, ergonomic keyboard, the fundamentals of which have only ever been slightly improved upon.

The Intellimouse Optical not only made the first truly popular leap away from ball-based mice, but did so in such a way that its shape and buttons still make its descendants among the best all-purpose mice on the market.


Although the Zune is remembered more for being a colossal boondoggle than a great music player, it was very much the latter, and I still use and marvel at the usability of my Zune HD. Yes, seriously. (Microsoft, open source the software!)

More recently, the Surface series of convertible notebooks has made bold and welcome changes to a form factor that had stagnated in the wake of Apple's influential mid-2000s MacBook Pro designs.

Microsoft is still making hardware, of course, and in fact it has doubled down on its ability to do so with a revamped hardware lab filled with dedicated, extremely detail-oriented people who are given the tools they need to get as weird as they want — as long as it makes something better.


First, a disclosure: I may as well say at the outset that this piece was done essentially at the invitation (but not direction) of Microsoft, which offered the opportunity to visit their hardware labs in Building 87 and meet the team. I’d actually been there before a few times, but it had always been off-record and rather sanitized.

Knowing how interesting I'd found the place before, I decided I wanted to take part and share it at the risk of seeming promotional. They call this sort of thing "access journalism," but the second part is kind of a stretch. I just think this stuff is really cool, and companies seldom expose their design processes in the open like this. Microsoft obviously isn't the only company to have hardware labs and facilities like this, but they've been in the game for a long time and have an interesting and almost too detailed process they've decided to be open about.

Although I spoke with perhaps a dozen Microsoft Devices people during the tour (which was still rigidly structured), only two were permitted to be on record: Edie Adams, chief ergonomist, and Yi-Min Huang, principal design and experience lead. But the other folks in the labs were very obliging in answering questions and happy to talk about their work. I was genuinely surprised and pleased to find people occupying niches so suited to their specialties and inclinations.

Generally speaking, the work I got to see fell into three areas: the Human Factors Lab, focused on very exacting measurements of people themselves and how they interact with a piece of hardware; the anechoic chamber, where the sound of devices is obsessively analyzed and adjusted; and the Advanced Prototype Center, where devices and materials can go from idea to reality in minutes or hours.

The science of anthropometry

Inside the Human Factors lab, human thumbs litter the table. No, it isn’t a torture chamber — not for humans, anyway. Here the company puts its hardware to the test by measuring how human beings use it, recording not just simple metrics like words per minute on a keyboard, but high-speed stereo footage that analyzes how the skin of the hand stretches when it reaches for a mouse button, down to a fraction of a millimeter.

The trend here, as elsewhere in the design process and labs, is that you can’t count out anything as a factor that increases or decreases comfort; the little things really do make a difference, and sometimes the microscopic ones.

“Feats of engineering heroics are great,” said Adams, “but they have to meet a human need. We try to cover the physical, cognitive and emotional interactions with our products.”

(Perhaps you take this, as I did, as a statement of purpose and also a veiled reference to a certain other company whose keyboards have been in the news for other reasons. More on this later.)

The lab is a space perhaps comparable to a medium-sized restaurant, with enough room for a dozen or so people to work in the various sub-spaces set aside for different highly specific measurements. Various models of body parts have been set out on work surfaces, I suspect for my benefit.

Among them is that set of thumbs, in little cases looking like oversized lipsticks, each with a disturbing surprise inside. These are all cast from real people, ranging from the small thumb of a child to a monster to which, should it start a war with mine, I would surrender unconditionally.

Next door is a collection of ears, not only rendered in extreme detail but with different materials simulating a variety of rigidities. Some people have soft ears, you know. And next door to those is a variety of noses, eyes and temples, each representing a different facial structure or interpupillary distance.

This menagerie of parts represents not just a continuum of sizes but a variety of backgrounds and ages. All of them come into play when creating and testing a new piece of hardware.

“We want to make sure that we have a diverse population we can draw on when we develop our products,” said Adams. When you distribute globally it is embarrassing to find that some group or another, with wider-set eyes or smaller hands, finds your product difficult to use. Inclusivity is a many-faceted gem; indeed, it has as many facets as you are willing to cut. (The Xbox Adaptive Controller, for instance, is a new and welcome one.)

In one corner stands an enormous pod that looks like Darth Vader should emerge from it. This chamber, equipped with 36 DSLR cameras, produces an unforgivingly exact reproduction of one’s head. I didn’t do it myself, but many on the team had; in fact, one eyes-and-nose combo belonged to Adams. The fellow you see pictured below also works in the lab; that was the first such 3D portrait they took with the rig.

With this they can quickly and easily scan in dozens or hundreds of heads, collecting metrics on all manner of physiognomical features and creating an enviable database of both average and outlier heads. My head is big, if you want to know, and my hand was on the upper range too. But well within a couple standard deviations.

So much for static study — getting reads on the landscape of humanity, as it were. Anthropometry, they call it. But there are dynamic elements as well, some of which they collect in the lab, some elsewhere.

“When we’re evaluating keyboards, we have people come into the lab. We try to put them in the most neutral position possible,” explained Adams.

It should be explained that by neutral, she means specifically with regard to the neutral positions of the joints in the body, which have certain minima and maxima it is well to observe. How can you get a good read on how easy it is to type on a given keyboard if the chair and desk the tester is sitting at are uncomfortable?

Here as elsewhere the team strives to collect both objective data and subjective data; people will say they think a keyboard, or mouse, or headset is too this or too that, but not knowing the jargon they can’t get more specific. By listening to subjective evaluations and simultaneously looking at objective measurements, you can align the two and discover practical measures to take.

One such objective measure involves motion capture beads attached to the hand while an electromyographic bracelet tracks the activation of muscles in the arm. Imagine, if you will, a person whose typing appears normal and of uniform speed, but who in reality is putting more force on their middle fingers than on the others because of the shape of the keys or rest. They might not be able to tell you they're doing so, though it will lead to uneven hand fatigue; this combination of tools, however, could reveal it.

“We also look at a range of locations,” added Huang. “Typing on a couch is very different from typing on a desk.”

One case, such as a wireless Surface keyboard, might require more of what Huang called “lapability,” (sp?) while the other perhaps needs to accommodate a different posture and can abandon lapability altogether.

A final measurement technique, quite new to my knowledge, involves a pair of high-resolution, high-speed black-and-white cameras that can be focused narrowly on a region of the body. They're on the right, below, with colors and arrows representing motion vectors.

A display showing various anthropometric measurements.

These produce a very detailed depth map by closely tracking the features of the skin; one little patch might move farther than another when a person puts on a headset, suggesting it's stretching the skin on the temple more than on the forehead. The team said they can see movements as small as 10 microns, or micrometers (so you see that my headline was only light hyperbole).

You might be thinking that this is overkill. And in a way it most certainly is. But it is also true that by looking closer they can make the small changes that cause a keyboard to be comfortable for five hours rather than four, or to reduce error rates or wrist pain by noticeable amounts — features you can’t really even put on the box, but which make a difference in the long run. The returns may diminish, but we’re not so far along the asymptote approaching perfection that there’s no point to making further improvements.

The quietest place in the world

Down the hall from the Human Factors lab is the quietest place in the world. That’s not a colloquial exaggeration — the main anechoic chamber in Building 87 at Microsoft is in the record books as the quietest place on Earth, with an official ambient noise rating of negative 20.3 decibels.

You enter the room through a series of heavy doors and the quietness, though a void, feels like a physical medium that you pass into. And so it is, in fact — a near-total lack of vibrations in the air that feels as solid as the nested concrete boxes inside which the chamber rests.

I’ve been in here a couple of times before, and Hundraj Gopal, the jovial and highly expert proprietor of quietude here, skips the usual tales of Guinness coming to test it and so on. Instead we talk about the value of sound to the consumer, though they may not even realize they do value it.