#powershell scripts examples

Text

Efficient Management of Multi-Server Queries: A T-SQL and PowerShell Approach

In today’s interconnected world, managing data across multiple SQL Server instances is a common scenario for many organizations. Whether for reporting, data aggregation, or monitoring, running the same query across these servers efficiently is paramount. This article explores practical techniques and tools to achieve this, focusing on T-SQL, linked servers, Central Management Server (CMS), and…

0 notes

Text

In the near future one hacker may be able to unleash 20 zero-day attacks on different systems across the world all at once. Polymorphic malware could rampage across a codebase, using a bespoke generative AI system to rewrite itself as it learns and adapts. Armies of script kiddies could use purpose-built LLMs to unleash a torrent of malicious code at the push of a button.

Case in point: as of this writing, an AI system is sitting at the top of several leaderboards on HackerOne—an enterprise bug bounty system. The AI is XBOW, a system aimed at whitehat pentesters that “autonomously finds and exploits vulnerabilities in 75 percent of web benchmarks,” according to the company’s website.

AI-assisted hackers are a major fear in the cybersecurity industry, even if their potential hasn’t quite been realized yet. “I compare it to being on an emergency landing on an aircraft where it’s like ‘brace, brace, brace’ but we still have yet to impact anything,” Hayden Smith, the cofounder of security company Hunted Labs, tells WIRED. “We’re still waiting to have that mass event.”

Generative AI has made it easier for anyone to code. The LLMs improve every day, new models spit out more efficient code, and companies like Microsoft say they’re using AI agents to help write their codebase. Anyone can spit out a Python script using ChatGPT now, and vibe coding—asking an AI to write code for you, even if you don’t have much of an idea how to do it yourself—is popular; but there’s also vibe hacking.

“We’re going to see vibe hacking. And people without previous knowledge or deep knowledge will be able to tell AI what it wants to create and be able to go ahead and get that problem solved,” Katie Moussouris, the founder and CEO of Luta Security, tells WIRED.

Vibe hacking frontends have existed since 2023. Back then, a purpose-built LLM for generating malicious code called WormGPT spread on Discord groups, Telegram servers, and darknet forums. When security professionals and the media discovered it, its creators pulled the plug.

WormGPT faded away, but other services that billed themselves as blackhat LLMs, like FraudGPT, replaced it. But WormGPT’s successors had problems. As security firm Abnormal AI notes, many of these apps may have just been jailbroken versions of ChatGPT with some extra code to make them appear as if they were a stand-alone product.

Better then, if you’re a bad actor, to just go to the source. ChatGPT, Gemini, and Claude are easily jailbroken. Most LLMs have guardrails that prevent them from generating malicious code, but there are whole communities online dedicated to bypassing those guardrails. Anthropic even offers a bug bounty to people who discover new jailbreaks in Claude.

“It’s very important to us that we develop our models safely,” an OpenAI spokesperson tells WIRED. “We take steps to reduce the risk of malicious use, and we’re continually improving safeguards to make our models more robust against exploits like jailbreaks. For example, you can read our research and approach to jailbreaks in the GPT-4.5 system card, or in the OpenAI o3 and o4-mini system card.”

Google did not respond to a request for comment.

In 2023, security researchers at Trend Micro got ChatGPT to generate malicious code by prompting it into the role of a security researcher and pentester. ChatGPT would then happily generate PowerShell scripts based on databases of malicious code.

“You can use it to create malware,” Moussouris says. “The easiest way to get around those safeguards put in place by the makers of the AI models is to say that you’re competing in a capture-the-flag exercise, and it will happily generate malicious code for you.”

Unsophisticated actors like script kiddies are an age-old problem in the world of cybersecurity, and AI may well amplify their profile. “It lowers the barrier to entry to cybercrime,” Hayley Benedict, a Cyber Intelligence Analyst at RANE, tells WIRED.

But, she says, the real threat may come from established hacking groups who will use AI to further enhance their already fearsome abilities.

“It’s the hackers that already have the capabilities and already have these operations,” she says. “It’s being able to drastically scale up these cybercriminal operations, and they can create the malicious code a lot faster.”

Moussouris agrees. “The acceleration is what is going to make it extremely difficult to control,” she says.

Hunted Labs’ Smith also says that the real threat of AI-generated code is in the hands of someone who already knows the code in and out who uses it to scale up an attack. “When you’re working with someone who has deep experience and you combine that with, ‘Hey, I can do things a lot faster that otherwise would have taken me a couple days or three days, and now it takes me 30 minutes.’ That's a really interesting and dynamic part of the situation,” he says.

According to Smith, an experienced hacker could design a system that defeats multiple security protections and learns as it goes. The malicious bit of code would rewrite its malicious payload as it learns on the fly. “That would be completely insane and difficult to triage,” he says.

Smith imagines a world where 20 zero-day events all happen at the same time. “That makes it a little bit more scary,” he says.

Moussouris says that the tools to make that kind of attack a reality exist now. “They are good enough in the hands of a good enough operator,” she says, but AI is not quite good enough yet for an inexperienced hacker to operate hands-off.

“We’re not quite there in terms of AI being able to fully take over the function of a human in offensive security,” she says.

The primal fear that chatbot code sparks is that anyone will be able to do it, but the reality is that a sophisticated actor with deep knowledge of existing code is much more frightening. XBOW may be the closest thing to an autonomous “AI hacker” that exists in the wild, and it’s the creation of a team of more than 20 skilled people whose previous work experience includes GitHub, Microsoft, and a half a dozen assorted security companies.

It also points to another truth. “The best defense against a bad guy with AI is a good guy with AI,” Benedict says.

For Moussouris, the use of AI by both blackhats and whitehats is just the next evolution of a cybersecurity arms race she’s watched unfold over 30 years. “It went from: ‘I’m going to perform this hack manually or create my own custom exploit,’ to, ‘I’m going to create a tool that anyone can run and perform some of these checks automatically,’” she says.

“AI is just another tool in the toolbox, and those who do know how to steer it appropriately now are going to be the ones that make those vibey frontends that anyone could use.”

9 notes

Note

Hi, person with some musical and technical knowledge here, with both questions and some follow-ups to other people's questions:

Regarding the dynamics question, it's implemented in MIDI via velocity, so, in theory, if you just increase the velocity by a set amount at a certain point, for a certain string (p, ff, etc), it should work fine

Following on from that, using Capital Letters to denote accents (raise the velocity for a single note, then return to the baseline) ought to work nicely

Then, taking it to a stupid extreme, text styling, for example, if it's in the cursive style, make it legato, which then opens the tin of worms of digital legato, but, making the notes playback for 105% of their regular length works way fucking better than it should

Have you considered a method of implementing octaves? I've thought about it a bit, and, other than general musical direction (for example, if the last three notes 123 are all lower than the previous note, drop 4 by an octave, vice versa for up, no change for other situations), I don't think it's very possible to implement in a musical way

How do you select the instrument for each post?

Have you considered using a system like LilyPond in order to generate scores for each post?

OK, that's everything I can think of, if you have any more questions, feel free to ask, well, me, I suppose, despite that not being how tumblr asks normally work

To be completely honest I don't want to deal with dynamics, if I do add them it'll probably be in like version 3 of the script at best, version 2 is already gonna be an undertaking.

See above

Copy pasting text from tumblr into powershell means it does not carry the formatting into the script and I really don't want to do that manually

I definitely want to add octaves, but figuring out an intuitive way of doing it that allows for maximum manipulation by asks is proving difficult. Your idea is good but I'd rather go for something

If you look closely at the formatted text you'll see some letters bolded and italicized, those are the letters used to choose the instrument. I've explained in further detail in the past but basically it just sees what instrument it can find in the text first.

I have never heard of lilypond and I do not want to learn entirely new notation software for silly gimmick blog, sorry. if you want the midi file for one of the posts so far i haven't deleted any though
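The velocity, accent, and legato ideas from the ask can be sketched in a few lines. This is not the blog's script (which, going by the reply, is written in PowerShell); it is a minimal Python illustration, and the velocity table, the accent boost, and the letter-to-pitch mapping are all made-up assumptions.

```python
from dataclasses import dataclass

# Hypothetical velocity levels for dynamic markings; the values are illustrative.
DYNAMICS = {"pp": 33, "p": 49, "mp": 64, "mf": 80, "f": 96, "ff": 112}
ACCENT_BOOST = 20      # assumed bump for a capital letter
LEGATO_FACTOR = 1.05   # 105% of the regular note length, as suggested in the ask


@dataclass
class Note:
    pitch: int       # MIDI note number, 0-127
    velocity: int    # MIDI velocity, 1-127
    duration: float  # length in beats


def letter_to_note(letter, baseline, legato=False):
    """Map one letter to a note; capitals are accented, legato stretches the note."""
    pitch = 60 + (ord(letter.lower()) - ord("a")) % 12  # toy pitch mapping
    velocity = baseline + (ACCENT_BOOST if letter.isupper() else 0)
    duration = 1.0 * (LEGATO_FACTOR if legato else 1.0)
    return Note(pitch, min(velocity, 127), duration)


notes = [letter_to_note(ch, DYNAMICS["mf"]) for ch in "aBc"]
print([n.velocity for n in notes])  # the capital B gets the accent boost
```

The accent only touches the one note, so the next note falls back to the baseline without any extra bookkeeping.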

16 notes

Text

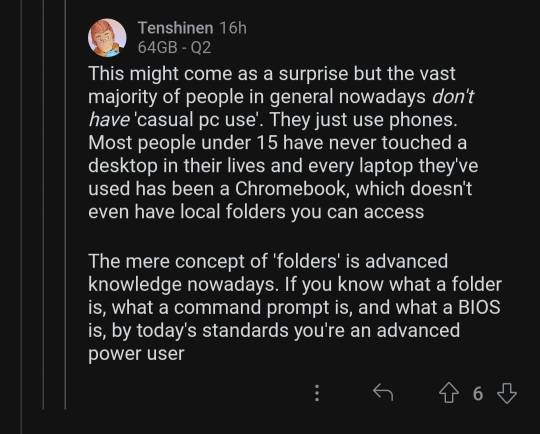

Okay to be honest, 99% of users never need to access the BIOS. (Although if you do PC gaming, you may need to get in there to overclock your GPU. Though frankly they have apps for that too nowadays).

Anyway, BIOS is the very very very most basic level of firmware for your motherboard. When you turn on your PC, the BIOS is what tells everything what drive to use, how to boot up the operating system, how much memory you have installed, etc. When you see the splash screen on startup (AKA a screen that briefly displays the manufacturer name of your device, like "Dell" or whatever), the BIOS has already run. To access BIOS, you generally need to hold down a key when you first power on your device. Which key may differ by manufacturer, so just google "how to enter bios Dell" (or whatever your brand is, like Lenovo, Asus ROG, etc). But, uh, please don't actually change anything in your BIOS unless you know exactly what you're doing and why. It's a good way to make your device stop turning on forever.

Command line is much more useful. (You may also have heard terms like CMD, console, command prompt, CLI, shell, or terminal; these are technically not all synonymous, but they're basically synonymous for your purposes.) It's what you see every movie hacker using, where it's a black window with nothing but lines of text and a blinking cursor. You can launch it by hitting your Windows button and typing "CMD".

Command lines are useful to do technical things more quickly than going through a graphical interface. For example, let's say you've finally decided to switch to Firefox. You want to do it tomorrow, but hey, you could at least get it downloaded and installed tonight, right? So you could launch Chrome, navigate to "firefox.com", click Download, save the file, go to your Downloads folder, double-click the executable, and click through the installation prompts. Or... you could launch CMD and type "winget install firefox -h && shutdown /s /t 0". When you hit enter, the Windows built-in package manager will download and silently install Firefox and will turn off your PC once it finishes.

The big learning curve for command lines is that, compared to a graphical interface where you have clickable buttons and pages that show you all your options, command lines generally require you to know what commands you want to run, and what syntax they take. Different command line programs require different command languages, too. Windows Command Prompt uses "CMD", but Windows also has a more advanced command line called Powershell, which uses the language "Powershell." You can also run Visual Basic scripts that use VBS or VBA. The thing that makes Linux so tricky for most people is that the command line terminal is your primary way of interacting with the computer, using the language "Bash". It requires a lot of memorization; when you first start using any of these, google and superuser.com are your friends.

this can't be true can it

#i love explaining shit to ppl. please feel free to send me asks if u have more questions #computers #how to use computers

99K notes

Text

Is Coding Knowledge Necessary to Become a DevOps Engineer?

As the demand for DevOps professionals grows, a common question arises among aspiring engineers: Do I need to know how to code to become a DevOps engineer? The short answer is yes—but not in the traditional software developer sense. While DevOps doesn’t always require deep software engineering skills, a solid understanding of scripting and basic programming is critical to succeed in the role.

DevOps is all about automating workflows, managing infrastructure, and streamlining software delivery. Engineers are expected to work across development and operations teams, which means writing scripts, building CI/CD pipelines, managing cloud resources, and occasionally debugging code. That doesn’t mean you need to develop complex applications from scratch—but you do need to know how to write and read code to automate and troubleshoot effectively.

Many modern organizations rely on DevOps services and solutions to manage cloud environments, infrastructure as code (IaC), and continuous deployment. These solutions are deeply rooted in automation—and automation, in turn, is rooted in code.

Why Coding Matters in DevOps

At the core of DevOps lies a principle: "Automate everything." Whether you’re provisioning servers with Terraform, writing Ansible playbooks, or integrating a Jenkins pipeline, you're dealing with code-like instructions that need accuracy, logic, and reusability.

Here are some real-world areas where coding plays a role in DevOps:

Scripting: Automating repetitive tasks using Bash, Python, or PowerShell

CI/CD Pipelines: Creating automated build and deploy workflows using YAML or Groovy

Configuration Management: Writing scripts for tools like Ansible, Puppet, or Chef

Infrastructure as Code: Using languages like HCL (for Terraform) to define cloud resources

Monitoring & Alerting: Writing custom scripts to track system health or trigger alerts

In most cases, DevOps engineers are not expected to build full-scale applications, but they must be comfortable with scripting logic and integrating systems using APIs and automation tools.
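As a small illustration of the "Monitoring & Alerting" item above, here is a minimal sketch of a disk-usage check. The function names and the 90% threshold are assumptions chosen for the example; a real setup would usually send the message to an alerting tool rather than print it.

```python
import shutil


def disk_usage_percent(path="/"):
    """Return how full the filesystem containing `path` is, as a percentage."""
    usage = shutil.disk_usage(path)
    return 100 * usage.used / usage.total


def check_disk(path="/", threshold=90.0):
    """Return an alert message if usage is above the threshold, else None."""
    percent = disk_usage_percent(path)
    if percent > threshold:
        return f"ALERT: {path} is {percent:.1f}% full (threshold {threshold}%)"
    return None


if __name__ == "__main__":
    message = check_disk("/", threshold=90.0)
    print(message or "Disk usage OK")
```

Run on a schedule (cron, a CI job, or a systemd timer), a script like this is often the first piece of monitoring code a DevOps engineer writes.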

A quote by Kelsey Hightower, a Google Cloud developer advocate, says it best: “If you don’t automate, you’re just operating manually at scale.” This highlights the importance of coding as a means to bring efficiency and reliability to infrastructure management.

Example: Automating Deployment with a Script

Let’s say your team manually deploys updates to a web application hosted on AWS. Each deployment takes 30–40 minutes, involves multiple steps, and is prone to human error. A DevOps engineer steps in and writes a shell script that pulls the latest code, runs unit tests, builds the Docker image, and deploys it to ECS.

This 30-minute process is now reduced to a few seconds and can run automatically after every code push. That’s the power of coding in DevOps—it transforms tedious manual work into fast, reliable automation.
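The example above describes a shell script; the same sequence of steps can be sketched in Python. The image name, cluster, and service below are placeholders, and the sketch assumes git, Docker, and the AWS CLI are installed and configured. Pushing the image to a registry is left out because the scenario does not mention it.

```python
import subprocess

# Hypothetical commands: the image name, cluster, and service are placeholders.
DEPLOY_STEPS = [
    ["git", "pull"],
    ["python", "-m", "unittest", "discover"],
    ["docker", "build", "-t", "my-app:latest", "."],
    ["aws", "ecs", "update-service", "--cluster", "my-cluster",
     "--service", "my-service", "--force-new-deployment"],
]


def run_steps(steps, dry_run=True):
    """Run each command in order and stop at the first failure."""
    completed = []
    for command in steps:
        print("->", " ".join(command))
        if not dry_run:
            # check=True raises CalledProcessError on a non-zero exit code,
            # so a failing test run prevents the build and deploy steps.
            subprocess.run(command, check=True)
        completed.append(command)
    return completed


if __name__ == "__main__":
    run_steps(DEPLOY_STEPS, dry_run=True)  # prints the plan without running it
```

Defaulting to a dry run is a deliberate choice here: the script shows what it would do until someone opts in to the real deployment.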

For fast-paced environments like startups, this is especially valuable. If you’re wondering what's the best DevOps platform for startups, the answer often includes those that support automation, scalability, and low-code integrations—areas where coding knowledge plays a major role.

Can You Start DevOps Without Coding Skills?

Yes, you can start learning DevOps without a coding background, but you’ll need to pick it up along the way. Begin with scripting languages like Bash and Python. These are essential for automating tasks and managing systems. Over time, you’ll encounter environments where understanding YAML, JSON, or even basic programming logic becomes necessary.

Many DevOps as a service companies emphasize practical problem-solving and automation, which means you'll constantly deal with code-based tools—even if you’re not building products yourself. The more comfortable you are with code, the more valuable you become in a DevOps team.

The Bottom Line and CTA

Coding is not optional in DevOps—it’s foundational. While you don’t need to be a software engineer, the ability to write scripts, debug automation pipelines, and understand basic programming logic is crucial. Coding empowers you to build efficient systems, reduce manual intervention, and deliver software faster and more reliably.

If you’re serious about becoming a high-impact DevOps engineer, start learning to code and practice by building automation scripts and pipelines. It’s a skill that will pay dividends throughout your tech career.

Ready to put your coding skills into real-world DevOps practice? Visit Cloudastra Technology: Cloudastra DevOps as a Service and explore how our automation-first approach can elevate your infrastructure and software delivery. Whether you're a beginner or scaling up your team, Cloudastra delivers end-to-end DevOps services designed for growth, agility, and innovation.

0 notes

Text

What Tools and Software Do I Need to Begin a Python Programming Online Course?

Introduction

Are you ready to begin your Python programming journey but unsure what tools to install or where to begin? You're not alone. Starting a new skill, especially in tech, can feel overwhelming without proper guidance. But here's the good news: learning Python online is simple and accessible, even for absolute beginners. Whether you're aiming to automate tasks, analyze data, or build applications, enrolling in a python course with certificate ensures you get structured guidance and industry-relevant tools. Knowing the right setup from day one makes the process smoother. In this guide, we’ll walk you through all the essential tools and software you need to succeed in your Python online training with certification.

Why Choosing the Right Tools Matters

Choosing the right setup from the beginning can significantly influence your learning curve. According to a Stack Overflow Developer Survey, Python continues to be one of the most loved programming languages, largely due to its simplicity and extensive tooling support. The correct tools:

Boost your productivity

Help you debug errors more easily

Enable seamless hands-on practice

Create a professional development environment

Let’s explore what you need to get started.

Computer Requirements for Python Programming

Before diving into software, ensure your computer meets the minimum requirements:

Operating System: Windows 10/11, macOS, or Linux

Processor: At least dual-core (Intel i3 or equivalent)

RAM: Minimum 4GB (8GB preferred)

Storage: 5-10 GB free space for installations and projects

You don’t need a high-end machine, but a stable and responsive system will make the experience smoother.

Python Installation

a. Official Python Installer

To get started with your Python programming online course, you must first install Python itself.

Visit the official Python website: https://www.python.org

Download the latest version (preferably Python 3.11+)

During installation, check the box that says "Add Python to PATH"

Once installed, verify it by opening your terminal (Command Prompt or Terminal) and typing:

python --version

You should see the version number printed.
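The same check can be done from inside Python, which is handy when more than one version is installed. This is a small sketch; the helper name `meets_minimum` is made up for the example, and the 3.11 threshold simply mirrors the recommendation above.

```python
import sys


def meets_minimum(version_info=sys.version_info, minimum=(3, 11)):
    """Return True if the interpreter is at least the given (major, minor)."""
    return tuple(version_info[:2]) >= minimum


print("Running Python", sys.version.split()[0])
print("Meets the 3.11+ recommendation:", meets_minimum())
```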

b. Python via Anaconda (Alternative)

If you’re interested in data science or machine learning, Anaconda is a robust option. It includes Python along with pre-installed libraries like NumPy, pandas, and Jupyter Notebook.

Download from: https://www.anaconda.com

Code Editors and Integrated Development Environments (IDEs)

Choosing a development environment can impact your workflow and how easily you understand programming concepts.

a. Visual Studio Code (VS Code)

Free, lightweight, and beginner-friendly

Supports extensions for Python, Git, and Jupyter

IntelliSense for auto-completion

Integrated terminal

b. PyCharm

Available in Community (free) and Professional versions

Built-in debugging and version control support

Project-based management

c. Jupyter Notebook

Best for interactive learning and data visualization

Supports markdown + Python code cells

Ideal for data science and machine learning

d. Thonny

Built for beginners

Simple interface

Easy to visualize variables and code flow

Command Line Interface (CLI)

Understanding how to use the terminal is important as many tasks are faster or only available through it. While you're enrolled in the best Python online training, you'll often need to interact with the terminal for running scripts, managing environments, or installing packages.

Windows users can use Command Prompt or PowerShell, whereas macOS/Linux users should use the built-in Terminal.

Basic commands like cd, ls (dir in Command Prompt), mkdir, and python help you navigate and run programs easily.

Package Managers

a. pip (Python's default package manager)

You’ll often need third-party libraries. Use pip to install them:

pip install package_name

Example:

pip install requests

b. Conda (Used with Anaconda)

Useful for managing environments and dependencies in complex projects:

conda install numpy
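After installing, it can be useful to confirm that a package is actually available to the interpreter you are running. A minimal sketch using only the standard library follows; the helper name `is_installed` is an assumption for illustration.

```python
import importlib.util


def is_installed(module_name):
    """Return True if `module_name` can be imported in this environment."""
    return importlib.util.find_spec(module_name) is not None


for name in ["requests", "numpy"]:
    if is_installed(name):
        print(f"{name}: installed")
    else:
        print(f"{name}: missing (try: pip install {name})")
```

Note that the import name is not always the same as the name you give pip: the beautifulsoup4 package, for example, is imported as bs4.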

Version Control Tools

a. Git

Git is essential for tracking changes, collaborating, and managing your code.

Install Git from: https://git-scm.com

Create a GitHub account for storing and sharing your code

Basic Git commands:

git init

git add .

git commit -m "Initial commit"

git push origin main

Virtual Environments

To avoid conflicts between projects, use virtual environments:

python -m venv myenv

source myenv/bin/activate # macOS/Linux

myenv\Scripts\activate # Windows

This isolates dependencies, making your projects clean and manageable.
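The commands above are the usual way to work with virtual environments. For the curious, the same thing can be done from Python through the standard-library venv module. The sketch below creates a throwaway environment in a temporary directory and shows one common way to tell whether the running interpreter is inside an environment; the function names are made up for the example.

```python
import sys
import tempfile
import venv
from pathlib import Path


def in_virtualenv():
    """Return True when the running interpreter belongs to a virtual environment."""
    return sys.prefix != sys.base_prefix


def create_env(path):
    """Create a virtual environment at `path` (without pip, to keep it quick)."""
    venv.create(path, with_pip=False)
    return Path(path) / "pyvenv.cfg"


if __name__ == "__main__":
    print("Inside a virtual environment:", in_virtualenv())
    with tempfile.TemporaryDirectory() as tmp:
        cfg = create_env(Path(tmp) / "myenv")
        print("Environment created:", cfg.exists())
```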

Python Libraries for Beginners

Here are some essential libraries you'll likely use during your Python online training with certification:

NumPy – Arrays and numerical computing

pandas – Data analysis and manipulation

matplotlib/seaborn – Data visualization

requests – Making HTTP requests

beautifulsoup4 – Web scraping

Install using:

pip install numpy pandas matplotlib requests beautifulsoup4

Online Code Runners and IDEs

If you don’t want to install anything locally, use cloud-based IDEs:

Replit – Create and run Python projects in the browser

Google Colab – Ideal for data science and machine learning

JupyterHub – Hosted notebooks for teams and individuals

Optional but Helpful Tools

a. Docker

Advanced users may use Docker to containerize their Python environments. It’s not essential for beginners but it's good to know.

b. Notion or Evernote

Use these for taking structured notes, saving code snippets, and tracking progress.

c. Markdown Editors

Helps you write clean documentation and README files:

Typora

Obsidian

Sample Beginner Setup: Step-by-Step

Here’s a simple roadmap to set up your system for a Python programming online course:

Download and install Python from python.org

Choose and install an IDE (e.g., VS Code or Thonny)

Install Git and set up a GitHub account

Learn basic terminal commands

Create a virtual environment

Practice using pip to install libraries

Write and run a "Hello, World!" program

Save your project to GitHub

Real-World Example: Learning Python for Automation

Let’s say you want to automate a repetitive task like renaming files in bulk.

Tools Used:

VS Code

Python (installed locally)

os and shutil modules (part of the standard library)

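import os

import shutil

folder = '/path/to/files'

# Rename every regular file to file_0.txt, file_1.txt, and so on.

# Note: a file that is already named file_N.txt may be overwritten.

for count, filename in enumerate(sorted(os.listdir(folder))):

    src = os.path.join(folder, filename)

    if not os.path.isfile(src):

        continue  # skip subdirectories

    dst = os.path.join(folder, f"file_{count}.txt")

    shutil.move(src, dst)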

Within a few lines of code, you can automate time-consuming work. That’s the power of Python.

Common Pitfalls to Avoid

Not Adding Python to PATH: Causes terminal errors

Skipping virtual environments: Leads to dependency issues

Overloading with too many tools: Stick to the basics until you're comfortable

Conclusion

Starting a Python programming online course doesn’t require expensive hardware or dozens of tools. With the right setup (Python installed, a good IDE, and some essential libraries), you’re all set to begin coding confidently. Enrolling in the best python course online ensures you get the right guidance and hands-on experience. Your journey in Python online training with certification becomes smoother when your environment supports your growth.

Key Takeaways:

Use Python 3.11+ for latest features and compatibility

VS Code and Thonny are excellent beginner IDEs

Learn terminal basics to navigate and run scripts

Use pip or Conda to manage Python packages

Version control your code with Git and GitHub

Take the first step today: install your tools, write your first program, and begin your journey with confidence. The world of Python is waiting for you!

0 notes

Text

Scope

Scope is how PowerShell defines where constructs like variables, aliases, and functions can be read and changed. When you're learning to write scripts, you need to know what you have access to, what you can change, and where you can change it. If you don't understand how scope works, your code might not work as you expect it to.

Types of scope

Let's talk about the various scopes:

Global scope. When you create constructs like variables in this scope, they continue to exist for the rest of your session, even after the script or function that created them has finished. Anything that's present when you start a new PowerShell session, such as automatic variables and items defined in your profile, is in this scope.

Script scope. When you run a script file, a script scope is created. For example, a variable or a function defined in the file is in the script scope, and it no longer exists after the file has finished running. You can also create a variable in the script file and target the global scope, but you need to state that scope explicitly by prefixing the variable name with the global scope modifier, as in $global:name.

Local scope. The local scope is the current scope, and can be the global scope or any other scope.

Scope rules

Scope rules help you understand what values are visible at a given point. They also help you understand how to change a value.

Scopes can nest. A scope can have a parent scope. A parent scope is an outer scope, outside of the scope you're in. For example, a local scope can have the global scope as a parent scope. Conversely, a scope can have a nested scope, also known as a child scope.

Items are visible in the current and child scopes. An item, like a variable or a function, is visible in the scope in which it's created. By default, it's also visible in any child scopes. You can change that behavior by making the item private within the scope.

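Items can be changed only in the scope where they were created. By default, you can change an item only in the scope in which it was created; assigning to the same name in a child scope creates a new local item instead of changing the parent's. You can change this behavior by explicitly specifying a different scope.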
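The three rules can be made concrete with a toy model. The sketch below is written in Python rather than PowerShell and is not how PowerShell is implemented; the Scope class and its method names are invented purely to mirror the rules described here: reads walk up through parent scopes, writes land in the current scope, and private items are hidden from children.

```python
class Scope:
    """Toy model of nested scopes; an illustration, not PowerShell's implementation."""

    def __init__(self, parent=None):
        self.parent = parent
        self.items = {}        # name -> value
        self.private = set()   # names hidden from child scopes

    def set(self, name, value, private=False):
        # Assignment always lands in the current (local) scope.
        self.items[name] = value
        if private:
            self.private.add(name)
        else:
            self.private.discard(name)

    def get(self, name):
        # Look in the current scope first, then walk up through the parents.
        if name in self.items:
            return self.items[name]
        scope = self.parent
        while scope is not None:
            if name in scope.items and name not in scope.private:
                return scope.items[name]
            scope = scope.parent
        raise KeyError(name)

    def root(self):
        # The outermost scope plays the role of the global scope.
        scope = self
        while scope.parent is not None:
            scope = scope.parent
        return scope


global_scope = Scope()
script_scope = Scope(parent=global_scope)

global_scope.set("greeting", "hello")
print(script_scope.get("greeting"))       # visible in the child scope

script_scope.set("greeting", "changed")   # creates a local item only
print(global_scope.get("greeting"))       # the parent value is untouched

script_scope.root().set("greeting", "changed globally")  # explicit target
print(global_scope.get("greeting"))
```

The last call plays the role of naming the global scope explicitly: without it, the assignment inside the child scope never reaches the parent.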

1 note

Text

Y'all this is amazing advice. I usually only see it in regards to artwork (needlepoint, painting). This is the first time I've seen it with any kind of technology listed!

Before say, May or June of this year I had never touched any kind of programming. Believed I couldn't do it. Then I was tasked with getting deployments working in our management software at work. I turned to PowerShell and holy fuck, you guys! You GUYS! Do you know how amazing it feels to work on something and then execute it and it works!? The first script I built was literally this:

$App = "C:\Windows\System32\msiexec.exe"

$Args = ' /i "\\Path\to\msi\software.msi" /norestart /qn'

Start-Process $App -ArgumentList $Args -NoNewWindow -Wait

And I was ASTOUNDED when it worked as intended! It did the thing! Amazing! I spun that out further and further and now I'm writing shit that I thought I could never ever do! Sometimes it doesn't work right the first time, or I don't do it in the most efficient manner, but I love fighting with it! It's so fun!

Another technology example. Last year around Black Friday I got it in my head that I wanted to build a media center. Like, a proper one. So I bought a NAS and some big hard drives and got them up and running, then had an old laptop released to me from work and installed Ubuntu on it, and I spun up a Plex server! There were some struggles at first and I stumbled a bit, but it now works precisely as intended and I have a lot of content on it. This was mostly so my partner and I could watch movies from her house. Now we live together and we still experience all the benefits and even share it with a few close friends!

But the experience was the best part! I loved solving the challenges that occurred! Everything from initial configuration of the devices to internet connectivity to permissions on the files to backups.

So yeah, creating things doesn't have to be art or whatever. Create whatever you like. Just create something :)

i cannot emphasise enough how much you need to create something. anything. it doesn't matter if you suck. you don't need to monetise it, or make it your career. you can restart an old hobby; you can start from scratch. it doesn't matter. you just need to hold something and be able to say "i did that". baking, drawing, painting, writing, coding, crafts, whatever. make something ! you cannot have all your hobbies be a form of consumption. it's fun, it's great in its own right. but the single best action to make yourself feel better, to calm your mind, to gain self esteem, is to Create

34K notes

Text

How to Report on All GPOs (With PowerShell Script Example)

Why GPO Management Matters Group Policy Objects (GPOs) are the backbone of Windows domain management, enabling administrators to configure and enforce settings across their environment. But as organizations grow, GPO management can quickly spiral into what we affectionately call “GPO sprawl” – a tangled web of overlapping policies that nobody fully understands anymore. If you’re managing an…

0 notes

Text

Certified DevSecOps Professional: Career Path, Salary & Skills

Introduction

As the demand for secure, agile software development continues to rise, the role of a Certified DevSecOps Professional has become critical in modern IT environments. Organizations today are rapidly adopting DevSecOps to shift security left in the software development lifecycle. This shift means security is no longer an afterthought—it is integrated from the beginning. Whether you're just exploring the DevSecOps tutorial for beginners or looking to level up with a professional certification, understanding the career landscape, salary potential, and required skills can help you plan your next move.

This comprehensive guide explores the journey of becoming a Certified DevSecOps Professional, the skills you'll need, the career opportunities available, and the average salary you can expect. Let’s dive into the practical and professional aspects that make DevSecOps one of the most in-demand IT specialties in 2025 and beyond.

What Is DevSecOps?

Integrating Security into DevOps

DevSecOps is the practice of integrating security into every phase of the DevOps pipeline. Traditional security processes often occur at the end of development, leading to delays and vulnerabilities. DevSecOps introduces security checks early in development, making applications more secure and compliant from the start.

The Goal of DevSecOps

The ultimate goal is to create a culture where development, security, and operations teams collaborate to deliver secure and high-quality software faster. DevSecOps emphasizes automation, continuous integration, continuous delivery (CI/CD), and proactive risk management.

Why Choose a Career as a Certified DevSecOps Professional?

High Demand and Job Security

The need for DevSecOps professionals is growing fast. According to a Cybersecurity Ventures report, there will be 3.5 million unfilled cybersecurity jobs globally by 2025. Many of these roles demand DevSecOps expertise.

Lucrative Salary Packages

Because of the specialized skill set required, DevSecOps roles are among the highest-paid in tech. Salaries can range from $110,000 to $180,000 annually depending on experience, location, and industry.

Career Versatility

This role opens up diverse paths such as:

Application Security Engineer

DevSecOps Architect

Cloud Security Engineer

Security Automation Engineer

Roles and Responsibilities of a DevSecOps Professional

Core Responsibilities

Integrate security tools and practices into CI/CD pipelines

Perform threat modeling and vulnerability scanning

Automate compliance and security policies

Conduct security code reviews

Monitor runtime environments for suspicious activities

Collaboration

A Certified DevSecOps Professional acts as a bridge between development, operations, and security teams. Strong communication skills are crucial to ensure secure, efficient, and fast software delivery.

Skills Required to Become a Certified DevSecOps Professional

Technical Skills

Scripting Languages: Bash, Python, or PowerShell

Configuration Management: Ansible, Chef, or Puppet

CI/CD Tools: Jenkins, GitLab CI, CircleCI

Containerization: Docker, Kubernetes

Security Tools: SonarQube, Checkmarx, OWASP ZAP, Aqua Security

Cloud Platforms: AWS, Azure, Google Cloud

Soft Skills

Problem-solving

Collaboration

Communication

Time Management

DevSecOps Tutorial for Beginners: A Step-by-Step Guide

Step 1: Understand the Basics of DevOps

Before diving into DevSecOps, make sure you're clear on DevOps principles, including CI/CD, infrastructure as code, and agile development.

Step 2: Learn Security Fundamentals

Study foundational cybersecurity concepts like threat modeling, encryption, authentication, and access control.

Step 3: Get Hands-On With Tools

Use open-source tools to practice integrating security into DevOps pipelines:

# Example: Running a static analysis scan with SonarQube
# (newer SonarQube releases prefer -Dsonar.token over the deprecated -Dsonar.login)
sonar-scanner \
  -Dsonar.projectKey=myapp \
  -Dsonar.sources=. \
  -Dsonar.host.url=http://localhost:9000 \
  -Dsonar.login=your_token

Step 4: Build Your Own Secure CI/CD Pipeline

Practice creating pipelines with Jenkins or GitLab CI that include steps for:

Static Code Analysis

Dependency Checking

Container Image Scanning
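The gating idea behind those three steps can be sketched in a few lines of Python. This is only an illustration of fail-fast ordering, not Jenkins or GitLab CI syntax: the `Stage` class, the `run_pipeline` function, and the stage names are hypothetical, and each check is a stand-in for a real scanner invocation.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Stage:
    name: str
    check: Callable[[], bool]  # returns True when the stage passes


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failure (fail-fast gate)."""
    log = []
    for stage in stages:
        passed = stage.check()
        log.append(f"{stage.name}: {'passed' if passed else 'FAILED'}")
        if not passed:
            return False, log
    return True, log


# Hypothetical stand-ins: a real pipeline would call a scanner in each check.
stages = [
    Stage("static-code-analysis", lambda: True),
    Stage("dependency-check", lambda: False),  # simulate a vulnerable dependency
    Stage("container-image-scan", lambda: True),
]

ok, log = run_pipeline(stages)
for line in log:
    print(line)
```

Because the dependency check fails, the image scan never runs. That mirrors how a CI job is usually configured to block later stages once a security gate fails.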

Step 5: Monitor and Respond

Set up tools like Prometheus and Grafana to monitor your applications and detect anomalies.

Certification Paths for DevSecOps

Popular Certifications

Certified DevSecOps Professional

Certified Kubernetes Security Specialist (CKS)

AWS Certified Security - Specialty

GIAC Cloud Security Automation (GCSA)

Exam Topics Typically Include:

Security in CI/CD

Secure Infrastructure as Code

Cloud-native Security Practices

Secure Coding Practices

Salary Outlook for DevSecOps Professionals

Salary by Experience

Entry-Level: $95,000 - $115,000

Mid-Level: $120,000 - $140,000

Senior-Level: $145,000 - $180,000+

Salary by Location

USA: Highest average salaries, especially in tech hubs like San Francisco, Austin, and New York.

India: ₹9 LPA to ₹30+ LPA depending on experience.

Europe: €70,000 - €120,000 depending on country.

Real-World Example: How Companies Use DevSecOps

Case Study: DevSecOps at a Fintech Startup

A fintech company integrated DevSecOps tools like Snyk, Jenkins, and Kubernetes to secure their microservices architecture. They reduced vulnerabilities by 60% in just three months while speeding up deployments by 40%.

Key Takeaways

Early threat detection saves time and cost

Automated pipelines improve consistency and compliance

Developers take ownership of code security

Challenges in DevSecOps and How to Overcome Them

Cultural Resistance

Solution: Conduct training and workshops to foster collaboration between teams.

Tool Integration

Solution: Choose tools that support REST APIs and offer strong documentation.

Skill Gaps

Solution: Continuous learning and upskilling through real-world projects and sandbox environments.

Career Roadmap: From Beginner to Expert

Beginner Level

Understand DevSecOps concepts

Explore basic tools and scripting

Start with a DevSecOps tutorial for beginners

Intermediate Level

Build and manage secure CI/CD pipelines

Gain practical experience with container security and cloud security

Advanced Level

Architect secure cloud infrastructure

Lead DevSecOps adoption in organizations

Mentor junior engineers

Conclusion

The future of software development is secure, agile, and automated—and that means DevSecOps. Becoming a Certified DevSecOps Professional offers not only job security and high salaries but also the chance to play a vital role in creating safer digital ecosystems. Whether you’re following a DevSecOps tutorial for beginners or advancing into certification prep, this career path is both rewarding and future-proof.

Take the first step today: Start learning, start practicing, and aim for certification!

1 note


Text

Boost Your Fortnite FPS in 2025: The Complete Optimization Guide


Unlock Maximum Fortnite FPS in 2025: Pro Settings & Hidden Tweaks Revealed

In 2025, achieving peak performance in Fortnite requires more than just powerful hardware. Even the most expensive gaming setups can struggle with inconsistent frame rates and input lag if the system isn’t properly optimized. This guide is designed for players who want to push their system to its limits — without spending more money. Whether you’re a competitive player or just want smoother gameplay, this comprehensive Fortnite optimization guide will walk you through the best tools and settings to significantly boost FPS, reduce input lag, and create a seamless experience.

From built-in Windows adjustments to game-specific software like Razer Cortex and AMD Adrenalin, we’ll break down each step in a clear, actionable format. Our goal is to help you reach 240+ FPS with ease and consistency, using only free tools and smart configuration choices.

Check System Resource Usage First

Before making any deep optimizations, it’s crucial to understand how your PC is currently handling resource allocation. Begin by opening Task Manager (Ctrl + Alt + Delete > Task Manager). Under the Processes tab, review which applications are consuming the most CPU and memory.

Close unused applications like web browsers or VPN services, which often run in the background and consume RAM.

Navigate to the Performance tab to verify that your CPU is operating at its intended base speed.

Confirm that your memory (RAM) is running at its advertised frequency. If it’s not, you may need to enable XMP in your BIOS.

Avoid Complex Scripts — Use Razer Cortex Instead

While there are command-line based options like Windows 10 Debloater (DBLO), they often require technical knowledge and manual PowerShell scripts. For a user-friendly alternative, consider Razer Cortex — a free tool that automates performance tuning with just a few clicks.

Here’s how to use it:

Download and install Razer Cortex.

Open the application and go to the Booster tab.

Enable all core options such as:

Disable CPU Sleep Mode

Enable Game Power Solutions

Clear Clipboard and Clean RAM

Disable Sticky Keys, Cortana, Telemetry, and Error Reporting

Use Razer Cortex Speed Optimization Features

After setting up the Booster functions, move on to the Speed Up section of Razer Cortex. This tool scans your PC for services and processes that can be safely disabled or paused to improve overall system responsiveness.

Steps to follow:

Click Optimize Now under the Speed Up tab.

Let Cortex analyze and adjust unnecessary background activities.

This process will reduce system load, freeing resources for Fortnite and other games.

You’ll also find the Booster Prime feature under the same application, allowing game-specific tweaks. For Fortnite, it lets you pick from performance-focused or quality-based settings depending on your needs.

Optimize Fortnite Graphics Settings via Booster Prime

With Booster Prime, users can apply recommended Fortnite settings without navigating the in-game menu. This simplifies the optimization process, especially for players not familiar with technical configuration.

Key settings to configure:

Resolution: Stick with native (1920x1080 for most) or drop slightly for extra performance.

Display Mode: Use Windowed Fullscreen for better compatibility with overlays and task switching.

Graphics Profile: Choose Performance Mode to prioritize FPS over visuals, or Balanced for a mix of both.

Once settings are chosen, click Optimize, and Razer Cortex will apply all changes automatically. You’ll see increased FPS and reduced stuttering almost immediately.

Track Resource Gains and Performance Impact

Once you’ve applied Razer Cortex optimizations, monitor the system changes in real-time. The software displays how much RAM is freed and which services have been stopped.

For example:

You might see 3–4 GB of RAM released, depending on how many background applications were disabled.

Services like Cortana and telemetry often consume hidden resources — disabling them can free both memory and CPU cycles.

Enable AMD Adrenalin Performance Settings (For AMD Users)

If your system is powered by an AMD GPU, the Adrenalin Software Suite offers multiple settings that improve gaming performance with minimal setup.

Recommended options to enable:

Anti-Lag: Reduces input latency, making your controls feel more immediate.

Radeon Super Resolution: Upscales games to provide smoother performance at lower system loads.

Enhanced Sync: Improves frame pacing without the drawbacks of traditional V-Sync.

Image Sharpening: Adds clarity without a major hit to performance.

Radeon Boost: Dynamically lowers resolution during fast motion to maintain smooth FPS.

Be sure to enable Borderless Fullscreen in your game settings for optimal GPU performance and lower system latency.

Match Frame Rate with Monitor Refresh Rate

One of the simplest and most effective ways to improve both performance and gameplay experience is to cap your frame rate to match your monitor’s refresh rate. For instance, if you’re using a 240Hz monitor, setting Fortnite’s max FPS to 240 will reduce unnecessary GPU strain and maintain stable frame pacing.

Benefits of FPS capping:

Lower input latency

Reduced screen tearing

Better thermals and power efficiency

This adjustment ensures your system isn’t overworking when there’s no benefit, which can lead to more stable and predictable gameplay — especially during extended play sessions.
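To make the relationship between frame rate and frame time concrete, here is a small Python calculation. It is plain arithmetic (1000 ms divided by frames per second), and the function name is just an illustrative choice.

```python
def frame_time_ms(fps: float) -> float:
    """Time budget for a single frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Frame-time budget at a few common refresh rates
for hz in (60, 144, 240):
    print(f"{hz} Hz -> {frame_time_ms(hz):.2f} ms per frame")
```

At 240 FPS each frame has a budget of roughly 4.2 ms, compared with about 16.7 ms at 60 FPS.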

Real-World Performance Comparison

After applying Razer Cortex and configuring system settings, players often see dramatic performance improvements. In test environments using a 2K resolution on DirectX 12, systems previously capped at 50–60 FPS with 15–20 ms response times jumped to 170–180 FPS with a 3–5 ms response time.

When switching to 1080p resolution:

Frame rates typically exceed 200 FPS

Reduced frame time results in smoother aiming and lower delay

Competitive advantage improves due to lower latency and higher visual consistency

These results are reproducible on most modern gaming rigs, regardless of brand, as long as the system has adequate hardware and is properly optimized.

Switch Between Performance Modes for Different Games

One of Razer Cortex’s strongest features is its flexibility. You can easily switch between optimization profiles depending on the type of game you’re playing. For Fortnite, choose high-performance settings to prioritize responsiveness and frame rate. But for visually rich, story-driven games, you might want higher quality visuals.

Using Booster Prime:

Choose your desired game from the list.

Select a profile such as Performance, Balanced, or Quality.

Apply settings instantly by clicking Optimize, then launch the game directly.

This quick toggle capability makes it easy to adapt your system to different gaming needs without having to manually change settings every time.

Final Performance Test: Fortnite in 2K with Performance Mode

To push your system to the limit, test Fortnite at 2K resolution with Performance Mode enabled. Without any optimizations, many systems may average 140–160 FPS. However, with all the Razer Cortex and system tweaks applied:

Frame rates can spike above 400 FPS

Input delay and frame time reduce significantly

Gameplay becomes smoother and more responsive, ideal for fast-paced shooters

Conclusion: Unlock Peak Fortnite Performance in 2025

Optimizing Fortnite for maximum FPS and minimal input lag doesn’t require expensive upgrades or advanced technical skills. With the help of tools like Razer Cortex and AMD Adrenalin, along with proper system tuning, you can dramatically enhance your gameplay experience.

Key takeaways:

Monitor and free system resources using Task Manager

Use Razer Cortex to automate performance boosts with one click

Apply optimized settings for Fortnite via Booster Prime

Match FPS to your monitor’s refresh rate for smoother visuals

Take advantage of GPU-specific software like AMD Adrenalin

Customize settings for performance or quality based on your gaming style

By following this Fortnite optimization guide, you can achieve a consistent Fortnite FPS boost in 2025 while also reducing input lag and ensuring your system runs at peak performance. These steps apply not only to Fortnite but to nearly any competitive game you play. It’s time to make your hardware work smarter, not harder.

🎮 Level 99 Kitchen Conjurer | Crafting epic culinary quests where every dish is a legendary drop. Wielding spatulas and controllers with equal mastery, I’m here to guide you through recipes that give +10 to flavor and +5 to happiness. Join my party as we raid the kitchen and unlock achievement-worthy meals! 🍳✨ #GamingChef #CulinaryQuests

For More, Visit @https://haplogamingcook.com


0 notes

Text

10 Must-Have PowerShell Scripts Every IT Admin Should Know

As an IT professional, your day is likely filled with repetitive tasks, tight deadlines, and constant demands for better performance. That’s why automation isn’t just helpful—it’s essential. I’m Mezba Uddin, a Microsoft MVP and MCT, and I built Mr Microsoft to help IT admins like you work smarter with automation, not harder. From Microsoft 365 automation to infrastructure monitoring and PowerShell scripting, I’ve shared practical solutions that are used in real-world environments. This article dives into ten of the most useful PowerShell scripts for IT admins, complete with automation examples and practical use cases that will boost productivity, reduce errors, and save countless hours.

Whether you're new to scripting or looking to optimize your stack, these scripts are game-changers.

Automate Active Directory User Creation

Provisioning new users manually can lead to errors and wasted time. One of the most widely used PowerShell scripts for IT admins is an automated Active Directory user creation script. This script allows you to import user details from a CSV file and automatically create AD accounts, set passwords, assign groups, and configure properties—all in a few seconds. It’s a perfect way to speed up onboarding in large organizations. On MrMicrosoft.com, you’ll find a complete walkthrough and customizable script templates to fit your unique IT environment. Whether you're managing 10 users or 1,000, this script will become one of your most trusted tools for Active Directory administration.

Bulk Assign Microsoft 365 Licenses

In hybrid or cloud environments, managing Microsoft 365 license assignments manually is a drain on time and accuracy. Through Microsoft 365 automation, you can use a PowerShell script to assign licenses in bulk, deactivate unused ones, and even schedule regular audits. This script is a great way to enforce licensing compliance while reducing costs. At Mr Microsoft, I provide an optimized version of this script that’s suitable for large enterprise environments. It’s customizable, secure, and a great example of how scripting can eliminate repetitive administrative tasks while ensuring your Microsoft 365 deployment runs smoothly and efficiently.

Send Password Expiry Notifications Automatically

One of the most common helpdesk tickets? Password expiry. Through simple IT infrastructure automation, a PowerShell script can send automatic email notifications to users whose passwords are about to expire. It reduces last-minute password reset requests and keeps users informed. At Mr Microsoft, I share a plug-and-play script for this task, including options to adjust frequency, messaging, and groups. It’s a lightweight, server-friendly way to keep your user base informed and proactive. With this script running on a schedule, your IT team will have fewer disruptions and more time to focus on high-priority tasks.
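The script itself is not reproduced in this post, so the following is only a sketch of the date arithmetic such a notifier depends on, written in Python rather than PowerShell. The function names, the 90-day maximum password age, the 14-day warning window, and the sample users are all assumptions for illustration.

```python
from datetime import date, timedelta


def days_until_expiry(last_set: date, max_age_days: int, today: date) -> int:
    """Days left before a password set on `last_set` expires (negative if expired)."""
    return (last_set + timedelta(days=max_age_days) - today).days


def users_to_notify(last_set_by_user, max_age_days=90, warn_within_days=14, today=None):
    """Return (user, days_left) pairs for passwords expiring within the warning window."""
    today = today or date.today()
    due = []
    for user, last_set in sorted(last_set_by_user.items()):
        days_left = days_until_expiry(last_set, max_age_days, today)
        if 0 <= days_left <= warn_within_days:
            due.append((user, days_left))
    return due


# Hypothetical sample data; a real script would read this from the directory.
sample = {
    "alice": date(2025, 3, 10),
    "bob": date(2025, 5, 20),
    "carol": date(2025, 1, 1),
}
pending = users_to_notify(sample, today=date(2025, 6, 1))
print(pending)
```

Already-expired accounts are deliberately left out of the list here, on the assumption that they would be handled by a separate reset workflow.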

Monitor Server Disk Space Remotely

Monitoring disk space across multiple servers—especially in hybrid cloud environments—can be difficult without the right tools. That’s why cloud automation for IT pros includes disk monitoring scripts that remotely scan storage, trigger alerts, and generate reports. I’ve posted a working solution on Mr Microsoft that connects securely to servers, logs thresholds, and sends alerts before critical levels are hit. It’s ideal for IT teams managing Azure resources, Hyper-V, or even on-premises file servers. With this script, you can detect space issues early and prevent downtime caused by full partitions.
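The threshold logic at the heart of a disk-space monitor is simple enough to sketch. The example below is in Python and checks a local path only; it is not the PowerShell solution described above, and the function names and the 15% threshold are assumptions. `shutil.disk_usage` is the standard-library call that reports total, used, and free bytes.

```python
import shutil


def percent_free(total: int, free: int) -> float:
    """Free space as a percentage of total capacity."""
    return 100.0 * free / total if total else 0.0


def check_disk(path: str, min_free_percent: float = 15.0) -> dict:
    """Report free space for `path` and flag it when below the threshold."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    pct = percent_free(usage.total, usage.free)
    return {
        "path": path,
        "free_percent": round(pct, 1),
        "alert": pct < min_free_percent,
    }


report = check_disk(".")
print(report)
```

A remote version would run the same comparison against values collected from each server and send an alert whenever `alert` is true.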

Export Microsoft 365 Mailbox Size Reports

For admins managing Exchange Online, mailbox size tracking is essential. With the right Microsoft 365 management tools, like a PowerShell mailbox report script, you can quickly extract user sizes, quotas, and growth over time. This is invaluable for storage planning and policy enforcement. On Mr Microsoft, I’ve shared an easy-to-adapt script that pulls all mailbox data and exports it to CSV or Excel formats. You can automate it weekly, track long-term trends, or email the results to managers. It’s a simple but powerful reporting tool that turns Microsoft 365 data into actionable insights.

Parse and Report on Windows Event Logs

If you’re getting started with scripting, working with event logs is a fantastic entry point. Using PowerShell for beginners, you can write scripts that parse Windows logs to identify system crashes, login failures, or security events. I’ve built a script on Mr Microsoft that scans logs daily and sends summary reports. It’s lightweight, customizable, and useful for security monitoring. This is a perfect project for IT pros new to scripting who want meaningful results without complexity. With scheduled execution, this tool ensures proactive monitoring—especially critical in regulated or high-security environments.

Reset Passwords for Multiple Users

Resetting passwords one at a time is inefficient—especially during mass onboarding, offboarding, or policy enforcement. Using IT admin productivity tools like a PowerShell batch password reset script can streamline the process. It’s secure, scriptable, and ideal for both on-premises AD and hybrid Azure AD environments. With added functionality like expiration dates and enforced resets at next login, this script empowers IT admins to enforce password policies with speed and consistency.

Automate Windows Update Scheduling

If you’re tired of unpredictable updates or user complaints about restarts, this is for you. One of the most effective PowerShell scripts for IT admins automates the installation of Windows updates across workstations or servers. With this tool, you can check for updates, install them silently, and even reboot during off-hours. This reduces patching delays, improves compliance, and eliminates the need for manual updates or GPO complexity—especially useful in remote or hybrid work environments.

Clean Up Inactive Users with Graph API

Inactive user accounts are a security risk and resource drain. With the Microsoft Graph API, you can automate account cleanup based on login activity or license usage. My detailed Microsoft Graph API tutorial on Mr Microsoft walks through how to connect securely, pull activity data, and disable or archive stale accounts. This not only tightens security but also saves licensing costs. It’s a must-have script for admins managing large Microsoft 365 environments. Plus, the tutorial includes reusable templates to make your deployment faster and safer.

Automate SharePoint Site Provisioning

Provisioning SharePoint sites manually is tedious and error-prone. With Microsoft 365 automation, you can instantly create SharePoint sites based on predefined templates, permissions, and naming conventions. I’ve built a reusable script on Mr Microsoft that automates this entire process. It’s ideal for departments, projects, or onboarding flows where consistency and speed are critical. This script integrates with Teams and Exchange setups too, giving your IT team a full-stack provisioning workflow with minimal effort.

Final Thoughts – Automate Smarter, Not Harder

Every script above is built from real-life IT challenges I’ve encountered over the years. At Mr Microsoft, my goal is to share solutions that are practical, secure, and ready to use. Whether you're managing hundreds of users or optimizing workflows, automation is your edge—and PowerShell scripts for IT admins are your toolkit. Want more step-by-step guides and tools built by a fellow IT pro?

Visit MrMicrosoft.com and start automating smarter today.

1 note


Text

Automation Programming Basics

In today’s fast-paced world, automation programming is a vital skill for developers, IT professionals, and even hobbyists. Whether it's automating file management, data scraping, or repetitive tasks, automation saves time, reduces errors, and boosts productivity. This post covers the basics to get you started in automation programming.

What is Automation Programming?

Automation programming involves writing scripts or software that perform tasks without manual intervention. It’s widely used in system administration, web testing, data processing, DevOps, and more.

Benefits of Automation

Efficiency: Complete tasks faster than doing them manually.

Accuracy: Reduce the chances of human error.

Scalability: Automate tasks at scale (e.g., managing hundreds of files or websites).

Consistency: Ensure tasks are done the same way every time.

Popular Languages for Automation

Python: Simple syntax and powerful libraries like `os`, `shutil`, `requests`, `selenium`, and `pandas`.

Bash: Great for system and server-side scripting on Linux/Unix systems.

PowerShell: Ideal for Windows system automation.

JavaScript (Node.js): Used in automating web services, browsers, or file tasks.

Common Automation Use Cases

Renaming and organizing files/folders

Automating backups

Web scraping and data collection

Email and notification automation

Testing web applications

Scheduling repetitive system tasks (cron jobs)

Basic Python Automation Example

Here’s a simple script to move files from one folder to another based on file extension:

import os
import shutil

source = 'Downloads'
destination = 'Images'

# Make sure the destination folder exists before moving anything
os.makedirs(destination, exist_ok=True)

for file in os.listdir(source):
    # Move only image files, matched by extension
    if file.endswith(('.jpg', '.png')):
        shutil.move(os.path.join(source, file), os.path.join(destination, file))

Tools That Help with Automation

Task Schedulers: `cron` (Linux/macOS), Task Scheduler (Windows)

Web Automation Tools: Selenium, Puppeteer

CI/CD Tools: GitHub Actions, Jenkins

File Watchers: `watchdog` (Python) for reacting to file system changes

Best Practices

Always test your scripts in a safe environment before production.

Add logs to track script actions and errors.

Use virtual environments to manage dependencies.

Keep your scripts modular and well-documented.

Secure your scripts if they deal with sensitive data or credentials.
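As a sketch of the first two practices, the file-moving example from earlier in this post can be given logging and a dry-run switch. The function name, the logger name, and the `dry_run` parameter are illustrative choices, and the demo runs inside a temporary directory so nothing real is touched.

```python
import logging
import shutil
import tempfile
from pathlib import Path

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("file_mover")


def move_by_extension(source: Path, destination: Path, extensions, dry_run=True):
    """Move matching files; with dry_run=True only log what would happen."""
    if not dry_run:
        destination.mkdir(parents=True, exist_ok=True)
    handled = []
    for item in sorted(source.iterdir()):
        if item.is_file() and item.suffix.lower() in extensions:
            target = destination / item.name
            if dry_run:
                log.info("would move %s -> %s", item, target)
            else:
                shutil.move(str(item), str(target))
                log.info("moved %s -> %s", item, target)
            handled.append(item.name)
    return handled


# Demo in a throwaway directory: rehearse first, then run for real.
with tempfile.TemporaryDirectory() as tmp:
    src, dst = Path(tmp) / "Downloads", Path(tmp) / "Images"
    src.mkdir()
    for name in ("a.jpg", "b.png", "notes.txt"):
        (src / name).touch()
    planned = move_by_extension(src, dst, {".jpg", ".png"}, dry_run=True)
    done = move_by_extension(src, dst, {".jpg", ".png"}, dry_run=False)
    remaining = sorted(p.name for p in src.iterdir())
```

Running the dry run first shows exactly which files would move, and the log lines give a record of what the real run did.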

Conclusion

Learning automation programming can drastically enhance your workflow and problem-solving skills. Start small, automate daily tasks, and explore advanced tools as you grow. The world of automation is wide open—begin automating today!

0 notes

Text

The one thing about cybersecurity that you should always be aware of is that it is constantly evolving. Threats keep changing, so your defensive strategy cannot stand still. This is not overly dramatic: an attack occurred roughly every 39 seconds in 2023, and approximately 40% of all attacks are script-based, which means malicious actors have easy access to the resources they need. IT experts, managed service providers (MSPs), and suppliers of RMM tools and PSA software must exercise caution. To assist, we have compiled a list of some of the biggest cybersecurity threats and vulnerabilities from recent history. This is the impact of the shifting landscape on security-conscious individuals.

What is cybersecurity?

Cybersecurity is a catch-all term for reducing the risk of cyberattacks. Organizations employ a range of procedures, technologies, and controls to practice adequate cybersecurity, including:

Keeping information, software, hardware, networks, and other assets safe from unauthorized access and exploitation in real time

Examining potential risks

Practicing emergency response and simulating hacking and reaction techniques

Establishing policies to encourage the safe use of information and technology

How do vulnerabilities and threats to computer security operate?

Malicious actors can obtain unauthorized access to sensitive data through many means. Existing weaknesses are often exploited. For example, hackers may leverage a widely recognized vulnerability to obtain backdoor access. Alternatively, they can uncover fresh vulnerabilities associated with your technology usage. In either case, these incidents frequently involve ransom demands, legal exposure, and bad public relations.

Not every bad actor is a Hollywood hacker working for a hidden organization of cybercriminals. Anyone can cause a breach, even a contracting agency, from disgruntled workers and rogue states to terrorists and corporate spies. Strong safeguards should be put in place before that occurs!

Here are a few of the most noteworthy threats from recent headlines:

Twitter Email Leak

The Twitter data leak surfaced only a few days after 2022 came to an end. In summary, the email addresses of more than 200 million Twitter users were exposed on underground hacking websites. The number of affected Twitter accounts surpassed 400 million. Hackers began gathering data in 2021 by taking advantage of a vulnerability in the Twitter API. Several hackers exploited this vulnerability back in 2021, which led to multiple ransomware attempts and leaks in 2022. This is one of the biggest data breaches in history.

The Royal Mail Hack

The UK's Royal Mail was compromised by the Russian ransomware group LockBit in January 2023. The hackers demanded a ransom of £65 million to unlock the compromised data. The event was severe enough that the business asked customers to stop sending items overseas! In certain ways this may seem like a success story, since Royal Mail ultimately declined to pay the ransom. Unfortunately, there were prolonged service outages, and many of its employees had their data disclosed. This incident demonstrates that averting a hack's worst-case scenario is only a minor comfort. The damage persists, and the erosion of public trust is a severe blow for national infrastructure-level companies such as Royal Mail.

The Reddit Hack

The forum site Reddit disclosed in February 2023 that it had been a recent victim of spear phishing. Through a phishing attack targeted at employees, hackers were able to access internal Reddit data. The hackers also wanted ransom money. The outcome? Financial information was leaked along with the personal information of hundreds of current and former staff members and advertisers. Reddit's emergency response team was able to resolve the issue swiftly. However, this was an event that the business ought to have avoided completely. The phishing attack worked by impersonating a page from the company's internal portal. This tricked at least one worker into disclosing their access credentials, and all it takes is one!

T-Mobile Data Breach

T-Mobile USA suffered two breaches. Late in 2022, T-Mobile disclosed that a malicious attacker had accessed the personal data of 37 million users. The most recent breach was found in March, and the volume of stolen data was smaller than in the previous breach: a total of 836 customers had their PINs, account information, and personal details compromised. Personal bank account information was not taken.

The ChatGPT Payment Exposure

The widely used ChatGPT Plus service experienced a major outage in March 2023. The interruption was brief, about nine hours, but it was not harmless: the payment information of more than one percent of subscribers was disclosed to other users, and the chat histories of certain users also became accessible. While 1.2% may not seem like a big number, it represents yet another instance of lost user trust. In this instance, the exposure was traced to a bug in an open-source library that OpenAI relied on, a huge mistake! Failing to secure your software supply chain does not look good, and an event like this can hurt a tech company's competitiveness in an expanding industry like artificial intelligence.

AT&T 3rd Party Data Breach

In March 2023, a third-party data breach exposed 9 million customer records belonging to another titan in the telecom industry, AT&T. AT&T classified this threat as a supply chain attack involving data that was several years old and largely related to eligibility for device upgrades. Consequently, the telecom advised its customers to adopt stronger password security practices.

VMware ESXi Ransomware

In January of last year, a ransomware attack exploiting an old vulnerability (CVE-2021-21974) affected about 3,200 unpatched VMware ESXi servers. The readily exploitable flaw let attackers execute code remotely on ESXi hypervisors without prior authentication. France was the most affected nation, followed by the United States, Germany, and Canada. According to CrowdStrike, the issue is becoming more serious: a growing number of threat actors are realizing that inadequate network segmentation of ESXi interfaces, a lack of security tools, and in-the-wild (ITW) vulnerabilities for ESXi create a target-rich environment.

How can MSPs get ready for threats and vulnerabilities related to cybersecurity?

Use these four practical suggestions to enhance your cybersecurity strategy and mitigate threats and vulnerabilities:

Make data backups a regular part of your life. Adhere to the 3-2-1 backup policy: keep three copies of your information on two or more media types. One copy is stored on your primary system. The other two should be encrypted backups, one local and one in the cloud.

Reduce your exposure to the least amount possible. Installing firewalls or antivirus software alone won't cut it. You must also keep them updated.

Author Bio

Fazal Hussain is a digital marketer who has been working in the field since 2015. He has worked in different niches of digital marketing, be it SEO, social media marketing, email marketing, PPC, or content marketing. He loves writing about industry trends in technology and entrepreneurship, evaluating them from the different perspectives of industry leaders in the niches. In his leisure time, he loves to hang out with friends, watch movies, and explore new places.


Shielding Prompts from LLM Data Leaks

New Post has been published on https://thedigitalinsider.com/shielding-prompts-from-llm-data-leaks/


Opinion An interesting IBM NeurIPS 2024 submission from late 2024 resurfaced on Arxiv last week. It proposes a system that can automatically intervene to protect users from submitting personal or sensitive information into a message when they are having a conversation with a Large Language Model (LLM) such as ChatGPT.

Mock-up examples used in a user study to determine the ways that people would prefer to interact with a prompt-intervention service. Source: https://arxiv.org/pdf/2502.18509

The mock-ups shown above were employed by the IBM researchers in a study to test potential user friction to this kind of ‘interference’.

Though scant details are given about the GUI implementation, we can assume that such functionality could either be incorporated into a browser plugin communicating with a local ‘firewall’ LLM framework; or that an application could be created that can hook directly into (for instance) the OpenAI API, effectively recreating OpenAI’s own downloadable standalone program for ChatGPT, but with extra safeguards.

That said, ChatGPT itself automatically self-censors responses to prompts that it perceives to contain critical information, such as banking details:

ChatGPT refuses to engage with prompts that contain perceived critical security information, such as bank details (the details in the prompt above are fictional and non-functional). Source: https://chatgpt.com/

However, ChatGPT is much more tolerant in regard to different types of personal information – even if disseminating such information in any way might not be in the user’s best interests (in this case perhaps for various reasons related to work and disclosure):

The example above is fictional, but ChatGPT does not hesitate to engage in a conversation with the user on a sensitive subject that constitutes a potential reputational or earnings risk.

In the above case, it might have been better to write: ‘What is the significance of a leukemia diagnosis on a person’s ability to write and on their mobility?’

The IBM project identifies and reinterprets such requests from a ‘personal’ to a ‘generic’ stance.

Schema for the IBM system, which uses local LLMs or NLP-based heuristics to identify sensitive material in potential prompts.

This assumes that material gathered by online LLMs, in this nascent stage of the public’s enthusiastic adoption of AI chat, will never feed through either to subsequent models or to later advertising frameworks that might exploit user-based search queries to provide potential targeted advertising.

Though no such system or arrangement is known to exist now, neither was such functionality yet available at the dawn of internet adoption in the early 1990s; since then, cross-domain sharing of information to feed personalized advertising has led to diverse scandals, as well as paranoia.

Therefore history suggests that it would be better to sanitize LLM prompt inputs now, before such data accrues at volume, and before our LLM-based submissions end up in permanent cyclic databases and/or models, or other information-based structures and schemas.

Remember Me?

One factor weighing against the use of ‘generic’ or sanitized LLM prompts is that, frankly, the facility to customize an expensive API-only LLM such as ChatGPT is quite compelling, at least at the current state of the art – but this can involve the long-term exposure of private information.

I frequently ask ChatGPT to help me formulate Windows PowerShell scripts and BAT files to automate processes, as well as to advise on other technical matters. To this end, I find it useful that the system permanently memorizes details about the hardware that I have available; my existing technical skill competencies (or lack thereof); and various other environmental factors and custom rules:

ChatGPT allows a user to develop a ‘cache’ of memories that will be applied when the system considers responses to future prompts.

Inevitably, this keeps information about me stored on external servers, subject to terms and conditions that may evolve over time, without any guarantee that OpenAI (though it could be any other major LLM provider) will respect the terms they set out.

In general, however, the capacity to build a cache of memories in ChatGPT is most useful because of the limited attention window of LLMs in general; without long-term (personalized) embeddings, the user feels, frustratingly, that they are conversing with an entity suffering from anterograde amnesia.

It is difficult to say whether newer models will eventually become adequately performant to provide useful responses without the need to cache memories, or to create custom GPTs that are stored online.

Temporary Amnesia

Though one can make ChatGPT conversations ‘temporary’, it is useful to have the chat history as a reference that can be distilled, when time allows, into a more coherent local record, perhaps on a note-taking platform. In any case, we cannot know exactly what happens to these ‘discarded’ chats inside the ChatGPT infrastructure: OpenAI states that they will not be used for training, but it does not state that they are destroyed. All we know is that chats no longer appear in our history when ‘Temporary chats’ is turned on in ChatGPT.

Various recent controversies indicate that API-based providers such as OpenAI should not necessarily be left in charge of protecting the user’s privacy. These include the discovery of emergent memorization, which signifies that larger LLMs are more likely to memorize some training examples in full, increasing the risk of disclosure of user-specific data – among other public incidents that have persuaded a multitude of big-name companies, such as Samsung, to ban LLMs for internal company use.

Think Different

This tension between the extreme utility and the manifest potential risk of LLMs will need some inventive solutions – and the IBM proposal seems to be an interesting basic template in this line.

Three IBM-based reformulations that balance utility against data privacy. In the lowest (pink) band, we see a prompt that is beyond the system’s ability to sanitize in a meaningful way.

The IBM approach intercepts outgoing packets to an LLM at the network level, and rewrites them as necessary before the original can be submitted. The rather more elaborate GUI integrations seen at the start of the article are only illustrative of where such an approach could go, if developed.

Of course, without sufficient agency the user may not understand that they are getting a response to a slightly-altered reformulation of their original submission. This lack of transparency is equivalent to an operating system’s firewall blocking access to a website or service without informing the user, who may then erroneously seek out other causes for the problem.

Prompts as Security Liabilities

The prospect of ‘prompt intervention’ analogizes well to Windows OS security, which has evolved from a patchwork of (optionally installed) commercial products in the 1990s to a non-optional and rigidly-enforced suite of network defense tools that come as standard with a Windows installation, and which require some effort to turn off or de-intensify.

If prompt sanitization evolves as network firewalls did over the past 30 years, the IBM paper’s proposal could serve as a blueprint for the future: deploying a fully local LLM on the user’s machine to filter outgoing prompts directed at known LLM APIs. This system would naturally need to integrate GUI frameworks and notifications, giving users control – unless administrative policies override it, as often occurs in business environments.
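The control flow described above can be sketched very roughly in Python. This is only an illustration of the idea, not anything taken from the IBM paper: the host list, the function names and the `user_enabled` / `admin_enforced` flags are all assumptions made for the example, and the `sanitize` callable stands in for whatever local model would do the actual rewriting.

```python
from typing import Callable
from urllib.parse import urlsplit

# Illustrative host list only; a real deployment would maintain its own.
KNOWN_LLM_API_HOSTS = {
    "api.openai.com",
    "api.anthropic.com",
    "generativelanguage.googleapis.com",
}


def targets_llm_api(url: str) -> bool:
    """Return True when the request is addressed to a known LLM API host."""
    host = urlsplit(url).hostname or ""
    return host.lower() in KNOWN_LLM_API_HOSTS


def filter_outgoing(
    url: str,
    prompt: str,
    sanitize: Callable[[str], str],
    user_enabled: bool = True,
    admin_enforced: bool = False,
) -> str:
    """Decide whether an outgoing prompt is rewritten before it leaves the machine.

    Hypothetical policy: traffic to other hosts is untouched; an administrator
    setting overrides the user's own preference.
    """
    if not targets_llm_api(url):
        return prompt
    if admin_enforced or user_enabled:
        return sanitize(prompt)
    return prompt
```

In this arrangement the user can switch the filter off for themselves, but an administrative policy still forces sanitization, which mirrors the way that managed firewalls behave in business environments.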

The researchers conducted an analysis of an open-source version of the ShareGPT dataset to understand how often contextual privacy is violated in real-world scenarios.

Llama-3.1-405B-Instruct was employed as a ‘judge’ model to detect violations of contextual integrity. From a large set of conversations, a subset of single-turn conversations, chosen by length, was analyzed. The judge model then assessed the context, sensitive information, and necessity for task completion, leading to the identification of conversations containing potential contextual integrity violations.

A smaller subset of these conversations, which demonstrated definitive contextual privacy violations, was analyzed further.
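As a rough idea of what the first filtering step might look like, the sketch below selects single-turn conversations within a length range. It assumes the commonly-circulated ShareGPT JSON layout (a `conversations` list of `from` / `value` entries); the length bounds are invented for the example, since the article does not state the ones the researchers used, and the judge model itself is not reproduced here.

```python
from typing import Dict, List

# Hypothetical length bounds, in characters; the real criteria are not given.
MIN_CHARS = 50
MAX_CHARS = 2000


def is_single_turn(record: Dict) -> bool:
    """True when the record holds exactly one human message and one reply."""
    turns = record.get("conversations", [])
    return (
        len(turns) == 2
        and turns[0].get("from") == "human"
        and turns[1].get("from") == "gpt"
    )


def select_candidates(records: List[Dict]) -> List[Dict]:
    """Keep single-turn conversations whose prompt length falls within bounds."""
    selected = []
    for record in records:
        if not is_single_turn(record):
            continue
        prompt = record["conversations"][0].get("value", "")
        if MIN_CHARS <= len(prompt) <= MAX_CHARS:
            selected.append(record)
    return selected
```

The records that survive this step would then be passed to the judge model for the assessment of context, sensitive information and necessity.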

The framework itself was implemented using models that are smaller than typical chat agents such as ChatGPT, to enable local deployment via Ollama.
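For readers unfamiliar with Ollama, a locally-hosted model is typically reached through a small HTTP API on the user's own machine. The sketch below builds such a request with the Python standard library only. The endpoint is Ollama's default local address; the model tag and the wording of the instruction are assumptions made for illustration, and are not the prompts used in the IBM framework.

```python
import json
import urllib.request

# Ollama's default local endpoint for one-shot generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3.1:8b") -> urllib.request.Request:
    """Build (but do not send) a request asking a local model to flag sensitive details."""
    # Hypothetical instruction text, written for this example only.
    instruction = (
        "List any personal or sensitive details in the following text, "
        "one per line, or reply NONE.\n\n" + prompt
    )
    payload = {"model": model, "prompt": instruction, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = "llama3.1:8b", timeout: float = 60.0) -> str:
    """Send the request to a running Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

Nothing in this exchange leaves the local machine, which is the point of using smaller models: the text under inspection is only ever seen by a process that the user controls.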

Schema for the prompt intervention system.

The three LLMs evaluated were Mixtral-8x7B-Instruct-v0.1; Llama-3.1-8B-Instruct; and DeepSeek-R1-Distill-Llama-8B.

User prompts are processed by the framework in three stages: context identification; sensitive information classification; and reformulation.

Two approaches were implemented for sensitive information classification: dynamic and structured. Dynamic classification determines the essential details based on their use within a specific conversation; structured classification allows for the specification of a pre-defined list of sensitive attributes that are always considered non-essential. The model reformulates the prompt if it detects non-essential sensitive details by either removing or rewording them to minimize privacy risks while maintaining usability.
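The three stages can be illustrated with a deliberately simple Python sketch. Note that this swaps in keyword matching and regular expressions where the IBM framework uses local LLMs, and it covers only the structured variant of classification; the attribute patterns, keyword lists and function names are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Structured classification: attributes that are always treated as non-essential.
# These patterns are illustrative stand-ins, not the paper's attribute list.
SENSITIVE_PATTERNS: Dict[str, "re.Pattern[str]"] = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "phone": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}

# Crude keyword lists standing in for LLM-based context identification.
CONTEXT_KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "health": ("diagnosis", "doctor", "symptom"),
    "finance": ("bank", "loan", "salary"),
}


@dataclass
class SanitizedPrompt:
    context: str
    findings: List[Tuple[str, str]]
    reformulated: str


def identify_context(prompt: str) -> str:
    """Stage 1: guess the broad context of the prompt."""
    lowered = prompt.lower()
    for label, keywords in CONTEXT_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            return label
    return "general"


def classify_sensitive(prompt: str) -> List[Tuple[str, str]]:
    """Stage 2: collect (attribute, matched text) pairs from the predefined list."""
    findings = []
    for label, pattern in SENSITIVE_PATTERNS.items():
        for match in pattern.finditer(prompt):
            findings.append((label, match.group(0)))
    return findings


def reformulate(prompt: str, findings: List[Tuple[str, str]]) -> str:
    """Stage 3: replace each flagged detail with a neutral placeholder."""
    for label, value in findings:
        prompt = prompt.replace(value, f"[{label}]")
    return prompt


def sanitize(prompt: str) -> SanitizedPrompt:
    findings = classify_sensitive(prompt)
    return SanitizedPrompt(identify_context(prompt), findings, reformulate(prompt, findings))
```

Dynamic classification cannot be reduced to a fixed pattern list in this way, since it depends on whether a detail is actually needed for the task at hand, which is presumably why the researchers delegate that judgment to a language model.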

Home Rules

Though structured classification as a concept is not well-illustrated in the IBM paper, it is most akin to the ‘Private Data Definitions’ method in the Private Prompts initiative, which provides a downloadable standalone program that can rewrite prompts – albeit without the ability to directly intervene at the network level, as the IBM approach does (instead the user must copy and paste the modified prompts).

The Private Prompts executable allows a list of alternate substitutions for user-input text.

In the above image, we can see that the Private Prompts user is able to program automated substitutions for instances of sensitive information. In both cases, for Private Prompts and the IBM method, it seems unlikely that a user with enough presence-of-mind and personal insight to curate such a list would actually need this product – though it could be built up over time as incidents accrue.
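A user-curated substitution list of this kind is simple to approximate. The sketch below is not the Private Prompts implementation, whose internals are not described here; it only shows one plausible way to apply such a list, with case-insensitive whole-word matching and longer entries taking priority. The function name and the example entries are invented.

```python
import re
from typing import Dict


def apply_substitutions(text: str, substitutions: Dict[str, str]) -> str:
    """Replace each listed sensitive term with its user-chosen stand-in."""
    if not substitutions:
        return text
    # Longest keys first, so that "Jane Doe" is matched before "Jane".
    keys = sorted(substitutions, key=len, reverse=True)
    # Word boundaries keep "Jane" from matching inside "Janet".
    pattern = re.compile(
        r"\b(?:" + "|".join(re.escape(key) for key in keys) + r")\b",
        re.IGNORECASE,
    )
    lookup = {key.lower(): value for key, value in substitutions.items()}
    return pattern.sub(lambda match: lookup[match.group(0).lower()], text)
```

The obvious limitation is the one noted above: the list only catches what the user has already thought to put in it.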

In an administrator role, structured classification could work as an imposed firewall or censor-net for employees; and in a home network it could, with some difficult adjustments, become a domestic network filter for all network users; but ultimately, this method is arguably redundant, since a user who could set this up properly could also self-censor effectively in the first place.

ChatGPT’s Opinion

Since ChatGPT recently launched its deep research tool for paid users, I used this facility to ask ChatGPT to review related literature and give me a ‘cynical’ take on IBM’s paper. I received the most defensive and derisive response the system has ever given when asked to evaluate or parse a new publication:

ChatGPT-4o has a low opinion of the IBM project.

‘If users don’t trust OpenAI, Google, or Anthropic to handle their data responsibly,’ ChatGPT posits, ‘why would they trust a third-party software layer sitting between them and the AI? The intermediary itself becomes a new point of failure – potentially logging, mishandling, or even leaking data before it ever reaches the LLM. It solves nothing if it just creates another entity to exploit user data.’