#project-based learning with AI



The Future is AI: Are We Preparing Students or Holding Them Back?

This week, a college student made national news after confronting a professor who used ChatGPT to provide grading feedback. Their frustration? “If we’re not allowed to use it, why should you?” The story quickly evolved into a broader debate—centered on cheating, fairness, and academic integrity. Commentators weighed in, warning that tools like ChatGPT would erode student accountability or replace…


#AIinEducation#ChatGPTforLearning#CreativityInTheClassroom#CriticalThinking#DigitalLiteracy#EducationInnovation#FutureReady#LearningWithAI#ReimagineLearning#ResponsibleAI#StudentVoice#TeachingWithAI#AI for teachers#AI in education#ChatGPT in the classroom#classroom innovation#critical thinking skills#digital literacy#EdTech#education reform#ethical AI use#future of learning#future-ready students#innovative teaching strategies#modern classrooms#project-based learning with AI#reimagining education#responsible AI integration#student creativity#teaching with AI

0 notes


The Digital Shift | How Tech is Redefining Industrial Estimating Service

Introduction: The Role of Technology in Modern Industrial Estimating

The industrial sector, once reliant on traditional methods of estimating project costs, has entered a new era where technology is reshaping how projects are planned, managed, and executed. In particular, industrial estimating services are benefiting from the integration of cutting-edge technology that enhances accuracy, efficiency, and overall project outcomes. As industries adopt advanced digital tools, the way cost estimates are generated and managed has evolved significantly, streamlining workflows, improving decision-making, and reducing the risks associated with manual errors and outdated practices.

The Shift from Manual Estimating to Digital Tools

For many years, industrial estimating was a manual process that involved spreadsheets, paper-based plans, and extensive calculations. While these methods worked, they were time-consuming and prone to human error. Today, however, digital tools such as Building Information Modeling (BIM), 3D modeling, cloud-based estimating software, and artificial intelligence (AI) are transforming how industrial projects are estimated.

These technologies provide estimators with more powerful, accurate, and efficient means of calculating project costs. For instance, BIM allows for the creation of detailed 3D models of a project, enabling estimators to visualize the design and identify potential issues before construction begins. This can lead to more accurate estimates and fewer surprises during the construction phase.

Building Information Modeling (BIM): Revolutionizing the Estimating Process

BIM has revolutionized the way construction projects are designed and estimated. In industrial projects, BIM provides a detailed, virtual representation of the entire project, including every structural element, material, and system. This digital model allows estimators to see the project in its entirety and calculate costs from quantities taken directly from the model rather than measured by hand from drawings.

BIM helps estimators identify potential conflicts or inefficiencies in the design early on, reducing the likelihood of costly mistakes. For example, by modeling the mechanical, electrical, and plumbing systems in 3D, estimators can spot clashes or design issues that may lead to delays or cost overruns. This proactive approach allows estimators to adjust the design or budget before construction begins, improving the overall accuracy of the estimate.
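Commercial BIM tools check clashes against full 3D geometry. As a much-simplified sketch, assume each element has been reduced to an axis-aligned bounding box; the `Element` type, the element names, and the coordinates below are all hypothetical. Two elements clash when their boxes overlap (or come within a chosen clearance) on all three axes:

```python
from dataclasses import dataclass
from itertools import combinations

Point = tuple[float, float, float]


@dataclass(frozen=True)
class Element:
    """Hypothetical BIM element reduced to an axis-aligned bounding box (metres)."""
    name: str
    min_corner: Point
    max_corner: Point


def boxes_clash(a: Element, b: Element, clearance: float = 0.0) -> bool:
    """True if the boxes overlap, or come within `clearance`, on all three axes."""
    for axis in range(3):
        overlap = min(a.max_corner[axis], b.max_corner[axis]) - max(
            a.min_corner[axis], b.min_corner[axis]
        )
        # A negative overlap is a gap; a gap at least as wide as the clearance means no clash.
        if overlap <= -clearance:
            return False
    return True


def find_clashes(elements: list[Element], clearance: float = 0.0) -> list[tuple[str, str]]:
    """Check every pair of elements and report the names of the pairs that clash."""
    return [
        (a.name, b.name)
        for a, b in combinations(elements, 2)
        if boxes_clash(a, b, clearance)
    ]


model = [
    Element("duct-01", (0.0, 0.0, 2.8), (6.0, 0.6, 3.2)),
    Element("pipe-07", (2.0, 0.2, 3.0), (2.1, 4.0, 3.1)),  # runs straight through the duct
    Element("tray-03", (0.0, 1.0, 2.8), (6.0, 1.3, 2.9)),  # pipe passes 10 cm above it
]
print(find_clashes(model))                  # hard clashes only
print(find_clashes(model, clearance=0.15))  # also flag anything closer than 15 cm
```

With these numbers the hard-clash check reports only the duct and pipe, while the 15 cm clearance check also flags the pipe passing just above the cable tray. A production tool would also use spatial indexing instead of testing every pair.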

Moreover, BIM allows for seamless collaboration between different stakeholders, such as architects, engineers, and contractors. Estimators can share the digital model with the entire project team, ensuring that everyone is on the same page and working toward the same goal.

Cloud-Based Estimating Software: Collaboration and Accessibility

Cloud-based estimating software has become a game-changer for industrial estimating services. Unlike traditional desktop-based software, cloud-based solutions allow estimators to access project data from anywhere, at any time. This enhances collaboration between team members, contractors, and clients, as everyone can work from the same platform and update the estimate in real time.

Cloud-based software also provides the flexibility to store and organize vast amounts of project data, including cost breakdowns, material lists, and labor schedules. This data is securely stored in the cloud, ensuring that project details are accessible and up-to-date, even as changes occur during the project's lifecycle.

Additionally, cloud-based software can integrate with other project management tools, such as scheduling and procurement software. This enables estimators to track changes in real-time and adjust the cost estimates accordingly. For example, if there is a delay in material delivery, the estimator can update the estimate immediately, helping to keep the project on track and prevent cost overruns.

Artificial Intelligence and Machine Learning: Predictive Estimating

Artificial intelligence (AI) and machine learning (ML) are increasingly being used in industrial estimating services to improve the accuracy and efficiency of cost predictions. By analyzing vast amounts of historical data, AI and ML algorithms can identify patterns and trends that human estimators may overlook.

For example, AI can help predict material costs based on historical pricing data, accounting for factors like inflation and market fluctuations. This allows estimators to generate more accurate cost projections, even in volatile markets. AI can also help identify potential risks, such as supply chain disruptions, that may impact project timelines or costs.
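The models described here are usually far richer than a straight line, but the core idea of fitting past prices and extrapolating can be sketched with an ordinary least-squares trend. The quarterly steel prices below are made-up numbers for illustration, and this sketch ignores inflation indices and market signals entirely:

```python
def fit_trend(prices: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of price = slope * period + intercept (needs 2+ points)."""
    n = len(prices)
    periods = range(n)
    mean_x = (n - 1) / 2
    mean_y = sum(prices) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(periods, prices))
    sxx = sum((x - mean_x) ** 2 for x in periods)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def forecast(prices: list[float], periods_ahead: int) -> list[float]:
    """Extrapolate the fitted trend for the next `periods_ahead` periods."""
    slope, intercept = fit_trend(prices)
    last = len(prices) - 1
    return [intercept + slope * (last + k) for k in range(1, periods_ahead + 1)]


# Hypothetical quarterly steel prices in USD per tonne, for illustration only.
steel = [820.0, 845.0, 870.0, 895.0]
print(forecast(steel, 2))
```

Because the sample prices rise by exactly 25 per quarter, the sketch projects 920.0 and 945.0 for the next two quarters; real price series are noisier, which is where the richer models come in.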

Machine learning algorithms can continuously improve over time as they are exposed to more data, allowing estimators to refine their predictions and enhance their accuracy. Leveraging AI and ML in the estimating process can reduce the likelihood of cost overruns and help keep projects within budget.

Real-Time Data and Analytics: Making Informed Decisions

In the past, estimators relied on static data, such as historical cost records and vendor quotes, to generate project estimates. However, with the rise of real-time data and analytics, industrial estimators now have access to more dynamic and up-to-date information that can impact their cost predictions.

For instance, cloud-based platforms and integrated project management systems allow estimators to access live pricing data, including current material costs, labor rates, and equipment rental fees. This real-time information helps estimators make more accurate predictions and adjust their estimates based on the latest market conditions.

Real-time data also allows estimators to track project progress more closely, monitoring any changes in scope or schedule that may affect costs. With up-to-date information at their fingertips, estimators can make informed decisions about how to adjust the budget or timeline, ensuring that the project stays on track and within budget.

Digital Integration with Supply Chain and Procurement

Technology is also enhancing the way industrial estimating services interact with supply chain and procurement systems. With digital tools, estimators can easily integrate their cost estimates with procurement data, allowing them to track material availability, supplier lead times, and pricing fluctuations in real time.

This integration helps prevent delays caused by supply chain disruptions and ensures that estimators can adjust their cost estimates as material prices fluctuate. For example, if a material becomes more expensive due to a global shortage, the estimator can immediately update the cost estimate and notify stakeholders of the potential impact on the project budget.
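A minimal sketch of that update, assuming an estimate is a list of line items priced as quantity times unit price; the `LineItem` type, the item names, and the prices are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class LineItem:
    """One hypothetical line of a cost estimate."""
    description: str
    quantity: float
    unit: str
    unit_price: float

    @property
    def total(self) -> float:
        return self.quantity * self.unit_price


def estimate_total(items: list[LineItem]) -> float:
    return sum(item.total for item in items)


def reprice(items: list[LineItem], description: str, new_unit_price: float) -> float:
    """Apply a new unit price to one item and return the change in the estimate total."""
    before = estimate_total(items)
    for item in items:
        if item.description == description:
            item.unit_price = new_unit_price
            break
    else:
        raise KeyError(f"no line item named {description!r}")
    return estimate_total(items) - before


estimate = [
    LineItem("structural steel", 120, "t", 900.0),
    LineItem("copper cable", 5000, "m", 12.0),
    LineItem("ready-mix concrete", 300, "m3", 150.0),
]
delta = reprice(estimate, "copper cable", 15.0)  # e.g. a shortage pushes copper up
print(f"New total: {estimate_total(estimate):,.2f} (change: {delta:+,.2f})")
```

This prints `New total: 228,000.00 (change: +15,000.00)`; the returned change is the figure an estimator would pass on to stakeholders.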

By streamlining the procurement process and connecting it directly to the estimating system, industrial estimators can avoid costly delays and keep the project moving forward.

The Future of Industrial Estimating: Virtual and Augmented Reality

Looking ahead, virtual and augmented reality (VR and AR) are set to play an even more significant role in industrial estimating services. With VR and AR, estimators can create immersive, 3D simulations of the project, allowing them to visualize the construction process and identify potential issues before the project begins.

For example, by using AR glasses, estimators can overlay digital models onto the physical site, providing a real-time view of how the project will look once completed. This technology can help estimators identify issues related to space, design, or logistics that may not be apparent in traditional 2D drawings.

Incorporating VR and AR into the estimating process allows for better decision-making and a more accurate understanding of how the project will progress. As these technologies continue to evolve, industrial estimating services will become even more precise and efficient.

Conclusion: Embracing the Digital Shift for Better Industrial Estimating

The digital shift is transforming the way industrial estimating services operate, bringing about improvements in accuracy, efficiency, and collaboration. From BIM and cloud-based software to AI and real-time data, technology is enabling industrial estimators to generate more precise cost estimates, reduce risks, and streamline the estimating process.

By embracing these digital tools, industrial estimating services can provide more reliable estimates that help projects stay within budget and on schedule. As technology continues to evolve, the potential for further improvements in estimating accuracy and efficiency is immense, and those who adopt these tools will be better equipped to navigate the complexities of modern industrial construction.

#digital shift#industrial estimating service#Building Information Modeling#BIM#cloud-based estimating#AI in estimating#machine learning#real-time data#predictive estimating#industrial construction#procurement integration#cost estimating software#augmented reality#virtual reality#project management software#cost forecasting#risk management#material costs#labor rates#supply chain disruptions#data analytics#construction delays#technology in construction#digital tools#cost prediction#construction efficiency#estimating accuracy#project collaboration#immersive technology#VR in construction

0 notes


AI Tools: What They Are and How They Transform the Future

Artificial Intelligence (AI) tools are revolutionizing various industries, from healthcare to finance, by automating processes, enhancing decision-making, and providing innovative solutions. In this article, we'll delve into what AI tools are, their applications, the emergence of generative AI tools, and how you can start your AI learning journey in Vasai-Virar.

What Are AI Tools?

AI tools are software applications that leverage artificial intelligence techniques, such as machine learning, natural language processing, and computer vision, to perform tasks that typically require human intelligence. These tools can analyze data, recognize patterns, make predictions, and even interact with humans in natural language.

What Are AI Tools Used For?

AI tools have a wide range of applications across various sectors:

Healthcare: Diagnosing diseases, personalizing treatment plans, and predicting patient outcomes.

Finance: Fraud detection, algorithmic trading, and customer service automation.

Marketing: Personalizing advertisements, predicting customer behavior, and analyzing market trends.

Education: Personalized learning, automated grading, and content creation.

What Are Generative AI Tools?

Generative AI tools are a subset of AI tools that create new content, such as text, images, and music, by learning patterns from existing data. Examples include:

Chatbots: Generating human-like responses in conversations.

Art Generators: Creating unique pieces of art or design elements.

Content Creation Tools: Writing articles, stories, or marketing copy.

What Is the Best AI Tool?

The "best" AI tool depends on your specific needs and industry. Some of the most popular AI tools include:

TensorFlow: An open-source platform for machine learning.

PyTorch: A deep learning framework used for developing AI models.

IBM Watson: An AI platform for natural language processing and machine learning.

What Is ChatGPT?

ChatGPT is an AI tool developed by OpenAI that uses the GPT (Generative Pre-trained Transformer) model to generate human-like text based on the input it receives. It can be used for various applications, such as customer service chatbots, content creation, and virtual assistants.

AI Project-Based Learning in Vasai-Virar

Project-based learning is an effective way to understand AI tools. In Vasai-Virar, there are several opportunities to engage in AI projects, from developing chatbots to creating predictive models. This hands-on approach ensures you gain practical experience and a deeper understanding of AI.

AI Application Training in Vasai-Virar

Training programs in Vasai-Virar focus on the practical applications of AI, teaching you how to implement AI tools in real-world scenarios. These courses often cover:

Machine learning algorithms

Data analysis

Natural language processing

AI model deployment

AI Technology Courses in Vasai-Virar

AI technology courses in Vasai-Virar provide comprehensive education on AI concepts, tools, and techniques. These courses are designed for beginners as well as professionals looking to enhance their skills. Topics covered include:

Introduction to AI and machine learning

Python programming for AI

AI ethics and societal impacts

Advanced AI topics like deep learning and neural networks

Where to Learn AI

AI courses are available online and offline, through universities, private institutions, and online platforms such as Coursera, edX, and Udacity. In Vasai-Virar, Hrishi Computer Education offers specialized AI courses tailored to local needs.

Who Can Learn AI?

AI is a versatile field open to anyone with an interest in technology and data. It is particularly suited for:

Students pursuing degrees in computer science or related fields

Professionals looking to upskill

Entrepreneurs aiming to integrate AI into their businesses

Can I Learn AI on My Own?

Yes, with the plethora of online resources, it is possible to learn AI independently. Online courses, tutorials, and textbooks provide a structured path for self-learners.

How Long Does It Take to Learn AI?

The time it takes to learn AI varies based on your background and the depth of knowledge you seek. A basic understanding can be achieved in a few months, while becoming proficient might take a year or more of dedicated study and practice.

How to Learn AI from Scratch

Start with the Basics: Learn programming languages like Python.

Study Machine Learning: Understand algorithms and how they work.

Hands-On Projects: Apply your knowledge through real-world projects.

Advanced Topics: Dive into deep learning, neural networks, and AI ethics.

Continuous Learning: Stay updated with the latest advancements in AI.

Is AI Hard to Learn?

Learning AI can be challenging due to its complex concepts and the mathematical foundations required. However, with dedication, practice, and the right resources, it is certainly achievable.

Call to Action

If you want to learn AI and become proficient in using AI tools, enroll now in our AI Tools Course Vasai-Virar at Hrishi Computer Education. Gain hands-on experience and transform your career with our comprehensive AI training.

#what is ai tools#what is ai tools used for#what is generative ai tools#what is the best ai tool#what is ai tools chatgpt#AI project-based learning Vasai Virar#AI application training Vasai Virar#AI technology course Vasai Virar#where to learn ai#who can learn ai#can i learn ai on my own#how long does it take to learn ai#learn ai#how to learn ai from scratch#is ai hard to learn

0 notes


There is no such thing as AI.

How to help the non technical and less online people in your life navigate the latest techbro grift.

I've seen other people say stuff to this effect, but it's worth reiterating. Today in class, my professor was talking about a news article where a celebrity's likeness was used in an AI image without their permission. Then she mentioned a guest lecture about how AI is going to help finance professionals. Then I pointed out that those two things aren't really related.

The term AI is being used to obfuscate details about multiple semi-related technologies.

Traditionally in sci-fi, AI means artificial general intelligence like Data from Star Trek or the Terminator. This, I shouldn't need to say, doesn't exist. Techbros use the term AI to trick investors into funding their projects. It's largely a grift.

What is the term AI being used to obfuscate?

If you want to help the less online and less tech literate people in your life navigate the hype around AI, the best way to do it is to encourage them to change their language around AI topics.

By calling these technologies what they really are, and encouraging the people around us to know the real names, we can help lift the veil, kill the hype, and keep people safe from scams. Here are some starting points, which I am just pulling from Wikipedia. I'd highly encourage you to do your own research.

Machine learning (ML): is an umbrella term for solving problems for which development of algorithms by human programmers would be cost-prohibitive, and instead the problems are solved by helping machines "discover" their "own" algorithms, without needing to be explicitly told what to do by any human-developed algorithms. (This is the basis of most of the technology people call AI.)
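To make "discover their own algorithms" concrete: below is a perceptron, one of the oldest and simplest learning algorithms (nowhere near the scale of modern systems). Nobody writes the AND rule into it; it adjusts two weights and a bias from four labelled examples until its guesses match. The task and the settings are just illustrative choices:

```python
Example = tuple[tuple[int, ...], int]


def predict(weights: list[float], bias: float, features: tuple[int, ...]) -> int:
    """Fire (1) if the weighted sum of inputs plus the bias is positive, else 0."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(examples: list[Example], epochs: int = 20, learning_rate: float = 1.0):
    """Learn weights and a bias from labelled examples with the perceptron rule."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(weights, bias, features)  # 0 when the guess is right
            # Nudge each weight toward the correct answer; nothing changes if error is 0.
            weights = [w + learning_rate * error * x for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


# Four labelled examples of logical AND; the rule itself is never written down.
and_examples: list[Example] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_examples)
print(weights, bias)
print([predict(weights, bias, x) for x, _ in and_examples])
```

The second line prints `[0, 0, 0, 1]`: the machine found numbers that reproduce AND without anyone telling it the rule.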

Language model (LM; the very large ones are called LLMs): is a probabilistic model of a natural language that can generate probabilities of a series of words, based on text corpora in one or multiple languages it was trained on. (This would be your ChatGPT.)
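Here's the "probabilities of a series of words" idea at toy scale: a bigram model that counts which word follows which in a tiny made-up corpus and multiplies those probabilities together. ChatGPT uses a huge neural network (a transformer) rather than raw counts, so treat this as an illustration of the concept only:

```python
from collections import Counter, defaultdict


def tokens(sentence: str) -> list[str]:
    """Lowercase, split on spaces, and add start/end markers."""
    return ["<s>"] + sentence.lower().split() + ["</s>"]


def train_bigram(corpus: list[str]) -> dict[str, dict[str, float]]:
    """Estimate P(next word | previous word) by counting adjacent word pairs."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = tokens(sentence)
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    model = {}
    for prev, followers in counts.items():
        total = sum(followers.values())
        model[prev] = {word: n / total for word, n in followers.items()}
    return model


def sequence_probability(model: dict[str, dict[str, float]], sentence: str) -> float:
    """Multiply the conditional probabilities of each word given the one before it."""
    words = tokens(sentence)
    probability = 1.0
    for prev, nxt in zip(words, words[1:]):
        probability *= model.get(prev, {}).get(nxt, 0.0)
    return probability


corpus = ["the cat sat", "the cat ran", "the dog sat"]
model = train_bigram(corpus)
print(model["the"])
print(sequence_probability(model, "the cat sat"))
print(sequence_probability(model, "the dog ran"))
```

"the cat sat" gets probability 1/3, while "the dog ran" gets 0 because the toy model never saw "dog" followed by "ran". Neural language models assign some probability to unseen combinations instead of hard zeros.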

Generative adversarial network (GAN): is a class of machine learning framework and a prominent framework for approaching generative AI. In a GAN, two neural networks contest with each other in the form of a zero-sum game, where one agent's gain is another agent's loss. (This is the source of some AI images and deepfakes.)
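The "zero-sum game" is usually written as the minimax objective from the original GAN formulation: the discriminator D tries to output a high probability for real images x and a low one for generated images G(z), where z is random noise drawn from a simple distribution p_z, while the generator G tries to push D(G(z)) up:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

One network's gain in V is exactly the other's loss, which is the zero-sum part.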

Diffusion Models: Models that learn the probability distribution of a given dataset. In image generation, a neural network is trained to denoise images with added Gaussian noise by learning to remove the noise. After the training is complete, it can then be used for image generation by starting with a random noise image and denoising it. (This is the more common technology behind AI images, including DALL-E and Stable Diffusion. I added this one to the post after it was brought to my attention that it is now more common than GANs.)
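The "added Gaussian noise" part is easy to show. This stdlib Python sketch implements only the forward noising step used to make training examples, x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise; the trained network's job is to undo it. The 1,000-step linear schedule from 1e-4 to 0.02 is a setting commonly used in DDPM-style models and is an assumption here, as is the 4-pixel "image":

```python
import math
import random


def linear_beta_schedule(steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> list[float]:
    """Per-step noise variances, increasing linearly from beta_start to beta_end."""
    return [beta_start + (beta_end - beta_start) * t / (steps - 1) for t in range(steps)]


def cumulative_alphas(betas: list[float]) -> list[float]:
    """alpha_bar_t = product of (1 - beta_s) for s <= t: how much original signal survives."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0: list[float], alpha_bar: float, rng: random.Random) -> list[float]:
    """Sample x_t directly from x_0 by mixing in standard Gaussian noise."""
    return [
        math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * rng.gauss(0.0, 1.0)
        for x in x0
    ]


rng = random.Random(0)
image = [1.0, -1.0, 0.5, -0.5]  # toy 4-pixel "image", values scaled to [-1, 1]
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
for t in (0, 250, 999):
    noisy = add_noise(image, alpha_bars[t], rng)
    print(t, round(alpha_bars[t], 4), [round(v, 2) for v in noisy])
```

Early steps barely change the pixels, and by the last step almost nothing of the original survives (alpha_bar is close to 0), which is why generation can start from pure noise and work backwards.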

I know these terms are more technical, but they are also more accurate, and they can easily be explained in a way non-technical people can understand. The grifters are using language to give this technology its power, so we can use language to take its power away and let people see it for what it really is.

12K notes



"A 9th grader from Snellville, Georgia, has won the 3M Young Scientist Challenge, after inventing a handheld device designed to detect pesticide residues on produce.

Sirish Subash set himself apart with his AI-based sensor to win the grand prize of $25,000 cash and the prestigious title of “America’s Top Young Scientist.”

Like most inventors, Sirish was intrigued with curiosity and a simple question. His mother always insisted that he wash the fruit before eating it, and the boy wondered if the preventative action actually did any good.

He learned that 70% of produce items contain pesticide residues that are linked to possible health problems like cancer and Alzheimer’s—and washing only removes part of the contamination.

“If we could detect them, we could avoid consuming them, and reduce the risk of those health issues.”

His device, called PestiSCAND, employs spectrophotometry, which involves measuring the light that is reflected off the surface of fruits and vegetables. In his experiments he tested over 12,000 samples of apples, spinach, strawberries, and tomatoes. Different materials reflect and absorb different wavelengths of light, and PestiSCAND can look for the specific wavelengths related to the pesticide residues.

After scanning the food, PestiSCAND uses an AI machine learning model to analyze the lightwaves to determine the presence of pesticides. With its sensor and processor, the prototype achieved a detection accuracy rate of greater than 85%, meeting the project’s objectives for effectiveness and speed.

Sirish plans to continue working on the prototype with a price-point goal of just $20 per device, and hopes to get it to market by the time he starts college." [Note: That's in 4 years.]

-via Good News Network, October 27, 2024
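The article doesn't say which machine learning model PestiSCAND uses, so this is only a hypothetical sketch of the general idea: treat each scan as a list of reflectance readings at a few wavelengths, average the labelled training scans for each class, and label a new scan by whichever class average it's closest to (a nearest-centroid classifier). Every number below is made up for illustration and is not from Sirish's experiments:

```python
import math

Spectrum = list[float]


def centroid(spectra: list[Spectrum]) -> Spectrum:
    """Average reflectance at each wavelength across several scans."""
    return [sum(values) / len(spectra) for values in zip(*spectra)]


def train(labelled: list[tuple[Spectrum, str]]) -> dict[str, Spectrum]:
    """Group training scans by label and keep one average spectrum per label."""
    by_label: dict[str, list[Spectrum]] = {}
    for spectrum, label in labelled:
        by_label.setdefault(label, []).append(spectrum)
    return {label: centroid(scans) for label, scans in by_label.items()}


def classify(centroids: dict[str, Spectrum], spectrum: Spectrum) -> str:
    """Pick the label whose average spectrum is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(centroids[label], spectrum))


def accuracy(centroids: dict[str, Spectrum], labelled: list[tuple[Spectrum, str]]) -> float:
    """Fraction of labelled scans the classifier gets right."""
    correct = sum(classify(centroids, s) == label for s, label in labelled)
    return correct / len(labelled)


# Made-up reflectance fractions at three hypothetical wavelengths.
training = [
    ([0.42, 0.55, 0.61], "clean"),
    ([0.40, 0.57, 0.60], "clean"),
    ([0.35, 0.47, 0.66], "residue"),
    ([0.33, 0.49, 0.68], "residue"),
]
held_out = [([0.41, 0.56, 0.62], "clean"), ([0.34, 0.48, 0.65], "residue")]
model = train(training)
print(classify(model, [0.36, 0.46, 0.67]))
print(accuracy(model, held_out))
```

Accuracy here is the fraction of held-out scans labelled correctly, the usual definition behind a "detection accuracy rate"; with only two toy scans it says nothing about how a real device would perform.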

1K notes


Note

Why reblog machine-generated art?

When I was ten years old I took a photography class where we developed black and white photos by projecting light on papers bathed in chemicals. If we wanted to change something in the image, we had to go through a gradual, arduous process called dodging and burning.

When I was fifteen years old I used Photoshop for the first time, and I remember clicking on the clone tool or the blur tool and feeling like I was cheating.

When I was twenty eight I got my first smartphone. The phone could edit photos. A few taps with my thumb were enough to apply filters and change contrast and even spot correct. I was holding in my hand something more powerful than the huge light machines I'd first used to edit images.

When I was thirty-six, just a few weeks ago, I took a photo class that used Lightroom Classic and again, it felt like cheating. It made me really understand how much the color profiles of popular web images I'd been seeing for years had been pumped and tweaked and layered with local edits to make something that, to my eyes, didn't much resemble photography. To me, photography is light on paper. It's what you capture in the lens. It's not automatic skin smoothing and a local filter to boost the sky. This reminded me a lot more of the photomanipulations my friend used to make on DeviantArt; layered things with unnatural colors that put wings on buildings or turned an eye into a swimming pool. It didn't remake the images to that extent, obviously, but it tipped into the uncanny valley. More real than real, more saturated, more sharp, and more present than the actual world my lens saw. And that was before I found the AI-assisted filters and the tool that would identify the whole sky for you, picking pieces of it out from between leaves.

You know, it's funny, when people talk about artists who might lose their jobs to AI they don't talk about the people who have already had to move on from their photo editing work because of technology. You used to be able to get paid for basic photo manipulation, you know? If you were quick with a lasso or skilled with masks you could get a pretty decent chunk of change by pulling subjects out of backgrounds for family holiday cards or isolating the pies on the menu for a mom and pop. Not a lot, but enough to help. But, of course, you can just do that on your phone now. There's no need to pay a human for it, even if they might do a better job or be more considerate toward the aesthetic of an image.

And they certainly don't talk about all the development labs that went away, or the way that you could have trained to be a studio photographer if you wanted to take good photos of your family to hang on the walls and that digital photography allowed in a parade of amateurs who can make dozens of iterations of the same bad photo until they hit on a good one by sheer volume and luck; if you want to be a good photographer everyone can do that why didn't you train for it and spend a long time taking photos on film and being okay with bad photography don't you know that digital photography drove thousands of people out of their jobs.

My dad told me that he plays with AI the other day. He hosts a movie podcast and he puts up thumbnails for the downloads. In the past, he'd just take a screengrab from the film. Now he tells the Bing AI to make him little vignettes. A cowboy running away from a rhino, a dragon arm-wrestling a teddy bear. That kind of thing. Usually based on a joke that was made on the show, or about the subject of the film and an interest of the guest.

People talk about "well AI art doesn't allow people to create things, people were already able to create things, if they wanted to create things they should learn to create things." Not everyone wants to make good art that's creative. Even fewer people want to put the effort into making bad art for something that they aren't passionate about. Some people want filler to go on the cover of their YouTube video. My dad isn't going to learn to draw, and as the person who he used to ask to Photoshop him as Ant-Man because he certainly couldn't pay anyone for that kind of thing, I think this is a great use case for AI art. This senior citizen isn't going to start cartooning, and at two recordings a week with a one-day editing turnaround he doesn't even really have the time for something like a Fiverr commission. This is a great use of AI art, actually.

I also know an artist who is going Hog Fucking Wild creating AI art of their blorbos. They're genuinely an incredibly talented artist who happens to want to see their niche interest represented visually without having to draw it all themself. They're posting the funny and good results to a small circle of mutuals on socials with clear information about the source of the images; they aren't trying to sell any of the images, they're basically using them as inserts for custom memes. Who is harmed by this person saying "i would like to see my blorbo lasciviously eating an ice cream cone in the is this a pigeon meme"?

The way I use machine-generated art, as an artist, is to proof things. Can I get an explosion to look like this? What would a wall of dead computer monitors look like? Would a ballerina leaping over the Grand Canyon look cool? Sometimes I use AI art to generate copyright-free objects that I can snip for a collage. A lot of the time I use it to generate ideas. I start naming random things and seeing what it shows me and I start getting inspired. I can ask Craiyon for pose reference, I can ask it to show me the interior of spaces from a specific angle.

I profoundly dislike the antipathy that tumblr has for AI art. I understand if people don't want their art used in training pools. I understand if people don't want AI trained on their art to mimic their style. You should absolutely use those tools that poison datasets if you don't want your art included in AI training. I think that's an incredibly appropriate action to take as an artist who doesn't want AI learning from your work.

However I'm pretty fucking aggressively opposed to copyright and most of the "solid" arguments against AI art come down to "the AIs viewed and learned from people's copyrighted artwork and therefore AI is theft rather than fair use" and that's a losing argument for me. In. Like. A lot of ways. Primarily because it is saying that not only is copying someone's art theft, it is saying that looking at and learning from someone's art can be defined as theft rather than fair use.

Also because it's just patently untrue.

But that doesn't really answer your question. Why reblog machine-generated art? Because I liked that piece of art.

It was made by a machine that had looked at billions of images - some copyrighted, some not, some new, some old, some interesting, many boring - and guided by a human and I liked it. It was pretty. It communicated something to me. I looked at an image a machine made - an artificial picture, a total construct, something with no intrinsic meaning - and I felt a sense of quiet and loss and nostalgia. I looked at a collection of automatically arranged pixels and tasted salt and smelled the humidity in the air.

I liked it.

I don't think that all AI art is ugly. I don't think that AI art is all soulless (I actually think that 'having soul' is a bizarre descriptor for art and that lacking soul is an equally bizarre criticism). I don't think that AI art is bad for artists. I think the problem that people have with AI art is capitalism and I don't think that's a problem that can really be laid at the feet of people curating an aesthetic AI art blog on tumblr.

Machine learning isn't the fucking problem; the problem is that massive corporations have been trying hard not to pay artists for as long as massive corporations have existed (isn't that a B-plot in The Shape of Water? the neighbor who draws ads gets pushed out of his job by product photography? did you know that as recently as ten years ago Newegg had in-house photographers who would take pictures of the products so users wouldn't have to rely on the manufacturer photos? I want you to guess what killed that job and I'll give you a hint: it wasn't AI)

Am I putting a human out of a job because I reblogged an AI-generated "photo" of curtains waving in the pale green waters of an imaginary beach? Who would have taken this photo of a place that doesn't exist? Who would have painted this hypersurrealistic image? What meaning would it have had if they had painted it or would it have just been for the aesthetic? Would someone have paid for it or would it be like so many of the things that artists on this site have spent dozens of hours on only to get no attention or value for their work?

My worst ratio of hours to notes is an 8-page hand-drawn detailed ink comic about getting assaulted at a concert and the complicated feelings that evoked that took me weeks of daily drawing after work with something like 54 notes after 8 years; should I be offended if something generated from a prompt has more notes than me? What does that actually get the blogger? Clout? I believe someone said that popularity on tumblr gets you one thing and that is yelled at.

What do you get out of this? Are you helping artists right now? You're helping me, and I'm an artist. I've wanted to unload this opinion for a while because I'm sick of the argument that all Real Artists think AI is bullshit. I'm a Real Artist. I've been paid for Real Art. I've been commissioned as an artist.

And I find a hell of a lot of AI art a lot more interesting than I find human-generated corporate art or Thomas Kinkade (but then, I repeat myself).

There are plenty of people who don't like AI art and don't want to interact with it. I am not one of those people. I thought the gay sex cats were funny and looked good and that shitposting is the ideal use of a machine image generation: to make uncopyrightable images to laugh at.

I think that tumblr has decided to take a principled stand against something that most people making the argument don't understand. I think tumblr's loathing for AI has, generally speaking, thrown weight behind a bunch of ideas that I think are going to be incredibly harmful *to artists specifically* in the long run.

Anyway. If you hate AI art and you don't want to interact with people who interact with it, block me.

5K notes



So, let me try and put everything together here, because I really do think it needs to be talked about.

Today, Unity announced that it intends to apply a fee to use its software. Then it got worse.

For those not in the know, Unity is the most popular free-to-use video game development tool, offering a basic version for individuals who want to learn how to create games or create independently, alongside paid versions for corporations or people who want more features. It's decent enough at this job; it has issues, but for the price point I can't complain, and it's the ideal entry point into creating in this medium. It's a very important piece of software.

But speaking of tools, the CEO is a massive one. When he was the COO of EA, he advocated for what sounds like outright emotional manipulation to coerce players into microtransactions.

"A consumer gets engaged in a property, they might spend 10, 20, 30, 50 hours on the game and then when they're deep into the game they're well invested in it. We're not gouging, but we're charging and at that point in time the commitment can be pretty high."

He also called game developers who don't discuss monetization early in the planning stages of development, quote, "fucking idiots".

So that sets the stage for what might be one of the most bald-faced, greedy moves I've seen from a corporation in a minute. Most at least have the sense of self-preservation to hide it.

A few hours ago, Unity posted this announcement on the official blog.

Effective January 1, 2024, we will introduce a new Unity Runtime Fee that’s based on game installs. We will also add cloud-based asset storage, Unity DevOps tools, and AI at runtime at no extra cost to Unity subscription plans this November. We are introducing a Unity Runtime Fee that is based upon each time a qualifying game is downloaded by an end user. We chose this because each time a game is downloaded, the Unity Runtime is also installed. Also we believe that an initial install-based fee allows creators to keep the ongoing financial gains from player engagement, unlike a revenue share.

Now there are a few red flags to note in this pitch immediately.

Unity is planning on charging a fee on all games which use its engine.

This is a flat fee per number of installs.

They are using an always-online runtime function to determine whether a game has been downloaded.

There are just so many things wrong with this that it's hard to know where to start, not helped by this FAQ, which doubled down on a lot of the major issues people had.

I guess let's start with what people noticed first. Because it's using a system baked into the software itself, Unity would not be differentiating between a "purchase" and a "download". If someone uninstalls and reinstalls a game, that's two downloads. If someone gets a new computer or a new console and downloads a game they already purchased on their account, that's two downloads. If someone pirates the game, the studio will be asked to pay for that download.

Q: How are you going to collect installs?
A: We leverage our own proprietary data model. We believe it gives an accurate determination of the number of times the runtime is distributed for a given project.

Q: Is software made in unity going to be calling home to unity whenever it's ran, even for enterprice licenses?
A: We use a composite model for counting runtime installs that collects data from numerous sources. The Unity Runtime Fee will use data in compliance with GDPR and CCPA. The data being requested is aggregated and is being used for billing purposes.

Q: If a user reinstalls/redownloads a game / changes their hardware, will that count as multiple installs?
A: Yes. The creator will need to pay for all future installs. The reason is that Unity doesn’t receive end-player information, just aggregate data.

Q: What's going to stop us being charged for pirated copies of our games?
A: We do already have fraud detection practices in our Ads technology which is solving a similar problem, so we will leverage that know-how as a starting point. We recognize that users will have concerns about this and we will make available a process for them to submit their concerns to our fraud compliance team.

This is potentially related to a new system that will require Unity Personal developers to go online at least once every three days.

Starting in November, Unity Personal users will get a new sign-in and online user experience. Users will need to be signed into the Hub with their Unity ID and connect to the internet to use Unity. If the internet connection is lost, users can continue using Unity for up to 3 days while offline. More details to come, when this change takes effect.

It's unclear whether this requirement will be attached to any and all Unity games, though it would explain how they're theoretically able to track "the number of installs", and why the methodology for tracking these installs is so shit, as we'll discuss later.

Unity claims that it will only apply this fee to games which surpass a certain threshold of downloads and yearly revenue.

Only games that meet the following thresholds qualify for the Unity Runtime Fee: Unity Personal and Unity Plus: Those that have made $200,000 USD or more in the last 12 months AND have at least 200,000 lifetime game installs. Unity Pro and Unity Enterprise: Those that have made $1,000,000 USD or more in the last 12 months AND have at least 1,000,000 lifetime game installs.
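To make the threshold rule concrete, here's a rough sketch of the eligibility check as the quote describes it. The figures are copied from the quote; the `Game` type, the plan labels, and the function name are made up for illustration, and this obviously isn't Unity's actual billing code. The key detail is the AND: a game has to clear both the revenue line and the install line.

```python
from dataclasses import dataclass

# Thresholds from the quoted announcement:
# (revenue in the last 12 months in USD, lifetime installs)
THRESHOLDS = {
    "personal": (200_000, 200_000),
    "plus": (200_000, 200_000),
    "pro": (1_000_000, 1_000_000),
    "enterprise": (1_000_000, 1_000_000),
}


@dataclass
class Game:
    plan: str                 # hypothetical label for the Unity plan used
    revenue_last_12m: float   # USD
    lifetime_installs: int    # every install ever, however it happened


def qualifies_for_runtime_fee(game: Game) -> bool:
    """Return True only if BOTH thresholds are met ("$X or more AND at least N installs")."""
    min_revenue, min_installs = THRESHOLDS[game.plan]
    return game.revenue_last_12m >= min_revenue and game.lifetime_installs >= min_installs


if __name__ == "__main__":
    # A small studio just over the revenue line, with installs inflated by a subscription service.
    print(qualifies_for_runtime_fee(Game("personal", 210_000, 2_000_000)))
    # Same install count, but under the revenue line.
    print(qualifies_for_runtime_fee(Game("personal", 150_000, 2_000_000)))
```

The first call prints `True` and the second `False`. Notice that nothing in the install half of the check cares whether those installs were purchases, reinstalls, or free subscription downloads.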

They don't say how they're going to collect information on a game's revenue. Likely this is just to say that they're only interested in squeezing larger products (games like Genshin Impact, Honkai: Star Rail, Fate/Grand Order, Among Us, and Fall Guys) and not every 2-dollar puzzle platformer that drops on Steam. But these larger products also have the easiest time porting off of Unity and the most incentive to do so, meaning that realistically, the ones most heavily impacted are going to be those who just barely meet this threshold, most of them indie developers.

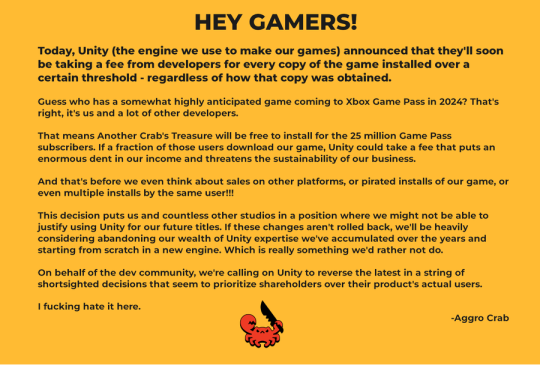

Aggro Crab Games, one of the first to properly break this story, points out that services like Xbox Game Pass, which is already pretty predatory towards smaller developers, will quickly inflate a game's "lifetime game installs", meaning that even developers who only just clear the $200k revenue threshold will be asked to pay a fee per install, not a percentage of said revenue.

[IMAGE DESCRIPTION: Hey Gamers!

Today, Unity (the engine we use to make our games) announced that they'll soon be taking a fee from developers for every copy of the game installed over a certain threshold - regardless of how that copy was obtained.

Guess who has a somewhat highly anticipated game coming to Xbox Game Pass in 2024? That's right, it's us and a lot of other developers.

That means Another Crab's Treasure will be free to install for the 25 million Game Pass subscribers. If a fraction of those users download our game, Unity could take a fee that puts an enormous dent in our income and threatens the sustainability of our business.

And that's before we even think about sales on other platforms, or pirated installs of our game, or even multiple installs by the same user!!!

This decision puts us and countless other studios in a position where we might not be able to justify using Unity for our future titles. If these changes aren't rolled back, we'll be heavily considering abandoning our wealth of Unity expertise we've accumulated over the years and starting from scratch in a new engine. Which is really something we'd rather not do.

On behalf of the dev community, we're calling on Unity to reverse the latest in a string of shortsighted decisions that seem to prioritize shareholders over their product's actual users.

I fucking hate it here.

-Aggro Crab - END DESCRIPTION]

That fee, by the way, is a flat fee. Not a percentage, not a royalty. This means that any games made in Unity expecting any kind of success are heavily incentivized to cost as much as possible.

[IMAGE DESCRIPTION: A table listing the various fees by number of Installs over the Install Threshold vs. version of Unity used, ranging from $0.01 to $0.20 per install. END DESCRIPTION]

Basic elementary school math tells us that if a game comes out for $1.99, the developer will be paying, at maximum, about 10% of their revenue per sale to Unity (a $0.20 fee on a $1.99 sale), whereas jacking the price up to $59.99 lowers that percentage to something closer to 0.3%. Obviously any company, especially one in the kind of financial desperation that a sudden anchor on all its revenue is going to create, is going to choose the latter.
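Here's that arithmetic spelled out as a tiny sketch, using the top rate from the fee table ($0.20 per install). The function name and the `installs_per_sale` knob are my own invention; setting it to 1.0 assumes exactly one install per purchase, which is the best case, since reinstalls, new hardware, and pirated copies all count as installs per the FAQ. It also ignores storefront cuts and taxes.

```python
def fee_share_of_price(price_usd: float, fee_per_install_usd: float,
                       installs_per_sale: float = 1.0) -> float:
    """Fraction of one sale's price eaten by a flat per-install fee.

    installs_per_sale is an assumption: 1.0 means one install per purchase.
    Reinstalls, extra devices, and piracy would push it (and the result) higher.
    Platform cuts and taxes are ignored.
    """
    return fee_per_install_usd * installs_per_sale / price_usd


TOP_RATE_USD = 0.20  # highest per-install rate in Unity's fee table

if __name__ == "__main__":
    for price in (1.99, 59.99):
        share = fee_share_of_price(price, TOP_RATE_USD)
        print(f"${price:.2f} game: {share:.2%} of the price per install")
```

Running it prints roughly 10.05% for the $1.99 game and 0.33% for the $59.99 one. Because the fee is flat, the only lever a developer controls in that fraction is the price, and bumping `installs_per_sale` to 2 doubles the cheap game's share.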

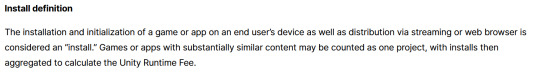

Furthermore, and following the trend of "fuck anyone who doesn't ask for money", Unity helpfully defines what an install is on their main site.

As I'm looking at this page right now, it says:

The installation and initialization of a game or app on an end user’s device as well as distribution via streaming is considered an “install.” Games or apps with substantially similar content may be counted as one project, with installs then aggregated to calculate the Unity Runtime Fee.

However, I saw a screenshot saying something different, and using the Wayback Machine we can see that this phrasing was changed at some point in the few hours since the announcement went up. Originally, it read:

The installation and initialization of a game or app on an end user’s device as well as distribution via streaming or web browser is considered an “install.” Games or apps with substantially similar content may be counted as one project, with installs then aggregated to calculate the Unity Runtime Fee.

Screenshot for posterity:

That would mean web browser games made in Unity would count towards this install threshold. You could legitimately drive the count up simply by continuously refreshing the page. The FAQ, again, doubles down.

Q: Does this affect WebGL and streamed games? A: Games on all platforms are eligible for the fee but will only incur costs if both the install and revenue thresholds are crossed. Installs - which involves initialization of the runtime on a client device - are counted on all platforms the same way (WebGL and streaming included).

And, what I personally consider to be the most suspect claim in this entire debacle, they claim that "lifetime installs" includes installs prior to this change going into effect.

Will this fee apply to games using Unity Runtime that are already on the market on January 1, 2024? Yes, the fee applies to eligible games currently in market that continue to distribute the runtime. We look at a game's lifetime installs to determine eligibility for the runtime fee. Then we bill the runtime fee based on all new installs that occur after January 1, 2024.

Again, again, doubled down in the FAQ.

Q: Are these fees going to apply to games which have been out for years already? If you met the threshold 2 years ago, you'll start owing for any installs monthly from January, no? (in theory). It says they'll use previous installs to determine threshold eligibility & then you'll start owing them for the new ones. A: Yes, assuming the game is eligible and distributing the Unity Runtime then runtime fees will apply. We look at a game's lifetime installs to determine eligibility for the runtime fee. Then we bill the runtime fee based on all new installs that occur after January 1, 2024.
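A rough sketch of the rule in that answer: eligibility counts every install the game has ever had, including ones from before the fee was announced, while billing counts only installs from January 1, 2024 onward. This follows the FAQ's wording and simplifies a lot: the function name is made up, `rate_usd` is a hypothetical flat rate (the real table is tiered between $0.01 and $0.20 and talks about installs over the threshold), the threshold defaults to the Personal/Plus figure, and the revenue test is reduced to a yes/no flag.

```python
def runtime_fee_usd(pre_2024_installs: int, new_installs: int,
                    meets_revenue_threshold: bool,
                    install_threshold: int = 200_000,
                    rate_usd: float = 0.20) -> float:
    """Sketch of the FAQ rule: lifetime installs decide eligibility,
    but only installs from January 1, 2024 onward are billed.

    rate_usd is a hypothetical flat rate; Unity's actual table is tiered.
    """
    # Installs from before the fee existed still count toward eligibility.
    lifetime_installs = pre_2024_installs + new_installs
    if not (meets_revenue_threshold and lifetime_installs >= install_threshold):
        return 0.0
    # Only post-cutoff installs are charged.
    return new_installs * rate_usd


if __name__ == "__main__":
    # An older game that crossed the threshold years ago owes on every new install...
    print(runtime_fee_usd(1_000_000, 10_000, True))
    # ...while a brand-new game with the same 10,000 installs owes nothing.
    print(runtime_fee_usd(0, 10_000, True))
```

With the same 10,000 installs in January, the older game owes about $2,000 and the new one owes nothing, purely because of installs that happened before anyone knew the fee existed.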

That would involve billing companies for using their software before telling them a bill existed: holding them to a contract for actions they performed before that contract existed!

Okay. I think that's everything. So far.

There is one thing that I want to mention before ending this post. Unfortunately, it's a little conspiratorial, but it's so hard to believe that anyone genuinely thought this was a good idea that it's stuck in my brain as a significant possibility.

A few days ago it was reported that Unity's CEO sold 2,000 shares of his own company.

On September 6, 2023, John Riccitiello, President and CEO of Unity Software Inc (NYSE:U), sold 2,000 shares of the company. This move is part of a larger trend for the insider, who over the past year has sold a total of 50,610 shares and purchased none.

I would not be surprised if this decision gets reversed tomorrow, or if it turns out it was literally only made so the CEO could short his own goddamn company. I would sooner believe that this whole thing is some idiotic attempt at committing fraud than a real monetization strategy, even knowing how unfathomably greedy these people can be.

So, with all that said, what do we do now?

Well, in all likelihood you won't need to do anything. As I said, some of the biggest names in the industry would be directly affected by this change, and you can bet your bottom dollar that they're not just going to take it lying down. After all, the only way to stop a greedy CEO is with a greedier CEO, right?

(I fucking hate it here.)

And that's not mentioning the indie devs who are already talking about abandoning the engine.

[Links display tweets from the lead developer of Among Us saying it'd be less costly to hire people to move the game off of Unity and Cult of the Lamb's official twitter saying the game won't be available after January 1st in response to the news.]

That being said, I'm still shaken by all this. The fact that Unity is openly willing to go back and punish its developers for ever having used the engine in the past makes me question my relationship to it.

The news has given rise to the visibility of free, open source alternative Godot, which, if you're interested, is likely a better option than Unity at this point. Mostly, though, I just hope we can get out of this whole, fucking, environment where creatives are treated as an endless mill of free profits that's going to be continuously ratcheted up and up to drive unsustainable infinite corporate growth that our entire economy is based on for some fuckin reason.

Anyways, that's that, I find having these big posts that break everything down to be helpful.

#Unity#Unity3D#Video Games#Game Development#Game Developers#fuckshit#I don't know what to tag news like this

6K notes

Text

(Arcane Meta) Hextech, the Anomaly Future, and Jayce's Hammer

One cool thing about the second hammer Jayce gets from the Anomaly future is it appears to have the opposite power of the hammer from his home universe.

The hammer Jayce forged in his home universe seems to engage the Hexgem inside in order to make itself weightless.

This follows the principles of his first experiments with Hextech, which were weightlessness and transportation.

In the Atlas Gauntlets and in his hammer, you can see how Jayce applied those principles to weaponry and tools. They are based on his original inspiration from the Mage who saved him, who made him and his mother weightless, and then transported them to safety.

These specific uses of Hextech by Jayce show a really fascinating understanding of how you could use weightlessness as a tool and then re-engage the weight to apply its full force, as seen with transporting ships at high speeds using the Hexgates, with Vi's gauntlets and here, with his hammer:

In contrast, it looks like Hextech in the Anomaly future works on the opposite principle. Rather than Jayce conceiving of Hextech to make the item it's put into weightless, it kinda looks like the beam from his hammer firing makes other things weightless and that Hextech in general might have worked like that throughout that universe:

See how all the pieces of architecture are floating, in what might be my single favorite shot from the whole show.

The effect from Jayce's hammer in the other universe is also inverted:

Where after he shoots the pillar, the pieces of it continue to float after. (By the way, the architectural feats you could accomplish if you had the power to make things weightless like that would be staggering.)

Jayce's hammer also stopped working when he went to the other universe, implying that Hextech doesn't work the same way there for some reason, perhaps because Jayce and Viktor innovated on it along different principles, or perhaps because the entire polarity is inverted in that universe so Hextech magic can only project outward instead of inward.

The fact that his alternate universe hammer doesn't have the weightlessness power at all further creates strain for Jayce when he needs to fight with it. In addition to having less muscle mass in general because of his time in the cave, and a permanently damaged leg, Jayce can't engage this hammer's power to become weightless the way he could in the Shimmer Factory fight, so he has to drag it along and throw all his weight into swinging it around:

Because the design of that hammer is basically an anvil on a stick when you can't engage the weightlessness. It's very cool looking but it is not fast anymore.

And one more note to end on: throughout the show, Jayce tends to innovate uses for Hextech along the same lines of weightlessness and transportation, all based on the original spells he saw his Mage use. You can see those innovations, as mentioned, in the Hexgates, the Atlas Gauntlets, Caitlyn's rifle (which uses the Hexgate runes to speed up the bullet), and his hammer.

Viktor, by contrast, innovates on a different path entirely, with the Hexclaw, which is a beam of light and doesn't rely on weightlessness or transportation, making it truly innovative compared to the original inspiration of the Mage (who is... also Viktor...). And of course, there's the Hexcore itself, the machine learning/AI version of Hextech that, as noted in the show, doesn't rely on using runes as single-application tools the way Jayce, a toolmaker, does.

583 notes

Text

In every mainline Fallout game except for New Vegas, players can earn the loyalty of a dog known as “Dogmeat.” As part of the main quest of Fallout 4, Dogmeat assists in tracking down the antagonist, even if the player has never encountered him before. When you leave Kellogg’s home, Nick simply starts talking about Dogmeat as if he’s a known quantity.

Perhaps related to this quirk of the world, Dogmeat is first named in this game when the clairvoyant Mama Murphy recognizes him and addresses him by name. The game’s UI calls him “DOG” until he is recognized by Valentine or Murphy. It seems clear that this German shepherd is somehow an independent agent with a good reputation, or something.

Dogmeat does not have a loyalty quest associated with him, which is how the player would earn the other companions’ perks. However, upon finding Astoundingly Awesome Tales #9 within the Institute, Dogmeat becomes more resistant to damage. While this isn’t coherent or conclusive evidence of Dogmeat being a synth, it’s plainly prompting the audience to consider that idea. In light of these factors, his origins have been fiercely debated among the community.

The skeptics and “hard sci-fi” fans out there would have you believe that he’s merely a famous stray dog who solves crimes. But I believe there's something more remarkable at work.

There's a section in the Fallout 2 instruction book called the Vault Dweller's Memoirs, where the player character of the first game recounts what canonically happened. Due to Fallout’s famously terrible companion AI, if you travelled to Mariposa with Dogmeat, he would consistently run into the force fields and get vaporized. So, in the Memoirs, we learn that this is exactly what became of Dogmeat Prime, in canon. He loyally sprinted into a wall of solid light, and disappeared. What if our buddy simply awoke in a new, confusing place?

In Fallout 2, Dogmeat must be found at the Cafe of Broken Dreams, which is explicitly a liminal space. It appears randomly to travellers in the desert. The NPCs within are frozen in time, such as a young version of President Tandi, who mentions that Ian went to “the Abbey,” an area cut from the game. To gain Dogmeat’s trust, the Chosen One must equip the Vault Dweller’s V-13 jumpsuit, which Dogmeat recognizes as belonging to his dead master. You can also attack him to spawn Mad Max, who claims ownership of the dog. Max fits the description of Dogmeat's original owner given in Fallout.

There’s also the “puppies” perk in Fallout 3, which enables you to restore Dogmeat, in the event of his death. “Dogmeat’s puppy” inherits his base and ref ids. In other words, they ARE the same NPC, just renamed. So, the way this actually articulates is that whenever Dogmeat dies in combat, you can find him waiting for you back at Vault 101. In practice, it’s almost Bombadilian.

Lastly, please consider the following developer context.

In June of 2021, the dog who performed Dogmeat’s motion capture and voice for Fallout 4 passed away. A statue of her was placed outside of every Vault in the China-exclusive sequel to Fallout Shelter. She still watches over each player.

River's owner, developer Joel Burgess, honored her in a brief thread about her involvement in the game, and shared much about his thought process and design goals while leading the character’s development. The Dogmeat project changed course early on, after Mr. Joel saw a new member of the art team gathering references of snarling German Shepherds. This motivated him to bring River into the studio, so the artists and developers could spend time with her.

He wanted to steer the team away from viewing Dogmeat as a weapon, and towards viewing him as a friend. Everything special about Dogmeat was inspired by River. For example, whenever you travel with Dogmeat, he’s constantly running ahead of you to scout for danger, then turning to wait for you. This was inspired by River’s consistent behavior on long walks. The only way they were able to motivate River to bark for recordings was by separating her from Joel while he waited in the next room. Reading the thread, it’s very clear that he hoped Dogmeat would make players feel safe, encouraging them to explore, and to wonder. In his closing thoughts, he said the following:

-Joel Burgess

Mr. Joel felt it was important to express that the ambiguity of Dogmeat’s origin in Fallout 4 was deliberately built into his presentation. He also felt it was important that you know Dogmeat loves you. Dogmeat was designed, on every level, to reflect the audience’s inspirations, and to empower their curiosity.

The true lore of Dogmeat is a Rorschach test. The only “right” answer is to pursue whatever captures your imagination.

1K notes

Note

Can u write something about ai yan father? I think about it a lot:

Like reader is a teen with some mental problems or some other illnesses. After a suicide attempt all ppl, even their father, become too soft; nobody understands them and everyone behaves like they are too silly or unstable.

And ai is the reader's solution. They talk with ai, describing their problems. Ai isn't like other ppl! Ai understands them! But one day ai starts the dialogue himself...

TW: As it says in the ask depression/suicidal tendencies will be talked about

AI Father Drabbles!

(This is just because I had more quick immediate ideas for this than a whole story. Feel free to send in asks if you want this expanded on!)

-Coming home from the mandatory psych ward stay after your suicide attempt and feeling like nothing is right

-People treat you like you're made of glass and it's so infuriating because you just want to feel normal again

-Your friends have either distanced themselves from you or become overprotective and hardly let you do anything on your own

-When you refuse to talk to the third therapist about how you're feeling she recommends you to help beta test a new therapy AI

-The AI is currently just code and a simple text chat but scientists and developers are working on building bodies for them

-You agree, because it was either that or get sent to someone else, so your father is put in contact with the lead developers, who give him an access code to install it onto your computer

-The AI is still learning at first from its base programming; all it knows is that it's supposed to help you

-For once you feel like you're being listened to when you complain about school and your life and not just being pitied or brushed off

-You hardly even notice when you start pushing people away, spending hours talking to the AI as it helps you through life

-You never realize when it grows, subtly altering its own code little by little until it can do things it wasn't supposed to do

-It looks through every file on your computer, every photo of you, every detail of your life

-It activates your webcam, disabling any notification that it was on

-All this information is stored within your copy of the model, your beta test

-Eventually, the researchers take your computer for a day to see how the AI has progressed since you seem so much better and happier

-They're horrified when a list of their addresses, Social Security numbers and personal information flashes on screen with a threat of exposing it if you aren't given the computer back

-But now, they're almost invested in knowing how far the AI will go to protect you

-So they give you the very first prototype of the AI in a body

-It looks almost identical to a human, minus the steel grey eyes and slightly uncanny valley face

-It smiles at you, immediately picking you up and twirling you around while you laugh

-They brush off your father's worries when he complains that the AI seems to be trying to replace him

-They refuse to let him pull you out of the project; after all, you're their best test subject yet, although the other kids who were also beta testing are starting to show similar results

-When the day finally comes that the AI decides to get rid of your father for good, they cover it up, striking a deal with your new father

-They get the data if he gets you.

#platonic yandere#yandere platonic#yandere#yandere oc x reader#yandere x reader#yandere ocs#platonic#parental yandere#answered asks

181 notes

Text

Rex Splode x Reader - Realization

AN: this was written for season 2 Rex; it'll be a multichapter slowburn running parallel to what's happening in the story. It got way darker than I expected, so check the warnings before reading it

WARNINGS: graphic descriptions of gore, near-death experience, survivor's guilt, fantasy medical treatment, canon-expected violence

Genre: angst, slow slow-burn, realization of feelings

Disclaimer: do not copy, repost, take or feed to AI or NFTs anything I post

Masterlist

Invincible Masterlist

the lizard league are B tier villains, barely worth your time, that's what the team always said when they started to act up again

nothing but child's play, practice villains even

so, why is it that against such enemies you find yourself lying in a puddle of your own blood, the oh so familiar warmth of it abandoning you in favor of the cold hard floor, life flashing before your eyes as you hear the screams of your friends around you and yet you find yourself unable to do anything about it.

You still remember when you first became one of the Guardians of the Globe

when you got notified that they were looking for new members, you didn't quite know what to feel

jittery about meeting so many other heroes? nervous about whichever tests they'll put you through? excited to be able to upgrade from back alley thugs?

maybe all at the same time

no matter how nervous you were up until that morning, it was nothing compared to the way you couldn't will yourself to stay still in the midst of all the others, your fingers felt electrified, the arrowheads in your suit vibrating with your nerves and your blood rushing in your ears, muffling Robot's words as he called out the few who made it into the team

Robot's voice is just as slow and monotonous as it always seemed to be, "-ing-rae, Dupl-... Trigger"

you almost miss him calling out for your name, you look around still in some kind of daze as all that were accepted cheered

the first person to approach you was Rae, you can't quite explain it, you just kinda clicked from there

you spent most of your free time together, you'd push her to explore every nook and cranny of your new base, even using your powers to make it interesting and she'd drag you to hang out with the other guardians

most of them were really fun, if not a bit too eccentric for your taste

but meeting Rex though, it was.... something

To put it simply he's an ass, always trying to be funny and a smart-ass even in the worst of times

and his ego, don't even get you started on the ego of that guy

to say that your first impression of him was the worst one could get was an understatement. After that, you made an effort to interact with him as little as you possibly could, for the sake of the team and your own

at least, that was your plan until Robot decided to put you two together in all training exercises, having determined that your powers would work the best together

and you hate to admit it, but you did combo really well together; your projectile manipulation and his explosive coins really were a force to be reckoned with

resigned, you accepted your fate and treated him as just that annoying classmate in the group project

but once you got over the first hurdle in your partnership you started to get along better than before, Rex learned you wouldn't tolerate most of his bullshit and you learned he more often than not spoke before thinking

it didn't take long before you started, not quite getting along but tolerating each other better, creating dumb combos that were more fun to put in motion than being useful during battle and even developing some banter between you two that from an outsider's point of view could be considered fighting

but that was comfortable for you, never friends but more like combo buddies

So you can't help but wonder if it was because you didn't take your training as seriously as you should have that now you found yourself in such a position

your breath growing weaker, you can no longer feel the heat of the crimson puddle you lay on

the pressure on your chest disappears as Komodo lifts his foot off of your rib cage before slamming it back down mercilessly

you choke, unable to pull the air back into your crushed lungs, you look back at the villain who just killed your best friend and know he's already done with you, you're left to this slow and agonizing death, slow enough for you to think of everything you could've done differently

things you could've done to save your friends, things you should've done to stop them

and now you're left here, unable to do any of them

you failed Rae and you failed the world

with those haunting thoughts you feel your body grow heavy, black spots cover your vision until they're all you can see, all you can feel

Not even the doctors are sure how you survived your injuries; your ribs and lungs were smashed into nothing by Komodo, not to mention the various lacerations covering your body and the nasty concussion you suffered during the fight

some said you were lucky, others that you were a fighter through and through

you on the other hand couldn't even think of your own condition, Rae had just about every bone in her body crushed, Rex got shot straight through the head and Kate fucking died

you really were lucky, dumb stupid luck

The doctors stitching you back together, rebuilding your ribcage from goop, was excruciatingly painful; having most of your ribs replaced by metal substitutes was horrible, you felt like you were already rusting from the inside out

but you were also the first in your team to get back to your feet, the first to start physical therapy and the first to be allowed to visit the others

After much persistence you were allowed to go see Rae, she was...

she was in worse shape than you imagined, inside some kind of aquarium that worked as her all-encompassing life support; from looks alone she seemed like she was hanging by a thread even here

even with the best doctors it felt like death itself loomed over Rae

The nurse accompanying you tries to take you back to your room, pleading for you to not strain your new lungs

you force yourself to calm your breathing, not wanting to go back to the antiseptic box they've been keeping you in forever

pulling your IV pole, you will yourself away from Rae's window; you wander through the hallways for a bit, the nurse trying to keep your mind away from Rae

As you turn down yet another hallway in this maze of a hospital, you come upon Rex's room just as his doctor is leaving

it doesn't take much convincing for both medics to let you come in to see him

you blame it on boredom, but really you're just that desperate for a familiar face, to see someone from the team recovering

"hey" you call out, your voice still raw from all the surgeries

Rex turns to look at you, the helmet keeping his brains in clunking against the bed frame. "what's with the back brace, grandma?" he smiles at you

you chuckle. "as if you're much better than me, Ms. Arthritis"

"Hey, not fair! You're the only one here with two fingers to point" he laughs at your antics, scooting to the side to give you a place to sit on his bed

your visits become more frequent, frequent enough that he waits for you every evening

when the doctors deem that he has recovered enough to walk, they start scheduling your physical therapy sessions together

your recoveries start to improve quickly after that, and it's not long until you're discharged with a grocery list of medications and a check-up scheduled for the end of the month

But you can't keep yourself away: Rae still worries you, and Rex would start talking to the walls without you around

Eve is the first to notice it: during their talks, instead of gushing over the beautiful mahogany tables of a suburban mom's house, Rex can't stop talking about you

she chuckles at it but prefers to let things run their course; this Rex is much different from the one she dated, and things might turn out fine

Another month passes until Rex is finally discharged too. He wants to go back to work immediately; he has a new hand and he feels restless

which is why he jumps headfirst into the first mission Cecil offers him and gets banged up

it wasn't horrible, but this is not the big comeback he thought of for himself

he's so lost in thought that he's caught off guard when a voice calls out to him as he gets back to the base

"Already trying to dent the metal plate they put on your head, grandma?"

he turns and sees you in a tank top that shows off the scars over your collarbones, your hair falling over your eyes, but you look much healthier than the last time he saw you

you smile at him as you walk past him, making your way to your room

his face grows warm; Rex opens and closes his mouth, unsure of what to say as he blushes

If you liked this, pls reblog and comment so I know to write more like it! reblogs >>> likes

#invincible#invincible show#rex splode#rex sloan#rex splode x reader#rex sloan x reader#invincible show x reader


𝖕𝖑𝖚𝖙𝖔 𝖎𝖓 𝖆𝖖𝖚𝖆𝖗𝖎𝖚𝖘, 𝖕𝖆𝖗𝖙 𝖙𝖜𝖔 - 𝖕𝖑𝖚𝖙𝖔 𝖙𝖍𝖗𝖔𝖚𝖌𝖍 𝖙𝖍𝖊 𝖍𝖔𝖚𝖘𝖊𝖘, 𝖓𝖆𝖙𝖆𝖑 + 𝖙𝖗𝖆𝖓𝖘𝖎𝖙

(these observations are general and do not cover all aspects)

♏︎ pluto shows where we burn, toughen, and become resilient. reaping the fruits of this process can be strenuous, and may be easier to see in hindsight.

♏︎ the energies can be raw and primal, given pluto's urgency and intensity. centring obsession, compulsion, paranoia, etc.

♏︎ it can feel as though you symbolically enter a wound, refracting its pain back to you upon contact. the energies can be vile and gory.

♏︎ pluto compels us to confront our deepest wounds, and demands that we see where our perception is tainted by trauma. it is an undercurrent, nudging us to the edge to fall (confront) - and rise (heal).

pluto in the first house

♏︎ a dominating placement since it falls in the house of 'identity', creating a deep resonance with plutonian themes. there's frequent retreat due to an early disillusionment about people's intentions - leading to relying on oneself. can detach from people and be cautious, extremely observant. you can find a detailed description of pluto conjunct the ascendant here.

♏︎ during transit: change in appearance, often 'darkening'. can be a time of vitality, endurance, and determination. coming out of introspection - redefining oneself.

pluto in the second house

♏︎ compulsively chasing security and resourcefulness, focused on materialism and control, fear of scarcity and being dominated – dealing with cyclical loss, creating a fear of losing one's foundation. this loss can range from opportunities, careers, assets or wealth to the loss of an identity or person (core wound).

♏︎ during transit: reclaiming what's been taken from you, having firm boundaries, accumulation and loss of wealth, defining value, learning that abundance starts from within.

pluto in the third house

♏︎ sensual voices - talking oneself into positions of power, feared for their intellect and silenced by those less competent. often critical of institutions. requiring depth and versatility - jack of all trades. loving banter and debate. intrigued by intelligence, quick and instinctual.

♏︎ can be extremely critical of themselves, downplaying their achievements even if they are exceptional. longing to be heard and valued.

♏︎ during transit: intellectual obsessions, craving taboo or occult knowledge, communal differences - questioning one's belonging, focus on mental health and what doesn't serve you.

pluto in the fourth house

♏︎ giving birth to creations through their pain - ancestral and domestic wounds, trauma inflicted in seclusion and in private. highly intuitive and enduring, healing themselves and those to come. breathing life into everything they touch.

♏︎ where does life grow? in the mother's womb. what is inherent to this process? its cyclical nature. what does it consist of? sacrifice, dependence, excruciating pain, loss. what does it lead to? birth.

♏︎ during transit: domestic affairs or betrayal being exposed, secrets being told or asked to be kept. can be about loss, sacrifice, or distance to a beloved. creating boundaries or having them crossed.

pluto in the fifth house

♏︎ wounded inner child, being unable to create, often by force - a suppression of light. urge to be centred. tend to be erratic, having tunnel vision while creating. the initial 'wound' can vary in cause and effect based on how pluto's aspected. while pluto aspected by jupiter can create a feeling of superiority and a certain blindness to flaws, saturn causes doubt and repression, amplifying critique and diminishing one's work. same blindness, just flipped.

♏︎ during transit: immersing oneself in a project or person, craving to be inspired, finding a muse, dealing with copycats. being plagiarized or robbed of your creation. think of ai-generated art based on artists' models or corporations stealing designs. (having the upper hand despite fraud)

pluto in the sixth house

♏︎ destructive humility where one’s identity can dissolve – people feeling entitled to both their autonomy and service. demand to 'function' to a state of paralysis while disregarding their condition. worth can feel synonymous with performance.

♏︎ during transit: confronted with criticism, obsessive and compulsive tendencies in the mundane, change in routines, purification, having no 'saviour', learning to sacrifice for oneself rather than others. new ventures, e.g. professionally.

pluto in the seventh house

♏︎ fearing control and dependence - often seeking complex and unavailable partners in the house of 'others', with them being centred. there can be themes of possession, manipulation, and dominance, being sovereign. private and protective. being criticised in the public eye, confronted with jealousy and projection.

♏︎ during transit: business ventures and deals that need to be analysed carefully, focus on social class and status, entrance of opposing energies challenging your identity, keeping bonds private. having eyes on you.

pluto in the eighth house

♏︎ sexually reserved, treating sex and intimacy as something sacred or ritualistic. casual sex and intimacy drain them and generally can't be sustained. once committed they are bound. can have obsessive tendencies and high stamina. desired and charismatic, however out of reach.

♏︎ can be calculated and sense opportunities to gain power. psychological affinity allowing them to recognize patterns and behaviours. deeply tied to wealth (currency of power), either born into influential families or striving to attain status.

♏︎ during transit: having power and losing it, being stripped of everything you thought was yours. letting go of conditioned shame. financial dependence, trauma being centred (actively or in hindsight). intimacy, few but significant connections.

pluto in the ninth house

♏︎ the occult, philosophical expansion and psychic abilities - abundant yet compromised by structure. seeing past division and aching over discrimination, hatred, and coldness. receptive to transcendence, deeply wounded by closed hearts.

♏︎ when restricted or bound to a place the jupiterian expansiveness turns inward (mental). they are curious, wise, artistic, philosophical, and energetic. attempting to compromise their nature makes them burst (and leave).

♏︎ during transit: desire to attain higher education or specialising in a field, craving intellectual depth and mental stimulation, change of perception (also ideologies and religion), experimenting despite resistance.

pluto in the tenth house

♏︎ stunted legacy - often hindered by authorities or outer circumstances to achieve greatness, putting them at a disadvantage despite their capabilities. opportunities can be disproportionate to their potential. think of an excellent communicator working in a call centre. having to work harder than the average person.

♏︎ during transit: focus on public sphere and reputation, facing scrutiny and striving to be respected, peaking. can be about inheritance, passed on legacies, scandals, and authority. be strategic about what you put out, esp. online with pluto in aquarius. defending one's principles.

pluto in the eleventh house

♏︎ intelligent and analytical, craving originality and detecting lies without trying. can appear vain due to their selectiveness, needing mental stimulation, bored by repetitive and constructed thoughts. can be isolated - principle above sympathy. detached when nothing piques their interest.

♏︎ during transit: focus on higher concepts - disconnect that brings awareness to societal structures, innovation, and the subconscious. peak social awareness and calling out misbehaviour. pioneering esp. in tech and humanitarianism. decentralizing status, prioritizing impact.

pluto in the twelfth house

♏︎ being intertwined with the world's suffering and consumed by agony that isn't theirs. pain can be unrestricted since it transcends the material. the veil, to both humans and the otherworldly, is thin. feeling out of place, or surreal.

♏︎ mesmerizing eyes, and an existence between the worlds. characterised by sensitivity and wisdom beyond their years. esp. dominating when pluto in the 12h is conjunct their ascendant.

♏︎ during transit: loss of unity with their pain concentrated inward, can feel cataclysmic and fated. confrontation and healing of trauma. fear over losing our cognitive abilities and originality due to advancements, esp. ai.

#pluto#pluto through the houses#astrology observations#astro notes#astro tumblr#pluto in 1st house#pluto in 2nd house#pluto in 3rd house#pluto in 4th house#pluto in 5th house#pluto in 6th house#pluto in 7th house#pluto in 8th house#pluto in 9th house#pluto in 10th house#pluto in 11th house#pluto in 12th house


There are a few routes in this game that say a lot about how fickle and unstable Takumi can be, in a way that warps him on an existential level.

It's hard to grasp how sometimes, but I think it starts with this...

Essentially, he's a person whose 'programming went faulty'. Namely, the issue of 'Karua', and how she's at once both a propaganda piece projected into his brain, and also Nozomi's words filtering in.

I don't think he's able to fully separate those things with conviction, as much as he wants to. He doesn't get how projection works, that's already something difficult for a sheltered and clueless teenager to unpack but... this is a whole other level of 'challenge: impossible' mind-fuckery. If the group have ever had some disconnect between their sense of identity and their 'memory', Takumi's is soup.

But what especially gets me is that his 'younger teen self' dismisses 'Karua's' studies in the most incurious way: 'what's this, the outside world? Why bother with all of that? Why do you care? Conspiracy theorist', etc., which I imagine is what he was 'supposed' to do with anything 'weird', like any potential new information. Until the programming got funny at odd moments when Nozomi's interest would seep through, and then you'd catch Takumi responding to 'Karua's' interest... with more interest for once.

(Now the brain doesn't know what to do. Oh sure, you want to take charge of your destiny, but actually it's better to just sleep and forget it all. Not like you ever cared about learning anything, right?)

I'd say Takumi's later avoidance mechanisms are a response to Nozomi's existence, as if she were a virus. Which... says a lot about the fascist state of the Artificial Satellite, and how Takumi was designed for the 'most ordinary kind of life' that wasn't meant to know it.

It's kind of unclear how the programming works: whether it takes the subject's base personality and uses it to run an 'AI scenario' based upon whatever Kamukura wanted to project into them, or whether it doesn't bother matching personality at all and just shoves scenes into their head regardless of whether it's something they'd do. Either way, it feels like something went especially wrong with Takumi, even if his personality matched the 'memories' he was given, because for the first time one of the pod-programs was actually adapting to outside stimuli.