#snowflake native application

Why Enterprises Choose Snowflake Native Applications for Data Innovation

Explore the advantages of deploying Snowflake native applications, from simplified procurement to enhanced data security and seamless integration with enterprise systems.

Empowering Digital Futures with Intertec Systems

In today’s fast-paced digital landscape, organizations across industries are embracing transformation to stay competitive. Intertec Systems has emerged as a trusted partner in this journey—driving innovation across cybersecurity, cloud, digital transformation, managed services, and more.

🌐 Who Are Intertec Systems?

Founded in 1991, Intertec Systems is a global IT services specialist headquartered in Dubai, with delivery centers across the Middle East, India, Europe, and Africa. With over three decades of expertise and more than 50 technology alliances, the company enables clients like government entities, financial institutions, healthcare providers, and enterprises to deliver business‑enabled technology solutions.

What Makes Intertec Stand Out

1. Comprehensive Digital Transformation

Whether it’s modernizing legacy systems or delivering cloud-native solutions, Intertec’s deep expertise spans application services, ERP/CRM, managed services, IoT, data analytics, and AI. Their work includes projects like the DHA (Dubai Health Authority) digital transformation and empowering RAKWA with enterprise asset management systems.

2. Strong Cybersecurity Capabilities

Intertec offers a full spectrum of cybersecurity services: threat detection & response, network and cloud security, managed security, SOC operations, and data protection. Available 24×7, their Security Operations Center (SOC) ensures real-time threat mitigation, prevention, detection, and remediation tailored for hybrid environments.

They’ve recently rolled out an advanced SOC for a leading UAE luxury retailer, securing critical customer data and reinforcing regulatory compliance. This initiative delivered stronger oversight, accountability, and long-term security strategy implementation.

3. Strategic Technology Partnerships

As a tech-forward player, Intertec maintains alliances with industry leaders like Cloudflare, Snowflake, Microsoft Dynamics 365, Oracle Cloud, and Fortinet. These partnerships allow them to offer cutting-edge, scalable solutions across industries, and empower clients with certified expertise in major cloud platforms and enterprise tools.

4. Regional & Global Reach

With offices in Dubai, Abu Dhabi, Riyadh, Muscat, Manama, Mumbai, Bangalore, and the UK, Intertec blends global vision with local delivery excellence. They leverage India's deep IT talent pool and regional presence across GCC markets—including support for Vision 2030 initiatives in Saudi Arabia.

5. Proven Delivery Excellence

With more than 500 technology certifications, a 90%+ client satisfaction rate, and industry recognition as a Best Employer Brand, Intertec has built a strong reputation. Their structured, ISO 9001-aligned operating model ensures high-quality delivery and consistent client value.

Why Partner with Intertec Systems?

Balance of Innovation and Trust

Their recently announced partnership with Saal.ai underscores their move into Big Data and AI‑driven solutions, particularly in the insurance and public sectors. Intertec’s thought leadership at events such as GovAI Transformation 2025 and Banking 360 reinforces its strategic positioning.

Security That Never Sleeps

Clients across industries that handle sensitive data depend on Intertec’s managed security services and proactive threat detection capabilities to protect digital assets in real time, even as threats evolve rapidly.

Tailored Solutions, Local Presence

From cloud migrations to SOC setup and application development, Intertec tailors solutions to fit industry needs. With delivery centers and local offices across multiple countries, they provide consistent, on-the-ground support.

Future‑Proofing Businesses

Intertec’s adoption of AI, automation platforms, and digital tools helps organizations become future-ready, agile, and scalable—whether they are in healthcare, finance, government, or enterprise verticals.

Client Spotlight: Real-World Transformations

Dubai Health Authority (DHA): Leveraged Intertec’s expertise to build a digital infrastructure that aligns with Dubai’s health innovation goals—streamlining operations, enabling mobile workflows, and supporting scalable digital strategies.

Ras Al Khaimah Wastewater Agency (RAKWA): Implemented an enterprise asset management system to digitize field service, improve employee productivity, and align with the agency’s digital vision.

Luxury Retailer in UAE: Deployed an advanced SOC to strengthen cybersecurity defenses, align with regulation, and establish a strategic roadmap for adaptive security operations.

Call to Action: Take the Next Step

To explore how Intertec Systems can transform your business with secure, intelligent, and future-ready IT capabilities, visit the Intertec Systems website.

Whether you're looking to:

Migrate legacy systems to the cloud

Modernize cybersecurity and SOC infrastructure

Implement ERP, CRM, or analytics solutions

Explore enterprise automation, AI, or digital government technologies

— the team at Intertec is ready to tailor a solution for your needs.

Final Thoughts

With a proven track record spanning 30+ years, a strong ecosystem of technology partnerships, global-certified delivery, and a commitment to innovation and client success, Intertec Systems is the strategic partner businesses need in today’s digital-first world.

Unlock transformative value with Intertec Systems—today’s partner for tomorrow’s digital success.

Unlocking Business Intelligence with Advanced Data Solutions 📊🤖

In a world where data is the new currency, businesses that fail to utilize it risk falling behind. From understanding customer behavior to predicting market trends, advanced data solutions are transforming how companies operate, innovate, and grow. By leveraging AI, ML, and big data technologies, organizations can now make faster, smarter, and more strategic decisions across industries.

At smartData Enterprises, we build and deploy intelligent data solutions that drive real business outcomes. Whether you’re a healthcare startup, logistics firm, fintech enterprise, or retail brand, our customized AI-powered platforms are designed to elevate your decision-making, efficiency, and competitive edge.

🧠 What Are Advanced Data Solutions?

Advanced data solutions combine technologies like artificial intelligence (AI), machine learning (ML), natural language processing (NLP), and big data analytics to extract deep insights from raw and structured data.

They include:

📊 Predictive & prescriptive analytics

🧠 Machine learning model development

🔍 Natural language processing (NLP)

📈 Business intelligence dashboards

🔄 Data warehousing & ETL pipelines

☁️ Cloud-based data lakes & real-time analytics

These solutions enable companies to go beyond basic reporting — allowing them to anticipate customer needs, streamline operations, and uncover hidden growth opportunities.

🚀 Why Advanced Data Solutions Are a Business Game-Changer

In the digital era, data isn’t just information — it’s a strategic asset. Advanced data solutions help businesses:

🔎 Detect patterns and trends in real time

💡 Make data-driven decisions faster

🧾 Reduce costs through automation and optimization

🎯 Personalize user experiences at scale

📈 Predict demand, risks, and behaviors

🛡️ Improve compliance, security, and data governance

Whether it’s fraud detection in finance or AI-assisted diagnostics in healthcare, the potential of smart data is limitless.

💼 smartData’s Capabilities in Advanced Data, AI & ML

With over two decades of experience in software and AI engineering, smartData has delivered hundreds of AI-powered applications and data science solutions to global clients.

Here’s how we help:

✅ AI & ML Model Development

Our experts build, train, and deploy machine learning models using Python, R, TensorFlow, PyTorch, and cloud-native ML services (AWS SageMaker, Azure ML, Google Vertex AI). We specialize in:

Classification, regression, clustering

Image, speech, and text recognition

Recommender systems

Demand forecasting and anomaly detection

✅ Data Engineering & ETL Pipelines

We create custom ETL (Extract, Transform, Load) pipelines and data warehouses to handle massive data volumes with:

Apache Spark, Kafka, and Hadoop

SQL/NoSQL databases

Azure Synapse, Snowflake, Redshift

This ensures clean, secure, and high-quality data for real-time analytics and AI models.

✅ NLP & Intelligent Automation

We integrate NLP and language models to automate:

Chatbots and virtual assistants

Text summarization and sentiment analysis

Email classification and ticket triaging

Medical records interpretation and auto-coding

✅ Business Intelligence & Dashboards

We build intuitive, customizable dashboards using Power BI, Tableau, and custom tools to help businesses:

Track KPIs in real-time

Visualize multi-source data

Drill down into actionable insights

🔒 Security, Scalability & Compliance

With growing regulatory oversight, smartData ensures that your data systems are:

🔐 End-to-end encrypted

⚖️ GDPR and HIPAA compliant

🧾 Auditable with detailed logs

🌐 Cloud-native for scalability and uptime

We follow best practices in data governance, model explainability, and ethical AI development.

🌍 Serving Global Industries with AI-Powered Data Solutions

Our advanced data platforms are actively used across industries:

🏥 Healthcare: AI for diagnostics, patient risk scoring, remote monitoring

🚚 Logistics: Predictive route optimization, fleet analytics

🏦 Finance: Risk assessment, fraud detection, portfolio analytics

🛒 Retail: Dynamic pricing, customer segmentation, demand forecasting

⚙️ Manufacturing: Predictive maintenance, quality assurance

Explore our custom healthcare AI solutions for more on health data use cases.

📈 Real Business Impact

Our clients have achieved:

🚀 40% reduction in manual decision-making time

💰 30% increase in revenue using demand forecasting tools

📉 25% operational cost savings with AI-led automation

📊 Enhanced visibility into cross-functional KPIs in real time

We don’t just build dashboards — we deliver end-to-end intelligence platforms that scale with your business.

🤝 Why Choose smartData?

25+ years in software and AI engineering

Global clients across healthcare, fintech, logistics & more

Full-stack data science, AI/ML, and cloud DevOps expertise

Agile teams, transparent process, and long-term support

With smartData, you don’t just get developers — you get a strategic technology partner.

📩 Ready to Turn Data Into Business Power?

If you're ready to harness AI and big data to elevate your business, smartData can help. Whether it's building a custom model, setting up an analytics dashboard, or deploying an AI-powered application — we’ve got the expertise to lead the way.

👉 Learn more: https://www.smartdatainc.com/advanced-data-ai-and-ml/

📞 Let’s connect and build your data-driven future.

#advanceddatasolutions #smartData #AIdevelopment #MLsolutions #bigdataanalytics #datadrivenbusiness #enterpriseAI #customdatasolutions #predictiveanalytics #datascience

Snowflake Implementation Checklist: A Comprehensive Guide for Seamless Cloud Data Migration

In today's data-driven world, businesses are increasingly migrating their data platforms to the cloud. Snowflake, a cloud-native data warehouse, has become a popular choice thanks to its scalability, performance, and ease of use. However, a successful Snowflake implementation demands careful planning and execution. Without a structured approach, organizations risk data loss, performance issues, and costly delays.

This comprehensive Snowflake implementation checklist provides a step-by-step roadmap to ensure your migration or setup is seamless, efficient, and aligned with business goals.

Why Choose Snowflake?

Before diving into the checklist, it's essential to understand why organizations are adopting Snowflake:

Scalability: Automatically scales compute resources based on demand.

Performance: Handles large volumes of data with minimal latency.

Cost-efficiency: Pay only for what you use with separate storage and compute billing.

Security: Offers built-in data protection, encryption, and compliance certifications.

Interoperability: Supports seamless integration with tools like Tableau, Power BI, and more.

1. Define Business Objectives and Scope

Before implementation begins, align Snowflake usage with your organization’s business objectives. Understanding the purpose of your migration or new setup will inform key decisions down the line.

Key Actions:

Identify business problems Snowflake is intended to solve.

Define key performance indicators (KPIs).

Determine the initial data domains or departments involved.

Establish success criteria for implementation.

Pro Tip: Hold a kickoff workshop with stakeholders to align expectations and outcomes.

2. Assess Current Infrastructure

Evaluate your existing data architecture to understand what will be migrated, transformed, or deprecated.

Questions to Ask:

What are the current data sources (ERP, CRM, flat files, etc.)?

How is data stored and queried today?

Are there performance bottlenecks or inefficiencies?

What ETL or data integration tools are currently in use?

Deliverables:

Inventory of current data systems.

Data flow diagrams.

Gap analysis for transformation needs.

3. Build a Skilled Implementation Team

Snowflake implementation requires cross-functional expertise. Assemble a project team with clearly defined roles.

Suggested Roles:

Project Manager: Oversees the timeline, budget, and deliverables.

Data Architect: Designs the Snowflake schema and structure.

Data Engineers: Handle data ingestion, pipelines, and transformation.

Security Analyst: Ensures data compliance and security protocols.

BI Analyst: Sets up dashboards and reports post-implementation.

4. Design Your Snowflake Architecture

Proper architecture ensures your Snowflake environment is scalable and secure from day one.

Key Considerations:

Account and Region Selection: Choose the right Snowflake region for data residency and latency needs.

Warehouse Sizing and Scaling: Decide on virtual warehouse sizes based on expected workloads.

Database Design: Create normalized or denormalized schema structures that reflect your business needs.

Data Partitioning and Clustering: Plan clustering keys to optimize query performance.
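The sizing and clustering decisions above can be captured as reviewable SQL before anything is run. The following is a minimal sketch, assuming you generate the statements in Python and execute them with whatever client you already use. The warehouse name, table name, and key columns are hypothetical placeholders, and the size list is only a subset of what Snowflake accepts.

```python
from dataclasses import dataclass

# Subset of the values Snowflake accepts for WAREHOUSE_SIZE.
VALID_SIZES = {"XSMALL", "SMALL", "MEDIUM", "LARGE", "XLARGE"}


@dataclass
class WarehouseSpec:
    name: str                 # hypothetical warehouse name
    size: str = "XSMALL"      # start small, resize after measuring workloads
    auto_suspend_s: int = 60  # idle seconds before the warehouse suspends


def create_warehouse_sql(spec: WarehouseSpec) -> str:
    """Build a CREATE WAREHOUSE statement that suspends when idle."""
    size = spec.size.upper()
    if size not in VALID_SIZES:
        raise ValueError(f"unsupported warehouse size: {spec.size}")
    return (
        f"CREATE WAREHOUSE IF NOT EXISTS {spec.name} "
        f"WITH WAREHOUSE_SIZE = '{size}' "
        f"AUTO_SUSPEND = {spec.auto_suspend_s} "
        "AUTO_RESUME = TRUE "
        "INITIALLY_SUSPENDED = TRUE"
    )


def cluster_by_sql(table: str, keys: list[str]) -> str:
    """Build an ALTER TABLE ... CLUSTER BY statement for a large table."""
    if not keys:
        raise ValueError("at least one clustering key is required")
    return f"ALTER TABLE {table} CLUSTER BY ({', '.join(keys)})"


print(create_warehouse_sql(WarehouseSpec("REPORTING_WH", "small")))
print(cluster_by_sql("SALES.PUBLIC.ORDERS", ["ORDER_DATE", "REGION"]))
```

Generating the statements as strings keeps them easy to review and version alongside the architecture document. Clustering keys are usually worth defining only on very large tables with selective filters, so treat the second helper as optional.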

5. Establish Security and Access Control

Snowflake offers robust security capabilities, but it's up to your team to implement them correctly.

Checklist:

Set up role-based access control (RBAC).

Configure multi-factor authentication (MFA).

Define network policies and secure connections (IP allow lists, VPNs).

Set data encryption policies at rest and in transit.

Align with compliance standards like HIPAA, GDPR, or SOC 2 if applicable.
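As an illustration of the RBAC and network-policy items in this checklist, here is a small sketch that builds the statements for a read-only role and an IP allow list. The role, database, schema, warehouse, user, and policy names are all hypothetical, and the grants shown are one possible minimal set rather than a recommended policy.

```python
def read_only_role_sql(
    role: str, database: str, schema: str, warehouse: str, user: str
) -> list[str]:
    """Return statements that create a read-only role and assign it to a user."""
    target = f"{database}.{schema}"
    return [
        f"CREATE ROLE IF NOT EXISTS {role}",
        # The role needs a warehouse to run queries at all.
        f"GRANT USAGE ON WAREHOUSE {warehouse} TO ROLE {role}",
        f"GRANT USAGE ON DATABASE {database} TO ROLE {role}",
        f"GRANT USAGE ON SCHEMA {target} TO ROLE {role}",
        f"GRANT SELECT ON ALL TABLES IN SCHEMA {target} TO ROLE {role}",
        f"GRANT ROLE {role} TO USER {user}",
    ]


def network_policy_sql(name: str, allowed_cidrs: list[str]) -> str:
    """Build a CREATE NETWORK POLICY statement with an IP allow list."""
    if not allowed_cidrs:
        raise ValueError("provide at least one CIDR range")
    quoted = ", ".join(f"'{cidr}'" for cidr in allowed_cidrs)
    return f"CREATE NETWORK POLICY {name} ALLOWED_IP_LIST = ({quoted})"


for stmt in read_only_role_sql("ANALYST_RO", "SALES", "PUBLIC", "REPORTING_WH", "JDOE"):
    print(stmt + ";")
print(network_policy_sql("OFFICE_ONLY", ["203.0.113.0/24"]) + ";")
```

Granting privileges to roles and roles to users, never privileges directly to users, keeps access reviews manageable as the number of users grows.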

6. Plan for Data Migration

Migrating data to Snowflake is one of the most crucial steps. Plan each phase carefully to avoid downtime or data loss.

Key Steps:

Select a data migration tool (e.g., Fivetran, Matillion, Talend).

Categorize data into mission-critical, archival, and reference data.

Define a migration timeline with test runs and go-live phases.

Validate source-to-target mapping and data quality.

Plan incremental loads or real-time sync for frequently updated data.

Best Practices:

Start with a pilot migration to test pipelines and performance.

Use staging tables to validate data integrity before going live.

7. Implement Data Ingestion Pipelines

Snowflake supports a variety of data ingestion options, including batch and streaming.

Options to Consider:

Snowpipe for continuous, near-real-time ingestion.

Integration tools like Apache NiFi or Informatica, with dbt for transformations inside Snowflake.

Manual loading for historical batch uploads using COPY INTO.

Use of external stages (AWS S3, Azure Blob, GCP Storage).
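To make the batch and continuous options concrete, the sketch below builds a COPY INTO statement for a one-off load and wraps the same load in a pipe for continuous ingestion. It assumes CSV files in an existing external stage. The table, stage, and pipe names are hypothetical, and the error-handling choices are illustrative defaults rather than recommendations.

```python
def copy_into_sql(
    table: str,
    stage_path: str,
    skip_header: int = 1,
    on_error: str = "ABORT_STATEMENT",
) -> str:
    """Build a COPY INTO statement that loads CSV files from a stage."""
    return (
        f"COPY INTO {table} FROM @{stage_path} "
        f"FILE_FORMAT = (TYPE = 'CSV' SKIP_HEADER = {skip_header}) "
        f"ON_ERROR = '{on_error}'"
    )


def create_pipe_sql(pipe: str, table: str, stage_path: str) -> str:
    """Wrap a COPY INTO in a pipe so newly staged files load continuously."""
    return (
        f"CREATE PIPE IF NOT EXISTS {pipe} AUTO_INGEST = TRUE AS "
        + copy_into_sql(table, stage_path, on_error="SKIP_FILE")
    )


# One-off historical load from a dated folder in the stage.
print(copy_into_sql("RAW.ORDERS", "ORDERS_STAGE/2024/"))
# Continuous load of anything that lands in the stage afterwards.
print(create_pipe_sql("RAW.ORDERS_PIPE", "RAW.ORDERS", "ORDERS_STAGE"))
```

The batch load aborts on the first bad record so problems surface during migration, while the pipe skips a bad file and keeps ingesting. Adjust both to match your own tolerance for partial loads.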

8. Set Up Monitoring and Performance Tuning

Once data is live in Snowflake, proactive monitoring ensures performance stays optimal.

Tasks:

Enable query profiling and review query history regularly.

Monitor warehouse usage and scale compute accordingly.

Set up credit-usage alerts and suspension thresholds using Snowflake’s resource monitors.

Identify slow queries and optimize with clustering or materialized views.

Metrics to Track:

Query execution times.

Data storage growth.

Compute cost by warehouse.

User activity logs.
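A rough sketch of how the metrics above might be summarized once query history has been exported. The row structure (query_id, warehouse, elapsed_s) is a hypothetical export format, not a Snowflake schema, and elapsed time is used only as a simple proxy for compute cost.

```python
from collections import defaultdict


def summarize_queries(rows: list[dict], slow_after_s: float = 30.0) -> dict:
    """Flag slow queries and total elapsed seconds per warehouse."""
    slow = [r["query_id"] for r in rows if r["elapsed_s"] > slow_after_s]
    seconds_by_wh: dict[str, float] = defaultdict(float)
    for r in rows:
        seconds_by_wh[r["warehouse"]] += r["elapsed_s"]
    return {
        "slow_query_ids": slow,
        "seconds_by_warehouse": dict(seconds_by_wh),
    }


# Hypothetical sample of exported query history.
rows = [
    {"query_id": "q1", "warehouse": "ETL_WH", "elapsed_s": 4.0},
    {"query_id": "q2", "warehouse": "ETL_WH", "elapsed_s": 95.5},
    {"query_id": "q3", "warehouse": "BI_WH", "elapsed_s": 12.0},
]
summary = summarize_queries(rows)
print(summary)
```

Queries that show up repeatedly in the slow list are the natural candidates for clustering or materialized views.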

9. Integrate with BI and Analytics Tools

A Snowflake implementation is only valuable if business users can derive insights from it.

Common Integrations:

Tableau

Power BI

Looker

Sigma Computing

Excel or Google Sheets

Actionable Tips:

Use service accounts for dashboard tools to manage load separately.

Define semantic layers where needed to simplify user access.

10. Test, Validate, and Optimize

Testing is not just a one-time event but a continuous process during and after implementation.

Types of Testing:

Unit Testing for data transformation logic.

Integration Testing for end-to-end pipeline validation.

Performance Testing under load.

User Acceptance Testing (UAT) for final sign-off.

Checklist:

Validate data consistency between source and Snowflake.

Perform reconciliation on row counts and key metrics.

Get feedback from business users on reports and dashboards.
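The reconciliation step can be automated with a simple comparison of per-table summaries. This is a minimal sketch, assuming you have already collected a row count and one summed metric per table from both the source system and Snowflake. The dictionary layout and field names are hypothetical.

```python
import math


def reconcile(
    source: dict[str, dict], target: dict[str, dict], rel_tol: float = 1e-9
) -> list[str]:
    """Compare per-table row counts and a summed metric; return mismatches."""
    issues = []
    for table, src in source.items():
        tgt = target.get(table)
        if tgt is None:
            issues.append(f"{table}: missing in target")
            continue
        if src["row_count"] != tgt["row_count"]:
            issues.append(
                f"{table}: row count {src['row_count']} != {tgt['row_count']}"
            )
        # Compare floats with a tolerance to absorb rounding differences.
        if not math.isclose(src["metric_sum"], tgt["metric_sum"], rel_tol=rel_tol):
            issues.append(
                f"{table}: metric sum {src['metric_sum']} != {tgt['metric_sum']}"
            )
    return issues


source = {
    "orders": {"row_count": 1000, "metric_sum": 52340.75},
    "customers": {"row_count": 120, "metric_sum": 0.0},
}
target = {"orders": {"row_count": 998, "metric_sum": 52340.75}}
for issue in reconcile(source, target):
    print(issue)
```

An empty result means the summaries match. It does not prove row-level equality, so pair it with spot checks on key records.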

11. Develop Governance and Documentation

Clear documentation and governance will help scale your Snowflake usage in the long run.

What to Document:

Data dictionaries and metadata.

ETL/ELT pipeline workflows.

Access control policies.

Cost management guidelines.

Backup and recovery strategies.

Don’t Skip: Assign data stewards or governance leads for each domain.

12. Go-Live and Post-Implementation Support

Your Snowflake implementation checklist isn’t complete without a smooth go-live and support plan.

Go-Live Activities:

Freeze source changes before final cutover.

Conduct a final round of validation.

Monitor performance closely in the first 24–48 hours.

Communicate availability and changes to end users.

Post-Go-Live Support:

Establish a support desk or Slack channel for user issues.

Schedule weekly or bi-weekly performance reviews.

Create a roadmap for future enhancements or additional migrations.

Final Words: A Strategic Approach Wins

Implementing Snowflake is more than just moving data—it's about transforming how your organization manages, analyzes, and scales its information. By following this structured Snowflake implementation checklist, you'll ensure that every aspect—from design to data migration to user onboarding—is handled with care, foresight, and efficiency.

When done right, Snowflake becomes a powerful asset that grows with your organization, supporting business intelligence, AI/ML initiatives, and scalable data operations for years to come.

Top Benefits of Informatica Intelligent Cloud Services: Empowering Scalable and Agile Enterprises

Introduction

In an increasingly digital and data-driven landscape, enterprises are under pressure to unify and manage vast volumes of information across multiple platforms, whether cloud, on-premises, or hybrid environments. Traditional integration methods often result in data silos, delayed decision-making, and fragmented operations. This is where Informatica Intelligent Cloud Services (IICS) makes a transformative impact, offering a unified, AI-powered cloud integration platform to simplify data management, streamline operations, and drive innovation.

IICS delivers end-to-end cloud data integration, application integration, API management, data quality, and governance—empowering enterprises to make real-time, insight-driven decisions.

What Are Informatica Intelligent Cloud Services?

Informatica Intelligent Cloud Services is a modern, cloud-native platform designed to manage enterprise data integration and application workflows in a secure, scalable, and automated way. Built on a microservices architecture and powered by CLAIRE®—Informatica’s AI engine—this solution supports the full spectrum of data and application integration across multi-cloud, hybrid, and on-prem environments.

Key capabilities of IICS include:

Cloud data integration (ETL/ELT pipelines)

Application and B2B integration

API and microservices management

Data quality and governance

AI/ML-powered data discovery and automation

Why Informatica Cloud Integration Matters

With growing digital complexity and decentralized IT landscapes, enterprises face challenges in aligning data access, security, and agility. IICS solves this by offering:

Seamless cloud-to-cloud and cloud-to-on-prem integration

AI-assisted metadata management and discovery

Real-time analytics capabilities

Unified governance across the data lifecycle

This enables businesses to streamline workflows, improve decision accuracy, and scale confidently in dynamic markets.

Core Capabilities of Informatica Intelligent Cloud Services

1. Cloud-Native ETL and ELT Pipelines

IICS offers powerful data integration capabilities using drag-and-drop visual designers. These pipelines are optimized for scalability and performance across cloud platforms like AWS, Azure, GCP, and Snowflake.

Benefit: Fast, low-code pipeline development with high scalability.

2. Real-Time Application and Data Synchronization

Integrate applications and synchronize data across SaaS tools (like Salesforce, SAP, Workday) and internal systems.

Why it matters: Keeps enterprise systems aligned and always up to date.

3. AI-Driven Metadata and Automation

CLAIRE® automatically detects data patterns, lineage, and relationships, powering predictive mapping, impact analysis, and error handling.

Pro Tip: Reduce manual tasks and accelerate transformation cycles.

4. Unified Data Governance and Quality

IICS offers built-in data quality profiling, cleansing, and monitoring tools to maintain data accuracy and compliance across platforms.

Outcome: Strengthened data trust and regulatory alignment (e.g., GDPR, HIPAA).

5. API and Microservices Integration

Design, deploy, and manage APIs via Informatica’s API Manager and Connectors.

Result: Easily extend data services across ecosystems and enable partner integrations.

6. Hybrid and Multi-Cloud Compatibility

Support integration between on-prem, private cloud, and public cloud platforms.

Why it helps: Ensures architectural flexibility and vendor-neutral data strategies.

Real-World Use Cases of IICS

🔹 Retail: Synchronize POS, e-commerce, and customer engagement data to personalize experiences and boost revenue.

🔹 Healthcare: Unify patient data from EMRs, labs, and claims systems to improve diagnostics and reporting accuracy.

🔹 Banking & Finance: Consolidate customer transactions and risk analytics to detect fraud and ensure compliance.

🔹 Manufacturing: Integrate supply chain, ERP, and IoT sensor data to reduce downtime and increase production efficiency.

Benefits at a Glance

Unified data access across business functions

Real-time data sharing and reporting

Enhanced operational agility and innovation

Reduced integration costs and complexity

Automated data governance and quality assurance

Cloud-first architecture built for scalability and resilience

Best Practices for Maximizing IICS

✅ Standardize metadata formats and data definitions

✅ Automate workflows using AI recommendations

✅ Monitor integrations and optimize pipeline performance

✅ Govern access and ensure compliance across environments

✅ Empower business users with self-service data capabilities

Conclusion

Informatica Intelligent Cloud Services is more than a data integration tool—it’s a strategic enabler of business agility and innovation. By bringing together disconnected systems, automating workflows, and applying AI for smarter decisions, IICS unlocks the full potential of enterprise data. Whether you're modernizing legacy systems, building real-time analytics, or streamlining operations, Prophecy Technologies helps you leverage IICS to transform your cloud strategy from reactive to intelligent.

Let’s build a smarter, integrated, and future-ready enterprise—together.

The future of data is being built now, and we’re showing up to shape it. We’re excited to share that Anis Dave, EVP of Product Engineering at Algoworks, will be attending Snowflake Summit 2025. This event brings together the sharpest minds in Data, AI, and Cloud to explore what’s next in enterprise engineering. From cloud-native architectures to scalable applications and intelligent data ecosystems, it’s where innovation meets execution. If you’re attending, let’s connect and talk about building smarter, faster, and more resilient digital products that deliver business results. 📍 San Francisco 📅 June 2–5, 2025

6 Ways Generative AI is Transforming Data Analytics

Generative AI is changing how companies tap into data, offering new ways to automate workflows, improve analytics, and make better decisions.

If you're unsure how to apply it effectively in your work, this blog walks you through six practical use cases.

You'll also find essential factors to keep in mind, best practices, and an overview of tools and frameworks to help you implement Generative AI successfully.

Here's what we'll cover:

Code Generation – How AI speeds up software development

Chatbots & Virtual Agents – Enhancing customer and internal interactions

Data Governance – Automating documentation and improving trust

AI-Generated Visualizations – Creating reports and dashboards faster

Automating Workflows – Using AI to simplify business processes

AI Agents – Handling complex analytical tasks

We'll also discuss common challenges with Generative AI, strategies to mitigate risks, and how to choose the right tools for your needs.

A visual infographic titled "AI's Role in the Data Analytics Lifecycle" details six areas where AI can help: Data Collection and Integration, Governance and Quality, Processing and Transformation, Insights Exploration, Visualization and Reporting, and Workflow Automation.

Each section highlights what AI can do, from anomaly detection, automated data mapping, and natural language queries to AI-driven dashboards and workflow optimization.

How Generative AI Enhances Data Analytics

1. Code Generation: Accelerating Development with AI

Generative AI is changing software development by generating boilerplate code and automating repetitive coding tasks.

It can't substitute for well-designed code written by humans, but it does speed up development by giving developers reusable components and accelerating code migration.

For instance, if you move from Qlik Sense reporting to Power BI, AI can translate Qlik's proprietary expression syntax into DAX, converting most essential expressions automatically and minimizing manual work.

2. Chatbots & Virtual Agents: Enhancing Experiences

AI-powered chatbots are no longer just for customer support. When integrated with analytics platforms, they can summarize dashboards, explain key metrics, or support free-form, conversational data exploration.

Business users can ask questions in plain language rather than manually sifting through reports.

Cloud-native platforms such as Databricks and Snowflake now include LLM-based chatbot features, while open-source frameworks like LangChain give organizations more flexibility to build custom solutions.

3. Data Governance: Automating Documentation & Building Trust

Generative AI revolutionizes data governance by streamlining metadata generation, enhancing documentation, and improving quality assurance.

AI can analyze workflows, generate structured documentation, and even explain data lineage to users who question the metrics.

This automation saves time and improves transparency, helping organizations maintain strong data governance without added complexity.

4. AI-Generated Visualizations: Faster Dashboards & Reporting

Modern BI platforms like Power BI and Databricks AI/BI now integrate Generative AI, allowing users to create dashboards with simple text commands.

Tools such as Zöe, the AI-powered analyst from Zenlytic, go further by interpreting data and providing recommendations.

Rather than creating reports by hand, users can ask, "Give me monthly sales trends year-over-year," and get high-quality visualizations in seconds. Data analysis becomes accessible even to non-technical users.

5. Automating Workflows: Streamlining Business Processes

With workflow automation tools such as AI-powered Power Automate and Zapier, companies can embed Generative AI into existing applications.

This facilitates automated reporting, email responses based on data, and real-time tracking of critical business metrics.

For example, companies can automate workflows that generate weekly performance reports and deliver them through email or Teams for timely stakeholder updates.

6. AI Agents: Handling Complex Analytical Tasks

AI agents go beyond automation by adapting dynamically to varied analytical requests. Frameworks such as AutoGen, LangGraph, and CrewAI enable companies to build AI-based analysts that break complex problems into logical steps.

For example, a multi-agent system can carry out data preparation, statistical analysis, and visualization in a coordinated way. AI can improve analysis, but human oversight remains important to ensure accuracy and trustworthiness.
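To show the coordination idea in its simplest form, here is a framework-free sketch in which three plain Python functions stand in for the preparation, analysis, and visualization agents. It is an assumption-laden illustration only: no LLM is called, none of the AutoGen, LangGraph, or CrewAI APIs are used, and every function name is hypothetical.

```python
from statistics import mean
from typing import Callable


def prepare(state: dict) -> dict:
    """Data-preparation step: drop missing values."""
    state["clean"] = [v for v in state["raw"] if v is not None]
    return state


def analyze(state: dict) -> dict:
    """Statistical-analysis step: compute simple summary statistics."""
    state["stats"] = {"n": len(state["clean"]), "mean": mean(state["clean"])}
    return state


def visualize(state: dict) -> dict:
    """Visualization step: render one plain-text bar per value."""
    state["chart"] = "\n".join("#" * int(v) for v in state["clean"])
    return state


def run_pipeline(raw: list, steps: list[Callable[[dict], dict]]) -> dict:
    """Coordinator: pass shared state through each step in order."""
    state = {"raw": raw}
    for step in steps:
        state = step(state)
    return state


result = run_pipeline([3, None, 5, 4], [prepare, analyze, visualize])
print(result["stats"])
print(result["chart"])
```

In a real agent framework each step would typically be backed by a model and could decide what to do next. The shared-state handoff shown here is the part that carries over, and it is also a natural place to insert a human review before results are published.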

Challenges & Risks of Generative AI

Despite its advantages, Generative AI comes with specific challenges:

Lack of Explainability – AI models generate outputs based on patterns, making it challenging to trace decision-making logic.

Security & Compliance Risks – Without safeguards, sensitive information may end up in AI training data.

Accuracy & Data Quality – AI effectiveness depends on the quality of the training data; poor inputs produce unreliable results.

High Costs – AI workloads are computationally intensive, so spending must be monitored.

Model Evolution & Drift – AI models keep changing, which can require continuous updates to stay effective.

Non-Standard Outputs – AI-generated outputs can vary between runs, making standardization difficult in production environments.

A "Generative AI Risks vs. Mitigation Strategies" chart visually maps these risks alongside solutions like audit & validation, AI security protocols, and standardized prompting techniques.

Choosing the Right Generative AI Tools

Most leading analytics platforms now integrate Generative AI, each with different capabilities:

AWS Bedrock – Offers third-party LLMs for AI-powered applications.

Google Vertex AI – Enables AI model customization and chatbot deployment.

Microsoft Azure OpenAI Service – Provides pre-trained and custom AI models for enterprise use.

Databricks AI/BI – Supports AI-assisted analytics with enterprise-grade security.

Power BI Copilot – Automates data visualization and DAX expression generation.

Zenlytic – Uses LLMs to power BI dashboards and AI-driven analytics.

Frameworks for AI Application Development: For organizations looking to build AI applications, LangChain, AutoGen, CrewAI, and Mosaic provide structured approaches to moving workflows into production and operationalizing AI.

Best Practices for Implementing Generative AI

To get the most out of Generative AI, follow these key strategies:

Refine Your Prompts – Experiment with prompt structures to improve AI-generated outputs.

Control AI Creativity – Adjust temperature settings for more factual vs. creative responses.

Provide Clear Context – LLMs need detailed business-specific inputs to generate meaningful results.

Standardize Prompting – Define a master prompting framework for consistent AI-generated content.

Manage Costs – Track AI usage to prevent unexpected expenses.

Ensure Data Privacy – Restrict sensitive data from AI training models.

Optimize Data Governance – Maintain structured metadata for better AI performance.

Choose the Right AI Model – Consider general-purpose vs. industry-specific LLMs.

Balance Model Size & Efficiency – Smaller models like Mistral-7B may be more cost-effective.

Understand Cloud AI Services – Different platforms offer varying storage, embedding, and pricing models.
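To make the "Control AI Creativity" tip above concrete, here is a minimal sketch of how a temperature setting reshapes the probabilities a model assigns to candidate tokens. It is a generic illustration rather than any vendor's API: the function name and the three example scores are assumptions made up for this sketch.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.1]

low = softmax_with_temperature(logits, 0.2)   # sharper: favors the top token
high = softmax_with_temperature(logits, 2.0)  # flatter: more varied sampling
print([round(p, 3) for p in low])
print([round(p, 3) for p in high])
```

A low temperature concentrates almost all probability on the highest-scoring token, which tends to suit factual answers; a higher temperature spreads probability across alternatives, which tends to produce more varied output.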

The Future of AI in Data Analytics

LLMs are transforming business intelligence by enabling users to interact with data through conversational AI rather than only through dashboards.

Although BI tools will continue to integrate AI-enhanced features, organizations should aim to combine human expertise with AI-derived insights to obtain maximum value.

By integrating Generative AI in thoughtful ways, organizations can achieve new levels of efficiency, facilitate better decision-making, and build more data-informed cultures.

FAQs:

What is Generative AI in data analytics?

Generative AI in data analytics refers to applying AI models for tasks like automating code generation, data visualization, and workflows, among others, thereby improving efficiency and insights.

How can Generative AI be used for code generation?

Generative AI helps developers write template code, refactor legacy code, and automate routine programming tasks, which speeds up development.

What are the benefits of AI-powered chatbots in data analytics?

AI chatbots enrich how users interact with dashboards by explaining metrics and allowing conversational data queries.

How does Generative AI improve data governance?

Generative AI automates metadata generation, improves documentation, and tracks data lineage, which supports compliance, trust, and efficiency in data management.

Can AI create data visualizations and dashboards?

Yes, modern BI platforms like Power BI and Databricks use Generative AI to create advanced dashboards and reports from simple English language queries.

How does Generative AI help in automating workflows?

AI-based automation tools take over mundane, repetitive tasks, speed up data processing, and embed insights in business applications for faster decision-making.

What are AI agents, and how do they work in analytics?

AI agents ingest data, analyze it at a high level, and act on it in real time, which increases automation and supports more effective decision-making.

What are the key risks of using Generative AI in data analytics?

Common risks include data security concerns, lack of explainability, cost overruns, inconsistent model outputs, and models that drift over time and need continuous updating.

Which platforms provide Generative AI functionality for data analytics?

Well-known platforms are AWS Bedrock, Google Vertex AI, Microsoft Azure OpenAI, Power BI Copilot, Databricks AI/BI, Qlik, Tableau Pulse, and Zenlytic.

How can companies effectively use Generative AI in data analytics?

Companies need to emphasize developing unambiguous use cases, having good data governance, knowing the cost structures, and regularly optimizing AI models for precision.

Understanding Data Movement in Azure Data Factory: Key Concepts and Best Practices

Introduction

Azure Data Factory (ADF) is a fully managed, cloud-based data integration service that enables organizations to move and transform data efficiently. Understanding how data movement works in ADF is crucial for building optimized, secure, and cost-effective data pipelines.

In this blog, we will explore:

✔ Core concepts of data movement in ADF

✔ Data flow types (ETL vs. ELT, batch vs. real-time)

✔ Best practices for performance, security, and cost efficiency

✔ Common pitfalls and how to avoid them

1. Key Concepts of Data Movement in Azure Data Factory

1.1 Data Movement Overview

ADF moves data between various sources and destinations, such as on-premises databases, cloud storage, SaaS applications, and big data platforms. The service relies on integration runtimes (IRs) to facilitate this movement.

1.2 Integration Runtimes (IRs) in Data Movement

ADF supports three types of integration runtimes:

Azure Integration Runtime (for cloud-based data movement)

Self-hosted Integration Runtime (for on-premises and hybrid data movement)

SSIS Integration Runtime (for lifting and shifting SSIS packages to Azure)

Choosing the right IR is critical for performance, security, and connectivity.

1.3 Data Transfer Mechanisms

ADF primarily uses Copy Activity for data movement, leveraging different connectors and optimizations:

Binary Copy (for direct file transfers)

Delimited Text & JSON (for structured data)

Table-based Movement (for databases like SQL Server, Snowflake, etc.)

2. Data Flow Types in ADF

2.1 ETL vs. ELT Approach

ETL (Extract, Transform, Load): Data is extracted, transformed in a staging area, then loaded into the target system.

ELT (Extract, Load, Transform): Data is extracted, loaded into the target system first, then transformed in-place.

ADF supports both ETL and ELT, but ELT is more scalable for large datasets when combined with services like Azure Synapse Analytics.
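The difference between the two approaches can be sketched in a few lines. The example below uses Python's built-in sqlite3 module as a stand-in for the target system; the table names, sample rows, and cleaning rules are assumptions invented for illustration and are not ADF pipeline code.

```python
import sqlite3

# Hypothetical raw rows: (order_id, amount as text, country code)
raw_rows = [(1, " 10.50", "us"), (2, "7.25 ", "de"), (3, "3.00", "US")]

def run_etl(rows):
    """ETL: clean the rows in application code, then load the result."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER, amount REAL, country TEXT)")
    cleaned = [(i, float(a.strip()), c.upper()) for i, a, c in rows]
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", cleaned)
    return conn.execute("SELECT id, amount, country FROM orders ORDER BY id").fetchall()

def run_elt(rows):
    """ELT: load the raw rows first, then transform with SQL in the target."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE raw_orders (id INTEGER, amount TEXT, country TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", rows)
    conn.execute(
        "CREATE TABLE orders AS "
        "SELECT id, CAST(TRIM(amount) AS REAL) AS amount, UPPER(country) AS country "
        "FROM raw_orders"
    )
    return conn.execute("SELECT id, amount, country FROM orders ORDER BY id").fetchall()

etl_result = run_etl(raw_rows)
elt_result = run_elt(raw_rows)
print(etl_result == elt_result)
```

Both paths end with the same cleaned table. What changes is where the transformation runs: in the pipeline's own compute for ETL, or inside the target engine for ELT, which is why ELT tends to scale with the warehouse.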

2.2 Batch vs. Real-Time Data Movement

Batch Processing: Scheduled or triggered executions of data movement (e.g., nightly ETL jobs).

Real-Time Streaming: Continuous data movement (e.g., IoT, event-driven architectures).

ADF primarily supports batch processing; for real-time processing, it is typically paired with Azure Stream Analytics or Azure Event Hubs.

3. Best Practices for Data Movement in ADF

3.1 Performance Optimization

✅ Optimize Data Partitioning: Use parallelism and partitioning in Copy Activity to speed up large transfers.

✅ Choose the Right Integration Runtime: Use self-hosted IR for on-prem data and Azure IR for cloud-native sources.

✅ Enable Compression: Compress data during transfer to reduce latency and costs.

✅ Use Staging for Large Data: Store intermediate results in Azure Blob or ADLS Gen2 for faster processing.
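The idea behind partitioned, parallel copying can be sketched outside ADF. The snippet below is a plain-Python illustration, not the Copy Activity API: the helper names, the ten-row source, and the choice of four partitions are all assumptions for the example.

```python
from concurrent.futures import ThreadPoolExecutor

def make_partitions(lower, upper, count):
    """Split the inclusive range [lower, upper] into `count` near-equal ranges."""
    size, extra = divmod(upper - lower + 1, count)
    bounds, start = [], lower
    for i in range(count):
        end = start + size + (1 if i < extra else 0) - 1
        bounds.append((start, end))
        start = end + 1
    return bounds

def copy_partition(source, bounds):
    """Stand-in for one copy task: read the rows whose key is in the range."""
    low, high = bounds
    return [row for row in source if low <= row["id"] <= high]

# Hypothetical source table with 10 rows keyed by id 1..10.
source = [{"id": i, "value": i * i} for i in range(1, 11)]
partitions = make_partitions(1, 10, 4)

# Each partition is copied by its own worker; map() keeps partition order.
with ThreadPoolExecutor(max_workers=4) as pool:
    chunks = list(pool.map(lambda b: copy_partition(source, b), partitions))

copied = [row for chunk in chunks for row in chunk]
print(partitions)
```

Splitting on a key with evenly spread values keeps the partitions similar in size. If the key is skewed, one worker ends up with most of the rows and the parallelism buys little.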

3.2 Security Best Practices

🔒 Use Managed Identities & Service Principals: Avoid using credentials in linked services.

🔒 Encrypt Data in Transit & at Rest: Use TLS for transfers and Azure Key Vault for secrets.

🔒 Restrict Network Access: Use Private Endpoints and VNet Integration to prevent data exposure.

3.3 Cost Optimization

💰 Monitor & Optimize Data Transfers: Use Azure Monitor to track pipeline costs and adjust accordingly.

💰 Leverage Data Flow Debugging: Reduce unnecessary runs by debugging pipelines before full execution.

💰 Use Incremental Data Loads: Avoid full data reloads by moving only changed records.
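Incremental loading is commonly implemented with a "watermark": the pipeline remembers the latest modification time it has already copied and only moves rows newer than that. The sketch below shows the pattern in plain Python; the row layout, the `modified_at` column, and the function name are assumptions for illustration rather than ADF configuration.

```python
from datetime import datetime

def incremental_load(source_rows, target, last_watermark):
    """Copy only rows modified after last_watermark; return the new watermark."""
    changed = [r for r in source_rows if r["modified_at"] > last_watermark]
    for row in changed:
        target[row["id"]] = row  # upsert by primary key
    new_watermark = max((r["modified_at"] for r in changed), default=last_watermark)
    return new_watermark, len(changed)

# Hypothetical source rows with a last-modified timestamp.
source_rows = [
    {"id": 1, "name": "a", "modified_at": datetime(2025, 1, 1)},
    {"id": 2, "name": "b", "modified_at": datetime(2025, 1, 5)},
    {"id": 3, "name": "c", "modified_at": datetime(2025, 1, 9)},
]
target = {}

# First run picks up rows changed after Jan 3; the second run finds nothing new.
watermark, moved = incremental_load(source_rows, target, datetime(2025, 1, 3))
second_watermark, moved_again = incremental_load(source_rows, target, watermark)
print(moved, moved_again)
```

The saved watermark has to be stored somewhere durable between runs, and the source needs a reliable change column for this pattern to work.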

4. Common Pitfalls & How to Avoid Them

❌ Overusing Copy Activity without Parallelism: Always enable parallel copy for large datasets.

❌ Ignoring Data Skew in Partitioning: Ensure even data distribution when using partitioned copy.

❌ Not Handling Failures with Retry Logic: Use error handling mechanisms in ADF for automatic retries.

❌ Lack of Logging & Monitoring: Enable Activity Runs, Alerts, and Diagnostics Logs to track performance.
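Retry logic for transient failures usually follows the same shape wherever it runs: try, wait a little longer after each failure, and give up after a fixed number of attempts. ADF activities have their own retry settings; the sketch below only illustrates the general pattern in Python, and `run_with_retry` and `flaky_copy` are hypothetical names.

```python
import time

def run_with_retry(task, max_attempts=3, base_delay=0.01, sleep=time.sleep):
    """Call task(); on failure wait base_delay * 2**n and try again."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** (attempt - 1))

calls = {"count": 0}

def flaky_copy():
    """Hypothetical copy step that fails twice, then succeeds."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("transient network error")
    return "copied"

result = run_with_retry(flaky_copy)
print(result, calls["count"])
```

Doubling the delay between attempts gives a struggling source or network time to recover instead of hammering it with immediate retries.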

Conclusion

Data movement in Azure Data Factory is a key component of modern data engineering, enabling seamless integration between cloud, on-premises, and hybrid environments. By understanding the core concepts, data flow types, and best practices, you can design efficient, secure, and cost-effective pipelines.

Want to dive deeper into advanced ADF techniques? Stay tuned for upcoming blogs on metadata-driven pipelines, ADF REST APIs, and integrating ADF with Azure Synapse Analytics!

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/

Why Every Data Team Needs a Modern Data Stack in 2025

As businesses across industries become increasingly data-driven, the expectations placed on data teams are higher than ever. From real-time analytics to AI-ready pipelines, today’s data infrastructure needs to be fast, flexible, and scalable. That’s why the Modern Data Stack has emerged as a game-changer—reshaping how data is collected, stored, transformed, and analyzed.

In 2025, relying on legacy systems is no longer enough. Here’s why every data team needs to embrace the Modern Data Stack to stay competitive and future-ready.

What is the Modern Data Stack?

The Modern Data Stack is a collection of cloud-native tools that work together to streamline the flow of data from source to insight. It typically includes:

Data Ingestion/ELT tools (e.g., Fivetran, Airbyte)

Cloud Data Warehouses (e.g., Snowflake, BigQuery, Redshift)

Data Transformation tools (e.g., dbt)

Business Intelligence tools (e.g., Looker, Tableau, Power BI)

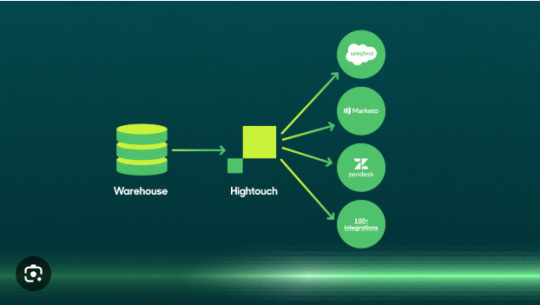

Reverse ETL and activation platforms (e.g., Hightouch, Census)

Data observability and governance (e.g., Monte Carlo, Atlan)

These tools are modular, scalable, and built for collaboration—making them ideal for modern teams that need agility without sacrificing performance.

1. Cloud-Native Scalability

In 2025, data volumes are exploding thanks to IoT, user interactions, social media, and AI applications. Traditional on-premise systems can no longer keep up with this scale.

The Modern Data Stack, being entirely cloud-native, allows data teams to scale up or down based on demand. Whether you’re dealing with terabytes or petabytes of data, cloud warehouses like Snowflake or BigQuery handle the load seamlessly and cost-effectively.

2. Faster Time to Insight

Legacy ETL systems often result in slow, rigid pipelines that delay analytics and business decisions. With tools like Fivetran for ingestion and dbt for transformation, the Modern Data Stack significantly reduces the time it takes to go from raw data to business-ready dashboards.

This real-time or near-real-time capability empowers teams to respond quickly to market trends, user behavior, and operational anomalies.

3. Democratization of Data

A key advantage of the Modern Data Stack is that it makes data more accessible across the organization. With self-service BI tools like Looker and Power BI, non-technical stakeholders can explore, visualize, and analyze data without writing SQL.

This democratization fosters a truly data-driven culture where insights aren’t locked within the data team but are shared across departments—from marketing and sales to product and finance.

4. Simplified Maintenance and Modularity

Gone are the days of monolithic, tightly coupled systems. The Modern Data Stack follows a modular architecture, where each tool specializes in one part of the data lifecycle. This means you can easily replace or upgrade a component without disrupting the entire system.

It also reduces maintenance overhead. Since most modern stack tools are SaaS-based, the burden of software updates, scaling, and infrastructure management is handled by the vendors—freeing up your team to focus on data strategy and analysis.

5. Enables Advanced Use Cases

As AI and machine learning become core to business strategies, the Modern Data Stack lays a solid foundation for these advanced capabilities. With clean, centralized, and structured data in place, data scientists and ML engineers can build more accurate models and deploy them faster.

The stack also supports reverse ETL, enabling data activation—where insights are not just visualized but pushed back into CRMs, marketing platforms, and customer tools to drive real-time action.

6. Built for Collaboration and Governance

Modern data tools are built with collaboration and governance in mind. Platforms like dbt enable version control for data models, while cataloging tools like Atlan or Alation help teams track data lineage and maintain compliance with privacy regulations like GDPR and HIPAA.

This is essential in 2025, where data governance is not optional but a critical requirement.

Conclusion

The Modern Data Stack is no longer a “nice to have”—it’s a must-have for every data team in 2025. Its flexibility, scalability, and speed empower teams to deliver insights faster, innovate more efficiently, and support the growing demand for data-driven decision-making.

By adopting a modern stack, your data team is not just keeping up with the future—it's helping to shape it.

Snowflake Native Application Framework: Transforming Data App Development

Dive into the Snowflake Native App Framework and see how it empowers providers to build, test, and share robust data applications directly within the Snowflake Data Cloud.

What You Will Learn in a Snowflake Online Course

Snowflake is a cutting-edge cloud-based data platform that provides robust solutions for data warehousing, analytics, and cloud computing. As businesses increasingly rely on big data, professionals skilled in Snowflake are in high demand. If you are considering Snowflake training, enrolling in a Snowflake online course can help you gain in-depth knowledge and practical expertise. In this blog, we will explore what you will learn in a Snowflake training online program and how AccentFuture can guide you in mastering this powerful platform.

Overview of Snowflake Training Modules

A Snowflake course online typically covers several key modules that help learners understand the platform’s architecture and functionalities. Below are the core components of Snowflake training:

Introduction to Snowflake: Understand the basics of Snowflake, including its cloud-native architecture, key features, and benefits over traditional data warehouses.

Snowflake Setup and Configuration: Learn how to set up a Snowflake account, configure virtual warehouses, and optimize performance.

Data Loading and Unloading: Gain knowledge about loading data into Snowflake from various sources and exporting data for further analysis.

Snowflake SQL: Master SQL commands in Snowflake, including data querying, transformation, and best practices for performance tuning.

Data Warehousing Concepts: Explore data storage, schema design, and data modeling within Snowflake.

Security and Access Control: Understand how to manage user roles, data encryption, and compliance within Snowflake.

Performance Optimization: Learn techniques to optimize queries, manage costs, and enhance scalability in Snowflake.

Integration with BI Tools: Explore how Snowflake integrates with business intelligence (BI) tools like Tableau, Power BI, and Looker.

These modules ensure that learners acquire a holistic understanding of Snowflake and its applications in real-world scenarios.

Hands-on Practice with Real-World Snowflake Projects

One of the most crucial aspects of a Snowflake online training program is hands-on experience. Theoretical knowledge alone is not enough; applying concepts through real-world projects is essential for skill development.

By enrolling in a Snowflake course, you will work on industry-relevant projects that involve:

Data migration: Transferring data from legacy databases to Snowflake.

Real-time analytics: Processing large datasets and generating insights using Snowflake’s advanced query capabilities.

Building data pipelines: Creating ETL (Extract, Transform, Load) workflows using Snowflake and cloud platforms.

Performance tuning: Identifying and resolving bottlenecks in Snowflake queries to improve efficiency.

Practical exposure ensures that you can confidently apply your Snowflake skills in real-world business environments.

How AccentFuture Helps Learners Master Snowflake SQL, Data Warehousing, and Cloud Computing

AccentFuture is committed to providing the best Snowflake training with a structured curriculum, expert instructors, and hands-on projects. Here’s how AccentFuture ensures a seamless learning experience:

Comprehensive Course Content: Our Snowflake online course covers all essential modules, from basics to advanced concepts.

Expert Trainers: Learn from industry professionals with years of experience in Snowflake and cloud computing.

Live and Self-Paced Learning: Choose between live instructor-led sessions or self-paced learning modules based on your convenience.

Real-World Case Studies: Work on real-time projects to enhance practical knowledge.

Certification Guidance: Get assistance in preparing for Snowflake certification exams.

24/7 Support: Access to a dedicated support team to clarify doubts and ensure uninterrupted learning.

With AccentFuture’s structured learning approach, you will gain expertise in Snowflake SQL, data warehousing, and cloud computing, making you job-ready.

Importance of Certification in Snowflake Training Online

A Snowflake certification validates your expertise and enhances your career prospects. Employers prefer certified professionals as they demonstrate proficiency in using Snowflake for data management and analytics. Here’s why certification is crucial:

Career Advancement: A certified Snowflake professional is more likely to secure high-paying job roles in data engineering and analytics.

Industry Recognition: Certification acts as proof of your skills and knowledge in Snowflake.

Competitive Edge: Stand out in the job market with a globally recognized Snowflake credential.

Increased Earning Potential: Certified professionals often earn higher salaries than non-certified counterparts.

By completing a Snowflake course online and obtaining certification, you can position yourself as a valuable asset in the data-driven industry.

Conclusion

Learning Snowflake is essential for professionals seeking expertise in cloud-based data warehousing and analytics. A Snowflake training online course provides in-depth knowledge, hands-on experience, and certification guidance to help you excel in your career. AccentFuture offers the best Snowflake training, equipping learners with the necessary skills to leverage Snowflake’s capabilities effectively.

If you’re ready to take your data skills to the next level, enroll in a Snowflake online course today!

Related Blog: Learning Snowflake is great, but how can you apply your skills in real-world projects? Let’s discuss.

Snowflake vs Traditional Databases: What Makes It Different?

Data management has evolved significantly in the last decade, with organizations moving from on-premise, traditional databases to cloud-based solutions. One of the most revolutionary advancements in cloud data warehousing is Snowflake, which has transformed the way businesses store, process, and analyze data. But how does Snowflake compare to traditional databases? What makes it different, and why is it gaining widespread adoption?

In this article, we will explore the key differences between Snowflake and traditional databases, highlighting the advantages of Snowflake and why businesses should consider adopting it. If you are looking to master this cloud data warehousing solution, Snowflake training in Chennai can help you gain practical insights and hands-on experience.

Understanding Traditional Databases

Traditional databases have been the backbone of data storage and management for decades. These databases follow structured approaches, including relational database management systems (RDBMS) like MySQL, Oracle, PostgreSQL, and SQL Server. They typically require on-premise infrastructure and are managed using database management software.

Key Characteristics of Traditional Databases:

On-Premise or Self-Hosted – Traditional databases are often deployed on dedicated servers, requiring physical storage and management.

Structured Data Model – Data is stored in predefined tables and schemas, following strict rules for relationships.

Manual Scaling – Scaling up or out requires purchasing additional hardware or distributing workloads across multiple databases.

Fixed Performance Limits – Performance depends on hardware capacity and resource allocation.

Maintenance-Intensive – Requires database administrators (DBAs) for management, tuning, and security.

While traditional databases have been effective for years, they come with limitations in terms of scalability, flexibility, and cloud integration. This is where Snowflake changes the game.

What is Snowflake?

Snowflake is a cloud-based data warehousing platform that enables businesses to store, manage, and analyze vast amounts of data efficiently. Unlike traditional databases, Snowflake is designed natively for the cloud, providing scalability, cost-efficiency, and high performance without the complexities of traditional database management.

Key Features of Snowflake:

Cloud-Native Architecture – Built for the cloud, Snowflake runs on AWS, Azure, and Google Cloud, ensuring seamless performance across platforms.

Separation of Storage and Compute – Unlike traditional databases, Snowflake separates storage and computing resources, allowing independent scaling.

Automatic Scaling – Snowflake scales up and down dynamically based on workload demands, optimizing costs and performance.

Pay-As-You-Go Pricing – Organizations pay only for the storage and computing resources they use, making it cost-efficient.

Low Management Overhead – Snowflake handles infrastructure maintenance, patching, and updates as a managed service, which removes most routine database administration.

Key Differences Between Snowflake and Traditional Databases

To understand what makes Snowflake different, let’s compare it to traditional databases across various factors:

1. Architecture

Traditional Databases: Follow a monolithic architecture, where compute and storage are tightly coupled. This means that increasing storage requires additional compute power, leading to higher costs.

Snowflake: Uses a multi-cluster, shared data architecture, where compute and storage are separate. Organizations can scale them independently, allowing cost and performance optimization.

2. Scalability

Traditional Databases: Require manual intervention to scale. Scaling up involves buying more hardware, and scaling out requires adding more database instances.

Snowflake: Offers instant scalability without any manual effort. It automatically scales based on the workload, making it ideal for big data applications.

3. Performance

Traditional Databases: Performance is limited by fixed hardware capacity. Queries can slow down when large volumes of data are processed.

Snowflake: Uses automatic query optimization and multi-cluster computing, ensuring faster query execution, even for large datasets.

4. Storage & Cost Management

Traditional Databases: Storage and compute are linked, meaning you pay for full capacity even if some resources are unused.

Snowflake: Since storage and compute are separate, businesses only pay for what they use, reducing unnecessary costs.
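The cost difference between the two models comes down to simple arithmetic. The sketch below compares an always-on coupled server with a decoupled setup billed for hours of compute actually used plus storage. Every rate and quantity in it is a made-up placeholder chosen for easy math, not vendor pricing.

```python
def coupled_cost(provisioned_hours, hourly_rate):
    """Coupled model: pay for the provisioned server whether it is busy or idle."""
    return provisioned_hours * hourly_rate

def decoupled_cost(compute_hours_used, compute_rate, stored_tb, storage_rate_per_tb):
    """Decoupled model: pay for compute only while it runs, plus storage."""
    return compute_hours_used * compute_rate + stored_tb * storage_rate_per_tb

# All figures below are made-up placeholders, not vendor pricing.
HOURS_IN_MONTH = 720
always_on = coupled_cost(HOURS_IN_MONTH, hourly_rate=4.0)
on_demand = decoupled_cost(
    compute_hours_used=100, compute_rate=4.0, stored_tb=5, storage_rate_per_tb=25.0
)
print(always_on, on_demand)
```

The gap narrows as utilization rises: a workload that keeps compute busy around the clock pays for roughly the same hours under either model.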

5. Data Sharing & Collaboration

Traditional Databases: Sharing data requires manual exports, backups, or setting up complex replication processes.

Snowflake: Enables secure, real-time data sharing without data movement, allowing multiple users to access live data simultaneously.

6. Security & Compliance

Traditional Databases: Require manual security measures such as setting up encryption, backups, and access controls.

Snowflake: Provides built-in security features, including encryption, role-based access, compliance certifications (GDPR, HIPAA, etc.), and automatic backups.
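Role-based access is easier to picture with a small model. The sketch below shows the general idea of roles that hold privileges and inherit from other roles. It is a generic illustration in Python: the role names, the `sales` table, and both helper functions are hypothetical, and nothing here is Snowflake syntax.

```python
# Hypothetical roles, privileges, and grants; not real Snowflake objects.
ROLE_PRIVILEGES = {
    "analyst": {("sales", "SELECT")},
    "engineer": {("sales", "INSERT")},
    "admin": set(),
}

# Each role inherits every privilege of the roles listed for it.
INHERITS_FROM = {"analyst": [], "engineer": ["analyst"], "admin": ["engineer"]}

def effective_privileges(role):
    """Collect a role's own privileges plus everything it inherits."""
    privileges = set(ROLE_PRIVILEGES.get(role, set()))
    for parent in INHERITS_FROM.get(role, []):
        privileges |= effective_privileges(parent)
    return privileges

def is_allowed(role, table, action):
    """Check whether a role may perform an action on a table."""
    return (table, action) in effective_privileges(role)

print(is_allowed("admin", "sales", "SELECT"))
print(is_allowed("analyst", "sales", "INSERT"))
```

Granting privileges to roles rather than to individual users means access changes in one place when someone's responsibilities change.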

7. Cloud Integration

Traditional Databases: Cloud integration requires additional tools or custom configurations.

Snowflake: Natively supports cloud environments and seamlessly integrates with BI tools like Power BI, Tableau, and Looker.

Why Businesses Are Moving to Snowflake

Companies across industries are transitioning from traditional databases to Snowflake due to its unparalleled advantages in scalability, performance, and cost efficiency. Some key reasons include:

Big Data & AI Adoption: Snowflake’s ability to handle massive datasets makes it ideal for AI-driven analytics and machine learning.

Reduced IT Overhead: Organizations can focus on analytics instead of database maintenance.

Faster Time to Insights: With high-speed querying and real-time data sharing, businesses can make quick, data-driven decisions.

How to Get Started with Snowflake?

If you are looking to switch to Snowflake or build expertise in cloud data warehousing, the best way is through structured training. Snowflake training in Chennai provides in-depth knowledge, practical labs, and real-world use cases to help professionals master Snowflake.

What You Can Learn in Snowflake Training?

Snowflake Architecture & Fundamentals

Data Loading & Processing in Snowflake

Performance Tuning & Optimization

Security, Access Control & Governance

Integration with Cloud Platforms & BI Tools

By enrolling in Snowflake training in Chennai, you gain hands-on experience in cloud data warehousing, making you industry-ready for high-demand job roles.

Conclusion

Snowflake has emerged as a game-changer in the world of data warehousing, offering cloud-native architecture, automated scalability, cost efficiency, and superior performance compared to traditional databases. Businesses looking for a modern data platform should consider Snowflake to enhance their data storage, analytics, and decision-making capabilities.

Qlik SaaS: Transforming Data Analytics in the Cloud

In the era of digital transformation, businesses need fast, scalable, and efficient analytics solutions to stay ahead of the competition. Qlik SaaS (Software-as-a-Service) is a cloud-based business intelligence (BI) and data analytics platform that offers advanced data integration, visualization, and AI-powered insights. By leveraging Qlik SaaS, organizations can streamline their data workflows, enhance collaboration, and drive smarter decision-making.

This article explores the features, benefits, and use cases of Qlik SaaS and why it is a game-changer for modern businesses.

What is Qlik SaaS?

Qlik SaaS is the cloud-native version of Qlik Sense, a powerful data analytics platform that enables users to:

Integrate and analyze data from multiple sources

Create interactive dashboards and visualizations

Utilize AI-driven insights for better decision-making

Access analytics anytime, anywhere, on any device

Unlike traditional on-premise solutions, Qlik SaaS eliminates the need for hardware management, allowing businesses to focus solely on extracting value from their data.

Key Features of Qlik SaaS

1. Cloud-Based Deployment

Qlik SaaS runs entirely in the cloud, providing instant access to analytics without requiring software installations or server maintenance.

2. AI-Driven Insights

With Qlik Cognitive Engine, users benefit from machine learning and AI-powered recommendations, improving data discovery and pattern recognition.

3. Seamless Data Integration

Qlik SaaS connects to multiple cloud and on-premise data sources, including:

Databases (SQL, PostgreSQL, Snowflake)

Cloud storage (Google Drive, OneDrive, AWS S3)

Enterprise applications (Salesforce, SAP, Microsoft Dynamics)

4. Scalability and Performance Optimization

Businesses can scale their analytics operations without worrying about infrastructure limitations. Dynamic resource allocation ensures high-speed performance, even with large datasets.

5. Enhanced Security and Compliance

Qlik SaaS offers enterprise-grade security, including:

Role-based access controls

End-to-end data encryption

Compliance with industry standards (GDPR, HIPAA, ISO 27001)

6. Collaborative Data Sharing

Teams can collaborate in real-time, share reports, and build custom dashboards to gain deeper insights.

Benefits of Using Qlik SaaS

1. Cost Savings

By adopting Qlik SaaS, businesses eliminate the costs associated with on-premise hardware, software licensing, and IT maintenance. The subscription-based model ensures cost-effectiveness and flexibility.

2. Faster Time to Insights

Qlik SaaS enables users to quickly load, analyze, and visualize data without lengthy setup times. This speeds up decision-making and improves operational efficiency.

3. Increased Accessibility

With cloud-based access, employees can work with data from any location and any device, improving flexibility and productivity.

4. Continuous Updates and Innovations

Unlike on-premise BI solutions that require manual updates, Qlik SaaS receives automatic updates, ensuring users always have access to the latest features.

5. Improved Collaboration

Qlik SaaS fosters better collaboration by allowing teams to share dashboards, reports, and insights in real time, driving a data-driven culture.

Use Cases of Qlik SaaS

1. Business Intelligence & Reporting

Organizations use Qlik SaaS to track KPIs, monitor business performance, and generate real-time reports.

2. Sales & Marketing Analytics

Sales and marketing teams leverage Qlik SaaS for:

Customer segmentation and targeting

Sales forecasting and pipeline analysis

Marketing campaign performance tracking

3. Supply Chain & Operations Management

Qlik SaaS helps optimize logistics by providing real-time visibility into inventory, production efficiency, and supplier performance.

4. Financial Analytics

Finance teams use Qlik SaaS for:

Budget forecasting

Revenue and cost analysis

Fraud detection and compliance monitoring

Final Thoughts

Qlik SaaS is revolutionizing data analytics by offering a scalable, AI-powered, and cost-effective cloud solution. With its seamless data integration, robust security, and collaborative features, businesses can harness the full power of their data without the limitations of traditional on-premise systems.

As organizations continue their journey towards digital transformation, Qlik SaaS stands out as a leading solution for modern data analytics.

Best Informatica Cloud Training in India | Informatica IICS

Cloud Data Integration (CDI) in Informatica IICS

Introduction

In today’s data-driven world, businesses need seamless integration between various data sources, applications, and cloud platforms. Cloud Data Integration (CDI) in Informatica Intelligent Cloud Services (IICS) is a powerful solution that helps organizations efficiently manage, process, and transform data across hybrid and multi-cloud environments. CDI plays a crucial role in modern ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) operations, enabling businesses to achieve high-performance data processing with minimal complexity.

What is Cloud Data Integration (CDI)?

Cloud Data Integration (CDI) is a Software-as-a-Service (SaaS) solution within Informatica IICS that allows users to integrate, transform, and move data across cloud and on-premises systems. CDI provides a low-code/no-code interface, making it accessible for both technical and non-technical users to build complex data pipelines without extensive programming knowledge.

Key Features of CDI in Informatica IICS

Cloud-Native Architecture

CDI is designed to run natively on the cloud, offering scalability, flexibility, and reliability across various cloud platforms like AWS, Azure, and Google Cloud.

Prebuilt Connectors

It provides out-of-the-box connectors for SaaS applications, databases, data warehouses, and enterprise applications such as Salesforce, SAP, Snowflake, and Microsoft Azure.

ETL and ELT Capabilities

Supports ETL for structured data transformation before loading and ELT for transforming data after loading into cloud storage or data warehouses.

Data Quality and Governance

Ensures high data accuracy and compliance with built-in data cleansing, validation, and profiling features.

High Performance and Scalability

CDI optimizes data processing with parallel execution, pushdown optimization, and serverless computing to enhance performance.

AI-Powered Automation

Informatica CLAIRE, an integrated AI-driven metadata intelligence engine, automates data mapping, lineage tracking, and error detection.

Benefits of Using CDI in Informatica IICS

1. Faster Time to Insights

CDI enables businesses to integrate and analyze data quickly, helping data analysts and business teams make informed decisions in real time.

2. Cost-Effective Data Integration

With its serverless architecture, businesses can eliminate on-premises infrastructure costs, reducing Total Cost of Ownership (TCO) while maintaining high availability and security.

3. Seamless Hybrid and Multi-Cloud Integration

CDI supports hybrid and multi-cloud environments, enabling smooth data flow between on-premises systems and various cloud providers without performance issues.

4. No-Code/Low-Code Development

Organizations can build and deploy data pipelines using a drag-and-drop interface, reducing dependency on specialized developers and improving productivity.

5. Enhanced Security and Compliance

Informatica provides data encryption, role-based access control (RBAC), and compliance with GDPR, CCPA, and HIPAA standards to protect data integrity and security.

Use Cases of CDI in Informatica IICS

1. Cloud Data Warehousing

Companies migrating to cloud-based data warehouses like Snowflake, Amazon Redshift, or Google BigQuery can use CDI for seamless data movement and transformation.

2. Real-Time Data Integration

CDI supports real-time data streaming, enabling enterprises to process data from IoT devices, social media, and APIs as it arrives.

3. SaaS Application Integration

Businesses using applications like Salesforce, Workday, and SAP can integrate and synchronize data across platforms to maintain data consistency.

4. Big Data and AI/ML Workloads

CDI helps enterprises prepare clean and structured datasets for AI/ML model training by automating data ingestion and transformation.

Conclusion

Cloud Data Integration (CDI) in Informatica IICS is a game-changer for enterprises looking to modernize their data integration strategies. CDI empowers businesses to achieve seamless data connectivity across multiple platforms with its cloud-native architecture, advanced automation, AI-powered data transformation, and high scalability. Whether you’re migrating data to the cloud, integrating SaaS applications, or building real-time analytics pipelines, Informatica CDI offers a robust and efficient solution to streamline your data workflows.

For organizations seeking to accelerate digital transformation, adopting Informatica’s Cloud Data Integration (CDI) solution is a strategic step toward achieving agility, cost efficiency, and data-driven innovation.

For More Information about Informatica Cloud Online Training

Contact Call/WhatsApp: +91 7032290546

Visit: https://www.visualpath.in/informatica-cloud-training-in-hyderabad.html

#Informatica Training in Hyderabad#IICS Training in Hyderabad#IICS Online Training#Informatica Cloud Training#Informatica Cloud Online Training#Informatica IICS Training#Informatica Training Online#Informatica Cloud Training in Chennai#Informatica Cloud Training In Bangalore#Best Informatica Cloud Training in India#Informatica Cloud Training Institute#Informatica Cloud Training in Ameerpet


Snowflake Training in Hyderabad

With 16 years of experience, we provide expert-led Snowflake training in Hyderabad, focusing on real-time projects and 100% placement assistance.

In today's data-driven world, businesses rely on cloud data platforms to manage and analyze vast amounts of information. Snowflake is one of the most popular cloud-based data warehousing solutions, known for its scalability, security, and performance. If you're looking to build expertise in Snowflake, Hyderabad offers some of the best training programs to help you master this technology.

Why Learn Snowflake?

Snowflake is widely used for data analytics, business intelligence, and big data applications. Some key benefits include:
✅ Cloud-Native – Works seamlessly on AWS, Azure, and Google Cloud.
✅ Scalability – Handles large datasets efficiently with auto-scaling.
✅ Security – Advanced encryption and access controls ensure data protection.
✅ Ease of Use – Simple SQL-based interface for data manipulation.

Best Snowflake Training in Hyderabad

If you're searching for top Snowflake training institutes in Hyderabad, look for courses that offer:
✔ Hands-on training with real-world projects.
✔ Expert instructors with industry experience.
✔ Certification guidance to boost career opportunities.
✔ Flexible learning options – online and classroom training.

Career Opportunities After Snowflake Training

With the rising demand for cloud data engineers and Snowflake developers, certified professionals can land high-paying jobs as:
🔹 Data Engineers
🔹 Cloud Architects
🔹 Big Data Analysts
🔹 Database Administrators

Get Started Today!

If you want to advance your career in cloud data warehousing, enrolling in a Snowflake training program in Hyderabad is a great step forward.

For digital marketing solutions, visit T Digital Agency.
