#technically this is a part of a future img set but the problem is


RK900.

#detroit become human#rk900#technically this is a part of a future img set but the problem is#that some of the text covers up the thirium#and i spent more time on that than literally anything else in this picture so#im gonna post this today solo and will post it again later with the text in the img set when i finish the set#my wrist needs a bit of a break so i might not finish the thing i start to draw today#so take rk900 just in case !


Carnegie Mellon is Saving Old Software from Oblivion

A prototype archiving system called Olive lets vintage code run on today’s computers


Illustration: Nicholas Little

In early 2010, Harvard economists Carmen Reinhart and Kenneth Rogoff published an analysis of economic data from many countries and concluded that when debt levels exceed 90 percent of gross domestic product, a nation’s economic growth is threatened. With debt that high, expect growth to become negative, they argued.

This analysis was done shortly after the 2008 recession, so it had enormous relevance to policymakers, many of whom were promoting high levels of debt spending in the interest of stimulating their nations’ economies. At the same time, conservative politicians, such as Olli Rehn, then an EU commissioner, and U.S. congressman Paul Ryan, used Reinhart and Rogoff’s findings to argue for fiscal austerity.

Three years later, Thomas Herndon, a graduate student at the University of Massachusetts, discovered an error in the Excel spreadsheet that Reinhart and Rogoff had used to make their calculations. The significance of the blunder was enormous: When the analysis was done properly, Herndon showed, debt levels in excess of 90 percent were associated with average growth of positive 2.2 percent, not the negative 0.1 percent that Reinhart and Rogoff had found.

Herndon could easily test the Harvard economists’ conclusions because the software that they had used to calculate their results—Microsoft Excel—was readily available. But what about much older findings for which the software originally used is hard to come by?

You might think that the solution—preserving the relevant software for future researchers to use—should be no big deal. After all, software is nothing more than a bunch of files, and those files are easy enough to store on a hard drive or on tape in digital format. For some software at least, the all-important source code could even be duplicated on paper, avoiding the possibility that whatever digital medium it’s written to could become obsolete.

Saving old programs in this way is done routinely, even for decades-old software. You can find online, for example, a full program listing for the Apollo Guidance Computer—code that took astronauts to the moon during the 1960s. It was transcribed from a paper copy and uploaded to GitHub in 2016.

While perusing such vintage source code might delight hard-core programmers, most people aren’t interested in such things. What they want to do is use the software. But keeping software in ready-to-run form over long periods of time is enormously difficult, because to be able to run most old code, you need both an old computer and an old operating system.

You might have faced this challenge yourself, perhaps while trying to play a computer game from your youth. But being unable to run an old program can have much more serious repercussions, particularly for scientific and technical research.

Along with economists, many other researchers, including physicists, chemists, biologists, and engineers, routinely use software to slice and dice their data and visualize the results of their analyses. They simulate phenomena with computer models that are written in a variety of programming languages and that use a wide range of supporting software libraries and reference data sets. Such investigations and the software on which they are based are central to the discovery and reporting of new research results.

Imagine that you’re an investigator and want to check calculations done by another researcher 25 years ago. Would the relevant software still be around? The company that made it may have disappeared. Even if a contemporary version of the software exists, will it still accept the format of the original data? Will the calculations be identical in every respect—for example, in the handling of rounding errors—to those obtained using a computer of a generation ago? Probably not.
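To make the rounding question concrete, here is a minimal illustration that is not part of Olive and not drawn from any of the research discussed here. In Python, the same ten values summed with a plain left-to-right loop and with the standard library's `math.fsum` (which compensates for low-order bits lost along the way) give different answers. A newer version of an analysis tool that changes how it accumulates numbers could plausibly shift a published figure in just this way.

```python
import math


def naive_sum(values):
    """Accumulate left to right in binary floating point, as a simple loop would."""
    total = 0.0
    for v in values:
        total += v  # each addition rounds to the nearest representable double
    return total


def compensated_sum(values):
    """Return an accurately rounded sum; math.fsum tracks the lost partial sums."""
    return math.fsum(values)


tenths = [0.1] * 10
print(naive_sum(tenths))        # 0.9999999999999999
print(compensated_sum(tenths))  # 1.0
```

Neither answer is a bug in the usual sense; they are two defensible ways of doing arithmetic, which is exactly why an old result can be hard to reproduce on different software.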

Researchers’ growing dependence on computers and the difficulty they encounter when attempting to run old software are hampering their ability to check published results. The problem of obsolescent software is thus eroding the very premise of reproducibility—which is, after all, the bedrock of science.

The issue also affects matters that could be subject to litigation. Suppose, for example, that an engineer’s calculations show that a building design is robust, but the roof of that building nevertheless collapses. Did the engineer make a mistake, or was the software used for the calculations faulty? It would be hard to know years later if the software could no longer be run.

That’s why my colleagues and I at Carnegie Mellon University, in Pittsburgh, have been developing ways to archive programs in forms that can be run easily today and into the future. My fellow computer scientists Benjamin Gilbert and Jan Harkes did most of the required coding. But the collaboration has also involved software archivist Daniel Ryan and librarians Gloriana St. Clair, Erika Linke, and Keith Webster, who naturally have a keen interest in properly preserving this slice of modern culture.

Bringing Back Yesterday’s Software

The Olive system has been used to create 17 different virtual machines that run a variety of old software, some serious, some just for fun. Here are several views from those archived applications.


NCSA Mosaic 1.0, a pioneering Web browser for the Macintosh from 1993.


Chaste (Cancer, Heart and Soft Tissue Environment) 3.1 for Linux from 2013.


The Oregon Trail 1.1, a game for the Macintosh from 1990.


Wanderer, a game for MS-DOS from 1988.


Mystery House, a game for the Apple II from 1982.


The Great American History Machine, an educational interactive atlas for Windows 3.1 from 1991.


Microsoft Office 4.3 for Windows 3.1 from 1994.


ChemCollective, educational chemistry software for Linux from 2013.


Because this project is more one of archival preservation than mainstream computer science, we garnered financial support for it not from the usual government funding agencies for computer science but from the Alfred P. Sloan Foundation and the Institute of Museum and Library Services. With that support, we showed how to reconstitute long-gone computing environments and make them available online so that any computer user can, in essence, go back in time with just a click of the mouse.

We created a system called Olive—an acronym for Open Library of Images for Virtualized Execution. Olive delivers over the Internet an experience that in every way matches what you would have obtained by running an application, operating system, and computer from the past. So once you install Olive, you can interact with some very old software as if it were brand new. Think of it as a Wayback Machine for executable content.

To understand how Olive can bring old computing environments back to life, you have to dig through quite a few layers of software abstraction. At the very bottom is the common base of much of today’s computer technology: a standard desktop or laptop endowed with one or more x86 microprocessors. On that computer, we run the Linux operating system, which forms the second layer in Olive’s stack of technology.

Sitting immediately above the operating system is software written in my lab called VMNetX, for Virtual Machine Network Execution. A virtual machine is a computing environment that mimics one kind of computer using software running on a different kind of computer. VMNetX is special in that it allows virtual machines to be stored on a central server and then executed on demand by a remote system. The advantage of this arrangement is that your computer doesn’t need to download the virtual machine’s entire disk and memory state from the server before running that virtual machine. Instead, the information stored on disk and in memory is retrieved in chunks as needed by the next layer up: the virtual-machine monitor (also called a hypervisor), which can keep several virtual machines going at once.
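The on-demand idea can be sketched in a few lines. What follows is a minimal Python sketch under stated assumptions, not VMNetX's actual code: the class name `LazyDiskImage`, the `fetch_chunk` callback, and the fixed chunk size are all hypothetical. It models only the behavior described above: a chunk of the virtual machine's disk image crosses the network the first time something reads it, and later reads of that chunk are served from a local cache.

```python
from typing import Callable, Dict


class LazyDiskImage:
    """Hypothetical on-demand view of a remote VM disk image (illustrative only)."""

    def __init__(self, fetch_chunk: Callable[[int], bytes], chunk_size: int, size: int):
        self.fetch_chunk = fetch_chunk  # assumed callback: chunk index -> bytes from server
        self.chunk_size = chunk_size
        self.size = size                # total image size in bytes
        self.cache: Dict[int, bytes] = {}
        self.fetches = 0                # number of chunks pulled over the network

    def _chunk(self, index: int) -> bytes:
        # Fetch a chunk only on first access; afterwards serve it from the cache.
        if index not in self.cache:
            self.cache[index] = self.fetch_chunk(index)
            self.fetches += 1
        return self.cache[index]

    def read(self, offset: int, length: int) -> bytes:
        """Return bytes [offset, offset + length), touching only overlapping chunks."""
        end = min(offset + length, self.size)
        out = bytearray()
        pos = offset
        while pos < end:
            index, within = divmod(pos, self.chunk_size)
            chunk = self._chunk(index)
            take = min(self.chunk_size - within, end - pos)
            out += chunk[within:within + take]
            pos += take
        return bytes(out)


# Usage with an in-memory stand-in for the server (a hypothetical 16 KiB image).
server_image = bytes(range(256)) * 64
disk = LazyDiskImage(lambda i: server_image[i * 4096:(i + 1) * 4096], 4096, len(server_image))
disk.read(4000, 200)  # straddles chunks 0 and 1
print(disk.fetches)   # 2
```

Reading 200 bytes that straddle a chunk boundary pulls down two chunks, and rereading nearby bytes costs nothing extra. A real system would also have to handle writes, memory state, and prefetching, none of which this sketch attempts.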

Each one of those virtual machines runs a hardware emulator, which is the next layer in the Olive stack. That emulator presents the illusion of being a now-obsolete computer—for example, an old Macintosh Quadra with its 1990s-era Motorola 68040 CPU. (The emulation layer can be omitted if the archived software you want to explore runs on an x86-based computer.)

The next layer up is the old operating system needed for the archived software to work. That operating system has access to a virtual disk, which mimics actual disk storage, providing what looks like the usual file system to still-higher components in this great layer cake of software abstraction.

Above the old operating system is the archived program itself. This may represent the very top of the heap, or there could be an additional layer, consisting of data that must be fed to the archived application to get it to do what you want.

The upper layers of Olive are specific to particular archived applications and are stored on a central server. The lower layers are installed on the user’s own computer in the form of the Olive client software package. When you launch an archived application, the Olive client fetches parts of the relevant upper layers as needed from the central server.
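One way to picture the stack is as an ordered list of layers, each tagged with where it lives. The sketch below is illustrative only: the `Layer` type and `olive_stack` function are hypothetical, and placing the hardware emulator on the server side is an assumption based on the statement above that application-specific layers are stored centrally. The emulator is included only when the archived software targets a non-x86 CPU, mirroring the note that it can be omitted for x86 software.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Layer:
    name: str
    location: str  # "client" (installed locally) or "server" (fetched on demand)


def olive_stack(guest_os: str, application: str, guest_cpu: str,
                data: Optional[str] = None) -> List[Layer]:
    """Build a bottom-to-top list of layers; names and placement are illustrative."""
    stack = [
        Layer("x86 hardware", "client"),
        Layer("Linux", "client"),
        Layer("VMNetX", "client"),
        Layer("virtual-machine monitor (hypervisor)", "client"),
    ]
    # Assumption: the emulator is part of the archived, server-side VM image.
    if guest_cpu != "x86":
        stack.append(Layer(f"{guest_cpu} hardware emulator", "server"))
    # The guest OS layer is assumed to include its virtual disk.
    stack.append(Layer(guest_os, "server"))
    stack.append(Layer(application, "server"))
    if data is not None:
        stack.append(Layer(data, "server"))  # optional input data on top
    return stack


for layer in olive_stack("Macintosh System 7.5", "NCSA Mosaic 1.0", "Motorola 68040"):
    print(f"{layer.location:>6}  {layer.name}")
```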

Illustration: Nicholas Little

Layers of Abstraction: Olive requires many layers of software abstraction to create a suitable virtual machine. That virtual machine then runs the old operating system and application.

That’s what you’ll find under the hood. But what can Olive do? Today, Olive consists of 17 different virtual machines that can run a variety of operating systems and applications. The choice of what to include in that set was driven by a mix of curiosity, availability, and personal interests. For example, one member of our team fondly remembered playing The Oregon Trail when he was in school in the early 1990s. That led us to acquire an old Mac version of the game and to get it running again through Olive. Once word of that accomplishment got out, many people started approaching us to see if we could resurrect their favorite software from the past.

The oldest application we’ve revived is Mystery House, a graphics-enabled game from the early 1980s for the Apple II computer. Another program is NCSA Mosaic, which people of a certain age might remember as the browser that introduced them to the wonders of the World Wide Web.

Olive provides a version of Mosaic, written for the Macintosh in 1993, that runs on Apple’s System 7.5 operating system. That operating system runs on an emulation of the Motorola 68040 CPU, which in turn is created by software running on an actual x86-based computer that runs Linux. In spite of all this virtualization, performance is pretty good, because modern computers are so much faster than the original Apple hardware.

Pointing Olive’s reconstituted Mosaic browser at today’s Web is instructive: Because Mosaic predates Web technologies such as JavaScript, HTTP 1.1, Cascading Style Sheets, and HTML 5, it is unable to render most sites. But you can have some fun tracking down websites composed so long ago that they still look just fine.

What else can Olive do? Maybe you’re wondering what tools businesses were using shortly after Intel introduced the Pentium processor. Olive can help with that, too. Just fire up Microsoft Office 4.3 from 1994 (which thankfully predates the annoying automated office assistant “Clippy”).

Perhaps you just want to spend a nostalgic evening playing Doom for DOS—or trying to understand what made such first-person shooter games so popular in the early 1990s. Or maybe you need to redo your 1997 taxes and can’t find the disk for that year’s version of TurboTax in your attic. Have no fear: Olive has you covered.

On the more serious side, Olive includes Chaste 3.1. The name of this software is short for Cancer, Heart and Soft Tissue Environment. It’s a simulation package developed at the University of Oxford for computationally demanding problems in biology and physiology. Version 3.1 of Chaste was tied to a research paper published in March 2013. Within two years of publication, though, the source code for Chaste 3.1 no longer compiled on new Linux releases. That’s emblematic of the challenge to scientific reproducibility Olive was designed to address.

Illustration: Nicholas Little

To keep Chaste 3.1 working, Olive provides a Linux environment that’s frozen in time. Olive’s re-creation of Chaste also contains the example data that was published with the 2013 paper. Running the data through Chaste produces visualizations of certain muscle functions. Future physiology researchers who wish to explore those visualizations or make modifications to the published software will be able to use Olive to edit the code on the virtual machine and then run it.

For now, though, Olive is available only to a limited group of users. Because of software-licensing restrictions, Olive’s collection of vintage software is currently accessible only to people who have been collaborating on the project. The relevant companies will need to give permission to present Olive’s re-creations to broader audiences.

We are not alone in our quest to keep old software alive. For example, the Internet Archive is preserving thousands of old programs using an emulation of MS-DOS that runs in the user’s browser. And a project being mounted at Yale, called EaaSI (Emulation as a Service Infrastructure), hopes to make available thousands of emulated software environments from the past. The scholars and librarians involved with the Software Preservation Network have been coordinating this and similar efforts. They are also working to address the copyright issues that arise when old software is kept running in this way.

Olive has come a long way, but it is still far from being a fully developed system. In addition to the problem of restrictive software licensing, various technical roadblocks remain.

One challenge is how to import new data to be processed by an old application. Right now, such data has to be entered manually, which is both laborious and error prone. Doing so also limits the amount of data that can be analyzed. Even if we were to add a mechanism to import data, the amount that could be saved would be limited to the size of the virtual machine’s virtual disk. That may not seem like a problem, but you have to remember that the file systems on older computers sometimes had what now seem like quaint limits on the amount of data they could store.
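Some rough arithmetic shows how quaint those limits can be. As an illustrative example not taken from the article, assume a FAT16 volume under MS-DOS: fewer than 65,525 clusters, each at most 32 KiB. The volume ceiling is simply cluster count times cluster size, which lands just under 2 GiB. The constants below are commonly documented FAT16 values, used here as assumptions; the actual limit for any given archived system should be checked against that system.

```python
# Assumed FAT16 parameters (typical MS-DOS values, not figures from the article).
MAX_CLUSTERS = 65_524          # FAT16 volumes have fewer than 65,525 clusters
MAX_CLUSTER_BYTES = 32 * 1024  # 32 KiB, the largest cluster size under MS-DOS


def max_volume_bytes(clusters: int, cluster_bytes: int) -> int:
    """Upper bound on data a single volume can address: clusters x cluster size."""
    return clusters * cluster_bytes


def fits(dataset_bytes: int) -> bool:
    """Would a dataset of this size fit on one maximal FAT16 volume?"""
    return dataset_bytes <= max_volume_bytes(MAX_CLUSTERS, MAX_CLUSTER_BYTES)


limit = max_volume_bytes(MAX_CLUSTERS, MAX_CLUSTER_BYTES)
print(limit)              # 2147090432, just under 2 GiB
print(fits(5 * 1024**3))  # False: a 5 GiB dataset will not fit on one volume
```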

Another hurdle is how to emulate graphics processing units (GPUs). For a long while now, the scientific community has been leveraging the parallel-processing power of GPUs to speed up many sorts of calculations. To archive executable versions of software that takes advantage of GPUs, Olive would need to re-create virtual versions of those chips, a thorny task. That’s because GPU interfaces—what gets input to them and what they output—are not standardized.

Clearly there’s quite a bit of work to do before we can declare that we have solved the problem of archiving executable content. But Olive represents a good start at creating the kinds of systems that will be required to ensure that software from the past can live on to be explored, tested, and used long into the future.

This article appears in the October 2018 print issue as “Saving Software From Oblivion.”

About the Author

Mahadev Satyanarayanan is a professor of computer science at Carnegie Mellon University, in Pittsburgh.

Carnegie Mellon is Saving Old Software from Oblivion syndicated from https://jiohowweb.blogspot.com


How Saving Private Ryan Influenced Medal of Honor and Changed Gaming

https://ift.tt/3azDVUj

The legacies of Medal of Honor and Saving Private Ryan have gone in wildly different directions since the late ’90s. The latter is still thought of as one of the most influential and memorable movies ever made. The former is sometimes referred to as a “Did You Know?” piece of Call of Duty’s history or maybe just proof the PS1 had a couple of good first-person shooters.

It wasn’t always that way, though. There was a time when the fates of Medal of Honor and Saving Private Ryan seemed destined to be forever intertwined. After all, Medal of Honor was essentially pitched as the game that would do for WW2 games what Saving Private Ryan did for WW2 films.

That never quite happened, but the ways that the fates of those two projects began, diverged, met, and ultimately split helped shape the future of gaming in ways you may not know about.

Steven Spielberg’s Son and GoldenEye 007 Change the Fate of the WW2 Shooter

In case Ready Player One didn’t make it clear, Steven Spielberg has always loved video games. While you’d think that the reception to 1982’s E.T. for the Atari (a game so bad that unsold copies were infamously buried in a New Mexico landfill) would have soured him on the format, Spielberg remained convinced that gaming was going to play a big role in the future of storytelling and entertainment. Spielberg even co-wrote a sometimes overlooked 1995 LucasArts adventure game called The Dig.

It was around that same time that Spielberg also expressed his interest in making a video game based on his fascination with WW2. In fact, in the early ’90s, designer Noah Falstein started working on a WW2 game after a conversation with Spielberg reportedly piqued his own interest in that idea.

The project (which was known as both Normandy Beach and Beach Ball at the time) was fascinating. It would have followed two brothers participating in the D-Day invasion: one on the beaches of Normandy and one who was dropping in behind enemy lines. Players would have swapped between the two brothers (try to push aside any Rick and Morty jokes for the moment) as they fought through the war and finally saw each other again.

It was a great idea, but when Falstein took it to DreamWorks Interactive (the gaming division of the DreamWorks film studio that Spielberg co-owned), he was surprised to be greeted with a cold shoulder. It seems that the DreamWorks Interactive team felt it would be hard to sell a game to kids that was based on a historical event as old as WW2. Work on the project quietly ended months after it had begun.

It may seem wild that a game designer had a hard time pitching a WW2 game in the ’90s, but you have to remember that widespread cultural interest in WW2 at that time was still fairly low. There were WW2 games released prior to that point, but most of them either made passing references to the era (such as Wolfenstein) or were hardcore strategy titles typically aimed at an older audience. Most studios believed that kids wanted sci-fi and fantasy action games, and many of them weren’t willing to bet heavily on the chance that they were wrong.

Spielberg shared that concern, but he saw it slightly differently. Believing that WW2 shaped both the generation that lived through it and the generations that came after, he wanted to use the considerable means and talent available to him to show people the war’s impact and intrigue. That strategy obviously included Saving Private Ryan, but he was especially interested in reaching the same young audience that DWI felt would largely ignore a WW2 game.

Legend has it that a lightbulb went off in Spielberg’s head as he watched his son play GoldenEye 007 for N64. Intrigued by both his son’s fascination with that shooter, and the clear advances in video game technology it represented, he took time away from Saving Private Ryan’s post-production process, visited the DWI team, and told them that he wanted to see a concept for a WW2 first-person shooter set in Europe and named after the Medal of Honor. If the team wasn’t stunned yet, they certainly would be when Spielberg told them that they had one week to show him a demo.

Doubtful that they could produce a compelling demo in such a short amount of time, doubtful the PS1 could handle such an ambitious FPS concept, and still very much doubtful that gamers wanted to play a WW2 shooter, the team reworked the engine for the recent The Lost World: Jurassic Park PlayStation game and used it as the basis for what was later described as a shoestring proof of concept.

It may have been pieced together, but what DWI came up with was enough to excite Spielberg and, more importantly, excite the game’s developers. Suddenly, people were starting to buy into the idea that this whole thing could work and was very much worth doing. As the calendar turned to 1998 and Saving Private Ryan became a blockbuster that was also changing the conversation about World War 2, it suddenly felt like the DWI team might just have a hit on their hands.

Unfortunately, not everyone was on board with the idea, and those doubts would soon change the trajectory of the game.

Columbine and Veteran Concerns Force Medal of Honor to Move Away from Saving Private Ryan

Saving Private Ryan was widely praised for its brutal authenticity that effectively conveyed the horrors of war, as well as its technical accomplishments that changed the way films are made and talked about. At first, it seemed like the Medal of Honor team set out to recreate both of those elements.

On the technical side of things, the developers were succeeding in ways that their makeshift demo barely suggested was possible. While the team was right that developing an FPS on PlayStation meant working around certain restrictions (they couldn’t get daytime levels to look right so everything in the original game happens at night), the PlayStation proved to be remarkably capable in other ways. Because the team was limited from a purely visual perspective, they decided to focus on character animations and AI in order to “sell” the world.

While they’re easy to overlook now, the original Medal of Honor did things with enemy AI that few gamers had seen at the time. Enemies reacted to being shot in ways that suggested they were more than just bullet sponges. They’d drop their weapons, lose their helmets, scramble for cover…it all contributed to the sensation of battling actual humans. Well, not actual humans but rather Nazis. In fact, the thrill of feeling every bullet you fired at Nazis was one of the things that excited the team early on. Developers and players alike still fondly recall being able to do things like make a dog fetch a grenade and carry it back to his handler.

Just as it was in Saving Private Ryan, sound design was a key part of what made Medal of Honor work. Everything ricocheted and responded with a level of authenticity that perfectly complemented the film-like orchestral score that they had commissioned from game composer Michael Giacchino. The quality of the game’s sound is partially attributed to the contributions of Captain Dale Dye, who helped ensure the authenticity of Saving Private Ryan and did the same for Medal of Honor. Initially, Dye was doubtful the game could be on the same level as the film.

Actually, Dye’s feedback was one of the earliest indications that some veterans were going to be very apprehensive about turning war into a video game like Medal of Honor. Dye eventually saw that their intentions were good, but the team soon received another wake-up call when Paul Bucha, a Medal of Honor recipient and then-president of the Congressional Medal of Honor Society, wrote a letter to Steven Spielberg that essentially shamed him for his involvement with the game and demanded that he remove the Medal of Honor name from the project. At that point in development, such a change could have meant the project’s cancellation.

That wasn’t the only problem that suddenly emerged. In April 1999 (six months before Medal of Honor’s release), the Columbine High School massacre occurred and changed the conversation about violence in entertainment (especially video games). Reports indicate that, at that point, Medal of Honor was a particularly violent game, clearly modeled after the brutality of Saving Private Ryan, with an almost comical amount of blood that some who saw early versions of the title compared to The Evil Dead. Suddenly, the team felt apprehensive about what they had been going for.

These events and concerns essentially encouraged the Medal of Honor team to step away from Saving Private Ryan a bit and focus on a few different things that would go on to separate the game from the film it was spiritually based on.


Medal of Honor: A Different Kind of War Game

It was easy enough to cut Medal of Honor’s violence (or at least its gore), but when it came to addressing concerns of the game’s commercialization and gamification of war and the experience of soldiers, the team found some more creative solutions.

For instance, you may notice that the original Medal of Honor is a much more “low-key” shooter and WW2 game compared to other titles at the time and those that would follow. Well, part of that tone was based on Spielberg’s desire to have the game tell more of a story through gameplay than other shooters had done up until that point (an innovation in and of itself), but a lot of that comes from the input of Dale Dye.

As Dye taught the team what it was like to be a soldier and serve during WW2, they gained a perspective that they felt the need to share. This is part of the reason why Medal of Honor features a lot of text and cutscene segments designed to teach parts of the history of the war that would have otherwise likely been left on the cutting room floor. There’s a documentary feel to that title that you still don’t see in a lot of period-specific games.

That decision may have also helped save Medal of Honor in the long run. When Bucha raised his concerns about the project, the team took them seriously enough to consider canceling the game just months before its release. However, producer Peter Hirschmann extended an invite to Bucha so that he could see what exactly it was that they were working on.

It was a bold move that proved to pay off as Bucha was so impressed with the game’s direction (a direction that changed drastically in development) that he actually ended up officially supporting the title. Maybe it wasn’t as grand and impactful as Saving Private Ryan, but he saw the team was doing something that was so much more than just a high score and gore.

Medal of Honor proved to be a hit in 1999, but the celebration was dampened by the news that DWI had been sold to EA. The good news was that most of the key members of the DWI team were able to stay together to work on 2000’s Medal of Honor: Underground: a criminally overlooked game that told a brilliant story about a French Resistance fighter modeled after the legendary Hélène Deschamps Adams. That game advanced the unique style of the original game and retained its quality. I highly recommend you play it if you’ve never done so.

Soon, though, everything would change in a way that brought the series directly back to Saving Private Ryan in ways that are still being felt to this day.

Medal of Honor: Allied Assault – The (Mostly) Unofficial Saving Private Ryan Game

EA decided to continue the Medal of Honor series but without the old Medal of Honor team at the helm. The story goes that they initially asked id Software to develop the next Medal of Honor game, but the id team said they were too busy and instead recommended they ask a studio called 2015 Games to further the franchise.

Never heard of them? I’m not surprised. The team’s previous work hadn’t set the world on fire, but EA took id’s recommendation to heart and asked the young developers to start working on what would become 2002’s Medal of Honor: Allied Assault.

It’s funny, but for such an important game, we really don’t know a lot about the details of Allied Assault’s development. It’s been said that the game’s development was pretty rough (the young team apparently struggled to combine their separate ideas under one creative vision), and we also know that they contacted Dale Dye for authenticity input just as the DWI team had done.

What we don’t exactly know is why Allied Assault was designed to so closely resemble Saving Private Ryan.

It’s easy to assume that the developers were just big fans of the movie (who wasn’t back then?), but there are elements of Allied Assault that are essentially pulled directly from the movie. A lot of the dialog is slightly reworked Saving Private Ryan lines, some of the characters are carbon copies of the film’s leads, and certain missions are pulled directly from the most memorable events of the movie.

The most famous example of that last point has to be the game’s infamous beach assault mission. At times nearly a 1-1 recreation of Saving Private Ryan’s opening scene, some people believe to this day that the game actually starts with that mission just as the movie began with a similar sequence. While 2002’s Medal of Honor: Frontline (which borrowed heavily from Allied Assault despite being developed by a different team) did start with a Normandy Beach invasion, that sequence doesn’t happen in Allied Assault until you reach the third mission.

Regardless, it’s the part of the game everyone seems to remember all these years later. Objectively a technical accomplishment that recreated the sensation of watching Saving Private Ryan’s infamous opener in a way that nothing else really had, that beach sequence also stood in direct contrast to much of the game that came before it. The early parts of Allied Assault were a little quieter and modeled more after the “adventure/espionage” style of the original games in the series. From that point though, Allied Assault essentially served as a Saving Private Ryan video game. One of the game’s final missions even nearly recreates the sniping sequence from the finale of that film.

It’s almost like the 2015 Games team was working on a “one for you, one for me” program. Here’s more of the Medal of Honor that came before, but here’s this absolutely intense action game that not only recalls Saving Private Ryan but in some ways directly challenges it. At a time when movie studios were still looking for sequences that would rival Normandy, the Allied Assault team used that sequence as the basis for a compelling argument that gaming was more than ready to match and perhaps surpass the most intense moments in film history.

The idea that 2015 was going rogue a bit with their ambitions may be supported by the fact that EA eventually decided all future Medal of Honor games would be developed in-house. This came as a shock to the 2015 Games team who felt they did a fantastic job and were practically drowning in accolades at that time.

Desperate to stay afloat, the 2015 team put a call out to studios to let them know that most of the people responsible for one of 2002’s best games (and a shooter some called the best since Half-Life) were ready and able to continue their work under a different name.

Activision ended up answering their call, and that project became 2003's Call of Duty. Before Call of Duty went on to become one of the most successful and profitable franchises in video game history, it was a brilliant single-player-focused WW2 shooter made by a newly formed studio called Infinity Ward. Almost every one of that game's levels matched the intensity of that infamous beach sequence from Allied Assault. Infinity Ward's ability to consistently deliver that kind of experience set a new standard that some will tell you has never truly been surpassed.

The story of what happened to Medal of Honor is a touch sadder.

Medal of Honor’s Complicated Legacy and Saving Private Ryan’s Lasting Influence

While 2002's Medal of Honor: Frontline was called the game of the year by many outlets, subsequent games in the series garnered decidedly more mixed receptions. 2003's Medal of Honor: Rising Sun was no match for Call of Duty, just as 2004's Medal of Honor: Pacific Assault couldn't hold a candle to Call of Duty 2 in the minds of many. As time went on, the Medal of Honor franchise attempted to mimic Call of Duty in more and more overt ways. The results could generously be described as mixed.

For a series that started out with a direct line to Saving Private Ryan, it's a little ironic that Medal of Honor was eventually defined and defeated by a studio that was more willing to directly embrace that movie's style, story, and best moments. Perhaps the early Medal of Honor games weren't in the best position to emulate Saving Private Ryan so directly from a technological and content standpoint, but there's something sad about how the ways Medal of Honor initially tried to distinguish itself as more than a Saving Private Ryan adaptation have been partly lost in favor of simply walking the path forged by one of the most influential films of the last 25 years. Allied Assault and the early Call of Duty games deserve the praise they've received, but it's hard not to wonder what might have been if more games had looked at how Medal of Honor initially distinguished itself and gone for something different.

But even fallen (or mostly fallen) franchises can leave a lasting legacy. As far as Medal of Honor goes, nobody summarized its legacy quite so elegantly as Max Spielberg: the kid whose GoldenEye sessions helped inspire the development of the first Medal of Honor game.

“Medal of Honor is one of the few great marriages of game and film,” said Spielberg. “It was that first rickety bridge built between the silver screen and the home console.”


Maybe the first Medal of Honor games didn't exactly recreate Saving Private Ryan, but they aimed for that level of success in a way that most studios would never have dreamed of. There are times when it's easy to take for granted how video games can bring the best movies to life. What we should never forget are the contributions of the developers who turned a question we once whispered in crowded theaters, hoping it wouldn't echo ("Could you imagine playing a game that looks like that?"), into the games we know and love today.

The post How Saving Private Ryan Influenced Medal of Honor and Changed Gaming appeared first on Den of Geek.

from Den of Geek https://ift.tt/3dmFkPJ


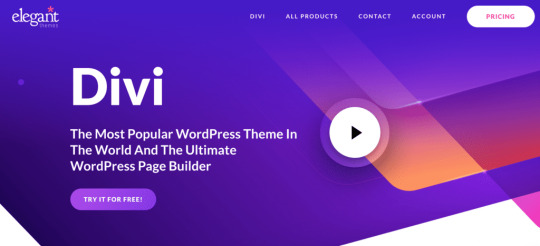

Divi vs. Elementor: Which WordPress Page Builder Is Right for Your Site?

The post Divi vs. Elementor: Which WordPress Page Builder Is Right for Your Site? appeared first on HostGator Blog.

If you’re interested in getting a website up and running and want to do it yourself, then WordPress is an excellent bet.

WordPress is the most popular content management system and powers 35.2% of all websites. It has also become easier to navigate on your own over the years, and several excellent WordPress page builders can help you through the process of building your website.

With all of the different website builders on the market, though, how is a novice to know which one is the best? Well, it depends on what you're looking for, how much you already know about website building, and your budget.

To help you make an informed decision, here is an in-depth review of two of the most popular WordPress page builders on the market: Divi and Elementor.

What is Divi?

You may already know Divi as one of the most popular WordPress themes, but it’s more than that. Divi is also a website building platform that makes building a WordPress website significantly easier. Divi also includes several visual features that help you make your website more visually appealing.

Let’s take a closer look at some of the most impressive features of the Divi WordPress builder.

Features of Divi

Here is what you can expect feature-wise when you select Divi as your WordPress page builder.

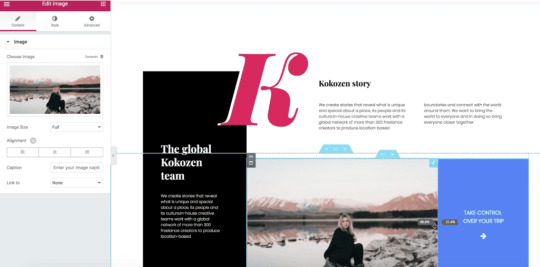

Drag & drop building. Divi makes it easy to add, delete, and move elements around as you build your website. The best part is that you don't have to know how to code. All of the design work happens on the front end of your site, not the back end.

Real-time visual editing. You can design your page and see how it looks as you go. Divi provides many intuitive visual features that help you make your page look how you want it to without having to know anything technical about web design.

Custom CSS controls. If you know CSS, you can combine your own custom CSS with Divi's visual editing controls. If you don't know what this means, no worries. You can stick to a theme or the drag and drop builder.

Responsive editing. You don’t have to worry about whether or not your website will be mobile responsive. It will be. Plus, you can edit how your website will look on a mobile device with Divi’s various responsive editing tools.

Robust design options. Many WordPress builders have only a few design options. Divi allows you full design control over your website.

Inline text editing. All you have to do to edit your copy is click on the place where you want your text to appear and start typing.

Save multiple designs. If you're not sure exactly how you want your website to look before you publish it, you can create multiple custom designs, save them, and decide later. You can also save your designs to use as templates for future pages. This keeps your website consistent and speeds up the website creation process.

Global elements and styles. Divi allows you to manage your design with website-wide design settings, allowing you to build a whole website, not just a page.

Easy revisions. You can quickly undo, redo, and make revisions as you design.

Pros of Divi

Why would you want to choose Divi vs. Elementor? Here are the top advantages of Divi to consider as you make your decision.

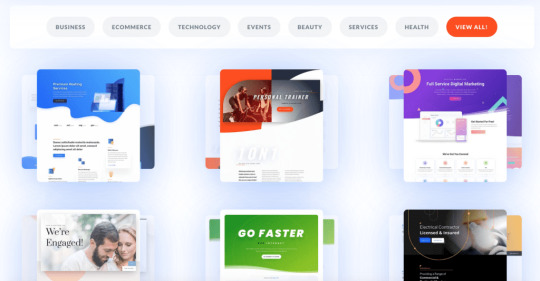

More templates. Divi has over 800 predesigned templates and they are free to use. If you don’t want to design your own website, simply pick one of the templates that best matches your style.

Full website packs. Not only does Divi have a wide range of pre-designed templates, but it also offers entire website packs based on various industries and types of websites (e.g., business, e-commerce, health, beauty, services, etc.). This makes it easy to quickly design a website that matches your needs.

In-line text editing. This is one of Divi's standout features: just point and click to edit any block of text.

Lots of content modules. Divi has over 30 customizable content modules. You can insert these modules (e.g., CTA buttons, email opt-in forms, maps, testimonials, video sliders, countdown timers, etc.) in your row and column layouts.

Creative freedom. You really have a lot of different options when it comes to designing your website. If you can learn how to use all of the various features, you’ll be able to build a nice website without having to know anything about coding.

Cons of Divi

Before you decide to hop on the Divi bandwagon, it’s essential to consider potential drawbacks. Here are the cons of the Divi WordPress website builder to help you make a more informed decision.

No pop-up builder. Unfortunately, Divi doesn't include a pop-up builder. Pop-ups are a great way to draw attention to announcements and promotions, and a solid way to capture email subscribers.

Too many options. Divi has so many builder options that you can do nearly anything, but some reviewers find the sheer number of choices overwhelming, which can undercut its ease of use.

Learning curve. Since there are so many features with Divi, it can take some extra time to learn how to effectively use them all.

The Divi theme is basic. It’s critical to remember that the Divi theme and the Divi WordPress builder are two different things. You can use the Divi WordPress builder with any WordPress theme, including the Divi theme. However, if you opt for the Divi theme, it’s nice to know that some reviewers think the Divi theme is a bit basic. You may want to branch out and find a more suitable theme.

Glitchy with longer pages. Some reviewers also say that Divi can get glitchy when trying to build longer pages. This shouldn’t be too much of a problem if you’re only looking for a basic website.

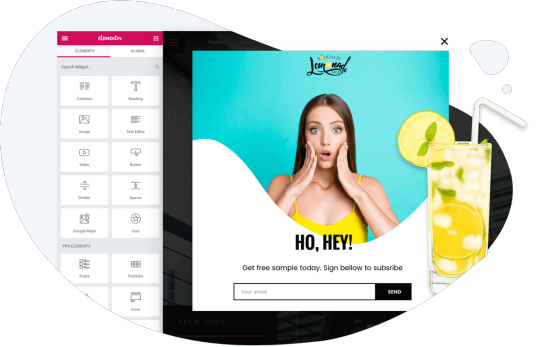

What is Elementor?

Elementor is an all-in-one WordPress website builder solution where you can control every piece of your website design from one platform.

Like Divi, Elementor also provides a flexible and simple visual editor that makes it easy to create a gorgeous website, even if you have no design experience.

Elementor also touts its ability to help you build a fast-loading website quickly.

Features of Elementor

You already know what Divi can do. Here is what you can expect feature-wise when you sign up with Elementor vs. Divi.

Drag and drop builder. Elementor also includes a drag and drop website builder, so you can create your website without knowing how to code. It also provides live editing so you can see how your site looks as you go.

All design elements together. With Elementor, you don't have to switch between screens to design or to make changes and updates. All your content, including your header, footer, and page content, is editable from the same page.

Save and reuse elements and widgets. You can save design elements and widgets in your account and reuse them on other pages. This helps you save time and keep your pages consistent across your website.

300+ templates. Elementor has pre-designed templates for a wide range of website needs and industries. If you don't trust your drag and drop design skills, simply pick one of them. You can still customize the template with the drag and drop feature, but there is no need to start from scratch.

Responsive mobile editor. It’s no longer an option to have a website that isn’t mobile responsive. Elementor makes it a point to help you customize the way your website looks on a desktop and a mobile device, so you are catering to all your website visitors, not just those visiting from a desktop computer.

Pop-up builder. The use of pop-ups is a strategic way to draw attention to a promotion, an announcement, or your email list. Elementor’s pro plan helps you make pixel-perfect popups, including advanced targeting options.

Over 90 widgets. You can choose from over 90 widgets that will help you quickly create the design elements you need to incorporate into your website. These widgets help you add things like buttons, forms, headlines, and more to your web pages.

Pros of Elementor

Here is a quick overview of the pros of Elementor. If these advantages are important to you, Elementor may be the perfect fit for you.

Rich in features. Elementor is one of the best WordPress builders on the market and has tons of different features to help you create a quality website.

Maximum layout control. Elementor's interface is extremely intuitive, and the design features are easy to use. You don't have to train yourself on how to use Elementor. You just log in and start working.

Easy to use. For the most part, Elementor’s drag and drop interface is easy to use. You can choose from different premade blocks, templates, and widgets.

Finder search tool. In the event you can’t find something easily with Elementor, you can turn your attention to the search window, type in the feature or page you’re looking for, and Elementor will direct you to it.

Always growing. Elementor’s team is always working to stay ahead of the curve by pushing out new features often.

WooCommerce builder. Elementor has a nice WooCommerce Builder in their pro package. It’s easy to design your eCommerce website without having to know how to code. Widgets you can use on your product page include an add to cart button, product price, product title, product description, product image, upsells, product rating, related products, product stock, and more.

Integrations. Elementor provides various marketing integrations that most website owners use on their sites. Integrations include AWeber, Mailchimp, Drip, ActiveCampaign, ConvertKit, HubSpot, Zapier, GetResponse, MailerLite, and MailPoet. WordPress plugins include WooCommerce, Yoast, ACF, Toolset, and PODS. Social integrations include Slack, Discord, Facebook SDK, YouTube, Vimeo, Dailymotion, SoundCloud, and Google Maps. Other integrations include Adobe Fonts, Google Fonts, Font Awesome 5, Font Awesome Pro, Custom Icon Libraries, and reCAPTCHA. There are also many 3rd party add-ons and you can build your own integrations.

Cons of Elementor

As with any website builder, there are advantages and disadvantages. Here are the cons of Elementor to consider when making your choice between Divi vs. Elementor.

Fewer templates than Divi. Elementor has only 300+ templates, compared with Divi's 800+. Even so, its templates are well designed and will help you build a beautiful website. Some people may actually consider this an advantage, because there are fewer templates to sort through and choosing one takes less time.

Outdated UI. Some reviewers say the Elementor user interface is outdated, making some features more difficult to find and use. It will be interesting to see if and how Elementor innovates its user interface in the future.

Issues with editing mode. Sometimes a page looks different in editing mode than it does on the live site, which can frustrate some users.

Margin and padding adjustability issue. When using the drag and drop builder, you can’t adjust the margin and padding, according to some reviewers.

Customer support. It can be difficult to quickly get in touch with a customer support team member and to quickly get custom solutions to your issues.

No white label. Elementor doesn’t come with a white label option.

Problems with third-party add-ons. While Elementor allows for a lot of third-party add-ons, these add-ons often cause issues.

Divi vs. Elementor: Which Will You Choose?

Regardless of which website builder you select, Divi or Elementor, you’ll need a web hosting company to park your WordPress website.

HostGator provides secure and affordable managed WordPress hosting plans that start at only $5.95 a month. Advantages include 2.5x the speed, advanced security, free migrations, a free domain, a free SSL certificate, and more.

Check out HostGator’s managed WordPress hosting now, and start building your WordPress website.

Find the post on the HostGator Blog

from HostGator Blog https://www.hostgator.com/blog/divi-vs-elementor-wordpress-page-builder/


Request with Intent: Caching Strategies in the Age of PWAs

Once upon a time, we relied on browsers to handle caching for us; as developers in those days, we had very little control. But then came Progressive Web Apps (PWAs), Service Workers, and the Cache API—and suddenly we have expansive power over what gets put in the cache and how it gets put there. We can now cache everything we want to… and therein lies a potential problem.

Media files—especially images—make up the bulk of average page weight these days, and it’s getting worse. In order to improve performance, it’s tempting to cache as much of this content as possible, but should we? In most cases, no. Even with all this newfangled technology at our fingertips, great performance still hinges on a simple rule: request only what you need and make each request as small as possible.

To provide the best possible experience for our users without abusing their network connection or their hard drive, it’s time to put a spin on some classic best practices, experiment with media caching strategies, and play around with a few Cache API tricks that Service Workers have hidden up their sleeves.

Best intentions

All those lessons we learned optimizing web pages for dial-up became super-useful again when mobile took off, and they continue to be applicable in the work we do for a global audience today. Unreliable or high latency network connections are still the norm in many parts of the world, reminding us that it’s never safe to assume a technical baseline lifts evenly or in sync with its corresponding cutting edge. And that’s the thing about performance best practices: history has borne out that approaches that are good for performance now will continue being good for performance in the future.

Before the advent of Service Workers, we could provide some instructions to browsers with respect to how long they should cache a particular resource, but that was about it. Documents and assets downloaded to a user’s machine would be dropped into a directory on their hard drive. When the browser assembled a request for a particular document or asset, it would peek in the cache first to see if it already had what it needed to possibly avoid hitting the network.

We have considerably more control over network requests and the cache these days, but that doesn’t excuse us from being thoughtful about the resources on our web pages.

Request only what you need

As I mentioned, the web today is lousy with media. Images and videos have become a dominant means of communication. They may convert well when it comes to sales and marketing, but they are hardly performant when it comes to download and rendering speed. With this in mind, each and every image (and video, etc.) should have to fight for its place on the page.

A few years back, a recipe of mine was included in a newspaper story on cooking with spirits (alcohol, not ghosts). I don’t subscribe to the print version of that paper, so when the article came out I went to the site to take a look at how it turned out. During a recent redesign, the site had decided to load all articles into a nearly full-screen modal viewbox layered on top of their homepage. This meant requesting the article required requests for all of the assets associated with the article page plus all the contents and assets for the homepage. Oh, and the homepage had video ads—plural. And, yes, they auto-played.

I popped open DevTools and discovered the page had blown past 15 MB in page weight. Tim Kadlec had recently launched What Does My Site Cost?, so I decided to check out the damage. Turns out that the actual cost to view that page for the average US-based user was more than the cost of the print version of that day’s newspaper. That’s just messed up.

Sure, I could blame the folks who built the site for doing their readers such a disservice, but the reality is that none of us go to work with the goal of worsening our users’ experiences. This could happen to any of us. We could spend days scrutinizing the performance of a page only to have some committee decide to set that carefully crafted page atop a Times Square of auto-playing video ads. Imagine how much worse things would be if we were stacking two abysmally-performing pages on top of each other!

Media can be great for drawing attention when competition is high (e.g., on the homepage of a newspaper), but when you want readers to focus on a single task (e.g., reading the actual article), its value can drop from important to “nice to have.” Yes, studies have shown that images excel at drawing eyeballs, but once a visitor is on the article page, no one cares; we’re just making it take longer to download and more expensive to access. The situation only gets worse as we shove more media into the page.

We must do everything in our power to reduce the weight of our pages, so avoid requests for things that don’t add value. For starters, if you’re writing an article about a data breach, resist the urge to include that ridiculous stock photo of some random dude in a hoodie typing on a computer in a very dark room.

Request the smallest file you can

Now that we’ve taken stock of what we do need to include, we must ask ourselves a critical question: How can we deliver it in the fastest way possible? This can be as simple as choosing the most appropriate image format for the content presented (and optimizing the heck out of it) or as complex as recreating assets entirely (for example, if switching from raster to vector imagery would be more efficient).

Offer alternate formats

When it comes to image formats, we don’t have to choose between performance and reach anymore. We can provide multiple options and let the browser decide which one to use, based on what it can handle.

You can accomplish this by offering multiple sources within a picture or video element. Start by creating multiple formats of the media asset. For example, with WebP and JPG, it’s likely that the WebP will have a smaller file size than the JPG (but check to make sure). With those alternate sources, you can drop them into a picture like this:

<picture> <source srcset="my.webp" type="image/webp"> <img src="my.jpg" alt="Descriptive text about the picture."> </picture>

Browsers that recognize the picture element will check the source element before making a decision about which image to request. If the browser supports the MIME type “image/webp,” it will kick off a request for the WebP format image. If not (or if the browser doesn’t recognize picture), it will request the JPG.

The nice thing about this approach is that you’re serving the smallest image possible to the user without having to resort to any sort of JavaScript hackery.

You can take the same approach with video files:

<video controls> <source src="my.webm" type="video/webm"> <source src="my.mp4" type="video/mp4"> <p>Your browser doesn’t support native video playback, but you can <a href="my.mp4" download>download</a> this video instead.</p> </video>

Browsers that support WebM will request the first source, whereas browsers that don’t—but do understand MP4 videos—will request the second one. Browsers that don’t support the video element will fall back to the paragraph about downloading the file.

The order of your source elements matters. Browsers will choose the first usable source, so if you specify an optimized alternative format after a more widely compatible one, the alternative format may never get picked up.
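To see why order matters, here is a simplified model of that selection rule as a JavaScript sketch. It is not how any browser is implemented. `pickSource` and the `supportedTypes` set are hypothetical stand-ins for the browser's own logic and capabilities.

```javascript
// Simplified model of how a browser walks the <source> elements of a
// <picture> or <video>: the first source with a supported type wins.
// pickSource and supportedTypes are hypothetical names for illustration.
function pickSource(sources, supportedTypes, fallback) {
  for (const source of sources) {
    // A source with no type attribute is treated as usable here.
    if (!source.type || supportedTypes.has(source.type)) {
      return source.url;
    }
  }
  // Nothing matched: use the <img> src or the fallback content.
  return fallback;
}

const browserTypes = new Set(["image/webp", "image/jpeg"]);

// Optimized format listed first: it gets picked.
console.log(
  pickSource(
    [
      { url: "my.webp", type: "image/webp" },
      { url: "my.jpg", type: "image/jpeg" },
    ],
    browserTypes,
    "my.jpg"
  )
); // → my.webp

// Widely supported format listed first: the WebP is never considered.
console.log(
  pickSource(
    [
      { url: "my.jpg", type: "image/jpeg" },
      { url: "my.webp", type: "image/webp" },
    ],
    browserTypes,
    "my.jpg"
  )
); // → my.jpg
```

Swapping the two sources is enough to make a WebP-capable browser skip the smaller file.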

Depending on your situation, you might consider bypassing this markup-based approach and handle things on the server instead. For example, if a JPG is being requested and the browser supports WebP (which is indicated in the Accept header), there’s nothing stopping you from replying with a WebP version of the resource. In fact, some CDN services—Cloudinary, for instance—come with this sort of functionality right out of the box.
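Here is a minimal sketch of that server-side swap. It assumes the server keeps a `.webp` copy alongside every `.jpg` (a hypothetical file layout) and that you call `negotiateImage` from your own request handler. This is an illustrative helper, not any CDN's actual API.

```javascript
// Minimal sketch of server-side image format negotiation. Assumes a
// hypothetical layout where every .jpg has a .webp sibling on disk;
// negotiateImage is an illustrative helper, not a CDN or framework API.
const JPG_EXT = /\.jpe?g$/i;

function negotiateImage(requestedPath, acceptHeader) {
  if (!JPG_EXT.test(requestedPath)) {
    // Only JPG requests are candidates for a WebP swap.
    return { path: requestedPath, headers: {} };
  }
  // A simple substring check; it ignores q-values in the Accept header.
  const accept = (acceptHeader || "").toLowerCase();
  const useWebP = accept.includes("image/webp");
  return {
    path: useWebP ? requestedPath.replace(JPG_EXT, ".webp") : requestedPath,
    headers: {
      "Content-Type": useWebP ? "image/webp" : "image/jpeg",
      // The body now depends on the Accept header, so caches must key on it.
      Vary: "Accept",
    },
  };
}

console.log(negotiateImage("/img/my.jpg", "image/webp,image/*,*/*;q=0.8").path); // → /img/my.webp
console.log(negotiateImage("/img/my.jpg", "image/png,image/*;q=0.8").path); // → /img/my.jpg
```

The `Vary: Accept` header matters here. Without it, a shared cache could store the WebP response and hand it to a browser that never asked for WebP.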

Offer different sizes

Formats aside, you may want to deliver alternate image sizes optimized for the current size of the browser’s viewport. After all, there’s no point loading an image that’s 3–4 times larger than the screen rendering it; that’s just wasting bandwidth. This is where responsive images come in.

Here’s an example:

<img src="medium.jpg" srcset="small.jpg 256w, medium.jpg 512w, large.jpg 1024w" sizes="(min-width: 30em) 30em, 100vw" alt="Descriptive text about the picture.">

There’s a lot going on in this super-charged img element, so I’ll break it down:

This img offers three size options for a given JPG: 256 px wide (small.jpg), 512 px wide (medium.jpg), and 1024 px wide (large.jpg). These are provided in the srcset attribute with corresponding width descriptors.

The src defines a default image source, which acts as a fallback for browsers that don’t support srcset. Your choice for the default image will likely depend on the context and general usage patterns. Often I’d recommend the smallest image be the default, but if the majority of your traffic is on older desktop browsers, you might want to go with the medium-sized image.

The sizes attribute is a presentational hint that informs the browser how the image will be rendered in different scenarios (its extrinsic size) once CSS has been applied. This particular example says that the image will be the full width of the viewport (100vw) until the viewport reaches 30 em in width (min-width: 30em), at which point the image will be 30 em wide. You can make the sizes value as complicated or as simple as you want; omitting it causes browsers to use the default value of 100vw.
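The following sketch makes the `sizes` arithmetic concrete by modeling how a browser might choose from the `srcset` above. It is a simplification. The specification leaves the final choice to the browser, which may also weigh what is already cached or current network conditions. The sketch also assumes 1em equals 16px, the usual default font size.

```javascript
// Simplified model of resolving the srcset/sizes pair from the example
// above. Browsers may choose differently (the spec leaves the final pick
// to them); this only shows the arithmetic. Assumes 1em = 16px.
const EM_PX = 16;

const candidates = [
  { url: "small.jpg", width: 256 },
  { url: "medium.jpg", width: 512 },
  { url: "large.jpg", width: 1024 },
];

// sizes="(min-width: 30em) 30em, 100vw"
function slotWidth(viewportPx) {
  return viewportPx >= 30 * EM_PX ? 30 * EM_PX : viewportPx;
}

function pickCandidate(viewportPx, devicePixelRatio) {
  // Image pixels needed to fill the slot at this pixel density.
  const needed = slotWidth(viewportPx) * devicePixelRatio;
  const sorted = [...candidates].sort((a, b) => a.width - b.width);
  // Smallest candidate that is wide enough, else the largest available.
  const match = sorted.find((c) => c.width >= needed);
  return (match || sorted[sorted.length - 1]).url;
}

console.log(pickCandidate(320, 1)); // → medium.jpg (needs 320px)
console.log(pickCandidate(1440, 2)); // → large.jpg (needs 960px)
```

On a 1440px-wide display at 2x density, the 30em (480px) slot needs 960 image pixels, so `large.jpg` is the smallest candidate that is wide enough.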

You can even combine this approach with alternate formats and crops within a single picture. 🤯

All of this is to say that you have a number of tools at your disposal for delivering fast-loading media, so use them!

Defer requests (when possible)

Years ago, Internet Explorer 11 introduced a new attribute that enabled developers to de-prioritize specific img elements to speed up page rendering: lazyload. That attribute never went anywhere, standards-wise, but it was a solid attempt to defer image loading until images are in view (or close to it) without having to involve JavaScript.

There have been countless JavaScript-based implementations of lazy loading images since then, but recently Google also took a stab at a more declarative approach, using a different attribute: loading.

The loading attribute supports three values (“auto,” “lazy,” and “eager”) to define how a resource should be brought in. For our purposes, the “lazy” value is the most interesting because it defers loading the resource until it reaches a calculated distance from the viewport.

Adding that into the mix…

<img src="medium.jpg" srcset="small.jpg 256w, medium.jpg 512w, large.jpg 1024w" sizes="(min-width: 30em) 30em, 100vw" loading="lazy" alt="Descriptive text about the picture.">

This attribute offers a bit of a performance boost in Chromium-based browsers. Hopefully it will become a standard and get picked up by other browsers in the future, but in the meantime there’s no harm in including it because browsers that don’t understand the attribute will simply ignore it.

This approach complements a media prioritization strategy really well, but before I get to that, I want to take a closer look at Service Workers.

Manipulate requests in a Service Worker

Service Workers are a special type of Web Worker with the ability to intercept, modify, and respond to all network requests via the Fetch API. They also have access to the Cache API, as well as other asynchronous client-side data stores like IndexedDB for resource storage.

When a Service Worker is installed, you can hook into that event and prime the cache with resources you want to use later. Many folks use this opportunity to squirrel away copies of global assets, including styles, scripts, logos, and the like, but you can also use it to cache images for use when network requests fail.
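Here is a minimal sketch of priming the cache at install time. The cache name `static-v1` and the URL list are hypothetical placeholders. The guard at the bottom registers the listener only when the code is actually running in a Service Worker, so `precache` itself can be exercised elsewhere.

```javascript
// Minimal sketch of priming a cache during the install event. The cache
// name and URL list are hypothetical; adjust them to your own assets.
const PRECACHE_NAME = "static-v1";
const PRECACHE_URLS = [
  "/css/main.css",
  "/js/main.js",
  "/i/logo.svg",
  "/i/fallbacks/offline.svg",
];

function precache(cacheStorage) {
  // cache.addAll() fetches every URL and stores the responses; if any
  // request fails, the whole operation rejects.
  return cacheStorage
    .open(PRECACHE_NAME)
    .then((cache) => cache.addAll(PRECACHE_URLS));
}

// Only register the listener when running inside a Service Worker.
if (
  typeof ServiceWorkerGlobalScope !== "undefined" &&
  self instanceof ServiceWorkerGlobalScope
) {
  self.addEventListener("install", (event) => {
    // waitUntil keeps the worker installing until caching finishes.
    event.waitUntil(precache(caches));
  });
}
```

Because `cache.addAll()` rejects if any single request fails, one missing file will make the install fail. That is a good reason to keep the precache list short.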

Keep a fallback image in your back pocket

Assuming you want to use a fallback in more than one networking recipe, you can set up a named function that will respond with that resource:

function respondWithFallbackImage() { return caches.match( "/i/fallbacks/offline.svg" ); }

Then, within a fetch event handler, you can use that function to provide that fallback image when requests for images fail at the network:

self.addEventListener( "fetch", event => {
  const request = event.request;
  // Not every request carries an Accept header, so guard against null.
  if ( ( request.headers.get("Accept") || "" ).includes("image") ) {
    event.respondWith(
      fetch( request, { mode: 'no-cors' } )
        .then( response => {
          return response;
        })
        .catch( respondWithFallbackImage )
    );
  }
});

When the network is available, users get the expected behavior:

Social media avatars are rendered as expected when the network is available.

But when the network is interrupted, images will be swapped automatically for a fallback, and the user experience is still acceptable:

A generic fallback avatar is rendered when the network is unavailable.

On the surface, this approach may not seem all that helpful in terms of performance since you’ve essentially added an additional image download into the mix. With this system in place, however, some pretty amazing opportunities open up to you.

Respect a user’s choice to save data

Some users reduce their data consumption by entering a “lite” mode or turning on a “data saver” feature. When this happens, browsers will often send a Save-Data header with their network requests.

Within your Service Worker, you can detect this preference and adjust your responses accordingly. First, check whether the browser reports that data saving is turned on:

let save_data = false; if ( 'connection' in navigator ) { save_data = navigator.connection.saveData; }

Then, within your fetch handler for images, you might choose to preemptively respond with the fallback image instead of going to the network at all:

self.addEventListener( "fetch", event => {
  const request = event.request;
  if ( ( request.headers.get("Accept") || "" ).includes("image") ) {
    if ( save_data ) {
      event.respondWith( respondWithFallbackImage() );
      return;
    }
    // code you saw previously
  }
});
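The `navigator.connection.saveData` check reflects the same preference that the `Save-Data` header carries. The header's value is `on` when the user has opted in. If you have the request headers in hand (on the server, for example), you can read the preference there instead. Here is a small sketch of that check. `prefersReducedData` is a hypothetical helper, and whether a given browser exposes the header on requests a Service Worker intercepts is an assumption you should verify.

```javascript
// Sketch of reading the Save-Data request header. Its value is "on" when
// the user has turned on a data-saving mode. prefersReducedData is a
// hypothetical helper; it accepts any object with a get() method, such as
// a Fetch API Headers instance.
function prefersReducedData(headers) {
  const value = headers.get("Save-Data");
  return typeof value === "string" && value.trim().toLowerCase() === "on";
}

// Example with a Headers-like object (Headers itself is case-insensitive).
const example = new Map([["Save-Data", "on"]]);
console.log(prefersReducedData(example)); // → true
```

In a fetch handler, you could combine it with the earlier flag, for example `save_data || prefersReducedData( request.headers )`.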

You could even take this a step further and tune respondWithFallbackImage() to provide alternate images based on what the original request was for. To do that you’d define several fallbacks globally in the Service Worker:

const fallback_avatar = "/i/fallbacks/avatar.svg", fallback_image = "/i/fallbacks/image.svg";

Both of those files should then be cached during the Service Worker install event:

return cache.addAll( [ fallback_avatar, fallback_image ]);

Finally, within respondWithFallbackImage() you could serve up the appropriate image based on the URL being fetched. On my site, the avatars are pulled from Webmention.io, so I test for that.

function respondWithFallbackImage( url ) {
  // Avatars come from Webmention.io; everything else gets the generic image.
  const image = /webmention\.io/.test( url ) ? fallback_avatar : fallback_image;
  return caches.match( image );
}

With that change, I’ll need to update the fetch handler to pass in request.url as an argument to respondWithFallbackImage(). Once that’s done, when the network gets interrupted I end up seeing something like this:

A webmention that contains both an avatar and an embedded image will render with two different fallbacks when the Save-Data header is present.
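One way to wire the URL-aware fallback into the fetch handler is sketched below. It assumes the two fallback constants defined above. It wraps everything in a hypothetical `makeImageHandler` factory that takes `fetch`, `caches`, and the save-data flag as arguments, so the logic can be tested outside a Service Worker.

```javascript
// Sketch of the URL-aware fallback wired into the fetch handler. The
// makeImageHandler factory is hypothetical: it takes fetch, caches, and
// the save-data flag as arguments so the logic can run outside a worker.
const fallback_avatar = "/i/fallbacks/avatar.svg";
const fallback_image = "/i/fallbacks/image.svg";

function makeImageHandler({ fetchImpl, cacheStorage, saveData }) {
  // Pick the fallback based on the URL that was originally requested.
  function respondWithFallbackImage(url) {
    const image = /webmention\.io/.test(url) ? fallback_avatar : fallback_image;
    return cacheStorage.match(image);
  }

  return function onFetch(event) {
    const request = event.request;
    // Leave non-image requests to the browser's default handling.
    if (!(request.headers.get("Accept") || "").includes("image")) return;

    if (saveData) {
      event.respondWith(respondWithFallbackImage(request.url));
      return;
    }

    event.respondWith(
      fetchImpl(request, { mode: "no-cors" })
        // Pass request.url along so the right fallback is chosen.
        .catch(() => respondWithFallbackImage(request.url))
    );
  };
}

// In the Service Worker itself:
// self.addEventListener("fetch", makeImageHandler({
//   fetchImpl: fetch, cacheStorage: caches, saveData: save_data,
// }));
```

Because the network call and the cache lookup are passed in, you can substitute stubs to check each branch (online, offline, save-data) without a browser.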

Next, we need to establish some general guidelines for handling media assets—based on the situation, of course.

The caching strategy: prioritize certain media

In my experience, media—especially images—on the web tend to fall into three categories of necessity. At one end of the spectrum are elements that don’t add meaningful value. At the other end of the spectrum are critical assets that do add value, such as charts and graphs that are essential to understanding the surrounding content. Somewhere in the middle are what I would call “nice-to-have” media. They do add value to the core experience of a page but are not critical to understanding the content.

If you consider your media with this division in mind, you can establish some general guidelines for handling each, based on the situation. In other words, a caching strategy.

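Media loading strategy, broken down by how critical an asset is to understanding an interface:

Media category | Fast connection | Save-Data | Slow connection | No network
Critical | Load media | Load media | Load media | Replace with placeholder
Nice-to-have | Load media | Replace with placeholder | Replace with placeholder | Replace with placeholder
Non-critical | Remove from content entirely | Remove from content entirely | Remove from content entirely | Remove from content entirely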
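To make the table concrete, here is one way it could be encoded as a lookup, assuming the cell values as laid out in the table. The category and connection keys are hypothetical labels. How a Service Worker would detect a "slow connection" is left open.

```javascript
// Rows are media categories, columns are network conditions.
const MEDIA_STRATEGY = {
  "critical":     { fast: "load",   saveData: "load",        slow: "load",        offline: "placeholder" },
  "nice-to-have": { fast: "load",   saveData: "placeholder", slow: "placeholder", offline: "placeholder" },
  "non-critical": { fast: "remove", saveData: "remove",      slow: "remove",      offline: "remove" }
};

// Own-property check, so inherited keys like "toString" are rejected.
const has = (obj, key) => Object.prototype.hasOwnProperty.call(obj, key);

// Return "load", "placeholder", or "remove" for a category and connection.
function mediaAction(category, connection) {
  if (!has(MEDIA_STRATEGY, category) || !has(MEDIA_STRATEGY[category], connection)) {
    throw new RangeError(`Unknown category/connection: ${category}/${connection}`);
  }
  return MEDIA_STRATEGY[category][connection];
}
```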

When it comes to disambiguating the critical from the nice-to-have, it’s helpful to have those resources organized into separate directories (or similar). That way we can add some logic into the Service Worker that can help it decide which is which. For example, on my own personal site, critical images are either self-hosted or come from the website for my book. Knowing that, I can write regular expressions that match those domains:

const high_priority = [ /aaron\-gustafson\.com/, /adaptivewebdesign\.info/ ];

With that high_priority variable defined, I can create a function that will let me know if a given image request (for example) is a high priority request or not:

function isHighPriority( url ) {
  // how many high priority links are we dealing with?
  let i = high_priority.length;
  // loop through each
  while ( i-- ) {
    // does the request URL match this regular expression?
    if ( high_priority[i].test( url ) ) {
      // yes, it’s a high priority request
      return true;
    }
  }
  // no matches, not high priority
  return false;
}
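The same check can also be written with Array.prototype.some(), which stops at the first matching pattern. This is a stylistic alternative with the same true/false result, not a change in behavior.

```javascript
const high_priority = [ /aaron\-gustafson\.com/, /adaptivewebdesign\.info/ ];

// True if any high-priority pattern matches the request URL.
function isHighPriority(url) {
  return high_priority.some(pattern => pattern.test(url));
}
```

Because the patterns are unanchored, they match anywhere in the URL, including query strings. Comparing against new URL( url ).hostname would be stricter.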

Adding support for prioritizing media requests only requires adding a new conditional to the fetch event handler, like we did with Save-Data. Your specific recipe for network and cache handling will likely differ, but here’s how I chose to mix this logic into image requests:

// Check the cache first
// Return the cached image if we have one
// If the image is not in the cache, continue
// Is this image high priority?
if ( isHighPriority( url ) ) {
  // Fetch the image
  // If the fetch succeeds, save a copy in the cache
  // If not, respond with an "offline" placeholder
// Not high priority
} else {
  // Should I save data?
  if ( save_data ) {
    // Respond with a "saving data" placeholder
  // Not saving data
  } else {
    // Fetch the image
    // If the fetch succeeds, save a copy in the cache
    // If not, respond with an "offline" placeholder
  }
}
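The outline above can be turned into a runnable function. In the sketch below every collaborator is injected, so cacheMatch, fetchAndCache, offlinePlaceholder and saveDataPlaceholder are hypothetical stand-ins rather than real APIs. Because both branches end in the same fetch-or-offline step, the high-priority check collapses into one condition.

```javascript
async function respondToImage(url, deps) {
  const {
    save_data, isHighPriority, cacheMatch, fetchAndCache,
    offlinePlaceholder, saveDataPlaceholder
  } = deps;

  // Check the cache first; return the cached image if we have one.
  const cached = await cacheMatch(url);
  if (cached) return cached;

  // Low-priority images are skipped when the user asked to save data.
  if (!isHighPriority(url) && save_data) return saveDataPlaceholder();

  // Otherwise fetch (fetchAndCache is expected to store a copy on success
  // and reject on failure) or fall back to the offline placeholder.
  try {
    return await fetchAndCache(url);
  } catch {
    return offlinePlaceholder();
  }
}
```

In a Service Worker this might be wired up as event.respondWith( respondToImage( request.url, { ... } ) ), with cacheMatch wrapping caches.match(). fetch() only rejects on network failure, not on HTTP error statuses, so a real fetchAndCache would also need to check response.ok.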

We can apply this prioritized approach to many kinds of assets. We could even use it to control which pages are served cache-first vs. network-first.

Keep the cache tidy

The ability to control which resources are cached to disk is a huge opportunity, but it also carries with it an equally huge responsibility not to abuse it.

Every caching strategy is likely to differ, at least a little bit. If we’re publishing a book online, for instance, it might make sense to cache all of the chapters, images, etc. for offline viewing. There’s a fixed amount of content and—assuming there aren’t a ton of heavy images and videos—users will benefit from not having to download each chapter separately.

On a news site, however, caching every article and photo will quickly fill up our users’ hard drives. If a site offers an indeterminate number of pages and assets, it’s critical to have a caching strategy that puts hard limits on how many resources we’re caching to disk.

One way to do this is to create separate caches for different kinds of content. The more ephemeral content caches can have strict limits on how many items can be stored. Sure, we’ll still be bound to the storage limits of the device, but do we really want our website to take up 2 GB of someone’s hard drive?

Here’s an example, again from my own site:

const sw_caches = {
  static: { name: `${version}static` },
  images: { name: `${version}images`, limit: 75 },
  pages: { name: `${version}pages`, limit: 5 },
  other: { name: `${version}other`, limit: 50 }
};

Here I’ve defined several caches, each with a name used to address it in the Cache API. Every name starts with a version prefix; the version is defined elsewhere in the Service Worker and allows me to purge all caches at once if necessary.
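The version prefix is what makes a full purge possible, but the purge itself isn't shown. A common pattern is to delete any cache that lacks the current prefix when a new worker activates. The sketch below assumes every cache name starts with the current version string; the "v2:" value is hypothetical.

```javascript
// Hypothetical version string; the real one is defined elsewhere.
const version = "v2:";

// Names of caches that don't belong to the current version.
function staleCacheNames(names, currentVersion) {
  return names.filter(name => !name.startsWith(currentVersion));
}

// In a Service Worker, delete stale caches once the new worker activates.
if (typeof ServiceWorkerGlobalScope !== "undefined" &&
    self instanceof ServiceWorkerGlobalScope) {
  self.addEventListener("activate", event => {
    event.waitUntil(
      caches.keys().then(names =>
        Promise.all(staleCacheNames(names, version).map(name => caches.delete(name)))
      )
    );
  });
}
```

Ending the version with a separator such as ":" keeps "v2" from also matching "v20". Also note that caches.keys() lists every cache for the origin, so this would remove caches created by other scripts too.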

With the exception of the static cache, which is used for static assets, every cache has a limit to the number of items that may be stored. I only cache the most recent 5 pages someone has visited, for instance. Images are limited to the most recent 75, and so on. This is an approach that Jeremy Keith outlines in his fantastic book Going Offline (which you should really read if you haven’t already—here’s a sample).

With these cache definitions in place, I can clean up my caches periodically and prune the oldest items. Here’s Jeremy’s recommended code for this approach:

function trimCache(cacheName, maxItems) {
  // Open the cache
  caches.open(cacheName)
    .then( cache => {
      // Get the keys and count them
      cache.keys()
        .then(keys => {
          // Do we have more than we should?
          if (keys.length > maxItems) {
            // Delete the oldest item and run trim again
            cache.delete(keys[0])
              .then( () => {
                trimCache(cacheName, maxItems)
              });
          }
        });
    });
}
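The recursive version above doesn't return its promise, so nothing can wait for the trim to finish. The sketch below is an async/await variant that deletes the excess entries in one pass and returns a promise. Like the original, it assumes cache.keys() lists entries oldest-first, which is the Cache API's insertion order. The optional cacheStorage parameter is a hypothetical addition so the function can be tested with a stand-in.

```javascript
async function trimCache(cacheName, maxItems, cacheStorage = globalThis.caches) {
  const cache = await cacheStorage.open(cacheName);
  const keys = await cache.keys();
  // Delete the oldest entries until the cache is at or under the limit.
  const excess = Math.max(0, keys.length - maxItems);
  for (const request of keys.slice(0, excess)) {
    await cache.delete(request);
  }
}
```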

We can trigger this code to run whenever a new page loads. Because it runs in the Service Worker, it executes on a separate thread and won’t drag down the site’s responsiveness. We trigger it by posting a message (using postMessage()) to the Service Worker from the main JavaScript thread:

// First check that Service Workers are supported and one is active
if ( "serviceWorker" in navigator && navigator.serviceWorker.controller ) {
  // Then add an event listener
  window.addEventListener( "load", function(){
    // Tell the service worker to clean up
    navigator.serviceWorker.controller.postMessage( "clean up" );
  });
}

The final step in wiring it all up is setting up the Service Worker to receive the message:

addEventListener("message", messageEvent => {
  if (messageEvent.data === "clean up") {
    // loop through the caches
    for ( let key in sw_caches ) {
      // if the cache has a limit
      if ( sw_caches[key].limit !== undefined ) {
        // trim it to that limit
        trimCache( sw_caches[key].name, sw_caches[key].limit );
      }
    }
  }
});

Here, the Service Worker listens for inbound messages and responds to the “clean up” request by running trimCache() on each of the cache buckets with a defined limit.
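The handler above starts each trimCache() call without waiting for it. Message events in a Service Worker are ExtendableMessageEvents, so one possible refinement hands the work to event.waitUntil(), which keeps the worker alive until trimming completes. This only helps if trimCache() returns a promise, and the version shown earlier does not. cleanUpCaches() is a hypothetical helper; the trim function is passed in so the routing can be tested on its own.

```javascript
// Trim every cache that defines a limit; resolves when all trims finish.
function cleanUpCaches(cacheConfig, trim) {
  const jobs = Object.values(cacheConfig)
    .filter(entry => entry.limit !== undefined)
    .map(entry => trim(entry.name, entry.limit));
  return Promise.all(jobs);
}

if (typeof ServiceWorkerGlobalScope !== "undefined" &&
    self instanceof ServiceWorkerGlobalScope) {
  self.addEventListener("message", event => {
    if (event.data === "clean up") {
      // Assumes sw_caches and a promise-returning trimCache(name, limit)
      // are defined elsewhere in this worker.
      event.waitUntil(cleanUpCaches(sw_caches, trimCache));
    }
  });
}
```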

This approach is by no means elegant, but it works. It would be far better to make decisions about purging cached responses based on how frequently each item is accessed and/or how much room it takes up on disk. (Removing cached items based purely on when they were cached isn’t nearly as useful.) Sadly, we don’t have that level of detail when it comes to inspecting the caches…yet. I’m actually working to address this limitation in the Cache API right now.
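Since the Cache API doesn't expose access data, a worker that wanted frequency-based pruning would have to record that data itself. In-memory state is lost whenever the browser stops the worker, so something persistent like IndexedDB would be needed. Below is a hypothetical sketch of the eviction choice only. Given such records, it evicts the least-frequently used entries first and breaks ties by the oldest access time. Size on disk is not considered here.

```javascript
// records: [{ url, hits, lastAccess }]; returns URLs to evict to reach maxItems.
function pickEvictions(records, maxItems) {
  const excess = records.length - maxItems;
  if (excess <= 0) return [];
  return [...records]
    .sort((a, b) => a.hits - b.hits || a.lastAccess - b.lastAccess)
    .slice(0, excess)
    .map(record => record.url);
}
```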

Your users always come first

The technologies underlying Progressive Web Apps are continuing to mature, but even if you aren’t interested in turning your site into a PWA, there’s so much you can do today to improve your users’ experiences when it comes to media. And, as with every other form of inclusive design, it starts with centering on your users who are most at risk of having an awful experience.

Draw distinctions between critical, nice-to-have, and superfluous media. Remove the cruft, then optimize the bejeezus out of each remaining asset. Serve your media in multiple formats and sizes, prioritizing the smallest versions first to make the most of high-latency, slow connections. If your users say they want to save data, respect that and have a fallback plan in place. Cache wisely and with the utmost respect for your users’ disk space. And, finally, audit your caching strategies regularly, especially when it comes to large media files.

Follow these guidelines, and every one of your users, from folks rocking a JioPhone on a rural mobile network in India to people on a high-end gaming laptop wired to a 10 Gbps fiber line in Silicon Valley, will thank you.

Request with Intent: Caching Strategies in the Age of PWAs published first on https://deskbysnafu.tumblr.com/


Monthly Web Development Update 8/2019: Strong Teams And Ethical Data Sensemaking


Anselm Hannemann

2019-08-16T13:51:00+02:00, 2019-08-16T12:19:12+00:00

What’s more powerful than a star who knows everything? Well, a team made not of stars but of people who love what they do, stand behind their company’s vision, work together, and support each other. It’s like a galaxy: not every star shines, and not every star needs to. Everyone has their place, their own strengths, their own weaknesses. Teams don’t consist only of stars; they consist of people, and the most important thing is that the work and life culture is great. So when you’re hiring, don’t shoot for the moon; look for someone who fits into your team and who encourages and supports your team’s values and members.

In terms of your own life, take some time today to take a deep breath and recall what happened this week. Go through it day by day and appreciate what you did, the negative as well as the positive. Accept that negative things happen in our lives too; without them, we wouldn’t be able to feel the good either. It’s a helpful exercise for balancing your life and for countering the feeling of “I did nothing this week” or “I was quite unproductive.” It helps you understand why you might not have worked as much as you’re used to, and that feels fine because there’s a reason for it.

News

Three weeks ago we officially exhausted the Earth’s natural resources for the year, with four months left in 2019. Earth Overshoot Day is a good indicator of where we currently stand in the fight against climate change, and it’s a great initiative by people who offer helpful advice on how we can move that date, so that one day, hopefully in the near future, overshoot day will fall no earlier than the end of the year, or even in the new year.

Chrome 76 brings the prefers-color-scheme media query (e.g. for dark mode support) and multiple simplifications for PWA installation.

UI/UX

There are times to use toggle switches and times not to. When designers misuse them, it leads to confused and frustrated users. Knowing when to use them requires an understanding of the different types of toggle states and options.

Font Awesome introduced Duotone Icons. An amazing set that is worth taking a look at.

JavaScript

Ben Frain explores the possibility of building a Progressive Web Application (PWA) without a framework. It’s an interesting article series that shows the difference between relying on frameworks by default and building things from scratch.

Web Performance

Some experiments sound silly but, in reality, they’re not: Chris Ashton used the web for a day on a 50MB budget. In Zimbabwe, for example, where 1 GB costs an average of $75.20 (ranging from $12.50 to $138.46), 50MB is incredibly expensive. So reducing your app bundle size, image sizes, and overall website cost is directly related to how happy your users are when they browse your site or use your service. If it costs them $3.76 (50MB) to access your new sports shoe teaser page, it’s unlikely that they will buy or recommend it.
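The $3.76 figure follows from simple arithmetic. The sketch below assumes 1 GB = 1,000 MB, the convention that reproduces the quoted number. dataCost() is a hypothetical helper.

```javascript
// Cost in dollars of downloading `megabytes` at `pricePerGB` dollars per GB.
function dataCost(megabytes, pricePerGB) {
  return (megabytes / 1000) * pricePerGB;
}

console.log(dataCost(50, 75.20).toFixed(2)); // prints 3.76
```

At the quoted extremes, the same 50MB would cost roughly $0.63 and $6.92.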

BBC’s Toby Cox shares how they ditched iframes in favor of Shadow DOM to improve their site performance significantly. It’s a good piece explaining the advantages and drawbacks of iframes and why adopting Shadow DOM takes time and still feels uncomfortable for most of us.

Craig Mod shares why people choose (and pay for) fast software. People are grateful for it and are easily annoyed if an app takes too long to start or has a laggy user interface.

Harry Roberts explains the details of the “time to first byte” metric and why it matters.

CSS

Yes, prefers-reduced-motion isn’t new anymore, but it’s still heavily underused on the web. Here’s how to apply it to your web application to honor a user’s request for reduced motion.

HTML & SVG

With Chrome 76 we get the loading attribute, which allows for native lazy loading of images with HTML alone. It’s great to have a handy article that explains how to use, debug, and test it on your website today.

No more custom lazy-loading code or a separate JavaScript library needed: Chrome 76 comes with native lazy loading built in.

Accessibility

The best algorithms available today still struggle to recognize black faces as well as white ones. This again shows how important it is to have diverse teams and to care about inclusiveness.

Security

Here’s a technical analysis of the Capital One hack. It’s a good read for anyone who uses cloud providers like AWS, because it all comes down to configuring accounts correctly so that hackers can’t gain access through a misconfigured cloud service user role.

Privacy

Safari introduced its Intelligent Tracking Prevention (ITP) technology a while ago. Now there’s official documentation of Safari’s ITP policy that explains how it works and what will and won’t be blocked.

SmashingMag launched a print and eBook magazine all about ethics and privacy. It contains great pieces on designing for addiction, how to improve ethics step by step, and quieting disquiet. A magazine worth reading.

Work & Life

“For a long time I believed that a strong team is made of stars — extraordinary world-class individuals who can generate and execute ideas at a level no one else can. These days, I feel that a strong team is the one that feels more like a close family than a constellation of stars. A family where everybody has a sense of predictability, trust and respect for each other. A family which deeply embodies the values the company carries and reflects these values throughout their work. But also a family where everybody feels genuinely valued, happy and ignited to create,” wrote Vitaly Friedman in a recent update, and I couldn’t agree more.