With Great Power Came No Responsibility

I'm on a 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me in NYC TONIGHT (26 Feb) with JOHN HODGMAN and at PENN STATE TOMORROW (Feb 27). More tour dates here. Mail-order signed copies from LA's Diesel Books.

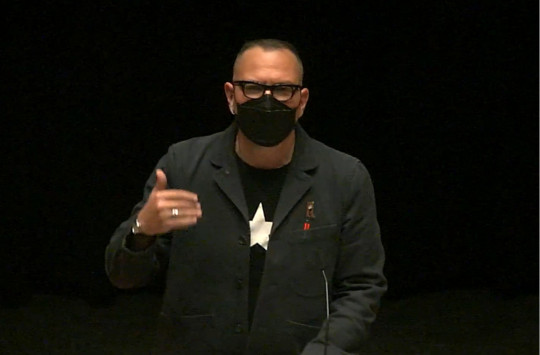

Last night, I traveled to Toronto to deliver the annual Ursula Franklin Lecture at the University of Toronto's Innis College:

The lecture was called "With Great Power Came No Responsibility: How Enshittification Conquered the 21st Century and How We Can Overthrow It." It's the latest major speech in my series of talks on the subject, which started with last year's McLuhan Lecture in Berlin:

https://pluralistic.net/2024/01/30/go-nuts-meine-kerle/#ich-bin-ein-bratapfel

And continued with a summer Defcon keynote:

https://pluralistic.net/2024/08/17/hack-the-planet/#how-about-a-nice-game-of-chess

This speech specifically addresses the unique opportunities for disenshittification created by Trump's rapid unscheduled midair disassembly of the international free trade system. The US used trade deals to force nearly every country in the world to adopt the IP laws that make enshittification possible, and maybe even inevitable. As Trump burns these trade deals to the ground, the rest of the world has an unprecedented opportunity to retaliate against American bullying by getting rid of these laws and producing the tools, devices and services that can protect every tech user (including Americans) from being ripped off by US Big Tech companies.

I'm so grateful for the chance to give this talk. I was hosted for the day by the Centre for Culture and Technology, which was founded by Marshall McLuhan, and is housed in the coach house he used for his office. The talk itself took place in Innis College, named for Harold Innis, who is definitely the thinking person's Marshall McLuhan. What's more, I was mentored by Innis's daughter, Anne Innis Dagg, a radical, brilliant feminist biologist who pretty much invented the field of giraffology:

https://pluralistic.net/2020/02/19/pluralist-19-feb-2020/#annedagg

But with all respect due to Anne and her dad, Ursula Franklin is the thinking person's Harold Innis. A brilliant scientist, activist and communicator who dedicated her life to the idea that the most important fact about a technology wasn't what it did, but who it did it for and who it did it to. Getting to work out of McLuhan's office to present a talk in Innis's theater that was named after Franklin? Swoon!

https://en.wikipedia.org/wiki/Ursula_Franklin

Here's the text of the talk, lightly edited:

I know tonight’s talk is supposed to be about decaying tech platforms, but I want to start by talking about nurses.

A January 2025 report from Groundwork Collective documents how nurses in the USA are increasingly hired through gig apps – “Uber for nurses” – so nurses never know from one day to the next whether they're going to work, or how much they'll get paid.

There's something high-tech going on here with those nurses' wages. These nursing apps – a cartel of three companies, Shiftkey, Shiftmed and Carerev – can play all kinds of games with labor pricing.

Before Shiftkey offers a nurse a shift, it purchases that worker's credit history from a data-broker. Specifically, it pays to find out how much credit-card debt the nurse is carrying, and whether it is overdue.

The more desperate the nurse's financial straits are, the lower the wage on offer. Because the more desperate you are, the less you'll accept to come and do the gruntwork of caring for the sick, the elderly, and the dying.

Now, there are lots of things going on here, and they're all terrible. What's more, they are emblematic of “enshittification,” the word I coined to describe the decay of online platforms.

When I first started writing about this, I focused on the external symptomatology of enshittification, a three-stage process:

First, the platform is good to its end users, while finding a way to lock them in.

Like Google, which minimized ads and maximized spending on engineering for search results, even as they bought their way to dominance, bribing every service or product with a search box to make it a Google search box.

So no matter what browser you used, what mobile OS you used, what carrier you had, you would always be searching on Google by default. This got so batshit that by the early 2020s, Google was spending enough money to buy a whole-ass Twitter, every year or two, just to make sure that no one ever tried a search engine that wasn't Google.

That's stage one: be good to end users, lock in end users.

Stage two is when the platform starts to abuse end users to tempt in and enrich business customers. For Google, that’s advertisers and web publishers. An ever-larger fraction of a Google results page is given over to ads, which are marked with ever-subtler, ever-smaller, ever-grayer labels. Google uses its commercial surveillance data to target ads to us.

So that's stage two: things get worse for end users and get better for business customers.

But those business customers also get locked into the platform, dependent on its end users. Once businesses are getting as little as 10% of their revenue from Google, leaving Google becomes an existential risk. We talk a lot about Google's "monopoly" power, which is derived from its dominance as a seller. But Google is also a monopsony, a powerful buyer.

So now you have Google acting as a monopolist to its users (stage one), and a monopsonist for its business customers (stage two) and here comes stage three: where Google claws back all the value in the platform, save a homeopathic residue calculated to keep end users locked in, and business customers locked to those end users.

Google becomes enshittified.

In 2019, Google had a turning point. Search had grown as much as it possibly could. More than 90% of us used Google for search, and we searched for everything. Any thought or idle question that crossed our minds, we typed into Google.

How could Google grow? There were no more users left to switch to Google. We weren't going to search for more things. What could Google do?

Well, thanks to internal memos published during last year's monopoly trial against Google, we know what they did. They made search worse. They reduced the system's accuracy so you had to search twice or more to get to the answer, thus doubling the number of queries, and doubling the number of ads.

Meanwhile, Google entered into a secret, illegal collusive arrangement with Facebook, codenamed Jedi Blue, to rig the ad market, fixing prices so advertisers paid more and publishers got less.

And that's how we get to the enshittified Google of today, where every query serves back a blob of AI slop, over five paid results tagged with the word AD in 8-point, 10% grey on white type, which is, in turn, over ten spammy links from SEO shovelware sites filled with more AI slop.

And yet, we still keep using Google, because we're locked into it. That's enshittification, from the outside. A company that's good to end users, while locking them in. Then it makes things worse for end users, to make things better for business customers, while locking them in. Then it takes all the value for itself and turns into a giant pile of shit.

Enshittification, a tragedy in three acts.

I started off focused on the outward signs of enshittification, but I think it's time we start thinking about what's going on inside the companies to make enshittification possible.

What is the technical mechanism for enshittification? I call it twiddling. Digital businesses have infinite flexibility, bequeathed to them by the marvellously flexible digital computers they run on. That means that firms can twiddle the knobs that control the fundamental aspects of their business. Every time you interact with a firm, everything is different: prices, costs, search rankings, recommendations.

Which takes me back to our nurses. This scam, where you look up the nurse's debt load and titer down the wage you offer based on it in realtime? That's twiddling. It's something you can only do with a computer. The bosses who are doing this aren't more evil than bosses of yore, they just have better tools.

Note that these aren't even tech bosses. These are health-care bosses, who happen to have tech.

Digitalization – weaving networked computers through a firm or a sector – enables this kind of twiddling that allows firms to shift value around, from end users to business customers, from business customers back to end users, and eventually, inevitably, to themselves.

And digitalization is coming to every sector – like nursing. Which means enshittification is coming to every sector – like nursing.

The legal scholar Veena Dubal coined a term to describe the twiddling that suppresses the wages of debt-burdened nurses. It's called "Algorithmic Wage Discrimination," and it follows the gig economy.

The gig economy is a major locus of enshittification, and it’s the largest tear in the membrane separating the virtual world from the real world. Gig work, where your shitty boss is a shitty app, and you aren't even allowed to call yourself an employee.

Uber invented this trick. Drivers who are picky about the jobs the app puts in front of them start to get higher wage offers. But if they yield to temptation and take some of those higher-waged options, then the wage starts to go down again, at random intervals, by small increments, designed to be below the threshold for human perception. Not so much boiling the frog as poaching it, until the Uber driver has gone into debt to buy a new car, and given up the side hustles that let them be picky about the rides they accepted. Then their wage goes down, and down, and down.

Twiddling is a crude trick done quickly. Any task that's simple but time consuming is a prime candidate for automation, and this kind of wage-theft would be unbearably tedious, labor-intensive and expensive to perform manually. No 19th century warehouse full of guys with green eyeshades slaving over ledgers could do this. You need digitalization.

Twiddling nurses' hourly wages is a perfect example of the role digitization plays in enshittification. Because this kind of thing isn't just bad for nurses – it's bad for patients, too. Do we really think that paying nurses based on how desperate they are, at a rate calculated to increase that desperation, and thus decrease the wage they are likely to work for, is going to result in nurses delivering the best care?

Do you want your catheter inserted by a nurse on food stamps, who drove an Uber until midnight the night before, and skipped breakfast this morning in order to make rent?

This is why it’s so foolish to say “If you're not paying for the product, you're the product.” “If you’re not paying for the product” ascribes a mystical power to advertising-driven services: the power to bypass our critical faculties by surveilling us, data-mining the resulting dossiers to locate our mental blind spots, and weaponizing them to get us to buy anything an advertiser is selling.

In this formulation, we are complicit in our own exploitation. By choosing to use "free" services, we invite our own exploitation by surveillance capitalists who have perfected a mind-control ray powered by the surveillance data we're voluntarily handing over by choosing ad-driven services.

The moral is that if we only went back to paying for things, instead of unrealistically demanding that everything be free, we would restore capitalism to its functional, non-surveillant state, and companies would start treating us better, because we'd be the customers, not the products.

That's why the surveillance capitalism hypothesis elevates companies like Apple as virtuous alternatives. Because Apple charges us money, rather than attention, it can focus on giving us better service, rather than exploiting us.

There's a superficially plausible logic to this. After all, in 2022, Apple updated its iOS operating system, which runs on iPhones and other mobile devices, introducing a tick box that allowed you to opt out of third-party surveillance, most notably Facebook’s.

96% of Apple customers ticked that box. The other 4% were, presumably, drunk, or Facebook employees, or Facebook employees who were drunk. Which makes sense, because if I worked for Facebook, I'd be drunk all the time.

So on the face of it, it seems like Apple isn't treating its customers like "the product." But simultaneously with this privacy measure, Apple was secretly turning on its own surveillance system for iPhone owners, which would spy on them in exactly the way Facebook had, for exactly the same purpose: to target ads to you based on the places you'd been, the things you'd searched for, the communications you'd had, the links you'd clicked.

Apple didn't ask its customers for permission to spy on them. It didn't let them opt out of this spying. It didn’t even tell them about it, and when it was caught, Apple lied about it.

It goes without saying that the $1000 Apple distraction rectangle in your pocket is something you paid for. The fact that you've paid for it doesn't stop Apple from treating you as the product. Apple treats its business customers – app vendors – like the product, screwing them out of 30 cents on every dollar they bring in, with mandatory payment processing fees that are 1,000% higher than the already extortionate industry norm.

Apple treats its end users – people who shell out a grand for a phone – like the product, spying on them to help target ads to them.

Apple treats everyone like the product.

This is what's going on with our gig-app nurses: the nurses are the product. The patients are the product. The hospitals are the product. In enshittification, "the product" is anyone who can be productized.

Fair and dignified treatment is not something you get as a customer loyalty perk, in exchange for parting with your money, rather than your attention. How do you get fair and dignified treatment? Well, I'm gonna get to that, but let's stay with our nurses for a while first.

The nurses are the product, and they're being twiddled, because they've been conscripted into the tech industry, via the digitalization of their own industry.

It's tempting to blame digitalization for this. But tech companies were not born enshittified. They spent years – decades – making pleasing products. If you're old enough to remember the launch of Google, you'll recall that, at the outset, Google was magic.

You could Ask Jeeves questions for a million years, you could load up Altavista with ten trillion boolean search operators meant to screen out low-grade results, and never come up with answers as crisp, as useful, as helpful, as the ones you'd get from a few vaguely descriptive words in a Google search-bar.

There's a reason we all switched to Google. Why so many of us bought iPhones. Why we joined our friends on Facebook. All of these services were born digital. They could have enshittified at any time. But they didn't – until they did. And they did it all at once.

If you were a nurse, and every patient that staggered into the ER had the same dreadful symptoms, you'd call the public health department and report a suspected outbreak of a new and dangerous epidemic.

Ursula Franklin held that technology's outcomes were not preordained. They are the result of deliberate choices. I like that very much, it's a very science fictional way of thinking about technology. Good science fiction isn't merely about what the technology does, but who it does it for, and who it does it to.

Those social factors are far more important than the mere technical specifications of a gadget. They're the difference between a system that warns you when you're about to drift out of your lane, and a system that tells your insurer that you nearly drifted out of your lane, so they can add $10 to your monthly premium.

They’re the difference between a spell checker that lets you know you've made a typo, and bossware that lets your manager use the number of typos you made this quarter so he can deny your bonus.

They’re the difference between an app that remembers where you parked your car, and an app that uses the location of your car as a criterion for including you in a reverse warrant for the identities of everyone in the vicinity of an anti-government protest.

I believe that enshittification is caused by changes not to technology, but to the policy environment. These are changes to the rules of the game, undertaken in living memory, by named parties, who were warned at the time about the likely outcomes of their actions, who are today very rich and respected, and face no consequences or accountability for their role in ushering in the enshittocene. They venture out into polite society without ever once wondering if someone is sizing them up for a pitchfork.

In other words: I think we created a criminogenic environment, a perfect breeding pool for the most pathogenic practices in our society, that have therefore multiplied, dominating decision-making in our firms and states, leading to a vast enshittening of everything.

And I think there's good news there, because if enshittification isn't the result of a new kind of evil person, or the great forces of history bearing down on the moment to turn everything to shit, but rather the result of specific policy choices, then we can reverse those policies, make better ones and emerge from the enshittocene, consigning the enshitternet to the scrapheap of history, a mere transitional state between the old, good internet, and a new, good internet.

I'm not going to talk about AI today, because oh my god is AI a boring, overhyped subject. But I will use a metaphor about AI, about the limited liability company, which is a kind of immortal, artificial colony organism in which human beings serve as a kind of gut flora. My colleague Charlie Stross calls corporations "slow AI.”

So you've got these slow AIs whose guts are teeming with people, and the AI's imperative, the paperclip it wants to maximize, is profit. To maximize profits, you charge as much as you can, you pay your workers and suppliers as little as you can, you spend as little as possible on safety and quality.

Every dollar you don't spend on suppliers, workers, quality or safety is a dollar that can go to executives and shareholders. So there's a simple model of the corporation that could maximize its profits by charging infinity dollars, while paying nothing to its workers or suppliers, and ignoring quality and safety.

But that corporation wouldn't make any money, for the obvious reasons that none of us would buy what it was selling, and no one would work for it or supply it with goods. These constraints act as disciplining forces that tamp down the AI's impulse to charge infinity and pay nothing.

In tech, we have four of these constraints, anti-enshittificatory sources of discipline that make products and services better, pay workers more, and keep executives’ and shareholders' wealth from growing at the expense of customers, suppliers and labor.

The first of these constraints is markets. All other things being equal, a business that charges more and delivers less will lose customers to firms that are more generous about sharing value with workers, customers and suppliers.

This is the bedrock of capitalist theory, and it's the ideological basis for competition law, what our American cousins call "antitrust law."

The first antitrust law was 1890's Sherman Act, whose sponsor, Senator John Sherman, stumped for it from the senate floor, saying:

If we will not endure a King as a political power we should not endure a King over the production, transportation, and sale of the necessaries of life. If we would not submit to an emperor we should not submit to an autocrat of trade with power to prevent competition and to fix the price of any commodity.

Senator Sherman was reflecting the outrage of the antimonopolist movement of the day, when proprietors of monopolistic firms assumed the role of dictators, with the power to decide who would work, who would starve, what could be sold, and what it cost.

Lacking competitors, they were too big to fail, too big to jail, and too big to care. As Lily Tomlin used to put it in her spoof AT&T ads on SNL: "We don't care. We don't have to. We're the phone company.”

So what happened to the disciplining force of competition? We killed it. Starting 40-some years ago, the Reaganomic views of the Chicago School economists transformed antitrust. They threw out John Sherman's idea that we need to keep companies competitive to prevent the emergence of "autocrats of trade," and installed the idea that monopolies are efficient.

In other words, if Google has a 90% search market share, which it does, then we must infer that Google is the best search engine ever, and the best search engine possible. The only reason a better search engine hasn't stepped in is that Google is so skilled, so efficient, that there is no conceivable way to improve upon it.

We can tell that Google is the best because it has a monopoly, and we can tell that the monopoly is good because Google is the best.

So 40 years ago, the US – and its major trading partners – adopted an explicitly pro-monopoly competition policy.

Now, you'll be glad to hear that this isn't what happened to Canada. The US Trade Rep didn't come here and force us to neuter our competition laws. But don't get smug! The reason that didn't happen is that it didn't have to. Because Canada had no competition law to speak of, and never has.

In its entire history, the Competition Bureau has challenged three mergers, and it has halted precisely zero mergers, which is how we've ended up with a country that is beholden to the most mediocre plutocrats imaginable like the Irvings, the Westons, the Stronachs, the McCains and the Rogerses.

The only reason these chinless wonders were able to conquer this country is that the Americans had been crushing their monopolists before they could conquer the US and move on to us. But 40 years ago, the rest of the world adopted the Chicago School's pro-monopoly “consumer welfare standard,” and we got…monopolies.

Monopolies in pharma, beer, glass bottles, vitamin C, athletic shoes, microchips, cars, mattresses, eyeglasses, and, of course, professional wrestling.

Remember: these are specific policies adopted in living memory, by named individuals, who were warned, and got rich, and never faced consequences. The economists who conceived of these policies are still around today, polishing their fake Nobel prizes, teaching at elite schools, making millions consulting for blue-chip firms.

When we confront them with the wreckage their policies created, they protest their innocence, maintaining – with a straight face – that there's no way to affirmatively connect pro-monopoly policies with the rise of monopolies.

It's like we used to put down rat poison and we didn't have a rat problem. Then these guys made us stop, and now rats are chewing our faces off, and they're making wide innocent eyes, saying, "How can you be sure that our anti-rat-poison policies are connected to global rat conquest? Maybe this is simply the Time of the Rat! Maybe sunspots caused rats to become more fecund than at any time in history! And if they bought the rat poison factories and shut them all down, well, so what of it? Shutting down rat poison factories after you've decided to stop putting down rat poison is an economically rational, Pareto-optimal decision."

Markets don't discipline tech companies because they don't compete with rivals, they buy them. That's a quote, from Mark Zuckerberg: “It is better to buy than to compete.”

Which is why Mark Zuckerberg bought Instagram for a billion dollars, even though it only had 12 employees and 25m users. As he wrote in a spectacularly ill-advised middle-of-the-night email to his CFO, he had to buy Instagram, because Facebook users were leaving Facebook for Instagram. By buying Instagram, Zuck ensured that anyone who left Facebook – the platform – would still be a prisoner of Facebook – the company.

Despite the fact that Zuckerberg put this confession in writing, the Obama administration let him go ahead with the merger, because every government, of every political stripe, for 40 years, adopted the posture that monopolies were efficient.

Now, think about our twiddled, immiserated nurses. Hospitals are among the most consolidated sectors in the US. First, we deregulated pharma mergers, and the pharma companies gobbled each other up at a rate of knots, and they jacked up the price of drugs. So hospitals also merged to monopoly, a defensive maneuver that let a single hospital chain corner the majority of a region or city and say to the pharma companies, "either you make your products cheaper, or you can't sell them to any of our hospitals."

Of course, once this mission was accomplished, the hospitals started screwing the insurers, who staged their own incestuous orgy, buying and merging until most Americans have just three or two insurance options. This let the insurers fight back against the hospitals, but left patients and health care workers defenseless against the consolidated power of hospitals, pharma companies, pharmacy benefit managers, group purchasing organizations, and other health industry cartels, duopolies and monopolies.

Which is why nurses end up signing on to work for hospitals that use these ghastly apps. Remember, there's just three of these apps, replacing dozens of staffing agencies that once competed for nurses' labor.

Meanwhile, on the patient side, competition has never exercised discipline. No one ever shopped around for a cheaper ambulance or a better ER while they were having a heart attack. The price that people are willing to pay to not die is “everything they have.”

So you have this sector that has no business being a commercial enterprise in the first place, losing what little discipline they faced from competition, paving the way for enshittification.

But I said there are four forces that discipline companies. The second one of these forces is regulation, discipline imposed by states.

It’s a mistake to see market discipline and state discipline as two isolated realms. They are intimately connected. Because competition is a necessary condition for effective regulation.

Let me put this in terms that even the most ideological libertarians can understand. Say you think there should be precisely one regulation that governments should enforce: honoring contracts. For the government to serve as referee in that game, it must have the power to compel the players to honor their contracts. Which means that the smallest government you can have is determined by the largest corporation you're willing to permit.

So even if you're the kind of Musk-addled libertarian who can no longer open your copy of Atlas Shrugged because the pages are all stuck together, who pines for markets for human kidneys, and demands the right to sell yourself into slavery, you should still want a robust antitrust regime, so that these contracts can be enforced.

When a sector cartelizes, when it collapses into oligarchy, when the internet turns into "five giant websites, each filled with screenshots of the other four," then it captures its regulators.

After all, a sector with 100 competing companies is a rabble, at each others' throats. They can't agree on anything, especially how they're going to lobby.

While a sector of five companies – or four – or three – or two – or one – is a cartel, a racket, a conspiracy in waiting. A sector that has been boiled down to a mere handful of firms can agree on a common lobbying position.

What's more, they are so insulated from "wasteful competition" that they are aslosh in cash that they can mobilize to make their regulatory preferences into regulations. In other words, they can capture their regulators.

“Regulatory capture" may sound abstract and complicated, so let me put it in concrete terms. In the UK, the antitrust regulator is called the Competition and Markets Authority, run – until recently – by Marcus Bokkerink. The CMA has been one of the world's most effective investigators and regulators of Big Tech fuckery.

Last month, UK PM Keir Starmer fired Bokkerink and replaced him with Doug Gurr, the former head of Amazon UK. Hey, Starmer, the henhouse is on the line, they want their fox back.

But back to our nurses: there are plenty of examples of regulatory capture lurking in that example, but I'm going to pick the most egregious one, the fact that there are data brokers who will sell you information about the credit card debts of random Americans.

This is because the US Congress hasn't passed a new consumer privacy law since 1988, when Ronald Reagan signed a law called the Video Privacy Protection Act that bans video store clerks from telling newspapers which VHS cassettes you took home. The fact that Congress hasn't updated Americans' privacy protections since Die Hard was in theaters isn't a coincidence or an oversight. It is the expensively purchased inaction of a heavily concentrated – and thus wildly profitable – privacy-invasion industry that has monetized the abuse of human rights at unimaginable scale.

The coalition in favor of keeping privacy law frozen since the season finale of St Elsewhere keeps growing, because there is an unbounded set of ways to transform the systematic invasion of our human rights into cash. There's a direct line from this phenomenon to nurses whose paychecks go down when they can't pay their credit-card bills.

So competition is dead, regulation is dead, and companies aren't disciplined by markets or by states.

But there are four forces that discipline firms, contributing to an inhospitable environment for the reproduction of sociopathic, enshittifying monsters.

So let's talk about those other two forces. The first is interoperability, the principle of two or more things working together. Like, you can put anyone's shoelaces in your shoes, anyone's gas in your gas tank, and anyone's lightbulbs in your light-socket. In the non-digital world, interop takes a lot of work, you have to agree on the direction, pitch, diameter, voltage, amperage and wattage for that light socket, or someone's gonna get their hand blown off.

But in the digital world, interop is built in, because there's only one kind of computer we know how to make, the Turing-complete, universal, von Neumann machine, a computing machine capable of executing every valid program.

Which means that for any enshittifying program, there's a counterenshittificatory program waiting to be run. When HP writes a program to ensure that its printers reject third-party ink, someone else can write a program to disable that checking.

For gig workers, antienshittificatory apps can do yeoman duty. For example, Indonesian gig drivers formed co-ops that commission hackers to write modifications for their dispatch apps. The taxi app, for instance, won't book a driver to pick someone up at a train station unless they're right outside, but when the big trains pull in, that's a nightmare scene of total, lethal chaos.

So drivers have an app that lets them spoof their GPS, which lets them park up around the corner, but have the app tell their bosses that they're right out front of the station. When a fare arrives, they can zip around and pick them up, without contributing to the stationside mishegas.

In the USA, a company called Para shipped an app to help Doordash drivers get paid more. You see, Doordash drivers make most of their money on tips, and the Doordash driver app hides the tip amount until you accept a job, meaning you don't know whether you're accepting a job that pays $1.50 or $11.50 with tip, until you agree to take it. So Para made an app that extracted the tip amount and showed it to drivers before they clocked on.

But Doordash shut it down, because in America, apps like Para are illegal. In 1998, Bill Clinton signed a law called the Digital Millennium Copyright Act, and section 1201 of the DMCA makes it a felony to "bypass an access control for a copyrighted work," with penalties of $500k and a 5-year prison sentence for a first offense. So just the act of reverse-engineering an app like the Doordash app is a potential felony, which is why companies are so desperately horny to get you to use their apps rather than their websites.

The web is open, apps are closed. The majority of web users have installed an ad blocker (which is also a privacy blocker). But no one installs an ad blocker for an app, because it's a felony to distribute that tool, because you have to reverse-engineer the app to make it. An app is just a website wrapped in enough IP so that the company that made it can send you to prison if you dare to modify it so that it serves your interests rather than theirs.

Around the world, we have enacted a thicket of laws, we call “IP laws,” that make it illegal to modify services, products, and devices, so that they serve your interests, rather than the interests of the shareholders.

Like I said, these laws were enacted in living memory, by people who are among us, who were warned about the obvious, eminently foreseeable consequences of their reckless plans, who did it anyway.

Back in 2010, two ministers from Stephen Harper's government decided to copy-paste America's Digital Millennium Copyright Act into Canadian law. They consulted on the proposal to make it illegal to reverse engineer and modify services, products and devices, and they got an earful! 6,138 Canadians sent in negative comments on the consultation. They warned that making it illegal to bypass digital locks would interfere with repair of devices as diverse as tractors, cars, and medical equipment, from ventilators to insulin pumps.

These Canadians warned that laws banning tampering with digital locks would let American tech giants corner digital markets, forcing us to buy our apps and games from American app stores, that could cream off any commission they chose to levy. They warned that these laws were a gift to monopolists who wanted to jack up the price of ink; that these copyright laws, far from serving Canadian artists would lock us to American platforms. Because every time someone in our audience bought a book, a song, a game, a video, that was locked to an American app, it could never be unlocked.

So if we, the creative workers of Canada, tried to migrate to a Canadian store, our audience couldn't come with us. They couldn't move their purchases from the US app to a Canadian one.

6,138 Canadians told them this, while just 54 respondents sided with Heritage Minister James Moore and Industry Minister Tony Clement. Then, James Moore gave a speech, at the International Chamber of Commerce meeting here in Toronto, where he said he would only be listening to the 54 cranks who supported his terrible ideas, on the grounds that the 6,138 people who disagreed with him were "babyish…radical extremists."

So in 2012, we copied America's terrible digital locks law into the Canadian statute book, and now we live in James Moore and Tony Clement's world, where it is illegal to tamper with a digital lock. So if a company puts a digital lock on its product they can do anything behind that lock, and it's a crime to undo it.

For example, if HP puts a digital lock on its printers that verifies that you're not using third party ink cartridges, or refilling an HP cartridge, it's a crime to bypass that lock and use third party ink. Which is how HP has gotten away with ratcheting the price of ink up, and up, and up.

Printer ink is now the most expensive fluid that a civilian can purchase without a special permit. It's colored water that costs $10k/gallon, which means that you print out your grocery lists with liquid that costs more than the semen of a Kentucky Derby-winning stallion.

That's the world we got from Clement and Moore, in living memory, after they were warned, and did it anyway. The world where farmers can't fix their tractors, where independent mechanics can't fix your car, where hospitals during the pandemic lockdowns couldn't service their failing ventilators, where every time a Canadian iPhone user buys an app from a Canadian software author, every dollar they spend takes a round trip through Apple HQ in Cupertino, California and comes back 30 cents lighter.

Let me remind you this is the world where a nurse can't get a counter-app, a plug-in, for the “Uber for nurses” app they have to use to get work, that lets them coordinate with other nurses to refuse shifts until the wages on offer rise to a common level or to block surveillance of their movements and activity.

Interoperability was a major disciplining force on tech firms. After all, if you make the ads on your website sufficiently obnoxious, some fraction of your users will install an ad-blocker, and you will never earn another penny from them. Because no one in the history of ad-blockers has ever uninstalled an ad-blocker. But once it's illegal to make an ad-blocker, there's no reason not to make the ads as disgusting, invasive, obnoxious as you can, to shift all the value from the end user to shareholders and executives.

So we get monopolies and monopolies capture their regulators, and they can ignore the laws they don't like, and prevent laws that might interfere with their predatory conduct – like privacy laws – from being passed. They get new laws passed, laws that let them wield governmental power to prevent other companies from entering the market.

So three of the four forces are neutralized: competition, regulation, and interoperability. That left just one disciplining force holding enshittification at bay: labor.

Tech workers are a strange sort of workforce, because they have historically been very powerful, able to command high wages and respect, but they did it without joining unions. Union density in tech is abysmal, almost undetectable. Tech workers' power didn't come from solidarity, it came from scarcity. There weren't enough workers to fill the jobs going begging, and tech workers are unfathomably productive. Even with the sky-high salaries tech workers commanded, every hour of labor they put in generated far more value for their employers.

Faced with a tight labor market, and the ability to turn every hour of tech worker overtime into gold, tech bosses pulled out all the stops to motivate that workforce. They appealed to workers' sense of mission, convinced them they were holy warriors, ushering in a new digital age. Google promised them they would "organize the world's information and make it useful." Facebook promised them they would "make the world more open and connected."

There's a name for this tactic: the librarian Fobazi Ettarh calls it "vocational awe." That’s where an appeal to a sense of mission and pride is used to motivate workers to work for longer hours and worse pay.

There are all kinds of professions that run on vocational awe: teaching, daycares and eldercare, and, of course, nursing.

Techies are different from those other workers though, because they've historically been incredibly scarce, which meant that while bosses could motivate them to work on projects they believed in, for endless hours, the minute bosses ordered them to enshittify the projects they'd missed their mothers' funerals to ship on deadline these workers would tell their bosses to fuck off.

If their bosses persisted in these demands, the techies would walk off the job, cross the street, and get a better job the same day.

So for many years, tech workers were the fourth and final constraint, holding the line after the constraints of competition, regulation and interop slipped away. But then came the mass tech layoffs. 260,000 in 2023; 150,000 in 2024; tens of thousands this year, with Facebook planning a 5% headcount massacre while doubling its executive bonuses.

Tech workers can't tell their bosses to go fuck themselves anymore, because there's ten other workers waiting to take their jobs.

Now, I promised I wouldn't talk about AI, but I have to break that promise a little, just to point out that the reason tech bosses are so horny for AI is because they think it'll let them fire tech workers and replace them with pliant chatbots who'll never tell them to fuck off.

So that's where enshittification comes from: multiple changes to the environment. The fourfold collapse of competition, regulation, interoperability and worker power creates an enshittogenic environment, where the greediest, most sociopathic elements in the body corporate thrive at the expense of those elements that act as moderators of their enshittificatory impulses.

We can try to cure these corporations. We can use antitrust law to break them up, fine them, force strictures upon them. But until we fix the environment, the contagion will spread to other firms.

So let's talk about how we create a hostile environment for enshittifiers, so the population and importance of enshittifying agents in companies dwindles to 1990s levels. We won't get rid of these elements. So long as the profit motive is intact, there will be people whose pursuit of profit is pathological, unmoderated by shame or decency. But we can change the environment so that these don't dominate our lives.

Let's talk about antitrust. After 40 years of antitrust decline, this decade has seen a massive, global resurgence of antitrust vigor, one that comes in both left- and right-wing flavors.

Over the past four years, the Biden administration’s trustbusters at the Federal Trade Commission, Department of Justice and Consumer Financial Protection Bureau did more antitrust enforcement than all their predecessors for the past 40 years combined.

There's certainly factions of the Trump administration that are hostile to this agenda but Trump's antitrust enforcers at the DoJ and FTC now say that they'll preserve and enforce Biden's new merger guidelines, which stop companies from buying each other up, and they've already filed suit to block a giant tech merger.

Of course, last summer a judge found Google guilty of monopolization, and now they're facing a breakup, which explains why they've been so generous and friendly to the Trump administration.

Meanwhile, in Canada, our toothless Competition Bureau got fitted for a set of titanium dentures last June, when Bill C59 passed Parliament, granting sweeping new powers to our antitrust regulator.

It's true that UK PM Keir Starmer just fired the head of the UK Competition and Markets Authority and replaced him with the ex-boss of Amazon UK. But the thing that makes that so tragic is that the UK CMA had been doing astonishingly great work under various conservative governments.

In the EU, they've passed the Digital Markets Act and the Digital Services Act, and they're going after Big Tech with both barrels. Other countries around the world – Australia, Germany, France, Japan, South Korea and China (yes, China!) – have passed new antitrust laws, and launched major antitrust enforcement actions, often collaborating with each other.

So you have the UK Competition and Markets Authority using its investigatory powers to research and publish a deep market study on Apple's abusive 30% app tax, and then the EU uses that report as a roadmap for fining Apple, and then banning Apple's payments monopoly under new regulations. Then South Korean and Japanese trustbusters translate the EU's case and win nearly identical cases in their courts.

What about regulatory capture? Well, we're starting to see regulators get smarter about reining in Big Tech. For example, the EU's Digital Markets Act and Digital Services Act were designed to bypass the national courts of EU member states, especially Ireland, the tax-haven where US tech companies pretend to have their EU headquarters.

The thing about tax havens is that they always turn into crime havens, because if Apple can pretend to be Irish this week, it can pretend to be Maltese or Cypriot or Luxembourgeois next week. So Ireland has to let US Big Tech companies ignore EU privacy laws and other regulations, or it'll lose them to sleazier, more biddable competitor nations.

So from now on, EU tech regulation is getting enforced in the EU's federal courts, not in national courts, treating the captured Irish courts as damage and routing around them.

Canada needs to strengthen its own tech regulation enforcement, unwinding monopolistic mergers from the likes of Bell and Rogers, but most of all, Canada needs to pursue an interoperability agenda.

Last year, Canada passed two very exciting bills: Bill C244, a national Right to Repair law; and Bill C294, an interoperability law. Nominally, both of these laws allow Canadians to fix everything from tractors to insulin pumps, and to modify the software in their devices from games consoles to printers, so they will work with third party app stores, consumables and add-ons.

However, these bills are essentially useless, because these bills don’t permit Canadians to acquire tools to break digital locks. So you can modify your printer to accept third party ink, or interpret a car's diagnostic codes so any mechanic can fix it, but only if there isn't a digital lock stopping you from doing so, because giving someone a tool to break a digital lock remains illegal thanks to the law that James Moore and Tony Clement shoved down the nation's throat in 2012.

And every single printer, smart speaker, car, tractor, appliance, medical implant and hospital medical device has a digital lock that stops you from fixing it, modifying it, or using third party parts, software, or consumables in it.

Which means that these two landmark laws on repair and interop are useless. So why not get rid of the 2012 law that bans breaking digital locks? Because these laws are part of our trade agreement with the USA. This is a law needed to maintain tariff-free access to US markets.

I don’t know if you've heard, but Donald Trump is going to impose a 25%, across-the-board tariff against Canadian exports. Trudeau's response is to impose retaliatory tariffs, which will make every American product that Canadians buy 25% more expensive. This is a very weird way to punish America!

You know what would be better? Abolish the Canadian laws that protect US Big Tech companies from Canadian competition. Make it legal to reverse-engineer, jailbreak and modify American technology products and services. Don't ask Facebook to pay a link tax to Canadian newspapers, make it legal to jailbreak all of Meta's apps and block all the ads in them, so Mark Zuckerberg doesn't make a dime off of us.

Make it legal for Canadian mechanics to jailbreak your Tesla and unlock every subscription feature, like autopilot and full access to your battery, for one price, forever. So you get more out of your car, and when you sell it, the next owner continues to enjoy those features, meaning they'll pay more for your used car.

That's how you hurt Elon Musk: not by being performatively appalled at his Nazi salutes. That doesn't cost him a dime. He loves the attention. No! Strike at the rent-extracting, insanely high-margin aftermarket subscriptions he relies on for his Swastikar business. Kick that guy right in the dongle!

Let Canadians stand up a Canadian app store for Apple devices, one that charges 3% to process transactions, not 30%. Then, every Canadian news outlet that sells subscriptions through an app, and every Canadian software author, musician and writer who sells through a mobile platform gets a 25% increase in revenues overnight, without signing up a single new customer.
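Here's a rough sketch of the arithmetic behind that comparison. It assumes the store's commission is the only thing deducted from the sale price, and the function names are purely illustrative. Per $100 of sales, a seller keeps about $70 at a 30% commission and about $97 at 3%. That's 27 more dollars per $100 sold, which is where a round figure in the neighborhood of 25% comes from.

```python
def seller_net(gross_sales: float, commission_rate: float) -> float:
    """What the seller keeps after the store takes its commission."""
    return gross_sales * (1.0 - commission_rate)


def extra_per_100(old_rate: float, new_rate: float) -> float:
    """Additional dollars kept per $100 of sales when the commission drops."""
    return seller_net(100.0, new_rate) - seller_net(100.0, old_rate)


def relative_gain(old_rate: float, new_rate: float) -> float:
    """The same change, measured against the seller's previous take-home."""
    old_net = seller_net(100.0, old_rate)
    return (seller_net(100.0, new_rate) - old_net) / old_net


# Assumed rates taken from the comparison above: 30% versus 3%.
extra = extra_per_100(0.30, 0.03)
gain = relative_gain(0.30, 0.03)
```

Measured against what the seller took home before, the improvement is larger still, since the same 27 dollars is being compared to 70 rather than 100.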

But we can sign up new customers, by selling jailbreaking software and access to Canadian app stores, for every mobile device and games console to everyone in the world, and by pitching every games publisher and app maker on selling in the Canadian app store to customers anywhere without paying a 30% vig to American big tech companies.

We could sell every mechanic in the world a $100/month subscription to a universal diagnostic tool. Every farmer in the world could buy a kit that would let them fix their own John Deere tractors without paying a $200 callout charge for a Deere technician who inspects the repair the farmer is expected to perform.

They'd beat a path to our door. Canada could become a tech export powerhouse, while making everything cheaper for Canadian tech users, while making everything more profitable for anyone who sells media or software in an online store. And – this is the best part – it’s a frontal assault on the largest, most profitable US companies, the companies that are single-handedly keeping the S&P 500 in the black, striking directly at their most profitable lines of business, taking the revenues from those ripoff scams from hundreds of billions to zero, overnight, globally.

We don't have to stop at exporting reasonably priced pharmaceuticals to Americans! We could export the extremely lucrative tools of technological liberation to our American friends, too.

That's how you win a trade-war.

What about workers? Here we have good news and bad news.

The good news is that public approval for unions is at a high mark last seen in the early 1970s, and more workers want to join a union than at any time in generations, and unions themselves are sitting on record-breaking cash reserves they could be using to organize those workers.

But here's the bad news. The unions spent the Biden years, when they had the most favorable regulatory environment since the Carter administration, when public support for unions was at an all-time high, when more workers than ever wanted to join a union, when they had more money than ever to spend on unionizing those workers, doing fuck all. They allocated mere pittances to union organizing efforts, with the result that we finished the Biden years with fewer unionized workers than we started them with.

Then we got Trump, who illegally fired National Labor Relations Board member Gwynne Wilcox, leaving the NLRB without a quorum and thus unable to act on unfair labor practices or to certify union elections.

This is terrible. But it’s not game over. Trump fired the referees, and he thinks that this means the game has ended. But here's the thing: firing the referee doesn't end the game, it just means we're throwing out the rules. Trump thinks that labor law creates unions, but he's wrong. Unions are why we have labor law. Long before unions were legal, we had unions, who fought goons and ginks and company finks in pitched battles in the streets.

That illegal solidarity resulted in the passage of labor law, which legalized unions. Labor law is passed because workers build power through solidarity. Law doesn't create that solidarity, it merely gives it a formal basis in law. Strip away that formal basis, and the worker power remains.

Worker power is the answer to vocational awe. After all, it's good for you and your fellow workers to feel a sense of mission about your jobs. If you feel that sense of mission, if you feel the duty to protect your users, your patients, your patrons, your students, a union lets you fulfill that duty.

We saw that in 2023 when Doug Ford promised to destroy the power of Ontario's public workers. Workers across the province rose up, promising a general strike, and Doug Ford folded like one of his cheap suits. Workers kicked the shit out of him, and we'll do it again. Promises made, promises kept.

The unscheduled midair disassembly of American labor law means that workers can have each others' backs again. Tech workers need other workers' help, because tech workers aren't scarce anymore, not after a half-million layoffs. Which means tech bosses aren't afraid of them anymore.

We know how tech bosses treat workers they aren't afraid of. Look at Jeff Bezos: the workers in his warehouses are injured on the job at 3 times the national rate, his delivery drivers have to pee in bottles, and they are monitored by AI cameras that snitch on them if their eyeballs aren't in the prescribed orientation or if their mouth is open too often while they drive, because policy forbids singing along to the radio.

By contrast, Amazon coders get to show up for work with pink mohawks, facial piercings, and black t-shirts that say things their bosses don't understand. They get to pee whenever they want. Jeff Bezos isn't sentimental about tech workers, nor does he harbor a particularized hatred of warehouse workers and delivery drivers. He treats his workers as terribly as he can get away with. That means that the pee bottles are coming for the coders, too.

It's not just Amazon, of course. Take Apple. Tim Cook was elevated to CEO in 2011. Apple's board chose him to succeed founder Steve Jobs because he is the guy who figured out how to shift Apple's production to contract manufacturers in China, without skimping on quality assurance, or suffering leaks of product specifications ahead of the company's legendary showy launches.

Today, Apple's products are made in a gigantic Foxconn factory in Zhengzhou nicknamed "iPhone City.” Indeed, these devices arrive in shipping containers at the Port of Los Angeles in a state of pristine perfection, manufactured to the finest tolerances, and free of any PR leaks.

To achieve this miraculous supply chain, all Tim Cook had to do was to make iPhone City a living hell, a place that is so horrific to work that they had to install suicide nets around the worker dorms to catch the plummeting bodies of workers who were so brutalized by Tim Cook's sweatshop that they attempted to take their own lives.

Tim Cook is also not sentimentally attached to tech workers, nor is he hostile to Chinese assembly line workers. He just treats his workers as badly as he can get away with, and with mass layoffs in the tech sector he can treat his coders much, much worse.

How do tech workers get unions? Well, there are tech-specific organizations like Tech Solidarity and the Tech Workers Coalition. But tech workers will only get unions by having solidarity with other workers and receiving solidarity back from them. We all need to support every union. All workers need to have each other's backs.

We are entering a period of omnishambolic polycrisis. The ominous rumble of climate change, authoritarianism, genocide, xenophobia and transphobia has turned into an avalanche. The perpetrators of these crimes against humanity have weaponized the internet, colonizing the 21st century's digital nervous system, using it to attack its host, threatening civilization itself.

The enshitternet was purpose-built for this kind of apocalyptic co-option, organized around giant corporations who will trade a habitable planet and human rights for a three percent tax cut, who default us all into twiddle-friendly algorithmic feeds, and who block the interoperability that would let us escape their clutches, with the backing of powerful governments whom they can call upon to "protect their IP rights."

It didn't have to be this way. The enshitternet was not inevitable. It was the product of specific policy choices, made in living memory, by named individuals.

No one came down off a mountain with two stone tablets, intoning Tony Clement, James Moore: Thou shalt make it a crime for Canadians to jailbreak their phones. Those guys chose enshittification, throwing away thousands of comments from Canadians who warned them what would come of it.

We don't have to be eternal prisoners of the catastrophic policy blunders of mediocre Tory ministers. As the omnicrisis polyshambles unfolds around us, we have the means, motive and opportunity to craft Canadian policies that bolster our sovereignty, protect our rights, and help us to set every technology user, in every country (including the USA) free.

The Trump presidency is an existential crisis but it also presents opportunities. When life gives you SARS, you make sarsaparilla. We once had an old, good internet, whose major defect was that it required too much technical expertise to use, so all our normie friends were excluded from that wondrous playground.

Web 2.0's online services had greased slides that made it easy for anyone to get online, but escaping from those Web 2.0 walled gardens was like climbing out of a greased pit. A new, good internet is possible, and necessary. We can build it, with all the technological self-determination of the old, good internet, and the ease of use of Web 2.0.

A place where we can find each other, coordinate and mobilize to resist and survive climate collapse, fascism, genocide and authoritarianism. We can build that new, good internet, and we must.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/02/26/ursula-franklin/#enshittification-eh

#pluralistic#bill c-11#canada#cdnpoli#Centre for Culture and Technology#enshittification#groundwork collective#innis college#jailbreak all the things#james moore#nurses#nursing#speeches#tariff wars#tariffs#technological self-determination#tony clement#toronto#u of t#university of toronto#ursula franklin#ursula franklin lecture

Text

This! February 2025

[A monthly link list of recommended articles, videos, podcasts, photos, toots … you name it] [Videos] 2025 Ursula Franklin Lecture: Cory Doctorow Molly Crabapple & Keith LaMar: The Injustice of Justice, A Short Film Animation The Far Right Is Rising in the Land of ‘Never Again [nyt] – German comedian Böhmi (and wannabe John Oliver) on the stakes of the recent election in Germany. Trump’s Plan…

Text

In honor of my successful midterm exam in my Digital Arts and Culture course (the lecture part), I wanted to share some of the fresh ideas I was introduced to:

1. The Nature of Technology - I don’t have a real quote for this, but my professor described someone saying how ‘Fire is humanity’s second stomach’ (or 0th, considering that food is usually cooked before we eat it) or something like that. So really early humans didn’t invent a new thing, but recreated something that was already inside us. Isn’t that cool?!?

2. The False Dichotomy between the Arts and Sciences - In addition to being a new idea that makes life easier, you could also look to the late Ursula Franklin to define Technology, who said “Technology is how we do things around here.” Sounds a lot like how most people would describe Culture, doesn’t it?

So there you have it... I’ve been meaning to make this post since the day we had this lecture, but I kept putting it off cause I didn’t know how to frame it. I am hoping to have a new sketch up soon; I’ve been on a bit of an emulating (read: copying) kick lately, and I have a copy of Beast now, so I think some Marian Churchland is long overdue!

Text

Technology ("science of craft", from Greek τέχνη, techne, "art, skill, cunning of hand"; and -λογία, -logia[2]) is the collection of techniques, skills, methods, and processes used in the production of goods or services or in the accomplishment of objectives, such as scientific investigation. Technology can be the knowledge of techniques, processes, and the like, or it can be embedded in machines to allow for operation without detailed knowledge of their workings.

The simplest form of technology is the development and use of basic tools. The prehistoric discovery of how to control fire and the later Neolithic Revolution increased the available sources of food, and the invention of the wheel helped humans to travel in and control their environment. Developments in historic times, including the printing press, the telephone, and the Internet, have lessened physical barriers to communication and allowed humans to interact freely on a global scale. The steady progress of military technology has brought weapons of ever-increasing destructive power, from clubs to nuclear weapons.

Technology has many effects. It has helped develop more advanced economies (including today's global economy) and has allowed the rise of a leisure class. Many technological processes produce unwanted by-products known as pollution and deplete natural resources to the detriment of Earth's environment. Innovations have always influenced the values of a society and raised new questions of the ethics of technology. Examples include the rise of the notion of efficiency in terms of human productivity, and the challenges of bioethics.

Philosophical debates have arisen over the use of technology, with disagreements over whether technology improves the human condition or worsens it. Neo-Luddism, anarcho-primitivism, and similar reactionary movements criticize the pervasiveness of technology, arguing that it harms the environment and alienates people; proponents of ideologies such as transhumanism and techno-progressivism …

The use of the term "technology" has changed significantly over the last 200 years. Before the 20th century, the term was uncommon in English, and it was used either to refer to the description or study of the useful arts[3] or to allude to technical education, as in the Massachusetts Institute of Technology (chartered in 1861).[4]

The term "technology" rose to prominence in the 20th century in connection with the Second Industrial Revolution. The term's meanings changed in the early 20th century when American social scientists, beginning with Thorstein Veblen, translated ideas from the German concept of Technik into "technology." In German and other European languages, a distinction exists between technik and technologie that is absent in English, which usually translates both terms as "technology." By the 1930s, "technology" referred not only to the study of the industrial arts but to the industrial arts themselves.[5]

In 1937, the American sociologist Read Bain wrote that "technology includes all tools, machines, utensils, weapons, instruments, housing, clothing, communicating and transporting devices and the skills by which we produce and use them."[6] Bain's definition remains common among scholars today, especially social scientists. Scientists and engineers usually prefer to define technology as applied science, rather than as the things that people make and use.[7] More recently, scholars have borrowed from European philosophers of "technique" to extend the meaning of technology to various forms of instrumental reason, as in Foucault's work on technologies of the self (techniques de soi).

Dictionaries and scholars have offered a variety of definitions. The Merriam-Webster Learner's Dictionary offers a definition of the term: "the use of science in industry, engineering, etc., to invent useful things or to solve problems" and "a machine, piece of equipment, method, etc., that is created by technology."[8] Ursula Franklin, in her 1989 "Real World of Technology" lecture, gave another definition of the concept; it is "practice, the way we do things around here."[9] The term is often used to imply a specific field of technology, or to refer to high technology or just consumer electronics, rather than technology as a whole.[10] Bernard Stiegler, in Technics and Time, 1, defines technology in two ways: as "the pursuit of life by means other than life," and as "organized inorganic matter."[11]

Technology can be most broadly defined as the entities, both material and immaterial, created by the application of mental and physical effort in order to achieve some value. In this usage, technology refers to tools and machines that may be used to solve real-world problems. It is a far-reaching term that may include simple tools, such as a crowbar or wooden spoon, or more complex machines, such as a space station or particle accelerator. Tools and machines need not be material; virtual technology, such as computer software and business methods, fall under this definition of technology.[12] W. Brian Arthur defines technology in a similarly broad way as "a means to fulfill a human purpose."[13]

The word "technology" can also be used to refer to a collection of techniques. In this context, it is the current state of humanity's knowledge of how to combine resources to produce desired products, to solve problems, fulfill needs, or satisfy wants; it includes technical methods, skills, processes, techniques, tools and raw materials. When combined with another term, such as "medical technology" or "space technology," it refers to the state of the respective field's knowledge and tools. "State-of-the-art technology" refers to the high technology available to humanity in any field.

Technology can be viewed as an activity that forms or changes culture.[14] Additionally, technology is the application of math, science, and the arts for the benefit of life as it is known. A modern example is the rise of communication technology, which has lessened barriers to human interaction and as a result has helped spawn new subcultures; the rise of cyberculture has at its basis the development of the Internet and the computer.[15] Not all technology enhances culture in a creative way; technology can also help facilitate political oppression and war via tools such as guns. As a cultural activity, technology predates both science and engineering, each of which formalizes some aspects of technological endeavor.

https://en.wikipedia.org/wiki/Technology


Text

I loved that Ursula Franklin book she mentions. Each year, the CBC in Canada broadcasts the Massey Lectures, and that was the book version of Franklin’s lectures in 1989.

5 Questions with Kate Crawford, author of Atlas of AI

Kate Crawford is a leading scholar of the social and political implications of artificial intelligence. She is a research professor at USC Annenberg, a senior principal researcher at Microsoft Research, and the inaugural chair of AI and Justice at the École Normale Supérieure in Paris.

Kate Crawford will be discussing her new book, Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence (published by Yale University Press) with Trevor Paglen in our City Lights LIVE! discussion series on Friday April 30th, presented with Gray Area!

*****

Where are you writing to us from?

Sydney, Australia. I normally live in New York, so visiting here is like being in a parallel universe where COVID-19 was taken seriously from the beginning and history played out differently.

What’s kept you sane during the pandemic?

Cooking through every cookbook I own, talking to good friends, listening to records, and trying to improve my sub-par surfing skills.

What books are you reading right now? Which books do you return to?

Right now I’m reading Jer Thorp’s Living in Data: A Citizen's Guide to a Better Information Future, the excellent collection Your Computer is On Fire from the MIT Press, Kim Stanley Robinson’s The Ministry for the Future, and The Disordered Cosmos: A Journey Into Dark Matter, Spacetime, and Dreams Deferred by Chanda Prescod-Weinstein. Yes, I have a problem - I never just read one book at a time.

In terms of books that I return to, there's a long list. Here’s just a few:

- Geoffrey C. Bowker and Susan Leigh Star's Sorting Things Out: Classification and Its Consequences

- Ursula M. Franklin's The Real World of Technology

- Simone Browne’s Dark Matters: On the Surveillance of Blackness

- James C. Scott’s Seeing Like a State: How Certain Schemes to Improve the Human Condition Have Failed

- Gray Brechin’s Imperial San Francisco: Urban Power, Earthly Ruin

- Octavia E. Butler’s Parable of the Sower

- Lorraine Daston and Peter Galison's Objectivity

- Oscar H. Gandy’s The Panoptic Sort: A Political Economy of Personal Information. Critical Studies in Communication and in the Cultural Industries - such a prescient book about classification, discrimination and technology, published back in 1993!

Which writers, artists, and others influence your work in general, and this book, specifically?

Atlas of AI was influenced by so many writers and artists, across different centuries - from Georgius Agricola to Jorge Luis Borges to Margaret Mead. More recently, there’s been an extraordinary set of books published on the politics of technology in just the last five years. For example:

- Meredith Broussard’s Artificial Unintelligence: How Computers Misunderstand the World

- Ruha Benjamin’s Race After Technology

- Julie E. Cohen’s Between Truth and Power: The Legal Constructions of Informational Capitalism

- Sasha Costanza-Chock’s Design Justice: Community-Led Practices to Build the Worlds We Need

- Catherine D’Ignazio and Lauren F. Klein’s Data Feminism

- Virginia Eubanks’ Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor

- Tarleton Gillespie’s Custodians of the Internet: Platforms, Content Moderation, and the Hidden Decisions That Shape Social Media

- Sarah T Roberts’ Behind the Screen: Content Moderation in the Shadows of Social Media

- Safiya Noble’s Algorithms of Oppression: How Search Engines Reinforce Racism

- Tung-Hui Hu’s A Prehistory of the Cloud

And that’s just for starters - it’s an incredible time for books that make us contend with the consequences of the technologies we use every day.

I’m also influenced by the artists I’ve had the privilege of working with over the years, including Trevor Paglen, Vladan Joler, and Heather Dewey-Hagborg. Vladan and I collaborated on Anatomy of an AI System a few years ago, and he designed the cover and illustrations in Atlas of AI, which I love.

If you opened a bookstore, where would it be located, what would it be called, and what would your bestseller be?

This may not be the most practical choice, but I’d open a library for rare and antiquarian books near Mono Lake. I’d call it Labyrinths, after Borges’ infinite library of volumes. One of its treasures would be a copy of John Wilkins’ An Essay Towards a Real Character, and a Philosophical Language (1668), where Wilkins tries to create a classification scheme for every possible thing and notion in the universe. It would be a cryptic joke for the occasional passer-by.


Text

New acquisitions (November 2018): catching up

Saturday 03.11.18, Gibert Joseph

Vladimir Nabokov - Invitation au supplice

Ismail Kadaré - Le palais des rêves

Leo Perutz - La nuit sous le pont de pierre

Yasunari Kawabata - Le Maître ou Le tournoi de Go

Yasunari Kawabata - Chroniques d'Asakusa

Jean-Pierre Duprey - Oeuvres complètes

Boulinier

Armand Guibert & Louis Parrot - Federico Garcia Lorca - Poètes d'aujourd'hui Seghers

Gibert Jeune - Nouvelle Braderie, place St Michel

Michael McCauley & Jim Thompson - Coucher avec le diable

Sunday 04.11.18, Boulinier

Collective - Le surréalisme au service de la révolution

Very happy to have found this rare book at such a low price! €15, I think. This collection of six issues of the journal is the successor to the legendary La Révolution surréaliste, which I already own. It contains texts from 1930 to 1933 by Breton, Char, Caillois, Crevel, Ernst, Giacometti, etc. Communism starts to take up far too much room. Dali haunts these pages, alas, as do Freud and Sade. These six issues would be followed by another journal, Minotaure, but between quarrels and excommunications the group falls apart; it is already the beginning of the end... While I loved La Révolution surréaliste, I come back to this book less often.

Saturday 10.11.18, Boulinier

Daniel Gillès - Laurence de la nuit

I loved his biography of Chekhov; I don't know what his novels are worth...

Jim Harrison - Nageur de rivière

A former library book. I really liked the reader feedback slip tucked inside. Four readers left their opinions: 1. Average. I didn't like it. S.B. 2. Rubbish. Nonsense. J.J. 3. Very good. Oh! You can't write that! This is an appealing, poetic book. 4. Average. Very little of interest. Harsh, these readers! I'll add my own opinion later...

Ursula Le Guin - Le commencement de nulle part

John Brunner - Au seuil de l'éternité

Joyce Carol Oates - La foi d'un écrivain

Guide l'Ile-de-France mystérieuse - Tchou

Paris Review - Les entretiens - Anthologie volume 1

Gibert Jeune - Nouvelle Braderie, place St Michel

François Taillandier - Edmond Rostand, l'homme qui voulait bien faire

Jean-François Robin - La fièvre d'un tournage - 37°2 le matin

Reread this very likeable little book, written by the film's director of photography.

17.09.1985 "Beineix still has as many doubts as ever when he comes out of the screenings. (...) When I have genius, I'll shoot nothing but static shots, where the frame fills itself, with actors moving closer or farther away. But right now I don't even have the sense or the intuition to know where I'm going to cut."

24.09.1985 Béatrice Dalle refuses to shoot nude. "I've already done too many nude scenes, that's enough." J.J.B.'s comment: "I'm sure it's the husband who put that in her head." He loses his temper; Dalle locks herself in the makeup room. The intern: "She's crying, it will take a quarter of an hour to make her up." Dalle comes back, silent. "We shoot the scene in deathly silence." J.J.B.: "An hour to shoot such a simple shot, that wrecks my whole day." In an aside he adds: "Maybe she needs a couple of slaps..." "Like Clouzot," adds the chief electrician. "Besides," adds Beineix, "the theory that an actor has to feel good in order to act is false. On the contrary, he has to feel bad, feel in danger, to give everything, because then he has to compensate." A quarrel scene in the film: Dalle asks for the makeup artist. Beineix refuses; he wants her natural. Dalle is mad with rage. "There, I'm doing a Pialat," says Beineix. Then: "The more you hate your director, the better you act." Another time, 30.09.1985, Dalle is practicing driving the yellow Mercedes. She hits another car. Broken headlight, crumpled fender. Beineix doesn't flinch, but lets slip: "You could have killed yourselves." Then, half an hour later: "I warned you, a film has to be prepared."

Party scene: Dalle makes Beineix believe there is water in her champagne bottle. But it's the real thing. "As I leave, I run into Béatrice, completely tipsy. 'I'm drunk. Do you like me?' she adds, opening her blouse and roaring with laughter." You can hear the drawn-out "in" sounds at the ends of her sentences, typical of the actress: "Je suis saoule-in. Je te plais-in ?"

Tuesday 12.11.1985 Last night, a Bergman film, his first for TV. Béatrice watched it for five minutes, then switched it off. "Ugh, Russki or Polack films, I hate them, it's all the same, boring, boring."

11.10.1985 "Shooting the scene where Betty appears for the first time. Zorg is seated; he catches sight of her magnificent silhouette in the doorway. Beineix: 'Careful, you're making your entrance into French cinema; it has to be remembered; you'll be judged on it.'" In fact the first scene will be the famous sex scene. 22.10.1985 "We shoot two takes, both impressive, and speculation is rife. Did they do it or not? Those involved won't deny it until the next day, but no matter: fake love seems even more sincere than the real thing."

Rewatched the film in its long version; I had quite liked it when it came out. Afterwards I very much liked Philippe Djian's novel, which lowered the film in my esteem. On second viewing, I found the film a bit silly. Sappy, at times. Very dated, very 1985. Anglade, though natural, looks too much of a pretty boy for the role of the writer. And aesthetically it is sometimes very ugly: those colored filters make the image look really awful. There are still some fine scenes, all the same (plus Gabriel Yared's music!), and say what you will, Béatrice Dalle, admittedly irritating with her "-in" delivery, lights up the screen. Blows it up, even. She is the Bardot of her era, in short.

A radio program from RSR, with a few errors but interviews from the period: https://bit.ly/2Kk8NdQ

Jean-Roger Caussimon - La double vie, mémoires

Friday 16.11.18, via the internet

Léo Malet - Tome 5 - Romans, nouvelles et poèmes (Coll. Bouquins Robert Laffont)

Contains: La vie est dégueulasse, Le soleil n'est pas pour nous, Sueur aux tripes, Contes doux, La forêt aux pendus, La louve du Bas-Craoul, Le diamant du huguenot, Un héros en guenilles, Le capitaine coeur-en-berne, Gérard Vindex, La soeur du flibustier, L'évasion du masque de fer, Le voilier magique, Vengeance à Ciudad-Juarez, Vacances sous le pavillon noir, radio plays and a TV film.

Saturday 17.11.18, Boulinier

Christopher Brookmyre - Les canards en plastique attaquent !

Ivan Bounine - Le village

Knut Hamsun - Esclaves de l'amour

Saul Friedländer - Quand vient le souvenir...

Gibert Jeune - Nouvelle Braderie, place St Michel

Jacques Sternberg - Les pensées

Friday 23.11.18, via the internet

Mary Dearborn - Henry Miller, biographie

Saturday 24.11.18, Gibert Joseph

Serge Valletti - Sale août, suivi de John a-dreams

Robert Benchley - Les enfants, pour quoi faire ?

Boulinier

Edgar Lee Masters - Spoon River

I already have the bilingual edition from Phébus (Des voix sous les pierres, trans. Patrick Reumaux). This is an astonishing collection of poems in which epitaphs on graves speak, answer one another, and tell the life of a small town, in an original and delightful construction. Inspired by the epigrams of the Greek Anthology. I wonder whether George Saunders drew on it for his novel Lincoln in the Bardo? This one is a new translation by Gaëlle Merle, published by Allia in 2016. There is a third translation by Michel Pétris & Kenneth White, the first one, from 1976, published by Champ Libre (thanks, Laurent N.). And a fourth by a collective, Général Instin (!?), edited by Patrick Chatelier. http://www.lenouvelattila.fr/spoon-river/

Ivan Bounine - Tchékhov

Gibert Jeune - Nouvelle Braderie, place St Michel

Robert Ferguson - Henry Miller, biographie

Tom Franklin - Braconniers, nouvelles


Photo

The Real World of Technology

Ursula M. Franklin