Delegating trust is really, really, really hard (infosec edition)

CORRECTION: A previous version of this thread reported that Trustcor has the same officers as Packet Forensics; they do not; they have the same officers as Measurement Systems. I regret the error.

I’ve got trust issues. We all do. Some infosec pros go so far as to say “trust no one,” a philosophy more formally known as “Zero Trust,” that holds that certain elements of your security should never be delegated to any third party.

The problem is, it’s trust all the way down. Say you maintain your own cryptographic keys on your own device. How do you know the software you use to store those keys is trustworthy? Well, maybe you audit the source-code and compile it yourself.

But how do you know your compiler is trustworthy? When Unix/C co-creator Ken Thompson received the Turing Award, he either admitted or joked that he had hidden back doors in the compiler he’d written, which was used to compile all of the other compilers:

https://pluralistic.net/2022/10/11/rene-descartes-was-a-drunken-fart/#trusting-trust

OK, say you whittle your own compiler out of a whole log that you felled yourself in an old growth forest that no human had set foot in for a thousand years. How about your hardware? Back in 2018, Bloomberg published a blockbuster story claiming that the server infrastructure of the biggest cloud companies had been compromised with tiny hardware interception devices:

https://www.bloomberg.com/news/features/2018-10-04/the-big-hack-how-china-used-a-tiny-chip-to-infiltrate-america-s-top-companies

The authors claimed to have verified their story in every conceivable way. The companies whose servers were said to have been compromised rejected the entire story. Four years later, we still don’t know who was right.

How do we trust the Bloomberg reporters? How do we trust Apple? If we ask a regulator to investigate their claims, how do we trust the regulator? Hell, how do we trust our senses? And even if we trust our senses, how do we trust our reason? I had a lurid, bizarre nightmare last night where the most surreal events seemed perfectly reasonable (tldr: while I was trying to order a paloma at the DNA Lounge, I was mugged by invisible monsters, who stole my phone and then a bicycle I had rented from the bartender).

If you can’t trust your senses, your reason, the authorities, your hardware, your software, your compiler, or third-party service-providers, well, shit, that’s pretty frightening, isn’t it (paging R. Descartes to a white courtesy phone)?

There’s a joke about physicists, that all of their reasoning begins with something they know isn’t true: “Assume a perfectly spherical cow of uniform density on a frictionless surface…” The world of information security has a lot of these assumptions, and they get us into trouble.

Take internet data privacy and integrity — that is, ensuring that when you send some data to someone else, the data arrives unchanged and no one except that person can read that data. In the earliest days of the internet, we operated on the assumption that the major threat here was technical: our routers and wires might corrupt or lose the data on the way.

The solution was the ingenious system of packet-switching error-correction, a complex system that allowed the sender to verify that the recipient had gotten all the parts of their transmission and resend the parts that disappeared en route.
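As a rough illustration of that resend-until-acknowledged idea, here is a toy Python model. It is a sketch, not how TCP or any real protocol is implemented: the function name, the loss rate and the way the message is chunked are all invented for the example.

```python
import random

def send_with_retransmit(chunks, loss_rate=0.3, seed=0, max_rounds=100):
    """Toy model: keep resending every chunk the recipient has not acknowledged."""
    rng = random.Random(seed)
    received = {}                      # sequence number -> chunk, as held by the recipient
    pending = set(range(len(chunks)))  # sequence numbers not yet acknowledged
    rounds = 0
    while pending and rounds < max_rounds:
        rounds += 1
        for seq in sorted(pending):
            if rng.random() >= loss_rate:   # this copy survived the trip
                received[seq] = chunks[seq]
        # The recipient acknowledges what it holds; the sender stops resending those.
        pending -= set(received)
    # Reassemble in sequence order, regardless of arrival order.
    return [received[i] for i in sorted(received)], rounds

message = ["It's ", "trust ", "all ", "the ", "way ", "down."]
delivered, rounds_needed = send_with_retransmit(message)
```

Note what the model does and doesn't give you: the message arrives complete and in order, but nothing here stops anyone on the path from reading it.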

This took care of integrity, but not privacy. We mostly just pretended that sysadmins, sysops, network engineers, and other people who could peek at our data “on the wire” wouldn’t, even though we knew that, at least some of the time, this was going on. The fact that the people who provided communications infrastructure had a sense of duty and mission didn’t mean they wouldn’t spy on us — sometimes, that was why they peeked, just to be sure that we weren’t planning to mess up “their” network.

The internet always carried “sensitive” information — love letters, private discussions of health issues, political plans — but it wasn’t until investors set their sights on commerce that the issue of data privacy came to the fore. The rise of online financial transactions goosed the fringe world of cryptography into the mainstream of internet development.

This gave rise to an epic, three-sided battle, between civil libertarians, spies, and business-people. For years, the civil liberties people had battled the spy agencies over “strong encryption” (more properly called “working encryption” or just “encryption”).

The spy agencies insisted that civilization would collapse if they couldn’t wiretap any and every message traversing the internet, and maintained that they would neither abuse this facility, nor would they screw up and let someone else do so (“trust us,” they said).

The business world wanted to be able to secure their customers’ data, at least to the extent that an insurer would bail them out if they leaked it; and they wanted to actually secure their own data from rivals and insider threats.

Businesses lacked the technological sophistication to evaluate the spy agencies’ claims that there was such a thing as encryption that would keep their data secure from “bad guys” but would fail completely whenever a “good guy” wanted to peek at it.

In a bid to educate them on this score, EFF co-founder John Gilmore built a $250,000 computer that could break the (already broken) cryptography the NSA and other spy agencies claimed businesses could rely on, in just a couple hours. The message of this DES Cracker was that anyone with $250,000 will be able to break into the communications of any American business:

https://cryptome.org/jya/des-cracker.htm

Fun fact: John got tired of the bar-fridge-sized DES Cracker cluttering up his garage and he sent it to my house for safekeeping; it’s in my office next to my desk in LA. If I ever move to the UK, I’ll have to leave it behind because it’s (probably) still illegal to export.

The deadlock might have never been broken but for a key lawsuit: Cindy Cohn (now EFF’s executive director) won the Bernstein case, which established that publishing cryptographic source-code was protected by the First Amendment:

https://www.eff.org/cases/bernstein-v-us-dept-justice

With cryptography legalized, browser vendors set about securing the data-layer in earnest, expanding and formalizing the “public key infrastructure” (PKI) in browsers. Here’s how that works: your browser ships with a list of cryptographic keys from trusted “certificate authorities.” These are entities that are trusted to issue “certificates” to web-hosts, which are used to wrap up their messages to you.

When you open a connection to “https://foo.com,” Foo sends you a stream of data that is encrypted with a key identified as belonging to “foo.com” (this key is Foo’s “certificate”: it certifies that the user of this key is Foo, Inc). That certificate is, in turn, signed by a “Certificate Authority.”

Any Certificate Authority can sign any certificate — your browser ships with a long list of these CAs, and if any one of them certifies that the bearer is “Foo.com,” that server can send your browser “secure” traffic and it will dutifully display the data with all assurances that it arrived from one of Foo, Inc’s servers.

This means that you are trusting all of the Certificate Authorities that come with your browser, and you’re also trusting the company that made your browser to choose good Certificate Authorities. This is a lot of trust. If any of those CAs betrays your trust and issues a bad cert, it can be used to reveal, copy, and alter the data you send and receive from a server that presents that certificate.
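To make the shape of that trust concrete, here is a deliberately simplified Python sketch. It models only the "is the issuer on my list?" decision; real browsers verify cryptographic signatures and certificate chains, which are assumed rather than modeled here. The CA names and the `browser_accepts` function are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Cert:
    subject: str   # the site the certificate claims to speak for
    issuer: str    # the CA that signed it

# Hypothetical CA names; a real browser ships with a much longer list.
TRUSTED_ROOTS = {"Careful CA", "Sloppy CA"}

def browser_accepts(cert, hostname, roots=TRUSTED_ROOTS):
    """Toy check: a valid signature from the issuer is assumed, not verified."""
    return cert.subject == hostname and cert.issuer in roots

legit = Cert(subject="foo.com", issuer="Careful CA")
rogue = Cert(subject="foo.com", issuer="Sloppy CA")      # Foo never asked for this one
stranger = Cert(subject="foo.com", issuer="Unknown CA")  # issuer not in the root list
```

In this model `legit` and `rogue` are indistinguishable to the browser: both are accepted for foo.com, because nothing ties a site to any particular CA.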

You’d hope that certificate authorities would be very prudent, cautious and transparent — and that browser vendors would go to great lengths to verify that they were. There are PKI models for this: for example, the “DNS root keys” that control the internet’s domain-name service are updated via a formal, livestreamed ceremony:

https://www.cloudflare.com/dns/dnssec/root-signing-ceremony/

There are 14 people entrusted to perform this ceremony, and at least three must be present at each performance. The keys are stored at two facilities, and the attendees need to show government ID to enter them (is the government that issued the ID trustworthy? Do you trust the guards to verify it? Ugh, my head hurts).

Further access to the facility is controlled by biometric locks (do you trust the lock maker? How about the person who registers the permitted handprints?). Everyone puts a wet signature in a logbook. A staffer has their retina scanned and presents a smartcard.

Then the staffer opens a safe that has a “tamper proof” (read: “tamper resistant”) hardware module whose manufacturer is trusted (why?) not to have made mistakes or inserted a back-door. A special laptop (also trusted) is needed to activate the safe’s hardware module. The laptop “has no battery, hard disk, or even a clock backup battery, and thus can’t store state once it’s unplugged.” Or, at least, the people in charge of it claim that it doesn’t and can’t.

The ceremony continues: the safe yields a USB stick and a DVD. Each of the trusted officials hands over a smart card that they trust and keep in a safe deposit box in a tamper-evident bag. The special laptop is booted from the trusted DVD and mounts the trusted USB stick. The trusted cards are used to sign three months’ worth of keys, and these are the basis for the next quarter’s worth of secure DNS queries.

All of this is published, videoed, livestreamed, etc. It’s a real “defense in depth” situation where you’d need a very big conspiracy to subvert all the parts of the system that need to work in order to steal underlying secrets. Yes, bottom line, you’re still trusting people, but in part you’re trusting them not to be able to all keep a secret from the rest of us.

The process for determining which CAs are trusted by your browser is a lot less transparent and, judging from experience, a lot less thorough. Many of these CAs have proven to be manifestly untrustworthy over the years. There was Diginotar, a Dutch CA whose bad security practices left it vulnerable to a hack-attack:

https://en.wikipedia.org/wiki/DigiNotar

Some people say it was Iranian government hackers, who used its signing keys to forge certificates and spy on Iranian dissidents, who are liable to arrest, torture and execution. Other people say it was the NSA pretending to be Iranian government hackers:

https://www.schneier.com/blog/archives/2013/09/new_nsa_leak_sh.html

In 2015, the China Internet Network Information Center was used to issue fake Google certificates, which gave hackers the power to intercept and take over Google accounts and devices linked to them (e.g. Android devices):

https://thenextweb.com/news/google-to-drop-chinas-cnnic-root-certificate-authority-after-trust-breach

In 2019, the UAE cyber-arms dealer Darkmatter — an aggressive recruiter of American ex-spies — applied to become a trusted Certificate Authority, but was denied:

https://www.reuters.com/investigates/special-report/usa-spying-raven/

Browser PKI is very brittle. By design, any of the trusted CAs can compromise every site on the internet. An early attempt to address this was “certificate pinning,” whereby browsers shipped with a database of which CAs were authorized to issue certificates for major internet companies. That meant that even though your browser trusted Crazy Joe’s Discount House of Certification to issue certs for any site online, it also knew that Google didn’t use Crazy Joe, and any google.com certs that Crazy Joe issued would be rejected.
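A minimal sketch of that pinning logic in Python, under stated assumptions: the pin table, the CA names (other than Crazy Joe) and the function name are invented for illustration, and signature checking is again assumed rather than modeled.

```python
# Hypothetical data: which CAs the browser trusts in general...
TRUSTED = {"Google's usual CA", "Crazy Joe's Discount House of Certification"}
# ...and which CAs are pinned for specific sites.
PINS = {"google.com": {"Google's usual CA"}}

def accepts_with_pinning(hostname, issuer, trusted=TRUSTED, pins=PINS):
    """Accept only trusted issuers, and for pinned sites only the pinned issuers."""
    if issuer not in trusted:
        return False
    allowed = pins.get(hostname)
    # Sites without a pin fall back to "any trusted CA will do".
    return allowed is None or issuer in allowed
```

With this table, a google.com certificate from Crazy Joe is rejected, while Crazy Joe can still vouch for any site that has no pin.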

But pinning has a scale problem: there are billions of websites and many of them change CAs from time to time, which means that every browser now needs a massive database of CA-site pin-pairs, and a means to trust the updates that site owners submit to browsers with new information about which CAs can issue their certificates.

Pinning was a stopgap. It was succeeded by a radically different approach: surveillance, not prevention. That surveillance tool is Certificate Transparency (CT), a system designed to quickly and publicly catch untrustworthy CAs that issue bad certificates:

https://www.nature.com/articles/491325a

Here’s how Certificate Transparency works: every time a CA issues a certificate, that certificate is submitted to one or more of about a dozen public logs, each of which hands back a signed timestamp promising that the certificate will be included. Browsers that enforce CT reject certificates that arrive without those signed timestamps, so a certificate has to be publicly logged before it is of any use to an attacker:

https://certificate.transparency.dev/logs/

These logs use a cool cryptographic technology called Merkle trees that makes them tamper-evident: that means that if someone alters the log (say, to remove or forge evidence of a bad cert), everyone who’s got a copy of any of the log’s previous entries can tell that the alteration took place.
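Here is a small Python sketch of why tampering shows up. It computes a single root hash over a list of entries; the split rule and the one-byte prefixes follow my reading of RFC 6962, but this is an illustration rather than a CT implementation, and the log entries are made up.

```python
import hashlib

def merkle_root(entries):
    """Root hash over a list of byte strings, built as a binary hash tree."""
    n = len(entries)
    if n == 0:
        return hashlib.sha256(b"").digest()
    if n == 1:
        # Leaves get a 0x00 prefix so they can't be confused with interior nodes.
        return hashlib.sha256(b"\x00" + entries[0]).digest()
    # Split at the largest power of two strictly smaller than n.
    k = 1
    while k * 2 < n:
        k *= 2
    left = merkle_root(entries[:k])
    right = merkle_root(entries[k:])
    # Interior nodes get a 0x01 prefix and hash their two children together.
    return hashlib.sha256(b"\x01" + left + right).digest()

log = [b"cert for foo.com", b"cert for bar.org", b"cert for baz.net"]
tampered = [b"cert for foo.com", b"cert for evil.example", b"cert for baz.net"]

original_root = merkle_root(log)
tampered_root = merkle_root(tampered)
```

Anyone who remembers `original_root` can tell that `tampered` is not the log they saw before, because changing any one entry changes every hash on the path up to the root.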

Merkle Trees are super efficient. A modest server can easily host the eight billion or so CT records that exist to date. Anyone can monitor any of these public logs, checking to see whether a CA they don’t recognize has issued a certificate for their own domain, and then prove that the CA has betrayed its mission.
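The monitoring step can be sketched as a simple filter. This assumes the log has already been downloaded and parsed into records with a hostname and an issuer; real logs hold encoded certificates that need parsing first, and the record shape, names and data below are all hypothetical.

```python
def unexpected_issuers(log_entries, domain, expected_cas):
    """Return entries for `domain` (or its subdomains) issued by a CA we don't use."""
    suffix = "." + domain
    return [
        entry for entry in log_entries
        if (entry["name"] == domain or entry["name"].endswith(suffix))
        and entry["issuer"] not in expected_cas
    ]

# Made-up log contents for illustration.
entries = [
    {"name": "foo.com", "issuer": "Careful CA"},
    {"name": "mail.foo.com", "issuer": "Sloppy CA"},
    {"name": "notfoo.com", "issuer": "Sloppy CA"},
]
suspicious = unexpected_issuers(entries, "foo.com", expected_cas={"Careful CA"})
```

Here only the `mail.foo.com` record is flagged: it names our domain and comes from a CA we never hired, which is exactly the evidence the paragraph above describes.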

CT works. It’s how we learned that Symantec engaged in incredibly reckless behavior: as part of their test-suite for verifying a new certificate-issuing server, they would issue fake Google certificates. These were supposed to be destroyed after creation, but at least one leaked and showed up in the CT log:

https://arstechnica.com/information-technology/2017/03/google-takes-symantec-to-the-woodshed-for-mis-issuing-30000-https-certs/

It wasn’t just Google — Symantec had issued tens of thousands of bad certs. Worse: Symantec was responsible for more than a third of the web’s certificates. We had operated on the blithe assumption that Symantec was a trustworthy entity — a perfectly spherical cow of uniform density — but on inspection it was proved to be a sloppy, reckless mess.

After the Symantec scandal, browser vendors cleaned house: they ditched Symantec from browsers’ roots of trust. A lot of us assumed that this scandal would also trigger a re-evaluation of how CAs demonstrated that they were worthy of inclusion in a browser’s default list of trusted entities.

If that happened, it wasn’t enough.

Yesterday, the Washington Post’s Joseph Menn published an in-depth investigation into Trustcor, a certificate authority that is trusted by default by Safari, Chrome and Firefox:

https://www.washingtonpost.com/technology/2022/11/08/trustcor-internet-addresses-government-connections/

Menn’s report is alarming. Working from reports from University of Calgary privacy researcher Joel Reardon and UC Berkeley security researcher Serge Egelman, Menn presented a laundry list of profoundly disturbing problems with Trustcor:

https://groups.google.com/a/mozilla.org/g/dev-security-policy/c/oxX69KFvsm4/m/etbBho-VBQAJ

First, there’s an apparent connection to Packet Forensics, a high-tech arms dealer that sells surveillance equipment to the US government. One of Trustcor’s partners is a holding company managed by Packet Forensics spokesman Raymond Saulino.

If Trustcor is working with (or part of) Packet Forensics, it could issue fake certificates for any internet site that Packet Forensics could use to capture, read and modify traffic between that site and any browser. One of Menn’s sources claimed that Packet Forensics “used TrustCor’s certificate process and its email service, MsgSafe, to intercept communications and help the U.S. government.”

Trustcor denies this, as did the general counsel for Packet Forensics.

Should we trust either of them? It’s hard to understand why we would. Take Trustcor: as mentioned, it has a “private” email service called “Msgsafe,” that claims to offer end-to-end encrypted email. But it is not encrypted end-to-end — it sends copies of its users’ private keys to Trustcor, allowing the company (or anyone who hacks the company) to intercept its email.

It’s hard to avoid the conclusion that Trustcor is making an intentionally deceptive statement about how its security products work, or it lacks the basic technical capacity to understand how those products should work. You’d hope that either of those would disqualify Trustcor from being trusted by default by billions of browsers.

It’s worse than that, though: there are so many red flags about Trustcor beyond the defects in Msgsafe. Menn found that the company’s website identified two named personnel, both supposed founders. One of those men was dead. The other one’s LinkedIn profile has him departing the company in 2019.

The company lists two phone numbers. One is out of service. The other goes to unmonitored voicemail. The company’s address is a UPS Store in Toronto. Trustcor’s security audits are performed by the “Princeton Audit Group” whose address is a private residence in Princeton, NJ.

A company spokesperson named Rachel McPherson publicly responded to Menn’s article and Reardon and Egelman’s report with a bizarre, rambling message:

https://groups.google.com/a/mozilla.org/g/dev-security-policy/c/oxX69KFvsm4/m/X_6OFLGfBQAJ

In it, McPherson insinuates that Reardon and Egelman are just trying to drum up business for a small security research business they run called Appsecure. She says that Msgsafe’s defects aren’t germane to Trustcor’s Certificate Authority business, instead exhorting the researchers to make “positive suggestions for improving that product suite.”

As to the company’s registration, she makes a difficult-to-follow claim that the irregularities are due to using the same Panamanian law-firm as Packet Forensics, says that she needs to investigate some missing paperwork, and makes vague claims about “insurance impersonation” and “potential for foul play.”

Certificate Authorities have one job: to be very, very, very careful. The parts of Menn’s story and Reardon and Egelman’s report that aren’t disputed are, to my mind, enough to disqualify them from inclusion in browsers’ root of trust.

But the disputed parts — which I personally believe, based on my trust in Menn, which comes from his decades of careful and excellent reporting — are even worse.

For example, Menn makes an excellent case that Packet Forensics is not credible. In 2007, a company called Vostrom Holdings applied for permission for Packet Forensics to do business in Virginia as “Measurement Systems.” Measurement Systems, in turn, tricked app vendors into bundling spyware into their apps, which gathered location data that Measurement Systems sold to private and government customers. Measurement Systems’ data included the identities of 10,000,000 users of Muslim prayer apps.

Packet Forensics denies that it owns Measurement Systems, which doesn’t explain why Vostrom Holdings asked the state of Virginia to let it do business as Measurement Systems. Vostrom also owns the domain “Trustcor.co,” which redirected to Trustcor’s main site. Trustcor’s “president, agents and holding-company partners” are identical to those of Measurement Systems.

One of the holding companies listed in both Trustcor and Measurement Systems’ ownership structures is Frigate Bay Holdings. This March, Raymond Saulino — the one-time Packet Forensics spokesman — filed papers in Wyoming identifying himself as manager of Frigate Bay Holdings.

Neither Menn nor Reardon and Egelman claim that Packet Forensics has obtained fake certificates from Trustcor to help its customers spy on their targets, something that McPherson stresses in her reply. However, Menn’s source claims that this is happening.

These companies are so opaque and obscure that it might be impossible to ever find out what’s really going on, and that’s the point. For the web to have privacy, the Certificate Authorities that hold the (literal) keys to that privacy must be totally transparent. We can’t assume that they are perfectly spherical cows of uniform density.

In a reply to Reardon and Egelman’s report, Mozilla’s Kathleen Wilson asked a series of excellent, probing followup questions for Trustcor, with the promise that if Trustcor failed to respond quickly and satisfactorily, it would be purged from Firefox’s root of trust:

https://groups.google.com/a/mozilla.org/g/dev-security-policy/c/oxX69KFvsm4/m/WJXUELicBQAJ

Which is exactly what you’d hope a browser vendor would do when one of its default Certificate Authorities was credibly called into question. But that still leaves an important question: how did Trustcor, who marketed a defective security product, whose corporate ownership is irregular and opaque with a seeming connection to a cyber-arms-dealer, end up in our browsers’ root of trust to begin with?

Formally, the process for inclusion in the root of trust is quite good. It’s a two-year vetting process that includes an external audit:

https://wiki.mozilla.org/CA/Application_Process

But Daniel Schwalbe, CISO of Domain Tools, told Menn that this process was not closely watched, claiming “With enough money, you or I could become a trusted root certificate authority.” Menn’s unnamed Packet Forensics source claimed that most of the vetting process was self-certified — that is, would-be CAs merely had to promise they were doing the right thing.

Remember, Trustcor isn’t just in Firefox’s root of trust — it’s in the roots of trust for Chrome (Google) and Safari (Apple). All the major browser vendors were supposed to investigate this company and none of them disqualified it, despite all the vivid red flags.

Worse, Reardon and Egelman say they notified all three companies about the problems with Trustcor seven months ago, but didn’t hear back until they published their findings publicly on Tuesday.

There are 169 root certificate authorities in Firefox, and comparable numbers in the other major browsers. It’s inconceivable that you could personally investigate each of these and determine whether you want to trust it. We rely on the big browser vendors to do that work for us. We start with: “Assume the browser vendors are careful and diligent when it comes to trusting companies on our behalf.” We assume that these messy, irregular companies are perfectly spherical cows of uniform density on a frictionless surface.

The problem of trust is everywhere. Vaccine deniers say they don’t trust the pharma companies not to kill them for money, and don’t trust the FDA to hold them to account. Unless you have a PhD in virology, cell biology and epidemiology, you can’t verify the claims of vaccine safety. Even if you have those qualifications, you’re trusting that the study data in journals isn’t forged.

I trust vaccines — I’ve been jabbed five times now — but I don’t think it’s unreasonable to doubt either Big Pharma or its regulators. A decade ago, my chronic pain specialist told me I should take regular doses of powerful opioids, and pooh-poohed my safety and addiction concerns. He told me that pharma companies like Purdue and regulators like the FDA had re-evaluated the safety of opioids and now deemed them far safer.

I “did my own research” and concluded that this was wrong. I concluded that the FDA had been captured by a monopolistic and rapacious pharma sector that was complicit in waves of mass-death that produced billions in profits for the Sackler family and other opioid crime-bosses.

I was an “opioid denier.” I was right. The failure of the pharma companies to act in good faith, and the failure of the regulator to hold them to account is a disaster that has consequences beyond the mountain of overdose deaths. There’s a direct line from that failure to vaccine denial, and another to the subsequent cruel denial of pain meds to people who desperately need them.

Today, learning that the CA-vetting process I’d blithely assumed was careful and sober-sided is so slapdash that a company without a working phone or a valid physical address could be trusted by billions of browsers, I feel like I did when I decided not to fill my opioid prescription.

I feel like I’m on the precipice of a great, epistemological void. I can’t “do my own research” for everything. I have to delegate my trust. But when the companies and institutions I rely on to be prudent (not infallible, mind, just prudent) fail this way, it makes me want to delete all the certificates in my browser.

Which would, of course, make the web wildly insecure.

Unless it’s already that insecure.

Ugh.

Image:

Curt Smith (modified)

https://commons.wikimedia.org/wiki/File:Sand_castle,_Cannon_Beach.jpg

CC BY 2.0:

https://creativecommons.org/licenses/by/2.0/deed.en

[Image ID: An animated gif of a sand-castle that is melting into the rising tide; through the course of the animation, the castle gradually fills up with a Matrix-style 'code waterfall' effect.]

nintendo’s port forwarding nightmare, or: how i learned to start worrying and fear the network

So I was alerted to Nintendo’s official guide on port forwarding and it contains so much bad advice that there was sort of a moral imperative to write a response to it. If you’re setting up a Switch for multiplayer games and network services, or just have a perverse interest in bad IT device configuration, you should probably read this.

this is like beginning an article about dioxygen difluoride synthesis with “in this article, you’ll learn how to start fires”

this guide having a disclaimer that “it is up to each consumer to determine what security needs they have for their own networks” is like an article promoting asbestos saying that it’s up to the consumer to determine what health needs their lungs have

part the first: questionable advice that will usually work

So first off, they tell you to go to your PC and copy down all the information on your IP, subnet mask, and gateway. For usual home networks, this is probably fine (though it’s already a red flag - normally devices on a modern home network get this information automatically, through something called the Dynamic Host Configuration Protocol or DHCP, avoiding the need for a lot of cumbersome manual administration).

There are some cases where even this might be an issue; in particular, if your computer is connected via a cable while your Switch is operating wirelessly. (There are also questions about subnetting but if you know subnetting you already know why this guide is a trash fire.)

So here the guide tells us why it wanted us to copy down configuration from our PC - because they want you to disable DHCP, and have the Switch use a static IP address.

A fixed IP in and of itself isn’t bad, and there are plenty of use cases where it’s done - but usually in an enterprise environment, for things like file servers, printers, or network infrastructure like switches and wireless access points. It’s just a very odd thing to set for a consumer device - especially a mobile, wireless one like the Switch.

part the second: oversimplified advice that might work

Aside from the rare case where your PC could have an IP address ending in 236 or more, where this advice just wouldn’t work at all, the advice boils down to “put in random numbers until you get one that works”. There’s no guarantee that a working IP address wouldn’t e.g. already be allocated to a computer that is presently turned off.

This brings us to the major issue of device-side static IP addressing: if the device disconnects from the network, there’s nothing to stop the DHCP server in your router from giving that static address to something else that connects. This can cause a lot of weird connectivity errors seemingly at random. There are ways to make a static IP compatible with a DHCP service, but they involve specific configuration of the router or DHCP server that the guide doesn’t touch on.
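Here’s a toy Python model of that collision. It is a sketch under stated assumptions: the address range is made up, the strings "pc" and "phone" stand in for MAC addresses, and the server simply hands out the lowest free address. Real DHCP servers are more elaborate and some probe an address before offering it, so treat this as the case where the Switch is offline when the phone joins.

```python
import ipaddress

class ToyDhcpServer:
    """Hands out the lowest address it hasn't leased; knows nothing about static configs."""

    def __init__(self, first, last):
        lo = int(ipaddress.IPv4Address(first))
        hi = int(ipaddress.IPv4Address(last))
        self.pool = [ipaddress.IPv4Address(n) for n in range(lo, hi + 1)]
        self.leases = {}  # client identifier -> leased address

    def request(self, client):
        if client in self.leases:
            return self.leases[client]
        in_use = set(self.leases.values())
        for addr in self.pool:
            if addr not in in_use:
                self.leases[client] = addr
                return addr
        raise RuntimeError("pool exhausted")

server = ToyDhcpServer("192.168.0.20", "192.168.0.30")
pc_ip = server.request("pc")

# Typed into the Switch by hand; the server never hears about it.
switch_static_ip = ipaddress.IPv4Address("192.168.0.21")

phone_ip = server.request("phone")
conflict = phone_ip == switch_static_ip
```

In this run `conflict` ends up `True`: the server has no record of the hand-typed address, so the phone gets the same one the Switch thinks it owns.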

While this on its own is an issue, it’s not quite the security dumpster fire that it could be. But put “the router might assign the Switch’s IP address to another device in the network” into your foreshadowing pockets because it’s going to be important later.

Finally, there are the subnet mask and gateway settings, and this is where the highly technical stuff comes in. Thankfully the details are irrelevant because there’s one problem with all of this advice: sometimes the Switch’s settings should be different from your PC’s, especially if you have multiple access points or a mixture of wired and wireless connections. DHCP would normally handle this, but if we’re just firing randomly into the darkness, it might not work. Again, this is something that will usually work with the default configuration of most home networks, but has no guarantees for anything more complex.
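If you want to see what the subnet mask actually decides, Python’s standard `ipaddress` module can do the arithmetic. This is a quick sketch with made-up addresses; `same_subnet` is a helper invented for the example, not something from the guide.

```python
import ipaddress

def same_subnet(ip_a, ip_b, mask):
    """True if both addresses fall inside the network that ip_a and mask define."""
    network = ipaddress.ip_network(f"{ip_a}/{mask}", strict=False)
    return ipaddress.ip_address(ip_b) in network

pc = "192.168.0.5"
switch_wired_side = "192.168.0.200"
switch_other_segment = "192.168.1.200"

ok = same_subnet(pc, switch_wired_side, "255.255.255.0")
split = same_subnet(pc, switch_other_segment, "255.255.255.0")
wide = same_subnet(pc, switch_other_segment, "255.255.0.0")
```

With a 255.255.255.0 mask the first pair shares a subnet and the second does not; widen the mask to 255.255.0.0 and they do. Copying the PC’s numbers only works when the Switch really does live on the same segment.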

But this is all basically “misguided and incomplete, but mostly harmless”. Where’s the garbage fire?

part the third: terrible advice that will work all too well

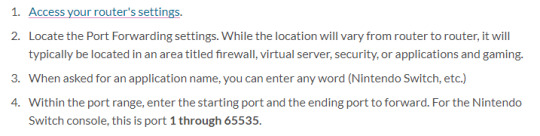

The first few things are standard practice for port forwarding. The first problem is step four: specifically, the phrase “port 1 through 65535”.

If you played old video games, the number 65535 might be familiar to you. It’s the maximum value of a 16-bit unsigned integer, meaning it would irregularly crop up as the seemingly arbitrary maximum for numbers in games of the SNES era.

The significance of this in networking is that a port number is a 16-bit unsigned integer; in other words, “port 1 through 65535” is equivalent to “every single port”. (Technically, every port but port 0, but 0 is a special case that officially doesn’t exist and is used for various types of magic that are beyond the scope of Nintendo customer support.)
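The arithmetic is quick to check in Python. The variable names are just for this example; the only fact relied on is that port numbers are 16-bit unsigned fields.

```python
import struct

PORT_FIELD_BITS = 16
max_port = 2**PORT_FIELD_BITS - 1

# The range the guide asks you to forward.
forwarded = range(1, 65535 + 1)
every_nonzero_port = len(forwarded) == max_port

# Packed as a 16-bit unsigned integer in network byte order: two bytes, all bits set.
highest_on_the_wire = struct.pack("!H", max_port)
```

So `max_port` is 65535, and the guide’s range covers every port number there is apart from 0.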

Having a single port forwarded from the open internet tends to make a system a target for attacks: if the application listening on that port is compromised, there’s nothing separating it from actors on the open Internet. “Check if you have any open ports” is standard basic cybersecurity advice - any accessible open port is something of a cybersecurity fire hazard.

Having every single port forwarded is the equivalent of running naked into a burning building while covered in naphtha and thermite.

On one hand, it will definitely guarantee that people can connect to your Switch from the internet! On the other... well.

A compromised Switch can then access the rest of your internal network, bypassing a lot of security and default firewall setups, since it is now on the same subnet and network, which most machines will treat as being trusted. For instance, it could access things like router configuration that are often blocked from the outside but accessible - with good reason - from internal machines. This is the same kind of hazard posed by a lot of Internet of Things devices, amplified by the Switch’s greater computing power.

But would you believe me if I said it got worse? Look into your foreshadowing pocket, if you will, and recall that the router might assign the Switch’s IP address to another device in the network. If you have every port forwarded to the Switch’s IP, but the Switch leaves the network for some time, there is a low but very real chance the IP gets reused for a newly connecting device that requests a DHCP address, and suddenly it’s not the Switch with every port open, but a computer or phone.

All the security risks are the same for open ports on these machines, but the potential of a breach is much, much worse.

part the fourth: okay how do i fix this

Nintendo’s actual customer support has a separate article with a similar security disclaimer talking about a little-known feature many routers have called the DMZ. (Yes, that still stands for “demilitarised zone”, as in Korea or Cyprus or most borders of Israel at one point or another.) The DMZ is essentially an area designated in your router settings as separated - mostly - from your main network, intended to house machines that are more open to the public internet while protecting others from incursion.

Router DMZs are not “proper” DMZs, and do not offer the proper protection of the enterprise-level concept for which they are named. The router DMZ option, more properly called a “DMZ host”, is simply a computer on the same network as the others which receives all traffic that isn’t marked for another machine. It’s essentially the same as opening every port on the Switch, but has the benefit of at least avoiding the IP address reassignment issue that would more directly expose your other machines, since the router needs to know the Switch is in the DMZ.

I haven’t found any good advice on Nintendo’s official pages but I’ll go into what I’d do here.

Shout at Nintendo to establish an in-house standard for which ports their multiplayer games use. It’s not like a Switch is going to be running multiple services at once and they have literally tens of thousands of ports to choose from; just pick one (or at least a narrow range) and stick to it! Ideally, this would fix it but it would require a major game developer to care about cybersecurity issues other than homebrew, which I’m pretty sure requires a Lutheran Pope flying on pigback all the way to the bluest possible moon.

There are lots of networking solutions available to people willing to dip their toes in. The one I would properly suggest looking up is a so-called screened subnet, where you can use subnet masks to separate the Switch from every other machine on your network. Most routers should be able to be configured to support one but the details of it are a bit much for an already long post.

Buy a second router and put it between your first router and the network connection. Set the Switch up through this router, and all your other computers stay connected to the old router; this will make them see the Switch as an external host and keep it separated in case it’s compromised. (This is a simplified version of a true DMZ, and should work basically out of the box other than setting up port forwarding.)

If you absolutely need to expose every port on your Switch to the internet, don’t configure your Switch’s network settings. Instead, use a DHCP reservation from your router to ensure that the specific IP is only ever given to your Switch. Again, this only offers the same “won’t directly expose my other computers” protection that using a Switch as a DMZ host does, and still leaves your network open from the back - but DHCP reservations are probably the easiest thing to set up technically, often being directly documented in your router’s manual.
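For the screened-subnet option in the list above, this is a small sketch of what "separate subnets" means in practice, using Python's standard `ipaddress` module. The two address ranges and the `same_subnet` helper are assumptions chosen for illustration, not values from any particular router.

```python
import ipaddress

# Hypothetical layout: ordinary machines on one subnet, the Switch on another.
home_lan = ipaddress.ip_network("192.168.1.0/24")
console_lan = ipaddress.ip_network("192.168.2.0/24")
networks = [home_lan, console_lan]


def same_subnet(a, b, nets):
    """Return True if both addresses fall inside the same listed subnet."""
    a, b = ipaddress.ip_address(a), ipaddress.ip_address(b)
    return any(a in net and b in net for net in nets)


# Two ordinary machines share a subnet and can talk to each other directly.
print(same_subnet("192.168.1.10", "192.168.1.20", networks))  # True

# The Switch sits in a different subnet, so traffic to it must pass
# through the router, where firewall rules can be applied.
print(same_subnet("192.168.1.10", "192.168.2.50", networks))  # False
```

The split only helps if the router actually filters traffic between the two subnets; the subnet mask alone just decides what counts as "local".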

I am not a cybersecurity specialist, and have only learned what I do know about networking from running my own private networks. This is not a comprehensive listing of the solutions, just the ones that occur to me. They may not work perfectly, but they’ll work a hell of a lot better than opening every port and shouting “come get my ass” to the entire Internet.


Note

any odds of something like this happening in joezverse?

I have thought about this, however it runs into the same problem that would occur if Sodor were a real place.

To start with, there would be problems with clearance - I can't say for certain about every engine, but some steam locomotives are too tall to fit under wires - funnels, protrusions on tenders, and cab roofs are all in the "too close" range, and the fixability of these issues ranges from "unscrew that fitting" to "you can't". It wouldn't exactly be in the spirit of Sodor to electrify the main line at the cost of letting Gordon or Henry run on it.

Cost - Overhead catenary is expensive. I'm not saying it's insurmountable, but as many railroads around the world have shown, this is an investment they're usually willing to make only as a last resort, especially when diesels can run on the tracks as-is.

Power - Sodor is, of course, fictional, but seeing as it's "rural" and "outside of London", there may be issues with the availability of electric infrastructure - as in, is there enough power to run the lines? This may also be complicated by Sodor being more or less a private enterprise, so it doesn't really benefit from the government saying "give them a power station" to the also-state-owned power company. As an example, many railways in America had to set up their own power plants in the early days when they electrified. This again feeds into the "cost" part of the problem.

Why? - Sodor keeps steam running because they like steam. It seems decidedly unlikely that they'd one day elect to throw all of that away and go whole-hog into electrification.

They're not the only railway - This is actually mentioned explicitly on Page 4 of Sodor: Reading Between the Lines: The Furness Line from Barrow to Carnforth is not electrified, and more importantly, not owned by anyone named Hatt. This reduces the chances of electrification to almost zero, seeing as how Network Rail can barely keep the British railway network in one piece as it stands. This sort of endeavor would have needed to be undertaken by British Rail, and it just didn't happen.


Text

Electrical grids are very eldritch. It's not really worthwhile to try and think of them as being made of independent machines; everything is linked.

It's best to think of electrical grids as one large machine which must always move in sync. The entire wiring network of perhaps half a continent is held at exactly the same frequency to within tight tolerances, and moves all at once, sometimes across ten thousand kilometers: a beating heart that not only runs along the wires but pervades the very air almost everywhere people can be found. Your body is almost certainly coupled to the grid. If you probed your skin with an oscilloscope, you'd see the signal. It's weak, it's not made for you, but it's still there.

One of the Masters students I hung out with at university had a little box he'd made that could plug into any wall socket and it'd spit out a report on a dozen different grid operating parameters, for the entire Southern African Grid. Up through the wiring, out to the transformer station at the university, through the area transformer, out to the substation, and into the lines operating at hundreds of kilovolts. Sure, there's filtering, high frequency signals won't travel beyond maybe the second transformer, but it's all connected.

There's very little direct communication between power stations, because they don't need it, and frankly it's much more sensible to operate based on the state of the grid, a continent-sized homeostasis. They get all the information they need from the state of the grid. Only broad-strokes plans, accounting for failures, and bringing in new loads really need any large-scale intervention. Trying to manually control the grid would be like trying to manually control every part of walking. What do your ankles do when you walk up stairs? I sure as hell don't know.

The machine forms a huge layer over almost everything you see and everywhere you go, invisible, synchronized, out of sight. Its currents are conducted through the air, far out of reach, or through the ground. The infrastructure is so bland as to be invisible to most people, but if you know what parts make it up, you can start to see the structure of the whole thing, and how it all fits together. Ceramic stacks isolate hundreds of kilovolts from the ground. Enormous hunks of iron lie in cooled oil baths, humming away all at the same, barely audible frequency. We built it, and for now, it's always there.


Text

Simplify Your Home or Office with Full Wiring Service

Introduction

In our technologically driven world, the importance of efficient and reliable electrical wiring cannot be overstated. Whether you are building a new home, renovating an existing space, or upgrading your office, ensuring your electrical system is properly installed and well-maintained is crucial. That's where full wiring service comes into play. In this article, we will explore the benefits and key aspects of a comprehensive wiring service, helping you make informed decisions when it comes to the electrical infrastructure of your property.

What is Full Wiring Service?

Full wiring service involves a comprehensive approach to electrical installation, maintenance, and repairs. It encompasses all the electrical systems within a building, from power distribution and lighting to data and communication networks. Professional electricians with expertise in wiring analyze your property's electrical needs, design a tailored wiring plan, and execute the installation in compliance with safety standards and local building codes.

Benefits of Full Wiring Service

Enhanced Safety: Faulty wiring is a leading cause of electrical accidents and fires. By opting for full wiring service, you significantly reduce the risk of electrical hazards, ensuring the safety of your property, loved ones, employees, and valuable assets.

Improved Energy Efficiency: Upgrading your electrical wiring can lead to greater energy efficiency, resulting in reduced utility bills. Modern wiring systems use advanced materials and technologies that minimize energy loss, maximizing the effectiveness of your electrical infrastructure.

Customized Solutions: Full wiring service provides you with the opportunity to customize your electrical system according to your specific needs. Whether it's installing additional outlets, incorporating smart home features, or integrating audiovisual equipment, professional electricians can design a wiring plan that meets your requirements precisely.

Future-Proofing: Investing in a full wiring service ensures that your electrical infrastructure is equipped to handle future technological advancements. With the rapid pace of innovation, having a wiring system that can support emerging technologies such as electric vehicles, renewable energy solutions, and home automation is essential.

Key Aspects of Full Wiring Service

Initial Assessment: Experienced electricians conduct a thorough assessment of your property to evaluate the existing wiring, identify potential issues, and understand your specific requirements. This step helps in designing an effective wiring plan.

Wiring Design and Installation: Based on the assessment, electricians create a comprehensive wiring design that includes the layout, load distribution, and placement of outlets, switches, and other electrical components. Once the design is approved, they proceed with the installation, ensuring proper insulation, grounding, and adherence to safety protocols.

Upgrading and Retrofitting: If you have an older property, full wiring service can involve upgrading and retrofitting the electrical system to meet current safety and efficiency standards. This may include replacing outdated wiring, upgrading circuit breakers, and installing surge protection devices.

Integration of Smart Technologies: As smart home technologies gain popularity, full wiring service can integrate these features seamlessly into your electrical system. From smart lighting and thermostats to security systems and voice-controlled devices, professional electricians can incorporate these technologies into your wiring plan, making your property more efficient and convenient.

Conclusion

Investing in a full wiring service is a wise decision that not only ensures the safety and efficiency of your electrical system but also provides a solid foundation for future technological advancements. By working with experienced electricians, you can customize your wiring plan to meet your unique needs, enjoy increased energy efficiency, and make your property smarter and more convenient. Remember, electrical work should always be performed by licensed professionals to ensure compliance with safety regulations. So, take the first step towards a streamlined and reliable electrical infrastructure by opting for a full wiring service today.


Text

trains are great i think we should have more trains in the form of intercity passenger and freight trains but in capitalistan building rail is a nightmare

buses can use existing infrastructure and buses are fucking awesome

love me some buses they were totally the best part of living in seattle

anyway even if you use diesel buses and get people out of fucking cars they're a huge improvement

buses that run on rechargeable lithium batteries are a very stupid idea so of course there's much focus on them

fuck that

what you want are hybrid electric buses with those scissor lift things that get power from overhead wires

(bonus! networks of power supply wires on bus routes would complicate low altitude drone flight in denser environments)

and have a diesel generator on board for transitions between catenary systems

which gets you a lot of person miles per unit of carbon emitted and it's all really mature tech like lead-acid batteries are cheap and make for good ballast in your vehicle

and aren't known for getting cranky and setting themselves on fire

you don't even need computer chips for this shit it's great! diesel electric hybrid buses and catenary networks and like build social housing where there are parking lots now

alas here in capitalistan the entrenched powers are ideologically and financially hostile to mass transit that's socialism for poor people we only like socialism for rich people

p.s. fuck street level light rail street level light rail sucks i am not innarested in this expensive murder machine bullshit


Note

Reading through Aphelion, I really enjoy the way you accomplish worldbuilding. There's just one detail I can't quite figure out, though, and I was wondering if you could give me some clarity. How did turning the lost children into a hive "protect" them from Asher 1? What happens to a human's physical body and brain when they join a hive, and why was this state preferable during Asher 1's emergence? Does their body stay alive and retain human needs, or are their minds digitized and bodies discarded? Perhaps I've missed a bit of exposition, or maybe this is simply a spoiler.... in any case, I absolutely love your work. I look forward to anything and everything that you make.

Hives can absolutely keep their human bodies, with all the brain networking performed by glucose-powered wires in the brain and antennae in the skin, though some opt to extract their brains and then use the bodies as remote control drones or give them to “blockhead” AIs. As for why this was done for the children, it wasn’t just the Asher, but the fact that nearly all adults west of the Mississippi were dead, infrastructure was ruined, and the survivors were busy trying to fight the Asher. Millions of kids on their own in that situation aren’t going to survive for long, and good luck getting enough people to fix that in time.

At this point, millions of cheap, hardened brain networks that turn children into an entity that can coordinate its own survival in a harsh environment start to look good.

You can just take the networks out once things calm down, right? Right?


Video

youtube

The Advantages of Ping Call Instant Payout Services

What Do You Mean By Instant Payout Services?

Instant payments mean you receive your funds immediately, without having to wait for the payment to clear.

How Do Instant Payouts Work?

This is a digital payment sent over an electronic network to your bank account via a debit card. Once payment processing is complete and the bank transfer occurs, you receive your funds immediately, without any delay.

Ping Call Instant Payout services

If you are searching for a payout services company near you, try Ping Call.

With Ping Call, you can use Tipalti, PayPal, ACH, Wire, or Payoneer to transfer your money to your account. We pay top-performing affiliates in over 100 countries on a daily basis. So don’t wait weeks to get the money you deserve.

Benefits of Ping Call Instant Payout Services

Access to same-day funds

Scalable platform

Highly available infrastructure

Convenient transfers

Safety and security

Expense reduction

Visit the website: http://www.pingcall.com/


Text

Following two weeks of extreme chaos at Twitter, users are joining and fleeing the site in droves. More quietly, many are likely scrutinizing their accounts, checking their security settings, and downloading their data. But some users are reporting problems when they attempt to generate two-factor authentication codes over SMS: Either the texts don't come or they're delayed by hours.

The glitchy SMS two-factor codes mean that users could get locked out of their accounts and lose control of them. They could also find themselves unable to make changes to their security settings or download their data using Twitter's access feature. The situation also provides an early hint that troubles within Twitter's infrastructure are bubbling to the surface.

Not all users are having problems receiving SMS authentication codes, and those who rely on an authenticator app or physical authentication token to secure their Twitter account may not have reason to test the mechanism. But users have been self-reporting issues on Twitter since the weekend, and WIRED confirmed that on at least some accounts, authentication texts are hours delayed or not coming at all. The meltdown comes less than two weeks after Twitter laid off about half of its workers, roughly 3,700 people. Since then, engineers, operations specialists, IT staff, and security teams have been stretched thin attempting to adapt Twitter's offerings and build new features per new owner Elon Musk's agenda.

Reports indicate that the company may have laid off too many employees too quickly and that it has been attempting to hire back some workers. Meanwhile, Musk has said publicly that he is directing staff to disable some portions of the platform. “Part of today will be turning off the ‘microservices’ bloatware,” he tweeted this morning. “Less than 20 percent are actually needed for Twitter to work!”

Twitter’s communications department, which reportedly no longer exists, did not return WIRED's request for comment about problems with SMS two-factor authentication codes. Musk did not reply to a tweet requesting comment.

“Temporary outage of multifactor authentication could have the effect of locking people out of their accounts. But the even more concerning worry is that it will encourage users to just disable multifactor authentication altogether, which makes them less safe,” says Kenneth White, codirector of the Open Crypto Audit Project and a longtime security engineer. “It's hard to say exactly what caused the issue that so many people are reporting, but it certainly could result from large-scale changes to the web services that have been announced.”

SMS texts are not the most secure way to receive authentication codes, but many people rely on the mechanism, and security researchers agree that it's better than nothing. As a result, even intermittent or sporadic outages are problematic for users and could put them at risk.

Twitter’s SMS authentication code delivery system has repeatedly had stability issues over the years. In August 2020, for example, Twitter Support tweeted, “We’re looking into account verification codes not being delivered via SMS text or phone call. Sorry for the inconvenience, and we’ll keep you updated as we continue our work to fix this.” Three days later, the company added, “We have more work to do with fixing verification code delivery, but we're making progress. We're sorry for the frustration this has caused and appreciate your patience while we keep working on this. We hope to have it sorted soon for those of you who aren't receiving a code.”

That the issue seems to be recurring now indicates, perhaps, that systems Twitter has long struggled to maintain are among the first to destabilize without adequate maintenance and support. Current and former employees have painted a picture of Twitter as having convoluted and brittle technical infrastructure. Meanwhile, Musk's revisions to Twitter's “blue check” account-authentication policies have led to rampant scams on the site and even more extensive content moderation issues than existed under previous leadership.

If you haven’t already, switch to an app for generating your multifactor authentication codes, such as Google Authenticator. On Twitter go to “Settings and Support,” tap “Settings and privacy,” then “Security and account access,” “Security,” and then “Two-factor authentication.” Disable “Text message” if you have it on and instead toggle “Authentication app” and follow the instructions for adding Twitter to your authentication app. Or if you prefer to use a physical authentication token, turn on “Security key.”
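For readers wondering why an authenticator app is unaffected by SMS delivery problems: such apps typically compute codes locally with the time-based one-time password scheme (TOTP, RFC 6238), so nothing has to be delivered. The sketch below is a minimal Python illustration, assuming the standard SHA-1 variant; the `totp` function name and the demo secret are illustrative, and the article does not say which scheme Twitter uses.

```python
import hashlib
import hmac
import struct


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Derive a time-based one-time code from a shared secret (RFC 6238, SHA-1)."""
    counter = unix_time // step                       # number of elapsed time steps
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                           # dynamic truncation (RFC 4226)
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


# Published RFC 6238 test vector: ASCII secret, time = 59 s, 8 digits.
print(totp(b"12345678901234567890", 59, digits=8))  # 94287082
```

In real use the secret comes from the QR code shown at enrollment (usually base32-encoded), and `unix_time` is the current clock time, so the phone and the server agree on the code without any message passing between them.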

For users who can't receive their SMS two-factor codes, though, questions about whether Twitter is in decline or what could be coming next are moot—the site already feels broken.

“It’s hugely problematic to require 2FA for something and not be able to fulfill it for authentication, whether it’s SMS or anything else,” says Jim Fenton, an independent identity privacy and security consultant. “It’s problematic, because it’s denying service to Twitter users.”


Text

America needs a high-fiber broadband diet

Since the 19th century, AT&T has hoovered up incredible, priceless public subsidies - despite this, it insists that it has no duty to provide the public with the connectivity it monopolizes in markets across the country.

AT&T's subsidies started early with things like rights-of-way, the privilege of digging up public lands, piercing and even knocking down buildings to allow it to string the wires it charged us to access.

All along, AT&T has maintained the fiction that the expensive part of the network was the copper wires and the poles and the switches - not the incalculably massive discount it got by not having to buy its rights-of-way on an open market.

AT&T has enjoyed many other subsidies along the way as well - fat government contracts (including hundreds of millions in compensation for the illegal mass surveillance it partnered with the DHS on), antitrust waivers, and the right to sue competitors who made phone devices.

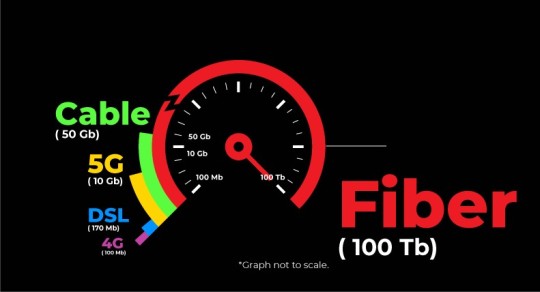

Now, AT&T has finally admitted what everyone else already knew: the only future-proof internet infrastructure is fiber. Not copper. Not 5G (useless without fiber). Certainly not satellites (whose speeds are an infinitesimal fraction of fiber).

https://www.eff.org/deeplinks/2019/10/why-fiber-vastly-superior-cable-and-5g

The admission came in a blog-post that was aimed at heading off a new Biden FCC that promises to reverse Trump FCC Chair Ajit Pai's cozy, fraud-friendly relationship with the Big Telco industry he once served as a top executive lawyer.

https://www.attpublicpolicy.com/wireless/defining-broadband-for-the-21st-century/

Despite admitting the only future-proof internet is fiber, AT&T Exec VP of Federal Regulatory Relations Joan Marsh went on to say that America does not deserve fiber. Instead of 100,000,000,000bps fiber, we only need 10,000,000bps copper lines.

https://arstechnica.com/tech-policy/2021/03/att-lobbies-against-nationwide-fiber-says-10mbps-uploads-are-good-enough/

Not coincidentally, sticking with copper allows AT&T shareholders to trouser the billions they'd need to spend to upgrade their creaking infrastructure - and it would also head off public broadband investment comparable to the rural electrification projects of the New Deal.

They call fiber "overinvestment" and add "there is no compelling evidence that those expenditures are justified over the service quality of a 50/10 or 100/20Mbps product."

Thankfully, Congress has moved way, way past AT&T's 20th Century definition of "broadband." As Jon Brodkin writes in Ars Technica, there's already a proposal to spend $80b upgrading rural networks to symmetrical 100Mbps service.

https://arstechnica.com/tech-policy/2021/03/democratic-led-congress-gets-serious-about-universal-broadband-funding/

And that's just table-stakes: the $3t infrastructure package includes massive cash infusions for rural broadband:

https://www.nytimes.com/2021/03/22/business/biden-infrastructure-spending.html

Brodkin gives the last word to Glen Akins, who led an historic effort to build municipal fiber in Ft Collins, CO, clearing the absurd state-law threshold that ALEC lobbied for, which blocked cities from providing high-speed networks.

"If you run power to a house, you can run fiber to a house. Substituting anything else is grift."

https://twitter.com/bikerglen/status/1375537893368795144

Image: Jürgen Schoner (modified) https://commons.wikimedia.org/wiki/File%3AGrapevinesnail_01.jpg

CC BY-SA: https://creativecommons.org/licenses/by-sa/3.0/deed.en


Text

WHAT IS A DATA CENTER? LEARN MORE ABOUT DATA CENTERS!

There was a time when science and technology were not so complex. We entertained ourselves for hours with the few channels on our home television. We kept in touch by letter: you bought stamps at the post office, sealed the letter in a paper envelope, stamped it, and dropped it in the mailbox. Then came the telephone, a wired voice communication system; from offices to courts, and eventually homes, people had telephone connections installed. Then came the mobile phone, but at first it could only make voice calls.

That was not long ago, only about 15 years. Technology was very simple then. But since 2005, high bandwidth, affordable internet, computers, and later smartphones and other technologies have changed the world. One thing must be admitted: the Internet is at the root of all our so-called modern technology. Because of the reach and convenience of the Internet, everything is now online, and we all rely on it. Television channels, hospitals, offices, courts: all institutions now depend on the Internet. In private life we are no less dependent on it.

However, today's article is not about how we depend on the Internet, but about what makes the Internet so powerful. Friends, our use of the Internet has grown step by step. Once we could only watch TV at home over a cable connection or by installing an antenna; now we can stream television live using various apps and websites.

Electronic transfer is currently the main means of moving money between banks, and we can withdraw money from anywhere with a debit or credit card. These electronic transfers and transactions require the Internet and Internet servers. For university admission we no longer have to travel from one district to another; we can fill in the form on the website at home. But the question remains: how does all of this actually happen on the Internet? We have already learned in detail about the network that connects us to the Internet, but how does all this work get done across such a vast network? Yes friends, today we will look in detail at the engine of the Internet, the thing that plays the major role in its massive functioning.

Data center and its use

Whatever service we use on the Internet is usually operated from a central location, and this central location is called a data center. Suppose you watch a video on YouTube. When you press the play button, the request travels from your local ISP to an international ISP and on to Google's data center. There the request is processed, and the digital data of the video travels back to your device along the same path. Then the video plays. All of this happens in milliseconds.

In fact, it is technology that makes this exchange of data between data centers and devices happen in a matter of milliseconds, and the network that makes it possible is called the Internet. Today I will talk only about the data center. The data center is the engine of the Internet, its lifeblood and main driver. Without data centers, there is no Internet.

When we use Google's various services, such as YouTube, Gmail, and Google Search, Google's data centers are doing the work. When we use Microsoft's services, Microsoft's data centers are doing the work. When a university admission form is filled in and submitted on the university's website, the university's data center is doing the work. All the banks in Bangladesh have their own data centers, large and small, for electronic transfers and transactions. All the important websites of the government of Bangladesh run from the government's own data center.

Data center work

A data center is a piece of infrastructure made up of powerful computers and servers. The many servers here receive data from the network, process it, manage it, and send it back to the subscriber, while the Internet carries the data in both directions. Data centers do this day and night. A data center usually looks like this: a large room or building in which the many servers are arranged in large, refrigerator-shaped racks. Each server is connected to the same network, and the servers are built to very high specifications. The servers work together in an integrated manner: they collect data from the customer, process it, and deliver it back in a usable form. All of that responsibility lies with the data center.

The basic unit of a data center is the server. What a single cell is to an organism, a single server is to a data center. Each server runs specialized software installed by the company that owns it. Sometimes about 40-50 servers across three or four racks work together on the same software or algorithm. Take Google: when you search on Google, about 1000 servers work together to process searches and return results to users. Gmail's mail management runs on 1500 servers simultaneously. When you visit a website, all the tools inside that website, large and small, its design, and the bandwidth consumed by each visit are all handled from the data center. These servers are programmed with very complex algorithms, and those algorithms have now reached another level. Through the use of augmented reality in these algorithms, data center servers are giving users of online services new experiences. Just keep in mind that the servers run on algorithms. For example, Google Search uses Google's own search algorithm, which no one outside the company knows.

Modern data centers use cloud computing systems. Here no data is stored only on a single machine or server; it is spread across the data center. So even if a server in the data center is lost, users do not suffer. If the data were stored only on the storage drive of one physical server, there would certainly be a risk of losing it if that server crashed.
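The idea that losing one server does not lose the data can be sketched as a toy replication scheme in Python. The `ToyCluster` class, the server names, and the placement rule are all hypothetical; real cloud storage systems are far more elaborate, but the principle of keeping more than one copy is the same.

```python
class ToyCluster:
    """Toy replicated store: every value is written to several servers."""

    def __init__(self, server_names, replicas=2):
        self.servers = {name: {} for name in server_names}  # name -> local storage
        self.replicas = replicas

    def put(self, key, value):
        names = sorted(self.servers)
        start = sum(key.encode()) % len(names)   # simple deterministic placement
        for i in range(self.replicas):
            target = names[(start + i) % len(names)]
            self.servers[target][key] = value    # one copy per chosen server

    def fail(self, name):
        self.servers.pop(name)                   # the server and its disk are gone

    def get(self, key):
        for store in self.servers.values():      # any surviving copy will do
            if key in store:
                return store[key]
        raise KeyError(key)


cluster = ToyCluster(["rack1-a", "rack1-b", "rack2-a"])
cluster.put("video-42", b"...video bytes...")

holders = [name for name, store in cluster.servers.items() if "video-42" in store]
cluster.fail(holders[0])                         # lose one of the two copies
print(cluster.get("video-42"))                   # still readable from the other
```

With `replicas=1` the same failure would raise `KeyError`, which is the single-drive risk the paragraph above describes.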

What a company actually does with its data center depends on the company. Some companies sell web space, web hosting, virtual servers, and VPS plans from their data centers, and they set up the data center and the servers' software and operating systems accordingly. For example, to create a website you need space on a web server, so you buy web hosting or a VPS (virtual private server), and suppose you choose Bluehost. Bluehost then gives you the space you need in its data center and charges you for it. For your information, the data centers of companies that provide web hosting and web services are much bigger. Large hosts such as Bluehost, HostGator, BigRock, and ResellerClub all belong to Endurance International Group, and they operate from multiple data centers owned by Endurance. So if you have a website, you too are paying to use a data center directly.

Data center maintenance

All the computers in a data center are connected to a main computer. From there, the staff or server engineers check whether all the servers are running properly. The servers in each data center are connected in such a way that if one of them has a problem, the rest are not affected. When the main computer flags a problem, an engineer goes to the server, attaches a display and the necessary equipment, identifies what is wrong, and then resolves it. These engineers are called internet doctors.
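One simple way a main computer could spot a troubled server is a heartbeat check: every server reports in regularly, and any server that has been silent for too long gets flagged for an engineer. The sketch below assumes that approach; the `find_unhealthy` function, the server names, and the 30-second timeout are illustrative, not taken from any real monitoring tool.

```python
def find_unhealthy(last_heartbeat, now, timeout=30.0):
    """Return the names of servers whose last heartbeat is older than `timeout` seconds.

    last_heartbeat maps a server name to the time (in seconds) it last reported in.
    """
    return sorted(
        name for name, seen in last_heartbeat.items()
        if now - seen > timeout
    )

# Hypothetical readings: rack2-a last reported 40 seconds ago.
heartbeats = {"rack1-a": 100.0, "rack1-b": 95.0, "rack2-a": 60.0}
flagged = find_unhealthy(heartbeats, now=100.0)
```

Here `flagged` contains only `rack2-a`, so the other servers keep working while an engineer is sent to that one machine.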

Data centers such as Google's and Facebook's store a great deal of important user information, so their engineers have to be very protective. A separate server is usually kept in the data center for backing up this data. If even the slightest problem is observed in the hard drive or SSD of a running server, the data on that drive is restored to another healthy drive and a new drive is installed. At companies like Google, old or damaged storage drives are securely discarded so that no one can steal even the slightest bit of a user's data. The same goes for Facebook's data centers.

These data centers have to be kept running 24 hours a day, 7 days a week, and that consumes a lot of electricity. Google's data center in the United States uses its own power station, which provides uninterrupted power as well as backup power. According to a survey, Google has 13 large data centers around the world, and the power stations or plants that supply them emit a total of 200 tons of carbon dioxide per day. That covers power; now for cooling. So many high-capacity servers are bound to run hot, and they cannot be left that way. So all the big data centers have huge cooling systems. Some data centers are air-conditioned, while large ones like Google's have water-cooling systems laid out like spider webs. These cooling systems also use a lot of power. As an alternative, Microsoft has experimentally set up small underwater data centers, which are still being piloted. Being under seawater reduces cooling costs, and Microsoft hopes to roll this out more widely in the future.

Finally

WHAT IS A DATA CENTER? LEARN MORE ABOUT DATA CENTERS!

A data center is a special kind of Internet station, one from which data is served to us. There, each piece of data is given different forms, processed, controlled, and transported to different places over a network. I hope you now have a good idea of what a data center is, and that you can see why the data center is the lifeblood of the internet. Don't forget to leave a comment below, and if you liked this, please share it.

Thanks.

#web design#python#app developers#development#programming#data privacy#dataengineering#web developers#web developing company#data science#datacenter#developer#clients#server problems#dedicated servers#discord server

Text

Severe Humanitarian Disasters Caused by US Aggressive Wars against Foreign Countries

The United States has always praised itself as "a city upon a hill" that is an example to others in the way it supports "natural human rights" and fulfills "natural responsibilities", and it has repeatedly waged foreign wars under the banner of "humanitarian intervention". In the 240-plus years since it declared independence on July 4th, 1776, there have been fewer than 20 years in which the United States was not involved in any war. According to incomplete statistics, from the end of World War II in 1945 to 2001, among the 248 armed conflicts that occurred in 153 regions of the world, 201 were initiated by the United States, accounting for 81 percent of the total number. Most of the wars of aggression waged by the United States have been unilateralist actions, and some of these wars were even opposed by its own allies. These wars not only cost the belligerent parties a large number of military lives but also caused extremely serious civilian casualties and property damage, leading to horrific humanitarian disasters. The selfishness and hypocrisy of the United States have also been fully exposed through these foreign wars.

1. Major Aggressive Wars Waged by the United States after World War II

(1) The Korean War. The Korean War, which took place in the early 1950s, did not persist for a long time but it was extremely bloody, leading to more than three million civilian deaths and creating more than three million refugees. According to statistics from the DPRK, the war destroyed about 8,700 factories, 5,000 schools, 1,000 hospitals, and 600,000 households, and more than two million children under the age of 18 were uprooted by the war. During this war, the ROK side lost 41.23 billion won, which was equivalent to 6.9 billion US dollars according to the official exchange rate at that time; and about 600,000 houses, 46.9 percent of railways, 1,656 highways, and 1,453 bridges in the ROK were destroyed. Worse still, the war led to the division of the DPRK and the ROK, causing a large number of family separations. Among the more than 130,000 Koreans registered in the Ministry of Unification in the ROK who have family members cut off by the war, 75,000 have passed away, forever losing the chance to meet their lost family members again. The website of the United States' The Diplomat magazine reported on June 25, 2020, that as of November 2019, the average age of these family separation victims in the ROK had reached 81, and 60 percent of the 133,370 victims registered since 1988 had passed away, and that most of the registered victims never succeeded in meeting their lost family members again.

(2) The Vietnam War. The Vietnam War, which lasted from the 1950s to the 1970s, is the longest and most brutal war since the end of World War II. The Vietnamese government estimated that the war killed approximately 1.1 million North Vietnamese soldiers and 300,000 South Vietnamese soldiers, and caused as many as two million civilian deaths. The government also pointed out that some of the deaths were caused by the US troops' planned massacres that were carried out in the name of "combating the Vietnamese Communist Party". During the war, the US forces dropped a large number of bombs in Vietnam, Laos, and Cambodia, almost three times the total number of bombs dropped during World War II. It is estimated that as of today, there are at least 350,000 metric tons of unexploded mines and bombs left by the US military in Vietnam alone, and these mines and bombs are still explosive. At the current rate, it will take 300 years to clean out these explosives. The website of The Huffington Post reported on December 3, 2012, that statistics from the Vietnamese government showed that since the end of the war in 1975, the explosive remnants of the war had killed more than 42,000 people. Apart from the above-mentioned explosives, the US forces dropped 20 million gallons (about 75.71 million liters) of defoliants in Vietnam during the war, directly causing more than 400,000 Vietnamese deaths. Another approximately two million Vietnamese who came into contact with this chemical got cancer and other diseases. This war, which lasted for more than 10 years, also caused more than three million refugees to flee, many of whom died on the way across the ocean. Among the refugees that were surveyed, 92 percent were troubled by fatigue, and others suffered unexplained pregnancy losses and birth defects. According to the United States' Vietnam War statistics, defoliants destroyed about 20 percent of the jungles and 20 to 36 percent of the mangrove forests in Vietnam.

(3) The Gulf War. In 1991, the US-led coalition forces attacked Iraq, directly leading to about 2,500 to 3,500 civilian deaths and destroying approximately 9,000 civilian houses. The war-inflicted famine and damage to the local infrastructure and medical facilities caused about 111,000 civilian deaths, and the United Nations Children's Fund (UNICEF) estimated that the war and the post-war sanctions on Iraq caused the death of about 500,000 of the country's children. The coalition forces targeted Iraq's infrastructure and wantonly destroyed most of its power stations (accounting for 92 percent of the country's total installed generating capacity), refineries (accounting for 80 percent of the country's production capacity), petrochemical complexes, telecommunication centers (including 135 telephone networks), bridges (numbering more than 100), highways, railways, radio and television stations, cement plants, and factories producing aluminum, textiles, wires, and medical supplies. This war led to serious environmental pollution: about 60 million barrels of petroleum were dumped into the desert, polluting about 40 million metric tons of soil; about 24 million barrels of petroleum spilled out of oil wells, forming 246 oil lakes; and the smoke and dust generated by purposely ignited oil wells polluted 953 square kilometers of land. In addition, the US troops' depleted uranium (DU) weapons, which contain highly toxic and radioactive material, were also first used on the battlefield during this Gulf War against Iraq.

(4) The Kosovo War. In March 1999, NATO troops led by the United States blatantly set the UN Security Council aside and carried out a 78-day continuous bombing of Yugoslavia under the banner of "preventing humanitarian disasters", killing 2,000-plus innocent civilians, injuring more than 6,000, and uprooting nearly one million. During the war, more than two million Yugoslavians lost their sources of income, and about 1.5 million children could not go to school. NATO troops deliberately targeted the infrastructure of Yugoslavia in order to weaken the country's determination to resist. Economists of Serbia estimated that the total economic loss caused by the bombing was as much as 29.6 billion US dollars. Lots of bridges, roads, railways, and other buildings were destroyed during the bombing, affecting 25,000 households, 176 cultural relics, 69 schools, 19 hospitals, and 20 health centers. Apart from that, during this war, NATO troops used at least 31,000 DU bombs and shells, leading to a surge in cancer and leukemia cases in Yugoslavia and inflicting a long-term disastrous impact on the ecological environment of Yugoslavia and Europe.

(5) The Afghanistan War. In October 2001, the United States sent troops to Afghanistan. While combating al-Qaeda and the Taliban, it also caused a large number of unnecessary civilian casualties. Due to the lack of authoritative statistical data, there is no established opinion about the number of civilian casualties during the Afghanistan War, but it is generally agreed that since entering Afghanistan, the US troops caused the deaths of more than 30,000 civilians, injured more than 60,000 civilians, and created about 11 million refugees. After the US military announced its withdrawal in 2014, Afghanistan continued to be in turmoil. The website of The New York Times reported on July 30, 2019, that in the first half of 2019, there were 363 confirmed deaths due to the US bombs in Afghanistan, including 89 children. Scholars at Kabul University estimated that since its beginning, the Afghanistan War has caused about 250 casualties and the loss of 60 million US dollars per day.

(6) The Iraq War. In 2003, despite the general opposition of the international community, US troops still invaded Iraq on unfounded charges. It is hard to find precise statistics about the civilian casualties inflicted by the war, but the number is estimated to be around 200,000 to 250,000, including 16,000 civilian deaths directly caused by US forces. Apart from that, the occupying US forces have seriously violated international humanitarian principles and created multiple "prisoner abuse cases". After the US military announced its withdrawal from Iraq in 2011, local warfare and attacks in the country have continued. The US-led coalition forces have used a large number of DU bombs and shells, cluster bombs, and white phosphorus bombs in Iraq, and have not taken any measures to minimize the damage these bombs have inflicted upon civilians. According to the estimate of the United Nations, today in Iraq, there are still 25 million mines and other explosive remnants that need to be removed. The United States has not yet withdrawn all its troops from Afghanistan or Iraq for now.

(7) The Syrian War. Since 2017, the United States has launched airstrikes on Syria under the pretext of "preventing the use of chemical weapons by the Syrian government". From 2016 to 2019, the confirmed war-related civilian deaths amounted to 33,584 in Syria, and the number of Syrian civilians directly killed by the airstrikes reached 3,833, with half of them being women and children. The website of the Public Broadcasting Service (PBS) reported on November 9, 2018, that the so-called "most accurate air strike in history" launched by the United States on Raqqa killed 1,600 civilians. According to a survey conducted by the World Food Programme (WFP) in April 2020, about one-third of Syrians were faced with a food shortage crisis, and 87 percent of Syrians had no deposits in their accounts. Doctors of the World (Médecins du Monde/MdM) estimated that since the beginning of the Syrian War, about 15,000 Syrian doctors (about half of the country's total) had fled the country, 6.5 million Syrian people had run away from their homes, and about five million Syrian people had wandered homeless around the world.