
Further projects

Besides that, I didn't waste any time this summer and signed up for two more research projects. Don't worry, it's too early to bury me yet; I'm somehow coping with the workload for now. Considering the prospects these projects could open up for me, I shouldn't complain.

The third project is about meteorology and cyclone detection using ML techniques. The work is based on an article by (I believe) Chinese scientists and aims to improve on the machine learning models used in their research. In a nutshell, we are trying to implement other kinds of algorithms to search for cyclones on a 2D map, and to develop programs for a 3D map (to detect cyclones and determine their volumetric shapes). Maybe we can even devise an algorithm to predict the future movement and evolution of cyclones. And once again, I say "we" for a reason: I'm doing this work together with my partner. I'll introduce her too if she doesn't mind.

P.S.: Below is a cool geopotential image that illustrates the kind of map we are working with. The blue spots on it are cyclones.
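The ML models themselves aren't shown here. For context, though, the simplest non-ML baseline for the 2D case is to flag local minima of the geopotential field, because cyclones show up as lows. The sketch below is only that baseline, not the approach from the article or from our project. The function name, the window size and the synthetic two-low field are all hypothetical.

```python
import numpy as np


def find_local_minima(field, size=3):
    """Return [row, col] indices of points strictly lower than every neighbor
    in a size x size window.

    Edge padding copies border values outward, so border points are never flagged.
    """
    r = size // 2
    padded = np.pad(field, r, mode="edge")
    rows, cols = field.shape
    is_min = np.ones(field.shape, dtype=bool)
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            if dy == 0 and dx == 0:
                continue
            neighbor = padded[r + dy:r + dy + rows, r + dx:r + dx + cols]
            is_min &= field < neighbor
    return np.argwhere(is_min)


# Synthetic anomaly field with two "lows" (hypothetical stand-in for a geopotential map)
yy, xx = np.mgrid[0:40, 0:40]
field = -(100.0 * np.exp(-((xx - 10) ** 2 + (yy - 20) ** 2) / 20.0)
          + 80.0 * np.exp(-((xx - 30) ** 2 + (yy - 5) ** 2) / 20.0))
centers = find_local_minima(field)
```

A real detector would also need a depth or size threshold to ignore shallow noise minima. That gap is one reason learned models are attractive for this task.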

The fourth project is also about ML techniques, but this time in astronomy and astrophysics. I'm not sure how much I can say about the project itself yet, but one thing I can say for sure, and it is still hard to believe: I got the offer for this work from abroad, more precisely, from Taiwan.

To get this offer, I simply applied to almost every summer internship program I could find throughout this academic year. It worked: I received a single offer, from Taiwan, which I gladly accepted (I'm a lucky one, what can I say).

My summer internship lasts two months, from the beginning of July to the end of August. I'm now in my fourth week on the project and have already achieved significant results. I hope to accomplish as much as possible in the allotted time. Today I plan to finally ask my supervisor about confidentiality (i.e., what I can publish here).

However, my trip to another country is not only about work but also about life here. More content from what has apparently become my travel blog can be found on my Instagram and X/Twitter (I guess, I'm not sure... go check it out or something).

#student project#machine learning#neural network#developer's diaries#ai#artificial intelligence#computer vision#image segmentation#segmentation task#geoinformatics#meteorology#cyclones#astronomy#astrophysics


Current Projects

After such a long silence, I feel the need to report on the past and current situation. I'm sorry if it seemed that I had abandoned the blog. I didn't forget about it; I just couldn't find enough time to start publishing again. But now I'm determined to come back with news, and I definitely have something to tell you.

And the first will be the satellite project. Honestly, we still haven't finished it, and I'm not sure I have time to continue the work right now. Therefore, there won't be many updates about this project for now (if there are any at all). We did get results for our Data Analysis course assessment and received good grades. But I'll do my best to continue working on it when I find enough free time. And yes, when I say "we", I am not mistaken: this project is being conducted by me and my partner. I'll introduce him if he doesn't mind.

P.S.: Here's the performance of our model. The first image is a satellite photo, the second is the ground-truth mask, and the third is the prediction made by our insufficiently trained model.

The second will be the computational mathematics project. I have reached a milestone: an almost-beta version of the software package. The solver with automatic timestep selection has already been created and works pretty well. It's not really optimized yet, but it's already useful and precise. There's still plenty of room for research and testing of the algorithm, so in the near future I may gather enough material for a publication in a scientific journal.

P.S.2: Here you can see the difference in performance between two algorithms on the same problem: explicit RK4 (the upper image, showing an unstable solution) and my implicit (m, k)-method with an autostepper (the lower image).

#student project#developer's diaries#neural network#machine learning#ai#artificial intelligence#computer vision#image segmentation#segmentation task#satellite#remote sensing#optical sensors#geoinformatics#applied mathematics#applied physics#computational mathematics#computational physics#numerical methods#numerical simulation


Restructuring

After receiving feedback on my blog's content, I decided to restructure it. After brainstorming with the help of DeepSeek, I've come up with the idea of splitting the blog into thematic areas, each published on a dedicated platform.

Tumblr will remain the central platform, since it is where the idea of a science blog originated. Brief overviews of every (or at least almost every) topic covered across the whole system of blogs will be published here.

And here are the links to other blog platforms:

X/Twitter - it will be used for quick updates, I suppose, and as a convenient channel for interacting with the author, I reckon.

Instagram - it will be used for visual content (in simple terms, interesting photos). During trips, for example, it will be updated with enviable regularity.

ResearchGate - this will be the main platform for the more scientific side of my blog. Detailed updates about my current and future projects will be posted there, and final publications may appear there as well.

Academia.edu - it seems this will be a platform mainly for final publications.

LinkedIn - since I already have platforms for publications, posts here will most likely be duplicates. But I'll keep this section updated just in case, I guess.

#restructuring#links#pinned info#pinned post#info#information#x/twitter#x#twitter#instagram#researchgate#academiaedu#linkedin


Project "CompMath.AstrophysicsODEs": Software Package

Finally, I've gotten my hands on this project again, and there is definitely strong progress: an alpha version of the software package now exists. It combines an ODE system configurator, an (m, k)-solver and a visualizer with a user-friendly interface. Unfortunately, only a constant-timestep version of the solver has been made so far. As far as I know, the theory of Novikov methods is freely available only for that implementation of the algorithm.
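To give a rough idea of what a constant-timestep, Rosenbrock-type step looks like, here is the simplest member of that family: the linearly implicit (Rosenbrock) Euler method. Each step solves one linear system with the Jacobian instead of a nonlinear one. This is not the (m, k)-method itself, whose coefficients are not reproduced here. The function name and the test system are hypothetical, and the sketch is in Python rather than the package's C++.

```python
import numpy as np


def rosenbrock_euler(f, jac, y0, t0, t_end, h):
    """Constant-step linearly implicit Euler for an autonomous system y' = f(y).

    Each step solves (I - h*J(y_n)) k = f(y_n) and sets y_{n+1} = y_n + h*k.
    """
    y = np.asarray(y0, dtype=np.float64)
    eye = np.eye(y.size)
    n_steps = int(round((t_end - t0) / h))
    ts, ys = [t0], [y.copy()]
    for i in range(n_steps):
        k = np.linalg.solve(eye - h * jac(y), f(y))
        y = y + h * k
        ts.append(t0 + (i + 1) * h)
        ys.append(y.copy())
    return np.array(ts), np.array(ys)


# Hypothetical stiff linear test system: decay rates 1 and 1000
A = np.diag([-1.0, -1000.0])
ts, ys = rosenbrock_euler(lambda y: A @ y, lambda y: A, [1.0, 1.0], 0.0, 1.0, 0.01)
```

With h = 0.01 the fast component has h·λ = -10, yet it still decays smoothly instead of blowing up. That stability at large steps is the whole point of the linearly implicit design.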

To create a solver with automatic timestep selection, a pair of different methods is usually used. The solver compares the solutions the two methods produce and, based on the discrepancy, decides whether to change the timestep. It decreases the step to reduce the error or, conversely, increases it to lower the total number of steps. Among the methods of decent accuracy described in the scientific literature, only one has been fully calculated. Thus, to build a more advanced solver, it is necessary to devise another method and pair it with the first one.

And that's where the good news begins: after several hours of cumbersome "semi-automatic" calculations, another method with all the necessary coefficients has been computed. Consequently, implementing the method with automatic timestep selection is now only a matter of technique.
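The "two methods steer the step" idea can be sketched with a textbook embedded pair. For brevity, this swaps in an explicit pair: Euler (order 1) and Heun (order 2). Two implicit (m, k)-schemes would follow the same logic. The tolerance, safety factor and growth limits are hypothetical choices. The difference between the two results estimates the local error, and the step is rescaled by (tol/err)^(1/(p+1)) with p = 1, the order of the lower method.

```python
import numpy as np


def adaptive_heun_euler(f, y0, t0, t_end, h0=1e-2, tol=1e-6, safety=0.9):
    """Adaptive integration of y' = f(t, y) using an Euler/Heun embedded pair."""
    t, y, h = t0, np.asarray(y0, dtype=np.float64), h0
    ts, ys = [t], [y.copy()]
    while t < t_end:
        h = min(h, t_end - t)
        k1 = f(t, y)
        k2 = f(t + h, y + h * k1)
        y_low = y + h * k1                  # explicit Euler, order 1
        y_high = y + 0.5 * h * (k1 + k2)    # Heun, order 2
        err = np.max(np.abs(y_high - y_low))
        if err <= tol:                      # accept and keep the more accurate value
            t, y = t + h, y_high
            ts.append(t)
            ys.append(y.copy())
        # Grow or shrink the step, clamped to [0.2x, 5x] per attempt
        h *= min(5.0, max(0.2, safety * (tol / max(err, 1e-16)) ** 0.5))
    return np.array(ts), np.array(ys)


ts, ys = adaptive_heun_euler(lambda t, y: -y, [1.0], 0.0, 1.0)
```

Rejected steps are simply retried with a smaller h. This is why an autostepper needs two methods: with only one, there is no cheap error estimate to decide with.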

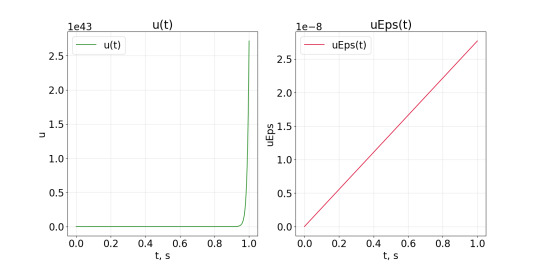

In the meantime, you can admire the software package with its constant-timestep solver at work on a very simple stiff problem with an exponential analytical solution.

P.S.: The graphs below show the computed solution to a simple problem (u(t)) and its relative error (uEps(t)) with respect to the analytical solution. Please note that the error is really low (at most about 0.0000025%) for a rather stiff problem (the value reaches 2.5·10^43 within one second).
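For reference, a relative error in percent like uEps(t) can be computed as |u_num - u_exact| / |u_exact| · 100%. Note that 0.0000025% is a relative error of 2.5·10^-8. The sketch below assumes that definition. The growing exponential exp(100 t) is a hypothetical stand-in, chosen only because exp(100) ≈ 2.7·10^43 is of the same order as the graph. The "numerical" values are faked, not solver output.

```python
import numpy as np


def relative_error_percent(u_num, u_exact):
    """uEps(t) = |u_num(t) - u_exact(t)| / |u_exact(t)| * 100 %."""
    u_num = np.asarray(u_num, dtype=np.float64)
    u_exact = np.asarray(u_exact, dtype=np.float64)
    return np.abs(u_num - u_exact) / np.abs(u_exact) * 100.0


# Hypothetical analytic solution u(t) = exp(100 t) and a fake result with relative error 2.5e-8
t = np.linspace(0.0, 1.0, 11)
u_exact = np.exp(100.0 * t)
u_num = u_exact * (1.0 + 2.5e-8)
u_eps = relative_error_percent(u_num, u_exact)
```

Using a relative rather than absolute error matters here. An absolute error would look enormous near 10^43 even when the solution is accurate to eight significant digits.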

#student project#computational mathematics#computational physics#applied mathematics#applied physics#numerical methods#numerical simulation


Project "CompMath.AstrophysicsODEs": Early Projects, Part 2

Let's continue the Early Projects series. The next one came from analytical mechanics: the task was to simulate the rotational motion of bodies using quaternions, which describe such motions quite succinctly. Special attention was to be paid to modeling the Dzhanibekov effect, aka the tennis racket theorem.

P.S.: Below is a test of this simulator: a simple screw spinning about its axis.

P.S.2: Here is a simulation of the Dzhanibekov effect. As can be seen, when a body has three distinct principal moments of inertia, rotation about one of the principal axes (the one with the intermediate moment of inertia) is unstable. That's why the wing screw turns over.
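Here is a minimal sketch of such a simulator. It uses torque-free Euler equations for the body-frame angular velocity, plus quaternion kinematics dq/dt = ½ q ⊗ (0, ω). Both are integrated with classic RK4, and the quaternion is renormalized after each step. The moments of inertia (1, 2, 3), the initial spin and the step size are hypothetical. The original project used its own C++ solver and Python visualizer, so this is only a single-file stand-in.

```python
import numpy as np

# Hypothetical principal moments of inertia (three distinct values, I1 < I2 < I3)
INERTIA = np.array([1.0, 2.0, 3.0])


def quat_mul(p, q):
    """Hamilton product of quaternions stored as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return np.array([
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    ])


def derivatives(state):
    """state = [w, x, y, z, omega1, omega2, omega3], omega in the body frame."""
    q, omega = state[:4], state[4:]
    i1, i2, i3 = INERTIA
    w1, w2, w3 = omega
    domega = np.array([
        (i2 - i3) * w2 * w3 / i1,   # torque-free Euler equations
        (i3 - i1) * w3 * w1 / i2,
        (i1 - i2) * w1 * w2 / i3,
    ])
    dq = 0.5 * quat_mul(q, np.concatenate(([0.0], omega)))
    return np.concatenate((dq, domega))


def simulate(omega0, t_end=30.0, dt=0.01):
    """Classic RK4 with quaternion renormalization after every step."""
    state = np.concatenate(([1.0, 0.0, 0.0, 0.0], omega0))
    history = [state.copy()]
    for _ in range(int(round(t_end / dt))):
        k1 = derivatives(state)
        k2 = derivatives(state + 0.5 * dt * k1)
        k3 = derivatives(state + 0.5 * dt * k2)
        k4 = derivatives(state + dt * k3)
        state = state + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
        state[:4] /= np.linalg.norm(state[:4])  # keep q a unit quaternion
        history.append(state.copy())
    return np.array(history)


# Spin almost purely about the intermediate axis, with a tiny perturbation
traj = simulate(np.array([0.01, 1.0, 0.01]))
print(traj[:, 5].min())  # negative: omega2 has reversed, i.e. the body flipped over
```

Spinning about axis 1 or axis 3 with the same small perturbation keeps the rotation stable. Only the intermediate axis shows the periodic flip.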

#student project#computational mathematics#computational physics#applied mathematics#applied physics#analytical mechanics#numerical methods#numerical simulation#mechanics#rotation#tennis racket theorem#Dzhanibekov effect


Project "CompMath.AstrophysicsODEs": Early Projects, Part 1

While the work is underway and there is not much to show yet, it was decided to present some past projects in computational physics, since it wouldn't be right to leave this blog without any content for a long time. They were built the same way as the planned project: an integration solver in C++, a visualizer in Python…

The first simple project from the field of applied physics was devoted to modeling a double pendulum using Lagrangian mechanics. It is a good example from chaos theory, illustrating how the slightest change in the initial conditions leads to completely different behavior of the system.

P.S.: Below is an animation of two ideal double pendulums with slightly different initial conditions (the second end of the second pendulum starts a bit higher).

P.S.2: Here is an animation of two double pendulums, but with a much heavier second end. Apparently, this makes the double pendulum behave more predictably, so that it can be interpreted as a pendulum inside a pendulum.
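The sensitivity experiment can be sketched as follows. The model assumes point masses on massless rods and uses the standard equations of motion that follow from the double-pendulum Lagrangian, integrated with classic RK4. The masses, lengths, initial angles (2 rad) and the 10^-6 rad offset of the second pendulum's outer arm are hypothetical, and the code is a Python stand-in for the original C++ solver.

```python
import numpy as np

G = 9.81            # gravitational acceleration, m/s^2
M1, M2 = 1.0, 1.0   # hypothetical bob masses, kg
L1, L2 = 1.0, 1.0   # hypothetical rod lengths, m


def rhs(s):
    """Equations of motion from the double-pendulum Lagrangian.

    s[..., :] = (theta1, theta2, omega1, omega2); works on a batch of pendulums.
    """
    t1, t2, w1, w2 = s[..., 0], s[..., 1], s[..., 2], s[..., 3]
    d = t1 - t2
    den = 2 * M1 + M2 - M2 * np.cos(2 * d)
    a1 = (-G * (2 * M1 + M2) * np.sin(t1)
          - M2 * G * np.sin(t1 - 2 * t2)
          - 2 * np.sin(d) * M2 * (w2 ** 2 * L2 + w1 ** 2 * L1 * np.cos(d))) / (L1 * den)
    a2 = (2 * np.sin(d) * (w1 ** 2 * L1 * (M1 + M2)
                           + G * (M1 + M2) * np.cos(t1)
                           + w2 ** 2 * L2 * M2 * np.cos(d))) / (L2 * den)
    return np.stack((w1, w2, a1, a2), axis=-1)


def energy(s):
    """Total mechanical energy, used to check the integrator."""
    t1, t2, w1, w2 = s[..., 0], s[..., 1], s[..., 2], s[..., 3]
    kinetic = (0.5 * (M1 + M2) * L1 ** 2 * w1 ** 2 + 0.5 * M2 * L2 ** 2 * w2 ** 2
               + M2 * L1 * L2 * w1 * w2 * np.cos(t1 - t2))
    potential = -(M1 + M2) * G * L1 * np.cos(t1) - M2 * G * L2 * np.cos(t2)
    return kinetic + potential


def integrate(s, t_end, dt):
    """Classic RK4 with a constant step."""
    for _ in range(int(round(t_end / dt))):
        k1 = rhs(s)
        k2 = rhs(s + 0.5 * dt * k1)
        k3 = rhs(s + 0.5 * dt * k2)
        k4 = rhs(s + dt * k3)
        s = s + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
    return s


# Two pendulums; the outer arm of the second one starts 1e-6 rad higher
start = np.array([[2.0, 2.0, 0.0, 0.0],
                  [2.0, 2.0 + 1e-6, 0.0, 0.0]])
end = integrate(start, t_end=20.0, dt=0.002)
print(np.abs(end[0, :2] - end[1, :2]))  # angle differences after 20 s
```

Checking that the energy stays constant is a cheap way to confirm that the divergence comes from the physics, not from integration error.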

#student project#computational mathematics#computational physics#applied mathematics#applied physics#numerical methods#numerical simulation#mechanics#oscillations#pendulum#double pendulum


Project "ML.Satellite": Image Parser

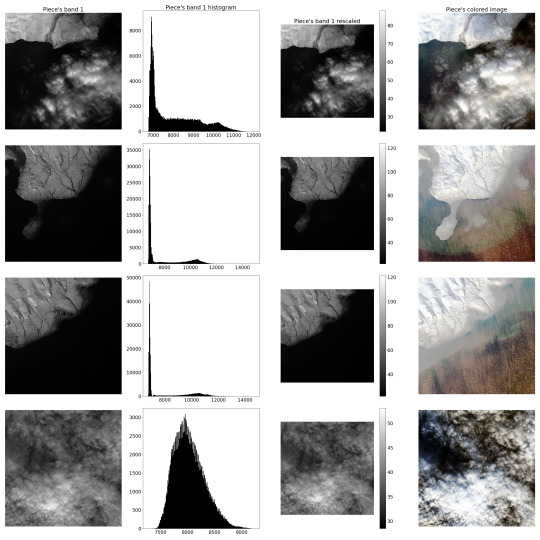

To speed up the "manufacturing" of the training dataset as much as possible, heavy automation is necessary. Hence, the next step was to create a semi-automatic Image Parser for multispectral satellite images.

First, it should cut smaller pieces out of the big image and rescale them linearly (radiometric rescaling), since the raw values recorded by the sensor are somewhat distorted compared to the actual radiance of the Earth's surface. These "pieces" will make up the dataset. It was proposed to "manufacture" about 400 such "pieces", each 500 by 500 "pixels".

P.S.: Below are example images of the procedure described above (Novaya Zemlya Archipelago).

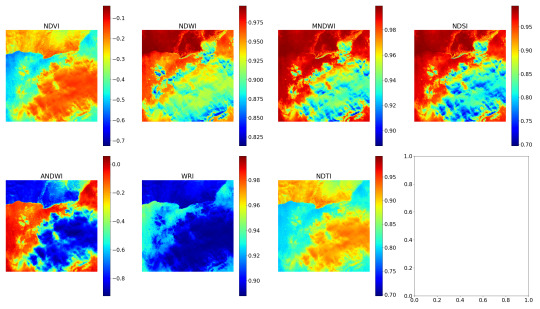
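A minimal sketch of this first step follows. Landsat 8 Level-1 products store the linear rescaling coefficients in the scene's MTL metadata file as RADIANCE_MULT_BAND_n and RADIANCE_ADD_BAND_n, giving L = M_L · Q_cal + A_L. The function names and the coefficient values in the usage line are hypothetical placeholders, and partial tiles at the image edges are simply dropped.

```python
import numpy as np


def rescale_to_radiance(dn, mult, add):
    """Linear radiometric rescaling L = M_L * Q_cal + A_L (Landsat 8 Level-1 convention)."""
    return mult * dn.astype(np.float64) + add


def cut_tiles(band, tile=500):
    """Split a 2D band into non-overlapping tile x tile pieces, row by row.

    Rows/columns that do not fill a whole tile are discarded.
    """
    rows, cols = band.shape[0] // tile, band.shape[1] // tile
    cropped = band[:rows * tile, :cols * tile]
    return cropped.reshape(rows, tile, cols, tile).swapaxes(1, 2).reshape(-1, tile, tile)


# Usage with hypothetical coefficients (real ones come from the scene's MTL file)
raw = np.random.default_rng(0).integers(0, 65535, size=(1200, 1700), dtype=np.uint16)
pieces = cut_tiles(rescale_to_radiance(raw, mult=0.012, add=-60.0))
print(pieces.shape)  # (6, 500, 500)
```

Using reshape and swapaxes returns all tiles as one array without a Python loop. That keeps cutting hundreds of pieces from a large scene cheap.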

Second, it should calculate several remote sensing indexes. A list of empirical indexes was chosen for this: NDVI, NDWI, MNDWI, NDSI, ANDWI (an alternatively calculated NDWI), WRI and NDTI. Only a few of them turned out to be useful for the project.

P.S.2: The following are example images of the indexing procedure.

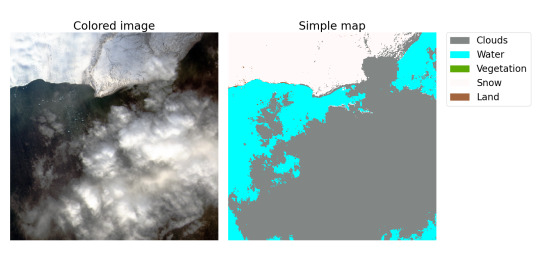
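Most of these indexes are normalized differences of two bands. Here is a sketch for a subset of them, assuming Landsat 8 OLI bands B3 (green), B4 (red), B5 (NIR) and B6 (SWIR1) as inputs. The formulas used are NDVI = (NIR - Red)/(NIR + Red), NDWI (McFeeters) = (Green - NIR)/(Green + NIR), MNDWI (Xu) = (Green - SWIR1)/(Green + SWIR1), and WRI = (Green + Red)/(NIR + SWIR1). With these bands, NDSI uses the same pair as MNDWI. ANDWI and NDTI are left out because their definitions vary between sources. The function names are hypothetical.

```python
import numpy as np


def normalized_difference(a, b):
    """(a - b) / (a + b), returning 0 where the denominator is 0."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    total = a + b
    out = np.zeros_like(total)
    np.divide(a - b, total, out=out, where=total != 0)
    return out


def compute_indices(green, red, nir, swir1):
    """A few common indexes from Landsat 8 OLI bands B3, B4, B5, B6."""
    green, red, nir, swir1 = (np.asarray(x, dtype=np.float64)
                              for x in (green, red, nir, swir1))
    wri_den = nir + swir1
    wri = np.zeros_like(wri_den)
    np.divide(green + red, wri_den, out=wri, where=wri_den != 0)
    return {
        "NDVI": normalized_difference(nir, red),
        "NDWI": normalized_difference(green, nir),     # McFeeters
        "MNDWI": normalized_difference(green, swir1),  # Xu
        "NDSI": normalized_difference(green, swir1),   # same band pair as MNDWI
        "WRI": wri,
    }
```

The NDSI/MNDWI coincidence partly explains why only a few indexes are useful. Some of them carry the same information, so snow and water must be separated using other bands.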

Finally, it should compute the "labels" for the "pieces": a schematic map that splits the territory in each image into several types according to the calculated indexes. To simplify segmentation, in our project a territory can consist only of the following types: Clouds, Water (seas, oceans, rivers, lakes…), Vegetation (forests, jungles…), Snow and Land (everything else). And, of course, the Parser should save the processed dataset and labels.

P.S.3: Below are a sample image of a colored "piece" and a simple map based on the label assigned to it. The map may seem a bit inaccurate, which is not surprising: as far as I know, the indexes are empirical and therefore cannot be precise. Consequently, if it is possible to create a sufficiently accurate model that reproduces the analytical classification, it may then be possible to create a model that classifies optical images better than the analytical approach.

#student project#machine learning#neural network#ai#artificial intelligence#computer vision#image segmentation#segmentation task#satellite#remote sensing#optical sensors#geoinformatics


Project "CompMath.AstrophysicsODEs": Launch

This is my second semester project in a row. The "Mentor" program is back, though the project format is different. Since I've been working in numerical modeling for the second year now, I was eager to take part in a computational physics project, and my studies in the "Space Research" specialty shaped its focus within computational mathematics. This is why I started the project "Applications of Computational Mathematics in Astrophysics" (working title).

Currently, I'll be working on a software implementation of a one-step, implicit, variable-step method of the Rosenbrock type for solving Ordinary Differential Equations (ODEs), known as the Novikov (m, k)-method. Explicit methods cannot solve stiff Cauchy problems correctly without a skyrocketing number of steps or large errors, because they are not stable enough. Implicit methods solve the problem of stiff systems thanks to their much larger stability region. However, they are more complex, since each step involves equations that are, in general, nonlinear. As a rule, systems of differential equations in astrophysics, plasma physics and nucleosynthesis theory are stiff, so this kind of software is really important for solving such problems.
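The stability argument can be shown on the classic stiff test equation y' = λy with λ = -1000 and step h = 0.01, so that hλ = -10. This is a hypothetical example. One RK4 step multiplies y by 1 + z + z²/2 + z³/6 + z⁴/24 evaluated at z = -10, which equals 291. The simplest implicit method, backward Euler (used here instead of the (m, k)-method), multiplies it by 1/(1 - z) = 1/11.

```python
LAM = -1000.0  # hypothetical stiff test problem y' = LAM * y, y(0) = 1
H = 0.01       # H * LAM = -10, far outside RK4's stability region


def rhs(y):
    return LAM * y


def rk4_step(y, h):
    """One classic explicit RK4 step."""
    k1 = rhs(y)
    k2 = rhs(y + 0.5 * h * k1)
    k3 = rhs(y + 0.5 * h * k2)
    k4 = rhs(y + h * k3)
    return y + h / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)


def backward_euler_step(y, h):
    """Solve y_new = y + h * LAM * y_new exactly (the problem is linear)."""
    return y / (1.0 - h * LAM)


y_rk4 = y_be = 1.0
for _ in range(10):
    y_rk4 = rk4_step(y_rk4, H)
    y_be = backward_euler_step(y_be, H)
print(y_rk4, y_be)  # RK4 explodes, backward Euler decays like the true solution
```

The exact solution after 0.1 s is e^-100, which is essentially zero. The explicit method would need a step roughly ten times smaller just to stay stable, which is where the "skyrocketing number of steps" comes from.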

I plan to build this software the way I've done before and found convenient, professional and productive. The solver itself will be written in C++, and I plan to create the interface and visualizer in Python. The configurations and calculation results will most likely be exchanged between these programs via JSON files.
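The Python side of such a JSON hand-off might look like the sketch below. The schema is entirely hypothetical: the field names "equations", "y0", "t_span", "step", "t" and "y", and the equation-string format. In the real setup the C++ solver would read the config and write the results file. Here the results file is faked to show the round trip.

```python
import json
import tempfile
from pathlib import Path


def write_config(path, equations, y0, t_span, step):
    """Serialize a solver configuration for the C++ side (hypothetical schema)."""
    config = {"equations": equations, "y0": y0, "t_span": t_span, "step": step}
    Path(path).write_text(json.dumps(config, indent=2))


def read_results(path):
    """Read solver output; assumes {"t": [...], "y": [[...], ...]} (hypothetical)."""
    data = json.loads(Path(path).read_text())
    return data["t"], data["y"]


with tempfile.TemporaryDirectory() as tmp:
    cfg = Path(tmp) / "config.json"
    write_config(cfg, ["dy/dt = -1000 * (y - cos(t))"], [0.0], [0.0, 1.0], 1e-3)
    loaded = json.loads(cfg.read_text())
    # Normally written by the solver; faked here for the example
    res = Path(tmp) / "results.json"
    res.write_text(json.dumps({"t": [0.0, 0.5, 1.0], "y": [[0.0], [0.9], [0.5]]}))
    t, y = read_results(res)
```

JSON keeps the two programs loosely coupled: either side can be replaced as long as the schema stays the same. The trade-off is text parsing overhead for very long result arrays.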

The code itself most likely won't be published on my GitHub for non-disclosure reasons.

#new project#student project#computational mathematics#computational physics#applied mathematics#applied physics#numerical methods#numerical simulation#astrophysics#plasma physics#nuclear physics


Project "ML.Satellite": Launch

The new semester brings me a new ML project. To get a good grade in a mandatory data analysis course, one has to create a useful neural network of medium complexity. Thus, I started the project "Machine learning model for the terrain segmentation".

The idea of the project is to train a model that divides multispectral satellite images into regions by terrain type (marking where land, water, forest, snow, etc. are in the image). An analytical solution for this task does exist, but it appears to be empirical in nature, and it would be really nice to devise a model independent of it. However, I haven't been able to find a suitable dataset for this task, which makes things harder, since I need to create such a dataset myself. At first, the dataset will be created using the analytical solution, so the goal changes from trying to beat the analytical solution to trying to catch up with it. The resulting dataset will be published by the end of the project.

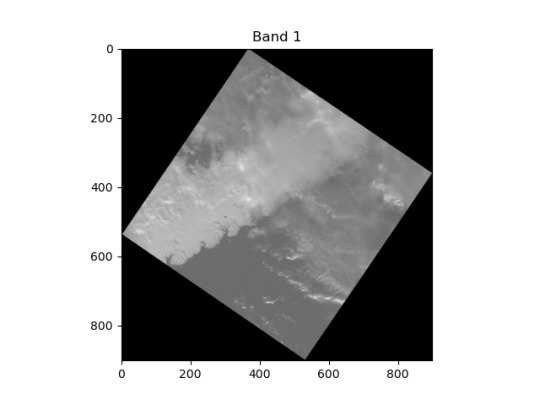

The dataset will be "manufactured" using data from the Landsat 8 satellite. It provides images in 11 bands, each about 5000-10000 "pixels" on a side, except the 8th (panchromatic) band, which is twice as large.

P.S.: Below is an example of an image band downscaled by a factor of 10.

#new project#student project#machine learning#neural network#ai#artificial intelligence#computer vision#image segmentation#segmentation task#satellite#remote sensing#optical sensors#geoinformatics


Project "ML.Pneumonia": Finale

Final accuracy metrics:

Project model - 83%

Basic model - 75%

Basic model with larger dataset - 83%

Overall results:

A good autoencoder model has been developed

A classifier that surpasses the basic model in accuracy has been created

Both models scale well (the larger the training datasets, the better the results)

Development proposals:

Increase hardware resources

Consider creating larger models (more CNN layers, filters…)

Use larger datasets

Improve the preprocessing of the datasets (a CNN may not handle incorrectly rotated scans well, and the datasets do not always contain the expected kind of scans; e.g., in one of them I noticed a longitudinal CT scan instead of a transverse one)

The link to the GitHub repository with the clean project code and detailed results is below.

#student project#machine learning#neural network#computer vision#ai#artificial intelligence#medicine#diagnostics#pneumonia#pneumonia detection#developer's diaries


Project "ML.Pneumonia": Classifier

The classifier was trained using the weights obtained from the autoencoder training. Three times, in fact: the accuracy records were unstable from run to run, so training was repeated three times to get the best result. The first attempt turned out to be the best one. The final accuracy is about 83%.

P.S.: The graphs of the best attempt metrics are shown below.

P.S.2: And here is the graph of the original model's (basic method) "performance". Apparently, training instability is a common problem for this classification task.

#student project#machine learning#neural network#computer vision#ai#artificial intelligence#medicine#diagnostics#pneumonia#pneumonia detection#developer's diaries


Project "ML.Pneumonia": Autoencoder second iteration

Happy New Year! The exam session is in full swing, and the project has come to its logical conclusion. So here are the final posts about this project.

The results in the previous post made it clear that the autoencoder needed refinement, so the finished code for a larger model was, in a sense, outsourced. However, it was all in vain: for some reason, the code could not be run on the more powerful computer. With little time left before the project's official conference, it was decided to improve the model somehow without involving "external powers". And the "miracle" happened. Thanks to even more optimized memory usage, the imbalance between the training and validation datasets was eliminated, so the autoencoder could use more training data. This really improved the model and roughly "doubled its precision" (to be exact, it halved the loss). Although these results are not "ideal" either, this model performs significantly better.

P.S.: Below are two images showing the output of the refined model.

#student project#machine learning#neural network#computer vision#ai#artificial intelligence#medicine#diagnostics#pneumonia#pneumonia detection#developer's diaries


Project "ML.Pneumonia": Autoencoder first results

The first autoencoder has been trained, and the first dubious results have been obtained. Advantages: the shape is preserved and the overall content of the image is still recognizable. Disadvantages: everything else (indistinguishable details, blurring, inaccurate edges...). Therefore, it was decided to use external, more powerful resources to build a larger and more precise model.

P.S.: Below are two images showing the output of the current model.

#student project#machine learning#neural network#computer vision#ai#artificial intelligence#medicine#diagnostics#pneumonia#pneumonia detection#developer's diaries


Project "ML.Pneumonia": Basic method results

Some time after transferring the code to Google Colab, I decided to train the basic Convolutional Neural Network (CNN) implemented in Hasib Zunair's code, in order to evaluate its accuracy and establish the baseline that our refined model should beat.

Below is a plot of the best results, with validation accuracy as the target metric. Its best value is 0.75, or 75%.

P.S.: In this case, the training accuracy is lower than the validation accuracy. That's odd.

#student project#machine learning#neural network#computer vision#ai#artificial intelligence#medicine#diagnostics#pneumonia#pneumonia detection#developer's diaries


Project "ML.Pneumonia": Augmentations

While dealing with the "RAM troubleshooting", I ran into a problem in another part of the work: the augmentations. The installed version of TensorFlow, presumably because it was outdated, didn't support some necessary and convenient methods that the already loaded NumPy did support, for example. So I worked around these limitations by augmenting datasets stored as NumPy arrays instead of TensorFlow tensors.

So I created two suitable augmentation methods, named "cut" and "noise", and used them to build training datasets for the autoencoder. "Cut" cuts out part of the image by darkening that area, and "noise" adds noise to the image. Pretty simple.
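Here is a NumPy sketch of what two such augmentations can look like. It assumes 2D images normalized to [0, 1]. The rectangle size limit, the fill value, the noise level and the function signatures are hypothetical, not the project's actual parameters. The degraded image serves as the autoencoder's input and the untouched one as its target.

```python
import numpy as np


def cut(image, max_frac=0.3, fill=0.0, rng=None):
    """Black out one random rectangle covering up to max_frac of each side."""
    rng = np.random.default_rng() if rng is None else rng
    out = image.copy()
    h, w = image.shape[:2]
    ch = int(rng.integers(1, max(1, int(h * max_frac)) + 1))
    cw = int(rng.integers(1, max(1, int(w * max_frac)) + 1))
    y = int(rng.integers(0, h - ch + 1))
    x = int(rng.integers(0, w - cw + 1))
    out[y:y + ch, x:x + cw] = fill
    return out


def noise(image, sigma=0.05, rng=None):
    """Add zero-mean Gaussian noise, then clip back to the [0, 1] range."""
    rng = np.random.default_rng() if rng is None else rng
    return np.clip(image + rng.normal(0.0, sigma, size=image.shape), 0.0, 1.0)


# Autoencoder training pair: degraded input, clean target
rng = np.random.default_rng(42)
clean = np.full((64, 64), 0.5)
degraded = noise(cut(clean, rng=rng), rng=rng)
```

Both functions return new arrays and leave the original untouched. This matters when the same clean scan is reused as the training target.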

#student project#machine learning#neural network#computer vision#ai#artificial intelligence#medicine#diagnostics#pneumonia#pneumonia detection#developer's diaries


Project "ML.Pneumonia": Lack of RAM

After processing the downloaded datasets, I uploaded them to Google Drive for further use in Colab. But they took up so much RAM that using them carelessly consistently crashed the notebook environment. Therefore, I optimized how the datasets are used and reduced their size.
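One common way to cut memory use, shown here as a generic sketch rather than the exact optimization used in the project, has two parts. First, store normalized scans as uint8 (1 byte per pixel instead of 8 for float64). Second, convert to float32 only one batch at a time. The function names, array sizes and batch size below are hypothetical.

```python
import numpy as np


def to_uint8(scans):
    """Quantize float scans in [0, 1] to uint8 (1 byte per pixel instead of 8)."""
    return np.round(np.clip(scans, 0.0, 1.0) * 255.0).astype(np.uint8)


def float_batches(scans_u8, batch_size):
    """Convert to float32 one batch at a time instead of the whole dataset at once."""
    for start in range(0, len(scans_u8), batch_size):
        yield scans_u8[start:start + batch_size].astype(np.float32) / 255.0


# Hypothetical stand-in for a stack of 100 normalized 128x128 CT slices
scans = np.random.default_rng(0).random((100, 128, 128))
compact = to_uint8(scans)
print(scans.nbytes // compact.nbytes)  # 8
```

The quantization error is at most half a gray level (0.5/255). That is usually negligible for CT images that were stored with 8-bit precision to begin with.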

#student project#machine learning#neural network#computer vision#ai#artificial intelligence#medicine#diagnostics#pneumonia#pneumonia detection#developer's diaries


Project "ML.Pneumonia": Big data

It was decided to create a dataset for the autoencoder from other datasets of lung CT scans, using augmentation methods to simulate "poor-quality scans". So I downloaded two more large datasets of CT scans of cancer-affected lungs. The download took several days.

P.S.: The important captions in the picture below are translated.

#student project#machine learning#neural network#computer vision#ai#artificial intelligence#medicine#diagnostics#pneumonia#pneumonia detection#developer's diaries
